A Recommender System Model for Presentation Advisor Application Based on Multi-Tower Neural Network and Utility-Based Scoring

Abstract

1. Introduction

- A personalized recommendation system is designed and implemented that supports skill building in presentation creation through contextualized feedback and resources;

- A hybrid recommendation model is proposed that combines deep learning and utility-based scoring to enhance the relevance and adaptability of the suggestions;

- The cold-start challenge is addressed by leveraging feature-based modeling and problem sequence learning to improve recommendations for new users and content.

2. Related Work

2.1. Presentation Advisor Tools

- Font and image management helps ensure that the fonts are consistent and images are optimized;

- Slide consistency: checks for uniformity in design elements like colors, fonts, and layouts;

- Presentation optimization identifies and automatically resolves technical issues within the presentation, such as missing fonts or large file sizes.

- Does not offer educational content or recommendations on design principles, although it excels in technical presentation management;

- Does not measure whether the content in a presentation is eye-catching;

- Price: the free version provides only a few essential features; the paid version is licensed as part of the user’s annual subscription.

- AI coaching for public speaking provides real-time feedback on delivery, such as tone, pacing, and filler words;

- A simulated practice environment offers a virtual audience to make practice sessions feel more realistic;

- Skill improvement: helps users build confidence and improve their presentation delivery over time.

- Lack of slide integration: the tool does not interact with or analyze PowerPoint slides, making it less useful for content or design feedback;

- Content evaluation: does not assess whether the slides or spoken messages are engaging or well structured;

- Price: the pricing may vary and it is typically offered as part of educational or organizational packages.

2.2. Recommender Systems

- Content-Based RSs: suggest items like those the user has previously liked, based on the attributes or features of the items [10];

- Utility-Based RSs: estimate the usefulness of a product or service to a particular user based on a utility function [24,25]. The utility function is often unique for each user and can be influenced by the various attributes of the items being recommended. The utility is a measure of satisfaction or benefit that a user receives from consuming an item, and it can be derived from explicit preferences, such as ratings, or inferred from implicit signals, such as their browsing history or purchase patterns.

2.3. Popular Recommender Systems

2.3.1. Netflix Recommender System

- Matrix factorization extracts latent user–item preferences from interaction data;

- Personalized ranking: focuses on ranking content based on user preferences rather than predicting ratings;

- Deep learning models use convolutional neural networks (CNNs) for visual analysis, recurrent neural networks (RNNs) and transformers for sequential patterns, and autoencoders for cold-start scenarios;

- The hybrid approach combines collaborative filtering with content-based methods to improve recommendation accuracy;

- Contextual bandits and graph neural networks (GNNs): adapt recommendations dynamically based on real-time user behavior. When a user visits Netflix, the system must select and rank content to display. Instead of relying solely on historical data, contextual bandits explore and exploit by testing different recommendations and learning from user feedback (e.g., clicks and watch duration). This allows recommendations to adapt dynamically, considering factors like time of day, device type, and recent viewing behavior;

- Diversity and serendipity models ensure that users receive a mix of relevant and unexpected content to enhance engagement;

- Multi-armed bandits for cold-start problems: address the case in which little information is available about a new user or item. This approach balances exploring new options against exploiting what is already known, so the system can learn user preferences step by step, even with very little data at the beginning.

2.3.2. Amazon Recommender System

2.3.3. LinkedIn Learning Recommendation Engine

- Computes learner and course embeddings: generates embeddings for all courses based on past engagement;

- Ranks courses for each learner: computes ranking scores using the Neural CF output layer;

- Filters and finalizes recommendations: selects the top k courses for final recommendations.

- Neural collaborative filtering [43]: Neural CF consists of two multi-layer neural networks: the learner network and the course network. The learner network encodes a learner’s past watched courses into a sparse vector representation, while the course network represents similarities between courses based on co-watching patterns. These networks feed into an embedding layer, which generates learner and course embeddings, effectively capturing relationships between users and content. Finally, the output layer computes a personalized recommendation score, ranking courses for each learner by their predicted relevance. A major advantage of this two-tower architecture is that the learner and course embeddings are trained separately, so they can be reused for other tasks, such as finding related courses, and can support the model’s explainability;

- Response prediction model: the response prediction component improves course recommendations by modeling learner–course interactions using a generalized linear mixed model (GLMix) [44]. GLMix learns global model coefficients shared across all learners as well as per-learner and per-course coefficients, so the recommendations are customized to individual learning patterns. A more recent improvement integrates course watch time as a weight factor, ensuring that courses with higher engagement are prioritized.
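As a rough illustration of the GLMix structure described above, the score for one learner–course pair can be written as a global term plus a per-learner and a per-course term, passed through a logistic link. This is a minimal sketch: the function name `glmix_score`, the feature vectors, and all coefficient values are hypothetical and are not taken from LinkedIn's implementation.

```python
import math


def dot(a, b):
    """Inner product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))


def glmix_score(pair_feats, w_global,
                course_feats, w_learner,
                learner_feats, w_course):
    """Response probability from global, per-learner, and per-course effects.

    w_global  : coefficients shared by all learners and courses
    w_learner : coefficients specific to this learner (applied to course features)
    w_course  : coefficients specific to this course (applied to learner features)
    """
    logit = (dot(pair_feats, w_global)
             + dot(course_feats, w_learner)
             + dot(learner_feats, w_course))
    return 1.0 / (1.0 + math.exp(-logit))


# Toy values, chosen only for illustration.
score = glmix_score(
    pair_feats=[1.0, 0.5], w_global=[0.2, -0.1],
    course_feats=[1.0], w_learner=[0.3],
    learner_feats=[1.0], w_course=[-0.15],
)
```

Keeping the per-learner and per-course coefficients separate from the global ones is what lets such a model personalize for active learners while still falling back on the shared coefficients when individual data are scarce.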

2.3.4. YouTube Recommender System

3. Data Generation and Exploratory Analysis for Presentation Recommender System

3.1. Data Generation

- Common presentation challenges, such as lack of engagement, poor readability, or inconsistent design. These challenges are systematically listed and categorized in Table 2;

- Presentation types, which define the context or purpose of a presentation such as academic lectures, business pitches, or training sessions. A detailed classification is presented in Table 3;

- Audience types, encompassing different viewer profiles like executives, students, or the general public, each with distinct expectations and comprehension levels. A comprehensive overview of these audience categories is shown in Table 4.

3.1.1. Article Generation

- The article’s name and summary;

- A predefined primary issue (e.g., “text-heavy”);

- A list of related common presentation challenges (Table 2);

- A presentation type that the article is best suited for (Table 3);

- A relevant audience type (Table 4);

- A popularity score representing prior user engagement;

- The date when the article was submitted.

3.1.2. User Profile Generation

3.1.3. Presentation Generation

- A user ID and unique presentation ID;

- A submission timestamp (randomized between 2005 and the present);

- A set of issues identified in the presentation (the same as the presentation challenges that are presented in Table 2).

3.1.4. Rating Generation

- User preferences matching article attributes: higher scores for aligned articles;

- Presentation type match: boost for articles matching the user’s preferred style;

- Audience type match: preference is given to content suitable for the user’s target audience;

- Historical article popularity: articles with high past engagement receive a slight rating boost;

- Temporal weighting: recent presentation problems influence ratings more than older issues.
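The five factors above can be combined in many ways; the following is a minimal sketch of one possible rule, not the generator actually used for the dataset. The baseline of 2.5, the boost sizes, the 1 to 5 rating scale, the exponential half-life decay, and all dictionary keys are assumptions made for illustration.

```python
def synthetic_rating(user, article, problems, today, half_life_days=180.0):
    """Hypothetical rating rule; `problems` is a list of (issue, day_detected)."""
    rating = 2.5  # assumed neutral starting point on a 1-5 scale
    # User preferences matching article attributes.
    if article["primary_issue"] in user["interests"]:
        rating += 0.3
    # Presentation type match.
    if article["presentation_type"] == user["presentation_type"]:
        rating += 0.3
    # Audience type match.
    if article["audience_type"] == user["audience_type"]:
        rating += 0.3
    # Historical popularity (assumed to lie in [0, 1]) gives a slight boost.
    rating += 0.2 * article["popularity"]
    # Temporal weighting: a matching problem counts less the older it is.
    for issue, day in problems:
        if issue in article["issues"]:
            rating += 0.5 ** ((today - day) / half_life_days)
    return max(1.0, min(5.0, rating))


article = {
    "primary_issue": "text-heavy",
    "issues": {"text-heavy", "poor readability"},
    "presentation_type": "business pitch",
    "audience_type": "executives",
    "popularity": 0.5,
}
user = {
    "interests": {"text-heavy"},
    "presentation_type": "business pitch",
    "audience_type": "executives",
}
recent = synthetic_rating(user, article, [("text-heavy", 350)], today=360)
old = synthetic_rating(user, article, [("text-heavy", 0)], today=360)
```

With these toy inputs, the same problem detected ten days ago raises the rating more than one detected 360 days ago, which is the behavior the temporal weighting factor is meant to produce.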

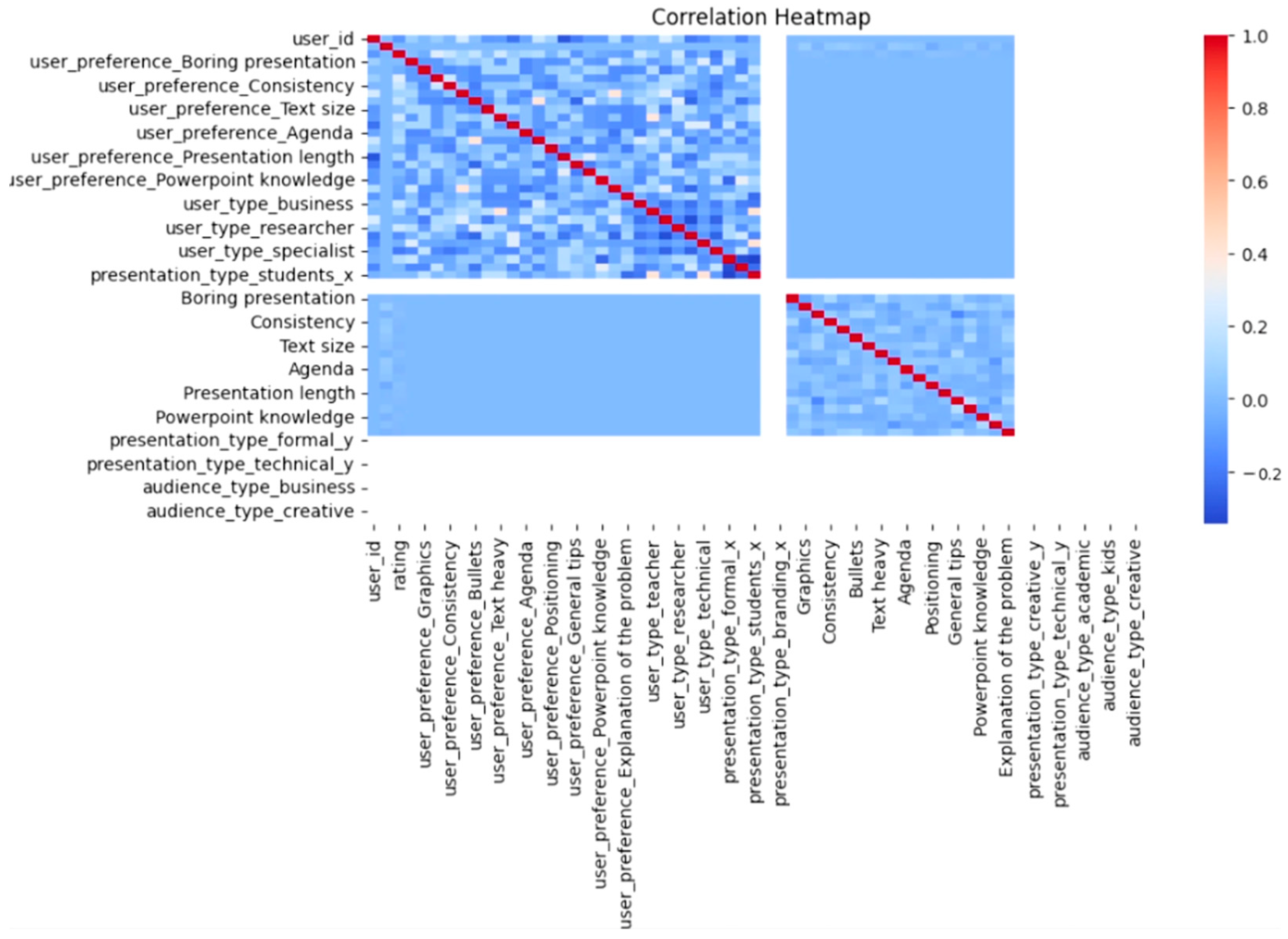

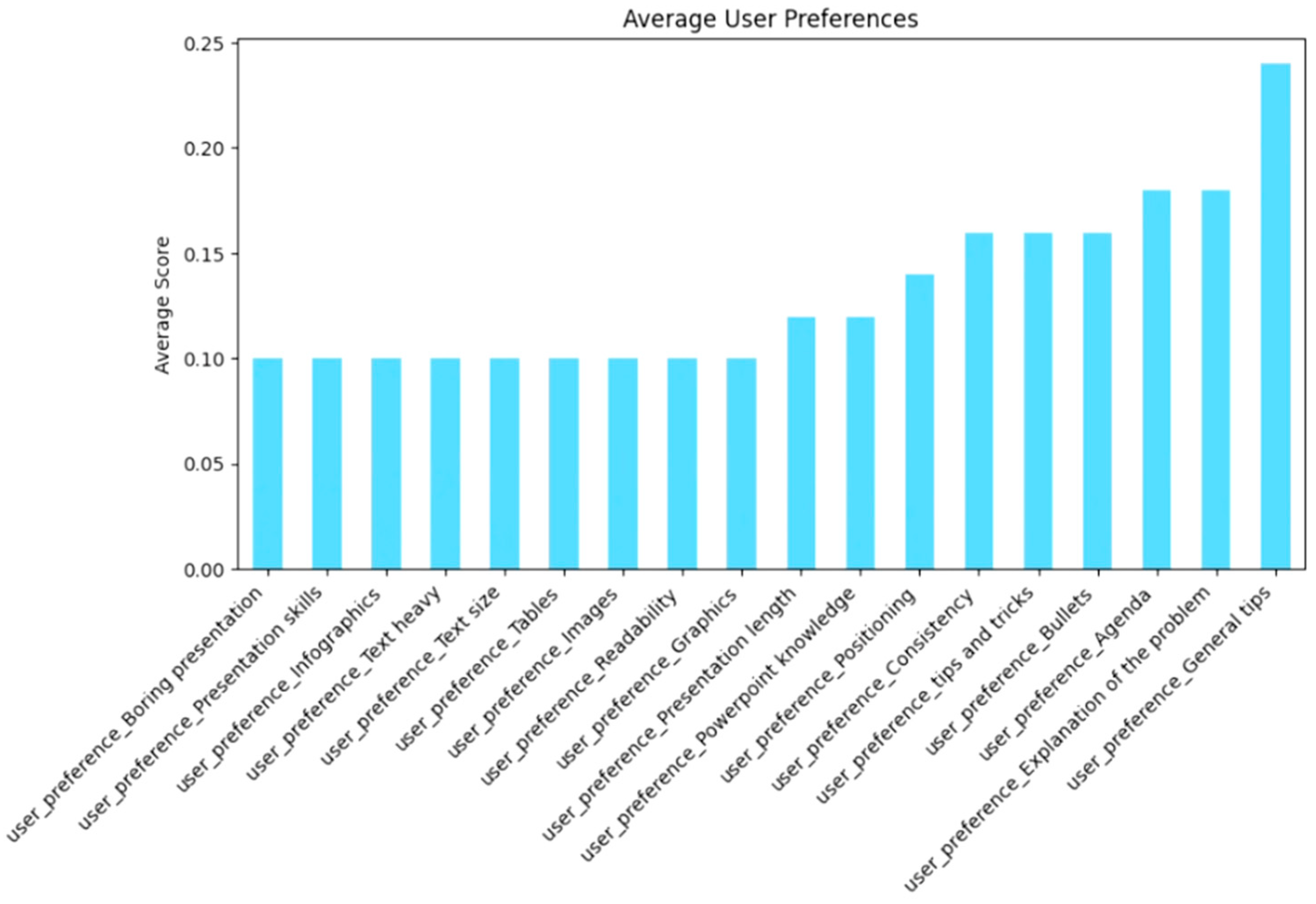

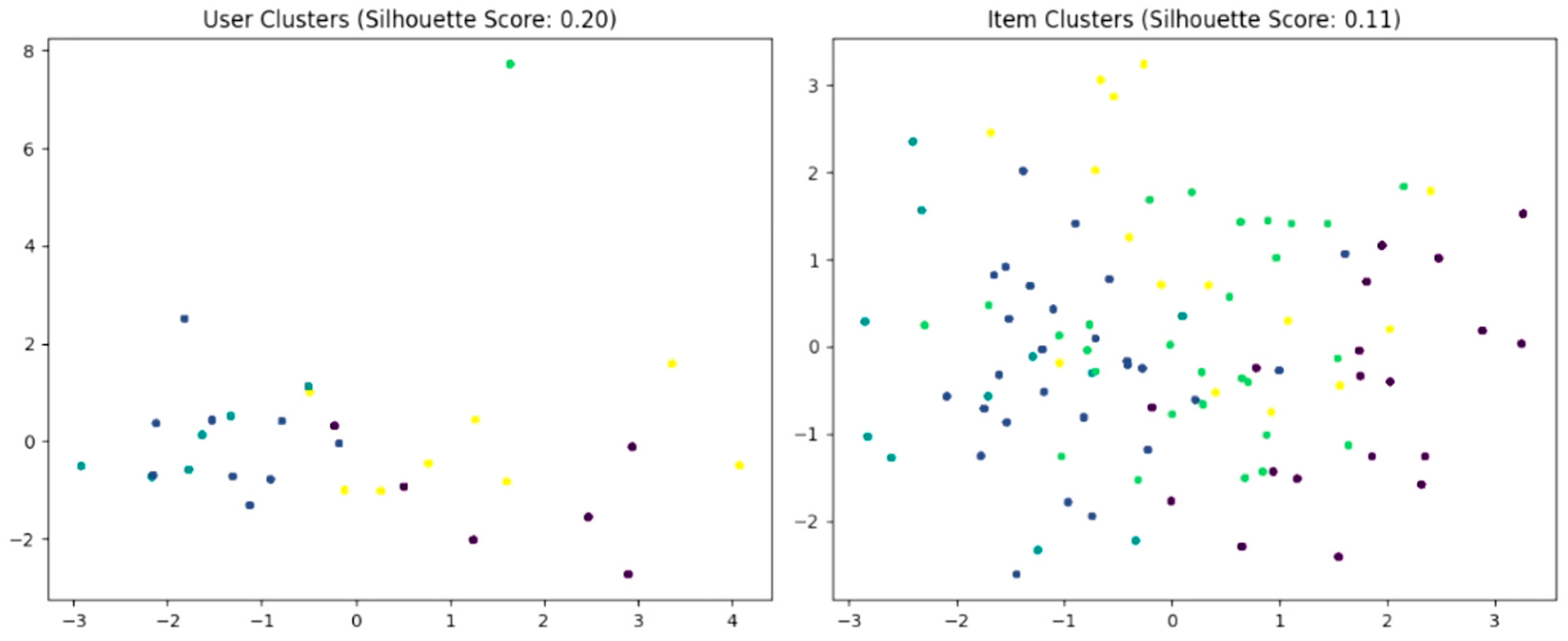

3.2. Exploratory Analysis

4. Recommender System Design

4.1. Recommender System’s Role in the Presentation Advisor

- User profile: This includes the user’s personal preferences, expertise level, and previous interactions with the system. The system leverages this information to provide personalized and context-aware suggestions;

- Current presentation: The content of the ongoing presentation is analyzed to identify its style, structure, and potential areas for improvement. This allows the system to recommend resources that align closely with the specific needs of the current work;

- Previous presentations: Insights are drawn from past presentations, especially the problems or areas of improvement identified in those projects. These historical data ensure that recommendations address recurring issues and help the user build on their past experiences;

- User preferences and constraints: The system takes into account user-defined preferences, such as the following:

- the type of presentation (e.g., formal, educational, or persuasive);

- the target audience (e.g., executives or students).

The system also takes into account several constraints:

- Attention span: Users who spend less time on the system receive fewer, more concise recommendations to align with their limited availability;

- Location-based accessibility: Certain articles or materials are filtered out based on the user’s region, recognizing cultural sensitivity. For example, what works well in the United States might be inappropriate or even offensive in parts of Asia, so recommendations are curated to ensure cultural relevance and respect.

4.2. Recommendation System Architecture

- PStyle checker service: Evaluates the coherence of the presentation slides using a custom-built AI model that assesses the consistency of a presentation’s visual style [53]. It compares the slides in pairs and identifies any slides that deviate from the overall style. If all slides are consistent, the service returns a value of one; otherwise, it returns an array containing the indices of the inconsistent slides. In the Presentation Advisor (PA), a score of zero indicates inconsistency, while one indicates consistency;

- Presentation checker service: This module identifies structural and content-related issues in the presentation, such as overcrowded slides, a poor layout, and the ineffective use of multimedia via multiple AI modules [54]. The detected issues are mapped to specific presentation problem categories (as described in Table 2) and are fed into the Presentation Advisor (PA) as an array of corresponding values.
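The mapping from the PStyle checker's raw output to the binary score used in the PA can be sketched as follows. The function name `to_pa_style_score` is hypothetical, and treating an empty index array as "consistent" is an assumption, since the text does not say how that case is handled.

```python
from typing import List, Tuple, Union


def to_pa_style_score(result: Union[int, List[int]]) -> Tuple[int, List[int]]:
    """Map the checker output to (PA score, indices of inconsistent slides).

    The checker is described as returning 1 when all slides are consistent
    and an array of slide indices otherwise.
    """
    if isinstance(result, list):
        if not result:  # assumption: no flagged slides means consistent
            return 1, []
        return 0, sorted(result)
    if result == 1:
        return 1, []
    raise ValueError(f"unexpected checker output: {result!r}")


consistent = to_pa_style_score(1)
inconsistent = to_pa_style_score([4, 2])
```

Returning the flagged indices alongside the binary score keeps the information needed to point the user at specific slides.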

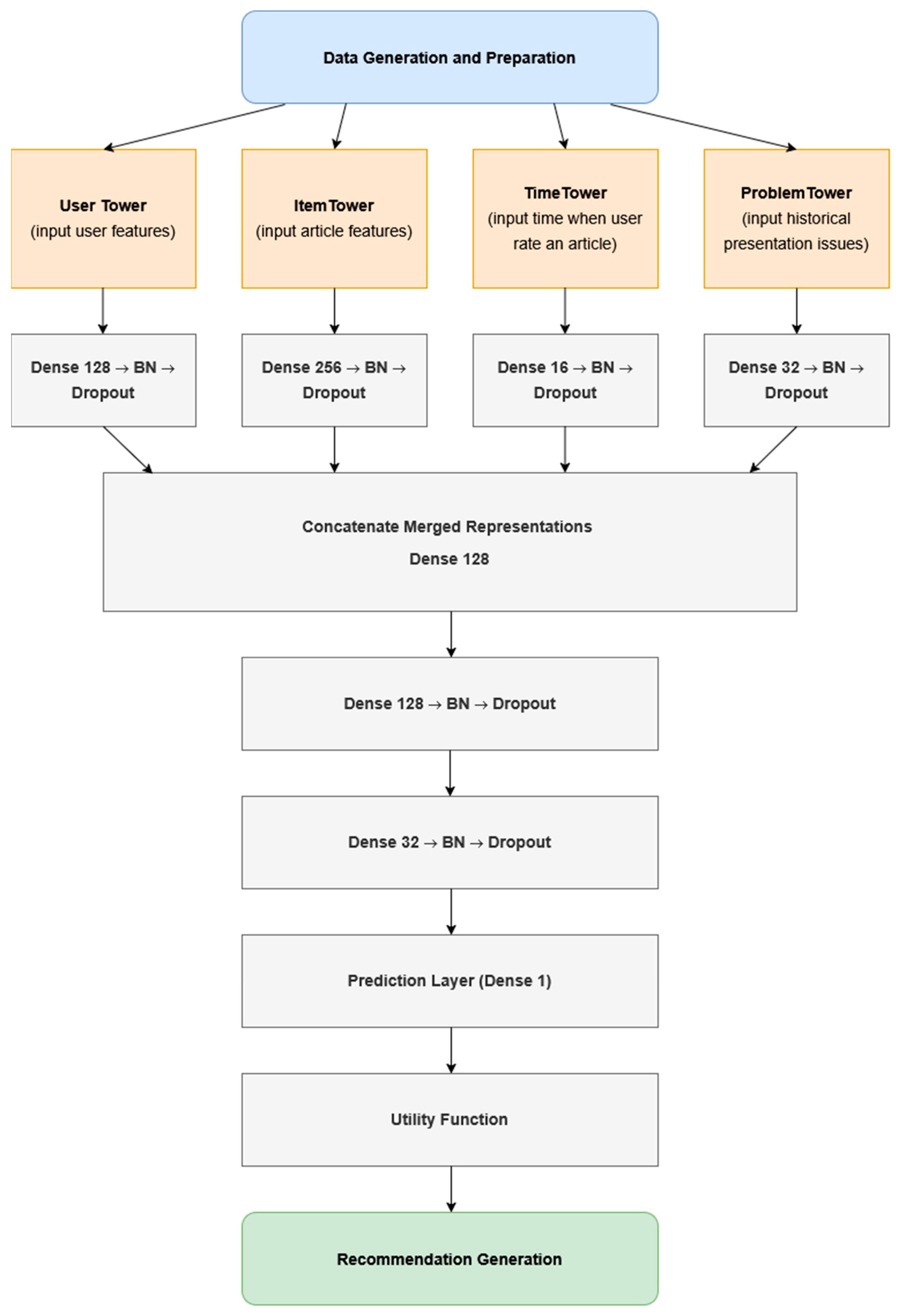

4.3. Recommender Model Architecture

5. Methodology for Training the Recommender Model

5.1. Feature Extraction

5.2. Model Fine-Tuning

- The units per tower: {64, 128, 256};

- The embedding size: {16, 32, 64};

- The dropout rate: [0.1, 0.5];

- The learning rate: [10⁻⁵, 10⁻³] (log-uniform distribution).
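To make the search space concrete, the sketch below draws candidate configurations from the ranges listed above. Plain random search is an assumption here; the text gives the ranges but this sketch does not claim to reproduce the tuner that was actually used. The key names are likewise illustrative.

```python
import random


def sample_config(rng):
    """Draw one hyperparameter configuration from the listed search space."""
    return {
        "units_per_tower": rng.choice([64, 128, 256]),
        "embedding_size": rng.choice([16, 32, 64]),
        "dropout_rate": rng.uniform(0.1, 0.5),
        # Log-uniform: sample the exponent uniformly, then exponentiate,
        # so each decade between 1e-5 and 1e-3 is equally likely.
        "learning_rate": 10 ** rng.uniform(-5, -3),
    }


rng = random.Random(0)  # fixed seed so the draws are repeatable
configs = [sample_config(rng) for _ in range(20)]
```

Sampling the learning rate on a log scale avoids spending almost all trials near the upper end of the interval, which is what a uniform draw over [10⁻⁵, 10⁻³] would do.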

5.3. Model Training

5.4. Cold-Start Problem

- Cold-start for users: Since the proposed recommender model is feature-based, it can leverage whatever user attributes are available, such as demographics and any early engagement signals, even if the user has minimal interaction data. This allows the system to generate reasonable recommendations from the very beginning;

- Cold-start for items (articles): New articles have no interaction history. To mitigate this, the item branch of the proposed model is purely feature-based, so it can score new articles without requiring any prior interactions with them. This feature-based approach allows for the seamless integration of new items while also preventing overfitting to older, more familiar content;

- Problem sequence tower (pre-trained): Even if a new user’s history is limited to the current presentation, pre-training on problem sequences allows the model to generalize issue patterns, so it can make informed guesses based on the patterns of similar users.

5.5. Model Retraining Strategy

5.6. Utility-Based Scoring/Filtering

- α is a tunable weight that controls the strength of the audience preference boost;

- β is a tunable weight for the long-tail boost that promotes less popular or newer items. A small β ensures diversity and freshness without over-prioritizing niche content;

- predicted_rating reflects the user’s preference as predicted by the multi-tower model;

- audience_boost enhances content aligned with the user’s preferred audience type, helping personalize results without making them too narrow;

- long_tail_boost enhances the visibility of lesser-known or recently added content by combining two factors: content freshness and inverse popularity. This boost is integrated into the overall recommendation score using carefully tuned weights, ensuring that diverse and underexposed items gain exposure without overshadowing highly relevant results. The goal is to improve discovery, diversity, and catalog fairness while preserving strong personalization and content relevance.

- freshness_score is normalized based on how recently the article was submitted;

- inverse_popularity rewards rare or under-engaged items;

- γ controls the balance between freshness and rarity.

- Presentation type matching: Articles matching the user’s preferred presentation style are given higher priority;

- Engagement time adjustment: The number of recommendations is adjusted based on the user’s time spent in the system, reducing suggestions for less engaged users;

- Audience type matching: Each article is assigned a binary boost depending on whether its tagged audience matches the user profile;

- Enhanced utility calculation: All factors are combined to compute a final utility score for each recommendation, ensuring high levels of relevance and impact;

- Long-tail boosting: A combined metric, derived from both submission freshness and rarity (inverse popularity), enhances the visibility of under-represented content.
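The quantities defined in this section can be put together as in the sketch below. The additive form of the final score and the convex mix controlled by γ are assumptions that match the symbol definitions above; the default values of α, β, and γ, the five-minute engagement threshold, and the list sizes are hypothetical. Presentation type matching could be added as one more weighted term in the same way.

```python
def long_tail_boost(freshness_score, inverse_popularity, gamma):
    """Blend freshness and rarity; gamma controls the balance between them."""
    return gamma * freshness_score + (1.0 - gamma) * inverse_popularity


def utility_score(predicted_rating, audience_match, freshness_score,
                  inverse_popularity, alpha=0.2, beta=0.05, gamma=0.5):
    """Assumed additive utility: rating + alpha * audience + beta * long tail."""
    audience_boost = 1.0 if audience_match else 0.0  # binary boost
    return (predicted_rating
            + alpha * audience_boost
            + beta * long_tail_boost(freshness_score, inverse_popularity, gamma))


def recommend(candidates, minutes_in_system, min_k=3, max_k=10):
    """Rank by utility and shorten the list for less engaged users."""
    k = min_k if minutes_in_system < 5 else max_k  # assumed threshold
    ranked = sorted(candidates, key=lambda c: c["utility"], reverse=True)
    return ranked[:k]


example = utility_score(4.0, True, freshness_score=1.0,
                        inverse_popularity=0.0, alpha=0.2, beta=0.1, gamma=0.5)
```

Keeping β small relative to the spread of predicted ratings means the long-tail term mostly reorders items whose predicted ratings are already close, rather than promoting weakly relevant content.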

6. Challenges and Solutions

6.1. Data Sparsity

6.2. Context Understanding

6.3. Technical Challenges

6.3.1. Incremental Learning

6.3.2. Time-Dependent Data

6.3.3. Long-Tail Recommendations

7. Evaluation and Experimental Results

7.1. Evaluated Models

7.1.1. Content-Based Recommendations and Collaborative Filtering

7.1.2. Autoencoders

7.1.3. Reinforcement Learning

- New User: When a new user joins, the RL model initially explores by recommending a diverse set of articles. Based on user interactions (e.g., clicks), it assigns rewards and learns preferences. For example, if the user engages with technology-related articles, the model remembers this interaction and prioritizes similar content in future recommendations;

- New Item: When a new article is added, the RL model includes it in random recommendations to evaluate user interest. If users engage positively, the model assigns higher Q-values to the article, increasing its likelihood of being recommended to relevant users. Conversely, if engagement is low, the article gradually becomes less recommended.
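The explore-then-learn behavior described above can be illustrated with a stateless, bandit-style simplification: an epsilon-greedy policy that keeps one Q-value per article. This is only a sketch under that simplifying assumption; the class name, the ε and learning-rate values, and the 0/1 click reward are hypothetical and do not describe the RL model evaluated in this section.

```python
import random


class EpsilonGreedyRecommender:
    """Keeps one Q-value per article and trades off exploration and exploitation."""

    def __init__(self, epsilon=0.1, learning_rate=0.5, seed=0):
        self.q = {}  # article id -> estimated reward
        self.epsilon = epsilon
        self.learning_rate = learning_rate
        self.rng = random.Random(seed)

    def add_item(self, item_id):
        # New articles start at zero and are reached through exploration.
        self.q.setdefault(item_id, 0.0)

    def select(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.q))  # explore
        return max(self.q, key=self.q.get)        # exploit

    def update(self, item_id, reward):
        # Move the estimate a fraction of the way toward the observed reward.
        self.q[item_id] += self.learning_rate * (reward - self.q[item_id])


agent = EpsilonGreedyRecommender(epsilon=0.0)
agent.add_item("a")
agent.add_item("b")
agent.update("a", 1.0)  # a click on article "a"
agent.update("a", 1.0)
best = agent.select()
```

Articles that keep receiving low rewards see their Q-values decay toward zero, so they are chosen less often during exploitation, which mirrors the "gradually becomes less recommended" behavior in the text.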

7.1.4. Hybrid Model with Custom Pre-Trained Embeddings

- Custom pre-trained user–item embeddings: User and item features (e.g., profile data, metadata, and behavioral attributes) are encoded using embeddings that are pre-trained on domain-specific interactions. These embeddings are learned via a separate unsupervised model;

- Hand-crafted presentation embeddings: The presentations themselves significantly influence the ratings, and their dynamic nature makes it difficult to capture them accurately with learned embeddings. Users frequently upload new presentations, often at every session, making it computationally expensive and impractical to learn embeddings for them. Therefore, hand-crafted embeddings for presentations are used instead;

- Multi-tower architecture: The model uses a dual-tower (or multi-tower) neural architecture with the user–item embeddings as one tower and the presentation embeddings as the other. The output of the model is the predicted relevance score. The model is trained with the mean squared error (MSE) as the optimization goal;

- Utility-based scoring: For downstream ranking or evaluation, a post-processing layer adjusts the predicted scores using utility-based criteria, balancing relevance with novelty and diversity.
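The forward pass of such a two-tower model, together with the MSE objective, can be sketched in plain Python. Everything here is illustrative: one dense layer per tower, ReLU activations, the layer sizes, and the random initialization are assumptions, and no training step is shown.

```python
import random


def dense(x, weights, bias, relu=True):
    """Fully connected layer; `weights` holds one row of input weights per output unit."""
    out = [sum(xi * wi for xi, wi in zip(x, row)) + b
           for row, b in zip(weights, bias)]
    return [max(0.0, v) for v in out] if relu else out


def init_layer(n_in, n_out, rng):
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def predict(user_item_emb, presentation_emb, params):
    h_ui = dense(user_item_emb, *params["user_item_tower"])
    h_pr = dense(presentation_emb, *params["presentation_tower"])
    # Concatenate the tower outputs and map them to one relevance score.
    return dense(h_ui + h_pr, *params["head"], relu=False)[0]


def mse(predictions, targets):
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


rng = random.Random(0)
params = {
    "user_item_tower": init_layer(4, 3, rng),
    "presentation_tower": init_layer(2, 3, rng),
    "head": init_layer(6, 1, rng),
}
score = predict([0.1, 0.4, -0.2, 0.7], [1.0, 0.0], params)
loss = mse([score], [4.0])
```

Because the presentation tower consumes hand-crafted features rather than learned per-presentation embeddings, a newly uploaded presentation can be scored without retraining.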

7.2. Performance Comparison

- A paired t-test: t = −4.8362, p = 0.0084;

- The Wilcoxon signed-rank test: W = 0.0000, p = 0.0625.
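The Wilcoxon result is consistent with a small number of paired observations. With n = 5 non-zero, untied differences that all share the same sign, W = 0 and the exact two-sided p-value is 2/2⁵ = 0.0625, which is the smallest value the test can produce at that sample size. The value n = 5 is an inference from the reported statistics, not a figure stated in the text. The sketch below computes the exact p-value by enumerating all sign assignments.

```python
from itertools import product


def wilcoxon_exact_two_sided_p(n, w_observed):
    """Exact two-sided p-value for W = min(W+, W-), assuming no ties or zeros."""
    total = n * (n + 1) // 2  # sum of the ranks 1..n
    hits = 0
    for signs in product((0, 1), repeat=n):
        w_plus = sum(rank for rank, s in zip(range(1, n + 1), signs) if s)
        if min(w_plus, total - w_plus) <= w_observed:
            hits += 1
    return hits / 2 ** n


p_value = wilcoxon_exact_two_sided_p(5, 0)
```

This explains why the paired t-test can fall below 0.05 while the signed-rank test cannot: at this sample size the non-parametric test has a floor of 0.0625 regardless of how large the differences are.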

- Loss (0.2122): Loss is a general measure of model error during training, typically representing the difference between predicted and actual outcomes. Lower values indicate better performance in terms of minimizing prediction errors;

- Precision (1): Precision evaluates the proportion of recommended items in the top-k list that are relevant to the user. A higher precision value indicates that the model often recommends items that the user is likely to find valuable;

- Novelty (0.45): Novelty measures how “unknown” or uncommon the recommended items are to the user. A higher novelty score indicates that the system is suggesting less popular or previously unseen items, contributing to increased user interest and engagement;

- Serendipity (0.42): Serendipity assesses how surprising yet relevant the recommendations are. A high serendipity score shows that the system can recommend unexpected items that still align with the user’s preferences, providing delightful discoveries;

- Diversity (0.49): Diversity measures how varied the recommended items are. A higher diversity score suggests that the system provides a broader range of items, reducing the likelihood of recommending highly similar or redundant items;

- Item Coverage (0.15): Item coverage reflects the proportion of the total item catalog that is recommended. A low score indicates that the model focuses on a small subset of items, potentially limiting the variety of recommendations and excluding some relevant items from the suggestions.
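Two of the metrics above have simple closed-form definitions, sketched here. The function names and the toy inputs are illustrative, and the exact variants used in the evaluation (for example, the value of k) are not specified in this section.

```python
def precision_at_k(recommended, relevant, k):
    """Share of the top-k recommended items that are relevant to the user."""
    top_k = recommended[:k]
    return sum(1 for item in top_k if item in relevant) / k


def item_coverage(recommendation_lists, catalog_size):
    """Share of the catalog that appears in at least one recommendation list."""
    distinct = set()
    for recs in recommendation_lists:
        distinct.update(recs)
    return len(distinct) / catalog_size


p_at_3 = precision_at_k(["a", "b", "c"], {"a", "c"}, k=3)
coverage = item_coverage([["a", "b"], ["b", "c"]], catalog_size=20)
```

In the toy example, three distinct items out of a catalog of 20 give a coverage of 0.15: a model can reach perfect precision while still drawing on only a small part of the catalog.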

8. System Deployment and Maintenance Strategy

- PStyle checker: Assesses the visual and structural coherence of presentation slides;

- Presentation checker: Identifies common issues in slides using classification models;

- Recommendation engine: Suggests relevant articles based on user behavior and context.

8.1. Deployment Infrastructure

8.2. Real-Time Inference Pipeline

8.3. CI/CD and Monitoring

8.4. Periodic Retraining via Replay-Based Learning

- Data preparation and model training: The model is retrained on a combined dataset of new and sampled old data for a small number of epochs. EarlyStopping and ModelCheckpoint callbacks are used to avoid overfitting;

- Validation: The updated model is validated on both a static test set to ensure historical performance is preserved and a new test set to assess performance on recent data;

- Deployment: If the validation criteria are met, the model is versioned and deployed through the CI/CD pipeline.
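The data preparation step can be sketched as mixing all new interactions with a random sample of older ones. The function name, the replay fraction of 0.3, and the uniform sampling of old data are assumptions; the text states only that new data are combined with sampled old data.

```python
import random


def build_replay_dataset(new_data, old_data, replay_fraction=0.3, seed=0):
    """Combine all new samples with a random replay sample of old ones."""
    rng = random.Random(seed)
    # Replay size is proportional to the new data, capped by what is available.
    n_replay = min(len(old_data), int(replay_fraction * len(new_data)))
    combined = list(new_data) + rng.sample(list(old_data), n_replay)
    rng.shuffle(combined)  # avoid presenting all new samples first
    return combined


new_interactions = list(range(100))         # stand-ins for recent records
old_interactions = list(range(1000, 2000))  # stand-ins for historical records
train_set = build_replay_dataset(new_interactions, old_interactions)
```

Tying the replay size to the amount of new data keeps each retraining run short while still exposing the model to historical examples.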

- Maintains historical knowledge while adapting to new trends;

- Efficiently uses computational resources;

- Reduces risk of catastrophic forgetting;

- Enables targeted improvements in underperforming areas.

9. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Neuxpower: Slidewise PowerPoint Add-In. Available online: https://neuxpower.com/slidewise-powerpoint-add-in (accessed on 27 May 2025).

- PitchVantage. Available online: https://pitchvantage.com (accessed on 27 May 2025).

- Ricci, F.; Rokach, L.; Shapira, B. Recommender systems: Techniques, applications, and challenges. In Recommender Systems Handbook, 3rd ed.; Ricci, F., Rokach, L., Shapira, B., Eds.; Springer: New York, NY, USA, 2022; pp. 1–35.

- Raza, S.; Rahman, M.; Kamawal, S.; Toroghi, A.; Raval, A.; Navah, F.; Kazemeini, A. A comprehensive review of recommender systems: Transitioning from theory to practice. arXiv 2024, arXiv:2407.13699.

- Fayyaz, Z.; Ebrahimian, M.; Nawara, D.; Ibrahim, A.; Kashef, R. Recommendation systems: Algorithms, challenges, metrics, and business opportunities. Appl. Sci. 2020, 10, 7748.

- Saifudin, I.; Widiyaningtyas, T. Systematic literature review on recommender system: Approach, problem, evaluation techniques, datasets. IEEE Access 2024, 12, 19827–19847.

- Alfaifi, Y.H. Recommender systems applications: Data sources, features, and challenges. Information 2024, 15, 660.

- Jeong, S.-Y.; Kim, Y.-K. State-of-the-art survey on deep learning-based recommender systems for e-learning. Appl. Sci. 2022, 12, 11996.

- Zhang, Y.; Chen, X. Explainable recommendation: A survey and new perspectives. arXiv 2020, arXiv:1804.11192.

- Ko, H.; Lee, S.; Park, Y.; Choi, A. A Survey of recommendation systems: Recommendation models, techniques, and application fields. Electronics 2022, 11, 141.

- Aljunid, M.; Manjaiah, D.; Hooshmand, M.; Ali, W.; Shetty, A.; Alzoubah, S. A collaborative filtering recommender systems: Survey. Neurocomputing 2025, 617, 128718.

- Kim, T.-Y.; Ko, H.; Kim, S.-H.; Kim, H.-D. Modeling of recommendation system based on emotional information and collaborative filtering. Sensors 2021, 21, 1997.

- Beheshti, A.; Yakhchi, S.; Mousaeirad, S.; Ghafari, S.M.; Goluguri, S.R.; Edrisi, M.A. Towards cognitive recommender systems. Algorithms 2020, 13, 176.

- Chicaiza, J.; Valdiviezo-Diaz, P. A comprehensive survey of knowledge graph-based recommender systems: Technologies, development, and contributions. Information 2021, 12, 232.

- Troussas, C.; Krouska, A.; Tselenti, P.; Kardaras, D.K.; Barbounaki, S. Enhancing Personalized educational content recommendation through cosine similarity-based knowledge graphs and contextual signals. Information 2023, 14, 505.

- Uta, M.; Felfernig, A.; Le, V.; Tran, T.; Garber, D.; Lubos, S.; Burgstaller, T. Knowledge-based recommender systems: Overview and research directions. Front. Big Data 2024, 7, 1304439.

- Chaudhari, A.; Hitham Seddig, A.; Sarlan, A.; Raut, R. A hybrid recommendation system: A review. IEEE Access 2024, 12, 157107–157126.

- Mouhiha, M.; Oualhaj, O.; Mabrouk, A. Combining collaborative filtering and content based filtering for recommendation systems. In Proceedings of the 2024 11th International Conference on Wireless Networks and Mobile Communications (WINCOM), Leeds, UK, 23–25 July 2024.

- Singh, K.; Dhawan, S.; Bali, N. An Ensemble learning hybrid recommendation system using content-based, collaborative filtering, supervised learning and boosting algorithms. Autom. Control. Comput. Sci. 2024, 58, 491–505.

- Shahbazi, Z.; Byun, Y.C. Toward social media content recommendation integrated with data science and machine learning approach for e-learners. Symmetry 2020, 12, 1798.

- Al-Nafjan, A.; Alrashoudi, N.; Alrasheed, H. Recommendation System algorithms on location-based social networks: Comparative study. Information 2022, 13, 188.

- Bakhshizadeh, M. Supporting Knowledge workers through personal information assistance with context-aware recommender systems. In Proceedings of the 18th ACM Conference on Recommender Systems (RecSys’24), Bari, Italy, 14–18 October 2024; pp. 1296–1301.

- Afzal, I.; Yilmazel, B.; Kaleli, C. An Approach for multi-context-aware multi-criteria recommender systems based on deep learning. IEEE Access 2024, 12, 99936–99948.

- Shrivastava, R.; Sisodia, D.; Nagwani, N. Utility optimization-based multi-stakeholder personalized recommendation system. Data Technol. Appl. 2022, 56, 782–805.

- Tansuchat, R.; Kosheleva, O. How to make recommendation systems fair: An adequate utility-based approach. Asian J. Econ. Bank. 2022, 6, 308–313.

- Gheewala, S.; Xu, S.; Yeom, S. In-depth survey: Deep learning in recommender systems—exploring prediction and ranking models, datasets, feature analysis, and emerging trends. Neural Comput. Appl. 2025, 37, 10875–10947.

- Devika, P.; Milton, A. Book recommendation using sentiment analysis and ensembling hybrid deep learning models. Knowl. Inf. Syst. 2025, 67, 1131–1168.

- Tran, H.; Chen, T.; Hung, N.; Huang, Z.; Cui, L.; Yin, H. A thorough performance benchmarking on lightweight embedding-based recommender systems. ACM Trans. Inf. Syst. 2025, 43, 1–32.

- Gomez-Uribe, C.A.; Hunt, N. The Netflix recommender system: Algorithms, business value, and innovation. ACM Trans. Manag. Inf. Syst. 2015, 6, 1–19.

- Steck, H.; Baltrunas, L.; Elahi, E.; Liang, D.; Raimond, Y.; Basilico, J. Deep learning for recommender systems: A Netflix case study. AI Magazine 2021, 42, 7–18.

- Nagrecha, K.; Liu, L.; Delgado, P.; Padmanabhan, P. InTune: Reinforcement learning-based data pipeline optimization for deep recommendation models. In Proceedings of the 17th ACM Conference on Recommender Systems (RecSys’23), Singapore, 18–22 September 2023; pp. 430–442.

- Barwal, D.; Joshi, S.; Obaid, A.J.; Abdulbaqi, A.S.; Al-Barzinji, S.M.; Alkhayyat, A.; Hachem, S.K.; Muthmainnah. The impact of netflix recommendation engine on customer experience. AIP Conf. Proc. 2023, 2736, 060005.

- Smith, B.; Linden, G. Two decades of recommender systems at Amazon.com. IEEE Internet Comput. 2017, 21, 12–18.

- Hardesty, L. The history of Amazon’s recommendation algorithm. Amaz. Sci. 2019. Available online: https://www.amazon.science/the-history-of-amazons-recommendation-algorithm (accessed on 18 June 2025).

- Zhao, J.; Wang, L.; Xiang, D.; Johanson, B. Collaborative denoising auto-encoders for top-N recommender systems. In Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR’19), Paris, France, 21–25 July 2019.

- Zhao, Z.; Fan, W.; Li, J.; Liu, Y.; Mei, X.; Wang, Y. Recommender systems in the era of large language models (LLMs). IEEE Trans. Knowl. Data Eng. 2024, 36, 6889–6907.

- Lin, J.; Dai, X.; Xi, Y.; Liu, W.; Chen, B.; Zhang, H.; Liu, Y.; Wu, C.; Li, X.; Zhu, C.; et al. How can recommender systems benefit from large language models: A survey. ACM Trans. Inf. Syst. 2025, 43, 1–47.

- Pellegrini, R.; Zhao, W.; Murray, I. Don’t recommend the obvious: Estimate probability ratios. In Proceedings of the 16th ACM Conference on Recommender Systems (RecSys’22), Seattle, WA, USA, 18–23 September 2022; pp. 188–197.

- Zhang, Y.; Ding, H.; Shui, Z.; Ma, Y.; Zou, J.; Deoras, A.; Wang, H. Language models as recommender systems: Evaluations and limitations. In Proceedings of the NeurIPS 2021 Workshop on I (Still) Can’t Believe It’s Not Better, Virtual, 13 December 2021.

- Yu, T.; Ma, Y.; Deoras, A. Achieving diversity and relevancy in zero-shot recommender systems for human evaluations. In Proceedings of the NeurIPS 2022 Workshop on Human in the Loop Learning, New Orleans, LA, USA, 2 December 2022.

- Lessa, L.F.; Brandao, W.C. Filtering Graduate Courses based on LinkedIn Profiles. In Proceedings of the WebMedia 2018, Salvador, Brazil, 16–19 October 2018.

- Urdaneta-Ponte, M.C.; Oleagordia-Ruíz, I.; Méndez-Zorrilla, A. Using linkedin endorsements to reinforce an ontology and machine learning-based recommender system to improve professional skills. Electronics 2022, 11, 1190.

- He, X.; Liao, L.; Zhang, H.; Nie, L.; Hu, X.; Chua, T.S. Neural collaborative filtering. In Proceedings of the 26th International Conference on World Wide Web, Perth, Australia, 3–7 April 2017; pp. 173–182.

- Zhang, X.; Zhou, Y.; Ma, Y.; Chen, B.C.; Zhang, L.; Agarwal, D. GLMix: Generalized linear mixed models for large-scale response prediction. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 363–372.

- Davidson, J.; Liebald, B.; Liu, J.; Nandy, P.; Van Vleet, T.; Gargi, U.; Gupta, S.; He, Y.; Lambert, M.; Livingston, B.; et al. The YouTube video recommendation system. In Proceedings of the 4th ACM Conference on Recommender Systems (RecSys’10), Barcelona, Spain, 26–30 September 2010; pp. 293–296.

- Ma, J.; Zhao, Z.; Yi, X.; Chen, J.; Hong, L.; Chi, E.H. Modeling task relationships in multi-task learning with multi-gate mixture-of-experts. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 1930–1939.

- Covington, P.; Adams, J.; Sargin, E. Deep neural networks for YouTube recommendations. In Proceedings of the 10th ACM Conference on Recommender Systems (RecSys’16), Boston, MA, USA, 15–19 September 2016; pp. 191–198.

- Vlahova, M.; Lazarova, M. Collecting a custom database for image classification in recommender systems. In Proceedings of the 10th International Scientific Conference on Computer Science (COMSCI), Sofia, Bulgaria, 30 May–2 June 2022.

- Green, E. The basics of slide design. In Healthy Presentations; Springer: Cham, Switzerland, 2021; pp. 37–62.

- Duarte, N. Slide:ology—The Art and Science of Creating Great Presentations; O’Reilly: Sebastopol, CA, USA, 2008.

- Gallo, C. Talk Like TED: The 9 Public Speaking Secrets of the World’s Top Minds; St. Martin’s Press: New York, NY, USA, 2014.

- Jambor, H.; Bornhäuser, M. Ten simple rules for designing graphical abstracts. PLoS Comput. Biol. 2024, 20, e1011789.

- Vlahova-Takova, M.; Lazarova, M. Dual-branch convolutional neural network for image comparison in presentation style coherence. Eng. Technol. Appl. Sci. Res. 2025, 15, 21719–21727.

- Vlahova-Takova, M.; Lazarova, M. CNN based multi-label image classification for presentation recommender system. Int. J. Inf. Technol. Secur. 2024, 16, 73–84.

- Bernardini, L.; Bono, F.M.; Collina, A. Drive-by damage detection based on the use of CWT and sparse autoencoder applied to steel truss railway bridge. Adv. Mech. Eng. 2025, 17.

- Qian, F.; Cui, Y.; Xu, M.; Chen, H.; Chen, W.; Xu, Q.; Wu, C.; Yan, Y.; Zhao, S. IFM: Integrating and fine-tuning adversarial examples of recommendation system under multiple models to enhance their transferability. Knowl.-Based Syst. 2025, 11, 113111.

- Tiep, N.; Jeong, H.-Y.; Kim, K.-D.; Xuan Mung, N.; Dao, N.-N.; Tran, H.-N.; Hoang, V.-K.; Ngoc Anh, N.; Vu, M. A New Hyperparameter Tuning Framework for Regression Tasks in Deep Neural Network: Combined-Sampling Algorithm to Search the Optimized Hyperparameters. Mathematics 2024, 12, 3892.

- Moscati, M.; Deldjoo, Y.; Carparelli, G.; Schedl, M. Multiobjective hyperparameter optimization of recommender systems. In Proceedings of the 3rd Workshop Perspectives on the Evaluation of Recommender Systems (PERSPECTIVES 2023), Singapore, 19 September 2023.

- Panteli, A.; Boutsinas, B. Addressing the cold-start problem in recommender systems based on frequent patterns. Algorithms 2023, 16, 182.

- Jeong, S.-Y.; Kim, Y.-K. Deep Learning-Based Context-Aware Recommender System Considering Contextual Features. Appl. Sci. 2021, 12, 45.

- Lv, X.; Fang, K.; Liu, T. Content-aware few-shot meta-learning for cold-start recommendations using cross-modal attention. Sensors 2024, 24, 5510.

- Luo, Y.; Jiang, Y.; Jiang, Y.; Chen, G.; Wang, J.; Bian, K.; Li, P.; Zhang, Q. Online item cold-start recommendation with popularity-aware meta-learning. In Proceedings of the 31st ACM SIGKDD Conference on Knowledge Discovery and Data Mining, V.1 (KDD’25), Toronto, Canada, 3–7 August 2025; Association for Computing Machinery: New York, NY, USA; pp. 927–937.

- Ding, S.; Feng, F.; He, X.; Liao, Y.; Shi, J.; Zhang, Y. Causal incremental graph convolution for recommender system retraining. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 4718–4728.

- Zhang, S.; Yao, L.; Sun, A.; Tay, Y. Deep learning-based recommender system: A survey and new perspectives. ACM Comput. Surv. 2020, 52, 1–38.

- Mnih, V.; Badia, A.P.; Mirza, M.; Graves, A.; Lillicrap, T.; Harley, T.; Silver, D.; Kavukcuoglu, K. Asynchronous methods for deep reinforcement learning. In Proceedings of the 33rd International Conference on Machine Learning, Proceedings of Machine Learning Research 2016, New York City, NY, USA, 19–24 June 2016; Volume 48, pp. 1928–1937.

- TensorFlow Serving. Available online: https://github.com/tensorflow/serving (accessed on 18 June 2025).

- Parmar, T. Implementing CI/CD in Data Engineering: Streamlining Data Pipelines for Reliable and Scalable Solutions. Int. J. Innov. Res. Eng. Multidiscip. Phys. Sci. 2025, 13. [Google Scholar] [CrossRef]

- Rensing, F.; Lwakatare, L.E.; Nurminen, J.K. Exploring the application of replay-based continuous learning in a machine learning pipeline. In Proceedings of the Workshops of the EDBT/ICDT 2025 Joint Conference, Barcelona, Spain, 25–28 March 2025; Boehm, M., Daudjee, K., Eds.; [Google Scholar]

| Tool | Features | Limitations | Competitor |
|---|---|---|---|
| “Slidewise” [1] | Identifies and corrects font mismatches, image sizes, and layout discrepancies | No educational feedback; free version offers only a few essential features | Yes |
| “PitchVantage” [2] | Presentation delivery coaching with AI | Does not support PPT slides | Not a competitor but an integration candidate |

| Challenge | Brief Description |
|---|---|
| Boring presentation | Slides fail to engage the audience; lacking energy or visual interest |
| Graphics | Graphics are unclear, overly complex, or not suitable for the content |
| Readability | Text is hard to read due to the font choice, color contrast, or background |
| Consistency | Inconsistent styles across slides—the fonts, colors, or layouts vary too much |
| Images | Poor-quality, irrelevant, or excessive images |
| Bullets | Overuse of bullet points, making slides feel monotonous and cluttered |
| Text size | Text is either too small or too large, disrupting visual hierarchy and clarity |
| Text-heavy | Slides contain too much text, making them hard to scan or follow |
| Tables | Tables are overly detailed, dense, or hard to interpret quickly |
| Agenda | Lacks clear structure or navigation—audience may feel lost during the presentation |
| Infographics | Infographics are confusing, overly complex, or do not effectively support the message |
| Positioning | Elements on slides are misaligned or poorly arranged, affecting readability and flow |

| Presentation Type | Brief Description |
|---|---|
| Formal | Structured and professional; often used in corporate or official settings |
| Creative | Visually engaging and innovative; uses storytelling, design, and non-linear flow |
| Technical | Focused on detailed data, processes, or research; often for expert or specialized audiences |
| Business | Aimed at decision making, pitching, or reporting in a business context |
| Educational | Designed to teach or explain a topic; commonly used in classrooms or workshops |
| Persuasive | Intended to convince or influence the audience to move towards a viewpoint or action |

| Audience Type | Brief Description |
|---|---|
| Academic | Students, researchers, or educators in educational institutions |
| Business | Professionals in corporate environments; decision makers, managers, or teams |
| Technical | Engineers, developers, analysts, or any audience with specialized technical expertise |
| Kids | Young children or school-age students; requires simple, engaging, and visual content |
| General | Broad public audience without specific background knowledge; mixed skill levels |
| Executive | Senior leaders or C-level stakeholders; values clarity, high-level summaries, and impact |
| Investor | Individuals or groups evaluating opportunities, such as startups or funding pitches |
| Government | Policy makers, civil servants, or public sector professionals |
| Workshop/training | Participants in hands-on or skill-building sessions |
| Conference audience | Mixed-level audience at events; may include peers, experts, or media |

| Layer Name | Type | Output Shape | Parameters | Connected To |
|---|---|---|---|---|
| user_feat_input | InputLayer | (None, 30) | 0 | - |
| item_feat_input | InputLayer | (None, 29) | 0 | - |
| problem_seq_input | InputLayer | (None, 12) | 0 | - |
| time_input | InputLayer | (None, 5) | 0 | - |
| dense_user | Dense | (None, 128) | 3968 | user_feat_input |
| dense_item | Dense | (None, 256) | 7680 | item_feat_input |
| dense_problem_seq | Dense | (None, 32) | 416 | problem_seq_input |
| dense_time | Dense | (None, 16) | 96 | time_input |
| bn_user | BatchNormalization | (None, 128) | 512 | dense_user |
| bn_item | BatchNormalization | (None, 256) | 1024 | dense_item |
| bn_time | BatchNormalization | (None, 16) | 64 | dense_time |
| bn_problem_seq | BatchNormalization | (None, 32) | 128 | dense_problem_seq |
| dropout_user | Dropout | (None, 128) | 0 | bn_user |
| dropout_item | Dropout | (None, 256) | 0 | bn_item |
| dropout_time | Dropout | (None, 16) | 0 | bn_time |
| dropout_problem_seq | Dropout | (None, 32) | 0 | bn_problem_seq |
| dense_user_embed | Dense | (None, 16) | 2064 | dropout_user |
| dense_item_embed | Dense | (None, 16) | 4112 | dropout_item |
| dense_problem_seq_proj | Dense | (None, 32) | 1056 | dropout_problem_seq |
| concat_embedding | Concatenate | (None, 80) | 0 | dense_user_embed, dense_item_embed, dropout_time, dense_problem_seq_proj |
| dense_combined | Dense | (None, 128) | 10,368 | concat_embedding |
| bn_combined | BatchNormalization | (None, 128) | 512 | dense_combined |
| dropout_combined | Dropout | (None, 128) | 0 | bn_combined |
| dense_hidden | Dense | (None, 32) | 4128 | dropout_combined |
| output | Dense | (None, 1) | 33 | dense_hidden |
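
The parameter counts in the layer table can be cross-checked with the usual formulas for these layer types: a fully connected layer holds one weight per input-output pair plus one bias per output, and a batch-normalization layer (as counted by Keras) holds four values per unit. The plain-Python sketch below recomputes each row; the helper names are illustrative and not taken from the authors' code, and the total is derived here from the table rather than quoted from the paper.

```python
# Parameter-count check for the layer table (plain Python, no framework needed).
# Helper names are hypothetical; only the layer names and sizes come from the table.

def dense_params(n_in: int, n_out: int) -> int:
    """Weights (n_in * n_out) plus one bias per output unit."""
    return n_in * n_out + n_out

def batchnorm_params(units: int) -> int:
    """Gamma, beta, moving mean and moving variance: four values per unit."""
    return 4 * units

# Width of concat_embedding: user embed + item embed + time branch + problem-seq projection.
concat_width = 16 + 16 + 16 + 32

# (layer name, recomputed count, count reported in the table)
layers = [
    ("dense_user", dense_params(30, 128), 3968),
    ("dense_item", dense_params(29, 256), 7680),
    ("dense_problem_seq", dense_params(12, 32), 416),
    ("dense_time", dense_params(5, 16), 96),
    ("bn_user", batchnorm_params(128), 512),
    ("bn_item", batchnorm_params(256), 1024),
    ("bn_time", batchnorm_params(16), 64),
    ("bn_problem_seq", batchnorm_params(32), 128),
    ("dense_user_embed", dense_params(128, 16), 2064),
    ("dense_item_embed", dense_params(256, 16), 4112),
    ("dense_problem_seq_proj", dense_params(32, 32), 1056),
    ("dense_combined", dense_params(concat_width, 128), 10368),
    ("bn_combined", batchnorm_params(128), 512),
    ("dense_hidden", dense_params(128, 32), 4128),
    ("output", dense_params(32, 1), 33),
]

mismatches = [name for name, computed, reported in layers if computed != reported]
total_params = sum(computed for _, computed, _ in layers)

print("concat width:", concat_width)
print("rows that disagree with the table:", mismatches)
print("total parameters:", total_params)
```

Every recomputed row agrees with the table, the concatenated width comes out at 80, and the rows sum to 36,161 parameters (input, dropout, and concatenation layers contribute none).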

| Model | MAE | MSE | RMSE | Timing |
|---|---|---|---|---|
| CBF+CF | 1.13 | 1.96 | 1.40 | 0.29 s |
| Autoencoders | 3.05 | 10.46 | 3.23 | ~1 h |
| RL (DQN) | 3.22 | 12.22 | 3.49 | ~15 min |
| Hybrid model | 0.11 | 0.033 | 0.18 | ~25 min |
| Hybrid model + custom pre-trained embeddings | 0.49 | 0.36 | 0.60 | ~1 h |
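
For readers who want to reproduce this kind of comparison, the three error metrics can be computed as in the minimal sketch below. The toy ratings are invented for illustration and are not the paper's data; the second part only checks that each reported RMSE is consistent with the square root of the reported MSE, within an assumed rounding tolerance of 0.01.

```python
import math

def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean squared error, i.e. the square root of the MSE."""
    return math.sqrt(mse(y_true, y_pred))

# Toy ratings (hypothetical values, for illustration only).
y_true = [4.5, 5.0, 3.0, 4.0]
y_pred = [4.0, 4.5, 3.5, 4.0]
print(mae(y_true, y_pred), mse(y_true, y_pred), rmse(y_true, y_pred))

# (MSE, RMSE) pairs as reported in the comparison table.
reported = {
    "CBF+CF": (1.96, 1.40),
    "Autoencoders": (10.46, 3.23),
    "RL (DQN)": (12.22, 3.49),
    "Hybrid model": (0.033, 0.18),
    "Hybrid model + custom pre-trained embeddings": (0.36, 0.60),
}

# Rows whose RMSE differs from sqrt(MSE) by more than the rounding tolerance.
inconsistent = [
    model for model, (m, r) in reported.items() if abs(math.sqrt(m) - r) > 0.01
]
print("inconsistent rows:", inconsistent)
```

With the tolerance above, no row is flagged, so the MSE and RMSE columns agree with each other up to rounding.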

| Rank | Article ID | True Rating | Predicted Score | Days Since User Rated |
|---|---|---|---|---|
| 1 | 9 | 4.5 | 4.77493 | 3413.88 |
| 2 | 8 | 5.0 | 4.717092 | 3587.75 |
| 3 | 6 | 4.5 | 4.642761 | 1771.29 |
| 4 | 35 | 4.5 | 4.627602 | 2197.96 |
| 5 | 17 | 4.5 | 4.605495 | 3002.75 |
| 6 | 3 | 4.5 | 4.59516 | 7124.75 |
| 7 | 41 | 4.5 | 4.577928 | 560.04 |
| 8 | 26 | 4.5 | 4.568518 | 7069.38 |
| 9 | 46 | 4.5 | 4.562939 | 6663.63 |
| 10 | 25 | 5.0 | 4.555358 | 7126.96 |

| Rank | Article ID | True Rating | Predicted Score | Days Since User Rated | Utility Score |
|---|---|---|---|---|---|
| 1 | 9 | 4.5 | 4.77493 | 3413.88 | 5.48542 |
| 2 | 8 | 5.0 | 4.717092 | 3587.75 | 5.407775 |
| 3 | 6 | 4.5 | 4.642761 | 1771.29 | 5.174015 |
| 4 | 17 | 4.5 | 4.605495 | 3002.75 | 5.149448 |
| 5 | 25 | 5.0 | 4.555358 | 7126.96 | 5.100156 |
| 6 | 35 | 4.5 | 4.627602 | 2197.96 | 5.088142 |
| 7 | 31 | 4.5 | 4.423069 | 5007.63 | 5.083353 |
| 8 | 3 | 4.5 | 4.59516 | 7124.75 | 5.005856 |
| 9 | 26 | 4.5 | 4.568518 | 7069.38 | 4.926949 |
| 10 | 63 | 4.5 | 4.27723 | 3257.38 | 4.887227 |
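
The effect of the re-ranking can be summarized by comparing the two top-10 lists directly. The sketch below uses only the article IDs from the two ranking tables (ordered by predicted score and by utility score) and reports which articles stay, which drop out, which enter, and how far each retained article moves; the variable names are illustrative.

```python
# Top-10 article IDs, in rank order, copied from the two ranking tables.
by_predicted = [9, 8, 6, 35, 17, 3, 41, 26, 46, 25]
by_utility = [9, 8, 6, 17, 25, 35, 31, 3, 26, 63]

kept = [a for a in by_predicted if a in by_utility]
dropped = [a for a in by_predicted if a not in by_utility]
added = [a for a in by_utility if a not in by_predicted]

# Positive shift means the article moved up after utility-based re-ranking.
rank_shift = {a: by_predicted.index(a) - by_utility.index(a) for a in kept}

print("kept:", kept)
print("dropped:", dropped)
print("added:", added)
print("rank shift:", rank_shift)
```

Eight of the ten articles appear in both lists, articles 41 and 46 leave the top 10, articles 31 and 63 enter it, and the largest single move is article 25, which rises five places.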

| Before (Ranked by Predicted Rating) | After (Ranked by Utility Score) |
|---|---|
| The top recommendations are ranked only by model prediction score | Recommendations are ranked by utility score, balancing a blend of predicted score and time-aware utility |
| Items with high rating but old interactions (e.g., articles 25, 26, and 3) are placed at the top | Newer articles (e.g., articles 6, 8, and 9) are boosted, given priority due to recency |
| Older articles with high rating but low levels of recent user interaction are over-recommended | Time decay effect: older but still relevant articles are considered but pushed lower |
| No time sensitivity; stale but high-scoring items remain on top | Recency of interactions reorders results for better engagement |
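
To make the idea of blending a predicted score with a time-aware term concrete, the sketch below shows one generic way to do it. It is an assumption for illustration only: the additive form, the half-life decay, the `weight` and `half_life_days` parameters, and the two toy items are all hypothetical, and the code does not reproduce the utility function or the utility scores reported in this paper.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    item_id: str
    predicted: float   # model-predicted rating
    days_since: float  # days since the user last interacted with the item

def recency(days_since: float, half_life_days: float) -> float:
    """Exponential decay in (0, 1]: halves every `half_life_days` (assumed form)."""
    return 0.5 ** (days_since / half_life_days)

def utility(c: Candidate, weight: float = 0.5, half_life_days: float = 365.0) -> float:
    """Hypothetical blend: predicted score plus a weighted recency bonus."""
    return c.predicted + weight * recency(c.days_since, half_life_days)

# Toy candidates (invented): A scores higher but is stale, B is slightly lower but recent.
candidates = [
    Candidate("A", predicted=4.8, days_since=3000.0),
    Candidate("B", predicted=4.6, days_since=30.0),
]

ranked_by_prediction = [c.item_id for c in sorted(candidates, key=lambda c: c.predicted, reverse=True)]
ranked_by_utility = [c.item_id for c in sorted(candidates, key=utility, reverse=True)]

print("by prediction:", ranked_by_prediction)
print("by utility:", ranked_by_utility)
```

Under these assumed settings the recent item B overtakes the stale item A once the recency bonus is added, which is the qualitative behavior the comparison table describes; a different weight or decay rate would shift where that crossover happens.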

| Metric | Value | Metric | Value |
|---|---|---|---|
| Loss | 0.2122 | Serendipity | 0.42 |
| Precision | 1 | Diversity | 0.49 |
| Novelty | 0.45 | Item Coverage | 0.15 |
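
Two of the metrics in this table have widely used definitions that are easy to state in code: precision at k (the share of the top-k recommendations that are relevant) and item coverage (the share of the catalog that appears in at least one recommendation list). The sketch below assumes these common definitions, which may differ in detail from the ones used in the evaluation, and runs on invented toy data; novelty, serendipity, and diversity are left out because their definitions vary more across the literature.

```python
def precision_at_k(recommended, relevant, k):
    """Fraction of the top-k recommended items that are in the relevant set."""
    top_k = recommended[:k]
    return sum(1 for item in top_k if item in relevant) / k

def item_coverage(recommendation_lists, catalog_size):
    """Fraction of catalog items that appear in at least one recommendation list."""
    distinct_items = set().union(*recommendation_lists)
    return len(distinct_items) / catalog_size

# Toy data (hypothetical): two users, a catalog of 20 articles.
recommendations = {"u1": [1, 2, 3], "u2": [2, 3, 4]}
relevant_items = {"u1": {1, 2, 3}, "u2": {2, 3, 4, 9}}
catalog_size = 20

precisions = {
    user: precision_at_k(recs, relevant_items[user], k=3)
    for user, recs in recommendations.items()
}
coverage = item_coverage(recommendations.values(), catalog_size)

print("precision@3 per user:", precisions)
print("item coverage:", coverage)
```

In this toy setup every recommended item is relevant, so precision is 1.0 for both users, while only 4 of 20 catalog items are ever recommended, giving a coverage of 0.2. High precision combined with low coverage is the same pattern the table shows.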

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Vlahova-Takova, M.; Lazarova, M. A Recommender System Model for Presentation Advisor Application Based on Multi-Tower Neural Network and Utility-Based Scoring. Electronics 2025, 14, 2528. https://doi.org/10.3390/electronics14132528
