Abstract

Efficient and accurate license plate recognition (LPR) in unconstrained environments remains a critical challenge, particularly when confronted with skewed imaging angles and the limited computational capabilities of edge devices. In this study, we propose a high-performance, FPGA-based license plate alignment and recognition (LPAR) system to address these issues. Our LPAR system integrates lightweight deep learning models, including YOLOv4-tiny for license plate detection, a refined convolutional pose machine (CPM) for pose estimation and alignment, and a modified LPRNet for character recognition. By restructuring the pose estimation and alignment architectures to enhance the geometric correction of license plates and adding channel and spatial attention mechanisms to LPRNet for better character recognition, the proposed LPAR system improves recognition accuracy from 88.33% to 95.00%. The complete pipeline achieved a processing speed of 2.00 frames per second (FPS) on a resource-constrained FPGA platform, demonstrating its practical viability for real-time deployment in edge computing scenarios.

1. Introduction

License plate recognition (LPR) has become an essential component in modern intelligent transportation systems, enabling automated traffic surveillance, electronic toll collection, and smart parking management. Despite its widespread deployment, achieving reliable LPR performance in unconstrained environments remains challenging. Factors such as oblique viewing angles, varying illumination, motion blur, and partial occlusion frequently degrade the accuracy of both the detection and recognition stages. Moreover, the reliance of conventional LPR systems on cloud-based computers or high-performance industrial PCs introduces network latency and hardware overhead, limiting their applicability in real-time edge scenarios [1,2,3].

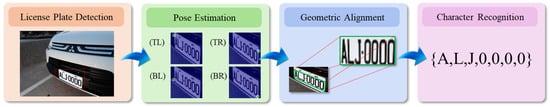

To overcome these limitations, this paper presents a high-efficiency LPR system implemented on a field-programmable gate array (FPGA) and designed specifically for edge computing environments. The proposed system integrates three key modules (license plate detection, pose estimation and geometric alignment, and character recognition) into a streamlined, low-latency, and cost-effective pipeline, as illustrated in Figure 1. Many lightweight models are suitable for use as license plate detectors [4,5,6]. YOLOv4-tiny [4] was selected as the front-end plate detector because of its favorable trade-off between speed and accuracy, which makes it well suited for resource-constrained hardware. However, the geometric integrity of the detected plates cannot be guaranteed, especially under perspective distortion, which necessitates a robust correction mechanism.

Figure 1.

LPR framework incorporating pose estimation and alignment.

To address the issue of geometric correction and edge-computing efficiency, we propose a license plate alignment and recognition (LPAR) system that integrates the functions of license plate detection, pose estimation, perspective transformation, and character recognition. The license plate pose estimation method is based on a refined version of the convolutional pose machine (CPM) [7] framework. This deep learning-based module directly predicts the four corner points of a license plate and uses these points for a perspective transformation to rectify the image to a canonical frontal view. For the final recognition stage, a modified attention-based LPRNet [8] is employed. The proposed LPAR system was optimized through restructuring, pruning, and quantization to ensure high accuracy and low computational load on FPGA hardware. The contributions of this work are fourfold:

- An efficient pose estimation method based on a refined and pruned CPM model is proposed for license plate alignment. This method enables robust corner-point prediction for perspective transformation, ensuring accurate geometric correction across a wide range of viewing angles.

- A lightweight character recognition module was constructed by modifying the LPRNet model and utilizing an attention-based mechanism to capture more spatial information and detailed features, thereby improving recognition accuracy.

- A fully integrated and FPGA-compatible LPAR pipeline was established, incorporating model restructuring, pruning, and quantization. The FPGA-based LPAR system achieved millisecond-level inference speed and watt-level power consumption, outperforming GPU-based implementations in terms of efficiency and suitability for edge deployment.

- An end-to-end LPAR system covering license plate detection, alignment, and recognition was developed. On skewed license plate datasets, the proposed LPAR system achieved a recognition accuracy of 95.00%, significantly outperforming the modified LPRNet applied without alignment (88.33%) and demonstrating its effectiveness in practical scenarios.

2. Related Work

2.1. License Plate Recognition Techniques

License plate recognition (LPR) is one of the earliest real-world applications of computer vision [1,2,3]. Earlier works on general LPR tasks consisted of character segmentation and classification stages [1], where the effectiveness of character recognition was highly dependent on the segmentation method used. With the rise of deep learning, both the speed and accuracy of LPR systems have seen significant improvements [3,9,10]. Li and Shen proposed solutions based on convolutional neural networks (CNNs) to tackle the character segmentation challenge [9]. The model described in [10] enhanced character recognition performance by converting the CNN output into an input sequence for recurrent neural networks (RNNs) [11]. More recently, researchers have integrated channel and spatial attention mechanisms into RNN models to capture more detailed features for character recognition [12,13]. Additionally, Ismail et al. [14] approached the LPR task as a sequence labeling problem, predicting character sequences by extending the vision transformer with a self-attention mechanism [15].

However, RNN models increase computational complexity and hardware overhead [11,12], limiting their applicability in real-time edge-computing devices. To address this, Zherzdev and Gruzdev proposed LPRNet [8], a lightweight CNN-based architecture that deliberately omits traditional RNNs. LPRNet significantly reduces computational complexity while supporting real-time inference. Several enhancements have been proposed to further improve recognition accuracy. For example, Wang et al. [16] combined character segmentation and recognition to increase the robustness of the system in complex environments. In this study, we added an attention-based mechanism to the RNN-free LPRNet model to improve recognition accuracy while keeping computational complexity low.

Furthermore, with the growing importance of edge computing, LPR models are increasingly being adapted for deployment on resource-constrained edge devices, reducing reliance on cloud-based infrastructure and enhancing inference speed [3,4]. This study continued in that direction by developing an FPGA-compatible LPR pipeline optimized for embedded environments.

2.2. License Plate Pose Estimation and Alignment

In unconstrained environments, skewed license plates pose significant challenges to recognition performance, and many researchers have addressed this problem [17,18,19]. Kim et al. [17] corrected the perspective distortion of license plate images by using a linear approximation to detect the quadrangle corresponding to the license plate area. However, this image processing method is unreliable because of environmental image noise and the difficulty of tuning the binarization parameters. Špaňhel et al. [18] demonstrated that geometric correction via perspective transformation can greatly improve recognition accuracy with simpler models. Their approach used a deep learning-based Hourglass Network [20] to estimate the four corner points of a license plate, followed by a perspective transformation to align it to a standard frontal view. Yoo and Jun [19] proposed a MobileNet-based deep neural network model consisting of two sequential parts: a convolutional part that extracts features of the four plate corner positions, and a regression part with fully connected layers that predicts the corner coordinates. However, this MobileNet-based network relies on a pre-trained model of approximately 16 MB [19], which limits its applicability in real-time edge scenarios.

In the field of human pose estimation, the convolutional pose machine (CPM) proposed by Wei et al. [7] has been widely used for keypoint detection and has inspired numerous improvements [21,22,23]. OpenPose [21], a well-known open-source multi-person keypoint estimator, is also built upon the CPM architecture. Motivated by this, our work adapted the CPM structure to predict the corner points of a license plate, enabling more accurate geometric correction through perspective transformation and improving robustness under multi-view scenarios. Recently, more sophisticated models for human pose estimation, such as HRNet [24] and LitePose [25], have been developed. We conducted experiments to compare these keypoint detection models, which are discussed in later sections.

2.3. Edge Computing and FPGA Deployment

Edge computing has become increasingly prevalent in scenarios such as traffic enforcement systems, smart vehicle navigation, and driver-assistance technologies. The core goal of edge computing is to offload computation from the cloud, reducing bandwidth usage and latency while improving real-time responsiveness.

This work leveraged the Xilinx FPGA platform [26], specifically the KV260 board and its deep learning processing unit (DPU) [27,28], for AI inference acceleration. To deploy models on this platform, floating-point models must be quantized and compiled into integer form for efficient FPGA execution. The Vitis-AI toolkit [29] provided by Xilinx supports this transformation. Because Vitis-AI is relatively new and still under rapid development, this study also discusses practical issues encountered during deployment and their solutions.

3. Methodology

This study introduces a real-time license plate alignment and recognition (LPAR) system specifically designed for FPGA-based edge computing platforms. The system consists of three primary modules:

- License Plate Detection: A lightweight YOLOv4-tiny model is employed to efficiently and accurately localize license plates in input images.

- Pose Estimation and Alignment: A deep learning-based model is utilized to detect the four corner points of the license plate, followed by perspective transformation to rectify the image.

- Character Recognition: The aligned license plate image is fed into a modified LPRNet for end-to-end character recognition.

To ensure efficient deployment, the entire pipeline was pruned and quantized using the Xilinx Vitis-AI toolkit [29], enabling high-speed, low-power inference on FPGA platforms.

3.1. License Plate Detection

The YOLOv4-tiny [4] model was selected for license plate detection due to its lightweight architecture and fast inference speed, making it well suited for resource-constrained edge environments. The detection process begins with capturing images from a vehicle-facing camera. The YOLOv4-tiny [4] model then identifies the bounding box of the license plate. To ensure complete character coverage, the detected bounding box is enlarged by 150% before passing the cropped image to the next module, as shown in Figure 2.

Figure 2.

(a) License plate region detected by YOLOv4-tiny; (b) enlarged bounding box to ensure full character coverage.
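A minimal sketch of the box-enlargement step follows. It assumes that "enlarged by 150%" means scaling the box width and height by a factor of 1.5 about the box center, and that the enlarged box is clipped to the image borders; the function name `enlarge_box` and the example coordinates are hypothetical.

```python
def enlarge_box(x1, y1, x2, y2, img_w, img_h, scale=1.5):
    """Scale a detection box about its center by `scale`, then clip to the image."""
    cx, cy = (x1 + x2) / 2.0, (y1 + y2) / 2.0
    half_w = (x2 - x1) * scale / 2.0
    half_h = (y2 - y1) * scale / 2.0
    # Clip so that the enlarged crop never extends beyond the frame.
    nx1 = max(0, int(round(cx - half_w)))
    ny1 = max(0, int(round(cy - half_h)))
    nx2 = min(img_w, int(round(cx + half_w)))
    ny2 = min(img_h, int(round(cy + half_h)))
    return nx1, ny1, nx2, ny2


# Usage: a 100 x 40 plate box inside a 640 x 480 frame.
print(enlarge_box(200, 300, 300, 340, 640, 480))  # (175, 290, 325, 350)
```

With a NumPy image `frame`, the crop passed to the next module would then be `frame[ny1:ny2, nx1:nx2]`. Clipping matters for plates near the frame edge, where an unclipped enlargement would index outside the image.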

3.2. Pose Estimation and Alignment

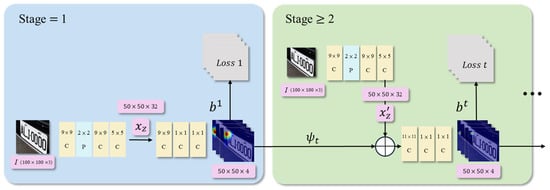

In real-world applications, oblique viewing angles of images often degrade recognition accuracy. To address this issue, we designed a specialized deep learning model for license plate pose estimation, based on a refined convolutional pose machine (CPM) architecture [7]. The model adopts a multi-stage convolutional framework that iteratively refines the prediction of the license plate’s four corner points, as shown in Figure 3. Each stage receives both the image features and the belief maps generated by the previous stage, allowing for progressive refinement of the corner point predictions. The input images are uniformly resized to 100 × 100 pixels to balance computational cost and precision.

Figure 3.

Initial architecture of the pose estimation model.

Let $x$ denote the input image and $p \in \{1, \dots, P\}$ a target corner point, with $P = 4$ for a license plate. The belief map $b_t^p(z)$ at stage $t$, where $z$ is a pixel location, represents the likelihood that $z$ corresponds to point $p$. The first-stage belief map is computed as follows:

$$b_1^p(z) = g_1(x_z),$$

where $x_z$ denotes the feature extracted from the image at pixel $z$. The function $g_1(\cdot)$ is a deep convolutional estimator that predicts the first-stage belief map from this feature. For $t > 1$, each subsequent stage integrates contextual information from the previous belief maps:

$$b_t^p(z) = g_t\left(x'_z, \psi_t(z, \mathbf{b}_{t-1})\right),$$

where $x'_z$ represents the re-extracted feature at $z$, and $\psi_t(\cdot)$ extracts contextual cues from the previous-stage belief maps $\mathbf{b}_{t-1} = \{b_{t-1}^1, \dots, b_{t-1}^P\}$. The use of a multi-stage structure and a large receptive field enables the pose estimation model to capture spatial dependencies and generalize well across different viewing angles.

To improve training stability and reduce vanishing gradients, a multi-stage supervision mechanism was adopted. At each stage $t$, the predicted belief maps are compared against ground-truth Gaussian heatmaps using the mean squared error (MSE):

$$f_t = \frac{1}{P\,|\mathcal{Z}|} \sum_{p=1}^{P} \sum_{z \in \mathcal{Z}} \left( b_t^p(z) - b_*^p(z) \right)^2,$$

where $\mathcal{Z}$ is the set of pixel locations in the belief map. The ground-truth belief map is defined as:

$$b_*^p(z) = \exp\left( -\frac{\lVert z - z_p \rVert_2^2}{2\sigma^2} \right).$$

Here, $z_p$ represents the true position of point $p$, $z$ is the coordinate on the belief map, and $\sigma$ controls the spread of the Gaussian heatmap, enabling broader focus during early stages and more precise refinement in later ones. The total loss across all $T$ stages is given by

$$\mathcal{F} = \sum_{t=1}^{T} f_t.$$

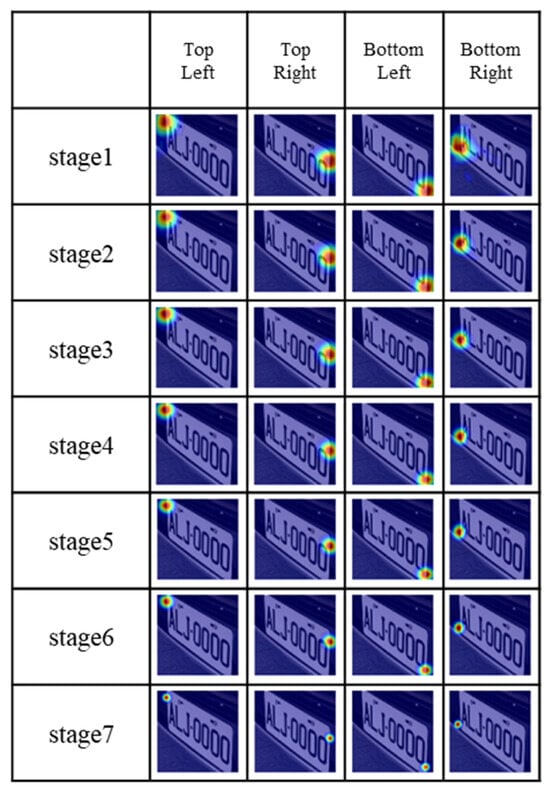

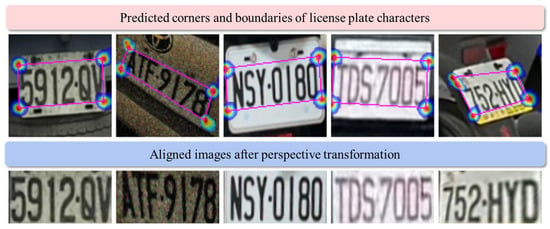

This loss design facilitates effective training and reduces gradient degradation of the pose estimation network at each stage [22,30]. A prediction example for a license plate's four corners is shown in Figure 4, where the belief maps produced by the pose estimation model across seven stages display clear convergence behavior. The estimated corner positions were then passed to a perspective transformation algorithm to rectify the skewed license plate images [31]. Examples of license plate alignment are depicted in Figure 5, where each aligned image was obtained by a perspective transformation from the four predicted corners.
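The Gaussian ground-truth belief maps and the multi-stage MSE supervision described above can be sketched in NumPy as follows. This is an illustrative sketch, not the authors' training code: the 25 × 25 belief-map grid, the value sigma = 1.5, the corner coordinates, and the helper names are all hypothetical, and the per-stage loss is averaged over corners and pixels.

```python
import numpy as np


def gaussian_heatmaps(corners, size, sigma):
    """Ground-truth belief maps: exp(-||z - z_p||^2 / (2 sigma^2)) per corner p.

    corners: (P, 2) array of (x, y) corner positions on the belief-map grid.
    size: (H, W) of the belief map. Returns an array of shape (P, H, W).
    """
    h, w = size
    ys, xs = np.mgrid[0:h, 0:w]  # row (y) and column (x) index of every pixel z
    maps = [np.exp(-((xs - cx) ** 2 + (ys - cy) ** 2) / (2.0 * sigma ** 2))
            for cx, cy in corners]
    return np.stack(maps)


def multistage_loss(stage_preds, target):
    """Total loss = sum over stages of the per-stage MSE against the same target."""
    per_stage = [float(np.mean((b_t - target) ** 2)) for b_t in stage_preds]
    return sum(per_stage), per_stage


# Usage: four hypothetical corners on a 25 x 25 belief-map grid, two stages.
corners = np.array([[5, 6], [19, 5], [20, 18], [4, 19]], dtype=float)
target = gaussian_heatmaps(corners, (25, 25), sigma=1.5)
rng = np.random.default_rng(0)
stage1 = target + 0.1 * rng.standard_normal(target.shape)  # noisy early stage
stage2 = target.copy()                                       # exact later stage
total, per_stage = multistage_loss([stage1, stage2], target)
print(per_stage)  # the exact second stage contributes zero loss
```

Because every stage is supervised against the same target, each stage receives its own gradient signal, which is the intermediate-supervision idea the text credits with reducing gradient degradation.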

Figure 4.

Confidence maps from the pose estimation model at each stage.

Figure 5.

Predicted license plate corners used to transform and rectify the skewed images.
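The following NumPy sketch shows one way to go from predicted belief maps to a rectified plate: take the argmax of each corner's belief map, solve the 3 × 3 perspective (homography) matrix from the four point pairs as an 8 × 8 linear system, and resample the image by inverse mapping. It assumes the belief maps share the image's resolution (otherwise the corner coordinates would need rescaling), that corners are ordered top-left, top-right, bottom-right, bottom-left, and uses nearest-neighbour sampling for brevity; the 94 × 48 output size mirrors the LPRNet input size given in Section 3.3.1, and all function names are hypothetical. In practice, OpenCV's `getPerspectiveTransform` and `warpPerspective` provide the same operations.

```python
import numpy as np


def corners_from_beliefs(beliefs):
    """Argmax (x, y) location of each corner's belief map; beliefs is (P, H, W)."""
    num_pts, h, w = beliefs.shape
    flat_idx = beliefs.reshape(num_pts, -1).argmax(axis=1)
    ys, xs = np.unravel_index(flat_idx, (h, w))
    return np.stack([xs, ys], axis=1).astype(float)


def homography_from_4pts(src, dst):
    """Solve the 3x3 perspective matrix H (with H[2, 2] = 1) mapping src -> dst."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rhs.append(u)
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.append(v)
    h = np.linalg.solve(np.array(rows, float), np.array(rhs, float))
    return np.append(h, 1.0).reshape(3, 3)


def apply_homography(H, pts):
    """Map (N, 2) points through H using homogeneous coordinates."""
    pts_h = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return pts_h[:, :2] / pts_h[:, 2:3]


def warp_nearest(img, H, out_w, out_h):
    """Rectify img: look up each output pixel's source via H^-1 (nearest neighbour)."""
    ys, xs = np.mgrid[0:out_h, 0:out_w]
    grid = np.stack([xs.ravel(), ys.ravel()], axis=1).astype(float)
    src = apply_homography(np.linalg.inv(H), grid)
    sx = np.clip(np.rint(src[:, 0]).astype(int), 0, img.shape[1] - 1)
    sy = np.clip(np.rint(src[:, 1]).astype(int), 0, img.shape[0] - 1)
    return img[sy, sx].reshape(out_h, out_w, *img.shape[2:])


# Usage with hypothetical corners (top-left, top-right, bottom-right, bottom-left).
src = np.array([[12, 30], [85, 22], [88, 70], [10, 76]], dtype=float)
beliefs = np.zeros((4, 100, 100))
for p, (x, y) in enumerate(src.astype(int)):
    beliefs[p, y, x] = 1.0  # stand-in for a predicted peak
dst = np.array([[0, 0], [93, 0], [93, 47], [0, 47]], dtype=float)  # 94 x 48 plate
H = homography_from_4pts(corners_from_beliefs(beliefs), dst)
img = np.random.default_rng(0).integers(0, 256, (100, 100), dtype=np.uint8)
rectified = warp_nearest(img, H, 94, 48)
```

Inverse mapping (output pixel to source pixel) is used so that every output pixel receives a value; forward-mapping source pixels would leave holes in the rectified plate.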

3.3. LPRNet: License Plate Recognition Model

To perform end-to-end character recognition, we adopted LPRNet [8], a lightweight deep learning model originally proposed for fast and accurate license plate recognition. Unlike traditional methods that require character segmentation, LPRNet directly processes the entire license plate image, significantly reducing system complexity. Moreover, its efficient architecture makes it highly suitable for deployment on resource-constrained platforms, such as FPGAs [26].

3.3.1. LPRNet Architecture and Model Optimization

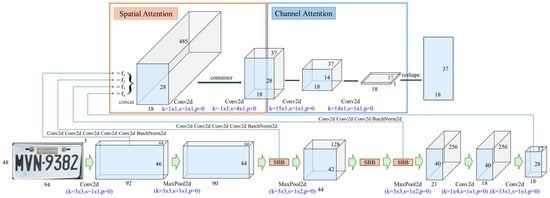

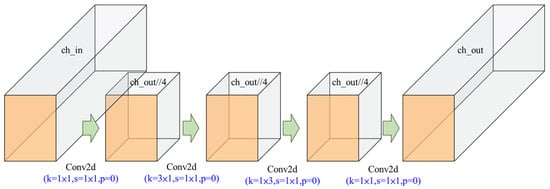

The original LPRNet architecture, introduced by Zherzdev and Gruzdev [8], uses small basic blocks for feature extraction and depth-wise separable convolutions [32] to reduce computational load. It also applies MaxPool3D for spatial compression and employs connectionist temporal classification (CTC) as the sequence learning loss function, enabling training without precise character-level segmentation. However, certain operations in LPRNet, such as nonlinear arithmetic functions, are incompatible with FPGA-based deep learning processing units (DPUs) [28]. To address these unsupported operations, we redesigned and optimized the original LPRNet architecture for the Xilinx KV260 platform [27]. As shown in Figure 6, the modified architecture incorporates several structural modifications to improve compatibility and performance. First, we adjusted the input size to 94 × 48 to preserve complete character information. As illustrated in Figure 7, we retained the small basic blocks (SBBs) and asymmetric convolution kernels (3 × 1 and 1 × 3) to maintain strong edge feature representation for improved character boundary localization. Second, to meet the hardware constraints of the DPU, operations such as addition and subtraction were replaced with equivalent convolution patterns. In addition, MaxPool3D layers were replaced by MaxPool2D layers to reduce computational load and improve inference compatibility. As shown in Table 1, these architectural refinements not only resolve hardware limitations but also improve model efficiency for edge deployment.

Figure 6.

Network architecture of the modified LPRNet backbone. (The grey sticker on the top middle of the license plate is typically placed by a local car vendor. The Chinese characters and digits on the sticker do not affect the model experiments.)

Figure 7.

Small basic block (SBB) used for edge feature extraction.

Table 1.

Comparison of the architecture between the original LPRNet and our modified LPRNet.
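The text does not specify which convolution patterns replaced addition and subtraction. One pattern consistent with that description, shown here only as an assumption-laden sketch, is to concatenate the two operands along the channel axis and apply a fixed 1 × 1 convolution whose weights are [I, I] for addition or [I, -I] for subtraction; a constant offset can likewise be folded into a 1 × 1 convolution's bias. Whether a given Vitis-AI release maps these patterns onto the DPU is not verified here, and the helper names are hypothetical.

```python
import numpy as np


def conv1x1(x, weight, bias=None):
    """1x1 convolution: x is (C_in, H, W), weight is (C_out, C_in)."""
    y = np.einsum("oc,chw->ohw", weight, x)
    if bias is not None:
        y = y + bias[:, None, None]
    return y


def add_as_conv(a, b, sign=1.0):
    """a + sign * b expressed as a fixed 1x1 conv over concat([a, b])."""
    c = a.shape[0]
    eye = np.eye(c)
    weight = np.concatenate([eye, sign * eye], axis=1)  # (C, 2C): [I, I] or [I, -I]
    return conv1x1(np.concatenate([a, b], axis=0), weight)


def offset_as_conv(x, value):
    """x + value expressed as an identity 1x1 conv whose bias holds the constant."""
    c = x.shape[0]
    return conv1x1(x, np.eye(c), bias=np.full(c, value))


# Usage: two hypothetical 3-channel 4 x 5 feature maps.
rng = np.random.default_rng(0)
a = rng.standard_normal((3, 4, 5))
b = rng.standard_normal((3, 4, 5))
print(np.allclose(add_as_conv(a, b), a + b), np.allclose(add_as_conv(a, b, -1.0), a - b))
```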

The pre-decoder feature map in the original LPRNet [8] was augmented with a global context embedding [33] through a fully connected layer applied to the backbone output. This embedding was then tiled to the required size and concatenated with the backbone output. Additionally, the feature map's depth was adjusted to match the number of character classes by applying a 1 × 1 convolution. In this study, we enhanced recognition accuracy by replacing the fully connected and average pooling layers in the original LPRNet with a multi-scale feature fusion block. This block concatenates features from multiple layers and applies a convolution followed by a nonlinear activation:

$$F_{\text{fused}} = \phi\left( \mathrm{Conv}\left( \left[ F_1, F_2, \dots, F_n \right] \right) \right)$$

Here, $F_i$ represents the feature maps from the $i$-th scale, $[\cdot]$ denotes channel-wise concatenation, and $\phi(\cdot)$ is an activation function. This approach improves multi-level feature representation and enables high-accuracy recognition on low-power FPGA platforms. Furthermore, we developed an attention-based mechanism to replace the 1 × 1 convolution that the original LPRNet uses to match the feature map's depth to the number of character classes. As shown in Figure 6, spatial and channel attention were added to capture more spatial information and detailed features for the decoding procedure, thereby improving recognition accuracy.
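A NumPy sketch of a multi-scale fusion block followed by channel and spatial attention is given below. The attention modules follow the CBAM-style design (channel attention from average- and max-pooled descriptors through a shared MLP; spatial attention from a convolution over channel-wise mean and max maps), which is an assumption, since the exact attention layout is only shown in Figure 6. Pooling all scales to the smallest spatial size before concatenation, ReLU as the activation, the 3 × 3 spatial kernel, and every shape and name in the example are likewise hypothetical.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def avg_pool_to(x, out_h, out_w):
    """Average-pool (C, H, W) to (C, out_h, out_w); assumes H, W are divisible."""
    c, h, w = x.shape
    return x.reshape(c, out_h, h // out_h, out_w, w // out_w).mean(axis=(2, 4))


def fuse(features, weight):
    """phi(Conv1x1(concat of multi-scale features pooled to a common size))."""
    out_h = min(f.shape[1] for f in features)
    out_w = min(f.shape[2] for f in features)
    x = np.concatenate([avg_pool_to(f, out_h, out_w) for f in features], axis=0)
    return relu(np.einsum("oc,chw->ohw", weight, x))


def channel_attention(x, w1, w2):
    """Reweight channels using a shared MLP on avg- and max-pooled descriptors."""
    def mlp(v):
        return w2 @ relu(w1 @ v)
    scale = sigmoid(mlp(x.mean(axis=(1, 2))) + mlp(x.max(axis=(1, 2))))
    return x * scale[:, None, None]


def spatial_attention(x, kernel):
    """Reweight positions using a k x k conv over channel-wise [mean, max] maps."""
    desc = np.stack([x.mean(axis=0), x.max(axis=0)])  # (2, H, W)
    k = kernel.shape[-1]
    padded = np.pad(desc, ((0, 0), (k // 2, k // 2), (k // 2, k // 2)))
    h, w = x.shape[1:]
    logits = np.zeros((h, w))
    for i in range(h):
        for j in range(w):
            logits[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return x * sigmoid(logits)[None, :, :]


# Usage with hypothetical shapes: two scales fused into 10 output channels.
rng = np.random.default_rng(0)
f1 = rng.standard_normal((8, 8, 24))
f2 = rng.standard_normal((16, 4, 12))
fused = fuse([f1, f2], rng.standard_normal((10, 24)))
y = channel_attention(fused, rng.standard_normal((5, 10)), rng.standard_normal((10, 5)))
y = spatial_attention(y, rng.standard_normal((2, 3, 3)))
print(fused.shape, y.shape)
```

Both attention masks lie in (0, 1), so they can only re-weight the fused features, emphasizing informative channels and positions, not change their sign.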

3.3.2. CTC Loss and Greedy Decoding

License plate recognition is a sequential prediction problem in which the number and position of characters may vary across samples. Traditional sequence models often require frame-wise labeled data, which is difficult and time-consuming to annotate. To address this, we adopted connectionist temporal classification (CTC) [8], a loss function designed to align input sequences with unsegmented or weakly labeled outputs. CTC allows the model to learn mappings between input features and label sequences without explicit alignment by introducing a special “blank” token and considering all possible alignments. The loss function is defined as follows:

$$\mathcal{L}_{\text{CTC}} = -\ln p(\mathbf{y} \mid \mathbf{x}),$$

where $\mathbf{x}$ represents the input feature sequence and $\mathbf{y}$ is the ground-truth character sequence. The conditional probability $p(\mathbf{y} \mid \mathbf{x})$ is computed by summing over all valid alignment paths using a dynamic programming algorithm. During inference, a greedy decoding algorithm selects the most probable character at each timestep, followed by a post-processing step that removes repeated characters and blank tokens:

$$\hat{\mathbf{y}} = \mathcal{B}\left( \left[ \arg\max_{c} \, p_t(c \mid \mathbf{x}) \right]_{t=1}^{T} \right),$$

where $\hat{\mathbf{y}}$ is the final predicted license plate string, $c$ denotes a candidate character (including the blank), $p_t(c \mid \mathbf{x})$ is the predicted probability of $c$ at timestep $t$, and $\mathcal{B}(\cdot)$ merges repeated characters and removes blank tokens. This decoding process ensures that the output sequence accurately reflects the license plate content even in the presence of blank or repeated predictions [8]. Through these architectural optimizations and hardware-aware adjustments, the modified LPRNet achieves high recognition accuracy with minimal computational cost, which makes it well suited for real-time inference on FPGA-based edge computing platforms.
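The CTC loss and greedy decoding described above can be illustrated with a small NumPy sketch: the forward (alpha) recursion computes the probability of a label sequence by dynamic programming over the blank-extended label, and greedy decoding takes the per-timestep argmax and then merges repeats and drops blanks. Using index 0 for the blank and the toy 4-step, 3-class output are assumptions; in practice a framework implementation such as PyTorch's `torch.nn.CTCLoss` would be used, computed in log space for numerical stability.

```python
import numpy as np

BLANK = 0  # assumption: class index 0 is the CTC blank token


def ctc_neg_log_likelihood(probs, label):
    """-ln p(y | x) via the CTC forward (alpha) recursion.

    probs: (T, K) per-timestep class probabilities; label: class ids without blanks.
    """
    ext = [BLANK]
    for c in label:
        ext += [c, BLANK]  # blank-extended label: blank, y1, blank, y2, ..., blank
    T, S = probs.shape[0], len(ext)
    alpha = np.zeros((T, S))
    alpha[0, 0] = probs[0, ext[0]]
    if S > 1:
        alpha[0, 1] = probs[0, ext[1]]
    for t in range(1, T):
        for s in range(S):
            a = alpha[t - 1, s]
            if s >= 1:
                a += alpha[t - 1, s - 1]
            # A blank may be skipped only between two different characters.
            if s >= 2 and ext[s] != BLANK and ext[s] != ext[s - 2]:
                a += alpha[t - 1, s - 2]
            alpha[t, s] = a * probs[t, ext[s]]
    p = alpha[T - 1, S - 1] + (alpha[T - 1, S - 2] if S > 1 else 0.0)
    return -np.log(p)


def collapse(path):
    """Merge consecutive repeats, then remove blanks."""
    out, prev = [], None
    for c in path:
        if c != prev and c != BLANK:
            out.append(c)
        prev = c
    return out


def greedy_decode(probs):
    """Most probable class at each timestep, then collapse."""
    return collapse(probs.argmax(axis=1).tolist())


# Usage on a toy 4-step output over 3 classes (0 = blank).
rng = np.random.default_rng(1)
logits = rng.standard_normal((4, 3))
probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)
loss = ctc_neg_log_likelihood(probs, [1, 2])
print(loss, greedy_decode(probs))
```

The blank is what lets CTC represent a doubled character: the path `1, 0, 1` collapses to `[1, 1]`, whereas `1, 1` collapses to `[1]`. This is why the recursion forbids skipping a blank between identical characters.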

4. Experiment Setup

4.1. Experimental Environment

The experiments were conducted on a system equipped with an Intel Core i9-13900 processor (Intel Corporation, Santa Clara, CA, USA), an NVIDIA RTX 4090 GPU (NVIDIA Corporation, Santa Clara, CA, USA), and 128 GB of RAM. The software environment included Ubuntu 20.04 LTS (Canonical Ltd., London, UK) as the operating system, CUDA 12.4 (NVIDIA Corporation, Santa Clara, CA, USA) for GPU acceleration, and PyTorch 2.0.1 (https://pytorch.org, accessed on 17 June 2025) as the deep learning framework.

4.2. Dataset Preparation

The Taiwan License Plate Dataset (TLPD) comprises 3032 images of Taiwanese license plates, which were collected and manually annotated [34]. Each image is labeled with the four corner points of the license plate and its corresponding character sequence. These images were captured under various real-world conditions, including both daytime and nighttime scenarios, with most night images taken using flash. Although rainy scenes were not directly captured, the dataset includes common challenges, such as reflections, dirt, and tilted angles. It also covers both stationary and moving vehicles across diverse environments, including city streets, parking lots, and campus roads. While further diversity is certainly valuable, we believe the current dataset already reflects many practical situations and demonstrates the robustness of our system. To enhance the model’s generalization capability, various data augmentation techniques were also applied, including rotation, perspective transformation, and brightness adjustment. These augmentations were designed to simulate real-world conditions, such as varying angles and lighting.
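Geometric augmentations must move the corner labels together with the image, or the pose-estimation targets become wrong. The sketch below rotates an image and its corner labels with the same matrix (nearest-neighbour inverse mapping on a fixed canvas) and scales brightness with clipping; a perspective augmentation would likewise pass the corners through the same homography as the image. The text does not give the rotation or brightness ranges, so the functions take them as arguments; the names and the toy example are hypothetical.

```python
import numpy as np


def rotate_sample(img, corners, angle_deg):
    """Rotate an image and its (N, 2) corner labels by the same angle about the center.

    Uses nearest-neighbour inverse mapping on the original canvas; output pixels
    whose source falls outside the image are left at zero.
    """
    h, w = img.shape[:2]
    center = np.array([(w - 1) / 2.0, (h - 1) / 2.0])
    theta = np.deg2rad(angle_deg)
    R = np.array([[np.cos(theta), -np.sin(theta)],
                  [np.sin(theta), np.cos(theta)]])
    ys, xs = np.mgrid[0:h, 0:w]
    out_xy = np.stack([xs.ravel(), ys.ravel()], axis=1) - center
    src = out_xy @ R + center  # row-vector form of R^T (p - c) + c, the inverse map
    sx, sy = np.rint(src[:, 0]).astype(int), np.rint(src[:, 1]).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    out = np.zeros_like(img)
    out[ys.ravel()[valid], xs.ravel()[valid]] = img[sy[valid], sx[valid]]
    new_corners = (np.asarray(corners, float) - center) @ R.T + center
    return out, new_corners


def adjust_brightness(img, factor):
    """Scale uint8 intensities by factor and clip back to [0, 255]."""
    return np.clip(img.astype(np.float32) * factor, 0, 255).astype(np.uint8)


# Usage: a single bright pixel at the labelled corner (x=3, y=1) of a 5 x 5 image.
img = np.zeros((5, 5), dtype=np.uint8)
img[1, 3] = 255
rotated, new_corners = rotate_sample(img, np.array([[3.0, 1.0]]), 90.0)
print(new_corners, rotated[3, 3])  # the label and the pixel move together
```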

4.3. Model Training and Parameter Settings

The proposed LPAR system incorporates three deep learning models: YOLOv4-tiny, a refined CPM-based pose estimation and alignment model, and a modified LPRNet. All models were trained on the TLPD dataset [34] for 500 epochs, with a batch size of 64 and an initial learning rate of 0.001. The refined CPM-based pose estimation module took approximately 3 h to train, while the character recognition module (modified LPRNet) required about 6 h. Both modules used the Adam optimizer with a fixed batch size and can be fully trained within a day on a single high-end GPU. This makes the system feasible for practitioners with limited resources.

To enable deployment on FPGA-based edge devices, the trained models were further optimized through pruning and quantization. The quantized models were then compiled using the Xilinx Vitis-AI toolchain (Xilinx, San Jose, CA, USA) [29], which converted floating-point operations into integer operations (e.g., INT8 format) suitable for FPGA execution. This step was essential for achieving real-time performance on resource-constrained hardware.
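The following sketch illustrates symmetric INT8 quantization with a power-of-two scale, the fixed-point style commonly associated with the DPU; treat the exact Vitis-AI quantizer behaviour (calibration statistics, per-layer scale selection, fine-tuning options) as an assumption not reproduced here. The fractional-bit count is chosen as the largest one for which the tensor's maximum magnitude still fits in the INT8 range, and the function names are hypothetical.

```python
import numpy as np


def quantize_int8_pow2(x):
    """Symmetric INT8 quantization with a power-of-two scale: x ≈ q * 2**-f."""
    qmax = 127
    max_abs = float(np.max(np.abs(x)))
    if max_abs == 0.0:
        return np.zeros(np.shape(x), dtype=np.int8), 0
    # Largest fractional-bit count f such that max_abs * 2**f <= qmax.
    f = int(np.floor(np.log2(qmax / max_abs)))
    q = np.clip(np.rint(x * 2.0 ** f), -128, qmax).astype(np.int8)
    return q, f


def dequantize(q, f):
    """Map INT8 codes back to real values."""
    return q.astype(np.float32) * np.float32(2.0 ** -f)


# Usage on hypothetical weights drawn from N(0, 0.1^2).
w = np.random.default_rng(0).normal(0.0, 0.1, 1000)
q, f = quantize_int8_pow2(w)
err = np.max(np.abs(dequantize(q, f) - w))
print(f, err, 2.0 ** -f / 2)  # rounding error stays within half a quantization step
```

A power-of-two scale lets the hardware rescale with bit shifts instead of multipliers. The cost is a coarser choice of scale than an arbitrary floating-point factor, which is one reason small accuracy drops such as the PCK change reported in Section 5.4 can appear.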

5. Experiment Results

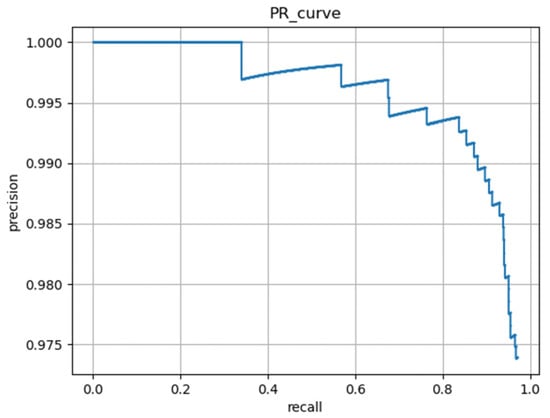

5.1. YOLOv4-Tiny Detection Results

The YOLOv4-tiny model was evaluated on the license plate detection task using the collected Taiwanese dataset. As shown in Figure 8, the model achieved an average precision (AP) of 0.97, demonstrating excellent detection performance. The high precision ensured that the cropped plate regions provided to subsequent modules were accurate and consistent, enabling reliable downstream processing.

Figure 8.

PR curve of YOLOv4-tiny for license plate detection.
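For reference, a common way to compute AP from a ranked list of detections is all-point interpolation (the PASCAL VOC 2010+ convention), sketched below. The text does not state the AP protocol or the IoU threshold used to label a detection as a true positive, so both the protocol and the precomputed `is_tp` flags are assumptions.

```python
import numpy as np


def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP from ranked detections.

    scores: confidence per detection; is_tp: 1 if that detection matched a
    ground-truth plate (IoU criterion assumed), else 0; num_gt: number of plates.
    """
    order = np.argsort(-np.asarray(scores, float))
    tp = np.asarray(is_tp, float)[order]
    cum_tp = np.cumsum(tp)
    cum_fp = np.cumsum(1.0 - tp)
    recall = cum_tp / num_gt
    precision = cum_tp / (cum_tp + cum_fp)
    # Pad the curve, then make precision non-increasing from right to left.
    r = np.concatenate([[0.0], recall, [1.0]])
    p = np.concatenate([[0.0], precision, [0.0]])
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas wherever recall changes.
    idx = np.where(r[1:] != r[:-1])[0]
    return float(np.sum((r[idx + 1] - r[idx]) * p[idx + 1]))


# Usage: four ranked detections, the second one a false positive, four plates.
print(average_precision([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 1], num_gt=4))  # 0.625
```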

5.2. Pose Estimation and Alignment Results and Ablation Study

5.2.1. Keypoint Detection Accuracy

To evaluate the localization accuracy of the proposed pose estimation model, we adopted the percentage of correct keypoints (PCK) metric. A predicted keypoint was considered correct if the Euclidean distance between its location on the predicted belief map and the corresponding ground-truth location was less than a threshold $\tau$. The PCK is formally defined as follows:

$$\mathrm{PCK} = \frac{1}{N} \sum_{i=1}^{N} \mathbb{1}\left( \lVert \hat{\mathbf{p}}_i - \mathbf{p}_i \rVert_2 < \tau \right),$$

where $N$ is the total number of predicted keypoints, $\hat{\mathbf{p}}_i$ and $\mathbf{p}_i$ are the predicted and ground-truth locations of the $i$-th keypoint, and $\mathbb{1}(\cdot)$ is an indicator function that returns 1 if the condition holds and 0 otherwise. The threshold $\tau$ was defined as the total boundary length of the license plate divided by a constant $m$, i.e.,

$$\tau = \frac{1}{m} \sum_{k=1}^{K} l_k,$$

where $l_k$ denotes the length of the $k$-th edge of the license plate (e.g., top, bottom, left, right), and $K$ is the number of edges considered. This formulation allows $\tau$ to adapt to the plate size while preserving evaluation consistency. In this study, we set $m$ to a value that empirically provides a good trade-off between tolerance and strictness and aligns with prior keypoint evaluation protocols [7,35]. Under this criterion, the belief maps produced by the pose estimation model (Figure 4) showed that the original 7-stage unpruned model achieved a PCK of 96.67%, validating the accuracy and reliability of the proposed corner-point estimation method.
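The PCK metric with the perimeter-based threshold can be sketched as follows. The threshold is computed per plate from the ground-truth corners, which are assumed to be ordered around the plate. The example's m = 28 is purely illustrative and is not the paper's setting, and the function name is hypothetical.

```python
import numpy as np


def pck(pred, gt, m):
    """Fraction of corners within tau of ground truth; tau = plate perimeter / m.

    pred, gt: (num_plates, 4, 2) arrays of (x, y) corners ordered around each plate.
    """
    pred, gt = np.asarray(pred, float), np.asarray(gt, float)
    edges = np.roll(gt, -1, axis=1) - gt                  # the four plate edges
    tau = np.linalg.norm(edges, axis=2).sum(axis=1) / m   # one threshold per plate
    dist = np.linalg.norm(pred - gt, axis=2)              # (num_plates, 4)
    return float(np.mean(dist < tau[:, None]))


# Usage: a 100 x 40 plate (perimeter 280); m = 28 gives tau = 10 pixels.
gt = [[[0, 0], [100, 0], [100, 40], [0, 40]]]
pred = [[[3, 0], [100, 5], [112, 40], [0, 40]]]  # corner errors 3, 5, 12, 0
print(pck(pred, gt, m=28))  # 0.75
```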

5.2.2. Ablation Studies

Several ablation studies were conducted to evaluate the effects of different architectural settings:

- Stage Pruning: Reducing the number of stages from 7 to 2 maintained a PCK of 96.67%, indicating redundancy in the later stages (Table 2).

Table 2. PCK accuracy of the pose estimation model under different stage counts and thresholds.

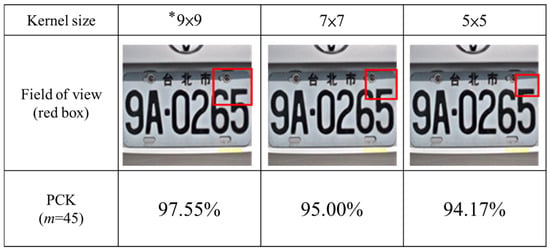

- Kernel Size Modification: Reducing the number of 9 × 9 kernels from two to one increased PCK accuracy to 97.55%. In contrast, replacing them with smaller 7 × 7 or 5 × 5 kernels led to performance degradation (Figure 9).

Figure 9. PCK under different kernel sizes. The asterisk (*) indicates the kernel size that achieved the highest PCK accuracy in the experiment. The red boxes represent the receptive field corresponding to each kernel size. The Chinese text “台北市” on the license plate means “Taipei City”.

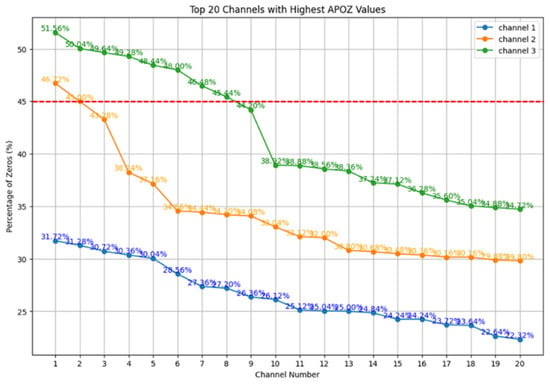

- Channel Pruning with APoZ: Using the average percentage of zeros (APoZ) method, channels with over 45% zero activation were pruned. As shown in Figure 10, pruning 2 channels from layer 2 and 8 from layer 3 led to a slight PCK accuracy drop to 96.7%, which is acceptable given the computational savings. This pruning significantly reduces multiply–accumulate (MAC) operations and memory access, aligning with the logic and memory constraints of our target FPGA platform.

Figure 10. Top 20 APoZ-ranked channels. The red dashed line indicates the 45% pruning threshold, above which channels are removed.

- Additionally, internal operations were adjusted to meet deployment requirements. Because of hardware limitations on the FPGA, operations such as basic arithmetic, mean pooling, and 3D convolutions are not supported. Therefore, we redesigned the network to rely solely on convolutional layers, replacing unsupported components and aligning with the operator set supported by Xilinx Vitis-AI [29], as listed in Table 1. This approach preserves recognition performance while ensuring compatibility with accelerated deployment on the Xilinx KV260 FPGA platform.
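The APoZ criterion used in the channel-pruning ablation above can be sketched as follows. APoZ is the fraction of zero (post-ReLU) activations per channel, measured over a batch of inputs, and channels above the 45% threshold are removed from the current layer's outputs and from the next layer's inputs. The conv-weight layout (out, in, k, k), the helper names, and the toy activations are assumptions. In practice the pruned network is then retrained, as in the optimizer comparison below.

```python
import numpy as np


def apoz(activations):
    """Average percentage of zeros per channel; activations are post-ReLU (N, C, H, W)."""
    return (activations == 0).mean(axis=(0, 2, 3))


def channels_to_prune(activations, threshold=0.45):
    """Indices of channels whose APoZ exceeds the threshold."""
    return np.where(apoz(activations) > threshold)[0]


def prune_layer_pair(w_cur, b_cur, w_next, drop):
    """Drop output channels of one conv (out, in, k, k) and matching inputs of the next."""
    keep = np.setdiff1d(np.arange(w_cur.shape[0]), drop)
    return w_cur[keep], b_cur[keep], w_next[:, keep]


# Usage with toy activations: channel 2 is zero on 2/3 of its positions.
acts = np.ones((2, 4, 3, 3))
acts[:, 2, :2, :] = 0.0
acts[:, 1, 0, 0] = 0.0
drop = channels_to_prune(acts)
w_cur_p, b_cur_p, w_next_p = prune_layer_pair(
    np.ones((4, 2, 3, 3)), np.zeros(4), np.ones((5, 4, 3, 3)), drop)
print(apoz(acts), drop, w_cur_p.shape, w_next_p.shape)
```

Removing a channel shrinks two weight tensors at once, which is where the MAC and memory savings mentioned in the ablation come from.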

5.2.3. Angle Robustness Test

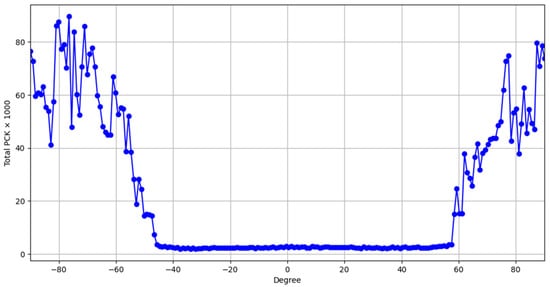

Figure 11 shows that the pose estimation model maintained over 90% PCK accuracy within ±45° of rotation range. However, beyond this range, accuracy significantly dropped, indicating that practical deployment should limit plate skew to within this threshold.

Figure 11.

Pose estimation accuracy vs. rotation angle. Total PCK values are multiplied by 1000 for visualization. The model maintains high accuracy within ±45°, beyond which performance drops significantly.

5.2.4. Optimizer Comparison

During retraining after pruning, the Nadam optimizer demonstrated more stable convergence and higher final accuracy than Adam, which often became trapped in local minima. We attribute this improvement to Nadam's use of Nesterov momentum, which applies a look-ahead correction to the momentum update and can accelerate convergence.
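To make the Adam/Nadam difference concrete, the sketch below implements both update rules in NumPy. The Nadam variant is the simplified form from Dozat (2016) with a constant momentum coefficient (no momentum-decay schedule), in which the bias-corrected momentum is blended with the current gradient as a Nesterov look-ahead. The quadratic test objective, learning rate, and step count are illustrative only and say nothing about the local-minimum behaviour reported above.

```python
import numpy as np


def adam_step(theta, g, m, v, t, lr=1e-3, b1=0.9, b2=0.999, eps=1e-8):
    """One Adam update (t starts at 1)."""
    m = b1 * m + (1 - b1) * g
    v = b2 * v + (1 - b2) * g ** 2
    m_hat = m / (1 - b1 ** t)
    v_hat = v / (1 - b2 ** t)
    return theta - lr * m_hat / (np.sqrt(v_hat) + eps), m, v


def nadam_step(theta, g, m, v, t, lr=1e-3, b1=0.9, b2=0.999, eps=1e-8):
    """One simplified Nadam update (constant momentum coefficient, t starts at 1)."""
    m = b1 * m + (1 - b1) * g
    v = b2 * v + (1 - b2) * g ** 2
    # Nesterov look-ahead: bias-corrected next-step momentum plus the current gradient.
    m_bar = b1 * m / (1 - b1 ** (t + 1)) + (1 - b1) * g / (1 - b1 ** t)
    v_hat = v / (1 - b2 ** t)
    return theta - lr * m_bar / (np.sqrt(v_hat) + eps), m, v


def minimize_quadratic(step, n_steps=500, lr=0.05):
    """Run an optimizer on f(theta) = 0.5 * ||theta||^2, whose gradient is theta."""
    theta, m, v = np.array([3.0, -2.0]), np.zeros(2), np.zeros(2)
    for t in range(1, n_steps + 1):
        theta, m, v = step(theta, theta, m, v, t, lr=lr)
    return theta


theta_adam = minimize_quadratic(adam_step)
theta_nadam = minimize_quadratic(nadam_step)
print(np.linalg.norm(theta_adam), np.linalg.norm(theta_nadam))
```

The only difference between the two functions is the momentum term used in the parameter update: Adam uses the bias-corrected running average, while Nadam looks one step ahead by combining the decayed momentum with the fresh gradient.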

5.3. Performance on PC-Based Platform

Two character recognition test scenarios were conducted on a PC-based platform. The first scenario tested the models on an aligned license plate dataset, and the second tested them on a skewed dataset. For the first scenario, Table 3 presents the results of 10-fold cross-validation on the aligned license plate dataset. The modified LPRNet achieves an average accuracy of 88.92%, an improvement of 1.78 percentage points over the original model [8] (87.14%).

Table 3.

Recognition accuracy of original and modified LPRNet under 10-fold cross-validation.

The results of the second test scenario are shown in Table 4. Three experiments were carried out to evaluate the recognition accuracy of the modified LPRNet and LPAR system tested on aligned and unaligned license plate datasets:

Table 4.

Comparison of recognition accuracy between the modified LPRNet and the LPAR system.

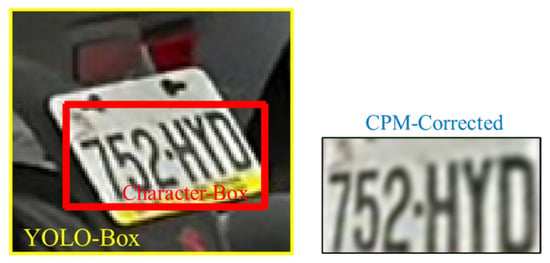

- Baseline model: The unaligned license plate test data from the TLPD dataset, with only basic data transformation (cropping), were used directly to test the modified LPRNet, resulting in 88.33% accuracy. The test data, consisting of images from the YOLOv4-tiny bounding box (YOLO-Box), were cropped to obtain the rectangular character region (Character-Box). In this instance, CPM was used to identify the rectangle's four corners, as shown in Figure 12. The plate images in the Character-Box were then rectified by perspective transformation to obtain the corrected plate images (CPM-Corrected).

Figure 12. The YOLOv4-tiny bounding box (YOLO-Box), the character region (Character-Box), and the corrected plate image (CPM-Corrected).

- Manual-aligned dataset: Manually rectified images were used to test the modified LPRNet, improving accuracy to 98%, although manual rectification is impractical for real-time systems.

- License Plate Alignment and Recognition (LPAR): The modified LPRNet was integrated with the refined CPM module and tested on the unaligned license plate dataset, achieving 95% accuracy.

5.4. Quantization and Speed Evaluation

To deploy the LPAR system on FPGA-based edge devices, the models were quantized to INT8 format using the Vitis-AI toolkit [29]. As shown in Table 5, the pose estimation model's PCK dropped slightly, by 0.05 percentage points, after quantization. However, quantization had no effect on the recognition accuracy of the proposed LPAR system, which remained at 95%.

Table 5.

Performance comparison of pose estimation and LPAR before and after quantization.

In terms of inference speed on the Xilinx FPGA, pruning the refined CPM model from 7 stages to 2 reduced its inference time from 0.11 s to 0.013 s, a speedup of approximately 8.5×. Additionally, recognition accuracy increased by 1.67 percentage points. The complete LPAR pipeline achieved an inference speed of 2 FPS on the Xilinx FPGA, as listed in Table 6, confirming its suitability for real-time edge deployment.

Table 6.

Inference speed of LPAR before and after refined CPM model stage pruning.
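The reported timings can be checked with a few lines of arithmetic. The ratio 0.11 s / 0.013 s is about 8.46. If the pipeline stages ran sequentially (the text does not say whether they are pipelined), the 2-stage CPM would take roughly 2.6% of the 500 ms per-frame budget implied by 2 FPS.

```python
# Figures taken from the text; the sequential-stage reading of the budget is an assumption.
t_cpm_7_stages = 0.11   # s, refined CPM before stage pruning (FPGA)
t_cpm_2_stages = 0.013  # s, refined CPM after pruning to 2 stages (FPGA)
pipeline_fps = 2.0      # end-to-end LPAR throughput (FPGA)

speedup = t_cpm_7_stages / t_cpm_2_stages
frame_budget_ms = 1000.0 / pipeline_fps
cpm_share = (1000.0 * t_cpm_2_stages) / frame_budget_ms

print(f"CPM speedup: {speedup:.2f}x")                 # CPM speedup: 8.46x
print(f"Per-frame budget: {frame_budget_ms:.0f} ms")  # Per-frame budget: 500 ms
print(f"CPM share of budget: {cpm_share:.1%}")        # CPM share of budget: 2.6%
```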

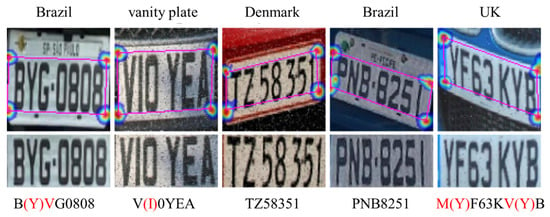

5.5. Zero-Shot Testing on Untrained Plate Formats

The CPM-based LPAR model requires manual annotation of four corner points per plate for geometric correction, which limits direct adaptation to foreign plate formats due to high labeling costs. Most public datasets only provide bounding boxes and are not directly applicable to our task. We conducted zero-shot tests on international plates not seen during training. The results are shown in Figure 13. When the plate structure is similar to Taiwanese ones—e.g., rectangular shape and clearly separated characters—the model still achieves reasonable correction and transferability. Future work will include expanding the dataset to cover more international formats and introducing data synthesis or semi-automatic labeling to reduce cross-domain adaptation costs and enhance generalization.

Figure 13.

Zero-shot testing of LPAR on untrained international plate formats. The Hangul character “애” (highlighted on the Korean plate) corresponds to a Korean syllable and is included here as part of the plate number. Red characters and red parentheses indicate positions where the recognition result does not match the ground-truth string.

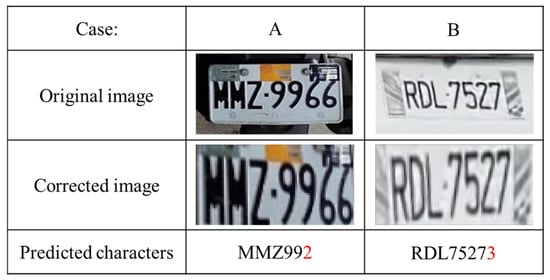
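In the recognition figures, red characters and parentheses mark positions where the prediction and the ground truth disagree. One way to locate such positions automatically is to align the two strings, as in the Python sketch below. The bracket and parenthesis markup is a hypothetical text stand-in for the red highlighting. The exact-match helper assumes that plate-level accuracy requires the whole string to match.

```python
from difflib import SequenceMatcher


def mark_mismatches(pred: str, truth: str) -> str:
    """Wrap wrong or extra predicted characters in [] and mark missing ones with ()."""
    out = []
    matcher = SequenceMatcher(None, pred, truth, autojunk=False)
    for tag, i1, i2, _j1, _j2 in matcher.get_opcodes():
        if tag == "equal":
            out.append(pred[i1:i2])
        elif tag in ("replace", "delete"):
            out.append(f"[{pred[i1:i2]}]")  # predicted characters that do not match
        else:  # "insert": ground-truth characters absent from the prediction
            out.append("()")
    return "".join(out)


def is_exact_match(pred: str, truth: str) -> bool:
    """Plate-level criterion: the whole string must match."""
    return pred == truth


if __name__ == "__main__":
    print(mark_mismatches("ABC-1284", "ABC-1234"))  # ABC-12[8]4
    print(mark_mismatches("ABC-124", "ABC-1234"))   # ABC-12()4
```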

5.6. Analysis of LPAR Failure Cases

Under certain environmental conditions, the LPAR system failed to recognize the license plate correctly even though alignment succeeded, as illustrated in Figure 14. In Case A, a sticker on the top-right corner of the license plate touched the characters, causing local visual confusion. Although keypoint localization and perspective correction were accurate, this interference led to incorrect character recognition. In Case B, a zebra-patterned background on the right side of the plate produced strong edge features. Although this did not affect keypoint prediction, the background texture remained after correction and confused the model when identifying the character region, resulting in recognition failure.

Figure 14.

Failure cases of license plate alignment and recognition. Red characters indicate the positions where the predicted plate string differs from the ground truth.

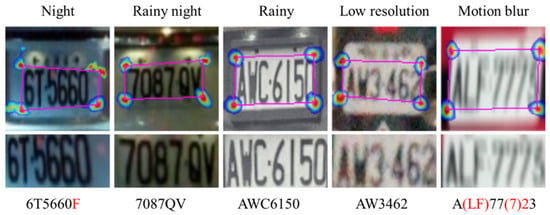

5.7. LPAR Under Challenging Conditions

Additionally, we evaluated the LPAR system under more challenging conditions, such as night, rainy night, rainy day, low resolution, and motion blur (as shown in Figure 15). While character recognition performance may have declined in these scenarios, keypoint detection remained stable, indicating that the geometric correction process continued to function effectively.

Figure 15.

The results of LPAR under some challenging conditions. Red characters indicate the positions where the predicted license-plate string differs from the ground truth.

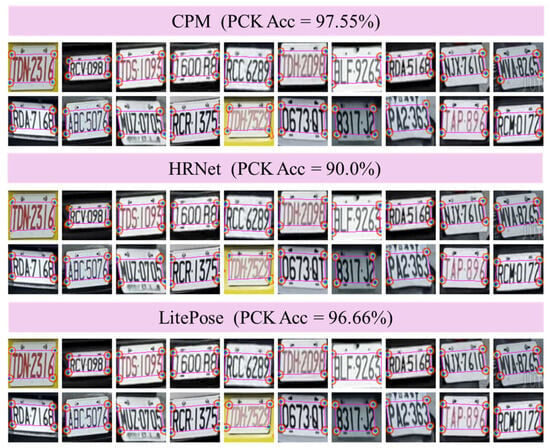

5.8. Experimental Results of Keypoint Detection

5.8.1. Comparison of Models for Keypoint Detection

We conducted experiments to compare the performance of keypoint detection models, namely the refined CPM [7], HRNet [24], and LitePose [25]. The results are listed in Table 7. The second column of the table gives the PCK accuracy of the three models on 120 frames of regular images, 20 of which are shown in Figure 16. Although the LitePose model achieved the accuracy closest to that of the refined CPM model, its architecture relies on residual addition, which is not supported on our target deployment platform (Xilinx KV260 FPGA [27]). This makes it infeasible for practical use. FPGA deployability is listed in the last column of Table 7. The HRNet model, while a representative modern architecture, performed worse than the refined CPM in our task. Moreover, the refined CPM architecture is structurally simpler and more compatible with FPGA toolchains, which allows smoother conversion to deployable formats such as .xmodel. Considering accuracy, computational cost, and hardware compatibility, the refined CPM model remains the most suitable choice for our system.
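The PCK metric in Table 7 counts a keypoint as correct when its predicted location lies within a tolerance of the ground truth. This section does not state the threshold or the normalizing length used for Table 7. The NumPy sketch below therefore assumes PCK@α with α = 0.1 of a per-image reference length. Both choices are hypothetical.

```python
import numpy as np


def pck(pred, gt, ref_len, alpha: float = 0.1) -> float:
    """Percentage of Correct Keypoints (returned as a fraction).

    pred, gt: arrays of shape (N, K, 2) with (x, y) keypoints per image.
    ref_len:  shape (N,), per-image normalizing length (hypothetical choice,
              e.g. the ground-truth plate bounding-box diagonal).
    A keypoint counts as correct if its error is at most alpha * ref_len.
    """
    pred = np.asarray(pred, float)
    gt = np.asarray(gt, float)
    dist = np.linalg.norm(pred - gt, axis=-1)             # (N, K) pixel errors
    thresh = alpha * np.asarray(ref_len, float)[:, None]  # (N, 1) per-image tolerance
    return float(np.mean(dist <= thresh))


if __name__ == "__main__":
    gt = np.zeros((1, 4, 2))                                        # one plate, four corners
    pred = np.array([[[0, 0], [3, 4], [9, 12], [12, 16]]], float)   # errors 0, 5, 15, 20 px
    print(pck(pred, gt, ref_len=[100.0]))  # 0.5 with a 10 px tolerance
```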

Table 7.

Comparison of models for keypoint detection on regular and fisheye images.

Figure 16.

Keypoint detection results for 20 regular image frames.

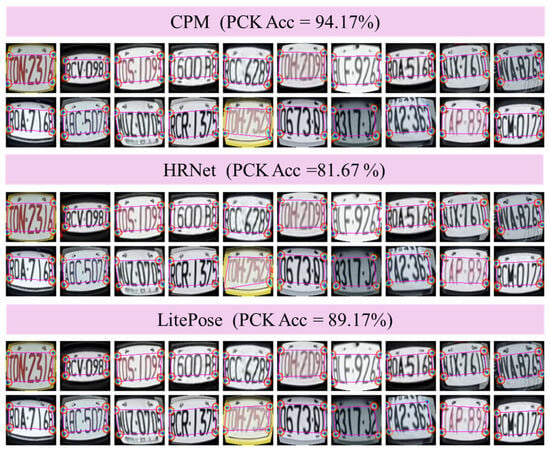

5.8.2. Comparison of Models for Keypoint Detection on Fisheye Images

To further compare the generalizability of our refined CPM with that of HRNet [24] and LitePose [25] under geometric distortion, we constructed a test set of 120 frames of fisheye-distorted license plate images. The results are also listed in Table 7. Its third column gives the PCK accuracy of the three models on these fisheye-distorted images, and 20 of the frames are shown in Figure 17. The refined CPM model performed better than both the HRNet and LitePose models.

Figure 17.

Keypoint detection results for 20 fisheye-distorted image frames.

5.9. Comparative Experiments on License Plate Recognition

We conducted experiments to compare the performance of license plate recognition (LPR) models, namely our modified LPR model and the CRNN model by Rao et al. [12]. The recognition accuracy results are presented in Table 8. The second, third, and fourth columns of the table give the accuracy of the two models on three inputs, respectively: plates detected by YOLOv4-tiny (YOLO-Box), crops of the rectangular character region (Character-Box), and plates geometrically corrected by the CPM model (CPM-Corrected). Each test dataset contains 120 frames of plate images, and 10 frames from each are displayed in Figure 18, Figure 19, and Figure 20, respectively. Our modified LPR model outperformed the CRNN model on all three datasets. Table 9 compares the properties of the two models. Our modified LPR model requires a shorter training time, has a lower computational cost (FLOPs) and a smaller model size (parameters), and can be deployed on the FPGA. Moreover, the modified LPR architecture is structurally simpler and more compatible with FPGA toolchains, which allows smoother conversion to deployable formats such as .xmodel.
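Model properties such as those in Table 9 are usually obtained by summing per-layer counts. The Python sketch below shows the standard count for a single 2D convolution. The layer shape is hypothetical. Conventions also differ between tools: some report multiply-accumulates (MACs) as FLOPs, while others count each MAC as two floating-point operations.

```python
def conv2d_cost(c_in: int, c_out: int, k: int, h_out: int, w_out: int,
                groups: int = 1, bias: bool = True):
    """Parameter count and multiply-accumulate (MAC) count of one 2D convolution."""
    weights = c_out * (c_in // groups) * k * k
    params = weights + (c_out if bias else 0)
    macs = weights * h_out * w_out  # each weight is used once per output pixel
    return params, macs


if __name__ == "__main__":
    # Hypothetical layer: 3 -> 64 channels, 3x3 kernel, 24x94 output map.
    params, macs = conv2d_cost(3, 64, 3, 24, 94)
    print(params, macs, 2 * macs)  # 1792 3898368 7796736
```

Parameters depend only on the kernel shape, whereas MACs also scale with the output resolution. This is why a model can be small on disk yet still costly to run on large inputs.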

Table 8.

Comparison of LPR systems on recognition accuracy.

Figure 18.

Character recognition results for the plates detected by YOLOv4-tiny (YOLO-Box). Red characters indicate the positions where the predicted license-plate string differs from the ground truth.

Figure 19.

Character recognition results for the plates cropped by the rectangular region (Character-Box). Red characters indicate the positions where the predicted license-plate string differs from the ground truth.

Figure 20.

Character recognition results for the geometrically corrected plate images (CPM-Corrected). Red characters indicate the positions where the predicted license-plate string differs from the ground truth.

Table 9.

Comparison of LPR systems on model properties.

6. Conclusions

The proposed license plate alignment and recognition (LPAR) system effectively addresses the challenges of geometric distortion and limited computational resources in real-world environments. By combining a lightweight detection network, a refined deep-learning-based pose estimator, and an optimized attention-based recognition model, the LPAR system achieved a favorable balance between accuracy and efficiency. The experimental results validate its robustness across various viewing angles, with a keypoint detection accuracy of 97.5% and an overall recognition accuracy that improved from 88.33% to 95.00% after integration. Running at 2 FPS on a Xilinx FPGA, the system also demonstrated practical viability for real-time edge deployment. These outcomes highlight both the engineering applicability and the research novelty of the proposed framework.

This study still has several limitations and areas for future improvement:

- When the license plate is tilted beyond 45 degrees, keypoint prediction errors increase significantly, leading to failed geometric correction and lower recognition accuracy. To address this, we plan to include more heavily rotated images during data augmentation to improve robustness under extreme angles.

- The current FPGA platform requires specific model conversion tools (e.g., Xilinx Vitis-AI [29]), and not all PyTorch operations are supported. This limits architectural flexibility and requires hardware-aware design. Future work will explore models that are more compatible with FPGA toolchains to enhance system scalability and integration.

- To support deployment in GPU-free edge environments, we reduced the refined CPM model from seven stages to two and applied post-training quantization. In future work, we plan to train larger models and compress them using knowledge distillation to balance performance and generalization.
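Training on more heavily rotated plates requires rotating the four corner labels with exactly the same transform as the image. Otherwise, the training targets no longer coincide with the true corners. The NumPy sketch below builds a 2 × 3 rotation matrix of the same form as the one documented for OpenCV's `cv2.getRotationMatrix2D` (with unit scale) and applies it to the keypoints. The ±60° sampling range is a hypothetical choice.

```python
import numpy as np


def rotation_matrix(angle_deg: float, center) -> np.ndarray:
    """2x3 affine matrix rotating by angle_deg about center (counter-clockwise as
    displayed, with the image y axis pointing down)."""
    a = np.deg2rad(angle_deg)
    c, s = np.cos(a), np.sin(a)
    cx, cy = center
    return np.array([[c, s, (1 - c) * cx - s * cy],
                     [-s, c, s * cx + (1 - c) * cy]])


def rotate_keypoints(pts, M: np.ndarray) -> np.ndarray:
    """Apply the same affine matrix to (x, y) corner labels."""
    pts = np.asarray(pts, float)
    return pts @ M[:, :2].T + M[:, 2]


def random_rotation(rng: np.random.Generator, center, max_deg: float = 60.0):
    """Sample an angle in [-max_deg, max_deg]; the range is a hypothetical choice."""
    return rotation_matrix(rng.uniform(-max_deg, max_deg), center)


if __name__ == "__main__":
    M = rotation_matrix(90.0, center=(50.0, 50.0))
    # A point to the right of the center moves directly above it.
    print(np.round(rotate_keypoints([(100.0, 50.0)], M), 6))
```

The image itself would be resampled with the same matrix, for example with `cv2.warpAffine`, so that labels and pixels stay consistent.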

Additional future work will focus on improving the system's adaptability under complex conditions, such as low lighting and adverse weather, and on extending its use to other intelligent transportation scenarios. We will also expand the dataset to cover more international formats and introduce data synthesis or semi-automatic labeling to reduce cross-domain adaptation costs and enhance generalization. Furthermore, YOLOv5-Nano [5] and NanoDet [6] may offer higher detection accuracy than YOLOv4-tiny, so we will deploy the system with these detectors to assess their impact on overall performance.

Author Contributions

Conceptualization, C.-H.H. and H.L.; methodology, C.-H.H.; software, C.-H.H. and H.L.; validation, Y.-T.W. and M.-J.H.; formal analysis, M.-J.H.; investigation, H.L.; resources, Y.-T.W.; data curation, C.-H.H.; writing—original draft preparation, C.-H.H.; writing—review and editing, Y.-T.W.; visualization, C.-H.H.; supervision, Y.-T.W.; project administration, Y.-T.W.; and funding acquisition, Y.-T.W. and M.-J.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Science and Technology Council, Taiwan, under Grant NSTC 113-2221-E-032-016 and Grant NSTC 113-2222-E-032-004.

Data Availability Statement

All data and source code presented in this study are openly available at https://github.com/evan6007/FPGA-LPR (accessed on 17 June 2025), under the MIT License.

Acknowledgments

The authors would like to thank Innodisk Corporation for their technical support and collaborative resources during this research. The authors also sincerely appreciate the constructive comments and valuable suggestions from the anonymous reviewers, which greatly improved the quality of this manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Anagnostopoulos, C.-N.E.; Anagnostopoulos, I.E.; Psoroulas, I.D.; Loumos, V.; Kayafas, E. License plate recognition from still images and video sequences: A survey. IEEE Trans. Intell. Transp. Syst. 2008, 9, 377–391.

- Du, S.; Ibrahim, M.; Shehata, M.; Badawy, W. Automatic license plate recognition (ALPR): A state-of-the-art review. IEEE Trans. Circuits Syst. Video Technol. 2012, 23, 311–325.

- Khan, M.M.; Ilyas, M.U.; Khan, I.R.; Alshomrani, S.M.; Rahardja, S. A review of license plate recognition methods employing neural networks. IEEE Access 2023, 11, 73613–73646.

- Jiang, Z.; Zhao, L.; Li, S.; Jia, Y. Real-time object detection method based on improved YOLOv4-tiny. arXiv 2020, arXiv:2011.04244.

- Zendehdel, N.; Chen, H.; Leu, M.C. Real-time tool detection in smart manufacturing using You-Only-Look-Once (YOLO) v5. Manuf. Lett. 2023, 35, 1052–1059.

- NanoDet. Available online: https://github.com/RangiLyu/nanodet (accessed on 7 May 2025).

- Wei, S.-E.; Ramakrishna, V.; Kanade, T.; Sheikh, Y. Convolutional pose machines. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 4724–4732.

- Zherzdev, S.; Gruzdev, A. LPRNet: License plate recognition via deep neural networks. arXiv 2018, arXiv:1806.10447.

- Li, H.; Shen, C. Reading car license plates using deep convolutional neural networks and LSTMs. arXiv 2016, arXiv:1601.05610.

- Cheang, T.K.; Chong, Y.S.; Tay, Y.H. Segmentation-free vehicle license plate recognition using ConvNet-RNN. arXiv 2017, arXiv:1701.06439.

- Recurrent Neural Network. Available online: https://en.wikipedia.org/wiki/Recurrent_neural_network (accessed on 15 December 2024).

- Rao, Z.; Yang, D.; Chen, N.; Liu, J. License plate recognition system in unconstrained scenes via a new image correction scheme and improved CRNN. Expert Syst. Appl. 2024, 243, 122878.

- Liu, Q.; Liu, Y.; Chen, S.-L.; Zhang, T.-H.; Chen, F.; Yin, X.-C. Improving multi-type license plate recognition via learning globally and contrastively. IEEE Trans. Intell. Transp. Syst. 2024, 25, 11092–11102.

- Ismail, A.; Mehri, M.; Sahbani, A.; Amara, N.E.B. A dual-stage system for real-time license plate detection and recognition on mobile security robots. Robotica 2025, 1–22.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Wang, D.; Tian, Y.; Geng, W.; Zhao, L.; Gong, C. LPR-Net: Recognizing Chinese license plate in complex environments. Pattern Recognit. Lett. 2020, 130, 148–156.

- Kim, T.-G.; Yun, B.-J.; Kim, T.-H.; Lee, J.-Y.; Park, K.-H.; Jeong, Y.; Kim, H.D. Recognition of vehicle license plates based on image processing. Appl. Sci. 2021, 11, 6292.

- Špaňhel, J.; Sochor, J.; Juránek, R.; Herout, A. Geometric alignment by deep learning for recognition of challenging license plates. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 3524–3529.

- Yoo, H.; Jun, K. Deep corner prediction to rectify tilted license plate images. Multimed. Syst. 2021, 27, 779–786.

- Newell, A.; Yang, K.; Deng, J. Stacked hourglass networks for human pose estimation. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part VIII 14. pp. 483–499.

- Cao, Z.; Simon, T.; Wei, S.-E.; Sheikh, Y. Realtime multi-person 2D pose estimation using part affinity fields. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7291–7299.

- Wang, K.; Lin, L.; Jiang, C.; Qian, C.; Wei, P. 3D human pose machines with self-supervised learning. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 1069–1082.

- Zhang, T.; Lin, H.; Ju, Z.; Yang, C. Hand gesture recognition in complex background based on convolutional pose machine and fuzzy Gaussian mixture models. Int. J. Fuzzy Syst. 2020, 22, 1330–1341.

- Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Mu, Y.; Tan, M.; Wang, X. Deep high-resolution representation learning for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3349–3364.

- Wang, Y.; Li, M.; Cai, H.; Chen, W.-M.; Han, S. Lite Pose: Efficient architecture design for 2D human pose estimation. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 13126–13136.

- Field Programmable Gate Array (FPGA). Available online: https://en.wikipedia.org/wiki/Field-programmable_gate_array (accessed on 1 June 2024).

- Xilinx KV260. Available online: https://xilinx.github.io/kria-apps-docs/kv260/2022.1/build/html/index.html (accessed on 17 June 2025).

- The Xilinx Deep Learning Processing Unit (DPU). Available online: https://docs.xilinx.com/r/3.2-English/pg338-dpu/Introduction?tocId=iBBrgQ7pinvaWB_KbQH6hQ (accessed on 1 June 2024).

- Xilinx Vitis-AI. Available online: https://www.xilinx.com/products/design-tools/vitis/Vitis-AI.html (accessed on 1 August 2024).

- Gong, F.; Ma, Y.; Zheng, P.; Song, T. A deep model method for recognizing activities of workers on offshore drilling platform by multistage convolutional pose machine. J. Loss Prev. Process Ind. 2020, 64, 104043.

- Xu, H.; Zhou, X.-D.; Li, Z.; Liu, L.; Li, C.; Shi, Y. EILPR: Toward end-to-end irregular license plate recognition based on automatic perspective alignment. IEEE Trans. Intell. Transp. Syst. 2022, 23, 2586–2595.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

- Liu, W.; Rabinovich, A.; Berg, A.C. ParseNet: Looking wider to see better. arXiv 2015, arXiv:1506.04579.

- Lee, H.; Hsiao, C.-H. Taiwan License Plate Dataset. Available online: https://huggingface.co/datasets/evan6007/TLPD (accessed on 4 June 2025).

- Osokin, D. Global context for convolutional pose machines. arXiv 2019, arXiv:1906.04104.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).