Abstract

With the rapid development of speech synthesis and deepfake technology, fake speech poses a severe challenge to voice authentication systems. Traditional detection methods generally rely on manual feature extraction and suffer from limited feature expressiveness and insufficient generalization across scenarios. To address this, this paper proposes an improved ResNet network based on the Convolutional Block Attention Module (CBAM) for end-to-end fake speech detection. The method introduces channel and spatial attention into the ResNet structure to strengthen the model’s attention to the temporal characteristics of speech, thereby improving its ability to distinguish real from fake speech. The model is trained end to end and processes the raw speech waveform directly; its residual structure alleviates the vanishing-gradient problem in deep networks, and the CBAM module strengthens the joint representation of local details and global context. Experiments are conducted on the ASVspoof2019 LA dataset with the equal error rate (EER) as the main evaluation metric. Compared with traditional deepfake speech detection methods, the proposed model achieves lower EERs (0.55% on the development set and 1.94% on the evaluation set) with only 0.36 M parameters, verifying the effectiveness of the CBAM attention mechanism for forged speech detection.

1. Introduction

1.1. Research Status

Voice forgery technology aims to deceive human listeners or Automatic Speaker Verification (ASV) systems by generating speech whose voiceprint features closely resemble those of a target speaker. Based on their technical principles, current mainstream forgery methods fall into five categories: voice mimicry [1], replay attacks [2], Text-to-Speech synthesis (TTS) [3], voice conversion (VC) [4], and adversarial examples in speech [5]. With the development of generative artificial intelligence and powerful deep neural networks (DNNs) [6], voice forgery technology has matured and become easier to carry out, posing new challenges to audio deepfake detection.

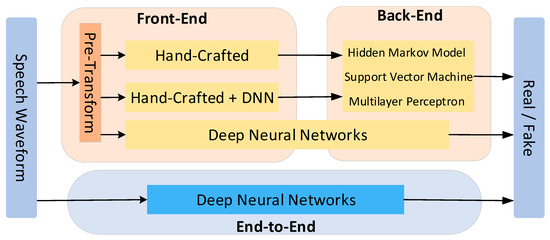

During the evolution of speech deepfake detection [7], detection methods have undergone a significant paradigm shift. Early research relied mainly on traditional statistical models, such as the Hidden Markov Model (HMM) [8] and the Gaussian Mixture Model (GMM), and then gradually moved to a two-stage architecture in which a front-end feature extractor and a back-end classifier work together [9,10,11,12,13]. With the breakthroughs of deep learning, end-to-end detection schemes based on DNNs have become increasingly popular; they take pre-transformed features as input [14,15,16] and fuse feature learning and decision-making in a single network. In traditional voice deepfake detection systems, the front-end module extracts acoustic representations from the speech signal, while the back-end module maps these features nonlinearly to a detection score. Traditional front-end processing relies mostly on digital signal processing. Widely used acoustic features include the fundamental frequency; the power spectrum; Linear Frequency Cepstral Coefficients (LFCCs); Mel-Frequency Cepstral Coefficients (MFCCs); Cepstral Mean and Variance Normalization (CMVN); Modified Group Delay (MGD); Relative Phase Shift (RPS); spectrograms; Constant-Q Cepstral Coefficients (CQCCs); the Constant-Q Transform (CQT); and other spectral features, together with their variants and combinations [17,18,19,20,21]. These hand-crafted features capture the time–frequency characteristics of speech signals through mathematical transformations.

In traditional detection systems, the front end uses hand-crafted discriminative features designed by experts, and the back end classifies them directly with a GMM or a Support Vector Machine (SVM). Among the hand-crafted acoustic features, the CQCC was established as a core feature by the ASVspoof challenge evaluation benchmark owing to its excellent time–frequency resolution [22]. Yang et al. proposed sub-band CQCC features that increase sensitivity to individual frequency bands and thereby improve detection performance [23]. Das et al. [24] exploited the complementarity of information across feature domains by fusing eight hand-crafted features and feeding them to a Multilayer Perceptron (MLP) classifier. Sahidullah et al. [25] used the LFCC and the MFCC together with their first- and second-order dynamic (delta) coefficients for forged speech detection and showed that detailed spectral information and dynamic features are beneficial for this task. With the development of deep neural networks, DNN-based forged speech detection systems have gradually become mainstream: the front end extracts basic acoustic representations through signal processing or shallow networks, and the back end relies on deep networks to mine high-order features autonomously and perform the genuine/fake classification. Lavrentyeva et al. [26] built a joint detection framework that combines FFT, LFCC, and CMVN features with a convolutional neural network (CNN) classifier. Li et al. [27] demonstrated the synergy between CQT features and Residual Convolutional Neural Networks (ResNets), achieving good results and showing the strong performance of CQT features in forged speech detection. Alzantot et al. [28] used the spectrum obtained by the Short-Time Fourier Transform (STFT) as input to a residual convolutional network and achieved good detection performance. Chen et al. [5] fused three models (GMM + CQCC-ResNet + MFCC-ResNet) into a highly robust solution for speech anti-spoofing, particularly in the replay attack scenario. Monteiro et al. [29] proposed a ResNet model trained on, and ensembled over, multiple speech features, enabling the detector to handle a variety of spoofing attacks.

Current research on forged speech detection is gradually shifting toward end-to-end models. Architectures built on the Sinc convolution layer learn key frequency-domain representations directly from the raw audio waveform through a bank of parameterized filters and show clear advantages in training stability and classification accuracy [30,31,32]. Among them, the RawNet2 model, which uses a Sinc convolution layer, was adopted as a baseline for the ASVspoof2021 challenge [33]. Figure 1 shows a classification of forged speech detection system architectures.

Figure 1.

Classification of the forged voice detection system architecture.

1.2. Proposed Method

The design of the network structure, loss function, and training method can all improve the performance of a voice deepfake detection model, but the model’s potential is fundamentally bounded by the information captured in its input features. Hand-crafted features are time consuming and labor intensive to design, and they can discard information in the speech signal, which severely affects the detection of unknown attacks. For example, the CQT has high computational complexity and contains much redundant information; the CQCC requires further processing on top of the CQT, adding computational overhead; and the linear frequency axis of the LFCC does not match the nonlinear perception of the human ear, giving it low resolution in the low-frequency region. We therefore feed the one-dimensional (1D) raw speech waveform directly into the model for feature learning. We pay special attention to how forged speech manifests in the time domain: the residual connections of ResNet preserve the original speech features, and the CBAM attention mechanism captures forgery cues along both the channel and the spatial (temporal) dimensions. Unlike traditional architectures, using the raw 1D waveform as input preserves the original speech features as fully as possible and keeps the speech information intact. Channel attention dynamically focuses on key feature channels to suppress noise, while spatial attention focuses on key time regions by re-weighting the time dimension, which strengthens local feature extraction. Together, these let the network capture the subtle differences between real and fake voices during learning, improving both the accuracy and the generalization of fake voice detection.

The main contributions of this study are as follows:

- We propose the CBAM-ResNet model, which uses 1D convolutions and residual blocks to greatly reduce the computational cost and keep the model lightweight.

- We introduce a 1D-CBAM attention mechanism that combines channel attention and spatial attention to dynamically focus on the key features of the 1D signal and capture the cues that distinguish forged speech, improving the model’s ability to discriminate between real and forged speech.

- During training, random noise, time-shift perturbation, and volume scaling are applied to the speech to improve the robustness of the model, and a Dropout module is added to the ResNet model to improve its generalization. A cross-dataset evaluation between ASVspoof2019 and ASVspoof2015 shows that the model generalizes well.

The rest of this paper is organized as follows. Section 2 introduces the ResNet network structure, the CBAM attention mechanism, and the Focal Loss function. Section 3 describes the structure and training strategy of the proposed model. Section 4 presents the experimental configuration, the ASVspoof2019 dataset, and the evaluation metrics. Section 5 analyzes the experimental results, including cross-dataset experiments. Section 6 summarizes this work and outlines future directions.

2. Methods

2.1. ResNet Network

ResNet was first proposed by He et al. [34]. Its core innovation is the residual module with skip connections: by adding the input signal directly to the output of the nonlinear transformation layers, the structure lets gradients bypass deep-layer parameters and reach shallow layers directly during backpropagation. This effectively alleviates the vanishing-gradient problem caused by stacking layers and removes the optimization bottleneck of traditional deep networks. ResNet not only solved the problem of training deep models but also became a milestone in deep architecture design. Although it was originally applied to image classification, its strong feature abstraction ability has gradually been extended to voice security [35,36], especially deepfake voice detection, where it has proven able to capture traces of time- and frequency-domain tampering efficiently. Because the residual connections retain the original features, the network can better capture the subtle differences between real and forged voices during learning, improving the accuracy and generalization of forged voice detection. This section introduces ResNet18, one of the most commonly used networks in deepfake speech detection. Since the input size of speech spectral features varies between studies, the configuration of Zhang et al. [37] is used as an example here; the network structure is shown in Table 1.

Table 1.

ResNet network structure.

The residual modules in ResNet18 come in two designs, shown in Figure 2. The standard residual block stacks convolution layers with 3 × 3 kernels, and its skip connection is an identity mapping, so the output has the same spatial size and number of channels as the input. The downsampling residual block compresses features with a 3 × 3 convolution layer of stride 2, which halves the spatial size of the input feature map and doubles the number of channels. To match these output dimensions, its skip connection also applies a convolution with stride 2, so the final output is half the size of the input and has twice as many channels.
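To make the two block types concrete, the following NumPy sketch implements a 1D analogue of both residual blocks (the proposed model is 1D, whereas the ResNet18 blocks in Figure 2 use 3 × 3 2D kernels). It is an illustration under stated assumptions, not the configuration of Zhang et al. [37]: BatchNorm is omitted, the weights are passed in explicitly, and the downsampling shortcut is assumed to be a 1-tap (1 × 1-style) strided convolution, which the text does not specify.

```python
import numpy as np


def conv1d(x, w, stride=1):
    """Naive multi-channel 1D convolution (cross-correlation) with zero padding k // 2.

    x: (C_in, T) input, w: (C_out, C_in, K) kernel -> (C_out, T_out).
    """
    c_out, c_in, k = w.shape
    assert x.shape[0] == c_in, "channel mismatch"
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad)))
    t_out = (x.shape[1] + 2 * pad - k) // stride + 1
    out = np.empty((c_out, t_out))
    for t in range(t_out):
        window = xp[:, t * stride : t * stride + k]      # (C_in, K)
        out[:, t] = np.einsum("oik,ik->o", w, window)   # sum over C_in and K
    return out


def relu(x):
    return np.maximum(x, 0.0)


def residual_block(x, w1, w2, w_proj=None, stride=1):
    """Two 3-tap convolutions plus a skip connection (BatchNorm omitted).

    Standard block: stride=1 and w_proj=None, so the skip is an identity mapping.
    Downsampling block: stride=2 with a projection kernel w_proj, so the skip path
    halves the length and changes the channel count exactly like the main path.
    """
    y = relu(conv1d(x, w1, stride))   # first conv may downsample
    y = conv1d(y, w2, 1)              # second conv keeps the length
    shortcut = x if w_proj is None else conv1d(x, w_proj, stride)
    return relu(y + shortcut)         # element-wise addition, then activation


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 100))     # 4 channels, 100 time steps

# Standard block: same length and channel count as the input.
same = residual_block(x, rng.standard_normal((4, 4, 3)), rng.standard_normal((4, 4, 3)))
print(same.shape)   # (4, 100)

# Downsampling block: half the length, twice the channels.
down = residual_block(
    x,
    rng.standard_normal((8, 4, 3)),
    rng.standard_normal((8, 8, 3)),
    w_proj=rng.standard_normal((8, 4, 1)),
    stride=2,
)
print(down.shape)   # (8, 50)
```

With all-zero convolution weights the standard block reduces to ReLU applied to the identity path, which shows that the skip connection alone carries the signal through.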

Figure 2.

ResNet18 residual block.

2.2. CBAM Attention Mechanism

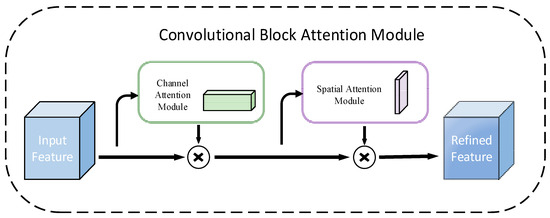

The CBAM attention mechanism is an efficient, lightweight dual attention mechanism consisting of a channel attention module followed by a spatial attention module. It dynamically increases the weights of key features in a convolutional neural network and suppresses irrelevant noise; its core idea is to make the model more sensitive to important regions through adaptive feature recalibration. It is widely used in tasks such as image classification [38], object detection [39], and speech forgery detection [40]. Because of its modular design, CBAM can easily be inserted into existing CNN architectures to enhance their feature representations [41].

As shown in Figure 3, the CBAM module consists of two sub-modules applied in sequence: channel attention and spatial attention. The channel attention module first produces a weight distribution over the channel dimension, which is used to reweight the input features channel by channel. The recalibrated features are then passed to the spatial attention module, which learns a saliency distribution over the spatial dimension, and reweighting by this map yields the refined output features. By focusing on key information in different dimensions in successive stages, this cascade adaptively enhances the original features. The computation is as follows:

Figure 3.

CBAM block.

$$F' = M_c(F) \otimes F, \qquad F'' = M_s(F') \otimes F'$$

In the formula, $\otimes$ denotes element-wise multiplication (with the attention weights broadcast over the remaining dimension), $F$ is the input feature, $M_c(F)$ is the channel attention map output by the channel attention module, $F'$ is the intermediate feature obtained by recalibrating the input with the channel attention weights, $M_s(F')$ is the spatial attention map output by the spatial attention module, and $F''$ is the final output feature of the CBAM module.
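The cascade in these equations reduces to two broadcast multiplications. The NumPy sketch below assumes a (C, T) layout for 1D features, as in the proposed model, and takes the two attention modules as arbitrary callables; the constant maps in the usage lines are placeholders for illustration, not learned modules.

```python
import numpy as np


def cbam(F, channel_attention, spatial_attention):
    """CBAM cascade for a (C, T) feature map.

    channel_attention(F) must return a (C, 1) map Mc with values in (0, 1);
    spatial_attention(F') must return a (1, T) map Ms with values in (0, 1).
    """
    Mc = channel_attention(F)        # one weight per channel
    F_prime = Mc * F                 # F' = Mc(F) * F, broadcast along time
    Ms = spatial_attention(F_prime)  # one weight per time step
    return Ms * F_prime              # F'' = Ms(F') * F', broadcast along channels


F = np.ones((3, 5))
out = cbam(
    F,
    channel_attention=lambda f: np.full((f.shape[0], 1), 0.5),   # placeholder map
    spatial_attention=lambda f: np.full((1, f.shape[1]), 0.2),   # placeholder map
)
print(out.shape)  # (3, 5); every entry is 0.5 * 0.2 * 1 = 0.1
```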

2.3. Focal Loss

Fake speech datasets usually contain many kinds of spoofing attacks, and the attack types differ markedly in their characteristics and in how difficult they are to detect. Real and fake samples are also unbalanced; the ASVspoof2019 LA training set, for example, contains 2580 real and 22,800 fake utterances. Focal Loss [42] is a loss function designed specifically for class imbalance, so this study adopts it as the training loss.

Focal Loss has a dual adjustment mechanism. The balancing factor $\alpha$ compensates for the numerical disadvantage of the minority class, alleviating the model bias caused by the imbalance between the number of real speech samples and the highly variable deepfake samples. The focusing parameter $\gamma$ nonlinearly modulates the loss according to the prediction confidence: it suppresses the gradient contribution of easy samples and increases the loss weight of highly realistic deceptive samples, forcing the model to refine the decision boundary in low-confidence regions and thus to focus dynamically on hard samples. This mechanism can substantially improve the model’s ability to identify adversarially generated samples and cross-domain attacks [43]. It can also improve robustness in complex acoustic environments by suppressing overfitting to the majority class. The formula is as follows:

$$FL(p_t) = -\alpha_t \, (1 - p_t)^{\gamma} \log(p_t)$$

In the formula, $p_t$ is the model’s predicted probability for the true class, $\alpha_t$ is the class weight, set to 0.25 in this paper to balance the numbers of positive and negative samples, and $\gamma$ is the focusing parameter, set to 2 in this experiment to control how strongly the weights of easy samples are attenuated relative to hard ones.
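As a minimal NumPy sketch of the formula above for the binary real/fake case, assuming that label 1 denotes the class weighted by $\alpha$ (the paper does not state which class this is) and that the loss is averaged over the batch (also an assumption):

```python
import numpy as np


def focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-12):
    """Binary focal loss FL(p_t) = -alpha_t * (1 - p_t)**gamma * log(p_t), batch mean.

    p: predicted probability of class 1, shape (N,)
    y: integer labels in {0, 1}, shape (N,)
    """
    p = np.clip(np.asarray(p, dtype=float), eps, 1.0 - eps)  # avoid log(0)
    y = np.asarray(y)
    p_t = np.where(y == 1, p, 1.0 - p)              # probability of the true class
    alpha_t = np.where(y == 1, alpha, 1.0 - alpha)  # class-balancing weight
    return float(np.mean(-alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)))


# An easy sample (p_t = 0.9) is down-weighted by (1 - 0.9)**2 = 0.01 relative
# to the alpha-weighted cross-entropy obtained with gamma = 0.
easy_fl = focal_loss([0.9], [1], gamma=2.0)
easy_ce = focal_loss([0.9], [1], gamma=0.0)
print(easy_fl / easy_ce)  # approximately 0.01
```

With $\alpha = 0.25$ for class 1, samples of class 0 receive weight 0.75, so which of the real/fake classes is encoded as class 1 determines the direction of the balancing.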

3. Network Model Structure

3.1. Model Structure

The structure of the proposed network is shown in Figure 4. The input 1D raw speech waveform first passes through a wide convolution with a 1 × 7 kernel along the time axis to capture longer-range speech features, followed by Batch Normalization (BN) to accelerate convergence and a ReLU activation to introduce nonlinearity. A max pooling operation with stride 4 then performs preliminary downsampling, which compresses the time dimension, enlarges the receptive field, and improves robustness to local disturbances. The multi-level residual stack consists of three ResNet-style blocks interleaved with max pooling layers. Each residual block contains three 1 × 3 Conv1D layers with BN and ReLU between them and, together with the CBAM block and a skip connection, progressively extracts high-order time-domain features; a max pooling layer with stride 4 then progressively compresses the time resolution. After the high-order time-domain features have been extracted, the CBAM attention module recalibrates the feature responses through its two paths, channel attention and spatial attention, making the model more sensitive to forgery traces and suppressing irrelevant noise. Global max pooling then takes the maximum along the time dimension, compressing the feature map into a vector that keeps the most salient discriminative features. Two linear layers map these features and add nonlinear expressive capacity, and Dropout randomly masks neurons to prevent the model from overfitting to local artifacts in the training data. Finally, the output linear layer produces the probability distribution over the forged and real classes.
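The effect of the stride-4 pooling stages on the time axis can be checked with simple arithmetic. The sketch below assumes the 6-s, 16-kHz input of Section 3.3 (96,000 samples), "SAME"-padded convolutions that leave the length unchanged, max pooling whose kernel equals its stride (the kernel size is not stated in the text), and four pooling stages: one in the stem and one after each of the three residual blocks.

```python
def time_lengths(num_samples=6 * 16_000, pool_strides=(4, 4, 4, 4)):
    """Time-axis length after each pooling stage (convolutions preserve length)."""
    lengths = [num_samples]
    for s in pool_strides:
        lengths.append(lengths[-1] // s)   # floor division, as in unpadded pooling
    return lengths


lengths = time_lengths()
print(lengths)                        # [96000, 24000, 6000, 1500, 375]
hop = 6 * 16_000 // lengths[-1]       # input samples per output step
print(hop, 1000 * hop / 16_000)       # 256 16.0  (256 samples = 16 ms at 16 kHz)
```

Under these assumptions, global max pooling finally selects, for each channel, the strongest response among 375 time steps, each corresponding to a 16-ms hop of the input.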

Figure 4.

Network model structure.

3.2. 1D Attention Module

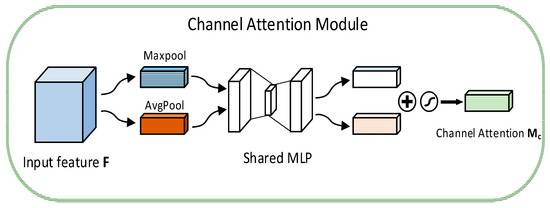

Channel Attention Module: As shown in Figure 5, the input feature $F \in \mathbb{R}^{C \times T}$ is first aggregated along the time dimension by two parallel pooling paths. Average pooling yields the average feature vector $F^{c}_{avg}$, which reflects the global distribution, and max pooling yields the max feature vector $F^{c}_{max}$, which captures salient local responses. Both vectors are passed through a parameter-sharing MLP, and the two outputs are added element-wise and passed through a Sigmoid to produce the channel attention map $M_c \in \mathbb{R}^{C \times 1}$. In the 1D channel attention, a 1D convolutional layer replaces the MLP, and the hidden (intermediate) dimension is reduced to $C/r$ by a compression ratio $r$ to control model complexity. The formula is as follows:

$$M_c(F) = \sigma\big(W_1(W_0(F^{c}_{avg})) + W_1(W_0(F^{c}_{max}))\big)$$

where $\sigma$ denotes the Sigmoid function and $W_0 \in \mathbb{R}^{C/r \times C}$ and $W_1 \in \mathbb{R}^{C \times C/r}$ are the shared MLP weights.
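The channel attention computation can be sketched in NumPy for a (C, T) feature map. Because the pooled descriptors have length 1 along time, a kernel-size-1 Conv1D is equivalent to the matrix products used here. Following the original CBAM design, we assume a ReLU between $W_0$ and $W_1$; the reduction ratio r = 4 in the usage lines is illustrative, since the paper’s value is not given in this section.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention_1d(F, W0, W1):
    """Mc(F) = sigmoid(W1 relu(W0 f_avg) + W1 relu(W0 f_max)) for F of shape (C, T).

    W0: (C // r, C) and W1: (C, C // r) are shared by the two pooling branches.
    Returns a (C, 1) map so that Mc * F broadcasts along time.
    """
    f_avg = F.mean(axis=1)                        # (C,) global average over time
    f_max = F.max(axis=1)                         # (C,) global max over time
    mlp = lambda v: W1 @ np.maximum(W0 @ v, 0.0)  # shared two-layer MLP
    return sigmoid(mlp(f_avg) + mlp(f_max))[:, None]


rng = np.random.default_rng(0)
C, T, r = 8, 50, 4
F = rng.standard_normal((C, T))
Mc = channel_attention_1d(F, rng.standard_normal((C // r, C)), rng.standard_normal((C, C // r)))
print(Mc.shape)  # (8, 1)
```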

Figure 5.

Channel attention module structure.

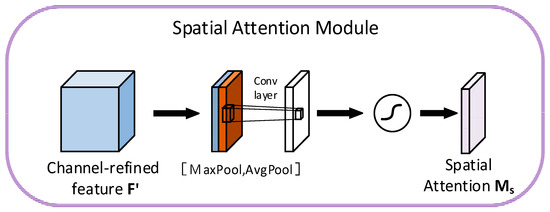

Spatial Attention Module: As shown in Figure 6, spatial attention takes the channel-refined feature $F'$ as input. Global max pooling and global average pooling along the channel dimension first produce two single-channel statistical features, $F^{s}_{max}$ and $F^{s}_{avg} \in \mathbb{R}^{1 \times T}$. These are concatenated along the channel dimension into a $2 \times T$ map, and a 1D convolution $f^{k}$ fuses the channel information. Finally, the Sigmoid function normalizes the result into the spatial attention map. The formula is as follows:

$$M_s(F') = \sigma\big(f^{k}([F^{s}_{avg}; F^{s}_{max}])\big)$$

where $\sigma$ denotes the Sigmoid function, $f^{k}$ is a 1D convolution with kernel size $k$, and the output $M_s(F')$ has dimension $1 \times T$.
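A corresponding NumPy sketch of the spatial attention branch follows. The kernel size k = 7 in the usage lines is an assumption borrowed from the original 2D CBAM, which uses a 7 × 7 kernel; the paper’s 1D kernel size is not recoverable from the text. Zero padding keeps the output at T time steps.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def spatial_attention_1d(F_prime, w, b=0.0):
    """Ms(F') = sigmoid(conv1d([avg_c(F'); max_c(F')])) for F' of shape (C, T).

    w: (2, k) kernel with odd k; returns a (1, T) map that broadcasts over channels.
    """
    stats = np.stack([F_prime.mean(axis=0), F_prime.max(axis=0)])  # (2, T)
    k = w.shape[1]
    padded = np.pad(stats, ((0, 0), (k // 2, k // 2)))             # keep length T
    T = F_prime.shape[1]
    logits = np.array([np.sum(w * padded[:, t:t + k]) for t in range(T)]) + b
    return sigmoid(logits)[None, :]


rng = np.random.default_rng(0)
F_prime = rng.standard_normal((8, 50))
Ms = spatial_attention_1d(F_prime, rng.standard_normal((2, 7)))
print(Ms.shape)  # (1, 50)
refined = Ms * F_prime  # F'' = Ms(F') * F', one weight per time step
```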

Figure 6.

Spatial attention module structure.

3.3. Data Processing

When a dataset contains raw speech recordings of different lengths, the samples usually need to be aligned. Following the processing method in [44], we keep 6 s of each sample at a sampling rate of 16 kHz, and these 6-s samples are fed directly into the network for end-to-end training. Since all convolutional layers use "SAME" padding, the input length is 9.6 × 10⁴ samples (6 s × 16,000 samples/s), and this length is reduced only by the pooling layers.
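A minimal sketch of the 6-s alignment step is given below. The text only states that 6 s of each sample are retained; cropping from the start of the recording and repeat-padding shorter recordings (tiling the waveform until it reaches the target length) are our assumptions, chosen to avoid long runs of digital silence, and may differ from the exact procedure in [44].

```python
import numpy as np

SAMPLE_RATE = 16_000
DURATION_S = 6
TARGET_LEN = SAMPLE_RATE * DURATION_S  # 96,000 samples


def fix_length(x, target=TARGET_LEN):
    """Crop or repeat-pad a 1D waveform to exactly `target` samples."""
    x = np.asarray(x)
    if x.size == 0:
        raise ValueError("empty waveform")
    if x.size >= target:
        return x[:target]                       # keep the first 6 s
    reps = int(np.ceil(target / x.size))
    return np.tile(x, reps)[:target]            # repeat the clip, then trim


long_clip = np.random.default_rng(0).standard_normal(120_000)   # 7.5 s
short_clip = np.arange(40_000, dtype=float)                     # 2.5 s
print(fix_length(long_clip).shape, fix_length(short_clip).shape)  # (96000,) (96000,)
```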

3.4. Data Augmentation

In general, the training sets of fake speech detection datasets are much smaller than their evaluation sets, and fewer fake-sample generation techniques appear in training than in evaluation. Fake speech detection therefore aims to train, on limited data and attack methods, a model that can still cope with unknown attacks. We thus add a data augmentation module during training to improve the model’s generalization.

To simulate background noise in real scenes, Gaussian noise with a mean of 0 and a standard deviation of 0.005 is added to the speech waveform with a probability of 30%. Slightly perturbing the input in this way prevents the model from overfitting to the "clean" speech in the training set. Time-shift perturbation circularly shifts the waveform along the time axis by a random amount between −100 and 100 samples, which breaks the dependence on absolute time positions and forces the model to focus on local temporal features. Volume scaling multiplies the waveform amplitude by a random factor in [0.8, 1.2], simulating the volume differences caused by different recording devices, speaking habits, or environments and preventing the model from relying on absolute amplitude.
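The three augmentations can be sketched as a single NumPy function. The noise probability, noise standard deviation, shift range, and gain range are the values stated above; applying the shift and gain to every sample, the order of the three operations, and the use of uniform distributions for the shift and gain are our assumptions, since the text does not specify them.

```python
import numpy as np


def augment(x, rng, noise_p=0.3, noise_std=0.005, max_shift=100, gain_range=(0.8, 1.2)):
    """Return an augmented copy of the 1D waveform x (the input is not modified)."""
    y = np.asarray(x, dtype=float).copy()
    if rng.random() < noise_p:                               # Gaussian noise with p = 0.3
        y += rng.normal(0.0, noise_std, size=y.shape)
    shift = int(rng.integers(-max_shift, max_shift, endpoint=True))  # in [-100, 100]
    y = np.roll(y, shift)                                    # circular time shift
    y *= rng.uniform(*gain_range)                            # global volume scaling
    return y


rng = np.random.default_rng(0)
clean = np.sin(2 * np.pi * 440 * np.arange(16_000) / 16_000)  # 1 s, 440 Hz tone
noisy = augment(clean, rng)
print(noisy.shape)  # (16000,)
```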

4. Experimental Configuration

4.1. Training Strategy

The AdamW optimizer is used for training, with a learning rate of 1 × 10⁻⁴, a weight decay of 1 × 10⁻⁴, momentum parameters (β₁, β₂) = …, and a batch size of 32. A cosine annealing learning-rate scheduler with a cycle length of 50 is used, so the learning rate completes one full cycle of decrease and increase every 50 steps. The experimental environment is shown in Table 2.
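The schedule described above can be written as a closed-form function of the step index. This pure-Python sketch assumes that the 50-step cycle is one full period (25 steps of decrease followed by 25 steps of increase) and that the minimum learning rate is 0, neither of which the text states. If PyTorch’s `torch.optim.lr_scheduler.CosineAnnealingLR` were used instead, note that its `T_max` argument counts only the decreasing half of the period.

```python
import math


def cosine_lr(step, base_lr=1e-4, min_lr=0.0, period=50):
    """Periodic cosine schedule: base_lr at the start of each `period`-step cycle,
    min_lr at mid-cycle, then back up to base_lr at the end of the cycle."""
    phase = (step % period) / period
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(2.0 * math.pi * phase))


for step in (0, 12, 25, 37, 50):
    print(step, f"{cosine_lr(step):.2e}")
```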

Table 2.

Experimental environment.

4.2. Experimental Dataset

This paper uses the ASVspoof2019 dataset [22], which covers two typical attack scenarios: Logical Access (LA) and Physical Access (PA). The LA scenario models remote users accessing an ASV system through uncontrolled terminal devices, and its attacks are mainly generative forgeries (speech synthesis, voice conversion, and combinations of the two). The PA scenario models speech capture in a controlled hardware environment, and its attacks are acoustic replay attacks. Because this work focuses on generative forged speech, experiments are conducted only on the LA scenario.

ASVspoof2019 LA is divided into training, development, and evaluation sets. The training set contains 2580 real speech samples and 22,800 synthetic forged samples generated with four TTS and two VC techniques. The development set consists of 1484 real utterances from target speakers, 1064 real utterances from non-target speakers, and 22,296 forged samples produced with the same forgery techniques. The evaluation set contains 5370 real utterances from target speakers, 1985 real utterances from non-target speakers, and 63,882 forged samples generated by seven TTS and six VC techniques. Notably, two of the thirteen forgery techniques in the evaluation set also appear in the training set; these are defined as known attack types, and the remaining 11 are unknown techniques used to evaluate the model’s generalization. Table 3 shows the data distribution of the dataset, and Tables 4 and 5 list the attack types.

Table 3.

Distribution of the dataset.

Table 4.

Techniques used by known attack types in the ASVspoof2019 LA dataset.

Table 5.

Techniques used by unknown attack types in the ASVspoof2019 LA dataset.

4.3. Evaluation Metrics

Deepfake voice detection is a binary classification problem, and the equal error rate (EER) is a core metric for evaluating such detectors, so we adopt the EER as the evaluation metric of this experiment. Classifying fake speech as real is a false acceptance, and classifying real speech as fake is a false rejection. The EER is the error rate at the threshold where the False Acceptance Rate (FAR) equals the False Rejection Rate (FRR). The FAR, FRR, and EER are computed as follows:

$$\mathrm{FAR}(\theta) = \frac{N_{fa}(\theta)}{N_{spoof}}, \qquad \mathrm{FRR}(\theta) = \frac{N_{fr}(\theta)}{N_{bona}}, \qquad \mathrm{EER} = \mathrm{FAR}(\theta_{EER}) = \mathrm{FRR}(\theta_{EER})$$

where $N_{spoof}$ and $N_{bona}$ are the total numbers of forged and real speech samples, respectively; $N_{fa}(\theta)$ is the number of forged samples with a detection score greater than the threshold $\theta$; and $N_{fr}(\theta)$ is the number of real samples with a detection score less than or equal to $\theta$. The smaller the EER, the better the detector.
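The EER can be computed from detection scores by sweeping the threshold over all observed scores. The NumPy sketch below follows the convention of the formulas above (a higher score means "more likely real") and returns the point where FAR and FRR are closest, averaging the two rates there; this discrete approximation is a common simplification, not the official ASVspoof scoring script.

```python
import numpy as np


def compute_eer(bona_scores, spoof_scores):
    """Return (EER, threshold) with FAR(t) = P(spoof > t) and FRR(t) = P(bona <= t)."""
    bona = np.sort(np.asarray(bona_scores, dtype=float))
    spoof = np.sort(np.asarray(spoof_scores, dtype=float))
    thresholds = np.concatenate([bona, spoof])
    # searchsorted(..., side="right") counts the scores <= t.
    frr = np.searchsorted(bona, thresholds, side="right") / bona.size
    far = 1.0 - np.searchsorted(spoof, thresholds, side="right") / spoof.size
    i = int(np.argmin(np.abs(far - frr)))
    return float((far[i] + frr[i]) / 2.0), float(thresholds[i])


eer, thr = compute_eer([0.9, 0.8, 0.35], [0.1, 0.2, 0.4])
print(f"EER = {100 * eer:.2f}% at threshold {thr}")  # EER = 33.33% at threshold 0.35
```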

4.4. Lightweight Analysis

We analyzed the model’s efficiency under the experimental configuration in Table 2. The model has 0.36 M trainable parameters and a model file size of only 1.38 MB, making it lightweight enough for embedded and mobile deployment. Inference takes 4.86 ms on the GPU, and the model occupies only 23.08 MB of GPU memory, so it can run on mid- and low-end GPUs. Inference on the CPU takes 158.50 ms, which is still above, but not far from, the latency requirement for real-time audio detection (<100 ms). Table 6 shows the analysis data.
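As a quick consistency check of these figures, the arithmetic below assumes that the weights are stored as 32-bit floats (4 bytes per parameter) and that the CPU latency refers to one 6-s input clip; neither assumption is stated in the text. Under these assumptions, 0.36 M parameters occupy about 1.37 MiB, in line with the reported 1.38 MB file (the parameter count is rounded), and the CPU real-time factor is well below 1.

```python
params = 0.36e6            # reported trainable parameters (rounded)
bytes_per_param = 4        # assumption: float32 weights
size_bytes = params * bytes_per_param
print(f"{size_bytes / 1e6:.2f} MB, {size_bytes / 2**20:.2f} MiB")  # 1.44 MB, 1.37 MiB

cpu_latency_s = 158.50e-3  # reported CPU inference time
clip_s = 6.0               # assumption: latency measured per 6-s input clip
rtf = cpu_latency_s / clip_s
print(f"real-time factor = {rtf:.4f}")  # real-time factor = 0.0264
```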

Table 6.

Model efficiency analysis table.

5. Results Analysis

5.1. Comparisons with Other Methods

In Table 7, we show the main results obtained by comparing the proposed network with baselines and advanced solutions on the ASVspoof2019 dataset.

Table 7.

EER (%) comparison of all systems on the development and evaluation sets of the ASVspoof2019 LA dataset.

The baselines are the two ASVspoof2019 challenge baselines (LFCC + GMM and CQCC + GMM) and the end-to-end ASVspoof2021 challenge baseline (RawNet).

Our model shows clear advantages in both performance and efficiency. It reaches an EER of 0.55% on the development set and 1.94% on the evaluation set, well below the ASVspoof challenge baselines (for example, 9.57% for LFCC + GMM, 8.09% for CQCC + GMM, and 7.46% for RawNet). It also performs strongly against the advanced end-to-end detection model Sinc + RawNet. At the same time, thanks to its lightweight design, it has only 0.36 M parameters, less than 1/85 of those of the comparison model 3 features + CNN, which greatly reduces computational and storage overhead while maintaining detection accuracy. We attribute this advantage to the combination of the CBAM attention mechanism and the 1D ResNet: the CBAM module improves feature discrimination by dynamically focusing on key forgery artifacts in the time domain, while the 1D convolutional structure avoids the redundant computation of traditional 2D spectral feature extraction. Our solution therefore not only achieves better detection performance but also offers an efficient and reliable path toward real-time deployment at the edge.

5.2. Cross-Dataset Experiments

Since the ASVspoof2015 training set contains relatively old voice forgery methods, we test the ASVspoof2015 development and evaluation sets with the model pre-trained on the more recent ASVspoof2019 dataset. As shown in Table 8, the pre-trained model achieves an EER of 4.94% on the ASVspoof2015 validation set, clearly better than the baseline model. This indicates that the model generalizes well across attack types and shifts in data distribution, and that the CBAM attention mechanism still captures forgery features effectively when facing voices synthesized by different forgery technologies. Figure 7 shows the Receiver Operating Characteristic (ROC) curve and the Detection Error Tradeoff (DET) curve of the cross-dataset experiment. The AUC, the area under the ROC curve, quantifies the overall classification ability of the model: an AUC of 0.953 means that a randomly chosen positive sample receives a higher score than a randomly chosen negative sample with probability 0.953. The model therefore discriminates well between real and forged voices; in voice anti-spoofing, an AUC above 0.9 is usually regarded as excellent. The DET curve plots the false rejection rate against the false acceptance rate, and the closer the curve lies to the lower-left corner, the better the model. The validation set curve in the figure lies near the lower-left corner, indicating that the model maintains a low FRR at a low FAR.
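The probabilistic reading of the AUC can be made concrete: the AUC equals the fraction of (positive, negative) score pairs in which the positive sample scores higher, with ties counted as one half (the Mann–Whitney formulation). The NumPy sketch below computes it by brute force over all pairs, which is fine for illustration but quadratic in memory; the scores in the usage line are made up.

```python
import numpy as np


def auc_pairwise(pos_scores, neg_scores):
    """AUC = P(s_pos > s_neg) + 0.5 * P(s_pos == s_neg) over all pos/neg pairs."""
    pos = np.asarray(pos_scores, dtype=float)[:, None]   # (P, 1)
    neg = np.asarray(neg_scores, dtype=float)[None, :]   # (1, N)
    return float((pos > neg).mean() + 0.5 * (pos == neg).mean())


print(auc_pairwise([0.9, 0.4], [0.5, 0.1]))  # 0.75: 3 of the 4 pairs are ordered correctly
```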

Table 8.

EER (%) obtained by testing the pre-trained model using the ASVSpoof2015 development set and evaluation set.

Figure 7.

ROC and DET curves of the cross-dataset test on the ASVspoof2015 development and evaluation sets. The diagonal reference line in the ROC plot represents a classifier that guesses at random; the ROC curve lies above it, indicating that the classifier performs better than random guessing. The reference line in the DET plot helps locate the EER point: its intersection with the DET curve is the EER, where the two error rates are equal.

5.3. Ablation Experiment

In order to evaluate the contribution of data augmentation and CBAM attention mechanism to the performance of the forged speech detection model, we conducted a series of ablation experiments on the ASVspoof2019 dataset. As shown in Table 9, we compared various model configurations by selectively disabling or replacing key modules in the model. Comparison between Experiment 1 and Experiment 2: Adding only the data augmentation module reduced the EER by 2.24%. By increasing the diversity of training data, the model’s robustness to input changes was improved. Comparison between Experiment 1 and Experiment 3, Experiment 1 and Experiment 4: Adding only the channel attention module reduced the EER by 3.26%, and adding only the spatial attention module reduced the EER by 3.21%. The channel attention mechanism suppresses noisy channels and enhances key feature channels and discriminative features through adaptive feature channel weighting. The spatial attention mechanism focuses on key time regions, improves local feature extraction capabilities, and highlights time segments through attention weights in the time dimension. Comparison between Experiment 3, Experiment 4, and Experiment 5: The effects of using channel or spatial attention alone are similar, and the combination of the two shows better performance, indicating that the two pay attention to different aspects of feature information, which further strengthens the model’s learning of forged features and improves model performance. We added the ECA-NET (Efficient Channel Attention Network) attention mechanism as a control experiment to verify the effectiveness of the CBAM module. During the experiment, only the CBAM module was replaced, and all other hyperparameters were kept the same. The ECA-NET attention mechanism uses one-dimensional convolution to capture local cross-channel interactions. 
Comparing Experiments 3, 5, and 7 shows that the CBAM module performs clearly better than ECA-NET attention in the forged speech detection task. Experiments 2, 5, and 7: adding data augmentation on top of channel and spatial attention brings an additional EER reduction of 0.39%, and the combined improvement is nearly twice that obtained with data augmentation alone. Data augmentation provides richer training samples, which enables the attention module to learn more robust feature selection strategies. These findings support incorporating both techniques into the proposed model. Together they strengthen the model's learning of forgery features and improve its generalization ability and robustness.
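To make the contrast between the two channel attention designs concrete, the following NumPy sketch implements ECA-style attention on a 1D feature map of shape (channels, time). It applies global average pooling over time, a 1D convolution across neighboring channels, and a sigmoid gate. The adaptive kernel-size rule (γ = 2, b = 1) follows the original ECA-Net paper, and it is an assumption about how ECA-NET was configured in this experiment. The parameter count for CBAM's channel branch assumes a bias-free shared MLP with reduction ratio r. All function names are hypothetical.

```python
import math

import numpy as np


def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive odd kernel size from the ECA-Net paper (assumed gamma=2, b=1)."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca_attention_1d(x, kernel):
    """ECA-style channel attention for x of shape (C, T).

    kernel: 1D weights of odd length k, shared across all channels.
    """
    y = x.mean(axis=1)                         # (C,) global average pool over time
    k = len(kernel)
    y_pad = np.pad(y, k // 2)                  # zero-pad so the output keeps length C
    # Cross-correlation over the channel axis: each channel sees its k neighbors
    z = np.array([y_pad[c:c + k] @ kernel for c in range(len(y))])
    weights = 1.0 / (1.0 + np.exp(-z))         # sigmoid gate in (0, 1)
    return x * weights[:, None]


def channel_attention_params(channels, reduction=16):
    """Weights in CBAM's shared two-layer MLP (bias terms ignored)."""
    return 2 * channels * (channels // reduction)


C, T = 128, 200
rng = np.random.default_rng(0)
x = rng.standard_normal((C, T))
k = eca_kernel_size(C)
out = eca_attention_1d(x, rng.standard_normal(k))
print(out.shape, k, channel_attention_params(C))  # (128, 200) 5 2048
```

Under these assumptions, ECA adds only k weights per block, while CBAM's channel branch adds roughly 2C²/r weights. This makes ECA a natural lightweight baseline, although the ablation above still favors CBAM.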

Table 9.

Ablation results on the ASVspoof2019 dataset.

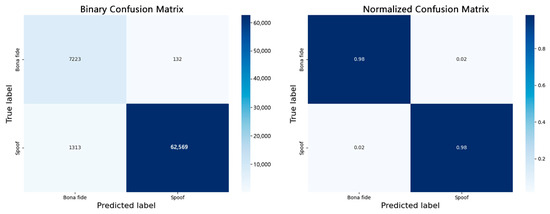

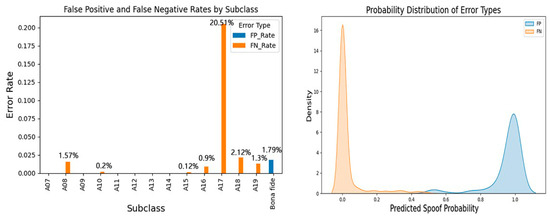

5.4. Discussion of Classification Results

To further analyze the model's performance on different kinds of samples, we use the confusion matrix as a supplementary evaluation indicator. Forged speech detection usually considers two types of error: false negatives (FNs), in which forged speech is misclassified as real speech, and false positives (FPs), in which real speech is misclassified as forged speech. Figure 8 shows the binary confusion matrix and the normalized confusion matrix. A total of 132 real speech samples were misclassified as forged, which is 1.79% of all real speech samples. This shows that the model has high specificity in recognizing real speech. A total of 1313 forged speech samples were misclassified as real, which is 2.06% of all forged speech samples. Figure 9 shows the classification performance of the model on unknown attack types. The model detects attack types A07, A09, A11, A12, A13, and A14 perfectly (100% accuracy). It achieves near-perfect detection (>99% accuracy) for A10, A15, and A19, and it maintains strong detection (>97% accuracy) for A08, A16, and A18. Of all FN samples, 1008 (76.77%) belong to the A17 attack type. A17 (waveform filtering) is a voice conversion technique that alters voice characteristics by filtering the original speech waveform. It operates directly on the time-domain waveform instead of modifying spectral features. Our model does not yet detect this type of attack optimally, which points to a direction for further optimization. The confusion matrix also shows that the model still misclassifies some forged samples. Low-quality forgeries, such as VC-type samples, are the most likely to produce false negatives.
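The rates quoted above follow directly from the confusion-matrix counts. The Python sketch below rebuilds the normalized matrix and treats forged speech as the positive class. The class totals are an assumption because this section does not state them. We take the standard ASVspoof2019 LA evaluation partition sizes of 7,355 bona fide and 63,882 spoofed trials, which are consistent with the reported 1.79% and 2.06%.

```python
# Assumed class totals (standard ASVspoof2019 LA evaluation partition)
N_REAL, N_FAKE = 7355, 63882
FP = 132       # real speech misclassified as forged (reported in the text)
FN = 1313      # forged speech misclassified as real (reported in the text)
FN_A17 = 1008  # missed A17 samples (reported in the text)

TN = N_REAL - FP
TP = N_FAKE - FN

# Rows: true class (real, forged); columns: predicted class (real, forged)
confusion = [[TN, FP], [FN, TP]]
normalized = [[v / sum(row) for v in row] for row in confusion]

fpr = FP / N_REAL          # share of real speech rejected as forged
fnr = FN / N_FAKE          # share of forged speech accepted as real
specificity = TN / N_REAL
a17_share = FN_A17 / FN    # share of all false negatives caused by A17

print(f"FPR = {fpr:.2%}, FNR = {fnr:.2%}, specificity = {specificity:.2%}")
# FPR = 1.79%, FNR = 2.06%, specificity = 98.21%
print(f"A17 share of FNs = {a17_share:.2%}")
# A17 share of FNs = 76.77%
```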

Figure 8.

Binary classification confusion matrix and normalized confusion matrix.

Figure 9.

False positive rate and false negative rate of each subclass and probability distribution of error type.

6. Conclusions

In this paper, we combine the 1D-CBAM attention mechanism with the ResNet network to build a lightweight forged speech detection model. By embedding the channel–spatial dual attention mechanism in the one-dimensional convolutional layers, the model can adaptively focus on the key temporal features associated with forgery in the speech signal. The experimental results show that the model performs well in the LA scenario of the ASVspoof2019 dataset. Compared with the other models evaluated, it achieves a substantially lower EER with fewer parameters, which makes it feasible to deploy anti-spoofing systems in edge computing environments.
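The channel and spatial attention described above can be sketched for a 1D feature map as follows. This NumPy version follows the ordering of the original CBAM design, applying channel attention first and then spatial attention along the time axis. Channel attention uses a shared MLP over average-pooled and max-pooled channel descriptors. Spatial attention uses a kernel-size-7 convolution over the channel-pooled maps. The reduction ratio, kernel size, random weights, and function names are illustrative assumptions. The paper's exact layer configuration and its placement inside the ResNet blocks are not reproduced here.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention_1d(x, w1, w2):
    """Channel weights for x of shape (C, T); w1: (C//r, C), w2: (C, C//r)."""
    def shared_mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)    # FC -> ReLU -> FC, no biases
    avg = x.mean(axis=1)                       # (C,) average pool over time
    mx = x.max(axis=1)                         # (C,) max pool over time
    return sigmoid(shared_mlp(avg) + shared_mlp(mx))[:, None]   # (C, 1)


def spatial_attention_1d(x, kernel):
    """Temporal weights for x of shape (C, T); kernel: (2, k) with k odd."""
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])   # (2, T) pool over channels
    k = kernel.shape[1]
    padded = np.pad(pooled, ((0, 0), (k // 2, k // 2)))  # "same" padding in time
    steps = x.shape[1]
    z = np.array([np.sum(padded[:, t:t + k] * kernel) for t in range(steps)])
    return sigmoid(z)[None, :]                           # (1, T)


def cbam_1d(x, w1, w2, kernel):
    """Apply channel attention, then temporal (spatial) attention."""
    x = x * channel_attention_1d(x, w1, w2)
    return x * spatial_attention_1d(x, kernel)


rng = np.random.default_rng(0)
C, T, r, k = 64, 100, 16, 7
x = rng.standard_normal((C, T))
w1 = 0.1 * rng.standard_normal((C // r, C))
w2 = 0.1 * rng.standard_normal((C, C // r))
kernel = 0.1 * rng.standard_normal((2, k))
y = cbam_1d(x, w1, w2, kernel)
print(y.shape)  # (64, 100)
```

Both gates lie in (0, 1), so each feature is scaled by a factor between 0 and 1. In the original CBAM paper, the module is applied to the residual branch of a ResNet block before the identity shortcut is added.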

Beyond forged speech detection, the proposed CBAM-based model may also serve as the discriminator in a Generative Adversarial Network (GAN) architecture [48]. CBAM combines channel and spatial attention, so a discriminator built on it can adaptively focus on the more discriminative frequency channels and time–frequency regions of the speech signal. This attention-driven enhancement is expected to improve the discriminator's ability to identify subtle differences between real and synthesized speech. That, in turn, should push the generator to produce more natural and realistic speech during adversarial training.

Integrating CBAM into a GAN discriminator could bring several advantages. First, it could increase the model's sensitivity to artifacts common in synthesized speech, which would improve the detection performance and robustness for deepfake audio. Second, a stronger discriminator would drive the generator to synthesize speech with better perceptual quality and semantic consistency. Third, visualizing the attention maps generated by CBAM would reveal the key time–frequency regions the model relies on when identifying forged speech, which improves the interpretability of the overall model.

However, this integration approach also faces challenges. First, the attention mechanism increases model complexity and may destabilize GAN training, for example through mode collapse or vanishing gradients. Second, spatial attention in particular incurs a large computational overhead on high-resolution time–frequency features, so its cost must be weighed carefully in real-time or resource-constrained applications. Future research can explore spectral normalization, gradient penalty, and similar methods to improve training stability. It can also explore lightweight attention modules to reduce the computational burden.
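Spectral normalization, one of the stabilization methods mentioned above, rescales each discriminator weight matrix by an estimate of its largest singular value, so the layer becomes approximately 1-Lipschitz. Below is a minimal NumPy sketch that uses power iteration. It is a generic illustration of the technique and is not part of the proposed model. The function name and iteration count are assumptions. GAN implementations typically run a single power-iteration step per update and reuse the vector u across steps.

```python
import numpy as np


def spectral_normalize(w, n_iter=100, seed=0):
    """Return (w / sigma, sigma), where sigma estimates w's largest singular value."""
    rng = np.random.default_rng(seed)
    u = rng.standard_normal(w.shape[0])
    for _ in range(n_iter):
        v = w.T @ u
        v /= np.linalg.norm(v)        # right singular vector estimate
        u = w @ v
        u /= np.linalg.norm(u)        # left singular vector estimate
    sigma = u @ w @ v                 # estimate of the largest singular value
    return w / sigma, sigma


w = np.random.default_rng(1).standard_normal((32, 64))
w_sn, sigma = spectral_normalize(w)
print(sigma, np.linalg.norm(w, 2))    # the two values should agree closely
```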

For forged speech detection itself, future work will explore multimodal feature-level fusion strategies for one-dimensional time-series data. The aim is to improve the discrimination of forged speech while keeping the number of parameters as small as possible. For example, we plan to build a joint feature learning framework over the time-domain waveform and phase features. The raw waveform preserves high-frequency detail, while the short-term phase derivative captures spectral reconstruction defects left by generative models. Such a framework is intended to overcome the limitations of single-feature modeling and improve generalization to new forgery methods such as diffusion models.
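As an illustration of the short-term phase derivative mentioned above, the sketch below computes a framewise STFT with NumPy and differentiates its phase along time. This yields an instantaneous-frequency estimate for each frequency bin. It is one common way to obtain a time-derivative phase feature and is only an assumption about the planned framework. The frame length, hop size, Hann window, and function names are illustrative choices.

```python
import numpy as np


def stft(x, n_fft=512, hop=128):
    """Hann-windowed STFT; returns a complex array of shape (frames, n_fft//2 + 1)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    return np.fft.rfft(frames, axis=1)


def instantaneous_frequency(spec, sr, n_fft=512, hop=128):
    """Time derivative of STFT phase, converted to Hz for each (frame, bin)."""
    phase = np.angle(spec)
    dphi = np.diff(phase, axis=0)                         # phase advance per hop
    bins = np.arange(spec.shape[1])
    expected = 2 * np.pi * bins * hop / n_fft             # advance of a bin-centered tone
    deviation = np.angle(np.exp(1j * (dphi - expected)))  # wrap to (-pi, pi]
    return (bins + deviation * n_fft / (2 * np.pi * hop)) * sr / n_fft


sr = 16000
t = np.arange(sr) / sr
x = np.cos(2 * np.pi * 1010.0 * t)                        # 1010 Hz test tone
spec = stft(x)
inst_freq = instantaneous_frequency(spec, sr)
print(inst_freq[:, 32].mean())                            # close to 1010 Hz
```

In a detection front end, the resulting (frames, bins) matrix could be stacked with magnitude or waveform features. Any benefit for detecting diffusion-model forgeries is a hypothesis that experiments would need to test.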

Author Contributions

Conceptualization, Y.W. and H.H.; methodology, H.H.; software, H.H.; validation, Y.W., H.H., Z.L. and S.Z.; formal analysis, Y.W.; investigation, H.H.; writing—original draft preparation, Y.W. and H.H.; writing—review and editing, Y.W., H.H., Z.L. and S.Z.; project administration, Y.W.; funding acquisition, Y.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research is funded by the National Key R&D Program of China (Project No. 2021YFF0603904), the Henan Province Key R&D Special Fund (Project No. 251111242100), the Civil Aviation Information Technology Research Center, the Civil Aviation Flight University of China (Project No. 25CAFUC09010), the Civil Aviation Flight Academy of China Project (Project No. 25CAFUC10028), and the Flight Technology and Flight Safety Key Laboratory of CAAC (Project No. FZ2025ZX34 Research on aircraft engine fault diagnosis technology based on multi-channel acoustic perception).

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| CBAM | Convolutional Block Attention Module |
| ResNet | Residual Network |
| ASV | Automatic Speaker Verification |
| EER | Equal Error Rate |
| TTS | Text-to-Speech Synthesis |
| VC | Voice Conversion |
| DNN | Deep Neural Network |
| HMM | Hidden Markov Model |
| GMM | Gaussian Mixture Model |
| LFCC | Linear Frequency Cepstral Coefficient |
| MFCC | Mel-Frequency Cepstral Coefficient |
| CMVN | Cepstral Mean and Variance Normalization |
| MGD | Modified Group Delay |
| RPS | Relative Phase Shift |
| CQCC | Constant-Q Cepstral Coefficient |
| CQT | Constant-Q Transform |
| SVM | Support Vector Machine |
| MLP | Multilayer Perceptron |
| STFT | Short-Time Fourier Transform |
| 1D | One-Dimensional |
| BN | Batch Normalization |
| LA | Logical Access |
| PA | Physical Access |
| ECA-NET | Efficient Channel Attention Network |
| FAR | False Acceptance Rate |
| FRR | False Rejection Rate |
| ROC | Receiver Operating Characteristic |
| DET | Detection Error Tradeoff |
| AUC | Area Under the ROC Curve |
| FN | False Negative |
| FP | False Positive |
| GAN | Generative Adversarial Network |

References

- Wu, Z.; Evans, N.; Kinnunen, T.; Yamagishi, J.; Alegre, F.; Li, H. Spoofing and countermeasures for speaker verification: A survey. Speech Commun. 2015, 66, 130–153.

- Shchemelinin, V.; Simonchik, K. Examining vulnerability of voice verification systems to spoofing attacks by means of a TTS system. In Proceedings of the International Conference on Speech and Computer, Pilsen, Czech Republic, 1–5 September 2013; Springer International Publishing: Cham, Switzerland, 2013; pp. 132–137.

- Kinnunen, T.; Wu, Z.Z.; Lee, K.A.; Sedlak, F.; Chng, E.S.; Li, H. Vulnerability of speaker verification systems against voice conversion spoofing attacks: The case of telephone speech. In Proceedings of the 2012 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Kyoto, Japan, 25–30 March 2012; IEEE: New York, NY, USA, 2012; pp. 4401–4404.

- Das, R.K.; Tian, X.; Kinnunen, T.; Li, H. The attacker’s perspective on automatic speaker verification: An overview. arXiv 2020, arXiv:2004.08849.

- Chen, Z.; Xie, Z.; Zhang, W.; Xu, X. ResNet and Model Fusion for Automatic Spoofing Detection. In Proceedings of the Interspeech, Stockholm, Sweden, 20–24 August 2017; pp. 102–106.

- Ren, Y.; Hu, C.; Tan, X.; Qin, T.; Zhao, S.; Zhao, Z.; Liu, T.-Y. FastSpeech 2: Fast and high-quality end-to-end text to speech. arXiv 2020, arXiv:2006.04558.

- Bevinamarad, P.R.; Shirldonkar, M.S. Audio forgery detection techniques: Present and past review. In Proceedings of the 2020 4th International Conference on Trends in Electronics and Informatics (ICOEI), Tirunelveli, India, 15–17 June 2020; IEEE: New York, NY, USA, 2020; pp. 613–618.

- Tokuda, K.; Nankaku, Y.; Toda, T.; Zen, H.; Yamagishi, J.; Oura, K. Speech synthesis based on hidden Markov models. Proc. IEEE 2013, 101, 1234–1252.

- Yang, J.; Das, R.K.; Zhou, N. Extraction of octave spectra information for spoofing attack detection. IEEE/ACM Trans. Audio Speech Lang. Process. 2019, 27, 2373–2384.

- Tian, X.; Xiao, X.; Chng, E.S.; Li, H. Spoofing voice detection using temporary convolutional neurons. In Proceedings of the 2016 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA), Jeju, Republic of Korea, 13–16 December 2016; IEEE: New York, NY, USA, 2016; pp. 1–6.

- Muckenhirn, H.; Korshunov, P.; Magimai-Doss, M.; Marcel, S. Long-term spectral statistics for voice presentation attack detection. IEEE/ACM Trans. Audio Speech Lang. Process. 2017, 25, 2098–2111.

- Yu, H.; Tan, Z.H.; Ma, Z.; Martin, R.; Guo, J. Spoofing detection in automatic speaker verification systems using DNN classifiers and dynamic acoustic features. IEEE Trans. Neural Netw. Learn. Syst. 2017, 29, 4633–4644.

- Zhang, C.; Yu, C.; Hansen, J.H.L. An investigation of deep-learning frameworks for speaker verification antispoofing. IEEE J. Sel. Top. Signal Process. 2017, 11, 684–694.

- Chettri, B.; Stoller, D.; Morfi, V.; Ramírez, M.A.M.; Benetos, E.; Sturm, B.L. Ensemble models for spoofing detection in automatic speaker verification. arXiv 2019, arXiv:1904.04589.

- Lai, C.I.; Chen, N.; Villalba, J.; Dehak, N. ASSERT: Anti-spoofing with squeeze-excitation and residual networks. arXiv 2019, arXiv:1904.01120.

- Zeinali, H.; Stafylakis, T.; Athanasopoulou, G.; Rohdin, J.; Gkinis, I.; Burget, L.; Černocký, J. Detecting spoofing attacks using VGG and SincNet: BUT-Omilia submission to ASVspoof 2019 challenge. arXiv 2019, arXiv:1907.12908.

- Sanchez, J.; Saratxaga, I.; Hernaez, I.; Navas, E.; Erro, D.; Raitio, T. Toward a universal synthetic speech spoofing detection using phase information. IEEE Trans. Inf. Forensics Secur. 2015, 10, 810–820.

- Saratxaga, I.; Sanchez, J.; Wu, Z.; Hernaez, I.; Navas, E. Synthetic speech detection using phase information. Speech Commun. 2016, 81, 30–41.

- Patel, T.B.; Patil, H.A. Significance of source–filter interaction for classification of natural vs. spoofed speech. IEEE J. Sel. Top. Signal Process. 2017, 11, 644–659.

- Todisco, M.; Delgado, H.; Evans, N. Constant Q cepstral coefficients: A spoofing countermeasure for automatic speaker verification. Comput. Speech Lang. 2017, 45, 516–535.

- Pal, M.; Paul, D.; Saha, G. Synthetic speech detection using fundamental frequency variation and spectral features. Comput. Speech Lang. 2018, 48, 31–50.

- Wang, X.; Yamagishi, J.; Todisco, M.; Delgado, H.; Nautsch, A.; Evans, N.; Sahidullah, M.; Vestman, V.; Kinnunen, T.; Lee, K.A.; et al. ASVspoof 2019: A large-scale public database of synthesized, converted and replayed speech. Comput. Speech Lang. 2020, 64, 101114.

- Yang, J.; Das, R.K.; Li, H. Significance of subband features for synthetic speech detection. IEEE Trans. Inf. Forensics Secur. 2019, 15, 2160–2170.

- Das, R.K.; Yang, J.; Li, H. Long Range Acoustic Features for Spoofed Speech Detection. In Proceedings of the 2019 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), Singapore, 14–18 December 2019; pp. 1058–1062.

- Sahidullah, M.; Kinnunen, T.; Hanilçi, C. A comparison of features for synthetic speech detection. In Proceedings of the 16th Annual Conference of the International Speech Communication Association, Dresden, Germany, 6–10 September 2015.

- Lavrentyeva, G.; Novoselov, S.; Tseren, A.; Volkova, M.; Gorlanov, A.; Kozlov, A. STC antispoofing systems for the ASVspoof2019 challenge. arXiv 2019, arXiv:1904.05576.

- Li, X.; Li, N.; Weng, C.; Liu, X.; Su, D.; Yu, D. Replay and synthetic speech detection with Res2Net architecture. In Proceedings of the ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; IEEE: New York, NY, USA, 2021; pp. 6354–6358.

- Alzantot, M.; Wang, Z.; Srivastava, M.B. Deep residual neural networks for audio spoofing detection. arXiv 2019, arXiv:1907.00501.

- Monteiro, J.; Alam, J.; Falk, T.H. Generalized end-to-end detection of spoofing attacks to automatic speaker recognizers. Comput. Speech Lang. 2020, 63, 101096.

- Tak, H.; Patino, J.; Todisco, M.; Nautsch, A.; Evans, N.; Larcher, A. End-to-end anti-spoofing with RawNet2. In Proceedings of the ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; IEEE: New York, NY, USA, 2021; pp. 6369–6373.

- Wang, X.; Yamagishi, J. Investigating self-supervised front ends for speech spoofing countermeasures. arXiv 2021, arXiv:2111.07725.

- Wang, C.; Yi, J.; Zhang, X.; Tao, J.; Xu, L.; Fu, R. Low-rank adaptation method for wav2vec2-based fake audio detection. arXiv 2023, arXiv:2306.05617.

- Liu, X.; Wang, X.; Sahidullah, M.; Patino, J.; Delgado, H.; Kinnunen, T.; Todisco, M.; Yamagishi, J.; Evans, N.; Nautsch, A.; et al. ASVspoof 2021: Towards spoofed and deepfake speech detection in the wild. IEEE/ACM Trans. Audio Speech Lang. Process. 2023, 31, 2507–2522.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Lei, Z.; Yan, H.; Liu, C.; Zhou, Y.; Ma, M. GMM-ResNet2: Ensemble of group ResNet networks for synthetic speech detection. In Proceedings of the ICASSP 2024-2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; IEEE: New York, NY, USA, 2024; pp. 12101–12105.

- Ma, Y.; Ren, Z.; Xu, S. RW-Resnet: A novel speech anti-spoofing model using raw waveform. arXiv 2021, arXiv:2108.05684.

- Zhang, Y.; Jiang, F.; Duan, Z. One-class learning towards synthetic voice spoofing detection. IEEE Signal Process. Lett. 2021, 28, 937–941.

- Chen, L.; Yao, H.; Fu, J.; Ng, C.T. The classification and localization of crack using lightweight convolutional neural network with CBAM. Eng. Struct. 2023, 275, 115291.

- Fu, H.; Song, G.; Wang, Y. Improved YOLOv4 marine target detection combined with CBAM. Symmetry 2021, 13, 623.

- Zhao, Y.; Ding, Q.; Wu, L.; Lv, R.; Du, J.; He, S. Synthetic Speech Detection Using Extended Constant-Q Symmetric-Subband Cepstrum Coefficients and CBAM-ResNet. In Proceedings of the 2023 7th International Conference on Imaging, Signal Processing and Communications (ICISPC), Kumamoto, Japan, 21–23 July 2023; IEEE: New York, NY, USA, 2023; pp. 70–74.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Dou, Y.; Yang, H.; Yang, M.; Xu, Y.; Ke, D. Dynamically mitigating data discrepancy with balanced focal loss for replay attack detection. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; IEEE: New York, NY, USA, 2021; pp. 4115–4122.

- Hua, G.; Teoh, A.B.J.; Zhang, H. Towards end-to-end synthetic speech detection. IEEE Signal Process. Lett. 2021, 28, 1265–1269.

- Todisco, M.; Wang, X.; Vestman, V.; Sahidullah, M.; Delgado, H.; Nautsch, A.; Yamagishi, J.; Evans, N.; Kinnunen, T.; Lee, K.A. ASVspoof 2019: Future horizons in spoofed and fake audio detection. arXiv 2019, arXiv:1904.05441.

- Zhu, Y.; Powar, S.; Falk, T.H. Characterizing the temporal dynamics of universal speech representations for generalizable deepfake detection. In Proceedings of the 2024 IEEE International Conference on Acoustics, Speech, and Signal Processing Workshops (ICASSPW), Seoul, Republic of Korea, 14–19 April 2024; IEEE: New York, NY, USA, 2024; pp. 139–143.

- Sun, C.; Jia, S.; Hou, S.; Lyu, S. AI-synthesized voice detection using neural vocoder artifacts. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 904–912.

- Ko, K.; Kim, S.; Kwon, H. Selective Audio Perturbations for Targeting Specific Phrases in Speech Recognition Systems. Int. J. Comput. Intell. Syst. 2025, 18, 103.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).