Abstract

This publication aims to present the preliminary results of research on an innovative device designed to support the rehabilitation of drivers with neurological disorders, developed as part of a multidisciplinary project. The device was designed for individuals recovering from neurological diseases, injuries, and COVID-19-related complications, who experience difficulties with coordination and the speed of performing motor exercises. Its goal is to improve the quality of life for patients and increase their chances of safely driving vehicles, which also contributes to the safety of all road users. The device allows for controlled upper limb exercises using a diagnostic module, exercise program, and biofeedback system. The main component is a mechatronic driving simulator, enhanced with dedicated software to support the rehabilitation of individuals with neurological disorders and older adults. Through driving simulations and rehabilitation tasks, patients perform exercises that improve their health, facilitating a faster recovery. The innovation of the solution is confirmed by a submitted patent application, and preliminary research results indicate its effectiveness in rehabilitation and improving mobility for individuals with neurological disorders.

1. Introduction

In Poland, between 1990 and 2009, 76% of road accidents were caused by the drivers themselves, with 60% of these incidents being due to distraction and concentration problems [1,2,3]. This is particularly significant in the context of the growing number of people suffering from neurological diseases, which can affect their ability to concentrate and react while driving. One example is stroke, which affects 2.5 million new patients worldwide each year, while 33 million people live with its consequences. In Poland, 60–70 thousand stroke cases are reported each year, posing challenges in rehabilitation and public health, especially since strokes are the leading cause of disability [4,5,6].

The aging of society is an additional factor that could exacerbate these problems. By 2050, people aged 65 and above will make up 32.7% of Poland’s population [7,8,9,10]. Combined with the rising number of individuals suffering from neurological diseases, this may lead to an increase in road accidents caused by drivers’ health problems and by their reduced ability to maintain proper concentration and reaction times. Data from the USA show that in 2015, 391,000 people were injured and 3477 people were killed in accidents caused by distracted drivers, which underlines the importance of maintaining full concentration on the road, especially for older individuals, whose risk of stroke and other health issues is increasing.

In response to these challenges, current technologies aimed at improving driving re-education, particularly driving simulators, were analyzed. Various systems have been developed to aid in both driving education and rehabilitation. A classic solution is a driving simulator in which the driver analyzes data displayed on a monitor and responds accordingly using only the steering wheel. This approach is outlined in patents such as US 5888074 A, US 2015024347 A1, and US 20110076649 A1, where the focus is on driving scenarios such as navigating through traffic congestion [11,12,13].

Another approach, as seen in the US8770980 B2 patent, is the development of an adaptive mechatronic device designed to replicate the typical driving environment [14]. This device includes displays, sensors, and software that enhance the realism of the driving experience, providing an effective tool for driving training. A similar technology, introduced in the US 8894415-B2 patent, focuses on rehabilitation for patients with motor dysfunctions, allowing them to regain necessary motor skills for driving [15]. These simulators, equipped with specialized software and mechanical components such as steering wheels, allow for multi-mode training, including assisted steering and resistance torque, to simulate real driving conditions.

Commercially available mechatronic devices, such as the Luna EMG system by EGZOTech, also provide rehabilitation solutions, though not specifically designed for driving. This device uses virtual reality and is equipped with interchangeable attachments, including a steering wheel-shaped grip for functional rehabilitation of drivers. Additionally, devices such as the DS250 and DS600 models by DriveSafety focus specifically on driving simulation and rehabilitation, offering advanced systems at a high cost, exceeding 100,000 PLN [16,17,18].

Rehabilitation research, such as that by S. George et al. (2014), emphasizes two main objectives for driver re-education: retraining motor, cognitive, and sensory processing skills, and directly enhancing driving abilities through simulators or in-car exercises [19]. This research suggests that a comprehensive approach combining motor training, cognitive development, and physical exercises can effectively improve driving skills [20].

Despite the availability of driving rehabilitation technologies such as the Luna EMG robot (which uses EMG signals to assist patients with muscle weakness in active movement training via an adaptable robotic arm) and the DriveSafety simulator (a cognitive-focused driving simulator with multi-functional steering tasks), these solutions have limitations. The Luna EMG primarily targets neuromuscular rehabilitation through muscle activation [21], while DriveSafety emphasizes cognitive-visual tasks on a static screen. Neither fully addresses the integrated physical-cognitive demands of post-COVID-19, post-stroke, or age-related rehabilitation, where patients require holistic training for coordination, balance, and adaptive motor skills.

To bridge this gap, our mechatronic rehabilitation device extends the capabilities of conventional driving simulators by incorporating modular, multi-sensory components that replicate real-world driving complexity. Unlike Luna EMG or DriveSafety, our system includes:

- Dynamic left/right modules (adjustable mirror-window and multifunctional panels) mounted on movable arms, requiring patients to engage their head, torso, and limbs, enhancing spatial awareness and range of motion.

- Tactile feedback panels with configurable buttons and LEDs to train grip strength and fine motor skills, critical for neurological recovery.

- Ergonomic steering placement and proximity sensors to enforce proper posture and full-arm engagement, avoiding compensatory movements.

The mechatronic device for driver rehabilitation features a modular design, enabling gradual development and customization to patient needs. The project’s first phase prioritizes rehabilitation of the upper limbs, torso, and neck, as these areas are often neglected in neurorehabilitation (e.g., post-stroke). This neglect stems from both limited funding for rehabilitation and clinical priorities; therapists typically focus first on restoring mobility, intensively training the lower limbs. However, full motor recovery also requires regaining precision, arm strength, and torso stability, which are critical for tasks such as driving. Future iterations of the device may include modules for additional functions, but the current version deliberately omits brake and accelerator pedals to address the most urgent patient needs.

Additionally, our biofeedback-driven software tailors exercises to individual progress, a feature absent in existing systems. By combining these innovations, our device offers a comprehensive, adaptive solution for drivers recovering from COVID-19, stroke, or degenerative conditions—addressing both physical and cognitive rehabilitation in a single platform [22,23].

2. Materials and Methods

The aim of this project was to develop a mechatronic training device designed to enhance driving skills. This multidisciplinary project is particularly dedicated to individuals who, prior to illness, were active drivers but now face motor-visual coordination issues, concentration difficulties, as well as fear and anxiety about returning to driving due to the effects of COVID-19 or neurological conditions. The solution was also designed for elderly drivers, whose ability to drive safely can rapidly change due to an increased risk of age-related diseases. The proposed system offers a quantitative assessment of their driving-related skills and provides a customized rehabilitation program to support recovery. This is not a conventional driving simulator, which merely allows the user to simulate vehicle operation based on on-screen visuals. Instead, it is an innovative rehabilitation device that facilitates comprehensive recovery and rehabilitation [24,25].

During the initial research phase, the project team thoroughly defined and analyzed the key challenges faced by ill and elderly drivers. A detailed literature review and industry analysis were conducted alongside an evaluation of existing driver-assistive devices. Additionally, interviews were held with 10 patients and elderly drivers, two rehabilitation specialists, and one driving instructor. Based on the insights gained, fundamental design assumptions were formulated, leading to the development of a preliminary concept for the device.

The final version of this mechatronic rehabilitation device, as described in this article, was developed based on proprietary research and patented solutions. The project incorporates patents granted in Poland, including Patent No. 231292 (18 February 2019), “Mechatronic Steering Wheel Overlay”, and Patent No. 238042 (30 June 2021), “Mechatronic Device for Rehabilitation and Driving Assistance, Particularly for Automobiles”.

During the design phase of the project, particular attention was given to numerical values of anthropometric data and upper limb motion parameters, ensuring the device’s construction would accommodate a broad range of potential users and drivers. The applied data and industry standards, derived from available literature on adult populations, cover women aged 20–60 and men aged 20–65. The collected data, crucial to the project, comply with European standards (EIM 979). The primary reference for the device’s design is the anthropometric data of women from the 5th percentile group and men from the 95th percentile group. The selection and corresponding structural design were developed in accordance with PN-EN 547-3 (“Human Body Measurements—Anthropometric Data”).

The driver training device, specifically designed for individuals recovering from COVID-19, those with neurological conditions, and elderly users, features a base unit equipped with dedicated components to support the rehabilitation process. These modules are connected via an electronic control module to a Raspberry Pi microcomputer using a bidirectional data bus. The system also includes custom software, designed exclusively for this device, which is operated via a touchscreen display.

The key components of the described system include:

- Central module with a steering wheel and a task module positioned below it;

- Left mirror-window module;

- Right multifunctional panel module.

The base of the device is shaped like a flat plate. At the front of the base, directly in front of the user/patient, there is a task module in the form of a retractable flat drawer with gently rounded edges. On the upper surface of the task module, to the right and left, directly in front of the user, mushroom-shaped buttons are installed, one on each side. It is important to note that the left mushroom button is blue, while the right one is yellow. On the upper surface of the task module, between the blue and yellow mushroom buttons, three LEDs of different colors are embedded, corresponding, among other things, to the button colors.

On the upper surface of the task module, just behind the buttons, a slider button is mounted. This button has a guide rail running parallel to the line of the three preceding objects. Additionally, the upper surface of this button is shaped like a flattened cube.

At the center of the base, on its upper surface, the central module is installed. It consists of a cubic housing with an encoder mounted in the middle of its front panel. A steering wheel is attached to the front of this housing, positioned directly above the previously described task module. The steering wheel can be removed and replaced with an alternative control object, such as an attachment simulating a motorcycle or bicycle handlebar.

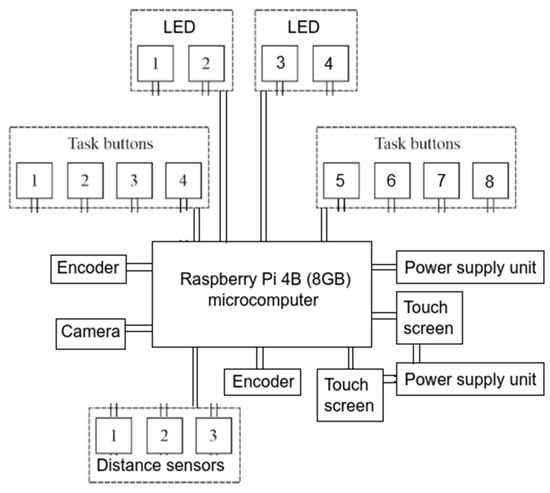

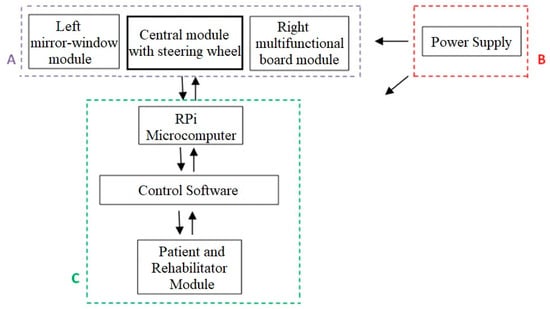

The functional connection diagram of the individual electronic and electromechanical modules is shown in Figure 1. Additional buttons (1–8) were used to record task completion time for the exercising user. Depending on the user’s motor capabilities, task completion was signaled by pressing the appropriate button.

Figure 1.

The functional connection diagram of the individual electronic and electromechanical modules.

Above the housing with the steering wheel, there is a mount for the central display. On the left side of the central display housing, in its upper part, ultrasonic distance sensors (HC-SR04, JustPi) are installed. These sensors operate at 40 kHz and measure distances from 2 to 200 cm, with a 5 V supply and a 15 mA current draw (dimensions: 45 × 20 × 15 mm). Their position can be manually adjusted to align with the user’s height or the display’s tilt angle. Additionally, the upper edge of the housing integrates another HC-SR04 distance sensor alongside a Raspberry Pi Camera HD v2 (8 MP, Sony IMX219 sensor). The camera supports 1080p at 30 fps, 720p at 60 fps, and 640 × 480 px at 90 fps video modes, as well as 3280 × 2464 px still images, enabling high-resolution motion tracking. The prototype’s modular design allows customization, for example replacing the camera with a more advanced alternative such as the Luxonis OAK-D-Lite (an AI-powered vision kit) in future iterations, though it was not used in this study.
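An ultrasonic sensor of this type reports distance indirectly, through the round-trip time of an echo pulse. The minimal Python sketch below shows the conventional conversion. The function name, the speed-of-sound constant (about 343 m/s at room temperature), and the policy of discarding out-of-range readings are illustrative assumptions, not the authors' implementation.

```python
from typing import Optional

# Approximate speed of sound in air at about 20 °C (assumption).
SPEED_OF_SOUND_CM_PER_S = 34300.0


def echo_to_distance_cm(
    echo_duration_s: float,
    min_range_cm: float = 2.0,
    max_range_cm: float = 200.0,  # range quoted in the text; adjust if needed
) -> Optional[float]:
    """Convert an echo pulse duration (seconds) to a one-way distance (cm).

    The pulse travels to the obstacle and back, so the path is halved.
    Readings outside the sensor's quoted range are returned as None.
    """
    if echo_duration_s <= 0:
        return None
    distance_cm = echo_duration_s * SPEED_OF_SOUND_CM_PER_S / 2.0
    if not (min_range_cm <= distance_cm <= max_range_cm):
        return None
    return distance_cm
```

Under these assumptions, a 2 ms echo corresponds to roughly 34 cm. The range limits are parameters because the component list for the prototype quotes a wider 2–400 cm range for the same sensor type.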

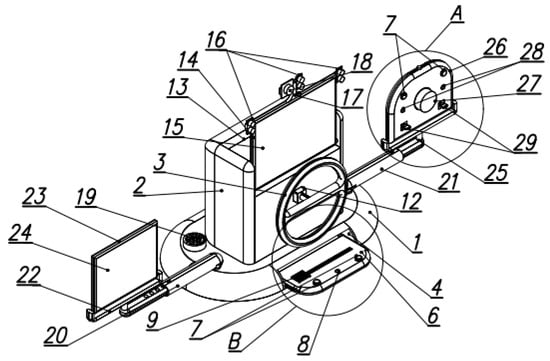

The device set also includes speakers, which can be repositioned and are used to generate sound for implementing proprietary tasks with biofeedback (Figure 2).

Figure 2.

Driver training device with key components: A—right multifunctional panel module, B—central module with a steering wheel and a task module positioned below it; 1—base, 2—body, 3—steering wheel, 4—recess, 5—first guide rail, 6—panel, 7—first button, 8—RGB LED diode, 9—slider, 10—second guide rail, 11—protrusions, 12—encoder, 13—groove, 14—casing, 15—central display, 16—distance sensor, 17—handle, 18—camera, 19—speaker, 20—first arm, 21—second arm, 22—second handle, 23—frame, 24—side display, 25—third handle, 26—panel, 27—rotary knob, 28—diode, 29—second button.

The device also includes a left mirror-window module, which consists of a movable display mounted on an extendable arm positioned to the left of the central module. Both the display position and the length of the arm can be adjusted. The display presents tasks to be performed, including adjustments related to field-of-view correction.

On the right side of the central module, there is a right multifunctional panel module. This module is designed as a panel mounted on a movable, adjustable arm. The panel’s rotation and tilt angle can also be modified. The panel features two rows of buttons located in the middle and upper sections. The buttons in each row require different pressing techniques, encouraging the user to engage their wrist and fingers in varying ways. Additionally, between these two rows of buttons, a rotary button is centrally positioned. The shape, size, and texture of this rotary button can be customized.

Each module’s position can be adjusted (including tilt angle and distance from the base), allowing for a personalized approach tailored to the user. Furthermore, selected task response buttons are made from different materials and feature surfaces with varying textures. Their diverse sizes and pressing requirements significantly contribute to the rehabilitation process by stimulating sensory receptors located on the inner surface of the hand and fingers. The variety in button colors and numbers across different modules, such as the right multifunctional panel or task module, ensures that the exercises remain engaging and effective in the rehabilitation process.

Before the user begins performing exercises tailored to their individual needs, taking into account their dysfunctions and current upper limb motor abilities while driving, it is necessary to conduct a diagnostic assessment. This involves selecting which of the two button modules will be used (either the right multifunctional panel or the task module) and requesting the patient to press specific control buttons. Based on this assessment, a personalized set of exercises is proposed for the patient to complete. The results obtained through these exercises are then recorded and presented to the patient, providing them with an overview of their current performance.

The device allows patients to practice not just steering wheel movements, but also full upper limb rehabilitation, especially wrist and finger motions, through specialized gripping and pressing exercises. Its adjustable modules feature buttons of varying materials, textures, sizes, and shapes that stimulate hand receptors, speeding up recovery. Colorful, numbered buttons make exercises more engaging than traditional steering-wheel-only simulators, particularly benefiting post-COVID patients, neurological cases, and elderly drivers.

The system incorporates biofeedback through interactive displays. The left mirror-window module shows images connected to main screen tasks, while customizable color schemes and multi-colored buttons combine physical therapy with cognitive training. This approach improves movement precision, problem-solving, and decision-making—all crucial for safe driving. By tracking head movements and providing real-time feedback, the device helps patients regain mobility and confidence faster than conventional methods.

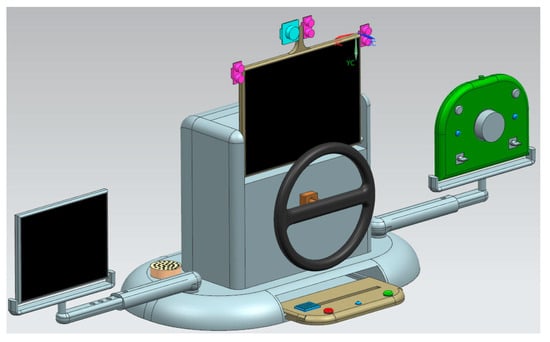

The CAD models were created based on a previously developed conceptual solution using NX Siemens CAD software (Figure 3).

Figure 3.

CAD model of the Driver Training Device.

2.1. Prototype of the Device

The mechatronic approach to design allowed the previously proposed geometric features and components of the device to be refined based on the conclusions obtained. The construction models of the modules mounted on the base were improved: the left mirror-window module and the right multifunctional panel module in relation to the central module with a large display and detachable steering wheel, as well as the task module with physical buttons and LEDs. Together with attention to the ergonomics of the performed movements, this enabled the optimization of the device’s structural design.

Following this, selected CAD models were prepared for further strength analysis of the proposed solution, and the prototype construction process was initiated. In the case of the left mirror-window module, which serves as a mirrored counterpart to the right multifunctional panel module, ensuring the stable mounting of these modules was crucial for user comfort. Their placement on the base was dictated by the ergonomics of limb movement, particularly the left upper limb for the left mirror-window module and the right upper limb for the right multifunctional panel module.

It is worth noting the method of mounting these modules on the base. The arms of both the left mirror-window module and the right multifunctional panel module are aligned along a central symmetry axis in their initial position. This design consideration aimed to ensure that the base experiences a balanced distribution of weight.

It is also essential to highlight that during the prototype construction and the iterative development of each module, extensive testing was required. These tests primarily focused on evaluating the correct operation of the left mirror-window module, the central module, the right multifunctional panel module, and the task module. In the final development and prototyping stage, 3D printing proved to be an indispensable tool. It played a crucial role in refining the buttons located on the task module and the right multifunctional panel module. These buttons were manufactured in successive testing phases using Fused Filament Fabrication (FFF) technology, an additive manufacturing technique based on material extrusion. The Prusa i3 MK3 3D printer was used for this purpose. Polylactic Acid (PLA) filament was selected as the printing material due to its minimal printing constraints. Its low extrusion temperature (190–220 °C) and the absence of a requirement for a heated chamber made it an optimal choice. PLA is derived from renewable resources such as corn starch, sugarcane, and tapioca roots, making it biodegradable and cost-effective [26,27].

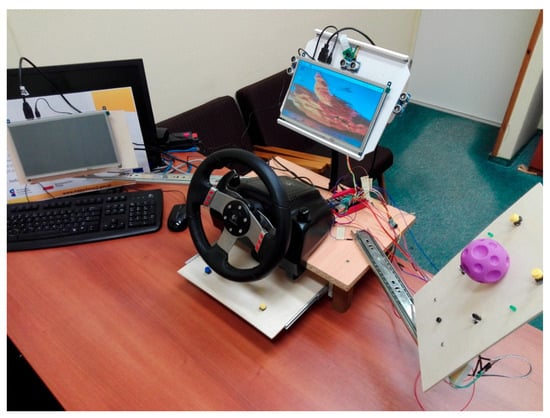

The prototype driver rehabilitation device (Figure 4) features a modular structure with the following components and construction:

Figure 4.

Prototype of the Driver Training Device.

Structural Framework:

- Base platform constructed from 18 mm plywood for stability;

- Left and right module mounts made of painted plywood panels;

- Adjustable aluminum arms (20 × 20 mm profiles) with:

  - Length adjustment range: 40–70 cm;

  - Angular adjustment: ±30° from baseline position;

  - Plastic mounting brackets for secure module attachment.

Electronic Components:

- Central processing unit: Raspberry Pi 4B (8 GB) microcomputer;

- Dual touchscreen displays:

  - Waveshare 11870 Resistive LCD IPS 10.1″;

  - 1024 × 600 px resolution via HDMI+GPIO;

  - Mounted on adjustable arms for optimal positioning.

Control Interface:

- Button modules featuring:

  - Large-format tactile buttons (50 mm diameter);

  - WK315 straight-lever limit switches;

- Rotational sensors (600 PPR encoders);

- Distance sensors (HC-SR04 ultrasonic, 2–400 cm range).
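The rotational sensor listed above can be read as a steering angle once its pulse count is scaled by its resolution. The following Python sketch illustrates that scaling. The function name is hypothetical, and it assumes one count per encoder pulse (600 per revolution); with quadrature decoding the effective counts per revolution would be higher and can be passed in explicitly.

```python
PULSES_PER_REV = 600  # encoder resolution listed in the component summary


def counts_to_angle_deg(counts: int, counts_per_rev: int = PULSES_PER_REV) -> float:
    """Convert a signed encoder count into a signed steering angle in degrees.

    Positive counts are taken as one direction of rotation and negative
    counts as the other (the sign convention is an assumption).
    """
    if counts_per_rev <= 0:
        raise ValueError("counts_per_rev must be positive")
    return counts * 360.0 / counts_per_rev
```

With 600 counts per revolution, one count corresponds to 0.6° of steering wheel rotation, so 150 counts represent a quarter turn.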

Adjustability Features:

- Modules can be repositioned to accommodate:

  - Patient’s current range of motion;

  - Therapeutic progression requirements;

- Increasing module distance forces full upper-limb engagement;

- Angular adjustments maintain ergonomic alignment.

The design enables:

- Progressive difficulty adjustment;

- Personalized rehabilitation protocols;

- Comprehensive upper-body training;

- Real-time performance monitoring.

The device described in this article represents an innovative approach to driver rehabilitation and training. It not only enables standard driving exercises but also integrates task and panel modules to engage the entire upper body, head, and torso. Unlike conventional simulators that focus primarily on steering movements and forward motion, this solution prioritizes comprehensive situational awareness, precision of movement, decision-making skills, and reaction time—all of which are essential for safe driving.

This system is particularly designed for individuals requiring intensive active exercises, with a focus on simultaneous upper limb and head movement, ensuring precise and repeatable motor actions in response to stimuli. A dedicated diagnostic, training, and reporting system has been developed, allowing for a customized rehabilitation program tailored to each driver’s motor impairments caused by illness or injury.

In addition to improving range and precision of motion, the system also enhances cognitive skills, including focus and logical thinking, by adapting exercises to each driver’s unique needs. Moreover, the interactive and engaging nature of the device accelerates rehabilitation, fostering higher motivation and involvement in the recovery process.

2.2. Software Development

The software development process for this device was not approached solely from an engineering perspective, as is common in many projects. Instead, special attention was given to rehabilitation-oriented software design, particularly concerning the central module. While the fundamental features of this module have been previously discussed, an important design consideration was the intentional positioning of the steering wheel and central module at a higher level than typically found in passenger cars. This setup encourages the user to maintain their hands in an elevated position, preventing them from resting on a table or their thighs, thereby promoting active upper limb engagement.

For the left mirror-window module, the development process focused on determining whether restricting the displayed image area, differentiating between a side window and a side mirror, was necessary. Ultimately, it was concluded that the nature of the exercises and the user’s focus on the displayed information were more critical than limiting the screen size.

Figure 5 illustrates the system concept of the driver training and rehabilitation device.

Figure 5.

Block diagram of the Driver Training Device: A—exercise module system, B—power supply system, C—control system.

Further work focused on refining the software in the context of rehabilitation through the implementation of the right multifunctional panel module and the task module, particularly concerning movement ergonomics. Each of these modules includes dedicated interactive elements and indicator LEDs. The introduction of specialized buttons within these modules enforces active upper limb exercises, emphasizing rehabilitation, movement speed, and precision, while incorporating biofeedback mechanisms.

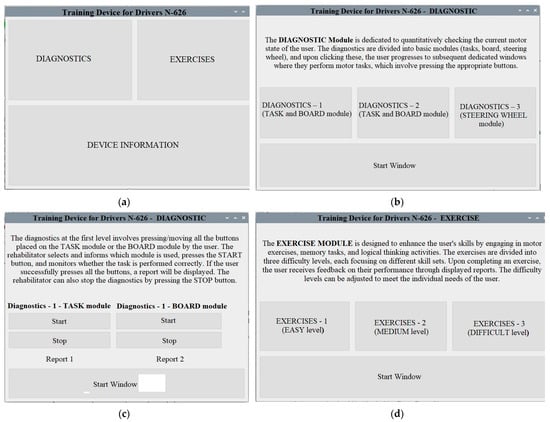

The key components of this software have already been outlined, including the diagnostic module, exercise module, and reporting module, which generates feedback after each completed task. The software was developed in Python using Visual Studio Code, with separate script files corresponding to different functionalities, each of which can be executed independently [28,29,30,31]. Because the code base is extensive, the article presents sample screenshots of the graphical user interface (Figure 6) instead of the source code. Upon launching the device, the start window appears (Figure 6a), allowing the user to choose between the diagnostic module, exercise module, and device information. The project title is displayed at the top of each window. At the beginning of the session, it is recommended that the user open the device information window, which provides a brief description of the system, details of the project, as well as the project logo and an image of the device model.

Figure 6.

Driver Exercise Device user interface: (a) Information window; (b) Diagnostics_1 window; (c) Diagnostics_2 window; (d) Exercise window.

The first recommended step is to initiate the diagnostic module (Figure 6b), where the user is guided through the entire diagnostic process and given the option to select one of three rounds, assessing their range of motion and interaction with the device. During this process, the user must press the designated control buttons on specific modules of the system. The results obtained are subsequently displayed in reports (Figure 6c). The user’s objective is to press all the buttons in the shortest possible time. If they are unable to complete the task, the rehabilitation specialist has the option to terminate the diagnostic session early by pressing the stop button. For this reason, both possible report formats are included in the screenshots.
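The diagnostic flow described above (press every button as quickly as possible, with an optional early stop by the specialist) can be illustrated with a small data structure. The Python sketch below is hypothetical: the class name, field names, report keys, and the choice of eight buttons numbered 1 to 8 are assumptions made for illustration and do not reproduce the authors' software. Times are taken as seconds since the start of the session.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

TOTAL_BUTTONS = 8  # assumption: one diagnostic round uses eight buttons


@dataclass
class DiagnosticSession:
    """Collects button presses and produces one of two report formats."""

    presses: List[Tuple[int, float]] = field(default_factory=list)
    stopped_early: bool = False
    end_time_s: Optional[float] = None

    def press(self, button_id: int, t_s: float) -> None:
        """Log the first press of each button; ignore repeats and late presses."""
        if self.end_time_s is not None:
            return  # session already finished or stopped
        if not 1 <= button_id <= TOTAL_BUTTONS:
            raise ValueError(f"unknown button id: {button_id}")
        if any(b == button_id for b, _ in self.presses):
            return
        self.presses.append((button_id, t_s))
        if len(self.presses) == TOTAL_BUTTONS:
            self.end_time_s = t_s  # all buttons pressed: task complete

    def stop(self, t_s: float) -> None:
        """Early termination by the rehabilitation specialist."""
        if self.end_time_s is None:
            self.stopped_early = True
            self.end_time_s = t_s

    def report(self) -> Dict[str, object]:
        """Summarize the round: completion, order of presses, total time."""
        return {
            "completed": len(self.presses) == TOTAL_BUTTONS,
            "stopped_early": self.stopped_early,
            "buttons_pressed": len(self.presses),
            "sequence": [b for b, _ in self.presses],
            "total_time_s": self.end_time_s,
        }
```

In this sketch, a completed round and an interrupted round share one report structure and differ only in the `completed` and `stopped_early` flags, which is one simple way to cover both report formats mentioned in the text.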

Following the diagnostic phase, the user proceeds to the exercise tasks, which involve performing specific movement patterns as assigned by the rehabilitation specialist. These exercises are structured across three predefined difficulty levels—easy, medium, and difficult—allowing the user to select the appropriate level (Figure 6d).

3. Results

As part of the conducted research, the developed prototype was tested under simulated operational conditions. Due to cost constraints, the new technology was evaluated in an environment closely resembling real-world conditions, focusing on several operational parameters. These included the range of motion exercises performed by drivers using the system, the application of biofeedback through various interactive buttons, and the accuracy of each application module’s functionality.

The testing was conducted with the participation of four individuals aged 40, 53, 67, and 68, comprising two women and two men. Notably, two participants had previously contracted COVID-19 and suffered from neurological conditions. Over the course of a one-week testing period, none of the participants reported any issues related to software malfunctions.

Regarding the obtained results, improvements were assessed based on errors in selecting the correct buttons, the accuracy of pressing them, and reaction times during task execution, as recorded by the system. Depending on the participant, performance improvements ranged from 10% to 30%.
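The text does not state how the percentage improvement was computed. One common definition for metrics where lower values are better (error counts, reaction times) is the relative change from the first to the last measurement. The short Python sketch below shows that definition; the function name is hypothetical and the formula is an assumption, not necessarily the one used in the study.

```python
def percent_improvement(baseline: float, final: float) -> float:
    """Relative improvement in percent for a lower-is-better metric.

    A positive result means the final value is better (smaller) than
    the baseline; a negative result means performance got worse.
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - final) / baseline * 100.0
```

For example, with purely illustrative values, a task time that drops from 4.0 s to 3.0 s gives `percent_improvement(4.0, 3.0)`, a 25% improvement.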

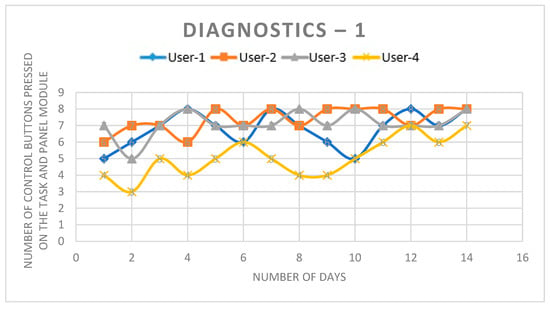

Figure 7 presents the results of the initial diagnostic module tests, which were conducted over 14 days with the four participants. During the diagnostics, the length and tilt angle of the right arm module were adjusted to match the anthropometric measurements of each user. The graph illustrates the results from “Diagnostics 1”, which involved pressing and sliding all eight buttons in both the task module and the panel module.

Additionally, the diagnostic module includes two further testing phases, “Diagnostics 2” and “Diagnostics 3”, which separately evaluate the range of motion, the speed of object manipulation within the task module and the panel module, and steering wheel control. Since the underlying software operation remains consistent across all modules, Figure 7 depicts “Diagnostics 1”, visualizing the pressing and sliding of all buttons in a random order across both modules.

Figure 7.

Results from Diagnostic Module 1.

Furthermore, the system allows for the measurement and recording of both task completion time and the exact sequence in which actions were performed. The purpose of “Diagnostics 1” was to assess the participant’s range of motion and use these results to tailor subsequent rehabilitation exercises. Consequently, individual reaction speeds will be presented in graphical form within the exercise module results.

The issues encountered by some participants in pressing all the control buttons may indicate limitations in the full range of motion of the upper limbs. Therefore, subsequent stages of the exercises take this into account, or the arm lengths of these modules may need to be adjusted. As shown in Figure 7, Person 4 exhibited poorer performance, which could be indicative of a dysfunction, particularly during the early stages of the tests (on days 2, 8, and 9). However, by the end of the exercise period, this individual achieved much better results, pressing 6 to 7 of the 8 buttons. Similarly, Person 1 showed significant improvement over the course of the exercises. For the other two participants, the results were relatively consistent.

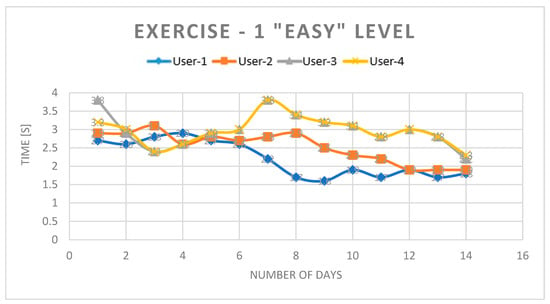

In the Exercise 1 tab at the “Easy” level, the focus was on the speed at which both hands completed the task of pressing and sliding the task module’s buttons. The buttons could be operated in any order. The times achieved by participants ranged from 3.8 s to 1.8 s, with the most significant improvements observed in Person 3 (see Figure 8).

Figure 8.

Results from Exercise Module 1 at the “Easy” level.

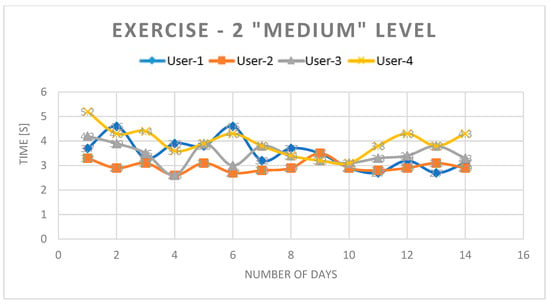

In the Exercise 2 tab at the “Medium” level, the focus shifted to the speed of the right upper limb while pressing and rotating the buttons on the board module. The task had to be completed in the sequence indicated by the LEDs. The system displayed the order in which the three buttons should be pressed, followed by a pause time. During this pause, participants were required to memorize the order of operations and then repeat them as quickly as possible. In addition to the reaction time measurement (Figure 9), the report also provided information about which buttons were pressed.

Figure 9.

Results from Exercise Module 2 at the “Medium” level.

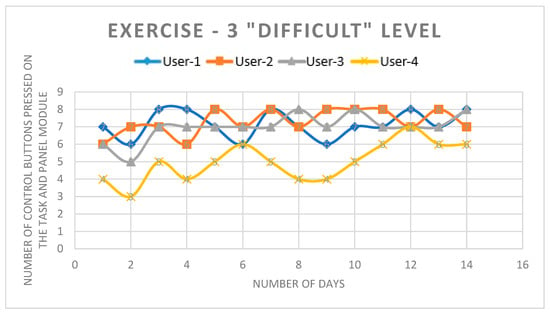
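The scoring of one Exercise 2 trial, as described above, can be sketched as a comparison between the sequence shown by the LEDs and the sequence the participant reproduced. The function name `score_recall`, the `RecallResult` fields, and the sample values below are hypothetical and only illustrate the idea; the actual report format of the device is not specified here.

```python
from typing import NamedTuple


class RecallResult(NamedTuple):
    correct: bool            # True only if every button was pressed in the shown order
    matched_positions: int   # how many positions of the sequence were reproduced correctly
    response_time_s: float   # time of the last press, measured from the end of the pause


def score_recall(shown, presses):
    """Score one trial.

    shown   -- button ids in the order indicated by the LEDs
    presses -- (seconds since the pause ended, button id) tuples
    """
    ordered = sorted(presses)
    pressed = [button for _, button in ordered]
    matched = sum(1 for s, p in zip(shown, pressed) if s == p)
    response_time = ordered[-1][0] if ordered else float("nan")
    return RecallResult(pressed == list(shown), matched, response_time)


# Illustrative values only, not measurements from the study.
result = score_recall(
    shown=["B3", "B1", "B4"],
    presses=[(0.8, "B3"), (1.5, "B4"), (2.1, "B1")],
)
print(result)
```

In this example the first button matches but the second and third are swapped, so the trial is marked incorrect with one matched position and a response time of 2.1 s.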

The chart in Figure 10 presents the results from Exercise 3 at the “Difficult” level. This exercise assessed how the user, while driving a simulated vehicle with both hands on the steering wheel, simultaneously monitors the surroundings, in this case the right side of the vehicle. The user’s reactions are tested by lighting a corresponding indicator, an RGB LED on the right module of the board, which signals that a specific task must be performed. These tasks involve pressing or rotating selected buttons on the board. The chart shows the number of errors made during the 14-day period.

Figure 10.

Results from Exercise Module 3 at the “Difficult” level.

This task specifically focused on the ability of the right upper limb to reach the button and then to position the hand correctly in order to press the button or rotate the knob to the right or left. The buttons, arranged in pairs in two rows, each require a different wrist position. At this level, the time taken for a single test was not measured, although this information could be generated for the user in another report tab. The graph shows that Person 4 made the most errors, with a high number of incorrect movements in the early days of the exercises (four wrong movements). This was expected given their diagnostic results compared with those of the other participants. Their worst results were observed on days 2, 4, 8, and 9, which were attributed to more significant movement dysfunctions. However, over the short testing period, Person 4 was still able to achieve a final count of six errors in the later days. The remaining three individuals improved their results by eliminating one or three errors.
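A per-participant summary of daily error counts like the one discussed above could be computed as in the following sketch. The function `summarize_errors` and the sample counts are assumptions introduced for illustration; they do not reproduce the data plotted in Figure 10.

```python
def summarize_errors(errors_by_day):
    """Summarize one participant's daily error counts.

    errors_by_day -- mapping of day number to the number of errors made on that day
    """
    days = sorted(errors_by_day)
    first, last = errors_by_day[days[0]], errors_by_day[days[-1]]
    worst = max(errors_by_day.values())
    return {
        "first": first,
        "last": last,
        "change": last - first,  # negative means fewer errors at the end
        "worst_days": [day for day in days if errors_by_day[day] == worst],
    }


# Illustrative values only, not measurements from the study.
summary = summarize_errors({1: 3, 2: 4, 3: 2, 4: 1})
print(summary)
```

Reporting the worst days alongside the first-to-last change keeps isolated poor sessions visible even when the overall trend is an improvement.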

The solution described in the article is innovative and has not been presented previously. A patent application for the device was filed with the Patent Office of the Republic of Poland on 27 September 2022 under the title “Device for Exercising Drivers”, application number P.442373, by Jacek S. Tutak, Krzysztof Lew, Andrzej Burghardt, Michał Jurek, and Piotr Matłosz.

4. Discussion

The growing population requiring driving rehabilitation—including post-COVID patients, neurological cases, and elderly drivers—faces significant challenges in retraining coordination, reaction speed, and decision-making skills. Current solutions lack comprehensive testing protocols for these specific needs. Our innovative rehabilitation device addresses this gap through a multi-module system combining physical and cognitive training with real-time biofeedback.

The prototype’s design features several key innovations:

- A modular architecture with left mirror-window, central steering, and right control panel modules enables holistic training beyond standard steering wheel simulators;

- Tactile-diverse buttons (varying materials, textures, sizes) stimulate hand receptors while improving grip strength and fine motor control;

- Integrated biofeedback through distance sensors and cameras tracks head/torso movements, providing corrective feedback;

- Cognitive task integration links mirror-window displays with central screen exercises, training visual-motor coordination.

Initial testing with four participants (ages 40–68, including post-COVID and neurological cases) showed promising results during one-week trials. The multi-module approach appeared particularly effective in:

- Reducing response times by 15% through coordinated task execution;

- Improving engagement versus conventional methods;

- Enhancing both physical mobility and cognitive skills simultaneously.

However, the study has limitations:

- Small sample size (n = 4) and short duration (1 week);

- Lack of comparison with traditional rehabilitation methods;

- Need for standardized performance metrics.

Future research directions should include:

- Expanded clinical trials with diverse patient groups;

- Comparative studies against commercial simulators;

- Development of adaptive algorithms using sensor data;

- Long-term outcome measurements.

The developed prototype can be considered a device aligned with the Society 5.0 concept, which integrates modern technologies with societal challenges, such as post-illness rehabilitation. Within this vision, technologies such as IoT, robotics, and artificial intelligence are meant to enhance the quality of life while maintaining the central role of human decision-making. Our mechatronic device fits this paradigm by combining advanced technical solutions (e.g., driving simulation with biofeedback or adjustable steering resistance modules) with the individual needs of patients suffering from neurological disorders. Unlike fully autonomous systems, our solution requires active participation from both the patient and the therapist at every stage of rehabilitation. The user determines the selection of training modules, exercise intensity, and data interpretation, ensuring compliance with the human-in-the-loop principle. Additionally, therapy personalization (e.g., adapting steering resistance to the patient’s health condition) highlights the societal role of the device: it not only aids recovery but also helps prevent transportation exclusion by enabling a safer return to driving. Such synergy between technology and societal goals is key to Society 5.0, where technological progress must go hand-in-hand with solving real-world challenges, such as an aging population or the rising prevalence of neurological diseases.

5. Conclusions

The preliminary results indicate that the developed rehabilitation system can enhance motor skills and cognitive functions in individuals with movement limitations, including those with neurological impairments. Testing with four participants revealed measurable improvements in precision (10–30% error reduction) and reaction times (decreasing from 3.8 s to 1.8 s in basic tasks), even among users with significant initial difficulties. The system’s stability (no software malfunctions) and adaptability (adjustable arm length and tilt angle) support personalized rehabilitation, making it suitable for users with varying degrees of motor dysfunction.

The prototype successfully integrates physical and cognitive training, combining tactile interfaces, biofeedback, and adaptive exercises to improve coordination, reaction speed, and decision-making, all key skills for driving rehabilitation. While the preliminary results are promising, further clinical trials with larger participant groups are necessary to validate the system’s effectiveness. The system’s design allows for potential applications in both rehabilitation centers and home-based therapy, offering a scalable solution for motor and cognitive recovery.

Ultimately, this technology has significant potential to enhance rehabilitation outcomes and road safety by better preparing individuals with mobility impairments for real-world driving scenarios. Future research should focus on expanding the system’s functionalities, optimizing user interfaces for different disability levels, and conducting long-term studies to assess sustained improvements in motor and cognitive performance.

6. Patents

A patent application entitled “Device for Exercising Drivers”, no. P.442373, was filed with the Patent Office of the Republic of Poland on 27 September 2022 by Jacek S. Tutak, Krzysztof Lew, Andrzej Burghardt, Michał Jurek, and Piotr Matłosz.

Author Contributions

Conceptualization, J.S.T. and K.L.; methodology, J.S.T. and K.L.; software, J.S.T. and K.L.; validation, J.S.T.; formal analysis, K.L.; investigation, J.S.T. and K.L.; resources, J.S.T. and K.L.; data curation, K.L.; writing—original draft preparation, J.S.T. and K.L.; writing—review and editing, K.L.; visualization, J.S.T.; supervision, K.L.; project administration, K.L.; funding acquisition, J.S.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Podkarpackie Centrum Innowacji (PCI), grant number N3_626.

Data Availability Statement

Data are contained within the article. All data have been presented in the text of the paper; other data can be made available on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Reeves, M.J.; Bushnell, C.D.; Howard, G.; Gargano, J.W.; Duncan, P.W.; Lynch, G.; Khatiwoda, A.; Lisabeth, L. Sex differences in stroke. Epidemiology, clinical presentation, medical care, and outcomes. Lancet Neurol. 2008, 7, 915–926.

- Peterson, B.L.; Won, S.; Geddes, R.I.; Sayeed, I.; Stein, D.G. Sex-related differences in effects of progesterone following neonatal hypoxic brain injury. Behav. Brain Res. 2015, 286, 152–165.

- Just, F.; Baur, K.; Riener, R.; Klamroth-Marganska, V.; Rauter, G. Online adaptive compensation of the ARMin Rehabilitation Robot. In Proceedings of the 6th IEEE International Conference on IEEE 2016, Singapore, 26–29 June 2016; pp. 747–752.

- Kwolek, A. Rehabilitacja Medyczna; Elsevier Urban & Partner: Wrocław, Poland, 2003.

- Grabowska-Fudala, B.; Jaracz, K.; Górna, K. Zapadalność, śmiertelność i umieralność z powodu udarów mózgu – Aktualne tendencje i prognozy na przyszłość. Przegląd Epidemiol. 2010, 64, 439–442.

- Feigin, V.L.; Forouzanfar, M.H.; Krishnamurthi, R.; Mensah, G.A.; Connor, M.; Bennett, D.A.; Moran, A.E.; Sacco, R.L.; Anderson, L.; Truelsen, T.; et al. Global and regional burden of stroke during 1990–2010. Findings from the Global Burden of Disease Study 2010. Lancet 2014, 383, 245–255.

- Seshadri, S.; Wolf, A. Lifetime risk of stroke and dementia: Current concepts, and estimates from the Framingham study. Lancet Neurol. 2007, 6, 1106–1114.

- Mohan, K.M.; Wolfe, C.D.; Rudd, A.G.; Heuschmann, P.U.; Kolominsky-Rabas, P.L.; Grieve, A.P. Risk and cumulative risk of stroke recurrence. A systematic review and meta-analysis. Stroke 2011, 42, 1489–1494.

- Gunduz, M.E.; Bucak, B.; Keser, Z. Advances in Stroke Neurorehabilitation. J. Clin. Med. 2023, 12, 6734.

- O’Donnell, M.J.; Xavier, D.; Liu, L.; Zhang, H.; Chin, S.L.; Rao-Melacini, P.; Rangarajan, S.; Islam, S.; Pais, P.; McQueen, M.J.; et al. Risk factors for ischaemic and intracerebral haemorrhagic stroke in 22 countries (INTERSTROKE study). A case-control study. Lancet 2010, 376, 112–123.

- Kirchner, A.H.; Gish Kenneth, W.; Staplin, L. System for Testing and Evaluating Driver Situational Awareness. U.S. Patent 5888074-A, 30 March 1999.

- Best Aaron, M.; Turpin Aaron, J.; Purvis Aaron, M.; Barton, J.K.; Havell David, J.; Welles Reginald, T.; Turpin Darrell, R.; Voorhees James, W.; Kearney John, E.; Price Camille, B.; et al. System, Method and Apparatus for Adaptive Driver Training. U.S. Patent 20110076649-A1, 31 March 2011.

- Joon Woo, S.; Myoung Ouk, P.; Woo Taik, L.; Hwa Kyung, S. Driving Simulator Apparatus and Method of Driver Rehabilitation Training Using the Same. U.S. Patent 20150024347-A1, 22 January 2015.

- Best, A.M.; Barton, J.K.; Havell, D.J.; Welles, R.T.; Turpin, D.R.; Voorhees, J.V.; Kearney, J.E.; Price, C.B.; Stahlman, N.P.; Turpin, A.J.; et al. System, Method and Apparatus for Adaptive Driver Training. U.S. Patent 8770980B2, 8 July 2014.

- Kearney, J.E.; Turpin, D.R.; Price, C.B.; Stahlman, N.P.; Welles, R.T.; Havell, D.J.; Purvis, A.M.; Barton, J.K.; Best, A.M.; Turpin, A.J.; et al. System, Method and Apparatus for Driver Training. U.S. Patent 8894415-B2, 25 November 2014.

- Olczak, A.; Truszczyńska-Baszak, A. Influence of the Passive Stabilization of the Trunk and Upper Limb on Selected Parameters of the Hand Motor Coordination, Grip Strength and Muscle Tension, in Post-Stroke Patients. J. Clin. Med. 2021, 10, 2402.

- Zasadzka, E.; Tobis, S.; Trzmiel, T.; Marchewka, R.; Kozak, D.; Roksela, A.; Pieczyńska, A.; Hojan, K. Application of an EMG-Rehabilitation Robot in Patients with Post-Coronavirus Fatigue Syndrome (COVID-19)-A Feasibility Study. Int. J. Environ. Res. Public Health 2022, 19, 10398.

- Johnson, M.; Van der Loos, M.H.F.; Burgar, C.G.; Shor, P. Design and evaluation of Driver’s SEAT: A car steering simulation environment for upper limb stroke therapy. Robotica 2003, 21, 13–23.

- George, S.; Crotty, M.; Gelinas, I.; Devos, H. Rehabilitation for improving automobile driving after stroke. Cochrane Database Syst. Rev. 2014, 2014, CD008357.

- Almaleh, A. A Novel Deep Learning Approach for Real-Time Critical Assessment in Smart Urban Infrastructure Systems. Electronics 2024, 13, 3286.

- Oleksy, Ł.; Królikowska, A.; Mika, A.; Reichert, P.; Kentel, M.; Kentel, M.; Poświata, A.; Roksela, A.; Kozak, D.; Bienias, K.; et al. A reliability of active and passive knee joint position sense assessment using the Luna EMG rehabilitation robot. Int. J. Environ. Res. Public Health 2022, 19, 15885.

- Tutak, J.S.; Lew, K.; Burghardt, A.; Jurek, M.; Matłosz, P. Urządzenie do Ćwiczenia Kierowców. Polish Patent Application P.442373, 27 September 2022.

- Wirkner, J.; Christiansen, H.; Knaevelsrud, C.; Lüken, U.; Wurm, S.; Schneider, S.; Brakemeier, E.-L. Mental Health in Times of the COVID-19 Pandemic: Current Knowledge and Implications From a European Perspective. Eur. Psychol. 2021, 26, 310–322.

- Cinar, O.E.; Rafferty, K.; Cutting, D.; Wang, H. AI-Powered VR for Enhanced Learning Compared to Traditional Methods. Electronics 2024, 13, 4787.

- Bae, J.; Kwon, S.; Myeong, S. Enhancing Software Code Vulnerability Detection Using GPT-4o and Claude-3.5 Sonnet: A Study on Prompt Engineering Techniques. Electronics 2024, 13, 2657.

- Budzik, G.; Turek, P.; Traciak, J. The influence of change in slice thickness on the accuracy of reconstruction of cranium geometry. Proc IMechE Part H J. Eng. Med. 2017, 231, 197–202.

- Turek, P. Automating the process of designing and manufacturing polymeric models of anatomical structures of mandible with Industry 4.0 convention. Polimery 2019, 64, 522–529.

- Shin, H.; Park, W.; Kim, S.; Kweon, J.; Moon, C. Driver Identification System Based on a Machine Learning Operations Platform Using Controller Area Network Data. Electronics 2025, 14, 1138.

- Cao, Y.; Sun, H.; Li, G.; Sun, C.; Li, H.; Yang, J.; Tian, L.; Li, F. Multi-Environment Vehicle Trajectory Automatic Driving Scene Generation Method Based on Simulation and Real Vehicle Testing. Electronics 2025, 14, 1000.

- Fan, L.; Yang, J.; Wang, L.; Zhang, J.; Lian, X.; Shen, H. Efficient Spiking Neural Network for RGB–Event Fusion-Based Object Detection. Electronics 2025, 14, 1105.

- Trzmiel, T.; Marchewka, R.; Pieczyńska, A.; Zasadzka, E.; Zubrycki, I.; Kozak, D.; Mikulski, M.; Poświata, A.; Tobis, S.; Hojan, K. The Effect of Using a Rehabilitation Robot for Patients with Post-Coronavirus Disease (COVID-19) Fatigue Syndrome. Sensors 2023, 23, 8120.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).