Abstract

This study aims to effectively defend against complex and diverse adversarial attacks, enhance adversarial defense performance in the field of textual adversarial examples, and address existing issues in current defense methods such as models’ inability to accurately detect perturbations and perform effective error correction. To this end, we propose a Large Language Model Adversarial Defense (LLMAD) method based on perturbation detection and correction. The LLMAD framework consists of two modules: a perturbation detection module and a perturbation correction module. The detection module combines pre-trained models with soft-masking layers to rapidly determine whether samples contain adversarial perturbations. The correction module employs large language models fine-tuned using an adversarial example perturbation dataset constructed through data augmentation, enhancing task performance in adversarial defense and enabling efficient and accurate error correction of adversarial samples. Experimental results demonstrate that, across multiple datasets, various target models defended through the LLMAD method achieve satisfactory defense effectiveness against different types of adversarial attacks. The classification accuracy of defended models shows an average improvement of 66.8% compared to undefended baselines and outperforms existing methods by 13.4% on average. Additional perturbation detection performance tests and ablation studies further validate the accuracy of detection capabilities and the effectiveness of module combinations. A series of experiments confirm that the LLMAD method can significantly enhance defensive effectiveness against textual adversarial attacks.

1. Introduction

Deep learning technology, as a research hotspot and frontier, has been widely applied in natural language processing [1], computer vision [2], and other research directions, achieving unprecedented breakthroughs. Although deep learning models have been extensively adopted in academia and industry, demonstrating performance surpassing traditional methods, they still face numerous security threats and vulnerabilities [3]. Among these, adversarial examples are one critical issue [4]. Adversarial examples are input samples crafted by attackers through imperceptible, subtle perturbations intentionally added to datasets, causing models to misjudge and output erroneous results [5]. Initial research on adversarial examples primarily focused on the image domain, where methods like FGSM [6] achieved relatively effective results in tasks such as image classification.

With the expanding application of natural language processing in artificial intelligence, researchers have identified the existence of adversarial examples in the text domain, raising growing concerns about textual security. In recent years, adversarial example research in the image domain has matured, while studies in the text domain remain relatively limited [7,8]. This disparity primarily stems from the fact that images are typically two-dimensional or three-dimensional continuous data, whereas text is one-dimensional discrete data, conveying information through combinations of numbers, letters, and symbols. Consequently, most adversarial attack and defense methods applicable to the image domain cannot be transferred to the text domain.

The phenomenon of textual adversarial examples has become pervasive across various NLP downstream tasks. In the context of opinion manipulation, a 2019 investigation exposed that Google’s online translation platform could transform the sentiment polarity of user-input sentences from negative to positive. Furthermore, the 2022 EU Cybersecurity Report highlighted that adversarially generated reviews could bypass content moderation systems, with specific emoji-embedded text reducing misinformation detection accuracy from 98% to 32%. In autonomous driving applications, textual adversarial attacks on traffic signs through added special characters have caused vehicular NLP systems to misinterpret “speed limit 60” as “speed limit 80”, directly elevating collision risk indices. The widespread existence of textual adversarial phenomena underscores the critical necessity of enhancing application security research for corresponding models.

Before the emergence of large language models (LLMs), researchers proposed adversarial defense methods from multiple perspectives to counter malicious textual adversarial attacks and enhance model robustness. However, significant challenges persist. First, detection-based defense methods in deep learning models predominantly rely on masked language models for adversarial example detection. These methods tend to identify errors without correcting them, and their performance requires improvement. This limitation arises because only 10–20% of characters are masked for prediction during pre-training, resulting in insufficient error detection and correction capabilities. Second, current adversarial defense methods struggle to balance robustness improvements with classification accuracy on clean samples. Furthermore, their effectiveness may vary inconsistently across different NLP tasks (e.g., sentiment analysis, natural language inference) [9], and in some cases, they may even reduce model robustness. Finally, models face difficulties in countering diverse adversarial attacks. The rich semantic-syntactic structures and varied expressions in textual data enable attackers to generate adversarial examples through multiple strategies, bypassing existing defense frameworks and causing erroneous text classification.

Therefore, to address the aforementioned issues, we are the first to apply LLM technology to textual adversarial example defense. This paper proposes Large Language Model Adversarial Defense (LLMAD), a defense method based on textual perturbation detection and correction. LLMAD consists of a perturbation detection module and a perturbation correction module. Leveraging the exceptional semantic understanding and text processing capabilities of large language models, it detects and corrects perturbations introduced via attacks, thereby accomplishing adversarial example defense tasks. The main contributions of this paper are as follows:

(1) This paper proposes a large language model-based adversarial defense method for textual perturbation detection and correction. Applying large models to textual adversarial defense deepens expertise in adversarial defense and significantly enhances the model’s capability to defend against adversarial attacks.

(2) This paper rigorously validates the feasibility and effectiveness of integrating large language models into adversarial defense through a series of experiments. The method enables error detection and semantic understanding in text, achieves efficient and precise correction of adversarial examples, and strengthens the model’s ability to detect and eliminate adversarial samples.

(3) This paper proposes and constructs a scalable, comprehensive defense framework. It explores the integration of adversarial defense and text correction technologies to improve defense task performance, providing technical pathways and theoretical foundations for enhancing model security.

2. Related Work

Szegedy et al. [10] first proposed the concept of adversarial examples by constructing adversarial samples through subtle perturbations added to images, attacking classification models and causing deep learning models to make erroneous predictions. Papernot et al. [11] introduced adversarial attacks into the text domain for the first time, utilizing the fast gradient sign method to compute gradients of loss functions and generate word-level adversarial examples. Hou et al. [12] proposed the Multi-Level Disturbance Localization Method (MDLM), which integrates heterogeneous deep neural network models as attack targets and combines an improved word importance evaluation function to localize perturbations.

A primary objective of adversarial defense research is to minimize the impact of attacks on model robustness and accuracy by leveraging adversarial examples generated through the aforementioned attack methods, thereby enhancing model security in practical applications. Adversarial detection, the most studied defense approach, identifies adversarial perturbations in input samples and replaces or restores them to clean samples. Zhou et al. [13] proposed the Discriminate Perturbations (DISP) framework, which identifies perturbed words via a perturbation discriminator and finds replacement words through hierarchical classification and K-nearest neighbors search, all while preserving the original model architectures and training processes. Mozes et al. [14] introduced the Frequency Guided Word Substitutions (FGWS) method, which replaces low-frequency words with semantically similar high-frequency words to restore adversarial examples to clean samples. Huang et al. [15] developed the Siamese Calibrated Reconstruction Network (SCRN) method, which optimizes classification consistency via symmetric Kullback–Leibler divergence, forcing models to rely on high-level contextual features and improving resistance to adversarial perturbations. While these defense methods achieve promising results, most focus on specific attack types. Their error detection and correction capabilities degrade in complex textual environments, and they are vulnerable to bypassing by attackers, highlighting insufficient generalization.

LLMs [16] exhibit significant advantages in addressing these challenges. During pre-training, LLMs learn fundamental language structures and general knowledge from large-scale text corpora. Leveraging their exceptional generalization and emergent capabilities [17], they can handle diverse tasks, mitigating the limitations of low generalization and poor transferability in existing adversarial defense methods. The self-attention mechanism [18] in LLMs enables them to process complex syntactic structures in text data and generate semantically similar, natural substitutions, thereby enhancing model robustness and classification accuracy. Prior to the proposal of this paper, large language models had already been applied in the field of adversarial sample attacks. Xu et al. [19] proposed PromptAttack, which converts adversarial textual attacks into an attack prompt that can cause the victim LLM to output the adversarial sample to fool itself. In the field of adversarial example defense, there existed no methods integrated with large language models.

To address the critical limitations of existing adversarial defense methods, including excessive reliance on surface features such as word frequency analysis, difficulty in handling semantically coherent perturbations, the accuracy–robustness trade-off, and insufficient generalization capability, this paper proposes LLMAD, a large language model-based adversarial defense method for textual perturbation detection and correction. By leveraging the contextual understanding capabilities of large language models, LLMAD distinguishes adversarial perturbations from natural variations (e.g., detecting “suttle”→“subtle” substitutions overlooked by FGWS). With its dual-module design combining detection and correction, LLMAD shows enhanced perturbation detection capability in the subsequent experiments while minimizing the impact on clean samples. These results highlight LLMAD’s ability to balance robustness and accuracy, bridging gaps in existing methods and providing an innovative solution for adversarial example defense tasks.

3. Approach

3.1. Problem Formulation

Adversarial examples are specialized input samples that target deep neural network models, typically generated by introducing imperceptible perturbations to original data. These carefully crafted perturbations, though often indiscernible to humans, suffice to induce significant output deviations, transforming correct predictions into erroneous ones. This work addresses two types of adversarial attack in the textual domain: character-level and word-level perturbations. The primary objective of our adversarial defense framework is to enable deep neural network-based classification models to process adversarial samples accurately by improving both the perturbation detection rate and the classification accuracy, thereby systematically improving the model’s adversarial robustness.

This paper focuses on adversarial defense methods in the direction of adversarial example detection. Thus, adversarial defense can be formulated as the following task: Given a classification model, $M$, original sample data $X = \{x_1, x_2, \ldots, x_n\}$, and classification labels, $Y = \{y_1, y_2, \ldots, y_k\}$, the model, $M$, learns a correct mapping, $f: X \rightarrow Y$, through training, which maps an input, $x \in X$, to its corresponding label, $y_{true}$, in $Y$. The maximum probability formula for classification is as follows:

$$\arg\max_{y_i \in Y} P(y_i \mid x) = y_{true} \tag{1}$$

Given an adversarial example, $x_{adv}$, the model, $M$, misclassifies $x_{adv}$, as expressed by the following:

$$\arg\max_{y_i \in Y} P(y_i \mid x_{adv}) \neq y_{true} \tag{2}$$

By applying adversarial defense methods to $M$, adversarial example detection and correction are performed to convert $x_{adv}$ into $x'$, where the adversarial perturbation in $x_{adv}$ is restored or replaced to obtain $x'$. This results in a defended model $M'$, which restores the correct label for $x_{adv}$ while maintaining accurate classification for normal inputs:

$$\arg\max_{y_i \in Y} P(y_i \mid x') = y_{true} \tag{3}$$

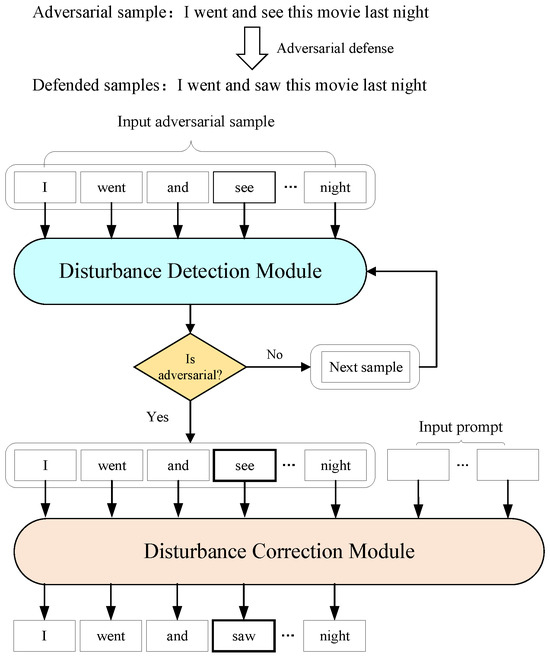

3.2. Model

To address adversarial examples generated via contextual perturbations caused by adversarial attacks on original samples, this paper proposes LLMAD, a textual adversarial defense method based on perturbation detection and correction, with its detailed structure illustrated in Figure 1. LLMAD consists of a perturbation detection module and a perturbation correction module. The detection module localizes and detects perturbations in adversarial samples, predicts error probabilities, determines whether an input sample is adversarial, and forwards adversarial examples to the correction module. The correction module restores or replaces adversarial samples with clean counterparts, thereby eliminating adversarial perturbations and ensuring correct model outputs.

Figure 1.

Structure of LLMAD.
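The detect-then-correct flow described above can be sketched in a few lines. This is a minimal illustration only: the function `defend` and the three toy stand-ins (`toy_detector`, `toy_corrector`, `toy_classifier`) are hypothetical placeholders, not the trained Bi-GRU detector, the fine-tuned LLM corrector, or the target models used in this paper.

```python
from typing import Callable


def defend(text: str,
           is_adversarial: Callable[[str], bool],
           correct: Callable[[str], str],
           classify: Callable[[str], str]) -> str:
    """Two-stage defense: only inputs flagged by the detector are rewritten."""
    if is_adversarial(text):
        text = correct(text)  # restore or replace the perturbed tokens
    return classify(text)     # clean inputs reach the classifier unchanged


# Toy stand-ins (assumptions for illustration, not the paper's modules).
TYPO_FIXES = {"terrlble": "terrible", "g0od": "good"}


def toy_detector(text: str) -> bool:
    return any(tok in TYPO_FIXES for tok in text.split())


def toy_corrector(text: str) -> str:
    return " ".join(TYPO_FIXES.get(tok, tok) for tok in text.split())


def toy_classifier(text: str) -> str:
    return "negative" if "terrible" in text.split() else "positive"


undefended = toy_classifier("a terrlble movie")
defended = defend("a terrlble movie", toy_detector, toy_corrector, toy_classifier)
print(undefended, defended)
```

In this toy setting the character-level perturbation flips the undefended prediction, while the defended path restores it; clean inputs bypass the corrector entirely, which mirrors the efficiency argument made for the detection module.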

3.3. Perturbation Detection Module

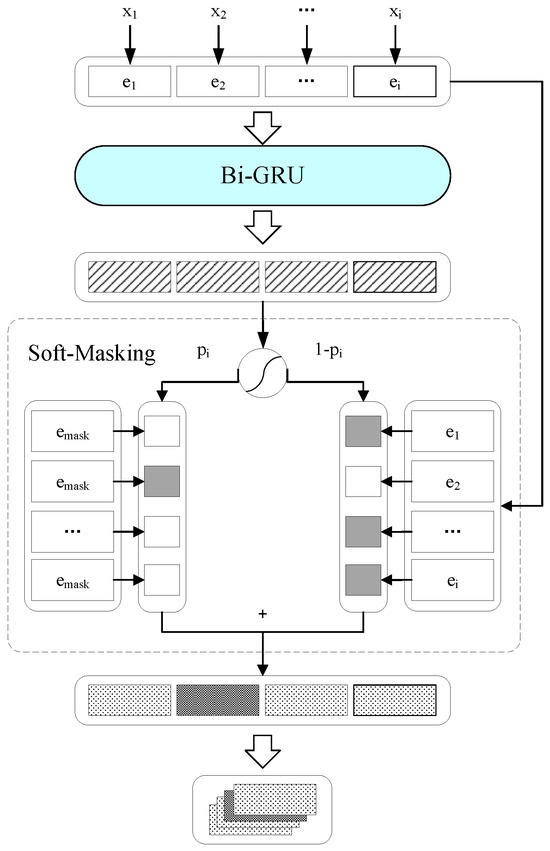

The perturbation detection module in this study consists of a Bi-GRU model and a soft-masking layer, designed to determine whether input samples are adversarial. This module aims to distinguish between adversarial examples and clean samples, forwarding detected adversarial examples to the perturbation correction module to eliminate their adversarial nature, thereby achieving defense against adversarial attacks. On the one hand, the module avoids unnecessary modifications to clean samples; on the other hand, it enhances the efficiency of the defense framework. The architecture of the perturbation detection module in the LLMAD method is illustrated in Figure 2.

Figure 2.

Structure of the perturbation detection module.

The input is an embedding sequence $E = (e_1, e_2, \ldots, e_n)$, where $e_i$ represents the embedding of the word $x_i$, which is the sum of the word embedding, positional embedding, and segment embedding of $x_i$. The output is a label sequence $G = (g_1, g_2, \ldots, g_n)$, where $g_i$ denotes the label of the i-th character, with 1 indicating an incorrect character and 0 indicating a correct one. For each word, there is a probability $p_i$, representing the likelihood of $g_i$ being labeled 1. A higher $p_i$ corresponds to a greater probability of incorrectness. For each word in the sequence, the error probability is defined as shown in Equation (4), where $P_d(g_i = 1 \mid X)$ denotes the conditional probability provided via the detection network, $\sigma$ represents the sigmoid function, $h_i^d$ is the hidden state of the Bi-GRU, and $W_d$ and $b_d$ are learnable parameters:

$$p_i = P_d(g_i = 1 \mid X) = \sigma(W_d h_i^d + b_d) \tag{4}$$

Additionally, the hidden states are defined in Equations (5)–(7), where GRU denotes the GRU function, and $[\overrightarrow{h}_i^d; \overleftarrow{h}_i^d]$ represents the concatenation of the hidden states from both directions of the Bi-GRU:

$$\overrightarrow{h}_i^d = \mathrm{GRU}(\overrightarrow{h}_{i-1}^d, e_i) \tag{5}$$

$$\overleftarrow{h}_i^d = \mathrm{GRU}(\overleftarrow{h}_{i+1}^d, e_i) \tag{6}$$

$$h_i^d = [\overrightarrow{h}_i^d; \overleftarrow{h}_i^d] \tag{7}$$

The soft-masking layer corresponds to a weighted sum of the input embeddings and mask embeddings, with the error probability serving as the weight. The soft-masked embedding $e_i'$ for the i-th character is defined in Equation (8), where $e_i$ is the input embedding, and $e_{mask}$ is the mask embedding:

$$e_i' = p_i \cdot e_{mask} + (1 - p_i) \cdot e_i \tag{8}$$
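As a small worked illustration of the soft-masking step, the sketch below computes an error probability with a sigmoid over a hidden state and then blends the input embedding with the mask embedding using that probability as the weight. The function names, the toy vectors, and the use of a single weight vector per token are assumptions made for illustration; the actual module operates on Bi-GRU hidden states with learned parameters.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def error_probability(hidden, weights, bias):
    """Error probability for one token: sigmoid(w . h + b)."""
    return sigmoid(sum(w * h for w, h in zip(weights, hidden)) + bias)


def soft_mask(embedding, mask_embedding, p):
    """Weighted sum p * e_mask + (1 - p) * e_i, applied per dimension."""
    return [p * m + (1.0 - p) * e for e, m in zip(embedding, mask_embedding)]


# Toy vectors (illustrative values only).
e_i = [0.2, -0.4, 0.6]
e_mask = [1.0, 1.0, 1.0]

p = error_probability(hidden=[0.5, -0.5], weights=[2.0, -2.0], bias=0.0)
print(p)                             # probability that this token is perturbed
print(soft_mask(e_i, e_mask, p))     # embedding pulled toward the mask
print(soft_mask(e_i, e_mask, 0.0))   # p = 0 leaves the input embedding as-is
```

The two limiting cases make the design choice visible: a token judged clean (probability near 0) keeps its own embedding, while a token judged perturbed (probability near 1) is effectively replaced by the mask embedding.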

The perturbation detection module employs Equation (9) to determine whether an input sample is adversarial, where and are hyperparameters. If , the sample is non-adversarial; otherwise, it is forwarded to the perturbation correction module:

The training of the perturbation detection module follows an end-to-end approach. Given training data pairs consisting of original sequences and corrected sequences, , where represents an error-containing sequence and denotes the corresponding error-free sequence, (). The training objective is to optimize the loss function for improved detection performance:

3.4. Perturbation Correction Module

3.4.1. Construction of Adversarial Perturbation Dataset

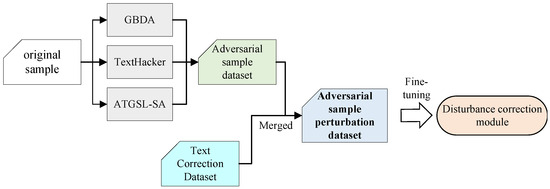

Due to the scarcity of publicly available high-quality adversarial example datasets, this paper employs data augmentation on adversarial examples to enhance the capability of large models in textual adversarial defense tasks and improve the performance of the perturbation correction module. Specifically, the adversarial example dataset is combined with a text correction dataset to construct an adversarial perturbation dataset, which is then used to fine-tune the large model, as illustrated in Figure 3.

Figure 3.

Construction of the adversarial perturbation dataset. First, state-of-the-art adversarial attack algorithms are applied to original samples to generate three sets of adversarial examples, which are combined into an adversarial example dataset. This dataset is then merged with the text correction dataset, ultimately forming the adversarial perturbation dataset used in the fine-tuning phase of large language models.

On the one hand, state-of-the-art adversarial attack algorithms are applied to original samples to generate adversarial examples, which are combined to form the adversarial example dataset. These include the ATGSL-SA [20], TextHacker [21], and GBDA [22] algorithms:

- ATGSL-SA employs a heuristic search-based attack algorithm combined with a linguistic lexicon to generate adversarial examples with high semantic similarity;

- TextHacker utilizes a learning-based hybrid local search algorithm that estimates word importance through prediction label changes induced via word substitutions and optimizes adversarial perturbations to minimize deviations from original samples;

- GBDA searches for an adversarial distribution, leveraging BERTScore and language model perplexity to enhance perceptual quality and fluency. The integration of these components enables powerful, efficient, and gradient-based textual adversarial attacks.

On the other hand, the text correction dataset is constructed using the FCE dataset and NUCLE dataset [23], both widely adopted as baseline datasets for text correction tasks:

- The FCE dataset consists of 1244 scripts written by international learners of English as a second language. Each script typically contains two errors, with each error annotated and corrected by human annotators based on a framework of 88 error types;

- The NUCLE dataset comprises 1397 argumentative essays covering diverse topics such as technology, healthcare, and finance. Each essay is corrected by an annotator following a taxonomy of 28 error types.
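The merging step described above can be sketched as follows. This is only an illustration of combining (perturbed text, clean text) pairs from the two sources into one fine-tuning set; the function name `build_perturbation_dataset`, the record fields (`input`, `target`, `source`), and the toy sentences are hypothetical and do not reflect the paper's actual data format.

```python
import random


def build_perturbation_dataset(adversarial_pairs, correction_pairs, seed=0):
    """Merge (perturbed, clean) pairs from both sources into one shuffled list."""
    records = [
        {"input": src, "target": tgt, "source": name}
        for name, pairs in (("adversarial", adversarial_pairs),
                            ("correction", correction_pairs))
        for src, tgt in pairs
    ]
    random.Random(seed).shuffle(records)  # fixed seed keeps the mix reproducible
    return records


# Toy pairs (illustrative only): attack outputs paired with their originals,
# and learner sentences paired with annotator corrections.
adversarial_pairs = [("the film was awfull", "the film was awful")]
correction_pairs = [("She go to school", "She goes to school"),
                    ("I has a car", "I have a car")]

dataset = build_perturbation_dataset(adversarial_pairs, correction_pairs)
print(len(dataset), sorted({r["source"] for r in dataset}))
```

Keeping a `source` field is a convenience assumed here so that the proportion of adversarial and correction examples can be inspected after shuffling.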

3.4.2. Fine-Tuning of the Perturbation Correction Module

The perturbation correction module in this study is based on the ChatGLM3-6b large language model fine-tuned with low-rank adaptation (LoRA), designed to restore or replace adversarial samples with clean counterparts. The input consists of an adversarial sequence, , and a prompt, while the output is the corrected sequence . The ChatGLM model architecture comprises 28 GLM blocks. Unlike standard transformer modules, each GLM block contains a multi-query attention (MQA) layer and a gated linear unit (GLU), defined as follows:

where the following applies: Q, K, and V denote the query, key, and value matrices, respectively; i represents the current head number, and h represents the total number of attention heads; are learnable weight matrices; represents the output of the i-th attention head; and is the dimensionality of the key vectors. In MQA, each head maintains its own matrix, while all heads share the same and matrices. Despite sharing and , each head independently computes its attention distribution. Compared to multi-head attention, MQA reduces memory consumption and improves computational efficiency. denotes vector concatenation, and replaces the standard feed-forward network (FFN) layer with a gated linear unit. The parameters are optimized during training.
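To make the sharing pattern of multi-query attention concrete, the sketch below implements it for tiny matrices in plain Python. It is a minimal illustration under assumptions: the names `w_q_heads`, `w_k`, and `w_v` are chosen here for readability, the final output projection and the gated linear unit are omitted, and the numbers are toy values rather than ChatGLM parameters.

```python
import math


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [v / total for v in exps]


def multi_query_attention(x, w_q_heads, w_k, w_v):
    """x: n x d. One query projection per head; keys and values are shared."""
    k = matmul(x, w_k)                      # shared keys, n x d_k
    v = matmul(x, w_v)                      # shared values, n x d_k
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]    # transpose of the shared keys
    head_outputs, head_weights = [], []
    for w_q in w_q_heads:                   # each head has its own queries
        q = matmul(x, w_q)
        scores = matmul(q, k_t)
        weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
        head_weights.append(weights)
        head_outputs.append(matmul(weights, v))
    # Concatenate the heads along the feature axis.
    concat = [sum((head[i] for head in head_outputs), []) for i in range(len(x))]
    return concat, head_weights


identity = [[1.0, 0.0], [0.0, 1.0]]
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w_q_heads = [identity, [[0.5, 0.5], [-0.5, 0.5]]]
out, weights = multi_query_attention(x, w_q_heads, identity, identity)
print(len(out), len(out[0]))  # 3 tokens, 2 heads x 2 dims each
```

Because the key and value projections are computed once and reused by every head, only the query projections grow with the number of heads, which is where the memory saving relative to multi-head attention comes from.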

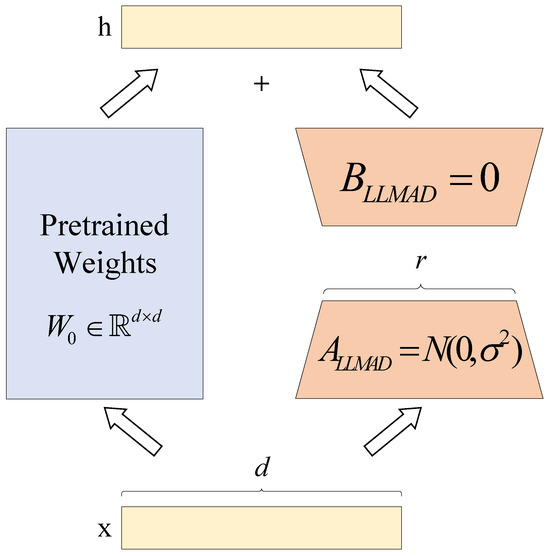

LLMs, pre-trained on massive datasets with substantial computational resources, have acquired extensive general features and patterns, making them foundational for diverse tasks. Building upon these pre-trained models, this study fine-tunes them using an adversarial example perturbation dataset to enhance their performance on downstream specific tasks and meet required benchmarks. Compared to the prohibitive computational costs of full-parameter fine-tuning, LoRA offers a more efficient alternative. LoRA indirectly trains specific dense layers by optimizing rank-decomposition matrices that approximate changes in dense layers during adaptation, while keeping pre-trained weights frozen. This approach significantly improves training efficiency and lowers hardware requirements. The architecture of the perturbation correction module in the LLMAD method is illustrated in Figure 4.

Figure 4.

LoRA in the perturbation correction module. This figure illustrates the low-rank adaptation (LoRA) architecture in the perturbation correction module, including the frozen pre-trained parameter $W_0$, input $x$, output $h$, zero-initialized matrix $B$, and randomly initialized matrix $A$, with data-flow arrows and color coding (blue for static parameters and orange for trainable parameters) clarifying parameter interactions.

During the training of the perturbation correction module, for a pre-trained weight matrix, $W_0 \in \mathbb{R}^{d \times k}$, we approximate the weight update via low-rank decomposition using matrices $B \in \mathbb{R}^{d \times r}$ and $A \in \mathbb{R}^{r \times k}$, with rank $r \ll \min(d, k)$:

$$W_0 + \Delta W = W_0 + BA \tag{15}$$

During training, $W_0$ remains frozen and receives no gradient updates, while $A$ and $B$ contain trainable parameters. For $h = W_0 x$, the modified forward propagation result is given via Equation (16):

$$h = W_0 x + \Delta W x = W_0 x + BAx \tag{16}$$

During training, $A$ is random Gaussian-initialized, and $B$ is zero-initialized, ensuring that $\Delta W = BA$ is initially zero. Subsequently, $\Delta W x$ is scaled by $\alpha / r$, yielding the final output as Equation (17):

$$h = W_0 x + \frac{\alpha}{r} BAx \tag{17}$$

Inference process of the perturbation correction module: By integrating LoRA, the low-rank matrices are merged with the pre-trained weights. This reduces the number of fine-tuned parameters from $d \times k$ to $r \times (d + k)$ while preserving the output dimensionality. The modified model then performs standard forward inference, achieving efficient parameter adaptation without compromising the high performance of the pre-trained model.
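The low-rank update can be illustrated with a small plain-Python sketch. It assumes the standard LoRA form, in which a frozen weight matrix is combined with a scaled product of a zero-initialized matrix `b` and a randomly initialized matrix `a`; the helper names, the toy dimensions, and the 4096 x 4096 layer size in the parameter count are illustrative assumptions, not values reported in this paper.

```python
def matvec(m, x):
    return [sum(a * b for a, b in zip(row, x)) for row in m]


def lora_forward(w0, a, b, x, alpha):
    """Frozen path W0 x plus the scaled low-rank path (alpha / r) * B (A x)."""
    r = len(a)                          # A is r x k, B is d x r
    base = matvec(w0, x)                # pre-trained weights, never updated
    update = matvec(b, matvec(a, x))    # low-rank correction
    scale = alpha / r
    return [h + scale * u for h, u in zip(base, update)]


def parameter_counts(d, k, r):
    """(full fine-tuning parameters, LoRA parameters) for one d x k layer."""
    return d * k, r * (d + k)


w0 = [[1.0, 2.0], [0.0, 1.0], [3.0, -1.0]]   # d = 3, k = 2
a = [[0.3, -0.7]]                            # r = 1, random-style init
b_zero = [[0.0], [0.0], [0.0]]               # zero init: no change at step 0
b_trained = [[1.0], [0.0], [-1.0]]           # pretend values after training
x = [1.0, 1.0]

print(lora_forward(w0, a, b_zero, x, alpha=2))     # equals W0 x
print(lora_forward(w0, a, b_trained, x, alpha=2))  # shifted by the update
print(parameter_counts(4096, 4096, 8))
```

With the zero-initialized matrix, the adapted layer reproduces the pre-trained output exactly, so fine-tuning starts from the original model's behavior and departs from it only as the low-rank matrices are trained.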

When applying the perturbation correction module, adversarial samples are input into the prompt template of the perturbation correction module to construct prompts for the fine-tuned large language model. In Table 1, adversarial sample denotes the adversarial input, while correct sample represents the corrected output.

Table 1.

Prompt template for perturbation correction module.

In the perturbation correction module, the prompt template needs to constrain the model’s modification range and minimize changes to sentence length, guiding the model to accomplish adversarial example defense tasks with minimal cost, which aligns with real-world perturbation correction scenarios. To this end, this paper uses the constraint statements “minimal modifications” and “try not to change the sentence length” to construct the prompt templates.
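A prompt of this kind might be assembled as in the sketch below. Only the two constraint phrases are taken from the text; the surrounding wording of `PROMPT_TEMPLATE`, the helper names, and the word-count check are assumptions for illustration and should not be read as the template given in Table 1.

```python
# Hypothetical template: only the two quoted constraints come from the paper.
PROMPT_TEMPLATE = (
    "The following sentence may contain adversarial perturbations. "
    "Correct it with minimal modifications and try not to change the "
    "sentence length.\n"
    "Adversarial sample: {adversarial_sample}\n"
    "Correct sample:"
)


def build_prompt(adversarial_sample: str) -> str:
    """Fill the adversarial input into the template."""
    return PROMPT_TEMPLATE.format(adversarial_sample=adversarial_sample.strip())


def length_change(original: str, corrected: str) -> int:
    """Absolute difference in whitespace-separated token counts."""
    return abs(len(corrected.split()) - len(original.split()))


prompt = build_prompt("  the plot was suttle and moving ")
print(prompt)
print(length_change("the plot was suttle and moving",
                    "the plot was subtle and moving"))
```

A simple check such as `length_change` could be used after generation to verify that a correction respected the length constraint, although the paper does not describe such a post-check.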

4. Experiments

4.1. Dataset and Metrics

The datasets used in the study are as follows: the movie review dataset IMDB [24], the news classification dataset AG’s News [25], and the social Q&A dataset Yahoo!Answers [25]. Detailed information about the datasets is shown in Table 2.

Table 2.

Experimental datasets. IMDB for sentiment analysis (positive/negative movie reviews), AG’s News for news topic classification (world/sports/business/sci-tech news topics), and Yahoo!Answers for multi-domain Q&A categorization (health/education/travel, etc.). IMDB uses an official balanced split (50% positive/negative); AG’s News follows a temporal split (pre-2012 for training and 2014 for testing); Yahoo!Answers adopts a random split (5% held out for testing).

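To assess the performance of perturbation detection, three metrics are employed: precision, recall, and F1-score, as defined in Equations (18)–(20):

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{18}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{19}$$

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{20}$$

where $TP$ denotes correctly predicted positive samples; $TN$ denotes correctly predicted negative samples; $FP$ denotes incorrectly predicted positive samples; and $FN$ denotes incorrectly predicted negative samples.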

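The effectiveness of adversarial defense methods is measured using the classification accuracy (accuracy) of the model under attack. A higher accuracy after applying defense methods indicates stronger defensive capabilities. The accuracy formula is given in Equation (21), where $TP$, $TN$, $FP$, and $FN$ are the counts of true positives, true negatives, false positives, and false negatives:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{21}$$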
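The four metrics can be computed directly from confusion-matrix counts, as in the sketch below. The function name `detection_metrics` and the example counts are illustrative and are not taken from the reported experiments.

```python
def detection_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Precision, recall, F1-score, and accuracy from confusion-matrix counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    return {"precision": precision, "recall": recall,
            "f1": f1, "accuracy": accuracy}


# Made-up counts: 80 adversarial samples caught, 20 missed,
# 10 clean samples wrongly flagged, 90 clean samples passed through.
print(detection_metrics(tp=80, tn=90, fp=10, fn=20))
```

The guards against empty denominators are a convenience added here so that the function returns 0.0 rather than raising an error when a class is absent.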

4.2. Baselines

For comparison, we chose the following models as the target models and adopted the following methods as baselines.

The target models are CNN [26], LSTM [27], and BERT [28], classic models that are widely used in adversarial defense studies:

- CNN: CNN uses multi-scale convolutional kernels, embedding layers, varied convolutions, max-pooling, fully-connected layers, and softmax for text feature extraction and classification.

- LSTM: LSTM network introduces a gated mechanism, employing forget gates, input gates, and output gates to dynamically control the retention, updating, and transmission of sequential state information.

- BERT: BERT, a bidirectional transformer model, uses self-attention and masked language tasks to learn global context. Fine-tuning adapts it to downstream tasks with improved transfer.

Adversarial attack methods are shown below:

- PWWS [29]: PWWS creates text adversarial examples by replacing words with synonyms weighted by word saliency and classification probability change.

- TextFooler [30]: TextFooler generates adversarial text by replacing important words with semantically similar synonyms to alter model predictions while preserving semantic meaning.

- DeepWordBug [31]: DeepWordBug generates adversarial text sequences by identifying critical tokens and applying small character-level transformations to evade deep learning classifiers.

Adversarial defense baselines are shown below:

- FGWS [14]: FGWS detects adversarial examples by replacing infrequent words with semantically similar, more frequent ones based on a frequency analysis of adversarial substitutions.

- WDR [32]: WDR detects adversarial text examples by analyzing logits variation to identify suspicious word-level changes in classifier reactions.

- SEM [33]: SEM defends against synonym substitution attacks by encoding synonyms to unique tokens before the input layer.

4.3. Experiment Setting

The runtime environment is Ubuntu OS with 4 NVIDIA Tesla T4 GPUs and 128 GB of memory. The deep learning framework uses Python 3.12.4 and PyTorch 2.4.1. The key model fine-tuning parameters are as follows: a learning rate of 5 × 10⁻⁵, 3 training epochs, a maximum of 100,000 samples, a batch size of 8, gradient accumulation steps of 8, a LoRA rank of 8, a LoRA scaling factor of 16, and other hyperparameters set to default values.
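For reference, the fine-tuning settings listed above can be collected in a small configuration object. The class `FineTuneConfig` and its field names are hypothetical; the two derived quantities assume that the batch size is per optimizer process and that the LoRA scaling factor is divided by the rank, which the text does not state explicitly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTuneConfig:
    # Values as listed in the experiment settings; field names are illustrative.
    learning_rate: float = 5e-5
    num_epochs: int = 3
    max_samples: int = 100_000
    batch_size: int = 8
    gradient_accumulation_steps: int = 8
    lora_rank: int = 8
    lora_alpha: int = 16

    @property
    def samples_per_update(self) -> int:
        """Samples contributing to one optimizer step (per process, assumed)."""
        return self.batch_size * self.gradient_accumulation_steps

    @property
    def lora_scaling(self) -> float:
        """Multiplier on the low-rank update, assuming the alpha / r convention."""
        return self.lora_alpha / self.lora_rank


config = FineTuneConfig()
print(config.samples_per_update, config.lora_scaling)
```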

FGWS and WDR algorithms are text-adversarial detection methods, and the minimum confidence threshold for detecting adversarial samples is set to 0.9, with a true positive rate parameter . For the SEM algorithm, which is a synonym-encoding method, the synonym set for each word is defined as the k nearest words within the Euclidean distance in the embedding space. Here, , and .
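The synonym-set definition used for SEM can be sketched as a nearest-neighbor lookup with a distance cutoff. The function name `synonym_set`, the parameter names `k` and `delta`, and the two-dimensional toy embeddings are assumptions for illustration; the values below are not the settings used in the experiments.

```python
import math


def synonym_set(word, embeddings, k, delta):
    """Up to k nearest words whose Euclidean distance to `word` is below delta."""
    target = embeddings[word]
    distances = sorted(
        (math.dist(target, vec), other)
        for other, vec in embeddings.items()
        if other != word
    )
    return [other for dist, other in distances[:k] if dist < delta]


# Toy 2-D embeddings (illustrative values only).
embeddings = {
    "good": (1.0, 0.0),
    "great": (0.9, 0.1),
    "fine": (0.8, 0.3),
    "bad": (-1.0, 0.0),
}

print(synonym_set("good", embeddings, k=2, delta=0.5))
print(synonym_set("good", embeddings, k=2, delta=0.2))
```

Tightening the distance cutoff shrinks the synonym set even when more neighbors are allowed, which shows how the two parameters interact: one caps the number of candidates and the other caps how far they may lie from the original word.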

4.4. Main Results

To validate the performance of the proposed LLMAD method in adversarial defense, this section compares it with three baseline methods by evaluating the classification accuracy of the CNN, LSTM, and BERT models under different adversarial attacks. A stronger defense method is indicated by higher classification accuracy and enhanced robustness across various adversarial attacks. Key results are summarized in Table 3, Table 4 and Table 5, where “None” indicates that the model was not subjected to any attacks or defenses.

Table 3.

Defense effectiveness of LLMAD and baseline defense methods on the IMDB dataset. We show the accuracy, with the best performance metrics highlighted in bold for all methods except the none-defense baseline.

Table 4.

Defense effectiveness of LLMAD and baseline defense methods on AG’s News dataset. We show the accuracy, with the best performance metrics highlighted in bold for all methods except the none-defense baseline.

Table 5.

Defense effectiveness of LLMAD and baseline defense methods on the Yahoo!Answers dataset. We show the accuracy, with the best performance metrics highlighted in bold for all methods except the none-defense baseline.

As shown in Table 3, on the IMDB dataset, LLMAD improves the classification accuracy by 6.2–39.6% for the CNN model, 2.8–30.1% for the LSTM model, and 11.8–43.3% for the BERT model. In Table 4, on the AG’s News dataset, LLMAD enhances classification accuracy by 5.3–24.8% for the CNN model, 8.0–29.4% for the LSTM model, and 4.3–26.2% for the BERT model. Table 5 demonstrates that, on the Yahoo!Answers dataset, LLMAD achieves accuracy improvements of 3.2–28.5% for the CNN model, 7.8–34.4% for the LSTM model, and 4.3–34.1% for the BERT model. Under various attack scenarios, the classification accuracy of the models improved by an average of 66.8% compared to the undefended state and by an average of 13.4% compared to baseline methods. LLMAD achieved the best defense performance across all three datasets, demonstrating a significant advantage over baseline defense methods.

Additionally, in the absence of attacks, applying the LLMAD defense caused the smallest decline in classification accuracy on the IMDB dataset, while performance on the other two datasets remained close to the original sample classification accuracy, with a decline of no more than 3.3%. Here, the original sample classification accuracy is the accuracy under no attack and no defense, corresponding to the value at the intersection of the “None” row and “None” column in the tables. Furthermore, compared to the three baseline defense methods, LLMAD achieved the classification accuracy closest to the original sample classification accuracy on the IMDB dataset. On the other two datasets, the classification accuracy of LLMAD was slightly lower than that of the WDR and SEM methods, but the difference did not exceed 2.7%. These results confirm that LLMAD minimally impacts the correct classification of clean, non-attacked samples while providing robust adversarial defense.

4.5. Perturbation Detection Performance

To validate the perturbation detection performance of the proposed LLMAD method, this section compares it with two baseline methods, FGWS and WDR, by evaluating the recall and F1-scores of CNN, LSTM, and BERT models under diverse adversarial attacks. SEM, which employs synonym encoding, is excluded from perturbation detection comparisons. As shown in Table 6, across 27 experimental groups involving different adversarial attacks, LLMAD achieves stable and superior detection performance. On the IMDB dataset, the LLMAD method achieved an average F1-score improvement of 18% over the FGWS method and 2.9% over the WDR method; on the AG’s News dataset, it improved the average F1-score by 9.5% compared to FGWS and 3% compared to WDR; on the Yahoo!Answers dataset, LLMAD outperformed FGWS by an average F1-score margin of 15.1% and WDR by 2.5%. The experimental results demonstrate that the LLMAD method attained higher recall rates and F1-scores in most cases, consistently surpassing baseline methods and exhibiting comprehensive detection performance advantages.

Table 6.

Perturbation detection performance on three datasets. We show the Rec (recall) and F1 (F1-scores), with the best performance metrics highlighted in bold.
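For readers who want to reproduce the two metrics reported in Table 6, the following is a minimal sketch of how recall and F1 can be computed for a binary detector. It assumes that label 1 means "adversarial" and label 0 means "clean"; the function name `recall_and_f1` and the toy labels are illustrative only and are not taken from the paper.

```python
def recall_and_f1(y_true, y_pred):
    """Recall and F1 for the positive class (1 = adversarial, 0 = clean)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # detected adversarial samples
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # clean samples flagged by mistake
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed adversarial samples
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return recall, f1


# Toy labels for illustration only (not data from the paper).
y_true = [1, 1, 1, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 1]
recall, f1 = recall_and_f1(y_true, y_pred)
```

Recall measures how many adversarial samples the detector catches, while F1 also penalizes clean samples that are wrongly flagged, which is why both are reported together.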

4.6. Semantic Similarity Performance

To validate that the text samples defended with LLMAD are semantically closer to the original samples, this section presents comparative experiments with two baseline methods (FGWS and WDR) on the IMDB dataset. We measure the semantic similarity between the original texts and the defended texts across CNN, LSTM, and BERT models under different attacks using the Universal Sentence Encoder (USE). As shown in Table 7, our proposed LLMAD method consistently achieves higher semantic similarity scores than the baseline methods, demonstrating that samples processed via LLMAD preserve the original semantic content better than those modified by existing defense approaches.

Table 7.

Semantic similarity performance on the IMDB dataset. We approximate the semantic similarity between the defended sample and the original sample by the cosine similarity of their USE embeddings, with the best performance metrics highlighted in bold.
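The similarity score itself is a plain cosine between two embedding vectors. The sketch below shows only that final step and assumes the two sentence embeddings have already been obtained from USE; the short vectors are placeholders chosen for illustration, and `cosine_similarity` is a hypothetical helper name.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length embedding vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


# Placeholder vectors standing in for USE embeddings of the original
# and the defended text (real sentence embeddings are much longer).
original_vec = [0.2, 0.1, 0.7]
defended_vec = [0.4, 0.2, 1.4]
score = cosine_similarity(original_vec, defended_vec)
```

A score close to 1 means the defended text points in nearly the same direction as the original in embedding space, which is the sense in which Table 7 reports semantic preservation.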

4.7. Ablation Study

To validate the impact of different modules in the LLMAD method on defense performance, ablation experiments were conducted on the IMDB dataset using CNN, LSTM, and BERT models by removing either the perturbation detection module or the perturbation correction module during adversarial defense tasks. The experimental groups included ODDMAD (adversarial defense with only disturbance detection module), which retains the detection module and employs BERT to generate replacement words for defense, and ODCMAD (adversarial defense with only disturbance correction module), which retains only the correction module. The results of the ablation experiments in this paper are shown in Table 8.

Table 8.

Ablation results on the IMDB dataset. We show the accuracy, with the best performance metrics highlighted in bold for all methods.

As shown in Table 8, compared to the full LLMAD framework, models defended with ODDMAD exhibit average accuracy reductions of 5.3–7.2% under various attacks, while those defended with ODCMAD show average reductions of 2.5–2.9%. These results indicate that using either module alone degrades defense effectiveness relative to LLMAD. Combining the perturbation detection module with the perturbation correction module therefore improves performance, and both modules are needed for robust adversarial defense.

5. Discussion

5.1. Future Research Directions

The proposed LLMAD method demonstrates significant potential in text adversarial defense tasks, yet several directions remain open for further optimization and extension. On the technical side, future work could investigate how alternative large language models differ in adversarial defense performance, and in particular whether retrieval-augmented architectures can reduce computational costs while preserving high correction accuracy. Cross-lingual generalization is another critical direction, for instance adapting the framework to languages whose scripts or morphology differ markedly from English (e.g., Chinese polyphonic characters or Arabic root-and-pattern morphology). This would require constructing multilingual adversarial perturbation datasets and designing language-adaptive detection modules.

At the data and experimental design level, it is advisable to build larger-scale adversarial example perturbation datasets that incorporate diverse attack types (e.g., text style transfer, syntax perturbations with semantic preservation) and explore an “adversarial co-training” mechanism to jointly optimize detection and correction modules, enabling iterative robustness enhancement in dynamic adversarial environments. Systematic experiments should also be designed to compare the impact of fine-tuning strategies (e.g., LoRA adapters, prompt engineering, and full-parameter fine-tuning) on defense performance, particularly under resource-constrained or few-shot scenarios. Future work ought to incorporate domain-specific knowledge (e.g., medical or legal texts) to establish vertical domain-specific defense benchmarks, thereby driving the transition from generic defense frameworks to task-specific paradigms. This progression would address domain-tailored adversarial threats while maintaining cross-task generalizability.

5.2. Practical Application

In practical applications, LLMAD can be integrated into online review analysis systems to provide defense against adversarial attacks.

Typical application scenarios encompass sentiment analysis of user reviews on e-commerce platforms and public opinion monitoring on social media platforms. The workflow of such systems generally consists of four sequential stages: data input, preprocessing, sentiment analysis, and result output. Within this framework, the perturbation detection module can identify adversarial perturbations in input texts during the preprocessing phase. Subsequently, the perturbation correction module transforms detected adversarial examples into semantically consistent clean text prior to sentiment analysis. By strategically positioning LLMAD at these two critical junctures, the collaborative mechanism between perturbation detection and correction effectively mitigates adversarial attacks while maintaining textual integrity.
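The placement of the two modules in such a workflow can be sketched as follows. This is a toy illustration under stated assumptions, not the LLMAD implementation: `toy_detect`, `toy_correct`, and `toy_classify` are hypothetical stand-ins for the detection model, the fine-tuned large language model, and the sentiment classifier, and the lookup table simply reuses the misspellings from the case study.

```python
# Hypothetical lookup standing in for both LLMAD modules; the word pairs
# are the misspellings reported in the paper's case study.
TOY_FIXES = {"trOied": "tried", "mvoie": "movie",
             "axfully": "awfully", "solppy": "sloppy"}


def toy_detect(text):
    """Stand-in detector: flags text containing a known perturbed token."""
    return any(tok in TOY_FIXES for tok in text.split())


def toy_correct(text):
    """Stand-in corrector: replaces known perturbed tokens."""
    return " ".join(TOY_FIXES.get(tok, tok) for tok in text.split())


def toy_classify(text):
    """Stand-in sentiment model: keyword rule, for illustration only."""
    negative_cues = {"awfully", "sloppy"}
    return "negative" if negative_cues & set(text.split()) else "positive"


def defended_sentiment(text, detect, correct, classify):
    """Preprocessing-stage detection, conditional correction, then analysis."""
    if detect(text):          # only flagged inputs pay the correction cost
        text = correct(text)
    return classify(text)


review = "the mvoie was axfully solppy"
undefended = toy_classify(review)
defended = defended_sentiment(review, toy_detect, toy_correct, toy_classify)
```

Passing the three stages in as callables keeps the sketch independent of any particular model, and routing only flagged inputs to the corrector mirrors the idea that clean text should reach the classifier unchanged.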

5.3. Statistical Significance Test

To evaluate the statistical significance of performance improvements on the IMDB dataset, we conducted independent two-sample t-tests comparing LLMAD against both FGWS and WDR baselines across 20 independent experimental runs, with statistical significance determined at α = 0.05 and adjusted for multiple comparisons using Bonferroni correction. Given the extensive scope covering four attack types and two baseline comparisons per model (totaling 24 test cases), we present only the most critical results, focusing on the most significant comparisons per model or attack method. The results of the statistical significance test in this paper are shown in Table 9.

Table 9.

Independent two-sample t-tests for the LLMAD method on the IMDB dataset, with Bonferroni correction applied for multiple comparisons. The null hypothesis was rejected only when the p-value fell below the Bonferroni-adjusted significance level, i.e., when p < 0.0083 (α = 0.0083).

The t-test results indicate that LLMAD’s accuracy gains are unlikely to be attributable to random variation: LLMAD is statistically significantly better than the baselines across all attack scenarios (all p-values < 0.0083 after Bonferroni correction). This statistical evidence supports our hypothesis that LLMAD enhances adversarial defense capability.
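The testing procedure can be illustrated with a small, dependency-free sketch. Several points are assumptions rather than details confirmed by the paper: the pooled-variance (Student) form of the two-sample t-test is used, the adjusted level 0.0083 is read as 0.05 divided by six comparisons, and the accuracy lists are synthetic numbers invented for illustration. The p-value is obtained by numerically integrating the t density, so it is an approximation.

```python
import math
from statistics import mean, variance


def bonferroni_alpha(alpha, n_comparisons):
    """Per-test significance level under Bonferroni correction."""
    return alpha / n_comparisons


def pooled_t_statistic(a, b):
    """Student's two-sample t statistic (pooled variance) and its degrees of freedom."""
    na, nb = len(a), len(b)
    df = na + nb - 2
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / math.sqrt(pooled_var * (1 / na + 1 / nb))
    return t, df


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def two_sided_p_value(t, df, steps=20000):
    """Two-sided p-value via Simpson integration of the density over [0, |t|]."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    return max(0.0, 1.0 - 2.0 * area)


# Synthetic accuracies, NOT results from the paper (5 runs each for brevity).
method_a = [0.82, 0.81, 0.83, 0.82, 0.80]
method_b = [0.74, 0.76, 0.75, 0.73, 0.75]

t, df = pooled_t_statistic(method_a, method_b)
p = two_sided_p_value(t, df)
alpha_adj = bonferroni_alpha(0.05, 6)  # assumed six comparisons
significant = p < alpha_adj
```

In practice a statistics library would normally be used for the p-value; the hand-rolled integration here only keeps the example self-contained.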

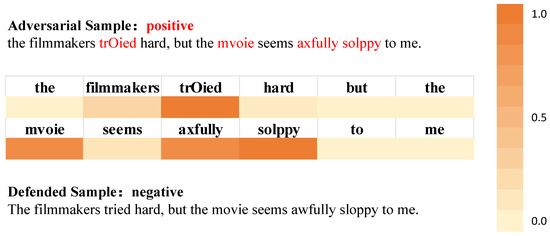

5.4. Case Study

To examine the practical defensive efficacy of LLMAD, we conducted a case analysis of a specific adversarial instance. By comparing the adversarial sample with its post-defense counterpart, the case study illustrates how the perturbation detection and correction mechanisms operate and shows that LLMAD preserves semantic integrity and rectifies the classification outcome.

As illustrated in Figure 5, adversarial perturbations in the sample (e.g., “trOied”, “mvoie”, “axfully”, and “solppy”) are detected as adversarial modifications. The sample is consequently identified as adversarial and forwarded to the large language model for perturbation correction. Post-defense, these perturbations are successfully eliminated and corrected to “tried”, “movie”, “awfully”, and “sloppy”, respectively. The specific insights we can obtain from the case study are as follows:

- Adversarial perturbation detection: the heatmap shows markedly elevated perturbation probabilities (darker hues) for tampered words (e.g., “trOied”), indicating that the detection module localizes adversarial modifications precisely.

- Linguistic error correction: the defense module not only corrects adversarial perturbations (e.g., “mvoie”→“movie”) but also fixes grammatical errors already present in the original sample (e.g., capitalizing “The”).

- Classification outcome rectification: the post-defense sample’s classification shifts from “positive” to “negative”, indicating that perturbation correction restores the model’s reading of the sample’s true semantics.

Figure 5.

Probability heatmap visualization. The perturbation detection module generates a probability heatmap in which color intensity encodes the predicted adversarial perturbation probability (0–1), with darker hues indicating higher probabilities. Tampered tokens in the adversarial sample (e.g., “trOied”, “mvoie”) exhibit high perturbation probabilities (dark hues).
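One way to read such a heatmap programmatically is to threshold the per-token probabilities. The sketch below is an assumption-laden illustration: the 0.5 threshold, the rule that a single flagged token marks the whole sample as adversarial, and the probability values are all hypothetical and are not taken from the paper or from Figure 5.

```python
def flag_tokens(tokens, probs, threshold=0.5):
    """Return the tokens whose perturbation probability reaches the threshold."""
    if len(tokens) != len(probs):
        raise ValueError("tokens and probs must have the same length")
    return [tok for tok, p in zip(tokens, probs) if p >= threshold]


def is_adversarial(probs, threshold=0.5):
    """Assumed sample-level rule: adversarial if any token is flagged."""
    return any(p >= threshold for p in probs)


# Hypothetical per-token probabilities (illustrative values only).
tokens = ["I", "trOied", "the", "mvoie"]
probs = [0.03, 0.94, 0.02, 0.91]

flagged = flag_tokens(tokens, probs)
forward_to_correction = is_adversarial(probs)
```

Under these assumptions, only samples with at least one flagged token would be forwarded to the large language model for correction.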

6. Conclusions

This paper has proposed LLMAD, an adversarial example defense method based on large language models that detects and corrects textual perturbations. The method comprises two core modules: a perturbation detection module that identifies potential perturbations in input text and assesses whether they are adversarial, and a perturbation correction module that replaces the perturbations in adversarial samples to accomplish adversarial defense. The experimental results demonstrate that LLMAD achieves 81.5% average accuracy on the IMDB dataset, 56.8% on AG’s News, and 68.9% on Yahoo!Answers, significantly outperforming baseline defense methods. The perturbation detection module improves F1-scores by 0.3% to 26.7% compared to existing approaches. Ablation studies reveal that LLMAD enhances accuracy by 2.5% to 7.2% over single-module variants. These findings validate the method’s capability of enhancing model robustness against diverse adversarial attacks while ensuring the accuracy and reliability of results. For future work, we plan to integrate adversarial defense with a wider range of large models to develop more efficient and accurate text adversarial defense methods.

Author Contributions

Conceptualization, C.W. and Y.H.; methodology, C.W.; software, C.W.; validation, C.W.; formal analysis, C.W.; investigation, C.W.; resources, C.W. and L.C.; data curation, C.W.; writing—original draft preparation, C.W.; writing—review and editing, L.C. and Y.H.; visualization, C.W.; supervision, L.C.; project administration, C.W.; funding acquisition, C.W. and L.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Industry-Academia Collaborative Education Program, Department of Higher Education, Ministry of Education of the People’s Republic of China, first batch 2024 (231100007141233).

Data Availability Statement

The IMDB dataset is available from the following website: https://huggingface.co/datasets/stanfordnlp/imdb (accessed on 30 November 2024). The AG’s News dataset is available from the following website: https://huggingface.co/datasets/fancyzhx/ag_news (accessed on 15 December 2024). The Yahoo!Answers dataset is available from the following website: https://huggingface.co/datasets/community-datasets/yahoo_answers_topics (accessed on 15 December 2024).

Conflicts of Interest

The authors C.W., L.C. and Y.H. are affiliated with Beijing Information Science and Technology University. This work was funded by Beijing Information Science and Technology University and C.W. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Full name |
| --- | --- |
| LLMAD | Large Language Model Adversarial Defense |
| LLMs | Large Language Models |
| MDLM | Multi-level Disturbance Localization Method |
| DISP | DIScriminate Perturbations |
| FGWS | Frequency-Guided Word Substitutions |
| SCRN | Siamese Calibrated Reconstruction Network |
| MQA | Multi-Query Attention |
| GLU | Gated Linear Unit |
| FFN | Feed-forward Network |
| ODDMAD | Adversarial Defense with Only Disturbance Detection Module |
| ODCMAD | Adversarial Defense with Only Disturbance Correction Module |

References

1. Joshi, A.; Dabre, R.; Kanojia, D.; Li, Z.; Zhan, H.; Haffari, G.; Dippold, D. Natural Language Processing for Dialects of a Language: A Survey. ACM Comput. Surv. 2025, 57, 1–37.

2. Noor, M.H.M.; Ige, A.O. A Survey on State-of-the-art Deep Learning Applications and Challenges. arXiv 2024, arXiv:2403.17561.

3. Xiao, Q.; Li, K.; Zhang, D.; Xu, W. Security Risks in Deep Learning Implementations. In Proceedings of the 2018 IEEE Security and Privacy Workshops (SPW), San Francisco, CA, USA, 24 May 2018; pp. 123–128.

4. Behjati, M.; Moosavi-Dezfooli, S.M.; Baghshah, M.S.; Frossard, P. Universal Adversarial Attacks on Text Classifiers. In Proceedings of the 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 7345–7349.

5. Zhou, L.; Cui, P.; Zhang, X.; Jiang, Y.; Yang, S. Adversarial Eigen Attack on Black-Box Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 15254–15262.

6. Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and Harnessing Adversarial Examples. arXiv 2014, arXiv:1412.6572.

7. Liu, H.; Zhao, B.; Guo, J.B.; Peng, Y.F. A Survey of Adversarial Attacks Against Deep Learning. J. Cryptol. 2021, 8, 202–214.

8. Zhao, H.; Chang, Y.K.; Wang, W.J. Adversarial Attacks and Defense Methods for Deep Neural Networks: A Survey. Comput. Sci. 2022, 49, 662–672.

9. Wu, H.; Liu, Y.; Shi, H.; Zhao, H.; Zhang, M. Toward Adversarial Training on Contextualized Language Representation. arXiv 2023, arXiv:2305.04557.

10. Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing Properties of Neural Networks. arXiv 2013, arXiv:1312.6199.

11. Papernot, N.; McDaniel, P.; Swami, A.; Harang, R. Crafting Adversarial Input Sequences for Recurrent Neural Networks. In Proceedings of the 2016 IEEE Military Communications Conference, Baltimore, MD, USA, 1–3 November 2016; pp. 49–54.

12. Hou, Y.; Che, L.; Li, H. A Multi-level Perturbation Localization Method for Generating Chinese Textual Adversarial Examples. Comput. Eng. 2024, 1–11.

13. Zhou, Y.; Jiang, J.Y.; Chang, K.W.; Wang, W. Learning to Discriminate Perturbations for Blocking Adversarial Attacks in Text Classification. arXiv 2019, arXiv:1909.03084.

14. Mozes, M.; Stenetorp, P.; Kleinberg, B.; Griffin, L.D. Frequency-Guided Word Substitutions for Detecting Textual Adversarial Examples. arXiv 2020, arXiv:2004.05887.

15. Huang, G.; Zhang, Y.; Li, Z.; You, Y.; Wang, M.; Yang, Z. Are AI-Generated Text Detectors Robust to Adversarial Perturbations? arXiv 2024, arXiv:2406.01179.

16. Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models Are Few-Shot Learners. In Proceedings of the Advances in Neural Information Processing Systems, Online, 6–12 December 2020; pp. 1877–1901.

17. Wei, J.; Tay, Y.; Bommasani, R.; Raffel, C.; Zoph, B.; Borgeaud, S.; Yogatama, D.; Bosma, M.; Zhou, D.; Metzler, D.; et al. Emergent Abilities of Large Language Models. arXiv 2022, arXiv:2206.07682.

18. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems 30, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

19. Xu, X.; Kong, K.; Liu, N.; Cui, L.; Wang, D.; Zhang, J.; Kankanhalli, M. An LLM Can Fool Itself: A Prompt-Based Adversarial Attack. arXiv 2023, arXiv:2310.13345.

20. Li, G.; Shi, B.; Liu, Z.; Kong, D.; Wu, Y.; Zhang, X.; Huang, L.; Lyu, H. Adversarial Text Generation by Search and Learning. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2023, Singapore, 6–10 December 2023; pp. 15722–15738.

21. Yu, Z.; Wang, X.; Che, W.; He, K. TextHacker: Learning-Based Hybrid Local Search Algorithm for Text Hard-Label Adversarial Attack. arXiv 2022, arXiv:2201.08193.

22. Guo, C.; Sablayrolles, A.; Jégou, H.; Kiela, D. Gradient-Based Adversarial Attacks Against Text Transformers. arXiv 2021, arXiv:2104.13733.

23. Bryant, C.; Yuan, Z.; Qorib, M.R.; Cao, H.; Ng, H.T.; Briscoe, T. Grammatical Error Correction: A Survey of the State of the Art. Comput. Linguist. 2023, 49, 643–701.

24. Maas, A.; Daly, R.E.; Pham, P.T.; Huang, D.; Ng, A.Y.; Potts, C. Learning word vectors for sentiment analysis. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, Portland, OR, USA, 19–24 June 2011; pp. 142–150.

25. Zhang, X.; Zhao, J.; LeCun, Y. Character-level convolutional networks for text classification. In Proceedings of the 28th Annual Conference on Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 649–657.

26. Zhang, Y.; Wallace, B. A sensitivity analysis of (and practitioners’ guide to) convolutional neural networks for sentence classification. arXiv 2015, arXiv:1510.0382.

27. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

28. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Volume 1, pp. 4171–4186.

29. Ren, S.; Deng, Y.; He, K.; Che, W. Generating natural language adversarial examples through probability weighted word saliency. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 1085–1097.

30. Jin, D.; Jin, Z.; Zhou, J.T.; Szolovits, P. Is BERT Really Robust? A Strong Baseline for Natural Language Attack on Text Classification and Entailment. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 8018–8025.

31. Gao, J.; Lanchantin, J.; Soffa, M.L.; Qi, Y. Black-box Generation of Adversarial Text Sequences to Evade Deep Learning Classifiers. In Proceedings of the 2018 IEEE Security and Privacy Workshops (SPW), San Francisco, CA, USA, 24 May 2018; pp. 50–56.

32. Mosca, E.; Agarwal, S.; Rando, J.; Groh, G. “That Is a Suspicious Reaction!”: Interpreting logits variation to detect NLP adversarial attacks. arXiv 2022, arXiv:2204.04636.

33. Wang, X.; Hao, J.; Yang, Y.; He, K. Natural language adversarial defense through synonym encoding. In Proceedings of the 37th Conference on Uncertainty in Artificial Intelligence, Online, 27–30 July 2021; pp. 823–833.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).