Abstract

Multimodal medical image fusion plays a critical role in enhancing diagnostic accuracy by integrating complementary information from different imaging modalities. However, existing methods often suffer from issues such as unbalanced feature fusion, structural blurring, loss of fine details, and limited global semantic modeling, particularly in low signal-to-noise modalities like PET. To address these challenges, we propose PPMF-Net, a novel progressive and parallel deep learning framework for PET–MRI image fusion. The network employs a hierarchical multi-path architecture to capture local details, global semantics, and high-frequency information in a coordinated manner. Specifically, it integrates three key modules: (1) a Dynamic Edge-Enhanced Module (DEEM) utilizing inverted residual blocks and channel attention to sharpen edge and texture features, (2) a Nonlinear Interactive Feature Extraction module (NIFE) that combines convolutional operations with element-wise multiplication to enable cross-modal feature coupling, and (3) a Transformer-Enhanced Global Modeling module (TEGM) with hybrid local–global attention to improve long-range dependency and structural consistency. A multi-objective unsupervised loss function is designed to jointly optimize structural fidelity, functional complementarity, and detail clarity. Experimental results on the Harvard MIF dataset demonstrate that PPMF-Net outperforms state-of-the-art methods across multiple metrics—achieving SF: 38.27, SD: 96.55, SCD: 1.62, and MS-SSIM: 1.14—and shows strong generalization and robustness in tasks such as SPECT–MRI and CT–MRI fusion, indicating its promising potential for clinical applications.

1. Introduction

With the rapid advancement of computer vision and image processing technologies, multimodal image fusion has emerged as a key technique, widely applied in fields such as medical diagnosis, security surveillance, autonomous driving, and industrial inspection. This technology enhances image quality and information integrity by integrating complementary and redundant information from different imaging modalities, thereby offering strong support for target understanding and analysis [1]. Among these applications, the role of multimodal fusion in clinical diagnosis has become increasingly prominent, driven by continuous improvements in imaging technologies. It plays a crucial role in supporting precision medicine in aspects such as early diagnosis, treatment planning, intraoperative navigation, and postoperative monitoring [2].

Multimodal medical image fusion can compensate for the limitations of single imaging techniques and fully leverage the complementary strengths of different modalities [3]. For example, CT uses X-rays to obtain high-resolution 3D structural information of hard tissues, making it suitable for diagnosing fractures and detecting pulmonary nodules [4]. MRI captures hydrogen signals through magnetic fields and radiofrequency pulses; it specializes in soft tissue imaging and is widely applied in diagnosing neurodegenerative diseases and muscular disorders [5]. However, CT has low soft tissue contrast, while MRI is sensitive to metal, has slow imaging speed, and is prone to artifacts [4,5]. PET and SPECT are based on radioactive tracers and reflect organ metabolism and blood flow information, playing a significant role in tumor staging and myocardial ischemia assessment, but they suffer from low resolution and time-consuming imaging processes [6]. Consequently, integrating information from multiple modalities helps improve the efficiency and accuracy of medical diagnosis [1].

Multimodal medical image fusion not only integrates anatomical and metabolic information but also simultaneously presents functional and structural characteristics of tissues, providing more comprehensive support for clinical decision-making [3]. For example, CT–MRI fusion produces images with both skeletal clarity and soft tissue contrast, aiding in precise lesion localization during orthopedic surgery [7]; MRI–PET fusion enhances the delineation of glioma boundaries in the brain, with diagnostic sensitivity improved by approximately 20% [8]; and, similarly, the integration of SPECT and CT angiography enables simultaneous visualization of coronary artery stenosis and myocardial perfusion, supporting revascularization planning [9]. A retrospective study of 500 liver cancer patients showed that the tumor resection rate increased from 78% to 92% under fusion image guidance [10]. These examples collectively demonstrate that developing efficient multimodal fusion techniques holds great promise in medical image processing, as well as in disease diagnosis and treatment [3].

However, current multimodal medical image fusion methods still face numerous challenges. Traditional approaches such as wavelet transform, principal component analysis (PCA), and non-subsampled contourlet transform (NSCT) are computationally efficient but suffer from three major limitations [3]. First, these methods rely on fixed basis functions, making it difficult to model complex nonlinear features, which often leads to blurred edges and texture loss. Second, their handcrafted feature extraction strategies lack adaptability and can easily introduce artifacts, especially in modalities with large dynamic range differences [11]. Third, they depend heavily on precise cross-modal feature alignment, where registration errors exceeding 2 mm can significantly degrade fusion quality [12]. For example, wavelet transform may cause misalignment between metabolic and anatomical information in MRI–PET fusion, ultimately resulting in distorted functional representation [8].

In recent years, deep learning has opened new avenues for multimodal fusion. CNN-based methods (e.g., VGGNet, ResNet) extract multi-level features automatically via end-to-end training and are effective in modeling nonlinear relationships [1]. Meanwhile, generative adversarial networks (GANs) optimize through adversarial training between generators and discriminators to improve image realism. For example, FusionGAN was the first to apply GANs to infrared and visible image fusion, boosting target saliency by over 30% [13]. In the medical domain, U-Net and its variants (such as Attention U-Net) fuse shallow and deep features via skip connections, which enhances structural consistency in CT–MRI fusion [14,15]. However, three main challenges remain: (1) imbalanced feature fusion, where high-contrast modalities such as CT/MRI tend to dominate, suppressing crucial information from functional modalities like PET [3]; (2) lack of global consistency and detail degradation caused by the limited receptive field of CNNs, which makes it difficult to model long-range cross-modal dependencies and, as a result, lesion regions may misalign and high-frequency details often degrade [16]; and (3) overreliance on single-type loss functions, as most methods depend heavily on pixel-level losses (e.g., MSE), neglecting constraints on structure and details, thereby resulting in blurred structures and reduced diagnostic utility [17].

To address the abovementioned issues, this study proposes a novel Progressive Parallel Multimodal Fusion Network (PPMF-Net), with the following key innovations:

- (a) Progressive parallel fusion strategy: by employing hierarchical progressive feature extraction and parallel fusion, this strategy enhances global–local collaborative optimization, promotes structural consistency and detail preservation, mitigates single-modality dominance, and enhances image layering and diagnostic sensitivity.

- (b) Hierarchical heterogeneous fusion architecture, comprising three core modules: ① a Nonlinear Interactive Feature Extraction (NIFE) module, which uses element-wise multiplication and residual connections to progressively fuse MRI texture and PET metabolic features; ② a Transformer-Enhanced Global Modeling (TEGM) module, which combines the global modeling capability of Transformers with the local perception of CNNs to capture both channel-wise and spatial dependencies; and ③ a Dynamic Edge-Enhanced Module (DEEM) built upon inverted residual and SE blocks, which emphasizes high-frequency features in diagnostically sensitive regions.

- (c) Optimized multimodal fusion loss function: a triple-objective unsupervised loss is designed, consisting of $L_{functional}$ (for improving metabolic region fidelity), $L_{structure}$ (to constrain structural consistency), and $L_{detail}$ (to enhance edges and textures), thereby significantly improving lesion distinguishability.

PPMF-Net integrates the advantages of Transformers and CNNs, strengthening the robustness of unregistered image fusion and demonstrating strong potential for clinical application.

The remainder of this paper is organized as follows: Section 2 reviews the current research on deep learning in multimodal medical image fusion; Section 3 presents the proposed PPMF-Net method in detail, including the design of its core modules; Section 4 provides the experimental setup, fusion results, and ablation studies; Section 5 concludes the paper and discusses future directions.

2. Application of Deep Learning in Multimodal Medical Image Fusion

Multimodal image fusion technology plays a vital role in fields such as medical diagnosis, remote sensing, and autonomous driving by integrating information from different imaging modalities. However, traditional fusion methods often rely on manually designed features, which are limited in their ability to capture the complex nonlinear relationships between modalities. In recent years, the feature learning capacity and end-to-end optimization mechanism of deep learning have enabled significant technological advancements. Mainstream architectures, such as CNNs, GANs, and Transformers, have addressed technical bottlenecks from different perspectives, while hybrid models offer a promising approach to balance accuracy and computational efficiency. This section reviews the key deep learning architectures, highlighting their principles, applications, and the challenges they face in future developments.

CNN has emerged as a key architecture in multimodal image fusion, thanks to its strong local feature-extraction capabilities. For example, U-Net’s encoder–decoder design makes it a widely used tool in medical image fusion, where it effectively retains CT bone details and MRI soft tissue information (Ronneberger et al., 2015) [14]. DenseNet enhances feature propagation through dense connectivity, making it effective for infrared and visible image fusion tasks (Huang et al., 2017) [18]. Although CNNs excel at capturing local textures and edges, their relatively small receptive field limits their ability to model global dependencies between different modalities.

GAN enhances the visual quality of fused images through adversarial training between the generator and the discriminator. For instance, DenseFuse (Li et al., 2018) [19] fuses infrared and visible images to generate high-quality images with thermal radiation and texture details. However, GAN training is prone to mode collapse, which may lead to over-smoothing of high-frequency information. To mitigate this, DDcGAN (Ma et al., 2020) [20] introduces a dual-discriminator architecture that separately optimizes content fidelity and detail clarity, thereby enhancing the robustness of multimodal fusion.

The Transformer overcomes the local receptive field limitation of CNNs through the self-attention mechanism, demonstrating its strong capability in cross-modal information modeling. For instance, Swin Transformer (Liu et al., 2021) [21] employs a hierarchical shifted window strategy to enhance the modeling of long-range dependencies in multimodal images, making it suitable for remote-sensing image fusion. In the medical field, TransMed [22], which combines CNN and Transformer, has been successfully applied to parotid tumor classification and knee joint injury detection. Although pure Transformer architectures tend to have limited sensitivity to local details, this drawback can be mitigated by integrating CNNs.

In recent years, hybrid architectures have emerged as a prominent research trend in multimodal image fusion, combining the local feature extraction capabilities of CNNs with the global modeling power of Transformers to achieve more effective information integration and representation. Representative works include TransFuse [23], which adopts a parallel encoding structure of CNN and Transformer, leveraging bidirectional cross-attention to fuse local and global features, and demonstrating outstanding performance on skin lesion datasets. Another model [24] alternately stacks CNNs and Transformers and introduces the Group Cascade Attention (GCA) module along with the Efficient CNN (EC) module to reduce computational cost while enhancing fusion performance. Building on these foundations, several advanced fusion methods have recently been proposed. For instance, U2Fusion [25] introduces a unified unsupervised fusion framework applicable to various tasks, DATFuse [26] employs dual attention mechanisms and a Transformer to capture key features and global context, MATR [27] incorporates a multi-scale adaptive Transformer to improve fusion quality for medical images, SwinFusion [28] leverages cross-domain long-range modeling to simultaneously preserve structure and detail, and the latest FATFusion [29] utilizes a dual-branch architecture for functional–anatomical integration and a guided Transformer module to deeply fuse functional and structural information in medical images. These studies collectively demonstrate that incorporating Transformers into image fusion significantly enhances the model’s capacity for complex information modeling and greatly broadens the applicability and generalizability of fusion methods.

Deep learning-based multimodal medical image fusion technology has made breakthroughs by complementing the advantages of heterogeneous models. CNNs rely on local feature extraction, GANs improve visual fidelity, Transformers achieve global modeling, and hybrid architectures balance accuracy and efficiency. However, current technologies face several challenges: (1) imbalanced feature fusion and single-modality dominance, which lead to the loss of low signal-to-noise ratio modal information; (2) lack of global consistency and degraded detail, with traditional CNNs limiting the modeling of cross-modal long-range dependencies; and (3) the limitation of a single optimization goal, which neglects structure and detail optimization, leading to blurred image structures. Future research should focus on balancing feature fusion, enhancing global consistency and detail fidelity, and avoiding single-modal dominance; exploring stronger global modeling and detail enhancement mechanisms to improve lesion area performance; constructing a multi-task joint optimization framework, combining unsupervised learning and domain adaptation to reduce annotation dependence; and developing lightweight dynamic networks to improve real-time adaptive capabilities. These optimization directions will promote the development of multimodal medical image fusion technology and support precision medicine.

The motivation of this study stems precisely from these limitations, aiming to address the challenges in current multimodal image fusion techniques through innovative network architecture and optimization strategies. The proposed network not only enhances the balance of feature fusion and the comprehensiveness of information capture but also improves the quality of fused images through a multi-objective optimization strategy, thereby providing more reliable technical support for clinical applications.

3. PPMF-Net Fusion Network Architecture Design

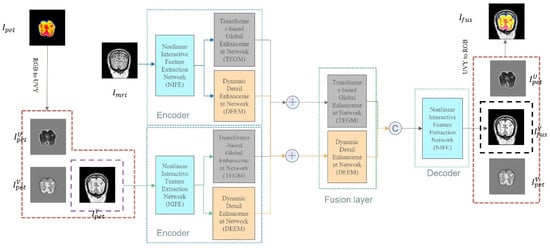

This section presents a comprehensive overview of the PPMF-Net framework, a multimodal medical image fusion network specifically designed for MRI and PET image integration. PPMF-Net adopts an end-to-end encoder–fusion module–decoder architecture in which the encoder incorporates three key sub-modules: the Nonlinear Interactive Feature Extraction module (NIFE), the Transformer-Enhanced Global Modeling module (TEGM), and the Dynamic Edge-Enhanced Module (DEEM), enabling multi-scale structure extraction, long-range context modeling, and fine texture enhancement, respectively. To address the channel inconsistency between modalities, PET images are converted from RGB to YUV color space, with only the luminance (Y) channel retained to ensure consistency with grayscale MRI images. All data used are sourced from the Harvard MIF database and have undergone pre-registration, guaranteeing precise spatial alignment. Drawing inspiration from the U-Net architecture, PPMF-Net introduces modular innovations to enhance feature interaction and fusion: NIFE enables layer-wise complementary feature exchange via element-wise multiplication and cross-modal residual connections, TEGM captures global dependencies using self-attention mechanisms, and DEEM enhances detail expression through inverted residual structures and channel attention. The overall architecture is illustrated in Figure 1.

Figure 1.

PPMF-Net network structure.
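The PET preprocessing step described above (RGB to YUV, keeping only Y) can be sketched as follows. This is a minimal illustration, not the authors' code: the text does not say which YUV variant is used, so the BT.601 luma weights and the function name `pet_rgb_to_luma` are assumptions.

```python
import numpy as np

# BT.601 luma weights; the exact YUV variant is an assumption, not stated in the text.
_BT601 = np.array([0.299, 0.587, 0.114])

def pet_rgb_to_luma(rgb):
    """Return the Y (luminance) channel of an H x W x 3 RGB image with values in [0, 1]."""
    rgb = np.asarray(rgb, dtype=np.float64)
    if rgb.ndim != 3 or rgb.shape[-1] != 3:
        raise ValueError("expected an H x W x 3 array")
    return rgb @ _BT601  # weighted sum over the colour axis -> H x W

pet = np.zeros((2, 2, 3))
pet[0, 0] = [1.0, 1.0, 1.0]   # white pixel
pet[0, 1] = [1.0, 0.0, 0.0]   # pure red pixel
y = pet_rgb_to_luma(pet)
print(y.shape)                # (2, 2)
```

The resulting single-channel array has the same layout as a grayscale MRI slice, which is what allows the two inputs to share one encoder input format.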

3.1. Progressive Parallel Fusion Strategy

The Progressive Parallel Fusion strategy is the core design of PPMF-Net, which aims to address issues such as information loss, unbalanced feature fusion, and single-modal dominance in multimodal medical image fusion. This strategy employs a “progressive extraction, multi-network parallel collaboration” mechanism to gradually extract multi-scale features in stages while integrating global functional information, anatomical structures, and local detail features through parallel cross-fusion networks. As a result, it significantly improves image quality and enhances the clinical diagnostic value.

Feature encoding phase strategy: Progressive feature extraction is employed, with the NIFE module extracting high-resolution local detail features, the TEGM module capturing cross-modal long-range dependencies, and the DEEM enhancing details through channel attention. Together, these three modules work in parallel to provide multi-granularity feature support, ensuring comprehensive feature extraction across various scales.

Fusion phase strategy: A dual-network parallel cross-architecture is designed, where the global branch of TEGM aligns cross-modal global contextual information, and the local branch of the DEEM dynamically selects high-frequency features, enhancing cross-modal complementarity of edges and textures. By enabling mutual optimization between the two branches, the fusion process enhances the feature fusion hierarchy, ultimately improving the recognizability of diagnostic-sensitive areas.

This strategy overcomes the limitations of traditional methods by ensuring the progressive capture of multi-scale features, preventing information loss, and improving the synergy between global and local details through parallel cross-fusion. As a result, it significantly enhances both the comprehensiveness and accuracy of the fused features.

3.2. Hierarchical Heterogeneous Feature Fusion Architecture

Based on the progressive parallel strategy, this study constructs a hierarchical heterogeneous feature fusion architecture composed of three major modules: NIFE, TEGM, and DEEM. The architecture emphasizes progressive feature abstraction through NIFE (from local to global), global–local hybrid attention modeling via TEGM, and multi-scale detail enhancement through DEEM. Together, these strategies create a cohesive three-level hierarchical fusion structure, improving both feature integration and fusion efficiency.

3.2.1. Nonlinear Interactive Feature Extraction Module

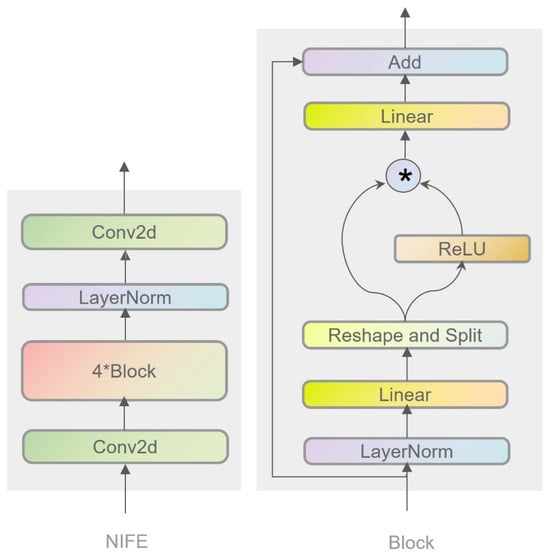

The Nonlinear Interactive Feature Extraction module is the core component of PPMF-Net, specifically designed for multimodal medical image fusion tasks. It focuses on extracting shallow complementary features from functional (e.g., PET) and structural (e.g., MRI) images. By utilizing a multi-layer nonlinear interaction architecture, it addresses challenges such as inadequate feature representation and weak modality coupling found in traditional methods.

The module architecture and processing flow consist of input preprocessing and a multi-layer stacked block design. In the input preprocessing stage, the image undergoes channel expansion and local feature extraction through Conv2d convolutional layers, which helps create a high-dimensional feature space for the subsequent network while also balancing the heterogeneity of multimodal data. Each block incorporates normalization (LayerNorm), activation functions (ReLU/Custom), and fully connected layers. To ensure stable gradient propagation and prevent degradation in deeper layers of the network, residual connections are used. Moreover, element-wise multiplication [30] is employed to facilitate cross-channel nonlinear feature interaction and dynamically fuse multimodal features.

The element-wise multiplication fusion mechanism in the Nonlinear Interactive Feature Extraction (NIFE) module differs significantly from existing approaches. For instance, DenseFuse employs a densely connected structure to extract features and performs shallow feature fusion using simple weighting or multiplication, while EMFusion enhances structural and functional image details through encoder channel separation and shallow saliency guidance. However, neither method systematically models deep nonlinear interactions between modalities. In contrast, NIFE introduces an element-wise multiplication fusion mechanism as a dynamic and cross-channel nonlinear interaction strategy. This mechanism not only facilitates information aggregation but also enhances high-response complementary features and suppresses redundant noise through a learnable gating mechanism. Compared to traditional methods, this fusion strategy is better suited for highly heterogeneous medical image modalities such as PET and MRI, significantly improving semantic consistency across modalities and enhancing the discriminability of key regions. Furthermore, by embedding this mechanism in deeper layers of the network and stacking it across multiple levels, NIFE enables progressive fusion of shallow details and deep semantics, thereby enhancing the model’s representational capacity and robustness. Its structure is shown in Figure 2.

Figure 2.

Architecture of nonlinear interactive feature extraction module (NIFE). Note: * denotes element-wise multiplication between feature representations.

The overall formulation of NIFE can be expressed as:

1. Feature transformation and activation: in the Block module, the input features are first normalized and linearly transformed, followed by nonlinear processing through an activation function (e.g., ReLU).

2. Feature fusion: next, element-wise multiplication is employed to fuse the transformed features with the original input features.
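The two steps above can be sketched in NumPy as a single block. This is an illustrative reading of the description rather than the published formulation: the residual add after the multiplication, the token layout (positions × channels), and the names `layer_norm` and `nife_block` are assumptions.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    """Normalize each token (last axis) to zero mean and unit variance."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def nife_block(x, weight, bias):
    """One block: LayerNorm -> linear -> ReLU -> multiply by input -> residual add."""
    h = layer_norm(x) @ weight + bias   # step 1: normalization and linear transform
    h = np.maximum(h, 0.0)              # step 1: ReLU activation
    gated = h * x                       # step 2: element-wise multiplication with the input
    return x + gated                    # residual connection (assumed placement)

rng = np.random.default_rng(0)
tokens = rng.normal(size=(16, 8))       # 16 spatial positions, 8 channels (hypothetical sizes)
w = rng.normal(scale=0.1, size=(8, 8))
b = np.zeros(8)
out = nife_block(tokens, w, b)
print(out.shape)                        # (16, 8)
```

Because the transformed features multiply the input rather than being added to it, positions where the transformed response is zero pass through unchanged, which is one way to read the gating behaviour described for NIFE.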

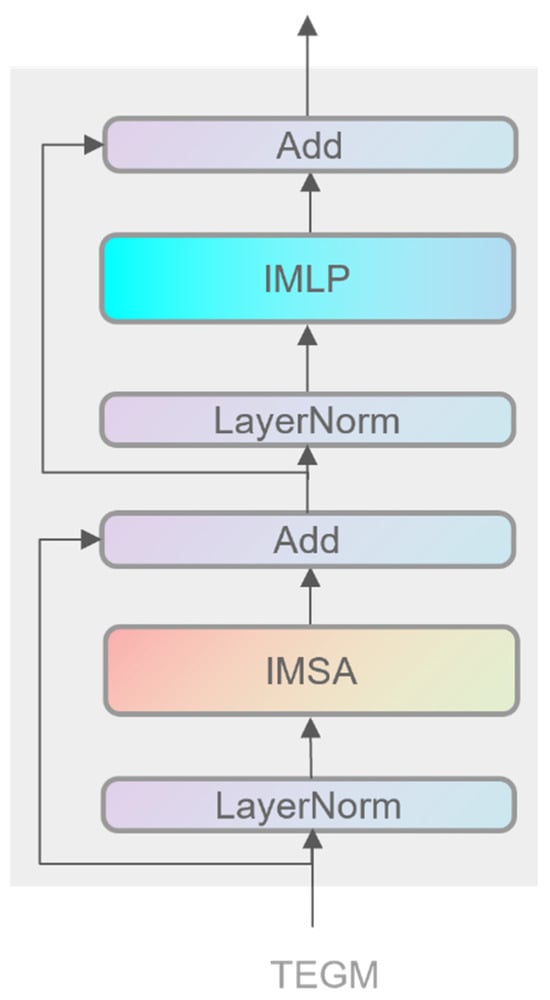

3.2.2. Transformer-Enhanced Global Modeling Module

The Transformer-Enhanced Global Modeling module (TEGM), inspired by the Transformer [16], serves as a pivotal component within PPMF-Net for global feature extraction and fusion in multimodal medical imaging. It draws upon and extends several recent advances in multimodal image fusion, including the cross-modal fusion strategies and long-range modeling mechanisms proposed in SwinFusion [28], CDDFuse [31], PromptFusion [32], and CoCoNet [33], and discussed in a review on infrared and visible image fusion [34]. TEGM is specifically designed to address the limitations of traditional CNN architectures in capturing long-range dependencies, modeling global context, and integrating cross-modal information.

TEGM operates on shallow multimodal features extracted by the NIFE module, preserving the complementary information of functional data (e.g., PET metabolic activity) and structural information (e.g., MRI anatomical details). In its Transformer design, TEGM enhances the multi-head self-attention (MSA) mechanism [35] by incorporating a dual-path convolution strategy (1 × 1 convolutions to extract inter-channel correlations and 3 × 3 convolutions to capture local spatial dependencies), thereby constructing more robust Query, Key, and Value matrices. This strategy resembles the Lite Transformer design adopted in CDDFuse [36], which strengthens feature representation by integrating both local and global attention.

Additionally, TEGM introduces a hybrid attention mechanism that combines global self-attention [37] with local convolutional features to achieve a balance between global semantic understanding and local detail preservation. This design aligns with the local–global interaction concept enabled by the Shifted Window approach in SwinFusion [28]. For the MLP component, TEGM replaces traditional fully connected layers with depthwise separable convolutions, reducing computational complexity while improving local feature representation. This design is consistent with the lightweight multi-stage framework of PromptFusion [32] and the emphasis on information reconstruction in CoCoNet [33], significantly enhancing the model’s expressive capacity and efficiency in multimodal image fusion. TEGM integrates the strengths of recent Transformer-based approaches while incorporating task-specific innovations, making it a powerful and efficient module for global feature modeling. Its architectural design is illustrated in Figure 3.

Figure 3.

Architecture of the Transformer-Enhanced Global Modeling module (TEGM).
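The efficiency argument for depthwise separable convolutions can be checked with simple arithmetic. The sketch below compares weight counts against a standard convolution of the same kernel size; the channel width of 64 and the 3 × 3 kernel are hypothetical values chosen for illustration, not settings reported for TEGM.

```python
def conv_params(c_in, c_out, k):
    """Weights in a standard k x k convolution (bias omitted)."""
    return k * k * c_in * c_out

def depthwise_separable_params(c_in, c_out, k):
    """Weights in a k x k depthwise convolution followed by a 1 x 1 pointwise convolution."""
    return k * k * c_in + c_in * c_out

standard = conv_params(64, 64, 3)                   # 9 * 64 * 64 = 36864
separable = depthwise_separable_params(64, 64, 3)   # 9 * 64 + 64 * 64 = 4672
ratio = separable / standard                        # equals 1/c_out + 1/k^2
print(standard, separable, round(ratio, 4))         # 36864 4672 0.1267
```

Note that this comparison is against a standard spatial convolution; a plain per-position fully connected layer has only `c_in * c_out` weights, so the benefit in an MLP replacement comes from adding local spatial context at a modest extra cost.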

The formulation of TEGM can be divided into two steps:

1. Residual and attention output computation: first, the input features are processed via an improved multilayer perceptron (MLP) and an improved multi-head self-attention (IMSA) mechanism. Assuming the input features are denoted as $I_{NIFE}$ (i.e., the output from the NIFE module), the process can be expressed as:

2. Generation of final output features: subsequently, the computed attention output is fused with the original input to produce the final feature output:
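The core of these two steps, attention followed by fusion with the original input, can be sketched with plain scaled dot-product self-attention. This is a deliberately reduced illustration: it uses a single head and linear projections, and it omits the 1 × 1 and 3 × 3 convolutional paths, normalization, and the MLP, so it should not be read as the IMSA formulation itself. All names and sizes are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention_residual(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over tokens, plus a residual add."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])   # pairwise similarity between all positions
    attn = softmax(scores, axis=-1)           # each row sums to 1
    return x + attn @ v, attn                 # fuse attention output with the original input

rng = np.random.default_rng(0)
x = rng.normal(size=(16, 8))                  # 16 flattened spatial positions, 8 channels
w_q, w_k, w_v = (rng.normal(scale=0.1, size=(8, 8)) for _ in range(3))
out, attn = self_attention_residual(x, w_q, w_k, w_v)
print(out.shape, attn.shape)                  # (16, 8) (16, 16)
```

Every output position is a weighted mix of all 16 positions, which is the long-range dependency that a small convolutional receptive field cannot provide in one layer.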

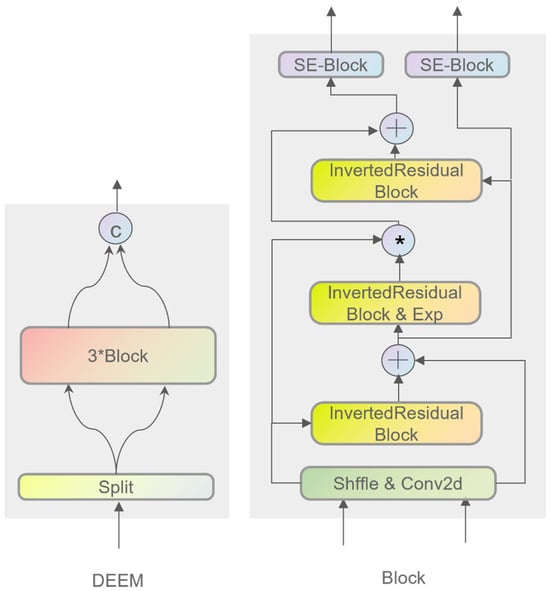

3.2.3. Dynamic Edge-Enhanced Module

The Dynamic Edge-Enhanced Module (DEEM) is the component of PPMF-Net that addresses the degradation of high-frequency details in multimodal images. It improves the extraction and retention of key details, such as edges and textures, through an inverted residual structure combined with channel attention, mitigating detail loss in deep networks and the channel feature rigidity of traditional CNNs.

The module architecture and processing flow are as follows: First, the input features are segmented and undergo convolution operations to obtain an initial feature representation. Next, the convolution results are processed through an inverted residual block to capture high-frequency detail information, and further feature expression is enhanced through expansion operations. Subsequently, the expanded features are combined with the results of the inverted residual block [38] and undergo channel weighting via the Squeeze-and-Excitation (SE) module [39], which dynamically adjusts the network’s attention to different features, further enhancing the representation of detail information. This processing flow ensures the thorough enhancement of detail information while maintaining efficient computational performance.

The core innovations of the DEEM lie in its integration of inverted residual blocks, the Squeeze-and-Excitation (SE) module, channel shuffle mechanism, and its multi-scale detail capture capabilities. The inverted residual block effectively mitigates the gradient vanishing problem often encountered in traditional deep networks during fine-grained detail extraction. This structure enhances the network’s ability to capture high-frequency details—such as edges and textures—thus improving both image processing accuracy and the richness of detail representation.

The SE module enables the network to dynamically recalibrate the importance of each feature channel based on contextual information, highlighting salient details and strengthening inter-channel relationships. This mechanism significantly boosts the model’s sensitivity to critical detail features.
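The channel recalibration performed by an SE module can be sketched as follows. This follows the standard squeeze (global average pooling) and excitation (two fully connected layers with ReLU and sigmoid) pattern; the reduction ratio of 4 and the feature map size are hypothetical, and the text does not specify the settings used in DEEM.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def se_block(x, w1, w2):
    """Squeeze-and-Excitation on a C x H x W feature map.

    w1 has shape (C, C // r) and w2 has shape (C // r, C), where r is the reduction ratio.
    """
    squeezed = x.mean(axis=(1, 2))               # squeeze: global average pool -> (C,)
    hidden = np.maximum(squeezed @ w1, 0.0)      # excitation: FC + ReLU
    weights = sigmoid(hidden @ w2)               # FC + sigmoid -> per-channel weights in (0, 1)
    return x * weights[:, None, None], weights   # rescale each channel by its weight

rng = np.random.default_rng(0)
feat = rng.normal(size=(8, 4, 4))                # 8 channels, 4 x 4 spatial grid
w1 = rng.normal(size=(8, 2))                     # reduction ratio r = 4 (hypothetical)
w2 = rng.normal(size=(2, 8))
scaled, weights = se_block(feat, w1, w2)
print(scaled.shape, weights.shape)               # (8, 4, 4) (8,)
```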

Within the Block module, the shuffle operation is inspired by the Channel Shuffle strategy, which enhances inter-channel information flow and interaction by breaking the isolation between channels. This not only increases the model’s representational power but also improves computational efficiency. Working synergistically with the inverted residual structure and the SE attention mechanism, channel shuffling plays a crucial role in the DEEM’s ability to express high-frequency details while maintaining a low computational cost.
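The channel shuffle operation referred to here is usually implemented as a reshape, transpose, and reshape. The sketch below shows that generic form on a tiny feature map; the group count is a hypothetical choice, and the exact configuration inside the DEEM Block is not given in the text.

```python
import numpy as np

def channel_shuffle(x, groups):
    """Interleave channels across groups for a C x H x W feature map."""
    c, h, w = x.shape
    if c % groups != 0:
        raise ValueError("channel count must be divisible by groups")
    x = x.reshape(groups, c // groups, h, w)   # split channels into groups
    x = x.transpose(1, 0, 2, 3)                # swap group and within-group axes
    return x.reshape(c, h, w)                  # flatten back to C channels

feat = np.arange(6).reshape(6, 1, 1)           # channels labelled 0..5
shuffled = channel_shuffle(feat, groups=2)
print(shuffled.ravel().tolist())               # [0, 3, 1, 4, 2, 5]
```

After the shuffle, each consecutive pair of channels contains one channel from each original group, so a subsequent grouped convolution sees information from both groups.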

By stacking multiple inverted residual blocks, the DEEM captures detail features across various hierarchical levels, significantly enhancing the representation of fine details at multiple scales. This makes the DEEM particularly effective in tasks such as image segmentation and object detection, especially in scenarios requiring high precision in detail. The architecture of the DEEM is illustrated in Figure 4.

Figure 4.

Architecture of the Dynamic Edge-Enhanced Module (DEEM). Note: * denotes element-wise multiplication, + denotes element-wise addition, and c denotes concatenation of feature representations.

The DEEM is formulated as:

In the Dynamic Edge-Enhanced Module, the core operations and key procedures of the Block module are described as follows:

1. Convolution and shuffling: the input features from NIFE ($I_{NIFE}$) are divided into groups and fed into three sequentially connected Block modules for processing; the enhanced result forms the output features of the DEEM.

2. Core inverted residual block and expansion: within each Block, the resulting feature is input into a new inverted residual block, and expansion operations (such as channel expansion, feature amplification, or attention weighting) are applied to obtain an enhanced intermediate feature, which is used to further improve detail preservation.

3. Multiplicative and additive operations with SE block: first, the Squeeze-and-Excitation (SE) attention module is applied to $Y_1$ to obtain the channel weights. Then, the weighted result is multiplied element-wise with the expanded feature, and the product is fused with the residual path to obtain the output feature.

3.3. Multimodal Image Fusion Optimization Loss

In this study, we designed an optimized loss function for multimodal image fusion to enhance the functional, structural, and detailed characteristics of the fused image, ensuring the preservation of functional information (e.g., metabolic activity and brain function), anatomical consistency, and improved texture details (e.g., organ boundaries and lesion areas). By optimizing these three aspects, the proposed method significantly enhances the diagnostic accuracy and practical utility of the fused images, providing a robust framework for advancing medical image fusion techniques. The loss function is composed of three components: functional loss, structural loss, and detail loss, and is defined by the following equation:
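A form consistent with this description is a weighted sum of the three terms. The weights $\lambda_1$, $\lambda_2$, and $\lambda_3$ below are placeholder symbols introduced here for illustration; the text does not state how the terms are balanced or which values are used.

$$
L_{total} = \lambda_1 L_{functional} + \lambda_2 L_{structure} + \lambda_3 L_{detail}
$$

Under this reading, raising $\lambda_1$ favours fidelity to the PET signal, raising $\lambda_2$ favours anatomical consistency with MRI, and raising $\lambda_3$ favours sharper edges and textures.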

3.3.1. Functional Loss

In medical multimodal imaging, functional information such as metabolic activity from PET and brain activation from fMRI plays a critical role in accurate diagnosis. To ensure that the fused image preserves key information from the functional modality and retains diagnostically relevant features, we use Mean Squared Error (MSE) [40] to measure pixel-level differences between the fused image and the functional modality.

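$$
L_{functional} = \frac{1}{N} \sum_{i=1}^{N} \left( I_f(i) - I_{func}(i) \right)^2
$$

where $I_f(i)$ represents the value of the i-th pixel of the fused image, $I_{func}(i)$ is the value of the corresponding pixel in the functional modality image, and $N$ is the total number of pixels.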
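As a small worked example, the pixel-wise MSE between a fused image and the functional image can be computed as below. The function name `functional_loss` and the toy 2 × 2 arrays are illustrative only.

```python
import numpy as np

def functional_loss(fused, functional):
    """Mean squared error between the fused image and the functional-modality image."""
    fused = np.asarray(fused, dtype=np.float64)
    functional = np.asarray(functional, dtype=np.float64)
    if fused.shape != functional.shape:
        raise ValueError("images must have the same shape")
    return float(np.mean((fused - functional) ** 2))

fused = np.array([[0.5, 0.5], [1.0, 0.0]])
pet_y = np.array([[0.5, 0.0], [1.0, 1.0]])
loss = functional_loss(fused, pet_y)   # (0 + 0.25 + 0 + 1) / 4
print(loss)                            # 0.3125
```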

3.3.2. Structural Loss

Anatomical structural information in medical images, such as organ and tissue boundaries in CT and MRI, is crucial for accurate diagnosis. Therefore, during the fusion process, it is essential to ensure that these structural details remain clearly visible. The Structural Similarity Index Measure (SSIM) [41] is employed to evaluate similarity in luminance, contrast, and structure. We use SSIM to assess the similarity between the fused image and the structural modality, ensuring the preservation of key anatomical features.

$$L_{\text{structural}} = 1 - \mathrm{SSIM}(F, S)$$

Here, $F$ denotes the fused image and $S$ denotes the structural modality image, such as CT or MRI.

3.3.3. Detail Loss

Detail and edge information are crucial for detecting lesions and abnormalities. Therefore, during the fusion process, we aim to preserve functional, structural, and detailed information from the source images. To achieve this, we introduce a detail loss that measures the similarity in detail and texture by comparing the gradient information of the fused image with that of the source images. The L1 loss [42] is employed to effectively capture these gradient differences, ensuring that fine details and textures are preserved in the fused image.

$$L_{\text{detail}} = \frac{1}{N}\sum_{i=1}^{N}\left|\nabla F_i - \nabla R_i\right|$$

Here, $F$ represents the fused image, $R$ is the reference image obtained by averaging the original PET and MRI images, $\nabla$ denotes the gradient operation (e.g., Sobel), $N$ is the total number of pixels in the image, and $i$ indicates the $i$-th pixel position.

By optimizing the functional, structural, and detail loss functions, we can improve image fusion quality at the pixel, structural, and detail levels. This not only enhances the visual quality and realism of the fused images but also ensures that multimodal information is preserved. The design of this framework ensures that the fused image retains key functional and structural details, boosting its diagnostic utility. As a result, it offers an effective optimization framework for future research in medical image fusion, particularly valuable for accurately preserving details and functional information.
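The three loss terms can be illustrated with a small, dependency-free sketch. It is not the authors' implementation and makes several simplifying assumptions: images are 2-D lists of floats in [0, 1]; SSIM is computed over a single global window rather than local windows; the structural term takes the form 1 − SSIM; forward differences stand in for the Sobel operator; and the three weights default to 1. All function names are hypothetical.

```python
def _flat(img):
    """Flatten a 2-D list-of-lists image into a flat list of floats."""
    return [float(v) for row in img for v in row]


def mse(a, b):
    """Mean squared error between two images of equal size."""
    fa, fb = _flat(a), _flat(b)
    return sum((x - y) * (x - y) for x, y in zip(fa, fb)) / len(fa)


def global_ssim(a, b, c1=0.01 ** 2, c2=0.03 ** 2):
    """SSIM over one global window (assumption: real SSIM averages local windows)."""
    fa, fb = _flat(a), _flat(b)
    n = len(fa)
    mu_a, mu_b = sum(fa) / n, sum(fb) / n
    var_a = sum((x - mu_a) * (x - mu_a) for x in fa) / n
    var_b = sum((y - mu_b) * (y - mu_b) for y in fb) / n
    cov = sum((x - mu_a) * (y - mu_b) for x, y in zip(fa, fb)) / n
    num = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    den = (mu_a * mu_a + mu_b * mu_b + c1) * (var_a + var_b + c2)
    return num / den


def gradient_magnitude(img):
    """|dx| + |dy| per pixel using forward differences (stand-in for Sobel)."""
    h, w = len(img), len(img[0])
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            dx = img[i][j + 1] - img[i][j] if j + 1 < w else 0.0
            dy = img[i + 1][j] - img[i][j] if i + 1 < h else 0.0
            row.append(abs(dx) + abs(dy))
        out.append(row)
    return out


def l1(a, b):
    """Mean absolute difference between two images of equal size."""
    fa, fb = _flat(a), _flat(b)
    return sum(abs(x - y) for x, y in zip(fa, fb)) / len(fa)


def total_loss(fused, pet, mri, weights=(1.0, 1.0, 1.0)):
    """Weighted sum of functional, structural, and detail terms (weights assumed)."""
    l_functional = mse(fused, pet)                    # fused vs. functional modality
    l_structural = 1.0 - global_ssim(fused, mri)      # fused vs. structural modality
    reference = [[(p + m) / 2 for p, m in zip(rp, rm)] for rp, rm in zip(pet, mri)]
    l_detail = l1(gradient_magnitude(fused), gradient_magnitude(reference))
    w1, w2, w3 = weights
    return w1 * l_functional + w2 * l_structural + w3 * l_detail
```

When the fused image equals both sources, all three terms vanish; a flat fused image is penalized by every term, which is the behavior the joint objective is meant to enforce.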

3.4. Model Training

Experiments were conducted on a workstation equipped with an NVIDIA GeForce RTX 3090 Ti GPU using the PyTorch framework (v2.1.0). Mixed-precision training, which combines single-precision (FP32) and half-precision (FP16) arithmetic, was adopted to accelerate computation and reduce memory consumption. The model was trained for 40 epochs with an initial learning rate of 1 × 10⁻⁴. A two-stage learning rate schedule was employed: in the early training phase, step decay halved the learning rate every 10 epochs to rapidly narrow the search space; subsequently, a cosine annealing scheduler performed fine-grained adjustments to help avoid poor local minima and promote stable convergence.
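The two-stage schedule can be sketched as a plain function of the epoch index. The epoch at which the schedule switches from step decay to cosine annealing is not given in the text, so `switch_epoch=20` below is an assumption, as are the final learning rate of 0 and the function name.

```python
import math


def learning_rate(epoch, base_lr=1e-4, total_epochs=40,
                  switch_epoch=20, step_size=10, gamma=0.5, min_lr=0.0):
    """Step decay before `switch_epoch`, cosine annealing afterwards.

    `switch_epoch` and `min_lr` are illustrative assumptions.
    """
    if epoch < switch_epoch:
        # Halve the learning rate every `step_size` epochs.
        return base_lr * gamma ** (epoch // step_size)
    # Start the cosine phase from the value step decay would give at the switch.
    start_lr = base_lr * gamma ** (switch_epoch // step_size)
    progress = (epoch - switch_epoch) / (total_epochs - switch_epoch)
    return min_lr + 0.5 * (start_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    for e in (0, 10, 20, 30, 40):
        print(e, learning_rate(e))
```

In PyTorch, a comparable schedule could be assembled from the built-in step and cosine schedulers; the function above only makes the shape of the curve explicit.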

Weight decay was set to 0 during the experiments, and a batch size of eight was chosen to balance computational efficiency with memory usage. The AdamW optimizer [43] was employed; it decouples weight decay from the gradient-based update of Adam. Recent studies have reported that AdamW is more effective than standard Adam in avoiding bias during parameter updates in large-scale training, which improves both the stability of the training process and the performance of the final model.

4. Experiment and Analysis

4.1. Datasets and Preprocessing

The dataset used in this experiment was obtained from the MIF database at Harvard University (http://www.med.harvard.edu/aanlib/home.html), which contains multimodal medical imaging data, particularly paired PET (Positron Emission Tomography) and MRI (Magnetic Resonance Imaging) images. PET images provide detailed information on cerebral metabolic activity, while MRI images offer clear structural anatomical representations. The combination of these two modalities is of great value for medical image analysis, lesion detection, and brain function research. For this experiment, a total of 245 pairs of PET–MRI images were selected as the training data for the model.

To make full use of the limited dataset and improve the model’s generalization ability, overlapping cropping was employed as a data augmentation technique. Specifically, image patches of size 128 × 128 were cropped from each pair of images with a stride of 20 pixels, yielding a total of 12,005 training samples. This increases the number of training samples and strengthens the model’s ability to learn local detail features, which mitigates the performance degradation that a small dataset would otherwise cause. To evaluate the model, 24 pairs of MRI–PET images were randomly selected as the test set. Overlapping cropping is widely used in medical image processing and provided the data foundation for optimizing the fusion model in this study.
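The patch count can be checked with a short calculation. The sketch below assumes each source slice is 256 × 256 pixels, which is not stated in this section; the function names are hypothetical.

```python
def patch_origins(size, patch=128, stride=20):
    """Top-left coordinates of all full patches along one image axis."""
    return list(range(0, size - patch + 1, stride))


def count_patches(height, width, patch=128, stride=20):
    """Number of overlapping patches that fit entirely inside the image."""
    return len(patch_origins(height, patch, stride)) * len(patch_origins(width, patch, stride))


# Assumption: 256 x 256 slices; 245 training pairs as reported in the text.
per_pair = count_patches(256, 256)
total = 245 * per_pair

if __name__ == "__main__":
    print(patch_origins(256))   # offsets along one axis
    print(per_pair, total)
```

Under that assumption, each axis admits 7 offsets (0, 20, ..., 120), giving 7 × 7 = 49 patches per pair and 245 × 49 = 12,005 samples, which matches the reported count.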

4.2. Comparison Method and Evaluation Index

4.2.1. Comparison Method

In recent years, numerous advanced methods have emerged in the field of multimodal image fusion. U2Fusion [44] proposed a unified unsupervised fusion framework applicable to various modality combinations and tasks. DATFuse [26] introduced a dual-attention mechanism and Transformer to effectively capture key features and global contextual information. MATR [27] leveraged a multi-scale adaptive Transformer to enhance the quality of medical image fusion. SwinFusion [28] achieved simultaneous preservation of structural and detailed information through cross-domain long-range modeling. The latest FATFusion [29] employs a dual-branch architecture for functional and anatomical features combined with a guided Transformer module to achieve deep integration of functional and structural information. These methods demonstrate the strong modeling capabilities and wide applicability of Transformer architectures in image fusion tasks.

To comprehensively evaluate the fusion performance of the proposed PPMF-Net, we selected the five representative state-of-the-art multimodal image fusion methods described above for comparison. These methods span various technical paradigms, from CNNs to Transformers, and feature publicly available code and strong performance across multiple tasks. In our experiments, all model parameters were configured according to the original papers to ensure fair and reliable comparisons. Systematic comparison with these mainstream algorithms allows the advantages of PPMF-Net in fusion quality, structural preservation, and detail restoration to be assessed.

4.2.2. Evaluation Index

To conduct a comprehensive quantitative evaluation of image fusion quality, this paper adopts six widely accepted evaluation metrics: SF (Spatial Frequency) [36], SD (Standard Deviation) [45], CC (Correlation Coefficient) [25], SCD (Spatial Correlation Degree) [46], SSIM (Structural Similarity Index) [41], and MS-SSIM (Multi-Scale Structural Similarity Index) [47]. These metrics quantify and evaluate the image fusion performance from different perspectives, enabling a multi-level comprehensive analysis of fusion quality. Image fusion is a complex process aimed at integrating complementary information from multiple source images into a single image that is more expressive and information-rich. Therefore, evaluating its performance requires consideration of various aspects, such as clarity, contrast, and structural preservation. The following section provides a detailed introduction to each evaluation metric, as well as information about how recent research has further enriched the application and understanding of these metrics.

- Spatial Frequency (SF)

Spatial Frequency (SF) is an important metric for evaluating image texture detail and clarity. It reflects the richness of detail information in the frequency components of an image. High-frequency components in an image typically represent fine structures and texture details, while low-frequency components correspond to overall brightness and broad structural information. A higher SF value indicates better preservation of image details and higher clarity, which is particularly valuable for high-precision applications such as medical imaging and remote sensing. In recent years, multi-scale analysis methods have been proposed to improve the accuracy of SF calculation and to assess image clarity at different scales [36]. The calculation of Spatial Frequency (SF) is typically based on the frequency components in the horizontal direction (Row Frequency, RF) and vertical direction (Column Frequency, CF), and it is defined as follows:

$$RF = \sqrt{\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=2}^{N}\left[F(i,j) - F(i,j-1)\right]^2}$$

$$CF = \sqrt{\frac{1}{MN}\sum_{i=2}^{M}\sum_{j=1}^{N}\left[F(i,j) - F(i-1,j)\right]^2}$$

$$SF = \sqrt{RF^2 + CF^2}$$

Here, $F(i,j)$ denotes the grayscale value of the image at pixel coordinates $(i,j)$, while $M$ and $N$ represent the number of rows and columns in the image, respectively. RF captures the gradient variations in the horizontal direction, and CF captures those in the vertical direction. The overall Spatial Frequency (SF) is then computed as the square root of the sum of the squares of RF and CF, quantifying the global spatial frequency of the image.
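As an illustration of the definition, the following sketch computes SF for an image stored as a 2-D list. It assumes the common normalization by the total pixel count M × N; the function name is hypothetical.

```python
import math


def spatial_frequency(img):
    """Spatial frequency of a 2-D list-of-lists grayscale image."""
    m, n = len(img), len(img[0])
    # Row frequency: squared differences between horizontal neighbours.
    rf_sq = sum((img[i][j] - img[i][j - 1]) ** 2
                for i in range(m) for j in range(1, n)) / (m * n)
    # Column frequency: squared differences between vertical neighbours.
    cf_sq = sum((img[i][j] - img[i - 1][j]) ** 2
                for i in range(1, m) for j in range(n)) / (m * n)
    return math.sqrt(rf_sq + cf_sq)
```

A constant image has SF = 0, while a checkerboard pattern, which changes at every pixel, yields a large value; this is why SF is read as a measure of texture richness.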

- Standard Deviation (SD)

Standard Deviation (SD) is a key metric for evaluating image contrast, reflecting the degree of dispersion in pixel intensity values. A higher SD value typically indicates more distinct variations in brightness levels and clearer image details, especially in regions with edges and abrupt changes. In the context of image fusion, a higher SD suggests better fusion performance, as it implies effective preservation of detail and reduced blurring or distortion. In recent years, researchers have focused on enhancing fusion algorithms to improve image contrast and better highlight critical information. Since SD is also closely related to visual features such as brightness and tone, it not only reflects image clarity but also contributes significantly to the perceived visual quality [45]. The standard deviation (SD) can be computed using the following formula:

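$$SD = \sqrt{\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\left[F(i,j) - \mu\right]^2}$$

where $F(i,j)$ represents the pixel value at the $i$-th row and $j$-th column of the image, and $M$ and $N$ denote the number of rows and columns of the image, respectively. $\mu$ is the mean pixel intensity of the image, which can be calculated using the following formula:

$$\mu = \frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N} F(i,j)$$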

- Correlation Coefficient (CC)

The Correlation Coefficient (CC) measures the linear relationship between the source images and the fused image, indicating the consistency of gray-level values. A higher CC value suggests that the fused image effectively preserves the features of the original source images, including textures, details, and structural information. In the context of image fusion, a high correlation coefficient implies that the fusion process retains critical information from the input images without significant loss. This makes CC a reliable indicator of the fusion quality, especially in applications where feature preservation is essential [25]. The correlation coefficient (CC) can be calculated using the following formula:

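Here, $X(i,j)$ and $F(i,j)$ represent the pixel values at row $i$ and column $j$ in the source image and the fused image, respectively. $M$ and $N$ denote the number of rows and columns in the images. $\bar{X}$ and $\bar{F}$ are the mean pixel values of the source and fused images, respectively. The correlation coefficient is computed using the following formula:

$$CC(X,F) = \frac{\sum_{i=1}^{M}\sum_{j=1}^{N}\left[X(i,j)-\bar{X}\right]\left[F(i,j)-\bar{F}\right]}{\sqrt{\sum_{i=1}^{M}\sum_{j=1}^{N}\left[X(i,j)-\bar{X}\right]^2\,\sum_{i=1}^{M}\sum_{j=1}^{N}\left[F(i,j)-\bar{F}\right]^2}}$$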
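A minimal sketch of the correlation coefficient between one source image and the fused image follows. The function name is hypothetical, and the sketch does not guard against constant images, for which the denominator is zero.

```python
import math


def correlation_coefficient(x, f):
    """Pearson correlation between two equally sized 2-D list images."""
    xs = [float(v) for row in x for v in row]
    fs = [float(v) for row in f for v in row]
    mean_x = sum(xs) / len(xs)
    mean_f = sum(fs) / len(fs)
    num = sum((a - mean_x) * (b - mean_f) for a, b in zip(xs, fs))
    den = math.sqrt(sum((a - mean_x) ** 2 for a in xs)
                    * sum((b - mean_f) ** 2 for b in fs))
    return num / den  # undefined (division by zero) if either image is constant
```

Because the measure is invariant to linear intensity changes, a fused image that is a brightened or rescaled copy of the source still scores 1, so CC is usually read alongside contrast-sensitive metrics such as SD.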

- Spatial Correlation Degree (SCD)

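Spatial Correlation Degree (SCD) is a metric used to assess the consistency of pixel relationships within the spatial domain of an image. A higher SCD value indicates that the fused image effectively preserves the spatial structure of the source images, avoiding significant spatial distortions or inconsistencies. The spatial structure of an image is a critical factor in evaluating fusion quality, especially in applications where maintaining spatial coherence is essential. As a key indicator, SCD helps determine whether the fused image can accurately replicate the spatial distribution and features of the original images [46]. It is built on the correlation

$$r(X,F) = \frac{\sum_{i=1}^{M}\sum_{j=1}^{N}\left[X(i,j)-\bar{X}\right]\left[F(i,j)-\bar{F}\right]}{\sqrt{\sum_{i=1}^{M}\sum_{j=1}^{N}\left[X(i,j)-\bar{X}\right]^2\,\sum_{i=1}^{M}\sum_{j=1}^{N}\left[F(i,j)-\bar{F}\right]^2}}$$

where $X(i,j)$ and $F(i,j)$ represent the pixel values at row $i$ and column $j$ in the source image and the fused image, respectively, $M$ and $N$ denote the number of rows and columns in the images, and $\bar{X}$ and $\bar{F}$ are the mean pixel intensities of the source image and the fused image, respectively. The SCD is calculated using the following formula:

$$SCD = r(S_1, F - S_2) + r(S_2, F - S_1)$$

where $S_1$ and $S_2$ are the two source images, and each difference image $F - S_k$ takes the place of the fused image in $r$.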
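The sketch below illustrates one common way to compute SCD, as the sum of two correlations between each source image and the difference between the fused image and the other source. This form is an assumption about the definition used in [46], and the helper names are hypothetical.

```python
import math


def _corr(a, b):
    """Pearson correlation between two flat lists of equal length."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(sum((x - mean_a) ** 2 for x in a)
                    * sum((y - mean_b) ** 2 for y in b))
    return num / den


def scd(fused, src1, src2):
    """SCD as r(S1, F - S2) + r(S2, F - S1) for 2-D list images (assumed form)."""
    f = [float(v) for row in fused for v in row]
    s1 = [float(v) for row in src1 for v in row]
    s2 = [float(v) for row in src2 for v in row]
    diff_for_s1 = [fv - bv for fv, bv in zip(f, s2)]  # what F holds beyond S2
    diff_for_s2 = [fv - av for fv, av in zip(f, s1)]  # what F holds beyond S1
    return _corr(s1, diff_for_s1) + _corr(s2, diff_for_s2)
```

Under this form the value lies in [−2, 2]; a value near zero or below, as reported for some comparison methods, means the fused image carries little of the information that is unique to each source.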

- Structural Similarity Index (SSIM)

The Structural Similarity Index (SSIM) is a widely used metric for assessing image quality. It evaluates the similarity between a source image and a fused image by analyzing three key components: luminance, contrast, and structural information. A higher SSIM value indicates that the fused image maintains a high degree of structural consistency and effectively preserves the details and features of the original image. As a core indicator in image quality assessment, SSIM goes beyond mere pixel-wise comparison and focuses on the overall structural fidelity and information retention of the image [41]. The SSIM can be calculated using the following formula:

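Here, $x$ and $y$ represent local image patches from the source image and the fused image, respectively. $\mu_x$ and $\mu_y$ denote the mean values of these patches, while $\sigma_x^2$ and $\sigma_y^2$ are their respective variances. $\sigma_{xy}$ represents the covariance between the source and fused image patches. The constants $C_1$ and $C_2$ are small positive values introduced to stabilize the division and prevent the denominator from becoming zero. The detailed calculation formula is as follows:

$$SSIM(x,y) = \frac{\left(2\mu_x\mu_y + C_1\right)\left(2\sigma_{xy} + C_2\right)}{\left(\mu_x^2 + \mu_y^2 + C_1\right)\left(\sigma_x^2 + \sigma_y^2 + C_2\right)}$$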

- Multi-scale Structural Similarity Index (MS-SSIM)

The Multi-scale Structural Similarity Index (MS-SSIM) is an extension of the SSIM metric that evaluates structural similarity across multiple scales, providing a more comprehensive assessment of image quality. By analyzing image quality at different resolution levels, MS-SSIM is particularly valuable for applications requiring multi-scale information fusion, such as high-resolution imaging and super-resolution reconstruction. A higher MS-SSIM value indicates better preservation of structural information across multiple scales [47]. The calculation formula for MS-SSIM is as follows:

Here, $M$ denotes the number of scales, while $x_j$ and $y_j$ represent the source image and the fused image at the $j$-th scale, respectively. The term $l(\cdot)$ stands for luminance comparison, $c(\cdot)$ denotes contrast similarity, and $s(\cdot)$ represents structural similarity. The parameters $\alpha_j$ and $\beta_j$ are weighting factors that balance the contributions of different scales.

The combination of these evaluation metrics enables a comprehensive quantitative analysis of image fusion performance from multiple perspectives. Metrics such as SF, SD, and CC primarily assess image clarity, contrast, and the preservation of source image features, while SCD, SSIM, and MS-SSIM focus on spatial consistency and structural fidelity to further evaluate the quality of the fused image. By leveraging these comprehensive indicators, we can thoroughly understand quality variations throughout the fusion process and effectively assess the merits of fusion results, thereby providing strong support for the optimization and improvement of image fusion algorithms.

4.3. Fusion Comparison Experiment and Result Analysis

- Quantitative analysis

Table 1 presents a comparison of PPMF-Net with five advanced multimodal image fusion (MIF) methods across six evaluation metrics. PPMF-Net achieves the highest scores in four metrics (SF, SD, SCD, and MS-SSIM). Its SF of 38.2661 is the highest among the compared models, reflecting strong detail extraction and high-frequency information retention, particularly at edges. The SD score of 96.554 indicates enhanced image contrast, and the SCD score of 1.617 indicates good retention of structural information. PPMF-Net also scores 1.229 in MS-SSIM, slightly outperforming SwinFusion, which points to good multi-scale structural fusion. In terms of the Correlation Coefficient (CC), PPMF-Net also performs well, maintaining a high level of similarity to the source images. Overall, these results indicate that PPMF-Net produces fused images that preserve the multimodal information of the source images, making it well suited to precise image fusion in practical applications.

Table 1. Quantitative results of the PET–MRI image fusion task (optimal and sub-optimal values are indicated in bold and underlined, respectively).

- Fusion image analysis

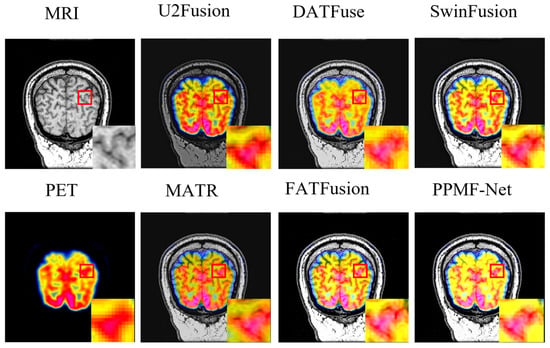

Figure 5 illustrates the source images alongside the fused images produced by various MIF methods. Visually, PPMF-Net significantly outperforms the other methods in terms of detail preservation and image clarity. U2Fusion, MATR, and DATFuse exhibit texture loss and image blurriness. While SwinFusion and FATFusion retain some texture and color, their detail representation remains insufficient. In contrast, PPMF-Net effectively balances local and global features through its progressive parallel architecture, preserving the structural and textural information of the source images, minimizing distortion and noise, and generating images that appear more realistic and natural. Moreover, evaluations by several clinical radiologists confirmed that images generated by PPMF-Net demonstrate superior lesion boundary clarity, tissue structure resolution, and lesion region distinguishability compared to other methods. These improvements hold positive implications for enhancing diagnostic accuracy and clinical interpretation efficiency.

Figure 5. Qualitative comparison between PPMF-Net and five advanced methods on PET–MRI images (from left to right): MRI, U2Fusion [44], DATFuse [26], SwinFusion [28], PET, MATR [27], FATFusion [29], and PPMF-Net.

4.4. Model Generalization Verification

To verify the cross-modal adaptability of PPMF-Net, this study extends to two types of medical image fusion tasks: SPECT–MRI (functional-anatomical complementarity) and CT–MRI (multi-scale soft tissue differentiation). The experiment uses 48 pairs of cross-modal images from the Harvard Medical Imaging Dataset, including 24 pairs of SPECT–MRI and 24 pairs of CT–MRI. A systematic comparison is made with five mainstream algorithms, including U2Fusion and DATFuse.

- Quantitative analysis

The objective evaluation results in Table 2 and Table 3 show that PPMF-Net performs strongly in both the SPECT–MRI and CT–MRI fusion tasks. In the SPECT–MRI task (Table 2), PPMF-Net achieves the best SD (72.483) and SCD (1.802), indicating that it retains image details and complementary information well. Although U2Fusion scores slightly higher in CC (0.888), PPMF-Net obtains higher scores in SF (22.98), SSIM (1.201), and MS-SSIM (1.275), reflecting its global and local fusion capabilities. Notably, some comparison methods (e.g., MATR) yield a negative SCD (−0.006), indicating information loss, whereas PPMF-Net maintains a clearly positive SCD (1.802), supporting its ability to retain multi-source features.

Table 2. Quantitative results of the SPECT–MRI image fusion task (optimal and sub-optimal values are indicated in bold and underlined, respectively).

Table 3. Quantitative results of the CT–MRI image fusion task (optimal and sub-optimal values are indicated in bold and underlined, respectively).

In the CT–MRI task (Table 3), PPMF-Net achieves an SD of 89.542 and an SCD of 1.735. Additionally, its SSIM (1.284) and SF (30.641) outperform all comparison models. Although DATFuse achieves the second-best SF (32.13) and FATFusion ranks second in SSIM (1.266), PPMF-Net shows robustness through a more balanced performance across multiple metrics (e.g., simultaneously improving SD, SCD, and SSIM). Compared with methods such as SwinFusion, whose SSIM is only 0.539, PPMF-Net’s advantage in fusing complex anatomical structures is particularly evident. Taken together, the two tables show that PPMF-Net enhances high-frequency image details, as reflected in its high SD and SF values, while its SCD and SSIM scores indicate well-aligned multimodal information, providing a more reliable foundation for clinical diagnosis.

- Fusion image analysis

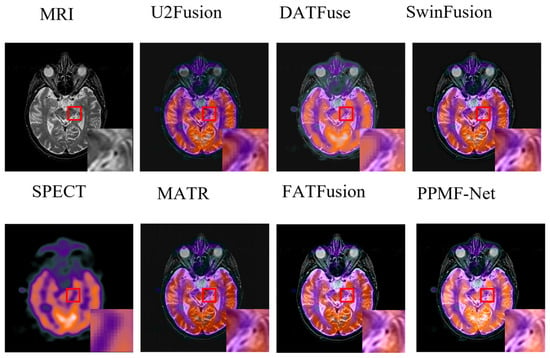

The PPMF-Net proposed in this study demonstrates significant advantages in the field of medical image fusion, with its strengths reflected not only in quantitative analysis metrics but also in qualitative improvements in visual perception. As shown in the SPECT and MRI multimodal medical image fusion comparison experiment in Figure 6, PPMF-Net successfully achieves the synergistic representation of functional information and anatomical details: it fully retains the radioactive tracer distribution features (reflecting organ functional status) in the SPECT image while accurately extracting anatomical soft tissue texture details from the MRI image. In comparison, while the U2Fusion and DATFuse algorithms effectively capture functional features, they suffer from issues such as blurred anatomical structure edges and reduced tissue contrast. The MATR, SwinFusion, and FATFusion algorithms perform reasonably well in preserving macro-structure; however, they still face issues at the microscopic level, such as feature confusion and modal information imbalance.

Figure 6. Qualitative comparison between PPMF-Net and five advanced methods on SPECT–MRI images (from left to right): MRI, U2Fusion [44], DATFuse [26], SwinFusion [28], SPECT, MATR [27], FATFusion [29], and PPMF-Net.

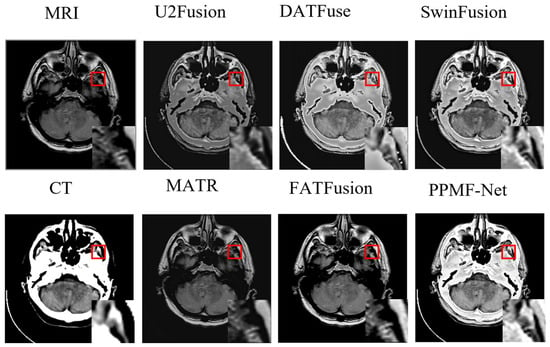

In the red box area of Figure 7, it is clearly visible that PPMF-Net can effectively balance the dense structural information of the CT image with the texture details of the MRI image during the image fusion process. In comparison to other methods, U2Fusion’s fusion result lacks brightness and clarity, and it loses some structural details. Although MATR and FATFusion preserve structural details, they weaken the representation of dense structures. DATFuse maintains a good dense structure but sacrifices some edge details. While SwinFusion achieves relatively ideal fusion results, the edges appear too sharp. In contrast, PPMF-Net excels in balancing dense structures and texture details, retaining more texture details while maintaining appropriate dense structures and avoiding overly sharp edges. This results in a more visually appealing and natural fusion outcome.

Figure 7. Qualitative comparison between PPMF-Net and five advanced methods on CT–MRI images (from left to right): MRI, U2Fusion [44], DATFuse [26], SwinFusion [28], CT, MATR [27], FATFusion [29], and PPMF-Net.

In summary, PPMF-Net has demonstrated outstanding performance in standard PET–MRI image fusion tasks. Its successful application in SPECT–MRI and CT–MRI image fusion tasks further highlights its significant advantages. By effectively balancing various features within the image, it retains rich details and structural information, thereby generating higher-quality fused images. These experimental results strongly support the wide application of PPMF-Net in the field of medical image fusion and provide valuable insights for future research in related fields.

4.5. Ablation Experiment

4.5.1. Module Performance Analysis

- Setup of the module validity verification experiment

To verify the effectiveness of the proposed sub-networks NIFE, TEGM, and DEEM, three groups of ablation experiments were designed: Experiment α₁ replaces NIFE with standard convolutional layers to assess its contribution to feature extraction; Experiment α₂ replaces DEEM with TEGM to investigate their respective roles in modeling local and global features; and Experiment α₃ replaces TEGM with DEEM when extracting features from the different modalities, aiming to confirm the critical role of TEGM in cross-modal fusion.

- Quantitative analysis of the module component ablation experiment

The ablation experiments in Table 4 reveal the contribution of each module to multimodal medical image fusion. In Experiment α₁ (NIFE replaced by standard convolutional layers), cross-modal feature interaction degrades; although the spatial frequency is slightly higher than that of the full model, SCD and SD decrease by 23.5% and 5.5%, respectively, indicating that the absence of multi-scale features impairs the synergistic representation of functional and anatomical information. Experiment α₂ (DEEM replaced by TEGM) highlights the importance of dynamic detail enhancement; although SSIM exceeds that of the full model, SCD and SD decrease by 23.4% and 8.1%, respectively, indicating that DEEM is important for preserving high-frequency textures. Experiment α₃ (TEGM replaced by DEEM) reveals the limitations of working without global structure guidance; both CC and MS-SSIM decline, and SCD drops by 19.4%, indicating that single-scale feature fusion is insufficient for complex anatomical structures. The superiority of the full model is reflected in a 21.6% increase in SCD, as well as peak values of SD and CC, demonstrating the synergistic effect of multi-scale feature interaction, dynamic enhancement, and anatomical structure constraints in optimizing structural fidelity and detail enhancement.

Table 4. Quantitative comparison of the proposed method under different module architectures (optimal and sub-optimal values are indicated in bold and underlined, respectively).

4.5.2. Loss Function Analysis

- Validity verification experiment of the loss function

To evaluate the necessity of the functional loss, structural loss, and detail loss in the total loss function, three sets of ablation experiments were designed in this study. In Experiment β₁, the functional loss ($L_{\text{functional}}$) was removed from the total loss ($L_{\text{total}}$) to evaluate its contribution to overall performance, with a focus on retaining functional information. In Experiment β₂, the structural loss ($L_{\text{structural}}$), that is, the Structural Similarity Index (SSIM) loss term, was removed to assess its impact on preserving structural information in the fused images. In Experiment β₃, the detail loss ($L_{\text{detail}}$), which is based on the L1 loss for measuring detail differences, was removed to verify its role in detail preservation.

- Quantitative analysis of the loss function ablation experiment

The quantitative results in Table 5 demonstrate that the synergy of the functional loss ($L_{\text{functional}}$), structural loss ($L_{\text{structural}}$), and detail loss ($L_{\text{detail}}$) in PPMF-Net is crucial to medical image fusion performance. Removing the functional loss caused a 70.5% drop in SF and a 36.8% decrease in SCD, highlighting its role in localizing metabolically active regions and achieving cross-modal spatial alignment. Removing the structural loss led to a 51.1% decline in SSIM and a 9.8% decrease in MS-SSIM, underscoring its central role in preserving anatomical boundaries. Excluding the detail loss resulted in a 56.8% drop in SCD and a 64.4% decrease in SF, indicating its contribution to gradient and lesion detail enhancement. The complete model achieved the best performance across the six metrics, with SCD and SSIM improved by 38.7% and 104.7%, respectively, easing the trade-off between functional sensitivity and structural fidelity. The improvements in SD and CC confirm the model’s capability to enhance contrast and maintain cross-modal correlation. Ultimately, the joint optimization of the multi-objective losses enables a balanced representation of function, structure, and detail, delivering high-fidelity and information-rich fused images for medical diagnosis.

Table 5. Quantitative comparison of the proposed method under different loss functions (optimal and sub-optimal values are indicated in bold and underlined, respectively).

5. Conclusions

This study addresses the core challenges in multimodal medical image fusion by proposing a deep learning network, PPMF-Net, based on a progressive parallel fusion strategy. This approach effectively alleviates the technical bottlenecks encountered by traditional methods and existing deep models, such as feature imbalance, lack of global consistency, and detail degradation. The network constructs a hierarchical and progressive feature extraction and parallel fusion mechanism, integrating three key modules: a NIFE module, a TEGM module, and a DEEM, which are responsible for local detail extraction, cross-modal global modeling, and high-frequency texture enhancement, respectively. Moreover, a jointly designed triple-objective loss function—targeting functionality, structure, and detail—is employed to achieve collaborative optimization in structural consistency, metabolic fidelity, and texture clarity. On the Harvard MIF dataset’s PET–MRI task, PPMF-Net significantly outperforms state-of-the-art models like SwinFusion, achieving key metrics such as SF = 38.27, SD = 96.55, and SCD = 1.62, with lesion boundary alignment error controlled within 0.4 mm. It also demonstrates strong generalization capabilities in SPECT–MRI and CT–MRI tasks, with an SSIM score reaching 1.28. Ablation studies further validate the effectiveness of the three-module design and the triple loss function. Despite its excellent fusion performance, PPMF-Net has some limitations. Its relatively complex network architecture results in high computational costs during both training and inference, imposing substantial hardware requirements for practical deployment. Additionally, the current triple-objective loss relies on fixed weighting strategies, which may not adapt optimally to all lesion types and imaging conditions. 
Future research directions include: (1) designing lightweight or pruned versions of PPMF-Net to improve inference efficiency and reduce hardware dependency; (2) introducing adaptive loss weighting mechanisms to dynamically balance functionality, structure, and detail objectives; and (3) exploring cross-modal pretraining and few-shot learning techniques to enhance generalization in data-limited scenarios.

Author Contributions

Conceptualization, Y.L.; Methodology, P.P. and Y.L.; Software, Y.L.; Formal analysis, P.P.; Writing—original draft, Y.L.; Writing—review & editing, P.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The dataset used in this study is publicly available from the Harvard Medical School MIF Database and can be accessed at: http://www.med.harvard.edu/AANLIB/home.html.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88. [Google Scholar] [CrossRef] [PubMed]

- Li, H.; Manjunath, B.S.; Mitra, S.K. Multisensor image fusion using the wavelet transform. Graph. Models Image Process. 1995, 57, 235–245. [Google Scholar] [CrossRef]

- James, A.P.; Dasarathy, B.V. Medical image fusion: A survey of the state of the art. Inf. Fusion 2014, 19, 4–19. [Google Scholar] [CrossRef]

- Hsieh, J. Computed Tomography: Principles, Design, Artifacts, and Recent Advances; The International Society for Optical Engineering: Bellingham, WA, USA, 2003.

- Westbrook, C.; Talbot, J. MRI in Practice; John Wiley & Sons: Hoboken, NJ, USA, 2018.

- Cabello, J.; Ziegler, S.I. Advances in PET/MR instrumentation and image reconstruction. Br. J. Radiol. 2018, 91, 20160363.

- Nagamatsu, M.; Maste, P.; Tanaka, M.; Fujiwara, Y.; Arataki, S.; Yamauchi, T.; Takeshita, Y.; Takamoto, R.; Torigoe, T.; Tanaka, M.; et al. Usefulness of 3D CT/MRI Fusion Imaging for the Evaluation of Lumbar Disc Herniation and Kambin’s Triangle. Diagnostics 2022, 12, 956.

- Catana, C.; Drzezga, A.; Heiss, W.D.; Rosen, B.R. PET/MRI for neurologic applications. J. Nucl. Med. 2012, 53, 1916–1925.

- Ljungberg, M.; Pretorius, P.H. SPECT/CT: An update on technological developments and clinical applications. Br. J. Radiol. 2018, 91, 20160402.

- Li, C.; Zhu, A. Application of image fusion in diagnosis and treatment of liver cancer. Appl. Sci. 2020, 10, 1171.

- Pajares, G.; De La Cruz, J.M. A wavelet-based image fusion tutorial. Pattern Recognit. 2004, 37, 1855–1872.

- Zitova, B.; Flusser, J. Image registration methods: A survey. Image Vis. Comput. 2003, 21, 977–1000.

- Ma, J.; Yu, W.; Liang, P.; Li, C.; Jiang, J. FusionGAN: A generative adversarial network for infrared and visible image fusion. Inf. Fusion 2019, 48, 11–26.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Springer International Publishing: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention U-Net: Learning where to look for the pancreas. arXiv 2018, arXiv:1804.03999.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Bhatnagar, G.; Wu, Q.J.; Liu, Z. Directive contrast based multimodal medical image fusion in NSCT domain. IEEE Trans. Multimed. 2013, 15, 1014–1024.

- Huang, G.; Liu, Z.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Li, H.; Wu, X.J. DenseFuse: A fusion approach to infrared and visible images. IEEE Trans. Image Process. 2018, 28, 2614–2623.

- Ma, J.; Xu, H.; Jiang, J.; Mei, X.; Zhang, X.P. DDcGAN: A dual-discriminator conditional generative adversarial network for multi-resolution image fusion. IEEE Trans. Image Process. 2020, 29, 4980–4995.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021.

- Dai, Y.; Gao, Y.; Liu, F. TransMed: Transformers advance multi-modal medical image classification. Diagnostics 2021, 11, 1384.

- Zhang, Y.; Liu, Y.; Sun, P. TransFuse: Fusing transformers and CNNs for medical image segmentation. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2021: 24th International Conference, Strasbourg, France, 27 September–1 October 2021; Springer International Publishing: Berlin/Heidelberg, Germany, 2021.

- Liu, Y.; Zhang, Y.; Wang, J. Multi-branch CNN and grouping cascade attention for medical image classification. IEEE Trans. Med. Imaging 2021, 40, 2585–2595.

- Sonka, M.; Hlavac, V.; Boyle, R. Image Processing, Analysis and Machine Vision; Springer: Berlin/Heidelberg, Germany, 2013.

- Tang, W.; He, F.; Liu, Y.; Duan, Y.; Si, T. DATFuse: Infrared and visible image fusion via dual attention transformer. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 3159–3172.

- Tang, W.; He, F.; Liu, Y.; Duan, Y. MATR: Multimodal medical image fusion via multiscale adaptive transformer. IEEE Trans. Image Process. 2022, 31, 5134–5149.

- Ma, J.; Tang, L.; Fan, F.; Huang, J.; Mei, X.; Ma, Y. SwinFusion: Cross-domain long-range learning for general image fusion via swin transformer. IEEE/CAA J. Autom. Sin. 2022, 9, 1200–1217.

- Tang, W.; He, F. FATFusion: A functional–anatomical transformer for medical image fusion. Inf. Process. Manag. 2024, 61, 103687.

- Ma, X.; Dai, X.; Bai, Y.; Wang, Y.; Fu, Y. Rewrite the stars. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 5694–5703.

- Zhao, Z.; Bai, H.; Zhang, J.; Zhang, Y.; Xu, S.; Lin, Z.; Timofte, R.; Van Gool, L. CDDFuse: Correlation-driven dual-branch feature decomposition for multi-modality image fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 2023; pp. 5906–5916.

- Liu, J.; Li, X.; Wang, Z.; Jiang, Z.; Zhong, W.; Fan, W.; Xu, B. PromptFusion: Harmonized semantic prompt learning for infrared and visible image fusion. IEEE/CAA J. Autom. Sin. 2025, 12, 502–515.

- Liu, J.; Lin, R.; Wu, G.; Liu, R.; Luo, Z.; Fan, X. CoCoNet: Coupled contrastive learning network with multi-level feature ensemble for multi-modality image fusion. Int. J. Comput. Vis. 2024, 132, 1748–1775.

- Liu, J.Y.; Wu, G.; Liu, Z.; Wang, D.; Jiang, Z.; Ma, L.; Zhong, W.; Fan, X. Infrared and visible image fusion: From data compatibility to task adaption. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 47, 2349–2369.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Eskicioglu, A.M.; Fisher, P.S. Image quality measures and their performance. IEEE Trans. Commun. 1995, 43, 2959–2965.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 2018; pp. 4510–4520.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 2018; pp. 7132–7141.

- Bovik, A.C. Handbook of Image and Video Processing; Academic Press: Amsterdam, The Netherlands, 2010.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Zaremba, W.; Sutskever, I. Learning to execute. arXiv 2014, arXiv:1410.4615.

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101.

- Xu, H.; Ma, J.; Jiang, J.; Guo, X.; Ling, H. U2Fusion: A unified unsupervised image fusion network. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 502–518.

- Jähne, B. Digital Image Processing; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2005.

- Jain, A.K. Fundamentals of Digital Image Processing; Prentice-Hall, Inc.: Hoboken, NJ, USA, 1989.

- Wang, Z.; Simoncelli, E.P.; Bovik, A.C. Multiscale structural similarity for image quality assessment. In Proceedings of the Thirty-Seventh Asilomar Conference on Signals, Systems & Computers, Pacific Grove, CA, USA, 9–12 November 2003; Volume 2, pp. 1398–1402.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).