Cognitive Insights into Museum Engagement: A Mobile Eye-Tracking Study on Visual Attention Distribution and Learning Experience

Abstract

1. Introduction

2. Literature Review

2.1. Museums as Contexts for Visual and Cognitive Engagement

2.2. Visual Attention and Mobile Eye Tracking Technology

2.3. Use of Mobile Eye Tracking in Visitor Studies

3. Method

3.1. Research Setting

3.2. Survey for Information About Visitors

3.3. Eye-Tracking Experiment

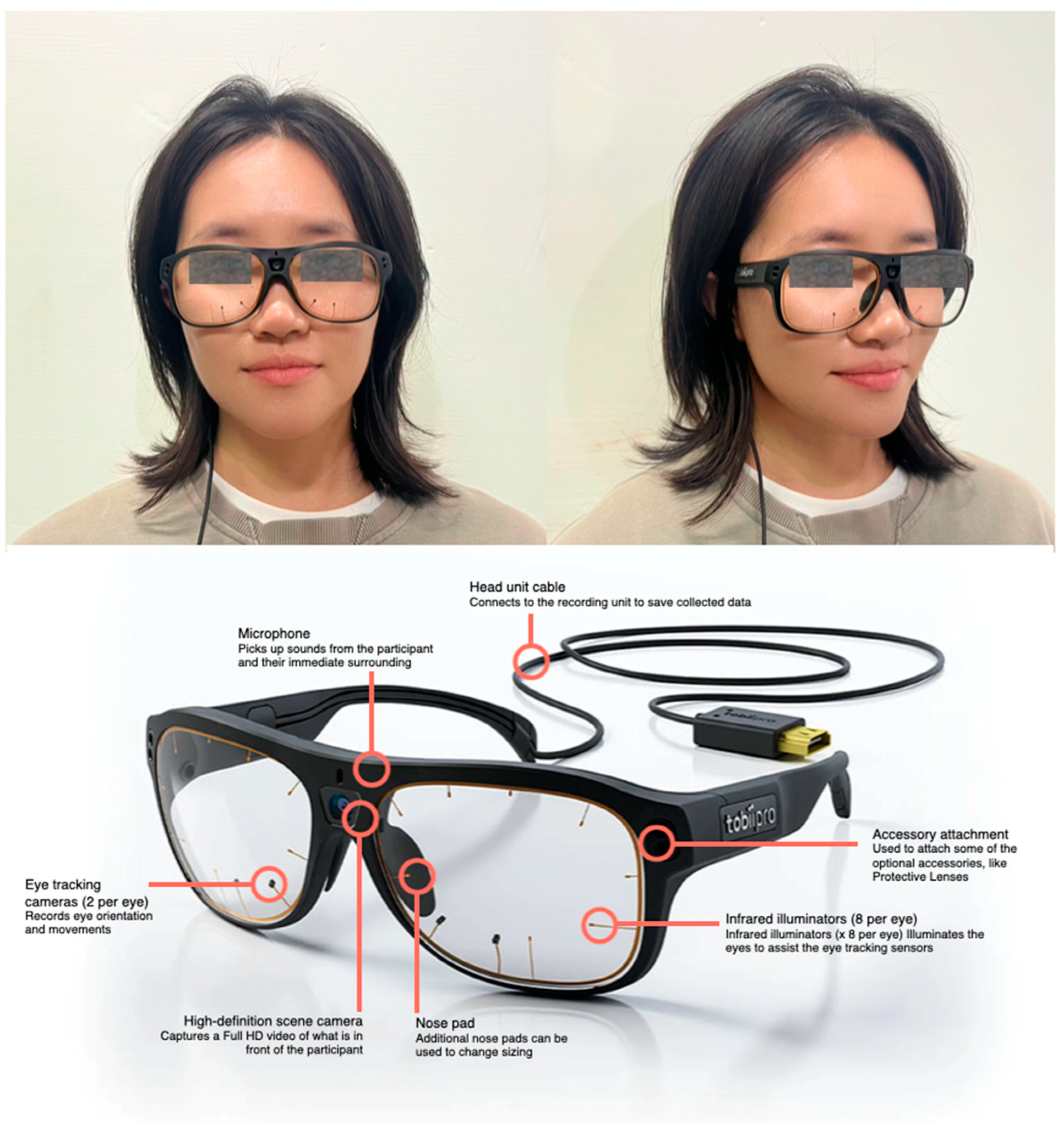

3.3.1. Apparatus

3.3.2. Participants

3.3.3. Procedure

3.4. Post-Experiment Interview

3.5. Data Analysis

- Time to First Fixation (TFF);

- Total Fixation Duration (TFD);

- Average Fixation Duration (AFD);

- Ratio On-Target: All Fixation Time (ROAFT).
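The four metrics listed above can be illustrated with a minimal sketch. It assumes the commonly used definitions (TFF as the onset time of the first fixation on an area of interest, TFD as the summed fixation time on it, AFD as TFD divided by the number of fixations, and ROAFT as TFD divided by the total fixation time over the whole recording); the study's exact operationalization may differ. The `Fixation` record, the `aoi_metrics` function, the AOI labels, and the use of seconds are hypothetical choices made for illustration, not the authors' pipeline.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Fixation:
    """One detected fixation (hypothetical record layout)."""
    start: float          # onset in seconds since the exhibit came into view (assumed unit)
    duration: float       # fixation length in seconds
    aoi: Optional[str]    # label of the area of interest hit, or None if no AOI was hit


def aoi_metrics(fixations: List[Fixation], aoi: str) -> Dict[str, Optional[float]]:
    """Compute TFF, TFD, AFD, and ROAFT for one AOI.

    Assumption: the ROAFT denominator counts every fixation in the
    recording, including fixations that landed outside all AOIs.
    """
    hits = [f for f in fixations if f.aoi == aoi]
    all_time = float(sum(f.duration for f in fixations))
    tfd = float(sum(f.duration for f in hits))
    return {
        # TFF and AFD are undefined when the AOI was never fixated.
        "TFF": min(f.start for f in hits) if hits else None,
        "TFD": tfd,
        "AFD": tfd / len(hits) if hits else None,
        # ROAFT is undefined when the recording holds no fixation time.
        "ROAFT": tfd / all_time if all_time > 0 else None,
    }


# Made-up sample data, chosen only to keep the arithmetic easy to follow.
sample = [
    Fixation(start=0.5, duration=0.25, aoi="text_panel"),
    Fixation(start=1.0, duration=0.5, aoi="artifact"),
    Fixation(start=2.0, duration=0.25, aoi=None),
    Fixation(start=3.0, duration=1.0, aoi="artifact"),
]
print(aoi_metrics(sample, "artifact"))
```

Under this reading, ROAFT is a share of viewing time, so values for non-overlapping AOIs within the same recording cannot sum to more than 1.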

4. Results

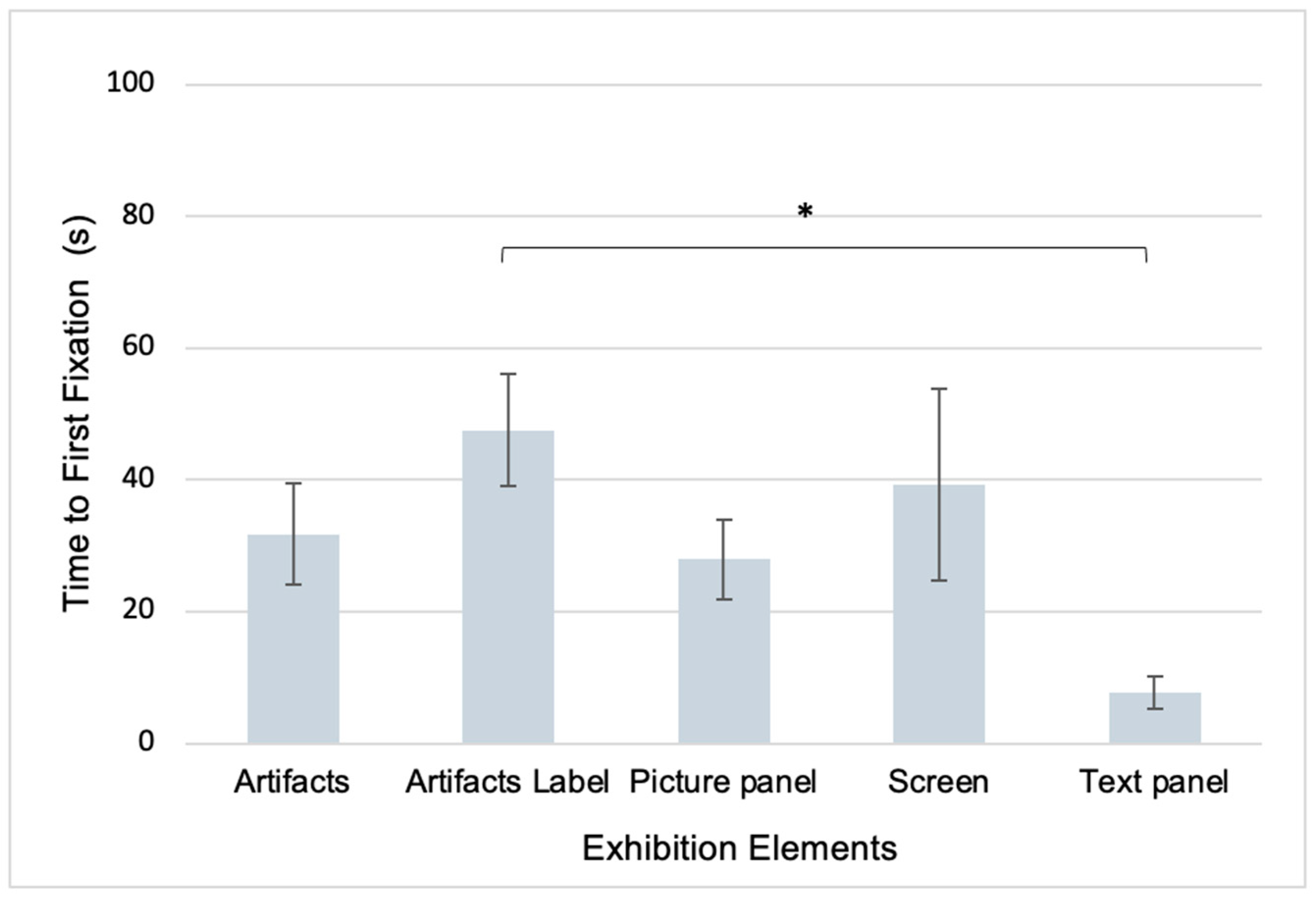

4.1. Descriptive Statistics

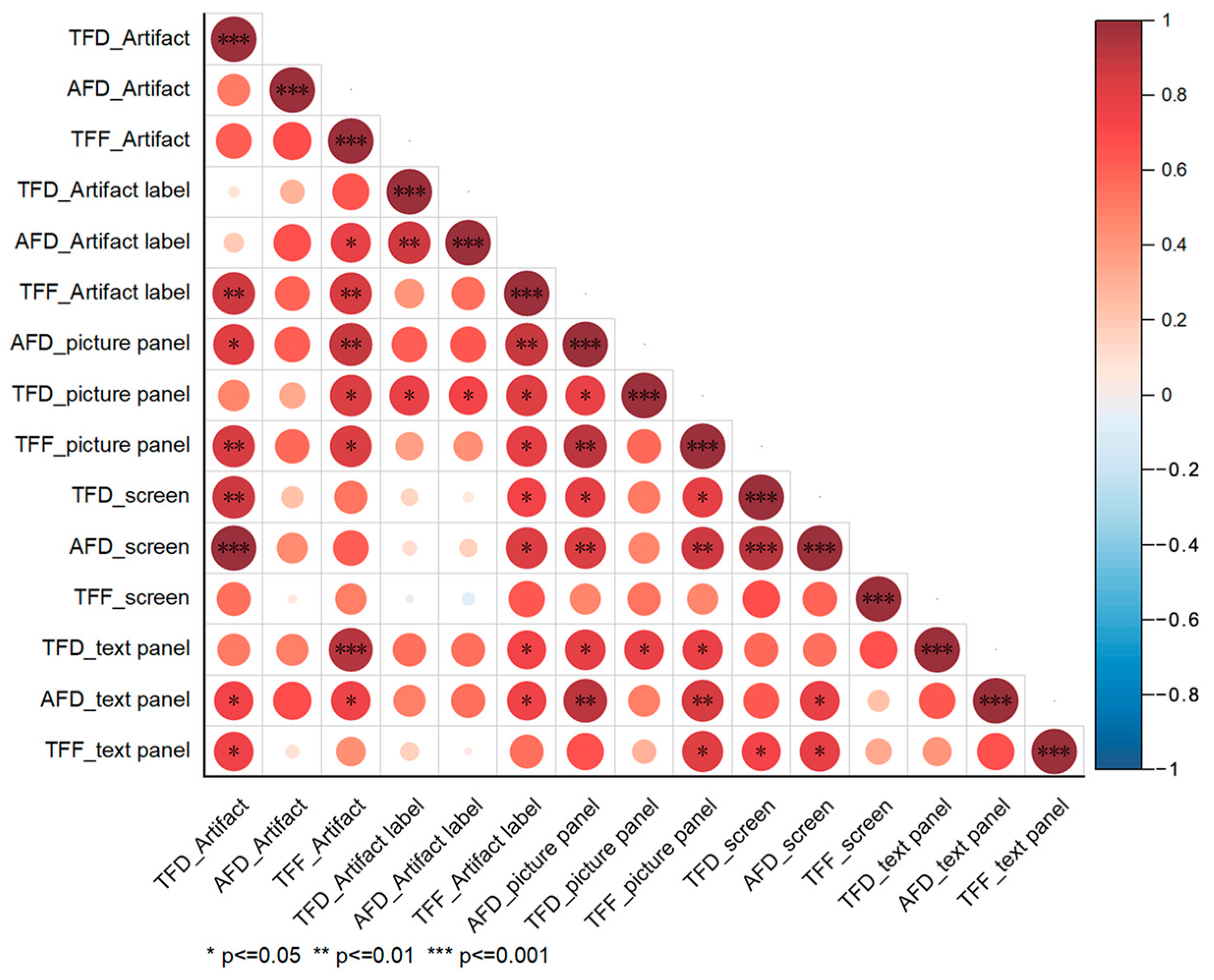

4.2. Main Analysis

5. Findings and Discussion

6. Conclusions

6.1. Theoretical and Practical Implications

6.2. Limitations and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MET | Mobile eye tracking |
| ROAFT | Ratio On-Target: All Fixation Time |
| TFD | Total fixation duration |
| AFD | Average fixation duration |
| TFF | Time to first fixation |

Appendix A

| No | Direction | Question |
|---|---|---|
| 1 | Demographic Information | 1. Age |
| | | 2. Gender |
| | | 3. Education and Major |
| 2 | Visiting an Archaeological Site Museum | 1. How often do you generally visit an Archaeological Site Museum in a normal year? |
| | | 2. How interested are you in artifacts? |
| | | 3. What is your purpose when visiting an Archaeological Site Museum? |
| | | (Images of Liang Zhu artifacts are presented) |
| 3 | Interest in Artifacts | 1. Please write down what you are curious about regarding the presented artifact. |
| | | 2. Please write down the reason for your curiosity. |

References

1. Su, C.; Huang, M.; Zhang, J.; Yang, R. The Application of Eye Tracking on User Experience in Virtual Reality. In Proceedings of the 2023 IEEE 2nd International Conference on Cognitive Aspects of Virtual Reality (CVR), Veszprém, Hungary, 26–27 October 2023; IEEE: Veszprém, Hungary, 2023; pp. 000057–000062.

2. Scott, N.; Zhang, R.; Le, D.; Moyle, B. A review of eye-tracking research in tourism. Curr. Issues Tour. 2019, 22, 1244–1261.

3. Buquet, C.; Charlier, J.R.; Paris, V. Museum application of an eye tracker. Med. Biol. Eng. Comput. 1988, 26, 277–281.

4. Horsley, M.; Eliot, M.; Knight, B.A.; Reilly, R. (Eds.) Current Trends in Eye Tracking Research; Springer International Publishing: Cham, Switzerland, 2014; ISBN 978-3-319-02867-5.

5. Kastner, S.; Pinsk, M.A. Visual attention as a multilevel selection process. Cogn. Affect. Behav. Neurosci. 2004, 4, 483–500.

6. Duchowski, A.T. Eye Tracking Methodology: Theory and Practice, 2nd ed.; Springer: London, UK, 2007; ISBN 978-1-84628-608-7.

7. Bojko, A. Eye Tracking the User Experience: A Practical Guide to Research; Rosenfeld Media: Brooklyn, NY, USA, 2013; ISBN 978-1-933820-10-1.

8. Yalowitz, S.S.; Bronnenkant, K. Timing and Tracking: Unlocking Visitor Behavior. Visit. Stud. 2009, 12, 47–64.

9. Mayr, E.; Knipfer, K.; Wessel, D. In-Sights into Mobile Learning: An Exploration of Mobile Eye Tracking Methodology for Learning in Museums. In Researching Mobile Learning: Frameworks, Tools and Research Designs; Peter Lang Verlag: Oxford, UK, 2009; pp. 189–204.

10. Mokatren, M.; Kuflik, T. Exploring the potential contribution of mobile eye-tracking technology in enhancing the museum visit experience. In Proceedings of the 1st Workshop on Advanced Visual Interfaces for Cultural Heritage, AVI*CH 2016, Bari, Italy, 7–10 June 2016.

11. Falk, J.H.; Dierking, L.D. Learning from Museums: Visitor Experiences and the Making of Meaning; American Association for State and Local History book series; AltaMira Press: Walnut Creek, CA, USA, 2000; ISBN 978-0-7425-0294-9.

12. Falk, J.H.; Dierking, L.D. The Museum Experience Revisited; Routledge: London, UK; Taylor & Francis Group: New York, NY, USA, 2016; ISBN 978-1-61132-044-2.

13. Hooper-Greenhill, E. Museums and the Interpretation of Visual Culture, 1st ed.; Routledge: London, UK, 2020; ISBN 978-1-00-312445-0.

14. Bitgood, S. Environmental psychology in museums, zoos, and other exhibition centers. In Handbook of Environmental Psychology; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2002; pp. 461–480.

15. Hein, G.E. Learning in the Museum; Routledge: London, UK, 2002.

16. Black, G. (Ed.) The Engaging Museum: Developing Museums for Visitor Involvement; Taylor and Francis: Hoboken, NJ, USA, 2012; ISBN 978-0-415-34556-9.

17. Pekarik, A.J.; Doering, Z.D.; Karns, D.A. Exploring Satisfying Experiences in Museums. Curator Mus. J. 1999, 42, 152–173.

18. Falk, J.H. Identity and the Museum Visitor Experience; Left Coast Press: Walnut Creek, CA, USA, 2009; ISBN 978-1-59874-162-9.

19. Neuhofer, B.; Buhalis, D.; Ladkin, A. A Typology of Technology-Enhanced Tourism Experiences. Int. J. Tour. Res. 2014, 16, 340–350.

20. Tzortzi, K. Movement in museums: Mediating between museum intent and visitor experience. Mus. Manag. Curatorship 2014, 29, 327–348.

21. Simon, N. The Participatory Museum; Museum 2.0: Santa Cruz, CA, USA, 2010.

22. Posner, M.I. Orienting of Attention. Q. J. Exp. Psychol. 1980, 32, 3–25.

23. Itti, L.; Koch, C. Computational modelling of visual attention. Nat. Rev. Neurosci. 2001, 2, 194–203.

24. Theeuwes, J. Top–down and bottom–up control of visual selection. Acta Psychol. 2010, 135, 77–99.

25. Henderson, J. Human gaze control during real-world scene perception. Trends Cogn. Sci. 2003, 7, 498–504.

26. Rayner, K. Eye Movements in Reading and Information Processing: 20 Years of Research. Psychol. Bull. 1998, 124, 372–422.

27. Vernet, M.; Kapoula, Z. Binocular motor coordination during saccades and fixations while reading: A magnitude and time analysis. J. Vis. 2009, 9, 2.

28. Duchowski, A.T. A breadth-first survey of eye-tracking applications. Behav. Res. Methods Instrum. Comput. 2002, 34, 455–470.

29. Klaib, A.F.; Alsrehin, N.O.; Melhem, W.Y.; Bashtawi, H.O.; Magableh, A.A. Eye tracking algorithms, techniques, tools, and applications with an emphasis on machine learning and Internet of Things technologies. Expert Syst. Appl. 2021, 166, 114037.

30. Nyström, M.; Holmqvist, K. An adaptive algorithm for fixation, saccade, and glissade detection in eyetracking data. Behav. Res. Methods 2010, 42, 188–204.

31. Chen, H.-C.; Wang, C.-C.; Hung, J.C.; Hsueh, C.-Y. Employing Eye Tracking to Study Visual Attention to Live Streaming: A Case Study of Facebook Live. Sustainability 2022, 14, 7494.

32. Carter, B.T.; Luke, S.G. Best practices in eye tracking research. Int. J. Psychophysiol. 2020, 155, 49–62.

33. Lai, M.-L.; Tsai, M.-J.; Yang, F.-Y.; Hsu, C.-Y.; Liu, T.-C.; Lee, S.W.-Y.; Lee, M.-H.; Chiou, G.-L.; Liang, J.-C.; Tsai, C.-C. A review of using eye-tracking technology in exploring learning from 2000 to 2012. Educ. Res. Rev. 2013, 10, 90–115.

34. Mokatren, M.; Kuflik, T.; Shimshoni, I. Exploring the potential of a mobile eye tracker as an intuitive indoor pointing device: A case study in cultural heritage. Future Gener. Comput. Syst. 2018, 81, 528–541.

35. Rainoldi, M.; Yu, C.-E.; Neuhofer, B. The Museum Learning Experience Through the Visitors’ Eyes: An Eye Tracking Exploration of the Physical Context. In Eye Tracking in Tourism; Rainoldi, M., Jooss, M., Eds.; Tourism on the Verge; Springer International Publishing: Cham, Switzerland, 2020; pp. 183–199. ISBN 978-3-030-49708-8.

36. Sheng, C.-W.; Chen, M.-C. A study of experience expectations of museum visitors. Tour. Manag. 2012, 33, 53–60.

37. Krogh-Jespersen, S.; Quinn, K.A.; Krenzer, W.L.D.; Nguyen, C.; Greenslit, J.; Price, C.A. Exploring the awe-some: Mobile eye-tracking insights into awe in a science museum. PLoS ONE 2020, 15, e0239204.

38. Pelowski, M.; Leder, H.; Mitschke, V.; Specker, E.; Gerger, G.; Tinio, P.P.L.; Vaporova, E.; Bieg, T.; Husslein-Arco, A. Capturing Aesthetic Experiences With Installation Art: An Empirical Assessment of Emotion, Evaluations, and Mobile Eye Tracking in Olafur Eliasson’s “Baroque, Baroque!”. Front. Psychol. 2018, 9, 1255.

39. Eghbal-Azar, K.; Widlok, T. Potentials and Limitations of Mobile Eye Tracking in Visitor Studies: Evidence From Field Research at Two Museum Exhibitions in Germany. Soc. Sci. Comput. Rev. 2013, 31, 103–118.

40. Rainoldi, M.; Neuhofer, B.; Jooss, M. Mobile Eyetracking of Museum Learning Experiences. In Information and Communication Technologies in Tourism 2018; Stangl, B., Pesonen, J., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 473–485. ISBN 978-3-319-72922-0.

41. Teo, T.W.; Loh, Z.H.J.; Kee, L.E.; Soh, G.; Wambeck, E. Mediational Affordances at a Science Centre Gallery: An Exploratory and Small Study Using Eye Tracking and Interviews. Res. Sci. Educ. 2024, 54, 775–795.

42. Land, M.; Tatler, B. Looking and Acting: Vision and Eye Movements in Natural Behaviour; Oxford University Press: Oxford, UK, 2009; ISBN 978-0-19-857094-3.

43. Homavazir, T.G.; Parupudi, V.S.R.; Pilla, S.L.S.R.; Cosman, P. Slippage-robust linear features for eye tracking. Expert Syst. Appl. 2025, 264, 125799.

44. Holmqvist, K.; Nyström, M.; Andersson, R.; Dewhurst, R.; Jarodzka, H. Eye Tracking: A Comprehensive Guide to Methods and Measures; Oxford University Press: Oxford, UK, 2011.

45. Sharafi, Z.; Shaffer, T.; Sharif, B.; Gueheneuc, Y.-G. Eye-Tracking Metrics in Software Engineering. In Proceedings of the 2015 Asia-Pacific Software Engineering Conference (APSEC), New Delhi, India, 1–4 December 2015; IEEE: New Delhi, India, 2015; pp. 96–103.

46. Schwan, S.; Gussmann, M.; Gerjets, P.; Drecoll, A.; Feiber, A. Distribution of attention in a gallery segment on the National Socialists’ Führer cult: Diving deeper into visitors’ cognitive exhibition experiences using mobile eye tracking. Mus. Manag. Curatorship 2020, 35, 71–88.

47. Venkatraman, V.; Dimoka, A.; Vo, K.; Pavlou, P.A. Relative Effectiveness of Print and Digital Advertising: A Memory Perspective. J. Mark. Res. 2021, 58, 827–844.

48. Bitgood, S. The Role of Attention in Designing Effective Interpretive Labels. J. Interpret. Res. 2000, 5, 31–45.

49. Jung, Y.J.; Zimmerman, H.T.; Pérez-Edgar, K. A Methodological Case Study with Mobile Eye-Tracking of Child Interaction in a Science Museum. TechTrends 2018, 62, 509–517.

50. Fantoni, S.F.; Jaebker, K.; Bauer, D.; Stofer, K. Capturing Visitors’ Gazes: Three Eye Tracking Studies in Museums. In Proceedings of the Annual Conference of Museums and the Web, Portland, OR, USA, 17–20 April 2013.

51. Wooding, D.S. Fixation Maps: Quantifying Eye-movement Traces. In Proceedings of the Eye Tracking Research & Application Symposium, ETRA 2002, New Orleans, LA, USA, 25–27 March 2002.

| Article (Author/Title/Year) | Research Questions | Methods | Conclusions and Key Contribution |
|---|---|---|---|
| Mayr, Knipfer & Wessel: In-Sights into Mobile Learning: An Exploration of Mobile Eye Tracking Methodology for Learning in Museums (2009) [9] | What are the methodological challenges and opportunities of applying mobile eye tracking (MET) to investigate informal learning in museum-like settings? | Device: ASL MobileEye head-mounted eye tracker (one scene camera + one eye camera; 30 Hz sampling). Participants: n = 3 adult volunteers. Location: Nanotechnology mini-exhibition at a research institute. Procedure: Calibration → 17–57 min naturalistic exploration with synchronous video + gaze overlay → post-visit interview; video coded in Video graph. | MET captured natural in situ gaze paths and fixation durations. Triangulating gaze data with interviews enriched understanding of exploratory learning behaviors. Methodological Innovation: First systematic deployment of head-mounted eye tracking in a museum-like exhibition, demonstrating the feasibility of capturing naturalistic gaze paths in situ. |
| Eghbal-Azar & Widlok: Potentials and Limitations of Mobile Eye Tracking in Visitor Studies: Evidence From Field Research at Two Museum Exhibitions in Germany (2013) [39] | How feasible is MET for collecting valid gaze data under real-world museum conditions? Which contextual factors impede data quality and interpretation? | Devices: ASL MobileEye and Locarna PT Mini (25 Hz sampling). Participants: n = 16 (8 experts + 8 novices). Locations: Two thematic exhibitions in Germany. Procedure: On-site calibration; free-flow recordings (12–76 min per visitor); manual post-processing to generate gaze-overlaid video. | MET produced high-resolution heatmaps and scan-path data in authentic settings. Core gaze patterns were consistent across venues. Field Validation: Conducted dual-site deployments (cultural and science exhibitions), establishing MET’s robustness across heterogeneous museum contexts. |
| Mokatren & Kuflik: Exploring the potential contribution of mobile eye-tracking technology in enhancing the museum visit experience (2016) [10] | How can MET inform gaze-based mobile guide design? How do visual strategies differ across physical objects, projections, and multimedia installations? | Device: Pupil Dev mobile eye-tracker. Participants: n = 5 (grid test); n = 1 (prototype evaluation). Location: Hecht Museum, University of Haifa. Procedure: Accuracy/precision grid test; single volunteer exhibit exploration; prototype evaluation. | This work-in-progress demonstrated the feasibility of employing mobile eye tracking to enrich the museum visit experience. Applied Prototype Development: Demonstrated how MET can drive “gaze-triggered” mobile guides by evaluating Pupil Labs hardware performance and latency in real exhibit conditions. |
| Rainoldi, Neuhofer & Jooss: Mobile Eyetracking of Museum Learning Experiences (2018) [40] | How is the museum learning experience influenced by the physical context surrounding the visitor? | Device: Tobii Pro Glasses 2 (4 eye cameras + scene camera; 100 Hz). Participants: n = 31 recruited; n = 24 valid after exclusions. Location: Special exhibition, Salzburg Museum, Austria. Procedure: Pre-visit survey; free-flow MET; post-visit survey; gaze data mapped to floor plan → heatmaps. | Gaze hotspots aligned with key exhibit features, supporting contextual learning theory. Introduced room-by-room heatmap aggregation mapped onto floor plans, providing an emic perspective on how spatial arrangement shapes visitor attention. |
| Krogh-Jespersen et al.: Exploring the awe-some: Mobile eye-tracking insights into awe in a science museum (2020) [37] | Can “awe” be quantified via MET indicators? Which exhibits elicit awe-related gaze patterns? | Device: Tobii Pro Glasses 2 (4 eye cameras + scene camera; 100 Hz). Participants: n = 31 guests (15 Rotunda, 16 submarine). Location: Museum of Science and Industry, Chicago, USA. Procedure: Between-subjects design; calibration; free-flow exploration; post-visit situational awe scale; frame-by-frame AOI coding. | High awe ratings were associated with increased fixation durations and AOI-switching. Immersive exhibits most effectively elicited awe. Emotion-Vision Mapping: Pioneered integration of real-time situational awe self-reports with concurrent MET, enabling dissection of gaze correlates of complex emotional states in museum visitors. |
| Teo, Loh, Kee et al.: Mediational Affordances at a Science Centre Gallery: An Exploratory and Small Study Using Eye Tracking and Interviews (2024) [41] | How do visitors interact with the artifacts, and how do the artifacts mediate the experiences of visitors in the Energy Story gallery at the science center? | Device: Dikablis mobile eye tracker (scene + eye camera). Participants: n = 16 recruited; n = 15 valid. Location: Energy Story gallery, Science Centre Singapore. Procedure: Calibration; sequential exploration of three interactive stations (8–10 min each); concurrent MET + audio; post-visit interviews. | The study suggests that overloading a single artifact with multiple affordances may impede engagement, whereas distributing interactivity across several artifacts can optimize visitor experience. Mixed-Methods Model: Combined high-resolution MET trajectories with thematic interview data to formulate a three-phase “Perceive–Explore–Understand” model of visitor meaning-making in interactive STEM exhibits. |

| Elements | TFF [Min, Max] | TFF M (SD) | TFD M (SD) | AFD M (SD) |
|---|---|---|---|---|
| Artifacts | [3.53, 61.48] | 31.77 (21.66) | 29.32 (19.03) | 1.77 (0.97) |
| Artifacts label | [4.00, 74.86] | 47.57 (24.13) | 7.82 (7.20) | 1.51 (1.32) |
| Picture Panels | [8.47, 58.80] | 27.94 (17.00) | 40.14 (30.17) | 0.85 (0.40) |
| Text Panels | [0, 22.12] | 7.69 (6.84) | 29.81 (27.03) | 1.36 (0.84) |
| Video Screen | [0.13, 112.16] | 39.19 (41.15) | 53.69 (65.12) | 0.62 (0.33) |

| | Elements | Exhibits 01 M | Exhibits 01 SD | Exhibits 02 M | Exhibits 02 SD | Exhibits 03 M | Exhibits 03 SD | Exhibits 04 M | Exhibits 04 SD | Exhibits 05 M | Exhibits 05 SD |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ROAFT | Artifacts | 0.19 | 0.1 | 0.2 | 0.1 | 0.19 | 0.11 | 0.2 | 0.2 | 0.71 | 0.11 |
| | Labels | 0.10 | 0.11 | 0.0 | 0.02 | 0.1 | 0.18 | 0.05 | 0.06 | 0.03 | 0.04 |
| | Picture panel | 0.21 | 0.14 | 0.68 | 0.20 | 0.07 | 0.09 | 0.14 | 0.10 | 0.21 | 0.20 |
| | Text panel | 0.46 | 0.11 | 0.00 | 0.00 | 0.25 | 0.31 | 0.22 | 0.28 | 0.32 | 0.27 |
| | Screen | 0.00 | 0.00 | 0.14 | 0.04 | 0.36 | 0.19 | 0.12 | 0.11 | 0.23 | 0.12 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shi, W.; Ono, K.; Li, L. Cognitive Insights into Museum Engagement: A Mobile Eye-Tracking Study on Visual Attention Distribution and Learning Experience. Electronics 2025, 14, 2208. https://doi.org/10.3390/electronics14112208
