A Method for Improving the Robustness of Intrusion Detection Systems Based on Auxiliary Adversarial Training Wasserstein Generative Adversarial Networks

Abstract

1. Introduction

- Research on the effectiveness and sensitivity of adversarial training has shown that it has limitations: it may fail to cover the test samples, and it is sensitive to the distribution of the input data, both of which can reduce its applicability and reliability.

- The auxiliary network model’s defense operates independently of the target model, preserving training resources by eliminating the need for model retraining. This approach maintains the target model’s accuracy on its original task and keeps the original samples largely intact. However, the target model’s robustness is not inherently enhanced; instead, the auxiliary network functions as an external filter that removes adversarial perturbations before they reach the target model. Consequently, the combined system remains vulnerable to a complete white-box attack, and the auxiliary network model fails to reconstruct adversarial samples while maintaining the integrity of the original samples.

- First, one-dimensional traffic data are reduced in dimensionality and converted into two-dimensional image data by a stacked autoencoder (SAE) [13], and mixed adversarial samples are generated using the FGSM, PGD, and C&W adversarial attacks.

- Second, the improved WGAN, which integrates a perceptual network module [14], is adversarially trained on a hybrid training set consisting of adversarial and normal samples.

- Finally, the adversarially trained AuxAtWGAN model is attached to the original model as an auxiliary network for adversarial sample detection; the detected adversarial samples are removed before the data are input into the original model, thus improving the robustness of the original model.
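The three steps above can be pictured as a small pre-filtering pipeline. The sketch below is only illustrative: `to_image`, `filter_then_classify`, `toy_detector`, and `toy_classifier` are hypothetical names, and the real SAE encoder, AuxAtWGAN detector, and IDS classifier are replaced by simple stand-ins.

```python
def to_image(features, side):
    """Reshape a flat feature vector of length side*side into a side x side grid.

    Stand-in for the SAE step: the vector is assumed to be already encoded
    to the right length; no autoencoder is trained here.
    """
    if len(features) != side * side:
        raise ValueError("expected exactly side*side features")
    return [features[r * side:(r + 1) * side] for r in range(side)]


def filter_then_classify(samples, is_adversarial, classify):
    """Drop samples flagged by the auxiliary detector, then classify the rest."""
    kept = [s for s in samples if not is_adversarial(s)]
    return kept, [classify(s) for s in kept]


# Toy stand-ins (assumptions, not the paper's models).
def toy_detector(image):
    # Flags an image as adversarial when any pixel leaves the [0, 1] range.
    return any(p < 0.0 or p > 1.0 for row in image for p in row)


def toy_classifier(image):
    # Labels an image as "attack" when its mean intensity exceeds 0.5.
    pixels = [p for row in image for p in row]
    return "attack" if sum(pixels) / len(pixels) > 0.5 else "normal"


records = [
    [0.1, 0.2, 0.3, 0.2],  # benign-looking record
    [0.9, 0.8, 0.7, 0.9],  # attack-looking record
    [0.1, 1.4, 0.3, 0.2],  # perturbed record, flagged by the toy detector
]
images = [to_image(r, side=2) for r in records]
kept, labels = filter_then_classify(images, toy_detector, toy_classifier)
```

The design point is that the target classifier is never retrained: the detector sits in front of it, and only the samples that pass the filter reach it.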

2. Related Work

3. Methodology

3.1. AuxAtWGAN System Block Diagram

- (1)

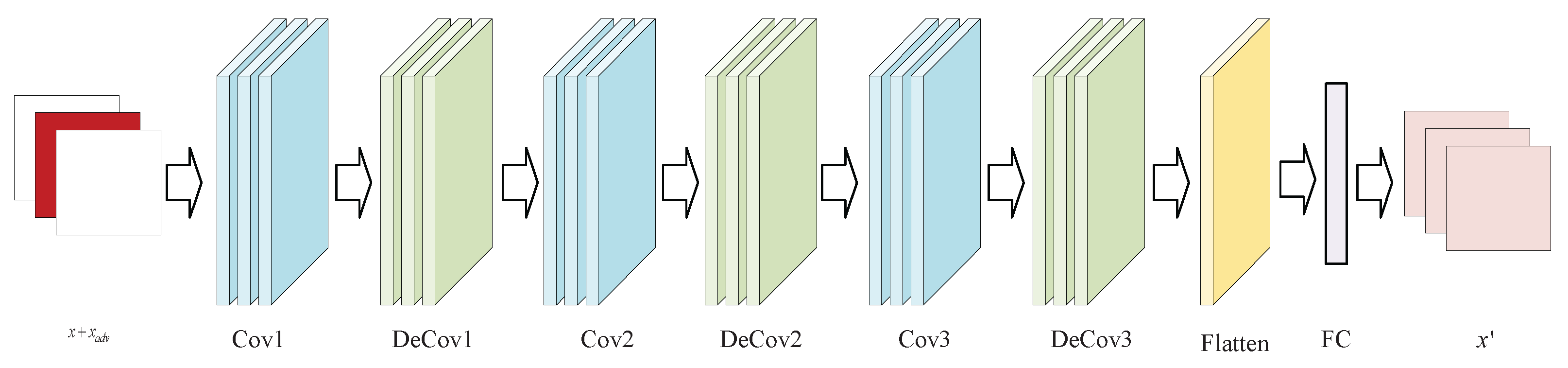

- Generator (G): The role of the generator is to receive the mixed samples that have been disturbed by the adversarial attack and map them close to normal samples. The generator aims to process the input data through a CNN to mitigate the adversarial perturbation and make the adversarial sample closer to the normal sample.

- (2)

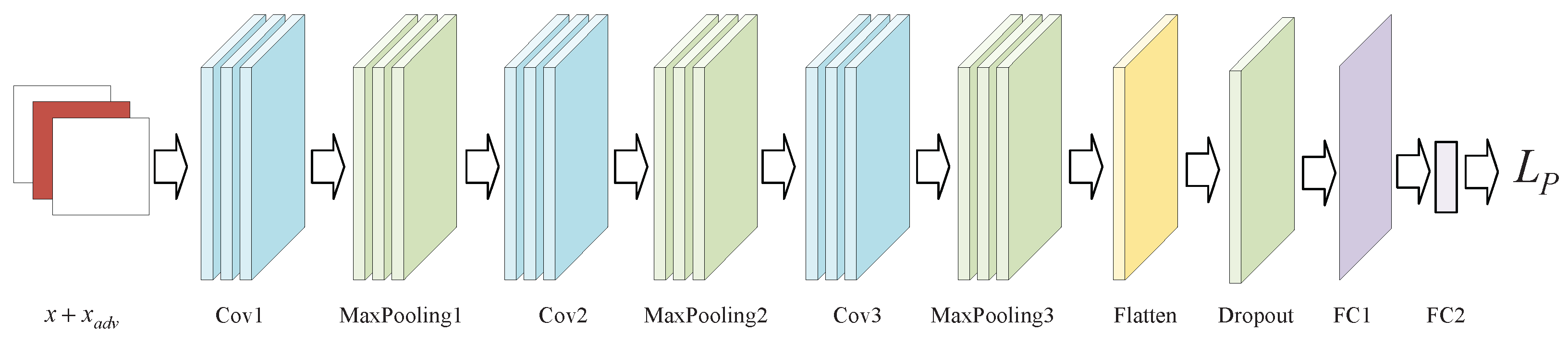

- Discriminator (D): The discriminator of AuxAtWGAN is exclusively responsible for the categorization of adversarial samples, whereas the categorization of normal and attack samples is still carried out by the classifier of the original model. The discriminator’s function is to differentiate between normal and adversarial inputs. It extracts features from the input samples using a CNN architecture and performs classification.

- (3) Perceptual network (PN): The perceptual network calculates the perceptual loss between the generated samples and the real samples. Through the perceptual loss, the generator is optimized to bring the adversarial samples it processes closer to the real samples, which ensures the consistency of the generated samples in the feature space.
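As a rough illustration of how the three components could interact, the sketch below combines the standard WGAN objectives with a feature-space penalty. It is an assumption rather than the paper's exact loss: the function names, the mean-squared form of the perceptual term, and the weight `lam` are all hypothetical.

```python
def mean(values):
    return sum(values) / len(values)


def critic_loss(scores_normal, scores_generated):
    """Standard WGAN critic objective (minimized): E[D(G(x))] - E[D(x_normal)]."""
    return mean(scores_generated) - mean(scores_normal)


def perceptual_loss(features_generated, features_normal):
    """Mean squared distance between two feature vectors from a fixed network."""
    return mean([(g - n) ** 2 for g, n in zip(features_generated, features_normal)])


def generator_loss(scores_generated, features_generated, features_normal, lam=1.0):
    """Standard WGAN generator term plus a weighted perceptual penalty."""
    adversarial_term = -mean(scores_generated)
    return adversarial_term + lam * perceptual_loss(features_generated, features_normal)


# Toy numbers standing in for critic scores and perceptual-network features.
d_loss = critic_loss(scores_normal=[1.0, 0.8], scores_generated=[0.2, 0.4])
p_loss = perceptual_loss([0.5, 0.1], [0.4, 0.3])
g_loss = generator_loss([0.2, 0.4], [0.5, 0.1], [0.4, 0.3], lam=10.0)
```

A larger `lam` pushes the generator to stay close to normal samples in feature space, at the cost of a weaker adversarial signal from the critic.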

3.2. AuxAtWGAN Model Design

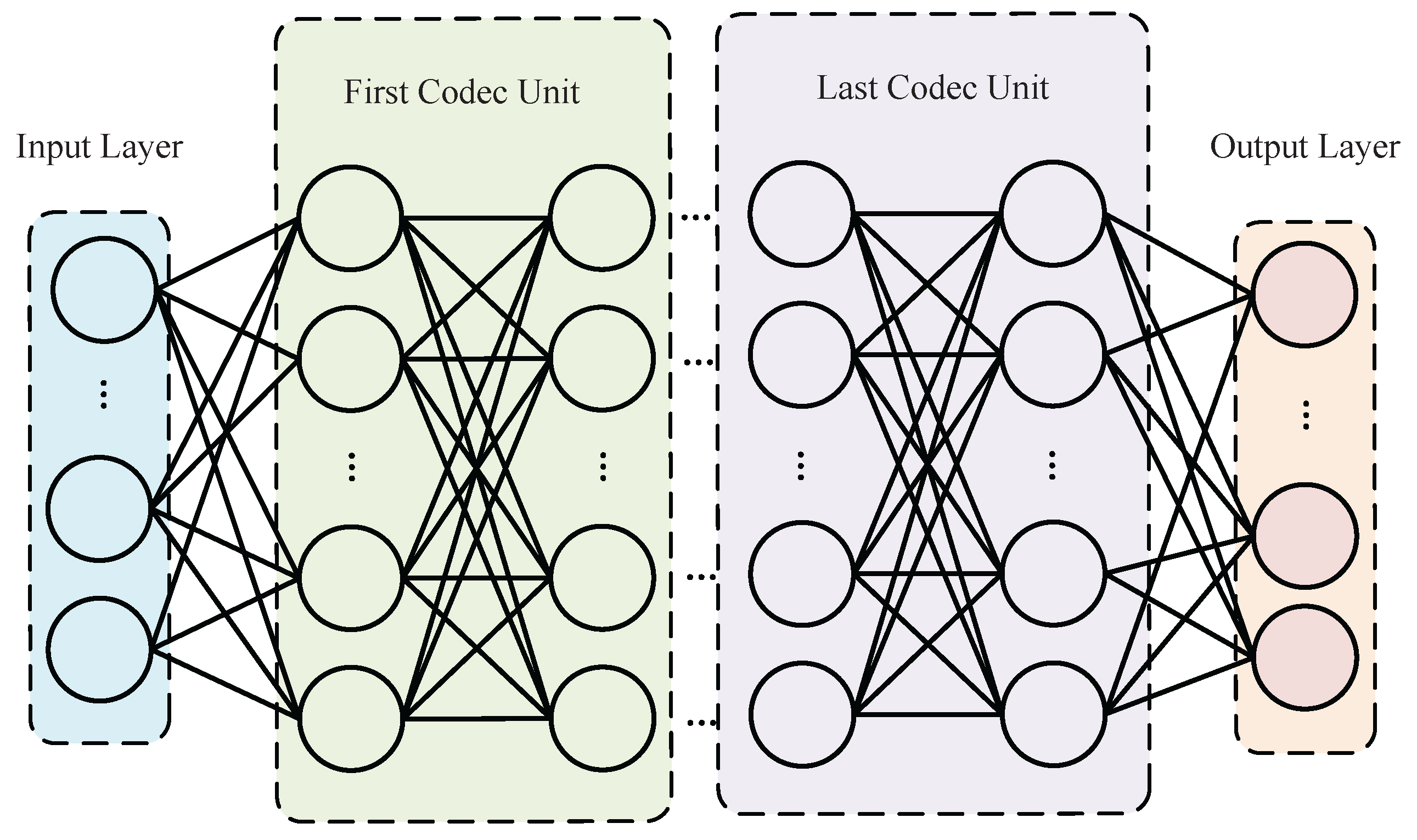

3.2.1. SAE Data Downscaling

3.2.2. Adversarial Sample Generation

- (1) The gradient of the loss function $J(\theta, x, y)$ is calculated with respect to the input $x$: $\nabla_x J(\theta, x, y)$.

- (2) The adversarial sample is updated according to the sign of the gradient:

  $$x_{adv} = x + \epsilon \cdot \mathrm{sign}\left(\nabla_x J(\theta, x, y)\right)$$

  where $\epsilon$ is the perturbation strength parameter that controls the maximum difference between the adversarial sample and the original sample.
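The two steps above match the standard one-step FGSM update. A minimal sketch follows, assuming a toy logistic-regression model whose input gradient is available in closed form and features normalized to [0, 1]; the names `input_gradient` and `fgsm` and all numeric values are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def input_gradient(x, y, weights, bias):
    """Gradient of binary cross-entropy w.r.t. the input x for a logistic model."""
    p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
    return [(p - y) * w for w in weights]


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(x, y, weights, bias, eps):
    """One-step FGSM: move each feature by eps along the gradient sign."""
    grad = input_gradient(x, y, weights, bias)
    # Clipping to [0, 1] assumes normalized features (an assumption here).
    return [min(1.0, max(0.0, xi + eps * sign(g))) for xi, g in zip(x, grad)]


x = [0.5, 0.5]
x_adv = fgsm(x, y=1, weights=[2.0, -1.0], bias=0.0, eps=0.1)
```

Because only the sign of the gradient is used, every feature moves by at most `eps`, which is what bounds the difference between the adversarial and the original sample.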

- (1) The adversarial sample is initialized to the original sample $x$ or to a random perturbation in its vicinity.

- (2) In each iteration $t$, the gradient of the loss function with respect to the input is computed as $\nabla_x J(\theta, x_{adv}^{t}, y)$.

- (3) The adversarial sample is updated according to the sign of the gradient:

  $$x_{adv}^{t+1} = \Pi_{x, \epsilon}\left(x_{adv}^{t} + \alpha \cdot \mathrm{sign}\left(\nabla_x J(\theta, x_{adv}^{t}, y)\right)\right)$$

  where $\alpha$ is the step size, $\epsilon$ is the upper limit of the perturbation, and the projection $\Pi_{x, \epsilon}$ ensures that the generated adversarial samples are within the allowed perturbation range.

- (4) This optimization loop terminates when either the predefined iteration limit is exhausted or the desired adversarial condition is achieved.
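The four steps above correspond to the usual PGD loop. The sketch below is an illustration under assumptions: a toy logistic-regression model stands in for the IDS classifier, the projection is onto an L-infinity ball, features are assumed normalized to [0, 1], and the names `pgd`, `input_gradient`, and `predict` are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def input_gradient(x, y, weights, bias):
    """Gradient of binary cross-entropy w.r.t. x for a logistic model."""
    p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
    return [(p - y) * w for w in weights]


def sign(v):
    return (v > 0) - (v < 0)


def predict(x, weights, bias):
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if sigmoid(z) >= 0.5 else 0


def pgd(x, y, weights, bias, eps, alpha, steps, seed=0):
    rng = random.Random(seed)
    # (1) Random start inside the eps-ball, clipped to the valid range.
    x_adv = [min(1.0, max(0.0, xi + rng.uniform(-eps, eps))) for xi in x]
    for _ in range(steps):
        # (2) Gradient of the loss at the current adversarial point.
        grad = input_gradient(x_adv, y, weights, bias)
        # (3) Signed step, then projection back into the eps-ball and [0, 1].
        stepped = [xa + alpha * sign(g) for xa, g in zip(x_adv, grad)]
        x_adv = [min(1.0, max(0.0, min(xi + eps, max(xi - eps, s))))
                 for xi, s in zip(x, stepped)]
        # (4) Stop early once the prediction no longer matches the label.
        if predict(x_adv, weights, bias) != y:
            break
    return x_adv


x = [0.5, 0.5]
x_adv = pgd(x, y=1, weights=[2.0, -1.0], bias=0.0, eps=0.1, alpha=0.03, steps=10)
```

In this toy setting the model is never fooled inside the ball, so the loop runs all ten iterations and the sample ends on the boundary of the allowed perturbation range.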

- (1) First, for the stability of the optimization, a change of variables is used:

  $$x_{adv} = \frac{1}{2}\left(\tanh(w) + 1\right)$$

  where $w$ is the optimization variable that replaces $x_{adv}$, which ensures that $x_{adv}$ stays in the range $[0, 1]$.

- (2) The appropriate perturbation is then found by optimizing the variable $w$ with Equation (12).

- (3) Finally, $w$ is optimized iteratively using the Adam optimizer until the smallest perturbation that can fool the model is found or the maximum number of iterations is reached.
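The three steps above follow the general shape of the C&W attack. The sketch below is a simplified illustration, not the paper's Equation (12): it assumes the common objective of squared L2 distance plus `c` times a hinge on the true-class logit, a toy logistic-regression target, and a hand-written Adam update. All names and hyperparameter values are hypothetical.

```python
import math


def cw_attack(x, y, weights, bias, c=1.0, kappa=0.0, lr=0.05, steps=200):
    """Search for a small perturbation that flips a logistic model's decision."""
    s = 1.0 if y == 1 else -1.0  # sign that makes s*logit the true-class margin
    # (1) tanh change of variables: x_adv = 0.5 * (tanh(w_var) + 1) lies in [0, 1].
    w_var = [math.atanh(min(max(2.0 * xi - 1.0, -0.999999), 0.999999)) for xi in x]
    m = [0.0] * len(x)
    v = [0.0] * len(x)
    beta1, beta2, tiny = 0.9, 0.999, 1e-8
    best_dist, best_adv = None, None
    for t in range(1, steps + 1):
        x_adv = [0.5 * (math.tanh(wi) + 1.0) for wi in w_var]
        logit = sum(a * xa for a, xa in zip(weights, x_adv)) + bias
        margin = s * logit + kappa
        if margin <= 0.0:  # model is fooled; remember the closest such point
            dist = sum((xa - xi) ** 2 for xa, xi in zip(x_adv, x))
            if best_dist is None or dist < best_dist:
                best_dist, best_adv = dist, x_adv
        for i in range(len(x)):
            # (2) Gradient of ||x_adv - x||^2 + c * max(0, margin) w.r.t. x_adv.
            grad_x = 2.0 * (x_adv[i] - x[i])
            if margin > 0.0:
                grad_x += c * s * weights[i]
            # Chain rule through the tanh substitution.
            grad_w = grad_x * 0.5 * (1.0 - math.tanh(w_var[i]) ** 2)
            # (3) Adam update on w_var.
            m[i] = beta1 * m[i] + (1.0 - beta1) * grad_w
            v[i] = beta2 * v[i] + (1.0 - beta2) * grad_w ** 2
            m_hat = m[i] / (1.0 - beta1 ** t)
            v_hat = v[i] / (1.0 - beta2 ** t)
            w_var[i] -= lr * m_hat / (math.sqrt(v_hat) + tiny)
    return best_adv  # None when no adversarial point was found


weights, bias = [2.0, -1.0], -0.3
x_adv = cw_attack([0.5, 0.5], y=1, weights=weights, bias=bias)
```

Optimizing `w_var` instead of the sample itself removes the need for explicit clipping, because the tanh mapping keeps every feature inside the valid range by construction.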

3.2.3. WGAN

3.2.4. Generator

3.2.5. Perception Networks

3.2.6. Discriminator

4. Experiments

4.1. Dataset

4.2. Evaluation Indicators

- True Positive (TP): the count of positive samples correctly classified as positive.

- False Positive (FP): the count of negative samples mistakenly classified as positive.

- True Negative (TN): the count of negative samples correctly classified as negative.

- False Negative (FN): the count of positive samples mistakenly classified as negative.
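The four counts above are the inputs to the usual classification metrics. The sketch below uses the textbook definitions of accuracy, precision, recall, and F1; which of these the paper reports beyond accuracy is not stated in this section, and the function name and example counts are hypothetical.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute standard metrics from confusion-matrix counts."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total
    # Guard against empty denominators when a class is never predicted or present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


metrics = classification_metrics(tp=8, fp=2, tn=85, fn=5)
```

With these toy counts the accuracy is high while the recall is much lower, which is why accuracy alone can hide missed attacks on imbalanced traffic.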

4.3. Experimental Configuration

- Convolutional neural network (CNN): A CNN is well suited to data with local spatial correlation, such as two-dimensional grayscale images. It was used to extract features from the two-dimensional network traffic data produced by the SAE conversion and served as a comparison with the WGAN model. The CNN model structure was the same as the discriminator structure.

- Long Short-Term Memory (LSTM): LSTM is well suited to processing one-dimensional time series data, such as network traffic data, and was chosen for its prevalent use in time series analysis within intrusion detection systems. The LSTM network used for the experiment was a two-layer design built from an LSTM layer, a dropout layer, and fully connected layers. The model could better capture the time-dependent features in the sequence data and improve the performance of the classification task.

- Residual Neural Network (ResNet): ResNet is representative of deep networks, so it was used to test the adaptability of the method to deeper and more complex models and to compare with LSTM in terms of spatial and temporal features. ResNet34 contains an initial convolutional layer; four residual layers with three, four, six, and three residual modules, respectively; and a fully connected layer [36].

- FGSM: ;

- PGD: , , ;

- C&W: , .
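The ResNet34 layout described above (an initial convolutional layer, four residual layers with three, four, six, and three residual modules, and a fully connected layer) can be checked against its name with a short count. The only assumption is that each residual module is a basic block with two convolutional layers, as in the original ResNet-34 design.

```python
# Residual modules in each of the four residual layers.
blocks_per_stage = [3, 4, 6, 3]

# Assumption: a basic residual block holds two convolutional layers.
convs_per_block = 2

initial_conv = 1
fully_connected = 1

depth = initial_conv + convs_per_block * sum(blocks_per_stage) + fully_connected
print(depth)  # 1 + 2 * 16 + 1
```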

4.4. Experiment Results and Discussion

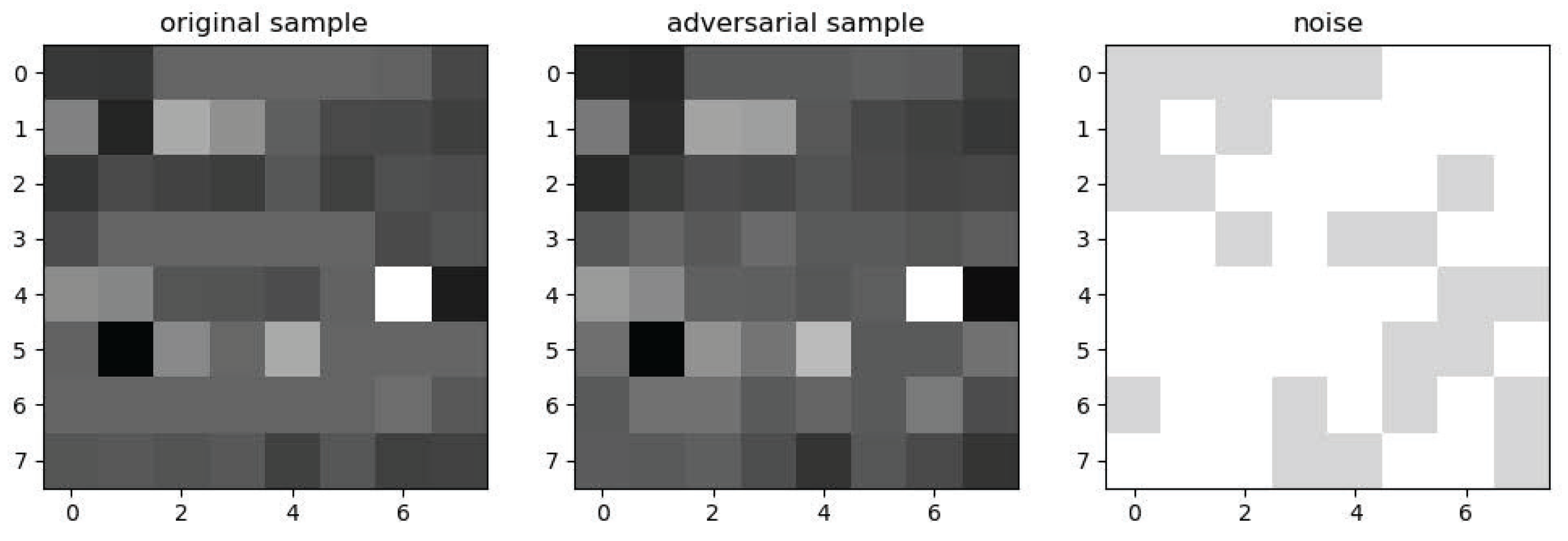

4.4.1. SAE and Data Visualization Results

4.4.2. Generating Adversarial Sample Results

4.4.3. AuxAtWGAN Comparison Experiments

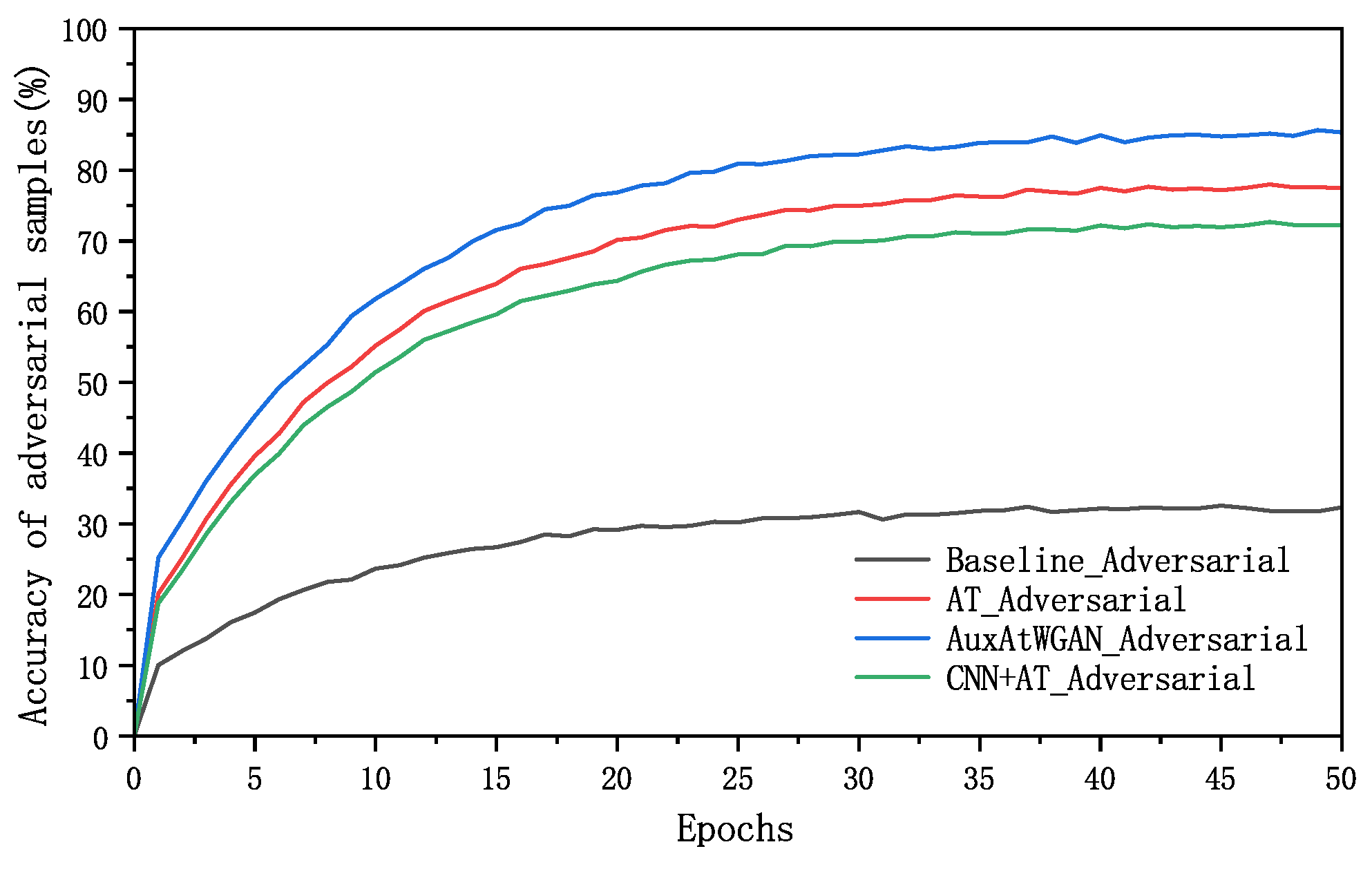

5. Conclusions

- (1) First, the network traffic data were reduced in dimensionality by the SAE and transformed into 2D data suitable for model processing, and mixed adversarial samples were generated by the FGSM, PGD, and C&W adversarial attack methods, which significantly increased the diversity of the adversarial training data and improved the generalization ability of the model. The data were then visualized to directly observe the perturbation effects on the samples.

- (2) Second, the normal samples and the mixed adversarial samples were combined into hybrid samples, which were fed into the AuxAtWGAN proposed in this paper. The feature space consistency of the generator was maintained through perceptual loss constraints, and adversarial training with the generative network optimized the correction quality of the adversarial samples, thus improving the adversarial sample detection accuracy to 85.77%.

- (3) Finally, the pretrained model can be attached to various deep learning models to eliminate the adversarial samples in the input data and improve robustness. While maintaining the original model’s detection accuracy for normal samples, the method reduced the average attack success rate from 75.17% to 27.56%. The strong generalizability of the model was validated on models such as LSTM and ResNet.

- (1) First, the current defense methods are based mainly on adversarial training and auxiliary networks, which improve robustness but still rely on known adversarial samples. In the future, an adaptive defense mechanism can be considered so that the IDS can dynamically adjust its adversarial training strategy and improve its defense against unknown attacks.

- (2) Second, adversarial defense methods incur some computational overhead, especially the WGAN training process, which is more complicated. In the future, knowledge distillation or pruning techniques can be explored to make the model more lightweight so that it can operate efficiently in resource-constrained environments.

- (3) Finally, the current experiments are based on public datasets such as CICIDS-2017, and the applicability of the method in real network environments still needs to be verified. In the future, IDSs can be deployed in real application scenarios, and their robustness and detection performance in dynamic traffic environments can be evaluated.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Anderson, J.P. Computer Security Threat Monitoring and Surveillance; Technical report; James P. Anderson Company: Fort Washington, PA, USA, 1980.

2. Lansky, J.; Ali, S.; Mohammadi, M. Deep learning-based intrusion detection systems: A systematic review. IEEE Access 2021, 9, 101574–101599.

3. Farahnakian, F.; Heikkonen, J. A deep auto-encoder based approach for intrusion detection system. In Proceedings of the 20th International Conference on Advanced Communication Technology (ICACT), Chuncheon-si, Republic of Korea, 11–14 February 2018; pp. 178–183.

4. Szegedy, C.; Liu, W.; Jia, Y. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

5. Mohammadpour, L.; Ling, T.C.; Liew, C.S.; Aryanfar, A. A survey of CNN-based network intrusion detection. Appl. Sci. 2022, 12, 8162.

6. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

7. Laghrissi, F.; Douzi, S.; Douzi, K.; Hssina, B. Intrusion detection systems using long short-term memory (LSTM). J. Big Data 2021, 8, 65.

8. Guo, J.; Bao, W.; Wang, J.; Ma, Y.; Gao, X.; Xiao, G.; Wu, W. A comprehensive evaluation framework for deep model robustness. Pattern Recognit. 2023, 137, 109308.

9. Sauka, K.; Shin, G.Y.; Kim, D.W.; Han, M.M. Adversarial robust and explainable network intrusion detection systems based on deep learning. Appl. Sci. 2022, 12, 6451.

10. Zhang, S.; Chen, S.; Liu, X.; Hua, C.; Wang, W.; Chen, K.; Wang, J. Detecting adversarial samples for deep learning models: A comparative study. IEEE Trans. Netw. Sci. Eng. 2021, 9, 231–244.

11. Yang, K.; Liu, J.; Zhang, C.; Fang, Y. Adversarial examples against the deep learning based network intrusion detection systems. In Proceedings of the 2018 IEEE Military Communications Conference (MILCOM), Los Angeles, CA, USA, 29 October–1 November 2018; pp. 559–564.

12. Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein generative adversarial networks. In Proceedings of the 34th International Conference on Machine Learning (ICML), Sydney, Australia, 6–11 August 2017; PMLR: Sydney, Australia, 2017; Volume 70, pp. 214–223.

13. Yan, B.; Han, G. Effective feature extraction via stacked sparse autoencoder to improve intrusion detection system. IEEE Access 2018, 6, 41238–41248.

14. Pan, Y.; Pi, D.; Chen, J.; Meng, H. FDPPGAN: Remote sensing image fusion based on deep perceptual patchGAN. Neural Comput. Appl. 2021, 33, 9589–9605.

15. Zhao, W.; Alwidian, S.; Mahmoud, Q.H. Adversarial training methods for deep learning: A systematic review. Algorithms 2022, 15, 283.

16. He, K.; Kim, D.D.; Asghar, M.R. Adversarial machine learning for network intrusion detection systems: A comprehensive survey. IEEE Commun. Surv. Tutor. 2023, 25, 538–566.

17. Li, Y.; Cheng, M.; Hsieh, C.J.; Lee, T.C. A review of adversarial attack and defense for classification methods. Am. Stat. 2022, 76, 329–345.

18. Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and harnessing adversarial examples. In Proceedings of the 3rd International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

19. Madry, A.; Makelov, A.; Schmidt, L.; Tsipras, D.; Vladu, A. Towards deep learning models resistant to adversarial attacks. In Proceedings of the 6th International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018.

20. Bai, T.; Luo, J.; Zhao, J.; Wen, B.; Wang, Q. Recent advances in adversarial training for adversarial robustness. In Proceedings of the 30th International Joint Conference on Artificial Intelligence (IJCAI-21), Montreal, QC, Canada, 19–27 August 2021; pp. 4312–4321.

21. Chauhan, R.; Sabeel, U.; Izaddoost, A.; Shah Heydari, S. Polymorphic adversarial cyberattacks using WGAN. J. Cybersecur. Priv. 2021, 1, 767–792.

22. Shieh, C.S.; Nguyen, T.T.; Lin, W.W.; Huang, Y.L.; Horng, M.F.; Lee, T.F.; Miu, D. Synthesis of adversarial DDoS attacks using Wasserstein generative adversarial networks with gradient penalty. In Proceedings of the 2021 6th International Conference on Computational Intelligence and Applications (ICCIA), Xiamen, China, 11–13 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 118–122.

23. Yu, Y.; Yu, P.; Li, W. Auxblocks: Defense adversarial examples via auxiliary blocks. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–8.

24. Samangouei, P.; Kabkab, M.; Chellappa, R. Defense-GAN: Protecting classifiers against adversarial attacks using generative models. In Proceedings of the 6th International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018.

25. Jin, G.; Shen, S.; Zhang, D.; Dai, F.; Zhang, Y. APE-GAN: Adversarial perturbation elimination with GAN. In Proceedings of the 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 3842–3846.

26. Wang, D.; Jin, W.; Wu, Y.; Khan, A. ATGAN: Adversarial training-based GAN for improving adversarial robustness generalization on image classification. Appl. Intell. 2023, 53, 24492–24508.

27. Song, R.; Huang, Y.; Xu, K.; Ye, X.; Li, C.; Chen, X. Electromagnetic inverse scattering with perceptual generative adversarial networks. IEEE Trans. Comput. Imaging 2021, 7, 689–699.

28. Mahmoud, M.; Kang, H.S. Ganmasker: A two-stage generative adversarial network for high-quality face mask removal. Sensors 2023, 23, 7094.

29. Papernot, N.; McDaniel, P.; Wu, X.; Jha, S.; Swami, A. Distillation as a defense to adversarial perturbations against deep neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 1631–1643.

30. Lyu, C.; Huang, K.; Liang, H.N. A unified gradient regularization family for adversarial examples. In Proceedings of the 2015 IEEE International Conference on Data Mining (ICDM), Atlantic City, NJ, USA, 14–17 November 2015; pp. 301–309.

31. Kurakin, A.; Goodfellow, I.J.; Bengio, S. Adversarial machine learning at scale. In Proceedings of the International Conference on Learning Representations (ICLR), Toulon, France, 24–26 April 2017.

32. Carlini, N.W.; Wagner, D. Towards evaluating the robustness of neural networks. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–24 May 2017; pp. 39–57.

33. Gulrajani, I.; Ahmed, F.; Arjovsky, M.; Dumoulin, V.; Courville, A.C. Improved training of Wasserstein GANs. Adv. Neural Inf. Process. Syst. 2017, 30, 5767–5777.

34. Sharafaldin, I.; Lashkari, A.H.; Ghorbani, A.A. Toward generating a new intrusion detection dataset and intrusion traffic characterization. In Proceedings of the International Conference on Information Systems Security and Privacy (ICISSP), Funchal, Portugal, 22–24 January 2018; pp. 108–116.

35. Li, Y.; Jiang, Y.; Li, Z.; Xia, S.T. Backdoor learning: A survey. IEEE Trans. Neural Netw. Learn. Syst. 2022, 35, 5–22.

36. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

| Proportion of Adversarial Samples | Normal Sample Accuracy (%) | Adversarial Sample Accuracy (%) |
|---|---|---|
| 10% | 93.97 | 63.03 |
| 20% | 93.01 | 80.96 |
| 50% | 93.57 | 85.73 |
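To make the mixing ratio in the table above concrete, the sketch below builds a hybrid set in which adversarial samples make up a chosen fraction of the total. This reading of "proportion" is an assumption, and `build_hybrid_set` with its label convention (0 for normal, 1 for adversarial) is a hypothetical helper, not the paper's code.

```python
import random


def build_hybrid_set(normal, adversarial, proportion, seed=0):
    """Mix all normal samples with enough adversarial ones to hit `proportion`."""
    if not 0.0 < proportion < 1.0:
        raise ValueError("proportion must be strictly between 0 and 1")
    # Solve n_adv / (n_adv + n_normal) = proportion for n_adv.
    n_adv = round(proportion / (1.0 - proportion) * len(normal))
    if n_adv > len(adversarial):
        raise ValueError("not enough adversarial samples for this proportion")
    rng = random.Random(seed)
    chosen = rng.sample(adversarial, n_adv)
    mixed = [(s, 0) for s in normal] + [(s, 1) for s in chosen]
    rng.shuffle(mixed)
    return mixed


normal_samples = list(range(80))               # placeholder normal samples
adversarial_pool = list(range(1000, 1100))     # placeholder adversarial samples
mixed = build_hybrid_set(normal_samples, adversarial_pool, proportion=0.2)
adversarial_count = sum(label for _, label in mixed)
```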

| Methodologies | Normal Sample ACC (%) | Adversarial Sample ACC (%) |
|---|---|---|
| Baseline | 93.30 | 32.49 |
| AT | 88.31 | 78.16 |
| CNN+AT | 84.52 | 72.21 |
| AuxAtWGAN | 93.57 | 85.77 |

| Metric | Mean (%) | Std (%) | 95% CI (%) |
|---|---|---|---|
| Normal sample ACC | 93.57 | 0.23 | [93.37, 93.77] |
| Adversarial sample ACC | 85.73 | 0.44 | [85.35, 86.12] |

| Attack | Baseline ASR (%) | AT ASR (%) | AuxAtWGAN ASR (%) |
|---|---|---|---|
| FGSM | 68.94 | 23.10 | 19.28 |
| PGD | 74.28 | 35.41 | 27.82 |
| C&W | 82.31 | 41.69 | 35.60 |
| Average | 75.17 | 33.40 | 27.56 |
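As a quick arithmetic check on the attack success rates in the table above, the sketch below recomputes the per-method averages from the three per-attack values and derives the relative reduction achieved by AuxAtWGAN over the baseline. The variable names are hypothetical, and the recomputed averages can differ from the reported ones in the last digit, presumably because the table entries are themselves rounded.

```python
# Attack success rates (%) copied from the table above.
asr = {
    "Baseline": {"FGSM": 68.94, "PGD": 74.28, "C&W": 82.31},
    "AT": {"FGSM": 23.10, "PGD": 35.41, "C&W": 41.69},
    "AuxAtWGAN": {"FGSM": 19.28, "PGD": 27.82, "C&W": 35.60},
}

# Unweighted mean over the three attacks for each method.
averages = {method: sum(rates.values()) / len(rates)
            for method, rates in asr.items()}

# Fraction of the baseline's average ASR removed by AuxAtWGAN.
relative_reduction = ((averages["Baseline"] - averages["AuxAtWGAN"])
                      / averages["Baseline"])

for method, value in averages.items():
    print(f"{method}: {value:.2f}%")
print(f"relative reduction: {relative_reduction:.1%}")
```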


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, G.; Yan, Q. A Method for Improving the Robustness of Intrusion Detection Systems Based on Auxiliary Adversarial Training Wasserstein Generative Adversarial Networks. Electronics 2025, 14, 2171. https://doi.org/10.3390/electronics14112171
