Predicting User Attention States from Multimodal Eye–Hand Data in VR Selection Tasks

Abstract

1. Introduction

2. Related Work

2.1. Intention and Attention

2.2. Cognitive-Related Data and Modeling

3. Dataset

3.1. Participants

3.2. Apparatus and Materials

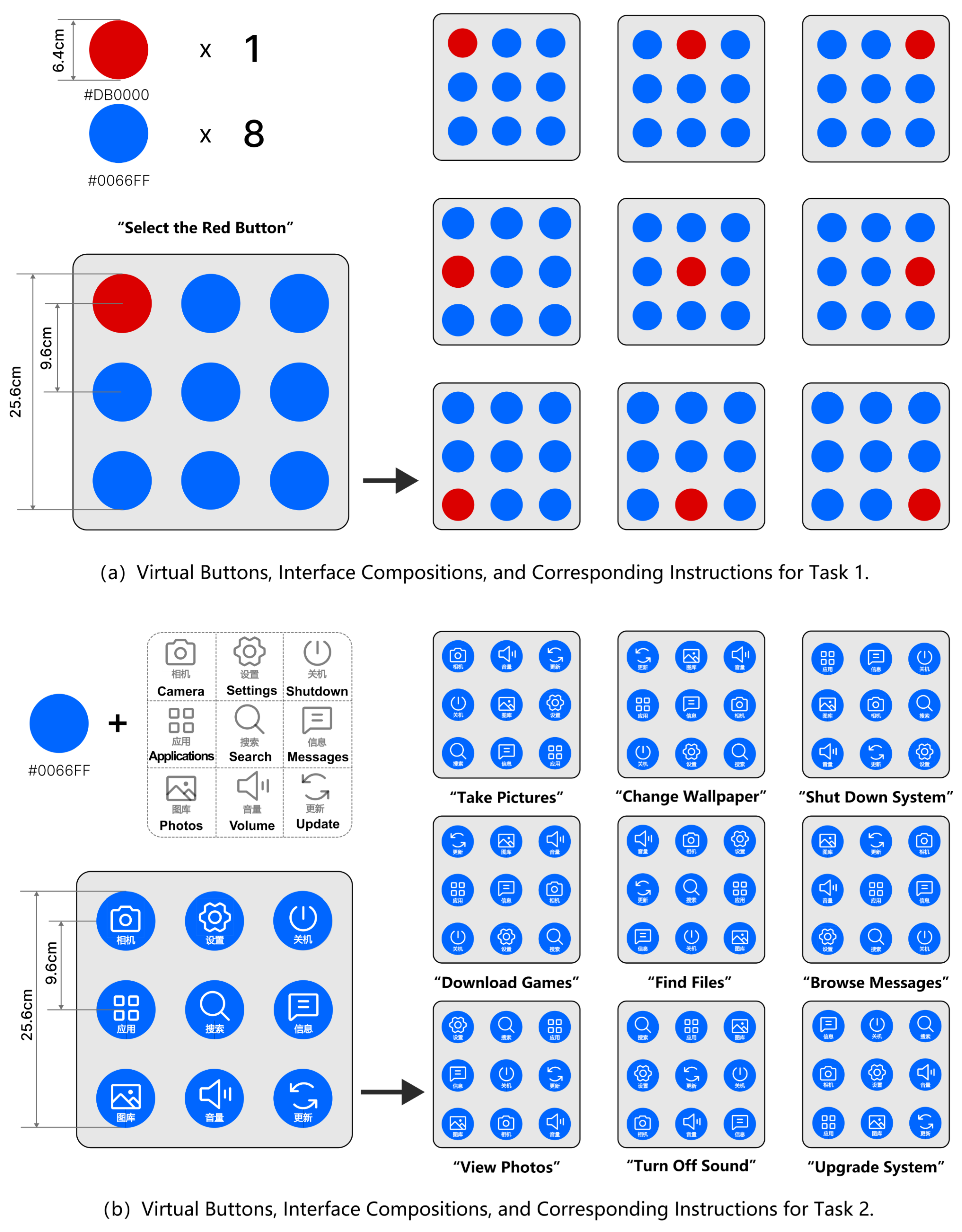

- Non-semantic, color-coded button interface: This interface consists of eight blue buttons and one red button (Figure 3a). The red button was placed at each of the nine positions once, yielding nine unique interfaces.

- Semantic icon-and-text button interface: This interface contains nine buttons, each combining a white icon and a text label on a blue background (Figure 3b). Permuting the positions of these nine buttons yielded nine distinct semantic interfaces.
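The two interface families can be summarized as lists of layouts. The sketch below is a minimal illustration, not the authors' generator. The color-coded set follows directly from the text, with the red button placed once at each of the nine positions. The semantic set uses a cyclic-shift (Latin-square) permutation. That choice is an assumption, because the text says only that the positions were permuted. The names `icon_0` to `icon_8` are hypothetical placeholders for the real buttons.

```python
from typing import List

N_POSITIONS = 9  # nine button positions per interface, as described above


def color_coded_interfaces(n: int = N_POSITIONS) -> List[List[str]]:
    """Layout k places the single red button at position k; the rest are blue."""
    return [["red" if i == k else "blue" for i in range(n)] for k in range(n)]


def semantic_interfaces(labels: List[str]) -> List[List[str]]:
    """Assumed cyclic-shift (Latin-square) scheme: layout k puts
    labels[(i + k) % n] at position i, so each button visits each position once."""
    n = len(labels)
    return [[labels[(i + k) % n] for i in range(n)] for k in range(n)]


# Hypothetical placeholder names for the nine icon-and-text buttons
ICONS = [f"icon_{j}" for j in range(N_POSITIONS)]

red_positions = [layout.index("red") for layout in color_coded_interfaces()]
print(red_positions)  # [0, 1, 2, 3, 4, 5, 6, 7, 8]
print(semantic_interfaces(ICONS)[1][:3])  # ['icon_1', 'icon_2', 'icon_3']
```

A cyclic shift is only one way to obtain nine distinct permutations. A balanced Latin square or nine random permutations would also satisfy the description.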

3.3. Experimental Design

- Task 1 (stimulus-driven button selection): In each trial, a red button appeared at a random position among the nine buttons of the virtual interface (see Figure 3a). Each red-button position was repeated three times. Participants tapped the red button with the index finger of their virtual right hand, whereupon the entire interface vanished immediately.

- Task 2 (goal-driven button selection): Before each trial, the virtual environment displayed a randomly selected instruction in Chinese specifying a functional requirement (i.e., a target button and its position; see Figure 3b). Each instruction was repeated three times. Participants tapped the corresponding target button with the index finger of their virtual right hand, after which the interface disappeared automatically.
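Each of the nine red-button positions was repeated three times, so Task 1 comprises 9 × 3 = 27 selection trials. A minimal sketch of such a randomized schedule follows. It assumes the repetitions were fully interleaved, because the text says only that the red button appeared randomly and does not mention blocking. The `seed` parameter is a hypothetical convenience for reproducibility. The same helper could take the Task 2 instruction set, but that set's size is not stated in this section.

```python
import random
from collections import Counter
from typing import Hashable, List, Optional, Sequence


def build_trials(targets: Sequence[Hashable], repetitions: int = 3,
                 seed: Optional[int] = None) -> List[Hashable]:
    """Return a shuffled trial list in which every target occurs `repetitions` times."""
    trials = [target for target in targets for _ in range(repetitions)]
    random.Random(seed).shuffle(trials)  # interleave repetitions in random order
    return trials


# Task 1: the targets are the nine red-button positions (0-8)
task1 = build_trials(range(9), repetitions=3, seed=42)
print(len(task1), Counter(task1))
```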

3.4. Experimental Procedure

4. Attention State Prediction Model Development

4.1. Data Preprocessing

4.2. Feature Extraction and Ground Truth

4.3. Model Development

5. Results

5.1. Comparative Performance Across Models

5.2. Effects of Different Time Windows

5.3. Analysis of the Optimal Window and Model

5.4. Impact of Different Feature Categories on Prediction Results

6. Discussion

6.1. Model Performance Comparison

6.2. Effect of Time-Window Size

6.3. Feature Importance

6.4. Differences in Eye–Hand Coordination Across Attentional States

7. Implications and Limitations

7.1. Implications

7.2. Limitations

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Schmidt, A. Implicit human computer interaction through context. Pers. Technol. 2000, 4, 191–199.

2. Karaman, Ç.Ç.; Sezgin, T.M. Gaze-based predictive user interfaces: Visualizing user intentions in the presence of uncertainty. Int. J. Hum.-Comput. Stud. 2018, 111, 78–91.

3. Baker, C.; Fairclough, S.H. Adaptive virtual reality. In Current Research in Neuroadaptive Technology; Elsevier: Amsterdam, The Netherlands, 2022; pp. 159–176.

4. Ajzen, I. Understanding Attitudes and Predicting Social Behavior; Prentice-Hall: Englewood Cliffs, NJ, USA, 1980.

5. Ajzen, I. The theory of planned behavior. Organ. Behav. Hum. Decis. Process. 1991, 50, 179–211.

6. Katsuki, F.; Constantinidis, C. Bottom-up and top-down attention: Different processes and overlapping neural systems. Neuroscientist 2014, 20, 509–521.

7. Du, X.; Yu, M.; Zhang, Z.; Tong, M.; Zhu, Y.; Xue, C. A Task- and Role-Oriented Design Method for Multi-User Collaborative Interfaces. Sensors 2025, 25, 1760.

8. Kang, J.S.; Park, U.; Gonuguntla, V.; Veluvolu, K.C.; Lee, M. Human implicit intent recognition based on the phase synchrony of EEG signals. Pattern Recognit. Lett. 2015, 66, 144–152.

9. Castner, N.; Geßler, L.; Geisler, D.; Hüttig, F.; Kasneci, E. Towards expert gaze modeling and recognition of a user’s attention in realtime. Procedia Comput. Sci. 2020, 176, 2020–2029.

10. Lochbihler, A.; Wallace, B.; Van Benthem, K.; Herdman, C.; Sloan, W.; Brightman, K.; Goubran, R.; Knoefel, F.; Marshall, S. Metrics in a Dynamic Gaze Environment. In Proceedings of the 2024 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Eindhoven, The Netherlands, 26–28 June 2024; pp. 1–6.

11. Jiang, G.; Chen, H.; Wang, C.; Zhou, G.; Raza, M. Analysis of Flight Attention State Based on Visual Gaze Behavior. In Proceedings of the International Conference on Multi-Modal Information Analytics, Hohhot, China, 22–23 April 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 942–950.

12. Huang, J.; White, R.; Buscher, G. User see, user point: Gaze and cursor alignment in web search. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012; pp. 1341–1350.

13. Rappa, N.A.; Ledger, S.; Teo, T.; Wai Wong, K.; Power, B.; Hilliard, B. The use of eye tracking technology to explore learning and performance within virtual reality and mixed reality settings: A scoping review. Interact. Learn. Environ. 2022, 30, 1338–1350.

14. Clay, V.; König, P.; Koenig, S. Eye tracking in virtual reality. J. Eye Mov. Res. 2019, 12, 10–16910.

15. Wozniak, P.; Vauderwange, O.; Mandal, A.; Javahiraly, N.; Curticapean, D. Possible applications of the LEAP motion controller for more interactive simulated experiments in augmented or virtual reality. In Proceedings of the Optics Education and Outreach IV, SPIE, San Diego, CA, USA, 28 August–1 September 2016; Volume 9946, pp. 234–245.

16. Scheggi, S.; Meli, L.; Pacchierotti, C.; Prattichizzo, D. Touch the virtual reality: Using the leap motion controller for hand tracking and wearable tactile devices for immersive haptic rendering. In Proceedings of the ACM SIGGRAPH 2015 Posters, Los Angeles, CA, USA, 9–13 August 2015; p. 1.

17. Cariani, P.A. On the Design of Devices with Emergent Semantic Functions. Ph.D. Thesis, State University of New York Binghamton, Binghamton, NY, USA, 1989.

18. Ajzen, I. From intentions to actions: A theory of planned behavior. In Action Control: From Cognition to Behavior; Springer: Berlin/Heidelberg, Germany, 1985.

19. Borji, A.; Itti, L. State-of-the-art in visual attention modeling. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 185–207.

20. Itti, L.; Koch, C. Computational modelling of visual attention. Nat. Rev. Neurosci. 2001, 2, 194–203.

21. Egeth, H.E.; Yantis, S. Visual attention: Control, representation, and time course. Annu. Rev. Psychol. 1997, 48, 269–297.

22. Lau, H.C.; Rogers, R.D.; Haggard, P.; Passingham, R.E. Attention to intention. Science 2004, 303, 1208–1210.

23. Boussaoud, D. Attention versus intention in the primate premotor cortex. Neuroimage 2001, 14, S40–S45.

24. Castiello, U. Understanding other people’s actions: Intention and attention. J. Exp. Psychol. Hum. Percept. Perform. 2003, 29, 416.

25. Wolfe, J.M.; Cave, K.R.; Franzel, S.L. Guided search: An alternative to the feature integration model for visual search. J. Exp. Psychol. Hum. Percept. Perform. 1989, 15, 419.

26. Wolfe, J.M. Guided Search 6.0: An updated model of visual search. Psychon. Bull. Rev. 2021, 28, 1060–1092.

27. Shen, I.C.; Cherng, F.Y.; Igarashi, T.; Lin, W.C.; Chen, B.Y. EvIcon: Designing High-Usability Icon with Human-in-the-loop Exploration and IconCLIP. In Computer Graphics Forum; Wiley Online Library: Hoboken, NJ, USA, 2023; Volume 42, p. e14924.

28. Hou, G.; Hu, Y. Designing combinations of pictogram and text size for icons: Effects of text size, pictogram size, and familiarity on older adults’ visual search performance. Hum. Factors 2023, 65, 1577–1595.

29. Reijnen, E.; Vogt, L.L.; Fiechter, J.P.; Kühne, S.J.; Meister, N.; Venzin, C.; Aebersold, R. Well-designed medical pictograms accelerate search. Appl. Ergon. 2022, 103, 103799.

30. Xie, J.; Unnikrishnan, D.; Williams, L.; Encinas-Oropesa, A.; Mutnuri, S.; Sharma, N.; Jeffrey, P.; Zhu, B.; Lighterness, P. Influence of domain experience on icon recognition and preferences. Behav. Inf. Technol. 2022, 41, 85–95.

31. Ding, Y.; Naber, M.; Paffen, C.; Gayet, S.; Van der Stigchel, S. How retaining objects containing multiple features in visual working memory regulates the priority for access to visual awareness. Conscious. Cogn. 2021, 87, 103057.

32. Alebri, M.; Costanza, E.; Panagiotidou, G.; Brumby, D.P.; Althani, F.; Bovo, R. Visualisations with semantic icons: Assessing engagement with distracting elements. Int. J. Hum.-Comput. Stud. 2024, 191, 103343.

33. Treisman, A.M.; Gelade, G. A feature-integration theory of attention. Cogn. Psychol. 1980, 12, 97–136.

34. Quinlan, P.T. Visual feature integration theory: Past, present, and future. Psychol. Bull. 2003, 129, 643.

35. Bacon, W.F.; Egeth, H.E. Overriding stimulus-driven attentional capture. Percept. Psychophys. 1994, 55, 485–496.

36. Belopolsky, A.V.; Zwaan, L.; Theeuwes, J.; Kramer, A.F. The size of an attentional window modulates attentional capture by color singletons. Psychon. Bull. Rev. 2007, 14, 934–938.

37. Yamin, P.A.; Park, J.; Kim, H.K.; Hussain, M. Effects of button colour and background on augmented reality interfaces. Behav. Inf. Technol. 2024, 43, 663–676.

38. Milne, A.J. Hex Player – A Virtual Musical Controller. In Proceedings of the International Conference on New Interfaces for Musical Expression, Oslo, Norway, 30 May–1 June 2011; pp. 244–247.

39. Yarbus, A.L. Eye movements during perception of complex objects. In Eye Movements and Vision; Springer: Boston, MA, USA, 1967; pp. 171–211.

40. Borji, A.; Itti, L. Defending Yarbus: Eye movements reveal observers’ task. J. Vis. 2014, 14, 29.

41. Jang, Y.M.; Mallipeddi, R.; Lee, S.; Kwak, H.W.; Lee, M. Human intention recognition based on eyeball movement pattern and pupil size variation. Neurocomputing 2014, 128, 421–432.

42. Joseph MacInnes, W.; Hunt, A.R.; Clarke, A.D.; Dodd, M.D. A generative model of cognitive state from task and eye movements. Cogn. Comput. 2018, 10, 703–717.

43. Kootstra, T.; Teuwen, J.; Goudsmit, J.; Nijboer, T.; Dodd, M.; Van der Stigchel, S. Machine learning-based classification of viewing behavior using a wide range of statistical oculomotor features. J. Vis. 2020, 20, 1.

44. Kotseruba, I.; Tsotsos, J.K. Attention for vision-based assistive and automated driving: A review of algorithms and datasets. IEEE Trans. Intell. Transp. Syst. 2022, 23, 19907–19928.

45. Huang, J.; White, R.W.; Dumais, S. No clicks, no problem: Using cursor movements to understand and improve search. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Vancouver, BC, Canada, 7–12 May 2011; pp. 1225–1234.

46. Raghunath, V.; Braxton, M.O.; Gagnon, S.A.; Brunyé, T.T.; Allison, K.H.; Reisch, L.M.; Weaver, D.L.; Elmore, J.G.; Shapiro, L.G. Mouse cursor movement and eye tracking data as an indicator of pathologists’ attention when viewing digital whole slide images. J. Pathol. Inform. 2012, 3, 43.

47. Goecks, J.; Shavlik, J. Learning users’ interests by unobtrusively observing their normal behavior. In Proceedings of the 5th International Conference on Intelligent User Interfaces, New Orleans, LA, USA, 9–12 January 2000; pp. 129–132.

48. Xu, H.; Xiong, A. Advances and disturbances in sEMG-based intentions and movements recognition: A review. IEEE Sens. J. 2021, 21, 13019–13028.

49. He, P.; Jin, M.; Yang, L.; Wei, R.; Liu, Y.; Cai, H.; Liu, H.; Seitz, N.; Butterfass, J.; Hirzinger, G. High performance DSP/FPGA controller for implementation of HIT/DLR dexterous robot hand. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA’04), New Orleans, LA, USA, 26 April–1 May 2004; Volume 4, pp. 3397–3402.

50. Zhang, D.; Chen, X.; Li, S.; Hu, P.; Zhu, X. EMG controlled multifunctional prosthetic hand: Preliminary clinical study and experimental demonstration. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 4670–4675.

51. Zhang, H.; Zhao, Z.; Yu, Y.; Gui, K.; Sheng, X.; Zhu, X. A feasibility study on an intuitive teleoperation system combining IMU with sEMG sensors. In Proceedings of the Intelligent Robotics and Applications: 11th International Conference, ICIRA 2018, Newcastle, NSW, Australia, 9–11 August 2018; Proceedings, Part I; Springer: Berlin/Heidelberg, Germany, 2018; pp. 465–474.

52. Buerkle, A.; Eaton, W.; Lohse, N.; Bamber, T.; Ferreira, P. EEG based arm movement intention recognition towards enhanced safety in symbiotic Human-Robot Collaboration. Robot. Comput.-Integr. Manuf. 2021, 70, 102137.

53. Schreiber, M.A.; Trkov, M.; Merryweather, A. Influence of frequency bands in EEG signal to predict user intent. In Proceedings of the 2019 9th International IEEE/EMBS Conference on Neural Engineering (NER), San Francisco, CA, USA, 20–23 March 2019; pp. 1126–1129.

54. Baruah, M.; Banerjee, B.; Nagar, A.K. Intent prediction in human–human interactions. IEEE Trans. Hum.-Mach. Syst. 2023, 53, 458–463.

55. Sharma, M.; Chen, S.; Müller, P.; Rekrut, M.; Krüger, A. Implicit Search Intent Recognition using EEG and Eye Tracking: Novel Dataset and Cross-User Prediction. In Proceedings of the 25th International Conference on Multimodal Interaction, Paris, France, 9–13 October 2023; pp. 345–354.

56. Mathis, F.; Williamson, J.; Vaniea, K.; Khamis, M. RubikAuth: Fast and secure authentication in virtual reality. In Proceedings of the Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–9.

57. Agtzidis, I.; Startsev, M.; Dorr, M. Smooth pursuit detection based on multiple observers. In Proceedings of the Ninth Biennial ACM Symposium on Eye Tracking Research & Applications, Charleston, SC, USA, 14–17 March 2016; pp. 303–306.

58. Meyer, D.E.; Abrams, R.A.; Kornblum, S.; Wright, C.E.; Keith Smith, J. Optimality in human motor performance: Ideal control of rapid aimed movements. Psychol. Rev. 1988, 95, 340.

59. Galazka, M.A.; Åsberg Johnels, J.; Zürcher, N.R.; Hippolyte, L.; Lemonnier, E.; Billstedt, E.; Gillberg, C.; Hadjikhani, N. Pupillary contagion in autism. Psychol. Sci. 2019, 30, 309–315.

60. Krejtz, K.; Duchowski, A.; Szmidt, T.; Krejtz, I.; González Perilli, F.; Pires, A.; Vilaro, A.; Villalobos, N. Gaze transition entropy. ACM Trans. Appl. Percept. (TAP) 2015, 13, 1–20.

61. Sun, Q.; Zhou, Y.; Gong, P.; Zhang, D. Attention Detection Using EEG Signals and Machine Learning: A Review. Mach. Intell. Res. 2025, 22, 219–238.

62. Acı, Ç.İ.; Kaya, M.; Mishchenko, Y. Distinguishing mental attention states of humans via an EEG-based passive BCI using machine learning methods. Expert Syst. Appl. 2019, 134, 153–166.

63. Vulpe-Grigorasi, A.; Kren, Z.; Slijepčević, D.; Schmied, R.; Leung, V. Attention performance classification based on eye tracking and machine learning. In Proceedings of the 2024 IEEE 17th International Scientific Conference on Informatics (Informatics), Poprad, Slovakia, 13–15 November 2024; pp. 431–435.

64. Du, N.; Zhou, F.; Pulver, E.M.; Tilbury, D.M.; Robert, L.P.; Pradhan, A.K.; Yang, X.J. Predicting driver takeover performance in conditionally automated driving. Accid. Anal. Prev. 2020, 148, 105748.

65. Kramer, S.E.; Lorens, A.; Coninx, F.; Zekveld, A.A.; Piotrowska, A.; Skarzynski, H. Processing load during listening: The influence of task characteristics on the pupil response. Lang. Cogn. Process. 2013, 28, 426–442.

66. Rayner, K. The 35th Sir Frederick Bartlett Lecture: Eye movements and attention in reading, scene perception, and visual search. Q. J. Exp. Psychol. 2009, 62, 1457–1506.

67. Castelhano, M.S.; Rayner, K. Eye movements during reading, visual search, and scene perception: An overview. In Cognitive and Cultural Influences on Eye Movements; Tianjin People’s Publishing House: Tianjin, China, 2023; pp. 3–34.

68. Binsted, G.; Chua, R.; Helsen, W.; Elliott, D. Eye–hand coordination in goal-directed aiming. Hum. Mov. Sci. 2001, 20, 563–585.

69. Smith, B.A.; Ho, J.; Ark, W.; Zhai, S. Hand eye coordination patterns in target selection. In Proceedings of the 2000 Symposium on Eye Tracking Research & Applications, Palm Beach Gardens, FL, USA, 6–8 November 2000; pp. 117–122.

70. Chowdhury, M.Z.I.; Turin, T.C. Variable selection strategies and its importance in clinical prediction modelling. Fam. Med. Community Health 2020, 8, e000262.

| Feature Category | Subcategory | Feature Description | Unit | Count | Selected Count |
|---|---|---|---|---|---|
| Eye movement | Fixation behavior | Fixation count | N | 1 | – |
| | | Fixation rate | N/s | 1 | 1 |
| | | Fixation duration (Average, Maximum, Total) | s | 3 | 2 |
| | Saccadic behavior | Saccade count | N | 1 | – |
| | | Saccade rate | N/s | 1 | 1 |
| | | Saccade speed (Average, Maximum) | deg/s | 2 | 1 |
| | | Saccade acceleration (Average, Maximum) | deg/s² | 2 | 1 |
| | | Saccade amplitude (Average, Maximum) | deg | 2 | 2 |
| | Blink behavior | Blink count | N | 1 | – |
| | | Blink rate | N/s | 1 | 1 |
| | Area of interest | Transition entropy [60] | – | 1 | 1 |
| | | Stationary entropy [60] | – | 1 | 1 |
| Hand dynamics | Motion speed | Overall velocity (Average, Maximum) | m/s | 2 | 2 |
| | | Velocity in X, Y, Z directions (Average, Maximum) | m/s | 6 | 3 |
| | Motion acceleration | Overall acceleration (Average, Maximum) | m/s² | 2 | 2 |
| | | Acceleration in X, Y, Z directions (Average, Maximum) | m/s² | 6 | 3 |
| | Others | Motion peak count | N | 1 | 1 |
| Pupil signals | Pupil changes | Pupil diameter change (Average, Maximum) | mm | 2 | 2 |
| | | Pupil–iris ratio change (Average, Maximum) | – | 2 | – |
| Total | | | | 38 | 24 |

| Time Window | Model | Weighted F1-Score | Accuracy |
|---|---|---|---|
| 0.5 s | RF | 0.7265 | 0.7368 |
| 1.0 s | XG | 0.8669 | 0.8690 |
| 1.5 s | XG | 0.8444 | 0.8476 |
| 2.0 s | LR | 0.8065 | 0.8097 |
| 2.5 s | XG | 0.8482 | 0.8500 |
| 3.0 s | GB | 0.8835 | 0.8860 |
| 3.5 s | LDA | 0.8790 | 0.8839 |
| 4.0 s | GB | 0.8657 | 0.8676 |
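Weighted F1 averages the per-class F1 scores, weighting each class by its number of true samples (its support). This weighting matters whenever the bottom-up and top-down samples are not balanced. The sketch below computes it from scratch and should agree with scikit-learn's `f1_score(..., average="weighted")`. It then selects the best row of the table above. The class labels "BU" and "TD" are hypothetical placeholders.

```python
from collections import Counter
from typing import Hashable, Sequence


def weighted_f1(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Support-weighted mean of per-class F1 = 2TP / (2TP + FP + FN)."""
    support = Counter(y_true)
    total = len(y_true)
    score = 0.0
    for cls, n_cls in support.items():
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != cls and p == cls)
        fn = n_cls - tp
        f1 = 2 * tp / (2 * tp + fp + fn)  # denominator > 0 because n_cls > 0
        score += f1 * n_cls / total
    return score


# (time window in s, best model, weighted F1) copied from the table above
RESULTS = [(0.5, "RF", 0.7265), (1.0, "XG", 0.8669), (1.5, "XG", 0.8444),
           (2.0, "LR", 0.8065), (2.5, "XG", 0.8482), (3.0, "GB", 0.8835),
           (3.5, "LDA", 0.8790), (4.0, "GB", 0.8657)]
best_window, best_model, best_f1 = max(RESULTS, key=lambda row: row[2])
print(best_window, best_model, best_f1)  # 3.0 GB 0.8835
```

On the reported values, the 3.0 s window with GB has the highest weighted F1 (0.8835). The 3.5 s window with LDA (0.8790) follows closely.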

| Feature | Importance Score |
|---|---|
| Maximum Acceleration | 0.1864 |
| Fixation Rate | 0.1731 |
| Mean Saccadic Amplitude | 0.1651 |
| Mean Velocity in Z-Direction | 0.0716 |
| Total Fixation Duration | 0.0548 |
| Maximum Pupil Diameter Difference | 0.0427 |
| Maximum Saccadic Amplitude | 0.0383 |
| Saccadic Rate | 0.0349 |
| Mean Velocity | 0.0334 |
| Mean Fixation Duration | 0.0330 |
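Suppose these scores are normalized importances that can be summed. That is an assumption, because this section does not name the importance method. Under that reading, grouping the scores by the categories of the feature table shows how much each modality contributes to the top ten. The modality assignment below is inferred from the feature names.

```python
from typing import Dict, Tuple

# Top-10 scores copied from the table above, tagged with an inferred modality
TOP10: Dict[str, Tuple[str, float]] = {
    "Maximum Acceleration": ("hand", 0.1864),
    "Fixation Rate": ("eye", 0.1731),
    "Mean Saccadic Amplitude": ("eye", 0.1651),
    "Mean Velocity in Z-Direction": ("hand", 0.0716),
    "Total Fixation Duration": ("eye", 0.0548),
    "Maximum Pupil Diameter Difference": ("pupil", 0.0427),
    "Maximum Saccadic Amplitude": ("eye", 0.0383),
    "Saccadic Rate": ("eye", 0.0349),
    "Mean Velocity": ("hand", 0.0334),
    "Mean Fixation Duration": ("eye", 0.0330),
}


def importance_by_modality(scores: Dict[str, Tuple[str, float]]) -> Dict[str, float]:
    """Sum the importance scores of all features that share a modality."""
    totals: Dict[str, float] = {}
    for modality, value in scores.values():
        totals[modality] = totals.get(modality, 0.0) + value
    return totals


totals = importance_by_modality(TOP10)
print({m: round(v, 4) for m, v in totals.items()})
# {'hand': 0.2914, 'eye': 0.4992, 'pupil': 0.0427}
```

On this reading, the top ten sum to 0.8333. Eye-movement features account for about 0.50 of that total, hand features about 0.29, and the single pupil feature about 0.04.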

| Feature | Bottom-Up | Top-Down | Difference (Top-Down − Bottom-Up) |
|---|---|---|---|
| Maximum Acceleration | −0.0111 | 0.0111 | 0.0221 |
| Mean Acceleration | 0.0069 | −0.0069 | −0.0138 |
| Total Fixation Duration | −0.0054 | 0.0054 | 0.0109 |
| Saccadic Rate | −0.0052 | 0.0052 | 0.0104 |
| Fixation Rate | −0.0038 | 0.0038 | 0.0076 |
| Mean Velocity in Z-Direction | −0.0030 | 0.0030 | 0.0061 |
| Mean Acceleration in X-Direction | 0.0025 | −0.0025 | −0.0051 |
| Transition Entropy | 0.0024 | −0.0024 | −0.0048 |
| Stationary Entropy | −0.0021 | 0.0021 | 0.0042 |
| Blink Rate | −0.0015 | 0.0015 | 0.0030 |
| Maximum Velocity | 0.0001 | −0.0001 | −0.0002 |
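The bottom-up and top-down columns are exact mirror images. That pattern would be expected if, for example, the values are mean SHAP-style contributions from a two-class model. This is an assumption, since the section does not name the method.

On that reading, the Difference column is top-down minus bottom-up. The sketch below recomputes it from the rounded table values and ranks the features by absolute separation. The recomputed differences match the reported ones to within ±0.0001, which is consistent with rounding (for example, 0.0222 versus 0.0221 for Maximum Acceleration).

```python
from typing import List, Tuple

# (feature, bottom-up, top-down, reported difference) copied from the table above
ROWS: List[Tuple[str, float, float, float]] = [
    ("Maximum Acceleration", -0.0111, 0.0111, 0.0221),
    ("Mean Acceleration", 0.0069, -0.0069, -0.0138),
    ("Total Fixation Duration", -0.0054, 0.0054, 0.0109),
    ("Saccadic Rate", -0.0052, 0.0052, 0.0104),
    ("Fixation Rate", -0.0038, 0.0038, 0.0076),
    ("Mean Velocity in Z-Direction", -0.0030, 0.0030, 0.0061),
    ("Mean Acceleration in X-Direction", 0.0025, -0.0025, -0.0051),
    ("Transition Entropy", 0.0024, -0.0024, -0.0048),
    ("Stationary Entropy", -0.0021, 0.0021, 0.0042),
    ("Blink Rate", -0.0015, 0.0015, 0.0030),
    ("Maximum Velocity", 0.0001, -0.0001, -0.0002),
]


def rank_by_separation(rows: List[Tuple[str, float, float, float]]) -> List[Tuple[str, float]]:
    """Return (feature, top-down minus bottom-up), sorted by absolute value."""
    diffs = [(name, top_down - bottom_up) for name, bottom_up, top_down, _ in rows]
    return sorted(diffs, key=lambda item: abs(item[1]), reverse=True)


for name, diff in rank_by_separation(ROWS):
    print(f"{name:34s} {diff:+.4f}")
```

The resulting order matches the row order of the table. This suggests the table is already sorted by the magnitude of the class difference.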

| Rank | Bottom-Up Feature | Value | Top-Down Feature | Value |
|---|---|---|---|---|
| 1 | Mean Acceleration | 0.0068 | Maximum Acceleration | 0.0111 |
| 2 | Mean Saccadic Amplitude | 0.0068 | Total Fixation Duration | 0.0054 |
| 3 | Mean Acceleration in X-Direction | 0.0025 | Saccadic Rate | 0.0052 |
| 4 | Transition Entropy | 0.0024 | Fixation Rate | 0.0038 |
| 5 | Maximum Saccadic Amplitude | 0.0014 | Mean Velocity in Z-Direction | 0.0030 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Du, X.; Wu, J.; Tang, X.; Lv, X.; Jia, L.; Xue, C. Predicting User Attention States from Multimodal Eye–Hand Data in VR Selection Tasks. Electronics 2025, 14, 2052. https://doi.org/10.3390/electronics14102052


Chicago/Turabian Style: Du, Xiaoxi, Jinchun Wu, Xinyi Tang, Xiaolei Lv, Lesong Jia, and Chengqi Xue. 2025. "Predicting User Attention States from Multimodal Eye–Hand Data in VR Selection Tasks" Electronics 14, no. 10: 2052. https://doi.org/10.3390/electronics14102052
