FCT: An Adaptive Model for Classification of Mixed Radio Signals

Abstract

1. Introduction

- (1) A Transformer-based model is proposed to improve the classification of radio signals at low SNR.

- (2) Building on an FNN, a CNN, and a Transformer, a new adaptive model, FCT, is proposed to achieve better classification performance on mixed radio signals. It performs well on both low-SNR and high-SNR signals.
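The adaptive idea in contribution (2) can be pictured as a dispatch step. The sketch below is a minimal illustration, not the authors' implementation: it assumes the FNN's SNR estimate selects which branch classifies a signal, and every name in it (`make_fct_router`, `toy_gate`, the branch labels, the 0.5 threshold) is hypothetical. The stand-in gate and branches are hand-written rules where the real model would use trained networks.

```python
from typing import Callable, Sequence

Signal = Sequence[float]
Classifier = Callable[[Signal], str]


def make_fct_router(
    snr_gate: Callable[[Signal], bool],
    high_snr_model: Classifier,
    low_snr_model: Classifier,
) -> Classifier:
    """Return a classifier that dispatches each signal to one of two branches.

    snr_gate stands in for the FNN (True means "high SNR"); the two models
    stand in for the CNN and Transformer branches. All three are placeholders.
    """

    def classify(signal: Signal) -> str:
        branch = high_snr_model if snr_gate(signal) else low_snr_model
        return branch(signal)

    return classify


# Hypothetical stand-in for the SNR recognizer: mean power against an
# arbitrary threshold. The threshold is an assumption, not a value from the paper.
def toy_gate(signal: Signal) -> bool:
    power = sum(x * x for x in signal) / len(signal)
    return power > 0.5


# Hypothetical branches that only report which path was taken.
fct = make_fct_router(
    snr_gate=toy_gate,
    high_snr_model=lambda s: "cnn-branch",
    low_snr_model=lambda s: "transformer-branch",
)

print(fct([1.0, -1.0, 1.0, -1.0]))  # routed to the CNN stand-in
print(fct([0.1, -0.2, 0.1, 0.0]))   # routed to the Transformer stand-in
```

Keeping the gate and the two branches as interchangeable callables means each part can be trained or replaced separately, which is one plausible reason to structure an adaptive model this way.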

2. Related Work

3. Methodology

3.1. The FCT Model for Mixed Radio Signal Classification

3.2. The FNN for Recognizing SNR

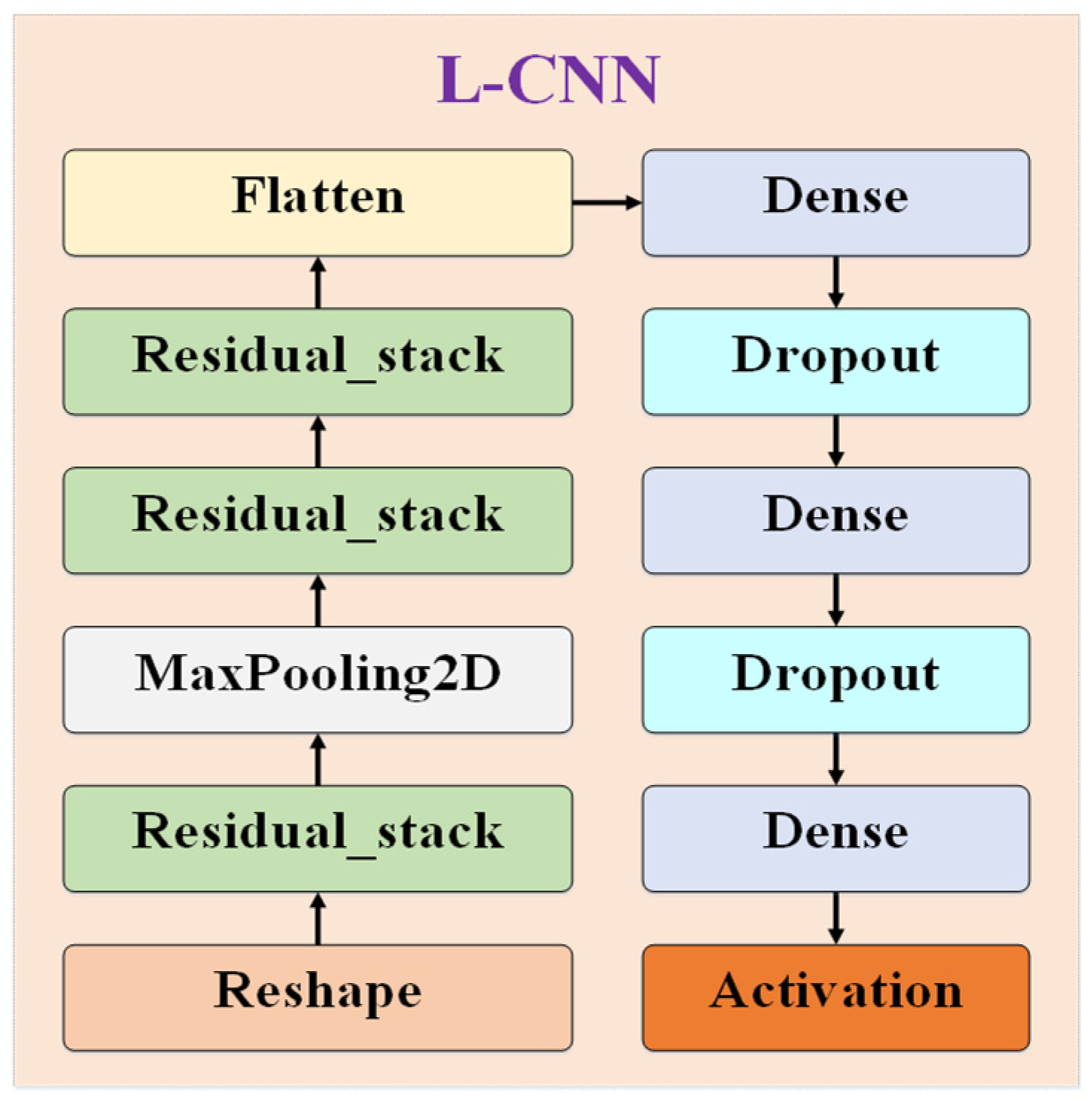

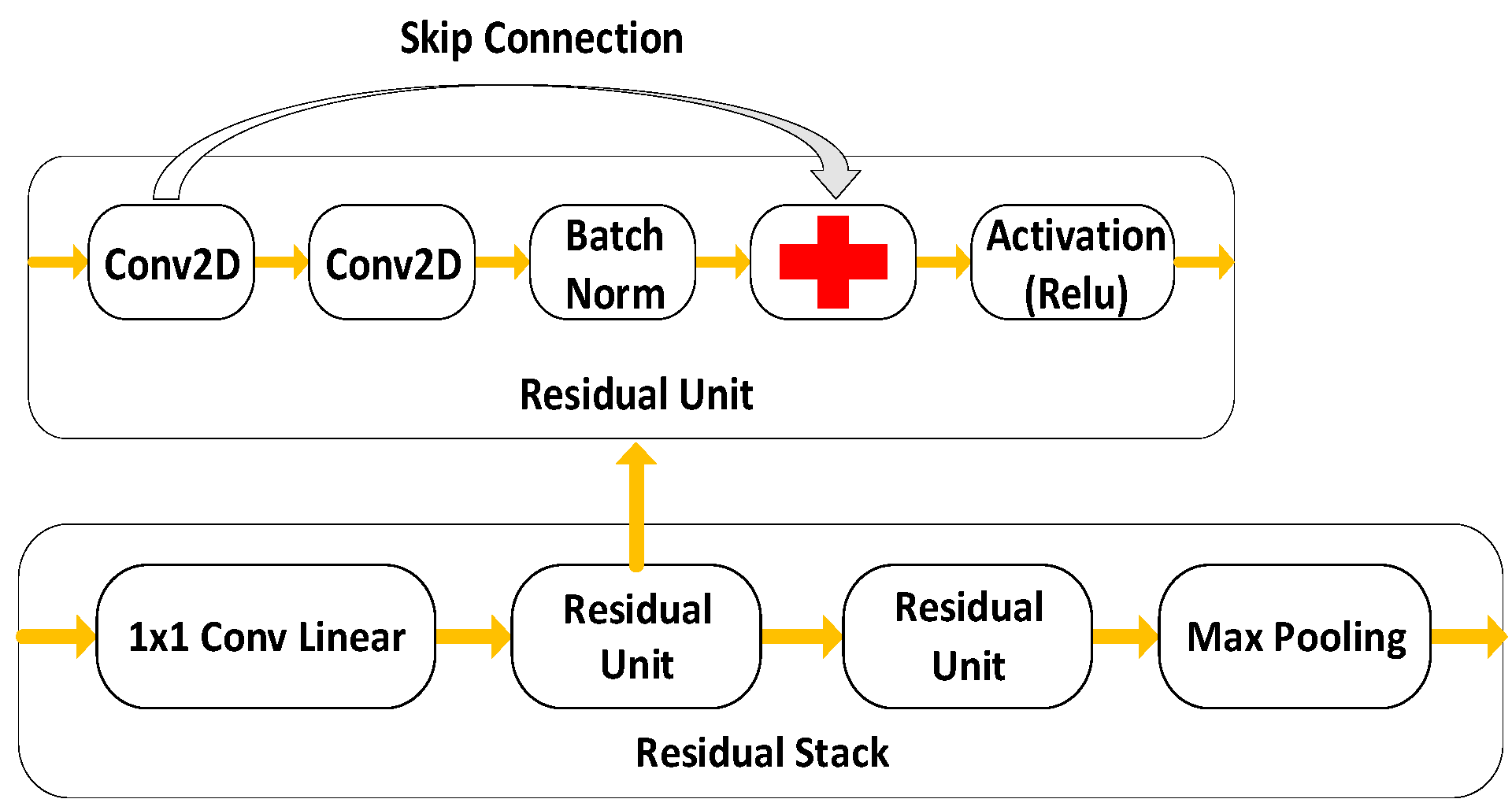

3.3. The CNN for High SNR Signal Classification (L-CNN)

3.4. The Transformer for Low SNR Signal Classification (L-Transformer)

3.5. The Training Method

4. Experiments

4.1. Dataset Description

4.2. Experimental Settings

4.3. Baseline Model

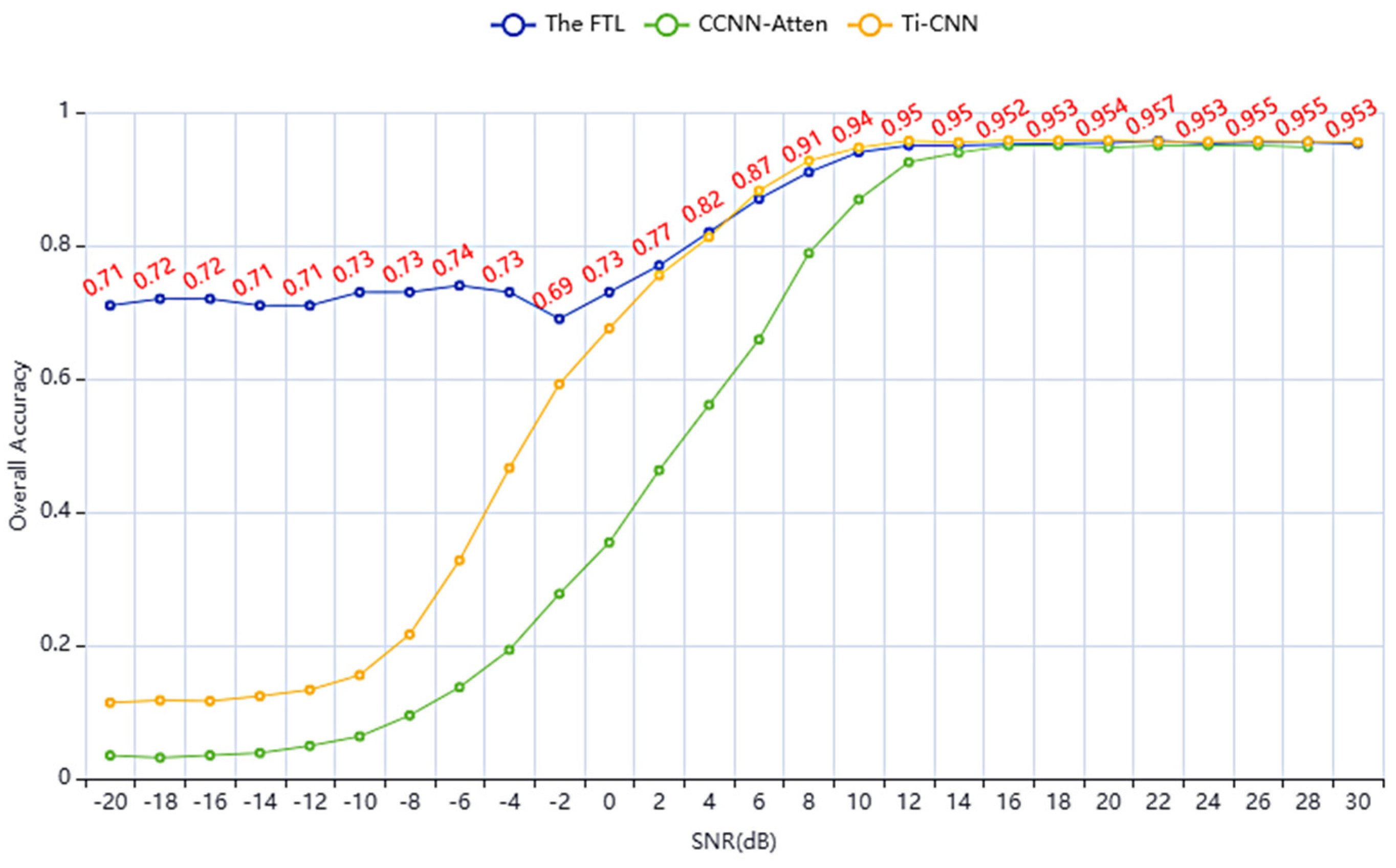

4.4. Comparison with Baseline Method

4.4.1. The Result of Classification Accuracy

4.4.2. The Result of the Confusion Matrix

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Model | Ti-CNN | CCNN-Atten | FCT |
|---|---|---|---|
| Accuracy | 65.11% | 57.92% | 84.04% |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liao, M.; Liang, Y.; Lv, P. FCT: An Adaptive Model for Classification of Mixed Radio Signals. Electronics 2025, 14, 2028. https://doi.org/10.3390/electronics14102028