1. Introduction

Cloud computing has emerged as a fundamental component of modern technology, offering scalable solutions for data management, computational power, and operational flexibility across various industries [1]. As organizations migrate their operations and data to cloud environments, the need for efficient and resilient cloud infrastructures has significantly increased. Cloud services enable users to dynamically scale resources, manage large datasets, and optimize performance, transforming how businesses and individuals interact with technology [2]. However, alongside these advantages, significant security and performance challenges persist. One of the most pressing concerns in cloud computing is ensuring system reliability against security threats. Distributed denial-of-service (DDoS) attacks, in particular, have become one of the most prevalent methods of targeting cloud environments. These attacks aim to disrupt network resources and compromise system functionality, often through malware infections such as viruses [3]. By overloading system resources, DDoS attacks can severely degrade performance, cause service disruptions, and, in severe cases, lead to prolonged outages that impact both providers and users [4]. Such interruptions not only threaten the operational stability of cloud systems but also undermine user confidence in these platforms, especially when handling sensitive data and critical computational tasks. Additionally, inefficient task scheduling in virtualized environments exacerbates these issues by increasing task completion time (makespan), ultimately raising costs for cloud consumers. Prolonged makespan further contributes to increased energy consumption and maintenance costs for cloud providers. Given these challenges, the implementation of advanced scheduling mechanisms and security strategies is essential to enhancing cloud system resilience while ensuring optimal performance under adversarial conditions.

Given the critical importance of maintaining uninterrupted cloud services, this study examines task scheduling challenges in cloud computing with a primary focus on security-driven performance optimization. Task scheduling, which involves the organization and management of computational workloads within a cloud environment, plays a pivotal role in ensuring both system efficiency and security [5]. Traditional scheduling approaches often fall short in mitigating the effects of malicious activities, necessitating the adoption of more advanced and adaptive strategies [6,7,8]. In response to these limitations, this study explores the use of metaheuristic algorithms, renowned for their effectiveness in solving complex optimization problems, to develop scheduling mechanisms under simulated DDoS attack conditions.

This research aims to provide a comprehensive analysis of how cloud task scheduling can be optimized to withstand malicious activities. By examining the use of metaheuristic algorithms under adversarial conditions, this study not only offers new insights into resilient cloud scheduling practices but also contributes to the development of proactive security strategies. As discussed by Verma and Kaushal, task scheduling in cloud computing is classified as an NP-hard problem [9,10,11,12,13,14,15,16]. Due to its complexity, metaheuristic approaches are often preferred over deterministic methods for achieving performance-efficient solutions. However, search-based metaheuristic algorithms are prone to becoming trapped in local optima, a challenge exacerbated by increasing task and virtual machine (VM) counts, as well as by the presence of malicious activity, including DDoS attacks.

To address these challenges, this study leverages well-established metaheuristic techniques, including genetic algorithms (GAs), particle swarm optimization (PSO), and the artificial bee colony (ABC) algorithm, which are commonly employed in job-shop scheduling problems. These algorithms are evaluated in both normal and DDoS-affected cloud environments to determine their effectiveness in task scheduling under varying conditions. Additionally, a hybrid GA–PSO approach is included among the metaheuristic algorithms to assess its relative effectiveness under adversarial conditions.

The findings of this study address a notable gap in the existing literature by offering practical insights into improving the resilience of cloud computing environments against evolving cybersecurity threats. By demonstrating the applicability of hybrid metaheuristic scheduling techniques in hostile settings, this research contributes to the development of robust and adaptable cloud computing frameworks capable of sustaining high performance in the face of security threats.

This study differentiates itself from prior works by evaluating the stability and adaptability of the hybrid GA–PSO algorithm specifically under adversarial conditions caused by DDoS attacks. Furthermore, the integration of a stagnation-based control mechanism within the hybrid model, which has not been explored in previous studies, represents a novel contribution aimed at improving scheduling consistency in disrupted cloud environments. This focus on adversarial environments and the inclusion of stagnation control provide a unique perspective on how hybrid algorithms can be fine-tuned for better performance under real-world cybersecurity threats.

The remainder of this study is structured to provide a comprehensive analysis of cloud task scheduling challenges and possible solutions. Section 2 details the problem and examines the current state of workflow scheduling, with a particular emphasis on existing scheduling algorithms and the challenges posed by infrastructure-as-a-service (IaaS) environments. Section 3 presents the details of the simulation environment and experimental setup, outlining various cloud computing scenarios designed to evaluate scheduling performance under different conditions. Section 4 offers an in-depth performance evaluation of task scheduling, comparing results in both normal and DDoS-affected cloud environments. This section also discusses experimental findings, performance metrics, and their broader implications. Finally, Section 5 concludes the study by summarizing key insights and proposing future research directions aimed at optimizing cloud task scheduling while enhancing security and system resilience.

2. Related Work

A significant number of software vendors and developers have integrated cloud-hosted application programming interfaces (APIs) into their systems to build innovative and value-added cloud-based solutions. However, executing applications in cloud environments inherently exposes system components to persistent security threats, particularly DDoS attacks. The degradation of performance or runtime anomalies in remote system components can destabilize the entire cloud infrastructure. To assess system component reliability under these conditions, an approach based on concept drift has been introduced. Specifically, a singular value decomposition (SVD)-based technique has been proposed for runtime reliability anomaly detection (RAD), offering a systematic method for identifying deviations in system behavior [17].

Despite the advantages of cloud computing, both cloud users (CUs) and cloud service providers (CSPs) may experience severe operational challenges due to DDoS attacks, including service unavailability and prolonged response times. To mitigate the impact of such attacks, a threshold anomaly-based DDoS detection method has been developed. This approach is designed to reduce the consequences of DDoS attacks on CSPs by identifying and responding to potential security breaches in real time [18].

Edge computing has emerged as a solution to address the high latency and performance limitations associated with cloud computing; however, it also introduces new security risks. One of the most significant concerns is the vulnerability of edge servers to DDoS attacks, particularly when tasks are offloaded from centralized cloud environments. Research on countermeasures against edge-based DDoS attacks remains limited. To address this gap, a task offloading strategy known as EDM_TOS has been introduced. This method enhances security by redirecting tasks to reliable edge nodes based on risk assessment mechanisms, effectively reducing the risk of DDoS attacks at the edge computing layer [19].

The infrastructure-as-a-service (IaaS) model in cloud computing provides computing, networking, and storage resources as services available from a shared resource pool. One of the most significant challenges in this domain is the ability to accurately predict resource utilization in real time. By anticipating future demands, cloud data centers can dynamically scale resources, ensuring high service quality while minimizing energy consumption. However, due to the constantly fluctuating nature of cloud resource usage, generating precise predictions remains a complex task. To address this issue, recent research has explored the use of evolutionary neural networks (NNs) to predict CPU consumption levels in host machines. This approach integrates advanced optimization techniques such as PSO, differential evolution (DE), and covariance matrix adaptation evolution strategy (CMA-ES) to enhance the predictive capabilities of neural networks and improve resource allocation efficiency [20].

Task scheduling plays a fundamental role in cloud computing by optimizing resource utilization, reducing execution time, and enhancing overall system performance. However, the exponential increase in the number of tasks and the inherent complexity of scheduling problems create a vast search space, making efficient scheduling solutions highly challenging. To address this issue, a multi-objective cloud task scheduling approach has been proposed, leveraging the strengths of GAs and gravitational search algorithms (GSAs). This hybrid methodology aims to optimize key quality of service (QoS) parameters such as energy consumption, task completion time, resource utilization, and system throughput. To validate its effectiveness, the proposed scheduling method was tested in CloudSim using both real-time and synthetic workloads. Comparative analysis demonstrated that this approach outperforms conventional metaheuristic techniques evaluated under similar conditions [21].

Furthermore, a hybrid algorithm combining the grey wolf optimization (GWO) algorithm and a GA has been introduced to enhance cloud task scheduling. This hybrid GWO–GA approach aims to minimize execution time, energy consumption, and operational costs by integrating the GA’s crossover and mutation operators to improve optimization performance. Evaluations conducted using the CloudSim framework confirmed the efficiency of this hybrid method, demonstrating superior performance compared to existing scheduling techniques [22].

Another critical challenge in cloud computing lies in efficiently mapping tasks to available resources under uncertain user request patterns. While most scheduling approaches primarily focus on algorithmic design and optimization, they often neglect the influence of key uncertainty factors such as millions of instructions per second (MIPS) and network bandwidth on scheduling performance. To address this limitation, a novel scheduling method known as the chameleon and remora search optimization algorithm (CRSOA) has been proposed. By accounting for the direct impact of MIPS and bandwidth variations on VM performance, this approach enhances scheduling efficiency and ensures more effective task allocation in dynamic cloud environments [23]. Note that while the CRSOA has been identified as a suitable approach for handling uncertain request patterns, its implementation and evaluation under stochastic workload arrivals will be addressed in future work.

Attacks targeting cloud components can lead to unpredictable losses for both cloud service providers and users. One of the most critical categories of these attacks is the DDoS attack, which can severely disrupt customer experience, cause service outages, and, in extreme cases, lead to complete system failure and economic unsustainability. The rapid advancements in the Internet of Things (IoT) and network connectivity have inadvertently facilitated the spread of DDoS attacks by increasing their volume, frequency, and intensity. A recent review primarily focuses on identifying and addressing the gaps between emerging DDoS threats and the latest scientific and commercial defense mechanisms.

In particular, the review presents an up-to-date survey of DDoS detection methods, with an emphasis on anomaly-based detection techniques. Additionally, it provides an overview of current research tools, platforms, and datasets used in DDoS mitigation studies. A detailed analysis of machine learning-based detection approaches is conducted, examining their characteristics, strengths, limitations, and practical applications in cloud environments. By evaluating the effectiveness of these methods within the cloud computing context, the review contributes to a deeper understanding of their potential and limitations in mitigating evolving cyber threats [24].

Cloud computing environments face significant challenges in task scheduling and security, which have been the focus of numerous studies aimed at optimizing performance while addressing critical issues such as energy efficiency and cyber threats. Mansour Aldawood investigated VM placement challenges, emphasizing security risks like side-channel attacks arising from malicious VMs co-located on the same physical infrastructure. Aldawood proposed a secure VM placement model using optimization-based approaches, comparing stacking, random, and spread-based algorithms. Results demonstrated improved security through resource diversity and timing considerations, making a substantial contribution to the secure management of cloud environments [1].

The RADISH model tackled load balancing and resource optimization in IoT-fog multi-cloud setups. By integrating task classification and scheduling mechanisms, the model enhanced service level agreements (SLAs) and quality of service (QoS), as validated through CloudSim. However, the absence of a security framework limited its applicability in cyberattack scenarios [2]. Prathyusha and Govinda addressed DDoS attacks using hash-based detection techniques. Cryptographic functions like MD5 and SHA-256 were implemented, with SHA-256 outperforming in detection accuracy and DDoS mitigation. This study underscored the necessity of robust security protocols in virtualized environments [3].

Using CloudSim for performance analysis, a study on economic denial of sustainability (EDoS) attacks revealed significant resource degradation due to the presence of malicious scripts. These findings highlighted the importance of proactive defenses to maintain resource accessibility under hostile conditions [4]. With regard to task scheduling, Mangalampalli and Reddy developed a whale optimization algorithm (WOA)-based mechanism, which outperformed PSO, Cuckoo Search (CS), and GAs in terms of optimizing metrics such as makespan, migration time, and energy consumption. This approach provided effective solutions to scheduling inefficiencies [5].

Similarly, the improved coati optimization algorithm (ICOATS) introduced a multi-objective fitness function for VM assignments, achieving enhanced resource utilization and reduced makespan. This study showcased the potential of metaheuristic techniques to address performance challenges in cloud computing [6]. Advanced security frameworks have also been proposed. For instance, a hidden Markov model (HMM) integrated with honeypots identified malicious optical edge devices in SDN-based fog/cloud networks. The system demonstrated high attack detection rates and reduced false positives in simulated environments [7]. While task scheduling studies have primarily focused on metrics such as reliability and energy efficiency, the impact of security services on workflow execution remains underexplored. Researchers have extended CloudSim to evaluate the overheads of security services such as encryption and integrity verification, revealing trade-offs between enhanced security and increased execution times [8].

Nayebalsadr and Analoui’s research investigates the security vulnerabilities inherent in virtualization technologies. Virtualization facilitates the sharing of physical resources among VMs to reduce costs and enhance system reliability. However, the absence of performance isolation renders it susceptible to security vulnerabilities. The research focuses on the resource release attack; simulations conducted using CloudSim demonstrate that this attack significantly impacts VM behavior. The findings of this study are crucial for cloud providers in terms of identifying and mitigating such attacks [9].

A similar study focuses on detecting malicious activities in cloud computing environments. While cloud infrastructure offers scalable resources and services, it remains susceptible to exploitation by malicious actors as a platform for attacks. Specifically, a VM in the cloud can operate as a botnet member, generating excessive traffic directed at victims. To address this challenge, the study proposes a detection method based on the analysis of network parameters, aiming to prevent the misuse of cloud infrastructure. Source-based attack detection is performed using entropy and clustering techniques, achieving high performance and accurate VM classification rates in simulations conducted on CloudSim [10].

Another study explores the intersection of optimization and security in cloud computing, highlighting the need for integrated solutions that address both system performance and protection. Within this framework, metaheuristic algorithms, commonly utilized in the literature, are applied to solve the task scheduling problem in cloud environments. Hybridization experiments combining PSO and GAs were conducted to achieve enhanced results. This innovative approach leverages metaheuristic techniques to effectively schedule tasks while addressing diverse security challenges in cloud computing. The performance of task scheduling, evaluated with regard to makespan, was tested under normal conditions, as well as in environments affected by viruses and DDoS attacks, and compared with other studies in the literature, demonstrating its potential to balance optimization and security considerations [11].

The growing prevalence of DDoS attacks in cloud computing environments and their disruptive impact underscore the need for evaluating task scheduling performance under both normal and adversarial conditions. Given the gaps in the literature regarding this issue, there is a critical need for research that explores scheduling strategies capable of maintaining efficiency and resilience in the face of security threats [25,26]. This study addresses this need by examining the cloud task scheduling problem through the application of traditional metaheuristic algorithms, including GAs, PSO, and ABC. Furthermore, a hybrid GA–PSO approach is proposed to enhance scheduling efficiency and improve system robustness [27,28,29]. By systematically comparing scheduling performance in standard and DDoS-compromised environments, this research offers new insights into optimizing cloud task execution while mitigating the adverse effects of cyber threats. The findings contribute to the advancement of cloud scheduling methodologies by presenting a framework that can enhance computational efficiency and security, thereby ensuring sustainable and resilient cloud service operations in dynamic and high-risk environments [30,31].

3. Materials and Methods

In this section, we first introduce the dataset used in the study, followed by a detailed explanation of the experimental environment and the methodological steps applied to enhance the performance of PSO, GAs, ABC, and the GA–PSO hybrid algorithm. To ensure a controlled and efficient analysis, all computational experiments were conducted on a DELL Latitude 3540 system equipped with a 13th-generation Intel Core i5-1335U processor (1.30 GHz), 8 GB RAM, and a 500 GB SSD, operating on Windows 11 Pro. To support reproducibility, the simulation was implemented using CloudSim v3.0.3, an open-source, Java-based simulation framework developed by the CLOUDS Laboratory at the University of Melbourne, Melbourne, Australia. Parameters such as the number of tasks, number of VMs, and number of repetitions used in the CloudSim platform are shown in Table 1.

All experiments were executed deterministically using a fixed base seed of 0. For each independent repetition, the random number generator was seeded by adding the repetition index to this base seed. This approach ensures that rerunning any given scenario with the same seed and repetition index reproduces the exact same sequence of random values, cloudlet workloads, and algorithm behaviors, allowing bit-for-bit replication of all results.
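The seeding scheme described above can be illustrated with a minimal Python sketch. The study itself runs on CloudSim (Java), so this is only an analogue of the idea: the helper names `rng_for_repetition` and `sample_cloudlet_lengths`, and the cloudlet length range, are hypothetical and not taken from the paper.

```python
import random

BASE_SEED = 0  # fixed base seed stated in the text


def rng_for_repetition(rep_index, base_seed=BASE_SEED):
    """Return a generator seeded with base_seed + rep_index (one per repetition)."""
    return random.Random(base_seed + rep_index)


def sample_cloudlet_lengths(rng, n_tasks, low=1_000, high=20_000):
    """Draw hypothetical cloudlet lengths; the range is an assumption for illustration."""
    return [rng.randint(low, high) for _ in range(n_tasks)]


# Re-running repetition 3 reproduces the same workload; repetition 4 differs.
first = sample_cloudlet_lengths(rng_for_repetition(3), 5)
again = sample_cloudlet_lengths(rng_for_repetition(3), 5)
other = sample_cloudlet_lengths(rng_for_repetition(4), 5)
```

Giving each repetition its own generator, rather than sharing one global stream, means a single repetition can be replayed in isolation without re-running the ones before it.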

This setup provided a stable and consistent environment for evaluating algorithmic efficiency and reliability under different conditions. The hardware configuration was chosen to represent a standardized mid-range workstation environment commonly used in comparable studies, supporting reproducible and consistent results.

3.1. The Proposed Algorithm and Methods

To address the complexities of task scheduling in cloud computing, this study utilizes GAs, PSO, and ABC as benchmark metaheuristic methods [31]. The research examines the resilience and efficiency of these approaches under both standard and DDoS-compromised cloud environments. Recognizing the individual strengths and weaknesses of GAs and PSO, a hybrid GA–PSO algorithm was introduced to enhance scheduling performance and system robustness. The hybrid GA–PSO method used in this study is detailed in Algorithm 1. This approach combines the global search capabilities of the GA with the convergence speed of PSO.

| Algorithm 1: Algorithm Steps |

1. Initialization:

- GA: ‘CreateRandomPopulation()’

- PSO: ‘CreateRandomSwarm()’

2. Iteration Loop:

- PSO: Update ‘velocity’ & ‘position’, evaluate fitness.

- GA: Selection, crossover, mutation, evaluate fitness.

- If PSO is stagnant → inject GA’s fittest individual into PSO.

3. Termination:

- When max iterations or evaluations reached → return global best (‘g_best’).

|

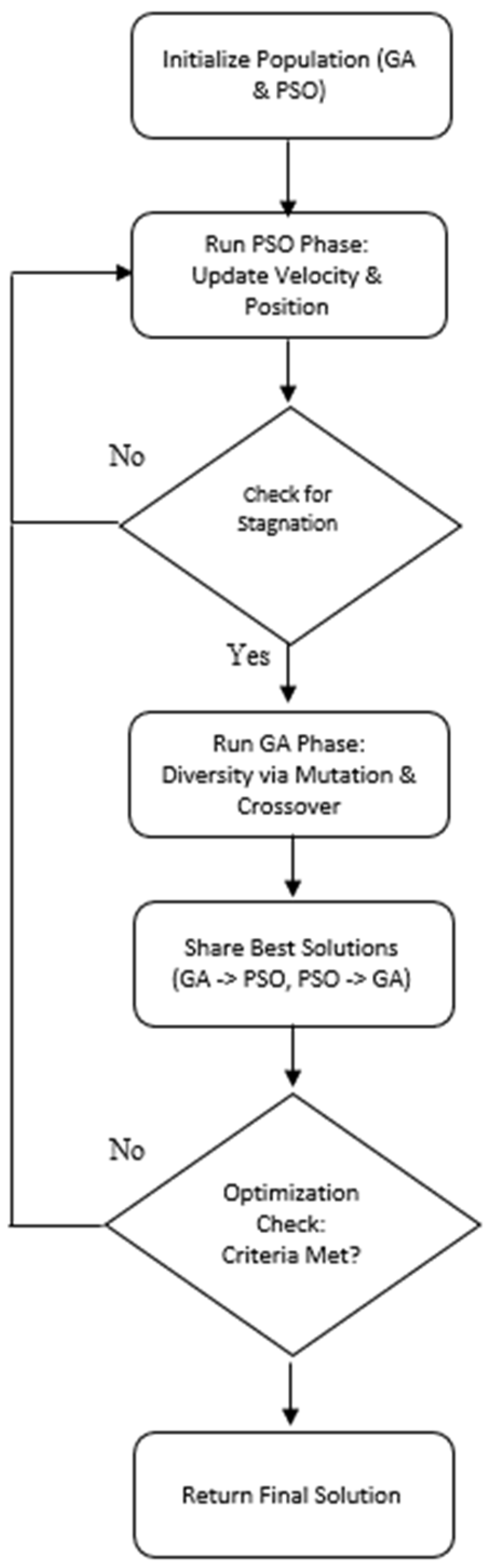

PSO offers rapid convergence, but it tends to become trapped in local optima. On the other hand, the GA provides a more extensive global search capability but suffers from slow convergence, which can be computationally expensive. The proposed hybrid GA–PSO algorithm integrates the GA’s genetic diversity with PSO’s fast convergence to achieve an optimal balance. The GA’s mutation and crossover mechanisms introduce variation when PSO encounters stagnation, while PSO enhances the GA’s search efficiency, enabling quicker and more effective task scheduling solutions.

A common challenge in PSO is its susceptibility to early convergence at suboptimal solutions, whereas the GA often requires a large number of generations to reach a global optimum, increasing computational overheads. This issue is particularly pronounced in cloud computing, where large-scale scheduling tasks demand a high degree of efficiency. Furthermore, in DDoS-affected cloud environments, the complexity of task allocation increases as a result of dynamic disruptions, making traditional methods less effective. The hybrid GA–PSO approach mitigates these challenges by leveraging PSO’s adaptability and the GA’s evolutionary search efficiency, ensuring a more adaptive and resilient scheduling strategy capable of handling both routine and adversarial conditions in cloud systems.

The workflow of the hybrid GA–PSO algorithm is depicted in Figure 1. The algorithm initiates by generating a random population, where each individual represents a candidate solution for the cloud computing task scheduling problem. Each solution corresponds to a distinct allocation of tasks across VMs within the cloud environment.

For GAs, PSO, and the ABC, the number of iterations is denoted as n. However, to maintain computational efficiency and ensure a balanced comparison across methods, the hybrid GA–PSO algorithm adopts n/2 iterations. This reduction prevents excessive complexity while enabling meaningful performance evaluation.

To determine the most effective configuration, preliminary experiments were conducted, systematically adjusting iteration counts, cloudlet numbers, and other parameters to assess their impact on scheduling performance. The results of these experiments guided the selection of optimal parameter values, as outlined in Table 1 and Table 2. The final parameter tuning ensures that the hybrid GA–PSO algorithm maintains a balance between computational efficiency and scheduling effectiveness, allowing it to perform optimally across diverse cloud computing scenarios.

3.1.1. Population Initialization

In the case of the hybrid GA–PSO algorithm, the initialization phase begins by generating a random population for the GA and a swarm for PSO. Each PSO particle is assigned a random velocity and position, while the GA initializes its population with randomly generated individuals representing potential scheduling solutions. Once the population is established, the GA’s fittest individual is selected to assist in guiding PSO particles during their optimization process. Within PSO, the personal best (pBest) and global best (gBest) solutions are continuously updated. If PSO encounters stagnation and becomes trapped in a local optimum, the GA introduces genetic diversity to restore exploration capabilities. The GA population undergoes evolutionary adaptation, incorporating crossover and mutation operations to refine the search process, improving the global exploration of the solution space. This interaction between the GA and PSO ensures a balanced trade-off between exploration and exploitation, enabling a more adaptive and efficient task scheduling mechanism in cloud computing environments. The population initialization of this hybrid approach is detailed in Algorithm 2, where both GA and PSO populations are created and evaluated to set the foundation for the optimization process.

| Algorithm 2: Initialize Population |

Input: Initial GA population, PSO swarm, max iterations/evaluations

Output: Best solution found (fitness)

GA_population = CreateRandomPopulation()

PSO_swarm = CreateRandomSwarm()

For each individual in GA_population:

EvaluateFitness(individual)

End For

For each particle in PSO_swarm:

EvaluateFitness(particle)

End For

Fittest_GA = GetFittestIndividual(GA_population)

g_best = GetGlobalBest(PSO_swarm)

p_best = GetLocalBests(PSO_swarm) |

This step initializes the GA population using CreateRandomPopulation() and the PSO swarm using CreateRandomSwarm(). It then evaluates each GA individual and PSO particle by computing their fitness based on the makespan of their task-to-VM mappings, establishing the initial pBest and gBest values for the subsequent optimization process.
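As an illustration of a makespan-based fitness, the sketch below scores one task-to-VM mapping. It assumes that tasks assigned to the same VM run one after another and that execution time is task length divided by VM speed in MIPS; the paper does not spell out its exact fitness formula, so these modelling choices and the sample numbers are assumptions.

```python
from typing import Sequence


def makespan(mapping: Sequence[int],
             task_lengths: Sequence[float],
             vm_mips: Sequence[float]) -> float:
    """Finish time of the busiest VM for a given task-to-VM mapping.

    mapping[i] is the index of the VM that runs task i.
    """
    busy = [0.0] * len(vm_mips)
    for task, vm in enumerate(mapping):
        # Assumed cost model: length (million instructions) / speed (MIPS).
        busy[vm] += task_lengths[task] / vm_mips[vm]
    return max(busy)


# Hypothetical workload: four tasks, two VMs.
task_lengths = [4000, 2000, 6000, 1000]
vm_mips = [1000, 2000]

balanced = makespan([0, 1, 1, 0], task_lengths, vm_mips)  # VM0: 5.0 s, VM1: 4.0 s
skewed = makespan([1, 0, 1, 1], task_lengths, vm_mips)    # VM0: 2.0 s, VM1: 5.5 s
```

Because a lower makespan is better, both the GA and PSO would treat this value as a quantity to minimize.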

3.1.2. Hybridization Strategy

In the case of the hybrid GA–PSO algorithm, optimization is enhanced by establishing a mutual reinforcement mechanism between the GA and PSO. The hybridization strategy extends the mechanism described in Section 3.1.1 by reinforcing PSO with the GA’s best individuals and vice versa.

By integrating the GA’s evolutionary operators with PSO’s adaptive learning, this hybrid approach balances exploration and exploitation, mitigating the risk of premature convergence while maintaining computational efficiency. The synergy between the GA’s genetic adaptability and PSO’s rapid optimization ensures solution diversity and enhanced task scheduling accuracy in dynamic cloud computing environments, particularly under adversarial conditions such as those relating to DDoS attacks. The hybridization strategy employed in this study is detailed in Algorithm 3.

| Algorithm 3: Hybridization Strategy |

While eval < max_evaluations:

UpdateSwarmWithGA(PSO_swarm, Fittest_GA)

If IsStagnant(PSO_swarm) Then

GA_population = ApplyCrossoverAndMutation(GA_population)

Fittest_GA = GetFittestIndividual(GA_population)

End If

g_best = GetGlobalBest(PSO_swarm)

DefineGAPopulation(GA_population, g_best) |

In this step, the GA’s fittest individual is used to guide the PSO swarm; if the swarm remains stagnant (no improvement for the specified stagnation count), the GA applies crossover and mutation to reintroduce genetic diversity and restore exploration capability.
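The stagnation test mentioned above can be sketched as a small counter that tracks how many consecutive iterations have passed without an improvement in the global best fitness. The class name `StagnationMonitor`, the tolerance parameter, and the sample fitness values are hypothetical; the paper only states that stagnation means no improvement for a specified count.

```python
class StagnationMonitor:
    """Flags stagnation after `threshold` consecutive non-improving updates."""

    def __init__(self, threshold, tol=1e-12):
        self.threshold = threshold
        self.tol = tol              # minimum decrease that counts as improvement
        self.best = float("inf")    # lower fitness (makespan) is better
        self.stale = 0

    def update(self, g_best_fitness):
        """Record the latest global best fitness; return True if stagnant."""
        if g_best_fitness < self.best - self.tol:
            self.best = g_best_fitness
            self.stale = 0
        else:
            self.stale += 1
        return self.stale >= self.threshold


monitor = StagnationMonitor(threshold=3)
flags = [monitor.update(f) for f in [10, 9, 9, 9, 9, 8]]
# The fifth update is the third in a row without improvement, so only it is flagged.
```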

3.1.3. Iterative Optimization Loop

The hybrid GA–PSO algorithm follows an iterative optimization process, where the GA and PSO work in tandem to refine task scheduling solutions dynamically. In each iteration, the GA evolves the population, searching for the most optimal solution through selection, crossover, and mutation. Meanwhile, PSO adjusts the velocity and position of particles, ensuring efficient exploration and fast convergence towards promising solutions. The specific steps of this iterative co-optimization process are outlined in Algorithm 4.

This loop continues the integrated GA–PSO interaction described in Section 3.1.1, leveraging genetic operations to ensure convergence stability when PSO stagnates.

| Algorithm 4: Iterative Optimization Algorithm |

For each individual in GA_population:

ApplySelection(individual)

ApplyCrossover(individual)

ApplyMutation(individual)

EvaluateFitness(individual)

End For

Fittest_GA = GetFittestIndividual(GA_population)

For each particle in PSO_swarm:

UpdateVelocity(particle, g_best, p_best)

UpdatePosition(particle)

EvaluateFitness(particle)

End For

g_best = GetGlobalBest(PSO_swarm)

p_best = GetLocalBests(PSO_swarm)

End While |

In each iteration, the GA evolves its population via selection, crossover, and mutation while PSO simultaneously updates particle velocities and positions; after evaluating both GA individuals and PSO particles, the global best solution is updated to drive convergence.
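The velocity and position updates can be sketched with the standard PSO update rule applied to an integer task-to-VM mapping. The paper does not state how continuous positions are mapped back to VM indices, so the rounding and clamping used here, as well as the coefficient values `w`, `c1`, and `c2`, are assumptions for illustration only.

```python
import random


def update_particle(position, velocity, p_best, g_best, n_vms, rng,
                    w=0.7, c1=1.5, c2=1.5):
    """One PSO step for a particle whose position is a list of VM indices."""
    new_position, new_velocity = [], []
    for x, v, pb, gb in zip(position, velocity, p_best, g_best):
        r1, r2 = rng.random(), rng.random()
        # Inertia term plus pulls towards the personal and global bests.
        v_next = w * v + c1 * r1 * (pb - x) + c2 * r2 * (gb - x)
        # Assumed discretization: round, then clamp to a valid VM index.
        x_next = min(max(int(round(x + v_next)), 0), n_vms - 1)
        new_velocity.append(v_next)
        new_position.append(x_next)
    return new_position, new_velocity


rng = random.Random(0)
pos, vel = update_particle([0, 2, 1, 1], [0.0] * 4,
                           p_best=[1, 2, 0, 1], g_best=[2, 2, 0, 0],
                           n_vms=3, rng=rng)

# A particle already sitting on both bests with zero velocity does not move.
same, _ = update_particle([1, 2, 0], [0.0] * 3, [1, 2, 0], [1, 2, 0], 3, rng)
```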

3.1.4. Termination and Result

The optimization process runs until the maximum iteration threshold is reached. Throughout the execution, the GA and PSO continuously exchange their best solutions, refining their search strategies to achieve higher efficiency and adaptability. Upon reaching the termination condition, the algorithm selects the final optimized solution, representing the best task scheduling configuration obtained during the process. This solution reflects an optimal balance between execution time, resource allocation, and computational efficiency, ensuring improved performance in dynamic cloud computing environments.

3.1.5. Hybrid GA–PSO Summary

The unified pseudocode first initializes both the GA population and the PSO swarm, then evaluates their fitness to establish initial pBest and gBest values. During each iteration, PSO particles update their velocities and positions and refresh pBest/gBest, while the GA population undergoes selection, crossover, and mutation to produce new candidate solutions. If the PSO swarm remains stagnant for the specified threshold, the algorithm injects the fittest GA individual into the swarm to re-introduce diversity and avoid local optima. After all iterations, the best overall solution (gBest) is returned as the optimal task scheduling configuration.

| Algorithm 5: Hybrid GA–PSO Algorithm |

Input:

- pop_size (population/swarm size)

- MaxIter (maximum iterations)

- StagThresh (stagnation threshold)

Output:

- gBest (best scheduling solution)

1. GA_pop ← CreateRandomPopulation(pop_size)

PSO_swarm ← CreateRandomSwarm(pop_size)

2. EvaluateFitness(GA_pop)

EvaluateFitness(PSO_swarm)

3. pBest, gBest ← UpdateBests(PSO_swarm)

4. for iter = 1 to MaxIter do

a. PSO update

for each particle in PSO_swarm do

UpdateVelocity(particle, pBest, gBest)

UpdatePosition(particle)

EvaluateFitness(particle)

end for

pBest, gBest ← UpdateBests(PSO_swarm)

b. GA evolution

GA_pop ← Selection(GA_pop)

GA_pop ← Crossover(GA_pop)

GA_pop ← Mutation(GA_pop)

EvaluateFitness(GA_pop)

fittestGA ← GetFittest(GA_pop)

c. Hybridization

if IsStagnant(PSO_swarm, StagThresh) then

ReplaceWorstParticle(PSO_swarm, fittestGA)

end if

gBest ← max(gBest, fittestGA)

end for

5. return gBest |
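The control flow of Algorithm 5 can be illustrated with a minimal Python sketch. It is not the authors' CloudSim implementation. Several details are assumptions made only for illustration: fitness is taken to be the makespan (lower is better), PSO positions are continuous values truncated to VM indices, stagnation is counted as consecutive iterations without a gBest improvement, the tournament size is 3, and the default sizes are smaller than those used in the experiments.

```python
import random


def makespan(assign, lengths, mips):
    """Largest per-VM sum of task length / VM speed for an assignment."""
    load = [0.0] * len(mips)
    for task, vm in enumerate(assign):
        load[vm] += lengths[task] / mips[vm]
    return max(load)


def decode(position, n_vms):
    """Truncate a continuous PSO position to integer VM indices (assumed encoding)."""
    return [min(n_vms - 1, max(0, int(x))) for x in position]


def hybrid_ga_pso(lengths, mips, pop_size=20, max_iter=50, stag_thresh=5,
                  w=0.9, c1=1.5, c2=1.5, cx_rate=0.5, mut_rate=0.015, seed=0):
    rng = random.Random(seed)
    n, m = len(lengths), len(mips)

    def cost(assign):
        return makespan(assign, lengths, mips)

    def tournament(pop, k=3):  # hypothetical tournament size
        return min(rng.sample(pop, k), key=cost)

    # Steps 1-3: initialise both populations and the PSO bests.
    ga_pop = [[rng.randrange(m) for _ in range(n)] for _ in range(pop_size)]
    pos = [[rng.uniform(0, m) for _ in range(n)] for _ in range(pop_size)]
    vel = [[0.0] * n for _ in range(pop_size)]
    p_best = [p[:] for p in pos]
    p_cost = [cost(decode(p, m)) for p in pos]
    lead = min(range(pop_size), key=p_cost.__getitem__)
    g_pos, g_cost = p_best[lead][:], p_cost[lead]
    stagnant = 0

    for _ in range(max_iter):
        # a. PSO update
        improved = False
        for i in range(pop_size):
            for d in range(n):
                vel[i][d] = (w * vel[i][d]
                             + c1 * rng.random() * (p_best[i][d] - pos[i][d])
                             + c2 * rng.random() * (g_pos[d] - pos[i][d]))
                pos[i][d] = min(m - 1e-9, max(0.0, pos[i][d] + vel[i][d]))
            c = cost(decode(pos[i], m))
            if c < p_cost[i]:
                p_best[i], p_cost[i] = pos[i][:], c
            if c < g_cost:
                g_pos, g_cost, improved = pos[i][:], c, True
        stagnant = 0 if improved else stagnant + 1

        # b. GA evolution: elitism, tournament selection, uniform crossover, mutation
        children = [min(ga_pop, key=cost)[:]]
        while len(children) < pop_size:
            a, b = tournament(ga_pop), tournament(ga_pop)
            child = [b[d] if rng.random() < cx_rate else a[d] for d in range(n)]
            child = [rng.randrange(m) if rng.random() < mut_rate else g for g in child]
            children.append(child)
        ga_pop = children
        fittest = min(ga_pop, key=cost)
        fit_pos, fit_cost = [g + 0.5 for g in fittest], cost(fittest)

        # c. Hybridization: overwrite the particle with the worst personal best
        if stagnant >= stag_thresh:
            worst = max(range(pop_size), key=p_cost.__getitem__)
            pos[worst], p_best[worst], p_cost[worst] = fit_pos[:], fit_pos[:], fit_cost
            stagnant = 0
        if fit_cost < g_cost:
            g_pos, g_cost = fit_pos[:], fit_cost

    return decode(g_pos, m), g_cost
```

Calling `hybrid_ga_pso([400, 900, 250], [500, 1000])` returns a task-to-VM list together with its makespan. Fixing `seed` makes repeated calls reproducible.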

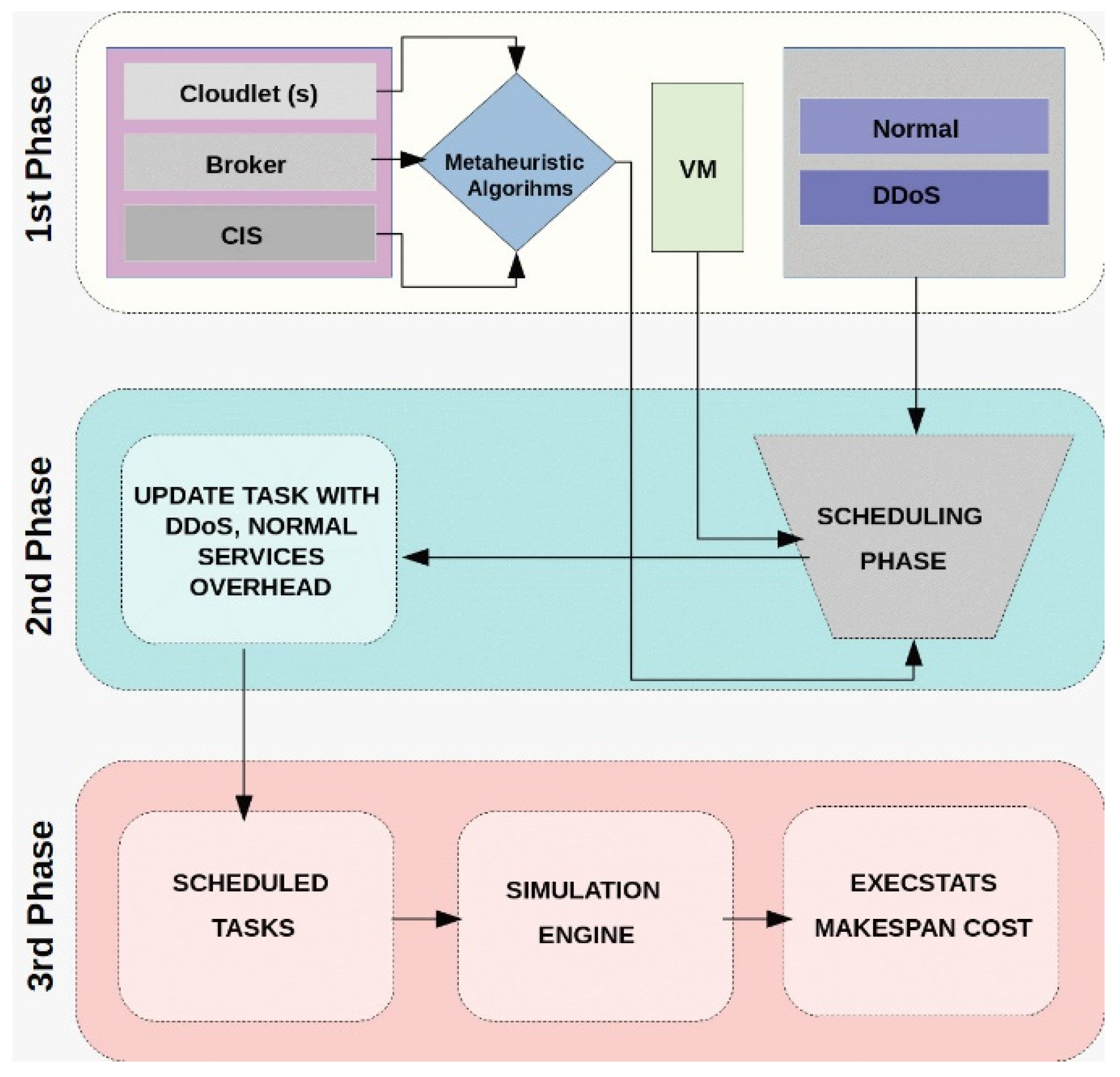

3.2. The Approach

This section provides a comprehensive overview of the proposed methodology, detailing the workflow and implementation steps. The approach is structured into six distinct stages, each playing a crucial role in optimizing task scheduling and resource management. The workflow of the initial stage is depicted in Figure 2, outlining the fundamental processes that form the foundation of the overall strategy. These stages collectively ensure a structured and efficient approach to enhancing scheduling performance in cloud environments while addressing potential computational and security challenges.

3.2.1. Phase 1: Setting up the Simulation Environment

In this phase, a simulation environment was developed using the CloudSim framework to evaluate the scheduling approach under different operational conditions. The setup involved defining data centers, physical hosts, and VMs while also configuring cloudlets, which represent computational tasks. These tasks were designed as input for scheduling, utilizing metaheuristic algorithms to enhance efficiency. The environment was structured to ensure effective resource allocation and task management, supporting adaptability in cloud-based systems.

To examine system performance, simulations were conducted under two distinct scenarios:

Standard cloud environment: simulates normal operating conditions, where system performance is assessed without disruptions.

DDoS-affected cloud environment: evaluates system behavior under DDoS attack conditions, analyzing its impact on task execution and resource management.

In our DDoS simulation, volumetric flooding was emulated purely by scaling cloudlet resource demands: each “attack” cloudlet’s execution length was multiplied by 100, and both its input and output file sizes were increased from 300 MB to 3000 MB. This artificial overload targets CPU and network bandwidth to mimic high-volume attack behavior, an approach commonly used in CloudSim studies. Although we did not incorporate a real-world traffic trace, this parameter-scaling approach is a recognized method to emulate DDoS conditions.
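The parameter scaling described above can be sketched in a few lines of Python. The `Cloudlet` record and the `to_attack_cloudlet` helper are hypothetical names introduced for illustration; they do not correspond to the CloudSim Java API, and only the scaling factors (×100 length, 300 MB to 3000 MB) come from the text.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Cloudlet:
    """Illustrative stand-in for a simulated task (not the CloudSim class)."""
    length_mi: int       # execution length in million instructions
    file_size_mb: int    # input file size
    output_size_mb: int  # output file size


def to_attack_cloudlet(cloudlet, length_factor=100, attack_io_mb=3000):
    """Return a copy with the length scaled and both file sizes set to the attack value."""
    return replace(
        cloudlet,
        length_mi=cloudlet.length_mi * length_factor,
        file_size_mb=attack_io_mb,
        output_size_mb=attack_io_mb,
    )


normal = Cloudlet(length_mi=1000, file_size_mb=300, output_size_mb=300)
attack = to_attack_cloudlet(normal)
```

Keeping the record immutable means the normal and attack workloads can be generated from the same base cloudlets without one scenario altering the other.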

Detailed explanations regarding the execution of these scenarios are presented in a later section of the study. This controlled experimental setup allows for a comparative assessment of scheduling performance, providing insights into the resilience and efficiency of different optimization techniques under varying network conditions.

3.2.2. Phase 2: Scheduling Process

After configuring the simulation environment and initializing the metaheuristic algorithms, the task scheduling phase commenced. The primary goal was to enhance scheduling efficiency, optimize system performance, and reduce execution time by systematically assigning tasks to VMs.

Metaheuristic algorithms played a critical role in this process by dynamically adapting to workload conditions, ensuring efficient resource utilization and balanced task distribution. These optimization techniques enabled flexible and adaptive scheduling, thereby improving overall computational efficiency. The outcomes of this phase provided a basis for assessing how different algorithms perform under varying operational scenarios, including normal conditions and DDoS-induced disruptions.

3.2.3. Phase 3: Simulation of Scheduled Tasks and Results Analysis

At this stage, the scheduled tasks were incorporated into the simulation environment, allowing for the replication of task execution in a cloud computing system. The performance of the system was then evaluated, and the results were analyzed to measure the effectiveness of the proposed scheduling method.

A key aspect of the evaluation was makespan analysis, which quantifies the total time required for task completion. Makespan serves as a crucial metric in determining system efficiency, as it captures the time span between the start of the first task and the completion of the last task. This duration is obtained from the schedule produced by the scheduling algorithm and indicates how efficiently tasks are assigned and executed.

To assess makespan performance, four statistical indicators were employed:

Min makespan: the shortest execution time recorded across all test runs (expressed in seconds);

Max makespan: the longest observed makespan value, indicating the worst-case execution time;

Avg makespan: the mean makespan across multiple trials, representing the overall scheduling efficiency;

Std: a measure of variation in makespan values, providing insight into the stability of the scheduling approach.

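Min value:

$$\text{Min} = \min_{1 \le i \le N} M_i$$

Max value:

$$\text{Max} = \max_{1 \le i \le N} M_i$$

Avg value:

$$\text{Avg} = \frac{1}{N} \sum_{i=1}^{N} M_i$$

Std value (standard deviation):

$$\text{Std} = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \left(M_i - \text{Avg}\right)^2}$$

where $M_i$ is the makespan value obtained in the i-th trial and N is the total number of trials.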
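As a small illustration, the four indicators listed above can be computed from a list of per-trial makespan values as follows. The function name is hypothetical, and the use of the population standard deviation (division by N) is an assumption, since the text does not state whether N or N − 1 is used.

```python
import statistics


def makespan_summary(makespans):
    """Summarise per-trial makespan values (in seconds)."""
    return {
        "min": min(makespans),
        "max": max(makespans),
        "avg": statistics.fmean(makespans),
        # Population form (divide by N); swap in statistics.stdev for N - 1.
        "std": statistics.pstdev(makespans),
    }


summary = makespan_summary([2, 4, 4, 4, 5, 5, 7, 9])
```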

Another key focus of the evaluation was resource utilization, which measures the efficiency of VM usage within a cloud computing system. This metric indicates the proportion of utilized resources relative to the total available capacity over a defined time period, offering insights into how well computational resources are distributed and managed.

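Resource utilization is represented as a percentage and is calculated using the following formula:

$$\text{Resource utilization (\%)} = \frac{\text{utilized resources}}{\text{total available capacity}} \times 100$$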

An effective scheduling strategy ensures balanced resource allocation, preventing issues such as underutilization, which leads to wasted computational power, or overloading, which may degrade performance. By analyzing resource utilization trends, this study evaluates the impact of different scheduling techniques on overall system stability and efficiency under both normal and DDoS-affected conditions.
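One possible way to turn this definition into a number is sketched below. It assumes that "utilized resources" means the time each VM spends executing tasks and that "total available capacity" means the number of VMs multiplied by the makespan; both choices are assumptions for illustration and may differ from how the reported values were obtained.

```python
def resource_utilization(busy_times, makespan):
    """Percentage of total VM time spent executing tasks.

    busy_times: per-VM total execution time (seconds).
    makespan:   length of the observation window (seconds).
    """
    if makespan <= 0 or not busy_times:
        return 0.0
    return 100.0 * sum(busy_times) / (len(busy_times) * makespan)


# Four VMs observed for 10 s; one is always busy, one is idle.
utilization = resource_utilization([10, 5, 5, 0], makespan=10)
```

Under this reading, a schedule that leaves some VMs idle can still finish quickly, which yields a low utilization alongside a low makespan.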

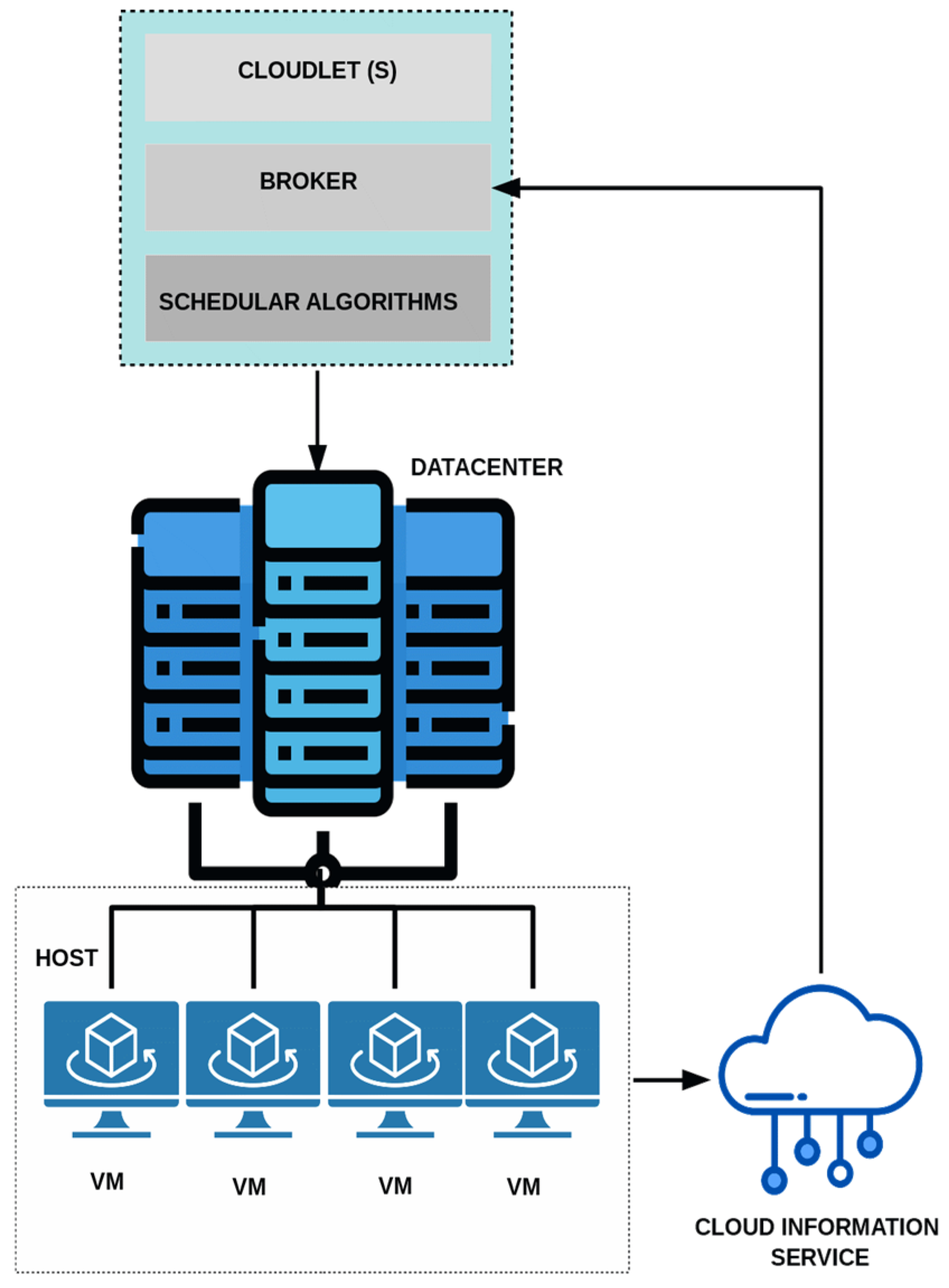

Figure 3 presents the structural foundation of CloudSim, highlighting its significance in simulating and modeling cloud computing environments. CloudSim provides an adaptable framework for replicating key cloud components, including data centers, physical hosts, VMs, and task scheduling processes.

The simulation process begins with the creation of cloudlets, representing workloads assigned to VMs for execution. At the core of this framework is the broker, which serves as an intermediary between tasks and available resources. The broker’s role is to allocate tasks based on predetermined rules or scheduling algorithms, ensuring efficient task execution and resource distribution. By facilitating a structured and controlled simulation, CloudSim allows for a detailed evaluation of scheduling strategies in various operational scenarios, including those affected by DDoS attacks.

In this study, we enhanced task scheduling efficiency in CloudSim by integrating metaheuristic algorithms, introducing an advanced optimization mechanism beyond the default broker-based approach. Unlike static task allocation, these algorithms dynamically analyze task complexity, resource availability, and overall system performance to determine the most efficient VM assignments.

The scheduling process begins with metaheuristic techniques evaluating task demands and current resource conditions. Once an optimized allocation strategy is established, tasks are assigned to the most suitable VMs, and the simulation proceeds accordingly. This adaptive scheduling approach not only optimizes resource usage but also enhances system responsiveness to changing workloads, resulting in more realistic and efficient cloud simulations.

By incorporating advanced optimization methods, CloudSim extends its functionality, enabling more accurate modeling of complex cloud environments. This refinement not only improves task scheduling effectiveness but also establishes a robust platform for further exploration in cloud computing research and performance evaluation.

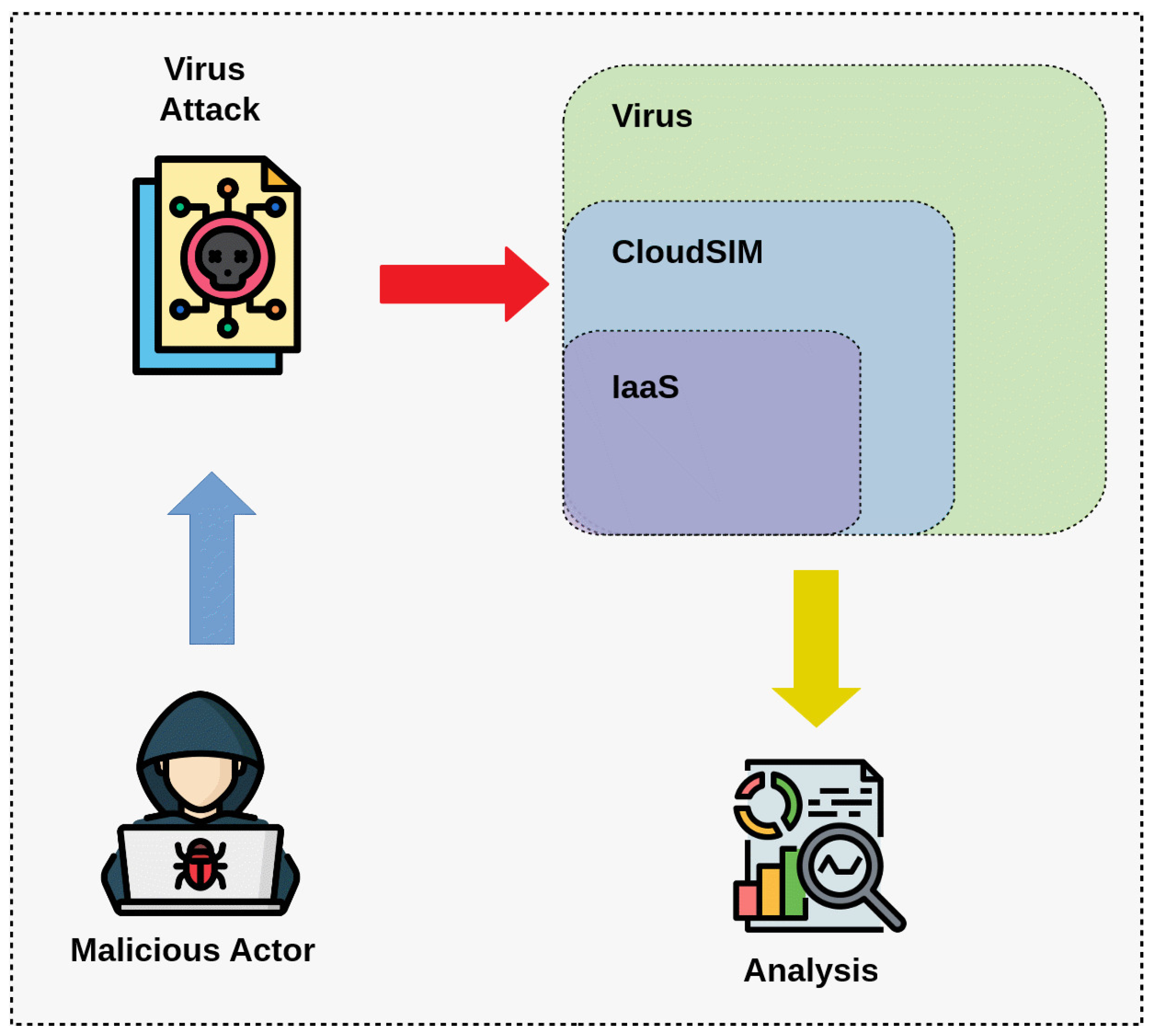

Figure 4 illustrates a DDoS attack scenario modeled within CloudSim, where an unauthorized entity targets the IaaS layer to disrupt task scheduling and resource allocation. The purpose of this simulation is to analyze how such an attack influences system efficiency and stability, utilizing CloudSim’s environment to assess the impact of security threats on cloud computing performance.

In this simulation, user identity modifications lead to the creation of high-load cloudlets that place excessive demand on system resources. These cloudlets are configured to exhibit prolonged execution times and require substantial input–output operations, causing increased bandwidth consumption and computational strain. Expanding the parameters, such as page size and file size, intensifies network congestion, delaying application processing and reducing overall system efficiency.

A major objective of this study is to evaluate how scheduling algorithms adapt to DDoS-induced system stress and whether they can effectively manage task execution while minimizing delays. This analysis provides valuable insights into the resilience and adaptability of scheduling techniques under extreme workload conditions.

By simulating attack scenarios, this research identifies potential security weaknesses in cloud computing environments and offers a structured framework for assessing and improving scheduling mechanisms. The ultimate goal is to enhance task scheduling strategies, ensuring that cloud systems maintain efficiency and stability, even under malicious cyber threats or operational disruptions.

3.3. Simulation Environment

The CloudSim simulation framework, widely utilized in cloud computing research, was chosen as the experimental platform. By allowing the simulation of VMs and tasks, this framework has enabled the implementation and analysis of metaheuristic algorithms for solving the task scheduling problem.

In the CloudSim simulation framework, tasks are represented as cloudlets, which mimic real-world workloads in a cloud computing environment. To assess system performance in this study, 50 to 100 cloudlets were generated following a uniform distribution. These cloudlets were assigned to VMs and executed within a simulated cloud infrastructure. The simulation environment, detailed in Table 1, consists of 10 VMs, a single data center, and one physical host. This setup closely reflects a practical scenario where tasks are scheduled and executed on virtualized resources.

Each VM in the cloud possesses a specific computational capacity, quantified in MIPS. Tasks are characterized by their size in terms of million instructions (MI) and are allocated to VMs accordingly. In this context, the execution time of a task depends on both its size in MI and the computational power (in MIPS) of the assigned VM. The set of tasks assigned to the m-th VM can be expressed as $T_m = \{t_{k,m}\}$, where k denotes a specific task and m identifies the VM it is assigned to.

In this study, the execution time of the k-th task assigned to the m-th VM is determined using Equation (7), while the total execution time for all tasks on that VM is calculated with Equation (8). Among all VMs, the highest total execution time, derived from Equation (9), represents the makespan value.

$$ET_{k,m} = \frac{MI_k}{MIPS_m} \quad (7)$$

$$ET_m = \sum_{t_{k,m} \in T_m} ET_{k,m} \quad (8)$$

$$\text{Makespan} = \max_{m} ET_m \quad (9)$$

Explanation for Equations (7)–(9):

Here, $MI_k$ is the size of the k-th task, $MIPS_m$ is the computational capacity of the m-th VM, and $ET_m$ is the total execution time on the m-th VM. The makespan is defined as the maximum total execution time across all VMs.
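The makespan calculation described in this subsection can be sketched as follows. Function and variable names are hypothetical; the only relationships taken from the text are that execution time is task size (MI) divided by VM capacity (MIPS), that per-VM times are summed, and that the makespan is the largest per-VM total.

```python
def execution_time(length_mi, mips):
    """Execution time of one task on one VM: size in MI divided by speed in MIPS."""
    return length_mi / mips


def vm_totals(assignment, lengths_mi, vm_mips):
    """Total execution time per VM; assignment[k] is the VM index of task k."""
    totals = [0.0] * len(vm_mips)
    for task, vm in enumerate(assignment):
        totals[vm] += execution_time(lengths_mi[task], vm_mips[vm])
    return totals


def makespan(assignment, lengths_mi, vm_mips):
    """Largest per-VM total execution time."""
    return max(vm_totals(assignment, lengths_mi, vm_mips))


# Three tasks on two VMs: task 0 on VM 0, tasks 1 and 2 on VM 1.
totals = vm_totals([0, 1, 1], [1000, 2000, 3000], [500, 1000])
span = makespan([0, 1, 1], [1000, 2000, 3000], [500, 1000])
```

In this toy case VM 0 finishes after 2 s and VM 1 after 5 s, so the schedule's makespan is 5 s even though VM 0 is idle for most of that time.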

Table 2 provides an overview of the simulation parameters applied across all algorithms, detailing the fundamental configurations and settings used during the experimental process. These parameters have been standardized to ensure consistency and comparability in performance evaluation, ensuring a reliable assessment of each algorithm’s effectiveness.

The rationale for the parameter values in Table 2 is as follows:

Population/swarm size = 75: Pre-tests showed smaller sizes lacked exploration, while larger sizes significantly increased computation time.

GA crossover rate = 0.5 and mutation rate = 0.015: common literature values, confirmed by preliminary experiments for balanced exploration/exploitation.

PSO inertia = 0.9, C1 = C2 = 1.5: standard canonical PSO settings chosen for fast convergence.

The algorithms were initialized with a population of 75 randomly generated individuals. For the GA, parameters were set as follows: a mutation rate of 0.015, a crossover rate of 0.5, a tournament size of 15, and the application of elitism. Additionally, an enhancement was introduced by incorporating a stagnation threshold of 0.5 and a maximum stagnation count of 15. These modifications led to improved performance compared to the traditional GA and contributed positively to the hybrid algorithm. For the PSO algorithm, the inertia factor was set at 0.9, with both C₁ and C₂ assigned a value of 1.5. Similarly, in the ABC algorithm, the swarm size and other parameters were adjusted to align with the simulation environment.
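To make the role of the PSO settings concrete, the following sketch applies one canonical velocity and position update with the stated inertia (0.9) and acceleration coefficients (C₁ = C₂ = 1.5). It operates on plain lists of floats; how positions are mapped to VM indices is not specified here and is left out, and the function name is hypothetical.

```python
import random


def pso_step(position, velocity, p_best, g_best,
             w=0.9, c1=1.5, c2=1.5, rng=random):
    """One canonical PSO update; returns (new_position, new_velocity)."""
    new_position, new_velocity = [], []
    for x, v, pb, gb in zip(position, velocity, p_best, g_best):
        r1, r2 = rng.random(), rng.random()
        # Inertia term + pull towards personal best + pull towards global best.
        nv = w * v + c1 * r1 * (pb - x) + c2 * r2 * (gb - x)
        new_velocity.append(nv)
        new_position.append(x + nv)
    return new_position, new_velocity


# A particle already sitting on both bests keeps only its damped velocity.
x, v = pso_step([2.0, 5.0], [1.0, -2.0], [2.0, 5.0], [2.0, 5.0])
```

With an inertia of 0.9 the previous velocity decays slowly, so particles keep exploring for many iterations before settling around the best-known positions.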

To comprehensively analyze task scheduling algorithms, experiments were conducted across different iteration counts. Specifically, each algorithm was tested at 10, 50, and 100 iterations to examine performance variations as the iteration count increased. This experimental design facilitated the simulation of diverse operational conditions, providing insights into the scalability and robustness of the algorithms when handling different workloads.

To enhance the reliability of the findings, each algorithm was executed independently 10 times for each iteration setting. Given the inherent randomness in metaheuristic algorithms, different outcomes were observed in each run. Repeated execution helped mitigate the influence of stochastic variations, improving the statistical reliability of the results and offering a clearer assessment of algorithm efficiency and stability. The final results were obtained by computing the arithmetic mean of the recorded values.
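The repetition protocol can be sketched as a small harness. The `run_trials` function and the `toy_scheduler` stand-in are hypothetical; a real scheduler would return the makespan of the schedule it produced. Only the iteration settings (10, 50, 100), the 10 independent runs, and the arithmetic mean come from the text.

```python
import random
import statistics


def run_trials(scheduler, iteration_settings=(10, 50, 100), runs=10, base_seed=0):
    """Run scheduler(iterations, rng) `runs` times per setting and average the results."""
    results = {}
    for iters in iteration_settings:
        values = [scheduler(iters, random.Random(base_seed + r)) for r in range(runs)]
        results[iters] = statistics.fmean(values)
    return results


def toy_scheduler(iterations, rng):
    """Placeholder: makespan shrinks with more iterations, plus random noise."""
    return 100.0 / iterations + rng.random()


averages = run_trials(toy_scheduler)
```

Giving each run its own seeded generator keeps the runs independent while making the whole experiment repeatable.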

Table 3 outlines the number of trials, iteration counts, and the task distribution across the simulated scenarios.

The simulation environment and testing methodology were systematically structured to assess key performance indicators such as task completion times, resource utilization, and scheduling efficiency. This well-defined experimental framework enabled a thorough evaluation of the proposed scheduling mechanisms, offering valuable insights into their effectiveness in terms of managing workloads within cloud computing environments.

3.4. Experimental Setup and Scenarios

The experimental framework was designed to assess the efficiency and adaptability of metaheuristic algorithms under different operational conditions. Two distinct scenarios were examined to evaluate task scheduling performance.

3.4.1. Normal Cloud Environment

In this scenario, tasks were processed in a standard cloud computing environment without external disturbances. The following metaheuristic algorithms were employed to optimize task scheduling and resource allocation:

PSO algorithm;

GA;

GA–PSO hybrid algorithm;

ABC algorithm.

3.4.2. DDoS-Attacked Cloud Environment

To analyze the resilience of task scheduling algorithms under high-stress conditions, a DDoS attack scenario was simulated. This environment was characterized by excessive resource consumption and increased task execution delays. The same metaheuristic algorithms utilized in the standard cloud computing scenario were applied to assess their performance and adaptability in mitigating the impact of resource saturation.

3.5. Workflow Execution

The task scheduling process followed a structured execution sequence to ensure efficient resource utilization and performance evaluation (Figure 4).

The workflow consisted of the following steps:

Initialization: The cloud simulation environment was configured, including the generation of cloudlets and VMs.

Algorithm execution: Metaheuristic algorithms were applied to determine optimal task assignments based on resource availability and computational efficiency.

Fitness evaluation: Task scheduling outcomes were assessed using predefined performance metrics such as makespan, resource utilization, and execution time.

Iterative optimization: Algorithm parameters, including positional and velocity adjustments in heuristic-based approaches, were refined iteratively to enhance solution accuracy.

Termination conditions: The execution process concluded upon reaching an optimal solution or satisfying predefined stopping criteria.

Result documentation and analysis: Performance data were systematically recorded, compared, and analyzed across both scenarios to evaluate algorithm effectiveness in different cloud computing environments.

This structured workflow ensured a rigorous and consistent evaluation of scheduling strategies, allowing for an in-depth comparison of algorithmic efficiency across varying operational conditions.

3.6. Data Analysis

The evaluation of each algorithm’s performance was primarily based on makespan, which represents the total time required to complete all scheduled tasks. This analysis aimed to assess the algorithms’ effectiveness in responding to different operational challenges, including both standard execution conditions and high-stress scenarios induced by DDoS attacks. While the CloudSim framework is primarily designed to support makespan-based task scheduling analysis, the presence of a DDoS attack inherently affects resource consumption, which may indirectly influence factors such as energy efficiency and overall system utilization.

It is important to note that this study specifically focuses on makespan as the primary performance metric. Although resource utilization and energy efficiency are inevitably impacted in such conditions, they were not explicitly analyzed or optimized in this research. A more in-depth examination of these aspects would require distinct methodologies and targeted investigations. The findings presented align with the analytical capabilities of CloudSim, maintaining a focused approach on task completion times across varying operational scenarios.

While broader performance metrics such as throughput, energy efficiency, and SLA violations are important for comprehensive evaluation, our study focuses on makespan and resource utilization due to limitations inherent in the CloudSim framework. CloudSim primarily supports scheduling-based metrics and does not model energy consumption or SLA enforcement at a fine-grained level. Incorporating such metrics would require extensive customization or integration with specialized simulators, which is beyond the scope of this study.

4. Experimental Results

This section details the experimental procedures undertaken to evaluate the proposed approach and presents the corresponding findings. The experiments were systematically designed to assess the methodology’s performance under diverse operational conditions, including both standard execution scenarios and environments impacted by security threats such as DDoS attacks.

To determine the efficiency and robustness of the proposed solution, key performance indicators in the form of execution time, accuracy, and resource utilization were analyzed. The obtained results were then compared with existing methodologies reported in the literature to highlight the achieved improvements and substantiate the effectiveness of the proposed approach. This comparative evaluation provides insights into the advantages and potential applications of the method in real-world cloud computing environments.

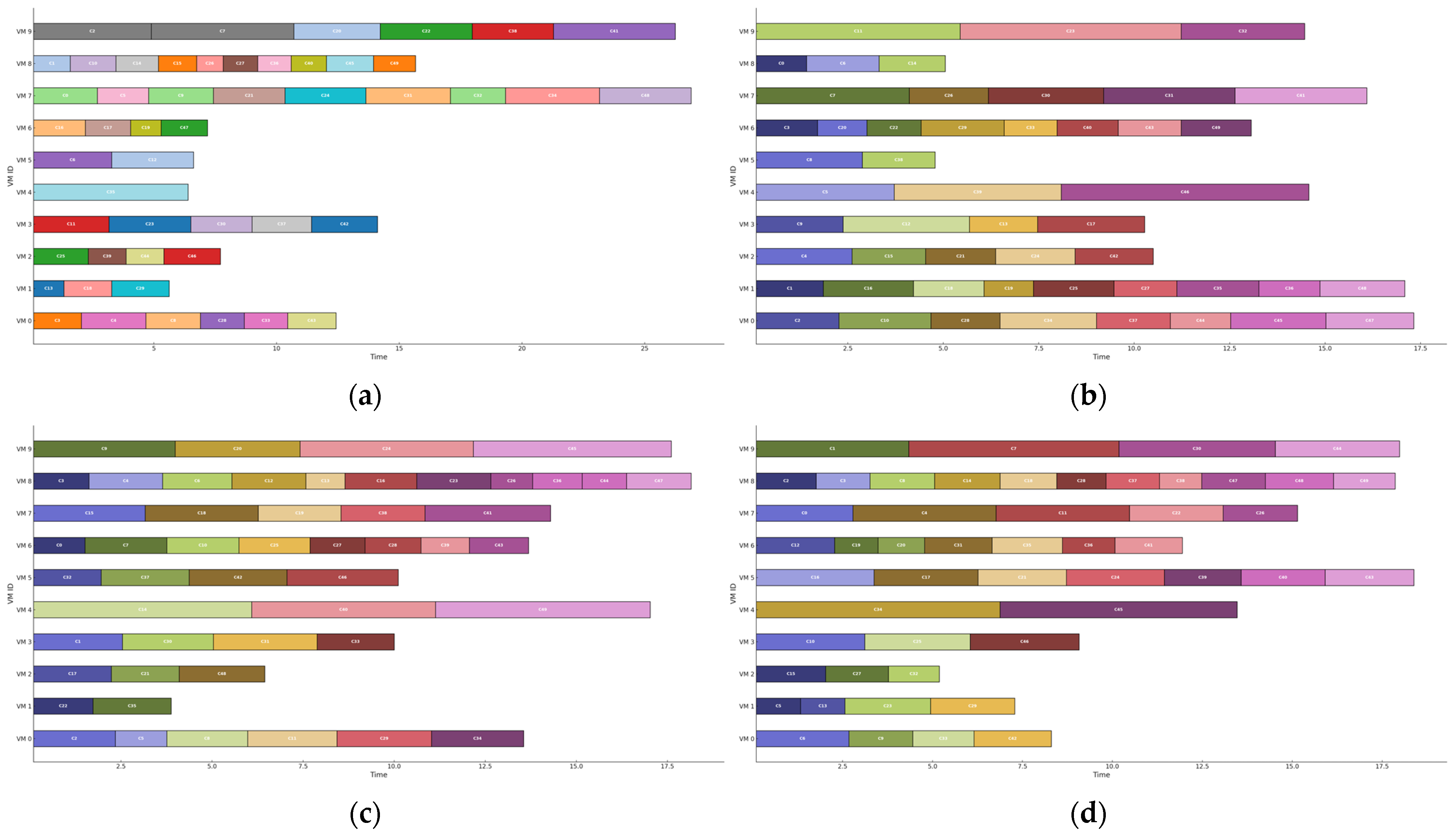

Figure 5 shows how different metaheuristic algorithms managed task scheduling in a cloud computing environment under normal conditions, using 10 iterations and 50 cloudlets. The results highlighted clear differences in how each algorithm responded to disruptions, particularly in the presence of DDoS attacks.

The ABC algorithm displayed complete consistency, maintaining the same task assignments regardless of external interference. This suggests that its scheduling approach remains rigid and unaffected, ensuring stability but lacking adaptability to changing conditions. In contrast, the GA exhibited considerable instability, with over 30 out of 50 cloudlets reassigned to different VMs under attack. This shift indicated that the GA failed to sustain a structured task allocation, leading to unpredictable assignments.

A comparison between the GA and GA–PSO revealed that the hybrid approach achieved greater scheduling balance. While the GA struggled with frequent disruptions, the GA–PSO maintained a more controlled task distribution. However, fluctuations still occurred, suggesting that although the hybrid method improved reliability, it did not entirely prevent task reallocation when subjected to attacks.

Further examination of the GA–PSO and PSO algorithms under DDoS influence demonstrated PSO’s superior stability. While the GA–PSO performed more consistently than the GA, PSO experienced only minor task shifts, making it the most resilient approach in the comparison.

These findings indicate that the ABC maintained an unchanged structure, PSO experienced minimal disruptions, the GA suffered significant inconsistencies, and the GA–PSO provided an intermediate level of stability. To fully assess the impact of these variations on system efficiency, further analysis of execution time and makespan differences would be essential.
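The stability comparison above rests on counting how many cloudlets change VM between the normal and the DDoS-affected schedule. A minimal sketch of that count is given below; the function name and the example assignments are hypothetical and are not taken from the reported experiments.

```python
def count_reassigned(normal, attacked):
    """Number of cloudlets whose VM differs between two schedules.

    Both arguments are lists in which index k holds the VM of cloudlet k.
    """
    if len(normal) != len(attacked):
        raise ValueError("both schedules must cover the same cloudlets")
    return sum(a != b for a, b in zip(normal, attacked))


# Four cloudlets; cloudlets 1 and 3 move to a different VM under attack.
moved = count_reassigned([0, 1, 2, 3], [0, 2, 2, 1])
```

A count of 0 corresponds to the static behavior reported for the ABC, whereas a count above 30 out of 50 corresponds to the instability reported for the GA.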

Figure 5 shows that in a normal environment, the ABC (a) remains unchanged under DDoS; GA (b) shows significant task reassignments; hybrid GA–PSO (c) and PSO (d) exhibit intermediate stability.

Figure 6 shows that under DDoS attacks, PSO maintains the most stable task assignments, the GA shows drastic shifts, the ABC is static, and the hybrid GA–PSO mitigates the GA’s instability to some extent.

Figure 6 also shows an evaluation of how metaheuristic algorithms handle task scheduling in a cloud computing environment under DDoS attack conditions. The analysis covers four key approaches: ABC, GA, hybrid GA–PSO, and PSO, each tested over 10 iterations with 50 cloudlets.

The results reveal clear distinctions in terms of how these algorithms respond to system disruptions. ABC maintains an identical scheduling structure to its normal state, showing no adaptation to attack-induced variability. This suggests that while the ABC remains consistent, it lacks responsiveness to dynamic threats.

In contrast, the GA undergoes significant disruption, with over 30 cloudlets being reassigned to different VMs. This instability indicates that the GA’s scheduling mechanism is highly sensitive to external interference, leading to a loss of structured task distribution. The GA–PSO, however, demonstrates greater stability, mitigating some of the scheduling inconsistencies seen in GA. Although the GA–PSO still exhibits some variations, its hybrid approach enhances resilience, offering a more controlled allocation strategy.

Among all the algorithms, PSO proves to be the most stable under attack conditions. Most cloudlets retain their original VM assignments, with only minor shifts observed. This suggests that PSO inherently provides a more structured and resilient task scheduling mechanism, making it less prone to attack-induced inefficiencies. The analysis indicates that PSO maintains the most stable and efficient scheduling performance in a disrupted environment, whereas the GA exhibits significant instability, struggling to preserve task assignments under attack conditions. The GA–PSO mitigates some of the GA’s weaknesses by providing a more balanced allocation, though it still experiences fluctuations. In contrast, the ABC remains entirely unchanged, neither adapting to external threats nor experiencing performance degradation.

4.1. Overall Performance Degradation

This section examines the overall performance degradation of metaheuristic algorithms under DDoS attack conditions, focusing on their ability to maintain scheduling stability and system efficiency. The findings reveal notable differences in how each algorithm responds to external disruptions, impacting task allocation, execution time, and makespan performance.

Among the evaluated algorithms, PSO demonstrates the highest level of stability, with only minor task assignment variations observed. Most cloudlets remain allocated to their original VMs, indicating a robust scheduling mechanism that resists disruptions. Conversely, the GA experiences substantial instability, with over 30 out of 50 cloudlets being reassigned under attack conditions, reflecting its high sensitivity to scheduling inconsistencies. The ABC, on the other hand, remains entirely static, neither adapting to the attack nor suffering from task displacement, suggesting a rigid but predictable scheduling approach. Increasing the number of iterations from 10 to 100 improved the GA–PSO makespan by approximately 15%, while PSO and the ABC showed less than 5% improvement. This indicates that the hybrid model benefits more significantly from increased exploration time.

To fully assess the impact of DDoS attacks on overall system efficiency, key performance indicators such as execution time and makespan must be closely analyzed. PSO’s scheduling consistency may help minimize processing delays, whereas the GA’s unpredictability could lead to extended execution times and inefficient resource utilization. The GA–PSO, despite its relative improvement over the GA, requires further investigation to determine whether its structured scheduling translates into measurable efficiency gains. The ABC’s unchanged scheduling may ensure predictability, but its lack of adaptability raises concerns about how well it would perform in dynamic, real-world cloud environments.

By analyzing execution time, makespan variations, and resource utilization under attack conditions, this study aims to provide a comprehensive evaluation of how different scheduling strategies contribute to cloud computing resilience in the face of cyber threats.

DDoS attacks disrupt cloud-based systems by overwhelming network and computational resources, causing delays, resource exhaustion, and instability in task execution. In such an environment, cloud infrastructures experience increased latency, irregular resource allocation, and unpredictable execution times, making efficient task scheduling a significant challenge.

Different scheduling algorithms respond to DDoS-induced disruptions in varying ways. PSO maintains a relatively stable task allocation, minimizing deviations in assignments, while the GA exhibits high volatility, frequently reassigning tasks and losing scheduling consistency. The GA–PSO offers a more structured approach than the GA, yet still experiences fluctuations that affect performance. The ABC, on the other hand, remains completely static, showing neither degradation nor adaptation, which raises concerns about its responsiveness in dynamic cloud environments.

To assess how well these algorithms function under attack conditions, key performance indicators such as execution time, makespan, and system resource utilization need to be analyzed. Understanding how each algorithm handles scheduling in an unstable environment provides critical insights into its ability to sustain efficiency and operational reliability in cloud computing systems.

4.2. Impact by Threat Type

This section explores how different threat types, particularly DDoS attacks, influence the performance of task scheduling algorithms. The analysis emphasizes variations in algorithm stability and adaptability, offering insights into their effectiveness in minimizing performance disruptions under adverse conditions.

The effects of DDoS attacks are most pronounced in short-term task execution, where network congestion and resource exhaustion create scheduling inconsistencies and irregular execution times. These conditions disrupt system efficiency, forcing algorithms to adapt in real time to maintain workload distribution. While the GA exhibits significant instability, frequently reassigning tasks and struggling to maintain structured scheduling, PSO demonstrates greater resilience by preserving task consistency with minimal deviations.

The GA–PSO improves system stability, particularly with regard to long-term optimization tasks in dynamic conditions.

The inconsistent performance of the GA, particularly under DDoS conditions, may be attributed to its reliance on stochastic genetic operations such as mutation and crossover. These operations introduce significant variability between runs and are sensitive to noise caused by resource saturation. In contrast, PSO maintains a population-based memory of best solutions, enabling more consistent and stable performance across varying scenarios. The GA–PSO mitigates the GA’s randomness by integrating PSO’s convergence behavior, yet some residual fluctuation remains due to genetic diversity mechanisms.

To fully assess the impact of threats on scheduling efficiency, it is essential to analyze execution time fluctuations, makespan stability, and resource utilization. These factors provide a deeper understanding of how different scheduling strategies can be refined to improve resilience in dynamic cloud environments.

4.3. Critical Insights and Resilience of Hybrid Algorithms

Building on the comparative results, the GA–PSO hybrid strategy has demonstrated strong performance in addressing the complexities of cloud-based task scheduling.

By seamlessly combining the advantages of PSO and GA, the hybrid GA–PSO algorithm effectively adapts to dynamic cloud environments characterized by fluctuating workloads and variable resource availability. Its resilience remains intact even in the presence of network disruptions or security threats, making it a highly adaptive scheduling approach.

Furthermore, this hybrid strategy has demonstrated its ability to address the complexities of cloud-based task scheduling by optimizing cloudlet allocation to VMs and minimizing execution time, even in scenarios where security vulnerabilities compromise system stability. The integration of local and global optimization techniques, coupled with stagnation mitigation strategies, enables high adaptability and resilience in resource-constrained and high-risk environments.

By tackling critical challenges in cloud systems that are susceptible to security threats, the hybrid GA–PSO algorithm provides a reliable framework for ensuring stable and efficient task scheduling. Its adaptability and robustness make it a valuable tool for researchers and practitioners striving to enhance both the performance and security of cloud computing infrastructures.

Interestingly, although the ABC algorithm displays faster execution times, it consistently reports lower resource utilization. This behavior suggests that the ABC may be prioritizing speed by allocating tasks unevenly, potentially leaving some VMs underutilized. While this results in low makespan values in certain trials, it raises concerns about fairness and scalability. In contrast, PSO and the GA–PSO exhibit better workload balance and are more resilient under dynamic conditions, even if their execution times are slightly higher.

Table 4 presents a comparative analysis of the average execution times of various algorithms across different studies, evaluating their performance under identical or similar simulation settings. The results demonstrate that the proposed hybrid GA–PSO algorithm achieves significantly lower execution times compared to conventional approaches such as PSO, GAs, and the ABC. This improvement underscores the effectiveness of hybrid methodologies in optimizing task scheduling and enhancing computational efficiency, particularly in complex and resource-constrained cloud environments. These findings highlight the potential of hybrid algorithms in addressing the limitations of traditional scheduling techniques by balancing exploration and exploitation to improve overall system performance.

The findings from the study were evaluated using Min, Max, Avg, and Std values. However, the assessments in Table 4, Table 5 and Table 6 were based on average makespan (Avg) results, as they provide a more balanced representation of algorithmic performance across different scenarios. The study highlights the performance differences of GAs, PSO, ABC, and the proposed hybrid GA–PSO algorithm under both normal and DDoS attack conditions. A particularly noteworthy observation is the unexpected performance of the ABC algorithm. While the ABC exhibits higher makespan values in terms of Avg results, its resource utilization remains significantly lower compared to other algorithms (Table 7, Table 8 and Table 9). This suggests that the ABC executes tasks rapidly but does not efficiently allocate system resources or distribute workloads evenly. The low resource utilization raises questions about whether the ABC benefits from an advantageous scheduling mechanism or if an experimental inconsistency is influencing the results. The irregularities observed in the ABC’s performance warrant further investigation. Despite showing high makespan values, its lower resource utilization suggests an imbalance between execution speed and efficiency. This discrepancy may be attributed to a scheduling anomaly, an unintended modeling advantage, or a fundamental characteristic of the algorithm. As a result, it is crucial to determine whether the ABC’s behavior is an inherent feature of its scheduling logic or an experimental inconsistency that requires further validation.

The role of the ABC in comparative analysis also requires careful consideration. Excluding the ABC entirely could weaken the comparative strength of the study, as it would narrow the scope of evaluation between the GA, PSO, and GA–PSO. However, the ABC’s unusually low resource utilization despite its high execution speed raises the possibility of an underlying anomaly in task execution. A balanced approach would be to retain the ABC in the analysis while clearly addressing this anomaly, ensuring a comprehensive yet transparent evaluation.

Meanwhile, the GA, PSO, and GA–PSO exhibit more consistent performance trends, making their comparisons more reliable. Removing the ABC from the assessment could limit the study’s depth, but including it without verifying its results may introduce misleading conclusions. The most rigorous approach would be to highlight the ABC’s unusual performance while conducting additional validation to determine the accuracy and reliability of its reported outcomes. Ultimately, a thorough investigation into the ABC’s unexpected performance trends is essential to ensure an accurate evaluation of all algorithms. Without further verification, any comparative analysis involving the ABC could introduce uncertainty regarding the validity of the findings. By examining the interplay between execution time, resource allocation, and workload distribution, a clearer understanding of the ABC’s role in the study can be achieved, maintaining both scientific integrity and analytical precision.

The results of this study were analyzed using Min, Max, Avg, and Std values. However, in Table 4, Table 5 and Table 6, the evaluation was based on average makespan (Avg) values, as they offer a more stable reference for comparing algorithmic performance under different conditions. The findings highlight the differences in scheduling efficiency among the GA, PSO, ABC, and the proposed hybrid GA–PSO algorithm in both normal and DDoS environments. One of the most striking observations is the unexpected performance of the ABC algorithm. While the ABC consistently demonstrates lower resource utilization compared to the GA, PSO, and GA–PSO (Table 7, Table 8 and Table 9), it simultaneously achieves the fastest execution times. This discrepancy suggests that the ABC completes tasks quickly but does not fully utilize available system resources, raising questions about whether its efficiency is due to optimized scheduling or an imbalance in workload distribution. Unlike PSO and the GA–PSO, which maintain higher resource utilization, the ABC prioritizes speed over computational resource engagement, making its scheduling mechanism notably different from the others.

The execution time analysis (Table 10, Table 11 and Table 12) further illustrates this distinction. The ABC consistently outperforms the other algorithms in terms of speed, achieving significantly shorter execution times. However, this rapid task completion does not necessarily indicate a more efficient scheduling strategy, as it may result from an uneven distribution of computational workload rather than true optimization. PSO and the GA–PSO, while slightly slower, demonstrate a more stable workload balance, making them preferable choices in scenarios where predictable and well-distributed execution is required.

A critical finding of this study is the impact of DDoS attacks on scheduling performance. The expectation was that task allocation would remain consistent between normal and DDoS environments. This expectation held true for PSO, the GA–PSO, and the ABC, which maintained stable scheduling structures despite the attacks. However, the GA exhibited significant task reassignment variations under DDoS conditions, indicating its susceptibility to external disruptions. This instability underscores the GA’s vulnerability in adversarial conditions, reinforcing the GA–PSO’s advantages, as it mitigates the GA’s weaknesses while retaining PSO’s adaptive capabilities.
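One simple way to quantify how consistent task allocation is between the two environments is the fraction of cloudlets that end up on a different VM. The sketch below is only an illustration of that idea: the function name, the list-based assignment encoding, and the sample data are assumptions, not the measurement procedure used in this study.

```python
from typing import Sequence


def reassignment_rate(normal: Sequence[int], attacked: Sequence[int]) -> float:
    """Fraction of cloudlets mapped to a different VM under attack than under normal load.

    Both sequences use the hypothetical encoding "index = cloudlet, value = VM index".
    """
    if len(normal) != len(attacked):
        raise ValueError("both assignments must cover the same cloudlets")
    if not normal:
        return 0.0
    changed = sum(1 for a, b in zip(normal, attacked) if a != b)
    return changed / len(normal)


# Illustrative assignments for four cloudlets on three VMs.
stable = reassignment_rate([0, 1, 2, 0], [0, 1, 2, 0])    # identical schedules
unstable = reassignment_rate([0, 1, 2, 0], [1, 1, 2, 2])  # two cloudlets moved

print(stable, unstable)
```

A rate near zero would correspond to the stable behavior reported for PSO, the GA–PSO, and the ABC, while a larger rate would correspond to the task reassignment variations observed for the GA.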

To further examine the consistency of scheduling under both normal and DDoS conditions, graphical representations were created for scenarios involving 50 cloudlets (10 iterations, 50 cloudlets; 50 iterations, 50 cloudlets; and 100 iterations, 50 cloudlets). Due to the large number of cloudlets, direct visualization may not be entirely clear. To address this, a color-based approach without algorithm labels was used, ensuring the visualization remains comprehensible while preserving meaningful comparisons.

The findings emphasize critical trade-offs between execution speed, resource utilization, and scheduling stability:

The ABC completes tasks at a much faster rate but does not efficiently allocate resources, raising concerns about workload distribution fairness.

PSO and the GA–PSO demonstrate superior resilience under DDoS conditions, making them more suitable for cloud environments where scheduling stability is essential.

The GA’s instability under attack conditions suggests the need for more robust scheduling mechanisms, particularly in environments where external disruptions are a risk factor.

Given these findings, further analysis is required to determine whether the ABC’s lower resource utilization is a natural characteristic of its scheduling logic or if it benefits from an unintended advantage in the execution model. Additionally, visualizing task scheduling consistency under normal and DDoS conditions is crucial for validating how each algorithm adapts to adversarial conditions.

Table 13 provides a comprehensive comparison of makespan results across different algorithms, emphasizing the enhanced performance of hybrid approaches in optimizing task scheduling. The results consistently indicate that hybrid algorithms, particularly the hybrid GA–PSO, outperform conventional methods by significantly reducing makespan in diverse computational settings.

Previous research supports these findings. In a study conducted by Guo et al. [13], the hybrid stagnation PSO–GA achieved a makespan of 18.704, significantly outperforming standalone PSO (38.69) and other PSO variations, such as CM-PSO (51.53) and L-PSO (329.96). Likewise, findings from Alsaidy et al. [14] indicate that hybrid methods resulted in makespan values as low as 63.714, surpassing conventional scheduling techniques such as PSO (155.9) and heuristic-based approaches such as Min–Min (155.3) and Max–Min (172.4).

These results highlight the efficiency and adaptability of hybrid algorithms, even under varying conditions such as different population sizes, cloud infrastructures, and resource constraints. The key strength of hybrid methods lies in their ability to combine global search capabilities with local optimization techniques, allowing for better task distribution and reduced execution time. This balanced approach makes hybrid scheduling particularly well-suited for complex cloud computing environments, where task scheduling efficiency directly impacts overall system performance.

As shown in Table 13, the proposed hybrid GA–PSO outperforms conventional methods in terms of makespan performance across various simulation settings, confirming its effectiveness with regard to both optimization and adaptability.

Table 13 presents a comparative analysis between the makespan values reported from selected prior studies and the results obtained with the proposed hybrid GA–PSO algorithm under equivalent experimental conditions. For instance, Guo et al. [13] reported makespans of 38.69 s for PSO, 51.53 s for CM-PSO, and 329.96 s for L-PSO, while our hybrid GA–PSO achieved a significantly lower makespan of 20.17 s under the same workload and configuration. In the study by Bhagwan et al. [15], various CSO variants yielded makespans ranging from 151.23 s to 181.09 s, compared to the 161.51 s achieved by our approach. Alsaidy et al. [14] documented makespans between 155.3 s and 172.4 s for different PSO and heuristic variants, while the hybrid GA–PSO achieved a substantially lower value of 59.09 s. Furthermore, Tamilarasu et al. [6] reported makespans between 213.74 s and 245.14 s for the ICOATS and WHACO-TSM methods, compared to the 126.46 s achieved in our experiments. Likewise, Amalarethinam et al. [12] observed makespans of 142 s (HEFT) and 111 s (WSGA), whereas the hybrid GA–PSO completed scheduling tasks in only 47.93 s. Finally, Alsaidy et al. [14] obtained makespans ranging from 20.6 s to 22.0 s with the SJFP-PSO and LJFP-PSO methods, while our hybrid GA–PSO achieved an even lower makespan of 11.75 s, outperforming the best PSO variants. These results collectively demonstrate that across multiple independent studies, the hybrid GA–PSO consistently outperforms prior techniques in terms of execution time. Moreover, the experimental conditions, simulation parameters, and workload settings were carefully synchronized with those used in previous studies to ensure a direct and reliable comparison. The significant reductions in makespan therefore confirm that the proposed hybrid GA–PSO algorithm not only accelerates scheduling processes but also maintains robust performance even under dynamic and complex cloud computing environments.

Additionally, unlike previous studies that primarily assess performance in static conditions, this research focuses on dynamic and unpredictable cloud environments, demonstrating enhanced resilience in the face of disruptions. These findings not only align with existing literature but also expand upon it, underscoring the need for integrated, robust scheduling frameworks that can optimize performance in complex cloud computing systems (Table 13).

4.4. Alternative Mechanisms for Performance Enhancement

Beyond traditional hybrid metaheuristics, other complementary strategies can further improve exploration, convergence speed, and robustness against local optima.

For example:

If the PSO inertia weight were dynamically updated with logistic or tent chaotic maps, oscillations across iterations would likely decrease, and the convergence rate could increase.

Probabilistic acceptance rules, such as those used in simulated annealing, can help PSO particles escape local optima by allowing them to occasionally accept worse solutions.

Our study focuses on the intensification aspect of the GA–PSO hybrid. Our findings suggest that adding diversity strategies such as random restart or opposition-based learning could increase the discovery of new solution sets during stagnation periods and further reduce the deviation in the worst-case makespan.

Applying short-term hill climbing to selected individuals during the global PSO–GA search in the hybrid could further reduce our best makespan values.
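As a rough illustration of the first two mechanisms listed above, the sketch below shows a logistic-map update for the inertia weight and a simulated-annealing-style acceptance rule. It is a minimal sketch under stated assumptions, not part of the proposed GA–PSO implementation: the function names, the control parameter r = 4, the weight bounds 0.4 and 0.9, and the temperature values are illustrative choices.

```python
import math
import random
from typing import Tuple


def logistic_map(x: float, r: float = 4.0) -> float:
    """One step of the logistic map; r = 4 gives chaotic behavior on (0, 1)."""
    return r * x * (1.0 - x)


def chaotic_inertia(x: float, w_min: float = 0.4, w_max: float = 0.9) -> Tuple[float, float]:
    """Map the next chaotic value into [w_min, w_max]; return (weight, new chaotic state)."""
    x_next = logistic_map(x)
    weight = w_min + (w_max - w_min) * x_next
    return weight, x_next


def accept_candidate(current: float, candidate: float, temperature: float,
                     rng: random.Random) -> bool:
    """Metropolis-style rule for a minimized makespan.

    Better or equal candidates are always accepted; worse ones are accepted
    with probability exp(-(candidate - current) / temperature).
    """
    if candidate <= current:
        return True
    if temperature <= 0.0:
        return False
    return rng.random() < math.exp(-(candidate - current) / temperature)


# Generate a few inertia weights from an arbitrary starting state.
state = 0.37
weights = []
for _ in range(5):
    w, state = chaotic_inertia(state)
    weights.append(w)
print(weights)

# Decide whether a particle may move to a slightly worse schedule.
rng = random.Random(42)
print(accept_candidate(current=20.0, candidate=21.0, temperature=5.0, rng=rng))
```

In a PSO loop, the weight returned by `chaotic_inertia` would replace a fixed or linearly decreasing inertia term, and the temperature passed to `accept_candidate` would typically be lowered over iterations so that worse solutions are accepted less often as the search converges.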

While our current work focuses on GA–PSO hybridization, future model extensions will integrate and compare these mechanisms—individually and in combination—to assess their impact on scheduling performance in both normal and adversarial cloud environments.

5. Conclusions

Cloud computing has emerged as a fundamental pillar of modern technology, enabling scalable data management, high computational power, and operational flexibility across various industries. However, its widespread adoption also introduces significant challenges, particularly in terms of security and performance optimization. Threats such as DDoS attacks and malware (e.g., viruses) present substantial risks by degrading system efficiency and potentially disrupting service availability. These challenges emphasize the critical need for robust and secure solutions to maintain the reliability and efficiency of cloud infrastructure.

This study examined task scheduling in cloud environments through the application of metaheuristic algorithms, with a particular focus on the impact of DDoS scenarios. The findings reveal that while security threats negatively affect system performance, their influence varies in scope and severity. DDoS attacks primarily overload network resources, causing immediate yet temporary disruptions that manifest as delays and inefficiencies. However, they also exhaust essential computational resources such as CPU and RAM, leading to sustained performance degradation, particularly in scenarios requiring prolonged execution cycles.