InDepth: A Distributed Data Collection System for Modern Computer Networks

Abstract

1. Introduction

2. Background

2.1. Benchmark Datasets

2.2. Data Collection Systems

3. InDepth System Architecture and Cyber Range

3.1. Motivation

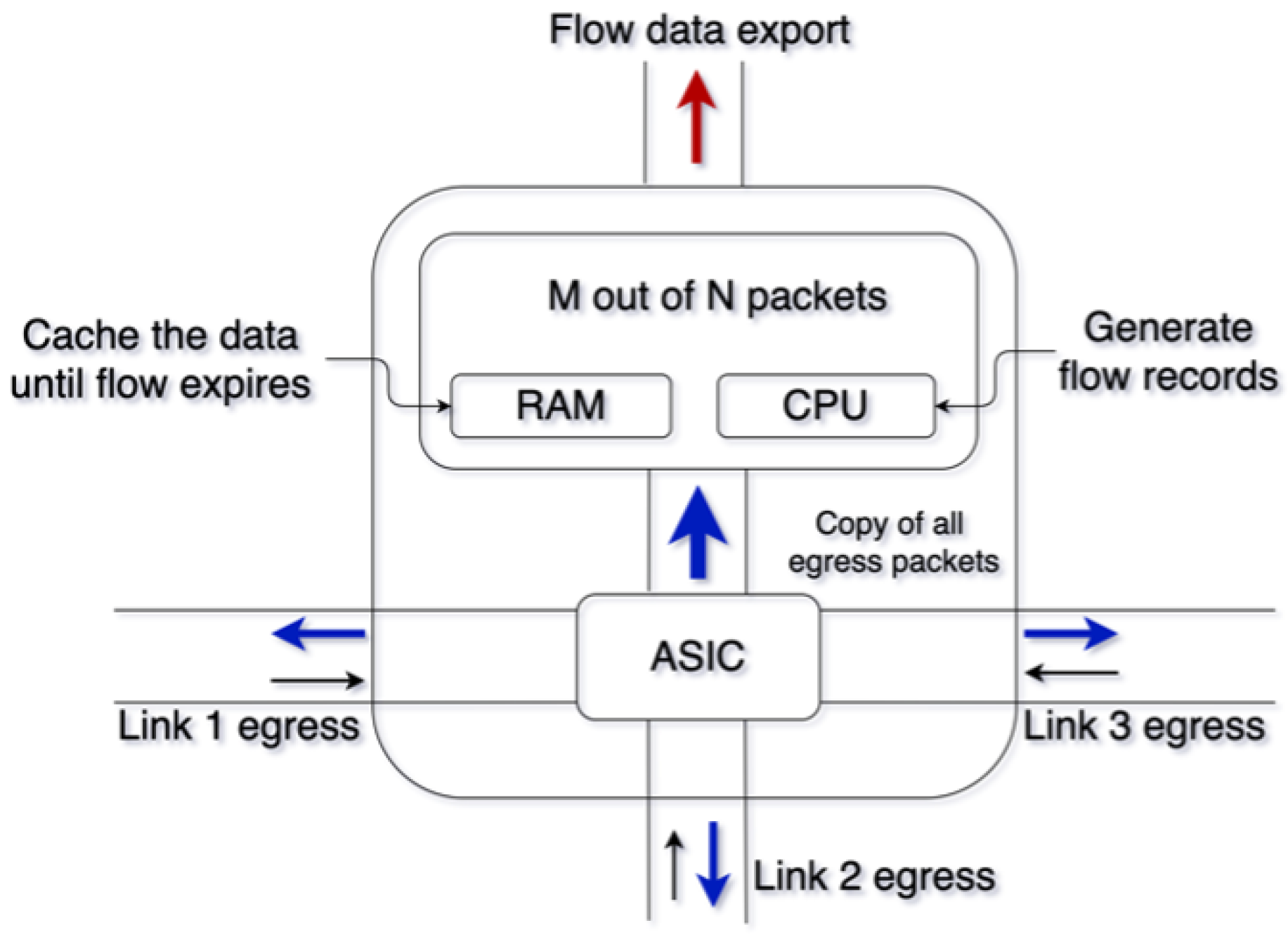

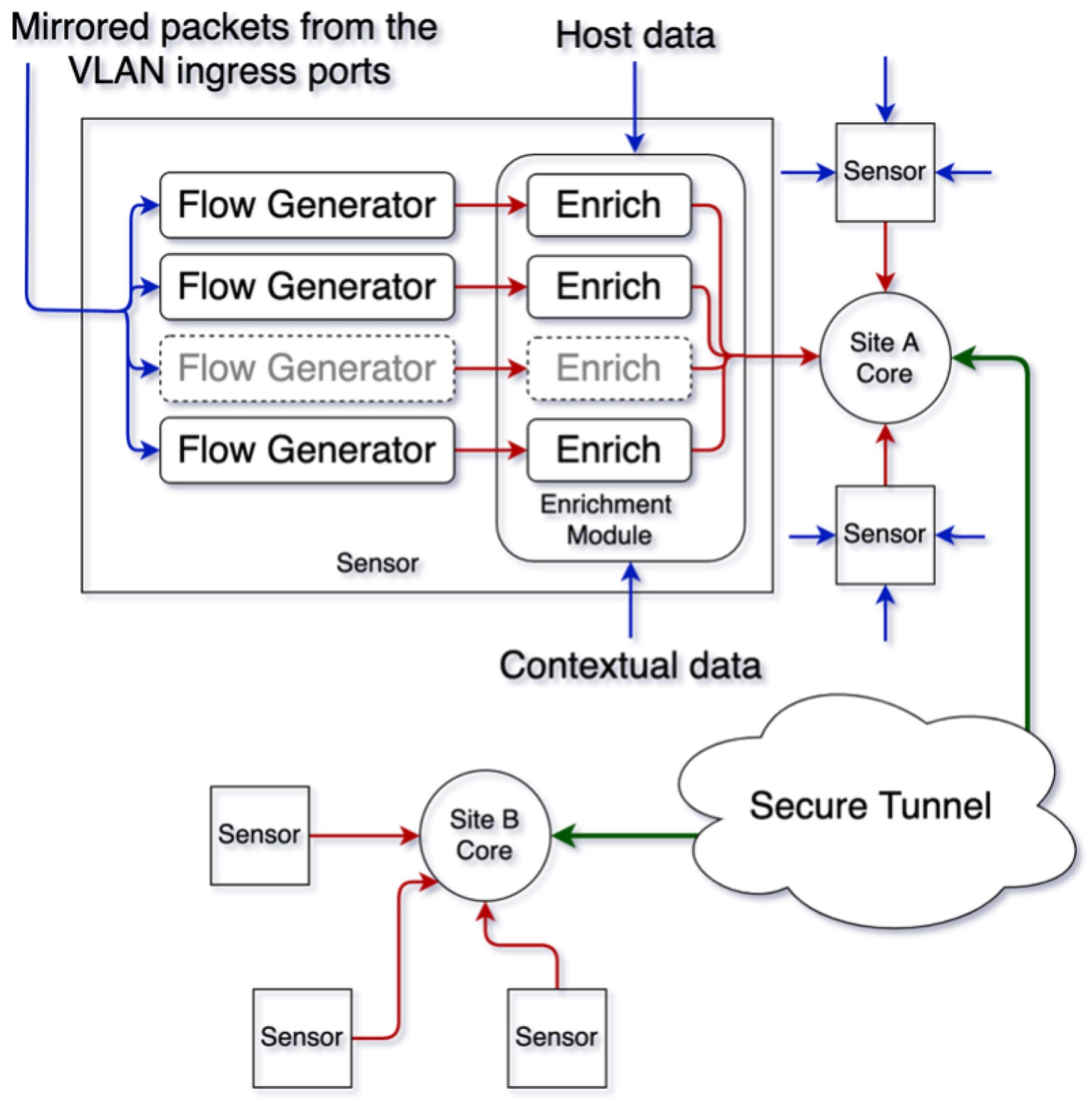

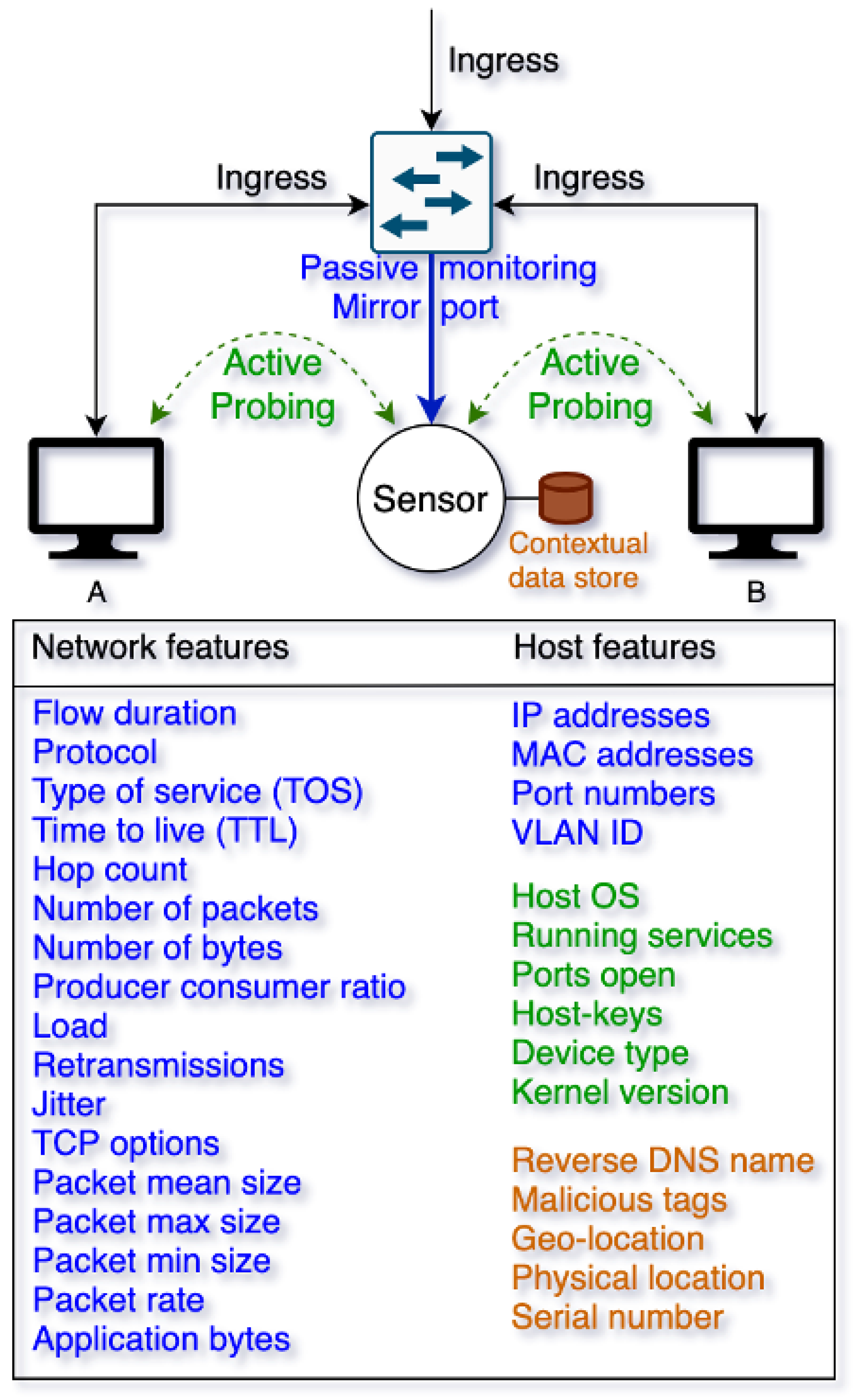

3.2. Data Generation and Storage

3.3. Modern Network Stack

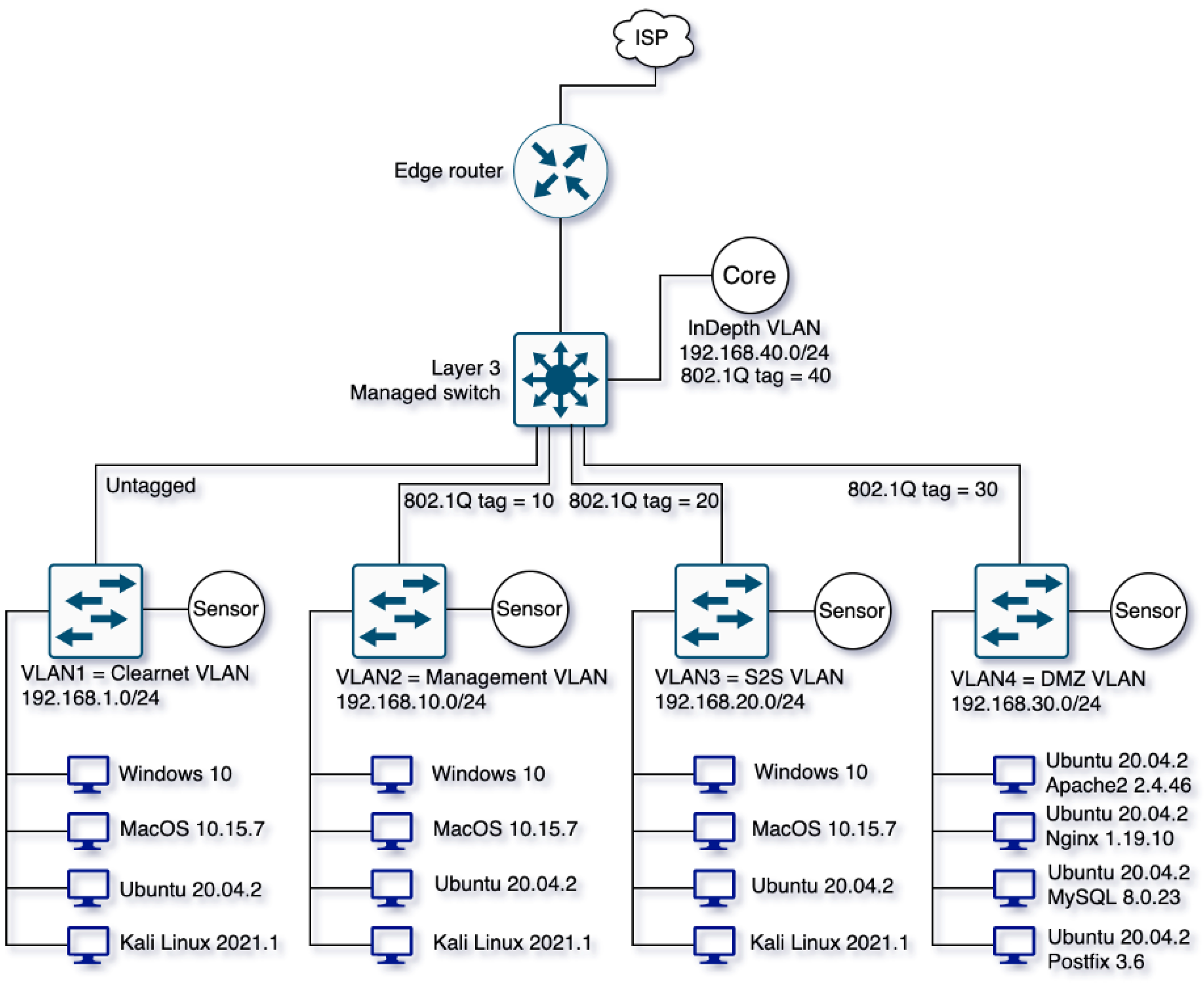

3.4. InDepth Cyber Range

- Clearnet (192.168.1.0/24, untagged): Contains standard user devices without special VPN or firewall rules.

- Management (192.168.10.0/24, tag 10): Includes devices used by management personnel, isolated from other subnets via firewall policies.

- Site-to-Site (192.168.20.0/24, tag 20): Establishes an encrypted, isolated connection to a simulated off-site network.

- DMZ (192.168.30.0/24, tag 30): Hosts critical services such as web, email, and database servers in a demilitarized zone. Although such services typically use public IP addresses, this range uses private addresses for demonstration purposes. Because the range is not connected to the live Internet, private addressing avoids potential conflicts with real public hosts. The installed services run their latest versions but can be swapped for older, vulnerable counterparts (e.g., using OWASP tools [37] or Metasploitable [38]) to support targeted security testing and threat signature generation.

- InDepth (192.168.40.0/24, tag 40): An isolated network segment dedicated solely to the InDepth core node, which aggregates data collected from all sensor nodes across the cyber range.
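The address plan above is regular enough to encode directly as data. As a minimal sketch (not part of InDepth itself), the following Python uses the standard `ipaddress` module to map an IPv4 address to its cyber-range segment and 802.1Q VLAN tag, with `None` standing in for the untagged Clearnet. The `Segment` type and the `segment_for` and `tag_matches` functions are hypothetical names introduced here for illustration. `tag_matches` shows one possible consistency check, flagging traffic whose source address does not belong to the VLAN it was observed on; it is an assumption, not the system's documented behavior.

```python
import ipaddress
from typing import NamedTuple, Optional


class Segment(NamedTuple):
    name: str
    network: ipaddress.IPv4Network
    vlan: Optional[int]  # 802.1Q VLAN ID; None means untagged


# Subnets and tags as listed for the InDepth cyber range.
SEGMENTS = [
    Segment("Clearnet", ipaddress.ip_network("192.168.1.0/24"), None),
    Segment("Management", ipaddress.ip_network("192.168.10.0/24"), 10),
    Segment("Site-to-Site", ipaddress.ip_network("192.168.20.0/24"), 20),
    Segment("DMZ", ipaddress.ip_network("192.168.30.0/24"), 30),
    Segment("InDepth", ipaddress.ip_network("192.168.40.0/24"), 40),
]


def segment_for(addr: str) -> Optional[Segment]:
    """Return the segment whose subnet contains addr, or None if it is outside the plan."""
    ip = ipaddress.ip_address(addr)
    for seg in SEGMENTS:
        # Membership is False (not an error) for IPv6 addresses against IPv4 networks.
        if ip in seg.network:
            return seg
    return None


def tag_matches(addr: str, observed_vlan: Optional[int]) -> bool:
    """Hypothetical check: does the observed VLAN tag agree with the address's segment?"""
    seg = segment_for(addr)
    return seg is not None and seg.vlan == observed_vlan


if __name__ == "__main__":
    print(segment_for("192.168.30.25").name)  # DMZ
    print(tag_matches("192.168.10.5", 30))    # False: Management address seen on the DMZ VLAN
```

Keeping the plan as a table rather than hard-coded branches means adding or renumbering a segment is a one-line change, and a linear scan is adequate for five /24 networks.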

4. Experimental Results

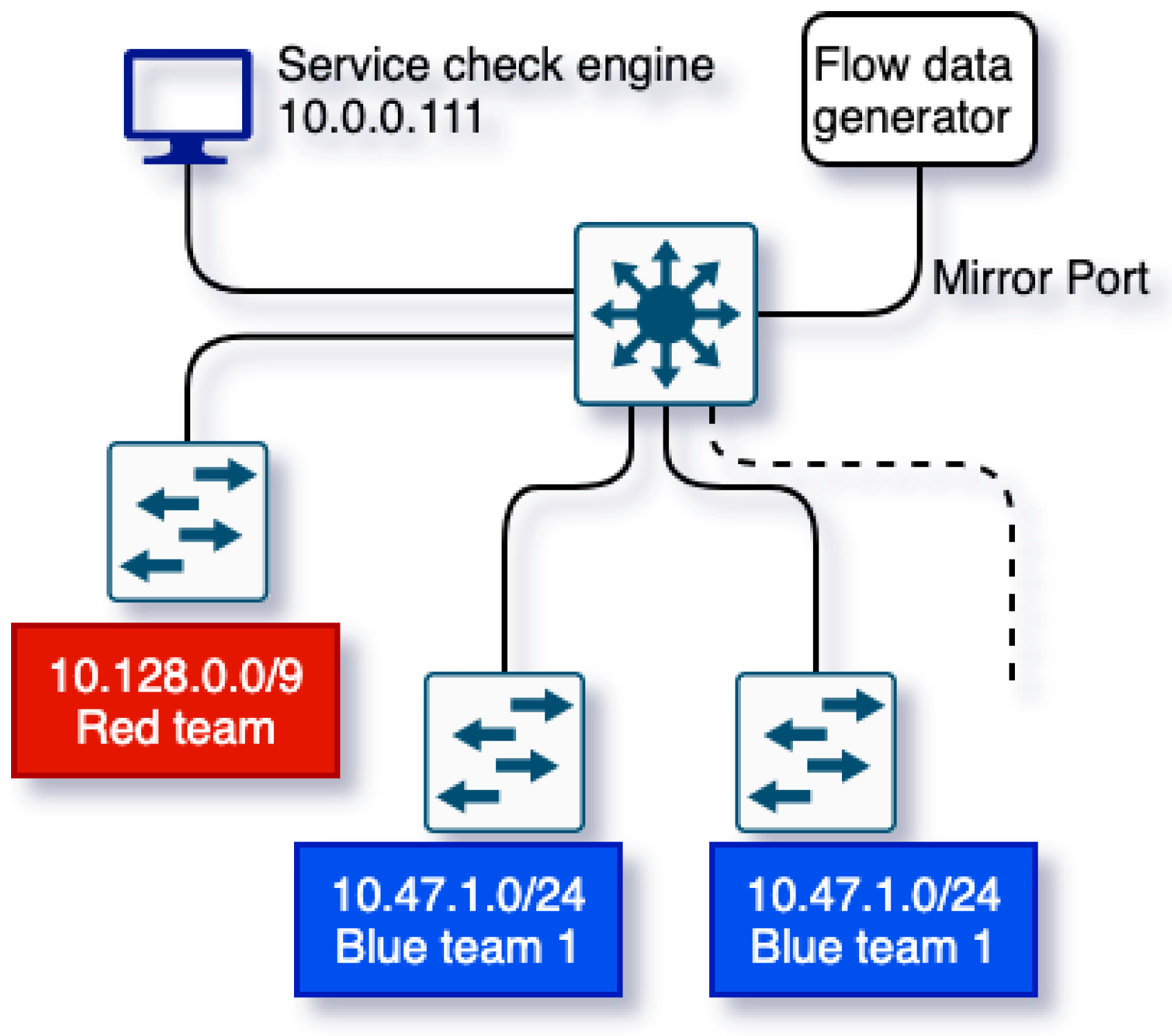

4.1. WRCCDC 2020

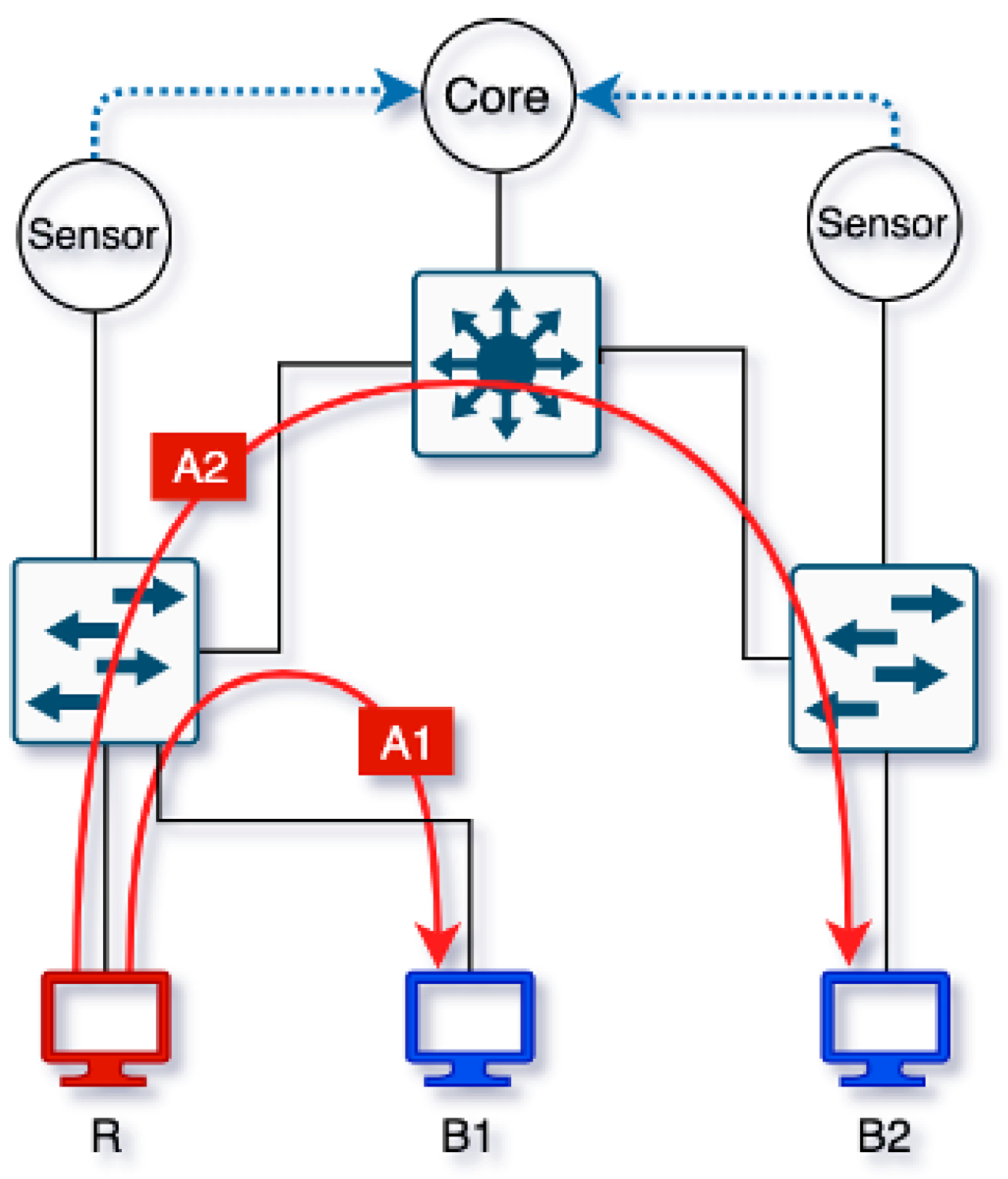

4.2. Experiment 1: Aggregation and Attribution

4.3. Experiment 2: Lateral Movement

4.4. Experiment 3: IP Address Spoofing

4.5. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- 1998 DARPA Intrusion Detection Evaluation Dataset. MIT Lincoln Laboratory. Available online: https://www.ll.mit.edu/r-d/datasets/1998-darpa-intrusion-detection-evaluation-dataset (accessed on 30 March 2025).

- KDD Cup 1999 Data. Available online: https://kdd.ics.uci.edu/databases/kddcup99/kddcup99.html (accessed on 30 March 2025).

- Tavallaee, M.; Bagheri, E.; Lu, W.; Ghorbani, A.A. A Detailed Analysis of the KDD CUP 99 Dataset. In Proceedings of the 2009 IEEE Symposium on Computational Intelligence for Security and Defense Applications, Ottawa, ON, Canada, 8–10 July 2009; pp. 1–6.

- McHugh, J. Testing Intrusion Detection Systems: A Critique of the 1998 and 1999 DARPA Intrusion Detection System Evaluations as Performed by Lincoln Laboratory. ACM Trans. Inf. Syst. Secur. 2000, 3, 262–294.

- Mahoney, M.V.; Chan, P.K. An Analysis of the 1999 DARPA/Lincoln Laboratory Evaluation Data for Network Anomaly Detection. In Recent Advances in Intrusion Detection; Vigna, G., Kruegel, C., Jonsson, E., Eds.; Springer: Berlin/Heidelberg, Germany, 2003; pp. 220–237.

- Maciá-Fernández, G.; Camacho, J.; Magán-Carrión, R.; García-Teodoro, P.; Therón, R. UGR'16: A New Dataset for the Evaluation of Cyclostationarity-based Network IDSs. Comput. Secur. 2018, 73, 411–424.

- Shiravi, A.; Shiravi, H.; Tavallaee, M.; Ghorbani, A.A. Toward Developing a Systematic Approach to Generate Benchmark Datasets for Intrusion Detection. Comput. Secur. 2012, 31, 357–374.

- Creech, G.; Hu, J. Generation of a New IDS Test Dataset: Time to Retire the KDD Collection. In Proceedings of the 2013 IEEE Wireless Communications and Networking Conference (WCNC), Shanghai, China, 7–10 April 2013; pp. 4487–4492.

- WRCCDC Public Archive. Available online: https://archive.wrccdc.org/pcaps/2020/ (accessed on 30 March 2025).

- Kodituwakku, A.; Gregor, J. InMesh: A Zero-Configuration Agentless Endpoint Detection and Response System. Electronics 2025, 14, 1292.

- Khraisat, A.; Gondal, I.; Vamplew, P.; Kamruzzaman, J. Survey of Intrusion Detection Systems: Techniques, Datasets and Challenges. Cybersecurity 2019, 2, 20.

- KDDCUP99 Dataset. Available online: https://www.tensorflow.org/datasets/catalog/kddcup99 (accessed on 30 March 2025).

- Kayacik, H.G.; Zincir-Heywood, N.; Heywood, M. Selecting Features for Intrusion Detection: A Feature Relevance Analysis on KDD 99. In Proceedings of the Third Annual Conference on Privacy, Security and Trust, St. Andrews, NB, Canada, 12–14 October 2005; Available online: https://www.semanticscholar.org/paper/Selecting-Features-for-Intrusion-Detection%3A-A-on-99-Kayacik-Zincir-Heywood/60e28c7da56eb61dd8ddb710a6f079ef02668014 (accessed on 30 March 2025).

- Yavanoglu, O.; Aydos, M. A Review on Cyber Security Datasets for Machine Learning Algorithms. In Proceedings of the 2017 IEEE International Conference on Big Data (Big Data), Boston, MA, USA, 11–14 December 2017; pp. 2186–2193.

- Moustafa, N.; Slay, J. UNSW-NB15: A Comprehensive Dataset for Network Intrusion Detection Systems. In Proceedings of the 2015 Military Communications and Information Systems Conference (MilCIS), Canberra, Australia, 10–12 November 2015; pp. 1–6.

- Intrusion Detection Evaluation Dataset (ISCXIDS2012). Available online: https://www.unb.ca/cic/datasets/ids.html (accessed on 30 March 2025).

- Panigrahi, R.; Borah, S. A Detailed Analysis of CIC-IDS2017 Dataset for Designing Intrusion Detection Systems. Int. J. Eng. Technol. 2018, 7, 479–482.

- Koroniotis, N.; Moustafa, N.; Sitnikova, E.; Turnbull, B. Towards the Development of Realistic Botnet Dataset in the Internet of Things for Network Forensic Analytics: Bot-IoT Dataset. Future Gener. Comput. Syst. 2019, 100, 779–796.

- MITRE ATT&CK. Available online: https://attack.mitre.org/ (accessed on 30 April 2025).

- Suricata. Available online: https://github.com/OISF/suricata (accessed on 30 April 2025).

- Wazuh. Available online: https://github.com/wazuh/wazuh (accessed on 30 April 2025).

- Zeek. Available online: https://github.com/zeek/zeek (accessed on 30 April 2025).

- ISO/IEC 27001:2022; Information Security, Cybersecurity and Privacy Protection—Information Security Management Systems—Requirements. ISO: Geneva, Switzerland, 2022. Available online: https://www.iso.org/standard/27001 (accessed on 30 April 2025).

- Cantelli-Forti, A.; Capria, A.; Saverino, A.L.; Berizzi, F.; Adami, D.; Callegari, C. Critical infrastructure protection system design based on SCOUT multitech seCurity system for intercOnnected space control groUnd staTions. Int. J. Crit. Infrastruct. Prot. 2021, 32, 100407.

- Wu, H.; Liu, Y.; Ni, S.; Cheng, G.; Hu, X. LossDetection: Real-Time Packet Loss Monitoring System for Sampled Traffic Data. IEEE Trans. Netw. Serv. Manag. 2023, 20, 30–45.

- Morariu, C.; Stiller, B. DiCAP: Distributed Packet Capturing Architecture for High-speed Network Links. In Proceedings of the 2008 33rd IEEE Conference on Local Computer Networks (LCN), Montreal, QC, Canada, 14–17 October 2008; pp. 168–175.

- Bullard, C. Argus Software. Available online: https://github.com/openargus/argus (accessed on 30 March 2025).

- Nmap: The Network Mapper. Available online: https://nmap.org/ (accessed on 30 March 2025).

- Elasticsearch. Available online: https://github.com/elastic/elasticsearch (accessed on 30 March 2025).

- Donenfeld, J.A. WireGuard VPN Tunnel. Available online: https://www.wireguard.com/ (accessed on 30 March 2025).

- VIRL. Available online: https://learningnetwork.cisco.com/s/virl (accessed on 30 March 2025).

- Linux Foundation. Open vSwitch. Available online: https://www.openvswitch.org/ (accessed on 30 March 2025).

- Open Networking Foundation. Open Network Operating System (ONOS) SDN Controller for SDN/NFV Solutions. Available online: https://opennetworking.org/onos/ (accessed on 30 March 2025).

- Durumeric, Z.; Wustrow, E.; Halderman, J.A. ZMap: Fast Internet-Wide Scanning and its Security Applications. Available online: https://github.com/zmap/zmap (accessed on 30 March 2025).

- Kodituwakku, H.A.D.E.; Keller, A.; Gregor, J. InSight2: A Modular Visual Analysis Platform for Network Situational Awareness in Large-Scale Networks. Electronics 2020, 9, 1747.

- IEEE Standard for Local and Metropolitan Area Networks–Bridges and Bridged Networks. In IEEE Std 802.1Q-2022 (Revision of IEEE Std 802.1Q-2018) 2022. Available online: https://ieeexplore.ieee.org/document/10004498 (accessed on 30 March 2025).

- Sagar, D.; Kukreja, S.; Brahma, J.; Tyagi, S.; Jain, P. Studying Open Source Vulnerability Scanners for Vulnerabilities in Web Applications. IIOAB J. 2018, 9, 43–49.

- Metasploitable 2 Exploitability Guide Documentation. Available online: https://docs.rapid7.com/metasploit/metasploitable-2-exploitability-guide/ (accessed on 30 March 2025).

- CrowdStrike Global Threat Report. Available online: https://www.crowdstrike.com/resources/reports/2020-crowdstrike-global-threat-report (accessed on 30 March 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kodituwakku, A.; Gregor, J. InDepth: A Distributed Data Collection System for Modern Computer Networks. Electronics 2025, 14, 1974. https://doi.org/10.3390/electronics14101974
