Abstract

Natural human–human communication consists of multiple modalities interacting together. When an intelligent robot or wheelchair is being developed, it is important to consider this aspect. One of the most common modality pairs in multimodal human–human communication is speech–hand gesture interaction. However, not all the hand gestures that can be identified in this type of interaction are useful. Some hand movements can be misinterpreted as useful or intentional hand gestures. Failing to filter out these unintentional gestures could lead to severe misidentification of important hand gestures. When speech–hand gesture multimodal systems are designed for disabled/elderly users, such misidentifications could have grave consequences for safety. Gesture identification systems developed for speech–hand gesture interaction commonly rely on hand features and other gesture parameters. Hence, an unintentional gesture whose features resemble those of a known gesture could be misidentified as that gesture. Therefore, in this paper, we propose an intelligent system that filters out these unnecessary or unintentional gestures before the gesture identification process in multimodal navigational commands. The filtering system uses timeline parameters such as time lag, gesture range, and gesture speed, which are calculated by comparing the vocal command timeline with the gesture timeline. For the filtering algorithm, a combination of the Locally Weighted Naive Bayes (LWNB) and K-Nearest Neighbor Distance Weighting (KNNDW) classifiers is proposed. The filtering system achieved an overall accuracy of 94%, a sensitivity of 97%, a specificity of 90%, and a Cohen’s Kappa value of 0.88.

1. Introduction

The elderly and disabled populations are growing steadily [1], so there is a pressing need for sufficient assistance. However, in the current socio-political environment, employing caretakers is expensive. Experts suggest providing companions that encourage self-sufficiency. One of the best support systems that can act as an assistant while letting the user feel self-sufficient is an intelligent wheelchair [2,3]. Users would feel empowered because they remain in control of their lives and can complete their chores more efficiently without human help. Furthermore, an intelligent wheelchair should be able to act as a companion and interact with the user in a human-like manner; that is, human–robot interaction in an intelligent wheelchair should resemble human–human communication [4]. Multimodal navigation is a recent advance in intelligent wheelchairs that improves the accuracy of understanding navigational commands compared with unimodal navigation. Various combinations of modalities, such as speech–hand gestures [5] and muscle movement–voice–eye movement [6], are used to control these wheelchairs.

General human communication consists of voice, different gestures, and other body cues. People with mobility issues tend to use their hands (from elbow to fingertips) when giving navigational instructions, and their vocal and gestural expressions can be integrated to understand a navigation command. In other words, a single message is conveyed through a combination of modalities. The speech–hand gesture pairing has frequently been considered in recent human–robot interaction (HRI) systems. The reasons include the frequent use of hand gestures as a complementary modality in everyday human–human communication, their ease of use for the elderly/disabled community, and the lack of individualistic interpretations of hand gestures. In day-to-day human–human interaction, nonverbal modalities make it easier to convey uncertain messages. Fusing vocal command interpretation with gestural information should therefore improve a human–robot interactive system.

In speech–hand gesture interaction, hand gestures carry part of the message, and interpreting them improves the understanding of the whole message. However, users also perform gestures they do not intend, which can distort the message’s meaning. These gestures are called unintentional gestures. To filter them out, it is important to identify the cues that humans use to sort out such gestures in their day-to-day lives. For example, a user might touch their nose while conveying a simple vocal message; the movement carries no meaning and may simply be an idiosyncrasy that needs to be filtered out. If the intelligent system cannot filter out such gestures early, they can compromise user safety. Even if a gesture resembles a common gesture, its timing and other factors can indicate that it is unintentional [7]. If the system relies solely on gesture features and vocal ambiguities, these unintentional gestures could endanger the user. Therefore, a robust multimodal interactive system should filter out these unnecessary gesture elements before proceeding to the actual gesture recognition step.

Most hand gesture recognition systems developed for HRI use hand feature information extracted by a vision sensor, images taken from a camera, EEG signals, or sensor gloves [8,9,10]. When a system relies on hand features, unintentional gestures whose hand features resemble those of intentional gestures pass through unfiltered. Hence, unnecessary hand movements can also be identified as useful hand gestures, since these systems rely on hand features and gesture features [11]. Some systems recognize hand gestures using other techniques, such as EEG, haptics, and wearable inputs, in which unintentional gestures are considered [12]. However, all of these hand gesture recognition systems are standalone systems. For a hand gesture associated with a vocal command in multimodal interaction, the meaning of the gesture should be interpreted together with the other modalities involved. Systems developed to filter out unintentional gestures in unimodal settings do not consider this, so applications built on them will make uninformed decisions.

2. Related Work

In existing HRI systems, hand gestures are frequently used as a complementary modality to resolve uncertain information associated with vocal commands. Hand gesture recognition in multimodal and unimodal HRI systems has been approached with different techniques [12,13]. One such technique extracts hand features through a vision sensor and uses them to train a predefined gesture model [14]. However, these models must be trained on a large dataset to avoid responding to unintentional movements [15]. Because certain unintentional movements look like, or share the same hand features as, intentional hand gestures, there is a risk of misinterpreting a gesture [16]. The same applies to systems that use vision sensors and cameras to capture an image of a hand gesture [17]. Since these images capture static gestures, the features extracted from them are based on the same hand features [18], so unintentional gestures can also reduce the accuracy of this method. Other techniques, namely continuous hand gesture recognition through wearable devices [12,19], EEG-based hand gesture recognition [14], and EMG-based gesture recognition, are popular methods for identifying dynamic gestures [20]. Most of these systems only filter out unidentifiable hand movements [21] and movements that differ from the model’s predefined gestures. Gestures that resemble intentional gestures but have nothing to do with the interaction are not filtered. In particular, multimodal systems with speech–hand gesture commands encounter proper hand gestures that have no relationship with the corresponding uncertain command phrase. Further, no system is in place to filter out unintentional gestures irrespective of whether predefined gestures are used.
Some systems also impose time limits or mobility limits as a means of filtering out unintentional gestures. Although this works for a limited number of predefined gestures, it risks filtering out some intentional gestures as well.

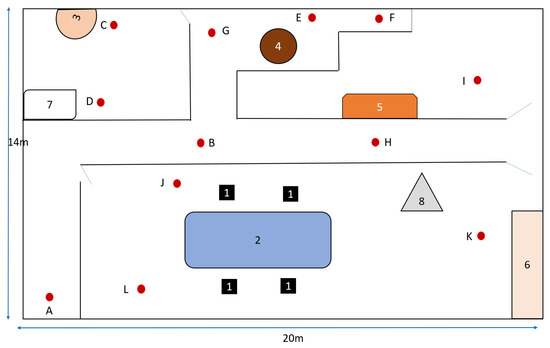

As described in [7], we conducted a human study to identify the hand gestures used in multimodal vocal commands. In that study, wheelchair users were asked to sort hand gestures associated with multimodal commands into three main categories: deictic gestures, redundant gestures, and unintentional gestures. Because that study analyzed voluntary hand gestures in speech–hand gesture multimodal user commands, users classified all involuntary gestures as unintentional. However, some involuntary hand gestures carry emotional information that is useful for interpreting the uncertainties associated with speech commands. Therefore, extending that study, this paper treats deictic, redundant, and meaningful involuntary gestures as intentional gestures.

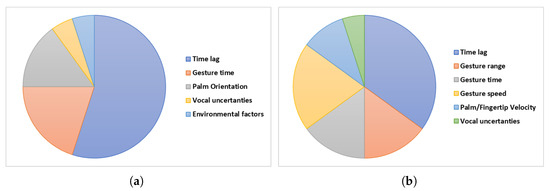

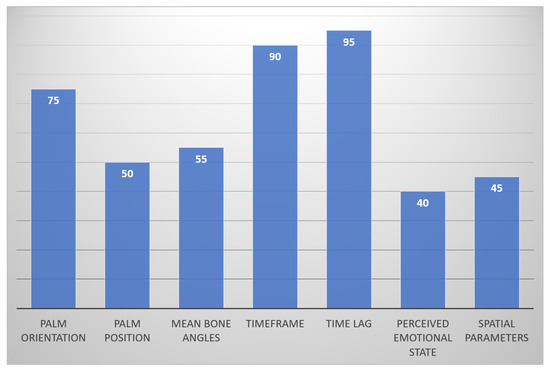

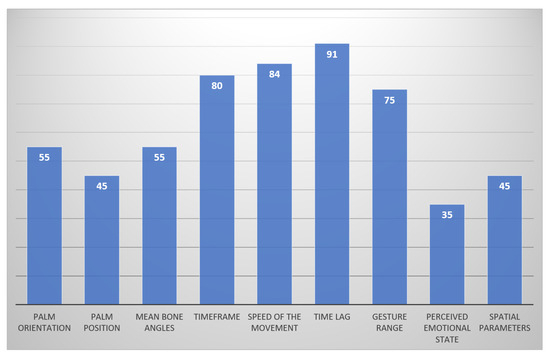

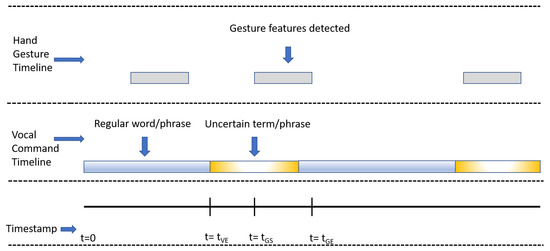

Other parameters also affect the meaning of a gesture in vocal–gesture commands, such as time lag, gesture range, gesture timeframe, gesture speed, spatial parameters, and other environmental factors [7]. Humans use these aspects, to varying degrees, in everyday communication to distinguish a real hand gesture from an idiosyncrasy. Therefore, the purpose of this research is to develop an intelligent system that filters out unintentional hand gestures in speech–hand gesture multimodal interactions to ensure user safety. The proposed system compares hand gesture timelines with vocal command timelines to determine parameters that can be used to filter out unintentional gestures. Further, parameter selection and the sorting of gestures into unintentional and intentional are carried out from an external person’s point of view, in order to mimic the human–human interaction between a user and their caretaker.

Section 3 describes the human studies conducted and analyzes the results obtained; it also identifies and defines the parameters associated with unintentional gestures for the proposed system. Section 3.4 discusses the hand features associated with unintentional gestures and the hand features used to calculate the timeline parameters described in Section 3.5. Section 4 presents the system overview, and Section 5 describes the gesture identification and filtering algorithms. Results and discussion are presented in Section 6, and Sections 7 and 8 present the limitations and conclusions, respectively.

4. System Overview

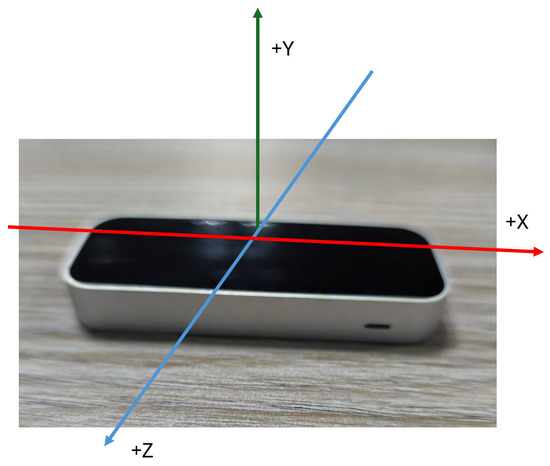

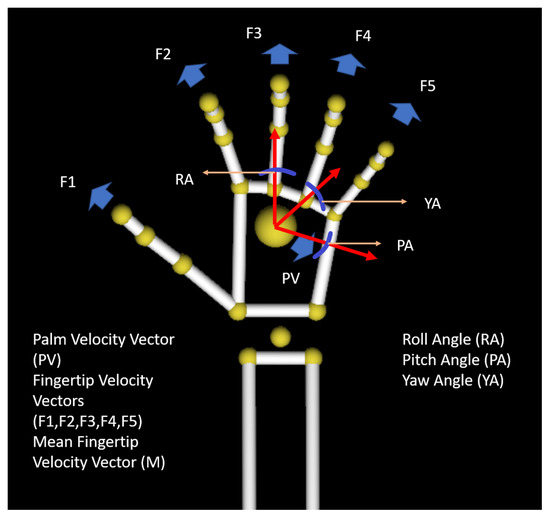

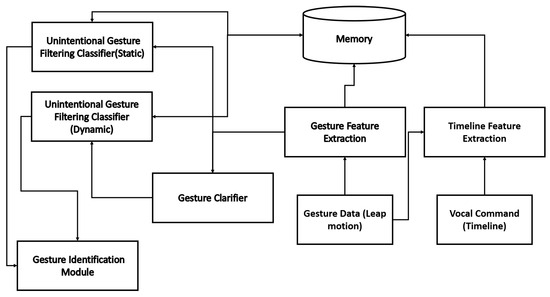

As represented in Figure 8, the proposed system for unintentional gesture filtering consists of a Gesture Feature Extraction Module (GFEM) and a Timeline Feature Extraction Module (TFEM). The GFEM extracts data from the Leap Motion sensor, and the TFEM extracts data from both the Leap Motion sensor and the vocal command timeline. The GFEM calculates gesture feature vectors, which are used in the Gesture Clarifier (GC) and the filtering modules. The TFEM calculates timeline parameters by comparing the vocal command timeline with the gesture timeline. The GC sorts gestures into static and dynamic gestures based on the gesture feature information from the GFEM. Both gesture features and timeline features are then sent to the Unintentional Gesture Filtering Classifier (Static) (UGFCS) and the Unintentional Gesture Filtering Classifier (Dynamic) (UGFCD), which classify static and dynamic gestures, respectively, as unintentional or intentional. The Gesture Identification Module (GIM) uses these classifications to filter out the unintentional gestures and continues the gesture identification process with the intentional ones.

Figure 8.

System overview of the Gesture Filtering Module.
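The module wiring described above can be sketched in Python as follows. This is a minimal, hypothetical sketch: the `GestureFeatures` and `TimelineFeatures` containers, the `filter_gesture` function, the stub classifier, and the 0/1 label encoding are illustrative assumptions, and the two filtering classifiers are passed in as plain callables rather than the trained UGFCS and UGFCD. The feature order follows the parameter lists given for static and dynamic filtering in Section 5.3, and the static/dynamic test mirrors Algorithm 1.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence

UNINTENTIONAL, INTENTIONAL = 0, 1  # hypothetical label encoding


@dataclass
class GestureFeatures:
    """Hypothetical GFEM output for one gesture (speeds as magnitudes)."""
    fingertip_speed: float
    palm_speed: float
    roll: float
    pitch: float
    yaw: float


@dataclass
class TimelineFeatures:
    """Hypothetical TFEM output: parameters from comparing speech and gesture timelines."""
    gesture_speed: float
    gesture_range: float
    timeframe: float
    time_lag: float


Classifier = Callable[[Sequence[float]], int]


def filter_gesture(g: GestureFeatures, t: TimelineFeatures,
                   ugfcs: Classifier, ugfcd: Classifier) -> Optional[str]:
    """Return "static"/"dynamic" if the gesture should reach the GIM, or None if filtered out."""
    if g.fingertip_speed > 0 and g.palm_speed > 0:  # GC: moving hand -> dynamic gesture
        kind = "dynamic"
        label = ugfcd([t.gesture_speed, t.gesture_range, t.timeframe, t.time_lag])
    else:                                           # GC: still hand -> static gesture
        kind = "static"
        label = ugfcs([g.roll, g.pitch, g.yaw, t.timeframe, t.time_lag])
    return kind if label == INTENTIONAL else None


def stub_classifier(v: Sequence[float]) -> int:
    """Hypothetical stand-in rule: a gesture lagging speech by 1 s or more is unintentional."""
    return INTENTIONAL if v[-1] < 1.0 else UNINTENTIONAL


print(filter_gesture(GestureFeatures(12.0, 8.0, 0.0, 0.0, 0.0),
                     TimelineFeatures(0.4, 0.3, 1.2, 0.2),
                     stub_classifier, stub_classifier))
```

Passing the classifiers in as callables keeps the routing logic independent of how the UGFCS and UGFCD are trained.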

5. Gesture Identification and Filtering

5.1. Naive Bayes and Locally Weighted Naive Bayes

Naive Bayes (NB) is a probabilistic classifier and is considered the simplest form of Bayesian network classifier [24]. It solves probability-based problems easily and is widely used in current applications. However, modeling the full dependence structure among attributes does not scale to large, complex real-world problems. NB avoids this by assuming that the attributes (parameters) are conditionally independent given the class, which does not hold in complex problems. Even so, NB can handle high-dimensional data [25], and under the conditional independence assumption it achieves high accuracy and efficiency [26]. However, this assumption is frequently violated in real-world scenarios. To address this, several approaches have been introduced, including attribute weighting, instance weighting, structure extension, attribute selection, and instance selection [27,28,29]. Locally Weighted Naive Bayes (LWNB) uses an instance weighting function: training instances in the neighborhood of the instance whose class is to be predicted are weighted (locally weighted) [30,31]. However, LWNB alone does not consider the relationships that may exist among the attributes. In particular, the parameters considered in this article are not independent of each other, so attribute weighting should also be considered. Although some systems have considered both attribute and instance weighting [31], the problem discussed in this article requires a lazy classification with low computational time that also handles a large amount of data.
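For reference, the NB decision rule and a commonly used form of the locally weighted estimates in LWNB can be written as follows. This is the generic formulation from the LWNB literature, stated for discretized attributes, and the exact variant used in this paper may differ. The symbols are introduced here for illustration: λ_i is the weight of the i-th of the K nearest training instances, c_i and a_{ik} are that instance's class and k-th attribute value, n_k is the number of distinct values of attribute k, and δ(·,·) equals 1 when its arguments are equal and 0 otherwise.

$$\hat{c} = \arg\max_{c \in C} \; P(c) \prod_{k=1}^{m} P(a_k \mid c)$$

$$P(c) = \frac{1 + \sum_{i=1}^{K} \lambda_i \, \delta(c, c_i)}{|C| + \sum_{i=1}^{K} \lambda_i},
\qquad
P(a_k \mid c) = \frac{1 + \sum_{i=1}^{K} \lambda_i \, \delta(a_k, a_{ik}) \, \delta(c, c_i)}{n_k + \sum_{i=1}^{K} \lambda_i \, \delta(c, c_i)}$$

Setting every λ_i = 1 and summing over all training instances instead of the K neighbors recovers ordinary Laplace-smoothed NB, which shows that local weighting changes only how much each training instance contributes to the counts.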

5.2. Static and Dynamic Gesture Clarification

The Gesture Clarifier (GC) uses Algorithm 1 to distinguish dynamic gestures from static gestures. Depending on the result, the GC activates either the UGFCS or the UGFCD. It also sends gestural feature information to the SGFFM and DGFFM. Mean fingertip velocity and palm center velocity are used to sort the gestures into static or dynamic categories.

| Algorithm 1 Clarification algorithm. |
| --- |
| F = mean fingertip velocity |
| Palm center velocity |
| K = frame extracted from the Leap Motion sensor |
| n = number of frames captured from the Leap Motion sensor at 0.25 s intervals |
| Require: F, palm center velocity |
| Ensure: Activation Module |
| for each frame K in n do |
| if F > 0 and palm center velocity > 0 then |
| Activate UGFCD |
| else |
| Activate UGFCS |
| end if |
| end for |
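Algorithm 1 can be expressed as a short runnable function. This is a minimal sketch that assumes each 0.25 s frame has already been reduced to two scalars, the mean fingertip speed and the palm center speed; the function name `clarify_frames` and the optional `threshold` argument are hypothetical additions. With the default `threshold=0.0` it reproduces the strict "> 0" test of Algorithm 1.

```python
from typing import Iterable, List, Tuple


def clarify_frames(frames: Iterable[Tuple[float, float]], threshold: float = 0.0) -> List[str]:
    """For each frame, return which filtering classifier Algorithm 1 would activate.

    Each frame is (mean fingertip speed, palm center speed), sampled every 0.25 s.
    """
    activated = []
    for fingertip_speed, palm_speed in frames:
        if fingertip_speed > threshold and palm_speed > threshold:
            activated.append("UGFCD")  # both moving: dynamic-gesture filter
        else:
            activated.append("UGFCS")  # otherwise: static-gesture filter
    return activated


print(clarify_frames([(12.0, 8.5), (0.0, 3.1), (0.0, 0.0)]))
```

Because raw sensor velocities are rarely exactly zero, a strict "> 0" test will almost always report a dynamic gesture; a small positive threshold is one possible way to tolerate sensor noise, although its value would have to be tuned.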

5.3. Gesture Filtering

Because Naive Bayes (NB) is a probability-based classifier, it can easily be applied to a lazy classification task such as unintentional gesture filtering. Although NB suits a probability-associated problem such as this one, the high dimensionality of the data and the complex nature of the problem call for improvements. As discussed in [32,33], LWNB is the most suitable of the improved classifiers. However, since the attributes/parameters associated with unintentional gestures might not be fully independent, assuming their conditional independence lowers the accuracy, so attribute weighting is required. Moreover, because unintentional gesture filtering is an initial step of the recognition system and must run efficiently and quickly, a lazy classification method should be implemented. Therefore, to separate unintentional gestures from intentional gestures, a modified Locally Weighted Naive Bayes (LWNB) classifier combined with a K-Nearest Neighbor classifier with distance weighting (KNNDW) [34,35] is used [36,37].

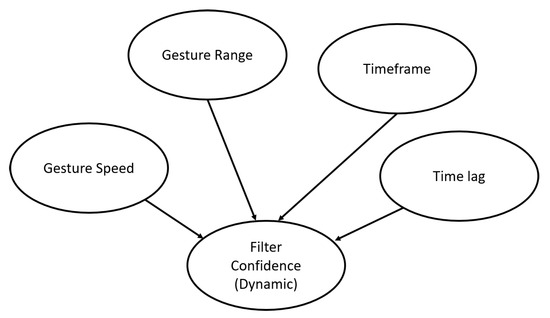

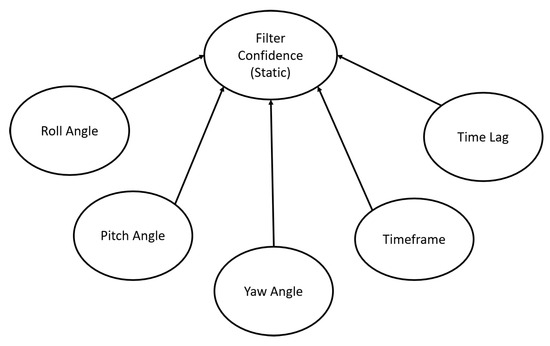

Let us consider m parameters in our input vector of filtering parameters (the corresponding timeline and gesture parameters), so the input is an m-dimensional vector. For dynamic gesture filtering, there are four parameters (m = 4) according to the semantic map in Figure 9, and for static gesture filtering, there are five parameters (m = 5) according to the semantic map in Figure 10.

Figure 9.

Semantic map for unintentional gesture filtering in dynamic gestures.

Figure 10.

Semantic map for unintentional gesture filtering in static gestures.

The input vector V(m) is then

$$V(m) = [a_1, a_2, \ldots, a_m]$$

Here, a1 to am are the roll angle, pitch angle, yaw angle, timeframe, and time lag in static gesture filtering. For dynamic gesture filtering, a1 to am are gesture speed, gesture range, timeframe, and time lag. There are two output classes, unintentional gestures and intentional gestures:

$$C = \{c_1, c_2\}$$

Here, c1 and c2 are the class labels for unintentional gestures and intentional gestures, respectively. If there are n vectors (n samples) in the training data file, then each training vector is

$$V_i(m) = [a_{i1}, a_{i2}, \ldots, a_{im}], \quad i = 1, 2, \ldots, n$$

The distance weighting vector (Wdi) for the input filtering parameters is defined as

$$W_{di}(m) = [w_1, w_2, \ldots, w_m]$$

The distance from the extracted vector to each training vector is then calculated using a Euclidean distance metric as follows:

We then normalize these distances so that they all lie in the range [0, 1], and choose the K training vectors with the shortest distance to the input vector.

Then, the Naive Bayes probability of each class for the given input vector, p(C(j)|x), is calculated as follows:

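Here, j is the index of each class, and the indicator δ(C(j), c(x)) takes the following values:

$$\delta(C(j), c(x)) = \begin{cases} 1, & \text{if } C(j) = c(x) \\ 0, & \text{otherwise} \end{cases}$$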

To improve the result, we then weight the neighbors. The weight of each neighbor is as follows:

This weight is a function of the Euclidean distance of each neighbor and can be any monotonically decreasing function of that distance. The weighting function that gave the best classifier performance is given below.

Using these weights, the Locally Weighted Naive Bayes probability is calculated as follows:

We can then predict the class with the highest probability as follows:

$$\hat{c}(x) = \arg\max_{j \in \{1, 2\}} p(C(j) \mid x)$$
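The steps in this section can be put together in a minimal Python sketch of a locally weighted, attribute-weighted Naive Bayes classifier. Several details are assumptions: the distance is taken as an attribute-weighted Euclidean distance, the neighbor weight is taken as 1 - d (one monotonically decreasing choice), and continuous parameters are discretized into equal-width bins so that Laplace-smoothed frequency estimates can be used. The function names, the label encoding (0 = unintentional c1, 1 = intentional c2), and the toy training data are hypothetical; only the dynamic-gesture weights (0.6, 0.6, 1.0, 1.0) and K = 4 come from Section 6.1.

```python
import numpy as np

UNINTENTIONAL, INTENTIONAL = 0, 1  # hypothetical encoding of c1 and c2


def discretize(X, lo, hi, n_bins):
    """Equal-width binning of each attribute into integers 0..n_bins-1 (assumed preprocessing)."""
    span = np.where(hi > lo, hi - lo, 1.0)  # guard against constant attributes
    bins = np.floor((X - lo) / span * n_bins).astype(int)
    return np.clip(bins, 0, n_bins - 1)


def lwnb_knndw_predict(x, X_train, y_train, attr_weights, k=4, n_bins=3, n_classes=2):
    """Classify one filtering vector x; return (class index, posterior probabilities)."""
    x = np.asarray(x, dtype=float)
    X_train = np.asarray(X_train, dtype=float)
    y_train = np.asarray(y_train)
    attr_weights = np.asarray(attr_weights, dtype=float)

    # Attribute-weighted Euclidean distance to every training vector (assumed form).
    d = np.sqrt((attr_weights * (X_train - x) ** 2).sum(axis=1))
    if d.max() > 0:
        d = d / d.max()  # normalize distances to [0, 1]
    nn = np.argsort(d)[:k]  # indices of the K nearest training vectors
    neighbor_w = 1.0 - d[nn]  # assumed monotonically decreasing neighbor weight

    # Locally weighted, Laplace-smoothed Naive Bayes over the K neighbors.
    lo, hi = X_train.min(axis=0), X_train.max(axis=0)
    xb = discretize(x[None, :], lo, hi, n_bins)[0]
    Xb = discretize(X_train[nn], lo, hi, n_bins)
    scores = np.empty(n_classes)
    for c in range(n_classes):
        in_c = y_train[nn] == c
        w_c = neighbor_w[in_c].sum()
        prior = (1.0 + w_c) / (n_classes + neighbor_w.sum())
        same_bin = (Xb == xb) & in_c[:, None]  # neighbor is in class c and shares x's bin
        cond = (1.0 + (neighbor_w[:, None] * same_bin).sum(axis=0)) / (n_bins + w_c)
        scores[c] = prior * np.prod(cond)
    posterior = scores / scores.sum()
    return int(np.argmax(posterior)), posterior


# Toy dynamic-gesture data (made up): [gesture speed, gesture range, timeframe, time lag].
X_train = [[0.2, 0.1, 0.5, 2.0], [0.3, 0.2, 0.4, 2.5], [0.25, 0.15, 0.6, 2.2],
           [0.8, 0.6, 1.5, 0.2], [0.9, 0.7, 1.4, 0.1], [0.85, 0.65, 1.6, 0.3]]
y_train = [UNINTENTIONAL] * 3 + [INTENTIONAL] * 3
weights = [0.6, 0.6, 1.0, 1.0]  # dynamic-filter weights reported in Section 6.1

label, post = lwnb_knndw_predict([0.82, 0.62, 1.5, 0.2], X_train, y_train, weights, k=4)
print(label, post.round(3))
```

The attribute weights act only on the distance, so they decide which training vectors count as neighbors, while the neighbor weights decide how strongly each of those vectors contributes to the Naive Bayes counts.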

6. Results and Analysis

6.1. Training and Testing of the Filtering System

To create the training pool, we used around 150 samples for each outcome, taken from previous human study data (gestures sorted in the study reported in [7] and in the human study described earlier). We then created a testing pool of 60 unintentional gesture samples and 40 intentional gesture samples collected from participants who were not represented in the training pool. We trained and tested the system using three classifiers: the Naive Bayes classifier, the LWNB classifier, and the improved LWNB classifier. The confusion matrices in Table 2, Table 3, and Table 4 reflect the overall performance of both the static and dynamic unintentional gesture filtering systems.

Table 2.

Confusion matrix for the prediction of unintentional gestures using the Naive Bayes classifier.

Table 3.

Confusion matrix for the prediction of unintentional gestures using the LWNB classifier (K = 4).

Table 4.

Confusion matrix for the prediction of unintentional gestures of the developed intelligent system (improved LWNB classifier with K-NN distance weighting for K = 4).

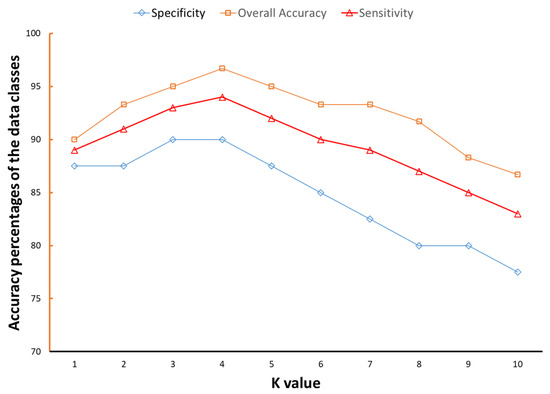

Using the Naive Bayes classifier without K-Nearest Neighbor classification, the accuracy was 78%. Without weighting the input vectors (LWNB classifier), the best overall performance was 88% correct classifications, obtained with K = 4. Using the GridSearchCV method, we found the best weighting vectors to be Wdi(m) = (0.6, 0.6, 1.0, 1.0) for the dynamic gesture filtering classifier and Wdi(m) = (0.5, 0.5, 0.5, 1.0, 1.0) for the static gesture filtering classifier. With this weighting, the accuracy of the model increased to 94% with K = 4, the best overall performance across the K values tested. Figure 11 shows the overall accuracy, sensitivity, and specificity for each K; treating unintentional gesture prediction as the positive class, all three decline when K > 4. These K values held for both static and dynamic gestures.

Figure 11.

Naive Bayes classifier performance with weighting applied for unintentional gesture filtering.
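The weight search described above can be sketched as an exhaustive search over candidate attribute-weight vectors, scoring each by cross-validated accuracy. This minimal NumPy sketch is a stand-in for scikit-learn's GridSearchCV: it uses leave-one-out accuracy and a plain inverse-distance-weighted K-NN classifier rather than the combined LWNB/KNNDW classifier, and the candidate grid (0.5, 0.6, 1.0), the function names, and the synthetic data are assumptions for illustration.

```python
import itertools

import numpy as np


def loo_accuracy(X, y, attr_weights, k=4):
    """Leave-one-out accuracy of an inverse-distance-weighted K-NN with weighted Euclidean distance."""
    correct = 0
    for i in range(len(X)):
        d = np.sqrt((attr_weights * (X - X[i]) ** 2).sum(axis=1))
        d[i] = np.inf  # never let the held-out sample vote for itself
        votes = np.zeros(2)
        for j in np.argsort(d)[:k]:
            votes[y[j]] += 1.0 / (d[j] + 1e-9)
        correct += int(np.argmax(votes) == y[i])
    return correct / len(X)


def grid_search_weights(X, y, grid=(0.5, 0.6, 1.0), k=4):
    """Try every weight vector in grid^m and keep the one with the best LOO accuracy."""
    best_w, best_acc = None, -1.0
    for combo in itertools.product(grid, repeat=X.shape[1]):
        w = np.array(combo)
        acc = loo_accuracy(X, y, w, k)
        if acc > best_acc:
            best_w, best_acc = w, acc
    return best_w, best_acc


# Synthetic data (made up): 40 samples, 4 dynamic-filter parameters, label driven by time lag.
rng = np.random.default_rng(0)
X = rng.normal(size=(40, 4))
y = (X[:, 3] > 0).astype(int)
best_w, best_acc = grid_search_weights(X, y)
print(best_w, best_acc)
```

The search cost grows as (grid size)^m, which stays small here: 81 candidates for the four dynamic parameters and 243 for the five static parameters.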

Table 4 gives the confusion matrix for the proposed classifier, and Tables 3 and 2 give those for the two comparison classifiers: the LWNB classifier without input vector weighting (K = 4) and the Naive Bayes classifier, respectively. Table 5 compares the overall statistics. It shows that the proposed classifier (combined KNNDW and LWNB classifier with K = 4) has the best overall accuracy and, most importantly, the best Cohen’s Kappa value. These statistics, together with the sensitivity of the classifier, indicate that the proposed classifier performs best.
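The overall statistics compared in Table 5 follow the standard definitions, treating unintentional gestures as the positive class. The sketch below computes them from a 2 × 2 confusion matrix; the function name is hypothetical, and the counts in the example are made up and do not correspond to Table 2, 3, or 4. Cohen’s Kappa is a chance-corrected ratio rather than a percentage, so it is usually reported as a decimal such as 0.88.

```python
def binary_metrics(tp, fn, fp, tn):
    """Accuracy, sensitivity, specificity and Cohen's kappa (unintentional = positive class)."""
    total = tp + fn + fp + tn
    accuracy = (tp + tn) / total
    sensitivity = tp / (tp + fn)  # share of unintentional gestures caught
    specificity = tn / (tn + fp)  # share of intentional gestures kept
    # Chance agreement: sum over classes of (actual share) x (predicted share).
    p_e = ((tp + fn) * (tp + fp) + (fp + tn) * (fn + tn)) / total ** 2
    kappa = (accuracy - p_e) / (1 - p_e)
    return accuracy, sensitivity, specificity, kappa


# Made-up counts for a 100-sample test pool (not the values in Tables 2-4).
print(binary_metrics(tp=50, fn=10, fp=10, tn=30))
```

Kappa penalizes agreement that would be expected by chance given the class balance, which is why it can rank classifiers differently from raw accuracy on an imbalanced test pool such as 60 unintentional versus 40 intentional samples.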

Table 5.

Comparison of the overall statistics of the classifier used in the proposed system with those of the other two classifiers.

6.2. Integrating the Gesture Filtering Model with a Hand Gesture-Controlled Wheelchair

The gesture filtering system discussed in this article was implemented on an intelligent wheelchair developed at the Robotics and Automation Laboratory, University of Moratuwa, Sri Lanka. This wheelchair can interact with the user through hand gestures and speech. A Leap Motion sensor is used to detect hand gestures, while a microphone captures the user’s speech. The intelligent systems are powered by an industrial computer installed on the wheelchair. Additionally, a laser sensor is integrated for navigation and localization, along with other sensors needed for navigational assistance, including ultrasonic sensors and bumper/limit switches. Figure 12 shows the intelligent wheelchair platform controlled by speech–hand gesture commands.

Figure 12.

Intelligent wheelchair developed by ISRG, University of Moratuwa, which is used as the research platform.

In that intelligent system, the partial information extracted from hand gestures was used to improve the understanding of vocal navigational commands; details are given in [38]. The unintentional gesture filtering system proposed in this article was integrated with the gesture identification system to increase the overall accuracy of the system, since filtering reduces misclassifications that would otherwise go undetected. Both systems were rated out of 10 by 50 participants (mean age 34.5 years); the integrated system received a higher rating (8.7) than the original system (8.1).

6.3. Validation of the Filtering System

After development, the system was further tested with a different participant group: 50 randomly selected university students who had no involvement with the training/testing data or the project (mean age 23.4 years, standard deviation 2.1 years). In total, 30 gestures with vocal command phrases, randomly selected from the testing pool and already classified by the intelligent gesture filtering system, were given to this group, and the participants were asked to sort them again. From this study, we concluded that 93% of the gestures were classified accurately.

This system was developed from a human–human interaction point of view and therefore considers the parameters humans use to judge someone else’s gestures as unintentional. Hence, the participants could make reasonable judgments about those parameters. In the current system, training and testing were performed using gestures sorted by caretakers and external participants, since a wheelchair robot has to act as a companion to the wheelchair user. To check whether the system, as is, also filters the gestures that wheelchair users themselves consider unintentional, its accuracy was calculated using unintentional gestures sorted from the wheelchair users’ point of view. This accuracy was 84%, so even in that regard the developed system is fairly accurate.

7. Limitations

The accuracy of the system differs slightly depending on whether the gestures are sorted from the caretaker/external person’s point of view or from the wheelchair user’s point of view. This is because day-to-day human–human interactions are not 100% accurate; their interpretation involves a degree of judgment and uncertainty. However, as the accuracies reported above show, this error is not large. It is not addressed in the proposed system, since the purpose was to implement a gesture filtering method that mimics regular human–human interactions. Furthermore, this accuracy difference is expected to decrease when the system is trained with more data points. An adjusted system that considers parameters from the wheelchair user’s point of view can therefore be developed, taking into account user feedback on the human-friendliness of the current system.

8. Conclusions

Natural communication among humans is predominantly multimodal. When an intelligent robot or wheelchair is being developed, it is important to consider this aspect. One of the most common modality pairs in multimodal human–human communication is speech–hand gesture interaction. However, not all the hand gestures that can be identified in this type of interaction are useful. Some hand movements can be misunderstood as useful or intentional hand gestures. Gesture identification systems in the literature use hand features and other parameters associated with the gesture alone. Hence, unnecessary hand movements can also be identified as useful hand gestures, since these systems rely on hand features and gesture features.

Therefore, in this paper, we proposed an intelligent system that filters out these unnecessary or unintentional gestures before the gesture identification process. To achieve this, we considered timeline parameters calculated by comparing the vocal command timeline with the gesture timeline. In addition to these parameters, a few basic hand features, such as palm velocity, fingertip velocity, and palm orientation, were used in the algorithm, and most of these hand features were used to calculate the timeline parameters. Therefore, the system can filter unintentional hand gestures almost irrespective of their hand features. As a result, the misidentifications that occur in other systems that identify hand gestures from hand features can be avoided.

Time lag, gesture range, timeframe, and gesture speed were used as timeline parameters, and palm orientation was used as a hand feature in the filtering algorithm. The system was trained with a combined Locally Weighted Naive Bayes (LWNB) and K-Nearest Neighbor Distance Weighting (KNNDW) classifier and achieved an overall accuracy of 94% and a Cohen’s Kappa of 0.88. We therefore conclude that unintentional gestures can be filtered out with high accuracy, which improves the reliability of the gesture identification process. Failing to filter out these unintentional gestures could lead to severe misidentification of important hand gestures from disabled/elderly users, and for users confined to a wheelchair, this could have grave consequences for safety. For this reason, the system was designed based on wheelchair user data and specifically for intelligent wheelchairs. However, it can be adapted to other service robots as well.

This system is currently designed to filter out unintentional (involuntary) gestures, including useful involuntary gestures such as steepling, which could convey emotional information. As future work, the system will be modified to pass those gestures on to the gesture identification process to enhance the understanding of speech–hand gesture multimodal commands.

Author Contributions

Conceptualization, K.S.P.; methodology, K.S.P. and A.G.B.P.J.; validation, K.S.P., A.G.B.P.J. and R.A.R.C.G.; data curation, K.S.P.; writing—original draft preparation, K.S.P.; writing—review and editing, K.S.P., A.G.B.P.J. and R.A.R.C.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Research Council (grant number 17-069) and the Senate Research Council, University of Moratuwa (grant number SRC/LT/2020/40).

Institutional Review Board Statement

All subjects gave informed consent for inclusion before participating in the study. Ethics approval is not required for this type of study. The study has been granted an exemption by the Ethics Review Committee of the University of Moratuwa, Sri Lanka.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data can be made available upon request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Smith, K.; Watson, A.W.; Lonnie, M.; Peeters, W.M.; Oonincx, D.; Tsoutsoura, N.; Simon-Miquel, G.; Szepe, K.; Cochetel, N.; Pearson, A.G.; et al. Meeting the global protein supply requirements of a growing and ageing population. Eur. J. Nutr. 2024, 63, 1425–1433. [Google Scholar] [CrossRef] [PubMed]

- Ryu, H.Y.; Kwon, J.S.; Lim, J.H.; Kim, A.H.; Baek, S.J.; Kim, J.W. Development of an autonomous driving smart wheelchair for the physically weak. Appl. Sci. 2022, 12, 377. [Google Scholar] [CrossRef]

- Ekanayaka, D.; Cooray, O.; Madhusanka, N.; Ranasinghe, H.; Priyanayana, S.; Buddhika, A.; Jayasekara, P. Elderly supportive intelligent wheelchair. In Proceedings of the 2019 Moratuwa Engineering Research Conference (MERCon), Moratuwa, Sri Lanka, 3–5 July 2019; pp. 627–632. [Google Scholar]

- Priyanayana, K.S.; Jayasekara, A.B.P.; Gopura, R. Evaluation of Interactive Modalities Using Preference Factor Analysis for Wheelchair Users. In Proceedings of the 2021 IEEE 9th Region 10 Humanitarian Technology Conference (R10-HTC), Bengaluru, India, 30 September–2 October 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Gawli, P.; Desale, T.; Deshmukh, T.; Dongare, S.; Gaikwad, V.; Hajare, S. Gesture and Voice Controlled Wheelchair. In Proceedings of the 2025 4th International Conference on Sentiment Analysis and Deep Learning (ICSADL), Janakpur, Nepal, 7 February 2025; pp. 899–903. [Google Scholar]

- Sorokoumov, P.; Rovbo, M.; Moscowsky, A.; Malyshev, A. Robotic wheelchair control system for multimodal interfaces based on a symbolic model of the world. In Smart Electromechanical Systems: Behavioral Decision Making; Springer: Berlin/Heidelberg, Germany, 2021; pp. 163–183. [Google Scholar]

- Priyanayana, K.; Jayasekara, A.B.P.; Gopura, R. Multimodal Behavior Analysis of Human-Robot Navigational Commands. In Proceedings of the 2020 3rd International Conference on Control and Robots (ICCR), Tokyo, Japan, 26–29 December 2020; pp. 79–84. [Google Scholar]

- Qi, J.; Ma, L.; Cui, Z.; Yu, Y. Computer vision-based hand gesture recognition for human-robot interaction: A review. Complex Intell. Syst. 2024, 10, 1581–1606. [Google Scholar] [CrossRef]

- Wang, S.; Zhang, T.; Li, Y.; Li, P.; Wu, H.; Li, K. Continuous Hand Gestures Detection and Recognition in Emergency Human-Robot Interaction Based on the Inertial Measurement Unit. IEEE Trans. Instrum. Meas. 2024, 73, 2526315. [Google Scholar] [CrossRef]

- Filipowska, A.; Filipowski, W.; Raif, P.; Pieniążek, M.; Bodak, J.; Ferst, P.; Pilarski, K.; Sieciński, S.; Doniec, R.J.; Mieszczanin, J.; et al. Machine Learning-Based Gesture Recognition Glove: Design and Implementation. Sensors 2024, 24, 6157. [Google Scholar] [CrossRef]

- Abavisani, M.; Joze, H.R.V.; Patel, V.M. Improving the performance of unimodal dynamic hand-gesture recognition with multimodal training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1165–1174.

- Han, H.; Yoon, S.W. Gyroscope-based continuous human hand gesture recognition for multi-modal wearable input device for human machine interaction. Sensors 2019, 19, 2562.

- Georgiou, O.; Biscione, V.; Harwood, A.; Griffiths, D.; Giordano, M.; Long, B.; Carter, T. Haptic In-Vehicle Gesture Controls. In Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications Adjunct, AutomotiveUI ’17, Oldenburg, Germany, 24–27 September 2017; pp. 233–238.

- Su, H.; Ovur, S.E.; Zhou, X.; Qi, W.; Ferrigno, G.; De Momi, E. Depth vision guided hand gesture recognition using electromyographic signals. Adv. Robot. 2020, 34, 985–997.

- Islam, S.; Matin, A.; Kibria, H.B. Hand Gesture Recognition Based Human Computer Interaction to Control Multiple Applications. In Proceedings of the 4th International Conference on Intelligent Computing & Optimization, Hua Hin, Thailand, 30–31 December 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 397–406.

- Huu, P.N.; Phung Ngoc, T. Hand gesture recognition algorithm using SVM and HOG model for control of robotic system. J. Robot. 2021, 2021, 3986497.

- Hoang, V.T. HGM-4: A new multi-cameras dataset for hand gesture recognition. Data Brief 2020, 30, 105676.

- Pinto, R.F., Jr.; Borges, C.D.; Almeida, A.M.; Paula, I.C., Jr. Static hand gesture recognition based on convolutional neural networks. J. Electr. Comput. Eng. 2019, 2019, 4167890.

- Wu, Y.; Wu, Z.; Fu, C. Continuous arm gesture recognition based on natural features and logistic regression. IEEE Sens. J. 2018, 18, 8143–8153.

- Qi, J.; Jiang, G.; Li, G.; Sun, Y.; Tao, B. Surface EMG hand gesture recognition system based on PCA and GRNN. Neural Comput. Appl. 2020, 32, 6343–6351.

- Chen, L.; Fu, J.; Wu, Y.; Li, H.; Zheng, B. Hand gesture recognition using compact CNN via surface electromyography signals. Sensors 2020, 20, 672.

- Bandara, H.R.T.; Priyanayana, K.; Jayasekara, A.B.P.; Chandima, D.; Gopura, R. An intelligent gesture classification model for domestic wheelchair navigation with gesture variance compensation. Appl. Bionics Biomech. 2020, 2020, 9160528.

- Mansur, V.; Reddy, S.; Sujatha, R. Deploying Complementary filter to avert gimbal lock in drones using Quaternion angles. In Proceedings of the 2020 IEEE International Conference on Computing, Power and Communication Technologies (GUCON), Greater Noida, India, 2–4 October 2020; pp. 751–756.

- Friedman, N.; Geiger, D.; Goldszmidt, M. Bayesian network classifiers. Mach. Learn. 1997, 29, 131–163.

- Zaidi, N.A.; Petitjean, F.; Webb, G.I. Preconditioning an artificial neural network using naive bayes. In Proceedings of the 20th Pacific-Asia Conference on Knowledge Discovery and Data Mining, Auckland, New Zealand, 19–22 April 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 341–353.

- Webb, G.I.; Boughton, J.R.; Zheng, F.; Ting, K.M.; Salem, H. Learning by extrapolation from marginal to full-multivariate probability distributions: Decreasingly naive Bayesian classification. Mach. Learn. 2012, 86, 233–272.

- Wu, J.; Pan, S.; Zhu, X.; Cai, Z.; Zhang, P.; Zhang, C. Self-adaptive attribute weighting for Naive Bayes classification. Expert Syst. Appl. 2015, 42, 1487–1502.

- Berend, D.; Kontorovich, A. A finite sample analysis of the Naive Bayes classifier. J. Mach. Learn. Res. 2015, 16, 1519–1545.

- Bermejo, P.; Gámez, J.A.; Puerta, J.M. Speeding up incremental wrapper feature subset selection with Naive Bayes classifier. Knowl.-Based Syst. 2014, 55, 140–147.

- Jiang, L.; Cai, Z.; Zhang, H.; Wang, D. Naive Bayes text classifiers: A locally weighted learning approach. J. Exp. Theor. Artif. Intell. 2013, 25, 273–286.

- Zhang, H.; Jiang, L.; Yu, L. Attribute and instance weighted naive Bayes. Pattern Recognit. 2021, 111, 107674.

- Zheng, Z.; Cai, Y.; Yang, Y.; Li, Y. Sparse weighted naive bayes classifier for efficient classification of categorical data. In Proceedings of the 2018 IEEE Third International Conference on Data Science in Cyberspace (DSC), Guangzhou, China, 18–21 June 2018; pp. 691–696.

- Granik, M.; Mesyura, V. Fake news detection using naive Bayes classifier. In Proceedings of the 2017 IEEE First Ukraine Conference on Electrical and Computer Engineering (UKRCON), Kyiv, Ukraine, 29 May–2 June 2017; pp. 900–903.

- Li, Q.; Li, W.; Zhang, J.; Xu, Z. An improved k-nearest neighbour method to diagnose breast cancer. Analyst 2018, 143, 2807–2811.

- Mateos-García, D.; García-Gutiérrez, J.; Riquelme-Santos, J.C. On the evolutionary weighting of neighbours and features in the k-nearest neighbour rule. Neurocomputing 2019, 326, 54–60.

- Dey, L.; Chakraborty, S.; Biswas, A.; Bose, B.; Tiwari, S. Sentiment analysis of review datasets using naive bayes and k-nn classifier. arXiv 2016, arXiv:1610.09982.

- Marianingsih, S.; Utaminingrum, F.; Bachtiar, F.A. Road surface types classification using combination of K-nearest neighbor and naïve bayes based on GLCM. Int. J. Adv. Soft Comput. Its Appl. 2019, 11, 15–27.

- Priyanayana, K.; Jayasekara, A.B.P.; Gopura, R. Enhancing the Understanding of Distance Related Uncertainties of Vocal Navigational Commands Using Fusion of Hand Gesture Information. In Proceedings of the TENCON 2023—2023 IEEE Region 10 Conference (TENCON), Chiang Mai, Thailand, 31 October–3 November 2023; pp. 420–425.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).