Radar Perception of Multi-Object Collision Risk Neural Domains during Autonomous Driving

Abstract

1. Introduction

1.1. State of Knowledge

1.2. Study Objectives

- Synthesis of a radar perception algorithm that maps the neural domains of autonomous objects, which characterize their collision risk;

- Assessment of the degree of radar perception, using a simulation of multi-object autonomous driving as an example.

1.3. Article Content

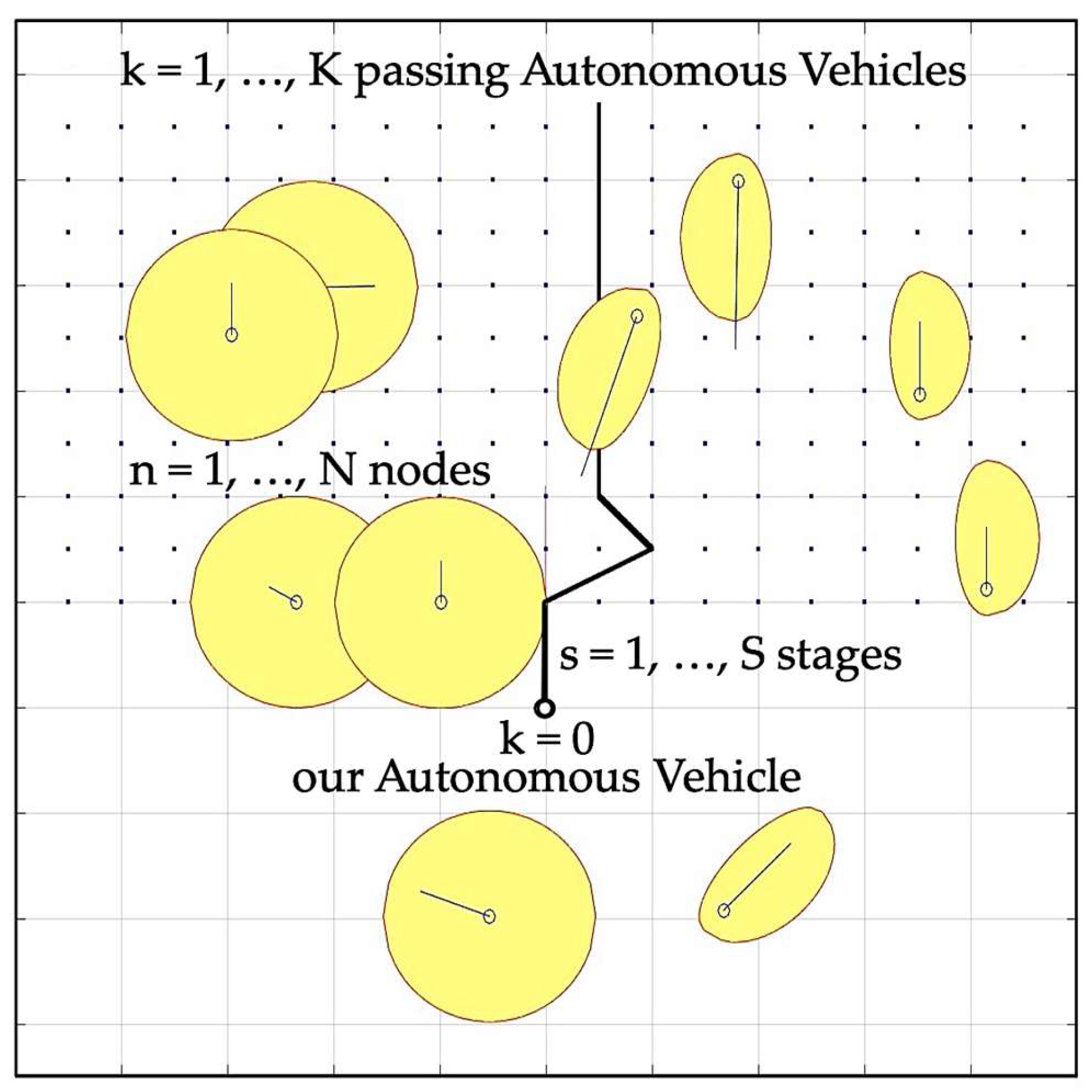

2. Autonomous Driving of Multiple Objects

3. Radar Perception of Collision Risk

- “Safe” where rk = 0;

- “Attention” where rk = 0.3;

- “Possible Risk” where rk = 0.5;

- “Dangerous” where rk = 0.7;

- “Collision” where rk = 1.
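The five risk levels can be held in a small lookup table. The Python sketch below assumes that a continuous risk estimate in [0, 1] is assigned to the nearest listed level. This nearest-level rule and the names `RISK_LEVELS` and `risk_level` are illustrative assumptions, not taken from the article, which only lists the discrete values.

```python
# Discrete collision risk levels r_k as listed above: (value, name)
RISK_LEVELS = (
    (0.0, "Safe"),
    (0.3, "Attention"),
    (0.5, "Possible Risk"),
    (0.7, "Dangerous"),
    (1.0, "Collision"),
)


def risk_level(r: float) -> str:
    """Return the name of the listed level whose r_k is closest to r.

    ASSUMPTION: nearest-level assignment, with ties going to the lower
    level (min() keeps the first minimal entry in table order).
    """
    if not 0.0 <= r <= 1.0:
        raise ValueError("risk value must lie in [0, 1]")
    _, name = min(RISK_LEVELS, key=lambda level: abs(level[0] - r))
    return name


if __name__ == "__main__":
    for r in (0.05, 0.32, 0.62, 0.95):
        print(f"r = {r:.2f} -> {risk_level(r)}")
```

A threshold rule (for example, "Dangerous" for every value from 0.7 up to but excluding 1) would be an equally plausible reading; the lookup table makes either rule easy to swap in.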

4. Control Algorithm

| Algorithm 1: Autonomous driving with neural domains of collision risk |
|---|
| BEGIN |
| 1. Radar Perception Data: xk, yk, vk, αk |
| 2. Stage: s:=1 |
| 3. Autonomous Vehicle: k:=1 |
| 4. Creating collision risk neural domain with the k-th Autonomous Vehicle |
| IF not k=K THEN (k:= k+1 and GOTO 4) |
| ELSE Node: n:=1 |
| 5. Dynamic programming of our Autonomous Vehicle safe path |
| IF not n=N THEN (n:= n+1 and GOTO 5) |
| ELSE s:= S |
| IF not s=S THEN (s:= s+1 and GOTO 3) |
| ELSE Determining optimal path of our Autonomous Vehicle in relation to the K passing Autonomous Vehicles |
| END |
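Read as structured code, the IF/GOTO steps of Algorithm 1 form nested loops over stages s, vehicles k and nodes n. The following Python sketch shows only that control flow. It assumes that the stage test repeats steps 3 to 5 for every stage s = 1, ..., S, and the callables `create_domain`, `dp_update` and `optimal_path` are hypothetical placeholders for the article's domain construction, dynamic programming update and final path selection.

```python
from typing import Callable, List, Sequence, Tuple

# (x_k, y_k, v_k, alpha_k) as delivered by the radar in step 1
Observation = Tuple[float, float, float, float]


def run_algorithm1(
    data: Sequence[Observation],
    S: int,
    N: int,
    create_domain: Callable[[int, int, Observation], object],
    dp_update: Callable[[int, int, List[object]], None],
    optimal_path: Callable[[], List[int]],
) -> List[int]:
    """Control flow of Algorithm 1 with the GOTO steps written as loops.

    data[0] is our vehicle (k = 0); data[1..K] are the other vehicles.
    The three callables are hypothetical stand-ins for the real steps.
    """
    K = len(data) - 1
    for s in range(1, S + 1):                    # step 2 and the stage test (GOTO 3)
        domains = [create_domain(s, k, data[k])  # steps 3-4: one domain per vehicle
                   for k in range(1, K + 1)]
        for n in range(1, N + 1):                # step 5: nodes n = 1..N (GOTO 5)
            dp_update(s, n, domains)
    return optimal_path()                        # final ELSE branch
```

Rebuilding the domains inside the stage loop mirrors the jump back to step 3, which lets the domains follow the other vehicles as they move from stage to stage.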

- Creating the collision risk neural domains of our Autonomous Vehicle (k = 0) with each of the K other Autonomous Vehicles;

- Dynamic programming of the safe path of our Autonomous Vehicle (k = 0);

- Determining the optimal path of our Autonomous Vehicle (k = 0) in relation to all K passing objects.
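The dynamic programming stage can be illustrated with a standard multistage shortest-path recursion over a stage-node grid. In this minimal sketch, which is an assumption rather than the article's formulation, stages s = 0, ..., S are spaced `dy` apart along the direction of travel, nodes n = 0, ..., N − 1 are spaced `dx` apart laterally, and the grid cells listed in `forbidden` stand in for the collision risk domains. The vehicle may shift by at most one node per stage, and each step costs its Euclidean length, which is proportional to travel time at constant speed. The function name `dp_safe_path` is hypothetical.

```python
import math
from typing import List, Optional, Set, Tuple


def dp_safe_path(
    S: int,
    N: int,
    dy: float,
    dx: float,
    forbidden: Set[Tuple[int, int]],
    start: int,
    goal: Optional[int] = None,
) -> Tuple[float, List[int]]:
    """Forward multistage dynamic programming on a stage-node grid.

    Returns (cost, nodes), where nodes[s] is the lateral node at stage s,
    or (inf, []) if every route crosses a forbidden cell.
    """
    INF = math.inf
    cost = [[INF] * N for _ in range(S + 1)]  # best cost to reach (s, n)
    prev = [[-1] * N for _ in range(S + 1)]   # predecessor node at stage s - 1
    if (0, start) in forbidden:
        return INF, []
    cost[0][start] = 0.0
    for s in range(S):
        for n in range(N):
            if cost[s][n] == INF:
                continue
            for m in (n - 1, n, n + 1):       # keep lane, or shift one node
                if 0 <= m < N and (s + 1, m) not in forbidden:
                    c = cost[s][n] + math.hypot(dy, (m - n) * dx)
                    if c < cost[s + 1][m]:
                        cost[s + 1][m] = c
                        prev[s + 1][m] = n
    candidates = range(N) if goal is None else [goal]
    end = min(candidates, key=lambda m: cost[S][m])
    if cost[S][end] == INF:
        return INF, []
    nodes = [end]
    for s in range(S, 0, -1):                 # backtrack to stage 0
        nodes.append(prev[s][nodes[-1]])
    nodes.reverse()
    return cost[S][end], nodes


if __name__ == "__main__":
    # Hypothetical scenario: one risk domain blocks the middle lane at stage 2.
    total, nodes = dp_safe_path(S=4, N=3, dy=10.0, dx=5.0,
                                forbidden={(2, 1)}, start=1, goal=1)
    print(f"cost = {total:.2f} m, nodes = {nodes}")
```

In the example scenario the returned route avoids the blocked cell at stage 2, with cost 2·10 + 2·√(10² + 5²) ≈ 42.36 m; several routes tie at that cost, so the exact node sequence depends on the tie-breaking order.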

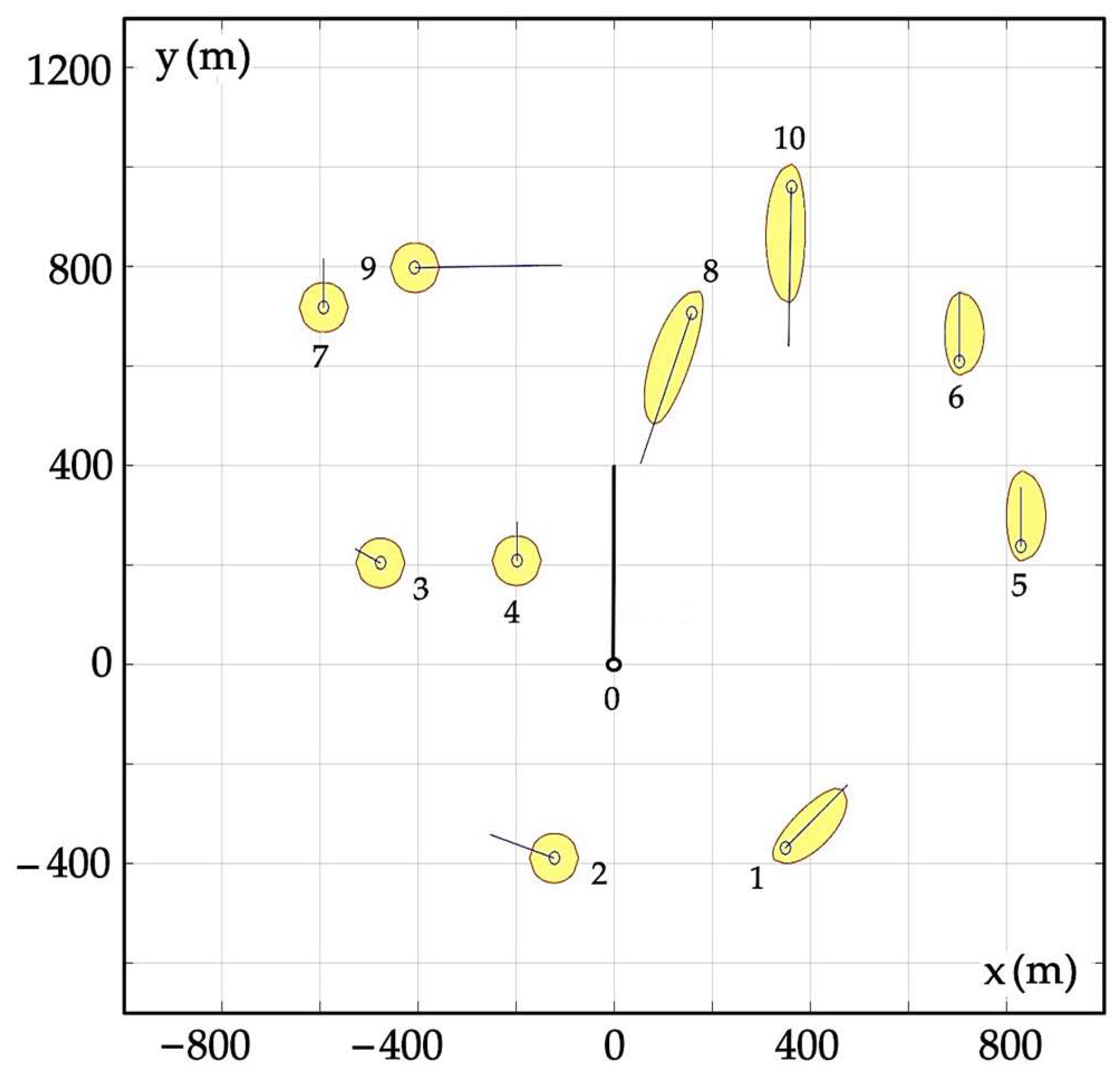

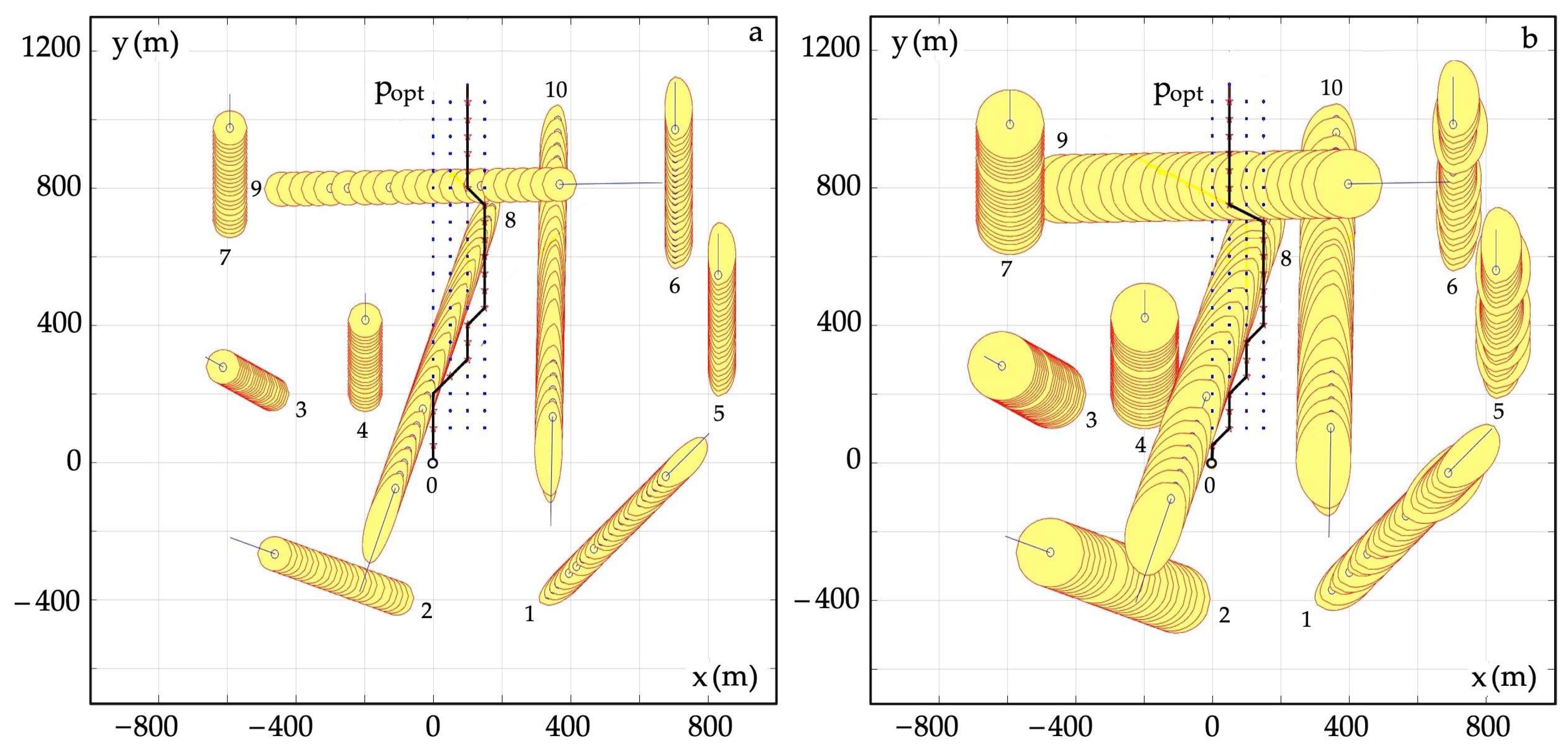

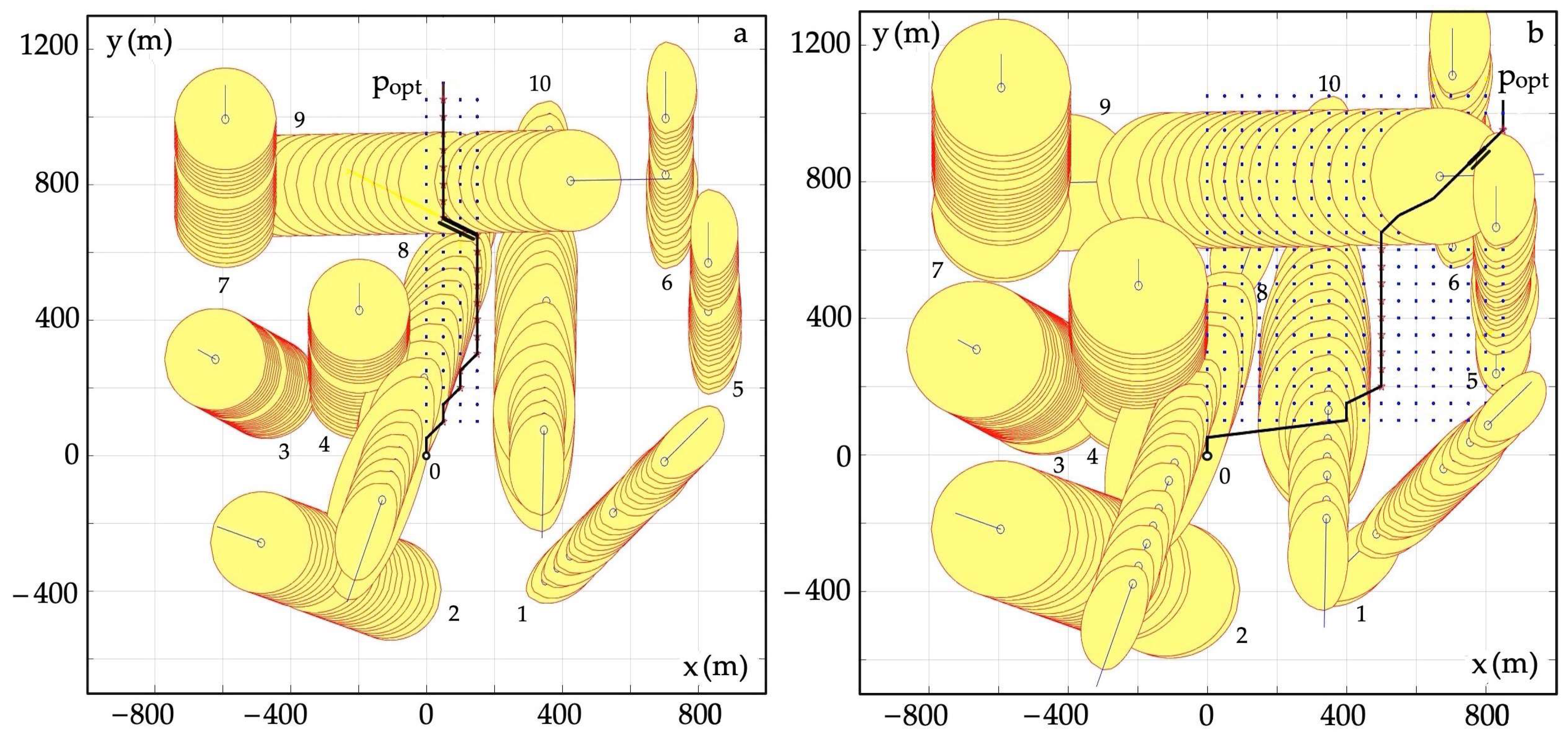

5. Computer Simulation

6. Conclusions

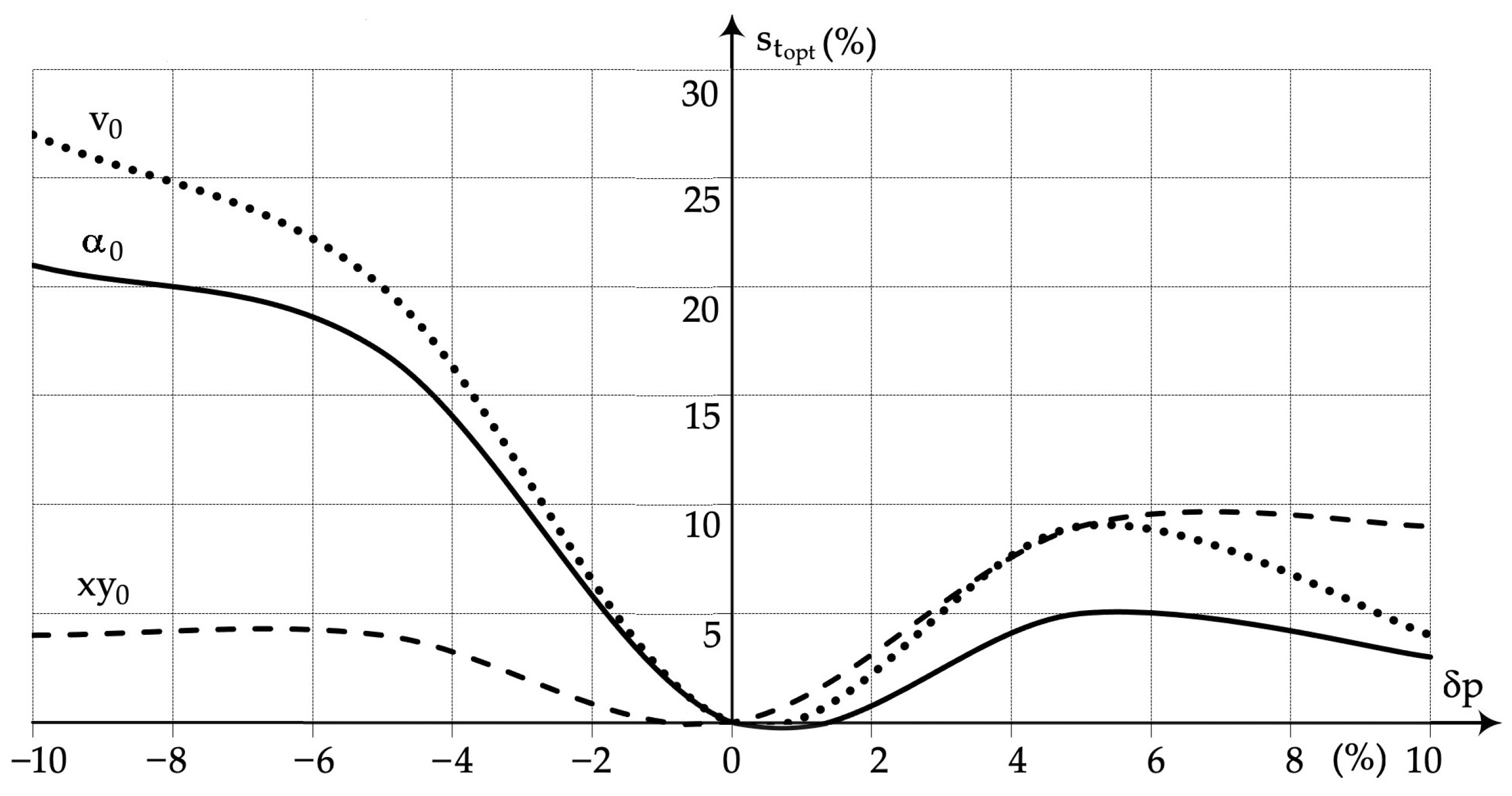

- The greatest changes in sensitivity, from 4% to 27%, occur for errors in the perception of the speed of an autonomous object ranging from −10% to +10%;

- Large changes in sensitivity, from 3% to 22%, occur for errors in the perception of the heading of an autonomous object ranging from −10% to +10%;

- Object position measurement errors from −10% to +10% cause the smallest changes in sensitivity, ranging from 4% to 9%;

- The increase in the sensitivity of time-optimal autonomous driving was caused by taking into account the risk of collisions from passing autonomous vehicles, which were assigned neural domains of prohibited maneuvering areas of variable size;

- An advantage of the presented method for determining the optimal safe autonomous driving path is its low sensitivity to inaccuracies in the input data from the radar device;

- A limitation of the method is its reaction to changes in the direction and speed of passing vehicles that occur during the safe path calculations.

- Taking into account the properties of the autonomous driving process in situations where other autonomous vehicles do not respect the right of way;

- Application of a cooperative and non-cooperative positional game model that takes into account areas of prohibited maneuvers resulting from the obligation to maintain a safe distance when passing autonomous objects;

- Use of cooperative and non-cooperative collision risk matrix game models;

- Optimization of the safe autonomous driving process based on a selected swarm intelligence model, for example the ant colony algorithm;

- Simulation of more complex autonomous driving situations, taking into account both a larger number of objects and various real scenarios of parallel two-way and one-way driving, with an intersection and a roundabout.
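The sensitivity figures reported in the conclusions compare a performance index computed from perturbed radar inputs with its nominal value. A minimal Python sketch of that comparison follows. The index `toy_travel_time` (time to cover a fixed distance d at speed v) is a hypothetical stand-in for the article's time-optimal path index, and the measure used here, |J(perturbed) − J(nominal)| / |J(nominal)| expressed in percent, is an assumption about how sensitivity is computed.

```python
from typing import Callable, Dict, List, Sequence

Inputs = Dict[str, float]


def sensitivity_percent(
    J: Callable[[Inputs], float],
    nominal: Inputs,
    key: str,
    errors: Sequence[float],
) -> List[float]:
    """Relative change of index J, in %, when input `key` is scaled by (1 + e)."""
    j0 = J(nominal)
    result = []
    for e in errors:
        perturbed = dict(nominal)             # copy so the nominal data stay intact
        perturbed[key] = nominal[key] * (1.0 + e)
        result.append(100.0 * abs(J(perturbed) - j0) / abs(j0))
    return result


def toy_travel_time(p: Inputs) -> float:
    # HYPOTHETICAL stand-in index: time to cover distance d at speed v
    return p["d"] / p["v"]


if __name__ == "__main__":
    nominal = {"d": 1000.0, "v": 10.8}
    errors = (-0.1, 0.1)
    for e, s in zip(errors, sensitivity_percent(toy_travel_time, nominal, "v", errors)):
        print(f"speed error {e:+.0%}: index change {s:.2f}%")
```

For this toy index a −10% speed error changes J by about 11.1% and a +10% error by about 9.1%; replacing `toy_travel_time` with the full path computation would yield figures comparable to those listed above.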

Funding

Data Availability Statement

Conflicts of Interest

References


| Object k | Coordinate xk (m) | Coordinate yk (m) | Speed vk (m/s) | Course αk (deg) |
|---|---|---|---|---|
| 0 | 0 | 0 | 10.8 | 0 |
| 1 | 340 | 10 | 4.5 | 45 |
| 2 | 100 | 400 | 3.5 | 290 |
| 3 | 430 | 200 | 1.5 | 299 |
| 4 | 200 | 200 | 2.0 | 0 |
| 5 | 820 | 210 | 3.0 | 89 |
| 6 | 710 | 600 | 3.5 | 199 |
| 7 | 600 | 700 | 2.5 | 0 |
| 8 | 160 | 760 | 8.0 | 0 |
| 9 | 450 | 800 | 7.5 | 0 |
| 10 | 360 | 1000 | 8.0 | 181 |
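As an illustration of how the initial radar data in the table can be turned into a simple collision risk indicator, the sketch below computes the classical closest-point-of-approach quantities, DCPA (distance at closest approach) and TCPA (time to closest approach), of each object relative to our vehicle k = 0. This conventional kinematic measure is substituted for illustration and is not the neural domain method itself. It assumes that the course αk is measured clockwise from the +y axis and that all objects keep a constant speed and course; the names `OBJECTS`, `velocity` and `cpa` are hypothetical.

```python
import math
from typing import Dict, Tuple

State = Tuple[float, float, float, float]  # (x_k [m], y_k [m], v_k [m/s], alpha_k [deg])

# Initial radar data copied from the table above
OBJECTS: Dict[int, State] = {
    0: (0.0, 0.0, 10.8, 0.0),
    1: (340.0, 10.0, 4.5, 45.0),
    2: (100.0, 400.0, 3.5, 290.0),
    3: (430.0, 200.0, 1.5, 299.0),
    4: (200.0, 200.0, 2.0, 0.0),
    5: (820.0, 210.0, 3.0, 89.0),
    6: (710.0, 600.0, 3.5, 199.0),
    7: (600.0, 700.0, 2.5, 0.0),
    8: (160.0, 760.0, 8.0, 0.0),
    9: (450.0, 800.0, 7.5, 0.0),
    10: (360.0, 1000.0, 8.0, 181.0),
}


def velocity(v: float, alpha_deg: float) -> Tuple[float, float]:
    """Velocity components (vx, vy).

    ASSUMPTION: course measured clockwise from the +y axis,
    so 0 deg points along +y and 90 deg along +x.
    """
    a = math.radians(alpha_deg)
    return v * math.sin(a), v * math.cos(a)


def cpa(own: State, other: State) -> Tuple[float, float]:
    """Return (DCPA [m], TCPA [s]) of `other` relative to `own`.

    A negative TCPA means the closest approach already lies in the past.
    """
    x0, y0, v0, a0 = own
    xk, yk, vk, ak = other
    px, py = xk - x0, yk - y0                 # relative position
    v0x, v0y = velocity(v0, a0)
    vkx, vky = velocity(vk, ak)
    wx, wy = vkx - v0x, vky - v0y             # relative velocity
    w2 = wx * wx + wy * wy
    tcpa = 0.0 if w2 == 0.0 else -(px * wx + py * wy) / w2
    dcpa = math.hypot(px + wx * tcpa, py + wy * tcpa)
    return dcpa, tcpa


if __name__ == "__main__":
    for k in range(1, len(OBJECTS)):
        d, t = cpa(OBJECTS[0], OBJECTS[k])
        print(f"k = {k:2d}: DCPA = {d:7.1f} m, TCPA = {t:7.1f} s")
```

Under these assumptions, object 4, which moves parallel to our vehicle at 2.0 m/s, gives DCPA = 200 m at TCPA ≈ 22.7 s.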

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lisowski, J. Radar Perception of Multi-Object Collision Risk Neural Domains during Autonomous Driving. Electronics 2024, 13, 1065. https://doi.org/10.3390/electronics13061065
