Abstract

Underwater object detection is an important task in marine exploration. Existing autonomous underwater vehicle (AUV) designs typically lack an integrated object detection module and are constrained by the limited communication available in underwater environments. As a result, an AUV tasked with an object detection mission would need to transmit its underwater sensing data to a shore-based station in real time, but is unable to do so. The task is therefore divided into two discontinuous phases, AUV acquisition of underwater data and shore-based object detection, which limits the autonomy and intelligence of the AUV. In this paper, we propose a novel autonomous online underwater object detection system for AUV based on side-scan sonar (SSS). The system encompasses both hardware and software components and enables an AUV to acquire data and detect underwater objects simultaneously, thereby providing guidance for coherent AUV underwater operations. First, this paper outlines the hardware design and layout of a portable integrated AUV for reconnaissance and strike missions, in which online object detection is achieved by integrating an acoustic processing computer. Next, a modular software architecture and a multi-threaded parallel software workflow are developed and the YOLOv7 detection model is integrated, addressing three key technological challenges: real-time data processing, autonomous object detection, and intelligent online detection. Finally, lake experiments show that the system meets the autonomy and real-time requirements of predefined object detection on an AUV with an average positioning error of less than 5 m, which verifies the feasibility and effectiveness of the system and provides a new solution for underwater object detection by AUV.

1. Introduction

The AUV is an irreplaceable high-tech tool in humanity’s quest to understand, explore, and exploit the ocean. Characterized by high autonomy, extensive detection ranges, strong concealment, and agile maneuverability, AUVs have found widespread application in fields such as marine environmental monitoring, oceanic resource surveys, and maritime security defense [1,2,3]. Underwater object detection is an important task for AUVs [1,2] and mainly comprises underwater data acquisition and the detection of objects in the acquired data.

Underwater data acquisition predominantly depends on advanced detection devices to acquire image data embedded with underwater object information. In the realm of AUV-based underwater object detection, two primary methods emerge: optical detection [4,5,6] and acoustic detection [7,8,9]. Acoustic detection, characterized by its extensive range and high adaptability [10,11], has become the dominant approach in this field. Within acoustic methods, SSS distinguishes itself from other acoustic equipment due to its simpler array structure, superior two-dimensional imaging resolution, compact size, and affordability [12,13], thus establishing itself as a vital tool and technique in underwater exploration. The imaging process of SSS is fundamentally grounded in the processing of echo signal data. By vertically arranging the echo data from each transmission cycle, a two-dimensional acoustic image of the seabed morphology is generated, enabling a detailed point-by-point mapping of the seabed surface. The intensity of these echo signals is directly converted into the grayscale values of the pixels in the acoustic image.

Underwater object detection involves the identification, classification, and localization of such objects, and typically encompasses steps such as feature extraction and object classification [14]. Conventional sonar image-based underwater object detection methods can be broadly divided into three categories, based on mathematical statistics, mathematical morphology, and pixel analysis [10]. However, these methods often require expert supervision to ensure effective feature selection and suffer from poor robustness and low recognition rates. Recently, with the rapid advancement of deep learning, deep convolutional neural networks have been extensively applied in object detection algorithms, demonstrating significant potential in underwater sonar image object detection [15,16,17,18,19]. Single-stage detection algorithms, which comprise only one object detection process, are characterized by their simple structure and high computational efficiency. They allow convenient end-to-end training and have become an important trend in real-time object detection [20].

In underwater environments, communication for AUV is restricted, preventing the real-time transmission of comprehensive detection data [21]. Furthermore, AUV designs typically do not include integrated target detection modules. As a result, an AUV must surface or be recovered to transmit data to a shore-based control center, where the data are processed and analyzed by professionals, often through manual interpretation of sonar images. This method is characterized by low efficiency, lengthy processing times, and a strong dependence on subjective judgment, and it increases the exposure risk of the AUV during reconnaissance missions in hazardous areas. In response to these challenges, the research community is increasingly focusing on online detection systems that coherently integrate underwater information collection with object detection and are capable of immediate processing and detection, with the aim of advancing the autonomous and intelligent operation of AUV. Luo Y. et al. [22] systematically designed a deep-learning-based object detection approach for sonar images. Yan S. et al. [23] introduced a method combining SSS with GPUs and a self-cascading convolutional neural network for autonomous detection of underwater objects by AUV. Tang Y.L. et al. [8] developed an AUV-based side-scan sonar method for real-time underwater target detection. Nevertheless, none of these three studies carried out a comprehensive hardware and software design for the object detection system.

To overcome the above problems, this paper proposes an SSS-based AUV autonomous online object detection system. Specifically, we introduce both the hardware design and layout and the software and process design. The system is developed on the foundation of a portable, integrated reconnaissance and strike AUV, starting with hardware selection and layout. It then combines autonomous online detection of underwater objects with the design of onboard mission payloads. This paper focuses on three key issues: real-time processing of SSS detection data, autonomous detection of underwater objects, and intelligent online detection. The objective is to achieve autonomous detection, recognition, and localization of underwater objects by AUV. Under weak underwater communication conditions, this ensures that tasks such as sonar detection, data processing, object detection, and online interaction maintain a degree of real-time capability, allowing the AUV to balance extensive area exploration with coherent operational abilities. In lake experiments, the system successfully demonstrated autonomous online detection of underwater objects by an AUV, achieving a positioning error of less than 5 m on the basis of navigation accuracy and fulfilling the engineering requirements of cruise detection. This research can provide a reference for the implementation methods and detection processes in underwater acoustic object detection for marine exploration equipment. The main contributions of this paper include three aspects.

- (1) We design an SSS-based AUV autonomous online detection system, which includes the hardware design and layout, software, and process design of the system;

- (2) We adopt the YOLO network for SSS images and propose an autonomous online underwater object detection method for AUV;

- (3) We conduct extensive experiments to verify the effectiveness of the proposed system.

The remainder of this paper is organized as follows. Section 2 introduces the AUV real-time underwater object detection system based on SSS and elaborates the hardware design and layout, software architecture, and process design of the system. Section 3 verifies the proposed method by experiments. Section 4 discusses the advantages and limitations of the system and draws some conclusions.

2. Materials and Methods

The focus of this study is the autonomous online detection of underwater objects by AUV, emphasizing both autonomy and real-time capability during underwater operations. Autonomy here refers to the AUV’s inherent ability for self-directed perception of underwater objects, encompassing autonomous detection, identification, and localization. The real-time aspect requires near-instantaneous data exchange among the autonomous task modules within the AUV to support online object detection. Autonomous perception requires the AUV not only to sense underwater objects but also to process and interpret the detected data and generate high-resolution images from them. The system must then classify and localize the objects in the acquired images. Online object detection involves establishing an integrated communication interface among the AUV’s internal task modules for efficient data parsing and distribution, ensuring a seamless connection between upstream and downstream software and thus forming a comprehensive end-to-end processing workflow. In specific scenarios, real-time communication between the AUV and a shore-based control station is also essential for higher-level command and control. In light of these requirements, this paper introduces an autonomous online detection system for underwater objects using SSS on an AUV. From an engineering implementation perspective, the paper first details the design, selection, and arrangement of mission payloads on the AUV, and then describes the system software and processes tailored to the autonomy and real-time requirements of underwater object detection. Finally, it presents an intelligent detection algorithm based on deep learning, aimed at enhancing the system’s intelligence and detection efficiency.

2.1. Hardware Design of Underwater Object Autonomous On-Line Detection System

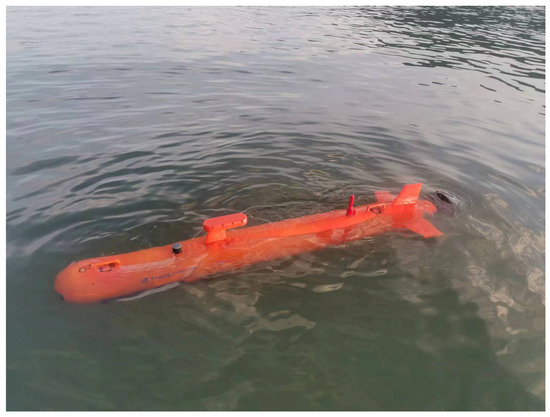

The autonomous online detection system for underwater objects is deployed using a portable integrated reconnaissance and combat AUV, as shown in Figure 1. The AUV has a length of 2.3 m, a main body weight of 80 kg, and can operate at a maximum depth of 100 m.

Figure 1.

Portable AUV.

The primary payload of this system is a side-scan sonar. The side-scan sonar consists of transducers and a processor. The transducer of the side-scan sonar adopts a dual-row uniform linear array structure, with each horizontal array measuring 430 mm in length. It operates at a frequency of 900 kHz, featuring a horizontal beam width of 0.2°, and a vertical beam width of 50°. Utilizing adaptive waveform adjustment technology, it achieves a constant slant range resolution of 0.26 m × 0.01 m at a distance of 75 m. The side-scan sonar processor, an embedded platform, provides interfaces such as Ethernet and RS232, enabling the configuration of multi-module side-scan sonar systems and the collection of multi-sensor data.
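The quoted along-track resolution can be cross-checked against the beam width: for a narrow beam, the footprint is approximately the range multiplied by the beam width in radians. The short sketch below assumes this small-angle approximation; the function name is illustrative and not part of the system software.

```python
import math

def along_track_footprint(slant_range_m: float, beam_width_deg: float) -> float:
    """Approximate along-track footprint (m) of a narrow beam at a given range."""
    return slant_range_m * math.radians(beam_width_deg)

# 0.2 degree horizontal beam width at a slant range of 75 m
footprint = along_track_footprint(75.0, 0.2)
print(f"{footprint:.2f} m")  # 0.26 m
```

The result agrees with the 0.26 m figure quoted for a range of 75 m.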

To ensure the feasibility and real-time capability of online object detection, a dedicated computer, equipped specifically for sonar data processing and object detection, is employed. This computer utilizes the MIO-5272 model (Advantech Co, Ltd., Taipei City, Taiwan), runs on the Windows 10 operating system, and is equipped with an Intel Core i7 processor @2.6 GHz (Intel Corporation, Santa Clara, CA, USA) and 16 GB of memory.

The integrated navigation unit, providing geographic encoding necessary for the side-scan sonar (SSS), comprises a 600 K Doppler Velocity Log (DVL), a Fiber-Optic Inertial Navigation System (INS), and a Global Positioning System (GPS). This setup achieves an autonomous navigation accuracy of 0.5% D (CEP), meeting the precision requirements for positioning.

The layout of the mission payloads on the AUV is shown in Figure 2. The main control computer (MCC), acoustic processing computer (APC), sensors, and communication equipment processors are housed within a sealed compartment, interconnected via serial ports and Ethernet. The side-scan sonar transducers are symmetrically arranged on both sides of the AUV hull, angled downwards at 45°, and are connected to the side-scan sonar processor inside the sealed compartment via watertight cables. The DVL is mounted on the bottom exterior of the AUV hull, while the GPS and INS, located inside the sealed compartment, form the integrated navigation unit. The radio and underwater acoustic modems are mounted on the top exterior of the AUV hull, and the fiber-optic module is installed in the tail’s water-permeable section, together constituting a multimodal communication unit.

Figure 2.

Task load layout.

The AUV autonomous online object detection system can operate in two distinct modes: autonomous object detection and human–machine interactive collaborative detection. In the autonomous object detection mode, the AUV navigates along a predefined survey route, gathers underwater information, and detects objects in the sonar images. It then transmits the locations of the objects and their confidence levels back to the shore base via radio or underwater acoustic modems. In the human–machine interactive collaborative mode, the AUV, equipped with a fiber-optic module, sends real-time detection results and sonar images with marked objects back to the supervisory computer. This mode not only supports the integrated reconnaissance and combat capabilities of the AUV but also provides operators with the data needed to modify and optimize the survey route.

During mission execution, the main control computer directs the AUV’s navigation and operations, while the acoustic processing computer interprets the SSS detection data for object identification. The integrated navigation unit supplies the navigation data, and the multimodal communication unit exchanges data with the shore-based segment, thereby enabling the autonomous online detection and identification of underwater objects.

2.2. Software and Process Design of Underwater Object Autonomous On-Line Detection System

Based on the hardware environment and the requirements of online detection tasks, software for the autonomous online detection of underwater objects was designed and developed. Through a modular design of the software architecture and a multi-threaded parallel design of the software process, sub-tasks such as object detection, object recognition, and human–machine interaction during the AUV’s underwater operations are seamlessly integrated, enabling real-time collection, detection, and reporting while cruising.

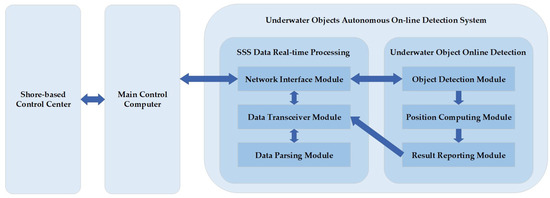

2.2.1. System Software Design

The operation of the online detection system is controlled by the main control unit, dividing internally into upstream and downstream subsystems based on the work content. The upstream subsystem, related to real-time processing of sonar data, is responsible for establishing network communication between the autonomous online detection system and the AUV’s main control unit, setting up multiple real-time data transmission channels within the system, and processing SSS data in real time. The downstream subsystem, related to object detection, tasks itself with detecting objects in the processed sonar images and calculating the geographical coordinates of detected targets upon identification. Both subsystems have been modularly designed, and the system software architecture is shown in Figure 3.

Figure 3.

Architecture of the system software.

The SSS data real-time processing subsystem comprises a network interface module, a data parsing module, and a data transceiver module. The network interface module is responsible for establishing communication interfaces and data transfer between the acoustic computer and the side-scan sonar processor, the integrated navigation unit, and the multimodal communication unit. The data parsing module handles the interpretation and processing of received navigation data, control commands, and sonar data. The data transceiver module manages the reception and unpacking of data between sub-task modules, as well as packaging and sending these data.

The underwater object online detection subsystem includes an object detection module, a position calculation module, and a result reporting module. The object detection module employs pre-trained detection models to identify objects in sonar images. The position calculation module combines the relative position of objects in sonar images with corresponding navigational data to ascertain the geographical location of the objects. The result reporting module filters the detected objects, packages the filtered object characteristics into a predetermined format, and reports them to the corresponding units.

2.2.2. Detection Process Design

Controlled by the AUV’s main control unit, the online detection system enters its operational mode upon receiving execution commands. During the initialization phase, the system software establishes communication interfaces between various hardware modules through the main program, subsequently entering a multi-threaded loop operation state. The data transmission and reception module of this system software maintains three key transmission channels. The first channel is responsible for receiving and interpreting information such as the AUV’s position, speed, course, attitude, and altitude provided by the combined navigation unit, and then forwarding this information to the side-scan sonar processor. The second channel handles the reception of sonar data from the side-scan sonar processor. By integrating the current navigational information and the sonar image data received by the transducer at the corresponding moment, it executes geocoding to generate a complete frame of sonar image data, which are then sent to the underwater object online detection module. The third channel involves the underwater object online detection module. After completing a detection task, it receives the current sonar images sent by the sonar interface, performs object detection, calculates the object’s position, and reports the object characteristic information when the object confidence level reaches the preset threshold. The overall process is shown in Figure 4.

Figure 4.

Process of the system software.
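The three-channel workflow can be illustrated with a minimal threaded pipeline. This is only a sketch under stated assumptions: the queue layout, the dictionary fields, and the placeholder detector (which pretends that even-numbered pings contain an object) are hypothetical and stand in for the real navigation, sonar, and YOLOv7 interfaces.

```python
import queue
import threading

def run_pipeline(num_pings: int = 5, threshold: float = 0.5):
    """Run three cooperating threads and return the reported detections."""
    nav_q = queue.Queue()      # channel 1: navigation fixes
    frame_q = queue.Queue()    # channel 2: geocoded sonar frames
    reports = []               # output of channel 3

    def navigation_channel():
        # Stand-in for the integrated navigation unit: one fix per ping.
        for ping in range(num_pings):
            nav_q.put({"ping": ping, "heading_deg": 90.0})
        nav_q.put(None)  # sentinel: no more fixes

    def sonar_channel():
        # Pair each ping with its navigation fix (placeholder for geocoding).
        while (fix := nav_q.get()) is not None:
            image = [[0] * 4 for _ in range(4)]  # dummy sonar frame
            frame_q.put({"ping": fix["ping"], "nav": fix, "image": image})
        frame_q.put(None)

    def detection_channel():
        # Placeholder detector: pretend even-numbered pings contain an object.
        while (frame := frame_q.get()) is not None:
            confidence = 0.9 if frame["ping"] % 2 == 0 else 0.1
            if confidence >= threshold:
                reports.append((frame["ping"], confidence))

    threads = [threading.Thread(target=f) for f in
               (navigation_channel, sonar_channel, detection_channel)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return reports

print(run_pipeline())  # [(0, 0.9), (2, 0.9), (4, 0.9)]
```

Each stage blocks only on its own input queue, so a slow detector does not stall navigation or sonar reception, which is the property the multi-threaded design relies on.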

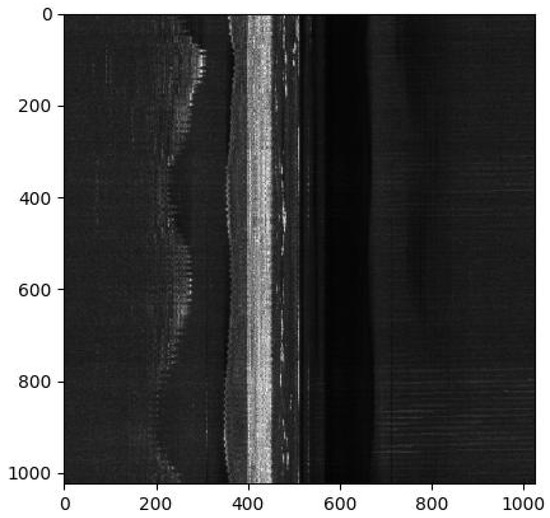

In the SSS data parsing workflow, the acoustic processing computer receives detection data from the side-scan sonar and, upon confirming that the sonar data constitutes a complete frame, decodes the data according to the specified protocol. The system extracts grayscale value segments from the data of each ping and populates a corresponding matrix to generate a frame of sonar imagery. With each update of a ping, the system produces a new sonar image, utilizing a sliding window of size 1024 × 1024. The resulting grayscale SSS image is shown in Figure 5. Each ping’s generated SSS grayscale image is saved, and its corresponding ping number is sent to the underwater target online detection subsystem, with navigation data stored according to the respective ping numbers.

Figure 5.

Grayscale SSS image.
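The sliding-window image generation described above can be sketched with a fixed-length buffer of ping rows. The class and method names are assumptions for illustration, and the samples are assumed to be already scaled to the 0 to 255 grayscale range; the actual decoding protocol is not reproduced here.

```python
from collections import deque

class WaterfallWindow:
    """Keep the most recent `height` pings as rows of a grayscale image."""

    def __init__(self, height: int = 1024, width: int = 1024):
        self.width = width
        self.rows = deque(maxlen=height)   # oldest row is dropped automatically

    def add_ping(self, samples):
        """Append one ping of echo intensities already scaled to 0..255."""
        if len(samples) != self.width:
            raise ValueError("ping length must match the window width")
        self.rows.append([max(0, min(255, int(s))) for s in samples])

    def image(self):
        """Return the current window as a list of rows (oldest first)."""
        return list(self.rows)

# Small demonstration window: 3 pings high, 4 samples wide
window = WaterfallWindow(height=3, width=4)
for ping in range(5):
    window.add_ping([ping * 10] * 4)
print(window.image()[0])  # [20, 20, 20, 20]
```

With the default 1024 × 1024 size, every new ping shifts the window by one row, so each ping yields a fresh image for the detector.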

In the object detection process, for efficiency and real-time operation, SSS images are detected on a per-ping basis. The underwater object online detection subsystem, upon receiving the current parsed ping number from the SSS data real-time processing subsystem, reads its corresponding SSS sonar image for object detection. After completing the detection of one SSS image, it reads and detects the SSS image corresponding to the ping number sent by the upstream subsystem, ignoring ping numbers sent during the detection process. This method addresses the backlog issue caused by a mismatch between the detection speed and the SSS image collection and parsing speed.
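One way to avoid a backlog is a single-slot mailbox in which a newly published ping number overwrites any unread one. The sketch below is a variant that keeps the most recent ping number that arrived while the detector was busy; the paper's implementation may instead discard all of them and wait for the next ping. The class name `LatestPing` is hypothetical.

```python
import threading

class LatestPing:
    """Single-slot mailbox: a newer ping number overwrites an unread older one."""

    def __init__(self):
        self._cond = threading.Condition()
        self._value = None

    def publish(self, ping_number: int) -> None:
        with self._cond:
            self._value = ping_number   # drop whatever was waiting
            self._cond.notify()

    def take(self, timeout: float = 1.0):
        """Block until a ping number is available, then return and clear it."""
        with self._cond:
            if self._cond.wait_for(lambda: self._value is not None, timeout):
                value, self._value = self._value, None
                return value
            return None   # timed out with nothing to process

mailbox = LatestPing()
for ping in (101, 102, 103):   # three pings arrive while the detector is busy
    mailbox.publish(ping)
print(mailbox.take())           # 103
```

Because the slot holds at most one value, the queue between the two subsystems can never grow, regardless of how much slower detection is than parsing.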

Upon detecting an object, the system proceeds with object localization. It calculates the geographical coordinates of the object using the positional information from the object detection results and the corresponding navigational data. The calculation method is as follows.

The relative position (x, y) of the object’s center pixel in the original sonar image is determined using Equation (1):

$$x = \frac{x_1 + x_2}{2}\,k_x, \qquad y = \frac{y_1 + y_2}{2}\,k_y \tag{1}$$

where $x_1$, $y_1$, $x_2$, and $y_2$ represent the horizontal and vertical coordinates of the top-left and bottom-right corners of the object box in the detection results, and $k_x$ and $k_y$ are the horizontal and vertical scaling factors between the original and detected sonar images.

The object’s distance d relative to the AUV is computed using Equation (2):

$$d = \sqrt{(x\,\delta)^2 - h^2} \tag{2}$$

where $\delta$ denotes the actual distance corresponding to each pixel point, and h is the height from the AUV to the seabed during the detection process.

The geographical latitude and longitude (L, B) of the object are calculated using Equation (3):

$$L = L_0 + \frac{d\cos\theta}{R}, \qquad B = B_0 + \frac{d\sin\theta}{R\cos L_0} \tag{3}$$

where $L_0$ and $B_0$ are the latitude and longitude coordinates of the location of the AUV, R is the Earth’s average radius, and $\theta$ is the bearing angle.
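The three localization steps can be sketched in Python as follows. The exact expressions are assumptions chosen to match the quantities named in the text: the box center is the mean of the two corners times a scaling factor, the horizontal distance is obtained from the slant range and the altitude by Pythagoras, and the geographic offset uses a small-distance flat-Earth approximation with the bearing measured clockwise from north. All function names are illustrative.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius

def box_center(x1, y1, x2, y2, kx=1.0, ky=1.0):
    """Center of a detection box, rescaled to original-image pixels."""
    return (x1 + x2) / 2 * kx, (y1 + y2) / 2 * ky

def ground_range(slant_pixels, metres_per_pixel, altitude_m):
    """Horizontal distance from the AUV, from slant range and altitude."""
    slant = slant_pixels * metres_per_pixel
    return math.sqrt(max(slant**2 - altitude_m**2, 0.0))

def offset_position(lat_deg, lon_deg, distance_m, bearing_deg):
    """Move distance_m along bearing_deg (small-distance approximation)."""
    bearing = math.radians(bearing_deg)
    dlat = distance_m * math.cos(bearing) / EARTH_RADIUS_M
    dlon = distance_m * math.sin(bearing) / (
        EARTH_RADIUS_M * math.cos(math.radians(lat_deg)))
    return lat_deg + math.degrees(dlat), lon_deg + math.degrees(dlon)

x, y = box_center(100, 200, 140, 260)            # (120.0, 230.0)
d = ground_range(500, 0.1, 30.0)                 # about 40 m
lat, lon = offset_position(0.0, 0.0, d, 90.0)    # point due east of the AUV
```

The placeholder position (0, 0) and the 0.1 m pixel size are illustrative values only. For the sub-100 m ranges involved here, the flat-Earth approximation error is negligible compared with the navigation error.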

After determining the geographical coordinates of the object, the AUV reports information such as the type, confidence level, and geographical coordinates of the object to the shore-based station through the multimodal communication unit. In radio and acoustic communication modes, only object information is reported. In fiber-optic communication mode, both the detection result images and object information are transmitted, allowing operators to interpret the data for further task decisions.

During the execution of its tasks, the online detection system for underwater objects commences operation under the control of the main control unit. Throughout the process, it initiates three threads that are both independent and interconnected. Sub-task modules of the two subsystems perform their respective functions in a cyclical manner. This arrangement enables the AUV to achieve autonomous real-time data collection, real-time object detection, and real-time reporting of results regarding underwater objects.

2.2.3. Intelligent Detection Model Based on Deep Learning

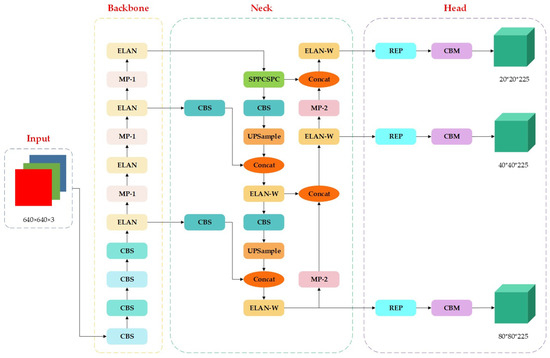

Given the requirements for efficiency, real-time performance, and accuracy in autonomous online detection of underwater objects, this study has selected the YOLOv7 [24] model to complete underwater object intelligent detection. YOLOv7, an advanced single-stage object detection model, incorporates several innovative technologies, making it well-suited for online detection of underwater objects. It integrates structural re-parameterization, positive and negative sample allocation strategies, and a training method with an auxiliary head, achieving a favorable balance between detection efficiency and accuracy [25].

The architecture of the YOLOv7 network consists of four main components: the Input Layer, Backbone, Neck, and Head. The Input Layer is responsible for receiving pre-processed image data. The Backbone, typically a deep convolutional network, extracts features from the image. The Neck performs feature fusion across feature maps of different scales, enhancing the model’s capability to detect multi-scale objects. Finally, the Head network generates the final detection results, including the categories and position information of the objects. The network structure is shown in Figure 6.

Figure 6.

Structure of the YOLOv7 model. (CBS: Convolution-BatchNorm-SiLU, using different colors to distinguish different CBS; SiLU: Sigmoid Linear Unit; CBM: Convolution-BatchNorm-Mish; REP: Re-parameterization; ELAN: Efficient Layer Aggregation Network; ELAN-W: Efficient Layer Aggregation Network-Wide; MP-1/2: Modified Pooling layer; SPPCSPC: Spatial Pyramid Pooling, Cross Stage Partial Connections; Concat: Concatenation).

3. Results

To validate the feasibility and effectiveness of the proposed SSS-based AUV autonomous online object detection system, a lake experiment was conducted in Songhua Lake, Jilin City, Jilin Province. The planned experimental area has a water depth of about 50 m. The experiment consisted of three parts: dataset creation, object detection model training, and AUV cruise detection of predefined objects. The experimental equipment included the portable AUV shown in Figure 1, a test ship, a training server, a shore-based control center, and other supporting equipment.

3.1. Dataset Creation

Acquiring sonar images containing underwater objects presents challenges, as the conditions of objects embedded in the seabed sediment vary, and such objects often involve sensitive information not suitable for public disclosure. Consequently, for this experiment, a predefined object was fabricated and submerged at planned locations within a designated detection area in the lake, with the deployment positions carefully recorded. SSS detection was then conducted on the predefined object, and the collected data were processed into grayscale images. The predefined object and its corresponding SSS image are shown in Figure 7.

Figure 7.

Predefined object: (a) photo of predefined object; (b) grayscale SSS image of predefined object.

Because only a limited number of SSS images contained the predefined object, this experiment employed techniques such as rotation, mirroring, stitching, and object embedding to augment the dataset, resulting in a total of 7952 sonar images. These images were annotated using LabelImg (version 1.8.6) with a single category labeled “preset”, yielding a collection of annotation files. The entire image set was then divided into a training set and a test set in a 9:1 ratio and, together with the annotation files, assembled into a dataset in VOC format. The detailed parameters of the dataset are listed in Table 1.

Table 1.

Dataset parameter.
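A 9:1 split of this kind can be reproduced with a seeded shuffle. The sketch below is illustrative only: the function name, the seed, and the rounding rule are assumptions, so the resulting counts may differ slightly from those used in the experiment.

```python
import random

def split_dataset(image_ids, train_fraction=0.9, seed=0):
    """Shuffle image IDs reproducibly and split them into train and test lists."""
    ids = list(image_ids)
    random.Random(seed).shuffle(ids)
    cut = round(len(ids) * train_fraction)
    return ids[:cut], ids[cut:]

train_ids, test_ids = split_dataset(range(7952))
print(len(train_ids), len(test_ids))  # 7157 795
```

Using a private `random.Random(seed)` instance keeps the split reproducible without touching the global random state.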

3.2. Detection Model Training and Analysis

To ensure the efficiency of the experiment, model pre-training was conducted on the training server. The PyTorch deep learning framework was used, with the training and testing of the YOLOv7 object detection model implemented in Python. The training server environment was as follows: Ubuntu 18.04 operating system; CPU: Intel Core i7-6600U; GPU: NVIDIA Quadro RTX 5000 with 16 GB of video memory (Nvidia Corporation, Santa Clara, CA, USA).

In this experiment, Average Precision (AP) was employed to assess the accuracy of the model. AP_0.5 refers to the AP value when the Intersection Over Union (IOU) threshold is set to 0.5, while AP_0.5:0.95 represents the average AP value when the IOU threshold ranges from 0.5 to 0.95 in increments of 0.05. The calculation method is as shown below:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \qquad AP = \int_0^1 P(R)\,dR$$

where P is Precision and R is Recall; TP denotes True Positives (the object is a positive sample and classified correctly), FP denotes False Positives (the object is a negative sample but classified as positive), and FN denotes False Negatives (the object is a positive sample but classified as negative).
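These metrics can be illustrated with a small, dependency-free sketch. It computes the IOU of two boxes and a non-interpolated AP as the area under the precision-recall curve; evaluation toolkits usually apply an interpolated precision envelope, so their values can differ slightly. The function names and the toy inputs are assumptions for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return inter / (area_a + area_b - inter)

def average_precision(is_true_positive, num_ground_truth):
    """Area under the precision-recall curve (no interpolation).

    is_true_positive[i] says whether the i-th most confident detection
    matched a ground-truth box at the chosen IOU threshold.
    """
    tp = fp = 0
    ap = prev_recall = 0.0
    for hit in is_true_positive:
        tp += hit
        fp += not hit
        precision = tp / (tp + fp)
        recall = tp / num_ground_truth
        ap += precision * (recall - prev_recall)
        prev_recall = recall
    return ap

print(round(iou((0, 0, 2, 2), (1, 1, 3, 3)), 4))             # 0.1429
print(average_precision([True, True, False, True], 4))       # 0.6875
```

AP_0.5:0.95 would be obtained by repeating the matching step at each IOU threshold from 0.5 to 0.95 and averaging the resulting AP values.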

Frames Per Second (FPS) was used as an indicator to evaluate the efficiency of the model’s detection capabilities. The network was trained using the VOC dataset. The performance of YOLOv7 on the test set is illustrated in Table 2.

Table 2.

Detection performances of the YOLOv7 model.

The training results show that both the detection accuracy and the recall for the predefined object exceed 85%. This detection performance meets the engineering requirements, so the model can be deployed on the AUV for intelligent online object detection.

3.3. Cruise Detection

To ensure that the lake experiments ran smoothly, a pre-experiment plan and a rational experimental procedure were established. First, the speed settings and survey line planning were determined based on the detection principle of SSS and the underwater environment of the experimental waters. Next, the AUV was directed to detect underwater objects autonomously and online along the planned detection route at a set speed. Finally, the detection results from each survey line were aggregated and processed to evaluate the system’s performance in terms of object detection efficiency and localization accuracy.

3.3.1. Pre-Survey Planning

The AUV, equipped with SSS, aimed to achieve full coverage along the course direction, which involves ensuring continuity between two consecutive pings on the track. With a coverage requirement of 200% for underwater object detection tasks, the AUV’s speed was a primary factor affecting full coverage detection, given a fixed SSS sampling rate. The AUV was expected to maintain a constant, straight course, with the maximum speed calculated using the formula:

where $V_{\max}$ is the maximum speed in knots (kn); L is the minimum object size to be detected, in m, with the largest object diameter set at 3 m; n is the minimum number of pulses required to detect the object per pass, set at 5 for precision scanning in this experiment; f is the sonar’s pulse emission rate, set at 3 pulses per second for the experiment; and m is the beam or pulse coefficient, set at 1 for single-beam SSS. At a slant range of 75 m, the horizontal beam width of the sonar corresponds to 0.26 m. The calculated maximum speed $V_{\max}$ was 3.3 kn. To achieve the detection task and ensure system stability, the actual cruising speed was set at 3 kn.

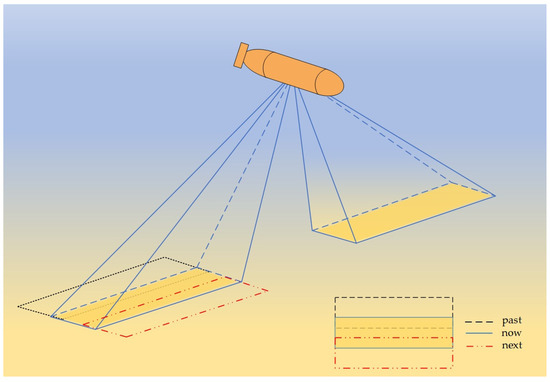

During the operation of the SSS, a horizontal blind area of the same length as the height above the bottom was observed, as shown in Figure 8. To ensure effective detection, six survey lines were symmetrically laid on both sides of the object at a fixed range of 75 m, with distances of 30 m, 45 m, and 60 m from the object, corresponding to heights above the bottom of 20 m, 30 m, and 40 m, respectively.

Figure 8.

Working principle diagram of SSS.
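The survey-line geometry can be checked numerically. The sketch below assumes a flat seabed, takes the blind zone to extend horizontally as far as the height above the bottom (as stated above), and treats the 75 m range as a limit on slant range; the function name is illustrative.

```python
import math

def in_swath(horizontal_m, altitude_m, max_slant_m=75.0):
    """True if a seabed point lies outside the blind zone and within slant range."""
    slant = math.hypot(horizontal_m, altitude_m)
    return horizontal_m > altitude_m and slant <= max_slant_m

# (horizontal distance to object, height above bottom) for the three line offsets
for horizontal, altitude in [(30, 20), (45, 30), (60, 40)]:
    slant = math.hypot(horizontal, altitude)
    print(horizontal, altitude, round(slant, 1), in_swath(horizontal, altitude))
# 30 20 36.1 True
# 45 30 54.1 True
# 60 40 72.1 True
```

Under these assumptions, all three offsets place the object beyond the blind zone while keeping its slant range below 75 m.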

After completing a survey along a planned track, the AUV’s object detection results from that voyage were compiled and normalized. The normalization process was as follows: the Softmax function was used to normalize the confidences of all detections into weights, and the latitude and longitude of each detection were then averaged according to these weights to obtain the average detection result of a voyage (L, B):

$$w_i = \frac{e^{c_i}}{\sum_{j=1}^{N} e^{c_j}}, \qquad (L, B) = \sum_{i=1}^{N} w_i\,(L_i, B_i)$$

where $c_i$ is the confidence of the i-th detection of the object on a voyage, $(L_i, B_i)$ are the geographical coordinates of the i-th detection, and N is the number of detections on that voyage.
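The confidence-weighted fusion of one voyage's detections can be sketched as follows. The function name and the tuple layout are assumptions for illustration; subtracting the maximum confidence before exponentiation is a standard numerical-stability step that does not change the Softmax weights.

```python
import math

def fuse_detections(detections):
    """Softmax-weight detections by confidence and average their coordinates.

    detections is a list of (confidence, lat, lon) tuples from one voyage.
    """
    peak = max(c for c, _, _ in detections)
    weights = [math.exp(c - peak) for c, _, _ in detections]   # stable softmax
    total = sum(weights)
    lat = sum(w * d[1] for w, d in zip(weights, detections)) / total
    lon = sum(w * d[2] for w, d in zip(weights, detections)) / total
    return lat, lon

# Equal confidences reduce to a plain average of the coordinates
print(fuse_detections([(0.8, 10.0, 20.0), (0.8, 12.0, 22.0)]))  # (11.0, 21.0)
```

Since confidences lie between 0 and 1, the Softmax weights differ only moderately between detections, so the fused position stays close to the arithmetic mean while leaning toward the more confident detections.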

3.3.2. Experimental Results

To ensure safety, underwater experiments were conducted during periods with favorable water conditions. After the experimental vessel entered the deployment area of the predetermined object, the AUV was launched into the water. Once the AUV stabilized, the shore-based control center issued object detection commands to it. The AUV then autonomously navigated along the pre-planned route and initiated its autonomous online detection mode to detect underwater objects. During its journey along a survey line, the AUV performed real-time data collection, object detection, position calculation, and result reporting. A total of six different survey lines were explored in this process.

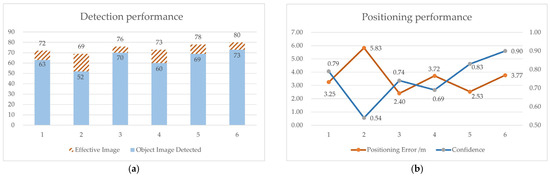

In the first voyage, the SSS acquired 72 grayscale images containing the designated object, and the object was successfully detected in 63 of them. The voyage achieved a positioning error of 3.25 m for the designated object, with a confidence level of 0.79. The other five voyages were completed as smoothly as the first, and the comprehensive detection results of the six voyages are shown in Figure 9.

Figure 9. Detection results: (a) detection performance; (b) positioning performance.

Figure 9a shows that the AUV successfully detected objects on each trajectory, demonstrating stable detection performance with an average detection efficiency of 86.4%. As shown in Figure 9b, based on the navigational unit’s positioning accuracy, the system achieved stable positioning performance for predefined objects, with an average positioning error of less than 5 m. The experiment validates that both the detection efficiency and the accuracy of the system meet the engineering requirements.
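The text does not state how the positioning error was computed. One common choice, shown here purely as an assumption, is the great-circle (haversine) distance between the estimated position (L, B) and the surveyed position of the object. The function name `haversine_m`, the Earth radius constant, and the sample coordinates are illustrative.

```python
import math

EARTH_RADIUS_M = 6371008.8  # mean Earth radius; an assumption of this sketch


def haversine_m(lon1_deg, lat1_deg, lon2_deg, lat2_deg):
    """Great-circle distance in metres between two longitude/latitude points."""
    lat1, lat2 = math.radians(lat1_deg), math.radians(lat2_deg)
    dlat = lat2 - lat1
    dlon = math.radians(lon2_deg - lon1_deg)
    a = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    # Clamp to 1.0 to guard against rounding slightly above the asin() domain.
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))


# Hypothetical estimated and reference positions, for illustration only.
error_m = haversine_m(120.00010, 30.00020, 120.00012, 30.00022)
print(f"positioning error: {error_m:.2f} m")
```

Averaging this distance over the six voyages would give a figure comparable to the average positioning error reported above, provided the same reference position is used for every voyage.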

The duration of each segment of the online detection process was recorded during the experiment: on average, acquiring a sonar data point took 350 ms, interpreting a sonar image took 200 ms, and detecting an object in a sonar image took 48 ms. Consequently, the average total duration of the online detection process was 598 ms. Comprehensive real-time performance metrics for the six voyages are listed in Table 3.

Table 3. Online detection performances of the system.

It can be observed that, during the operating process of the online detection system, the average time from data collection to result output was less than 1 s, and the operational time for each module, along with the coherence between the upstream and downstream modules, met the online requirements of the engineering project.
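The timing figures reported above can be combined into a simple latency budget. The sketch below uses the three average stage times from the text; the 1 s budget is the online requirement mentioned above, and the pipelined-period estimate assumes that the stages overlap perfectly, which is an idealization rather than a measured result.

```python
# Average stage times reported in the text, in milliseconds.
STAGE_MS = {"acquire": 350, "interpret": 200, "detect": 48}
BUDGET_MS = 1000  # online requirement: result available within 1 s

# End-to-end latency for one data point: the stages run one after another.
latency_ms = sum(STAGE_MS.values())

# Idealized steady-state period when the stages run in parallel on
# successive data points: bounded by the slowest stage (assumption).
period_ms = max(STAGE_MS.values())

print(f"end-to-end latency: {latency_ms} ms (budget {BUDGET_MS} ms)")
print(f"idealized pipelined period: {period_ms} ms per data point")
```

The sum reproduces the 598 ms total given in the text and leaves roughly 400 ms of margin against the 1 s requirement.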

The aforementioned experimental results validate the feasibility, efficacy, and real-time performance of the proposed SSS-based AUV autonomous online object detection system, which has guiding significance for realizing the autonomous online intelligent detection of underwater objects by AUV equipped with SSS.

4. Discussion

The results of the lake experiment show that the SSS-based AUV autonomous online object detection system meets engineering requirements in terms of detection effectiveness and positioning accuracy for predefined underwater objects. The key technologies proposed in this paper are discussed further below.

4.1. Significance of the Proposed Method

4.1.1. Autonomization

This study introduces an object detection system that significantly augments the autonomous underwater object detection capabilities of AUV. Existing mechanisms for underwater object detection based on AUV merely require the AUV to collect data underwater, with subsequent object detection tasks being carried out at a shore-based control center. In this study, by integrating an additional computing unit onboard the AUV and deploying specialized object detection software for the analysis of SSS data, the system achieves fully autonomous operation, encompassing data acquisition, processing, object detection, and localization.

4.1.2. Intelligentization

This paper advances AUV underwater object detection intelligence by incorporating the YOLOv7 object detection algorithm into the proposed system. Two-stage processes for detecting underwater objects, which rely on expert interpretation of sonar videos, are highly subjective and time-intensive, often exceeding 30 min. The methodology introduced here transforms SSS data into images for object detection via feature extraction by the YOLOv7 model, markedly accelerating the detection process. Because the system meets engineering requirements for underwater object identification and is faster than manual analysis, it enables an intelligent, end-to-end detection workflow. Additionally, the detection results help guide AUV operations and support precise localization, representing a crucial enhancement in AUV intelligence.

4.1.3. Real-Time Transformation

To achieve real-time detection, this study developed software that operates on a multi-threaded, parallel processing framework, significantly improving the efficiency of data exchanges with the shore-based control center. This software concurrently executes multiple processes, including SSS data handling and object detection, using a real-time data exchange method for image processing. The complete cycle from SSS signal dispatch to detection result submission is accomplished within 1 s, enabling real-time data collection, object detection, and reporting. The detection results, which include the object’s category, location coordinates, and confidence level, are transmitted to the control center with a data footprint of no more than 15 KB. This method proves to be both more efficient and more reliable than transmitting the entire detection dataset.
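The multi-threaded workflow described above can be illustrated with a small producer-consumer pipeline. This is a generic sketch built on Python's standard `threading` and `queue` modules, not the system's actual software: the names `stage` and `run_pipeline` are hypothetical, and the `interpret` and `detect` callables are placeholders for sonar image generation and YOLOv7 inference.

```python
import queue
import threading

_STOP = object()  # sentinel that tells each stage to shut down


def stage(work, inbox, outbox):
    """Apply `work` to every item from `inbox` and forward the result to `outbox`."""
    while True:
        item = inbox.get()
        if item is _STOP:
            outbox.put(_STOP)  # propagate shutdown to the next stage
            return
        outbox.put(work(item))


def run_pipeline(pings, interpret, detect):
    """Run interpretation and detection in parallel threads over a stream of pings."""
    raw_q = queue.Queue(maxsize=8)    # acquired sonar data
    image_q = queue.Queue(maxsize=8)  # interpreted sonar images
    result_q = queue.Queue()          # detection results (unbounded)
    workers = [
        threading.Thread(target=stage, args=(interpret, raw_q, image_q)),
        threading.Thread(target=stage, args=(detect, image_q, result_q)),
    ]
    for worker in workers:
        worker.start()
    # The calling thread plays the role of the acquisition stage.
    for ping in pings:
        raw_q.put(ping)
    raw_q.put(_STOP)
    results = []
    while True:
        item = result_q.get()
        if item is _STOP:
            break
        results.append(item)
    for worker in workers:
        worker.join()
    return results


# Placeholder stages, for illustration only.
demo = run_pipeline(
    range(3),
    interpret=lambda ping: {"image_id": ping},
    detect=lambda image: {**image, "category": "cylinder", "confidence": 0.8},
)
print(demo)
```

With one worker per stage and first-in, first-out queues, results are reported in acquisition order, and the bounded input queues keep a slow stage from accumulating an unbounded backlog.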

4.2. Limitations of the Proposed Method

4.2.1. Hardware Design

Given the restricted payload capabilities of portable AUV, which preclude the inclusion of GPUs within their acoustic processing units, computational power is inherently limited. Despite this limitation, it remains feasible to conduct autonomous online object detection tasks independently of other AUV functions. Yet, the deployment of pre-trained models onto AUV noticeably diminishes detection speeds. Consequently, AUV tasked with an array of acoustic processing activities, such as noise reduction in raw SSS data and image segmentation, necessitate enhanced configurations for increased computational capacity. Typically, the existing system hardware suffices for autonomous online detection requirements.

4.2.2. Software Design

In the dataset creation phase, cylindrical objects were fabricated and deployed on the lakebed for sampling purposes, facilitating the training of an intelligent detection model. While this approach faithfully replicates the authentic characteristics of SSS imaging, it incurs high costs and is limited to the creation of objects with predefined shapes and structures. During the model training phase, the object detection model is trained on a high-performance server before its deployment on the AUV, optimizing computational resources. However, the transition of models to AUV hardware introduces performance degradation due to hardware constraints, suggesting a potential need for in situ model training on AUV. Despite these challenges, the process is generally viable for AUV underwater object detection tasks.

4.2.3. Detection Model Refinement

The YOLOv7 algorithm misses some objects in practical scenarios. To enhance detection precision or to sustain detection capabilities at increased velocities, the detection model must be improved. This improvement aims to augment its proficiency in identifying small underwater objects while balancing detection speed and effectiveness. Nonetheless, the algorithm adequately fulfills intelligent detection needs in standard underwater operations.

5. Conclusions

Addressing issues such as poor autonomy and low intelligence in AUV underwater object detection, this paper proposes a system for AUV underwater object detection. The innovative aspects of this paper include the following: (1) proposing an SSS-based AUV autonomous online object detection system, including the system composition and implementation process; (2) adopting the YOLO network for SSS images and proposing an autonomous online underwater object detection method for AUV; and (3) through lake experiments, achieving autonomous online detection of underwater objects with the AUV equipped with SSS and verifying the feasibility and effectiveness of the proposed method. This provides a new solution for AUV underwater object detection. Overall, the SSS-based AUV autonomous online object detection system proposed in this paper overcomes, to a certain extent, the limitations that underwater communication difficulties impose on underwater object detection, achieving autonomy, online capability, and intelligence in AUV underwater object detection. It also has good adaptability and can be ported to other marine exploration equipment for autonomous underwater object detection, which is significant for the development and application of intelligent detection in marine engineering. Future research will focus on optimizing system detection techniques, such as model lightweighting and multi-sensor fusion detection, to further enhance real-time performance and accuracy, and on optimizing engineering implementation, such as hardware and software compatibility and improved configurations, to further increase the system’s detection efficiency.

Author Contributions

Conceptualization, S.W.; methodology, S.W. and X.L.; software, S.W. and X.L.; validation, S.W., X.L., X.Z., B.C. and X.S.; formal analysis, S.W., X.L. and S.Y.; investigation, S.W.; resources, X.Z.; data curation, S.W., X.L. and X.S.; writing—original draft preparation, S.W.; writing—review and editing, X.L., X.Z., S.Y., B.C. and X.S.; visualization, S.W.; supervision, X.Z.; project administration, X.Z. and B.C.; funding acquisition, X.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by CAS Key Technology Talent Program, grant number Y929090301.

Data Availability Statement

Access to the data will be considered upon request by the authors.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Huang, Y.; Li, Y. State-of-the-Art and Development Trends of AUV Intelligence. ROBOT 2020, 42, 215–231.

- Song, B.W.; Pan, G. Development trend and key technologies of autonomous underwater vehicles. Chin. J. Ship Res. 2022, 17, 27–44.

- Sahoo, A.; Dwivedy, S.K. Advancements in the field of autonomous underwater vehicle. Ocean Eng. 2019, 181, 145–160.

- Xu, G.F.; Zhou, D.X. Vision-based underwater target real-time detection for autonomous underwater vehicle subsea exploration. Front. Mar. Sci. 2023, 10, 1112310.

- Alla, D.N.V.; Jyothi, V.B.N. Vision-based Deep Learning algorithm for Underwater Object Detection and Tracking. In Proceedings of the OCEANS Conference, Chennai, India, 21–24 February 2022.

- Zhang, T.; Li, Q. Underwater Optical Image Restoration Method for Natural/Artificial Light. J. Mar. Sci. Eng. 2023, 11, 470.

- Cao, X.; Ren, L. Research on Obstacle Detection and Avoidance of Autonomous Underwater Vehicle Based on Forward-Looking Sonar. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 9198–9208.

- Tang, Y.; Wang, L. AUV-Based Side-Scan Sonar Real-Time Method for Underwater-Target Detection. J. Mar. Sci. Eng. 2023, 11, 690.

- Zhang, T.-D.; Wan, L. Object detection and tracking method of AUV based on acoustic vision. China Ocean Eng. 2012, 26, 623–636.

- Hao, Z.X.; Wang, Q. Underwater Target Detection Based on Sonar Image. J. Unmanned Undersea Syst. 2023, 31, 339–348.

- Guo, G.; Wang, X.K. Review on underwater target detection, recognition and tracking based on sonar image. Control Decis. 2018, 33, 906–922.

- Geng, J.Y. Overview of Marine scanning sonar technology. China-Arab. States Sci. Technol. Forum 2022, 128–132.

- He, Y.G. Present Situation and Development of Ocean Side Scan Sonar Detection Technology. Intelligentilize Informatiz. 2020, 275–276.

- Tan, P.L.; Wu, X.B. Review on Underwater Target Recognition Based on Sonar Image. Digit. Ocean Underwater Warf. 2022, 5, 342–353.

- Zeng, L.; Sun, B. Underwater target detection based on Faster R-CNN and adversarial occlusion network. Eng. Appl. Artif. Intell. 2021, 100, 104190.

- Ouyang, W.; Wei, Y. YOLOX-DC: A Small Target Detection Network up to Underwater Scenes. In Proceedings of the OCEANS Hampton Roads Conference, Online, 17–20 October 2022.

- Sun, Y.; Zheng, W. Underwater Small Target Detection Based on YOLOX Combined with MobileViT and Double Coordinate Attention. J. Mar. Sci. Eng. 2023, 11, 1178.

- Yi, W.; Wang, B. Research on Underwater Small Target Detection Algorithm Based on Improved YOLOv7. IEEE Access 2023, 11, 66818–66827.

- Qiang, W.; He, Y. Exploring Underwater Target Detection Algorithm Based on Improved SSD. J. Northwestern Polytech. Univ. 2020, 38, 747–754.

- Liu, J.M.; Meng, W.H. Review on Single-Stage Object Detection Algorithm Based on Deep Learning. Aero Weapon. 2020, 27, 44–53.

- Tang, Y.L.; Jin, S.H. Research on mechanism of real-time underwater target detection by sonar based on AUV. Hydrogr. Surv. Charting 2023, 43, 26–29.

- Luo, Y.H. Sonar Image Object Detection System Based on Deep Learning. Digit. Ocean Underwater Warf. 2023, 6, 423–428.

- Song, Y.; He, B. Real-Time Object Detection for AUVs Using Self-Cascaded Convolutional Neural Networks. IEEE J. Ocean. Eng. 2021, 46, 56–67.

- Wang, C.-Y.; Bochkovskiy, A. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (7464), Vancouver, BC, Canada, 17–24 June 2023.

- Xin, S.A.; Ge, H.B. Improved YOLOv7’s lightweight underwater target detection algorithm. Comput. Eng. Appl. 2023, 1–16.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).