Abstract

Neurodegenerative diseases pose significant challenges to patients’ mobility and autonomy. Given recent technological advances, brain–computer interfaces (BCIs) are emerging as a promising tool to improve these patients’ quality of life. In this study, we therefore explore the feasibility of using low-cost commercial EEG headsets, such as Neurosky and Brainlink, to control robotic arms integrated into autonomous wheelchairs. These headbands, which provide attention and meditation values, have been adapted to offer intuitive control based on the eight EEG band values, from Delta to Gamma (with Gamma split into low and mid/high sub-bands), collected from the user’s prefrontal area using only two non-invasive electrodes. To achieve precise and adaptive control, we have incorporated a neural network that interprets these values in real time so that the response of the robotic arm matches the user’s intentions. The results suggest that combining BCIs, robotics, and machine learning techniques such as neural networks is not only technically feasible but also has the potential to radically transform how patients with neurodegenerative diseases interact with their environment.

1. Introduction

Over the past decade, the intersection of neuroscience and robotics has grown exponentially, largely driven by advances in brain–computer interfaces (BCIs). These technologies, which translate brain activity into commands for external devices, have opened new possibilities for enhancing the quality of life of individuals with severe motor disabilities [1,2]. Unlike conventional interfaces, such as those based on eye movements (electrooculography, EOG) or facial muscle contractions (electromyography, EMG) [3,4], BCIs require no connection to peripheral muscles or nerves, allowing devices to be controlled without verbal or physical interaction [5,6]. This is particularly relevant for patients in advanced stages of diseases that preclude any movement, such as subcortical stroke, amyotrophic lateral sclerosis, or cerebral palsy.

In particular, the use of BCIs for assisted mobility, such as wheelchair control, is a rapidly developing and promising research field [7]. This study focuses on integrating low-cost BCIs, namely commercial electroencephalography (EEG) headbands with a single electrode, to control robotic arms mounted on autonomous wheelchairs, an area that has not yet been exhaustively explored [8].

Controlling assistive devices through brain signals not only offers new hope for individuals with physical limitations but also poses significant technical challenges. Accuracy, ease of use, and adaptability are crucial aspects that must be addressed to ensure the feasibility of these systems in real-world environments. In this context, our work focuses on the use of commercial EEG headbands, providing a non-invasive and accessible way to capture brain signals [1].

Integrating these technologies with autonomous robotic systems presents a series of unique challenges. The system architecture proposed in this study includes a development board capable of both running artificial intelligence (AI) models and hosting the Robot Operating System (ROS), along with a microcontroller that controls the drivers of the stepper motors of a six-axis cobot as well as the wheelchair’s movements. This setup allows real-time interpretation of EEG signals and an adaptive system response, ensuring that the robotic arm’s actions align with the user’s intentions [9].

The incorporation of the AI development board is crucial for the efficient real-time processing of EEG data. The ability of these boards to run deep learning models, such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), facilitates the accurate interpretation of brain signals [10]. These models are essential for decoding complex patterns of brain activity and converting them into specific commands for robotic control.

Moreover, the use of ROS as a software architecture underpins the system’s flexibility and scalability. ROS provides a robust platform for integrating various software and hardware modules, enabling efficient communication between the development board and other system components, such as sensors and actuators [11]. This modular architecture facilitates the implementation of improvements and adaptation to different types of BCI applications.

The microcontroller, in turn, plays a fundamental role in controlling the physical components of the system. Its ability to handle multiple inputs and outputs makes it ideal for controlling the stepper motors and other mechanical elements of the robotic arm and wheelchair. Combining the development board, the microcontroller, and ROS yields an integrated system capable of performing complex tasks efficiently and reliably.

This paper details the design and evaluation of a BCI system architecture that combines EEG signals, machine learning techniques with neural networks, and robotic systems, focused on providing solutions for individuals with neurodegenerative diseases, spinal problems, or any other reason that may lead to a reduction in their natural motor abilities. With this architecture, we aim not only to demonstrate the feasibility of the proposed development but also to make a significant contribution to the emerging field of BCI-assisted mobility, paving new paths for the research and development of more advanced and accessible BCI mobility solutions.

The remainder of this article is structured as follows. Section 2 reviews the state of the art in BCI systems. Section 3 describes the research methodology, that is, the iterative process used to define the architecture. Section 4 describes the proposed architecture, broken down into its functional, hardware, and software levels. Section 5 presents the results of the development, compliance with the identified requirements, and the technical, economic, and legal feasibility of the proposal. Section 6 provides the discussion, and Section 7 presents the conclusions and future work.

2. State of the Art

In recent years, as evidenced in published research, there have been significant advancements in the field of BCIs applied to wheelchair control and other assistive devices. In 2021, a notable study by Banach et al. explored the use of Alpha wave-based EEG signals for controlling electric wheelchairs, offering a new mode of interaction for individuals with incurable diseases that severely limit their communication and mobility [12]. This approach marks a critical advancement in aiding individuals “locked-in” their bodies due to severe conditions.

Furthermore, Antoniou et al. (2021) introduced an innovative system that uses a brain–computer interface to capture EEG signals during eye movements and classifies them into six categories with a random forest algorithm [13]. This development is significant for the interpretation of EEG signals in BCI applications [14]. In addition, a study from 2019, extended in 2021, demonstrated a hybrid brain–computer interface (hBCI) combining EEG and EOG signals to control an integrated wheelchair and robotic arm system, achieving satisfactory control accuracy and highlighting the potential of BCI-controlled systems for complex daily tasks.

In a similar vein, a 2021 study explored a facial–machine interface system based on EEG artifacts to enhance the mobility of individuals with paraplegia using the Emotiv Neuroheadset. The results indicated that combining eye and jaw movements can be highly efficient, suggesting a practical hybrid BCI system for wheelchair control [15].

Another significant study in 2021 focused on developing an operative motor controller that can extract and read brain signals to convert them into usable commands to act upon the wheels of a wheelchair [16]. Also, a semi-autonomous navigation control system for an intelligent wheelchair was presented, based on asynchronous Steady State Visually Evoked Potential (SSVEP), demonstrating high stability and flexibility [17].

Finally, a work by Olesen et al. (2021) investigated the possibility of a hybrid BCI system combining EEG and EOG signals for the remote control of a vehicle, such as a wheelchair, using machine learning techniques to design a robust and computationally efficient system [18]. These studies represent a significant body of work in the development of BCI technologies to enhance the quality of life for individuals with severe physical disabilities.

Recent research on EEG-based BCIs has made significant strides, especially in applications for controlling robotic devices such as robotic arms and wheelchairs. The following is a structured summary of key developments in this field, reflecting the current state of the art and highlighting the principal focus areas, as well as significant challenges and achievements.

Advancements and Utilization of BCI Technologies: BCI systems aim to establish a link between the human brain and external devices. Previous studies have highlighted the potential of BCIs for controlling both virtual and physical objects, such as wheelchairs and quadcopters. However, using non-invasive BCIs to direct robotic arms in reach-and-grasp tasks remains a significant challenge [13,19].

BCIs for Individuals with Motor Impairments: People with severe neuromuscular conditions or motor system impairments often lose voluntary muscle control. Nevertheless, many of them can still generate motor-related neural signals similar to those of unimpaired individuals. This finding has motivated the exploration of BCIs as an emerging technology capable of decoding brain activity in real time, enabling anthropomorphic control of prosthetic or exoskeletal assistive devices [19].

Non-Invasive BCIs in Robotic Limb Control: Controlling robotic limbs through non-invasive BCIs is a compelling alternative, although achieving dexterous multidimensional manipulation in three-dimensional space remains difficult. While previous work has focused mainly on controlling virtual and physical objects, little research has addressed the manipulation of prosthetic or robotic limbs through scalp EEG-based BCIs [13].

Experimental Framework and Reach and Grasp Tasks: To address this gap, experiments were designed with progressively more challenging reach-and-grasp tasks. A cohort of healthy participants used non-invasive BCIs to control a robotic arm through these tasks, which were divided into two phases: first, guiding the cursor/robotic arm across a two-dimensional plane toward an object located in three-dimensional space, and second, lowering the robotic arm to grasp the object. This sequential approach reduced the number of degrees of freedom the BCI had to decode at any one time, thereby simplifying grasping in three-dimensional space [13].

User Proficiency and Control Retention: Participants learned to modulate their brain rhythms to control a robotic arm using the two-phase non-invasive control scheme. They mastered the use of the robotic arm to grasp and move randomly positioned objects within a confined three-dimensional space and maintained this level of control over multiple sessions spanning 2–3 months [20].

This summary reflects the evolution and current state of the art in the development of BCIs for controlling robotic devices, underscoring both the achievements and the outstanding challenges in this research area. Continuation in innovation and refinement of these technologies is essential to overcome current limitations and expand the practical applications of BCIs in assisting individuals with motor disabilities and enhancing human interaction with advanced technologies. Several key challenges for future research in the field of BCIs for controlling robotic limbs can be identified.

Improvement in Accuracy and Speed: Although significant advancements have been made, the accuracy and response speed of BCI systems in controlling robotic limbs can still be limited. Investigating methods to enhance the decoding speed of brain signals and the precision of control would be a valuable area of research.

Integration of Sensory Feedback: Most current BCI systems primarily focus on translating user intentions into commands for the robotic device. Incorporating sensory feedback, such as touch or pressure, into the BCI system could significantly improve natural interaction and control efficacy.

System Adaptability and Learning: Developing BCI systems that can adapt and learn from individual user interactions could enhance personalization and efficiency of control. This includes adapting to changes in the user’s brain signals over time or between sessions [13].

Reduction in User’s Mental Load: Controlling complex devices via BCIs can be mentally demanding for users. Investigating ways to reduce this mental load, possibly through hybrid or shared control systems, is crucial for the long-term viability of BCIs in practical applications.

Improvement in Non-Invasiveness and Comfort: Although non-invasive BCI systems have advanced, they can still be uncomfortable or impractical for prolonged use. Investigating new sensing technologies and algorithms that improve comfort and ease of use without compromising performance would be beneficial [20].

Applications in Rehabilitation and Assistance: Further exploring how BCI systems can be customized and optimized for rehabilitation and assistance applications, especially for individuals with severe motor disabilities, is an area of great potential.

Enhanced Brain–Robot Interface: Developing more intuitive and natural interfaces between the human brain and robotic devices, possibly through improved artificial intelligence and machine learning algorithms, could significantly enhance the usability and acceptance of BCI systems [20].

Ethics and Privacy: As BCI systems become more advanced, ethical and privacy concerns related to the access and use of brain data arise. Addressing these concerns and developing clear ethical guidelines will be crucial for the widespread adoption of BCI technology.

These challenges represent exciting and fundamental areas for future research, with the potential to significantly advance the field of brain–computer interfaces and their application in controlling robotic devices.

Based on this review of existing work, our proposed approach is designed to address several of the identified limitations and challenges, in particular cost, comfort, and adaptability. By combining low-cost commercial EEG headbands, an LSTM neural network running on an embedded development board, ROS as middleware, and microcontroller-based motor control, we aim to offer a more affordable, efficient, and user-centric solution. The modular design is intended not only to address immediate technical limitations but also to facilitate future improvements, providing a scalable and flexible solution that can adapt to changing user needs and new challenges in the field.

3. Research Methodology, Iterative Process of Defining the Architecture

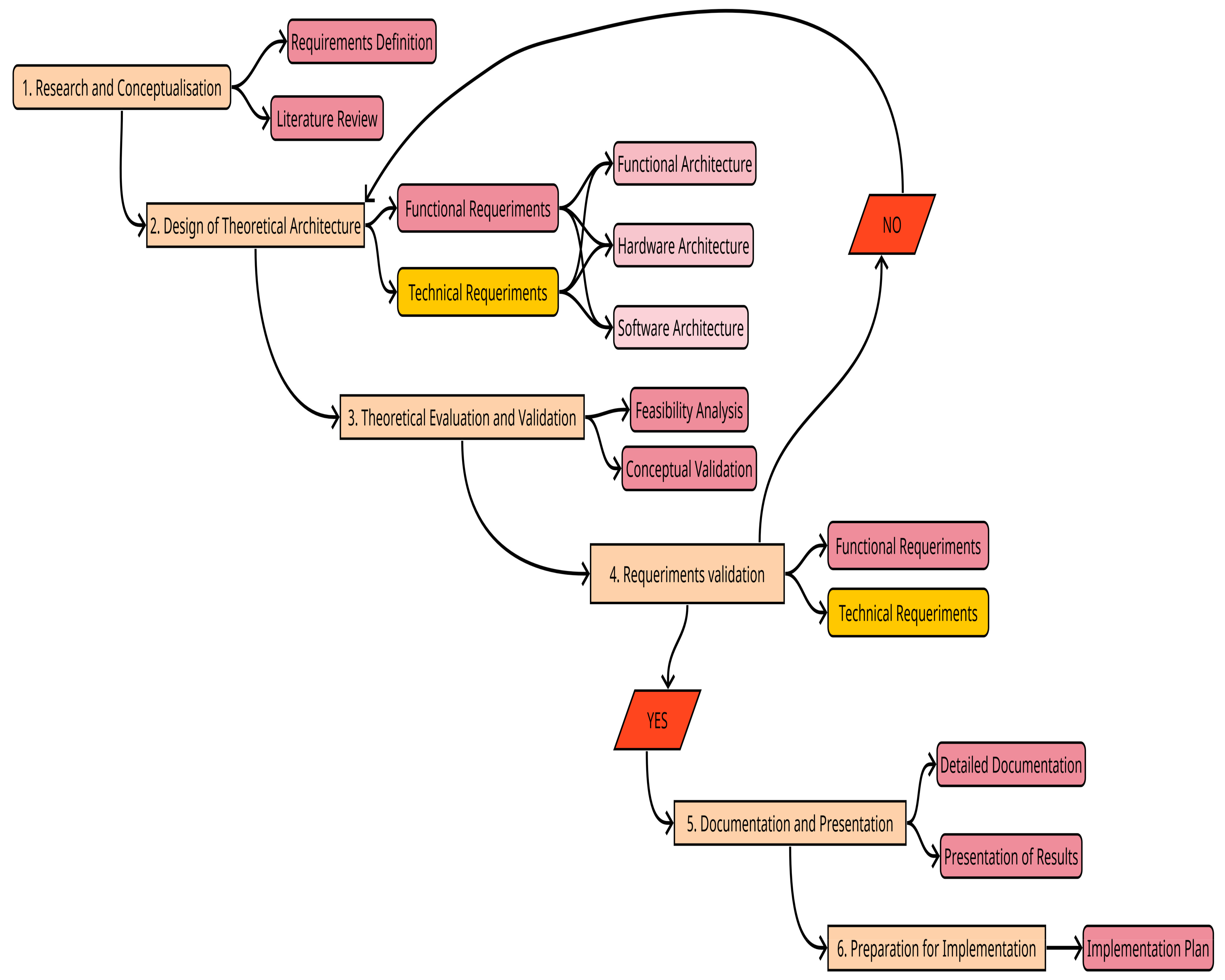

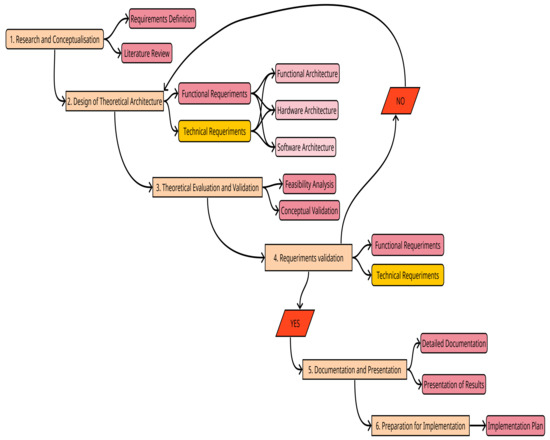

We begin by outlining the workflow followed to define the proposed architecture and obtain the results presented, as shown in Figure 1.

Figure 1.

Workflow.

The workflow begins with an extensive bibliographic review and analysis of existing studies to identify best practices and available technologies in BCIs, robotics, and assistive systems. Based on this review, the functional and technical requirements to be met are defined. This phase focuses on theory and technical possibilities.

Next, a theoretical model is designed describing how the functional components interact to fulfil the functional requirements. A theoretical hardware structure is then proposed, with components selected on the basis of theoretical and comparative analyses. Building on this hardware structure, a theoretical software architecture is designed, choosing frameworks, languages, and algorithms according to their theoretical suitability.

Once the architectures have been proposed, the theoretical models are evaluated to verify their feasibility. The feasibility analysis examines in detail the technical, economic, and operational feasibility of the proposed architecture. Finally, the functional and technical requirements defined in the design stage are validated.

This approach ensures a thorough and well-founded development of the architecture before any physical implementation, minimizing risks and ensuring that the design is suitable for the end user’s needs.

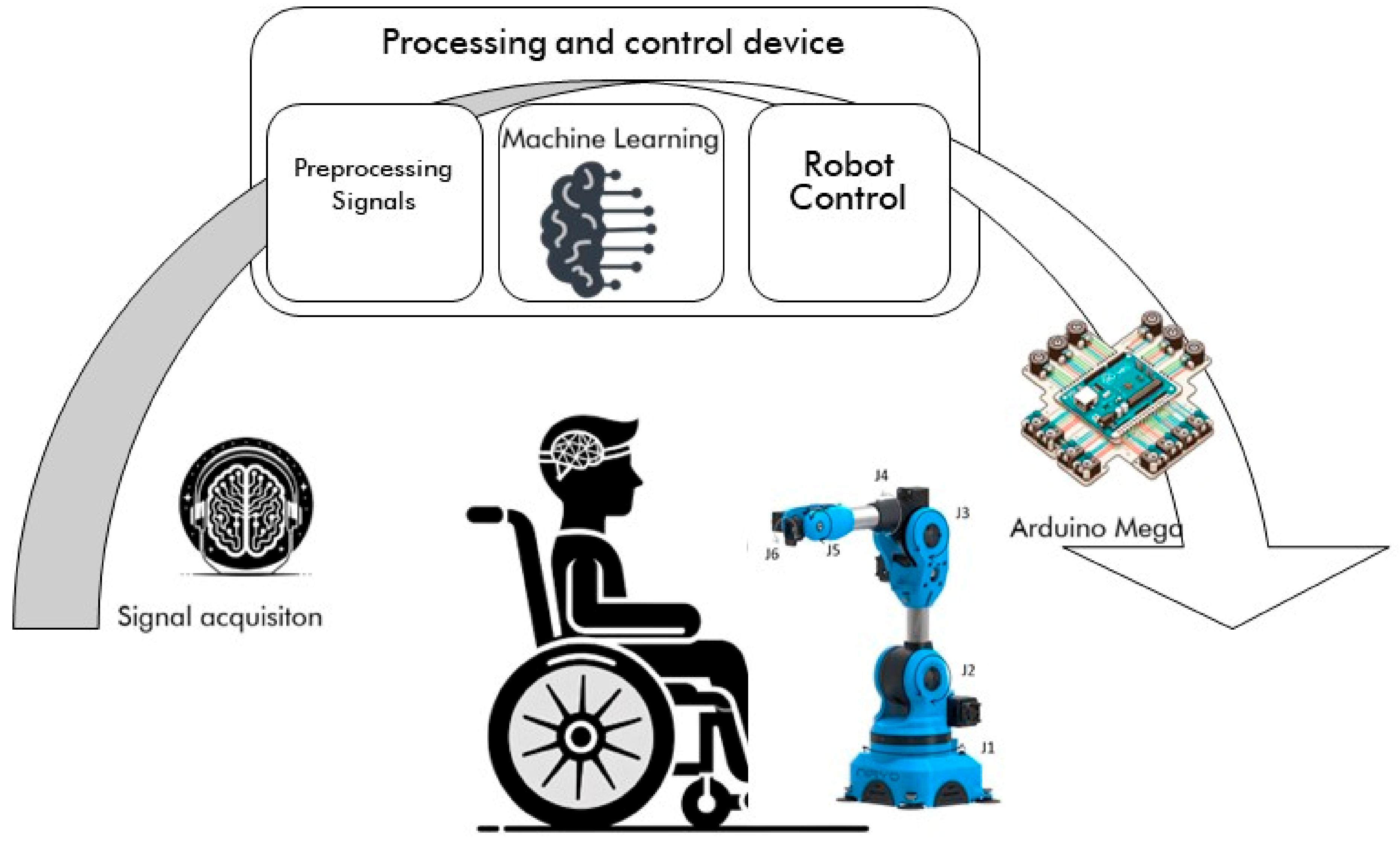

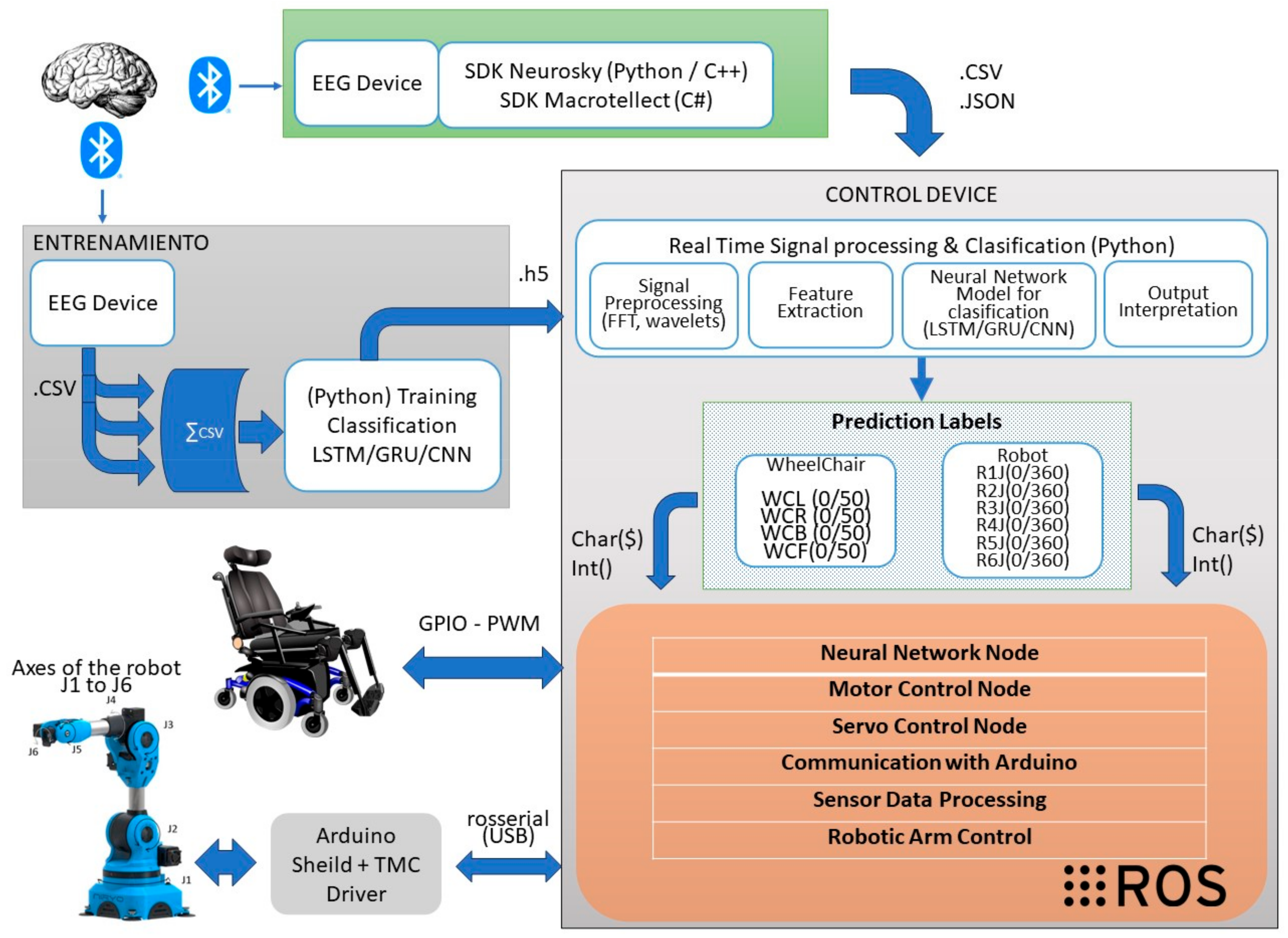

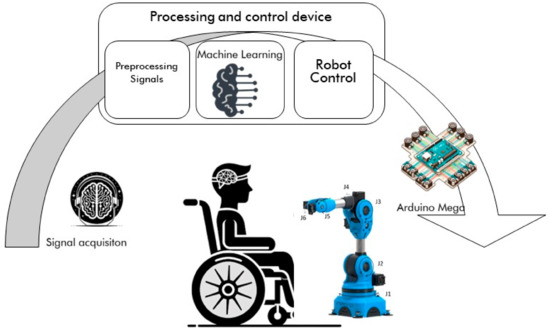

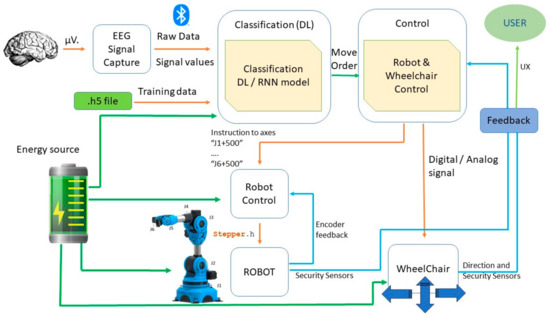

A conceptual diagram of the basic architecture is shown in Figure 2. The system combines EEG-based capture of brain data from a specific area of the brain with the control of a collaborative robot (cobot), driven by six stepper motors, so that it reaches a given point in space, together with a motorized wheelchair.

Figure 2.

Conceptual diagram of the basic architecture for control of a wheelchair and a cobot.

For this development, the functional requirements of the system and the technical requirements have been identified separately to ensure, firstly, the suitability of the architecture and, secondly, the feasibility of the system and its value proposition.

3.1. Functional Requirements

Table 1 below sets out the functional requirements identified for the research and the necessary architecture, which are reflected in the functional, hardware, and software architecture sections of this paper (Figure 3, Figure 4 and Figure 13).

Table 1.

Functional requirements tables.

Figure 3.

Functional architecture.

Figure 4.

Hardware architecture.

Further information on each of the functional requirements identified in Table 1 can be found in Appendix A.

The functional requirements can be grouped into typologies according to aspects such as the main functionality of the system, usability, adaptability, safety, and portability. We propose the following grouping into five typologies, each containing at least two requirements:

- Functionality and Integration: EEG signal capture; real-time interpretation of EEG signals; system integration with commercial chairs.

- Control and Operability: Intuitive control of the robotic arm and wheelchair; control of wheelchair movements.

- Adaptability and Usability: Adaptability to different users; preset routines in robot and robot + chair actions.

- Safety and Stability: Safety and stability in motion; EEG/ECG signal quality analysis.

- Portability and Compatibility: Portability and low weight (<100 g); commercial wireless headsets.

Each group covers a set of key aspects of the system, from how it interacts with the user and the environment to how safety and usability are ensured, and provides a clear structure for organizing and addressing the project’s functional requirements. These requirement groups are also used to compare the architecture with previous works.

3.2. Technological Requirements

In Table 2, we can find the identified technical requirements necessary for the development of the proposed architecture.

Table 2.

Technical requirements table.

Further information on each of the technical requirements identified in Table 2 can be found in Appendix A.

The technical requirements can likewise be grouped by aspects such as integration and compatibility, system efficiency and performance, usability and accessibility, autonomy and economic sustainability, and regulatory compliance. We propose the following grouping into five typologies:

- Integration and Compatibility: Effective integration of BCI with chair + robot; integration with commercial motorized wheelchairs; multi-platform headset support.

- Efficiency and Performance: Efficient EEG data processing (t ≤ 1 s); real-time interpretation of EEG signals; robust control system for robotic arm and wheelchair.

- Usability and Accessibility: Accessible and user-friendly interface; user feedback system.

- Autonomy and Economic Sustainability: Autonomy of use (≥3 h); low integration cost (<EUR 1500).

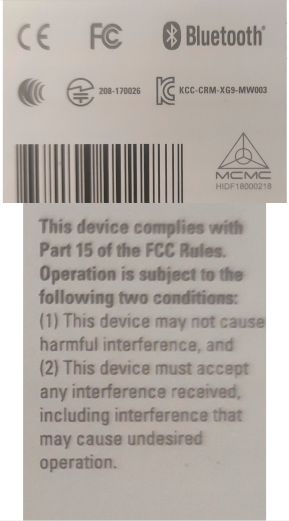

- Regulatory Compliance: Compliance with applicable regulations.

Each of these groups addresses different technical aspects that are fundamental to the development and effective implementation of the system.

These tables and the detailed information for each requirement will serve as a reference for determining the feasibility of the proposed architecture.

4. Architecture

This section presents the proposed architecture for achieving the research objectives and meeting the requirements presented in Section 3.1 and Section 3.2.

The architecture is described by levels of abstraction, from functional levels through hardware elements to software components.

4.1. Functional Architecture

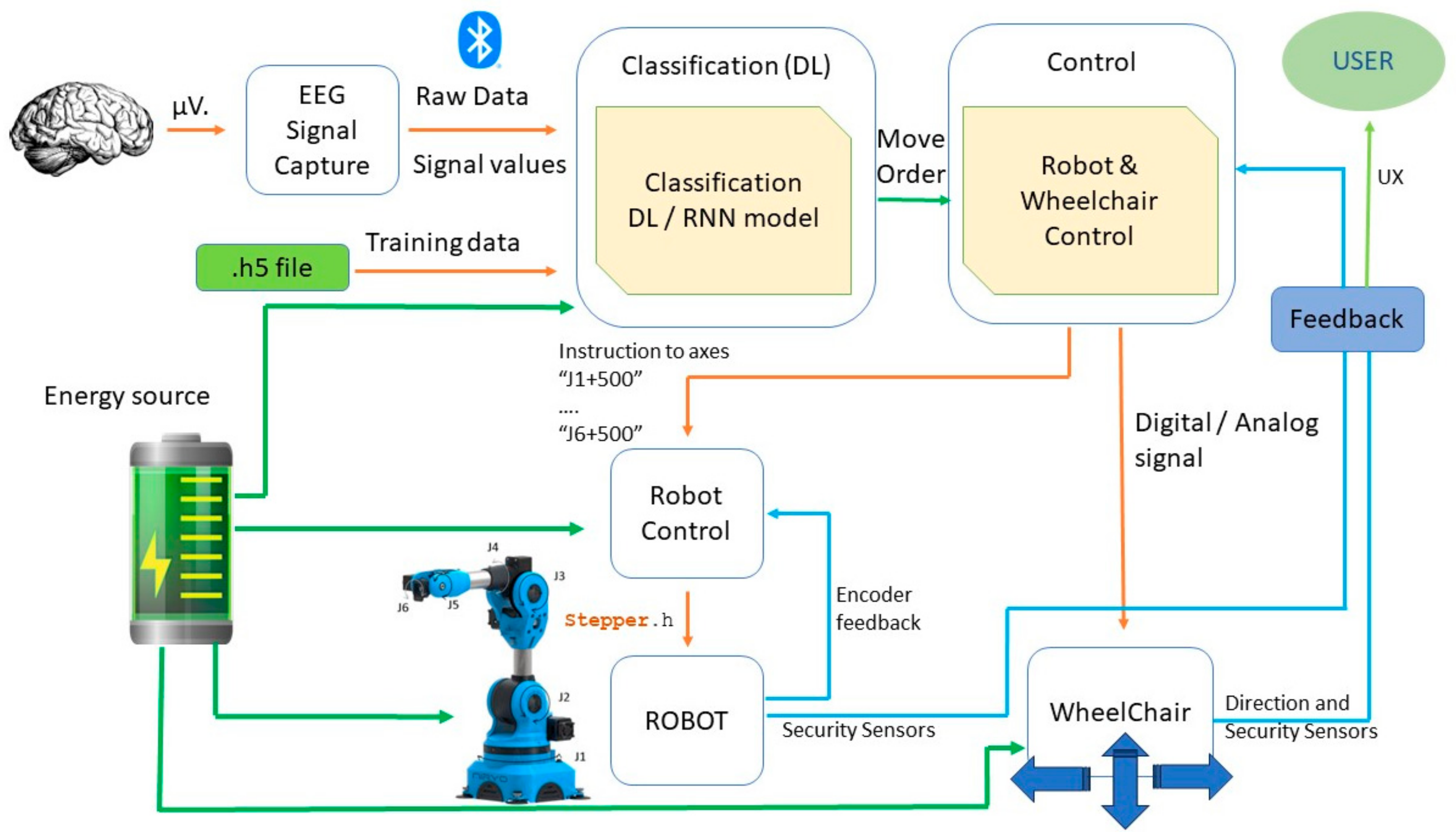

Figure 3 illustrates the functional architecture developed for the BCI system to control a robot and a motorized wheelchair. The process begins with the capture of EEG signals from the brain, measured in microvolts (µV) and segmented by frequency ranges: Delta, Theta, Alpha, Beta, and Gamma. These raw signals, filtered by the TGAM chip, are then processed, and their values are used as training data in an initial stage and subsequently in real time. The classifier used for this purpose is an LSTM network. Like other deep learning models, LSTM networks must be trained before they can be used to make predictions or classifications; this training allows the network to learn patterns and relationships in the training data.
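As an illustration of how the recorded band values could be turned into LSTM training data, the following minimal Python sketch builds fixed-length sequences from a stream of the eight band values, one row per headset reading. It is not the authors’ pipeline: the `log1p` scaling, the per-band standardization, the window length, and the function name `make_windows` are assumptions chosen for illustration.

```python
import numpy as np


def make_windows(bands: np.ndarray, labels: np.ndarray, window: int):
    """Build (N, window, 8) LSTM inputs from a (T, 8) stream of band values.

    Each window is labelled with the label of its last time step.
    """
    if bands.ndim != 2 or bands.shape[1] != 8:
        raise ValueError("expected an array of shape (T, 8)")
    # Band values span several orders of magnitude; log1p compresses the range.
    x = np.log1p(bands.astype(np.float64))
    # Standardize each band (statistics computed on this stream only).
    mean, std = x.mean(axis=0), x.std(axis=0)
    x = (x - mean) / np.where(std > 0, std, 1.0)
    n = len(x) - window + 1
    if n <= 0:
        raise ValueError("stream shorter than one window")
    xs = np.stack([x[i:i + window] for i in range(n)])
    ys = labels[window - 1:]
    return xs, ys
```

At run time, the mean and standard deviation computed on the training data would normally be stored and reused rather than recomputed on the live stream.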

Precise hyperparameter tuning is crucial as it can drastically impact LSTM convergence, overfitting, and generalization. Factors like the number of layers, nodes per layer, activations, dropouts, batch size, and optimizers each play a key role. These hyperparameters can be manually adjusted, although an automatic method such as random search is recommended.
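A random search over the hyperparameters listed above can be sketched in a few lines of Python. The search-space values, the `evaluate` callback (which would train an LSTM with a given configuration and return a validation score), and the function names are hypothetical; the code only shows the search loop itself.

```python
import random
from typing import Callable, Dict, List, Tuple

# Hypothetical search space covering the hyperparameters named in the text.
SEARCH_SPACE: Dict[str, List] = {
    "layers": [1, 2, 3],
    "units": [16, 32, 64, 128],
    "activation": ["tanh", "relu"],
    "dropout": [0.0, 0.2, 0.4],
    "batch_size": [16, 32, 64],
    "optimizer": ["adam", "rmsprop", "sgd"],
}


def random_search(evaluate: Callable[[Dict], float], n_trials: int,
                  seed: int = 0) -> Tuple[Dict, float]:
    """Sample n_trials configurations uniformly at random and keep the best.

    `evaluate` should train a model with the given configuration and return
    a validation score where higher is better (e.g. validation accuracy).
    """
    rng = random.Random(seed)
    best_cfg, best_score = None, float("-inf")
    for _ in range(n_trials):
        cfg = {name: rng.choice(values) for name, values in SEARCH_SPACE.items()}
        score = evaluate(cfg)
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score
```

Random search is used here simply because the text recommends it; a grid search or Bayesian optimizer could replace the sampling line without changing the loop.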

Once the LSTM network is trained, the optimized weights and parameters of the model are saved in a file with the .h5 (HDF5) extension. This file contains the knowledge captured by the LSTM network during training.

To use the LSTM network to classify new data, the .h5 file with the trained weights must be loaded. Otherwise, the network would run with its initial, untrained weights and would produce essentially random, meaningless classification results.

The next stage is classification, performed by a deep learning (DL) model, in this case an RNN. The model interprets the EEG signals and issues a movement order, which is transmitted to the control system. An order can be as specific as “M1 + 500”, which encodes a particular action of the robot or wheelchair; the set of possible orders depends on the number of degrees of freedom of the system (M1 and M2 for the wheelchair motors and J1 to J6 for the robot axes). In this case, a total of eight degrees of freedom are considered: six from the robot and two from the motorized wheelchair, since combining the rotation of the right and left motors provides complete mobility of the wheelchair according to the user’s commands.
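The order format can be illustrated with a small parser. This Python sketch assumes, beyond what the text states, that every order has the form identifier, sign, integer magnitude (as in “M1 + 500”) and that the magnitude is a device-specific increment; the names `MoveOrder`, `parse_order`, and `VALID_IDS` are hypothetical.

```python
import re
from dataclasses import dataclass

# Actuator identifiers from the text: M1/M2 wheelchair motors, J1..J6 robot axes.
VALID_IDS = {"M1", "M2"} | {f"J{k}" for k in range(1, 7)}
# Assumed grammar: identifier, a + or - sign, then an integer magnitude.
_ORDER_RE = re.compile(r"^\s*([MJ]\d)\s*([+-])\s*(\d+)\s*$")


@dataclass(frozen=True)
class MoveOrder:
    actuator: str  # e.g. "M1" or "J3"
    delta: int     # signed increment; its unit (steps, ticks) is device-specific


def parse_order(text: str) -> MoveOrder:
    """Parse an order such as "M1 + 500" into a MoveOrder."""
    match = _ORDER_RE.match(text)
    if match is None:
        raise ValueError(f"malformed order: {text!r}")
    actuator, sign, magnitude = match.groups()
    if actuator not in VALID_IDS:
        raise ValueError(f"unknown actuator: {actuator}")
    value = int(magnitude)
    return MoveOrder(actuator, value if sign == "+" else -value)
```

For example, `parse_order("M1 + 500")` yields `MoveOrder("M1", 500)`, and an identifier outside the eight degrees of freedom, such as `J7`, is rejected.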

As shown in Figure 3, the RNN model receives the raw values of these frequency bands as inputs and generates the movement orders. Different DL neural networks could be used, but Long Short-Term Memory (LSTM) networks are recommended because of their proven efficiency in signal classification tasks. The architecture shows that this RNN has been trained beforehand (with the trained weights stored in the .h5 file) and is used here for real-time decision making.
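To make the role of the trained weights concrete, the following NumPy sketch shows the forward pass of a single LSTM layer followed by a softmax output layer over a sequence of band-value vectors. The weights are passed in as arrays; in the real system they would come from the trained .h5 file, whereas random arrays would give meaningless probabilities. The gate layout, layer sizes, and number of output classes are assumptions, and the layout is not guaranteed to match how a particular framework stores its weights.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def lstm_classify(seq, W, U, b, W_out, b_out):
    """Run one LSTM layer over seq (T, D) and return class probabilities.

    W: (D, 4H), U: (H, 4H), b: (4H,) with gates stacked as
    [input, forget, candidate, output]; W_out: (H, C), b_out: (C,).
    """
    H = U.shape[0]
    h = np.zeros(H)  # hidden state
    c = np.zeros(H)  # cell state
    for x_t in seq:
        z = x_t @ W + h @ U + b
        i = sigmoid(z[:H])             # input gate
        f = sigmoid(z[H:2 * H])        # forget gate
        g = np.tanh(z[2 * H:3 * H])    # candidate cell state
        o = sigmoid(z[3 * H:])         # output gate
        c = f * c + i * g
        h = o * np.tanh(c)
    logits = h @ W_out + b_out
    e = np.exp(logits - logits.max())  # numerically stable softmax
    return e / e.sum()
```

The index of the largest probability would then be mapped to a movement order; that mapping is application-specific and not shown.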

The movement orders are received by the control module, which communicates with the wheelchair and with the robot control module. This component translates the abstract commands sent by the classification system into the low-level commands or control signals of the devices. In addition, it supervises all the actions carried out by the system.

The robot control module, in turn, manages the low-level control of the robotic arm. It generates the speed references for the motors to control the position of each joint and receives the encoder signal of each joint as position feedback. In Figure 3, this module is represented by a file with the .h extension, that is, a C/C++ header file. In addition, the energy source supplies the required power to all the components in the architecture.
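The position loop described above, in which the module turns the difference between the target position and the encoder reading into a speed reference, can be sketched as a saturated proportional controller. This is an assumed control law written in Python for readability (the text does not specify the controller, and on the real system this logic would run on the microcontroller); the gain, speed limit, deadband, and step units are placeholder values.

```python
def speed_reference(target_steps: int, encoder_steps: int,
                    kp: float = 2.0, max_speed: float = 800.0,
                    deadband: int = 2) -> float:
    """Proportional speed reference (steps/s) for one joint.

    Returns 0 inside a small deadband around the target to avoid hunting,
    and saturates the command to the driver's assumed safe speed range.
    """
    error = target_steps - encoder_steps
    if abs(error) <= deadband:
        return 0.0
    command = kp * error
    return max(-max_speed, min(max_speed, command))
```

Called once per control cycle for each of the six joints, this yields a speed that shrinks as the encoder approaches the target; integral or feed-forward terms could be added if steady-state error or tracking lag proved significant.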

Finally, the system provides feedback to the user, indicating, for example, whether an action has been executed or whether there is an error or state that the user needs to be aware of. This feedback loop is essential for the interactivity and real-time error correction of the BCI system.

4.2. Hardware Architecture

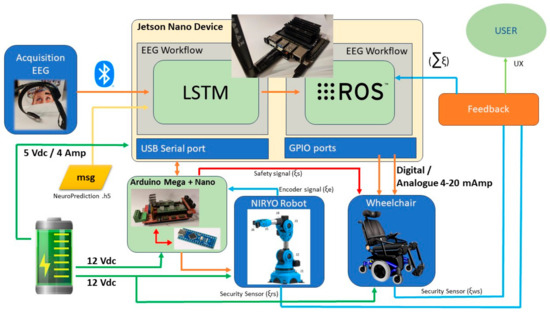

Figure 4 shows the elements that make up the proposed architecture, including a photograph of each hardware device and its main functional characteristics.

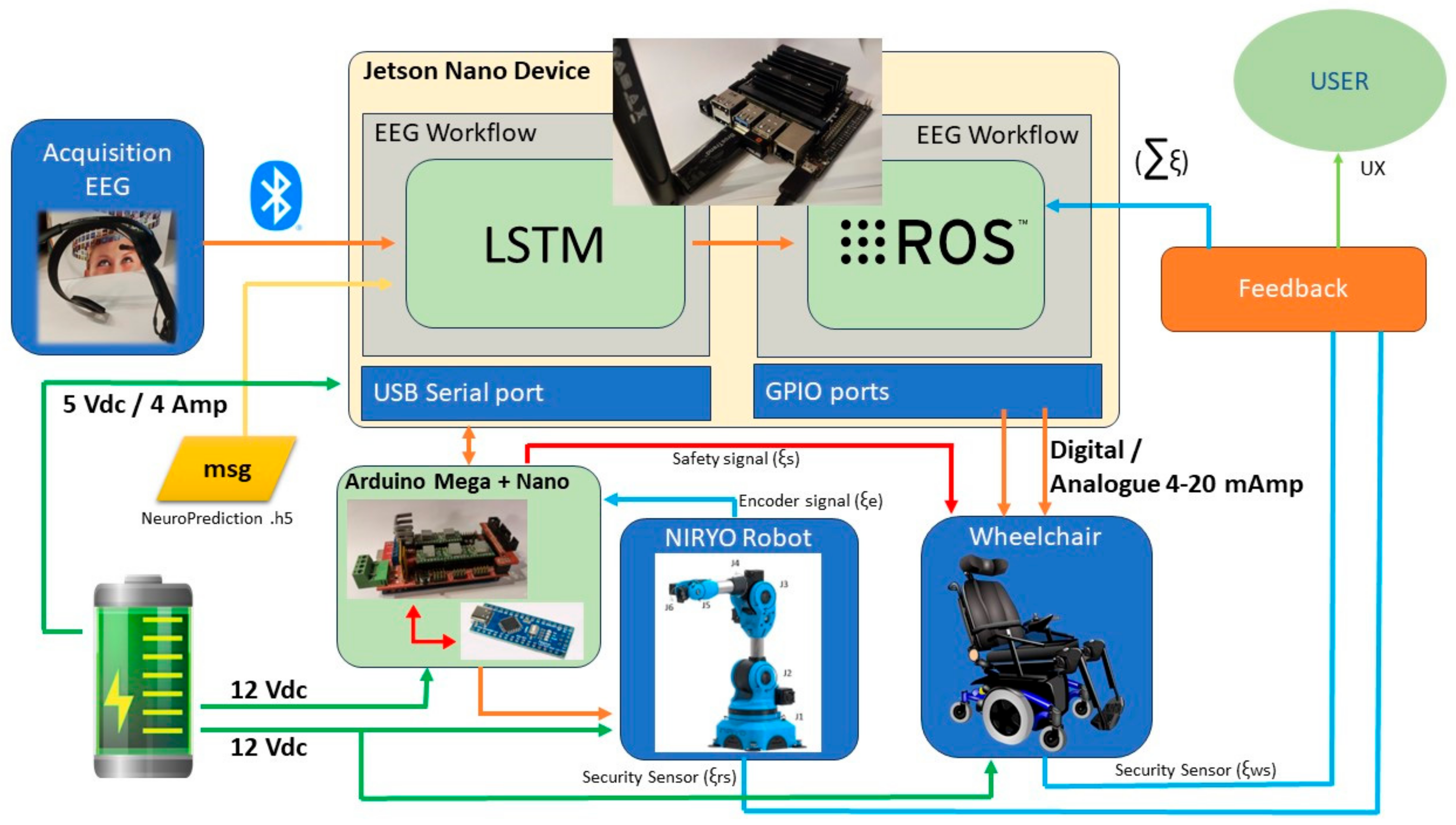

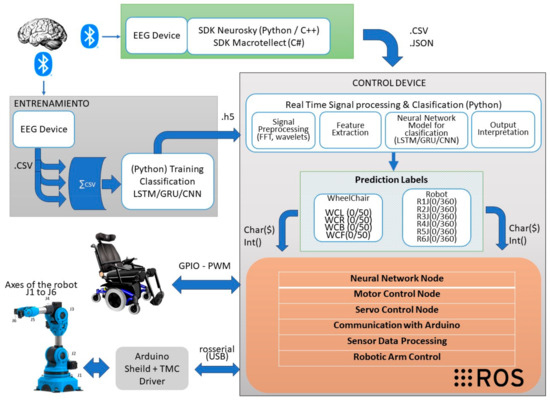

Figure 4 shows the hardware architecture of the BCI system, a network of components designed to control robotic devices and motorized wheelchairs. At the start of the acquisition process, the user’s brainwaves are captured by the Neurosky and/or Brainlink headsets. These signals are then transmitted to the Jetson Nano, a compact embedded board capable of performing computationally intensive tasks. Transmission takes place via Bluetooth, which provides wireless communication for the user’s convenience and mobility.

The Jetson Nano processes the EEG signals with an LSTM neural network, a type of recurrent neural network recommended for its ability to handle time-series data. The LSTM processes the incoming EEG data and generates movement commands based on its analysis. ROS acts as middleware, enabling communication between the various hardware and software components. All of this runs on the Jetson Nano, which centralizes the analysis, communication, and management of the orders associated with the EEG signal values.

The signals resulting from this process are routed to different ports depending on the function to be performed. The USB serial port provides direct communication with the Arduino modules responsible for controlling the robot and the system’s safety chain, while the GPIO ports are used for direct control of the motorized wheelchair’s movements. The safety signals (ξs) and encoder signals (ξe) are crucial to the operation of the robot: the safety signal acts as a redundant emergency or caution interrupt, and the encoder signal provides the positions of the robot’s joints, allowing the actual position of each axis to be controlled.
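The port selection described above can be sketched as a small dispatch function. The text message format and the channel names are hypothetical; only the split (robot joints over USB serial to the Arduino boards, wheelchair motors through the GPIO ports) comes from the architecture.

```python
from typing import Tuple

# Actuator groups as named in the functional architecture.
WHEELCHAIR_MOTORS = {"M1", "M2"}
ROBOT_JOINTS = {f"J{k}" for k in range(1, 7)}


def route(actuator: str, delta: int) -> Tuple[str, str]:
    """Return (channel, message) for one movement order.

    Robot joint orders go over USB serial to the Arduino boards; wheelchair
    orders go to the GPIO side. The message format is hypothetical.
    """
    message = f"{actuator}{delta:+d}"
    if actuator in ROBOT_JOINTS:
        return "usb_serial", message + "\n"  # newline-terminated serial line
    if actuator in WHEELCHAIR_MOTORS:
        return "gpio", message
    raise ValueError(f"unknown actuator: {actuator}")
```

Keeping the routing in one function makes it easy to add further actuators without touching the classification stage.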

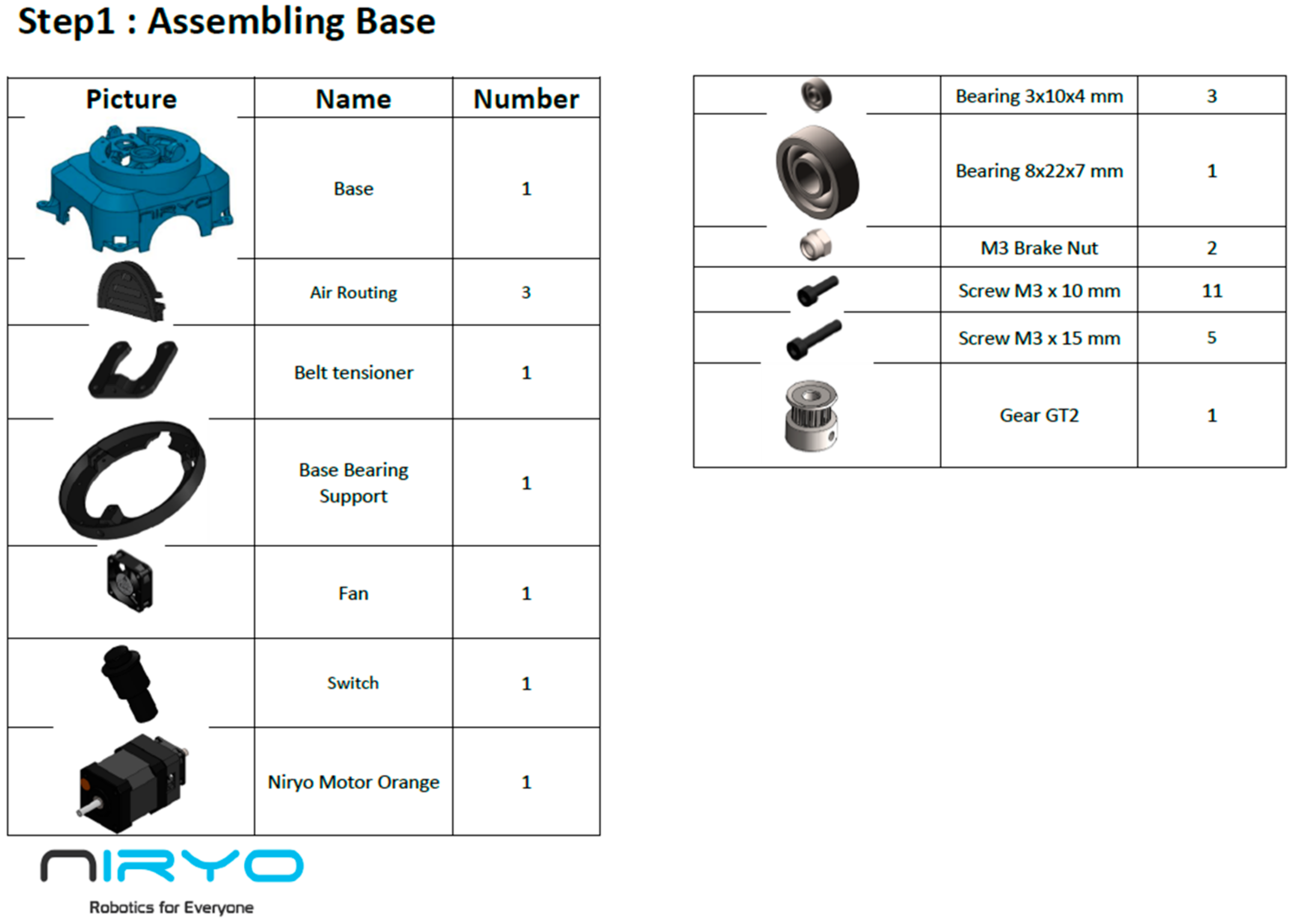

The Arduino Mega + Nano configuration is the microcontroller platform that receives commands from ROS running on the Jetson Nano, processes them, and translates them into physical movements of the NIRYO robot, which is shown with a blue outline to indicate its role as the central element of the system. The Arduino setup also handles encoder feedback and issues safety signals as needed.

The wheelchair is operated through a digital/analog signal line (4–20 mA), a standard that is common in industrial control systems. Power is supplied by a 12 Vdc source, with a secondary 5 Vdc/4 A supply line, reflecting the different voltage levels required by the various components.
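In a 4–20 mA current loop, a signal range is mapped linearly onto the current, with 4 mA representing the lower end of the range (a “live zero”, so that an open circuit reading near 0 mA can be detected as a fault). The Python sketch below assumes, since the paper does not specify it, that the wheelchair command is a normalized value in [-1, 1]; the 3.6 mA fault threshold is likewise an illustrative assumption.

```python
def to_current_ma(x: float, x_min: float = -1.0, x_max: float = 1.0) -> float:
    """Map a command in [x_min, x_max] linearly onto a 4-20 mA loop current."""
    if x_max <= x_min:
        raise ValueError("x_max must be greater than x_min")
    x = max(x_min, min(x_max, x))  # clamp out-of-range commands
    return 4.0 + 16.0 * (x - x_min) / (x_max - x_min)


def from_current_ma(i_ma: float, x_min: float = -1.0, x_max: float = 1.0,
                    fault_below_ma: float = 3.6) -> float:
    """Inverse mapping; a current under fault_below_ma is treated as a loop fault."""
    if i_ma < fault_below_ma:
        raise ValueError("loop current too low: possible open circuit")
    i_ma = min(max(i_ma, 4.0), 20.0)  # clamp small deviations to the valid range
    return x_min + (i_ma - 4.0) / 16.0 * (x_max - x_min)
```

With these defaults, a stop command (0) corresponds to 12 mA, full reverse to 4 mA, and full forward to 20 mA.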

To comply with safety standards, a dual redundant system based on Arduino technology has been incorporated, combining an Arduino Mega and an Arduino Nano, both connected to the Jetson Nano. These Arduino boards are in turn connected to the safety chain of the electric wheelchair. With this configuration, if either device malfunctions or an anomaly occurs in the safety chain, the entire system halts, activating the safety mechanisms of the motorized wheelchair. This setup provides continuous monitoring of the system’s operation and is intended to prevent safety hazards during use. The redundancy reinforces the reliability of the setup and supports the goal of a design that is both safe and functional.
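The halt-on-any-fault behaviour of the redundant configuration can be sketched in software as a two-channel interlock: motion is allowed only while both boards have recently reported a closed safety chain, and any fault latches a stop. This Python sketch is illustrative only; the class name, channel names, heartbeat timeout, and reset policy are assumptions, and in practice such a safety function would also be enforced in hardware by the wheelchair’s safety chain rather than by application code alone.

```python
import time
from typing import Callable, Dict, Optional


class DualChannelInterlock:
    """Allow motion only while both boards report a closed safety chain
    within the heartbeat timeout. Any fault latches a stop that persists
    until reset() succeeds; the interlock starts in the stopped state."""

    CHANNELS = ("mega", "nano")

    def __init__(self, timeout_s: float = 0.2,
                 clock: Callable[[], float] = time.monotonic):
        self.timeout_s = timeout_s
        self.clock = clock
        self.last_seen: Dict[str, Optional[float]] = {c: None for c in self.CHANNELS}
        self.chain_ok: Dict[str, bool] = {c: False for c in self.CHANNELS}
        self.latched_stop = True

    def heartbeat(self, channel: str, chain_closed: bool) -> None:
        """Record a status message from one board."""
        if channel not in self.last_seen:
            raise ValueError(f"unknown channel: {channel}")
        self.last_seen[channel] = self.clock()
        self.chain_ok[channel] = chain_closed
        if not chain_closed:
            self.latched_stop = True

    def _healthy(self) -> bool:
        now = self.clock()
        return all(seen is not None and now - seen <= self.timeout_s
                   and self.chain_ok[c]
                   for c, seen in self.last_seen.items())

    def motion_allowed(self) -> bool:
        if not self._healthy():
            self.latched_stop = True  # a silent board or open chain stops everything
        return not self.latched_stop

    def reset(self) -> bool:
        """Clear the latch only if both channels are currently healthy."""
        if self._healthy():
            self.latched_stop = False
        return not self.latched_stop
```

Latching the stop means that a transient fault cannot silently resume motion; an explicit reset is required once both boards are healthy again.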

4.2.1. EEG Data Acquisition

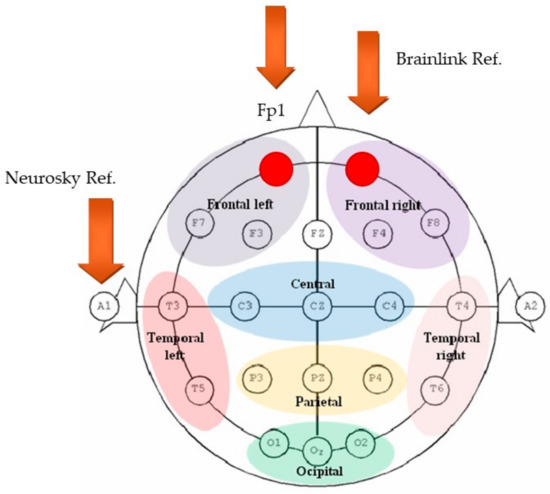

In our research, EEG data are captured using low-cost devices such as the Neurosky and Brainlink commercial headbands, which represents a significant advance in the accessibility, application, and democratization of EEG technology in BCIs. Unlike traditional EEG systems, these headbands offer a practical and non-invasive way to capture brain signals, albeit with limited resolution and spatial precision, since they only collect signals from a specific area of the user’s brain (Figure 5). On the other hand, their ease of use favours user comfort and portability, eliminating the need to wear bulky devices or caps with multiple electrodes.

Figure 5.

Brainlink headband from Macrotellect (left). Brainwave headband from Neurosky (right).

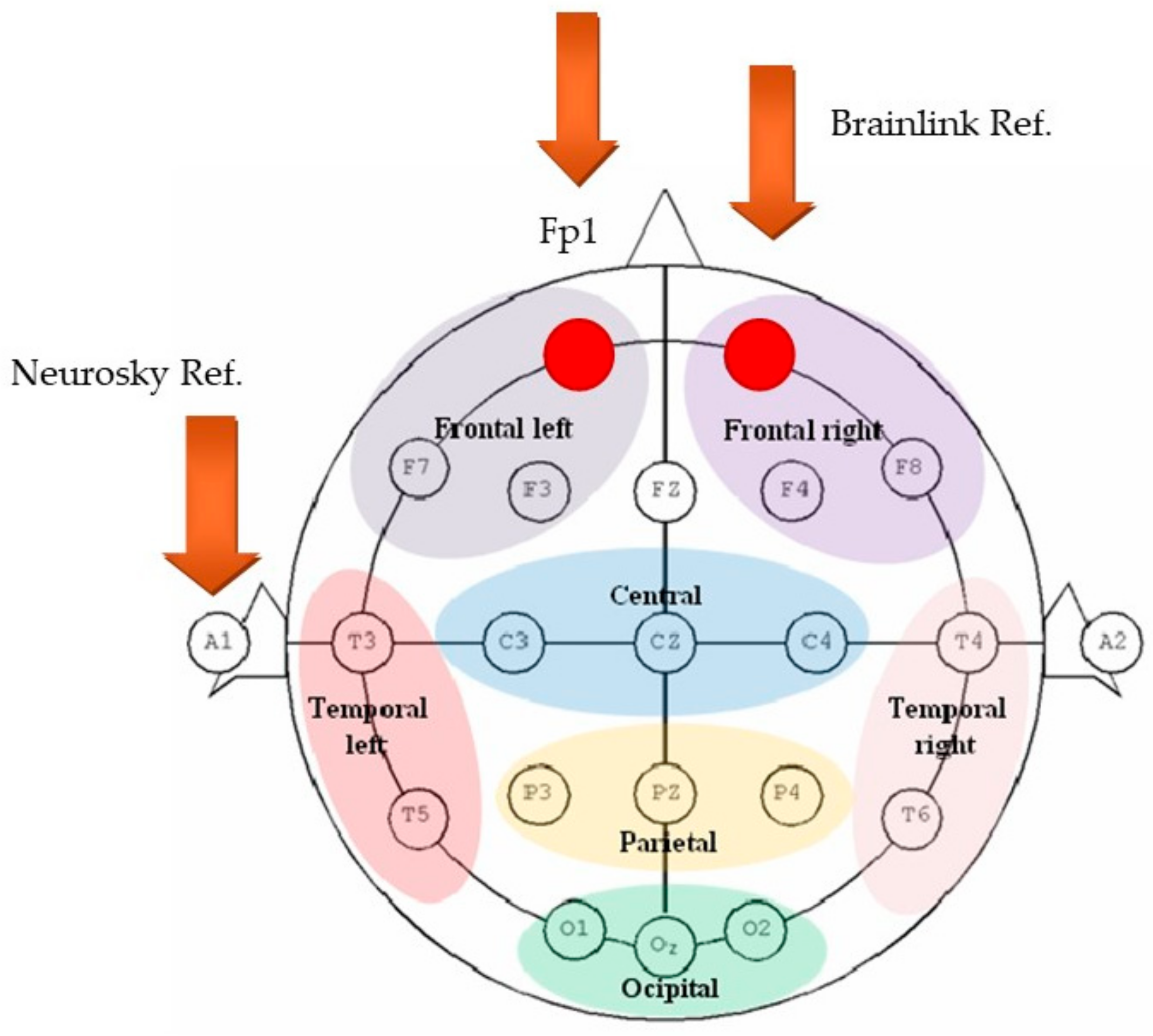

These devices capture a wide range of brain signals from the prefrontal area of the user’s scalp, specifically from position Fp1, as shown in Figure 6, obtaining Delta (1–3 Hz), Theta (4–7 Hz), Alpha (8–13 Hz, subdivided into high and low), Beta (14–30 Hz, subdivided into high and low), and Gamma (31–100 Hz, subdivided into high and low) waves [14].

Figure 6.

Electrode position (researchgate source).
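The headsets deliver these band values already computed by the TGAM chip, whose exact method is not described here. To illustrate the general idea of band power using the frequency ranges listed above, the following NumPy sketch sums the squared FFT magnitudes of a raw signal within each band. The absence of windowing or averaging (a Welch estimate would usually be preferred), the merging of the low/high sub-bands, and the 512 Hz sampling rate used in the usage note are simplifying assumptions.

```python
import numpy as np

# Band limits (Hz) as listed in the text; sub-bands are merged here.
BANDS = {"delta": (1, 3), "theta": (4, 7), "alpha": (8, 13),
         "beta": (14, 30), "gamma": (31, 100)}


def band_powers(signal: np.ndarray, fs: float) -> dict:
    """Sum of squared FFT magnitudes within each band (inclusive limits)."""
    x = signal - signal.mean()  # remove the DC offset
    spectrum = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    return {name: float(spectrum[(freqs >= lo) & (freqs <= hi)].sum())
            for name, (lo, hi) in BANDS.items()}
```

For instance, two seconds of a pure 10 Hz sine sampled at 512 Hz concentrates essentially all of its power in the Alpha band.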

These devices include a chip called TGAM, integrated into the headbands, which performs essential preprocessing of the data, filtering noise during the extraction of these features [15]. In addition to the brain signals, they provide attention and meditation indices calculated from the processed EEG signals. The voltage amplitudes of these signals vary between 20 and 200 μV [16]; the voltage ranges of the EEG signals are presented in Table 3.
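To show how these preprocessed values can reach the host, the following Python sketch parses the ThinkGear serial stream, based on our reading of NeuroSky’s published ThinkGear serial protocol: packets start with two 0xAA sync bytes, followed by a payload length, the payload, and a checksum equal to the inverted low byte of the payload sum; code 0x02 carries signal quality, 0x04 attention, 0x05 meditation, 0x80 a raw sample, and 0x83 the eight band values. These codes should be verified against NeuroSky’s documentation, and it is assumed, not confirmed by this paper, that the Brainlink headset emits the same format.

```python
from typing import Dict, List

SYNC = 0xAA
EXCODE = 0x55
MAX_PAYLOAD = 169
# Order of the eight 3-byte values in the band-power row (code 0x83).
BAND_NAMES = ["delta", "theta", "low_alpha", "high_alpha",
              "low_beta", "high_beta", "low_gamma", "mid_gamma"]


def parse_payload(payload: bytes) -> Dict[str, object]:
    """Decode the data rows of one packet payload into a dictionary."""
    out: Dict[str, object] = {}
    i = 0
    while i < len(payload):
        while i < len(payload) and payload[i] == EXCODE:
            i += 1  # extended-code levels are ignored in this sketch
        if i >= len(payload):
            break
        code = payload[i]
        i += 1
        if i >= len(payload):
            break
        if code < 0x80:  # single-byte value rows
            value = payload[i]
            i += 1
            if code == 0x02:
                out["poor_signal"] = value
            elif code == 0x04:
                out["attention"] = value
            elif code == 0x05:
                out["meditation"] = value
        else:  # multi-byte rows carry a length byte
            length = payload[i]
            data = payload[i + 1:i + 1 + length]
            i += 1 + length
            if code == 0x80 and len(data) == 2:
                out["raw"] = int.from_bytes(data, "big", signed=True)
            elif code == 0x83 and len(data) == 24:
                out["bands"] = {name: int.from_bytes(data[k * 3:k * 3 + 3], "big")
                                for k, name in enumerate(BAND_NAMES)}
    return out


def parse_stream(buf: bytes) -> List[Dict[str, object]]:
    """Extract every packet with a valid checksum from a byte buffer."""
    packets = []
    i = 0
    while i + 3 <= len(buf):
        if buf[i] != SYNC or buf[i + 1] != SYNC:
            i += 1
            continue
        plength = buf[i + 2]
        if plength == SYNC or plength > MAX_PAYLOAD:
            i += 1  # not a valid header at this offset; resynchronize
            continue
        end = i + 3 + plength  # index of the checksum byte
        if end >= len(buf):
            break  # incomplete packet; wait for more bytes
        payload = buf[i + 3:end]
        if (~sum(payload)) & 0xFF == buf[end]:
            packets.append(parse_payload(payload))
            i = end + 1
        else:
            i += 1
    return packets
```

In a live setup, bytes read from the Bluetooth serial link would be appended to a buffer and passed to `parse_stream`, keeping any trailing incomplete packet for the next read.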

Table 3.

Frequencies and voltages of captured brain signals.

A crucial technical aspect of these headbands is their signal capture method. The Neurosky headband, for instance, uses a dry electrode on the forehead and a reference point on the left earlobe to establish the 0 V baseline. In contrast, the Brainlink headband uses a reference point on the user’s forehead. This simplified capture approach limits spatial resolution, but it makes the headbands well suited to BCI applications in which traditional medical EEG systems would be too complex and costly.

The operation of the Neurosky headset and the preprocessing of EEG signals involve several stages. First, the raw EEG data undergo common referencing and baseline correction [21]. Subsequently, artifact detection algorithms can be applied to identify and remove unwanted signals caused, for example, by facial gestures or muscle movements [22]. In addition, relevant features are extracted from the preprocessed signals for further analysis [23]. These processes are essential to ensure the quality of EEG data and their usefulness in brain–computer interface applications and other research areas.
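Two of the preprocessing steps mentioned above, baseline correction and artifact detection, can be sketched on epoched single-channel data. This NumPy sketch is not the headset’s internal processing: it assumes epochs in microvolts, uses the mean of an initial window as the baseline, and flags epochs whose absolute amplitude exceeds a fixed threshold (100 µV here, an illustrative value) as artifacts, for example blinks or jaw clenches.

```python
import numpy as np


def preprocess_epochs(epochs: np.ndarray, baseline_samples: int,
                      reject_uv: float = 100.0):
    """Baseline-correct epochs and drop those exceeding an amplitude threshold.

    epochs: (n_epochs, n_samples) raw EEG in microvolts.
    Returns the kept, corrected epochs and a boolean mask of kept epochs.
    """
    # Subtract each epoch's mean over its initial baseline window.
    baseline = epochs[:, :baseline_samples].mean(axis=1, keepdims=True)
    corrected = epochs - baseline
    # Crude artifact detector: large deflections exceed the threshold.
    keep = np.abs(corrected).max(axis=1) <= reject_uv
    return corrected[keep], keep
```

A simple amplitude threshold is only a first filter; more selective detectors (for instance, ones based on the frequency content of muscle artifacts) would reject fewer valid epochs.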

ThinkGear technology in the Neurosky headband is a system that amplifies the raw brainwave signal, eliminates noise and artifacts, and delivers a digitized brainwave signal [20]. This technology, together with a dry electrode, enables the acquisition of EEG signals for further processing and analysis in brain–computer interface applications and other areas of research. ThinkGear and TGAM are Neurosky’s patented technologies.
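To read the values the TGAM chip delivers, the host has to decode ThinkGear packets. The sketch below follows our understanding of NeuroSky's publicly documented ThinkGear serial stream format (two 0xAA sync bytes, a payload length below 170, the payload, and a one's-complement checksum) and assumes that the Brainlink headband, which also uses the TGAM chip, emits the same format; the codes should be checked against the vendor documentation before being relied on. Only the rows relevant here are decoded: signal quality (0x02), attention (0x04), meditation (0x05), raw sample (0x80), and the eight band values (0x83).

```python
from typing import Dict, Union

SYNC = 0xAA
EXCODE = 0x55
# Order of the eight 3-byte band values in an ASIC_EEG_POWER (0x83) row.
BAND_NAMES = ("delta", "theta", "low_alpha", "high_alpha",
              "low_beta", "high_beta", "low_gamma", "mid_gamma")

Values = Dict[str, Union[int, Dict[str, int]]]


def checksum(payload: bytes) -> int:
    """One's complement of the lowest byte of the payload sum."""
    return (~sum(payload)) & 0xFF


def parse_payload(payload: bytes) -> Values:
    values: Values = {}
    i = 0
    while i < len(payload):
        while i < len(payload) and payload[i] == EXCODE:
            i += 1  # extended-code levels are not used in this sketch
        if i >= len(payload):
            break
        code = payload[i]
        i += 1
        if code < 0x80:  # single-byte value rows
            value = payload[i]
            i += 1
            if code == 0x02:
                values["poor_signal"] = value
            elif code == 0x04:
                values["attention"] = value
            elif code == 0x05:
                values["meditation"] = value
        else:  # multi-byte rows carry an explicit length byte
            length = payload[i]
            data = payload[i + 1:i + 1 + length]
            i += 1 + length
            if code == 0x80 and length == 2:
                values["raw"] = int.from_bytes(data, "big", signed=True)
            elif code == 0x83 and length == 24:
                values["bands"] = {
                    name: int.from_bytes(data[3 * k:3 * k + 3], "big")
                    for k, name in enumerate(BAND_NAMES)
                }
    return values


def parse_packet(packet: bytes) -> Values:
    """Validate sync bytes, length and checksum, then decode the payload."""
    if len(packet) < 4 or packet[0] != SYNC or packet[1] != SYNC:
        raise ValueError("missing sync bytes")
    plength = packet[2]
    if plength > 169 or len(packet) < 4 + plength:
        raise ValueError("invalid payload length")
    payload = packet[3:3 + plength]
    if checksum(payload) != packet[3 + plength]:
        raise ValueError("checksum mismatch")
    return parse_payload(payload)
```

In practice, `parse_packet` would be fed from the Bluetooth serial byte stream after resynchronizing on the two sync bytes; that framing loop is omitted.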

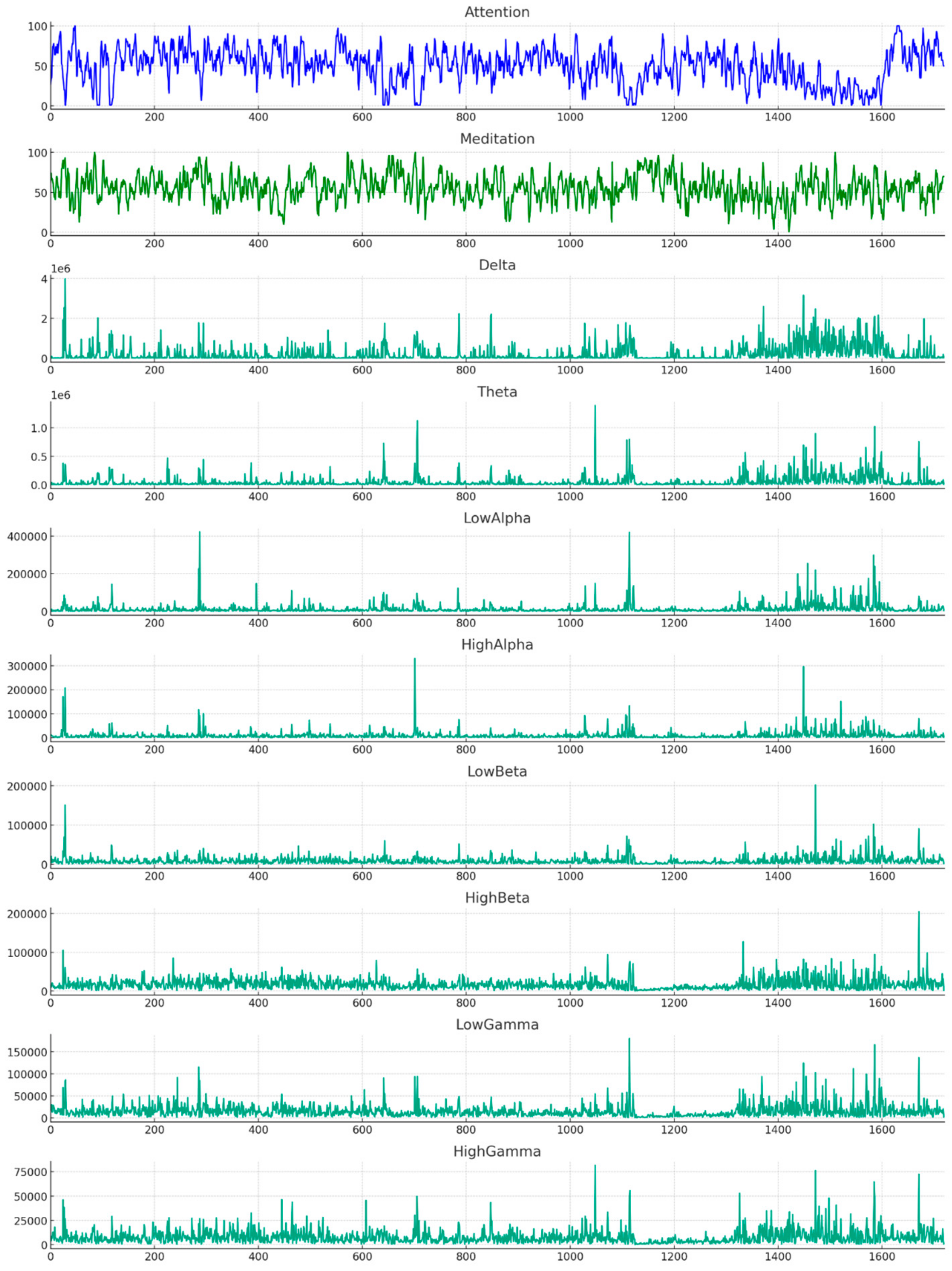

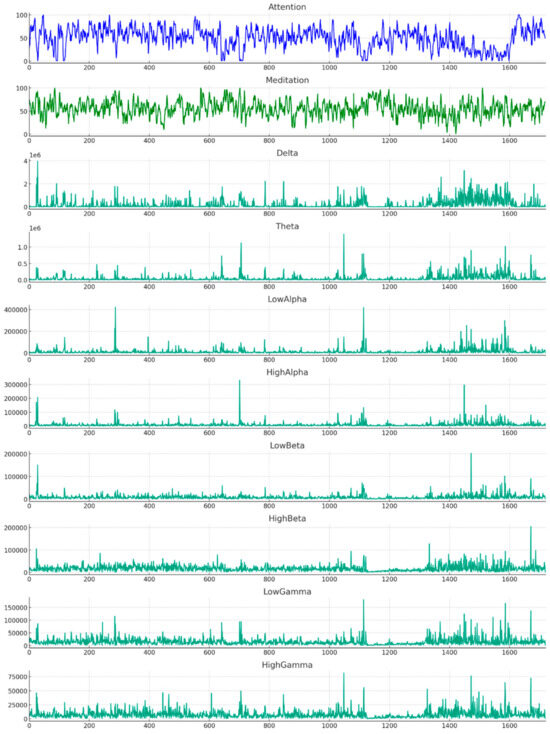

Figure 7 visualizes EEG signal fluctuations over time across the frequency bands Delta, Theta, Alpha (low and high), Beta (low and high), and Gamma (low and high), alongside the attention and meditation metrics. The figure illustrates the complexity and dynamic nature of brain activity and shows that our system captures these bands as they vary with mental state and cognitive load. These data are important for our analysis because detecting and interpreting such patterns of neural activity is the foundation for accurate system responses.

Figure 7.

Brain signals obtained with the Brainlink headset.

The eight base signals, from Delta to Gamma, can be observed, along with the attention and meditation values calculated by the Neurosky algorithm [24].

The application of these headbands in signal labelling for BCIs is particularly promising. Initially, our focus was on using motor imagery to label EEG signals. This technique, which involves mentally simulating movements without physical execution, has the potential to enable users to control devices by simply imagining movements. Additionally, we are exploring models based on evoked potentials, such as P300 and SSVEPs, for cases where motor imagery does not provide the desired level of control [25].

However, low-cost EEG headbands present challenges related to the quality and accuracy of the captured signals. The restriction to a single capture point and the use of dry electrodes reduce spatial resolution and data fidelity. Despite this, advances in machine learning algorithms and signal processing are improving the interpretation of these data. For example, the article “Methodologies and Wearable Devices to Monitor Biophysical Parameters Related to Sleep Dysfunctions: An Overview” (2022) by Roberto De Fazio et al. provides an overview of the use of EEG devices in monitoring applications, which can be extrapolated to BCI applications [26].

Looking forward, the combination of these low-cost technologies with sophisticated algorithms promises broader integration of BCIs in various applications. Future research should focus on improving the accuracy of these devices and exploring new methodologies for signal classification. The democratization of access to EEG technology opens a world of possibilities in the field of neuroscience and human–computer interaction, promising significant advances in understanding and manipulating brain activity.

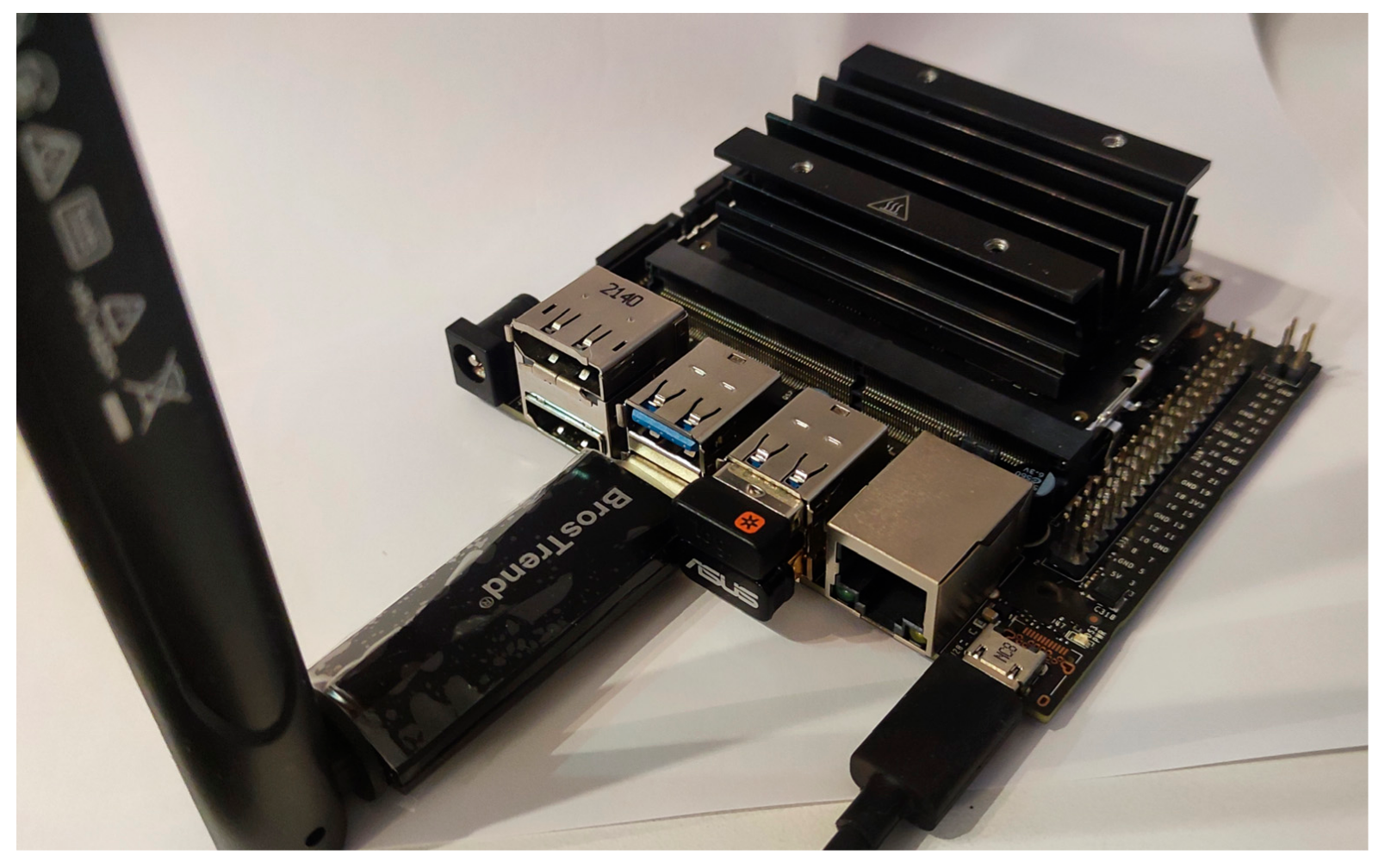

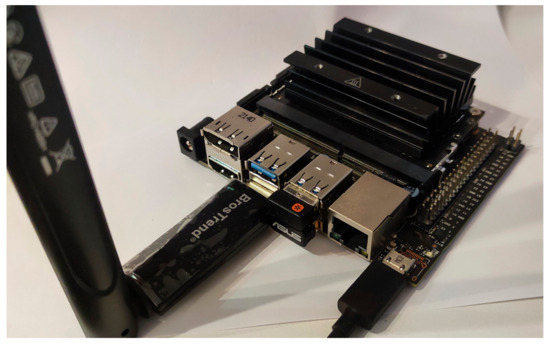

4.2.2. Hardware for Classification and Control

The NVIDIA Jetson Nano (Figure 8), which performs the classification and control tasks in our BCI project, is a compact embedded AI computer. Its quad-core ARM Cortex-A57 CPU, 128-core Maxwell GPU, and 4 GB of LPDDR4 RAM allow it to run neural networks in parallel and handle multiple high-resolution data streams. Its support for interfaces such as GPIO and I2C, together with its real-time computational capability, makes it well suited to real-time EEG signal processing with LSTM networks and to robotic control via ROS, providing the speed and parallel processing that robotics and BCI applications require.

Figure 8.

Jetson Nano device.

Table 4 summarizes the key specifications and features of the NVIDIA Jetson Nano.

Table 4.

Jetson Nano specifications.

These specifications highlight the Jetson Nano’s capacity for complex, high-speed AI computations and its suitability for advanced BCIs and robotics systems.

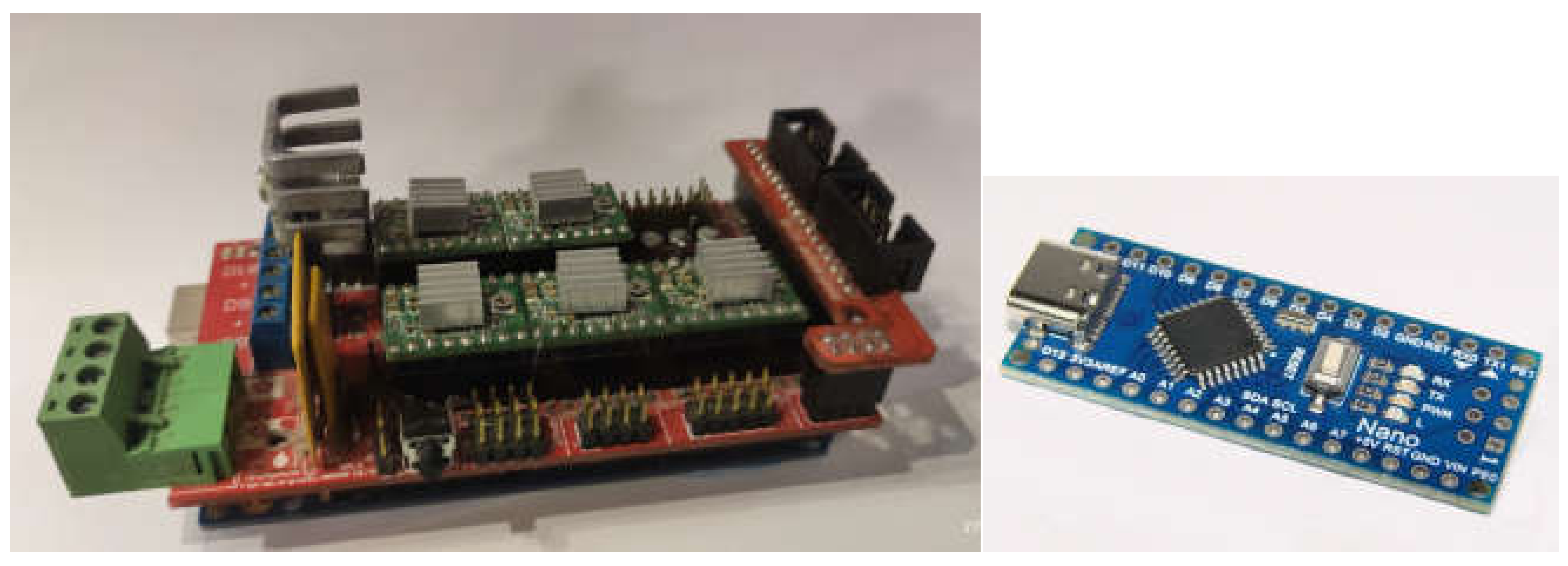

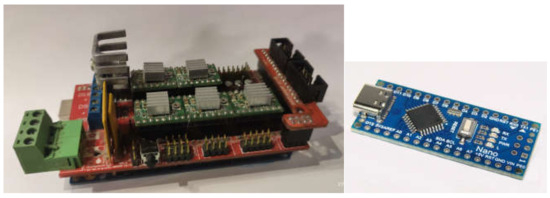

The Arduino Mega, powered by the ATmega 2560 (Figure 9), is a versatile microcontroller board with 54 digital I/O pins, 16 analogue inputs, and 4 UARTs, suitable for complex projects involving multiple I/O operations, such as robotic control. It is complemented by the Ramps 1.4 shield, which can drive five stepper motors, as is typical in precision applications such as 3D printing. The shield also accepts plug-in power driver modules, supporting sustained operation and variable power requirements. Together, the Arduino Mega and the Ramps 1.4 shield provide the control and power needed to drive the multiple motors and axes of a six-axis robotic system with precision. This cost-effective and customizable combination delivers the detailed motion control that the BCI project requires, while allowing custom tuning and scalability of the system.

Figure 9.

Arduino Mega + Shield Ramps 1.4 (left); Arduino Nano 33 (right).

The Arduino Nano, a compact microcontroller board based on the ATmega 328P, plays a critical role in our redundant safety system. Its small footprint and robust functionality make it an ideal choice for embedding within complex systems. In our setup, the Nano serves as a backup controller, working in conjunction with the Arduino Mega to ensure system reliability. Through seamless communication with the Mega, it provides an additional layer of monitoring and control, enhancing the safety and stability of the motorized wheelchair’s operation.
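The text does not specify how the Nano monitors the Mega. One common pattern for such a redundant safety layer is a heartbeat watchdog: the main controller sends periodic heartbeats, and the backup controller requests a stop as soon as they stop arriving. The sketch below is written in Python for readability rather than as Arduino firmware; the class name, the latching behaviour, and the 0.5 s timeout used in the test are assumptions.

```python
import time
from typing import Callable


class HeartbeatWatchdog:
    """Trips (requests a motor stop) if no heartbeat arrives within `timeout_s`."""

    def __init__(self, timeout_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout_s = timeout_s
        self.clock = clock  # injectable clock makes the logic testable
        self.last_beat = clock()
        self.tripped = False

    def heartbeat(self) -> None:
        """Record a valid heartbeat from the main controller (ignored once tripped)."""
        if not self.tripped:
            self.last_beat = self.clock()

    def check(self) -> bool:
        """Return True if motion is allowed; latch into the tripped state otherwise."""
        if self.clock() - self.last_beat > self.timeout_s:
            self.tripped = True
        return not self.tripped

    def reset(self) -> None:
        """Explicit reset after the fault has been cleared."""
        self.tripped = False
        self.last_beat = self.clock()
```

Latching the fault, so that a late heartbeat alone does not resume motion, is a deliberate choice in this sketch: it forces an explicit reset before the wheelchair or arm can move again.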

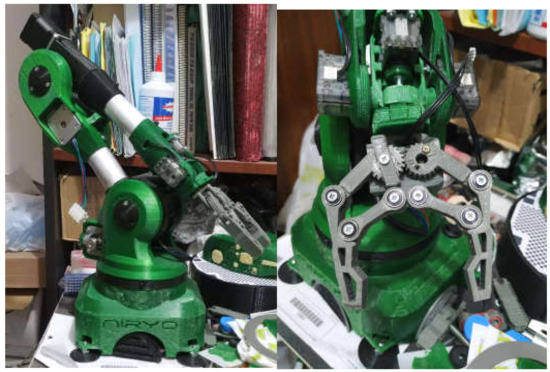

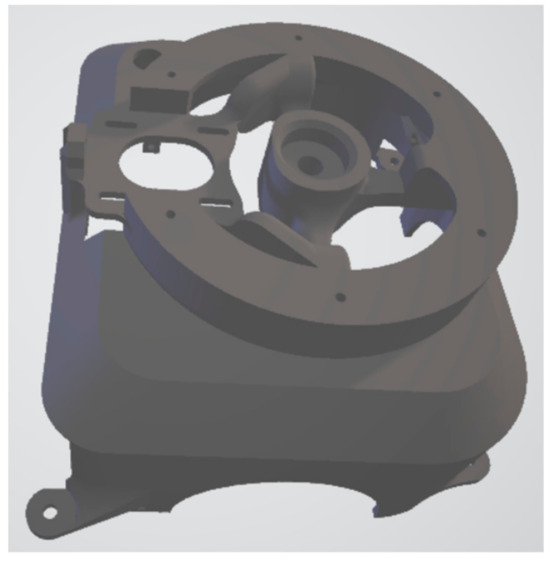

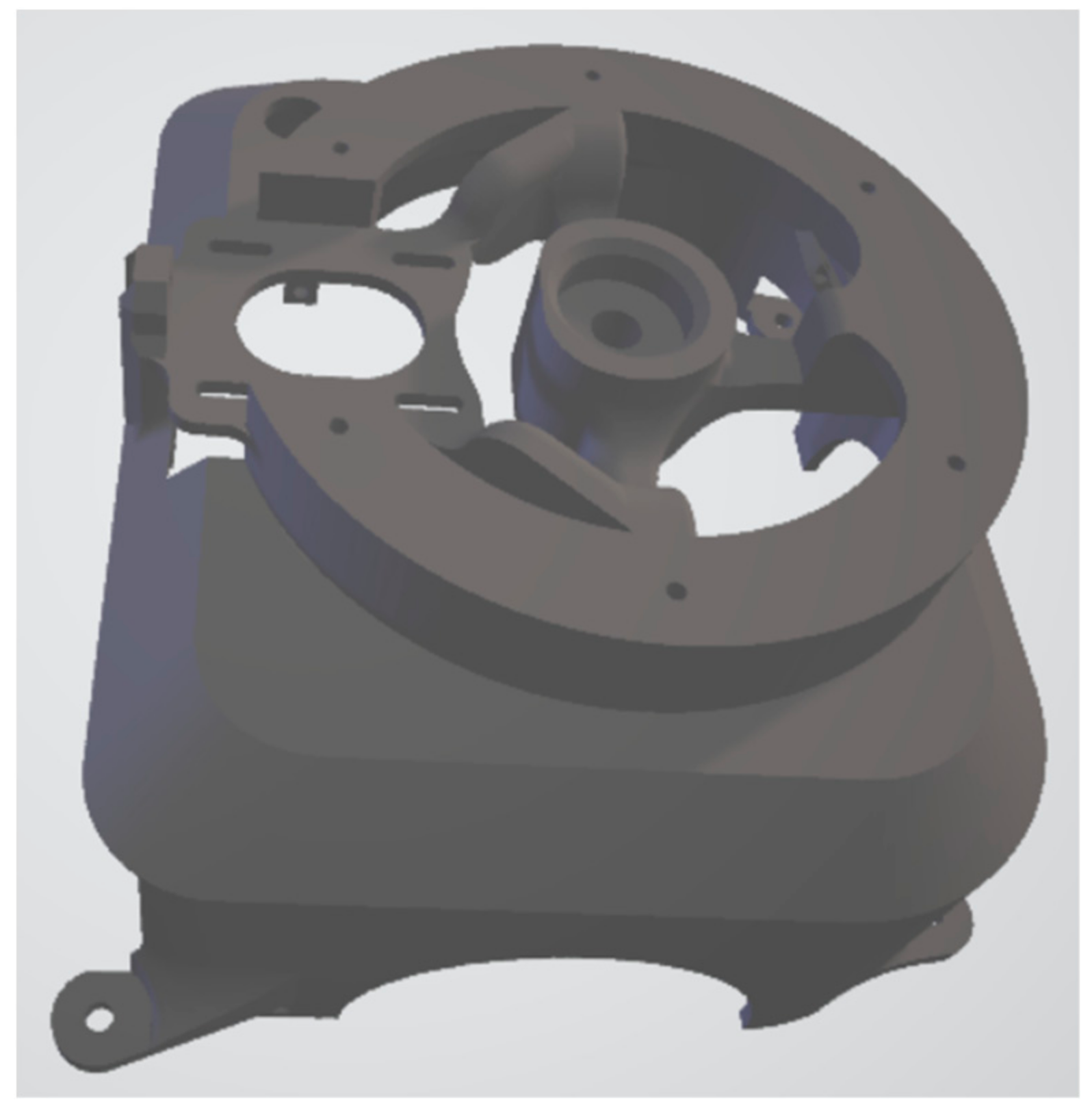

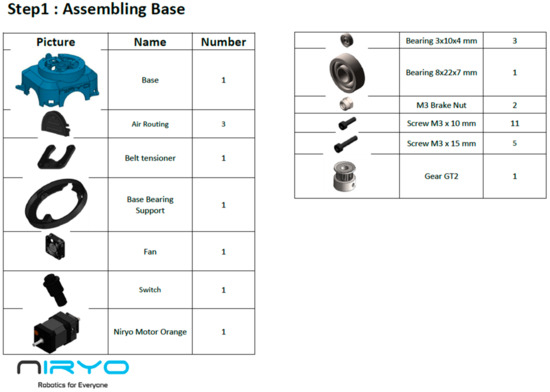

4.2.3. Cobot and Motorized Wheelchair

The integration of advanced technologies in motorized wheelchairs and cobots, such as the open-source Niryo One (Figure 10), represents a significant advance in assistance for people with reduced mobility [27,28]. This integration not only improves the autonomy of users but also opens new possibilities in terms of control and adaptability.

Figure 10.

Open-source NIRYO ONE robot.

Modern motorized wheelchairs (Figure 11) offer various control options to suit the individual needs of users. Control methods include joysticks, manual controls, and remote control, each with its own advantages in terms of accessibility and ease of use. For example, a study on “Modelling and control strategies for a motorized wheelchair with hybrid locomotion systems” highlights the importance of versatile control systems in motorized wheelchairs [29].

Figure 11.

Basic model of motorized wheelchair.

The proportional control of power wheelchairs (Figure 12) improves manoeuvrability and user comfort by adjusting the movement to the force applied, which is vital for a safe and pleasant driving experience. Studies, such as one on motorized wheelchair implementation for the handicapped, affirm the efficacy of proportional controls. The wheelchair can be accessed either in parallel with the joystick control or directly at the motor driver, allowing movement to be controlled via digital or analogue setpoint signals.

Collaborative robots (cobots) such as the open-source Niryo model adapt to various environments and tasks, and their precise programming capabilities make them suitable for daily assistance and for improving users’ quality of life [27]. Integrating a cobot such as the Niryo One, a six-axis robot designed for education, research, and industry, with a motorized wheelchair opens new avenues for assistance and autonomy: the cobot can perform tasks while the user navigates, enhancing efficiency and independence.
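When the wheelchair is driven in parallel with its joystick, a proportional command can be emulated as an analogue setpoint. The sketch below assumes a joystick axis that rests at a neutral voltage and deflects linearly around it; the 2.5 V neutral point, ±1 V span, deadband, and 12-bit, 5 V DAC are hypothetical values, and the real figures must come from the wheelchair controller's interface specification.

```python
def proportional_setpoint(command: float, neutral_v: float = 2.5,
                          span_v: float = 1.0, deadband: float = 0.05) -> float:
    """Map a normalized command in [-1, 1] to an analogue setpoint voltage.

    Commands inside the deadband return the neutral voltage (no motion);
    other commands are clamped to [-1, 1] and scaled linearly around neutral.
    """
    c = max(-1.0, min(1.0, command))
    if abs(c) < deadband:
        return neutral_v
    return neutral_v + c * span_v


def to_dac_code(voltage: float, vref: float = 5.0, bits: int = 12) -> int:
    """Convert a voltage into an integer code for a `bits`-bit DAC referenced to vref."""
    full_scale = (1 << bits) - 1
    code = round(voltage / vref * full_scale)
    return max(0, min(full_scale, code))
```

The deadband keeps small classifier or sensor fluctuations from creeping the chair forward; clamping guarantees the setpoint never leaves the assumed joystick range.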

Figure 12.

Manual control of motorized wheelchair.

The Niryo One’s simple, efficient mechanical design, its ease of programming through Niryo Studio, and its 3D Unity visualization cater to users of all levels of technical proficiency, supporting immersive learning and an understanding of its operation. Its precision and repeatability are pivotal because the commands decoded from EEG signals by the LSTM classifier must be executed reliably, and tasks driven by brain signals demand both sensitivity and specificity. ROS integration extends its application to advanced robotics and BCI projects that demand seamless hardware–software integration. The combination of its technical capabilities, user-friendly software, and precision control makes the Niryo One an ideal platform for integration into high-level BCI projects, underscoring the potential of combining advanced control systems and collaborative robotics to enhance autonomy and assistance in BCI applications [30].

Despite advances, there are challenges in integrating these technologies, especially in terms of user interface and accessibility. However, studies such as “Design and Implementation of Hybrid BCI based Wheelchair” show how continuous innovation in wheelchair control can overcome these challenges [31].

4.3. Software Architecture

In this section, we address the integration of EEG signal processing with the robotic control system through ROS. We detail how brain signals are processed using machine learning algorithms and neural networks to interpret user intentions precisely. This process is crucial to ensure safe and effective commands for the dynamic control of robotic devices and motorized wheelchairs. We also highlight technical innovations and challenges in brain–computer interfaces and robotics, building on the ROS-based software of the Niryo robot and other similar robots [27].

As a first approach, Figure 13 presents the diagram of the proposed software architecture, as well as the flow of signals and data necessary for the implementation of the proposed structure.

Figure 13.

Software architecture.

For this integration, we chose the NVIDIA Jetson Nano as our execution and data-processing module, since it allows all the requirements of this development to be met on a single device. Table 5 lists the signal-processing steps, including each component of the process, its function, specific details, the type of output generated, and the necessary software or libraries.

Table 5.

EEG to ROS processing and control structure.

One of the main advantages of the Jetson Nano is its ability to perform deep learning inference at the edge, that is, to process data directly on the device without a connection to a central server. This is crucial in BCI applications, where low latency and real-time processing are essential for smooth and effective interaction. In our research, which targets the control of wheelchairs and robotic prosthetics, the ability to process EEG signals and make decisions in real time is fundamental to the safety and efficacy of the system.

Another factor that influenced our choice of the Jetson Nano is its energy efficiency, making it suitable for portable and mobile applications. This is especially relevant in the context of low-cost EEG headbands like Neurosky or Brainlink, where portability and energy efficiency are key considerations. Combining these headbands with a device like the Jetson Nano can facilitate the implementation of BCI systems in non-laboratory environments, increasing their accessibility and applicability in real-life situations.

4.3.1. Real-Time Signal Preprocessing (Jetson Nano)

Preprocessing of the EEG signals, a crucial step in implementing efficient BCIs, begins once the headband streams its data to the Jetson Nano over Bluetooth. This process converts the EEG signals into a format suitable for real-time analysis and classification, which is essential for the precision and effectiveness of the classifier.
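Because the LSTM classifies sequences rather than single readings, the incoming values have to be grouped into windows. A minimal sketch, assuming one eight-value feature vector (Delta to Gamma) per headband update and a hypothetical window length, keeps the most recent readings in a fixed-length buffer and emits a sequence once the buffer is full:

```python
from collections import deque
from typing import Deque, List, Optional, Sequence


class FeatureWindow:
    """Keep the most recent `length` feature vectors as one LSTM input sequence."""

    def __init__(self, length: int, n_features: int = 8) -> None:
        self.length = length
        self.n_features = n_features
        # deque with maxlen drops the oldest vector automatically (sliding window)
        self.buffer: Deque[List[float]] = deque(maxlen=length)

    def push(self, features: Sequence[float]) -> Optional[List[List[float]]]:
        """Add one reading; return the full window, or None until it is filled."""
        if len(features) != self.n_features:
            raise ValueError(f"expected {self.n_features} features, got {len(features)}")
        self.buffer.append([float(x) for x in features])
        if len(self.buffer) < self.length:
            return None
        return list(self.buffer)
```

Each call to `push` after the warm-up yields an overlapping window, so the classifier can produce a new decision for every headband update.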

In preprocessing, normalization standardizes the EEG data, which improves the robustness of the model, while the Fast Fourier Transform (FFT) and wavelet transforms decompose the signals into frequency components, which is crucial for identifying different cognitive and emotional states. The FFT gives the spectral content of each signal window, and the wavelet transform additionally provides precise temporal localization of those frequencies.
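The normalization and FFT steps can be illustrated on one window of the raw signal. This sketch assumes access to the raw stream (the headband also reports precomputed band values) at an assumed 512 Hz sampling rate, applies z-score normalization and a Hann window, and sums the power spectrum over the band limits given earlier for Delta to Gamma, without the high/low sub-splits. The wavelet step is not shown.

```python
import numpy as np

# Band limits in Hz as listed for the headband (sub-bands merged).
BANDS = {"delta": (1, 3), "theta": (4, 7), "alpha": (8, 13),
         "beta": (14, 30), "gamma": (31, 100)}


def zscore(x: np.ndarray) -> np.ndarray:
    """Zero-mean, unit-variance normalization (mean removal only if flat)."""
    std = x.std()
    return (x - x.mean()) / std if std > 0 else x - x.mean()


def band_powers(signal: np.ndarray, fs: float) -> dict:
    """Summed FFT power per band for one window of raw EEG."""
    x = zscore(np.asarray(signal, dtype=float)) * np.hanning(len(signal))
    spectrum = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    return {name: float(spectrum[(freqs >= lo) & (freqs <= hi)].sum())
            for name, (lo, hi) in BANDS.items()}
```

With a one-second window at 512 Hz the frequency resolution is 1 Hz, which matches the integer band edges; the Gamma limit of 100 Hz requires a sampling rate above 200 Hz.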

Subasi (2021) highlights the importance of these techniques in BCIs [32]. The preprocessed EEG signals are then classified by neural networks; CNNs and RNNs have proven effective for this task, as shown by Roy et al. (2022) [33].

The integration of these preprocessing techniques with advanced neural networks promises to improve the accuracy and efficacy of BCI systems, opening new possibilities in human–computer interaction and assistance to individuals with motor disabilities.

4.3.2. Classification

The classification of EEG signals, fundamental for brain–computer interfaces, has made significant progress with the incorporation of deep learning and machine learning techniques. Advanced neural networks such as CNNs and LSTMs have enhanced efficiency and precision in EEG classification [34,35]. One of the main innovations of our proposed EEG-based control architecture is the integration of a long short-term memory (LSTM) network to decode brain activity signals in real time. Because LSTMs are designed to model temporal sequences and learn long-range contextual patterns, they are well suited to the dynamic and non-linear relationships in EEG data streams.

Once trained, the LSTM model is integrated into our architecture to classify EEG segments in real time and map its predictions to wheelchair commands. As users generate distinctive brain patterns via motor imagery, the LSTM classifier assigns a probability score to each control label. When a score exceeds a preset confidence threshold, the corresponding intent triggers the matching wheelchair movement (e.g., turning left or right, moving forward or backward). This allows intuitive, adaptive wheelchair navigation based solely on decoded brain activity. In the literature, deep transfer networks have shown advantages over traditional methods [36], feature selection based on minimum entropy has improved performance [37], and optimizing deep learning models with evolutionary algorithms has increased energy efficiency [34]. Finally, simplified models, such as a version of GoogLeNet, have been effective in EEG signal classification [38], highlighting the advancement and practical application of these technologies in BCIs.
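The thresholding step can be sketched as follows. A softmax turns the classifier's output scores into probabilities, and a command is issued only if the most probable label clears the threshold; otherwise nothing is sent. The 0.8 threshold and the label set used in the test are hypothetical.

```python
import math
from typing import List, Optional, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax (shifts by the maximum before exponentiating)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decide(probabilities: Sequence[float], labels: Sequence[str],
           threshold: float = 0.8) -> Optional[str]:
    """Return the most probable label if it clears the threshold, else None."""
    best = max(range(len(probabilities)), key=lambda k: probabilities[k])
    return labels[best] if probabilities[best] >= threshold else None
```

Returning `None` for uncertain windows means the system defaults to doing nothing, which is the safer failure mode for a wheelchair.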

Next, we propose a list of possible labels that the LSTM network could publish and that ROS could interpret to make the system operational.

WCL, WCR, WCB, and WCF, each followed by a value between 0 and 50. The strings are acronyms for Wheelchair Left (WCL), Wheelchair Right (WCR), Wheelchair Backward (WCB), and Wheelchair Forward (WCF).
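A parser for these labels might look like the sketch below. The text does not fix the exact string format or the meaning of the 0 to 50 value, so this sketch assumes the value follows the acronym directly (optionally after a space) and treats it as a speed level, mapped to normalized left/right wheel speeds of a differential-drive chair; turning in place for WCL and WCR is also an assumption.

```python
import re
from typing import Tuple

WHEELCHAIR_LABEL = re.compile(r"^(WCL|WCR|WCB|WCF)\s*(\d{1,2})$")


def parse_wheelchair_label(label: str) -> Tuple[str, int]:
    """Split a label such as 'WCF25' into ('WCF', 25), validating the 0-50 range."""
    m = WHEELCHAIR_LABEL.match(label.strip())
    if m is None:
        raise ValueError(f"unrecognised wheelchair label: {label!r}")
    value = int(m.group(2))
    if value > 50:
        raise ValueError("value must be between 0 and 50")
    return m.group(1), value


def wheel_speeds(command: str, value: int) -> Tuple[float, float]:
    """Map a command and level to normalized (left, right) wheel speeds in [-1, 1]."""
    s = value / 50.0
    return {
        "WCF": (s, s),
        "WCB": (-s, -s),
        "WCL": (-s, s),  # spin in place to the left
        "WCR": (s, -s),  # spin in place to the right
    }[command]
```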

The same mechanism applies to the robot: the letter R stands for Robot, J identifies the axis (joint) that moves, and a value from 0 to 360 degrees determines the movement.
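The robot labels can be handled in the same way. This sketch assumes a format such as `RJ2 90` (robot, joint 2, 90 degrees) for the six axes of the arm, and converts degrees to steps for a joint driven by a stepper motor through the Ramps drivers; the 200 steps per revolution, 16× microstepping, and gear ratio are hypothetical defaults, and joints not driven by steppers would need a different conversion.

```python
import re
from typing import Tuple

# 'RJ' + joint number 1-6 + optional space + angle in degrees.
ROBOT_LABEL = re.compile(r"^RJ([1-6])\s*(\d{1,3}(?:\.\d+)?)$")


def parse_robot_label(label: str) -> Tuple[int, float]:
    """Split a label such as 'RJ2 90' into (2, 90.0), validating 0-360 degrees."""
    m = ROBOT_LABEL.match(label.strip())
    if m is None:
        raise ValueError(f"unrecognised robot label: {label!r}")
    degrees = float(m.group(2))
    if not 0.0 <= degrees <= 360.0:
        raise ValueError("angle must be between 0 and 360 degrees")
    return int(m.group(1)), degrees


def degrees_to_steps(degrees: float, steps_per_rev: int = 200,
                     microsteps: int = 16, gear_ratio: float = 1.0) -> int:
    """Number of (micro)steps a stepper must make to turn the joint by `degrees`."""
    return round(degrees / 360.0 * steps_per_rev * microsteps * gear_ratio)
```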

4.3.3. LSTM Description

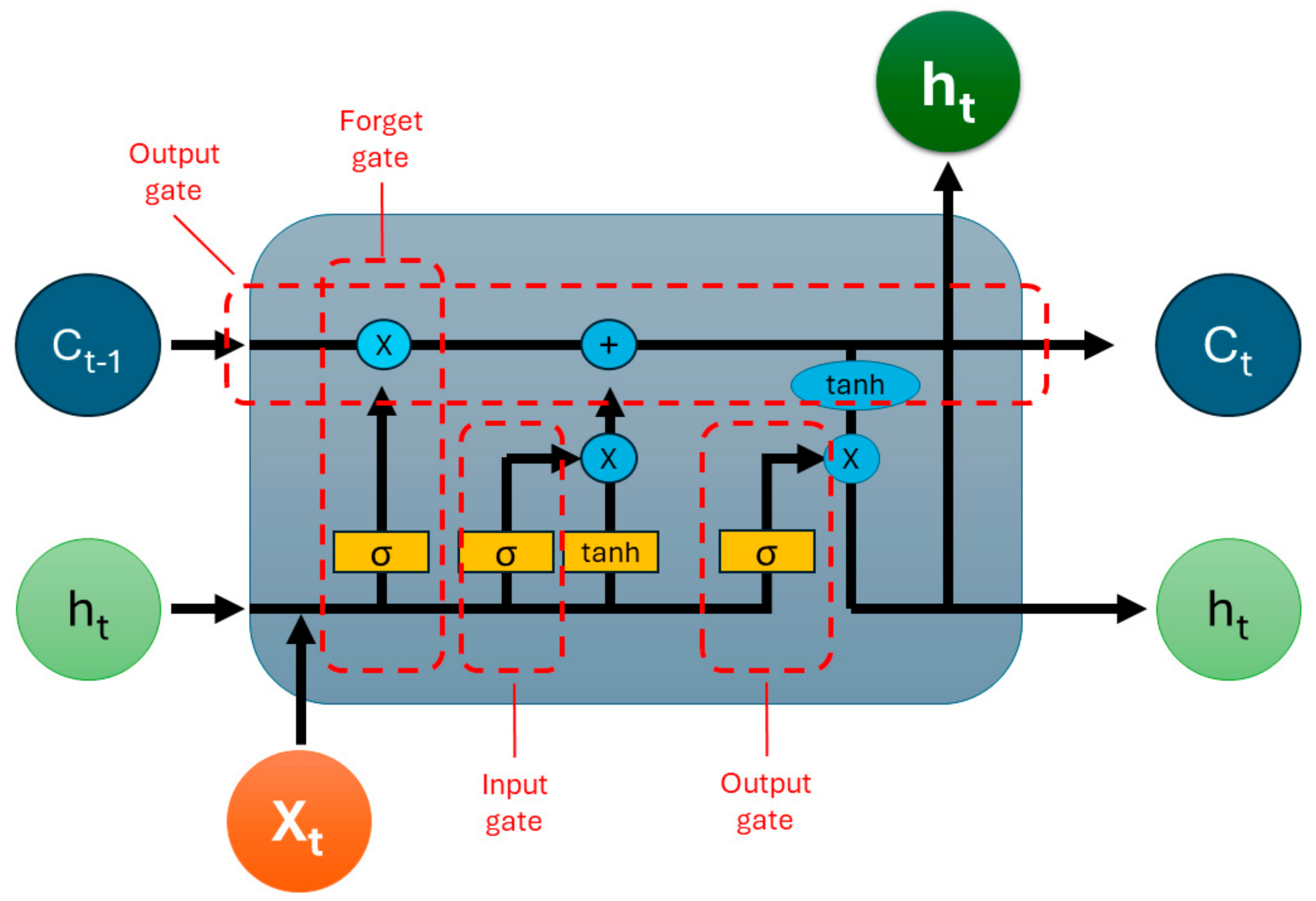

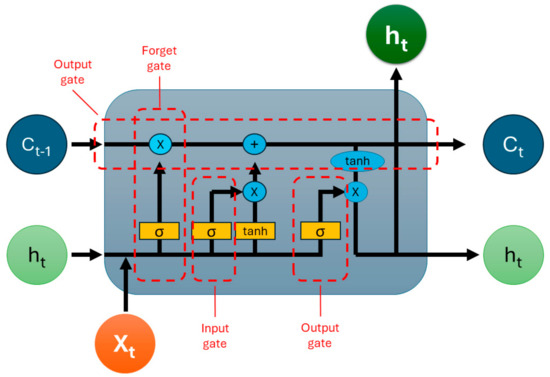

LSTM networks, a subclass of recurrent neural networks (Figure 14), are pivotal in our project because of their ability to process and retain information over extended periods, which makes them well suited to the sequential and temporal nature of EEG signals. Unlike standard recurrent networks, whose gradients vanish over long sequences, LSTMs are designed to avoid the long-term dependency problem: their cell architecture, with three gates (input, output, and forget), lets them remember inputs for long durations [39].

Figure 14.

LSTM diagram.

Figure 14 shows the structure of a cell unit in the LSTM network. The input gate controls the extent to which a new value flows into the cell, the forget gate regulates the retention of previous information, and the output gate determines the output value based on the input and the memory of the cell. This gating mechanism allows LSTMs to decide precisely which information to retain or discard, making them highly effective for tasks that require understanding context over time, such as EEG signal analysis in our BCI project.
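For reference, the gating described above corresponds to the standard LSTM cell equations, where $x_t$ is the input vector at time step $t$ (for example, the eight band values), $h_t$ the hidden state, $c_t$ the cell state, $\sigma$ the logistic sigmoid, $\odot$ element-wise multiplication, and $W$, $U$, $b$ learned weights and biases. This is the common textbook formulation; the text does not state which LSTM variant the project uses.

$$
\begin{aligned}
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right)\\
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right)\\
o_t &= \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right)\\
\tilde{c}_t &= \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right)\\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t\\
h_t &= o_t \odot \tanh\left(c_t\right)
\end{aligned}
$$

The forget gate $f_t$ scales the previous memory $c_{t-1}$, the input gate $i_t$ scales the candidate $\tilde{c}_t$, and the output gate $o_t$ decides how much of the updated memory is exposed as $h_t$.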

In our work, LSTMs are used to analyse EEG signals for pattern recognition and classification, which is crucial for translating neural activity into commands for assistive technologies. Because LSTMs learn from the temporal sequence of EEG data and recognize patterns associated with specific neural activities or intentions, they underpin the system’s ability to provide accurate and responsive control to end users. LSTM networks are therefore an integral component of our project, bridging the gap between raw EEG signals and actionable outputs in assistive devices and enabling seamless, intuitive interaction between humans and machines. Their integration within our BCI framework also lays a foundation for future advances in the field [40].
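To make the classification path concrete, the following NumPy sketch runs the standard LSTM cell over a window of feature vectors and applies a softmax output layer. It is a forward pass with random, untrained weights, meant only to show the data flow; the layer sizes (8 inputs, 16 hidden units, 5 classes) are hypothetical, and in practice the network would be trained with a deep learning framework.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


class TinyLSTMClassifier:
    """Forward pass only: one LSTM layer followed by a softmax output layer."""

    def __init__(self, n_in: int, n_hidden: int, n_classes: int, seed: int = 0) -> None:
        rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(n_hidden)
        # Stacked weights for the forget, input, output and candidate blocks.
        self.W = rng.uniform(-scale, scale, (4 * n_hidden, n_in))
        self.U = rng.uniform(-scale, scale, (4 * n_hidden, n_hidden))
        self.b = np.zeros(4 * n_hidden)
        self.V = rng.uniform(-scale, scale, (n_classes, n_hidden))
        self.out_bias = np.zeros(n_classes)
        self.n_hidden = n_hidden

    def forward(self, sequence: np.ndarray) -> np.ndarray:
        """sequence: (time_steps, n_in) -> class probabilities of shape (n_classes,)."""
        H = self.n_hidden
        h = np.zeros(H)
        c = np.zeros(H)
        for x_t in sequence:
            z = self.W @ x_t + self.U @ h + self.b
            f = sigmoid(z[0:H])              # forget gate
            i = sigmoid(z[H:2 * H])          # input gate
            o = sigmoid(z[2 * H:3 * H])      # output gate
            c_tilde = np.tanh(z[3 * H:4 * H])  # candidate memory
            c = f * c + i * c_tilde
            h = o * np.tanh(c)
        logits = self.V @ h + self.out_bias
        e = np.exp(logits - logits.max())
        return e / e.sum()
```

Only the final hidden state feeds the output layer here, which is the usual choice when one label is assigned to a whole window.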

4.3.4. ROS

ROS (Robot Operating System) is a versatile framework that provides operating-system-like services for robotics: hardware abstraction, device control, implementation of common functionality, inter-process communication, and package management. Its modularity is essential, allowing robots to be built from reusable software components. ROS 2 extends this foundation with a DDS-based communication layer and improved support for real-time and multi-robot systems.

Integration with hardware such as Arduino is simplified by rosserial, which connects the low-level hardware control of the Arduino to the high-level functions of ROS. ROS programs are organized as nodes that communicate with one another to perform computations, using publish/subscribe topics, services, and actions to build robust robotic systems. The ROS environment also favours the integration of AI algorithms for advanced automation and intelligent robotics. Using a Jetson Nano for AI together with an Arduino with TMC controllers exemplifies this merging of AI with precise motor control in autonomous robots. This integration of ROS, Arduino, and AI is fundamental to the advancement of intelligent robotics [41].

4.3.5. ROS Nodes on Jetson Nano

Next, in Table 6, we outline the necessary implementation in ROS, focusing on nodes, topics, and basic functions for controlling the robotic arm and wheelchair.

Table 6.

ROS nodes and topics.

These components and configurations form a comprehensive guide to implementing the system on the Jetson Nano, utilizing ROS for EEG signal processing, control of the robotic arm, and wheelchair navigation [42].

Controlling an electric wheelchair involves using a propulsion and steering system, which can be controlled via electrical signals. In the context of ROS, a node could be developed that subscribes to a specific topic where LSTM network labels are published. This node would interpret the received labels and generate the appropriate control signals for the GPIO terminals that control the wheelchair. The detailed implementation of this node will depend on the specific configuration of the wheelchair and how it is electrically controlled. For example, if the wheelchair uses DC (Direct Current) motors for propulsion, the node will need to generate the appropriate PWM (Pulse Width Modulation) signals to control the speed and direction of the motors. If the wheelchair uses a joystick for control, the node will need to map the LSTM network labels to joystick movements and generate the corresponding electrical signals.
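A node of this kind could use a callback like the one sketched below. It assumes the wheelchair is driven by two DC motors through H-bridges with 8-bit PWM, maps the WCL/WCR/WCB/WCF labels and their 0 to 50 value to duty cycles and directions, and stops both motors on any unrecognized label. `write_motor` is a hypothetical function standing in for the GPIO/PWM output. In ROS 1, the handler could be registered with `rospy.Subscriber(topic, String, lambda msg: handler(msg.data))`, where `String` is `std_msgs.msg.String` and the topic is the one defined in Table 6.

```python
from typing import Callable, Dict, Tuple

# Direction signs (left, right) for each label; hypothetical differential-drive mapping.
DIRECTIONS: Dict[str, Tuple[int, int]] = {
    "WCF": (1, 1), "WCB": (-1, -1), "WCL": (-1, 1), "WCR": (1, -1)}

MotorState = Tuple[int, bool]  # (PWM duty 0..max_duty, forward direction flag)


def pwm_for_label(label: str, max_value: int = 50,
                  max_duty: int = 255) -> Tuple[MotorState, MotorState]:
    """Convert a label such as 'WCF25' into (left, right) PWM duty and direction."""
    command, value = label[:3], int(label[3:].strip())
    if command not in DIRECTIONS or not 0 <= value <= max_value:
        raise ValueError(f"invalid label: {label!r}")
    duty = round(value / max_value * max_duty)
    left_sign, right_sign = DIRECTIONS[command]
    return (duty, left_sign > 0), (duty, right_sign > 0)


class WheelchairLabelHandler:
    """Callback object; `write_motor(name, duty, forward)` drives one motor."""

    def __init__(self, write_motor: Callable[[str, int, bool], None]) -> None:
        self.write_motor = write_motor

    def __call__(self, label: str) -> None:
        try:
            left, right = pwm_for_label(label)
        except ValueError:
            left, right = (0, True), (0, True)  # unknown label: stop both motors
        self.write_motor("left", *left)
        self.write_motor("right", *right)
```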

4.3.6. Coding Orders

The development of an advanced robotic application that integrates EEG data capture, interpretation, and prediction using AI on a Jetson Nano platform, using ROS to control a motorized wheelchair in conjunction with a six-degrees-of-freedom cobot and an Arduino Mega as a microcontroller, is a complex process involving multiple stages and components.

The final architecture, which meets all the requirements defined in this research, is shown in Table 7. This table relates the selected physical components with the input and output signals between the devices and the necessary software for their implementation.

Table 7.

Hardware/signals/software relationship.

Having identified the functional and technical requirements, as well as the hardware and software needed to meet them, and having defined the proposed architecture, we now elaborate on each part, following the timeline of the process and, therefore, the data flow.

In summary, this proposed architecture integrates cutting-edge technologies in BCIs, machine learning, robotics, and hardware control to create a solution that can significantly improve the quality of life for patients with neurodegenerative diseases. The key to the success of this project lies in the effective integration of these technologies and in the thorough validation of their functionality and safety, in addition to a substantial cost reduction, focusing on the use of low-cost and/or open-source devices, democratizing this technology.

5. Results

In this section, we present a theoretical examination of the proposed method in comparison with established baselines. Note that this study is limited to theoretical analysis; empirical evaluation of the proposed system is left for subsequent investigations. This delineation clarifies the nature of our current findings, which are intended to illustrate potential improvements and theoretical advances over existing frameworks. Our aim is to lay a robust groundwork for future empirical studies, thereby contributing both to the academic discourse and to practical applications in the field.

5.1. Comparison with Other Works

We compared the proposed architecture with nine similar developments, evaluating both their objectives and the way the research was carried out. The comparison yields clear results, especially regarding the achievement of the technical requirements.

These works were selected from a search of Scopus and Google Scholar filtered by terms related to this research. Combining the keywords “EEG”, “ML”, “ROS”, and “wheelchair” yielded the following studies, which are very close to ours in concept and format and were used for the comparison.

- Ref 1.—SSVEP-Based BCI Wheelchair Control System [43].

- Ref 2.—Brain-Computer Interface Controlled Robotic Gait Orthosis [44].

- Ref 3.—Wheelchair Automation by a Hybrid BCI System Using SSVEP and Eye Blinks [45].

- Ref 4.—EEG-Based BCIs: A Survey [46].

- Ref 5.—EEG Wheelchair for People of Determination [47].

- Ref 6.—BCI-Controlled Hands-Free Wheelchair Navigation with Obstacle Avoidance [48].

- Ref 7.—A Literature Review on the Smart Wheelchair Systems [49].

- Ref 8.—A Real-Time Control Approach for Unmanned Aerial Vehicles Using Brain-Computer Interface [50].

- Ref 9.—Real-Time Brain Machine Interaction via Social Robot Gesture Control [51].

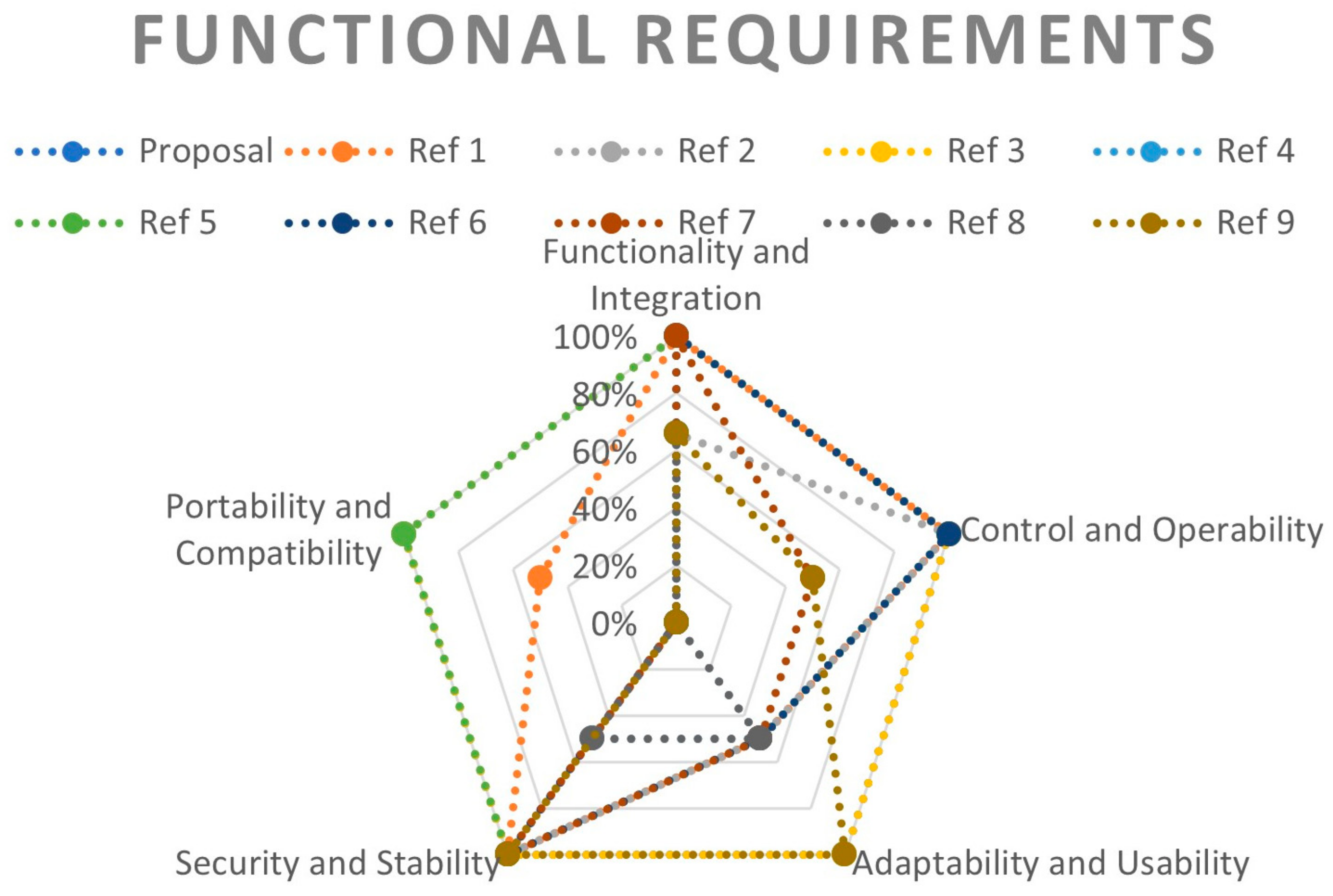

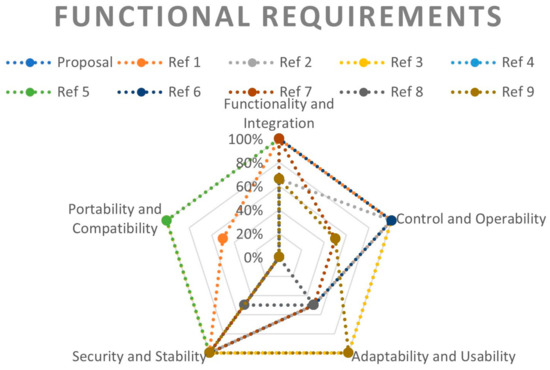

The spider chart in Figure 15 compares the functional requirements of the proposed solution with those of the nine references. Notably, the proposal and reference 3 align closely across all the metrics, suggesting a close or identical prioritization of functional aspects. These metrics encompass functionality and integration, control and operability, adaptability and usability, security and stability, and, finally, portability and compatibility. In these domains, the proposal and reference 3 reach parallel scores, implying that they may share similar design philosophies or operational objectives.

Figure 15.

Functional requirements compliance diagram. Ref 1: SSVEP-Based BCI Wheelchair Control System [43]; Ref 2: Brain-Computer Interface Controlled Robotic Gait Orthosis [44]; Ref 3: Wheelchair Automation by a Hybrid BCI System Using SSVEP and Eye Blinks [45]; Ref 4: EEG-Based BCIs: A Survey [46]; Ref 5: EEG Wheelchair for People of Determination [47]; Ref 6: BCI-Controlled Hands-Free Wheelchair Navigation with Obstacle Avoidance [48]; Ref 7: A Literature Review on the Smart Wheelchair Systems [49]; Ref 8: A Real-Time Control Approach for Unmanned Aerial Vehicles Using Brain-Computer Interface [50]; Ref 9: Real-Time Brain Machine Interaction via Social Robot Gesture Control [51].

Contrastingly, the other references display varied degrees of divergence from the proposed solution, with each reference presenting a unique profile of strengths and weaknesses. This diversity in scoring indicates differing emphases on the functional requirements, which may stem from alternative strategic focuses or target user needs. Such discrepancies underscore the necessity for a thorough analysis when benchmarking against multiple frameworks to ensure that the chosen reference aligns with the specific goals and constraints of the project at hand.

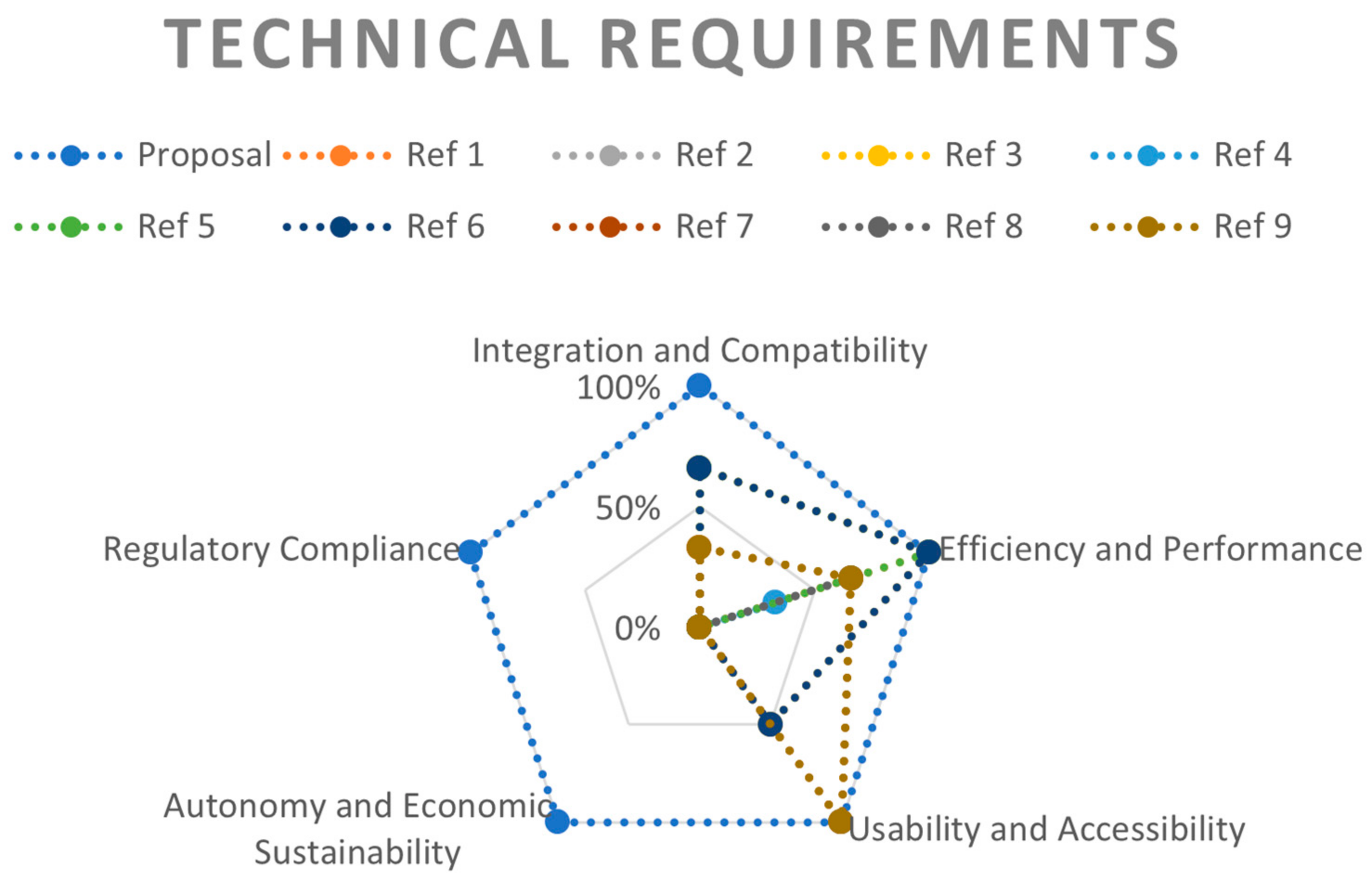

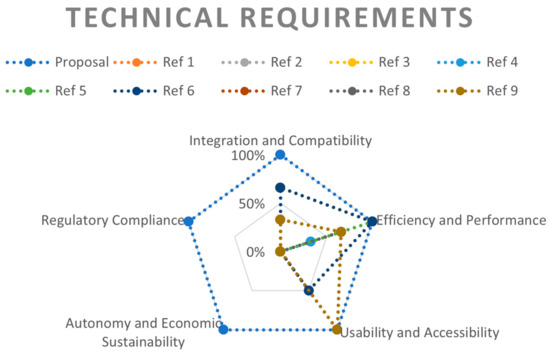

In Figure 16, the spider chart delineates a comparative assessment of technical requirements for a proposal against a suite of references. It becomes apparent that the proposal is particularly strong in regulatory compliance, suggesting a conscientious alignment with relevant laws and standards. However, in other technical domains, such as efficiency and performance, usability and accessibility, and autonomy and economic sustainability, the proposal does not reach the benchmark set by some references. This is indicative of a potential trade-off that has been made in favour of compliance over other technical merits.

Figure 16.

Technical requirements compliance diagram. Ref 1: SSVEP-Based BCI Wheelchair Control System [43]; Ref 2: Brain-Computer Interface Controlled Robotic Gait Orthosis [44]; Ref 3: Wheelchair Automation by a Hybrid BCI System Using SSVEP and Eye Blinks [45]; Ref 4: EEG-Based BCIs: A Survey [46]; Ref 5: EEG Wheelchair for People of Determination [47]; Ref 6: BCI-Controlled Hands-Free Wheelchair Navigation with Obstacle Avoidance [48]; Ref 7: A Literature Review on the Smart Wheelchair Systems [49]; Ref 8: A Real-Time Control Approach for Unmanned Aerial Vehicles Using Brain-Computer Interface [50]; Ref 9: Real-Time Brain Machine Interaction via Social Robot Gesture Control [51].

Differences among the references themselves are also noticeable, with some excelling in areas where others are less capable. Reference 7, for example, scores highly in integration and compatibility, indicating a focus on harmonious system incorporation. In contrast, other references, such as Ref 1 and Ref 9, seem to prioritize efficiency and performance. These variances highlight the diversity of technical strategies and priorities within the field and the importance of carefully selecting a reference that aligns with the specific technical aspirations of a project.

As Table 8 and Table 9 and the diagrams in Figure 15 and Figure 16 show, with respect to the functional requirements, some of the papers, specifically Ref. 3, could be considered to meet the parameters proposed by our architecture. However, Ref. 3 falls substantially short of the technical objectives; therefore, none of the compared works meets all the specifications and requirements that our architecture proposes as a solution.

Table 8.

Comparative table of functional requirements.

Table 9.

Comparative table of technical requirements.

5.2. Analysis of the Requirement Fulfillment

Our architecture meets all the functional and technical requirements detailed in Table 1 and Table 2 of this publication, as shown in the checklists of Table 8 and Table 9. Table 10 shows the degree of compliance with the functional requirements and the justification for compliance.

Table 10.

Functional requirements compliance tables.

Appendix B details the results on the fulfilment of functional and technical requirements by other works.

Appendix C details each of the requirements and their degree of compliance in relation to the functional issues.

Next, Table 11 verifies that the proposed architecture also complies with the technical requirements.

Table 11.

Technical requirements compliance tables.

Appendix D details each of these requirements and their degree of compliance in relation to technical issues.

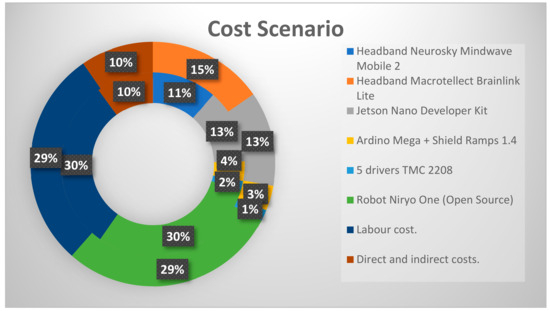

5.3. Economic Viability of the Proposed Architecture

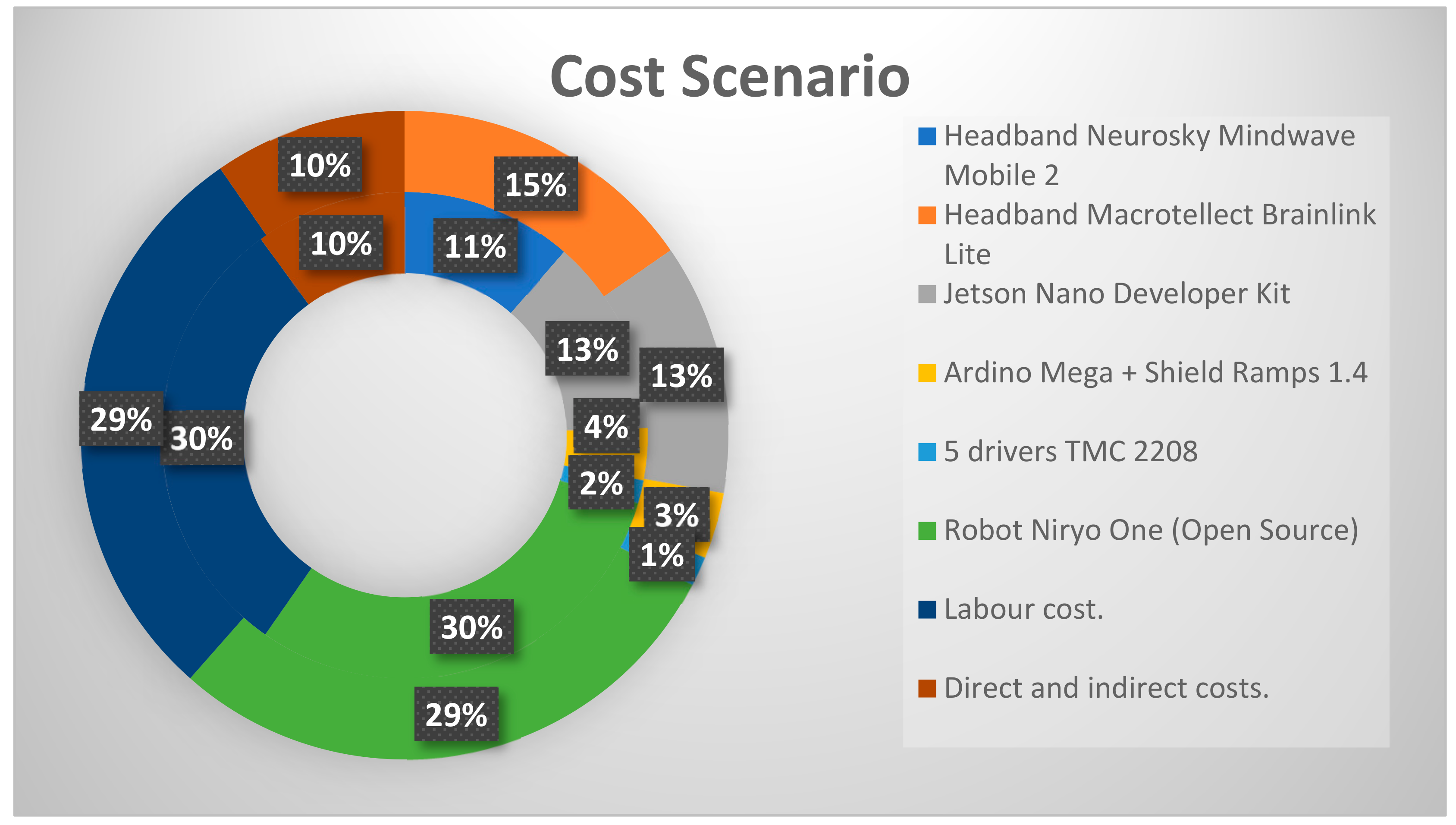

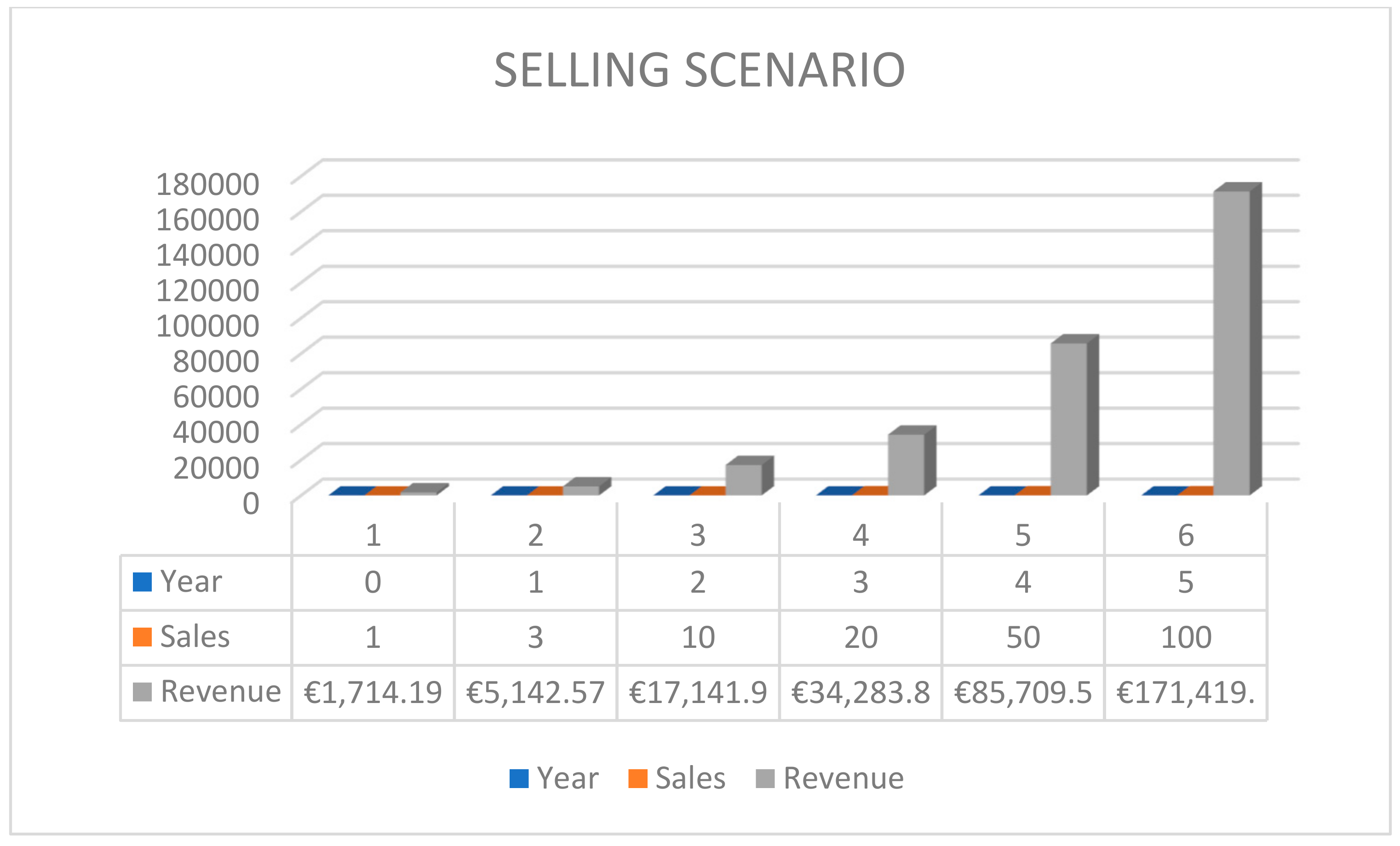

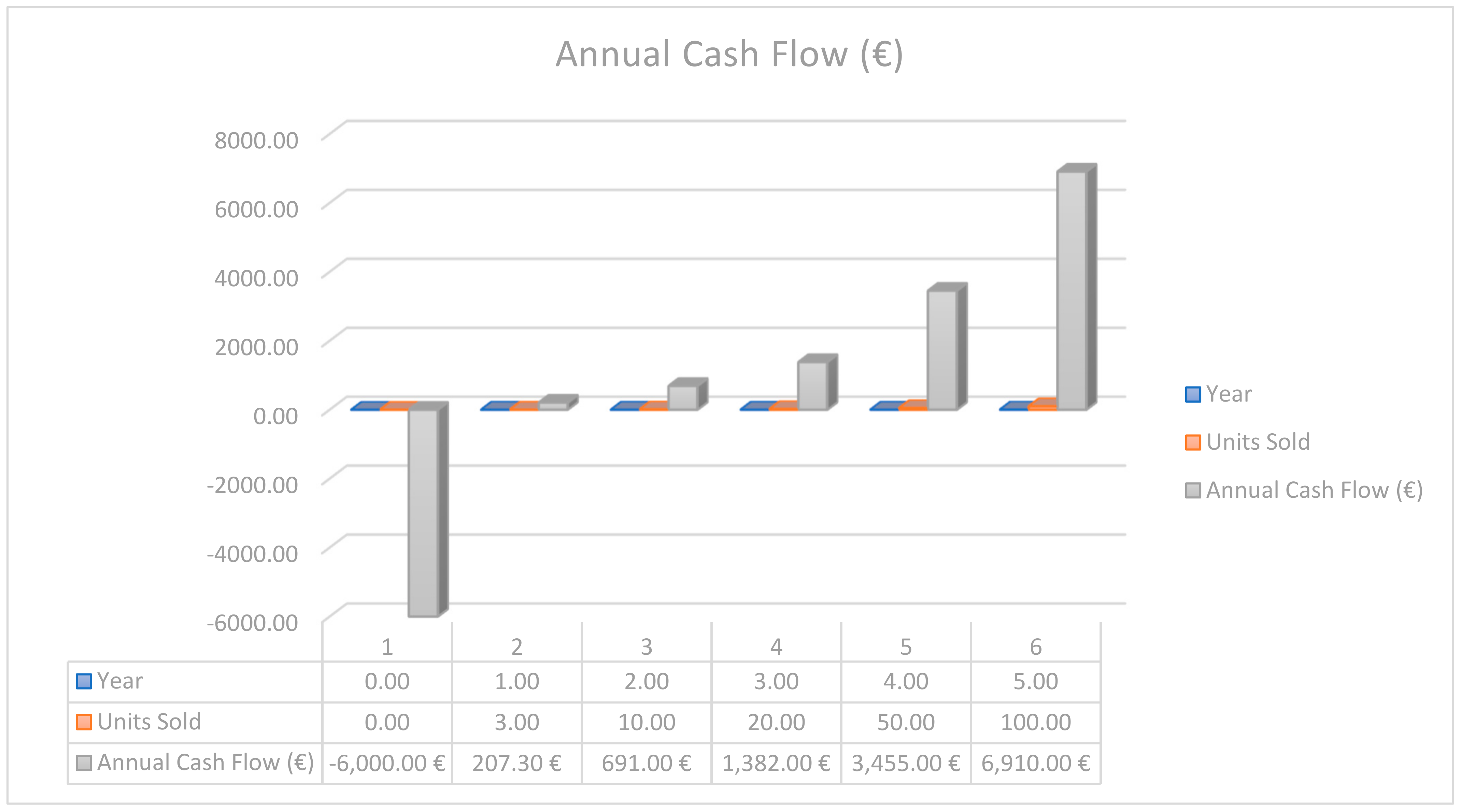

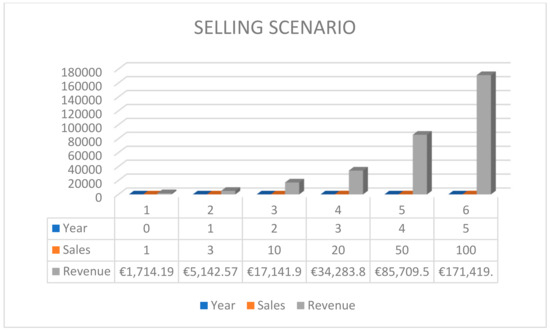

An economic and financial analysis was carried out, considering a scenario of device sales, with the results shown in the following figures and tables.

Figure 17.

Cost of production scenario.

Figure 18.

Selling scenario (5 years).

NPV, IRR, and ROI results for the sales scenario with an initial investment of EUR 6000, necessary for the first year of activity, assuming a discount rate (required rate of return) of 10%.

Table 12 shows the results of the analysis for the first 5 years, which are fundamental for the survival of the business model.

Table 12.

Financial results.

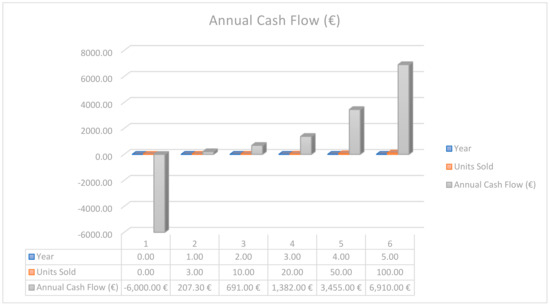

Figure 19 shows the evolution of the annual cash flow for each of the 5 years, with the updated initial investment of EUR 6000.

Figure 19.

Annual cash flow (5 years).

The annual cash flow is the net amount remaining after variable costs are subtracted from the revenue generated by unit sales each year. The initial investment appears in Year 0 as a negative cash flow, after which the annual cash flow increases progressively as sales grow.
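The indicators in Table 12 follow from these yearly cash flows. The sketch below shows the standard NPV formula, an IRR found by bisection, and one common definition of simple ROI (net gain over initial investment); the paper does not state which ROI definition it uses, and the actual cash flows from Figure 19 are not reproduced here, so the functions should be called with those figures, the Year 0 flow being −6000 and the rate 0.10.

```python
from typing import Sequence


def npv(rate: float, cash_flows: Sequence[float]) -> float:
    """Net present value; cash_flows[0] is the Year 0 flow (the negative investment)."""
    return sum(cf / (1.0 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows: Sequence[float], low: float = -0.99, high: float = 10.0,
        tol: float = 1e-9, max_iter: int = 200) -> float:
    """Internal rate of return by bisection; assumes NPV changes sign once on [low, high]."""
    f_low = npv(low, cash_flows)
    if f_low * npv(high, cash_flows) > 0:
        raise ValueError("NPV does not change sign on the search interval")
    for _ in range(max_iter):
        mid = (low + high) / 2.0
        f_mid = npv(mid, cash_flows)
        if abs(f_mid) < tol or (high - low) / 2.0 < tol:
            return mid
        if f_low * f_mid < 0:
            high = mid  # root lies in the lower half
        else:
            low, f_low = mid, f_mid  # root lies in the upper half
    return (low + high) / 2.0


def roi(cash_flows: Sequence[float]) -> float:
    """Simple, undiscounted ROI: net gain divided by the initial investment."""
    investment = -cash_flows[0]
    return (sum(cash_flows[1:]) - investment) / investment
```

Bisection is used instead of Newton's method because it cannot diverge, at the cost of needing a bracketing interval; the default bracket covers rates from −99% to 1000%.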

Beyond the numbers, the intangible benefits of BCI technology are equally compelling. The independence it affords individuals with physical disabilities is immeasurable, enhancing their quality of life and autonomy. A BCI system provides constant support, promoting self-reliance and emotional well-being, an advantage that human assistance alone cannot always provide. Although the upfront cost is substantial, potential grants and funding opportunities can alleviate this financial challenge. In sum, the long-term economic and emotional gains from a BCI system make a strong case for its adoption, transcending mere financial metrics and profoundly impacting users’ lives.

5.4. Legal and Technical Viability

To assess the legal and technical viability of a BCI project with applications in robotics and assisted mobility, it is essential to carefully consider the following aspects.

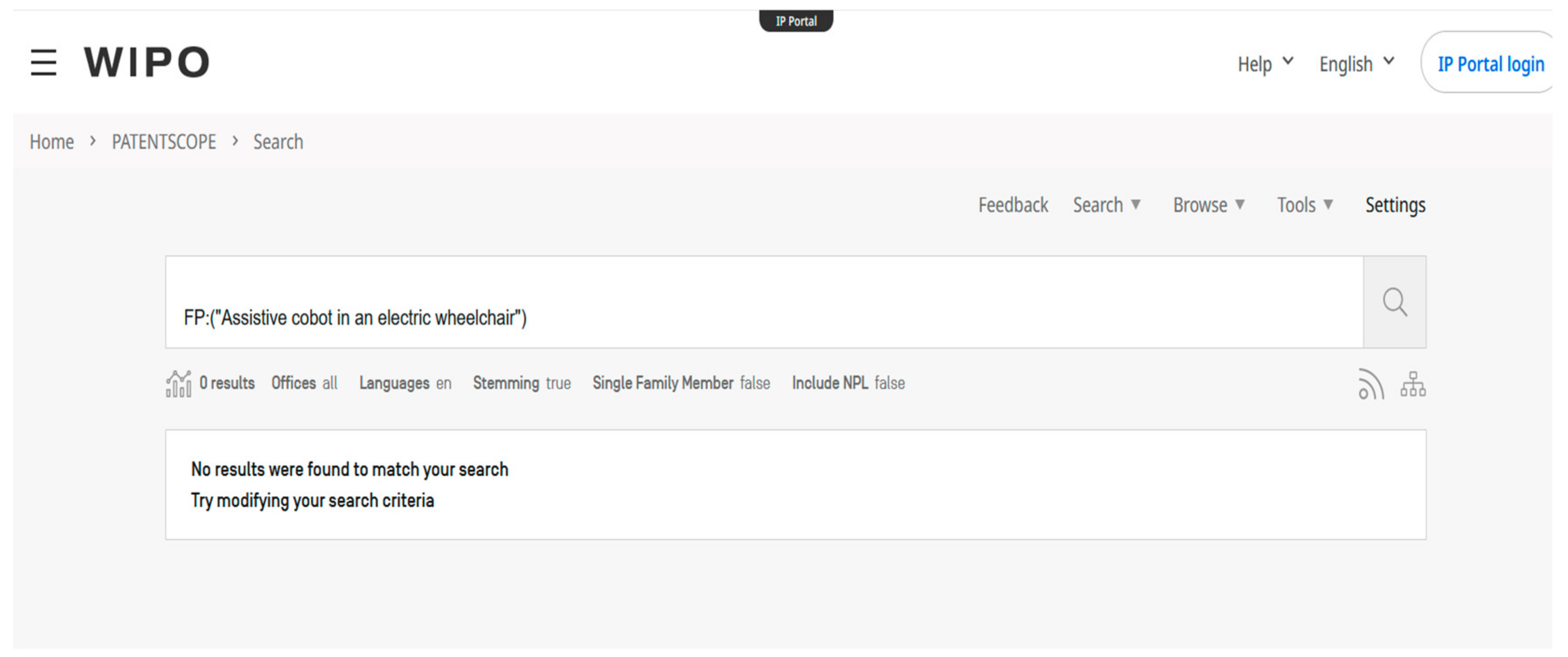

- Intellectual Property Protection:

No patent or other form of intellectual property protection is planned for the development at the moment. A review of existing patents found no similar models, which reduces the risk of litigation for intellectual property infringement.

We reviewed possible patents and utility models in the European Patent Office (EPO) databases and, specifically, in PATENTSCOPE (Worldwide Inventions), among the available web patent search engines, using the following search parameters [52,53]:

- Search 1: “Assistive cobot in an electric wheelchair”; 0 results.

- Search 2: “Assistive cobot in a motorised wheelchair”; 0 results.

- Search 3: “Motorised wheelchair with integrated robot”; 33 results, none similar.

Figure 20, Figure 21 and Figure 22 show the results of these searches in the PATENTSCOPE browser.

Figure 20.

Results of the 1st search on Patentscope.

Figure 21.

Results of the 2nd search on Patentscope.

Figure 22.

Results of the 3rd search on Patentscope.

6. Discussion

The use of low-cost commercial EEG headbands like Neurosky or Brainlink represents a significant advancement in the accessibility of BCI technology. These devices, although limited in terms of the number of electrodes and precision compared to more advanced EEG systems, offer a viable solution for applications where portability and cost are critical factors. The ability of these headbands to capture a range of brain signals, including Delta, Theta, Alpha, Beta, and Gamma waves, as well as attention and meditation metrics, makes them suitable for a variety of BCI applications, from device control to monitoring mental well-being.

Real-time preprocessing and classification of EEG signals are fundamental to the effectiveness of BCI systems. Techniques such as normalization, Fourier transformation, and wavelet transformation are essential for filtering noise and extracting significant features from EEG signals. These methods allow for more accurate and efficient classification, crucial for real-time applications like the control of motorized wheelchairs and cobots. Research in this field has demonstrated various strategies to improve accuracy and processing speed, vital for the practical implementation of these technologies.
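Because the TGAM already outputs band powers, Fourier analysis is mainly relevant when working from the raw signal. A minimal sketch of Fourier-based band-power extraction followed by normalization to relative band power is shown below. The band edges are common textbook values assumed here rather than those used in this work, a plain DFT is written out for clarity where `numpy.fft.rfft` would normally be used, and wavelet analysis is not covered.

```python
import cmath
import math
from typing import Dict, Sequence

# Assumed band edges in Hz (conventions vary between studies)
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 50.0),
}


def band_powers(window: Sequence[float], fs: float) -> Dict[str, float]:
    """Sum periodogram power inside each band for one window of raw samples."""
    n = len(window)
    mean = sum(window) / n
    x = [s - mean for s in window]  # remove the DC offset
    powers = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2 + 1):  # positive-frequency bins only
        freq = k * fs / n
        coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        power = abs(coeff) ** 2 / n
        for name, (low, high) in BANDS.items():
            if low <= freq < high:
                powers[name] += power
    return powers


def relative_powers(powers: Dict[str, float]) -> Dict[str, float]:
    """Normalize band powers so that they sum to 1 (relative band power)."""
    total = sum(powers.values()) or 1.0
    return {name: p / total for name, p in powers.items()}
```

Relative band power removes the overall signal amplitude, which varies with electrode contact, so that a classifier sees the shape of the spectrum rather than its absolute scale.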

The integration of systems such as Arduino Mega and Jetson Nano in BCI applications presents unique opportunities for device control. Arduino Mega, with its ability to handle multiple inputs and outputs, is ideal for controlling the mechanical aspects of wheelchairs and cobots. On the other hand, Jetson Nano, with its powerful data processing and machine learning capabilities, is suitable for real-time analysis of EEG signals. This combination of hardware allows for a smooth and efficient interaction between the user and the device, opening new possibilities in assistance for people with disabilities and automation.
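One way to divide the work is for the Jetson Nano to classify the EEG data and send compact commands to the Arduino Mega over a serial link. A sketch of such a command frame follows. The frame layout (start byte `0x7E`, command code, one value byte, XOR check byte) and the command names are hypothetical and are not the protocol used in this work; the Arduino-side decoding is mirrored in Python only so that the logic can be checked.

```python
from typing import Optional, Tuple

START = 0x7E  # hypothetical start-of-frame marker
# Hypothetical command codes shared by the Jetson and the Arduino firmware
COMMANDS = {"STOP": 0x00, "FORWARD": 0x01, "BACKWARD": 0x02,
            "LEFT": 0x03, "RIGHT": 0x04, "ARM_PRESET": 0x10}
CODES = {code: name for name, code in COMMANDS.items()}


def encode(command: str, value: int = 0) -> bytes:
    """Build a 4-byte frame: start, command code, value, XOR check byte."""
    if not 0 <= value <= 255:
        raise ValueError("value must fit in one byte")
    code = COMMANDS[command]
    return bytes([START, code, value, code ^ value])


def decode(frame: bytes) -> Optional[Tuple[str, int]]:
    """Return (command, value), or None if the frame is malformed."""
    if len(frame) != 4 or frame[0] != START:
        return None
    code, value, check = frame[1], frame[2], frame[3]
    if code ^ value != check or code not in CODES:
        return None  # corrupted or unknown frames are ignored
    return CODES[code], value
```

On the Jetson, a frame could be written with pySerial, for example `serial.Serial("/dev/ttyACM0", 115200).write(encode("FORWARD", 120))`, where the port name and baud rate are assumptions. The Arduino would read four bytes, verify the check byte, and discard malformed frames rather than act on them.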

In summary, research in the field of brain–computer interfaces using low-cost EEG headbands and their integration with control systems like Arduino and Jetson Nano shows great potential. Although there are limitations in terms of precision and processing capacity, advances in signal processing and classification techniques are opening new avenues for practical and accessible applications in this field.

7. Conclusions and Future Work

Research in the field of BCIs using low-cost EEG headbands, such as Neurosky or Brainlink, that read brain signals from the prefrontal area has proven promising for a variety of practical applications. These accessible, portable, and non-invasive devices offer a viable solution for capturing brain signals, including a range of brain waves and attention and meditation metrics computed by the headbands’ own algorithm. Despite their limitations compared to more advanced EEG systems, these headbands are sufficient for many BCI applications, especially in contexts where portability, ease of use, and cost are critical considerations.

Real-time preprocessing and classification of EEG signals are crucial to the effectiveness of BCI systems. Brain signal processing techniques, such as normalization, Fourier transformation, and wavelet transformation, play an essential role in improving the accuracy and efficiency of classification. These methods extract relevant features from EEG signals, which is fundamental for real-time applications; in this system, the filtering stage is carried out in the EEG headband itself by its TGAM (ThinkGear ASIC Module). Research in this field has provided valuable insights into how to improve the speed and accuracy of signal processing, which is vital for the practical implementation of BCI technologies.

The integration of systems like Arduino Mega and Jetson Nano in BCI applications has opened new possibilities for device control. While Arduino Mega is ideal for handling the mechanical aspects of devices like motorized wheelchairs and cobots, Jetson Nano, with its data processing and machine learning capabilities, is suitable for real-time analysis of EEG signals and for managing the control environment through ROS and ROS-Neuro. This combination of hardware facilitates a smooth and efficient interaction between the user and the device, which is crucial for practical applications in assisting people with disabilities and in automation.

Regarding the control of motorized wheelchairs, research has shown that BCI systems integrated with proportional controls can drive a wheelchair using brain signals, opening new possibilities for people with severe motor disabilities and offering them greater independence and quality of life.
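A minimal sketch of a proportional control of this kind maps an attention-type level (0 to 100) to a forward speed, with a deadband below a threshold and a per-cycle rate limit for comfort. The function names, threshold, maximum speed, and step size are hypothetical values, not parameters of the prototype described here.

```python
def proportional_speed(level: float, threshold: float = 50.0,
                       max_level: float = 100.0, max_speed: float = 0.8) -> float:
    """Map a 0-100 level (e.g. attention) to a forward speed in m/s.

    Below the threshold the chair stays still (deadband); above it the speed
    grows linearly up to max_speed at max_level.
    """
    if level <= threshold:
        return 0.0
    level = min(level, max_level)  # saturate out-of-range readings
    return max_speed * (level - threshold) / (max_level - threshold)


def rate_limit(current: float, target: float, max_step: float = 0.1) -> float:
    """Limit the change in commanded speed per control cycle to avoid jerks."""
    step = max(-max_step, min(max_step, target - current))
    return current + step
```

The deadband keeps small fluctuations of the level from moving the chair, while the rate limit spreads any change in speed over several control cycles.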

This research indicates, at a theoretical level, that cobots such as the open-source model from Niryo, controlled through open-source ROS platforms (ROS-Neuro) in combination with EEG/BCI systems, represent a promising area of application. These cobots, designed to work alongside humans, can be controlled by brain signals to perform complex tasks, which has significant implications for industry and especially for personal assistance.

In conclusion, research in the field of brain–computer interfaces using low-cost EEG headbands and their integration with control systems like Arduino and Jetson Nano shows great potential for practical and accessible applications. As this publication shows, these developments have the potential not only to improve the quality of life of people with neurodegenerative diseases but also to open new opportunities in automation and the control of physical devices.

While the current research has focused on mobility assistance and robotic control, future work could explore other potential applications of low-cost BCI systems. This includes areas such as mental health monitoring, neurofeedback therapy, and integration with virtual and augmented reality for various educational and entertainment purposes.

By focusing on this area, the field of brain–computer interfaces can continue to evolve, offering increasingly effective and user-friendly solutions that enhance the lives of individuals, particularly those with disabilities, and pave the way for innovative applications across various sectors.

Author Contributions

Conceptualization, F.R.; methodology, J.E.S. and J.M.C.; formal analysis, F.R.; investigation, F.R., J.E.S. and J.M.C.; data curation, F.R.; data, F.R.; writing (original draft preparation), F.R.; writing (review and editing), J.E.S. and J.M.C.; visualization, J.E.S.; supervision, J.E.S. and J.M.C.; project administration, F.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Statement of the Bioethics Committee

The study was conducted in accordance with ethical standards, in particular with the Declaration of Helsinki and other applicable regulations, the Universal Declaration on Bioethics and Human Rights (UNESCO, 2005), the General Data Protection Regulation of the European Union (Regulation 2016/679), and Organic Law 3/2018, of 5 December, on the Protection of Personal Data and Guarantee of Digital Rights. It was approved by the Bioethics Committee of the University of Burgos, U05100001, Burgos (Spain) (approval code: REGAGE24s00009305342; date of approval: 5 February 2024).

Informed Consent Statement

Informed consent was obtained from all volunteers participating in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| BCI | Brain–Computer Interface |
| hBCI | Hybrid Brain–Computer Interface |
| TGAM | ThinkGear ASIC Module |
| MI | Motor Imagery |
| SSVEP | Steady-State Visually Evoked Potential |
| EEG | Electroencephalography |
| EMG | Electromyography |
| EOG | Electrooculography |
| AI | Artificial Intelligence |
| CNNs | Convolutional Neural Networks |
| RNNs | Recurrent Neural Networks |
| LSTM | Long Short-Term Memory |
| PID | Proportional–Integral–Derivative |
| GPUs | Graphics Processing Units |
| GPIO | General-Purpose Input/Output |
| STL | Standard Triangle Language |
| ISO | International Organization for Standardization |

Appendix A. Functional and Technical Requirements

This appendix provides the details and additional data associated with the functional and technical requirements identified for the proposed architecture, together with their justification.

Appendix A.1. Functional Requirements

- EEG signal capture

Using high-quality EEG headsets helps ensure that the user’s intentions are correctly translated into actions, avoiding frustration. This is crucial in terms of ergonomics, as it reduces the need for extra effort or repeated attempts to perform a task, improving user comfort and efficiency. In addition, specialized and comfortable hardware minimizes discomfort during prolonged use, which is especially important for users with disabilities who may have additional sensitivities or mobility limitations.

- Real-time interpretation of EEG signals

Real-time interpretation of EEG signals is vital for a smooth and responsive user experience. When the system responds with minimal latency, users feel that they have immediate and natural control, similar to habitual body movements. This increases user confidence in the system, makes the interaction more intuitive, reduces cognitive load, and improves the ergonomics of the system. Sufficiently powerful hardware and efficient software make this rapid interpretation possible, so that the system remains user-friendly and accessible even for users with limited technological skills.
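Responsiveness has to be balanced against involuntary commands. One common technique, sketched here under assumed parameters, is a majority vote over the most recent classifier outputs: a command is issued only when it dominates a short window, and the system otherwise falls back to stopping. The class name, window length, vote threshold, and command labels are hypothetical.

```python
from collections import Counter, deque
from typing import Deque


class CommandSmoother:
    """Issue a command only when it dominates the most recent predictions."""

    def __init__(self, window: int = 5, min_votes: int = 4, default: str = "STOP"):
        self.history: Deque[str] = deque(maxlen=window)  # oldest entries drop out
        self.min_votes = min_votes
        self.default = default  # safe fallback when no command is stable

    def update(self, prediction: str) -> str:
        """Add the newest classifier output and return the command to execute."""
        self.history.append(prediction)
        command, votes = Counter(self.history).most_common(1)[0]
        return command if votes >= self.min_votes else self.default
```

A longer window makes the output more stable but delays the response by more classifier cycles, so the window length is effectively a latency budget.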

- Intuitive control of the robotic arm and wheelchair

Intuitive control of the robotic arm and wheelchair is essential for users with motor limitations to be able to operate the system independently and with confidence. A simplified user interface, designed with ergonomics in mind, facilitates adaptation to and learning of the system, making it accessible even for users with little experience in technology. Integration with commercial motorized wheelchair joysticks offers a familiar control option, which can significantly improve comfort and reduce the stress of learning a new system.

- Safety and stability of movement

Safety and stability of movement are critical for the user’s peace of mind and confidence. Control systems and safety sensors, such as LiDAR, provide an additional layer of protection by checking that the surroundings are safe to move in. This preventive approach is essential to avoid accidents, which is of utmost importance for users who may have limited mobility or a reduced capacity to react to dangerous situations. A safety-oriented design allows users to operate the system without fear of injury or damage.
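A minimal sketch of such a safety layer replaces a forward command with a stop whenever any LiDAR return in a frontal sector is closer than a stop distance. The scan is described by a list of ranges plus a start angle and an angular increment, mirroring the fields of the ROS `sensor_msgs/LaserScan` message; the function names, sector width, stop distance, and the convention that 0 rad points straight ahead are assumptions.

```python
import math
from typing import Sequence


def forward_clear(ranges: Sequence[float], angle_min: float, angle_increment: float,
                  sector_deg: float = 30.0, stop_distance: float = 0.6) -> bool:
    """True if no valid return inside the frontal sector is nearer than stop_distance (m)."""
    half_sector = math.radians(sector_deg) / 2
    for i, r in enumerate(ranges):
        angle = angle_min + i * angle_increment  # 0 rad assumed to point straight ahead
        # Skip invalid readings (inf/NaN) and only consider the frontal sector
        if abs(angle) <= half_sector and math.isfinite(r) and 0.0 < r < stop_distance:
            return False
    return True


def gate_command(command: str, ranges: Sequence[float], angle_min: float,
                 angle_increment: float) -> str:
    """Replace a forward command with STOP when the frontal sector is blocked."""
    if command == "FORWARD" and not forward_clear(ranges, angle_min, angle_increment):
        return "STOP"
    return command
```

Because the gate sits after the EEG classification stage, it overrides a forward command regardless of how confident the classifier is.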

- Adaptability to Different Users

Adaptability ensures that the system is inclusive and effective across a wide range of needs and preferences. The system must be usable by patients with neurodegenerative diseases or reduced mobility, by individuals who have suffered spinal cord injuries, and even by patients in postoperative stages. This adaptability will be achieved through machine learning algorithms that adjust to the individual characteristics of each user, allowing the system to be personalized to the specific ergonomic needs of each individual and resulting in a more comfortable and effective experience. Precise, personalized adaptation is key to ensuring that all users, regardless of their abilities or limitations, can interact with the system effectively and comfortably.
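One simple ingredient that this kind of per-user adaptation can rely on is per-user feature normalization, so that a classifier sees EEG features on a comparable scale across users. The sketch below fits a z-score normalization on a short calibration recording; it assumes a resting calibration session, the class and feature names are hypothetical, and it is not the neural network used in this work.

```python
from statistics import mean, pstdev
from typing import Dict, List, Tuple


class UserCalibration:
    """Per-user z-score normalization fitted on a short calibration recording."""

    def __init__(self) -> None:
        self.stats: Dict[str, Tuple[float, float]] = {}

    def fit(self, samples: List[Dict[str, float]]) -> "UserCalibration":
        """Estimate each feature's mean and spread from calibration samples."""
        for name in samples[0]:
            values = [s[name] for s in samples]
            spread = pstdev(values)
            # Guard against constant features to avoid division by zero
            self.stats[name] = (mean(values), spread if spread > 0 else 1.0)
        return self

    def transform(self, sample: Dict[str, float]) -> Dict[str, float]:
        """Express a new sample in units of this user's own variability."""
        return {name: (sample[name] - mu) / sd for name, (mu, sd) in self.stats.items()}
```

The normalized features could then feed the classifier, and refitting the calibration at the start of each session could also absorb day-to-day changes in electrode contact.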

- Control of the Wheelchair’s Movements

The chair must perform at least the four basic movements (forward, backward, left, and right), as well as combinations of them, so that the preset movements can be executed. These directions can be associated with specific values of the Alpha signal, as seen in the study, where controlled variations of the Alpha signal allow a user to select the direction in which they wish to move. In our case, the wheelchair’s movement is selected using more than one signal, including the attention and meditation values computed by the headband’s own algorithm [12].
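The basic movements and their combinations can be expressed as linear and angular velocity commands and converted to wheel speeds with standard differential-drive kinematics. The sketch below assumes a differential-drive chair, with hypothetical speed limits and track width; which EEG pattern selects each direction is outside its scope.

```python
from typing import Iterable, Tuple

# Hypothetical unit commands: (linear, angular), with angular > 0 turning left
BASIC = {
    "FORWARD": (1.0, 0.0),
    "BACKWARD": (-1.0, 0.0),
    "LEFT": (0.0, 1.0),
    "RIGHT": (0.0, -1.0),
}


def combine(directions: Iterable[str], max_linear: float = 0.5,
            max_angular: float = 0.8) -> Tuple[float, float]:
    """Add basic directions (e.g. FORWARD + LEFT) into one (v, w) command."""
    dirs = list(directions)
    v = sum(BASIC[d][0] for d in dirs)
    w = sum(BASIC[d][1] for d in dirs)
    # Clamp to [-1, 1] before scaling to the chair's speed limits
    v = max(-1.0, min(1.0, v)) * max_linear
    w = max(-1.0, min(1.0, w)) * max_angular
    return v, w


def wheel_speeds(v: float, w: float, track_width: float = 0.55) -> Tuple[float, float]:
    """Differential-drive kinematics: (left, right) wheel speeds in m/s."""
    return v - w * track_width / 2, v + w * track_width / 2
```

For example, FORWARD combined with LEFT yields a forward speed with a positive turn rate, so the right wheel runs faster than the left and the chair curves to the left.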

- Integration of the System with Commercial Chairs

The system must be able to integrate with commercial chairs, allowing users to adapt the motorized chair they already own. The adaptation must be possible regardless of the chair’s brand and model, always seeking maximum compatibility with the chairs and control methods available on the market.

- Portability and Low Weight (<100 g)

This is a necessary condition for the usability of the system. The headband or headbands must be light and comfortable enough for the user to sustain continuous use for at least 3 h, as indicated in the technical requirements below. The maximum acceptable weight has been set at 100 g, in line with the upper limit of approximately 100 g observed in other wearables.

- EEG/ECG Signal Quality Analysis

To verify that the device is working correctly, the headband must provide its own signal-quality indicator, which can even determine whether the user is wearing the headband. This value confirms that the system is in use and prevents spurious signals from being interpreted, and therefore prevents unwanted or involuntary movements, adding an extra layer of safety.
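In TGAM-based headbands this indicator is the ThinkGear poor-signal value: 0 indicates good contact, higher values indicate more noise, and 200 indicates that the electrodes are not touching the skin. The sketch below gates motion on that value and only re-enables it after several consecutive acceptable readings; the function and class names, the acceptance threshold, and the number of readings are hypothetical.

```python
OFF_HEAD = 200  # ThinkGear value meaning "electrodes not touching the skin"


def signal_state(poor_signal: int, max_acceptable: int = 25) -> str:
    """Classify one poor-signal reading (0 = clean contact)."""
    if poor_signal == OFF_HEAD:
        return "OFF_HEAD"
    if poor_signal > max_acceptable:
        return "NOISY"
    return "OK"


class SignalGate:
    """Allow motion only after several consecutive acceptable readings."""

    def __init__(self, max_acceptable: int = 25, required_good: int = 3):
        self.max_acceptable = max_acceptable
        self.required_good = required_good
        self.good_streak = 0

    def update(self, poor_signal: int) -> bool:
        """Return True if motion commands may be executed after this reading."""
        if signal_state(poor_signal, self.max_acceptable) == "OK":
            self.good_streak += 1
        else:
            self.good_streak = 0  # any bad reading blocks motion immediately
        return self.good_streak >= self.required_good
```

The asymmetry is deliberate: a single bad reading stops the system at once, while resuming requires sustained good contact, which favors safety over responsiveness.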

- Commercial Wireless Headbands

Following the same ergonomic approach, we propose using commercial devices that transmit the recorded brain activity data wirelessly, which facilitates their portability and connection with other devices without cables that would hinder the user experience.

- Preset Routines in the Actions of the Robot and Robot + Chair

Because certain actions are expected to be repeated over time (typical actions), some of them should be predefined to make the new tool easier for the user to control and adapt to.

Appendix A.2. Technical Requirements

- Effective Integration of BCI with Chair + Robot