A Novel Federated Learning Framework Based on Conditional Generative Adversarial Networks for Privacy Preserving in 6G

Abstract

1. Introduction

- (1) Integrating conditional generative adversarial networks (CGANs) into federated learning. Because the generator is conditioned on class labels, it can capture the feature distribution of each specific label, which protects client data privacy while maintaining good classification performance of the client models.

- (2) Introducing private extractors before public classifiers and retaining extractors locally to strengthen privacy measures.

- (3) Sharing only the generators with the server, which aggregates them to pool knowledge across clients and improve model performance.

- (4) Conducting extensive experiments to validate the performance of NFL-CGAN, demonstrating that it preserves privacy better than baseline FL methods.
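
The division of labour in contributions (2) and (3) can be sketched as one communication round. This is a minimal sketch under stated assumptions, not the authors' implementation: parameters are plain Python lists, `Client`, `aggregate_generators` and `federated_round` are hypothetical names, and the server is assumed to combine generators with a FedAvg-style average weighted by local sample count, since the aggregation rule is not given in this excerpt.

```python
from typing import Dict, List

# Parameters are modeled as flat lists of floats keyed by layer name;
# a real system would hold framework tensors instead.
Params = Dict[str, List[float]]


class Client:
    """Hypothetical client holding the three sub-models named in the text."""

    def __init__(self, extractor: Params, classifier: Params,
                 generator: Params, num_samples: int) -> None:
        self.extractor = extractor    # private: never leaves the client
        self.classifier = classifier  # stays local in this sketch
        self.generator = generator    # the only sub-model sent to the server
        self.num_samples = num_samples

    def upload(self) -> Params:
        # Send a copy so the server cannot mutate local state.
        return {name: list(values) for name, values in self.generator.items()}

    def download(self, global_generator: Params) -> None:
        self.generator = {name: list(v) for name, v in global_generator.items()}


def aggregate_generators(uploads: List[Params], weights: List[int]) -> Params:
    """Weighted element-wise average of generator parameters (assumed FedAvg-style)."""
    total = sum(weights)
    merged: Params = {}
    for name, first in uploads[0].items():
        merged[name] = [
            sum(w * up[name][i] for up, w in zip(uploads, weights)) / total
            for i in range(len(first))
        ]
    return merged


def federated_round(clients: List[Client]) -> Params:
    """One round: collect generators, aggregate them, broadcast the result."""
    uploads = [c.upload() for c in clients]
    global_generator = aggregate_generators(uploads, [c.num_samples for c in clients])
    for c in clients:
        c.download(global_generator)
    return global_generator


a = Client({"conv": [1.0]}, {"fc": [0.5]}, {"g": [1.0, 2.0]}, num_samples=100)
b = Client({"conv": [9.0]}, {"fc": [0.7]}, {"g": [3.0, 4.0]}, num_samples=300)
print(federated_round([a, b]))  # {'g': [2.5, 3.5]}
print(a.extractor, b.extractor)  # extractors untouched: {'conv': [1.0]} {'conv': [9.0]}
```

Leaving `extractor` out of `upload()` is what enforces the privacy boundary in this sketch; weighting by `num_samples` is one common choice and could be replaced by a uniform mean.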

2. Preliminaries and Related Work

2.1. Federated Learning

2.2. Generative Adversarial Network

2.3. Generative Adversarial Networks in Federated Learning

3. Methods

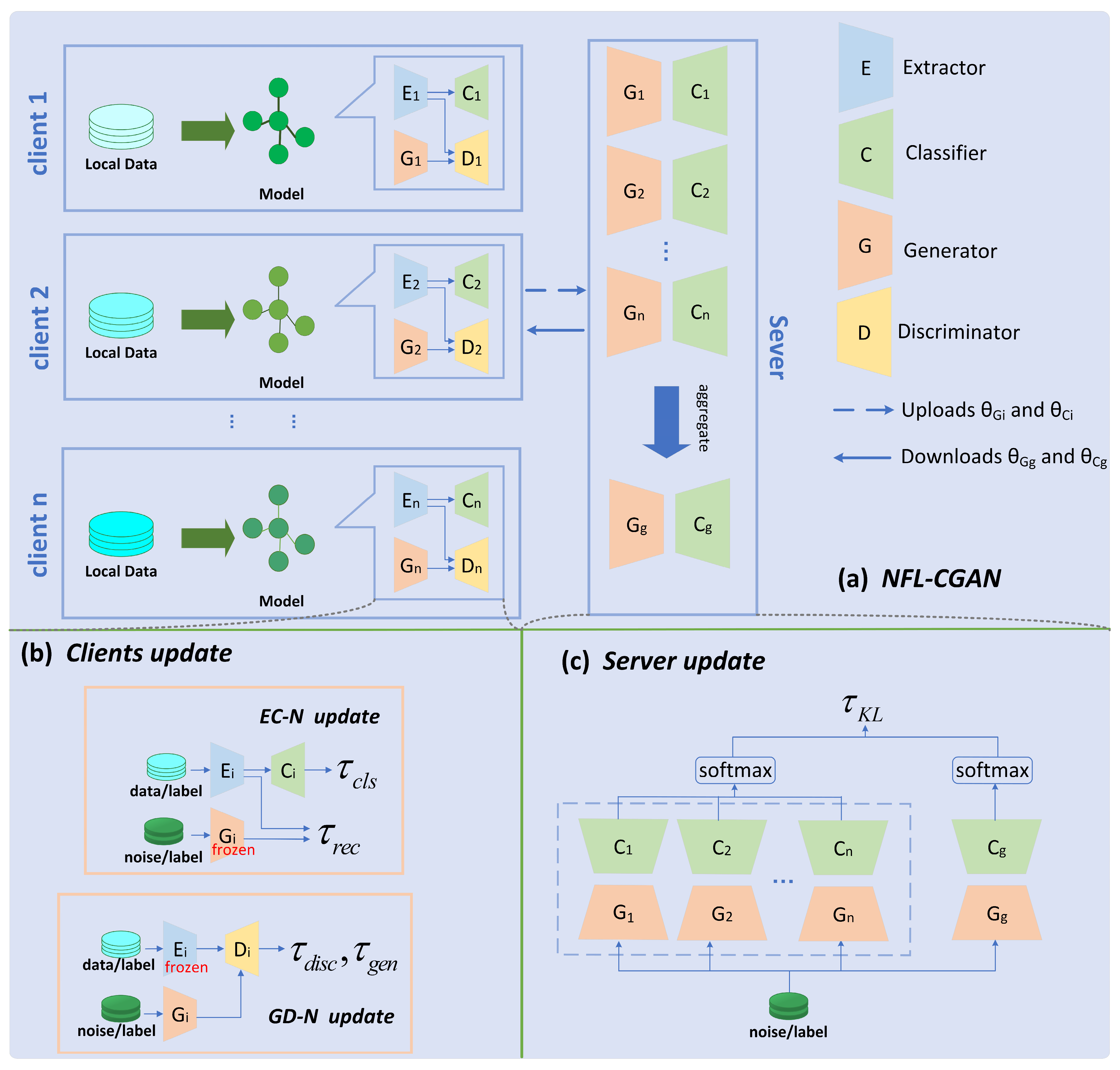

3.1. Overview

3.2. Collaboration Mechanisms of Clients

3.2.1. EC-N Update

3.2.2. GD-N Update

3.2.3. Server Update

4. Experiments

4.1. Datasets

4.2. Experimental Environment

4.3. Model Parameters

4.4. Experimental Setup

4.5. Evaluation Metrics

5. Experimental Results

5.1. Introduction to the Comparative Experiments

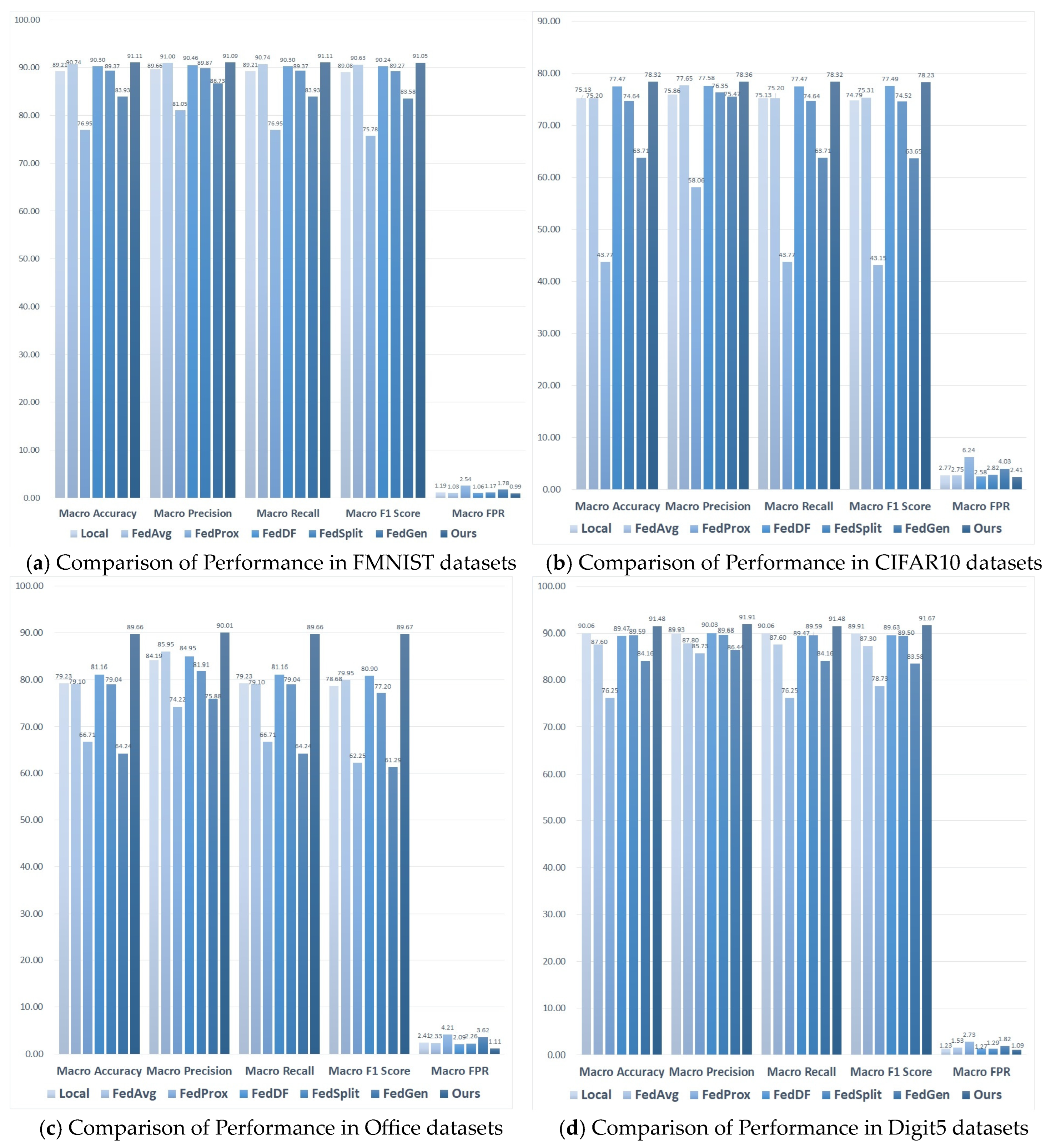

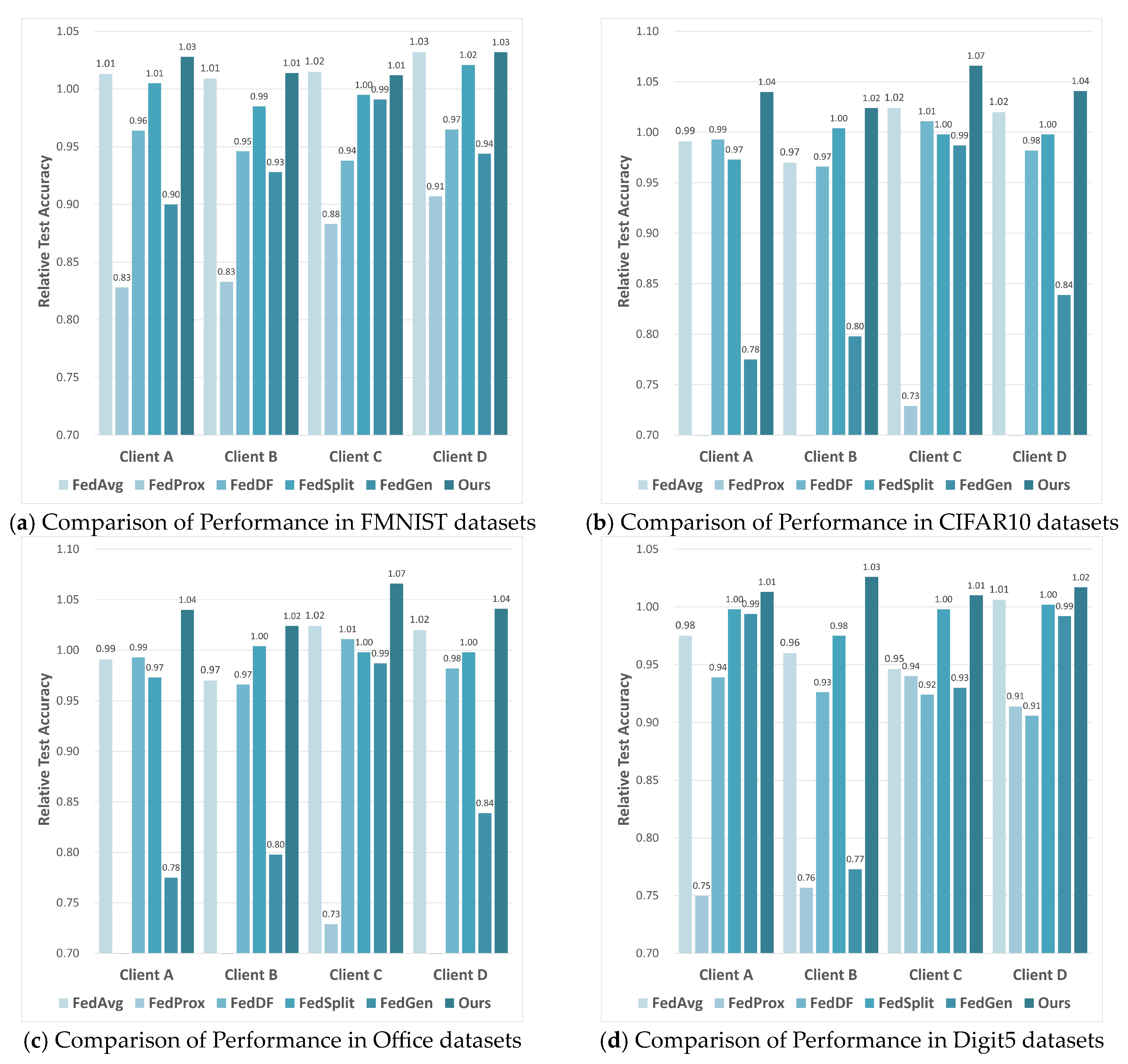

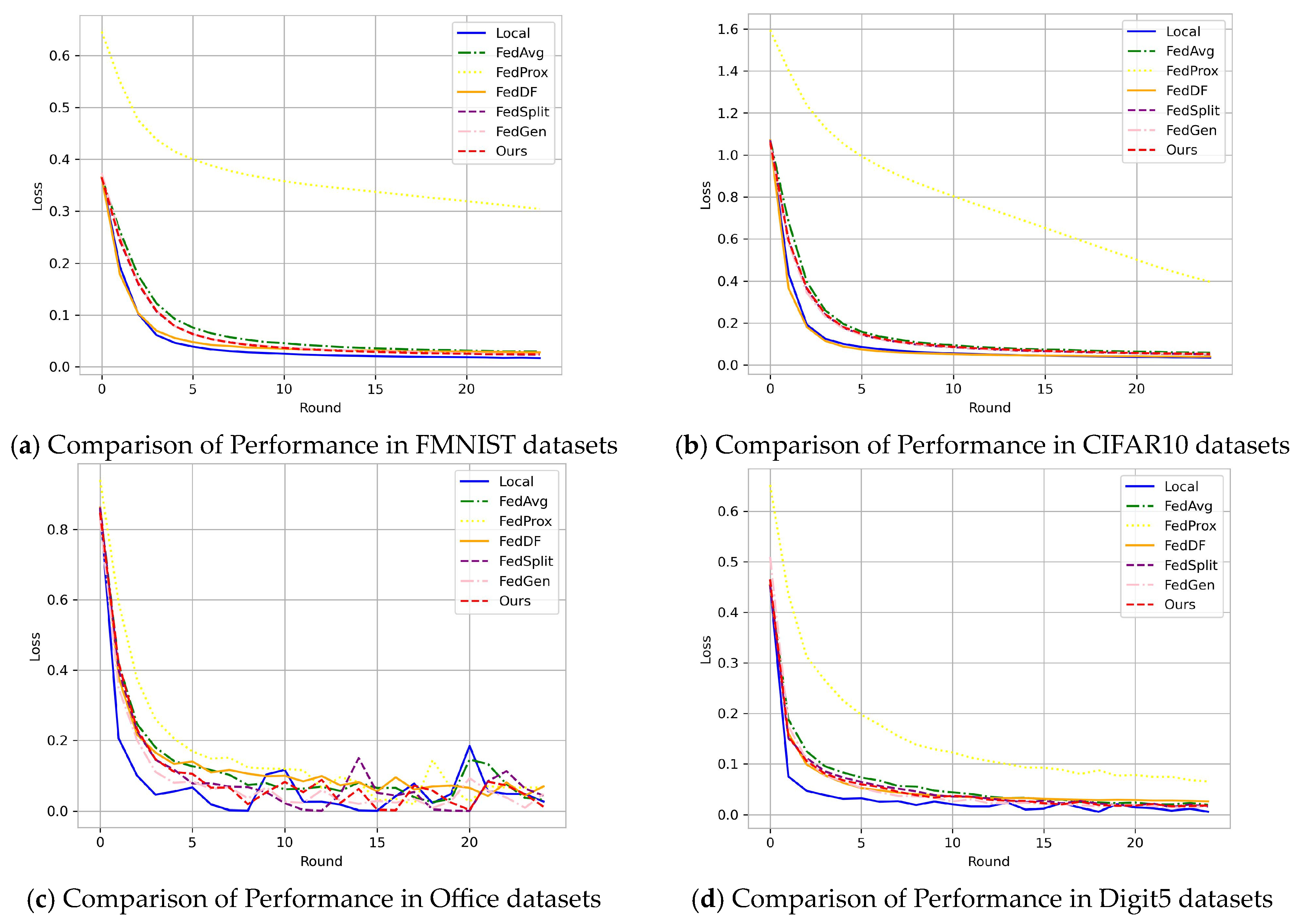

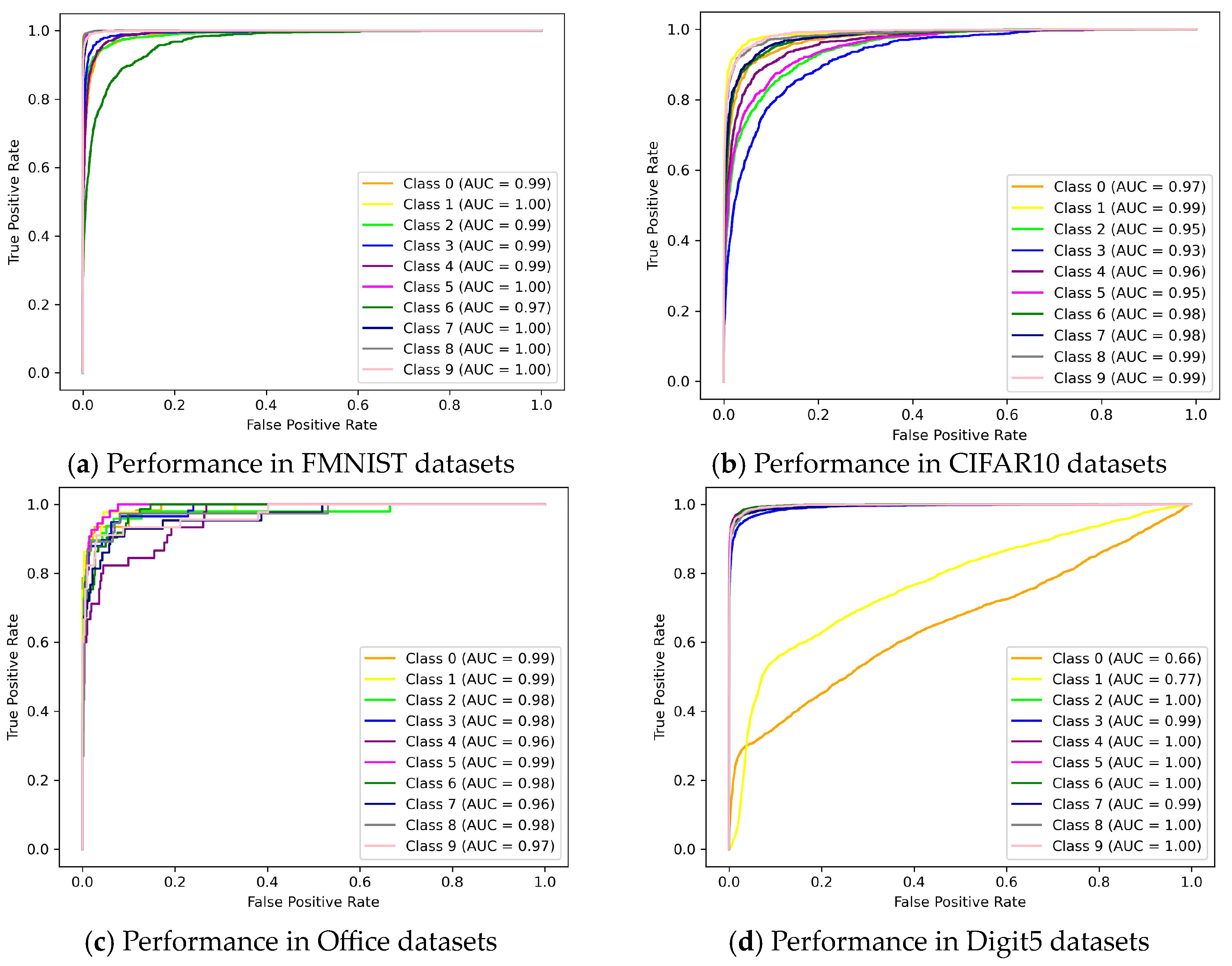

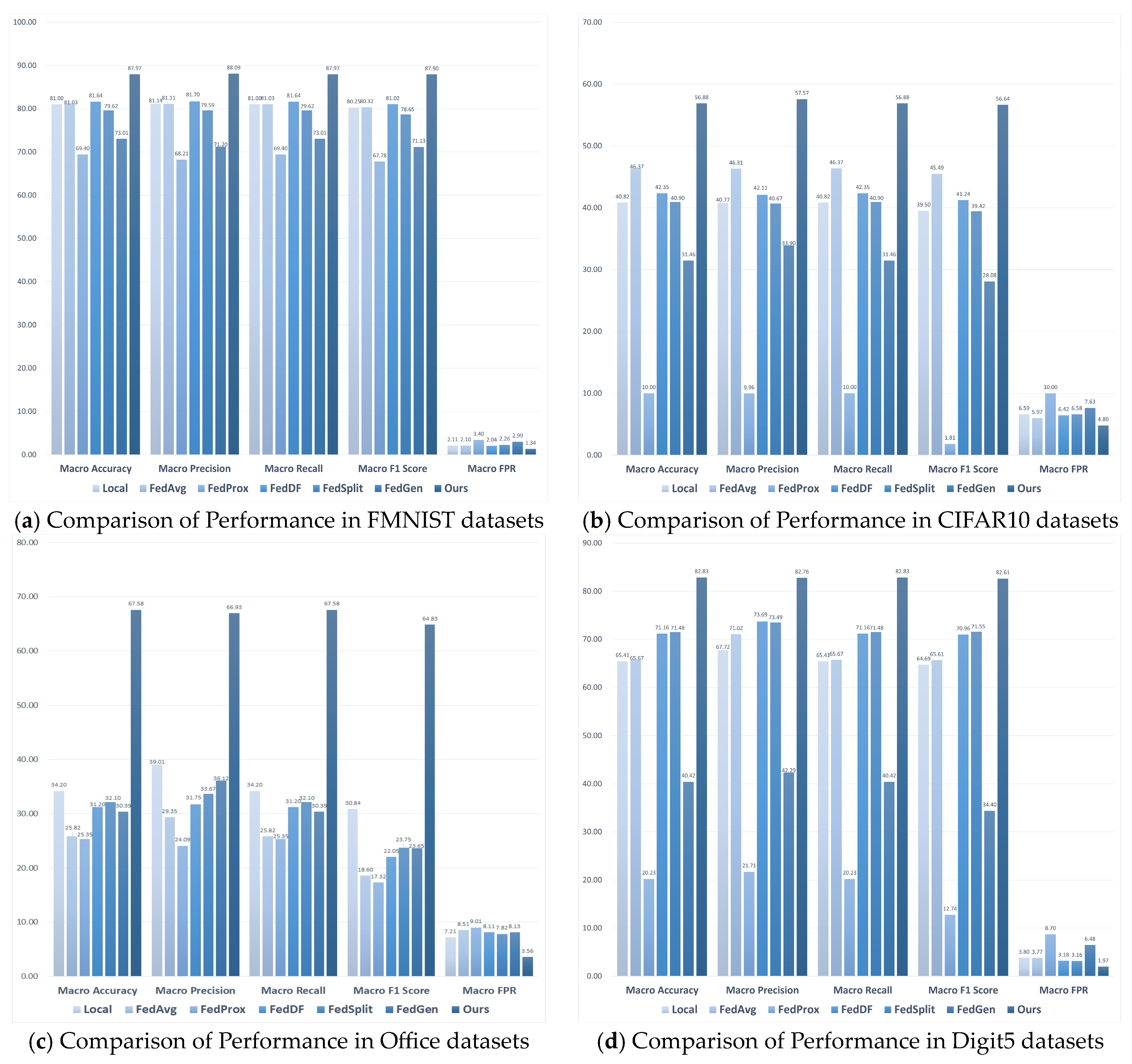

5.2. Experimental Results of Deep Residual Network

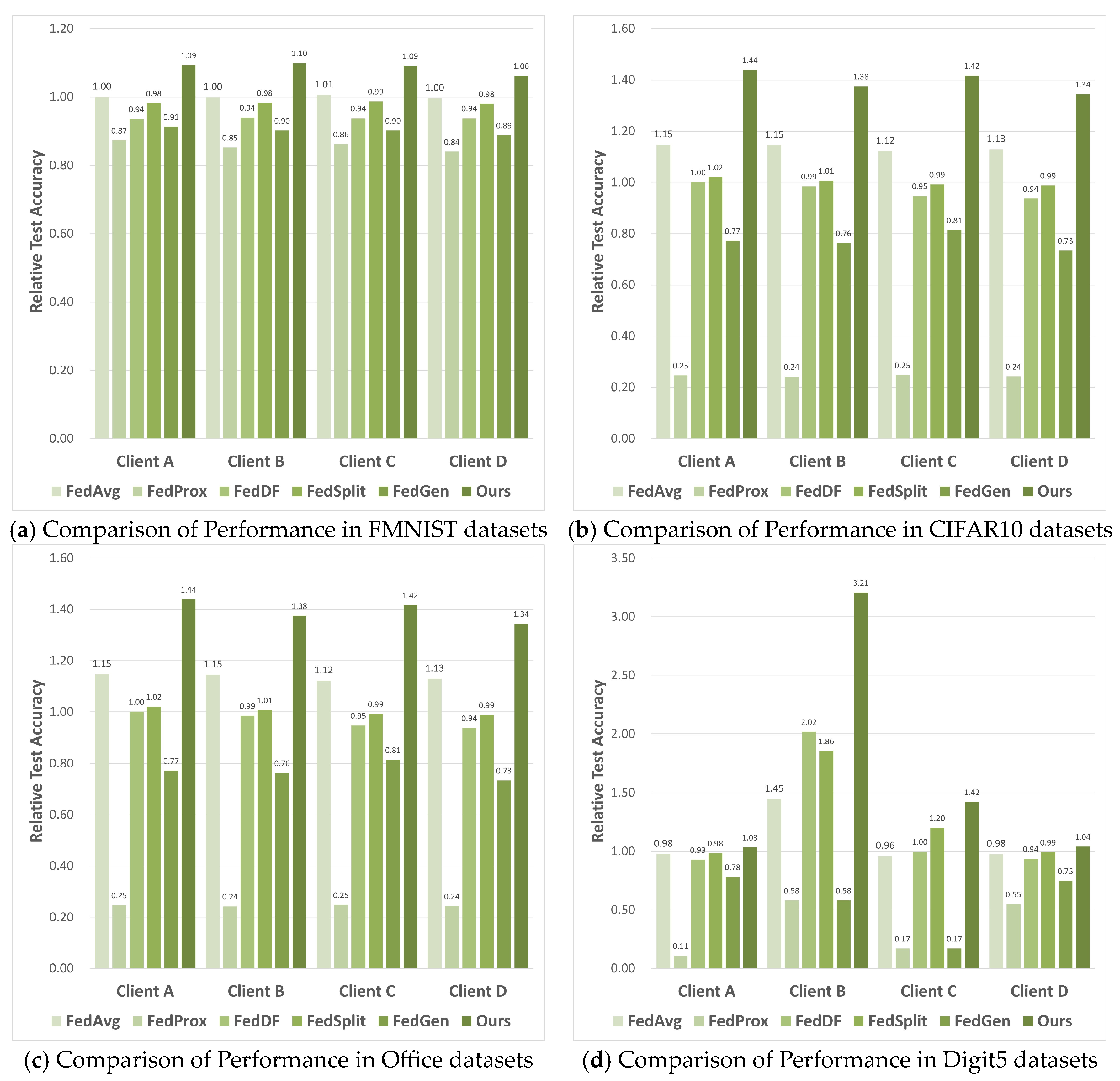

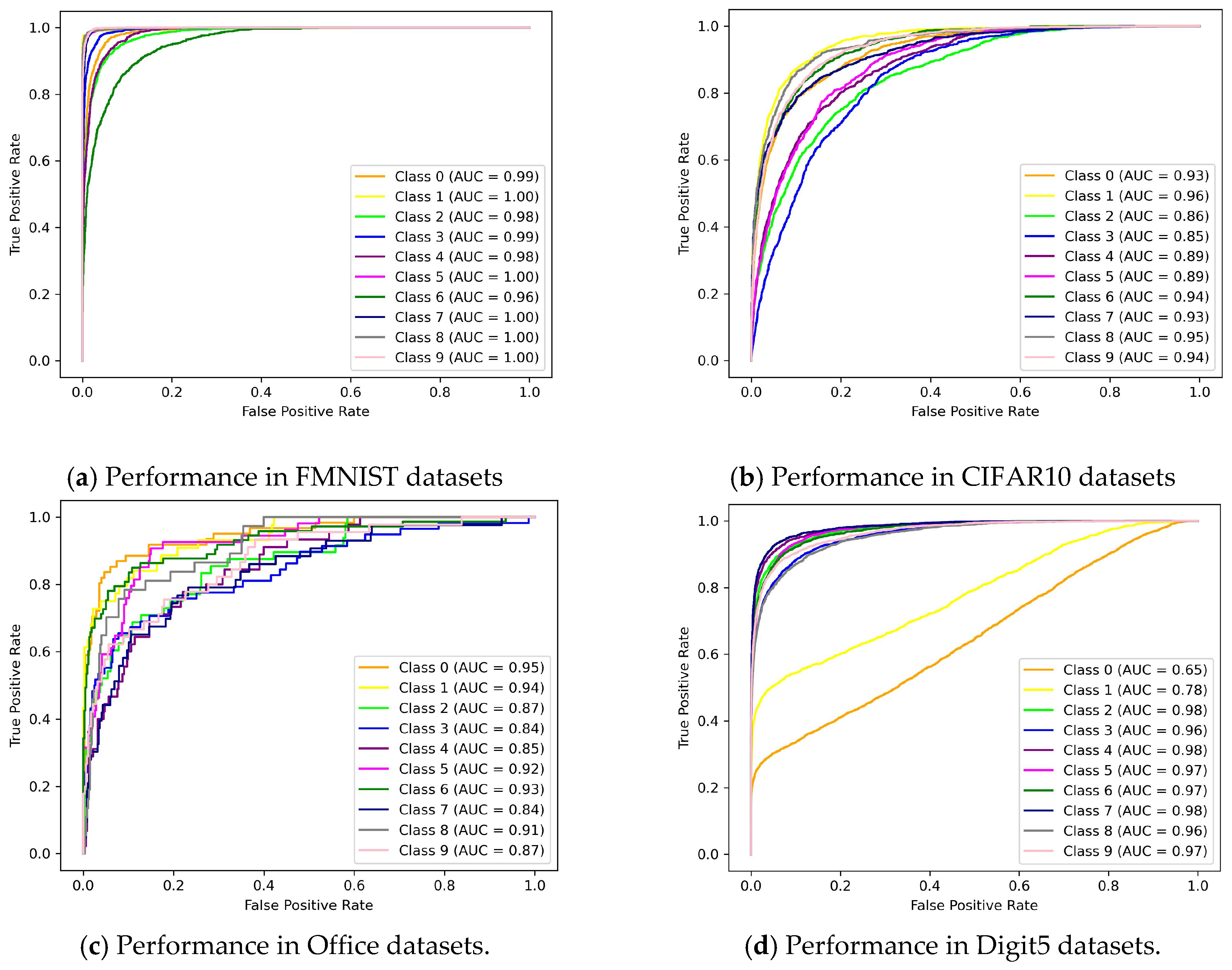

5.3. Experimental Results of Convolutional Neural Network

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Module | Layers and Hyperparameters |
|---|---|
| Extractor | (1) Layer 1: Conv2d (image_channel, 6, 5), AvgPool2d (2, 2), Sigmoid () (2) Layer 2: Conv2d (6, 16, 5), AvgPool2d (2, 2), Sigmoid () |
| Classifier | (1) Layer 1: Flatten () (2) Layer 2: Dropout (p = 0.2, inplace = False) (3) Layer 3: Linear (16 × 5 × 5, 120), Sigmoid () (4) Layer 4: Linear (120, 84), Sigmoid () (5) Layer 5: Linear (84, class_num) |
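
The input width of the classifier's first fully connected layer (after `Flatten` and `Dropout`) follows from the extractor's convolution and pooling arithmetic. The sketch below applies the standard output-length formula for `Conv2d` and `AvgPool2d` (no padding, dilation 1, floor rounding) to the two extractor layers in the table. The input resolutions are assumptions: 32 × 32 corresponds to CIFAR10 and 28 × 28 to native FMNIST, and this excerpt does not say whether images were resized.

```python
from typing import Tuple


def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output length along one spatial axis for Conv2d or pooling (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def cnn_extractor_shape(in_size: int) -> Tuple[int, int, int]:
    """(channels, height, width) after the two extractor layers, square input."""
    s = conv_out(in_size, kernel=5)      # Conv2d(image_channel, 6, 5)
    s = conv_out(s, kernel=2, stride=2)  # AvgPool2d(2, 2)
    s = conv_out(s, kernel=5)            # Conv2d(6, 16, 5)
    s = conv_out(s, kernel=2, stride=2)  # AvgPool2d(2, 2); Sigmoid keeps the shape
    return (16, s, s)


for size in (32, 28):
    c, h, w = cnn_extractor_shape(size)
    print(f"{size}x{size} input -> {c}x{h}x{w} features, flatten size {c * h * w}")
# 32x32 input -> 16x5x5 features, flatten size 400
# 28x28 input -> 16x4x4 features, flatten size 256
```

`Flatten` and `Dropout` leave the element count unchanged, so the first `Linear` layer has to accept whichever of these sizes matches the resolution actually fed to the model.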

| Module | Layers and Hyperparameters |
|---|---|
| Extractor | (1) Layer 1: Conv2d (3, self.inplanes, kernel_size = 7, stride = 2, padding = 3, bias = False) (2) Layer 2: Norm_layer (self.inplanes) (3) Layer 3: ReLU (inplace = True) (4) Layer 4: MaxPool2d (kernel_size = 3, stride = 2, padding = 1) (5) Layer 5: Residual block1 (block, 64) (6) Layer 6: Residual block2 (64, 128, stride = 2) (7) Layer 7: Sigmoid () |
| Classifier | (1) Layer 1: Residual block3 (block, 256, stride = 2) (2) Layer 2: Residual block4 (256, 512, stride = 2) (3) Layer 3: Avgpool (512) (4) Layer 4: Flatten (512, 1) (5) Layer 5: Linear (512 × block.expansion, num_classes) |
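
The same bookkeeping shows where the ResNet is split: the extractor ends after residual block 2, and the classifier's last layer is sized by `block.expansion` and `num_classes`. This sketch assumes torchvision-style residual blocks (3 × 3 convolutions with padding 1, downsampling through the first convolution's stride), a 64-channel stem (`self.inplanes = 64`, as in torchvision's ResNet), and an average pool that reduces each map to 1 × 1; none of these details is confirmed in this excerpt, and `resnet_shapes` is a hypothetical helper.

```python
from typing import List, Tuple


def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output length along one spatial axis for Conv2d or pooling (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def resnet_shapes(in_size: int, expansion: int = 1) -> List[Tuple[str, Tuple[int, ...]]]:
    """Feature shape after each stage for a square 3-channel input."""
    shapes = []
    s = conv_out(in_size, kernel=7, stride=2, padding=3)  # stem Conv2d
    shapes.append(("stem conv", (64, s, s)))
    s = conv_out(s, kernel=3, stride=2, padding=1)        # MaxPool2d
    shapes.append(("maxpool", (64, s, s)))
    shapes.append(("block1", (64 * expansion, s, s)))     # stride 1: size kept
    for name, channels in (("block2", 128), ("block3", 256), ("block4", 512)):
        s = conv_out(s, kernel=3, stride=2, padding=1)    # stride-2 first 3x3 conv
        shapes.append((name, (channels * expansion, s, s)))
    shapes.append(("avgpool + flatten", (512 * expansion,)))  # assumed 1x1 pool
    return shapes


for stage, shape in resnet_shapes(32):
    print(f"{stage:18s} {shape}")
```

For a 32 × 32 input this gives a 128 × 4 × 4 extractor output (block 2, before its `Sigmoid`) and a 512-dimensional vector before the final layer; with a 1 × 1 pool that width stays `512 × block.expansion` for any input resolution.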

| Module | Layers and Hyperparameters |
|---|---|
| Generator | (1) Layer 1: Embedding (args.num_classes, args.num_classes) (2) Layer 2: ConvTranspose2d (args.noise_dim + args.num_classes, 512, 2, 1, 0, bias = False) (3) Layer 3: BatchNorm2d (512) (4) Layer 4: LeakyReLU (0.2, inplace = True) (5) Layer 5: ConvTranspose2d (512, 256, 2, 1, 0, bias = False) (6) Layer 6: BatchNorm2d (256) (7) Layer 7: LeakyReLU (0.2, inplace = True) (8) Layer 8: ConvTranspose2d (256, 128, 2, 1, 0, bias = False) (9) Layer 9: BatchNorm2d (128) (10) Layer 10: LeakyReLU (0.2, inplace = True) 1, 2, 1, 0, bias = False) (12) Layer 12: Sigmoid () |
| Discriminator | (1) Layer 1: Embedding (args.num_classes, args.num_classes) (2) Layer 2: Spectral_norm (nn.Conv2d(args.feature_num + args.num_classes, 128, 2, 1, 0, bias = False)) (3) Layer 3: BatchNorm2d (128) (4) Layer 4: LeakyReLU (0.2, inplace = True) (5) Layer 5: Spectral_norm (nn.Conv2d(128, 256, 2, 1, 0, bias = False)) (6) Layer 6: BatchNorm2d (256) (7) Layer 7: LeakyReLU (0.2, inplace = True) (8) Layer 8: Spectral_norm (nn.Conv2d(256, 512, 2, 1, 0, bias = False)) (9) Layer 9: BatchNorm2d (512) (10) Layer 10: LeakyReLU (0.2, inplace = True) (11) Layer 11: Spectral_norm (nn.Conv2d(512, 1, 2, 1, 0, bias = False)) (12) Layer 12: Sigmoid () |
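
The generator and discriminator tables can be checked by hand in two respects: how the label condition enters each network, and how the spatial size evolves. In both networks an `Embedding(num_classes, num_classes)` output is concatenated with the other input along the channel axis, which is why the first layers take `noise_dim + num_classes` and `feature_num + num_classes` channels. The sketch below assumes, as in common DCGAN-style conditional GANs, that the noise is a 1 × 1 map and that the label embedding is tiled over the feature map's height and width before concatenation. It also assumes `noise_dim = 100` and `feature_num = 16` (the CNN extractor's output channels); the random table stands in for learned embedding weights, and all function names are hypothetical.

```python
import random
from typing import List

Map = List[List[float]]  # one H x W channel


def tconv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """ConvTranspose2d output length (dilation 1, output_padding 0)."""
    return (size - 1) * stride - 2 * padding + kernel


def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Conv2d output length (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def generator_input(noise: List[float], label: int, emb: List[List[float]]) -> List[float]:
    """Channel vector at the 1 x 1 input: noise followed by the label embedding."""
    return noise + emb[label]


def discriminator_input(features: List[Map], label: int,
                        emb: List[List[float]]) -> List[Map]:
    """Append one constant H x W channel per embedding entry to the features."""
    h, w = len(features[0]), len(features[0][0])
    label_maps = [[[value] * w for _ in range(h)] for value in emb[label]]
    return features + label_maps


noise_dim, num_classes, feature_num = 100, 10, 16
rng = random.Random(0)
emb = [[rng.gauss(0.0, 1.0) for _ in range(num_classes)] for _ in range(num_classes)]

z = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
print(len(generator_input(z, label=3, emb=emb)))  # 110 = noise_dim + num_classes

# Four kernel-2, stride-1 transposed convolutions grow a 1x1 map to 5x5 ...
g_sizes = [1]
for _ in range(4):
    g_sizes.append(tconv_out(g_sizes[-1], kernel=2))
print(g_sizes)  # [1, 2, 3, 4, 5]

# ... and four kernel-2 convolutions shrink 5x5 back to a 1x1 score.
d_sizes = [g_sizes[-1]]
for _ in range(4):
    d_sizes.append(conv_out(d_sizes[-1], kernel=2))
print(d_sizes)  # [5, 4, 3, 2, 1]

fake_features = [[[0.5] * 5 for _ in range(5)] for _ in range(feature_num)]
print(len(discriminator_input(fake_features, label=3, emb=emb)))  # 26 = feature_num + num_classes
```

Under the 1 × 1 noise assumption the generator's 5 × 5 output matches the spatial size of the CNN extractor's features for a 32 × 32 input, which is consistent with the discriminator taking `feature_num` channels, that is, judging extractor features rather than images.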

| Method | FMNIST | CIFAR10 | Office | Digit5 |
|---|---|---|---|---|
| FedGen | 13.0 | 13.3 | 13.1 | 13.2 |
| Ours | 12.6 | 11.2 | 12.6 | 12.9 |

| Method | FMNIST | CIFAR10 | Office | Digit5 |
|---|---|---|---|---|
| FedGen | 13.0 | 9.2 | 12.8 | 12.5 |
| Ours | 11.6 | 8.3 | 10.4 | 10.1 |
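
This excerpt does not name the metric reported in the two comparison tables, so no direction of "better" is assumed here. The short calculation below only expresses how far each "Ours" value lies below the corresponding FedGen value, as a percentage of the FedGen value; the `first` and `second` keys simply follow the order in which the tables appear.

```python
from typing import Dict, Tuple

# (FedGen, Ours) pairs copied from the two tables, in their order of appearance.
TABLES: Dict[str, Dict[str, Tuple[float, float]]] = {
    "first": {"FMNIST": (13.0, 12.6), "CIFAR10": (13.3, 11.2),
              "Office": (13.1, 12.6), "Digit5": (13.2, 12.9)},
    "second": {"FMNIST": (13.0, 11.6), "CIFAR10": (9.2, 8.3),
               "Office": (12.8, 10.4), "Digit5": (12.5, 10.1)},
}


def relative_gap(fedgen: float, ours: float) -> float:
    """Percentage by which `ours` is below `fedgen` (negative if above)."""
    return (fedgen - ours) / fedgen * 100.0


for table, rows in TABLES.items():
    gaps = {name: round(relative_gap(*pair), 1) for name, pair in rows.items()}
    print(table, gaps)
```

The gaps range from about 2 % to 16 % in the first table and from about 10 % to 19 % in the second.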

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Huang, J.; Chen, Z.; Liu, S.; Long, H. A Novel Federated Learning Framework Based on Conditional Generative Adversarial Networks for Privacy Preserving in 6G. Electronics 2024, 13, 783. https://doi.org/10.3390/electronics13040783
