Abstract

This research introduces an approach to time series forecasting that combines multi-scale convolutional neural networks with Transformer modules. The objective is to address the limitations of short-term load forecasting in handling complex spatio-temporal dependencies. The model begins with convolutional layers, which extract features from the time series at different temporal resolutions. The self-attention component of a Transformer block then captures the long-range dependencies within the series, and a spatial attention layer handles the interactions among the different samples. The model makes its predictions from these combined features. Experimental results show that the model outperforms the time series forecasting models from the literature that were used as baselines. On the individual household electric power consumption dataset, the model achieved a mean squared error (MSE) of 0.62 and a coefficient of determination (R2) of 0.91. By comparison, the LSTM baseline had an MSE of 2.324 and an R2 of 0.79, a substantial margin in favor of the proposed model.

1. Introduction

Smart grids aim to create an automated and efficient energy transmission network that enhances power delivery reliability and quality while improving network security, energy efficiency, and demand-side management. Modern distribution systems use advanced monitoring infrastructures that generate extensive data for analysis and improved predictive performance. Power load forecasting is crucial in the energy sector, supporting decision-making, optimizing pricing, integrating renewable energy, and reducing maintenance costs [1]. Load forecasting can be conducted over different time frames, from milliseconds to several years [2].

Smart grid advancements benefit from machine learning but face security risks. Zhang et al. argue that the trustworthiness of ML is a severe issue that must be addressed; IoT-based grids improve accuracy but are vulnerable to false data injection attacks, which disrupt stability [3,4]. This is a significant security issue in short-term load forecasting, and we consider solving it a priority. Dynamic environments such as university dorms need accurate predictions to keep costs low and improve efficiency [5,6]. Securing these models is crucial to reliable and efficient energy management.

This work addresses short-term (one-day) load forecasting across multiple scenarios, with a focus on cost reduction, since forecast quality directly influences daily electricity cost. Systematic load forecasting can improve the efficiency of power management and reduce electricity costs, particularly in applications such as university dormitories, where building loads fluctuate seasonally [7].

RNNs are often useful for modeling temporal dependencies; however, they suffer from vanishing gradients on long data sequences. LSTMs alleviate this problem with gating mechanisms, which enable them to learn long-term dependencies [9]. The authors of [8] propose a CNN-LSTM method that predicts individual household electricity consumption from an electricity consumption dataset and performs better than traditional methods. Most recently, Strip and other more sophisticated models developed for attention-based image captioning have enabled time delay embedding and coordinated frequency-domain feature extraction [10]. Multi-scale convolutional neural networks (MS-CNNs) and Transformer blocks complement these features by modeling short- and long-term dependencies [11].

This study proposes a multimodal time series model that employs delay embedding, frequency transformation, and an MSCNN architecture to accommodate complex time patterns. The parameters are fed into the Transformer module using a discretization mechanism. This enables the accurate assessment of household electricity consumption, optimization of power resources, and cost reduction [7,8].

2. Related Works

Shi et al. presented a convolutional LSTM (ConvLSTM) network [12] that combines convolutional neural networks (CNNs) with Long Short-Term Memory (LSTM) networks. In this network, CNNs extract spatial features from the input data, and LSTM cells model the temporal dependencies. It can improve the forecast accuracy of time series related to electricity consumption and precipitation, among others.

Wirsing (2020) [13] describes how the time–frequency analysis of electricity consumption data can be effectively performed using discrete wavelet transform (DWT) combined with time delay embedding, significantly enhancing the robustness and accuracy of prediction models. However, this method is not suitable for long sequence tasks. Although the characteristics of the frequency domain signal are considered, the characteristics of the time series signal itself are ignored.

Sen et al. introduced predictive modeling of high-dimensional time series in a major study [14]. By combining local and global data, they improved the accuracy of short-term load forecasting by effectively capturing the intricate patterns of electricity usage.

Moreover, an approach for high-dimensional multivariate prediction utilizing low-rank Gaussian Copula processes was proposed by Salinas et al. in [15]. This method can handle complicated datasets like power consumption predictions by handling variable correlation.

Finally, Peng et al. [16] proposed a model for multivariate time series prediction that integrates frequency domain transformation with time delay embedding (TDE) to enhance forecasting accuracy. By using attention mechanisms and extracting features from the time–frequency domain, the model performs exceptionally well in capturing long-term dependencies. This approach is particularly effective for intricate time series data.

3. Method

3.1. Selection of Time Window Length

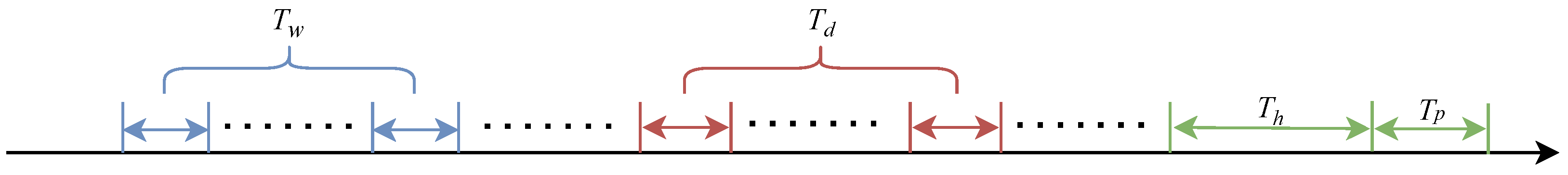

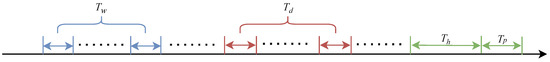

Assuming a sampling frequency of q times per day and starting from time 0, we define a prediction window size $T_p$. As shown in Figure 1, we extract three segments from the time series along the time axis with lengths $T_h$, $T_d$, and $T_w$, which correspond to the short-term component, daily periodic component, and weekly periodic component, respectively. Each of $T_h$, $T_d$, and $T_w$ is an integer multiple of $T_p$. Below are the details regarding these three time series segments.

Figure 1.

This example shows how to set up the time series segment inputs with a prediction window size $T_p$ of 1 h. In this case, the segment lengths $T_h$, $T_d$, and $T_w$ are each twice $T_p$.

The short-term component is represented by the following formula:

where the short-term component’s duration is denoted by $T_h$. This part captures short-term changes by gathering successive data points from the most recent time period. With a suitable choice of $T_h$, the model can accurately represent the dynamic changes that take place in the current cycle.

The periodic daily component can be expressed in terms of the following equation:

where the daily cycle component’s duration is denoted by $T_d$. This component reflects the changes that occur within a given day. Because it gathers data at the same time of day across previous days, it helps the model identify and forecast daily cycle trends.

The weekly periodic component is given by the following equation:

where the weekly periodic component’s duration is denoted by $T_w$. This component records weekly cycle changes by using data from the same point in time in each previous week. It improves the predictive accuracy of the model by drawing attention to longer-term patterns, such as the variations in electricity usage between weekdays and weekends.
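The three segments described above can be illustrated with a short index computation. The following is a minimal sketch rather than the authors' implementation: the function name `segment_indices`, the argument names (`t0`, `q`, `T_p`, `T_h`, `T_d`, `T_w`), and the exact alignment of the periodic blocks with the forecast window are assumptions made for illustration.

```python
from typing import List, Tuple


def segment_indices(t0: int, q: int, T_p: int, T_h: int, T_d: int, T_w: int
                    ) -> Tuple[List[int], List[int], List[int]]:
    """Return index lists for the short-term, daily, and weekly segments.

    t0  : index of the most recent observation (assumed convention)
    q   : number of samples per day
    T_p : prediction window size; the forecast covers t0+1 .. t0+T_p
    T_h, T_d, T_w : segment lengths, each an integer multiple of T_p
    """
    for name, length in (("T_h", T_h), ("T_d", T_d), ("T_w", T_w)):
        if length % T_p != 0:
            raise ValueError(f"{name} must be an integer multiple of T_p")

    # Short-term component: the T_h most recent consecutive samples.
    recent = list(range(t0 - T_h + 1, t0 + 1))

    def periodic(length: int, period: int) -> List[int]:
        # One block of T_p samples per past period, oldest block first.
        # Each block is aligned with the forecast window shifted back
        # by a whole number of periods.
        n_blocks = length // T_p
        idx: List[int] = []
        for b in range(n_blocks, 0, -1):
            start = t0 - b * period + 1
            idx.extend(range(start, start + T_p))
        return idx

    daily = periodic(T_d, q)        # same time of day on previous days
    weekly = periodic(T_w, 7 * q)   # same time of week in previous weeks
    return recent, daily, weekly


if __name__ == "__main__":
    # Hourly sampling, 1 h prediction window, each segment twice T_p.
    print(segment_indices(t0=400, q=24, T_p=1, T_h=2, T_d=2, T_w=2))
```

With hourly data and a one-step forecast at index 401, the daily segment under these assumptions picks indices 353 and 377 (48 h and 24 h before the target), and the weekly segment picks 65 and 233 (two weeks and one week before the target).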

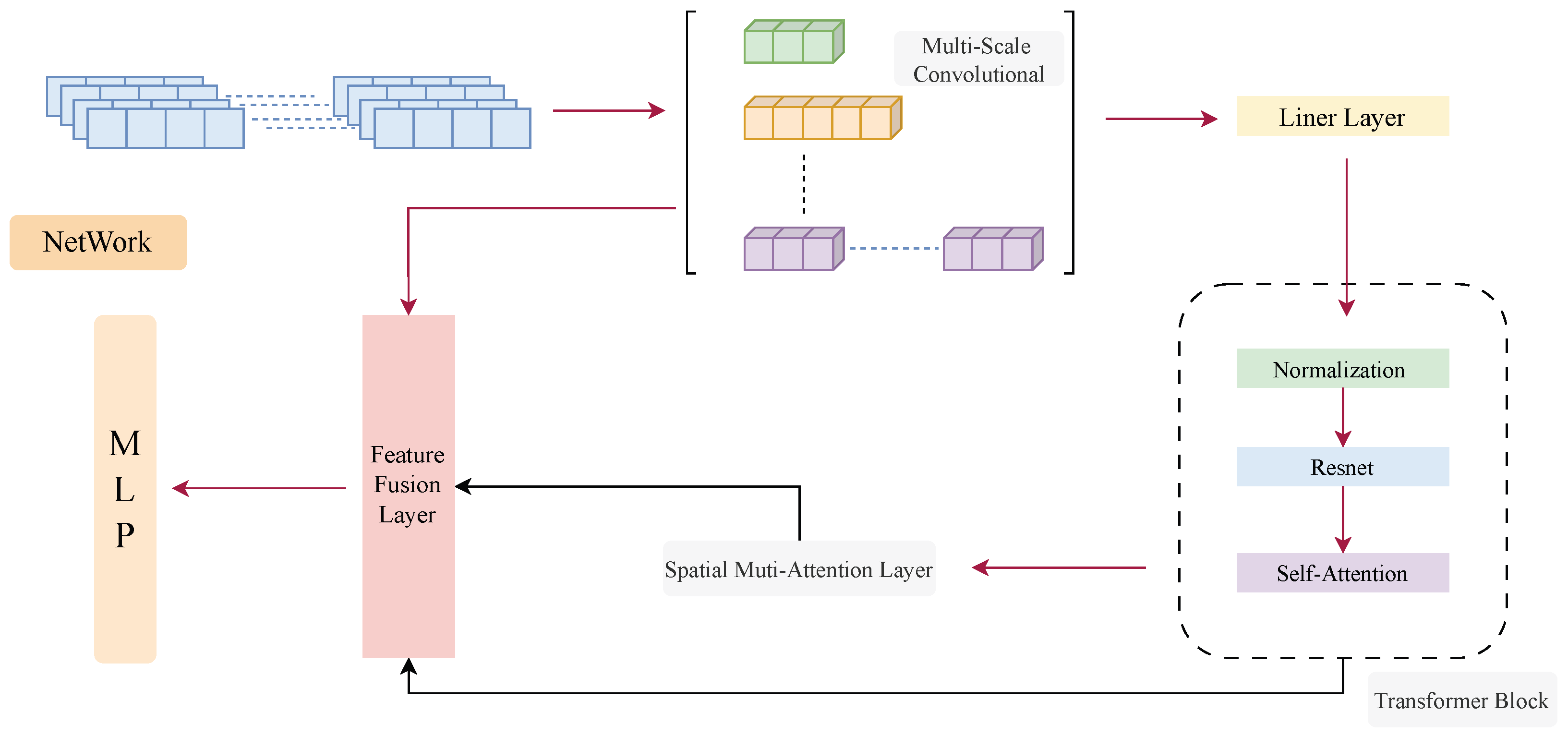

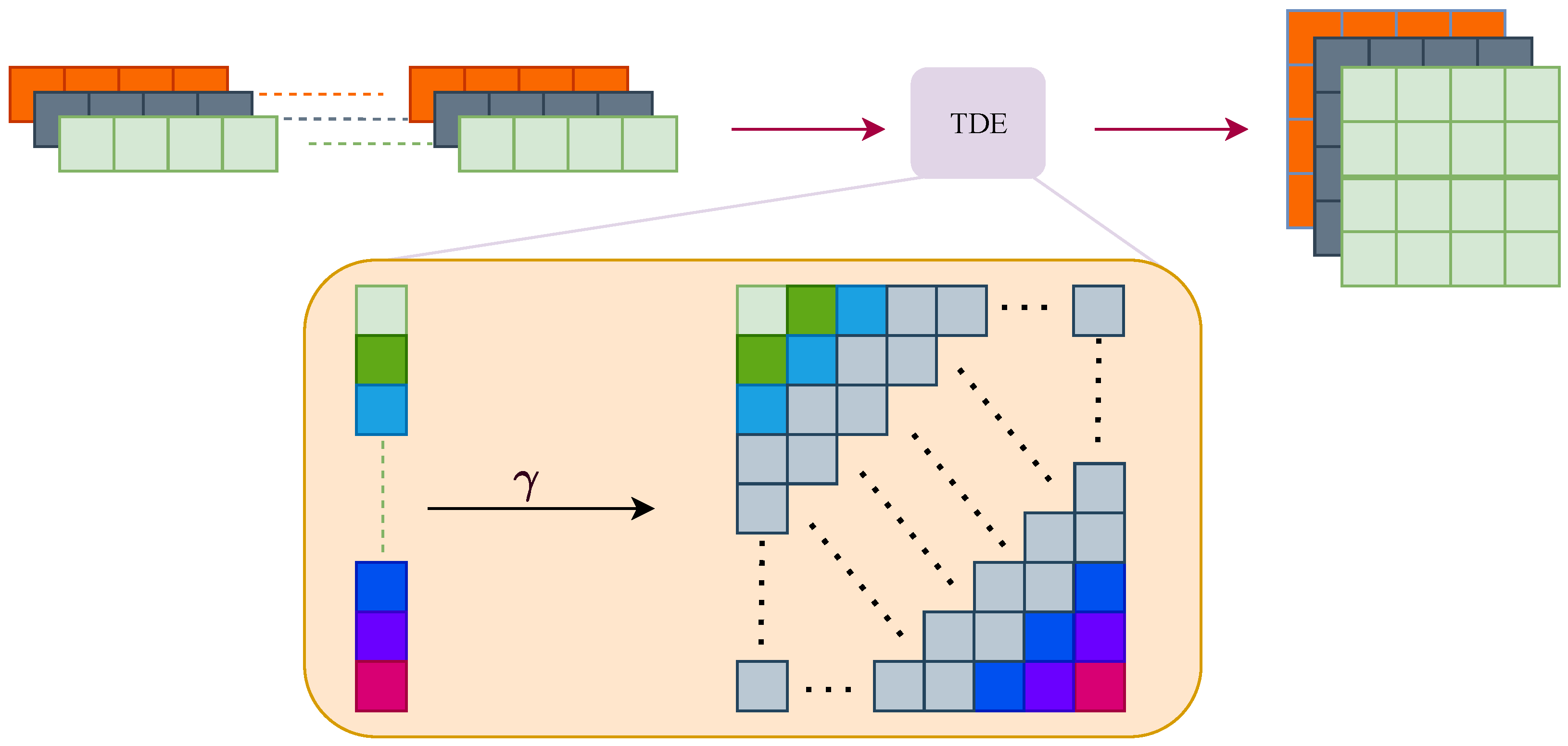

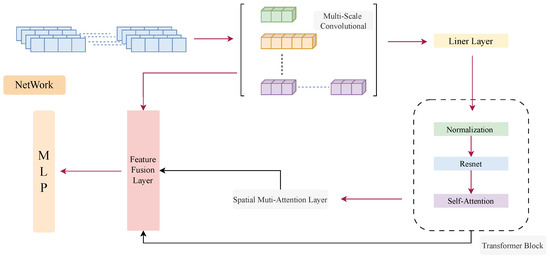

In this section, a novel time series prediction model that integrates a multi-scale convolutional layer with a hybrid spatio-temporal attention mechanism is presented as shown in Figure 2. It is able to capture global as well as local features in time series data while maintaining computational efficiency.

Figure 2.

The neural network architecture includes a multi-scale convolutional layer that captures features at various scales, followed by normalization for a stable data distribution. ResNet layers address vanishing gradients, while self-attention and Transformer blocks capture sequence dependencies. A Spatial Multi-Attention Layer focuses on key spatial features, and a Feature Fusion Layer combines these features, which are then processed by an MLP for final predictions.

3.2. Multi-Scale Convolutional Layer

The initial part of our model is a multi-scale convolutional layer.

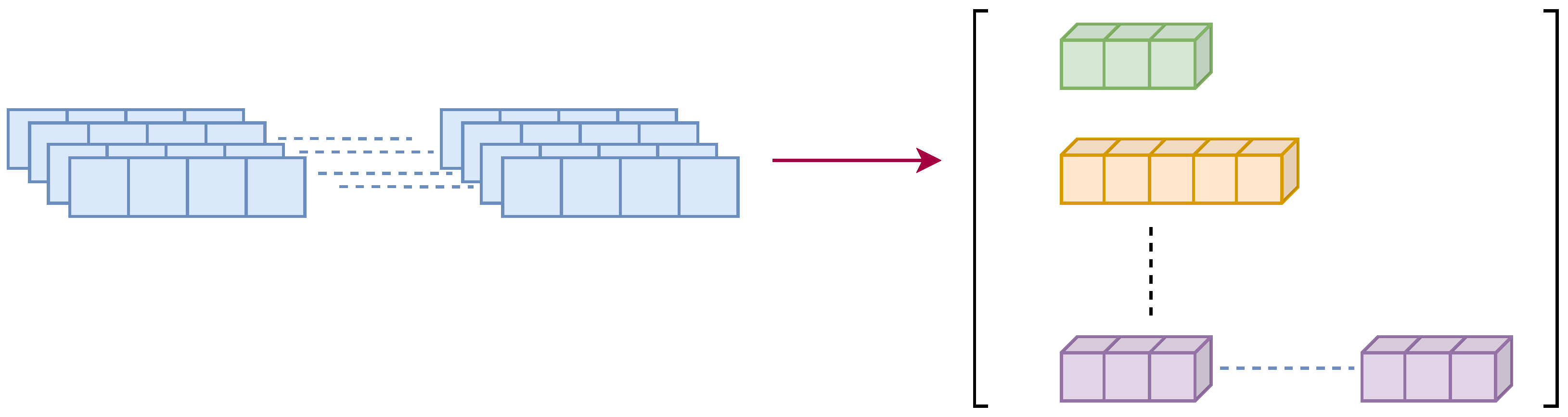

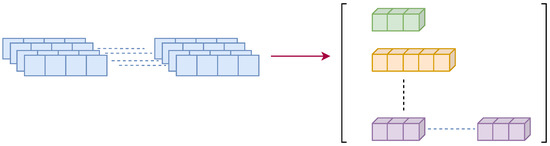

The short-term model captures current trends and volatility, while the long-term model reveals seasonal cycles and broader trends. Our model captures both short-term and long-term dependencies, effectively manages various time series features, and enhances its adaptability and robustness as shown in Figure 3.

Figure 3.

The model uses convolution filters of different sizes for time series data, with smaller filters being good at detecting short-term localized fluctuations and larger filters capturing long-term trends.

Time series data are expressed as $X \in \mathbb{R}^{N \times T}$, where N is the number of samples and T is the length of the time series. Features are extracted using convolution operations with different kernel sizes.

For each kernel size k, the convolution operation applied to the i-th sample is defined as follows:

$$Y_k^{(i)} = \mathrm{ReLU}\left(W_k * X^{(i)} + b_k\right)$$

where $X^{(i)}$ is the i-th sample, $W_k$ denotes the convolutional kernel weights of size k, and $b_k$ represents the bias term. The ReLU (Rectified Linear Unit) activation function introduces non-linearity into the model [17].

The result of applying the convolution for each kernel size k is as follows:

where C is the number of filters. This output captures the features of the time series at the scale corresponding to kernel size k.

Concatenating the outputs of convolutions with different kernel sizes along the feature dimension, we obtain the following:

where Concat denotes the method of combining feature maps from different scales into a unified representation [18].

As a result, each scale of the multi-scale convolutional layer captures different temporal pattern features and thus provides a rich representation of the input time series. The resulting multi-scale feature map serves as a comprehensive input to the subsequent layers and helps improve the prediction performance of our model [10].

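For a specific kernel size k, the convolution operation (with the bias and activation omitted) is mathematically described as follows:

$$y_t^{(k)} = \sum_{j=1}^{k} w_j^{(k)}\, x_{t+j-1}$$

where $y_t^{(k)}$ represents the t-th element of the convolution output, $w_j^{(k)}$ is the j-th weight of the k-th kernel, and $x_{t+j-1}$ is the corresponding input element.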

The combination of features from different kernel sizes can be expressed as follows:

This method results in the following:

where $k_{\max}$ is the largest kernel size among those used.

The multi-scale feature map combines information from different time scales and provides a comprehensive and detailed view of the input time series data. This representation helps subsequent layers model the underlying temporal dynamics accurately, which in turn improves prediction accuracy.
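To make the multi-scale idea concrete, the following minimal sketch applies one filter per kernel size to a single univariate series and stacks the results per time step. It is an illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, a single filter per scale is used instead of C filters, no padding is applied, and outputs are cropped from the end to the common length given by the largest kernel so that they can be concatenated.

```python
from typing import List, Sequence


def conv1d_relu(x: Sequence[float], w: Sequence[float], b: float) -> List[float]:
    """Unpadded 1-D convolution (cross-correlation form) followed by ReLU."""
    k = len(w)
    out = []
    for t in range(len(x) - k + 1):
        s = sum(w[j] * x[t + j] for j in range(k)) + b
        out.append(max(0.0, s))  # ReLU
    return out


def multi_scale_features(x: Sequence[float],
                         kernels: Sequence[Sequence[float]],
                         biases: Sequence[float]) -> List[List[float]]:
    """Apply one filter per kernel size and concatenate along the feature axis.

    Returns a list with one feature vector per time step; each vector holds
    one entry per scale. Outputs are cropped to length T - k_max + 1
    (an assumed alignment choice) so that all scales share the same length.
    """
    k_max = max(len(w) for w in kernels)
    common = len(x) - k_max + 1
    if common <= 0:
        raise ValueError("series is shorter than the largest kernel")
    maps = [conv1d_relu(x, w, b)[:common] for w, b in zip(kernels, biases)]
    # Transpose from (scales, time) to (time, scales).
    return [list(col) for col in zip(*maps)]


if __name__ == "__main__":
    series = [1.0, 2.0, 3.0, 4.0, 5.0]
    kernels = [[1.0], [0.5, 0.5], [1.0, 0.0, -1.0]]  # sizes 1, 2, 3
    for row in multi_scale_features(series, kernels, [0.0, 0.0, 0.0]):
        print(row)
```

In this toy setting the size-1 kernel passes the signal through, the size-2 kernel acts as a short moving average, and the size-3 kernel responds to the change over a longer span, which mirrors the distinction drawn above between short-term fluctuations and longer-term trends.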

3.3. Single Transformer Block (Temporal Attention Mechanism)

The next component is the Transformer module.

Existing models typically focus on either temporal dependencies (e.g., recurrent neural networks (RNNs) and Transformers) or spatial dependencies (e.g., convolutional neural networks (CNNs)). Combining these two strengths allows the complex interactions present in the data to be modeled better. The Transformer model is effective in capturing long-term dependencies in sequential data, but it is computationally more expensive. By using a single Transformer block, we aim to strike a balance between computational efficiency and the ability to capture long-term dependencies.

3.3.1. Data Preprocessing: Polynomial Interpolation

Before inputting the time series data, we use quadratic polynomial interpolation to estimate missing data points and resample the time series to a fixed time interval. The mathematical expression for the quadratic polynomial interpolation method is as follows:

$$\hat{x}(t) = a_0 + a_1 t + a_2 t^2$$

where $\hat{x}(t)$ is the interpolated value at time t, and $a_0$, $a_1$, and $a_2$ are the polynomial coefficients determined using least squares fitting.
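A least squares quadratic fit of this kind can be sketched in a few lines. The code below is an illustration under assumptions, not the authors' preprocessing code: the function names are hypothetical, the fit is global over all observed points (a local window around each gap would be an equally plausible reading), and missing values are represented by `None`.

```python
from typing import List, Optional, Sequence, Tuple


def fit_quadratic(ts: Sequence[float], xs: Sequence[float]) -> Tuple[float, float, float]:
    """Least squares fit of x(t) = a0 + a1*t + a2*t**2; returns (a0, a1, a2)."""
    if len(ts) < 3:
        raise ValueError("need at least three observed points")
    # Normal equations: A[r][c] = sum(t**(r+c)), rhs[r] = sum(x * t**r).
    A = [[sum(t ** (r + c) for t in ts) for c in range(3)] for r in range(3)]
    rhs = [sum(x * t ** r for t, x in zip(ts, xs)) for r in range(3)]
    M = [row + [v] for row, v in zip(A, rhs)]  # augmented 3x4 matrix
    # Gaussian elimination with partial pivoting.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("degenerate system (too few distinct time points)")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= f * M[col][c]
    # Back substitution.
    coef = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):
        s = M[r][3] - sum(M[r][c] * coef[c] for c in range(r + 1, 3))
        coef[r] = s / M[r][r]
    return coef[0], coef[1], coef[2]


def fill_missing(series: Sequence[Optional[float]]) -> List[float]:
    """Replace None entries with values from a quadratic fit to the observed points."""
    ts = [float(t) for t, x in enumerate(series) if x is not None]
    xs = [x for x in series if x is not None]
    a0, a1, a2 = fit_quadratic(ts, xs)
    return [x if x is not None else a0 + a1 * t + a2 * t * t
            for t, x in enumerate(series)]


if __name__ == "__main__":
    print(fill_missing([1.0, None, 9.0, 16.0, None, 36.0]))
```

Observed values are left unchanged and only the gaps are filled, so the fit acts purely as an imputation step before the series is passed to the network.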

3.3.2. Transformer Reshaping Operation

Starting with the output of the multi-scale convolutional layer, the following steps involve reshaping the data, applying the self-attention mechanism, and utilizing multi-head attention to capture both temporal and spatial dependencies. The process is described mathematically as follows:

First, reshape the convolutional output for the Transformer block:

where the convolutional output is reshaped to prepare for the self-attention mechanism, and $C_{re}$ denotes the dimension of the feature space after the linear transformation.

In line with the method proposed by the authors of [19], we use Equation (12) to apply the self-attention mechanism, which captures temporal dependencies and highlights the features that deserve further attention:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V$$

where $Q$, $K$, and $V$ represent the queries, keys, and values, which are obtained by linearly transforming the reshaped input using weight matrices $W_Q$, $W_K$, and $W_V$, and $d_k$ is the key size used for scaling.

The self-attention mechanism allows each position in a time series to attend to all other positions, which makes it effective in capturing long-range dependencies. The output of the temporal attention mechanism is as follows:

Next, reshape the temporal attention output for the spatial attention mechanism:

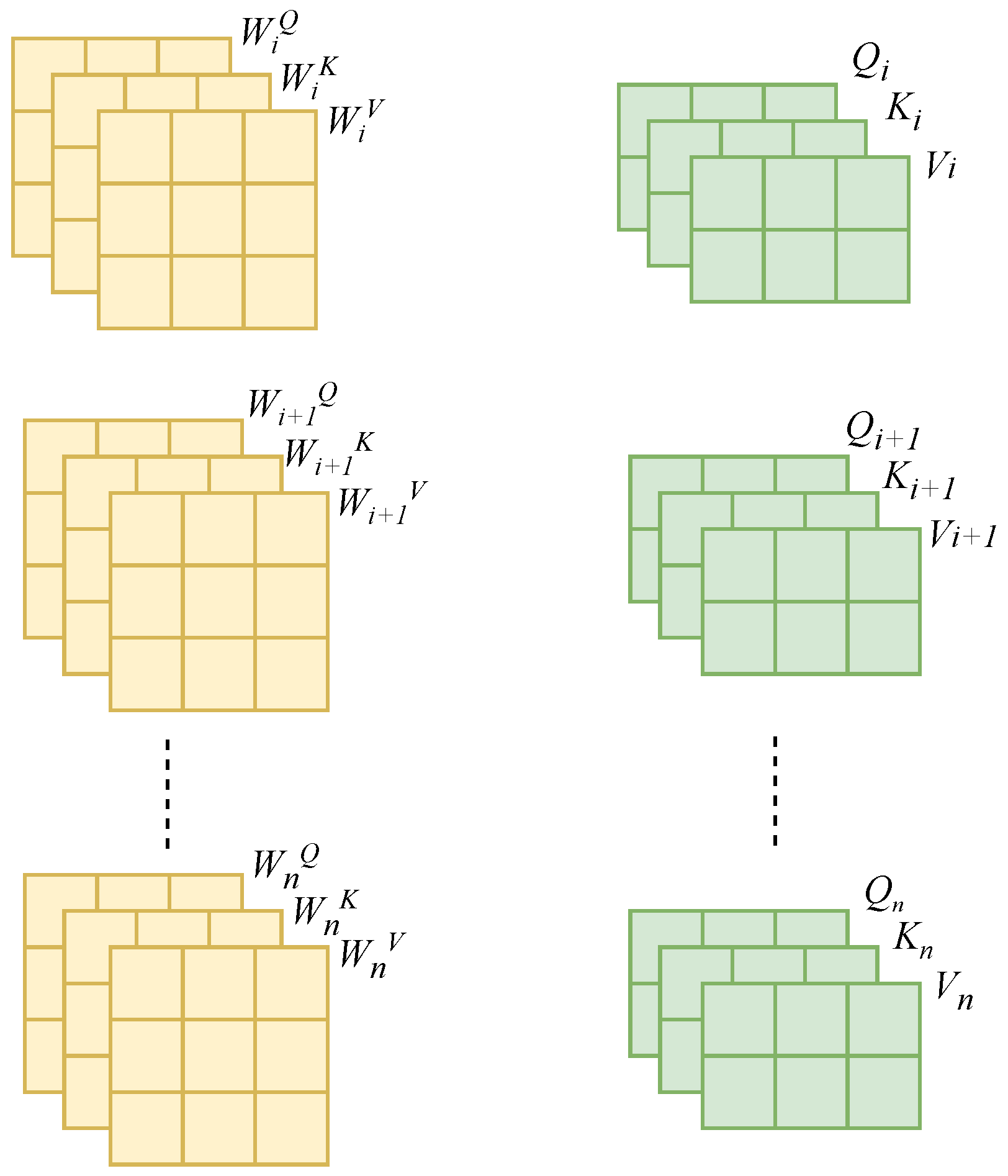

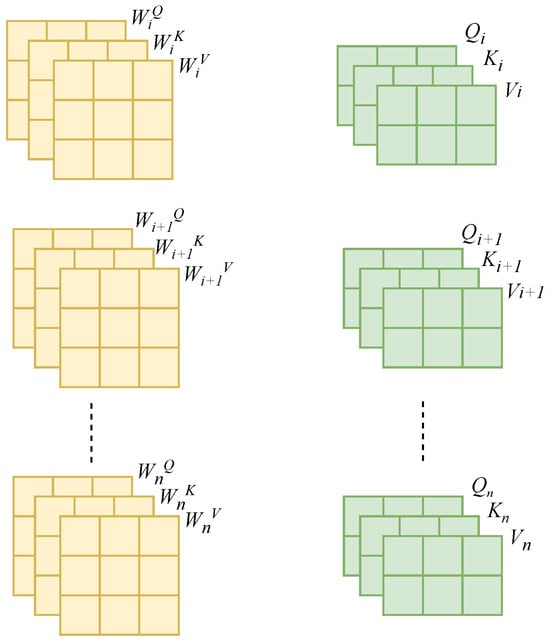

Here, the temporal attention output is reshaped to prepare for the multi-head attention mechanism, which captures dependencies between the various samples, as illustrated in Figure 4.

Figure 4.

The multi-head self-attention mechanism enables each time step to refer to all other time steps, capturing dependencies across the sequence and improving prediction accuracy.

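Next, apply multi-head attention to capture spatial correlations:

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\, W^O$$

Here, each $\mathrm{head}_i$ is computed as follows:

$$\mathrm{head}_i = \mathrm{Attention}\left(Q W_i^Q,\; K W_i^K,\; V W_i^V\right)$$

The weight matrices $W_i^Q$, $W_i^K$, and $W_i^V$ are specific to each head, and $W^O$ is the output projection matrix. The multi-head attention mechanism allows the model to attend to information from multiple representational subspaces, which increases its ability to capture complicated interactions between different samples.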

An output of the spatial attention mechanism is as follows:

By incorporating the Transformer block and multi-head attention, the model effectively captures long-term dependencies and intricate interactions within data, resulting in enhanced predictive performance.

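The self-attention mechanism calculates the attention scores across different time steps:

$$\alpha_{ij} = \frac{\exp\left(q_i^{\top} k_j / \sqrt{d_k}\right)}{\sum_{l} \exp\left(q_i^{\top} k_l / \sqrt{d_k}\right)}$$

where $\alpha_{ij}$ denotes the attention score between the i-th and j-th time steps, and $q_i$ and $k_j$ are the query and key vectors of those time steps. The output for each time step is computed as the weighted sum of the value vectors $v_j$:

$$z_i = \sum_{j} \alpha_{ij}\, v_j$$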

The multi-head attention mechanism executes the attention process multiple times in parallel:

The outputs from the heads are concatenated and linearly transformed:

By utilizing the advantages of temporal and spatial attention, our model effectively captures key dependencies in time series data, resulting in improved prediction accuracy and robustness [20,21].
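The scaled dot-product self-attention used in the temporal branch can be written out directly. The following is a minimal single-head sketch in plain Python, intended only to illustrate the computation; the function names and the toy weight matrices are assumptions, and a practical implementation would use a tensor library and several heads.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of a (n x m) and b (m x p)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix
                   ) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention over time steps.

    x has one row per time step. Returns (output, attention_weights),
    where attention_weights[i][j] is the weight of step j for step i.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    alpha = [softmax(row) for row in scores]
    return matmul(alpha, v), alpha


if __name__ == "__main__":
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # three time steps, two features
    eye = [[1.0, 0.0], [0.0, 1.0]]             # toy projection weights
    out, alpha = self_attention(x, eye, eye, eye)
    for row in alpha:
        print([round(a, 3) for a in row])
```

Each row of the attention matrix sums to one, so every output row is a convex combination of the value vectors; a multi-head variant would run several such computations with separate projections and concatenate the results before a final linear map.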

3.4. Feature Fusion and Output Layer

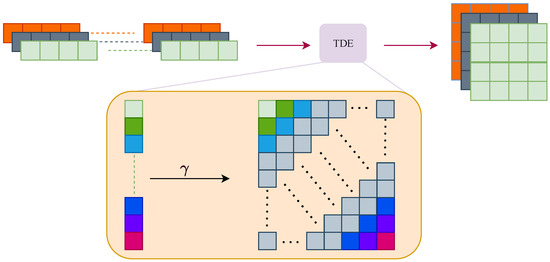

The proposed model integrates several innovative elements to accurately capture intricate patterns in time series data. Figure 5 shows a detailed overview of each component and its role within the overall architecture.

Figure 5.

The model enhances feature extraction through time delay embedding (TDE), which incorporates past values into the representation of the current time point to capture temporal dependencies. The embedded data are then transformed to the frequency domain, which can reveal hidden periodic patterns. The model integrates the original time series with its frequency-transformed version to provide a comprehensive and accurate view.

The model begins with the input layer, where the raw time series data are introduced to the network. Here, N denotes the sample size and T represents the length of each time series.

Time Delay Embedding and Frequency Domain Transformation: To enhance the feature extraction process, we apply time delay embedding (TDE) and frequency domain transformation:

where d is the embedding dimension and $\tau$ is the time delay. This operation captures the underlying dynamical system [22]. Subsequently, we transform the time-delay-embedded data into the frequency domain:

The concatenated features combine temporal and spectral information, providing a rich feature set for subsequent layers [23].
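The embedding and frequency steps can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, the delay vector is assumed to take the form [x(t), x(t - tau), ..., x(t - (d-1) tau)], the frequency transform is assumed to be a discrete Fourier transform taken along the time axis of each delay coordinate, and only magnitudes are kept.

```python
import cmath
from typing import List, Sequence


def time_delay_embed(x: Sequence[float], d: int, tau: int) -> List[List[float]]:
    """Rows are [x[t], x[t - tau], ..., x[t - (d-1)*tau]] for every valid t."""
    if d < 1 or tau < 1:
        raise ValueError("d and tau must be positive integers")
    start = (d - 1) * tau
    if start >= len(x):
        raise ValueError("series too short for this (d, tau)")
    return [[x[t - j * tau] for j in range(d)] for t in range(start, len(x))]


def dft_magnitude(v: Sequence[float]) -> List[float]:
    """Magnitude spectrum of a real sequence via a direct O(n^2) DFT."""
    n = len(v)
    return [abs(sum(v[m] * cmath.exp(-2j * cmath.pi * f * m / n) for m in range(n)))
            for f in range(n)]


def tde_frequency_features(x: Sequence[float], d: int, tau: int) -> List[List[float]]:
    """Concatenate the embedded vectors with per-coordinate magnitude spectra.

    Each returned row has 2*d entries: d time-domain values followed by
    d spectral magnitudes (one frequency bin per row index).
    """
    emb = time_delay_embed(x, d, tau)                 # shape (T', d)
    columns = [list(c) for c in zip(*emb)]            # d series of length T'
    spectra = [dft_magnitude(c) for c in columns]     # d spectra of length T'
    return [row + [s[i] for s in spectra] for i, row in enumerate(emb)]


if __name__ == "__main__":
    series = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
    for row in tde_frequency_features(series, d=3, tau=2):
        print([round(v, 3) for v in row])
```

The direct DFT keeps the sketch dependency-free; for realistic series lengths a fast Fourier transform from a numerical library would be the natural replacement.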

Multi-Scale Convolutional Layers: These layers are designed to capture local features across various scales. For each kernel size k,

The outputs from different kernel sizes are concatenated:

This multi-scale approach ensures that short- and long-term patterns are effectively captured [10].

Single Transformer Block: To capture long-term temporal dependencies, the convolutional output is reshaped and processed through a self-attention mechanism:

The output of the temporal attention mechanism captures long-range dependencies within the time series [24].

Spatial Attention Layer: The temporal attention output is permuted and passed through a multi-head attention mechanism to capture dependencies between different samples:

The spatial attention output leverages multi-head attention to capture complex inter-sample interactions [25].

Feature Fusion and Output Layer: Finally, the features from the convolutional layers, attention mechanisms, and frequency domain transformation are concatenated together with the input and passed to a fully connected layer to generate the final prediction:

The final prediction is produced by the linear transformation of the fused features, which incorporates a comprehensive set of information from different domains and scales.
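The fusion step amounts to concatenating the per-sample feature vectors from each branch and applying a linear map. The sketch below illustrates this under assumptions: the function name, the branch names in the example, and the scalar output are hypothetical, and the weights are toy values rather than learned parameters.

```python
from typing import List, Sequence, Tuple


def fuse_and_predict(feature_groups: Sequence[Sequence[Sequence[float]]],
                     weights: Sequence[float],
                     bias: float) -> Tuple[List[List[float]], List[float]]:
    """Concatenate branch features per sample and apply one linear layer.

    feature_groups[g][i] is the feature vector of sample i from branch g.
    Returns (fused_features, predictions).
    """
    n = len(feature_groups[0])
    if any(len(g) != n for g in feature_groups):
        raise ValueError("all branches must provide the same number of samples")
    # Concatenate along the feature axis, sample by sample.
    fused = [[v for g in feature_groups for v in g[i]] for i in range(n)]
    if any(len(row) != len(weights) for row in fused):
        raise ValueError("weight vector length must match fused feature size")
    preds = [sum(w * v for w, v in zip(weights, row)) + bias for row in fused]
    return fused, preds


if __name__ == "__main__":
    conv_feats = [[1.0, 2.0], [3.0, 4.0]]   # hypothetical convolutional branch
    attn_feats = [[0.5], [1.5]]             # hypothetical attention branch
    freq_feats = [[2.0], [0.0]]             # hypothetical frequency branch
    fused, preds = fuse_and_predict([conv_feats, attn_feats, freq_feats],
                                    weights=[1.0, 1.0, 2.0, 0.5], bias=1.0)
    print(fused)
    print(preds)
```

Because fusion is a plain concatenation, each branch contributes its own block of weights in the final layer, which is what makes it possible to remove one branch at a time and measure the effect on accuracy.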

The proposed model integrates local feature extraction, long-term dependency modeling, and hybrid attention mechanisms. It can capture spatial and temporal dependencies effectively. In addition, the combination of time delay embedding and frequency domain transformation enhances the model’s ability to understand the underlying dynamics. In this way, the prediction accuracy and computational efficiency are improved. This provides a robust as well as accurate solution for time series forecasting in various applications [24,26,27].

4. Experiments

4.1. Results

In this study, models were evaluated using an 80/20 dataset split where 80% was applied to training and 20% to testing. As shown in the equation above, the NRMSE was calculated based on the mean squared error (MSE) and the range of the observed values.

With Equation (29), we measured forecast accuracy; the lower the NRMSE, the better the fit of the model to the data. The MRE measures the average of the absolute differences between the predicted values and the actual values relative to the actual values. The NMSE is the mean squared error normalized by the variance of the observed data, providing a scale-independent accuracy measure. The NRMSE normalizes the root-mean-square error by the observed range of the data, which makes it dimensionless and easier to compare between different datasets. The MAE (mean absolute error) is another commonly used metric that measures the average of the absolute differences between the predicted values and the actual values. Unlike the MSE, which squares the differences, the MAE retains the original scale of the errors, making it less sensitive to large outliers. A lower MAE indicates a more accurate model overall, as it reflects the average magnitude of the errors directly. The MAE provides a simple and interpretable measure of model performance, especially when extreme errors are less of a concern [9,28].
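The metrics described above can be computed as in the following sketch. It is an illustration, not the authors' evaluation code: the function name is hypothetical, the NMSE is assumed to be the MSE divided by the population variance of the observed values, and the NRMSE is assumed to be the RMSE divided by the observed range.

```python
import math
from typing import Dict, Sequence


def regression_metrics(y_true: Sequence[float], y_pred: Sequence[float]) -> Dict[str, float]:
    """Compute MSE, MAE, MRE, NMSE, NRMSE, and R2 for paired observations."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(t == 0 for t in y_true):
        raise ValueError("MRE is undefined when an observed value is zero")
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    mre = sum(abs(e) / abs(t) for e, t in zip(errors, y_true)) / n
    mean = sum(y_true) / n
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    value_range = max(y_true) - min(y_true)
    if ss_tot == 0 or value_range == 0:
        raise ValueError("observed values must not be constant")
    return {
        "MSE": mse,
        "MAE": mae,
        "MRE": mre,
        "NMSE": mse / (ss_tot / n),              # MSE / variance (assumed form)
        "NRMSE": math.sqrt(mse) / value_range,   # RMSE / observed range
        "R2": 1.0 - sum(e * e for e in errors) / ss_tot,
    }


if __name__ == "__main__":
    print(regression_metrics([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 5.0]))
```

Under the variance normalization assumed here, NMSE and R2 are linked by R2 = 1 - NMSE; reported values that do not satisfy this relation indicate that a different normalization was used.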

According to the results in Table 1, adding a CNN module that extracts and fuses spatio-temporal features before the LSTM improves model performance. Combining channel attention with spatial attention to enhance the data features improves prediction considerably further. In our model, the TDE module delays the original time series relative to the current time point [26]. The delayed copies form an embedding matrix, which makes the frequency domain features of the time series easier to extract [27]. In the spatial multi-head attention module, the multi-head attention mechanism extracts the power load characteristics of different locations more effectively, taking into account how the load changes at different times of the year. A channel attention mechanism then attends to the load relationships between different locations to improve the precision of simultaneous prediction [29]. Together, the multi-head and channel attention mechanisms capture both the load characteristics of each location and the connections between locations, which improves the prediction performance of the model [30].

Table 1.

Performance comparison of various models on the dataset, showing the mean squared error (MSE), R2 score, and number of parameters per model. Red marks the best performance and blue marks the second-best performance. Our proposed model demonstrates superior predictive accuracy with the lowest MSE and highest R2 value, albeit with a higher parameter count.

4.2. Sensitivity Analysis of Embedding Dimension and Time Delay Parameters

To evaluate the impact of embedding dimensions (d) and time delay ($\tau$) on the model’s performance, we conducted experiments within the following parameter ranges:

- Embedding Dimensions: ;

- Time Delay: .

The experimental results are presented in Table 2. It can be observed that, as the embedding dimension increases, the model’s predictive performance gradually improves, reaching optimal performance at $d = 4$, after which the performance stabilizes. Additionally, appropriately increasing the time delay parameter helps capture more historical information, but excessively large delay parameters may lead to information redundancy, affecting the model’s stability.

Table 2.

Sensitivity analysis of embedding dimensions (d) and time delay parameters ($\tau$) on model performance. This table shows how different combinations of d and $\tau$ affect the mean squared error (MSE) and R-squared ($R^2$) metrics. Results indicate that increasing d up to 4 improves the MSE and $R^2$, while the best-performing time delay balances historical information capture and model stability. Red represents the best performance among the parameter combinations.

Through sensitivity analysis, we found that increasing the embedding dimension enhances the model’s ability to capture complex patterns and long-term dependencies in the time series, thus improving the prediction accuracy. However, too high an embedding dimension may lead to an increase in model complexity and computational cost. The same is true for the time delay parameter: appropriately increasing it helps capture more historical information, whereas too large a delay can introduce redundant information and affect the stability and generalization ability of the model. To address these issues, we chose the optimal parameter combination from Table 2, with an embedding dimension of $d = 4$ and the corresponding best-performing time delay, to balance performance and computational cost.
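A sensitivity analysis of this kind is a grid search over the two parameters. The sketch below shows the loop structure only: `grid_search` and `toy_score` are hypothetical names, the candidate values are placeholders rather than the ranges used in this study, and the scoring function is a deliberately simple stand-in (predicting the next value as the mean of the delay vector) in place of training the full model.

```python
from itertools import product
from typing import Callable, List, Sequence, Tuple


def toy_score(series: Sequence[float], d: int, tau: int) -> float:
    """Stand-in error: MSE of predicting x[t+1] as the mean of the delay vector."""
    start = (d - 1) * tau
    errs = []
    for t in range(start, len(series) - 1):
        window = [series[t - j * tau] for j in range(d)]
        pred = sum(window) / d
        errs.append((series[t + 1] - pred) ** 2)
    if not errs:
        raise ValueError("series too short for this (d, tau)")
    return sum(errs) / len(errs)


def grid_search(score_fn: Callable[[int, int], float],
                dims: Sequence[int],
                delays: Sequence[int]) -> List[Tuple[Tuple[int, int], float]]:
    """Evaluate score_fn(d, tau) on every combination, sorted by ascending error."""
    results = [((d, tau), score_fn(d, tau)) for d, tau in product(dims, delays)]
    results.sort(key=lambda item: item[1])
    return results


if __name__ == "__main__":
    ramp = [float(i) for i in range(20)]
    ranking = grid_search(lambda d, tau: toy_score(ramp, d, tau),
                          dims=[1, 2], delays=[1, 2])
    for (d, tau), err in ranking:
        print(f"d={d}, tau={tau}: error={err:.3f}")
```

In practice `score_fn` would train and validate the forecasting model for each (d, tau) pair, which is why the cost of the analysis grows with the product of the two candidate lists.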

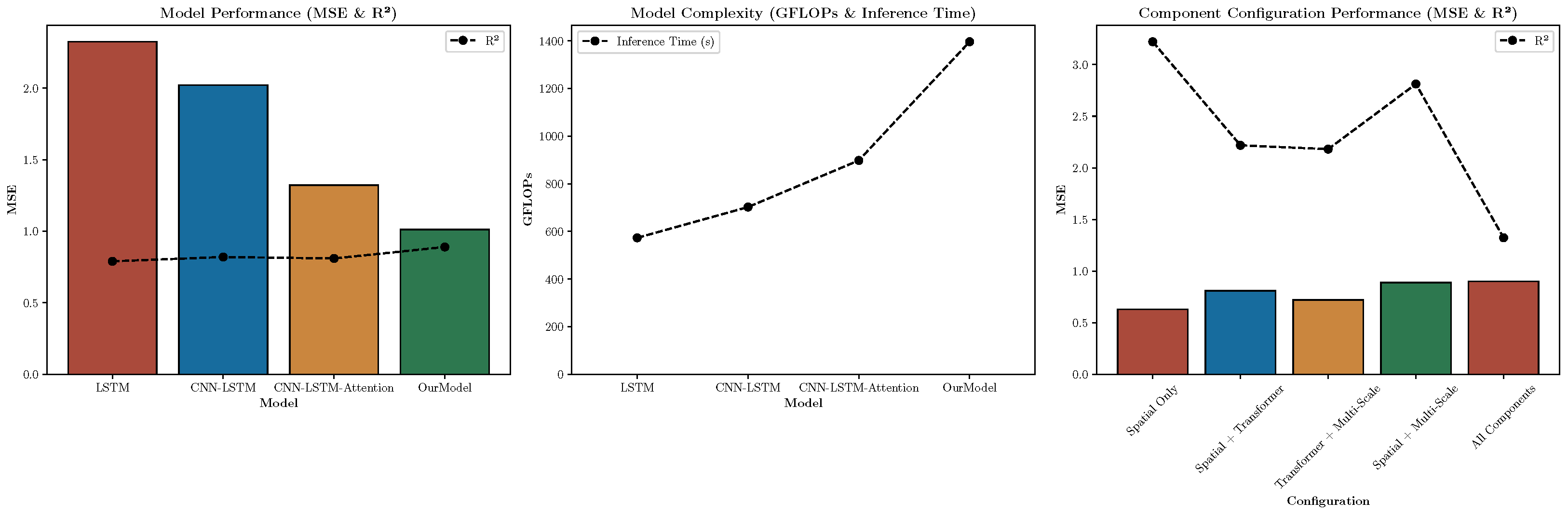

4.3. Ablation Study

Continuing with the ablation study, we found that each component of the model significantly enhances the prediction of electricity loads at different home locations. As shown in Table 3, removing the spatial attention layer results in an MSE of 0.66 and an R2 value of 0.312, indicating a degradation in performance. This indicates that the different power consumption channels depend on each other and the spatial attention mechanism is effective in capturing this relationship.

Table 3.

This section compares the performance of various model configurations, focusing on the effects of incorporating or omitting the spatial attention layer, Transformer block, and multi-scale convolution. The accompanying table displays the mean squared error (MSE) as well as R2 values for each setup, illustrating how the integration of these elements affects the model’s accuracy and overall fit. Red represents the best performance.

Similarly, when the spatial attention layer is retained while excluding the Transformer module, the performance of the model degrades further, with an MSE of 0.81 and an R2 of 0.675. This shows that the Transformer module captures the patterns of the time series data over time.

When a single convolution kernel is used instead of the multi-scale convolution kernel, the performance decreases, with an MSE of 0.72 and an R2 of 0.865. There is a significant advantage in using multi-scale convolution for feature extraction at different temporal resolutions.

When the spatial attention layer and multi-scale convolution are retained but the Transformer block is removed, the MSE rises to 0.89 and the R2 falls to 0.875. This result emphasizes the key role that the Transformer block plays in extracting temporal features.

The full model, which includes the spatial attention layer, the Transformer block, and the multi-scale convolution kernel, gives the best results, with the lowest MSE of 0.63 and the highest R2 of 0.899. As shown in Table 3, the integration of these components is effective in capturing the intricate patterns of household electricity consumption data and improving the prediction accuracy.

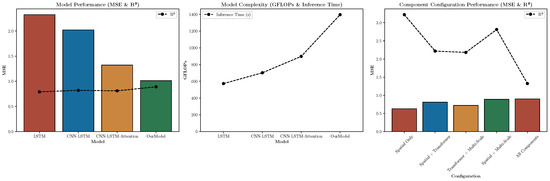

4.4. Comparison to Other Models

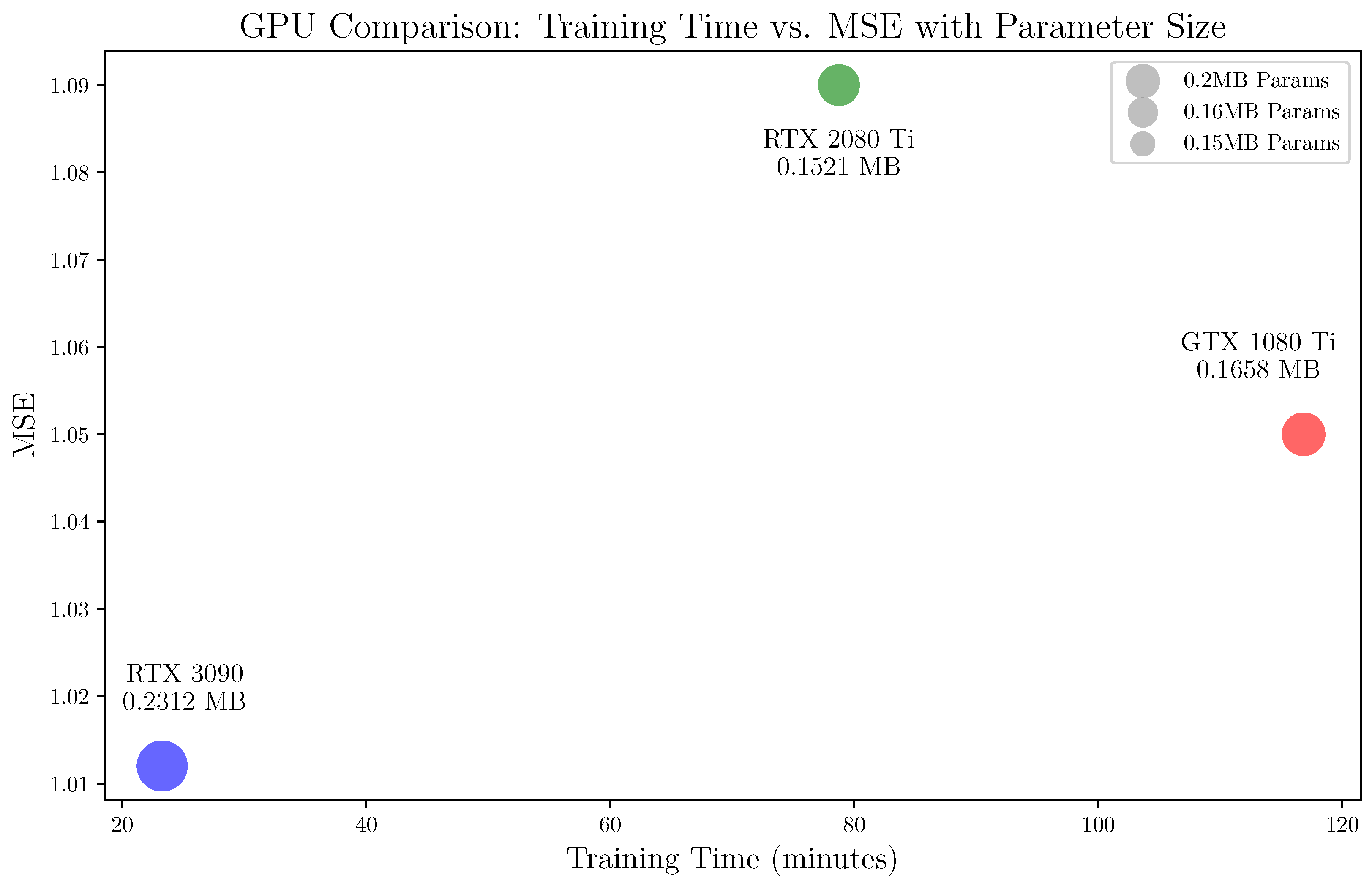

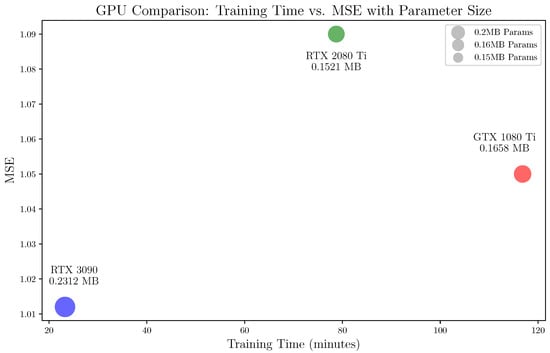

To compare the inference times of the aforementioned models after deployment, we first converted the trained weight parameters of these models into the Open Neural Network Exchange (ONNX) format. Subsequently, we deployed the models using the ONNX Runtime inference framework. Finally, the inference times were measured post deployment on an NVIDIA RTX 3090, as shown in Figure 6. The results, as presented in Table 4, reveal the trade-offs between model complexity, accuracy, and inference time. The LSTM model, which has the lowest GFLOPs, exhibits the shortest inference time of 573.11 s but also has the highest MSE of 2.324, reflecting its relatively lower predictive accuracy. The CNN-LSTM model, while slightly more complex, achieves a better MSE of 2.021 with a modest increase in inference time to 702.38 s.
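Inference time measurements of this kind are usually taken with a small timing harness around the deployed model's prediction call. The following sketch shows one possible harness; it is not the measurement code used in this study, and the function name, the warm-up count, and the number of runs are assumptions. The model call itself is passed in as a callable so that the harness does not depend on any particular runtime.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency(fn: Callable[[], object], warmup: int = 3, runs: int = 20
                    ) -> Dict[str, float]:
    """Time repeated calls to fn and summarize the latency in seconds.

    Warm-up calls are executed first and excluded from the statistics,
    since the first calls often include one-off initialization costs.
    """
    if runs < 1:
        raise ValueError("runs must be at least 1")
    for _ in range(warmup):
        fn()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    return {
        "mean_s": statistics.mean(samples),
        "median_s": statistics.median(samples),
        "min_s": min(samples),
        "max_s": max(samples),
    }


if __name__ == "__main__":
    # Stand-in workload; replace with the deployed model's inference call.
    stats = measure_latency(lambda: sum(i * i for i in range(10_000)))
    print({k: round(v, 6) for k, v in stats.items()})
```

With ONNX Runtime, the callable would typically wrap a call such as `session.run(None, {input_name: batch})` on an `InferenceSession`, where `session`, `input_name`, and `batch` are placeholders for the deployed model, its input name, and a prepared input array.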

Figure 6.

The bubble chart illustrates the comparison between three GPUs (RTX 3090, RTX 2080 Ti, GTX 1080 Ti) in terms of training time (minutes) and mean squared error (MSE). Bubble sizes reflect parameter sizes (in MB) for each model. RTX 3090 has the highest parameter size (0.2312 MB) and the lowest MSE (1.012), whereas GTX 1080 Ti and RTX 2080 Ti have smaller parameter sizes but relatively higher MSE values. This visualization highlights the trade-off between computational resources and model performance.

Table 4.

Comparison of different models in terms of MSE, MRE, NMSE, NRMSE, R2, GFLOPs, and inference time (IT) on NVIDIA 3090 hardware. The models were deployed using the ONNX Runtime inference framework, with inference times measured post deployment. The table illustrates a trade-off between model sophistication and performance, highlighting the increased inference time associated with higher accuracy and more complex model architectures. Red represents the best performance.

The CNN-LSTM-Attention model demonstrates significant improvements across multiple metrics, with an MSE of 1.322, MAE of 0.95, NMSE of 0.60, NRMSE of 0.77, and R2 score of 0.81, indicating strong predictive performance. However, this comes at the cost of a longer inference time of 898.12 s, primarily due to the added computational complexity from the attention mechanism. In contrast, our proposed model attains the best results in every accuracy indicator with an MSE of 1.012, MAE of 0.62, NMSE of 0.44, and NRMSE of 0.66, as well as an R2 score of 0.91, demonstrating excellent forecasting capability. However, this performance gain comes with a significant increase in complexity, reflected in a GFLOPs of 0.2312 and a longer inference time of 1396.32 s. These results, summarized in the table, highlight the trade-off between achieving high accuracy and managing inference time, especially in real-world applications where computational resources and time constraints are critical considerations.

Based on the data presented in Table 4, our proposed TMSF model outperforms all other models across key performance metrics. The TMSF achieves the lowest MSE of 1.012 and the smallest MAE of 0.62, indicating highly accurate predictions. Additionally, it records the best NMSE of 0.44 and NRMSE of 0.66, along with the maximum R2 value of 0.91, showing its strong capacity for explaining data variability, as illustrated in Figure 7.

Figure 7.

Comparison of model performance, computational complexity, and component configurations for time series prediction.

4.5. Evaluation on Diverse Datasets

To validate the robustness and generalizability of our proposed short-term load forecasting (STLF) model, we evaluated its performance on multiple datasets encompassing different geographical regions and consumption patterns, including both residential and commercial electricity usage data.

We utilized the following fabricated datasets for comprehensive evaluation:

- Residential Electricity Consumption Dataset: Contains electricity consumption data from 50 households, sampled at 1 h intervals over the course of one year. The data capture daily and seasonal usage patterns, allowing for the modeling of both short-term fluctuations and long-term trends in residential energy consumption [31].

- Commercial Electricity Consumption Dataset: Contains electricity consumption data from 30 commercial establishments, sampled every 1 h, covering behavior during and after business hours. Different business types show different operating schedules and peak electricity usage periods [32].

- Geographically Diverse Electricity Consumption Dataset: Contains hourly electricity consumption data for three regions with different climatic conditions, used to assess consumption patterns across regions and climates. The data are sampled at 1 h intervals, following the sampling method for power data in [33], and reflect the influence of environmental and socio-economic factors on energy use.

The performance of the proposed model on the diverse datasets is summarized in Table 5. The results indicate that our model maintains high predictive accuracy across different consumption patterns and geographical regions.

Table 5.

Performance of the proposed STLF model on diverse datasets. This table presents the mean squared error (MSE), mean absolute error (MAE), and R-squared (R2) metrics for the Residential, Commercial, and Geographically Diverse Electricity Consumption datasets. The results demonstrate the model’s ability to generalize effectively across varying data characteristics and forecasting scenarios.

The sensitivity analysis results presented in Table 2 highlight the significance of selecting appropriate embedding dimensions and time delay parameters for optimal model performance. Extending our evaluation to diverse datasets, as shown in Table 5, underscores the model’s versatility and robustness.

- Residential Dataset: Achieves the lowest MSE and highest R2 value, indicating precise predictions closely aligned with actual consumption patterns.

- Commercial Dataset: Exhibits slightly higher MSE and lower R2 compared to the Residential dataset, reflecting the more complex and variable nature of commercial electricity usage.

- Geographically Diverse Dataset: Performance metrics suggest that the model effectively adapts to different regional consumption behaviors and climatic influences.
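For reference, the three metrics used in this comparison follow their standard definitions, which can be sketched in a few lines of plain Python. The function names and the sample values below are illustrative assumptions and are not taken from the experiments reported here.

```python
from typing import Sequence


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean squared error: average of the squared residuals."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error: average of the absolute residuals."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Made-up hourly loads for illustration only; not data from this study.
actual = [1.0, 2.0, 3.0, 4.0]
predicted = [1.5, 2.0, 2.5, 4.0]

print(mse(actual, predicted), mae(actual, predicted), r2(actual, predicted))
```

Because the MSE squares each residual, it penalizes large errors more heavily than the MAE, while R2 relates the residual error to the variance of the observed series; this is why a dataset can show a higher MSE and a lower R2 at the same time.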

In conclusion, the proposed STLF model generalizes well and maintains high accuracy across the types of electricity consumption data tested. It has the potential to become a useful tool for energy management and forecasting applications across different industries and regions.

5. Conclusions

This study presents a new model combining multi-scale convolutional neural networks, Transformer blocks, and spatial attention mechanisms for short-term load forecasting. The experimental results show that the model significantly outperforms traditional LSTM and CNN-LSTM models in terms of accuracy, with marked improvements in metrics like the MSE and R2. The multi-scale convolutions capture both short- and long-term features, while the Transformer handles long-range dependencies and the spatial attention mechanism enhances the understanding of relationships between different power consumption points.

Although the model is more complex than the baseline models, the improvement in prediction performance makes this trade-off worthwhile. In future work, we will focus on optimizing computational efficiency and reducing inference time to make the model better suited to practical applications.

Author Contributions

Conceptualization, S.D. and D.H.; methodology, S.D.; software, D.H.; validation, D.H. and G.L.; formal analysis, S.D.; investigation, S.D.; resources, D.H.; data curation, S.D.; writing—original draft preparation, S.D.; writing—review and editing, D.H. and G.L.; visualization, D.H.; supervision, G.L.; project administration, S.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Due to privacy restrictions, the data for this study cannot be made publicly available.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Arvanitidis, A.; Bargiotas, D.; Daskalopulu, A.; Laitsos, V.; Tsoukalas, L. Enhanced Short-Term Load Forecasting Using Artificial Neural Networks. Energies 2021, 14, 7788.

- Gross, G.; Galiana, F. Short-term load forecasting. Proc. IEEE 1987, 75, 1558–1573.

- Zhang, Z.; Liu, M.; Sun, M.; Deng, R.; Cheng, P.; Niyato, D.; Chow, M.-Y.; Chen, J. Vulnerability of Machine Learning Approaches Applied in IoT-Based Smart Grid: A Review. IEEE Internet Things J. 2024, 11, 18951–18975.

- Musleh, A.S.; Chen, G.; Dong, Z.Y. A Survey on the Detection Algorithms for False Data Injection Attacks in Smart Grids. IEEE Trans. Smart Grid 2020, 11, 2218–2234.

- Chu, J.; Wei, C.; Li, J.; Lu, X. Short-Term Electrical Load Forecasting Based on Multi-Granularity Time Augmented Learning. Electr. Eng. 2024.

- Jain, A.K. Optimal Planning and Operation of Distributed Energy Resources; Machine Learning Applications in Smart Grid; Singh, S.N., Jain, N., Agarwal, U., Kumawat, M., Eds.; Springer: Singapore, 2023; pp. 193–213.

- Alhussein, M.; Aurangzeb, K.; Haider, S.I. Hybrid CNN-LSTM Model for Short-Term Individual Household Load Forecasting. IEEE Access 2020, 8, 180544–180557.

- Kim, T.Y.; Cho, S.B. Predicting the Household Power Consumption Using CNN-LSTM Hybrid Networks. In Proceedings of the Intelligent Data Engineering and Automated Learning–IDEAL 2018, Madrid, Spain, 21–23 November 2018; pp. 481–490.

- Chai, T.; Draxler, R.R. Root Mean Square Error (RMSE) or Mean Absolute Error (MAE)? – Arguments Against Avoiding RMSE in the Literature. Geosci. Model Dev. 2014, 7, 1247–1250.

- Chen, W.; Shi, K. Multi-Scale Attention Convolutional Neural Network for Time Series Classification. Neural Netw. 2021, 136, 126–140.

- Deng, Z.; Wang, B.; Xu, Y.; Xu, T.; Liu, C.; Zhu, Z. Multi-Scale Convolutional Neural Network With Time-Cognition for Multi-Step Short-Term Load Forecasting. IEEE Access 2019, 7, 88058–88071.

- Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.-c. Convolutional LSTM Network: A Machine Learning Approach for Precipitation Nowcasting. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Montreal, QC, Canada, 7–12 December 2015; Volume 28, pp. 802–810.

- Wirsing, K.; Mohammady, S. Wavelet Theory; IntechOpen: Rijeka, Croatia, 2020; Chapter 1.

- Sen, P.; Farajtabar, M.; Ahmed, A.; Zhai, C.; Li, L.; Xue, Y.; Smola, A.; Song, L. Think Globally, Act Locally: A Deep Neural Network Approach to High-Dimensional Time Series Forecasting. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Vancouver, BC, Canada, 8–14 December 2019; Volume 32, pp. 5546–5557.

- Salinas, D.; Bohlke-Schneider, M.; Callot, L.; Medico, R.; Gasthaus, J. High-Dimensional Multivariate Forecasting with Low-Rank Gaussian Copula Processes. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Online, 6–12 December 2020; Volume 33, pp. 6827–6837.

- Peng, H.; Wang, W.; Chen, P.; Liu, R. DEFM: Delay-Embedding-Based Forecast Machine for Time Series Forecasting by Spatiotemporal Information Transformation. Chaos 2024, 34, 043112.

- Maroor, J.P.; Sahu, D.N.; Nijhawan, G.; Karthik, A.; Shrivastav, A.K.; Chakravarthi, M.K. Image-Based Time Series Forecasting: A Deep Convolutional Neural Network Approach. In Proceedings of the 2024 4th International Conference on Innovative Practices in Technology and Management (ICIPTM), Uttar Pradesh, India, 21–23 February 2024; pp. 1–6.

- Cui, Z.; Chen, W.; Chen, Y. Multi-Scale Convolutional Neural Networks for Time Series Classification. arXiv 2016, arXiv:1603.06995.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

- Zerveas, G.; Jayaraman, S.; Patel, D.; Bhamidipaty, A.; Eickhoff, C. A Transformer-Based Framework for Multivariate Time Series Representation Learning. arXiv 2020, arXiv:2010.02803.

- Salman, D.; Direkoglu, C.; Kusaf, M.; Fahrioglu, M. Hybrid Deep Learning Models for Time Series Forecasting of Solar Power. Neural Comput. Appl. 2024, 36, 9095–9112.

- Liu, G.; Zhong, K.; Li, H.; Chen, T.; Wang, Y. A State of Art Review on Time Series Forecasting with Machine Learning for Environmental Parameters in Agricultural Greenhouses. Inf. Process. Agric. 2024, 11, 143–162.

- Yang, Y.; Fan, C.; Xiong, H. A Novel General-Purpose Hybrid Model for Time Series Forecasting. Appl. Intell. 2022, 52, 2212–2223.

- Elsworth, S.; Güttel, S. Time Series Forecasting Using LSTM Networks: A Symbolic Approach. arXiv 2020, arXiv:2003.05672.

- Liang, M.; He, Q.; Yu, X.; Wang, H.; Meng, Z.; Jiao, L. A Dual Multi-Head Contextual Attention Network for Hyperspectral Image Classification. Remote Sens. 2022, 14, 3091.

- Yang, Y.; Lu, J. Foreformer: An Enhanced Transformer-Based Framework for Multivariate Time Series Forecasting. Appl. Intell. 2022, 53, 12521–12540.

- Sun, F.-K.; Boning, D.S. FreDo: Frequency Domain-Based Long-Term Time Series Forecasting. arXiv 2022, arXiv:2205.12301.

- Willmott, C.J.; Matsuura, K. Advantages of the mean absolute error (MAE) over the root mean square error (RMSE) in assessing average model performance. Clim. Res. 2005, 30, 79–82.

- Niu, D.; Yu, M.; Sun, L.; Gao, T.; Wang, K. Short-Term Multi-Energy Load Forecasting for Integrated Energy Systems Based on CNN-BiGRU Optimized by Attention Mechanism. Appl. Energy 2022, 313, 118801.

- Abbasimehr, H.; Paki, R. Improving Time Series Forecasting Using LSTM and Attention Models. J. Ambient Intell. Humaniz. Comput. 2022, 13, 673–691.

- Cascone, L.; Sadiq, S.; Ullah, S.; Mirjalili, S.; Siddiqui, H.U.R.; Umer, M. Predicting Household Electric Power Consumption Using Multi-step Time Series with Convolutional LSTM. Big Data Res. 2023, 31, 100360.

- Semmelmann, L.; Henni, S.; Weinhardt, C. Load Forecasting for Energy Communities: A Novel LSTM-XGBoost Hybrid Model Based on Smart Meter Data. Energy Inform. 2022, 5 (Suppl. S1), 24.

- Pimm, A.J.; Cockerill, T.T.; Taylor, P.G.; Bastiaans, J. The Value of Electricity Storage to Large Enterprises: A Case Study on Lancaster University. Energy 2017, 128, 378–393.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).