Detect Insider Threat with Associated Session Graph

Abstract

1. Introduction

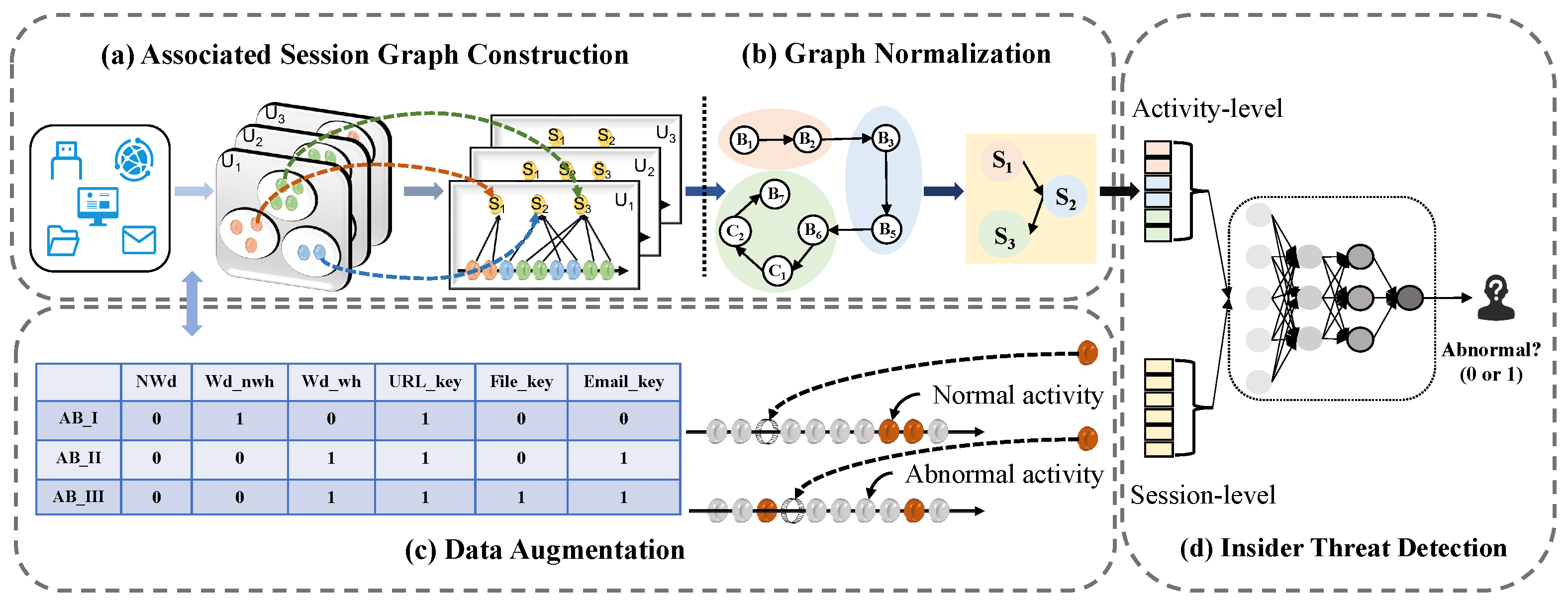

- An associated session graph-based insider threat detection approach (ASG-ITD) is proposed to enhance the performance of graph-based detection models.

- To construct the associated session graph, seven heuristic rules are defined, and fine-grained user behavior features are extracted from both intra-session and inter-session activities to model user behavior.

- To further improve the detection model built on the associated session graph, graph normalization is introduced to highlight its key nodes, and data augmentation is introduced to mitigate the class imbalance caused by the scarcity of anomalous samples.

- Extensive experiments show that ASG-ITD effectively identifies three types of anomalous behavior and achieves state-of-the-art performance among insider threat detection methods that integrate multi-source heterogeneous behavioral data. Our code is publicly available at https://github.com/JmeiDing/ASG-ITD (accessed on 7 December 2024).
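The two-level structure described in these contributions (activities grouped into per-session graphs, and sessions linked into an associated session graph) can be illustrated with a minimal Python sketch. The seven heuristic rules and the exact node and edge features are not reproduced in this section, so the sketch substitutes two simple stand-in rules, both assumptions: activities within a session are chained in chronological order, and consecutive sessions of the same user are linked. The `Activity` fields, the activity types in `KINDS`, and the count-based `session_features` are likewise hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Hypothetical activity types; the paper's feature set is richer.
KINDS = ("logon", "device", "http", "email", "file")


@dataclass
class Activity:
    user: str
    session_id: str
    timestamp: float  # e.g. seconds since epoch (assumed field)
    kind: str         # one of KINDS


@dataclass
class AssociatedSessionGraph:
    # session_id -> activities of that session, in chronological order
    session_nodes: dict = field(default_factory=dict)
    # session_id -> intra-session edges as (index, index) pairs into session_nodes[sid]
    session_edges: dict = field(default_factory=dict)
    # inter-session edges between session ids (the "associated" level)
    assoc_edges: list = field(default_factory=list)


def session_features(acts):
    """Stand-in session-node feature: how often each activity type occurs."""
    return [sum(a.kind == k for a in acts) for k in KINDS]


def build_asg(activities):
    """Build a two-level graph with stand-in rules, not the paper's heuristics."""
    by_session = defaultdict(list)
    for a in activities:
        by_session[a.session_id].append(a)

    g = AssociatedSessionGraph()
    starts = defaultdict(list)  # user -> [(session start time, session_id)]
    for sid, acts in by_session.items():
        acts.sort(key=lambda a: a.timestamp)
        g.session_nodes[sid] = acts
        # Stand-in rule 1: chain consecutive activities inside a session.
        g.session_edges[sid] = [(i, i + 1) for i in range(len(acts) - 1)]
        starts[acts[0].user].append((acts[0].timestamp, sid))

    # Stand-in rule 2: link each user's sessions in chronological order.
    for sessions in starts.values():
        sessions.sort()
        for (_, s1), (_, s2) in zip(sessions, sessions[1:]):
            g.assoc_edges.append((s1, s2))
    return g


acts = [
    Activity("u1", "s1", 0, "logon"),
    Activity("u1", "s1", 5, "device"),
    Activity("u1", "s1", 9, "http"),
    Activity("u1", "s2", 100, "logon"),
    Activity("u2", "s3", 50, "logon"),
]
g = build_asg(acts)
print(g.assoc_edges)                            # [('s1', 's2')]
print(g.session_edges["s1"])                    # [(0, 1), (1, 2)]
print(session_features(g.session_nodes["s1"]))  # [1, 1, 1, 0, 0]
```

Keeping the intra-session edges inside each session and the inter-session edges in a separate list mirrors the split between session-level and associated-session-level features; a real implementation would replace both stand-in rules with the paper's heuristics.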

2. Related Work

2.1. Insider Threat Detection

2.2. Graph-Based Anomaly Detection

3. Problem Definition

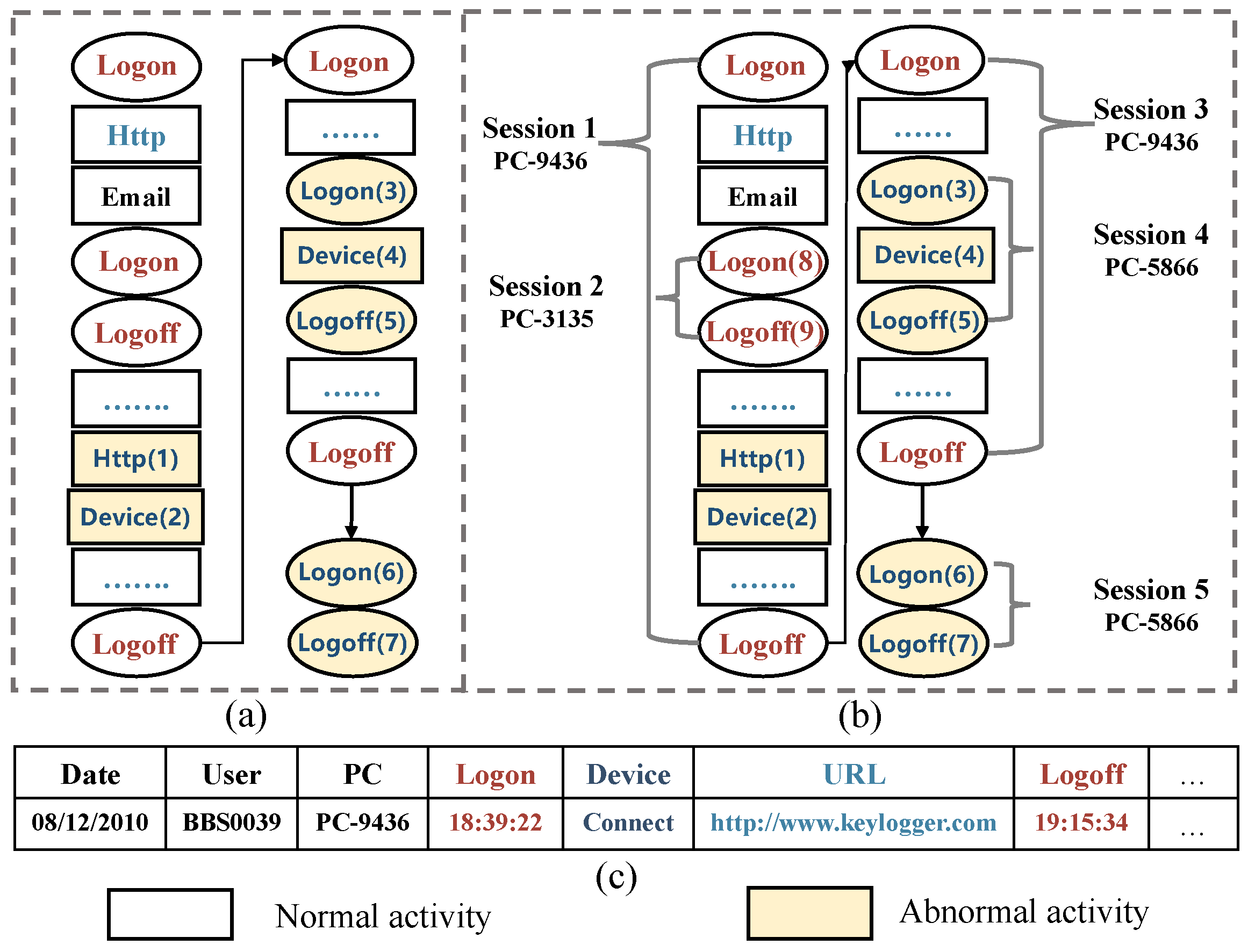

3.1. User Session

3.2. Insider Threat

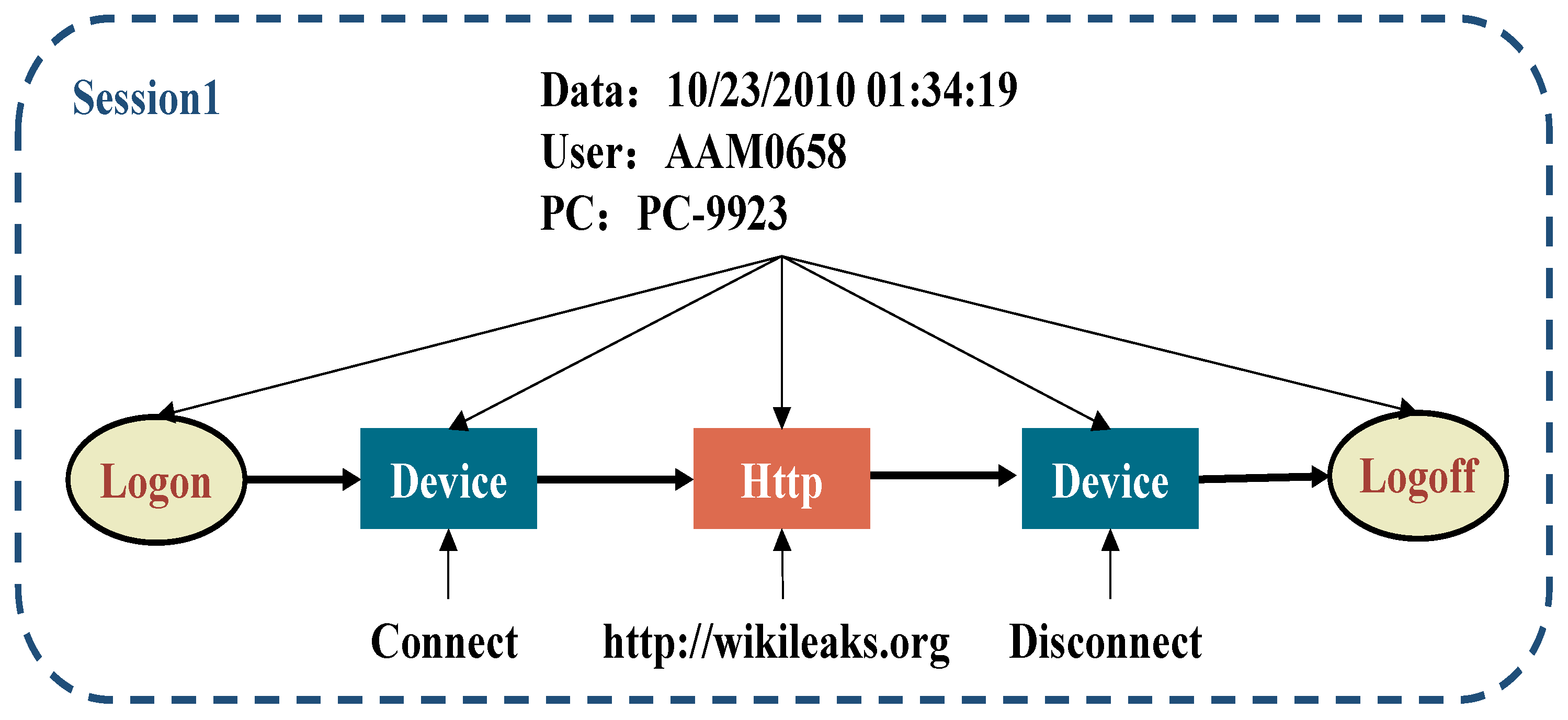

- Abnormal Behavior-I (AB-I): A user who previously did not use removable drives or who did not work at off-hour times suddenly logs on during off-hour time and uses a removable drive to upload files to a suspicious domain (i.e., wikileaks.org). After that, the user leaves the organization within the next few days.

- Abnormal Behavior-II (AB-II): A user suddenly starts visiting job sites and looking for a job that the user has never done before; after a few days, the user leaves the company and uses a thumb drive to steal data (accessing data at a rate significantly higher than during previous activity).

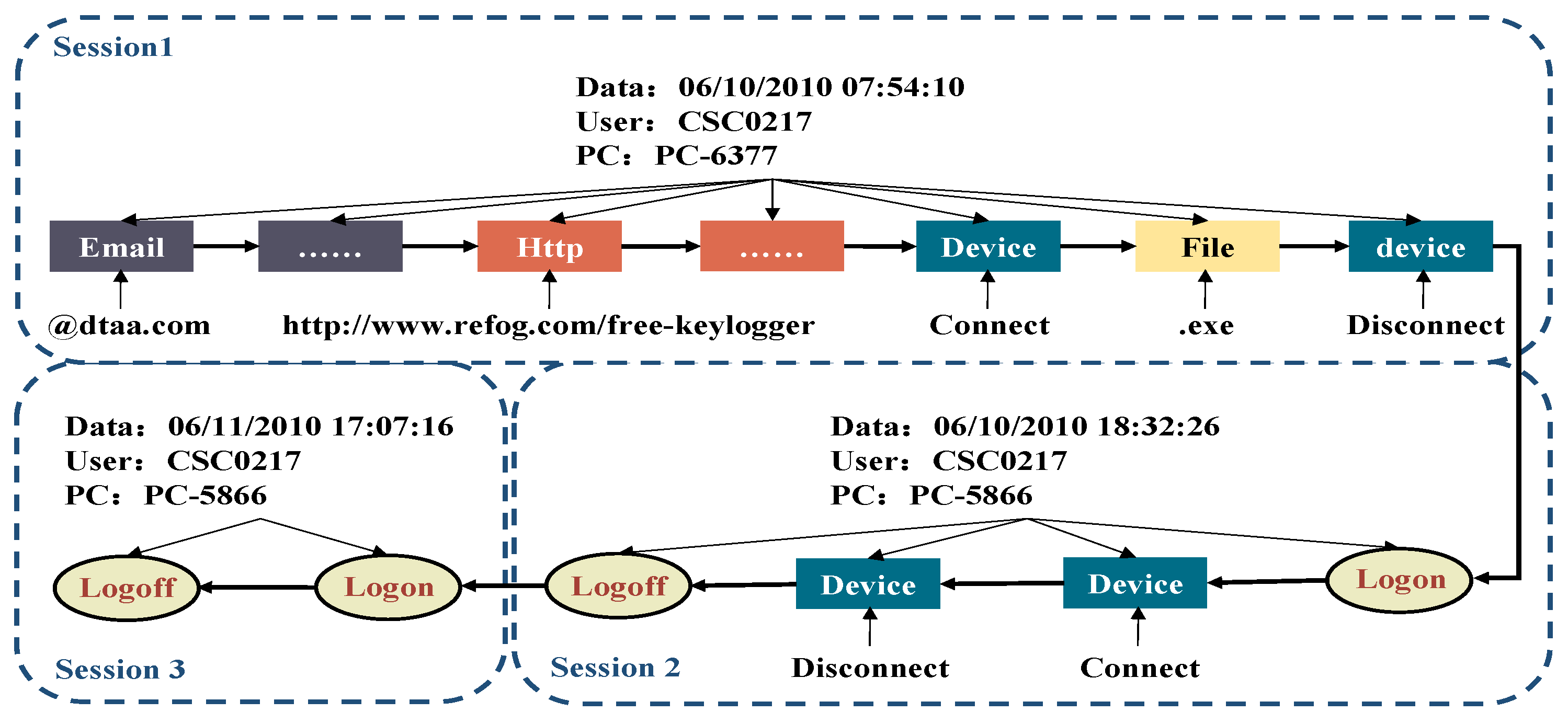

- Abnormal Behavior-III (AB-III): A system administrator who has access to a variety of assets and resources downloads a keylogger and uses a thumb drive to transfer the keyboard logger to a supervisor’s machine. The malicious administrator uses the collected keystroke logs to steal the password of the supervisor’s system, then leaves the organization.
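To make the AB-I definition concrete, the following Python sketch turns its wording into per-session indicator flags. It only illustrates the signals involved; it is not how ASG-ITD detects threats, since ASG-ITD learns from the associated session graph. The `(timestamp, kind, detail)` event layout, the `history` flags, and the 08:00 to 18:00 working day with weekends counted as off-hours are all assumptions made for this example.

```python
from datetime import datetime

# Assumed working hours; the dataset and the paper may define off-hours differently.
WORK_START_HOUR, WORK_END_HOUR = 8, 18


def is_off_hours(ts: datetime) -> bool:
    """True outside the assumed working hours or on a weekend (Saturday=5, Sunday=6)."""
    return ts.weekday() >= 5 or not (WORK_START_HOUR <= ts.hour < WORK_END_HOUR)


def ab1_indicators(session_events, history):
    """session_events: list of (timestamp, kind, detail) tuples for one session.
    history: hypothetical flags summarising the user's earlier behaviour."""
    return {
        "off_hours_logon": any(
            kind == "logon" and is_off_hours(ts) for ts, kind, _ in session_events
        ),
        "removable_drive": any(
            kind == "device" and detail == "connect" for _, kind, detail in session_events
        ),
        "upload_to_suspicious_domain": any(
            kind == "http" and "wikileaks.org" in detail for _, kind, detail in session_events
        ),
        # As worded in AB-I: no earlier removable-drive use, or no earlier after-hours work.
        "deviates_from_history": not history.get("used_removable_drive", False)
        or not history.get("worked_off_hours", False),
    }


events = [
    (datetime(2010, 3, 3, 22, 15), "logon", ""),
    (datetime(2010, 3, 3, 22, 20), "device", "connect"),
    (datetime(2010, 3, 3, 22, 31), "http", "http://wikileaks.org/upload"),
]
flags = ab1_indicators(events, {"used_removable_drive": False, "worked_off_hours": False})
print(flags)
```

The last AB-I step, leaving the organization within a few days, cannot be judged from a single session; it is the kind of cross-session context that linking a user's sessions is meant to capture.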

4. Method

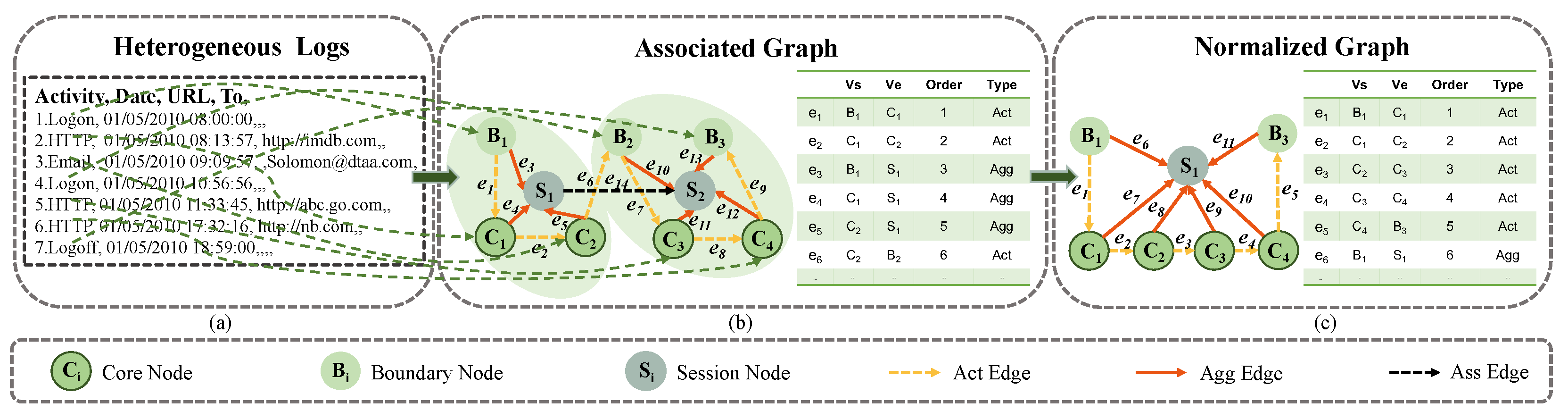

4.1. Associated Session Graph Construction

4.1.1. Activity Nodes Construction

4.1.2. Activity Edges Construction

4.1.3. Node and Edge Features in Session Graph

4.1.4. Session Node Construction

Algorithm 1: Associated Graph Construction

4.1.5. Construction of Session Edges

4.1.6. Node and Edge Features in the Associated Session Graph

4.2. Graph Normalization

4.3. Data Augmentation

Algorithm 2: Data Augmentation

4.4. Insider Threat Detection

5. Evaluation

- RQ1: Can our proposed method effectively detect abnormal user behaviors? How does it perform on different evaluation metrics compared with state-of-the-art detectors?

- RQ2: How much do the individual components of our approach contribute to its performance gains in terms of anomalous behavior detection?

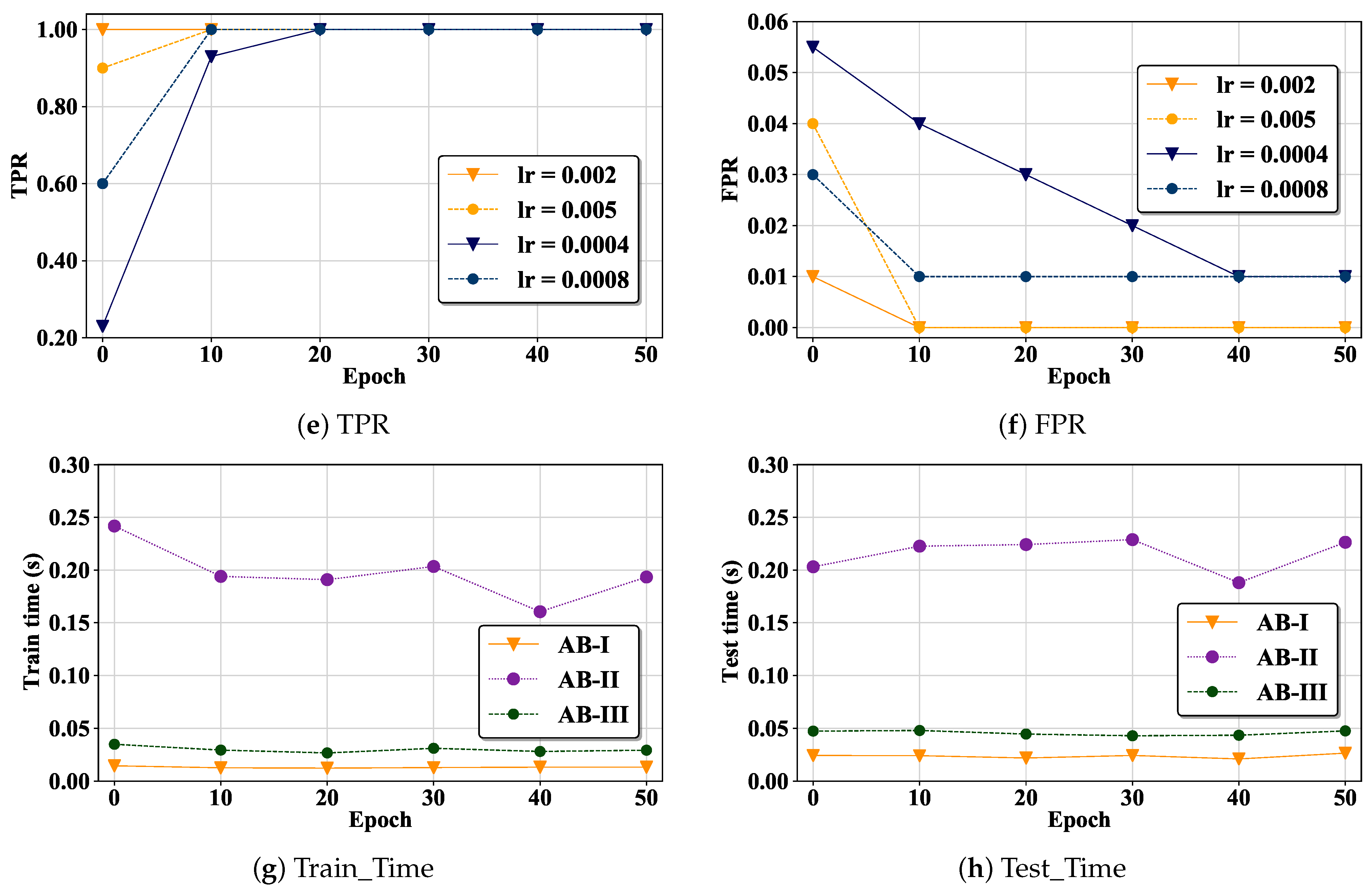

- RQ3: What are the effects of different parameter settings of the graph model on the detection of user anomalies?

5.1. Experimental Setup

5.2. Performance Comparison (Answering RQ1)

5.3. Ablation Study (Answering RQ2)

5.4. Parameter Sensitivity (Answering RQ3)

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Yuan, S.; Wu, X. Deep learning for insider threat detection: Review, challenges and opportunities. Comput. Secur. 2021, 104, 102221.

2. Omidi, L.; Moradi, G.; Salehi, V.; Khosravifar, M. A multi-criteria decision-making approach for prioritizing factors influencing healthcare workers’ safety performance: A case of a women’s hospital. J. Saf. Sustain. 2024, 1, 173–180.

3. Yen, T.F.; Oprea, A.; Onarlioglu, K.; Leetham, T.; Robertson, W.; Juels, A.; Kirda, E. Beehive: Large-scale log analysis for detecting suspicious activity in enterprise networks. In Proceedings of the 29th Annual Computer Security Applications Conference, New Orleans, LA, USA, 9–13 December 2013; pp. 199–208.

4. Gurucul, T.R. 2023 Insider Threat Report. Available online: https://gurucul.com/2023-insider-threat-report#reportForm (accessed on 7 December 2024).

5. Parveen, P.; Thuraisingham, B. Unsupervised incremental sequence learning for insider threat detection. In Proceedings of the 2012 IEEE International Conference on Intelligence and Security Informatics, Washington, DC, USA, 11–14 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 141–143.

6. Glasser, J.; Lindauer, B. Bridging the gap: A pragmatic approach to generating insider threat data. In Proceedings of the 2013 IEEE Security and Privacy Workshops, San Francisco, CA, USA, 23–24 May 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 98–104.

7. Eldardiry, H.; Sricharan, K.; Liu, J.; Hanley, J.; Price, B.; Brdiczka, O.; Bart, E. Multi-source fusion for anomaly detection: Using across-domain and across-time peer-group consistency checks. J. Wirel. Mob. Netw. Ubiquitous Comput. Dependable Appl. 2014, 5, 39–58.

8. Ressi, D.; Romanello, R.; Piazza, C.; Rossi, S. AI-enhanced blockchain technology: A review of advancements and opportunities. J. Netw. Comput. Appl. 2024, 225, 103858.

9. Zhang, X.; Xu, Y.; Lin, Q.; Qiao, B.; Zhang, H.; Dang, Y.; Xie, C.; Yang, X.; Cheng, Q.; Li, Z.; et al. Robust log-based anomaly detection on unstable log data. In Proceedings of the 2019 27th ACM Joint Meeting on European Software Engineering Conference and Symposium on the Foundations of Software Engineering, Tallinn, Estonia, 26–30 August 2019; pp. 807–817.

10. Yaseen, Q.; Jararweh, Y.; Panda, B.; Althebyan, Q. An insider threat aware access control for cloud relational databases. Clust. Comput. 2017, 20, 2669–2685.

11. Yuan, F.; Cao, Y.; Shang, Y.; Liu, Y.; Tan, J.; Fang, B. Insider threat detection with deep neural network. In Proceedings of the Computational Science–ICCS 2018: 18th International Conference, Wuxi, China, 11–13 June 2018; Proceedings, Part I 18. Springer: Berlin/Heidelberg, Germany, 2018; pp. 43–54.

12. Liu, F.; Wen, Y.; Zhang, D.; Jiang, X.; Xing, X.; Meng, D. Log2vec: A heterogeneous graph embedding based approach for detecting cyber threats within enterprise. In Proceedings of the 2019 ACM SIGSAC Conference on Computer and Communications Security, London, UK, 11–15 November 2019; pp. 1777–1794.

13. Lindauer, B. Insider Threat Test Dataset. 2020.

14. Hu, T.; Niu, W.; Zhang, X.; Liu, X.; Lu, J.; Liu, Y. An insider threat detection approach based on mouse dynamics and deep learning. Secur. Commun. Netw. 2019, 2019, 3898951.

15. Le, D.C.; Zincir-Heywood, N.; Heywood, M. Training regime influences to semi-supervised learning for insider threat detection. In Proceedings of the 2021 IEEE Security and Privacy Workshops (SPW), San Francisco, CA, USA, 27 May 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 13–18.

16. Defferrard, M.; Bresson, X.; Vandergheynst, P. Convolutional neural networks on graphs with fast localized spectral filtering. Adv. Neural Inf. Process. Syst. 2016, 29, 3837–3845.

17. Gori, M.; Monfardini, G.; Scarselli, F. A new model for learning in graph domains. In Proceedings of the 2005 IEEE International Joint Conference on Neural Networks, Montreal, QC, Canada, 31 July–4 August 2005; IEEE: Piscataway, NJ, USA, 2005; Volume 2, pp. 729–734.

18. Micheli, A. Neural network for graphs: A contextual constructive approach. IEEE Trans. Neural Netw. 2009, 20, 498–511.

19. Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

20. Zeng, J.; Chua, Z.L.; Chen, Y.; Ji, K.; Liang, Z.; Mao, J. WATSON: Abstracting Behaviors from Audit Logs via Aggregation of Contextual Semantics. In Proceedings of the Network and Distributed Systems Security (NDSS) Symposium 2021, Online, 21–24 February 2021.

21. Wang, C.; Zhu, H. Wrongdoing Monitor: A Graph-Based Behavioral Anomaly Detection in Cyber Security. IEEE Trans. Inf. Forensics Secur. 2022, 17, 2703–2718.

22. Zhang, Z.; Li, Y.; Dong, H.; Gao, H.; Jin, Y.; Wang, W. Spectral-based directed graph network for malware detection. IEEE Trans. Netw. Sci. Eng. 2020, 8, 957–970.

23. Zhang, Z.; Li, Y.; Wang, W.; Song, H.; Dong, H. Malware detection with dynamic evolving graph convolutional networks. Int. J. Intell. Syst. 2022, 37, 7261–7280.

24. Jiang, J.; Chen, J.; Gu, T.; Choo, K.K.R.; Liu, C.; Yu, M.; Huang, W.; Mohapatra, P. Anomaly detection with graph convolutional networks for insider threat and fraud detection. In Proceedings of the MILCOM 2019—2019 IEEE Military Communications Conference (MILCOM), Norfolk, VA, USA, 12–14 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 109–114.

25. Zaman, M.; Siddiqui, T.; Amin, M.R.; Hossain, M.S. Malware detection in Android by network traffic analysis. In Proceedings of the 2015 International Conference on Networking Systems and Security (NSysS), Dhaka, Bangladesh, 5–7 January 2015; pp. 1–5.

26. Coskun, B. (Un)wisdom of Crowds: Accurately Spotting Malicious IP Clusters Using Not-So-Accurate IP Blacklists. IEEE Trans. Inf. Forensics Secur. 2017, 12, 1406–1417.

27. Liu, Z.; Qian, P.; Wang, X.; Zhuang, Y.; Qiu, L.; Wang, X. Combining Graph Neural Networks With Expert Knowledge for Smart Contract Vulnerability Detection. IEEE Trans. Knowl. Data Eng. 2023, 35, 1296–1310.

28. Lin, L.; Zhong, S.; Jia, C.; Chen, K. Insider threat detection based on deep belief network feature representation. In Proceedings of the 2017 International Conference on Green Informatics (ICGI), Fuzhou, China, 15–17 August 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 54–59.

29. Lu, S.; Wei, X.; Li, Y.; Wang, L. Detecting anomaly in big data system logs using convolutional neural network. In Proceedings of the 2018 IEEE 16th International Conference on Dependable, Autonomic and Secure Computing, 16th International Conference on Pervasive Intelligence and Computing, 4th International Conference on Big Data Intelligence and Computing and Cyber Science and Technology Congress (DASC/PiCom/DataCom/CyberSciTech), Athens, Greece, 12–15 August 2018; IEEE Computer Society: Piscataway, NJ, USA, 2018; pp. 151–158.

30. Meng, W.; Liu, Y.; Zhu, Y.; Zhang, S.; Pei, D.; Liu, Y.; Chen, Y.; Zhang, R.; Tao, S.; Sun, P.; et al. LogAnomaly: Unsupervised detection of sequential and quantitative anomalies in unstructured logs. In Proceedings of the IJCAI, Macao, China, 10–16 August 2019; Volume 19, pp. 4739–4745.

31. Sharma, B.; Pokharel, P.; Joshi, B. User behavior analytics for anomaly detection using LSTM autoencoder-insider threat detection. In Proceedings of the 11th International Conference on Advances in Information Technology, Bangkok, Thailand, 1–3 July 2020; pp. 1–9.

32. Zhang, F.; Ma, X.; Huang, W. SeqA-ITD: User Behavior Sequence Augmentation for Insider Threat Detection at Multiple Time Granularities. In Proceedings of the 2022 International Joint Conference on Neural Networks (IJCNN), Padua, Italy, 18–23 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–7.

| Type | NA | EA | AN | SN |
|---|---|---|---|---|
| AB-I | 54.20 | 52.20 | 815,948 | 15,336 |
| AB-II | 561.98 | 555.17 | 2,704,237 | 29,244 |
| AB-III | 311.32 | 300.34 | 1,786,364 | 59,159 |

| Category | NB | AB-I | AB-II | AB-III |
|---|---|---|---|---|
| User | 70 | 30 | 30 | 10 |
| Activity | 32,763,243 | 345 | 6426 | 213 |
| Session | 455,060 | 69 | 7676 | 7786 |
| Associated session | 11,800 | 69 | 30 | 10 |
| Data augmentation | 13,319 | 7566 | 2364 | 2890 |
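The effect of data augmentation on class balance can be read off this table with a short calculation. The Python sketch below rests on assumptions the table does not state explicitly: each abnormal type is compared against the NB column, the "Associated session" row gives the counts before augmentation, and the "Data augmentation" row gives the counts after it.

```python
# Counts taken from the table above (interpretation of the rows is assumed).
NORMAL_BEFORE, NORMAL_AFTER = 11_800, 13_319
ABNORMAL = {  # type -> (associated sessions before augmentation, samples after augmentation)
    "AB-I": (69, 7_566),
    "AB-II": (30, 2_364),
    "AB-III": (10, 2_890),
}


def imbalance(normal: int, abnormal: int) -> float:
    """Normal samples per abnormal sample (1.0 means perfectly balanced)."""
    return normal / abnormal


for name, (before, after) in ABNORMAL.items():
    print(f"{name}: {imbalance(NORMAL_BEFORE, before):.1f}:1 -> "
          f"{imbalance(NORMAL_AFTER, after):.1f}:1")
```

Under these assumptions the script prints `AB-I: 171.0:1 -> 1.8:1`, `AB-II: 393.3:1 -> 5.6:1`, and `AB-III: 1180.0:1 -> 4.6:1`, so augmentation would shrink the normal-to-abnormal ratio by a factor of roughly 70 to 256 depending on the behavior type.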

| Type | Model | Method | ACC (%)↑ | PR (%)↑ | F1 (%)↑ | TPR (%)↑ | FPR (%)↓ | AUC (%)↑ |
|---|---|---|---|---|---|---|---|---|
| AB-I | DBN-OCSVM | fixed time | 81.03 | 84.10 | 80.50 | 65.65 | 4.10 | 80.77 |
| | CNN | fixed time | 86.45 | 84.66 | 86.47 | 88.35 | 5.41 | 86.52 |
| | LSTM | fixed time | 86.79 | 86.78 | 86.77 | 83.29 | 9.79 | 86.77 |
| | LSTM-CNN | fixed time | 91.67 | 93.36 | 91.57 | 89.85 | 3.55 | 91.73 |
| | LSTM-AE | fixed time | 50.64 | 25.32 | 33.62 | 0 | 0 | 50.00 |
| | | fixed time | 95.03 | 95.11 | 95.14 | 93.89 | 3.00 | 95.39 |
| | | session | 95.31 | 96.18 | 95.29 | 94.12 | 3.00 | 95.82 |
| | ASG-ITD | session | 98.67 | 99.61 | 98.59 | 97.70 | 0 | 98.66 |
| AB-II | DBN-OCSVM | fixed time | 50.88 | 25.44 | 33.72 | 0 | 0 | 50.00 |
| | CNN | fixed time | 75.82 | 75.12 | 75.65 | 76.19 | 14.55 | 75.97 |
| | LSTM | fixed time | 66.94 | 68.82 | 67.87 | 84.10 | 5.03 | 66.95 |
| | LSTM-CNN | fixed time | 71.49 | 77.69 | 74.74 | 96.03 | 5.23 | 72.01 |
| | LSTM-AE | fixed time | 57.82 | 67.41 | 62.32 | 0 | 3.76 | 57.96 |
| | | fixed time | 95.31 | 96.18 | 95.29 | 97.12 | 3.00 | 95.82 |
| | | session | 96.91 | 96.43 | 96.63 | 97.48 | 2.20 | 96.35 |
| | ASG-ITD | session | 99.56 | 99.54 | 99.53 | 99.56 | 0 | 99.56 |
| AB-III | DBN-OCSVM | fixed time | 51.68 | 25.84 | 34.07 | 0 | 0 | 50.00 |
| | CNN | fixed time | 95.19 | 93.96 | 94.48 | 95.87 | 3.75 | 94.48 |
| | LSTM | fixed time | 95.01 | 95.28 | 95.02 | 95.43 | 5.79 | 94.43 |
| | LSTM-CNN | fixed time | 95.17 | 95.22 | 95.20 | 95.83 | 9.30 | 94.24 |
| | LSTM-AE | fixed time | 95.20 | 95.18 | 95.29 | 0 | 0 | 48.68 |
| | | fixed time | 95.31 | 95.39 | 95.40 | 96.02 | 3.00 | 94.82 |
| | | session | 95.60 | 95.87 | 95.45 | 96.86 | 2.00 | 95.01 |
| | ASG-ITD | session | 99.67 | 99.14 | 99.13 | 99.15 | 0 | 98.63 |
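The comparison tables report ACC, PR, F1, TPR, FPR, and AUC without formulas in this section. The Python sketch below uses the standard definitions with abnormal behavior as the positive class. One assumption deserves a flag: degenerate rows such as DBN-OCSVM on AB-II (ACC 50.88, PR 25.44, F1 33.72, TPR 0, FPR 0) are consistent with PR and F1 being macro-averaged over the normal and abnormal classes, so the sketch macro-averages those two metrics; the paper may compute them differently. AUC uses the rank (Mann–Whitney) formulation over per-sample scores. The function names are illustrative, not taken from the ASG-ITD code.

```python
from itertools import product


def prf(tp: int, fp: int, fn: int):
    """Precision, recall and F1 for one class (0.0 when undefined)."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f


def detection_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Abnormal = positive class. PR and F1 are macro-averaged (an assumption)."""
    p_pos, tpr, f_pos = prf(tp, fp, fn)
    # Seen from the normal class, tn plays the role of TP, fn of FP and fp of FN.
    p_neg, _, f_neg = prf(tn, fn, fp)
    return {
        "ACC": (tp + tn) / (tp + fp + tn + fn),
        "PR": (p_pos + p_neg) / 2,
        "F1": (f_pos + f_neg) / 2,
        "TPR": tpr,
        "FPR": fp / (fp + tn) if fp + tn else 0.0,
    }


def auc(pos_scores, neg_scores) -> float:
    """Probability that a random abnormal sample outscores a random normal one (ties = 0.5)."""
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p, n in product(pos_scores, neg_scores))
    return wins / (len(pos_scores) * len(neg_scores))


# Hypothetical counts: a detector that labels every sample normal,
# where 5088 of 10,000 samples are normal.
m = detection_metrics(tp=0, fp=0, tn=5088, fn=4912)
print({k: round(100 * v, 2) for k, v in m.items()})
print(auc([0.9, 0.8], [0.1, 0.8]))
```

The first line prints ACC 50.88, PR 25.44, F1 33.72, TPR 0.0, and FPR 0.0, which reproduces the DBN-OCSVM AB-II row; a constant score yields an AUC of exactly 0.5, matching its AUC of 50.00.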

| Behavior | Model | Method | ACC (%)↑ | PR (%)↑ | F1 (%)↑ | TPR (%)↑ | FPR (%)↓ | AUC (%)↑ |
|---|---|---|---|---|---|---|---|---|
| AB OR NOT | Lin et al. [28] | fixed time | 87.79 | n/a | n/a | 81.04 | 12.80 | n/a |
| | Yuan et al. [11] | fixed time | n/a | n/a | n/a | n/a | n/a | 94.49 |
| | Zhang et al. [32] | fixed time | n/a | 95.79 | 95.63 | 95.64 | n/a | 95.85 |
| | Sharma et al. [31] | session | 90.17 | 2.62 | 5.09 | 91.03 | 9.84 | n/a |
| | Le et al. [15] | fixed time | n/a | n/a | n/a | 79.10 | 5.00 | 96.80 |
| | ASG-ITD | session | 99.05 | 99.48 | 98.89 | 98.38 | 0 | 98.81 |

| Method | Raw Data / Augmented Data (ASG, SG, ASG) | Type | ACC (%)↑ | PR (%)↑ | F1 (%)↑ | TPR (%)↑ | FPR (%)↓ | AUC (%)↑ |
|---|---|---|---|---|---|---|---|---|
| ITD-NDE | ✓ | AB-I | 99.74 | 22.34 | 22.39 | 22.67 | 0 | 24.97 |
| DT-Nne | ✓ | AB-I | 92.82 | 92.82 | 92.82 | 92.83 | 0.77 | 92.83 |
| RF-Nne | ✓ | AB-I | 94.76 | 94.76 | 94.76 | 94.76 | 0.45 | 94.76 |
| MLP-Nne | ✓ | AB-I | 93.78 | 93.77 | 93.78 | 93.79 | 0.58 | 93.79 |
| KNN-Nne | ✓ | AB-I | 93.46 | 93.45 | 93.46 | 93.46 | 0.58 | 93.46 |
| LSTM-Nne | ✓ | AB-I | 70.86 | 75.41 | 69.70 | 71.21 | 8.09 | 71.21 |
| | ✓ | AB-I | 95.31 | 96.18 | 95.29 | 94.12 | 0.40 | 95.82 |
| | ✓ | AB-I | 96.66 | 97.63 | 96.37 | 94.84 | 0.02 | 96.52 |
| ITD-NNE | ✓ | AB-I | 96.91 | 99.58 | 96.69 | 95.17 | 0 | 96.88 |
| ASG-ITD | ✓ | AB-I | 98.67 | 99.61 | 98.59 | 97.70 | 0 | 98.66 |
| ITD-NDE | ✓ | AB-II | 98.82 | 0 | 0 | 0 | 0 | 15.62 |
| DT-Nne | ✓ | AB-II | 90.75 | 90.73 | 90.75 | 90.87 | 1.61 | 90.87 |
| RF-Nne | ✓ | AB-II | 92.07 | 92.07 | 92.07 | 92.09 | 0.20 | 92.09 |
| MLP-Nne | ✓ | AB-II | 91.94 | 91.92 | 91.94 | 91.98 | 0.40 | 91.98 |
| KNN-Nne | ✓ | AB-II | 92.44 | 92.12 | 92.44 | 91.48 | 0.40 | 92.48 |
| LSTM-Nne | ✓ | AB-II | 62.82 | 78.25 | 58.02 | 64.03 | 7.19 | 64.03 |
| | ✓ | AB-II | 96.91 | 96.43 | 96.63 | 97.48 | 2.20 | 96.35 |
| | ✓ | AB-II | 97.12 | 97.65 | 97.64 | 98.42 | 2.00 | 97.37 |
| ITD-NNE | ✓ | AB-II | 98.95 | 98.45 | 98.93 | 99.48 | 2.00 | 98.95 |
| ASG-ITD | ✓ | AB-II | 99.56 | 99.54 | 99.53 | 99.56 | 0 | 99.56 |
| ITD-NDE | ✓ | AB-III | 99.32 | 0 | 0 | 0 | 0 | 10 |
| DT-Nne | ✓ | AB-III | 93.25 | 93.25 | 93.25 | 93.25 | 0.70 | 93.25 |
| RF-Nne | ✓ | AB-III | 94.32 | 94.32 | 94.23 | 94.32 | 0.65 | 94.32 |
| MLP-Nne | ✓ | AB-III | 93.25 | 93.25 | 93.25 | 93.25 | 0.70 | 93.25 |
| KNN-Nne | ✓ | AB-III | 93.90 | 93.89 | 93.90 | 93.94 | 2.12 | 93.93 |
| LSTM-Nne | ✓ | AB-III | 62.82 | 78.25 | 58.02 | 64.03 | 7.19 | 64.03 |
| | ✓ | AB-III | 95.60 | 95.87 | 95.45 | 96.86 | 2.00 | 95.01 |
| | ✓ | AB-III | 97.76 | 96.03 | 96.63 | 97.48 | 1.80 | 96.35 |
| ITD-NNE | ✓ | AB-III | 97.80 | 96.12 | 97.46 | 98.96 | 1.20 | 97.04 |
| ASG-ITD | ✓ | AB-III | 99.67 | 99.14 | 99.13 | 99.15 | 0 | 98.63 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ding, J.; Qian, P.; Ma, J.; Wang, Z.; Lu, Y.; Xie, X. Detect Insider Threat with Associated Session Graph. Electronics 2024, 13, 4885. https://doi.org/10.3390/electronics13244885

Ding J, Qian P, Ma J, Wang Z, Lu Y, Xie X. Detect Insider Threat with Associated Session Graph. Electronics. 2024; 13(24):4885. https://doi.org/10.3390/electronics13244885

Chicago/Turabian Style: Ding, Junmei, Peng Qian, Jing Ma, Zhiqiang Wang, Yueming Lu, and Xiaqing Xie. 2024. "Detect Insider Threat with Associated Session Graph" Electronics 13, no. 24: 4885. https://doi.org/10.3390/electronics13244885

APA Style: Ding, J., Qian, P., Ma, J., Wang, Z., Lu, Y., & Xie, X. (2024). Detect Insider Threat with Associated Session Graph. Electronics, 13(24), 4885. https://doi.org/10.3390/electronics13244885