Abstract

Machine learning (ML) methods have revolutionized cancer analysis by enhancing the accuracy of diagnosis, prognosis, and treatment strategies. This paper presents an extensive study on the applications of machine learning in cancer analysis, with a focus on three primary areas: a comparative analysis of medical imaging techniques (including X-rays, mammography, ultrasound, CT, MRI, and PET), various AI and ML techniques (such as deep learning, transfer learning, and ensemble learning), and the challenges and limitations associated with utilizing ML in cancer analysis. The study highlights the potential of ML to improve early detection and patient outcomes while also addressing the technical and practical challenges that must be overcome for its effective clinical integration. Finally, the paper discusses future directions and opportunities for advancing ML applications in cancer research.

1. Introduction

Globally, cancer continues to be a significant public health challenge, with nearly 20 million new cases and 9.7 million deaths in 2022 alone [1]. For 2024, the American Cancer Society estimated 2,001,140 new cancer cases and 611,720 cancer-related deaths in the United States. The projected 611,720 deaths translate to approximately 1671 fatalities daily, with lung, prostate, and colorectal cancers being the primary causes in men, and lung, breast, and colorectal cancers being the primary causes in women [2]. These data underscore the ongoing challenges posed by cancer and the importance of continued research and innovation in cancer analysis and care.

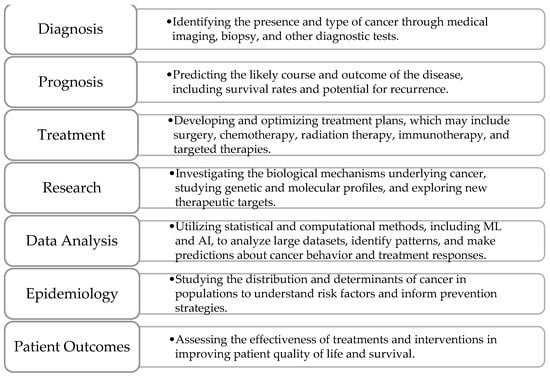

Cancer analysis refers to the comprehensive examination and study of cancer, involving various methods and techniques to understand its development, progression, diagnosis, treatment, and prognosis. It encompasses several key areas, illustrated in Figure 1.

Figure 1.

Key areas of cancer analysis.

Diagnosis is the foundational step, involving the identification of cancer through methods like imaging (MRI, CT scans) or biopsy. This phase is crucial, as early and precise detection greatly impacts treatment decisions and patient outcomes. Prognosis follows, focusing on predicting the likely course of the disease, including survival rates and the risk of recurrence. Prognostic models consider factors like tumor size, stage, histological grade, and molecular markers. With advancements in ML, these models can now incorporate complex datasets, such as imaging and genomic information, to enhance prediction accuracy and guide tailored treatment strategies.

Treatment is another vital area, centered on developing and optimizing therapeutic plans based on the cancer type and individual patient characteristics. Standard modalities include surgery, chemotherapy, radiation therapy, targeted therapy, and immunotherapy. The emergence of personalized medicine has enabled the use of treatments specifically targeting genetic mutations within tumors, improving effectiveness and minimizing side effects. ML and AI assist in predicting patient responses to different therapies, optimizing treatment selection, and adjusting plans as cancer progresses or responds to interventions.

Research plays a critical role in advancing cancer analysis by investigating the biological mechanisms underlying cancer, identifying new therapeutic targets, and exploring novel diagnostic methods. With the integration of genomics, proteomics, and bioinformatics, research efforts have accelerated, providing deeper insights into tumor behavior and potential treatment pathways. In parallel, data analysis leverages statistical and computational techniques, including ML, to analyze vast datasets from imaging, clinical records, and genetic profiles. This analysis aids in identifying patterns, classifying cancer types, segmenting tumors, and making predictive assessments regarding patient outcomes.

Epidemiology studies the distribution and determinants of cancer across populations to identify risk factors, assess the impact of preventive measures, and inform public health policies. Understanding trends in cancer incidence, survival, and mortality is essential for developing effective screening programs and educational campaigns. Finally, patient outcomes focus on evaluating the success of treatments in terms of survival, quality of life, and recurrence rates. By analyzing outcomes, healthcare providers can refine treatment protocols, improve supportive care, and ensure optimal patient well-being during and after their cancer journey. Together, these key areas form an integrated approach to cancer analysis, driving progress in diagnosis, treatment, research, and patient care.

Medical imaging data analysis is crucial for effectively and accurately detecting and diagnosing various types of cancer. Expert radiologists and pathologists rely on medical images to identify anomalous growths or abnormalities within the patient’s body, determining the staging and metastasis of cancer through meticulous analysis. A diverse range of imaging modalities is used for diagnosing different cancer types, significantly enhancing diagnostic precision by providing exceptionally accurate and clear depictions of tissues and internal organs.

In recent years, extensive research has focused on AI and ML, identifying numerous areas where these techniques can be applied to yield superior results [3]. These advancements offer promising avenues for enhancing cancer diagnosis and care, as such algorithms can analyze medical images with unprecedented efficiency, aiding in early detection and personalized treatment strategies for cancer patients. Moreover, they can assist in automating repetitive tasks, freeing up valuable time for healthcare professionals to focus on more complex aspects of patient care.

1.1. Paper Structure

This paper presents a comprehensive study on the utilization of ML methods for cancer analysis and is divided into four key sections. The first section provides an in-depth presentation of various medical imaging techniques, including X-rays, mammography, ultrasound, CT, PET, MRI, and endoscopy, highlighting their effectiveness in cancer detection and diagnosis. The second section outlines the ML framework for cancer analysis, from data collection to postprocessing. The third section presents a detailed literature review of lung, breast, brain, cervical, colorectal, and liver cancers, examining how ML techniques have been applied to these cancer types to enhance diagnostic and prognostic accuracy. Lastly, the various challenges and limitations associated with the use of ML in cancer analysis, such as data quality, model interpretability, and the need for extensive validation before clinical application, are explored.

1.2. Motivation and Contribution

The survey explores recent advancements in AI and ML for various cancers (lung, breast, brain, cervical, colorectal, and liver). It aims to update researchers on new developments and to address major challenges in cancer care, such as disease complexity, the need for personalized treatments, and the large volume of healthcare data. The paper highlights how AI and ML can help overcome these issues, offering insights into potential solutions and improvements. It emphasizes improving patient outcomes through successful case studies and algorithms, and informs healthcare professionals about the benefits of computer-aided techniques in early detection, accurate diagnosis, personalized treatment planning, and patient monitoring.

The main contributions of this paper can be summarized as follows:

- Analyzes and highlights the most important aspects of the aforementioned six cancer types.

- Analyzes various ML methods based on benchmark datasets and several performance evaluation metrics.

- Identifies the majority of datasets utilized in the reviewed papers.

- Outlines various research challenges, potential solutions, and opportunities in cancer analysis and care for future researchers.

1.3. Summary

In the following paragraphs, a concise overview of the six cancer types discussed in this paper is provided along with the imaging modalities commonly employed for their detection and diagnosis.

- Lung cancer originates in the tissues of the lungs, usually in the cells lining the air passages. It is strongly associated with smoking, but can also occur in non-smokers due to other risk factors like exposure to secondhand smoke, radon, or certain chemicals. Lung cancer is one of the leading causes of cancer-related deaths worldwide, emphasizing the importance of early detection and smoking cessation efforts. It is detected primarily through chest X-rays, CT scans, and PET scans. CT scans offer high-resolution images and are particularly useful for detecting small lung nodules, while PET scans help assess the metabolic activity of suspected tumors, aiding in staging and treatment planning.

- Breast cancer develops in the cells of the breasts, most commonly in the ducts or lobules. It predominantly affects women; men can also develop it, although much less commonly. This cancer is often diagnosed using mammography, ultrasound, and MRI. Mammography remains the gold standard for screening, while ultrasound and MRI are utilized for further evaluation of suspicious findings, particularly in dense breast tissue.

- Brain cancer refers to tumors that develop within the brain or its surrounding tissues. These tumors can be benign or malignant. It is diagnosed using imaging modalities like MRI, CT scans, and PET scans, and sometimes biopsy for histological confirmation. MRI is the preferred imaging modality for evaluating brain tumors due to its superior soft tissue contrast, allowing for precise localization and characterization of lesions.

- Cervical cancer starts in the cells lining the cervix, which is the lower part of the uterus that connects to the vagina. It is primarily caused by certain strains of human papillomavirus (HPV). Regular screening and follow-up tests such as Pap smear tests, HPV DNA tests, colposcopy, and biopsy can help detect cervical cancer early, when it is most treatable.

- Colorectal cancer develops in the colon or rectum, typically starting as polyps on the inner lining of the colon or rectum. These polyps can become cancerous over time if not removed. Screening relies on several tests, including colonoscopy, sigmoidoscopy, fecal occult blood tests, and CT colonography (virtual colonoscopy). Colonoscopy is considered the gold standard for detecting colorectal polyps and cancers, allowing for both visualization and tissue biopsy during the procedure.

- Liver cancer arises from the cells of the liver and can either start within the liver itself (primary liver cancer) or spread to the liver from other parts of the body (secondary liver cancer). Chronic liver diseases such as hepatitis B or C infection and cirrhosis, as well as excessive alcohol consumption, are major risk factors for primary liver cancer. Its diagnosis relies on imaging modalities such as ultrasound, CT scans, MRI, and PET scans, along with blood tests for tumor markers such as alpha-fetoprotein (AFP). These imaging techniques enable the detection of liver lesions, nodules, or masses, aiding in the diagnosis, staging, and treatment planning for liver cancer patients.

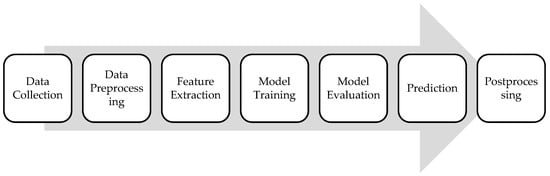

ML algorithms used for cancer detection typically follow a pattern recognition approach, where the algorithm learns patterns and features from input data to distinguish between cancerous and non-cancerous cases. Such algorithms follow a specific framework, presented in Figure 2. Each step in the framework will be detailed in following sections.

Figure 2.

ML framework for cancer analysis.

The first step involves collecting a large dataset containing features extracted from various sources such as medical imaging scans. Next, the collected data undergo preprocessing steps to clean, normalize, and standardize them for analysis. This may include removing noise, handling missing values, and scaling features to ensure consistency across the dataset. Relevant features are then extracted from the preprocessed data, depending on the type of cancer and the available input data. For medical imaging data, features may include texture, shape, intensity, or structural characteristics of tumors. The ML algorithm is trained using the labeled dataset, where each instance is associated with a binary label indicating the presence or absence of cancer. The trained model is then evaluated using separate validation datasets to assess its performance using relevant metrics that will be detailed in the upcoming section. Once the model is trained and validated, it can be used to predict the likelihood of cancer for new, unseen cases. The input data are fed into the trained model, which outputs a probability score or a binary classification indicating the presence or absence of cancer. Lastly, postprocessing steps may be applied to refine the model predictions or interpret its outputs.
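The workflow described above can be sketched end to end on toy data. This is only a minimal illustration, assuming hypothetical feature vectors (lesion diameter in mm and mean intensity) and a nearest-centroid classifier as a simple stand-in for the models reviewed in this paper; none of the names or values below come from the surveyed studies.

```python
# Toy end-to-end workflow: preprocess -> train -> evaluate -> predict.
# All function names and data values are hypothetical illustrations.

def fit_scaler(rows):
    """Learn the per-feature minimum and maximum from the training data."""
    cols = list(zip(*rows))
    return [min(c) for c in cols], [max(c) for c in cols]

def scale(rows, lo, hi):
    """Min-max scale each feature using the training-set statistics."""
    return [[(v - l) / (h - l) if h > l else 0.0
             for v, l, h in zip(row, lo, hi)] for row in rows]

def train_centroids(rows, labels):
    """'Training' here only stores the mean feature vector of each class."""
    centroids = {}
    for cls in sorted(set(labels)):
        members = [r for r, y in zip(rows, labels) if y == cls]
        centroids[cls] = [sum(col) / len(members) for col in zip(*members)]
    return centroids

def predict(centroids, row):
    """Return the class whose centroid is nearest (squared Euclidean distance)."""
    return min(centroids,
               key=lambda cls: sum((a - b) ** 2
                                   for a, b in zip(row, centroids[cls])))

# 1. Data collection: [lesion diameter (mm), mean intensity]; label 1 = cancer.
train_x = [[5, 0.20], [7, 0.30], [6, 0.25], [20, 0.70], [25, 0.80], [22, 0.75]]
train_y = [0, 0, 0, 1, 1, 1]
val_x = [[8, 0.35], [19, 0.65]]
val_y = [0, 1]

# 2. Preprocessing: scale features with statistics learned on the training set.
lo, hi = fit_scaler(train_x)
train_s, val_s = scale(train_x, lo, hi), scale(val_x, lo, hi)

# 3. Training on the labeled dataset.
model = train_centroids(train_s, train_y)

# 4. Evaluation on a separate validation set.
val_pred = [predict(model, row) for row in val_s]
accuracy = sum(p == y for p, y in zip(val_pred, val_y)) / len(val_y)

# 5. Prediction for a new, unseen case.
new_case = scale([[21, 0.72]], lo, hi)[0]
new_label = predict(model, new_case)
```

Note that the scaler is fitted on the training set only and then reused for validation and new cases, so that no information from held-out data leaks into preprocessing.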

1.4. Methods

The studies included in this paper focus on the application of ML techniques in cancer analysis, particularly for the diagnosis, classification, and treatment of the six most frequent cancer types: lung, breast, brain, cervical, colorectal, and liver cancers. Studies were eligible if they involved analyses of medical imaging techniques (X-rays, mammography, ultrasound, CT, MRI, PET), various AI/ML methods (e.g., deep learning, transfer learning, ensemble learning), and discussed challenges or limitations of ML in cancer care. Studies were grouped based on the cancer type and ML methodology applied. The characteristics of each study were summarized in a table that focused on the ML models used and their diagnostic performance.

A comprehensive literature search was conducted on the following databases: Web of Science, PubMed, and IEEE Xplore. Additional sources included reference lists of identified articles and conference proceedings in the fields of medical imaging and oncology. Non-English studies and reviews published before 2020 were excluded. The terms utilized in the search process were related to cancer types (“lung cancer”, “breast cancer”, etc.), ML methodologies (“deep learning”, “transfer learning”, etc.), medical imaging techniques (“CT”, “MRI”, “ultrasound”, etc.), and key areas of focus (“classification”, “segmentation”).

A single reviewer conducted the screening of titles, abstracts, and full-text articles to identify studies meeting the inclusion criteria. No automation tools were used in the selection process. The same reviewer performed the data extraction, collecting information such as study characteristics (e.g., author, year, cancer type, ML model, dataset used) and outcomes (e.g., accuracy). No formal risk-of-bias assessment was performed.

2. Medical Imaging and Diagnostic Techniques: An Overview of Key Modalities

Medical imaging techniques, together with deep learning (DL) methods, have emerged as powerful tools in the field of cancer analysis. The intersection of advanced imaging technologies and AI has led to more accurate, efficient, and early detection of cancer, revolutionizing the way healthcare professionals diagnose and treat the disease. This section briefly presents some of the key medical imaging techniques, including their underlying principles, applications, and comparative advantages when used for DL applications in cancer analysis.

2.1. X-Rays

X-rays are a form of high-energy electromagnetic radiation, invisible to the eye, that can pass through solid objects, including human tissue. When X-rays are directed at the body, they create images of internal structures, like bones or organs, by recording how much of the radiation is absorbed by or passes through different tissues. The resulting image, typically a 2D projection, can have resolutions as fine as 100 microns, with intensities indicating X-ray absorption levels [4].

2.2. Mammography

Mammography is a specialized medical imaging technique used primarily for breast cancer screening and diagnosis [5]. It involves taking low-dose X-ray images [6] of the breast tissue to detect abnormalities such as tumors, cysts, or calcifications. These images, called mammograms, can help physicians detect early signs of breast cancer [6], such as abnormal lumps or masses, before they can be felt.

2.3. Ultrasound

Ultrasound (US) is a non-invasive, safe, and widely available imaging method [7] that uses high-frequency sound waves. These waves are reflected when they hit tissues, and the returning echoes are captured to create real-time images of internal structures. Because US is non-ionizing, the absence of radiation reduces health risks and enhances patient comfort during diagnostic procedures. However, its penetration into deeper tissues is limited, so it may not provide comprehensive images of the organs or areas under examination. The resulting incomplete visualization may hinder diagnostic accuracy and the thorough assessment of certain medical conditions.

2.4. Computed Tomography

Computed tomography (CT) stands out as a fast and readily available imaging method. A CT scan is a diagnostic tool that employs X-ray images taken from various angles around the body, utilizing computer processing to generate detailed cross-sectional images (slices) of bones, blood vessels, and soft tissues. CT scan images provide superior information compared to plain X-rays, enabling a comprehensive assessment of various anatomical structures. This imaging technique allows for the identification of abnormalities in bones, blood vessels, and soft tissues, facilitating a thorough examination [8].

2.5. Positron Emission Tomography

Positron emission tomography (PET) has transitioned from a primarily research-focused tool to an indispensable imaging modality for evaluating cancer. It uses a radioactive tracer that emits positrons; their annihilation produces gamma rays, which are detected to create detailed images of the body’s metabolic processes. While PET offers high sensitivity for malignancy detection, its practical use as a standalone imaging modality is often limited, as it provides imprecise anatomical localization due to limited spatial resolution [9]. The advent of integrated PET–CT, a combination of PET and CT in a single device, has addressed this limitation. This approach allows for the merging of PET and CT datasets acquired during a single examination, providing both morphological and metabolic information and enhancing the accuracy and reliability of cancer staging.

2.6. Magnetic Resonance Imaging

Magnetic resonance imaging (MRI) is a precise and accurate method for tumor diagnosis, leveraging high contrast among soft tissues in the obtained images. Although this characteristic makes it particularly effective in identifying and characterizing tumors, the diagnostic accuracy of MRI can be influenced by both patient- and operator-related factors. Patient-related considerations, such as claustrophobia, implanted materials or devices, and discomfort, may limit the application of MRI and affect the quality of the results [10]. In contrast to CT, MRI operates without ionizing radiation: it uses powerful magnetic fields and radio waves, and relies on the behavior of hydrogen atoms in the body’s tissues when exposed to these fields, to produce detailed images of internal structures.

2.7. Endoscopic Biopsy

Endoscopic biopsy is a procedure commonly used to obtain tissue samples from the gastrointestinal tract [11] and respiratory system [12]. It involves using an endoscope, a long, flexible tube with a camera and light at its tip, to visualize the inside of these organs and guide the biopsy. Endoscopic biopsy may be performed in various areas of the body, as presented below.

2.7.1. Colonoscopy

Colonoscopy [11,13] is a medical procedure used to examine the inside of the colon and rectum. During the procedure, if any suspicious growths or polyps are found, they can be removed or biopsied for further examination. Colonoscopy, with or without removal of a lesion, is an invasive procedure and can carry some risks, such as bleeding and perforation, although these are considered to be low.

2.7.2. Bronchoscopy

Bronchoscopy [12,14] is a medical procedure used to examine the inside of the airways and lungs. If a suspicious mass or lesion is found during the procedure, a biopsy can be taken for further examination under a microscope. The risks associated with bronchoscopy are generally considered to be low, and include bleeding, infection, and pneumothorax.

3. Machine Learning Framework

In cancer analysis, building effective ML models is crucial for accurate diagnosis, prognosis, and treatment planning. This section outlines the essential workflow stages previously presented in Figure 2: gathering data, preprocessing it for quality, extracting relevant features, training and evaluating the model, and finally, making predictions and refining outputs. Each stage plays a vital role in creating models that are accurate and robust.

3.1. Data Collection

In cancer analysis, data collection involves gathering various types of medical data, including imaging data (such as MRI, CT, or histopathology images), clinical records, genetic information, and biomarker levels. High-quality, annotated datasets are crucial, especially for tasks such as tumor classification and segmentation. Data may come from hospital databases, clinical trials, or publicly available medical repositories. It is important to collect diverse data across different patient demographics and cancer types to build a robust and generalizable model [15].

3.2. Data Preprocessing

Medical data, particularly images, require extensive preprocessing to handle noise, artifacts, and varying imaging conditions. Preprocessing steps include normalization (e.g., adjusting intensity values in images), resizing to a consistent scale, removing artifacts (e.g., motion blur in MRIs) using filters, and enhancing the contrast. For segmentation tasks, creating accurate masks that outline the tumor is vital for model training. Data augmentation techniques, such as rotation, flipping, or contrast adjustment, can help to increase the dataset’s variability and improve model robustness. Handling missing or inconsistent clinical data, such as incomplete patient records, is also part of this stage [16].
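As a minimal sketch of two of the preprocessing steps mentioned above, the following code applies min-max intensity normalization and simple flip and rotation augmentations to an image stored as a nested list. The function names and the 2×2 example image are hypothetical; real pipelines typically operate on array libraries and full-resolution scans.

```python
# Intensity normalization and simple augmentation on a tiny grayscale image.
# Images are plain nested lists; names and values are illustrative only.

def normalize_intensity(image):
    """Rescale pixel intensities linearly to the range [0, 1]."""
    lo = min(min(row) for row in image)
    hi = max(max(row) for row in image)
    if hi == lo:  # constant image: avoid division by zero
        return [[0.0 for _ in row] for row in image]
    return [[(p - lo) / (hi - lo) for p in row] for row in image]

def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]

def rotate_90_clockwise(image):
    """Rotate the image by 90 degrees clockwise."""
    return [list(col) for col in zip(*image[::-1])]

def augment(image):
    """Return the original image plus two augmented variants."""
    return [image, flip_horizontal(image), rotate_90_clockwise(image)]

scan = [[0, 50], [100, 200]]          # hypothetical raw intensities
normalized = normalize_intensity(scan)
augmented = augment(normalized)
```

For segmentation tasks, the same geometric transformation would also have to be applied to the tumor mask so that image and mask stay aligned.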

3.3. Feature Extraction

In cancer analysis, feature extraction often involves identifying key characteristics from imaging data, such as tumor size, shape, texture, and intensity patterns. Advanced methods like radiomics can quantify these features to capture the heterogeneity within a tumor. For segmentation tasks, features may include pixel intensity gradients or edge detection to outline tumor boundaries accurately. In classification, features might encompass not only image characteristics but also clinical data like patient age, genetic markers, and lab results.
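To make the idea of handcrafted features concrete, the sketch below computes a few simple size, shape, and intensity descriptors for a region defined by a binary mask. It is an illustrative assumption, not a radiomics implementation: the feature names and the toy image are hypothetical, and intensity variance is used only as a very crude proxy for texture.

```python
# Simple region descriptors from an image and a binary tumor mask.
# Feature names and example values are hypothetical.

def region_features(image, mask):
    """Return size, shape, and intensity descriptors of the masked region."""
    coords = [(r, c) for r, row in enumerate(mask)
              for c, v in enumerate(row) if v]
    if not coords:
        raise ValueError("mask contains no foreground pixels")
    pixels = [image[r][c] for r, c in coords]
    area = len(pixels)                                   # size in pixels
    mean = sum(pixels) / area                            # average intensity
    variance = sum((p - mean) ** 2 for p in pixels) / area  # crude texture proxy
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    height = max(rows) - min(rows) + 1                   # bounding-box height
    width = max(cols) - min(cols) + 1                    # bounding-box width
    return {
        "area": area,
        "mean_intensity": mean,
        "intensity_variance": variance,
        "bbox_height": height,
        "bbox_width": width,
        "extent": area / (height * width),               # fill ratio of the box
    }

image = [[0, 0, 0, 0],
         [0, 10, 20, 0],
         [0, 30, 0, 0],
         [0, 0, 0, 0]]
mask = [[0, 0, 0, 0],
        [0, 1, 1, 0],
        [0, 1, 0, 0],
        [0, 0, 0, 0]]
features = region_features(image, mask)
```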

3.4. Model Training

Model training in cancer analysis involves using annotated datasets (e.g., labeled images indicating tumor presence or delineated tumor regions) to teach the model to recognize cancerous patterns. For classification, algorithms like CNNs, SVMs, or ensemble methods are commonly used to differentiate between benign and malignant cases. For segmentation, more specialized architectures like U-Net are employed to accurately identify and delineate tumor regions.
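As one hedged example of supervised training for benign versus malignant classification, the following sketch fits a linear SVM by stochastic subgradient descent on the regularized hinge loss. The two-dimensional, already standardized features, the hyperparameters, and all names are assumptions made for illustration; practical systems would rely on an established library and on far richer inputs.

```python
# Linear SVM trained with stochastic subgradient descent on the hinge loss.
# Labels are -1 (benign) and +1 (malignant); the data are a hypothetical toy set.

def decision_value(w, b, x):
    """Signed distance-like score w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def train_linear_svm(rows, labels, lr=0.1, lam=0.01, epochs=20):
    """Return weights w and bias b learned from labeled feature vectors."""
    w = [0.0] * len(rows[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(rows, labels):
            margin = y * decision_value(w, b, x)
            # L2 regularization shrinks the weights at every step.
            w = [wi * (1 - lr * lam) for wi in w]
            if margin < 1:  # sample is misclassified or inside the margin
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
    return w, b

def predict(w, b, x):
    """Classify a feature vector as +1 (malignant) or -1 (benign)."""
    return 1 if decision_value(w, b, x) >= 0 else -1

# Hypothetical standardized features, e.g., [size score, irregularity score].
rows = [[-2, -2], [2, 2], [-2, -1], [2, 1], [-1, -2], [1, 2]]
labels = [-1, 1, -1, 1, -1, 1]

w, b = train_linear_svm(rows, labels)
train_predictions = [predict(w, b, x) for x in rows]
```

The hinge-loss update only changes the weights for samples that violate the margin, which is what makes the learned boundary depend on the samples closest to it.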

Table 1 summarizes some of the most common algorithms utilized for model training in cancer analysis [17].

Table 1. Overview of the key model training algorithms.

3.5. Model Evaluation

In medical applications, false negatives (missing a cancerous region) can have serious implications, so the evaluation must be thorough to ensure reliable clinical performance. The evaluation metrics for the performance of an ML algorithm can be categorized based on the task at hand, whether it is classification or segmentation. The following subsections will delve into the specifics of each.

3.5.1. Classification

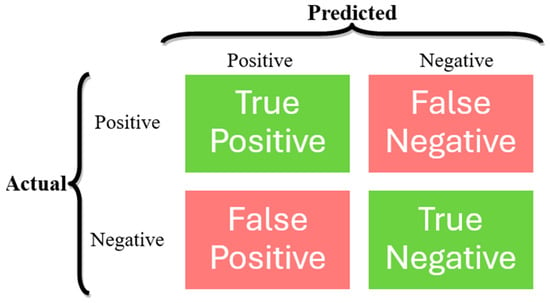

In ML classification tasks, several performance metrics are used to evaluate the effectiveness of a classifier, typically derived from a confusion matrix [24], which is a means to evaluate the performance of a classification model by presenting a summary of the model’s predictions compared to the actual labels in a tabular format, as presented in Figure 3, where:

Figure 3.

Confusion matrix structure.

- ▪

- True Positives (TP): Instances that are correctly predicted as belonging to the positive class.

- ▪

- False Positives (FP): Instances that are incorrectly predicted as belonging to the positive class when they actually belong to the negative class.

- ▪

- True Negatives (TN): Instances that are correctly predicted as belonging to the negative class.

- ▪

- False Negatives (FN): Instances that are incorrectly predicted as belonging to the negative class when they actually belong to the positive class.
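The four confusion matrix entries defined above can be counted directly from paired labels and predictions. The sketch below assumes binary labels with 1 denoting the positive (cancerous) class; the function name and example vectors are hypothetical.

```python
# Count TP, FP, TN, and FN for a binary classifier.
# Label 1 is the positive (cancer) class; example data are hypothetical.

def confusion_counts(y_true, y_pred, positive=1):
    """Return a dict with the four confusion matrix entries."""
    counts = {"TP": 0, "FP": 0, "TN": 0, "FN": 0}
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive and actual == positive:
            counts["TP"] += 1   # correctly flagged as positive
        elif predicted == positive:
            counts["FP"] += 1   # flagged as positive, actually negative
        elif actual == positive:
            counts["FN"] += 1   # missed positive case
        else:
            counts["TN"] += 1   # correctly flagged as negative
    return counts

y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]
counts = confusion_counts(y_true, y_pred)
```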

The most widely used performance metrics for classification problems are accuracy, precision, recall (sensitivity), specificity, F1 score, the PR curve and its area (AUC-PR), and the ROC curve and its area (AUC-ROC), which are described below [24,25].

- Accuracy measures the proportion of correctly classified instances out of the total instances and is calculated as the number of true positives and true negatives divided by the total number of instances:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

- Precision measures the proportion of true positive predictions among all positive predictions made by the classifier and is calculated as the number of true positives divided by the total number of instances predicted as positive:

$$\text{Precision} = \frac{TP}{TP + FP}$$

- Recall or sensitivity measures the proportion of actual positive instances that are correctly identified by the classifier and is calculated as the number of true positives divided by the total number of actual positive instances:

$$\text{Recall} = \frac{TP}{TP + FN}$$

- Specificity measures the proportion of actual negative instances that are correctly identified by the classifier and is calculated as the number of true negatives divided by the total number of actual negative instances:

$$\text{Specificity} = \frac{TN}{TN + FP}$$

- F1 score is the harmonic mean of precision and recall, providing a balance between the two metrics:

$$F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

- Precision–recall (PR) curves plot the precision against the recall for different threshold values used by the classifier to make predictions. Each point on the curve corresponds to a different threshold setting, where a higher threshold typically leads to higher precision but lower recall, and vice versa.

- The area under the PR curve (AUC-PR) summarizes the performance of the classifier across all possible threshold values, with a higher AUC-PR indicating better overall performance in terms of both precision and recall.

- Receiver operating characteristic (ROC) curves plot the recall against the false-positive rate (FPR = FP / (FP + TN), the proportion of false-positive predictions among all actual negative instances) for various threshold values used by the classifier to make predictions. Each point on the curve corresponds to a different threshold setting, where a higher threshold leads to higher specificity but lower recall, and vice versa.

- The area under the ROC curve (AUC-ROC or simply AUC) summarizes the performance of the classifier across all possible threshold values, with a higher AUC indicating better overall performance in terms of both recall and specificity.
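The metrics above can be computed from the confusion matrix counts, and the ROC curve can be traced by sweeping the decision threshold over the predicted scores. The following is a minimal sketch under stated assumptions: the counts and scores are hypothetical, the AUC is approximated with the trapezoidal rule over the swept points, and both classes are assumed to be present in the data.

```python
# Threshold-based metrics and a ROC/AUC computation on hypothetical data.

def classification_metrics(tp, fp, tn, fn):
    """Compute accuracy, precision, recall, specificity, and F1 from counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
    }

def roc_points(y_true, scores):
    """Return (FPR, recall) pairs obtained by lowering the threshold step by step."""
    positives = sum(y_true)
    negatives = len(y_true) - positives
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points

def area_under_curve(points):
    """Trapezoidal area under a curve given as ordered (x, y) points."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))

# Imbalanced toy example: high accuracy despite modest recall.
metrics = classification_metrics(tp=8, fp=2, tn=85, fn=5)

# Predicted cancer probabilities with their true labels.
y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
auc = area_under_curve(roc_points(y_true, scores))
```

In the imbalanced toy example, accuracy is 0.93 while recall is only about 0.62, which illustrates why accuracy alone can be misleading when missed cancers are costly.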

3.5.2. Segmentation

In ML segmentation tasks, key metrics include the following [25,26,27].

- Intersection over union (IoU) measures the overlap between the predicted mask P and the ground-truth mask G by calculating the ratio of the intersection to the union of the two masks:

$$\text{IoU} = \frac{|P \cap G|}{|P \cup G|}$$

- Dice similarity coefficient (DSC) measures the spatial overlap between the predicted segmentation mask and the ground-truth mask and is calculated as twice the intersection of the predicted and ground-truth masks divided by the sum of their volumes:

$$\text{DSC} = \frac{2\,|P \cap G|}{|P| + |G|}$$

- Mean intersection over union (mIoU) measures the average IoU across all C classes or segments in the image:

$$\text{mIoU} = \frac{1}{C} \sum_{c=1}^{C} \text{IoU}_c$$

- Pixel accuracy measures the proportion of correctly classified pixels in the segmentation mask and is calculated as the number of pixels correctly classified divided by the total number of pixels in the image:

$$\text{Pixel accuracy} = \frac{\text{number of correctly classified pixels}}{\text{total number of pixels}}$$

- Mean average precision (mAP) summarizes the precision–recall (PR) curve across multiple classes or categories and is calculated in three steps:

  - For each class or category in the dataset, the PR curve is computed based on the model’s predictions and ground-truth annotations.

  - The average precision (AP), i.e., the area under the PR curve, is computed for each class.

  - The mAP is calculated by taking the mean of the average precision values across all classes in the dataset:

$$\text{mAP} = \frac{1}{C} \sum_{c=1}^{C} \text{AP}_c$$

- Hausdorff distance (HD) measures the similarity between two sets of points in a metric space. It quantifies the maximum distance from a point in one set to the closest point in the other set, and vice versa.

The directed Hausdorff distance from set A to set B, with d(a, b) denoting the distance between points a and b, is defined as:

$$h(A, B) = \max_{a \in A} \min_{b \in B} d(a, b)$$

Similarly, the directed Hausdorff distance from set B to set A is defined as:

$$h(B, A) = \max_{b \in B} \min_{a \in A} d(a, b)$$

The Hausdorff distance between sets A and B is therefore defined as the maximum of the directed Hausdorff distances:

$$H(A, B) = \max\{h(A, B),\, h(B, A)\}$$
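A compact sketch of the overlap and distance metrics described above is given below for binary masks stored as nested lists. It assumes Euclidean distance between pixel coordinates for the Hausdorff distance and non-empty foreground in both masks; the function names and the 3×3 masks are hypothetical.

```python
import math

# Segmentation metrics for binary masks (1 = tumor, 0 = background).
# Masks are nested lists of equal shape; the example masks are hypothetical.

def foreground(mask):
    """Return the set of (row, col) coordinates labeled as foreground."""
    return {(r, c) for r, row in enumerate(mask)
            for c, v in enumerate(row) if v}

def iou(pred, truth):
    """Intersection over union of the two foreground regions."""
    p, g = foreground(pred), foreground(truth)
    union = p | g
    return len(p & g) / len(union) if union else 1.0

def dice(pred, truth):
    """Dice similarity coefficient of the two foreground regions."""
    p, g = foreground(pred), foreground(truth)
    total = len(p) + len(g)
    return 2 * len(p & g) / total if total else 1.0

def pixel_accuracy(pred, truth):
    """Fraction of pixels whose predicted label matches the ground truth."""
    pairs = [(a, b) for rp, rt in zip(pred, truth) for a, b in zip(rp, rt)]
    return sum(a == b for a, b in pairs) / len(pairs)

def directed_hausdorff(a, b):
    """Largest distance from a point in a to its nearest point in b."""
    return max(min(math.hypot(p[0] - q[0], p[1] - q[1]) for q in b) for p in a)

def hausdorff(pred, truth):
    """Symmetric Hausdorff distance; both masks must contain foreground."""
    p, g = foreground(pred), foreground(truth)
    return max(directed_hausdorff(p, g), directed_hausdorff(g, p))

pred = [[0, 1, 1],
        [0, 1, 0],
        [0, 0, 0]]
truth = [[0, 1, 0],
         [0, 1, 1],
         [0, 0, 0]]

scores = {
    "iou": iou(pred, truth),
    "dice": dice(pred, truth),
    "pixel_accuracy": pixel_accuracy(pred, truth),
    "hausdorff": hausdorff(pred, truth),
}
```

For the same pair of masks, the Dice coefficient is never lower than the IoU, so the two values should not be compared directly across studies that report different metrics.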

3.6. Prediction

Once trained and evaluated, the model is deployed to predict cancer presence or segment tumors in new, unseen patient data. The same algorithms used for model training are employed to take in new input data, apply the patterns and parameters learned during training, and output predictions. In general, for classification, the model outputs a probability score indicating the likelihood of cancer, which can aid in early diagnosis, while for segmentation tasks, the model provides a pixel-wise mask outlining the tumor boundaries, assisting radiologists and oncologists in treatment planning.

3.7. Postprocessing

Postprocessing is essential to refine the model’s output into clinically actionable insights. In segmentation, postprocessing might involve smoothing tumor boundaries, removing noise, or filling gaps in the predicted mask to improve visual clarity. For classification, probability scores might be converted into binary labels (cancerous or non-cancerous) based on a decision threshold. Additionally, postprocessing can include combining predictions with other clinical data, generating reports, or highlighting regions of interest on images for further review by medical experts.
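Two of the postprocessing operations mentioned above can be sketched briefly: converting probability scores into binary labels with a decision threshold, and cleaning a predicted mask by discarding small isolated regions. The threshold of 0.5, the minimum region size, 4-connectivity, and all names are illustrative assumptions rather than recommended clinical settings.

```python
# Postprocessing sketches: thresholding scores and removing small mask regions.
# Threshold, minimum size, and example data are hypothetical.

def to_labels(probabilities, threshold=0.5):
    """Convert probability scores to binary labels (1 = cancerous)."""
    return [1 if p >= threshold else 0 for p in probabilities]

def remove_small_components(mask, min_size):
    """Keep only 4-connected foreground regions with at least min_size pixels."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    cleaned = [[0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            if not mask[r][c] or seen[r][c]:
                continue
            # Flood fill to collect one connected component.
            stack, component = [(r, c)], []
            seen[r][c] = True
            while stack:
                y, x = stack.pop()
                component.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            if len(component) >= min_size:
                for y, x in component:
                    cleaned[y][x] = 1
    return cleaned

labels = to_labels([0.91, 0.40, 0.50, 0.07])

raw_mask = [[1, 1, 0, 0],
            [1, 1, 0, 0],
            [0, 0, 0, 1],   # isolated pixel, likely noise
            [0, 0, 0, 0]]
clean_mask = remove_small_components(raw_mask, min_size=2)
```

Lowering the threshold increases recall at the cost of more false positives, so in practice it would be chosen on validation data according to the clinical cost of a missed case.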

A critical aspect of postprocessing in cancer analysis is model interpretability. After an ML model has made its predictions, interpretability techniques can be applied to explain the reasoning behind those predictions. These interpretability methods provide crucial insights, especially in medical applications, where understanding how and why the model arrived at a specific decision is essential for gaining clinical trust and ensuring the transparency of the model. Commonly used interpretability methods include:

- Shapley additive explanation (SHAP) [28] values explain individual predictions by attributing the contribution of each feature (e.g., patient characteristics or image pixels) to the final outcome. SHAP helps clinicians understand which variables had the most significant influence on the prediction.

- Local interpretable model-agnostic explanations (LIME) [29] approximate complex models by perturbing input data slightly and analyzing the effect on predictions. It provides local explanations for individual instances, making it particularly useful in understanding predictions made by complex, black-box models.

- Gradient-weighted class activation mapping (Grad-CAM) [30] generates visual explanations of model decisions by highlighting the regions of an image that were most influential in the model’s decision-making process. This is particularly important in cancer imaging, where clinicians need to verify that the model is focusing on relevant areas when diagnosing or classifying cancer.

- Score-weighted class activation mapping (Score-CAM) [31] provides visual explanations for the decisions made by CNNs, particularly in image classification and object detection tasks. It extends the concept of Grad-CAM by using the activation maps directly from the network to generate class-specific attention maps, but without relying on the gradients.
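To make the attribution idea behind SHAP more concrete, the sketch below computes exact Shapley values for a tiny toy model by enumerating all feature subsets. It is an illustration of the underlying game-theoretic quantity, not the SHAP library's API: `shapley_values`, `toy_model`, and the three feature contributions are hypothetical, and practical tools rely on approximations because exact enumeration grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, n_features):
    """Exact Shapley values for a set function over feature indices."""
    players = range(n_features)
    phi = [0.0] * n_features
    for i in players:
        others = [j for j in players if j != i]
        for size in range(len(others) + 1):
            # Weight of a coalition of this size in the Shapley average.
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi


def toy_model(present):
    """Hypothetical additive risk score over the features that are 'present'."""
    contributions = {0: 0.30, 1: 0.10, 2: -0.05}  # illustrative values only
    return sum(contributions[j] for j in present)


phi = shapley_values(toy_model, 3)
```

For an additive model such as this one, each Shapley value equals the feature's own contribution, and the values always sum to the difference between the full prediction and the empty-coalition baseline.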

4. Literature Review

This section provides an in-depth analysis of existing research and developments in the field of ML-based cancer analysis, with a specific focus on six of the most prevalent and deadly types of cancer: lung, breast, brain, cervical, colorectal, and liver cancers. These specific types of cancer are associated with high mortality rates and are frequently diagnosed at advanced stages. Recent research has demonstrated that early detection plays a crucial role in reducing mortality and improving patient survival. A notable trend observed in recent years is the extensive research efforts dedicated to the detection and diagnosis of these cancers utilizing ML-based techniques. This section aims to review the current state of the art, methodologies, and advancements in utilizing ML techniques for the diagnosis, prognosis, and treatment of these selected cancers. By examining a wide range of studies and approaches within these specific cancer types, this review offers insights into the various ML algorithms, data sources, and evaluation methods used in cancer analysis.

4.1. Lung Cancer

Lung cancer accounts for approximately 14% of the annual new cancer cases in the United States and causes more deaths than breast, prostate, and colon cancers combined [32]. Early-stage lung cancer typically lacks symptoms, leading to late-stage diagnoses. The 5-year survival rate for locally detected lung cancers is 55%, but most patients receive diagnoses at advanced stages, resulting in significantly lower survival rates (overall 5-year survival rate of 18%) [33]. The utilization of ML has the potential to revolutionize the early detection of lung cancer, leading to enhanced accuracy in results and more targeted treatment approaches, which could substantially increase patient survival rates.

Jassim et al. [34] present a transfer DL ensemble model for predicting lung cancer using CT images. The Chest CT-Scan images dataset [35] comprises 1000 CT images across four classes relevant to lung cancer. The classes include adenocarcinoma, large cell carcinoma, squamous cell carcinoma, and normal tissue. The model leverages three DL architectures pre-trained on the ImageNet dataset, namely, EfficientNetB3, ResNet50, and ResNet101, using TL and data augmentation techniques for training. These models are further trained on the lung cancer dataset to fine-tune the weights for specific features relevant to lung cancer classification. Each model configuration includes modifications specific to lung cancer imaging, such as adjustments in layer depths and learning rates. EfficientNetB3 emerged as the top-performing model, displaying the most effective convergence with average precision of 94%, recall of 93%, and F1 score of 93%. In comparison, the ResNet50 model achieved precision, recall, and F1 score values of 88%, 81%, and 81%, respectively, while the ResNet101 model achieved values of 94%, 93%, and 93%, respectively. The maximum accuracy achieved by the models presented in this paper is 99.44%.
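Because average precision, recall, and F1 score recur throughout this review, a short sketch of how such class-averaged values are typically computed may help. The function below uses unweighted (macro) averaging over classes, which is an assumption: the reviewed papers do not always state which averaging scheme they used, and the function name and toy labels are illustrative.

```python
def macro_precision_recall_f1(y_true, y_pred):
    """Macro-averaged precision, recall, and F1 over all observed classes."""
    labels = sorted(set(y_true) | set(y_pred))
    precisions, recalls, f1s = [], [], []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        # Guard against empty denominators for classes never predicted or seen.
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    n = len(labels)
    return sum(precisions) / n, sum(recalls) / n, sum(f1s) / n


# Toy example with two classes.
p, r, f = macro_precision_recall_f1([0, 0, 1, 1], [0, 1, 1, 1])
```

Macro averaging gives every class equal weight, so a rare tumor subtype influences the reported score as much as a common one.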

Muhtasim et al. [36] propose a multi-classification approach for detecting lung nodules using AI on CT scan images from the IQ-OTHNCCD lung cancer dataset [37], consisting of 1190 CT scan images classified into normal, benign, and malignant. It employs TL with the VGG16 model and morphological segmentation to enhance the accuracy and computational efficiency of lung cancer detection. Morphological operations are applied to segment the region of interest and extract distinct morphological features. The classification task implements a DL architecture combined with seven different ML algorithms, namely, decision tree, k-NN, random forest, extra trees, extreme gradient boosting, SVM, and logistic regression, to classify lung nodules into malignant, benign, and normal categories. The proposed stacked ensemble model combines the CNN with the VGG16 TL model to achieve accuracy, precision, recall, and F1 score of 99.55%, 0.996, 0.995, and 0.995, respectively.

Luo [38] introduces the LLC-QE model, an innovative approach combining EL and reinforcement learning to improve lung cancer classification. The model classifies lung cancer into four classes: adenocarcinoma, squamous cell carcinoma, large-cell carcinoma, and small-cell carcinoma. The study utilizes the LIDC-IDRI dataset [39] comprising 1018 CT scans, primarily composed of non-cancer cases, to train and validate the model. To address dataset imbalances, the training employs strategies that involve differential reward systems in reinforcement learning, focusing on underrepresented classes to improve model sensitivity to less frequent cases. The artificial bee colony algorithm is used during pre-training to enhance the initialization of network weights, and a series of CNNs act as feature extractors. The reinforcement learning mechanism views image classification as a series of decisions made by the network within a Markov decision process framework and adopts a nuanced reward system where correct classifications of minority classes receive higher rewards compared to majority classes, encouraging the model to pay more attention to the harder-to-detect instances. The features extracted by individual CNNs are then merged to harness the collective power of multiple models, resulting in an average classification accuracy of 92.9%.
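The differential reward idea can be illustrated with a small sketch. The scaling rule below (reward magnitude inversely proportional to class frequency, with symmetric penalties for errors) is an assumption made for illustration and is not taken from the LLC-QE paper; `build_reward_fn` and the class counts are hypothetical.

```python
def build_reward_fn(class_counts):
    """Return reward(true_label, predicted_label) scaled by class rarity."""
    rarest = min(class_counts.values())

    def reward(true_label, predicted_label):
        # The rarest class gets magnitude 1.0; more frequent classes get
        # proportionally smaller magnitudes (assumed scaling rule).
        magnitude = rarest / class_counts[true_label]
        return magnitude if predicted_label == true_label else -magnitude

    return reward


# Hypothetical class counts for an imbalanced two-class problem.
reward = build_reward_fn({"majority": 900, "minority": 100})
```

Under this scheme a correct minority-class decision earns nine times the reward of a correct majority-class decision, which pushes the agent toward the underrepresented cases.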

Mamun et al. [40] assess the effectiveness of various EL techniques in binary classifying lung cancer using the Lung Cancer dataset [41] from Kaggle, containing 309 instances with 16 attributes. These attributes included various symptoms and patient characteristics, such as age, smoking status, chronic disease, alcohol consumption, coughing, shortness of breath, and chest pain. The ensemble methods applied include XGBoost, LightGBM, Bagging, and AdaBoost. The data underwent preprocessing to handle missing values and balance the dataset using the synthetic minority over-sampling technique (SMOTE). The models were evaluated based on accuracy, precision, recall, F1 score, and AUC. XGBoost performed the best among the tested models, achieving accuracy of 94.42%, precision of 95.66%, and AUC of 98.14%, highlighting its capability in handling imbalanced and complex datasets. The LightGBM, AdaBoost, and Bagging methods achieved accuracy values of 92.55%, 90.70%, and 89.76%, respectively.
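The core interpolation step of SMOTE can be sketched in a few lines. This is a simplified illustration under stated assumptions (Euclidean distance, a fixed random seed, a brute-force neighbour search) and not the preprocessing code used by Mamun et al.; `smote_sketch` and its parameters are hypothetical names.

```python
import random


def smote_sketch(minority, n_new, k=2, seed=0):
    """Create n_new synthetic minority samples by neighbour interpolation."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority neighbours of the base point (excluding itself),
        # ranked by squared Euclidean distance.
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)),
        )[:k]
        neighbour = rng.choice(neighbours)
        # A random point on the segment between base and neighbour.
        gap = rng.random()
        synthetic.append([a + gap * (b - a) for a, b in zip(base, neighbour)])
    return synthetic


minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
new_points = smote_sketch(minority, n_new=5, k=2, seed=0)
```

Oversampling of this kind is normally applied to the training split only, so that synthetic points do not leak into the evaluation data.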

Venkatesh and Raamesh [42] examine the application of EL techniques for predicting lung cancer survivability through binary classification methods using the Surveillance, Epidemiology, and End Results (SEER) dataset [43]. The study evaluates the effectiveness of bagging and AdaBoost ensemble methods combined with k-NN, decision tree, and neural network classifiers. The dataset consists of 1000 samples with 149 attributes initially, which were reduced to 24 attributes after preprocessing, including smoking, gender, air pollution, chronic lung disease, chest pain, wheezing, dry cough, snoring, and swallowing difficulty. The previously mentioned ensemble techniques are used to improve the predictive performance by reducing variance (bagging) and bias (AdaBoost), as well as improving the accuracy of weak classifiers. The outputs from the different models are combined using a systematic voting mechanism to finalize the survival prediction. The results indicate that both bagging and AdaBoost techniques improve the performance of individual models. Specifically, the accuracy scores with bagging and AdaBoost were 0.973 and 0.982 for decision trees, 0.932 and 0.951 for k-NN, and 0.912 and 0.931 for neural networks, respectively. The integrated model achieved an accuracy score of 0.983, surpassing the scores of individual algorithms both with and without ensemble methods.

Said et al. [44] present a system for lung cancer diagnosis using segmentation and binary classification techniques based on DL architectures, specifically utilizing UNETR for segmentation and a self-supervised network for classification. The Decathlon dataset [45], consisting of 96 3D CT scan volumes, is utilized for training and testing. The segmentation part employs the UNETR neural network, a combination of U-Net and transformers, to achieve a DSC of 96.42%. The classification part uses a self-supervised neural network to classify segmented nodules as either benign or malignant, achieving a classification accuracy of 98.77%.

Table 2 provides an overview of lung cancer studies, including model name, dataset details, and preprocessing methods. No interpretability methods were presented in any of these papers.

Table 2.

Summary of lung cancer studies.

4.2. Breast Cancer

Breast cancer is a common type of cancer among women, accounting for approximately 30% of all annual new cancer cases among women [46]. Globally, there were 2.3 million women (11.6% of the total new cases of cancer) diagnosed with breast cancer and 670,000 deaths (6.9% of the total cancer deaths) attributed to the disease in 2022 [47]. Early detection through screening mammography can decrease breast cancer mortality by up to 20% [48]. Breast US faces challenges due to image complexity, including noise, artifacts, and low contrast. Manual analysis by sonographers is time-consuming, subjective, and can result in unintended misdiagnoses due to fatigue. Therefore, the integration of computer-aided detection or AI is crucial for enhancing the accuracy of screening breast US, reducing both false positives and false negatives, and minimizing unnecessary biopsies [49].

Interlenghi et al. [50] developed a radiomics-based ML binary classification model that predicts the BI-RADS category of suspicious breast lesions detected through US with an accuracy of approximately 92%. A dataset of 821 images, comprising 834 suspicious breast masses from 819 patients, was collected retrospectively from US-guided core needle biopsies performed by four certified breast radiologists using six different US systems. The dataset consists of 404 malignant and 430 benign lesions based on histopathology. A balanced image set of biopsy-proven benign and malignant lesions (299 each) is used to train and cross-validate ensembles of ML algorithms supervised by histopathological diagnosis. An ensemble of SVMs, using a majority vote, demonstrated the ability to reduce the biopsy rate of benign lesions by 15% to 18% while maintaining a sensitivity of over 94% in external testing. This model was tested on two additional image sets, resulting in positive predictive values (PPV) of 45.9% and 50.5% and sensitivity of 98.0% and 94.4%, respectively, outperforming the radiologists’ PPVs. The model achieved low error rates in assigning BI-RADS categories, and the radiologists accepted the model’s classifications for most masses, indicating instances where the model performed better than the radiologists by assigning a more accurate BI-RADS classification.

Kavitha et al. [51] propose a novel optimal multi-level thresholding-based segmentation with DL-enabled capsule network (OMLTS-DLCN) model for breast cancer diagnosis and multi-class classification. The model incorporates an adaptive fuzzy-based median filtering procedure to eliminate noise. The segmentation technique employed is the optimal Kapur’s multilevel thresholding with shell game optimization (OKMT-SGO) algorithm, which effectively detects the diseased portion in mammogram images. Feature extraction is performed using the CapsNet model, and the final classification is achieved using the backpropagation neural network (BPNN) model, determining appropriate class labels. The performance evaluation on the Mini-MIAS [52] (322 images) and CBIS-DDSM [53] (13,128 images) datasets, containing normal, benign, and malignant classes, demonstrates that the presented OMLTS-DLCN model outperforms other methods in terms of classification accuracy, with an accuracy of 98.50% and 97.56%, respectively.
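The entropy criterion behind Kapur's thresholding can be shown in its simplest, single-threshold form. The sketch below searches exhaustively over one threshold on a toy histogram; it is a simplification of the multilevel OKMT-SGO procedure described above (which searches over several thresholds with shell game optimization), and the function names and histogram values are illustrative assumptions.

```python
from math import log


def class_entropy(probabilities):
    """Shannon entropy of one class after renormalising its bin probabilities."""
    weight = sum(probabilities)
    if weight == 0:
        return 0.0
    return -sum((p / weight) * log(p / weight) for p in probabilities if p > 0)


def kapur_threshold(histogram):
    """Return t maximising the summed entropy of bins [0, t) and [t, end)."""
    total = sum(histogram)
    probs = [count / total for count in histogram]
    best_t, best_score = None, float("-inf")
    for t in range(1, len(probs)):
        score = class_entropy(probs[:t]) + class_entropy(probs[t:])
        # Strict comparison keeps the first threshold among exact ties.
        if score > best_score:
            best_t, best_score = t, score
    return best_t


# Toy bimodal histogram: dark bins on the left, bright bins on the right.
t = kapur_threshold([10, 10, 0, 0, 0, 0, 10, 10])
```

With several thresholds the search space grows combinatorially, which is why metaheuristic optimizers are commonly paired with this criterion.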

Chen et al. [54] introduce a novel approach called DSEU-Net (squeeze-and-excitation (SE) attention U-Net with deep supervision) for the segmentation of medical US images. The proposed method combines several key elements to enhance the accuracy and robustness of the segmentation process. Firstly, a deeper U-Net architecture is employed as a benchmark network to effectively capture the intricate features present in complex US images. Next, the SE block is integrated as a bridge between the encoder and decoder, allowing for enhanced attention on relevant object regions. The SE block not only strengthens the connections between distant but useful information but also suppresses the introduction of irrelevant information, improving the overall segmentation quality. Furthermore, deep supervised constraints are incorporated into the decoding stage of the network to refine the prediction masks of US images, which further improves the accuracy and reliability of the segmentation results. The performance of DSEU-Net in US image segmentation was evaluated using extensive experiments on two clinical US breast datasets. Specifically, when applied to the first dataset (BUSI [55]), DSEU-Net achieved IOU, precision, recall, specificity, DSC, and accuracy values of 70.36%, 79.73%, 82.70%, 97.42%, 78.51%, and 95.81%, respectively. Similarly, for the second dataset, the method achieved Jaccard coefficient, precision, recall, specificity, and DSC values of 73.17%, 82.58%, 84.02%, 99.05%, and 81.50%, respectively. These results demonstrate the significant improvement of DSEU-Net over the original U-Net, with an average increase of 8.28% and 12.55% on the five evaluation metrics for the two breast US datasets.
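The two overlap metrics reported above, the DSC and the IOU (also called the Jaccard coefficient), can be computed from a predicted and a ground-truth mask as sketched below. The flat 0/1 list representation and the convention of returning 1.0 for two empty masks are assumptions made for this example.

```python
def dice_and_iou(pred, target):
    """Dice similarity coefficient and IoU for two flat binary masks."""
    intersection = sum(p * t for p, t in zip(pred, target))
    pred_area, target_area = sum(pred), sum(target)
    union = pred_area + target_area - intersection
    if union == 0:
        # Both masks are empty; treating this as perfect agreement is a convention.
        return 1.0, 1.0
    dice = 2 * intersection / (pred_area + target_area)
    iou = intersection / union
    return dice, iou


dice, iou = dice_and_iou([1, 1, 0, 0], [1, 0, 1, 0])
```

For a single pair of masks the two metrics are linked by DSC = 2·IoU / (1 + IoU); values averaged over many images, as usually reported in papers, need not satisfy this identity.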

Dogiwal’s paper [56] investigates the effectiveness of supervised ML techniques in predicting breast cancer using histopathological data. The dataset used in this study was sourced from the UCI Machine Learning Repository, specifically the Breast Cancer Wisconsin (Diagnostic) dataset [57], which consists of 699 samples with 458 benign (65.5%) and 241 malignant (34.5%) instances with 32 attributes. This study utilizes PCA to reduce the number of dimensions while preserving the most significant information. The approach also involves feature engineering to enhance model performance by selecting the most relevant features. The study focuses on the application of three prominent algorithms, namely, random forest, logistic regression, and SVM, for breast cancer binary classification. The random forest algorithm achieved an accuracy of 98.6%, with precision and recall scores of 0.99 and 0.98, respectively. In comparison, the logistic regression algorithm attained an accuracy of 94.41%, with precision and recall scores both at 0.94. Similarly, the SVM algorithm yielded an accuracy of 93.71%, with precision and recall scores also at 0.93.

Al-Azzam and Shatnawi’s study [58] evaluates the effectiveness of both SL and semi-SL algorithms in diagnosing breast cancer using the Breast Cancer Wisconsin (Diagnostic) dataset [57], containing 569 instances with 30 features extracted from digital images of fine-needle aspirates (FNAs) of breast masses. The dataset is split into training (80%) and testing (20%) sets for both approaches; for the semi-SL algorithm, the training data was further divided into 50% labeled data and 50% unlabeled data. Several models, such as logistic regression, Gaussian naïve Bayes, SVM (both linear and RBF), decision tree, random forest, XGBoost, GBM, and k-NN, are trained using the labeled dataset for both approaches. All algorithms demonstrated strong performance on the test data, with only a minimal disparity in accuracy observed between the SL and semi-SL techniques. Results showed that logistic regression (SL = 97%, SSL = 98%) and k-NN (SL = 98%, SSL = 97%) achieved the highest accuracy for classifying malignant and benign tumors.

Ayana et al. [59] explore a novel multistage TL (MSTL) approach tailored for US breast cancer image binary classification. The dataset consists of 20,400 cancer cell line microscopic images and US images from two datasets: Mendeley [60] (200 images) and MT-Small-Dataset [55,61] (400 images). The presented TL approach begins with a pre-trained model on ImageNet, adapted to cancer cell line microscopic images. This stage involves fine-tuning the model to recognize features relevant to medical contexts, particularly those that are morphologically similar to features in US images. Then, it uses the tuned model as a base to further train on US breast cancer images. This two-step process allows the model to refine its ability to differentiate between malignant and benign features with higher accuracy. The study employs three different CNN models: EfficientNetB2, InceptionV3, and ResNet50. The best performance was achieved using the ResNet50 with the Adagrad optimizer, resulting in a test accuracy of 99.0% on the Mendeley dataset and 98.7% on the MT-Small-Dataset.

Umer et al. [61] propose an innovative approach for breast cancer binary classification using a combination of convoluted features extracted through DL techniques and an ensemble of ML algorithms. The study employs a custom CNN model designed to extract deep convoluted features from mammographic images from the Breast Cancer Wisconsin (Diagnostic) dataset [57] consisting of 32 features. After feature extraction, multiple ML algorithms, such as random forest, decision trees, SVM, and k-NN, are employed to classify the images into benign or malignant. The final classification is determined through a majority voting system where each algorithm contributes equally to the decision-making process. The dataset was split into 70% training and 30% testing. This ensemble approach reduces the likelihood of misclassification by leveraging the diverse strengths of different algorithms, reaching accuracy of 99.89%, precision of 99.89%, recall of 99.92%, and F1 score of 99.90%.
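An equal-weight majority vote of the kind described above can be sketched as follows. The per-model predictions are made-up toy values and `majority_vote` is a hypothetical helper, not code from the study.

```python
from collections import Counter


def majority_vote(predictions_per_model):
    """Fuse per-model label lists (one list per model) by equal-weight voting."""
    fused = []
    # zip(*...) regroups the predictions so each tuple holds one sample's votes.
    for votes in zip(*predictions_per_model):
        # most_common(1) returns the label with the highest vote count.
        fused.append(Counter(votes).most_common(1)[0][0])
    return fused


# Three hypothetical classifiers voting on three samples (1 = malignant).
fused = majority_vote([[1, 0, 1], [1, 1, 0], [0, 0, 1]])
```

Using an odd number of voters for a binary task avoids ties; with an even number, a tie-breaking rule has to be chosen explicitly.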

The study conducted by Hekal et al. [62] proposes an ensemble DL system designed for binary classification of breast cancer. The system processes suspected nodule regions (SNRs) extracted from mammogram images, utilizing four different TL CNNs and subsequent binary SVM classifiers. The study uses the CBIS-DDSM [53] dataset, which provides 3549 region-of-interest (ROI) images with both mass and calcification cases containing annotations for both malignant and benign findings. SNRs are extracted from ROI images using an optimal dynamic thresholding method specifically tailored to adjust the threshold based on the detailed characteristics of each image. Four CNN architectures, namely, AlexNet, DenseNet-201, ResNet-50, and ResNet-101, are used and followed by a binary SVM classifier that determines if the processed SNRs are malignant or benign. The outputs from each CNN-SVM pipeline are combined using a first-order momentum method, which considers the training accuracies of the individual models. This fusion approach is designed to enhance decision-making by weighting the contribution of each model based on its performance. The proposed ensemble DL system achieved an accuracy of 94% for distinguishing benign and malignant cases and 95% for distinguishing between benign and malignant masses.

Deb et al. [63] provide a detailed analysis of segmenting mammogram images using DL architectures, specifically U-Net and BCDU-Net, which is an advanced variant of U-Net that incorporates bidirectional ConvLSTM and densely connected convolutional blocks. The INBreast dataset [64] is utilized, comprising 410 mammograms from 115 patients. Initial experiments are conducted on full mammograms to segment regions indicative of potential masses. Further experiments focus on ROIs extracted from mammograms, where the network segments smaller, more focused areas. Both U-Net and BCDU-Net are evaluated on their ability to segment whole mammograms and ROIs. The models achieved a DSC of 0.8376 and IOU of 0.7872 for the full mammogram, while for the ROI segmentation, they reported a DSC of 0.8723 and IOU of 0.8098. The study concludes that BCDU-Net provides better segmentation results, especially when focusing on ROIs.

Haris et al. [65] introduce a novel approach for breast cancer segmentation using a combination of Harris hawks optimization (HHO) with cuckoo search (CS) and an SVM classifier that aims to optimize segmentation by fine-tuning hyperparameters for enhanced accuracy in mammographic image analysis. The study utilizes the CBIS-DDSM [53] dataset. The hybrid model starts with initializing a population of hawks in the image matrix, where each hawk represents a potential solution. The fitness of each hawk is evaluated based on pixel intensity and neighboring intensities. This evaluation guides the optimization process, focusing on improving segmentation accuracy. The hybrid approach leverages the strengths of both HHO and CS, enabling a dynamic adjustment between exploration and exploitation phases, ultimately fine-tuning SVM parameters for optimal segmentation. The study concludes that the integration of HHO and CS with SVM significantly improves breast cancer segmentation in mammographic images, demonstrating an accuracy of 98.93%, a DSC of 98.77%, and an IOU of 97.68%.

Table 3 provides a concise overview of each paper’s details, including the model types, data preprocessing, and dataset characteristics. No interpretability methods were presented in any of these papers.

Table 3.

Summary of breast cancer studies.

4.3. Brain Cancer

Brain cancer ranks as the leading cause of cancer death for females aged 20 and younger, as well as males aged 40 and younger [66]. Each year, approximately 13,000 cases of glioblastoma are diagnosed in the United States, corresponding to an incidence rate of 3.2 per 100,000 individuals [67]. Given the pressing demand for precise and automated analysis of brain tumors, coupled with the exponential expansion of clinical imaging data, the significance of image-based ML techniques is steadily escalating.

Khan et al. [68] present an advanced method for detecting and segmenting brain tumors using a region-based CNN (RCNN). This approach utilizes MRI images from the BraTS 2020 dataset [69,70,71], which contains a total of 369 training, 125 validation, and 169 test multi-modal MRI studies, to identify and delineate tumor regions. The proposed approach consists of three phases: preprocessing, tumor localization using the RCNN, and segmentation using an active contour algorithm. The RCNN, which integrates region proposal mechanisms with CNNs, is employed to accurately localize and segment tumors in brain MRI scans. It makes use of an AlexNet pre-trained network to extract features from regions of interest within the brain scans, which are then used to identify and segment the tumors. After the initial detection and rough segmentation by the RCNN, the active contour model, also known as “snakes,” is used for precise segmentation. This method refines the boundaries of the tumor by minimizing an energy function that delineates the tumor’s shape more accurately. The proposed system achieved a mean average precision of 0.92 for tumor localization performance and an average DSC of 0.92 for tumor segmentation performance, demonstrating an accuracy of 88.9%.

Sharma et al. [72] introduce a hybrid multilevel thresholding image segmentation method for brain MRI images from the publicly available Figshare database [73] using a novel dynamic opposite bald eagle search (DOBES) optimization algorithm. The brain MRI dataset contains T1-weighted images of 233 patients, categorized into meningioma, glioma, and pituitary tumors. The DOBES algorithm is an enhancement of the traditional bald eagle search (BES) algorithm, incorporating dynamic opposition learning (DOL) to improve initialization and exploitation phases, aiming to avoid local optima and enhance convergence speed. This algorithm is used for selecting optimal multilevel threshold values for image segmentation to accurately isolate tumor regions from normal brain tissues. The segmentation process combines optimized thresholding with morphological operations to refine the segmented images, removing noise and non-tumor areas to enhance the clarity and accuracy of tumor delineation. The results showed structural similarity indices of 0.9997, 0.9999, and 0.9998 for meningioma, glioma, and pituitary tumors, respectively, with an average accuracy of 99.98%.

A study presented by Ngo et al. [74] explores an advanced approach to brain tumor segmentation, particularly focusing on small tumors, which are often challenging to detect and delineate accurately in medical imaging. It utilizes multi-task learning, integrating feature reconstruction tasks alongside the main segmentation task, and makes use of the BraTS 2018 dataset [69,70,71], which includes 3D MRI scans of 285 patients for training and 66 patients for validation. The primary task is the segmentation of brain tumors using a U-Net-based architecture modified for 3D analysis to accommodate the full complexity of brain structures in MRI. An auxiliary task of feature reconstruction using an autoencoder-like module called U-Module is implemented. The U-Module helps retain critical features that are often lost during down-sampling in traditional CNN architectures, therefore preserving important features through the encoding–decoding process, which is particularly useful for capturing the characteristics of small tumors. The model’s effectiveness is evaluated using the DSC, which showed a value of 0.4499 for tumors smaller than 2000 voxels. For overall segmentation performance, the model achieved 81.82% DSC for enhancing tumors, 89.75% for tumor cores, and 84.05% for whole tumors.

Ullah et al. [75] introduce an evolutionary lightweight model for the grading and classification of brain cancer using MRI images. It is a multi-class classification task where the brain tumors are categorized into four grades (I, II, III, and IV). The model, which combines weighted average and lightweight XGBoost decision trees, is a modified version of multimodal lightweight XGBoost. Features such as intensity, texture, and shape were extracted from the MRI images. Intensity features include mean intensity, standard deviation, skewness, and kurtosis. Texture features are derived using the gray-level co-occurrence matrix (GLCM) method, while shape features include area, perimeter, and eccentricity. The proposed lightweight XGBoost model is an ensemble of multiple XGBoost decision trees. Each tree in the ensemble is trained on different subsets of the data and with different hyperparameters to capture diverse patterns and improve generalization. The XGBoost algorithm constructs decision trees iteratively, optimizing for a specific loss function, and their predictions are then combined using a weighted average approach. Using the BraTS 2020 dataset [69,70,71], which includes 285 MRI scans of patients with gliomas, the proposed model achieved an accuracy of 93.0%, precision of 0.94, recall of 0.93, F1 score of 0.94, and an AUC value of 0.984.
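The four intensity features named above can be computed from the pixel values of a region as in the sketch below. It uses population (biased) moment definitions and non-excess kurtosis, which is an assumption; the study does not state which conventions it followed, and `intensity_features` is a hypothetical helper.

```python
from math import sqrt


def intensity_features(pixels):
    """Mean, standard deviation, skewness, and kurtosis of pixel intensities."""
    n = len(pixels)
    mean = sum(pixels) / n
    variance = sum((p - mean) ** 2 for p in pixels) / n  # population variance
    std = sqrt(variance)
    if std == 0:
        # A constant region has no defined shape statistics; report zeros.
        skewness = kurtosis = 0.0
    else:
        skewness = sum((p - mean) ** 3 for p in pixels) / (n * std ** 3)
        kurtosis = sum((p - mean) ** 4 for p in pixels) / (n * std ** 4)
    return {"mean": mean, "std": std, "skewness": skewness, "kurtosis": kurtosis}


features = intensity_features([1, 2, 3, 4, 5])
```

Feature vectors of this kind, concatenated with texture and shape descriptors, are what a tree-based classifier such as XGBoost would then receive as input.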

Saha et al. [76] propose the BCM-VEMT model, which integrates DL and EL techniques for accurate multi-class classification of brain tumors from MRI images. The model combines various DL methods to improve the detection and classification accuracy of brain cancer. The paper utilizes a dataset comprising MRI scans obtained from three publicly available sources, namely, Figshare’s Brain Tumor dataset [77], Kaggle’s Brain MRI Images for Brain Tumor Detection [78], and Brain Tumor Classification (MRI) [79] datasets. The final dataset includes 3787 MRI images divided into four classes (glioma, meningioma, pituitary, and normal) and is preprocessed using techniques like normalization, skull stripping, and image augmentation to ensure uniformity and enhance the training process. Next, significant features are extracted from the MRI images, focusing on intensity, texture, and shape. The model employs CNNs to automatically learn and extract features from the MRI images. To further enhance accuracy, the study combines multiple ML models, including SVMs and random forests, in an ensemble approach. The model achieved 97.90% accuracy for glioma, 98.94% for meningioma, 98.92% for pituitary, and 98.00% for normal cases, resulting in an overall accuracy of 98.42%.

Table 4 provides a structured overview of the previously presented studies. No interpretability methods were presented in any of these papers.

Table 4.

Summary of brain cancer studies.

4.4. Cervical Cancer

Cervical cancer, which has claimed the lives of millions of women globally, ranks as the third-leading cause of cancer-related mortality in women [1]. There were 661,044 women diagnosed with cervical cancer and 348,186 deaths attributed to the disease in 2022 [47]. Given that the Papanicolaou (Pap) test can diagnose precancerous lesions, regular screenings are essential to mitigate the significant risks associated with cervical cancer [80]. However, traditional cervical cell screening heavily relies on pathologists’ expertise, resulting in low efficiency and accuracy. Medical image processing combined with ML and DL techniques offers a notable advantage in the classification and detection of cervical cancerous cells, surpassing traditional methods in terms of effectiveness and precision.

Zhang et al. [81] proposed DeepPap, a deep CNN model designed for the binary classification of cervical cells into “normal” and “abnormal” categories. The study utilized two datasets: the Herlev [82] dataset, consisting of 917 cervical cell images across seven classes, and the HEMLBC [83] dataset, which includes 989 abnormal cells and 1381 normal cells. The DeepPap architecture leverages TL using a pre-trained network on the ImageNet dataset, followed by fine-tuning on cervical cell images. This architecture includes several convolutional layers for feature extraction, pooling layers for downsampling, and fully connected layers for final classification. The model achieved a classification accuracy of 98.3% and an AUC of 0.99.

A study presented by Guo et al. [84] employed an unsupervised DL registration approach to align cervix images taken during colposcopic examinations. It utilizes uterine cervix images collected from four different databases, namely, CVT [85,86] (3398 images), ALTS [87] (939 images), Kaggle [88] (1950 images), and DYSIS (5100 images). These datasets consist of cervix images captured at different time intervals during the application of acetic acid, covering various conditions and imaging variations, such as changes in cervix positioning, lighting intensity, and texture. The focus is on using DL architectures employing transformers, such as DeTr, for object detection. The architecture comprises a backbone network for feature extraction, a transformer encoder–decoder module, and prediction heads. The encoder–decoder module includes 3 encoder layers, 3 decoder layers, and 4 attention heads, with a feedforward network (FFN) of 256 layers and an embedding size of 128. The model employs 20 object query slots to accommodate the varying number of objects in each image. The training strategy involves two stages: initially training a DeTr-based object detection network, then replacing the bounding box prediction heads with a mask prediction head and training the network with mask ground truth. The segmentation network derived from this process is utilized to extract cervix region boundaries from original and registered images for performance evaluation of the registration network. The segmentation approach was then applied to registered time sequences, achieving Dice/IoU scores of 0.917/0.870 and 0.938/0.885 on two datasets, resulting in a 12.62% increase in Dice scores for cervix boundary detection compared to unregistered images.

Angara et al. [89] focus on enhancing the binary classification of cervical precancer using semi-SL techniques applied to cervical photographic images. The study utilizes data derived from two large studies conducted by the U.S. National Cancer Institute, namely, ALTS [87] and the Guanacaste Natural History Study (NHS). The combined dataset consists of 3384 labeled images and over 26,000 unlabeled images. The authors employ novel data augmentation techniques, such as random sun flares and grid drop, to tackle challenges such as specular reflections in cervix images. The semi-SL framework employs the ResNeSt50 architecture, whose split-attention block groups feature maps and applies attention within each group so that the network can focus on the relevant features of an image. The network is initialized from a model pre-trained on ImageNet in order to reuse features learned for general visual recognition tasks. In the semi-supervised approach, a teacher model trained on the available labeled data generates pseudo-labels for the unlabeled images. These pseudo-labels are then used to train a student model, which thus learns from both labeled and unlabeled data. The student model's predictions refine the training process iteratively, progressively improving the model's ability to classify new and unseen images. The model is evaluated using accuracy, precision, recall, and F1 score, which reach 82%, 0.84, 0.57, and 0.68 on the test set, respectively. Test accuracy improves from 76.81% with ImageNet TL alone to 82.02% with the semi-supervised method.
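The teacher-student pseudo-labeling loop described above can be sketched in a few lines. The example below is a deliberately simplified illustration, not the authors' pipeline: it replaces the deep network with a toy nearest-centroid classifier on scalar features, runs a single round, and keeps only pseudo-labels whose margin exceeds a hypothetical `min_margin` threshold (confidence filtering is an assumption here; the study's selection rule is not described in this review).

```python
from statistics import mean


def fit_centroids(xs, ys):
    """Train a toy nearest-centroid classifier: one mean feature value per class (0 or 1)."""
    return {c: mean(x for x, y in zip(xs, ys) if y == c) for c in (0, 1)}


def predict(centroids, x):
    """Return (label, margin): the nearer class and how much nearer it is."""
    d0, d1 = abs(x - centroids[0]), abs(x - centroids[1])
    return (0 if d0 <= d1 else 1), abs(d0 - d1)


def pseudo_label_round(labeled_x, labeled_y, unlabeled_x, min_margin):
    """One teacher-student round; returns (student_model, kept_pseudo_labels)."""
    # 1. Teacher: trained on the labeled data only.
    teacher = fit_centroids(labeled_x, labeled_y)
    # 2. Pseudo-label the unlabeled pool, keeping only confident predictions.
    scored = [(x, *predict(teacher, x)) for x in unlabeled_x]
    kept = [(x, label) for x, label, margin in scored if margin >= min_margin]
    # 3. Student: trained on labeled plus pseudo-labeled samples.
    student_x = labeled_x + [x for x, _ in kept]
    student_y = labeled_y + [label for _, label in kept]
    return fit_centroids(student_x, student_y), kept


student, kept = pseudo_label_round(
    labeled_x=[0.1, 0.2, 0.8, 0.9],
    labeled_y=[0, 0, 1, 1],
    unlabeled_x=[0.0, 0.15, 0.5, 0.85, 1.0],
    min_margin=0.3,
)
```

In this toy run the ambiguous sample at 0.5 is discarded, while the four confident samples are added to the student's training set. An iterative variant would reuse the student as the next teacher.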

Kudva et al.’s paper [90] presents a novel hybrid TL (HTL) approach that integrates DL techniques with traditional image processing to improve the binary classification of uterine cervix images for cervical cancer screening. The study used 2198 cervix images, comprising 1090 negative and 1108 positive cases, sourced from Kasturba Medical College and the National Cancer Institute. The study first identified relevant filters from pre-trained models (AlexNet and VGG-16) that were effective in highlighting cervical features, particularly acetowhite regions. Two shallow-layer CNN models were developed: CNN-1, which incorporated the selected filters that were resized and adapted to the specific dimensions required for the initial convolutional layers of the CNN, and CNN-2, which included an additional step of adapting filters from the second convolutional layers of AlexNet and VGG-16, providing deeper and more detailed feature extraction capabilities. The results show that the HTL approach outperformed traditional methods that rely solely on either full training of deep CNNs or basic ML techniques, achieving an accuracy of 91.46%, sensitivity of 89.16%, and specificity of 93.83%.

Ahishakiye et al. [91] focus on a binary classification task to predict cervical cancer based on risk factors using EL techniques. The dataset was sourced from the UCI Machine Learning Repository and included records for 858 patients with 36 attributes related to cervical cancer risk factors. Feature selection retained the five predictors deemed most influential for predicting cervical cancer based on previous studies and expert recommendations. The base classifiers combined in the ensemble were k-NN, classification and regression trees (CARTs), a naïve Bayes classifier, and an SVM. The models were integrated using a voting ensemble method, with the final prediction determined by majority vote. The proposed ensemble model achieved an accuracy of 87.21%, suggesting its potential as a diagnostic tool in clinical settings.
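As a minimal sketch of the hard (majority) voting step, the function below combines the class labels predicted by several base classifiers for one patient. The tie-breaking rule (the earliest-listed model among the tied labels wins) is an assumption made for illustration; with four base classifiers ties are possible, and this review does not state how the study resolved them.

```python
from collections import Counter


def majority_vote(predictions):
    """Combine per-model class labels for one sample by hard (majority) voting.

    `predictions` is an ordered list of labels, one per base classifier.
    Ties are broken in favor of the label that appears first in the list
    (an illustrative assumption).
    """
    if not predictions:
        raise ValueError("at least one prediction is required")
    counts = Counter(predictions)
    top = max(counts.values())
    for label in predictions:
        if counts[label] == top:
            return label


# Hypothetical votes from k-NN, CART, naive Bayes, and SVM for one patient.
votes = [1, 0, 1, 1]
final_label = majority_vote(votes)   # three of four models vote for class 1
```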

Hodneland et al. [92] examine a fully automatic method for whole-volume tumor segmentation in cervical cancer using advanced DL techniques. The study included 131 patients with uterine cervical cancer who underwent pretreatment pelvic MRI. The dataset was divided into 90 patients for training, 15 for validation, and 26 for testing. The performance of the proposed enhanced residual U-Net architecture was assessed using the DSC, comparing the DL-generated segmentations against those produced by two human radiologists. The DL algorithm achieved median DSCs of 0.60 and 0.58 when compared to the two radiologists, respectively, while the inter-rater DSC was 0.78, indicating that the automatic segmentations agree with each expert less closely than the experts agree with each other.

Table 5 provides an overview of the previously presented articles, including the data type, preprocessing methods, and model interpretability information.

Table 5.

Summary of cervical cancer studies.

4.5. Colorectal Cancer

Colorectal cancer (CRC) is the second-deadliest cancer globally [93], with its incidence and mortality expected to rise significantly in the coming decades. When detected early, CRC can often be cured through surgery and medication, but access to early diagnosis and treatment is concentrated in developed countries and remains scarce in developing regions. Globally, 1.9 million people were diagnosed with CRC in 2022 (9.6% of all new cancer cases), and 900,000 deaths (9.3% of all cancer deaths) were attributed to the disease [47]. ML can enhance the diagnosis and survival prediction of CRC in several ways. For example, ML algorithms can be trained to analyze histopathological images or real-time colonoscopy videos to identify polyps or other abnormalities, supporting more accurate diagnoses and more effective treatment strategies.

The core of Guo et al.’s study [94] is the development of RK-net, which combines UL techniques with DL to optimize the preprocessing and feature extraction processes for colorectal cancer diagnosis. The study uses imaging and clinical information stored in the standardized DICOM format from 360 colorectal cancer patients, divided into 300 patients for training and 60 patients for testing. The images are first preprocessed: they are normalized for intensity and resized to fit the input requirements of the network. The unsupervised component is a k-means clustering algorithm, which segments the images into clusters based on similarities in texture, color, and spatial characteristics, effectively isolating relevant features from the background and noise. RK-net then takes the clustered images, performs feature extraction using the MobileNetV2 architecture, and learns to identify and prioritize the features that are most indicative of cancerous tissue. By focusing only on relevant clusters, the UL component reduces the amount of training data, thereby decreasing computational costs and speeding up training. RK-net reduced training time by up to 50% compared to traditional methods and achieved 95% accuracy in differentiating two types of colorectal cancer.
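To illustrate the unsupervised clustering step, the sketch below runs k-means (Lloyd's algorithm) on scalar pixel intensities. This is a simplification under stated assumptions: the study clusters on texture, color, and spatial characteristics, whereas the example uses grayscale intensity alone, and the evenly spaced centroid initialization and the function name `kmeans_1d` are illustrative choices rather than details from the paper.

```python
def kmeans_1d(values, k, iters=20):
    """Lloyd's algorithm on scalar values; returns (centroids, assignments)."""
    lo, hi = min(values), max(values)
    # Deterministic initialization (assumed): spread centroids evenly over the value range.
    centroids = [lo + (hi - lo) * (i + 0.5) / k for i in range(k)]
    assign = [0] * len(values)
    for _ in range(iters):
        # Assignment step: each value joins its nearest centroid.
        assign = [min(range(k), key=lambda c: abs(v - centroids[c])) for v in values]
        # Update step: each centroid moves to the mean of its members.
        new = []
        for c in range(k):
            members = [v for v, a in zip(values, assign) if a == c]
            new.append(sum(members) / len(members) if members else centroids[c])
        if new == centroids:   # converged: centroids no longer move
            break
        centroids = new
    return centroids, assign


# Toy "image": dark background pixels and a bright region of interest.
pixels = [12, 15, 10, 200, 210, 205, 14, 198]
centroids, labels = kmeans_1d(pixels, k=2)
# centroids == [12.75, 203.25]; labels mark each pixel as background (0) or bright (1)
```

Keeping only the pixels assigned to the cluster of interest is one way such a step could shrink the data passed to the downstream network.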

Zhou et al. [95] introduce a weakly supervised DL approach for classifying and localizing colorectal cancer in histopathology images using only global labels. The proposed DL model classifies whole-slide images (WSIs) into multiple classes, including different stages of colorectal cancer and normal tissue. The study utilizes two datasets: 1346 WSIs, including 134 normal and 1212 cancerous images, from the Cancer Genome Atlas (TCGA) and 50 newly collected WSIs from three hospitals. The study experiments with various CNN architectures, including ResNet, which was chosen due to its superior performance in patch-based classification tasks, and designs three frameworks. The image-level framework processes low-resolution thumbnails of WSIs to predict the cancerous probability of tissues by examining the entire image, which mimics the preliminary evaluations typically performed by pathologists. The cell-level framework focuses on detailed pathological information present at the cellular level and employs a CNN model to distinguish between cancerous and non-cancerous cells based on features extracted from normal tissue samples, thus avoiding the need for precise cancer annotations. The combination framework combines features from both the image-level and cell-level frameworks to improve diagnostic accuracy. The model achieved 94.6% accuracy on the TCGA dataset and 92.0% accuracy on the external dataset.

Venkatayogi et al. [96] propose a novel approach for the multi-class classification of colorectal cancer polyps using TL and a vision-based surface tactile sensor (VS-TS). The research team designed and additively manufactured 48 types of realistic polyp phantoms, varying in hardness, type, and texture, to mimic real CRC polyps. The VS-TS consists of a deformable silicone membrane, an optics module, and an array of LEDs that illuminate the polyp during imaging. The sensor detects the deformation of the silicone upon contact with a polyp phantom, creating a detailed textural image. Each phantom is systematically pressed against the sensor to record the textural patterns that characterize different polyp types, and the resulting images are augmented through rotation and flipping to yield a total of 384 samples. A ResNet-18 network pre-trained on the ImageNet dataset and an SVM algorithm are employed to classify the polyps based on the textural images produced by the VS-TS. Two versions of ResNet-18 are compared: one initialized with random weights (ResNet1) and the other pre-trained on ImageNet (ResNet2). ResNet2 reached a test accuracy of 91.93%, far above the 54.95% achieved by ResNet1.
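Going from 48 phantoms to 384 samples corresponds to an eightfold augmentation. One common scheme that yields exactly eight variants per image is the set of four 90-degree rotations applied to the image and to its mirror image. The sketch below illustrates that scheme on a 2D list. Whether the study used these exact transformations is an assumption, as the review only states that rotation and flipping were applied.

```python
def rotate90(img):
    """Rotate a 2D list 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def flip_h(img):
    """Mirror a 2D list left to right."""
    return [row[::-1] for row in img]


def eight_variants(img):
    """Return the 4 rotations of img followed by the 4 rotations of its mirror image."""
    variants = []
    for base in (img, flip_h(img)):
        current = [list(row) for row in base]   # copy so the input is not aliased
        for _ in range(4):
            variants.append(current)
            current = rotate90(current)
    return variants


tile = [[1, 2],
        [3, 4]]
augmented = eight_variants(tile)
total_samples = 48 * len(augmented)   # 48 phantoms, 8 variants each
```

For an image without internal symmetry, the eight variants are all distinct, so no duplicate samples are introduced.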

Tamang et al. [97] present a TL-based binary classifier to distinguish between tumor and stroma in colorectal cancer patients. The histological images come from a publicly available dataset by Kather et al. [98], which contains 5000 tissue tiles of colorectal cancer histological images. The TL framework employs four different CNN architectures, namely, VGG19, EfficientNetB1, InceptionResNetV2, and DenseNet121, which are pre-trained on the ImageNet dataset. The bottleneck layer features (deep features just before the fully connected layers) from these models are used, as this layer typically contains rich feature representations that are broadly applicable across different tasks, including medical imaging. The classifier is then trained on the CRC dataset while the pre-trained layers are kept frozen to retain the learned features. The VGG19, EfficientNetB1, and InceptionResNetV2 architectures achieved accuracies of 96.4%, 96.87%, and 97.65%, respectively, surpassing the reference values presented in the study. These results indicate that TL with pre-trained CNNs enhances the ability to classify tumor and stroma regions in colorectal cancer histological images.

Liu et al. [99] explore the application of Fovea-UNet, a DL model inspired by the fovea of the human eye, for the detection and segmentation of lymph node metastases (LNM) in colorectal cancer using WSIs. The study used a dataset containing 81 WSIs of LNM, with a total of 624 metastatic regions that were manually extracted and annotated. The dataset was divided into a training set with 57 WSIs (451 metastatic regions) and a test set with 24 WSIs (173 metastatic regions). The architecture includes an importance-aware module that adjusts the pooling operation based on feature relevance, enhancing the model’s focus on significant areas. Specifically, the authors introduce a novel pooling method that adjusts the pooling radius based on pixel-level importance, helping to aggregate detailed and non-local contextual information effectively. Feature extraction relies on a lightweight GhostNet backbone modified with a feature-based regularization strategy, which reduces computational demands while maintaining feature extraction efficiency. The proposed model achieved an IoU of 79.38% and a DSC of 88.51%, outperforming other state-of-the-art models.

Fang et al. [100] developed an area-boundary constraint network (ABC-Net) for segmenting colorectal polyps in colonoscopy images. The study utilizes three public colorectal polyp datasets, namely, EndoScene [101] (912 images), Kvasir-SEG [102] (1000 images), and ETIS-Larib [103] (196 images), which include various colorectal polyp images captured through colonoscopy. ABC-Net consists of a shared encoder and two decoders, one segmenting the polyp area and the other its boundary. The network integrates selective kernel modules (SKMs) to dynamically select and fuse multi-scale feature representations. By adapting the receptive fields dynamically, the SKMs allow the network to focus more on informative features and less on irrelevant ones. The two decoders operate under mutual constraints, with each influencing the other to improve overall segmentation accuracy. A novel boundary-sensitive loss function models the interdependencies between the area and boundary predictions, enhancing the accuracy of both. This function includes terms that encourage consistency between the predicted area and its boundary, thereby refining the segmentation output. ABC-Net achieved DSCs of 0.857, 0.914, and 0.864, and IoU scores of 0.762, 0.848, and 0.770 on the EndoScene, Kvasir-SEG, and ETIS-Larib datasets, respectively.