Multi-Head Attention Affinity Diversity Sharing Network for Facial Expression Recognition

Abstract

1. Introduction

- (1)

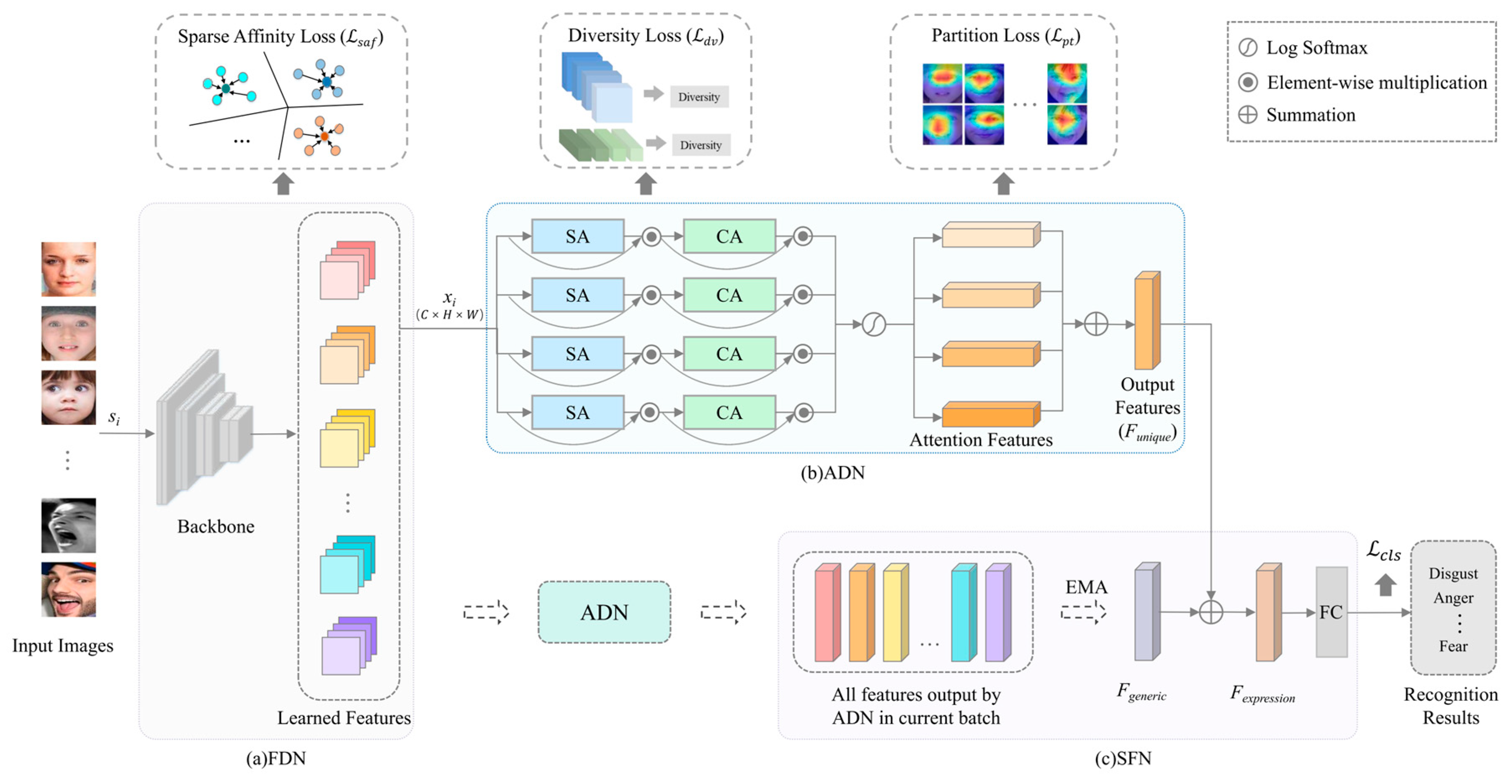

- A novel MAADS is proposed. MAADS effectively addresses the limitations of traditional single-attention networks that often fail to focus adequately on salient facial regions when dealing with inherent similarities, occlusions, and posture changes. By combining the multi-head attention mechanism with multiple loss functions and a feature-sharing mechanism, MAADS can extract highly discriminative attention features, which enhances the model’s ability to recognize facial expressions in natural scenes.

- (2)

- FDN is constructed to maximize the feature distance between different classes while minimizing the distance within the same class. It emphasizes the most salient feature parts by strategically utilizing channel attention weights of the multi-head attention network, thereby assigning varied importance to the basic expression features learned by the backbone network.

- (3)

- ADN is constructed to promote learning across multiple subspaces without auxiliary information like facial landmarks. This is achieved by minimizing the similarity between homogeneous attention maps within the multi-head attention framework. AND can maximize network diversity and focus on distinctly representative local regions.

- (4)

- SFN is designed based on the shared generic feature mechanism. It decomposes facial expression features into common features shared by all expressions and unique features specific to each expression. Utilizing this, the network avoids relearning complete features for each expression from scratch, instead focusing on discerning differences among expression features.

- (5)

- Extensive experiments were conducted on five widely used in-the-wild static FER databases containing RAF-DB, AffectNet-7, AffectNet-8, FERPlus, and SFEW. The results demonstrate that MAADS achieves the recognition accuracy of 92.93%, 67.14%, 64.55%, 91.58%, and 62.41% on RAF-DB, AffectNet-7, AffectNet-8, FERPlus, and SFEW, respectively, which outperforms other state-of-the-art FER methods.
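Contribution (1) relies on multi-head attention. The NumPy sketch below illustrates the idea by running several independent heads over one backbone feature map. Each head computes a spatial attention map, pools the features with it, and rescales the pooled vector with a squeeze-and-excitation style channel gate; the heads are then summed. Several details are assumptions loosely modeled on the DAN baseline [7] rather than taken from the MAADS implementation: the head design, the four-head count, the reduction ratio of 16, and the hypothetical names `AttentionHead` and `multi_head_attention`. The random weights stand in for trained parameters.

```python
import numpy as np


def softmax(x):
    z = x - x.max()                      # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class AttentionHead:
    """Hypothetical head: spatial attention, then a channel gate (random, untrained weights)."""

    def __init__(self, channels, reduction, rng):
        # 1x1 "convolution": one attention score per spatial position
        self.w_spatial = rng.normal(scale=0.1, size=channels)
        # squeeze-and-excitation style bottleneck for the channel gate
        self.w1 = rng.normal(scale=0.1, size=(channels, channels // reduction))
        self.w2 = rng.normal(scale=0.1, size=(channels // reduction, channels))

    def __call__(self, feat):
        c, h, w = feat.shape                       # feat: (C, H, W) backbone feature map
        flat = feat.reshape(c, h * w)
        spatial = softmax(self.w_spatial @ flat)   # (H*W,), non-negative, sums to 1
        pooled = flat @ spatial                    # (C,) attention-weighted pooling
        gate = sigmoid(np.maximum(pooled @ self.w1, 0.0) @ self.w2)  # (C,), each in (0, 1)
        return pooled * gate, spatial.reshape(h, w), gate


def multi_head_attention(feat, heads):
    outputs, maps, gates = zip(*(head(feat) for head in heads))
    # heads are fused by summation here; concatenation would be another option
    return np.sum(outputs, axis=0), np.stack(maps), np.stack(gates)


rng = np.random.default_rng(0)
feat = rng.normal(size=(512, 7, 7))  # ResNet-18 last-stage shape for a 224x224 input
heads = [AttentionHead(512, 16, rng) for _ in range(4)]
fused, maps, gates = multi_head_attention(feat, heads)
print(fused.shape, maps.shape, gates.shape)  # (512,) (4, 7, 7) (4, 512)
```

The function also returns the per-head spatial maps and channel gates, so that auxiliary losses can be computed on them in addition to the fused feature.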

2. Related Work

2.1. FER Based on Attention Mechanism

2.2. Deep Metric Learning for FER

3. Method

3.1. Feature Discrimination Network (FDN)
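
Contribution (2) describes FDN as pulling features toward their own class and pushing classes apart, with channel attention weights deciding which feature channels matter most. One way to express this is a center-loss style ratio in the spirit of the affinity loss of DAN [7]. The numerator is the attention-weighted squared distance between each feature and its class center; the denominator is the variance of the class centers. This is a sketch under stated assumptions: the function name `affinity_loss`, the per-sample channel weights, and the exact ratio form are hypothetical, and the actual FDN loss may differ.

```python
import numpy as np


def affinity_loss(features, labels, centers, channel_weights, eps=1e-8):
    """Hypothetical FDN-style objective (smaller is better).

    features:        (N, C) feature vectors
    labels:          (N,) integer expression labels
    centers:         (K, C) class centers (learnable parameters in a real model)
    channel_weights: (N, C) channel attention weights in [0, 1]
    """
    diff = features - centers[labels]
    # intra-class term: attention-weighted squared distance to the own class center
    intra = np.mean(np.sum(channel_weights * diff ** 2, axis=1))
    # inter-class term: total variance of the centers; larger means classes lie further apart
    inter = np.sum(np.var(centers, axis=0))
    return intra / (inter + eps)


rng = np.random.default_rng(0)
centers = rng.normal(size=(7, 16))          # 7 basic expressions, 16-D toy features
labels = rng.integers(0, 7, size=32)
weights = rng.uniform(size=(32, 16))
tight = centers[labels] + 0.1 * rng.normal(size=(32, 16))
loose = centers[labels] + 1.0 * rng.normal(size=(32, 16))
print(affinity_loss(tight, labels, centers, weights), affinity_loss(loose, labels, centers, weights))
```

Features that sit closer to their centers (the `tight` case) yield a lower loss. Spreading the centers further apart also lowers the loss, because it increases the denominator. Channels whose attention weight is near zero barely contribute to the intra-class term.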

3.2. Attention Distraction Network (ADN)
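
Contribution (3) states that ADN minimizes the similarity between the attention maps of different heads, so that each head attends to a different local region. The sketch below assumes cosine similarity between flattened spatial maps as the similarity measure and returns the mean off-diagonal value. Both that choice of measure and the function name `attention_similarity_loss` are hypothetical.

```python
import numpy as np


def attention_similarity_loss(maps, eps=1e-8):
    """Mean cosine similarity between the attention maps of different heads.

    maps: (N, M, H, W) attention maps for N samples and M heads.
    Minimizing the result pushes the heads toward different regions.
    """
    n, m = maps.shape[:2]
    flat = maps.reshape(n, m, -1)
    flat = flat / (np.linalg.norm(flat, axis=2, keepdims=True) + eps)
    sim = flat @ flat.transpose(0, 2, 1)      # (N, M, M) pairwise cosine similarities
    off_diag = ~np.eye(m, dtype=bool)         # drop each head's similarity with itself
    return sim[:, off_diag].mean()


same = np.ones((2, 4, 7, 7))                        # every head attends everywhere equally
disjoint = np.eye(49)[:4].reshape(1, 4, 7, 7)       # each head attends to one different cell
print(attention_similarity_loss(same), attention_similarity_loss(disjoint))
```

Identical heads give a value close to 1, and heads that attend to non-overlapping cells give 0. The loss therefore rewards spreading the heads across the face.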

3.3. Shared Fusion Network (SFN)
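
Contribution (4) describes SFN as splitting expression features into a part shared by all expressions and a part specific to each one. The sketch below illustrates that idea with one full-size shared projection and a small low-rank projection per expression. The low-rank form, the dimensions, and the class name `SharedSpecificHead` are assumptions chosen to make the parameter saving visible; they are not details taken from MAADS.

```python
import numpy as np


class SharedSpecificHead:
    """Hypothetical SFN-style head: one shared projection for all expressions plus a
    small low-rank projection per expression (random, untrained weights)."""

    def __init__(self, in_dim, hid_dim, num_classes, rank, rng):
        self.shared = rng.normal(scale=0.05, size=(in_dim, hid_dim))             # common features
        self.spec_a = rng.normal(scale=0.05, size=(num_classes, in_dim, rank))   # unique features,
        self.spec_b = rng.normal(scale=0.05, size=(num_classes, rank, hid_dim))  # low-rank per class
        self.classifier = rng.normal(scale=0.05, size=(num_classes, hid_dim))

    def num_params(self):
        return sum(p.size for p in (self.shared, self.spec_a, self.spec_b, self.classifier))

    def __call__(self, x):
        common = x @ self.shared                                      # (N, hid)
        low = np.einsum("ni,kir->nkr", x, self.spec_a)                # (N, K, rank)
        unique = np.einsum("nkr,krh->nkh", low, self.spec_b)          # (N, K, hid)
        fused = common[:, None, :] + unique                           # shared + class-specific
        return np.einsum("nkh,kh->nk", fused, self.classifier)        # (N, K) logits


rng = np.random.default_rng(0)
head = SharedSpecificHead(in_dim=512, hid_dim=256, num_classes=7, rank=8, rng=rng)
logits = head(rng.normal(size=(4, 512)))
print(logits.shape, head.num_params())  # (4, 7) 175872
```

With these sizes, the shared-plus-specific head has 175,872 parameters. Giving each of the seven expressions its own full 512 × 256 projection, with the same classifier, would instead need 919,296.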

3.4. Joint Loss Function
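
The components are trained together through a joint objective. The sketch below assumes the common form of a cross-entropy classification term plus weighted auxiliary terms for FDN and ADN. The weights `lambda_fdn` and `lambda_adn` and their default value of 1.0 are hypothetical. SFN is assumed to act through the logits rather than through a separate loss term.

```python
import numpy as np


def cross_entropy(logits, labels):
    z = logits - logits.max(axis=1, keepdims=True)          # numerically stable log-softmax
    log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()


def joint_loss(logits, labels, fdn_loss, adn_loss, lambda_fdn=1.0, lambda_adn=1.0):
    """Hypothetical joint objective: classification loss plus weighted auxiliary losses."""
    return cross_entropy(logits, labels) + lambda_fdn * fdn_loss + lambda_adn * adn_loss


# uniform logits over 7 classes give a cross-entropy of log(7) ≈ 1.946
total = joint_loss(np.zeros((3, 7)), np.array([0, 3, 6]), fdn_loss=0.5, adn_loss=0.25)
print(total)
```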

4. Experiment

4.1. Datasets

4.2. Implementation Details

4.3. Comparison with State-of-the-Art Methods

4.4. Visualization Analysis

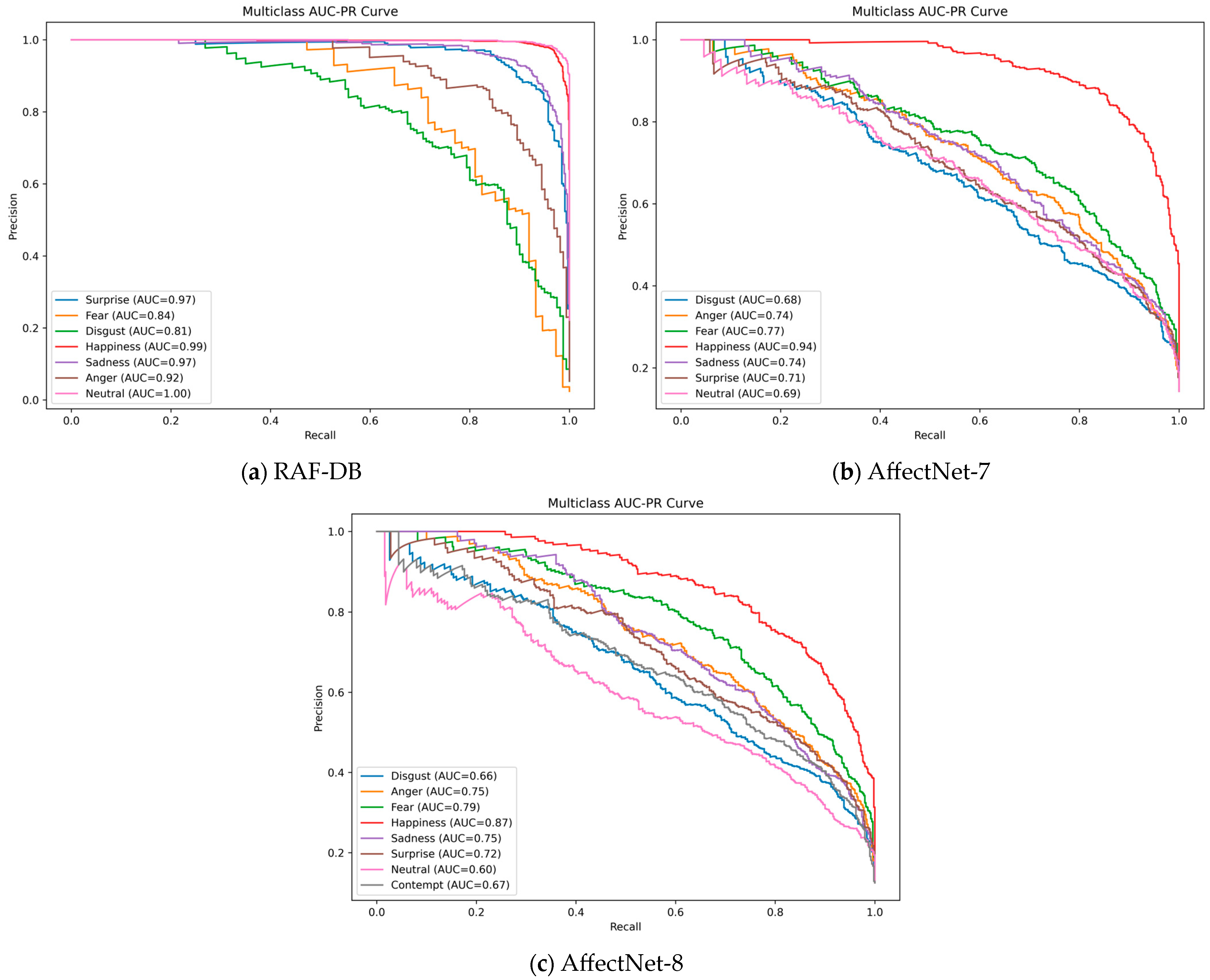

4.5. Precision–Recall Analysis

4.6. Ablation Experiment

4.7. Computational Complexity

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Liao, L.; Wu, S.; Song, C.; Fu, J. RS-Xception: A Lightweight Network for Facial Expression Recognition. Electronics 2024, 13, 3217.

- [2] Hickson, S.; Dufour, N.; Sud, A.; Kwatra, V.; Essa, I. Eyemotion: Classifying facial expressions in VR using eye-tracking cameras. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa Village, HI, USA, 7–11 January 2019; pp. 1626–1635.

- [3] Roy, S.D.; Bhowmik, M.K.; Saha, P.; Ghosh, A.K. An approach for automatic pain detection through facial expression. Procedia Comput. Sci. 2016, 84, 99–106.

- [4] Jordan, S.; Brimbal, L.; Wallace, D.B.; Kassin, S.M.; Hartwig, M.; Street, C.N. A test of the micro-expressions training tool: Does it improve lie detection? J. Investig. Psychol. Offender Profiling 2019, 16, 222–235.

- [5] Chen, Z.; Yan, L.; Wang, H.; Adamyk, B. Improved Facial Expression Recognition Algorithm Based on Local Feature Enhancement and Global Information Association. Electronics 2024, 13, 2813.

- [6] Fasel, B.; Luettin, J. Automatic facial expression analysis: A survey. Pattern Recognit. 2003, 36, 259–275.

- [7] Wen, Z.; Lin, W.; Wang, T.; Xu, G. Distract your attention: Multi-head cross attention network for facial expression recognition. Biomimetics 2023, 8, 199.

- [8] Farzaneh, A.H.; Qi, X. Facial expression recognition in the wild via deep attentive center loss. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 2402–2411.

- [9] Marrero Fernandez, P.D.; Guerrero Pena, F.A.; Ren, T.; Cunha, A. FERAtt: Facial expression recognition with attention net. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

- [10] Fan, Y.; Li, V.O.; Lam, J.C. Facial expression recognition with deeply-supervised attention network. IEEE Trans. Affect. Comput. 2020, 13, 1057–1071.

- [11] Wang, X.; Peng, M.; Pan, L.; Hu, M.; Jin, C.; Ren, F. Two-level attention with two-stage multi-task learning for facial emotion recognition. J. Vis. Commun. Image Represent. 2019, 62, 217–225.

- [12] Zhou, Y.; Jin, L.; Liu, H.; Song, E. Color facial expression recognition by quaternion convolutional neural network with Gabor attention. IEEE Trans. Cogn. Dev. Syst. 2020, 13, 969–983.

- [13] Li, Y.; Zeng, J.; Shan, S.; Chen, X. Patch-gated CNN for occlusion-aware facial expression recognition. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 2209–2214.

- [14] Xia, Y.; Yu, H.; Wang, X.; Jian, M.; Wang, F.-Y. Relation-aware facial expression recognition. IEEE Trans. Cogn. Dev. Syst. 2021, 14, 1143–1154.

- [15] Chen, G.; Peng, J.; Zhang, W.; Huang, K.; Cheng, F.; Yuan, H.; Huang, Y. A region group adaptive attention model for subtle expression recognition. IEEE Trans. Affect. Comput. 2021, 14, 1613–1626.

- [16] Li, Y.; Zeng, J.; Shan, S.; Chen, X. Occlusion aware facial expression recognition using CNN with attention mechanism. IEEE Trans. Image Process. 2018, 28, 2439–2450.

- [17] Wang, K.; Peng, X.; Yang, J.; Meng, D.; Qiao, Y. Region attention networks for pose and occlusion robust facial expression recognition. IEEE Trans. Image Process. 2020, 29, 4057–4069.

- [18] Zhao, Z.; Liu, Q.; Wang, S. Learning deep global multi-scale and local attention features for facial expression recognition in the wild. IEEE Trans. Image Process. 2021, 30, 6544–6556.

- [19] Yu, W.; Xu, H. Co-attentive multi-task convolutional neural network for facial expression recognition. Pattern Recognit. 2022, 123, 108401.

- [20] Chopra, S.; Hadsell, R.; LeCun, Y. Learning a similarity metric discriminatively, with application to face verification. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–26 June 2005; pp. 539–546.

- [21] He, X.; Liao, L.; Zhang, H.; Nie, L.; Hu, X.; Chua, T.-S. Neural collaborative filtering. In Proceedings of the 26th International Conference on World Wide Web, Perth, Australia, 3–7 April 2017; pp. 173–182.

- [22] Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

- [23] Xie, J.; Girshick, R.; Farhadi, A. Unsupervised deep embedding for clustering analysis. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 478–487.

- [24] Radenović, F.; Tolias, G.; Chum, O. Fine-tuning CNN image retrieval with no human annotation. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 1655–1668.

- [25] Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

- [26] Schroff, F.; Kalenichenko, D.; Philbin, J. FaceNet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 815–823.

- [27] Meng, Z.; Liu, P.; Cai, J.; Han, S.; Tong, Y. Identity-aware convolutional neural network for facial expression recognition. In Proceedings of the 2017 12th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2017), Washington, DC, USA, 30 May–3 June 2017; pp. 558–565.

- [28] Liu, X.; Vijaya Kumar, B.; You, J.; Jia, P. Adaptive deep metric learning for identity-aware facial expression recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 20–29.

- [29] Li, S.; Deng, W.; Du, J. Reliable crowdsourcing and deep locality-preserving learning for expression recognition in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2852–2861.

- [30] Cai, J.; Meng, Z.; Khan, A.S.; Li, Z.; O’Reilly, J.; Tong, Y. Island loss for learning discriminative features in facial expression recognition. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; pp. 302–309.

- [31] Li, Z.; Wu, S.; Xiao, G. Facial expression recognition by multi-scale CNN with regularized center loss. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 3384–3389.

- [32] Zeng, G.; Zhou, J.; Jia, X.; Xie, W.; Shen, L. Hand-crafted feature guided deep learning for facial expression recognition. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; pp. 423–430.

- [33] Farzaneh, A.H.; Qi, X. Discriminant distribution-agnostic loss for facial expression recognition in the wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 406–407.

- [34] He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- [35] Wen, Y.; Zhang, K.; Li, Z.; Qiao, Y. A discriminative feature learning approach for deep face recognition. In Proceedings of Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Part VII; pp. 499–515.

- [36] Xie, B.; Liang, Y.; Song, L. Diverse neural network learns true target functions. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1216–1224.

- [37] Heidari, N.; Iosifidis, A. Learning diversified feature representations for facial expression recognition in the wild. arXiv 2022, arXiv:2210.09381.

- [38] Shi, J.; Zhu, S.; Liang, Z. Learning to amend facial expression representation via de-albino and affinity. arXiv 2021, arXiv:2103.10189.

- [39] Bruce, V.; Young, A. Understanding face recognition. Br. J. Psychol. 1986, 77, 305–327.

- [40] Calder, A.J.; Young, A.W. Understanding the recognition of facial identity and facial expression. Nat. Rev. Neurosci. 2005, 6, 641–651.

- [41] Yang, H.; Ciftci, U.; Yin, L. Facial expression recognition by de-expression residue learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2168–2177.

- [42] Xue, F.; Tan, Z.; Zhu, Y.; Ma, Z.; Guo, G. Coarse-to-fine cascaded networks with smooth predicting for video facial expression recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2412–2418.

- [43] Mollahosseini, A.; Hasani, B.; Mahoor, M.H. AffectNet: A database for facial expression, valence, and arousal computing in the wild. IEEE Trans. Affect. Comput. 2017, 10, 18–31.

- [44] Barsoum, E.; Zhang, C.; Ferrer, C.C.; Zhang, Z. Training deep networks for facial expression recognition with crowd-sourced label distribution. In Proceedings of the 18th ACM International Conference on Multimodal Interaction, Tokyo, Japan, 12–16 November 2016; pp. 279–283.

- [45] Dhall, A.; Goecke, R.; Lucey, S.; Gedeon, T. Static facial expression analysis in tough conditions: Data, evaluation protocol and benchmark. In Proceedings of the 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), Barcelona, Spain, 6–13 November 2011; pp. 2106–2112.

- [46] Dhall, A.; Goecke, R.; Lucey, S.; Gedeon, T. Collecting Large, Richly Annotated Facial-Expression Databases from Movies. IEEE MultiMedia 2012, 19, 34–41.

- [47] Li, S.; Deng, W. Deep facial expression recognition: A survey. IEEE Trans. Affect. Comput. 2020, 13, 1195–1215.

- [48] Deng, J.; Guo, J.; Ververas, E.; Kotsia, I.; Zafeiriou, S. RetinaFace: Single-shot multi-level face localisation in the wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 5203–5212.

- [49] Guo, Y.; Zhang, L.; Hu, Y.; He, X.; Gao, J. MS-Celeb-1M: A dataset and benchmark for large-scale face recognition. In Proceedings of Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Part III; pp. 87–102.

- [50] Li, H.; Wang, N.; Ding, X.; Yang, X.; Gao, X. Adaptively learning facial expression representation via cf labels and distillation. IEEE Trans. Image Process. 2021, 30, 2016–2028.

- [51] She, J.; Hu, Y.; Shi, H.; Wang, J.; Shen, Q.; Mei, T. Dive into ambiguity: Latent distribution mining and pairwise uncertainty estimation for facial expression recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6248–6257.

- [52] Ruan, D.; Yan, Y.; Lai, S.; Chai, Z.; Shen, C.; Wang, H. Feature decomposition and reconstruction learning for effective facial expression recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7660–7669.

- [53] Xue, F.; Wang, Q.; Guo, G. TransFER: Learning relation-aware facial expression representations with transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 3601–3610.

- [54] Liu, H.; Cai, H.; Lin, Q.; Li, X.; Xiao, H. Adaptive multilayer perceptual attention network for facial expression recognition. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 6253–6266.

- [55] Zeng, D.; Lin, Z.; Yan, X.; Liu, Y.; Wang, F.; Tang, B. Face2Exp: Combating data biases for facial expression recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 20291–20300.

- [56] Zhang, Y.; Wang, C.; Ling, X.; Deng, W. Learn from all: Erasing attention consistency for noisy label facial expression recognition. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 418–434.

- [57] Lee, I.; Lee, E.; Yoo, S.B. Latent-OFER: Detect, mask, and reconstruct with latent vectors for occluded facial expression recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 1536–1546.

- [58] Le, N.; Nguyen, K.; Tran, Q.; Tjiputra, E.; Le, B.; Nguyen, A. Uncertainty-aware label distribution learning for facial expression recognition. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 6088–6097.

- [59] Li, Y.; Wang, M.; Gong, M.; Lu, Y.; Liu, L. FER-former: Multi-modal transformer for facial expression recognition. arXiv 2023, arXiv:2303.12997.

- [60] Wu, Z.; Cui, J. LA-Net: Landmark-aware learning for reliable facial expression recognition under label noise. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 20698–20707.

- [61] Chattopadhay, A.; Sarkar, A.; Howlader, P.; Balasubramanian, V.N. Grad-CAM++: Generalized gradient-based visual explanations for deep convolutional networks. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 839–847.

- [62] Zhao, Z.; Liu, Q.; Zhou, F. Robust lightweight facial expression recognition network with label distribution training. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; pp. 3510–3519.

- [63] Wang, K.; Peng, X.; Yang, J.; Lu, S.; Qiao, Y. Suppressing uncertainties for large-scale facial expression recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 6897–6906.

- [64] Vo, T.-H.; Lee, G.-S.; Yang, H.-J.; Kim, S.-H. Pyramid with super resolution for in-the-wild facial expression recognition. IEEE Access 2020, 8, 131988–132001.

- [65] Chen, D.; Wen, G.; Li, H.; Chen, R.; Li, C. Multi-relations aware network for in-the-wild facial expression recognition. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 3848–3859.

| Method | Year | Backbone | RAF-DB (%) | AffectNet-7 (%) | AffectNet-8 (%) | FERPlus (%) | SFEW (%) |
|---|---|---|---|---|---|---|---|
| RAN [17] | 2020 | ResNet-18 | 86.90 | - | 59.50 | 89.16 | 56.40 |
| KTN [50] | 2021 | ResNet-18 | 88.07 | 63.97 | - | 90.49 | - |
| DMUE [51] | 2021 | ResNet-18 | 89.42 | 63.11 | - | 89.51 | 58.43 |
| MA-Net [18] | 2021 | ResNet-18 |  | 64.54 | 60.29 | 88.99 | 59.40 |
| FDRL [52] | 2021 | ResNet-18 | 89.47 | - | - | - | 62.16 |
| TransFER [53] | 2021 | IR-50 | 90.91 | 66.23 | - | 90.83 | - |
| AMP-Net [54] | 2022 | ResNet-34 | 89.25 | 64.54 | 61.74 | - | 61.74 |
| Face2Exp [55] | 2022 | ResNet-50 | 88.54 | 64.23 | - | - | - |
| EAC [56] | 2022 | ResNet-18 | 89.99 | 65.32 | - | 89.64 | - |
| Latent-OFER [57] | 2023 | ResNet-18 | 89.60 | 63.90 | - | - | - |
| LDLVA [58] | 2023 | ResNet-50 | 90.51 | 66.23 | - | - | 59.90 |
| FER-former [59] | 2023 | IR-50 | 91.30 | - | - | 90.96 | 62.18 |
| LA-Net [60] | 2023 | ResNet-50 | 91.56 | 67.09 | 64.54 | 91.78 | - |
| Baseline [7] | 2021 | ResNet-18 | 89.70 | 65.69 | 62.09 | 89.68 | 57.88 |
| MAADS | - | ResNet-18 | 92.93 | 67.14 | 64.55 | 91.58 | 62.41 |
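
The margins behind contribution (5) can be recomputed from the comparison table above. The short script below finds, for each benchmark, the best previously reported accuracy and subtracts it from the MAADS result. The values are transcribed by hand and "-" entries become `None`. MA-Net is left out because its row is incomplete.

```python
# Accuracies (%) transcribed from the comparison table; None marks "-" (not reported).
datasets = ["RAF-DB", "AffectNet-7", "AffectNet-8", "FERPlus", "SFEW"]
prior = {
    "RAN":         [86.90, None, 59.50, 89.16, 56.40],
    "KTN":         [88.07, 63.97, None, 90.49, None],
    "DMUE":        [89.42, 63.11, None, 89.51, 58.43],
    "FDRL":        [89.47, None, None, None, 62.16],
    "TransFER":    [90.91, 66.23, None, 90.83, None],
    "AMP-Net":     [89.25, 64.54, 61.74, None, 61.74],
    "Face2Exp":    [88.54, 64.23, None, None, None],
    "EAC":         [89.99, 65.32, None, 89.64, None],
    "Latent-OFER": [89.60, 63.90, None, None, None],
    "LDLVA":       [90.51, 66.23, None, None, 59.90],
    "FER-former":  [91.30, None, None, 90.96, 62.18],
    "LA-Net":      [91.56, 67.09, 64.54, 91.78, None],
    "Baseline":    [89.70, 65.69, 62.09, 89.68, 57.88],
}
maads = [92.93, 67.14, 64.55, 91.58, 62.41]


def margins(prior, ours):
    """Return {dataset: (best prior method, ours - best prior)} in accuracy points."""
    result = {}
    for j, name in enumerate(datasets):
        scores = [(acc[j], method) for method, acc in prior.items() if acc[j] is not None]
        best_acc, best_method = max(scores)
        result[name] = (best_method, round(ours[j] - best_acc, 2))
    return result


for name, (method, gap) in margins(prior, maads).items():
    print(f"{name}: {gap:+.2f} vs {method}")
```

This gives gains of 1.37 points on RAF-DB, 0.05 on AffectNet-7, 0.01 on AffectNet-8, and 0.23 on SFEW. On FERPlus, MAADS trails LA-Net by 0.20 points.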

| SAL | DL | SGFM | RAF-DB (%) | AffectNet-7 (%) | AffectNet-8 (%) |
|---|---|---|---|---|---|
|  |  |  | 88.95 | 65.14 | 61.57 |
| ✓ |  |  | 89.21 | 65.27 | 61.77 |
|  | ✓ |  | 89.08 | 65.23 | 61.67 |
|  |  | ✓ | 92.44 | 66.89 | 64.18 |
| ✓ | ✓ |  | 89.34 | 65.34 | 61.86 |
| ✓ |  | ✓ | 92.76 | 67.05 | 64.43 |
|  | ✓ | ✓ | 92.73 | 67.05 | 64.41 |
| ✓ | ✓ | ✓ | 92.93 | 67.14 | 64.55 |

| Method | Params (M) | FLOPs (G) | RAF-DB Acc (%) |
|---|---|---|---|
| EfficientFace [62] | 1.28 | 0.15 | 88.36 |
| SCN [63] | 11.18 | 1.82 | 87.03 |
| RAN [17] | 11.19 | 14.55 | 86.90 |
| DAN [7] | 19.72 | 2.23 | 89.70 |
| PSR [64] | 20.24 | 10.12 | 88.98 |
| MA-Net [18] | 50.54 | 3.65 | 88.36 |
| MRAN [65] | 57.89 | 3.89 | 90.03 |
| DACL [8] | 103.04 | 1.91 | 87.78 |
| AMP-Net [54] | 105.67 | 4.73 | 89.25 |
| MAADS | 19.72 | 3.01 | 92.93 |

| Model | SAL | DL | SGFM | FLOPs (G) | RAF-DB Acc (%) |
|---|---|---|---|---|---|
| Baseline |  |  |  | 2.23 | 88.95 |
| Model 1 | ✓ |  |  | 2.65 | 89.21 |
| Model 2 | ✓ | ✓ |  | 2.99 | 89.34 |
| Model 3 (MAADS) | ✓ | ✓ | ✓ | 3.01 | 92.93 |
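
The FLOPs ablation can be read as step-by-step increments. Assume that Model 1, Model 2, and Model 3 enable SAL, then SAL and DL, then all three components. Under that assumption, the script below prints what each step adds in GFLOPs and in RAF-DB accuracy points.

```python
# (model, FLOPs in G, RAF-DB accuracy in %) transcribed from the FLOPs ablation table
ablation = [
    ("Baseline", 2.23, 88.95),
    ("Model 1", 2.65, 89.21),
    ("Model 2", 2.99, 89.34),
    ("Model 3 (MAADS)", 3.01, 92.93),
]


def increments(rows):
    """Difference of each row relative to the previous one, rounded to 2 decimals."""
    out = []
    for (_, f0, a0), (name, f1, a1) in zip(rows, rows[1:]):
        out.append((name, round(f1 - f0, 2), round(a1 - a0, 2)))
    return out


for name, d_flops, d_acc in increments(ablation):
    print(f"{name}: +{d_flops:.2f} GFLOPs, {d_acc:+.2f} points")
```

Overall, the full model costs 0.78 GFLOPs (about 35%) more than the baseline. The final step adds only about 0.02 GFLOPs but contributes 3.59 accuracy points.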

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zheng, C.; Liu, J.; Zhao, W.; Ge, Y.; Chen, W. Multi-Head Attention Affinity Diversity Sharing Network for Facial Expression Recognition. Electronics 2024, 13, 4410. https://doi.org/10.3390/electronics13224410

Zheng C, Liu J, Zhao W, Ge Y, Chen W. Multi-Head Attention Affinity Diversity Sharing Network for Facial Expression Recognition. Electronics. 2024; 13(22):4410. https://doi.org/10.3390/electronics13224410

Chicago/Turabian Style: Zheng, Caixia, Jiayu Liu, Wei Zhao, Yingying Ge, and Wenhe Chen. 2024. "Multi-Head Attention Affinity Diversity Sharing Network for Facial Expression Recognition" Electronics 13, no. 22: 4410. https://doi.org/10.3390/electronics13224410

APA Style: Zheng, C., Liu, J., Zhao, W., Ge, Y., & Chen, W. (2024). Multi-Head Attention Affinity Diversity Sharing Network for Facial Expression Recognition. Electronics, 13(22), 4410. https://doi.org/10.3390/electronics13224410