Abstract

Facial expressions exhibit inherent similarities, variability, and complexity. In real-world scenarios, challenges such as partial occlusions, illumination changes, and individual differences further complicate facial expression recognition (FER). To further improve the accuracy of FER, this paper proposes a Multi-head Attention Affinity and Diversity Sharing Network (MAADS). MAADS comprises a Feature Discrimination Network (FDN), an Attention Distraction Network (ADN), and a Shared Fusion Network (SFN). Specifically, FDN first captures the most discriminative features by using the proposed sparse affinity loss, which integrates attention weights into the objective function. Then, ADN employs multiple parallel attention networks to maximize diversity within spatial attention units and channel attention units, which guides the network to focus on distinct, non-overlapping facial regions. Finally, SFN decomposes facial features into generic parts and unique parts, which allows the network to learn the distinctions between these features without having to relearn complete features from scratch. To validate the effectiveness of the proposed method, extensive experiments were conducted on several widely used in-the-wild datasets, including RAF-DB, AffectNet-7, AffectNet-8, FERPlus, and SFEW. MAADS achieves accuracies of 92.93%, 67.14%, 64.55%, 91.58%, and 62.41% on these datasets, respectively. The experimental results indicate that MAADS not only outperforms current state-of-the-art methods in recognition accuracy but also has relatively low computational complexity.

1. Introduction

Affective computing is an important field of artificial intelligence that aims to detect and understand human emotions. As a form of non-verbal communication, facial expression is a direct and natural means for people to convey their emotions. With ongoing advances in machine learning, facial expression recognition (FER) has shown potential applications in areas such as student status monitoring, traffic safety, medical diagnosis, and interactive entertainment [1,2,3,4]. The fundamental task of FER is to identify a range of expressions, e.g., happiness, anger, sadness, disgust, fear, surprise, and neutral [5].

Research on FER has advanced significantly, evolving from controlled to uncontrolled environments and shifting from hand-crafted feature extraction to end-to-end training with deep learning methods. Deep learning methods typically stack multiple layers of nonlinear transformations into hierarchical architectures that extract increasingly abstract information from the data. Convolutional neural networks (CNNs) are a classical type of deep learning model and are robust to variations in both the position and scale of faces [6]; therefore, CNNs have been widely adopted in FER. In the last few years, advances in deep learning have enabled CNNs to capture the detailed features of facial expressions increasingly accurately, which has significantly improved recognition accuracy on large-scale, in-the-wild facial expression datasets. Nevertheless, FER in the wild still faces numerous challenges, including subtle variations in facial expressions, pronounced pose changes, facial occlusions, considerable intra-class variability, and high inter-class similarity.

To address these challenges, FER models need to identify the most discriminative facial regions. Attention mechanisms enable deep learning models to focus on the most salient regions of a facial expression; hence, they have attracted growing research interest for FER in real-world scenarios. However, most FER models use only a single attention head, which struggles to focus on multiple critical areas of the face simultaneously and thereby limits recognition accuracy. To address this issue, Wen et al. [7] introduced the Distract Your Attention Network (DAN), based on a multi-head cross-attention mechanism, for FER in natural scenes. Specifically, DAN extracts features with high class separability and encourages its attention heads to focus on non-overlapping regions, achieving good recognition performance at low computational cost. Apart from visual attention mechanisms, metric learning is also an effective way to improve FER accuracy. The fundamental idea of metric learning is to define and optimize a loss function that enables the model to learn an effective distance metric in the feature space. Typically, such loss functions make the distances between similar samples as small as possible and the distances between dissimilar samples as large as possible [8].

Inspired by the work of Wen et al. [7] and the idea of metric learning, this paper proposes a new Multi-head Attention Affinity and Diversity Sharing Network (MAADS) based on attention mechanisms to further improve the accuracy of FER. The architecture of MAADS comprises three main parts: a Feature Discrimination Network (FDN), an Attention Distraction Network (ADN), and a Shared Fusion Network (SFN).

The main contributions of this paper are outlined as follows:

- (1) A novel network, MAADS, is proposed. MAADS addresses the limitation of traditional single-attention networks, which often fail to focus adequately on salient facial regions under inherent inter-class similarity, occlusion, and pose changes. By combining a multi-head attention mechanism with multiple loss functions and a feature-sharing mechanism, MAADS extracts highly discriminative attention features, which enhances the model's ability to recognize facial expressions in natural scenes.

- (2) FDN is constructed to maximize the feature distance between different classes while minimizing the distance within the same class. It emphasizes the most salient feature components by using the channel attention weights of the multi-head attention network, thereby assigning different importance to the elements of the basic expression features learned by the backbone network.

- (3) ADN is constructed to promote learning across multiple subspaces without auxiliary information such as facial landmarks. This is achieved by minimizing the similarity between attention maps of the same type (spatial or channel) within the multi-head attention framework. In this way, ADN maximizes the diversity of the attention heads and focuses on distinct, representative local regions.

- (4) SFN is designed based on the shared generic feature mechanism. It decomposes facial expression features into common features shared by all expressions and unique features specific to each expression. As a result, the network does not need to relearn complete features for each expression from scratch and can instead focus on discerning the differences among expression features.

- (5) Extensive experiments were conducted on five widely used in-the-wild static FER databases, namely RAF-DB, AffectNet-7, AffectNet-8, FERPlus, and SFEW. MAADS achieves recognition accuracies of 92.93%, 67.14%, 64.55%, 91.58%, and 62.41% on RAF-DB, AffectNet-7, AffectNet-8, FERPlus, and SFEW, respectively, outperforming other state-of-the-art FER methods on most of these benchmarks.

2. Related Work

In this section, we first introduce various implementations of attention mechanisms in FER and then explore the applications of deep metric learning in the field of FER.

2.1. FER Based on Attention Mechanism

Attention mechanisms can effectively focus on visible sections of occluded images and address self-occlusion issues caused by changes in head posture. In the field of FER, attention mechanisms are commonly integrated with other models to enhance the effectiveness of FER methods. FER approaches utilizing attention mechanisms can be categorized into three types: those based on global attention, those based on local attention, and those that integrate both global and local attention.

Global-based methods analyze the face as a whole and emphasize overall facial features. Fernandez et al. [9] presented an FER network that integrates attention mechanisms, focuses on the entire face, and represents expressions in a Gaussian space. Fan et al. [10] constructed a two-stage training scheme for a deeply supervised attention network that exploits statistical differences across race, gender, and age to recognize facial expressions. Wang et al. [11] developed a two-stage multi-task learning framework with two levels of attention for estimating facial emotions in static images. Zhou et al. [12] proposed an attention-based quaternion convolutional neural network that considers the correlations between color channels and effectively decouples latent geometric information from facial images. Wen et al. [7] explored different regions of facial expressions by using a multi-head cross-attention mechanism and obtained the attention weights for FER by fusing attention maps.

Local-based methods divide the face into multiple overlapping or non-overlapping local areas and can be categorized into facial landmark-based methods and image patch-based methods. Li et al. [13] designed an end-to-end trainable patch-gated convolutional neural network that automatically perceives occluded regions and concentrates attention on the most discriminative unoccluded areas. This method improves recognition performance on both original facial expression images and synthesized occluded facial expression images. Xia et al. [14] introduced a relation-aware FER method that adaptively captures the relationships between key areas, such as the eyes and mouth, so that the model focuses on the most discriminative areas. Chen et al. [15] introduced an attention model for subtle expression recognition, which crops the image into multiple regions of interest (RoIs) based on facial landmark–emotion correlations and examines the relationships among these RoIs, as well as the interactions between local and global regions.

In recent years, several researchers have fused global-based and local-based approaches to improve FER performance. Li et al. [16] introduced an FER network that fuses multiple representations of facial RoIs based on an attention mechanism and uses gated units to weight each representation, so that the network can concentrate on the most discriminative unoccluded areas. Wang et al. [17] developed a region attention network that adaptively determines the importance of individual facial regions under complex conditions such as occlusions and pose changes. Zhao et al. [18] proposed a network architecture that combines global multi-scale and local attention. This network extracts mid-level features from the input and integrates features from different receptive fields, which guides the model to focus on significant local features. Yu et al. [19] treated facial keypoint detection as an auxiliary task and proposed an end-to-end collaborative attention multi-task convolutional neural network, which includes a spatial collaborative attention module and a channel attention collaboration module.

Global attention-based methods often overlook specific facial details, while local attention-based methods (whether landmark-based or patch-based) struggle to adapt to real-world scenarios and typically add computational burden. In this paper, we propose a global-based approach that uses multiple parallel attention networks and several guiding loss functions to enable the model to adaptively focus on several distinct, non-overlapping facial regions, thereby strengthening attention to crucial local details and avoiding this shortcoming of global attention-based methods.

2.2. Deep Metric Learning for FER

Metric learning seeks to learn a metric function that measures the similarity between samples. Deep metric learning (DML) uses deep neural networks to map inputs, through nonlinear transformations, into a new embedding space in which samples are compared and matched. Metric learning was first introduced into deep learning to address face verification [20] and has since been extensively applied to various other tasks [21,22,23,24,25,26].

Meng et al. [27] proposed expression-sensitive contrastive and identity-sensitive contrastive loss functions to ensure that the extracted features are consistent regardless of changes in facial expressions. Liu et al. [28] developed a method that integrates a deep metric loss and the softmax loss into a unified framework, jointly optimizing two fully connected branches. Li et al. [29] designed a deep locality-preserving convolutional neural network to tackle the ambiguity and multimodality of facial expressions in natural scenes; it adds a locality-preserving loss layer to the base network to boost the discriminative capability of the learned features. Cai et al. [30] proposed the Island loss to enhance the discriminative power of deeply learned features. The Island loss compacts each category cluster and pushes the different cluster centers apart, thereby making them isolated “islands”. Li et al. [31] developed a multi-scale convolutional neural network with an integrated attention mechanism, which uses multi-scale CNNs to capture facial features at various scales and employs a regularized center loss to optimize model learning. Zeng et al. [32] developed a novel feature loss to reduce discrepancies between features and designed a deep network guided by handcrafted features for expression recognition. Farzaneh et al. [33] proposed a discriminative distribution-agnostic loss that separates the features of each category from those of the other categories and pulls them toward their respective category centers in the embedding space, which helps regulate the distribution of feature vectors in a highly imbalanced embedding space.

Unlike the aforementioned methods, the proposed sparse affinity loss in this paper not only maximizes inter-class distances and minimizes intra-class distances but also incorporates attention weights to encode crucial information within deep feature vectors. This approach selectively learns discriminative information in the deep embedding space, thereby reducing the model’s reliance on irrelevant data.

3. Method

To improve FER performance, two issues must be addressed: a single attention head may not be able to focus on multiple important facial regions, and the network must still identify the most discriminative regions of facial expression features. Additionally, if multiple attention heads are used to extract multiple feature spaces, the overlap between these feature spaces should be minimized, and the different features should be integrated effectively without significantly increasing model complexity or requiring auxiliary information. Based on these ideas and inspired by the work of Wen et al. [7], we develop a new Multi-head Attention Affinity and Diversity Sharing Network (MAADS) for FER.

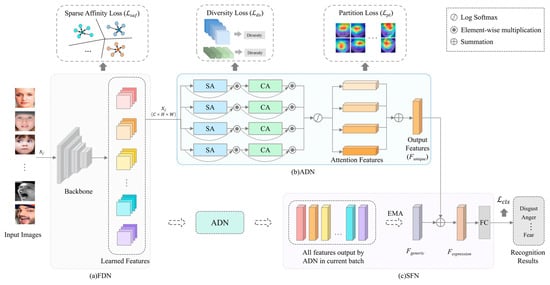

MAADS mainly contains three components, a Feature Discrimination Network (FDN), an Attention Distraction Network (ADN), and a Shared Fusion Network (SFN), as illustrated in Figure 1. First, given a mini-batch of facial expression images, FDN uses a lightweight ResNet-18 backbone to obtain feature representations of the images and employs the sparse affinity loss to reduce redundancy and noise in the learned deep features while maintaining intra-class compactness and inter-class separability. Subsequently, ADN generates multiple attention subspaces by using several parallel attention networks, which ensures that the model attends to multiple salient facial regions more comprehensively. In ADN, each attention network includes a spatial attention (SA) unit and a channel attention (CA) unit. Concurrently, the diversity loss and the partition loss [7] are employed in ADN to constrain the spaces learned by the parallel attention networks, preventing overlap among them and emphasizing crucial salient facial regions. Finally, SFN integrates the features from ADN by using a shared generic feature mechanism and updates the generic features with the current batch by using an exponential moving average (EMA), yielding the final facial expression features used for category prediction.

Figure 1.

Overview of our proposed MAADS method.

3.1. Feature Discrimination Network (FDN)

FDN uses ResNet-18 [34] as its backbone and employs the sparse affinity loss to extract the most discriminative deep features. ResNet-18 [34] is one of the classical CNN architectures. Its residual modules ease the optimization of deep networks (alleviating vanishing gradients and the degradation problem) by adding shortcut connections that pass the input of a block directly to its output. Given an input expression image $x_i$, the corresponding output of the backbone network is represented as

$$x'_i = \mathcal{F}(x_i; \theta),$$

where $x_i$ represents the $i$-th input expression sample with class label $y_i \in \{1, 2, \ldots, N_c\}$, $N_c$ is the number of expression classes, $x'_i$ represents the output feature of the last convolutional layer of the backbone $\mathcal{F}$, and $\theta$ denotes the weight parameters of the network.

Sparse Affinity Loss: To enhance the discriminability of deeply learned features, Wen et al. [35] proposed the center loss, which learns a center for the deep features of each class and minimizes the distance between the features and their class centers. However, it does not explicitly constrain the distances between different classes. To address this issue, the affinity loss [7] was proposed, which increases inter-class distances by introducing the standard deviation of the class centers. However, the affinity loss neglects the potential negative impact of redundancy and noise on the discriminative ability of deep feature vectors. In fact, not all elements of a feature vector contribute to discriminability in image-understanding tasks [8]. Deep features may contain not only crucial information but also noise and redundancy, and treating all three equally makes it harder to learn discriminative facial features. Therefore, selectively suppressing irrelevant feature elements while keeping those that contribute to discriminability could be key to enhancing model performance. Based on these considerations, we propose a sparse affinity loss to further strengthen the model's discriminative capability. For an $N_c$-class classification problem, the sparse affinity loss is designed to divide the deep embedding space into $N_c$ clusters and is defined as follows:

$$\mathcal{L}_{saf} = \frac{\sum_{i=1}^{M} \left\| w_i \odot \left( f_i - c_{y_i} \right) \right\|_2^2}{\sigma_c^2},$$

where $M$ represents the number of images in the training batch, $f_i$ is the deep feature vector obtained by applying average pooling to $x'_i$ of the $i$-th sample from the $y_i$-th class, $c_{y_i}$ is the corresponding $y_i$-th class center, $w_i$ represents the weight of the $i$-th deep feature in the embedding space, $\odot$ denotes element-wise multiplication, and $\sigma_c$ represents the standard deviation among the class centers, which is used to control the scaling of inter-class distances.

We use a multi-head attention network to obtain the weights for calculating the sparse affinity loss. This is the same multi-head attention network used in the ADN module, which is described in the next section. The attention weights for the sparse affinity loss are computed by averaging the outputs of the channel attention units:

$$w_i = \frac{1}{K} \sum_{k=1}^{K} a_i^{k},$$

where $K$ represents the number of attention heads in the network, and $a_i^{k}$ is the channel attention weight vector for $x'_i$ learned by the $k$-th attention head of the multi-head attention network.
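The sparse affinity loss and the attention-weight averaging described above can be illustrated with a minimal pure-Python sketch. It assumes that $\sigma_c^2$ is computed as the variance of the class centers across classes, summed over feature dimensions, which is one plausible reading of "standard deviation among the class centers". The function names are hypothetical, and a real implementation would operate on batched tensors with learnable centers.

```python
def average_channel_weights(head_weights):
    """w_i = (1/K) * sum_k a_i^k: average the K channel-attention vectors of one sample."""
    K = len(head_weights)
    dim = len(head_weights[0])
    return [sum(a[d] for a in head_weights) / K for d in range(dim)]


def center_variance(centers):
    """sigma_c^2 (assumed form): per-dimension variance of the class centers, summed over dimensions."""
    n_cls, dim = len(centers), len(centers[0])
    total = 0.0
    for d in range(dim):
        mean_d = sum(c[d] for c in centers) / n_cls
        total += sum((c[d] - mean_d) ** 2 for c in centers) / n_cls
    return total


def sparse_affinity_loss(features, labels, centers, head_weights):
    """
    features:     M pooled feature vectors f_i
    labels:       M class indices y_i
    centers:      N_c class centers c_j
    head_weights: for each sample, K channel-attention vectors a_i^k
    """
    numerator = 0.0
    for f, y, heads in zip(features, labels, head_weights):
        w = average_channel_weights(heads)
        c = centers[y]
        # Attention-weighted squared distance to the sample's own class center.
        numerator += sum((wd * (fd - cd)) ** 2 for wd, fd, cd in zip(w, f, c))
    # Dividing by the spread of the centers rewards pushing the centers apart.
    return numerator / center_variance(centers)


centers = [[0.0, 0.0], [2.0, 2.0]]
loss = sparse_affinity_loss(
    features=[[1.0, 0.0]],
    labels=[0],
    centers=centers,
    head_weights=[[[1.0, 0.0], [1.0, 0.0]]],  # K = 2 heads, both give zero weight to dimension 2
)
print(loss)  # 0.5
```

Because both heads assign zero weight to the second dimension, any deviation in that element (treated here as redundant or noisy) does not contribute to the loss; this is the "sparse" filtering effect that distinguishes the loss from the plain affinity loss.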

3.2. Attention Distraction Network (ADN)

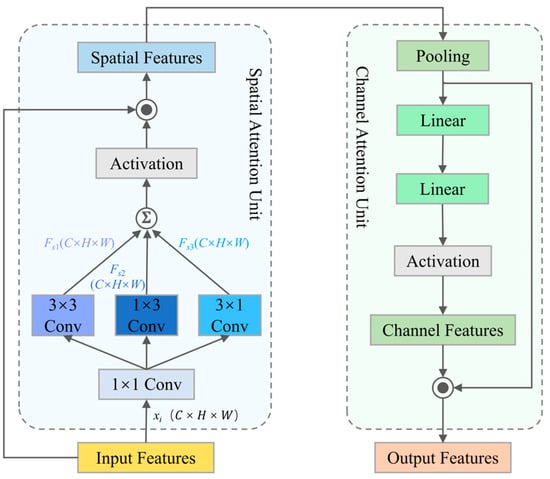

Integrating attention mechanisms into deep learning models enables them to focus more precisely on the essential parts of the input data, which can enhance the overall performance of FER. Therefore, an ADN is constructed in MAADS to simultaneously capture the features of diverse discriminative local regions of facial images. ADN first uses a multi-head attention network [7] composed of multiple parallel attention heads to strengthen the model's ability to extract complex feature interactions, and then employs the diversity loss to enhance the diversity of the feature representation spaces generated by the multi-head attention network, as depicted in Figure 1. In ADN, each head of the multi-head attention network comprises a spatial attention unit and a channel attention unit, as illustrated in Figure 2. In our implementation, we use padding so that the output feature maps (Fs1, Fs2, Fs3) of the convolutions with kernel sizes of 3 × 3, 1 × 3, and 3 × 1 have the same spatial dimensions and can therefore be summed. In Figure 2, C × H × W denotes Channel × Height × Width.
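Because the three convolution branches of the spatial attention unit are summed, their outputs must share the same spatial size. The sketch below is an illustration, not the authors' code: it applies the standard convolution output-size formula, out = (in + 2p − k) / s + 1, to show which per-axis padding keeps the feature map size unchanged for 3 × 3, 1 × 3, and 3 × 1 kernels. The 7 × 7 input size is an assumption (it is what ResNet-18's last stage produces for a 224 × 224 input).

```python
def conv_output_size(size, kernel, padding, stride=1):
    """Output length of a convolution along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


def same_padding(kernel):
    """Padding that preserves the size for an odd kernel at stride 1."""
    return (kernel - 1) // 2


def branch_output_shape(h, w, kernel_hw, pad=True):
    """(height, width) after one branch, with or without size-preserving padding."""
    kh, kw = kernel_hw
    ph, pw = (same_padding(kh), same_padding(kw)) if pad else (0, 0)
    return conv_output_size(h, kh, ph), conv_output_size(w, kw, pw)


H, W = 7, 7  # assumed spatial size of the backbone feature map
kernels = [(3, 3), (1, 3), (3, 1)]
padded = {k: branch_output_shape(H, W, k) for k in kernels}
unpadded = {k: branch_output_shape(H, W, k, pad=False) for k in kernels}
print(padded)    # {(3, 3): (7, 7), (1, 3): (7, 7), (3, 1): (7, 7)}
print(unpadded)  # {(3, 3): (5, 5), (1, 3): (7, 5), (3, 1): (5, 7)}
```

With padding, all three branch outputs are 7 × 7 and can be summed element-wise; without it, the shapes disagree. In PyTorch, the corresponding `torch.nn.Conv2d` arguments would be `padding=(1, 1)`, `padding=(0, 1)`, and `padding=(1, 0)`.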

Figure 2.

The structure of the attention head.

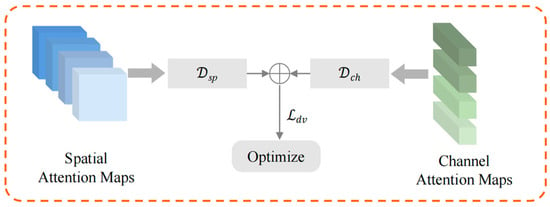

Diversity Loss: Increasing diversity across various network layers can significantly boost the generalization performance of neural networks [36,37]. In deep learning, the diversity of feature representations plays a crucial role in extracting both discriminative and non-redundant features, thereby enhancing the model’s generalization ability for unseen data in visual perception tasks. To guide multiple parallel attention heads to learn differentiated feature representation spaces, we employ the diversity loss [37] to maximize diversity in both the spatial and channel dimensions of multi-head attention networks, as shown in Figure 3.

Figure 3.

Illustration of diversity loss.

In the multi-head attention network, there are $K$ attention heads in total, each comprising a spatial attention unit and a channel attention unit, so $K$ spatial attention maps are derived from the $K$ attention heads. Suppose that $s^{k}$ and $s^{t}$ represent the spatial attention maps learned by the $k$-th and $t$-th attention heads, respectively, where $k, t \in \{1, 2, \ldots, K\}$. The average similarity between any two spatial attention maps $s^{k}$ and $s^{t}$ is computed by a radial basis function:

$$S^{s}_{kt} = \frac{1}{N} \sum_{j=1}^{N} \exp\left( -\gamma \left\| s_j^{k} - s_j^{t} \right\|_2^2 \right), \tag{5}$$

where $N$ represents the number of feature maps, $\gamma$ is a hyperparameter, and $s_j^{t}$ and $s_j^{k}$ denote the spatial attention maps of dimensions $1 \times H \times W$ learned by the $t$-th and $k$-th attention heads for the input feature $x'_j$, respectively.

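Similarly, $K$ channel attention maps of size $C \times 1 \times 1$ are derived from the $K$ attention heads. The average similarity between any two channel attention maps $a^{k}$ and $a^{t}$ is computed by a radial basis function:

$$S^{c}_{kt} = \frac{1}{N} \sum_{j=1}^{N} \exp\left( -\gamma \left\| a_j^{k} - a_j^{t} \right\|_2^2 \right), \tag{6}$$

where $a_j^{k}$ and $a_j^{t}$ denote the channel attention maps learned by the $k$-th and $t$-th attention heads for the input feature $x'_j$, and $N$ and $\gamma$ are as in Equation (5).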

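The values computed by the radial basis function measure the similarity of the spatial attention maps along the spatial dimensions and of the channel attention maps along the channel dimension. Higher similarity between attention maps indicates lower diversity in the attention network. Consequently, the diversity is quantified by the determinant of the pairwise similarity matrix:

$$D_s = \det\left( \mathbf{S}^{s} \right), \qquad D_c = \det\left( \mathbf{S}^{c} \right),$$

where $\mathbf{S}^{s} \in \mathbb{R}^{K \times K}$ is the matrix whose elements are obtained by Equation (5), and $\mathbf{S}^{c} \in \mathbb{R}^{K \times K}$ is the matrix whose elements are obtained by Equation (6). Because every diagonal element equals 1 and the RBF similarity matrix is positive semi-definite, its determinant lies in $[0, 1]$: it approaches 1 when the maps are mutually dissimilar and drops to 0 when two maps become identical.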

The multi-head attention network, composed of multiple spatial and channel attention units, generates various spatial and channel attention maps. To enhance model robustness by reducing redundancy among these maps, the diversity loss is defined as follows:

$$\mathcal{L}_{div} = D_s + D_c,$$

where $D_s = \det(\mathbf{S}^{s})$ is the feature diversity calculated along the spatial dimensions and $D_c = \det(\mathbf{S}^{c})$ is the feature diversity calculated along the channel dimension.
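To make the diversity measure concrete, the following sketch builds the K × K RBF similarity matrix for toy attention maps and takes its determinant. It assumes flattened maps, an RBF of the form exp(−γ‖u − v‖²), averaging over samples, and a diversity loss equal to the sum of the spatial and channel determinants; these choices and all function names are illustrative assumptions rather than the published implementation.

```python
import math


def rbf(u, v, gamma):
    """Radial basis function similarity exp(-gamma * ||u - v||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(u, v)))


def similarity_matrix(maps_per_sample, gamma):
    """
    maps_per_sample: for each of N samples, K flattened attention maps (one per head).
    Returns the K x K matrix whose (k, t) entry is the RBF similarity averaged over samples.
    """
    n = len(maps_per_sample)
    K = len(maps_per_sample[0])
    S = [[0.0] * K for _ in range(K)]
    for maps in maps_per_sample:
        for k in range(K):
            for t in range(K):
                S[k][t] += rbf(maps[k], maps[t], gamma) / n
    return S


def determinant(matrix):
    """Determinant via Gaussian elimination with partial pivoting."""
    A = [row[:] for row in matrix]
    n = len(A)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-15:
            return 0.0  # singular: at least two heads are (numerically) redundant
        if pivot != col:
            A[col], A[pivot] = A[pivot], A[col]
            det = -det
        det *= A[col][col]
        for r in range(col + 1, n):
            factor = A[r][col] / A[col][col]
            for c in range(col, n):
                A[r][c] -= factor * A[col][c]
    return det


def diversity_loss(spatial_maps, channel_maps, gamma=1.0):
    """Assumed form: L_div = det(S_s) + det(S_c); larger means more diverse heads."""
    d_s = determinant(similarity_matrix(spatial_maps, gamma))
    d_c = determinant(similarity_matrix(channel_maps, gamma))
    return d_s + d_c


# Toy example: K = 2 heads, N = 1 sample, flattened 2-element maps.
distinct = [[[1.0, 0.0], [0.0, 1.0]]]
identical = [[[1.0, 0.0], [1.0, 0.0]]]
print(determinant(similarity_matrix(distinct, gamma=1.0)))   # about 0.9817 (= 1 - e^-4)
print(determinant(similarity_matrix(identical, gamma=1.0)))  # 0.0
```

Two heads that attend to different positions give a determinant close to 1, while two heads that produce the same map collapse it to 0, which is why the objective subtracts this quantity (i.e., maximizes it).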

3.3. Shared Fusion Network (SFN)

To further boost the discriminability of the features and simplify the process of feature learning, we utilize SFN to further process the features extracted by ADN. SFN employs the shared generic feature mechanism [38] to integrate features from ADN and utilizes an exponential moving average (EMA) to update the generic features with the data from the current batch, which culminates in the final facial expression features.

Shared Generic Feature Mechanism: Research has shown that facial expression recognition can be achieved by comparing a specific expression with its reference expression (i.e., a neutral expression) [39,40]. Unlike general classification tasks, in which images of different classes can differ substantially, FER extracts features only from facial images. Because faces share a similar structure, the features extracted from images of different facial expressions often have certain similarities. Inspired by previous works [38,41,42], we divide facial expression features into two parts: a generic part and a unique part. The generic part encompasses the features common to different facial expressions, while the unique part captures the distinctive characteristics of specific expression classes. Thus, facial expression features can be conceptualized as

$$F_b = G_b + U_b,$$

where $G_b$ represents the generic feature representation of the current batch $b$, and $U_b$ represents the unique feature representation of the current batch.

Given a batch of expression images, the initial generic feature representation is obtained by calculating the average of all expression features $F_i$:

$$\bar{F}_b = \frac{1}{B} \sum_{i=1}^{B} F_i, \qquad G_0 = \bar{F}_0,$$

where $\bar{F}_0$ represents the average feature of the initial batch, $\bar{F}_b$ represents the average feature of the current batch, and $B$ represents the number of samples in the current batch.

Subsequently, the generic feature is updated with the average feature of each new batch by using EMA:

$$G_b = \alpha \bar{F}_b + (1 - \alpha)\, G_{b-1},$$

where $G_{b-1}$ denotes the generic (running average) feature up to the previous batch, $G_b$ denotes the generic feature of the current batch, and $\alpha \in (0, 1)$ is a constant smoothing factor.
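A minimal sketch of the generic-feature bookkeeping follows, assuming per-sample features are plain lists, the first batch initializes the generic feature with its mean, and each later batch updates it as α · (batch mean) + (1 − α) · (previous generic feature). The class name `GenericFeatureBank` is hypothetical, and the helper that computes the unique part as F − G only restates F = G + U; the text does not specify how the unique part is parameterized inside SFN.

```python
def batch_mean(features):
    """Mean feature of a batch: (1/B) * sum_i F_i."""
    B, dim = len(features), len(features[0])
    return [sum(f[d] for f in features) / B for d in range(dim)]


class GenericFeatureBank:
    """Hypothetical helper that tracks the generic feature G with an EMA."""

    def __init__(self, alpha):
        if not 0.0 < alpha < 1.0:
            raise ValueError("alpha must lie strictly between 0 and 1")
        self.alpha = alpha
        self.generic = None  # G, set by the first batch

    def update(self, features):
        mean_b = batch_mean(features)
        if self.generic is None:
            self.generic = mean_b  # G_0: mean of the initial batch
        else:
            a = self.alpha
            # G_b = alpha * mean_b + (1 - alpha) * G_{b-1}
            self.generic = [a * m + (1 - a) * g for m, g in zip(mean_b, self.generic)]
        return self.generic

    def unique_parts(self, features):
        """U_i = F_i - G, the residual implied by F = G + U."""
        return [[fd - gd for fd, gd in zip(f, self.generic)] for f in features]


bank = GenericFeatureBank(alpha=0.1)
bank.update([[1.0, 2.0], [3.0, 4.0]])  # G_0 = [2.0, 3.0]
batch = [[4.0, 5.0], [6.0, 7.0]]
g = bank.update(batch)                 # approximately [2.3, 3.3]
u = bank.unique_parts(batch)           # approximately [[1.7, 1.7], [3.7, 3.7]]
```

A small α makes the generic feature change slowly across batches, so it behaves like a stable "reference face" against which each sample's residual is measured.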

Learning generic features for similar facial expressions simplifies the feature-learning process. This approach enables the network to concentrate on learning the differences based on generic features rather than reconstructing entire facial expressions from scratch. In other words, this mechanism allows the parts that are similar across different facial expressions to be shared; thus, the network only needs to focus on learning the differences between the different facial expressions.

3.4. Joint Loss Function

MAADS is trained end to end. To optimize MAADS effectively, a joint optimization strategy is adopted. The overall loss function is

$$\mathcal{L} = \mathcal{L}_{cls} + \lambda_1 \mathcal{L}_{saf} + \lambda_2 \mathcal{L}_{pt} - \mathcal{L}_{div}, \tag{11}$$

where $\mathcal{L}_{cls}$ is the cross-entropy loss for classification, $\mathcal{L}_{saf}$ is the sparse affinity loss, $\mathcal{L}_{pt}$ is the partition loss [7], and $\mathcal{L}_{div}$ is the diversity loss. In Equation (11), a larger diversity loss $\mathcal{L}_{div}$ is better, whereas smaller values of the other losses ($\mathcal{L}_{cls}$, $\mathcal{L}_{saf}$, and $\mathcal{L}_{pt}$) are better. Therefore, the diversity loss enters with a minus sign, so that minimizing $\mathcal{L}$ during training simultaneously minimizes the other losses and maximizes diversity. Consistent with the settings in Ref. [7], $\lambda_1$ and $\lambda_2$ are empirically set to 1.0. The partition loss [7] is defined as follows:

$$\mathcal{L}_{pt} = \frac{1}{MC} \sum_{i=1}^{M} \sum_{j=1}^{C} \log\left( 1 + \frac{K}{\sigma_{ij}^2} \right),$$

where $M$ is the number of samples in the training batch, $C$ is the dimension of the feature vectors, $\sigma_{ij}^2$ is the variance of the $j$-th element of the $i$-th sample's feature vectors across the attention heads, and $K$ is the number of attention heads.
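The partition loss and the joint objective can be sketched as follows. This is a hedged illustration in plain Python: it uses the population variance across heads and adds a small `eps` (an assumption not stated in the text) to avoid division by zero when all heads produce identical values. The function names are hypothetical.

```python
import math


def partition_loss(head_features, eps=1e-12):
    """
    head_features: for each of M samples, K feature vectors (one per head) of length C.
    Returns (1 / (M * C)) * sum_i sum_j log(1 + K / sigma_ij^2), where sigma_ij^2 is the
    variance of element j across the K heads for sample i (population variance assumed).
    """
    M = len(head_features)
    K = len(head_features[0])
    C = len(head_features[0][0])
    total = 0.0
    for heads in head_features:
        for j in range(C):
            values = [h[j] for h in heads]
            mean = sum(values) / K
            var = sum((v - mean) ** 2 for v in values) / K
            total += math.log(1 + K / (var + eps))  # eps is an assumed safeguard
    return total / (M * C)


def total_loss(l_cls, l_saf, l_pt, l_div, lambda1=1.0, lambda2=1.0):
    """L = L_cls + lambda1 * L_saf + lambda2 * L_pt - L_div."""
    return l_cls + lambda1 * l_saf + lambda2 * l_pt - l_div


spread = partition_loss([[[0.0], [2.0]]])     # K = 2 heads disagree: log(1 + 2/1) = log 3
collapsed = partition_loss([[[1.0], [1.0]]])  # heads identical: very large penalty
print(spread < collapsed)                     # True
print(total_loss(1.0, 0.5, 0.25, 0.75))       # 1.0
```

The partition loss shrinks as the heads' outputs spread apart, so it pushes in the same direction as the diversity term, while the minus sign in the joint loss turns "maximize diversity" into a quantity that gradient descent can minimize.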

4. Experiment

To assess the effectiveness of the proposed method, extensive experiments were conducted on four in-the-wild static facial expression datasets: RAF-DB, AffectNet, FERPlus, and SFEW.

4.1. Datasets

RAF-DB [29] comprises 29,672 facial expression images collected from the Internet and consists of two subsets: a basic expression subset and a compound expression subset. The basic expression subset covers seven basic emotions, while the compound expression subset includes twelve more complex expression types. The basic expression subset contains 15,339 images, divided into 12,271 training images and 3068 test images.

AffectNet [43] is the largest static facial expression database to date. Its authors collected over one million facial images by querying three different search engines with expression-related keywords. Studies on AffectNet use either seven emotion categories (AffectNet-7) or eight emotion categories (AffectNet-8). AffectNet-7 consists of 287,401 images, of which 283,901 are used for training and 3500 for testing. AffectNet-8 adds a contempt category to AffectNet-7 and contains 291,568 images in total, of which 287,568 are used for training and 4000 for testing.

FERPlus [44] is an enhanced version of FER2013 and comprises 28,709 training samples, 3589 validation samples, and 3589 test samples. Because FER2013 contains many labeling errors, its images were re-annotated to produce FERPlus, which adds a contempt category to the seven existing categories.

SFEW [45] was derived from key frames of the AFEW database [46]. AFEW consists of movie clips with spontaneous expressions, varied head poses, occlusions, and different lighting conditions [47]. SFEW includes seven basic expression classes and 1766 images in total, divided into 958 training, 436 validation, and 372 test samples. The labels of the training and validation sets are public, whereas those of the test set are not; thus, we used the validation set as the test set in this study.

4.2. Implementation Details

We carried out experiments on an NVIDIA RTX 3080 Ti (12 GB) GPU using the PyTorch framework. For the RAF-DB, AffectNet, and FERPlus datasets, we used the officially provided aligned facial images. For the SFEW dataset, RetinaFace [48] was employed to detect and align faces. All input images were resized to 224 × 224 pixels. To reduce overfitting, we applied image augmentation methods such as horizontal flipping, random rotation, and random erasing during training. The backbone network (ResNet-18 [34]) was pre-trained on the MS-Celeb-1M database [49]. MAADS was trained for 80 epochs on all datasets. We used a batch size of 256 for RAF-DB, AffectNet, and FERPlus and a batch size of 16 for the smaller SFEW dataset. For optimization, following the settings in Ref. [7], stochastic gradient descent (SGD) with an initial learning rate of 0.1 was used for RAF-DB, FERPlus, and SFEW. For AffectNet, the Adam optimizer was used with an initial learning rate of 0.0001, together with an imbalanced sampling strategy to address the severe class imbalance. The hyperparameters in the total loss function were empirically set to 1.
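The per-dataset settings above can be collected into a small configuration object, which makes the differences between datasets easy to see at a glance. This is a hypothetical structure (the class and function names are not from the paper); only the values are taken from the text, and the concrete imbalanced sampler used for AffectNet is not specified there.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    optimizer: str
    learning_rate: float
    batch_size: int
    imbalanced_sampling: bool = False
    epochs: int = 80
    input_size: tuple = (224, 224)
    backbone: str = "ResNet-18 (pre-trained on MS-Celeb-1M)"


def training_config(dataset: str) -> TrainConfig:
    """Per-dataset settings as reported in the text; the structure itself is hypothetical."""
    if dataset in ("RAF-DB", "FERPlus"):
        return TrainConfig("SGD", 0.1, 256)
    if dataset == "SFEW":
        return TrainConfig("SGD", 0.1, 16)
    if dataset in ("AffectNet-7", "AffectNet-8"):
        # The text does not specify which imbalanced sampler is used.
        return TrainConfig("Adam", 1e-4, 256, imbalanced_sampling=True)
    raise ValueError(f"unknown dataset: {dataset}")


for name in ("RAF-DB", "AffectNet-8", "FERPlus", "SFEW"):
    cfg = training_config(name)
    print(name, cfg.optimizer, cfg.learning_rate, cfg.batch_size)
```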

4.3. Comparison with State-of-the-Art Methods

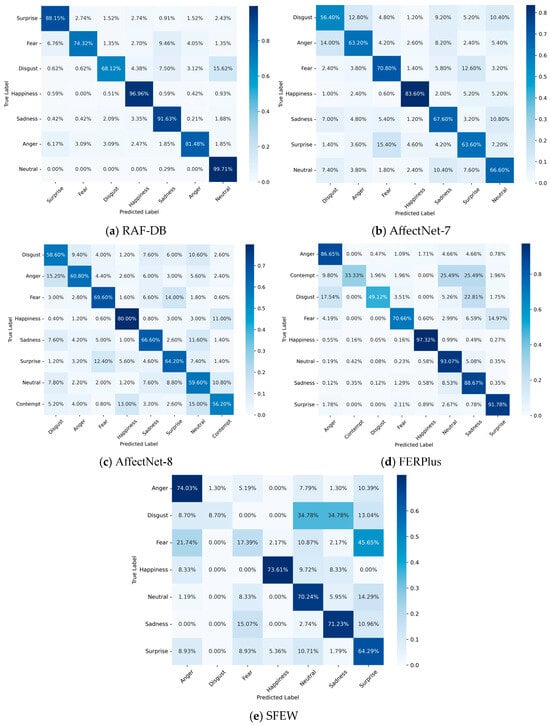

We compare MAADS with a series of state-of-the-art models on five in-the-wild datasets: RAF-DB, AffectNet-7, AffectNet-8, FERPlus, and SFEW. The comparison results (recognition accuracy) are summarized in Table 1, where “-” indicates that the authors did not report results on that dataset. Additionally, Figure 4 shows the confusion matrices of MAADS on each dataset. Next, we analyze the results in Table 1 and Figure 4 for each dataset individually.

Table 1.

Comparison with state-of-the-art methods on different datasets (%).

Figure 4.

Confusion matrices of the different datasets.

Results on RAF-DB: As Table 1 shows, MAADS outperforms other current advanced models on the RAF-DB dataset. In particular, MAADS achieves 1.37 percentage points higher accuracy than the state-of-the-art LA-Net. Notably, MAADS uses ResNet-18 as its backbone, which is lighter than the ResNet-34, ResNet-50, and IR-50 backbones used by other models. Moreover, MAADS does not use Transformer-based architectures or rely on auxiliary information such as facial landmarks, so it maintains lower computational complexity. The confusion matrix of MAADS on RAF-DB is presented in Figure 4a. Whereas most FER models achieve their highest accuracy on happiness on RAF-DB, MAADS achieves its highest accuracy on neutral expressions. We attribute this result to the shared generic feature mechanism, which captures the features shared among different expressions, particularly their neutral aspects. MAADS exhibits relatively lower accuracy for negative expressions such as sadness, anger, fear, and disgust, in line with the trend seen in most recognition models. This likely reflects the class imbalance inherent in in-the-wild datasets and the difficulty of recognizing negative expressions. Overall, MAADS achieves high accuracy across all categories on RAF-DB.

Results on AffectNet-7: AffectNet is available in two versions, one with seven classes and one with eight. This paragraph focuses on the AffectNet-7 results in Table 1. MAADS achieves a recognition accuracy of 67.14% on AffectNet-7, slightly exceeding the state-of-the-art LA-Net by 0.05 percentage points. Figure 4b shows the confusion matrix of MAADS on AffectNet-7. The accuracies for neutral, sadness, surprise, and anger are similar, ranging from 63.20% to 67.60%. MAADS recognizes happiness most accurately and disgust least accurately.

Results on AffectNet-8: AffectNet-8 adds one expression (contempt) to the seven classes of AffectNet-7. As shown in Table 1, MAADS achieves a recognition accuracy of 64.55% on AffectNet-8, which is 0.01 percentage points higher than the state-of-the-art LA-Net. Figure 4c shows the confusion matrix of MAADS on AffectNet-8. The accuracies for disgust, neutral, and anger are similar, ranging from 58.60% to 60.80%. MAADS achieves its highest accuracy on happiness and its lowest on the newly added contempt category. Achieving high accuracy on contempt in AffectNet-8 remains a challenge for facial expression recognition models in general.

Results on FERPlus: Like AffectNet-8, FERPlus comprises eight classes. As shown in Table 1, MAADS is slightly less accurate than LA-Net, which is built on a ResNet-50 backbone. However, MAADS uses ResNet-18 as its backbone, and compared with the ResNet-18 version of LA-Net, MAADS is 0.21 percentage points more accurate. This indicates that MAADS outperforms LA-Net under the same backbone. We chose ResNet-18 as the backbone of MAADS to keep the parameter count, and thus the computational complexity, low. Figure 4d shows the confusion matrix of MAADS on FERPlus. MAADS does not recognize contempt well, which we attribute mainly to the high similarity between contempt and neutral expressions in FERPlus. Despite its lower accuracy on contempt, disgust, and fear, MAADS performs well on the other categories.

Results on SFEW: The SFEW dataset is derived from movie clips recorded in varied environments [47], so results on this dataset are a useful test of the robustness of MAADS. As shown in Table 1, MAADS surpasses the state-of-the-art LA-Net by 0.23 percentage points on SFEW. Figure 4e shows the confusion matrix of MAADS on SFEW. The highest accuracy is achieved for anger and the lowest for disgust. Because SFEW is small and highly imbalanced (for example, its training set contains only 54 disgust images and 81 fear images), lower accuracy on these categories is to be expected.

In summary, MAADS generally outperforms the compared methods on the experimental datasets. We attribute this to its FDN, ADN, and SFN modules: the FDN module makes the network emphasize the most salient feature components, the ADN module directs the network to attend to distinct, representative local regions, and the SFN module improves the network’s ability to discriminate among expression features.

4.4. Visualization Analysis

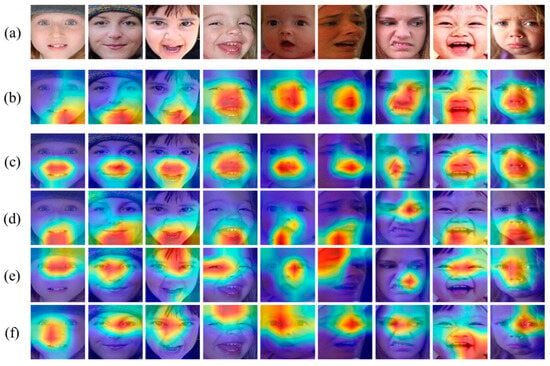

Figure 5 illustrates the attention maps produced by each attention head in MAADS. Figure 5a shows the original input image, Figure 5b shows the attention map learned by an attention network with a single head, and Figure 5c–f show the attention maps learned by the four heads of the multi-head attention network. Comparing Figure 5b with Figure 5c–f reveals how the regions attended by single- and multi-head attention differ. The single head typically focuses on a small core area and may overlook other critical regions, whereas the multi-head network attends to several distinct facial areas. Multiple parallel heads therefore capture regions of interest more accurately and comprehensively, which allows the model to learn more diverse facial expression features and improves classification performance. Some heads may attend to similar facial areas when recognizing different expressions; however, each head also concentrates on regions of its own, and these unique regions provide complementary information for facial expression recognition.

Figure 5.

Attention maps visualized using Grad-CAM++ [61]. (a) Original image, (b) attention map obtained with a single attention head, (c–f) attention maps obtained with the four heads of the multi-head attention network.
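Grad-CAM++ [61] weights each channel of a convolutional feature map by an α-weighted sum of the positive gradients of the class score. The NumPy sketch below implements the closed-form weights from that paper, assuming the activations and gradients of the target layer have already been extracted (for example, with framework hooks). Which layer of MAADS was visualized, and how the map was upsampled onto the face image, are not stated here, so both are left out; the function name is hypothetical.

```python
import numpy as np

def grad_cam_pp(activations, gradients, eps=1e-8):
    """Grad-CAM++ saliency map for one image and one target class.

    activations: (K, H, W) feature maps A^k of the target layer.
    gradients:   (K, H, W) gradients dY^c / dA^k of the class score.
    Uses the closed-form alpha of Grad-CAM++, which assumes the class
    score is passed through an exponential.
    """
    g2 = gradients ** 2
    g3 = gradients ** 3
    # Spatial sum of each activation map, shape (K, 1, 1)
    sum_a = activations.sum(axis=(1, 2), keepdims=True)
    alpha = g2 / (2.0 * g2 + sum_a * g3 + eps)
    # Positions with zero gradient contribute nothing
    alpha = np.where(gradients != 0, alpha, 0.0)
    # Channel weights: alpha-weighted sum of the positive gradients
    weights = (alpha * np.maximum(gradients, 0.0)).sum(axis=(1, 2))
    # Weighted combination of feature maps, keeping only positive evidence
    cam = np.maximum((weights[:, None, None] * activations).sum(axis=0), 0.0)
    # Scale to [0, 1] for display
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

For a (K, H, W) feature map the result is an (H, W) map in [0, 1], which would then be resized to the input resolution and overlaid on the face.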

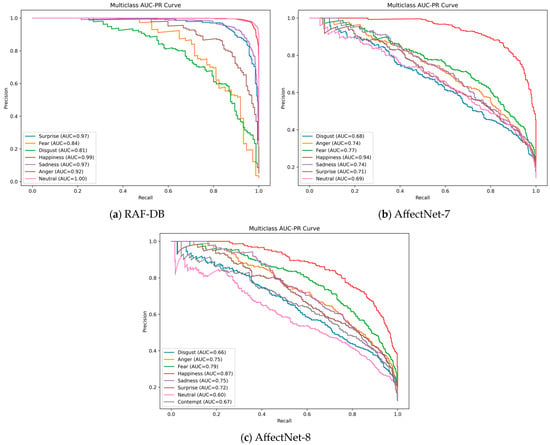

4.5. Precision–Recall Analysis

Figure 6 presents the per-class precision–recall curves of MAADS on RAF-DB, AffectNet-7, and AffectNet-8, with recall on the x-axis and precision on the y-axis. As shown in Figure 6a, MAADS recognizes most expressions on RAF-DB well, especially neutral and happiness, while its performance on fear and disgust is weaker. This is likely due to the subtle features of these expressions and the small number of such samples in RAF-DB. For every category, MAADS attains more than 80% precision at a recall of about 50%: at an operating point where the model retrieves half of the true samples of a class, more than 80% of its predictions for that class are correct, which indicates robust recognition performance. Figure 6b,c present the precision–recall curves on AffectNet-7 and AffectNet-8. On both datasets, happiness has the largest area under the precision–recall curve (AUC). On AffectNet-8, the newly introduced contempt class reaches an AUC of 0.67.
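Per-class curves like those in Figure 6 follow the one-vs-rest convention: each class’s softmax score is ranked against a binary “is this class” label. The plain-NumPy sketch below traces such a curve, computes a step-wise area under it (average precision), and reads off the best precision at a recall of at least 50%. The paper does not state whether its AUC uses this step rule or trapezoidal interpolation, so treat the numbers as illustrative; the function names are hypothetical, and tied scores are not merged, a simplification relative to library implementations.

```python
import numpy as np

def pr_curve(y_true, scores):
    """One-vs-rest precision/recall at every rank cut-off (ties not merged)."""
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    y = np.asarray(y_true, dtype=np.int64)[order]
    tp = np.cumsum(y)           # true positives among the top-k predictions
    fp = np.cumsum(1 - y)       # false positives among the top-k predictions
    precision = tp / (tp + fp)  # tp + fp = k >= 1, so no division by zero
    recall = tp / max(int(y.sum()), 1)
    return precision, recall

def average_precision(precision, recall):
    """Step-wise area under the PR curve: sum of (delta recall) * precision."""
    prev_recall = np.concatenate(([0.0], recall[:-1]))
    return float(np.sum((recall - prev_recall) * precision))

def precision_at_recall(precision, recall, target=0.5):
    """Best precision among operating points whose recall reaches `target`."""
    mask = recall >= target
    return float(precision[mask].max()) if mask.any() else 0.0

def per_class_pr(labels, probs, target_recall=0.5):
    """labels: (N,) integer class ids; probs: (N, C) softmax scores."""
    labels = np.asarray(labels)
    results = {}
    for c in range(probs.shape[1]):
        p, r = pr_curve(labels == c, probs[:, c])
        results[c] = (average_precision(p, r),
                      precision_at_recall(p, r, target_recall))
    return results
```

`per_class_pr` returns, for each class, the area under its curve and its precision at 50% recall, the two quantities discussed in the paragraph above.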

Figure 6.

Precision–recall curves for each class on RAF-DB, AffectNet-7, and AffectNet-8.

4.6. Ablation Experiment

Influence of Each Module: To validate the effectiveness of the sparse affinity loss, diversity loss, and shared generic feature mechanism in MAADS, we conduct ablation experiments on different datasets. Table 2 lists the results (recognition accuracy), where SAL denotes the sparse affinity loss, DL the diversity loss, and SGFM the shared generic feature mechanism. Compared with the baseline model DAN [7] (first row of Table 2), each module improves facial expression recognition performance. The sparse affinity loss and the diversity loss have comparable effects, while the shared generic feature mechanism has a larger impact. Notably, the sparse affinity loss and diversity loss improve performance with minimal additional complexity. Introducing any two modules into the baseline yields further gains, and the best results are obtained when all three modules are used together, which confirms the effectiveness of each module and shows that their contributions are complementary.

Table 2.

Evaluation for each module of MAADS on different databases (%).

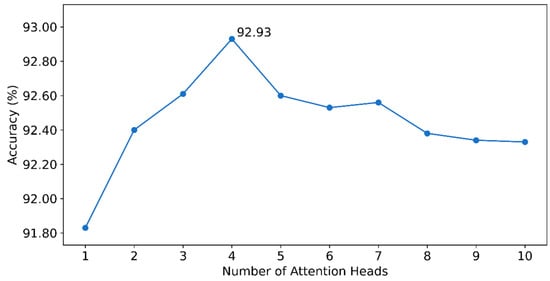

Influence of the Number of Attention Heads: To investigate the impact of the number of attention heads, we conduct a series of ablation experiments on RAF-DB. Figure 7 shows how accuracy varies with the number of heads. Accuracy is lowest with a single head, indicating that one head is insufficient to capture all the necessary facial expression features. Accuracy increases with the number of heads and peaks at four, suggesting that multiple heads capture facial expression features more effectively by extracting them from different perspectives. Beyond four heads, accuracy declines, likely because the additional heads introduce redundant information. The decline becomes more gradual beyond five heads, which indicates that MAADS tolerates larger numbers of heads. Overall, MAADS has an optimal number of attention heads, and moving away from it in either direction degrades performance. We therefore use four attention heads in MAADS.

Figure 7.

The impact of the number of attention heads on the RAF-DB dataset.

4.7. Computational Complexity

Parameters and floating-point operations (FLOPs) are key metrics of model complexity. Table 3 compares MAADS with other advanced methods in terms of parameters, FLOPs, and recognition accuracy (Acc) on RAF-DB. MAADS has 19.72 M parameters and 3.01 G FLOPs. Compared with the baseline model DAN [7], MAADS adds no parameters but requires an additional 0.78 G FLOPs, while achieving 3.23 percentage points higher accuracy on RAF-DB. Compared with the other advanced models, MAADS requires fewer FLOPs. For example, MA-Net and AMP-Net are both attention-based models that focus on local facial regions; MA-Net has 50.54 M parameters and 3.65 G FLOPs, and AMP-Net has 105.67 M parameters and 4.73 G FLOPs. With fewer parameters and FLOPs than either, MAADS achieves higher accuracy on RAF-DB than both, which shows that MAADS is a relatively lightweight model with good recognition performance.
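Parameter and FLOP counts such as those in Table 3 are obtained by summing per-layer costs. The sketch below shows the standard counts for a convolution and a fully connected layer; the function names are hypothetical. Many vision papers report multiply-accumulates (MACs) under the label “FLOPs”, while a strict FLOP count is about twice the MAC count; the profiling tool and convention behind Table 3 are not stated, so figures from different sources should only be compared under the same convention.

```python
def conv2d_cost(c_in, c_out, k, h_out, w_out, bias=False, groups=1):
    """Return (parameters, multiply-accumulates) of one k x k 2-D convolution."""
    weights = c_out * (c_in // groups) * k * k
    params = weights + (c_out if bias else 0)
    # Each output position of each output channel needs (c_in / groups) * k * k MACs
    macs = weights * h_out * w_out
    return params, macs

def linear_cost(d_in, d_out, bias=True):
    """Return (parameters, multiply-accumulates) of one fully connected layer."""
    params = d_in * d_out + (d_out if bias else 0)
    macs = d_in * d_out
    return params, macs

if __name__ == "__main__":
    # 3x3, 64 -> 64 channels on a 56x56 map, as in the first stage of ResNet-18
    params, macs = conv2d_cost(64, 64, 3, 56, 56)
    print(f"params={params:,}  MACs={macs / 1e9:.3f} G  FLOPs~{2 * macs / 1e9:.3f} G")
```

For the example layer this gives 36,864 parameters and about 0.116 G MACs; summing such terms over every layer yields a model-level total.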

Table 3.

Comparison of computational complexity with other state-of-the-art models.

Table 4 shows how FLOPs and recognition accuracy (Acc) on RAF-DB change as our contributions (SAL, DL, and SGFM) are introduced sequentially into the baseline model [7]. Accuracy improves with each addition and finally exceeds the baseline by about 5%, while FLOPs increase by only 0.78 G. Our improvements therefore raise recognition accuracy substantially at a relatively small computational cost.

Table 4.

FLOPs vs. recognition accuracy.

5. Conclusions

In this paper, we propose MAADS, a facial expression recognition method based on a multi-head attention mechanism that comprises a Feature Discrimination Network (FDN), an Attention Distraction Network (ADN), and a Shared Fusion Network (SFN). First, we design a sparse affinity loss, which incorporates attention weights into the discriminative loss to encode the most important information in deep feature vectors, thereby reducing the model’s attention to irrelevant information and improving its generalizability. Second, we introduce a diversity loss, which minimizes the similarity between the feature maps of the multi-head attention network to maximize the network’s diversity and make it focus on different facial regions. Third, we employ a shared generic feature mechanism, which decomposes facial expression features into common and unique components, thereby simplifying feature learning for similar expressions. Extensive experiments on five widely used in-the-wild facial expression datasets validate the effectiveness and stability of MAADS, and the results demonstrate its superior performance.
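The exact formulation of the diversity loss used in MAADS is not restated in this section. As a hedged illustration of the idea, the NumPy sketch below scores the redundancy between attention heads by the mean pairwise cosine similarity of their flattened feature maps, a common stand-in for such penalties: it is 1 when all heads produce identical maps and 0 when their maps do not overlap, so minimizing it pushes heads toward different regions. The function names are hypothetical, and a training version would be written in an autodiff framework so that gradients flow through the penalty.

```python
import numpy as np

def pairwise_cosine_similarity(maps, eps=1e-8):
    """maps: (M, ...) one feature/attention map per head; returns an (M, M) matrix."""
    flat = maps.reshape(maps.shape[0], -1)
    # Normalize each head's map to unit length (eps guards all-zero maps)
    unit = flat / (np.linalg.norm(flat, axis=1, keepdims=True) + eps)
    return unit @ unit.T

def diversity_penalty(maps):
    """Mean cosine similarity over distinct head pairs (lower = more diverse)."""
    m = maps.shape[0]
    if m < 2:
        return 0.0
    sim = pairwise_cosine_similarity(maps)
    # Drop the diagonal (self-similarity is always about 1)
    off_diag = sim[~np.eye(m, dtype=bool)]
    return float(off_diag.mean())
```

With four heads, as used in MAADS, the penalty averages the similarities of the six distinct head pairs.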

Author Contributions

Methodology, C.Z. and J.L.; project administration, W.Z.; writing—original draft preparation, C.Z. and J.L.; writing—review and editing, C.Z., J.L., W.Z., W.C. and Y.G.; supervision, W.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Funds of the Education Department of Jilin Province (Nos. JJKH20231083KJ and JLJY202301810566); the Humanities and Social Science Project of the Ministry of Education (No. 23YJCZH319); the Science and Technology Development Plan Project of Jilin Province, China (No. 20240101382JC); the Natural Science Foundation of China (No. 62272096); the Basic Science Research Project of Jiangsu Provincial Department of Education (No. 23KJD520003); and the Fundamental Research Funds for the Central Universities (No. 2412024JD015).

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

Author Wenhe Chen was employed by the company Shanghai Huace Navigation Technology Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Liao, L.; Wu, S.; Song, C.; Fu, J. RS-Xception: A Lightweight Network for Facial Expression Recognition. Electronics 2024, 13, 3217.

- Hickson, S.; Dufour, N.; Sud, A.; Kwatra, V.; Essa, I. Eyemotion: Classifying facial expressions in VR using eye-tracking cameras. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa Village, HI, USA, 7–11 January 2019; pp. 1626–1635.

- Roy, S.D.; Bhowmik, M.K.; Saha, P.; Ghosh, A.K. An approach for automatic pain detection through facial expression. Procedia Comput. Sci. 2016, 84, 99–106.

- Jordan, S.; Brimbal, L.; Wallace, D.B.; Kassin, S.M.; Hartwig, M.; Street, C.N. A test of the micro-expressions training tool: Does it improve lie detection? J. Investig. Psychol. Offender Profiling 2019, 16, 222–235.

- Chen, Z.; Yan, L.; Wang, H.; Adamyk, B. Improved Facial Expression Recognition Algorithm Based on Local Feature Enhancement and Global Information Association. Electronics 2024, 13, 2813.

- Fasel, B.; Luettin, J. Automatic facial expression analysis: A survey. Pattern Recognit. 2003, 36, 259–275.

- Wen, Z.; Lin, W.; Wang, T.; Xu, G. Distract your attention: Multi-head cross attention network for facial expression recognition. Biomimetics 2023, 8, 199.

- Farzaneh, A.H.; Qi, X. Facial expression recognition in the wild via deep attentive center loss. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 2402–2411.

- Marrero Fernandez, P.D.; Guerrero Pena, F.A.; Ren, T.; Cunha, A. FERAtt: Facial expression recognition with attention net. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

- Fan, Y.; Li, V.O.; Lam, J.C. Facial expression recognition with deeply-supervised attention network. IEEE Trans. Affect. Comput. 2020, 13, 1057–1071.

- Wang, X.; Peng, M.; Pan, L.; Hu, M.; Jin, C.; Ren, F. Two-level attention with two-stage multi-task learning for facial emotion recognition. J. Vis. Commun. Image Represent. 2019, 62, 217–225.

- Zhou, Y.; Jin, L.; Liu, H.; Song, E. Color facial expression recognition by quaternion convolutional neural network with Gabor attention. IEEE Trans. Cogn. Dev. Syst. 2020, 13, 969–983.

- Li, Y.; Zeng, J.; Shan, S.; Chen, X. Patch-gated CNN for occlusion-aware facial expression recognition. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 2209–2214.

- Xia, Y.; Yu, H.; Wang, X.; Jian, M.; Wang, F.-Y. Relation-aware facial expression recognition. IEEE Trans. Cogn. Dev. Syst. 2021, 14, 1143–1154.

- Chen, G.; Peng, J.; Zhang, W.; Huang, K.; Cheng, F.; Yuan, H.; Huang, Y. A region group adaptive attention model for subtle expression recognition. IEEE Trans. Affect. Comput. 2021, 14, 1613–1626.

- Li, Y.; Zeng, J.; Shan, S.; Chen, X. Occlusion aware facial expression recognition using CNN with attention mechanism. IEEE Trans. Image Process. 2018, 28, 2439–2450.

- Wang, K.; Peng, X.; Yang, J.; Meng, D.; Qiao, Y. Region attention networks for pose and occlusion robust facial expression recognition. IEEE Trans. Image Process. 2020, 29, 4057–4069.

- Zhao, Z.; Liu, Q.; Wang, S. Learning deep global multi-scale and local attention features for facial expression recognition in the wild. IEEE Trans. Image Process. 2021, 30, 6544–6556.

- Yu, W.; Xu, H. Co-attentive multi-task convolutional neural network for facial expression recognition. Pattern Recognit. 2022, 123, 108401.

- Chopra, S.; Hadsell, R.; LeCun, Y. Learning a similarity metric discriminatively, with application to face verification. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–26 June 2005; pp. 539–546.

- He, X.; Liao, L.; Zhang, H.; Nie, L.; Hu, X.; Chua, T.-S. Neural collaborative filtering. In Proceedings of the 26th International Conference on World Wide Web, Perth, Australia, 3–7 April 2017; pp. 173–182.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

- Xie, J.; Girshick, R.; Farhadi, A. Unsupervised deep embedding for clustering analysis. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 478–487.

- Radenović, F.; Tolias, G.; Chum, O. Fine-tuning CNN image retrieval with no human annotation. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 1655–1668.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

- Schroff, F.; Kalenichenko, D.; Philbin, J. FaceNet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 815–823.

- Meng, Z.; Liu, P.; Cai, J.; Han, S.; Tong, Y. Identity-aware convolutional neural network for facial expression recognition. In Proceedings of the 2017 12th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2017), Washington, DC, USA, 30 May–3 June 2017; pp. 558–565.

- Liu, X.; Vijaya Kumar, B.; You, J.; Jia, P. Adaptive deep metric learning for identity-aware facial expression recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 20–29.

- Li, S.; Deng, W.; Du, J. Reliable crowdsourcing and deep locality-preserving learning for expression recognition in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2852–2861.

- Cai, J.; Meng, Z.; Khan, A.S.; Li, Z.; O’Reilly, J.; Tong, Y. Island loss for learning discriminative features in facial expression recognition. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; pp. 302–309.

- Li, Z.; Wu, S.; Xiao, G. Facial expression recognition by multi-scale CNN with regularized center loss. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 3384–3389.

- Zeng, G.; Zhou, J.; Jia, X.; Xie, W.; Shen, L. Hand-crafted feature guided deep learning for facial expression recognition. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; pp. 423–430.

- Farzaneh, A.H.; Qi, X. Discriminant distribution-agnostic loss for facial expression recognition in the wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 406–407.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Wen, Y.; Zhang, K.; Li, Z.; Qiao, Y. A discriminative feature learning approach for deep face recognition. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part VII 14. pp. 499–515.

- Xie, B.; Liang, Y.; Song, L. Diverse neural network learns true target functions. In Proceedings of the Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1216–1224.

- Heidari, N.; Iosifidis, A. Learning diversified feature representations for facial expression recognition in the wild. arXiv 2022, arXiv:2210.09381.

- Shi, J.; Zhu, S.; Liang, Z. Learning to amend facial expression representation via de-albino and affinity. arXiv 2021, arXiv:2103.10189.

- Bruce, V.; Young, A. Understanding face recognition. Br. J. Psychol. 1986, 77, 305–327.

- Calder, A.J.; Young, A.W. Understanding the recognition of facial identity and facial expression. Nat. Rev. Neurosci. 2005, 6, 641–651.

- Yang, H.; Ciftci, U.; Yin, L. Facial expression recognition by de-expression residue learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2168–2177.

- Xue, F.; Tan, Z.; Zhu, Y.; Ma, Z.; Guo, G. Coarse-to-fine cascaded networks with smooth predicting for video facial expression recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2412–2418.

- Mollahosseini, A.; Hasani, B.; Mahoor, M.H. AffectNet: A database for facial expression, valence, and arousal computing in the wild. IEEE Trans. Affect. Comput. 2017, 10, 18–31.

- Barsoum, E.; Zhang, C.; Ferrer, C.C.; Zhang, Z. Training deep networks for facial expression recognition with crowd-sourced label distribution. In Proceedings of the 18th ACM International Conference on Multimodal Interaction, Tokyo, Japan, 12–16 November 2016; pp. 279–283.

- Dhall, A.; Goecke, R.; Lucey, S.; Gedeon, T. Static facial expression analysis in tough conditions: Data, evaluation protocol and benchmark. In Proceedings of the 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), Barcelona, Spain, 6–13 November 2011; pp. 2106–2112.

- Dhall, A.; Goecke, R.; Lucey, S.; Gedeon, T. Collecting Large, Richly Annotated Facial-Expression Databases from Movies. IEEE MultiMedia 2012, 19, 34–41.

- Li, S.; Deng, W. Deep facial expression recognition: A survey. IEEE Trans. Affect. Comput. 2020, 13, 1195–1215.

- Deng, J.; Guo, J.; Ververas, E.; Kotsia, I.; Zafeiriou, S. RetinaFace: Single-shot multi-level face localisation in the wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 5203–5212.

- Guo, Y.; Zhang, L.; Hu, Y.; He, X.; Gao, J. MS-Celeb-1M: A dataset and benchmark for large-scale face recognition. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part III 14. pp. 87–102.

- Li, H.; Wang, N.; Ding, X.; Yang, X.; Gao, X. Adaptively learning facial expression representation via C-F labels and distillation. IEEE Trans. Image Process. 2021, 30, 2016–2028.

- She, J.; Hu, Y.; Shi, H.; Wang, J.; Shen, Q.; Mei, T. Dive into ambiguity: Latent distribution mining and pairwise uncertainty estimation for facial expression recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6248–6257.

- Ruan, D.; Yan, Y.; Lai, S.; Chai, Z.; Shen, C.; Wang, H. Feature decomposition and reconstruction learning for effective facial expression recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7660–7669.

- Xue, F.; Wang, Q.; Guo, G. TransFER: Learning relation-aware facial expression representations with transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 3601–3610.

- Liu, H.; Cai, H.; Lin, Q.; Li, X.; Xiao, H. Adaptive multilayer perceptual attention network for facial expression recognition. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 6253–6266.

- Zeng, D.; Lin, Z.; Yan, X.; Liu, Y.; Wang, F.; Tang, B. Face2Exp: Combating data biases for facial expression recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 20291–20300.

- Zhang, Y.; Wang, C.; Ling, X.; Deng, W. Learn from all: Erasing attention consistency for noisy label facial expression recognition. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 418–434.

- Lee, I.; Lee, E.; Yoo, S.B. Latent-OFER: Detect, mask, and reconstruct with latent vectors for occluded facial expression recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 1536–1546.

- Le, N.; Nguyen, K.; Tran, Q.; Tjiputra, E.; Le, B.; Nguyen, A. Uncertainty-aware label distribution learning for facial expression recognition. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 6088–6097.

- Li, Y.; Wang, M.; Gong, M.; Lu, Y.; Liu, L. FER-former: Multi-modal transformer for facial expression recognition. arXiv 2023, arXiv:2303.12997.

- Wu, Z.; Cui, J. LA-Net: Landmark-aware learning for reliable facial expression recognition under label noise. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 20698–20707.

- Chattopadhay, A.; Sarkar, A.; Howlader, P.; Balasubramanian, V.N. Grad-CAM++: Generalized gradient-based visual explanations for deep convolutional networks. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 839–847.

- Zhao, Z.; Liu, Q.; Zhou, F. Robust lightweight facial expression recognition network with label distribution training. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; pp. 3510–3519.

- Wang, K.; Peng, X.; Yang, J.; Lu, S.; Qiao, Y. Suppressing uncertainties for large-scale facial expression recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 6897–6906.

- Vo, T.-H.; Lee, G.-S.; Yang, H.-J.; Kim, S.-H. Pyramid with super resolution for in-the-wild facial expression recognition. IEEE Access 2020, 8, 131988–132001.

- Chen, D.; Wen, G.; Li, H.; Chen, R.; Li, C. Multi-relations aware network for in-the-wild facial expression recognition. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 3848–3859.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).