Abstract

Automatic modulation recognition (AMR) methods used in advanced wireless communications systems can identify unknown signals without requiring reference information. However, the acceptance of these methods depends on the accuracy, number of parameters, and computational complexity. This study proposes a hybrid convolutional transformer classifier (HCTC) for the classification of unknown signals. The proposed method utilizes a three-stage framework to extract features from in-phase/quadrature (I/Q) signals. In the first stage, spatial features are extracted using a convolutional layer. In the second stage, temporal features are extracted using a transformer encoder. In the final stage, the features are mapped using a deep-learning network. The proposed HCTC method is investigated using the benchmark RadioML database and compared with state-of-the-art methods. The experimental results demonstrate that the proposed method achieves a better performance in modulation signal classification. Additionally, the performance of the proposed method is evaluated when applied to different batch sizes and model configurations. Finally, open issues in modulation recognition research are addressed, and future research perspectives are discussed.

1. Introduction

Automatic modulation recognition (AMR) involves automatically categorizing the modulation type of received complex-valued raw radio signals without any prior knowledge of the signal information or channel properties. In modern communications and signal-processing systems, AMR helps to ensure reliable communication in diverse wireless environments, increases spectral efficiency, and improves signal detection [1]. However, the radio environment is becoming more chaotic because of recent advancements in wireless communications technologies, making AMR more challenging [2].

Conventional AMR techniques can be divided into two basic categories: feature based (FB) and probabilistic likelihood based (LB). LB methods use the likelihood function for each signal and model multiple hypotheses based on the number of signal types. The classification decision is obtained using the likelihood ratio test [3]. In contrast, FB methods involve the extraction of meaningful features from the received signal that are then used for classification [4]. Typical LB methods include the average likelihood ratio test (ALRT) [5,6], generalized likelihood ratio test (GLRT) [7], and hybrid likelihood ratio test (HLRT) [8]. Common types of features used in FB methods include higher-order statistical features [9], cyclic features [10], constellation shapes [11], entropy features [12,13], and transform domain features [14]. FB methods then classify the modulation signal using classifiers such as an artificial neural network (ANN) [9,15], a fuzzy-logic-based classifier [16], a support vector machine (SVM) [17,18], a hidden Markov model (HMM) [19], a polynomial classifier [20], and K-nearest neighbors (KNNs) [21]. LB methods have high computational complexity and require knowledge of the communication channel [22]. FB methods, in contrast, rely on known thresholds for the extracted features and on a classifier that may not be well suited to the selected features, which makes them insufficiently discriminative for complex modulation types [23].

For efficient feature extraction and radio signal classification, deep-learning (DL) and machine-learning (ML) techniques address the limitations of the FB and LB methods. A convolutional-neural-network (CNN)-based model was developed that utilized in-phase/quadrature (I/Q) data for AMR [24]. A long short-term memory (LSTM) model was proposed for AMR, considering the variable symbol rate, which outperformed the CNN-based model [25]. A combination of LSTM and a denoising autoencoder framework was proposed, with the framework found to be capable of extracting robust features from noisy signals [26]. Another approach, based on a CNN, was presented that considered more realistic signals with four convolutional layers and two dense layers [27]. West and O’Shea [28] examined how the width and depth of the CNN layers affect the accuracy of the model and presented a CNN-LSTM model. Considering the long symbol rate, another approach, based on a CNN, was presented, which used pretraining and fine-tuning of the presented model [29].

Feature extraction from a skip connection was presented using an asymmetric kernel for a multiscale convolutional block to improve the performance [30]. A 1D and a 2D CNN, along with LSTM and deep-neural-network (DNN) layers, were presented, which extracted features in both the time and space domains [31]. A parallel fusion framework using a 1D CNN to extract amplitude and phase features was proposed [32], achieving improved accuracy and an efficient convergence speed. Another CNN-based approach targeted 5G communications systems and exhibited low computational complexity; it applied regularization, dropout, and Gaussian noise layers to minimize overfitting [33]. However, the accuracy of the model was very low [1].

Another CNN-based approach involved combining seven convolutional layers, an LSTM layer, and a DNN to produce a CLDNN [34]. This was compared with a dense connection between the layers (DenseNet) and a residual neural network (ResNet). It was concluded that the CLDNN method was the best fit for the AMR problem. A classifier was proposed using a two-layer gated recurrent unit neural network (GRU) [35]. A three-stage architecture that combined the shallow CNN, GRU, and DNN was proposed, which incorporated pooling layers, a dropout, and several other layers to overcome overfitting [36]. A lightweight phasic parameter estimator model was proposed that extracted features from the spatial and temporal domains using a CNN and a GRU [37].

It is also evident from the literature that the performance of an AMR method relies on the type and combination of input signals after passing through various feature extractors. These may include spectral features [38], statistical features [39], constellation images [40,41], SPWVD images, BJD images [14], I/Q signals [24,27,28,30,34,36,37,42], amplitudes/phases [25,26], I/Q signals with amplitude and phase features [43,44], and I/Q signals with individual I and Q signals [31]. As the received signal is available in the form of an I/Q signal, it is easier to obtain its individual I and Q components than to convert it into spectral features or constellation diagrams, which is computationally expensive for real-time applications [31]. Therefore, in this study, the I/Q signal was used alongside the individual I and Q signals obtained by separating its components. Recently, many researchers have presented systematic literature reviews on modulation classification [1,45,46,47,48,49,50,51,52]. Notable work covering several AMR algorithms, datasets, and results is presented in [45].

The main contributions of this study are as follows:

- A hybrid architecture for AMR is proposed that combines convolutional layers and a transformer encoder in a single DL classifier to minimize computational complexity while retaining classification performance;

- The proposed algorithm is investigated by conducting experiments with different batch sizes, optimizers, and model configurations.

The remainder of this paper is organized as follows. In Section 2, the proposed hybrid convolutional transformer classifier (HCTC) model is introduced along with the signal information. The implementation of the proposed model is briefly explained in Section 3. Section 4 presents the experimental results, and Section 5 presents additional experiments on the proposed model, with different configurations and batch sizes. Section 6 explains open issues and future work, followed by the conclusion in Section 7.

2. Received Signal and Proposed HCTC Model

2.1. Received Signal

The modulation recognition problem can be formulated as a multiclass classification problem. Generally, the received signal is defined, as in [25], as follows:

$$r(t) = s(t) * h(t) + n(t) \qquad (1)$$

where $r(t)$ is the received signal, $s(t)$ is the modulated signal, $*$ denotes convolution, $h(t)$ is the channel impulse response, and $n(t)$ is the additive white Gaussian noise (AWGN). More commonly, the signal is expressed through its in-phase component, $I = A\cos(\phi)$, and its quadrature component, $Q = A\sin(\phi)$, where $A$ denotes the amplitude of $r(t)$ and $\phi$ denotes the phase of $r(t)$.
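The relation between the amplitude/phase view and the I/Q view of a sample can be illustrated with a short sketch. It assumes the standard convention I = A cos(φ) and Q = A sin(φ); the function names `to_iq` and `to_polar` are hypothetical and are not part of the original work.

```python
import cmath
import math


def to_iq(amplitude, phase):
    """Return the (I, Q) pair for a sample with the given amplitude and phase (radians)."""
    return amplitude * math.cos(phase), amplitude * math.sin(phase)


def to_polar(i, q):
    """Recover (amplitude, phase) from the I and Q components of a sample."""
    return cmath.polar(complex(i, q))


# Illustrative sample: amplitude 2, phase 60 degrees.
i, q = to_iq(2.0, math.pi / 3)
amplitude, phase = to_polar(i, q)
print(f"I={i:.4f}, Q={q:.4f}, A={amplitude:.4f}, phase={phase:.4f} rad")
```

The round trip shows why amplitude/phase inputs and I/Q inputs carry the same information: either representation can be derived from the other without loss.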

In this study, the modulation recognition problem was investigated using the popular RadioML2016a and RadioML2016b datasets [53], which are synthetic datasets generated with GNU Radio [54]. The RadioML2016a dataset includes 11 modulation classes: 64QAM, AM-DSB, AM-SSB, BPSK, CPFSK, GFSK, PAM4, QPSK, WBFM, 16QAM, and 8PSK. The RadioML2016b dataset includes 10 modulation classes: 64QAM, AM-DSB, BPSK, CPFSK, GFSK, PAM4, QPSK, WBFM, 16QAM, and 8PSK. Both datasets consist of (i) I/Q signal components, where each component has 128 samples, giving a 2 × 128 array per signal, and (ii) modulation labels, where each signal carries one of the modulations listed above at a signal-to-noise ratio (SNR) from −20 dB to 18 dB in steps of 2 dB (20 levels). The number of samples differs between the datasets: RadioML2016a contains 220,000 signal samples, whereas RadioML2016b contains 1,200,000 signal samples.
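The dataset sizes quoted above can be cross-checked with simple arithmetic. The sketch below assumes that each dataset is perfectly balanced across every (modulation class, SNR) pair, which the text does not state explicitly; the helper name `samples_per_class_and_snr` is hypothetical.

```python
# SNR grid described in the text: -20 dB to 18 dB in steps of 2 dB.
snr_levels_db = list(range(-20, 20, 2))


def samples_per_class_and_snr(total_samples, num_classes, num_snrs):
    """Samples per (class, SNR) pair, assuming a perfectly balanced dataset."""
    per_pair, remainder = divmod(total_samples, num_classes * num_snrs)
    if remainder:
        raise ValueError("total is not evenly divisible, so the dataset cannot be balanced")
    return per_pair


per_pair_a = samples_per_class_and_snr(220_000, 11, len(snr_levels_db))
per_pair_b = samples_per_class_and_snr(1_200_000, 10, len(snr_levels_db))
print(len(snr_levels_db), per_pair_a, per_pair_b)
```

Under the balance assumption, both totals divide evenly by the number of class/SNR pairs, which is consistent with the stated class counts and SNR range.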

2.2. Proposed HCTC Model

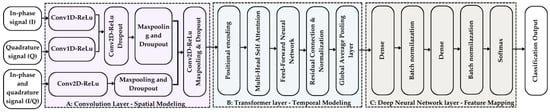

The design of the proposed HCTC model is shown in Figure 1. The model uses input signals in the form of in-phase (I) and quadrature (Q) signals along with the individual components of the I and Q signals. The proposed model comprised three stages: (A) a convolutional layer for the extraction of spatial features, (B) a transformer encoder layer for the extraction of temporal features, and (C) a deep neural layer for feature mapping.

Figure 1.

Proposed HCTC model architecture.

2.2.1. Stage A—Convolutional Layer

In the first stage, the individual I and Q components and the combined I/Q component of the signal were passed to the network. The I and Q components of the input signal were passed to two 1D convolutional layers, ‘conv1_2’ and ‘conv1_3’. These layers were configured with 64 filters and a kernel size of eight. ‘Causal’ padding was used to maintain the temporal order of the data by ensuring that each output at time t depended only on the current and previous inputs. The weights of the convolutional kernels were initialized using the Glorot uniform distribution, which helped to maintain a balanced variance of activations across layers. The outputs of the conv1_2 and conv1_3 layers were then reshaped, concatenated along the feature dimension, and passed to a 2D convolutional layer, ‘conv2’. This layer used ‘same’ padding to keep the output dimensions equal to those of the input. Its output was fed to a max-pooling layer with a pool size of (2,2) and a stride of one, followed by a dropout layer to prevent overfitting. Furthermore, the outputs of the two 1D convolutional layers and the 2D convolutional layer were merged and passed to a 2D convolutional layer, followed by max-pooling and dropout layers. Batch normalization was applied to the output of the convolutional layers to stabilize and accelerate training. All the convolutional layers of the HCTC used (a) the ReLU activation function, which introduced non-linearity to the model, and (b) L2 regularization with a factor of 1 × 10⁻⁴, which was applied to the kernel weights to mitigate overfitting.
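The effect of causal padding can be illustrated with a minimal single-channel sketch in plain Python. It is not the authors' implementation: the kernel weights and the input values are hypothetical, and a real layer would use 64 learned filters of size eight. The point is only that left-padding with k − 1 zeros keeps the output length equal to the input length and prevents any output from depending on future samples.

```python
def causal_conv1d(signal, kernel):
    """1D convolution (cross-correlation form) with causal left padding.

    Output[t] uses only signal[t - k + 1 .. t], so the output has the same
    length as the input and never looks at future samples.
    """
    k = len(kernel)
    padded = [0.0] * (k - 1) + list(signal)
    return [
        sum(kernel[j] * padded[t + j] for j in range(k))
        for t in range(len(signal))
    ]


signal = [1.0, 2.0, 3.0, 4.0, 5.0]   # hypothetical input samples
kernel = [0.5, 0.25, 0.25]           # hypothetical weights; the last tap multiplies x[t]
out = causal_conv1d(signal, kernel)

# Changing only the final sample must leave all earlier outputs unchanged.
perturbed = causal_conv1d([1.0, 2.0, 3.0, 4.0, 99.0], kernel)
print(out)
print(perturbed)
```

Only the last output differs between the two runs, which is the property that makes causal padding suitable for preserving temporal order.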

The ReLU activation function was proposed in [55] and is the most widely used activation function in the context of modulation classification. Compared with other activation functions, such as sigmoid and tanh, ReLU learns faster [56], shows better performance and generalization [57,58], and avoids the vanishing gradient problem [58,59]. The ReLU function, defined in Equation (2), produces sparsity in the hidden units, which leads to more efficient representation and generalization for classification tasks:

$$f(x) = \max(0, x) \qquad (2)$$

The configuration of stage A of the proposed model is shown in Table 1.

Table 1.

Configuration of the convolutional layer (stage A) of the proposed HCTC model.

2.2.2. Stage B—Transformer Layer

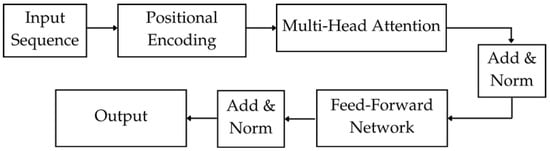

The output of the convolutional layer after reshaping was fed to stage B, the transformer layer. In the proposed model, a transformer encoder layer, as shown in Figure 2, was added, which was motivated by the work presented in [60]. Stage B of the proposed method extracted the temporal features from the input by applying positional encoding.

Figure 2.

Block diagram of the transformer layer (stage B) of the proposed HCTC model.

The following are the details of each block.

Input Sequence: This block receives processed signals from the convolutional layer, formatted as a tensor. It is a feature-rich representation of the original I/Q signals, ready for further processing. The input signal fed to the encoder step is often called input token embedding, which assigns a number of tokens to the input sequence.

Positional Encoding: This layer adds positional information to the input sequences. Because transformers do not inherently understand the sequence order, positional encoding helps the model to capture the temporal relationships between the elements. To compute the positional encoding, the range tensor of positions was multiplied by the per-dimension angle rates, and the sine and cosine of the resulting angles (in radians) were then taken. The formulae for the positional encoding (PE) at position pos are given in Equations (3) and (4):

$$PE_{(pos,\,2i)} = \sin\left(\frac{pos}{10000^{2i/d}}\right) \qquad (3)$$

$$PE_{(pos,\,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d}}\right) \qquad (4)$$

where $i$ indexes the pairs of embedding dimensions and $d$ is the depth of the embedding.
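A sinusoidal positional encoding of this kind can be sketched in a few lines. The sketch assumes the standard formulation with base 10000 and interleaved sine/cosine dimensions; the sequence length of 128 and depth of 64 are illustrative values, since the exact tensor shape entering stage B is not stated here. Some implementations concatenate the sine and cosine halves instead of interleaving them, which only permutes the feature order.

```python
import math


def positional_encoding(length, depth):
    """Sinusoidal positional encoding as a [length][depth] table (depth must be even)."""
    if depth % 2:
        raise ValueError("depth must be even")
    table = []
    for pos in range(length):
        row = []
        for i in range(depth // 2):
            angle = pos / (10000 ** (2 * i / depth))
            row.extend([math.sin(angle), math.cos(angle)])  # dimensions 2i and 2i + 1
        table.append(row)
    return table


# Illustrative shape only: 128 positions, embedding depth 64.
pe = positional_encoding(length=128, depth=64)
print(len(pe), len(pe[0]), pe[0][:4])
```

Each position receives a unique pattern of bounded values, which is added to the token embeddings so that the attention layers can distinguish sequence positions.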

Multihead Attention: This mechanism allows the model to focus on different parts of the input simultaneously. It computes attention scores for the input sequence, enabling the model to weigh the importance of the various signal elements in context. In the proposed method, two attention heads were used so that different aspects of the relationships between tokens in different parts of the sequence could be captured simultaneously. The results of all the heads were concatenated and linearly transformed.

Add and Norm (Post-Attention): This step implements a residual connection [61], where the output of the multihead attention is added back to its original input. This preserves the initial signal information and mitigates potential issues related to vanishing gradients in deeper networks. Following the addition, layer normalization [62] is applied to stabilize the output, which is given by $\mathrm{LayerNorm}(x + \mathrm{MultiHead}(x))$, where $x$ is the input to the attention block.

Feed-Forward Network (FFN): This component consists of two dense layers with a ReLU activation function. It enhances the model’s capacity to learn complex transformations of token embedding.

Add and Norm (Post-FFN): Similar to the previous block, this step maintains a residual connection; that is, it adds the input of the FFN back to its output. Following the addition, layer normalization is again applied to stabilize the output.
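The blocks described above can be combined into a minimal NumPy sketch of one post-norm encoder block. This is an illustration under stated assumptions, not the authors' Keras implementation: the weights are random, the layer normalization omits the learnable gain and bias, the sequence length of 16 is arbitrary, the per-head size is taken as the depth divided by the number of heads, and the second FFN layer is assumed to be linear. Only the two heads, the depth of 64, and the feed-forward dimension of 256 come from the text.

```python
import numpy as np


def layer_norm(x, eps=1e-6):
    """Normalize each token vector to zero mean and unit variance (no learned gain/bias)."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def multihead_attention(x, wq, wk, wv, wo, num_heads):
    """Scaled dot-product self-attention over a (seq_len, d_model) input."""
    seq_len, d_model = x.shape
    d_head = d_model // num_heads

    def split(t):
        # Split the feature axis into heads: (heads, seq_len, d_head).
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ wq), split(x @ wk), split(x @ wv)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)   # (heads, seq, seq)
    context = softmax(scores) @ v                         # (heads, seq, d_head)
    merged = context.transpose(1, 0, 2).reshape(seq_len, d_model)
    return merged @ wo                                    # concatenate heads, then project


def encoder_block(x, p, num_heads=2):
    attn = multihead_attention(x, p["wq"], p["wk"], p["wv"], p["wo"], num_heads)
    x = layer_norm(x + attn)                              # Add and Norm (post-attention)
    ffn = np.maximum(0.0, x @ p["w1"] + p["b1"]) @ p["w2"] + p["b2"]
    return layer_norm(x + ffn)                            # Add and Norm (post-FFN)


rng = np.random.default_rng(0)
seq_len, d_model, d_ff = 16, 64, 256                      # seq_len is an arbitrary choice
shapes = {"wq": (d_model, d_model), "wk": (d_model, d_model),
          "wv": (d_model, d_model), "wo": (d_model, d_model),
          "w1": (d_model, d_ff), "b1": (d_ff,),
          "w2": (d_ff, d_model), "b2": (d_model,)}
params = {name: rng.normal(scale=0.05, size=shape) for name, shape in shapes.items()}
tokens = rng.normal(size=(seq_len, d_model))
encoded = encoder_block(tokens, params)
print(encoded.shape)
```

The output keeps the input shape, so blocks of this form can be stacked or followed directly by the dense layers of the feature-mapping stage.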

The details of the transformer encoder configuration are listed in Table 2.

Table 2.

Configuration of the transformer layer (Stage B) of the proposed HCTC model.

2.2.3. Stage C—Feature Mapping

In the final stage (Stage C), several dense layers were applied, followed by batch normalization to enhance the performance of the proposed model. The first dense layer, referred to as fc1, contained 256 units and utilized the scaled exponential linear unit (SELU) activation function, which helped to stabilize the learning process and promote faster convergence. L2 regularization was used with a weight decay factor of 1 × 10⁻⁴.

The subsequent dense layer, fc2, had 128 units and employed the SELU activation function. This was accompanied by L2 regularization to maintain consistency throughout the network. This layer was followed by another batch normalization step to further stabilize the activation.

Finally, the output layer was a dense layer with the number of units equal to the number of target classes and used the softmax activation function. The softmax function converted the output to a probability distribution over the classes, so that the class probabilities summed to one. This configuration is essential for classification tasks because it enables the model to predict the most likely class for each input. The details of the configuration of stage C are presented in Table 3.
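The two activation functions used in stage C can be sketched as follows. The SELU constants are the standard published values; the three logits are hypothetical and stand in for the output of the final dense layer.

```python
import math

# Standard SELU constants (self-normalizing networks).
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def selu(x):
    """Scaled exponential linear unit for a single value."""
    return SELU_SCALE * (x if x > 0 else SELU_ALPHA * (math.exp(x) - 1.0))


def softmax(logits):
    """Convert a list of logits into probabilities that sum to one."""
    shift = max(logits)                       # subtract the max for numerical stability
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]                      # hypothetical output-layer activations
probs = softmax(logits)
predicted = max(range(len(probs)), key=probs.__getitem__)
print(probs, predicted)
```

The predicted class is simply the index of the largest probability, which is how the softmax output is turned into a modulation label.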

Table 3.

Configuration of the feature mapping (stage C) of the proposed HCTC model.

3. Implementation Details

The proposed HCTC method was applied to the widely used RadioML2016a and RadioML2016b datasets [53]. After random sampling within each class, the datasets were partitioned into 60% for training, 20% for validation, and 20% for testing. The model was trained with categorical cross-entropy as the loss function and the Adam optimizer. The batch size was 400, and the initial learning rate was 0.001. To prevent overfitting and ensure effective learning, the validation loss was monitored throughout training. If the validation loss did not improve for five consecutive epochs, the learning rate was halved to help the model escape from potential local minima. Training was terminated if the validation loss did not improve for 50 epochs, and the model with the best validation performance was retained.
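The interplay between learning-rate reduction and early stopping can be made concrete by replaying a validation-loss history. The sketch below is a simplified stand-in for the corresponding training callbacks, not a reproduction of their exact semantics; the function name `train_schedule` and the loss history are hypothetical, while the initial rate (0.001), the halving factor, and the patience values of 5 and 50 epochs come from the text.

```python
def train_schedule(val_losses, lr=1e-3, factor=0.5, lr_patience=5, stop_patience=50):
    """Replay a validation-loss history; return (lr per epoch, stop epoch, best epoch)."""
    best = float("inf")
    best_epoch = None
    since_best = 0      # epochs since the last improvement (drives early stopping)
    since_change = 0    # epochs since the last improvement or learning-rate reduction
    lrs = []
    for epoch, loss in enumerate(val_losses):
        lrs.append(lr)  # learning rate in effect during this epoch
        if loss < best:
            best, best_epoch = loss, epoch
            since_best = since_change = 0
        else:
            since_best += 1
            since_change += 1
        if since_change >= lr_patience:
            lr *= factor
            since_change = 0
        if since_best >= stop_patience:
            return lrs, epoch, best_epoch
    return lrs, None, best_epoch


# Hypothetical history: three improving epochs, then a long plateau.
history = [1.0, 0.8, 0.7] + [0.75] * 60
lrs, stopped_at, best_epoch = train_schedule(history)
print(stopped_at, best_epoch, lrs[7], lrs[8], lrs[13])
```

During a long plateau the learning rate is halved every five epochs, and training stops once 50 epochs have passed without improvement, at which point the weights from the best epoch would be restored.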

All the experiments were performed using an NVIDIA GeForce RTX 4070 GPU, with Keras 2.10.0 and TensorFlow 2.10.1 as the backend framework. To benchmark the performance of the proposed model, it was compared with several commonly used AMR models. These included CNN1 [24], CNN2 [27], MCNet [30], IC-AMCNet [33], ResNet [34], DenseNet [34], GRU [35], LSTM [25], DAE [26], MCLDNN [31], CLDNN [28], CLDNN2 [34], CGDNet [36], PET-CGDNN [37], and 1DCNN-PF [32]. In addition, experiments were conducted with the proposed HCTC model using various batch sizes and model parameters.

4. Experimental Results and Discussion

4.1. Classification Accuracy and Confusion Matrix

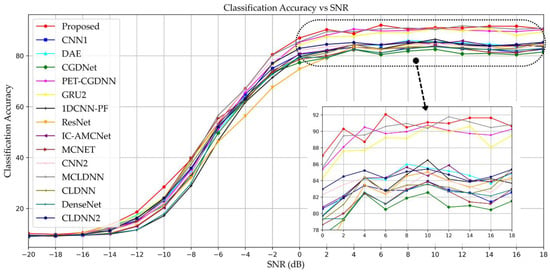

To evaluate the performance of the proposed HCTC model, it was compared with several other models across SNRs in the RadioML2016a dataset. The results, shown in bold in Table 4, demonstrate that the proposed HCTC consistently outperformed the other models in terms of accuracy across the SNRs. HCTC achieved accuracies of 10.18% at −20 dB, 87.03% at 0 dB, and 90.61% at 18 dB. When averaging the accuracy across two SNR ranges, HCTC showed average accuracies of 38.04% from −20 dB to 0 dB and 90.45% from 0 dB to 18 dB, both of which were the highest among those of all the models tested. Overall, the average accuracy of the HCTC across the entire SNR range was 61.80%, which ranked it first among all the models. The highest accuracy achieved by the HCTC was 92.06%, which was also the best in comparison to those of the other models. These results indicate that the HCTC is effective across a wide range of noise levels.

Table 4.

Accuracy of the proposed HCTC model compared to those of widely used AMR models across various SNRs.

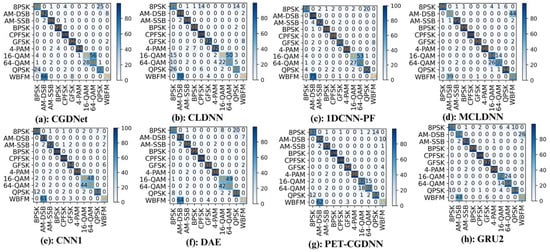

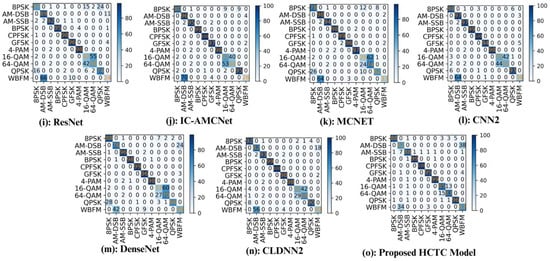

To compare the performance of the proposed HCTC model to those of the other models, detailed confusion matrices are presented for all the widely used AMR models in Figure 3 and Figure 4. In Figure 3, the confusion matrices of (a) CGDNet [36], (b) CLDNN [28], (c) 1DCNN-PF [32], (d) MCLDNN [31], (e) CNN1 [24], (f) DAE [26], (g) PET-CGDNN [37], and (h) GRU [35] are presented, whereas in Figure 4, the confusion matrices of (i) ResNet [34], (j) IC-AMCNet [33], (k) MCNet [30], (l) CNN2 [27], (m) DenseNet [34], (n) CLDNN2 [34], and (o) the proposed HCTC model are shown. In Figure 3 and Figure 4, each row represents an actual label, whereas each column represents a predicted label. Figure 3 and Figure 4 show the classification accuracies of the modulation classes 8PSK, AM-DSB, AM-SSB, BPSK, CPFSK, GFSK, 4-PAM, 16-QAM, 64-QAM, QPSK, and WBFM over the RadioML2016a dataset.
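The layout of such confusion matrices (rows for actual labels, columns for predicted labels) and the per-class accuracies read from their diagonals can be reproduced with a small sketch. The labels below are toy values chosen only to illustrate the computation; they are not results from this study.

```python
def confusion_matrix(actual, predicted, classes):
    """Count matrix with one row per actual class and one column per predicted class."""
    index = {name: i for i, name in enumerate(classes)}
    counts = [[0] * len(classes) for _ in classes]
    for a, p in zip(actual, predicted):
        counts[index[a]][index[p]] += 1
    return counts


def row_normalize(counts):
    """Divide each row by its total so that the diagonal gives per-class accuracy."""
    normalized = []
    for row in counts:
        total = sum(row)
        normalized.append([c / total if total else 0.0 for c in row])
    return normalized


# Toy labels for illustration only.
classes = ["AM-DSB", "WBFM"]
actual = ["AM-DSB"] * 4 + ["WBFM"] * 4
predicted = ["AM-DSB", "AM-DSB", "AM-DSB", "WBFM",
             "WBFM", "WBFM", "AM-DSB", "AM-DSB"]

counts = confusion_matrix(actual, predicted, classes)
rates = row_normalize(counts)
print(counts)
print(rates)
```

Because each row is normalized by the number of actual instances of that class, an off-diagonal entry gives the fraction of that class mistaken for another, which is how statements such as "a share of WBFM instances misclassified as AM-DSB" are read from the figures.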

Figure 3.

Confusion matrices of AMR models simulated in the RadioML2016a dataset at a 0 dB SNR: (a) CGDNet; (b) CLDNN; (c) 1DCNN-PF; (d) MCLDNN; (e) CNN1; (f) DAE; (g) PET-CGDNN; (h) GRU2.

Figure 4.

Confusion matrices of AMR models simulated in the RadioML2016a dataset at a 0 dB SNR: (i) ResNet; (j) IC-AMCNet; (k) MCNet; (l) CNN2; (m) DenseNet; (n) CLDNN2; (o) the proposed HCTC model.

The HCTC model achieved accurate classifications for CPFSK, 4-PAM, and BPSK, which were on par with or better than the highest performances of the other models. Notably, for 8PSK, the HCTC model correctly classified 88% of the instances, outperforming models such as CLDNN [28]. Similarly, AM-DSB was classified correctly in 62% of the instances by the HCTC model, with some misclassifications in WBFM, but this performance is still comparable or superior to those of many of the previously proposed models. The performance of the HCTC model in classifying GFSK with 95% correct classifications and 16-QAM with 85% correct classifications also demonstrates its robustness and accuracy, showing fewer misclassifications compared to earlier models. For 64-QAM, the HCTC model accurately classified 83% of the instances, surpassing the performances of models such as PET-CGDNN [37] and DenseNet [34]. Similarly, QPSK was correctly classified in 96% of the instances, indicating highly reliable classifications with minimal errors and improvement over models such as GRU [35] and CNN1 [24]. The WBFM class, although showing some misclassifications, with 38% of the instances misclassified as AM-DSB, still maintained a high level of correct classification, highlighting the overall improved accuracy of the HCTC model.

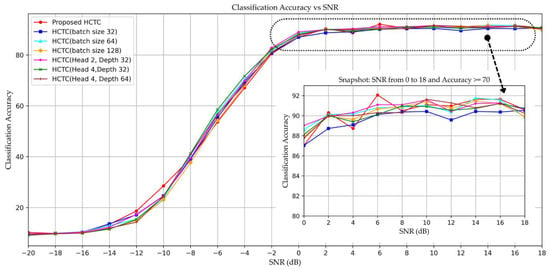

Figure 5 shows an accuracy comparison plot for the proposed model and other algorithms in the RML2016a dataset.

Figure 5.

Accuracy comparison for the proposed HCTC model with other AMR models.

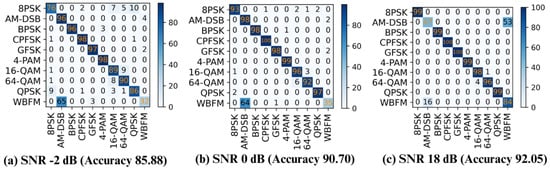

In Figure 5, the inset plots demonstrate how the proposed HCTC model achieved the best performance in the SNR range of 0–18 dB. The model was also tested in the RML2016B dataset, which contained a high number of samples and ten modulation classes. Figure 6 shows the confusion matrices of the proposed HCTC model across SNRs of −2 dB, 8 dB, and 18 dB.

Figure 6.

Confusion matrices of the proposed HCTC model across SNRs of (a) −2 dB, (b) 0 dB, and (c) 18 dB in the RadioML2016b dataset.

The accuracy of the proposed HCTC model compared with those of widely used AMR models across different modulations is shown in Table 5. Among the models evaluated, the proposed HCTC model consistently delivered the best results across most modulation types. For example, it achieved accuracies of approximately 98.70% for BPSK and approximately 100.00% for CPFSK, indicating robust performance in these modulation schemes. In contrast, the 1DCNN-PF [32] model demonstrated superior accuracy with AM-DSB (100.00%), though it had a lower performance with 16-QAM (41.50%). CGDNet [36] and CLDNN [28] generally performed well with AM-SSB, with accuracies of 96.00% and 94.50%, and reached 99.00% with BPSK, but they fell short with 16-QAM and 64-QAM, where the accuracies were notably lower. DenseNet [34] excelled with CPFSK (99.00%) and performed competitively with other modulation schemes. Furthermore, CNN1 [24] and CNN2 [27] showed high accuracies with simpler modulation types, such as BPSK and CPFSK, but their performances declined with more complex schemes, such as 16-QAM and 64-QAM. Notably, the GRU [35] and IC-AMCNet [33] models exhibited good accuracies across various classes, but their performances were less consistent than those of the best-performing models.

Table 5.

Accuracy comparison of the proposed HCTC model and those of widely used AMR models at an SNR of 0 dB for various modulation types in the RML2016a dataset.

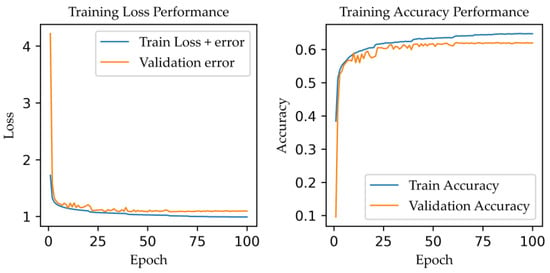

Figure 7 shows the accuracy and loss curves of the proposed HCTC model for training and validation. Figure 7 (left) shows the training loss (blue) and validation loss (orange) across 100 epochs. Both curves exhibit a steady decrease, and they stabilize toward the end of the training, indicating that the model does not overfit the training data.

Figure 7.

Training accuracy and loss curves for the proposed HCTC model.

Figure 7 (right) presents the training and validation accuracy curves. The training accuracy increases rapidly before reaching a plateau, while the validation accuracy follows a similar trend, suggesting that the model is learning in a generalizable manner.

During training, the training loss exhibited a general decreasing trend, signifying that the model was continuously learning and improving its predictions. Notably, in the first 20 epochs, the loss decreased significantly, indicating rapid learning in the initial stages. As the epochs progressed, the loss stabilized, showing the model’s ability to converge. The validation loss followed a similar pattern, suggesting that the model did not overfit the training data and generalized well to unseen data. This trend highlights the convergence speed and the promising performance of the proposed method.

To ensure that the model did not overfit the data, several strategies were employed during training: early stopping, learning-rate reduction on plateau, dropout, and L2 regularization. Early stopping monitored the validation loss and halted the training when the loss did not improve for a specified number of epochs (patience = 50), which prevented the model from overfitting the training data and preserved its ability to generalize to new, unseen data. Learning-rate reduction on plateau adjusted the learning rate dynamically: when the validation loss stagnated, the learning rate was reduced by a fixed factor (0.5 in our case). This adjustment helps the model to escape from local minima and can lead to better convergence without overfitting. L2 regularization was applied with a kernel regularizer, L2 (1 × 10⁻⁴), to the convolutional and dense layers. In addition, Gaussian dropout and batch normalization were used, which further help to reduce overfitting.

4.2. Numbers of Parameters and FLOPs and Accuracy at 0 dB and the Average Accuracy from 0 to 18 dB

Table 6 compares the AMR models in terms of the numbers of parameters and floating-point operations (FLOPs), the accuracy at 0 dB, and the average accuracy from 0 to 18 dB for the RML2016a dataset. This allows for a direct comparison of the performances, complexities, and computational efficiencies of the models. The proposed HCTC model achieved the highest performance with a competitive number of parameters and FLOPs. Models such as DenseNet and ResNet [34] had higher numbers of parameters and FLOPs and, consequently, higher computational costs. There are tradeoffs between the accuracy and the numbers of FLOPs and parameters, which help in selecting a specific model according to the application requirements.
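To show how parameter and FLOP counts of this kind arise, the sketch below estimates both for a single 1D convolutional layer. It is a back-of-the-envelope illustration and not the procedure used to produce Table 6: the input is assumed to be a single-channel sequence of 128 samples, causal padding is assumed to keep the output length at 128, and one multiply-accumulate is counted as two FLOPs (some tools count it as one). The function name `conv1d_cost` is hypothetical.

```python
def conv1d_cost(length, in_channels, filters, kernel_size, use_bias=True):
    """Return (trainable parameters, FLOPs) for a 1D convolution with length-preserving padding."""
    params = kernel_size * in_channels * filters + (filters if use_bias else 0)
    macs = length * kernel_size * in_channels * filters   # one MAC per tap, channel, filter, step
    return params, 2 * macs                               # one multiply plus one add per MAC


# 64 filters and a kernel size of eight, as in stage A; single input channel is an assumption.
params, flops = conv1d_cost(length=128, in_channels=1, filters=64, kernel_size=8)
print(params, flops)
```

Summing such per-layer estimates over a whole network gives the totals that are compared across models; parameters scale with the kernel and channel sizes, whereas FLOPs additionally scale with the sequence length.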

Table 6.

Numbers of parameters and FLOPs and accuracy at 0 dB and the average accuracy from 0 to 18 dB for the RML2016a dataset.

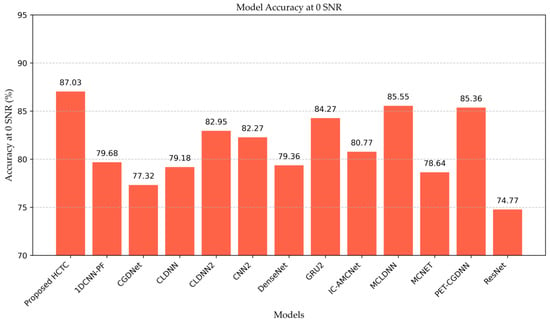

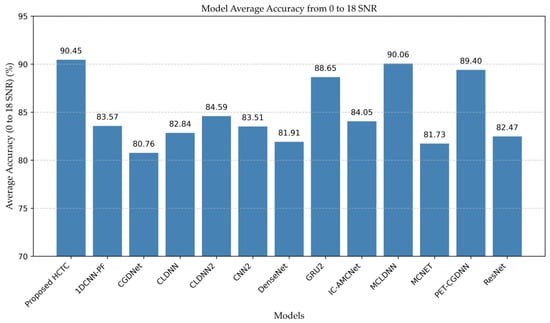

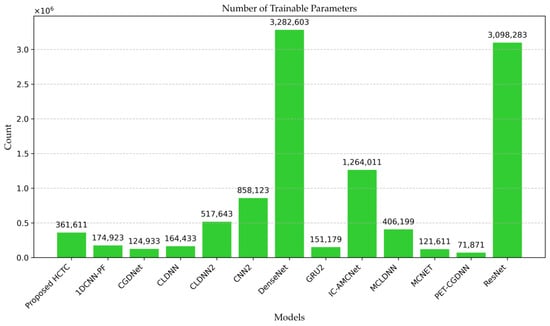

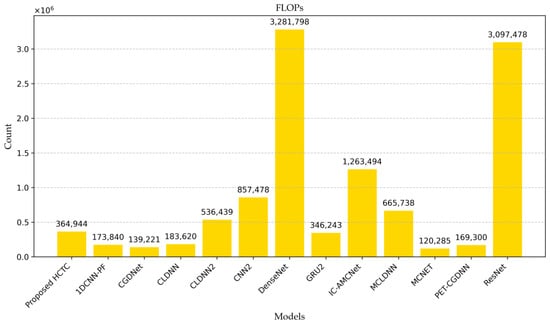

Performance metrics, such as the accuracy and numbers of parameters and FLOPs, are shown in Figure 8, Figure 9, Figure 10 and Figure 11.

Figure 8.

Accuracies of various models when evaluated at an SNR of 0 dB with the RML2016a dataset.

Figure 9.

Average accuracy of various models when evaluated at an SNR of 0 to 18 dB with the RML2016a dataset.

Figure 10.

Numbers of trainable parameters of various models with the RML2016a dataset.

Figure 11.

Numbers of FLOPs of various models with the RML2016a dataset.

Figure 8 presents a bar chart of the accuracies of the various models at an SNR of 0 dB with the RML2016a dataset. The proposed HCTC model performed best and, together with the MCLDNN [31] and PET-CGDNN [37] models, formed the group with the highest accuracies. In contrast, models such as ResNet [34] and CGDNet [36] exhibited lower accuracies, indicating that they are less effective at an SNR of 0 dB.

Figure 9 shows the average accuracies of the various models over a range of SNRs from 0 to 18 dB with the RML2016a dataset. This broader range of SNR values provided a comprehensive view of the performance of each model. Models such as the proposed HCTC and the MCLDNN [31] and PET-CGDNN [37] models consistently maintained high average accuracies, whereas models such as MCNet [30] and CGDNet [36] showed low average accuracies across the SNR range. This figure shows the overall robustness and reliability of the models and provides insights into how well they perform across a spectrum of practical noise environments.

Figure 10 shows the number of trainable parameters for each model, providing insight into its learning capacity. Models such as DenseNet and ResNet [34] possessed significantly higher numbers of trainable parameters, whereas models such as PET-CGDNN [37], CGDNet [36], and MCNet [30] had fewer parameters, which indicates reduced computational requirements. The proposed HCTC model has a competitive number of parameters while showing the highest accuracy at an SNR of 0 dB and the highest average accuracy over the SNR range from 0 dB to 18 dB. These results are pivotal for understanding the tradeoffs between model complexity and efficiency, which helps to balance performance with real-time deployment constraints.

Figure 11 shows the number of FLOPs required for each model, highlighting the computational complexity involved in running them. Models such as DenseNet and ResNet [34] required high numbers of FLOPs, indicating that they were computationally intensive. By contrast, models such as CGDNet [36] and MCNet [30] had fewer FLOPs, suggesting that they were more efficient in terms of the computational cost.

5. Experiments on Variations in the Model Configuration and Batch Size

The configuration of the transformer encoder was varied by altering the number of attention heads and the model depth. The proposed HCTC model employed two attention heads, a depth of 64, and a feed-forward dimension of 256. HCTC-A included two attention heads, a depth of 128, and a feed-forward dimension of 256. The other variants were labeled HCTC (Head 2, Depth 32), HCTC (Head 4, Depth 32), and HCTC (Head 4, Depth 64), and they were used as points of comparison for the proposed HCTC model. A greater depth allows for more complex feature extraction and representation, whereas a smaller depth may limit the capacity of the model to encode detailed information.

In the second experiment, the batch size was varied among 32, 64, 128, and 400 to investigate its influence on the training and performance of the proposed HCTC model. The literature suggests that a small batch size tends to improve model training on small datasets, whereas a large batch size speeds up training on large datasets. Batch sizes are typically chosen as powers of two, e.g., 2, 4, 8, 16, 32, and 64. Because the RML2016a dataset is large (220,000 samples), batch sizes of 32, 64, and 128 were tested together with 400, the batch size used by MCLDNN [31], which shows competitive performance. Based on this ablation experiment, a batch size of 400 was selected for the proposed method.
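The practical effect of the batch size on training can be seen from the number of weight updates per epoch. The sketch below assumes the 60% training split applied to the 220,000 samples of the RML2016a dataset and a final partial batch that is kept rather than dropped.

```python
import math

total_samples = 220_000
train_samples = total_samples * 60 // 100        # 60% training split

# Number of gradient updates per epoch for each batch size considered.
steps_per_epoch = {
    batch_size: math.ceil(train_samples / batch_size)
    for batch_size in (32, 64, 128, 400)
}
print(train_samples, steps_per_epoch)
```

A batch size of 400 requires far fewer updates per epoch than a batch size of 32, which is one reason large batches shorten the wall-clock time of each epoch on large datasets.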

By systematically varying the batch size and other architectural parameters, the aim was to optimize the training process and performance of the transformer encoder across different configurations. Figure 12 shows the accuracy curves of the experiment, in which the configuration adopted for the proposed HCTC model comprised two attention heads, a depth of 64, a feed-forward dimension of 256, and a batch size of 400.

Figure 12.

Results of the ablation study for model accuracy with the RML2016.10a dataset.

To justify the choice of two attention heads and a depth of 64, we experimented with the number of heads and the depth of the model. This choice balances the number of parameters against the accuracy. Two heads allow the model to capture different types of relationships within the data while keeping the architecture manageable. Increasing the depth increases the number of parameters and, consequently, the computational cost. The results of this experiment are shown in Table 7. A feed-forward dimension of 256 was chosen to provide sufficient capacity for non-linear transformations between the attention layers.

Table 7.

Accuracies and numbers of parameters for specific HCTC models represented by the numbers of heads, depths, and numbers of FF dimensions.
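The relationship between the depth and the parameter count can be illustrated with a short sketch that counts the trainable parameters of one standard transformer encoder layer. It assumes the layout of Vaswani et al. and interprets "depth" as the embedding width (d_model); both are assumptions, so the numbers are not expected to reproduce Table 7.

```python
def encoder_layer_params(d_model, d_ff):
    """Trainable parameters in one standard transformer encoder layer.

    Assumed layout: Q, K, V, and output projections of size
    d_model x d_model with biases, a two-layer feed-forward block,
    and two layer-normalization modules (scale and shift each).
    """
    attention = 4 * (d_model * d_model + d_model)
    feed_forward = (d_model * d_ff + d_ff) + (d_ff * d_model + d_model)
    layer_norms = 2 * (2 * d_model)
    return attention + feed_forward + layer_norms


# Hypothetical widths of 32 and 64 with a feed-forward dimension of 256.
for d_model in (32, 64):
    print(d_model, encoder_layer_params(d_model, d_ff=256))
```

In this layout the heads split d_model among themselves, so the number of heads does not change the count. In implementations that fix the width per head instead, the attention term grows with the number of heads.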

Experiment on Purely Convolutional Model and Pure Transformer Encoder Model

In the proposed HCTC model, we combine a convolutional network architecture with a transformer encoder architecture. Now, we experiment with each individual architecture as (A) a purely convolutional architecture and (B) a pure transformer encoder architecture. The experiments were performed on the RML2016.10a dataset. We considered the recognition accuracy for each of the model architectures and compared it with that for the proposed HCTC model. The recognition accuracy per SNR is presented in Table 8.

Table 8.

Accuracies of the proposed model, purely convolutional model, and pure transformer encoder model in the RML2016.10a dataset.

Table 8 shows that the proposed HCTC model outperforms both sub-models, achieving the highest accuracy. Notably, the transformer-only model displays a lower accuracy than the convolution-only model, indicating that the convolutional layer effectively extracts the most significant features from the input data. This highlights the importance of combining the convolutional model with the transformer encoder model to enhance the recognition accuracy.

Table 9 compares the three models (purely convolutional, pure transformer encoder, and the proposed HCTC) in terms of the total number of parameters and the average accuracy. The total number of parameters reflects the overall size of each model and shows that the proposed HCTC model is more complex than the other two models. Trainable parameters are adjusted during training, whereas non-trainable parameters remain constant. The HCTC model has the highest number of trainable parameters (361,611), which reflects its larger learning capacity and is accompanied by the highest accuracy. Table 9 also reports the average accuracies of the models at signal-to-noise ratios of 0–18 dB. The proposed HCTC model exhibits the highest average accuracy (90.46%), compared with 83.73% for the purely convolutional model and 63.90% for the pure transformer model.

Table 9.

Parameters and average accuracies of the models.
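The average accuracy over an SNR range, as reported in Table 9 for 0–18 dB, can be obtained from per-SNR accuracies as sketched below. The accuracy values in the example are placeholders and are not results from Table 8; the function name and its arguments are likewise hypothetical.

```python
def average_accuracy(acc_by_snr, snr_min=0, snr_max=18):
    """Mean accuracy over all SNR points within [snr_min, snr_max] dB."""
    selected = [acc for snr, acc in acc_by_snr.items()
                if snr_min <= snr <= snr_max]
    if not selected:
        raise ValueError("no SNR points in the requested range")
    return sum(selected) / len(selected)


# Placeholder accuracies in percent, NOT values from the paper.
example = {-4: 60.0, -2: 75.0, 0: 85.0, 2: 90.0, 4: 91.0}
print(round(average_accuracy(example), 2))
```

Only the SNR points at 0, 2, and 4 dB contribute in this example; the negative-SNR points are excluded by the range filter.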

6. Open Issues in AMR Models and Future Work

Although there are many studies on modulation recognition, several open issues remain that require solutions in future studies. With the recent development of DL-based algorithms, efforts are being made to obtain an effective, reliable, and robust classifier. The remainder of this section discusses the open issues and future work.

Radio signals vary widely with the modulation scheme, noise level, and prevailing transmission conditions. Classification is difficult in advanced communications systems that use OFDM or M-ary QAM schemes for modulation. Real-world signals are often corrupted by noise and interference from other transmitters, which makes signal classification difficult. Datasets that include all types of modulation signals with appropriate labels are required. Recent models, particularly DL-based models, are computationally complex and require high processing power and large amounts of memory; therefore, they often cannot be used in real-time processing. Models also tend to perform well on known data but poorly on unknown data. It is sometimes difficult to identify the relevant features that contribute to an accurate classification. Another issue is that models assume a fixed input length, whereas real-time signals have varying frame lengths, which may affect the model’s performance.
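One common workaround for the fixed-input-length issue is to crop or zero-pad each received frame before classification. The sketch below shows this idea; it is not part of the proposed method, and the target length of 128 samples is an assumption chosen to match the frame length commonly used with the RadioML 2016.10a data.

```python
def fit_to_length(i_samples, q_samples, target_len=128):
    """Center-crop or zero-pad an I/Q frame to exactly target_len samples."""
    if len(i_samples) != len(q_samples):
        raise ValueError("I and Q components must have the same length")
    n = len(i_samples)
    if n >= target_len:
        # Keep the middle target_len samples of a long frame.
        start = (n - target_len) // 2
        end = start + target_len
        return list(i_samples[start:end]), list(q_samples[start:end])
    # Append zeros to a short frame.
    pad = [0.0] * (target_len - n)
    return list(i_samples) + pad, list(q_samples) + pad


short_i, short_q = fit_to_length([0.1] * 100, [0.2] * 100)
long_i, long_q = fit_to_length(list(range(200)), list(range(200)))
print(len(short_i), len(long_i))
```

Zero padding and cropping both alter the signal statistics seen by the classifier, so their effect on accuracy would need to be checked experimentally.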

Future work should concentrate on combining feature extraction methods, such as statistical, time-domain, and frequency-domain features, or on fusing these features for AMR. Furthermore, image-based representations of input signals, such as eye diagrams, constellation images, AF images, and their combinations, should be studied. Image-based representation models provide innovative ways to visualize modulation schemes by transforming time-domain or frequency-domain data into visual formats. These formats help in understanding and extracting features from signals prior to applying deep-learning techniques. By employing image representation models, we can enhance our understanding of the underlying patterns within the data, facilitating more intuitive and effective classification processes. There is also scope for hybrid DL methods, in which multiple methods are combined for modulation classification tasks.
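As an illustration of an image-based representation, the sketch below converts I/Q samples into a coarse constellation image by two-dimensional binning. It is a minimal sketch under stated assumptions: the function name, the bin count, and the amplitude limit are hypothetical, and published constellation-image methods typically use finer grids and additional processing.

```python
def constellation_image(i_samples, q_samples, bins=8, limit=1.5):
    """Count I/Q points per cell of a bins x bins grid over [-limit, limit)."""
    image = [[0] * bins for _ in range(bins)]
    width = 2 * limit / bins
    for i_val, q_val in zip(i_samples, q_samples):
        # Skip points that fall outside the plotted region.
        if not (-limit <= i_val < limit and -limit <= q_val < limit):
            continue
        col = min(int((i_val + limit) / width), bins - 1)
        # Row 0 is the top of the image, so larger Q values map to smaller rows.
        row = bins - 1 - min(int((q_val + limit) / width), bins - 1)
        image[row][col] += 1
    return image


# Four ideal QPSK points on a hypothetical 2 x 2 grid.
qpsk_i = [1.0, -1.0, -1.0, 1.0]
qpsk_q = [1.0, 1.0, -1.0, -1.0]
print(constellation_image(qpsk_i, qpsk_q, bins=2, limit=2.0))
```

Each ideal QPSK point falls into its own quadrant cell, which is the kind of spatial pattern an image classifier could learn to recognize.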

7. Potential Real-World Applications

7.1. The Military and Secure Communication

Modulation classification, or recognition, is an essential part of secure communication. The proposed modulation classification model differentiates modulation types, which helps to identify legitimate signals and detect potential threats, thereby supporting operational security in defense communication [3,49]. Recent advancements in AMR technologies are vital for military applications, as highlighted by Wang et al. [63], who emphasize the necessity of modulation recognition in obstructing enemy communication. Additionally, Peng et al. [64] discuss the widespread use of modulation recognition technology in modern radio communications, including military electronic warfare, where determining the modulation type of intercepted enemy signals is crucial for successful demodulation and information retrieval. This technology not only aids in electronic warfare support but also plays a significant role in electronic protection against enemy jamming signals.

7.2. Cognitive Radio Networks

The proposed modulation classification model can improve dynamic spectral access, enabling the efficient use of available frequencies [49]. As noted by Kim et al. [65], AMR methods significantly improve the spectral utilization efficiency by allowing cognitive radios to share licensed bands between licensed and unlicensed users. Moreover, the work of Kim et al. [66] highlights the importance of robust AMC methods in cognitive radio networks, which are essential for meeting the spectral demands of emerging technologies.

7.3. Satellite Communications

The proposed model can be applied in satellite systems to improve signal detection and decoding, leading to more robust communication links in challenging environments [67,68].

7.4. Internet of Things (IoT)

In IoT networks, the proposed model can aid in efficiently classifying the modulation types used by various devices, improving resource allocation and energy efficiency. As IoT devices increasingly rely on modern communication methods, such as multiple input–multiple output (MIMO) systems, accurately identifying the modulation types of received signals becomes vital for optimizing the performance. In [69], an efficient convolutional neural network for automatic modulation classification (AMC) is proposed, demonstrating that the authors’ model significantly reduces the number of parameters while maintaining the performance, making it suitable for low-power IoT devices. Additionally, Rashvand et al. [70] introduce an approach that uses transformer networks to enhance AMR for IoT applications. Their work emphasizes the importance of real-time processing for edge devices, which is crucial for IoT ecosystems. The proposed methods show promising results in terms of the accuracy and efficiency, addressing the unique challenges faced in the IoT context. Moreover, Zhou et al. [71] highlight the necessity of AMC in spectral monitoring, especially given the challenges posed by the proliferation of IoT devices that may misuse spectral resources. Their research emphasizes that effective modulation classification can aid in spectral regulation and ensure the efficient utilization of electromagnetic space, even under non-cooperative communication conditions.

7.5. 5G-and-Beyond Communications Systems

In next-generation wireless networks, the proposed model can enhance modulation recognition, facilitating adaptive modulation techniques that optimize data rates and reliability under varying conditions [49]. Clement et al. [72] propose a novel deep-learning architecture that addresses the challenges of identifying modulation types in dynamic and heterogeneous 5G environments, particularly in non-cooperative scenarios. In [73], a deep-learning-based modulation classifier is designed explicitly for communications networks beyond 5G. The authors emphasize the need for real-time modulation recognition to accommodate a broader range of signals and show that hyperparameter optimization and diverse data formats can enhance the classification effectiveness.

8. Conclusions

The Hybrid Convolutional Transformer Classifier (HCTC) model is proposed in this work for modulation classification and achieves a strong classification performance. The proposed HCTC model uses a convolutional layer, a transformer encoder, and a DNN to extract spatial and temporal features from I/Q sequences. The proposed model was evaluated using the benchmark RadioML database, and its performance was compared with those of 14 widely used algorithms. The performance evaluation metrics included the recognition accuracy across SNRs, confusion matrices, the numbers of parameters used for training, and FLOPs. The experimental results demonstrate that the proposed HCTC model achieves a better performance in the classification of modulation signals. In addition, the proposed HCTC method was studied for different batch sizes and model configurations. In future studies, ensemble hybrid DL methods and image representation models for modulation recognition should be developed.

Author Contributions

Conceptualization, J.D.R. and H.-N.K.; methodology, J.D.R. and H.-N.K.; software, J.D.R.; writing—original draft preparation, J.D.R.; writing—review and editing, J.D.R., D.-H.P. and S.-Y.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no funding.

Data Availability Statement

The RadioML database used in this work was downloaded from the website https://www.deepsig.ai/Datasets/ (accessed on 17 October 2023).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Jdid, B.; Hassan, K.; Dayoub, I.; Lim, W.H.; Mokayef, M. Machine Learning Based Automatic Modulation Recognition for Wireless Communications: A Comprehensive Survey. IEEE Access 2021, 9, 57851–57873.

- Ma, H.; Xu, G.; Meng, H.; Wang, M.; Yang, S.; Wu, R.; Wang, W. Cross Model Deep Learning Scheme for Automatic Modulation Classification. IEEE Access 2020, 8, 78923–78931.

- Zhu, Z.; Nandi, A.K. Automatic Modulation Classification: Principles, Algorithms and Applications; John Wiley & Sons: Hoboken, NJ, USA, 2015; ISBN 1118906497.

- Ge, Z.; Jiang, H.; Guo, Y.; Zhou, J. Accuracy Analysis of Feature-Based Automatic Modulation Classification via Deep Neural Network. Sensors 2021, 21, 8252.

- Hameed, F.; Dobre, O.A.; Popescu, D.C. On the Likelihood-Based Approach to Modulation Classification. IEEE Trans. Wirel. Commun. 2009, 8, 5884–5892.

- Dobre, O.A.; Hameed, F. Likelihood-Based Algorithms for Linear Digital Modulation Classification in Fading Channels. In Proceedings of the 2006 Canadian Conference on Electrical and Computer Engineering, Ottawa, ON, Canada, 7–10 May 2006; pp. 1347–1350.

- Panagiotou, P.; Anastasopoulos, A.; Polydoros, A. Likelihood Ratio Tests for Modulation Classification. In Proceedings of the MILCOM 2000 Proceedings, 21st Century Military Communications, Architectures and Technologies for Information Superiority (Cat. No. 00CH37155), Los Angeles, CA, USA, 22–25 October 2000; IEEE: Piscataway, NJ, USA, 2000; Volume 2, pp. 670–674.

- Derakhtian, M.; Tadaion, A.A.; Gazor, S. Modulation Classification of Linearly Modulated Signals in Slow Flat Fading Channels. IET Signal Process. 2011, 5, 443–450.

- Nandi, A.K.; Azzouz, E.E. Algorithms for Automatic Modulation Recognition of Communication Signals. IEEE Trans. Commun. 1998, 46, 431–436.

- Gardner, W.A.; Spooner, C.M. Cyclic Spectral Analysis for Signal Detection and Modulation Recognition. In Proceedings of the MILCOM 88, 21st Century Military Communications - What’s Possible?, Conference Record, Military Communications Conference, San Diego, CA, USA, 23–26 October 1988; Volume 2, pp. 419–424.

- Spooner, C.M.; Brown, W.A.; Yeung, G.K. Automatic Radio-Frequency Environment Analysis. In Proceedings of the Conference Record of the Thirty-Fourth Asilomar Conference on Signals, Systems and Computers (Cat. No. 00CH37154), Pacific Grove, CA, USA, 29 October–1 November 2000; IEEE: Piscataway, NJ, USA, 2000; Volume 2, pp. 1181–1186.

- Pawar, S.U.; Doherty, J.F. Modulation Recognition in Continuous Phase Modulation Using Approximate Entropy. IEEE Trans. Inf. Forensics Secur. 2011, 6, 843–852.

- Zhang, Z.; Li, Y.; Zhu, X.; Lin, Y. A Method for Modulation Recognition Based on Entropy Features and Random Forest. In Proceedings of the 2017 IEEE International Conference on Software Quality, Reliability and Security Companion (QRS-C), Prague, Czech Republic, 25–29 July 2017; pp. 243–246.

- Zhang, Z.; Wang, C.; Gan, C.; Sun, S.; Wang, M. Automatic Modulation Classification Using Convolutional Neural Network with Features Fusion of SPWVD and BJD. IEEE Trans. Signal Inf. Process. Netw. 2019, 5, 469–478.

- Wong, M.L.D.; Nandi, A.K. Automatic Digital Modulation Recognition Using Artificial Neural Network and Genetic Algorithm. Signal Process. 2004, 84, 351–365.

- Lopatka, J.; Pedzisz, M. Automatic Modulation Classification Using Statistical Moments and a Fuzzy Classifier. In Proceedings of the WCC 2000-ICSP 2000. 2000 5th International Conference on Signal Processing Proceedings. 16th World Computer Congress 2000, Beijing, China, 21–25 August 2000; IEEE: Piscataway, NJ, USA, 2000; Volume 3, pp. 1500–1506.

- Muller, F.C.B.F.; Cardoso, C.; Klautau, A. A Front End for Discriminative Learning in Automatic Modulation Classification. IEEE Commun. Lett. 2011, 15, 443–445.

- Park, C.-S.; Choi, J.-H.; Nah, S.-P.; Jang, W.; Kim, D.Y. Automatic Modulation Recognition of Digital Signals Using Wavelet Features and SVM. In Proceedings of the 2008 10th International Conference on Advanced Communication Technology, Gangwon, Republic of Korea, 17–20 February 2008; IEEE: Piscataway, NJ, USA, 2008; Volume 1, pp. 387–390.

- Kim, K.; Akbar, I.A.; Bae, K.K.; Um, J.-S.; Spooner, C.M.; Reed, J.H. Cyclostationary Approaches to Signal Detection and Classification in Cognitive Radio. In Proceedings of the 2007 2nd IEEE International Symposium on New Frontiers in Dynamic Spectrum Access Networks, Dublin, Ireland, 17–20 April 2007; IEEE: Piscataway, NJ, USA, 2007; pp. 212–215.

- Abdelmutalab, A.; Assaleh, K.; El-Tarhuni, M. Automatic Modulation Classification Using Polynomial Classifiers. In Proceedings of the 2014 IEEE 25th Annual International Symposium on Personal, Indoor, and Mobile Radio Communication (PIMRC), Washington, DC, USA, 2–5 September 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 806–810.

- Aslam, M.W.; Zhu, Z.; Nandi, A.K. Automatic Modulation Classification Using Combination of Genetic Programming and KNN. IEEE Trans. Wirel. Commun. 2012, 11, 2742–2750.

- Wang, F.; Shang, T.; Hu, C.; Liu, Q. Automatic Modulation Classification Using Hybrid Data Augmentation and Lightweight Neural Network. Sensors 2023, 23, 4187.

- Wang, D.; Lin, M.; Zhang, X.; Huang, Y.; Zhu, Y. Automatic Modulation Classification Based on CNN-Transformer Graph Neural Network. Sensors 2023, 23, 7281.

- O’Shea, T.J.; Corgan, J.; Clancy, T.C. Convolutional Radio Modulation Recognition Networks. In Proceedings of the Engineering Applications of Neural Networks: 17th International Conference, EANN 2016, Aberdeen, UK, 2–5 September 2016; Proceedings 17. Springer: Berlin/Heidelberg, Germany, 2016; pp. 213–226.

- Rajendran, S.; Meert, W.; Giustiniano, D.; Lenders, V.; Pollin, S. Deep Learning Models for Wireless Signal Classification with Distributed Low-Cost Spectrum Sensors. IEEE Trans. Cogn. Commun. Netw. 2018, 4, 433–445.

- Ke, Z.; Vikalo, H. Real-Time Radio Technology and Modulation Classification via an LSTM Auto-Encoder. IEEE Trans. Wirel. Commun. 2022, 21, 370–382.

- Tekbıyık, K.; Ekti, A.R.; Görçin, A.; Kurt, G.K.; Keçeci, C. Robust and Fast Automatic Modulation Classification with CNN under Multipath Fading Channels. In Proceedings of the 2020 IEEE 91st Vehicular Technology Conference (VTC2020-Spring), Antwerp, Belgium, 25–28 May 2020; pp. 1–6.

- West, N.E.; O’Shea, T. Deep Architectures for Modulation Recognition. In Proceedings of the 2017 IEEE International Symposium on Dynamic Spectrum Access Networks (DySPAN), Baltimore, MD, USA, 6–9 March 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6.

- Meng, F.; Chen, P.; Wu, L.; Wang, X. Automatic Modulation Classification: A Deep Learning Enabled Approach. IEEE Trans. Veh. Technol. 2018, 67, 10760–10772.

- Huynh-The, T.; Hua, C.H.; Pham, Q.V.; Kim, D.S. MCNet: An Efficient CNN Architecture for Robust Automatic Modulation Classification. IEEE Commun. Lett. 2020, 24, 811–815.

- Xu, J.; Luo, C.; Parr, G.; Luo, Y. A Spatiotemporal Multi-Channel Learning Framework for Automatic Modulation Recognition. IEEE Wirel. Commun. Lett. 2020, 9, 1629–1632.

- Perenda, E.; Rajendran, S.; Pollin, S. Automatic Modulation Classification Using Parallel Fusion of Convolutional Neural Networks. In Proceedings of the BalkanCom’19, Skopje, Republic of Macedonia, 10–12 June 2019.

- Hermawan, A.P.; Ginanjar, R.R.; Kim, D.S.; Lee, J.M. CNN-Based Automatic Modulation Classification for beyond 5G Communications. IEEE Commun. Lett. 2020, 24, 1038–1041.

- Liu, X.; Yang, D.; El Gamal, A. Deep Neural Network Architectures for Modulation Classification. In Proceedings of the 2017 51st Asilomar Conference on Signals, Systems, and Computers, Pacific Grove, CA, USA, 29 October–1 November 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 915–919.

- Hong, D.; Zhang, Z.; Xu, X. Automatic Modulation Classification Using Recurrent Neural Networks. In Proceedings of the 2017 3rd IEEE International Conference on Computer and Communications (ICCC), Chengdu, China, 13–16 December 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 695–700.

- Njoku, J.N.; Morocho-Cayamcela, M.E.; Lim, W. CGDNet: Efficient Hybrid Deep Learning Model for Robust Automatic Modulation Recognition. IEEE Netw. Lett. 2021, 3, 47–51.

- Zhang, F.; Luo, C.; Xu, J.; Luo, Y. An Efficient Deep Learning Model for Automatic Modulation Recognition Based on Parameter Estimation and Transformation. IEEE Commun. Lett. 2021, 25, 3287–3290.

- Mendis, G.J.; Wei, J.; Madanayake, A. Deep Learning-Based Automated Modulation Classification for Cognitive Radio. In Proceedings of the 2016 IEEE International Conference on Communication Systems (ICCS), Shenzhen, China, 14–16 December 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–6.

- Lee, J.; Kim, B.; Kim, J.; Yoon, D.; Choi, J.W. Deep Neural Network-Based Blind Modulation Classification for Fading Channels. In Proceedings of the 2017 International Conference on Information and Communication Technology Convergence (ICTC), Jeju, Republic of Korea, 18–20 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 551–554.

- Peng, S.; Jiang, H.; Wang, H.; Alwageed, H.; Zhou, Y.; Sebdani, M.M.; Yao, Y.-D. Modulation Classification Based on Signal Constellation Diagrams and Deep Learning. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 718–727.

- Huang, S.; Chai, L.; Li, Z.; Zhang, D.; Yao, Y.; Zhang, Y.; Feng, Z. Automatic Modulation Classification Using Compressive Convolutional Neural Network. IEEE Access 2019, 7, 79636–79643.

- Shi, F.; Hu, Z.; Yue, C.; Shen, Z. Combining Neural Networks for Modulation Recognition. Digit. Signal Process. 2022, 120, 103264.

- Zhang, Z.; Luo, H.; Wang, C.; Gan, C.; Xiang, Y. Automatic Modulation Classification Using CNN-LSTM Based Dual-Stream Structure. IEEE Trans. Veh. Technol. 2020, 69, 13521–13531.

- Chang, S.; Huang, S.; Zhang, R.; Feng, Z.; Liu, L. Multitask-Learning-Based Deep Neural Network for Automatic Modulation Classification. IEEE Internet Things J. 2021, 9, 2192–2206.

- Zhang, F.; Luo, C.; Xu, J.; Luo, Y.; Zheng, F.-C. Deep Learning Based Automatic Modulation Recognition: Models, Datasets, and Challenges. Digit. Signal Process. 2022, 129, 103650.

- Xu, J.L.; Su, W.; Zhou, M. Likelihood-Ratio Approaches to Automatic Modulation Classification. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2011, 41, 455–469.

- Abdel-Moneim, M.A.; El-Shafai, W.; Abdel-Salam, N.; El-Rabaie, E.M.; Abd El-Samie, F.E. A Survey of Traditional and Advanced Automatic Modulation Classification Techniques, Challenges, and Some Novel Trends. Int. J. Commun. Syst. 2021, 34, e4762.

- Liu, X.; Li, C.J.; Jin, C.T.; Leong, P.H.W. Wireless Signal Representation Techniques for Automatic Modulation Classification. IEEE Access 2022, 10, 84166–84187.

- Huynh-The, T.; Pham, Q.V.; Nguyen, T.V.; Nguyen, T.T.; Ruby, R.; Zeng, M.; Kim, D.S. Automatic Modulation Classification: A Deep Architecture Survey. IEEE Access 2021, 9, 142950–142971.

- Xiao, W.; Luo, Z.; Hu, Q. A Review of Research on Signal Modulation Recognition Based on Deep Learning. Electronics 2022, 11, 2764.

- Peng, S.; Sun, S.; Yao, Y.D. A Survey of Modulation Classification Using Deep Learning: Signal Representation and Data Preprocessing. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 7020–7038.

- Wang, T.; Yang, G.; Chen, P.; Xu, Z.; Jiang, M.; Ye, Q. A Survey of Applications of Deep Learning in Radio Signal Modulation Recognition. Appl. Sci. 2022, 12, 12052.

- O’Shea, T.J.; West, N. Radio Machine Learning Dataset Generation with GNU Radio. In Proceedings of the GNU Radio Conference, Boulder, CO, USA, 12–16 September 2016; Volume 1.

- Deepsig. Available online: https://www.deepsig.ai/datasets/ (accessed on 17 October 2023).

- Nair, V.; Hinton, G.E. Rectified linear units improve restricted Boltzmann machines. In Proceedings of the 27th International Conference on Machine Learning (ICML-10), Haifa, Israel, 21–24 June 2010; pp. 807–814.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Zeiler, M.D.; Ranzato, M.; Monga, R.; Mao, M.; Yang, K.; Le, Q.V.; Nguyen, P.; Senior, A.; Vanhoucke, V.; Dean, J.; et al. On rectified linear units for speech processing. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 3517–3521.

- Dahl, G.E.; Sainath, T.N.; Hinton, G.E. Improving deep neural networks for LVCSR using rectified linear units and dropout. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 8609–8613.

- Nwankpa, C.; Ijomah, W.; Gachagan, A.; Marshall, S. Activation functions: Comparison of trends in practice and research for deep learning. arXiv 2018, arXiv:1811.03378.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Advances in Neural Information Processing Systems; NIPS: Denver, CO, USA, 2017; Volume 30.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Ba, J.L. Layer normalization. arXiv 2016, arXiv:1607.06450.

- Wang, Y.; Lu, Q.; Jin, Y.; Zhang, H. Communication modulation signal recognition based on the deep multi-hop neural network. J. Frankl. Inst. 2021, 358, 6368–6384.

- Peng, Y.; Guo, L.; Yan, J.; Tao, M.; Fu, X.; Lin, Y.; Gui, G. Automatic Modulation Classification Using Deep Residual Neural Network with Masked Modeling for Wireless Communications. Drones 2023, 7, 390.

- Kim, S.-H.; Kim, J.-W.; Doan, V.-S.; Kim, D.-S. Lightweight Deep Learning Model for Automatic Modulation Classification in Cognitive Radio Networks. IEEE Access 2020, 8, 197532–197541.

- Kim, S.-H.; Kim, J.-W.; Nwadiugwu, W.-P.; Kim, D.-S. Deep Learning-Based Robust Automatic Modulation Classification for Cognitive Radio Networks. IEEE Access 2021, 9, 92386–92393.

- Toro-Betancur, V.; Valencia, A.C.; Bernal, J.I.M. Signal detection and modulation classification for satellite communications. In Proceedings of the 2020 3rd International Conference on Signal Processing and Machine Learning, Beijing, China, 22–24 October 2020; pp. 114–118.

- Jiang, J.; Wang, Z.; Zhao, H.; Qiu, S.; Li, J. Modulation recognition method of satellite communication based on CLDNN model. In Proceedings of the 2021 IEEE 30th International Symposium on Industrial Electronics (ISIE), Kyoto, Japan, 20–23 June 2021; pp. 1–6.

- Usman, M.; Lee, J.-A. AMC-IoT: Automatic Modulation Classification Using Efficient Convolutional Neural Networks for Low Powered IoT Devices. In Proceedings of the 2020 International Conference on Information and Communication Technology Convergence (ICTC), Jeju, Republic of Korea, 21–23 October 2020; pp. 288–293.

- Rashvand, N.; Witham, K.; Maldonado, G.; Katariya, V.; Marer Prabhu, N.; Schirner, G.; Tabkhi, H. Enhancing automatic modulation recognition for IoT applications using transformers. IoT 2024, 5, 212–226.

- Zhou, Q.; Zhang, R.; Zhang, F.; Jing, X. An automatic modulation classification network for IoT terminal spectrum monitoring under zero-sample situations. EURASIP J. Wirel. Commun. Netw. 2022, 2022, 25.

- Clement, J.C.; Indira, N.; Vijayakumar, P.; Nandakumar, R. Deep learning based modulation classification for 5G and beyond wireless systems. Peer Peer Netw. Appl. 2021, 14, 319–332.

- Kaya, O.; Karabulut, M.A.; Shah, A.F.M.S.; Ilhan, H. Modulation Classifier Based on Deep Learning for Beyond 5G Communications. In Proceedings of the 2024 47th International Conference on Telecommunications and Signal Processing (TSP), Prague, Czech Republic, 10–12 July 2024; pp. 336–339.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).