Abstract

Federated learning (FL) keeps data decentralized while multiple devices collaboratively train a shared machine learning model. Because sensitive data stay on the local devices, this approach protects privacy. However, privacy protection and non-independent and identically distributed (non-IID) data remain significant challenges for many current FL techniques. This paper proposes FedKADP, a personalized federated learning method that integrates knowledge distillation and differential privacy to address both issues. A bidirectional feedback mechanism establishes an interactive tuning loop between knowledge distillation and differential privacy, which enables dynamic tuning and continuous performance optimization while protecting user privacy. By tracking privacy overhead with Rényi differential privacy theory, the approach effectively balances model performance and privacy protection. Experimental results on the MNIST and CIFAR-10 datasets show that FedKADP outperforms conventional federated learning techniques, particularly on non-IID data: it reduces model heterogeneity, accelerates global model convergence, and improves validation accuracy, offering a new approach to federated learning.

1. Introduction

The rise of big data has transformed information processing and knowledge acquisition. Data-driven machine learning techniques offer great potential to improve efficiency across industries, driving innovation in areas such as healthcare and finance. Machine learning has undoubtedly become a key driver of modern technological innovation. However, this wave of innovation also poses privacy and security challenges, especially when it comes to sensitive data such as personal medical records [1] and financial information [2]. Ensuring that personal privacy is not compromised while reaping the benefits of data-driven machine learning has attracted extensive attention from academia, industry, and policy makers.

In this context, federated learning (FL) [3] is a novel machine learning technique that enables multiple participants to jointly train a shared model without exchanging data. This approach exploits the value of distributed data while protecting privacy and reducing the risk of data leakage, which makes it an attractive solution for data-rich but highly privacy-sensitive industries [4].

Although FL avoids direct data exchange, model parameters may still reveal sensitive information: by analyzing gradients, malicious participants or attackers may infer data features or even reconstruct training data, although the data themselves remain local [5]. Further enhancing privacy protection in FL against such potential violations has therefore become a research priority. Abadi et al. [6] were among the first to combine differential privacy (DP) with deep learning, proposing a differentially private stochastic gradient descent (DP-SGD) algorithm. Although they found the loss in model quality under DP to be acceptable, this loss may still be significant for some applications. Noble et al. [7] introduced a differentially private FL algorithm tailored to highly heterogeneous data and analyzed the tradeoff between privacy and model utility; however, applying the same level of noise to all clients may not give the best balance between utility and privacy. Kang et al. [8] proposed a DP-based method that adds noise before model aggregation, which effectively prevents information leakage, and provided a thorough convergence analysis. However, real-world data are often non-independent and identically distributed (non-IID), which may affect the actual performance of the algorithm. Thus, when applying DP to FL, balancing the effectiveness of model training with privacy protection is an urgent problem.

Another major difficulty in FL is the non-IID nature of the data [9]. In the real world, data originating from different environments and participants vary significantly in distribution, which makes model training harder [10,11]. Addressing this issue effectively to improve model generalization and overall performance is another important research direction in FL. Jeong et al. [12] proposed federated distillation and augmentation to improve on-device machine learning training while taking privacy protection into consideration; however, it remains unclear how to ensure the privacy of the generated data and how to quantify the privacy protection. Fallah et al. [13] introduced a personalized FL approach based on the model-agnostic meta-learning framework, which allows users to fine-tune models on their local data. However, meta-learning introduces additional computational steps, and although approximation algorithms have been proposed to reduce the computational burden, they still impose significant costs on resource-constrained devices. Tursunboev et al. [14] proposed a hierarchical federated learning algorithm designed for edge-aided unmanned aerial vehicle networks that performs well on non-IID data, but it may face challenges in communication overhead and resource management in a wider range of application scenarios. Thus, for FL in non-IID environments, finding a solution that enhances model performance and generalization, improves communication efficiency, and ensures privacy protection remains an open challenge, which highlights the ongoing need for innovative approaches to these multifaceted issues.

In summary, existing research faces three major challenges in integrating DP and knowledge distillation into FL. First, although FL avoids direct data exchange, the exchanged model parameters and updates may still expose sensitive information, creating a risk of inference or reconstruction attacks. Second, it is difficult to balance privacy protection and model utility when integrating DP and knowledge distillation, and using uniform noise hinders performance improvement in the later stages of training. Third, the non-IID characteristics of data in FL environments complicate the integration of knowledge distillation: inconsistent data distributions across clients can hinder knowledge transfer and degrade the generalization and performance of the model. To overcome these issues, this work proposes FedKADP, a personalized FL strategy based on knowledge distillation and DP. Its main contributions are as follows:

- (1) A personalized FL framework combining knowledge distillation and DP is proposed for non-IID data environments. It incorporates knowledge distillation into local model training to enhance the model's generalization capabilities and applies a differential privacy mechanism to perturb the output parameters with noise;

- (2) A bidirectional feedback mechanism is proposed that adaptively adjusts the knowledge distillation and DP parameters according to the model's performance and the user's privacy requirements, balancing privacy protection and model performance to achieve the best model performance at the required privacy level;

- (3) Experiments demonstrate that FedKADP improves robustness and accuracy in non-IID data environments, maximizes model performance at the same privacy-preserving strength, and significantly reduces communication cost.

2. Relevant Definitions

2.1. Federated Learning

Federated learning is a distributed machine learning technique that trains models without aggregating data from multiple local sources in a single central location. In FL, training is performed locally: clients send their updated model parameters to a central server through a communication protocol, and the server aggregates them to update the global model. Because the data stay local throughout this decentralized process, FL reduces data transmission and protects user privacy. Formally, the optimization objective of traditional FL can be expressed as follows:

$$\min_{w} F(w) = \sum_{k=1}^{N} \frac{n_k}{n} F_k(w)$$

Here, $w$ represents the global model parameters; $N$ is the number of clients participating in FL; $n_k$ is the number of data samples of the $k$-th client; $n = \sum_{k=1}^{N} n_k$ is the total number of data samples across all clients; and $F_k(w)$ is the local loss function of the $k$-th client.

Each client independently updates its model parameters based on its local dataset and then sends these updates to a central server. The central server aggregates these updates into a new global model. This aggregation process typically uses a weighted average, which can be expressed as follows:

$$w_{t+1} = \sum_{k=1}^{N} \frac{n_k}{n} w_{t+1}^{k}$$

Here, $w_{t+1}^{k}$ denotes the model parameters of the $k$-th client after local training at time step $t$, and $w_{t+1}$ represents the updated global model parameters.
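The weighted average above can be sketched in a few lines of Python. This is a minimal illustration rather than the paper's implementation: it assumes each client's parameters arrive as a dictionary that maps a (hypothetical) layer name to a flat list of floats, standing in for a PyTorch `state_dict`.

```python
from typing import Dict, List, Sequence

# Hypothetical parameter container: layer name -> flattened weights.
Params = Dict[str, List[float]]


def fedavg(client_params: Sequence[Params], client_sizes: Sequence[int]) -> Params:
    """Aggregate client models as sum_k (n_k / n) * w^k."""
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("expected one sample count per client")
    n = sum(client_sizes)  # total number of samples across the clients
    global_params: Params = {}
    for name in client_params[0]:
        acc = [0.0] * len(client_params[0][name])
        for params, n_k in zip(client_params, client_sizes):
            weight = n_k / n  # clients holding more data receive more weight
            for i, value in enumerate(params[name]):
                acc[i] += weight * value
        global_params[name] = acc
    return global_params


if __name__ == "__main__":
    clients = [{"w": [1.0, 2.0]}, {"w": [3.0, 4.0]}]
    print(fedavg(clients, [1, 3]))  # {'w': [2.5, 3.5]}
```

Weighting by $n_k/n$ makes the aggregate equal to the gradient-free analogue of minimizing the sample-weighted objective $F(w)$, so a client with three times as much data pulls the average three times as hard.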

FL aims to successfully train a global model using these repeated procedures without explicitly exchanging data. This method preserves privacy while fostering collaborative learning across devices and helps address the data silos issue.

2.2. Knowledge Distillation

Knowledge distillation [15] is a model compression technique that transfers knowledge from a complex model to a smaller one in order to reduce model size and computational cost. It works by "distilling" the outputs of the complex model into the simplified model. The complex model is generally called the teacher model, and the simplified model is called the student model.

Here, the teacher model is represented by a complex model $f_T$, and the student model by a simplified model $f_S$. Given an input sample $x$ and its "hard label" $y$, the soft probability distribution output by the teacher model is $p_T = f_T(x)$, and that output by the student model is $p_S = f_S(x)$. The loss function for knowledge distillation can then be expressed as follows:

$$\mathcal{L}_{KD} = \lambda\,\mathcal{L}_{CE}(y, p_S) + (1-\lambda)\,D_{KL}(p_T \,\|\, p_S)$$

Here, $\lambda$ is a hyperparameter that balances the two loss terms, $\mathcal{L}_{CE}$ denotes the cross-entropy loss function, and $D_{KL}$ denotes the Kullback–Leibler divergence. The first term measures the cross-entropy between the "hard labels" and the student model's predictions, which preserves the student's ability to fit the "hard labels". The second term measures the similarity between the outputs of the teacher and student models, namely the Kullback–Leibler divergence between the teacher's "soft labels" and the student's predictions. By minimizing this loss, the student model can mimic the behavior of the teacher model as closely as possible without a significant loss of accuracy, which yields model compression and speedup.

2.3. Rényi Differential Privacy

Differential privacy (DP) [16] is a privacy protection mechanism that safeguards individual privacy by adding noise to output results. Its fundamental goal is to guarantee that the probability distribution of the output is almost the same regardless of whether any single individual's data are included. Given a randomized mechanism $\mathcal{M}$ and a privacy budget $\varepsilon$, for any two neighboring datasets $D$ and $D'$ and any set of outputs $\mathcal{O}$, if the following condition is satisfied:

$$\Pr[\mathcal{M}(D) \in \mathcal{O}] \le e^{\varepsilon}\,\Pr[\mathcal{M}(D') \in \mathcal{O}] + \delta$$

then the mechanism $\mathcal{M}$ is said to satisfy $(\varepsilon, \delta)$-DP. DP satisfies the sequential composition [17] and parallel composition [18] properties. Here, $\varepsilon$ is the privacy budget and $\delta$ is the probability of failure. The parameter $\delta$ introduces some flexibility into the privacy guarantee: it bounds the probability that the algorithm "fails", that is, violates the privacy guarantee. When $\delta = 0$, the algorithm satisfies pure DP, and the guarantee holds under all circumstances. When $\delta > 0$, the algorithm satisfies approximate DP: the guarantee holds in most cases, but privacy leakage may occur in rare cases, with probability at most $\delta$. In other words, a nonzero $\delta$ accepts a small probability that the privacy guarantee is not fully met.

Rényi differential privacy (RDP) [19] is an extension of DP based on the Rényi divergence, and the Gaussian mechanism is a fundamental way to achieve it. Instead of a single worst-case bound, RDP bounds the Rényi divergence of order $\alpha$ between the output distributions of a mechanism on neighboring datasets. This is more flexible than traditional DP and allows the level of privacy protection to be tailored to specific application contexts and requirements. Under composition, RDP guarantees at a fixed order simply add up, so no special advanced composition theorems are needed, which greatly simplifies the computation of privacy overhead and usually makes it tighter.

Definition 1

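([19]). Given a training mechanism $\mathcal{M}$, let $p_{\mathcal{M}(D)}$ denote the probability density of the output $x$ that $\mathcal{M}$ produces when trained on dataset $D$. The mechanism $\mathcal{M}$ satisfies $(\alpha, \varepsilon)$-RDP of order $\alpha > 1$ if the following condition holds for all neighboring datasets $D$ and $D'$:

$$D_{\alpha}\left(\mathcal{M}(D)\,\|\,\mathcal{M}(D')\right) = \frac{1}{\alpha-1}\log \mathbb{E}_{x\sim\mathcal{M}(D')}\left[\left(\frac{p_{\mathcal{M}(D)}(x)}{p_{\mathcal{M}(D')}(x)}\right)^{\alpha}\right] \le \varepsilon$$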

Definition 2

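([19]). If the mechanism $\mathcal{M}$ satisfies $(\alpha, \varepsilon)$-RDP, then for all $\delta \in (0, 1)$, $\mathcal{M}$ satisfies $\left(\varepsilon + \frac{\log(1/\delta)}{\alpha - 1}, \delta\right)$-DP.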

Definition 3

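([19,20]). If $f$ is a deterministic function with $\ell_2$-sensitivity $\Delta$, that is, $\|f(D) - f(D')\|_2 \le \Delta$ for all neighboring datasets $D$ and $D'$, then the Gaussian mechanism $\mathcal{G}_{\sigma}f(D) = f(D) + \mathcal{N}(0, \sigma^2\mathbf{I})$ satisfies $\left(\alpha, \frac{\alpha\Delta^2}{2\sigma^2}\right)$-RDP.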
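Definitions 2, 3, and 5 already combine into a simple privacy accountant. The sketch below is illustrative only: it evaluates the Gaussian RDP curve $\alpha\Delta^2/(2\sigma^2)$ on a hypothetical grid of orders, adds the curves of repeated Gaussian releases (the RDP composition rule), and converts the result to $(\varepsilon, \delta)$-DP with the RDP-to-DP conversion, keeping the best order. It ignores any subsampling amplification, so the bound it reports is loose.

```python
import math
from typing import Iterable, Tuple

# Hypothetical grid of RDP orders (alpha > 1); any finite grid yields a valid bound.
ORDERS = (1.5, 2, 3, 4, 5, 6, 8, 10, 16, 32, 64, 128)


def gaussian_rdp(alpha: float, sigma: float, sensitivity: float = 1.0) -> float:
    """Gaussian mechanism f(D) + N(0, sigma^2 I): (alpha, alpha * Delta^2 / (2 sigma^2))-RDP."""
    return alpha * sensitivity ** 2 / (2.0 * sigma ** 2)


def rdp_to_dp(curve: Iterable[Tuple[float, float]], delta: float) -> Tuple[float, float]:
    """(alpha, eps)-RDP -> (eps + log(1/delta) / (alpha - 1), delta)-DP.

    Returns the smallest epsilon over the given orders and the order achieving it.
    """
    if not 0.0 < delta < 1.0:
        raise ValueError("delta must lie in (0, 1)")
    return min((eps + math.log(1.0 / delta) / (alpha - 1.0), alpha) for alpha, eps in curve)


def composed_gaussian_dp(sigma: float, steps: int, delta: float) -> Tuple[float, float]:
    """RDP epsilons add up at each order over `steps` Gaussian releases."""
    curve = [(alpha, steps * gaussian_rdp(alpha, sigma)) for alpha in ORDERS]
    return rdp_to_dp(curve, delta)


if __name__ == "__main__":
    eps, best_order = composed_gaussian_dp(sigma=5.0, steps=100, delta=1e-5)
    print(f"epsilon ~ {eps:.2f} at order {best_order}")
```

Optimizing over the order matters: small orders pay a large $\log(1/\delta)/(\alpha-1)$ penalty, while large orders pay a large composed RDP term, and the minimum lies in between.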

Definition 4

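([21]). Let a mechanism sample, without replacement, a subset of size $b$ from a dataset of size $n$ (sampling rate $\gamma = b/n$), and let $\mathcal{M}$ be a randomized algorithm that takes the subset as input. Then, for all integers $\alpha \ge 2$, if $\mathcal{M}$ satisfies $(\alpha, \varepsilon(\alpha))$-RDP, the combination of the two mechanisms satisfies $(\alpha, \varepsilon'(\alpha))$-RDP, where $\varepsilon'(\alpha)$ satisfies the following condition:

$$\varepsilon'(\alpha) \le \frac{1}{\alpha-1}\log\left(1 + \gamma^{2}\binom{\alpha}{2}\min\left\{4\left(e^{\varepsilon(2)}-1\right),\, e^{\varepsilon(2)}\min\left\{2, \left(e^{\varepsilon(\infty)}-1\right)^{2}\right\}\right\} + \sum_{j=3}^{\alpha}\gamma^{j}\binom{\alpha}{j}e^{(j-1)\varepsilon(j)}\min\left\{2, \left(e^{\varepsilon(\infty)}-1\right)^{j}\right\}\right)$$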

Definition 5

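([21]). Given two mechanisms $\mathcal{M}_1$ and $\mathcal{M}_2$, if $\mathcal{M}_1$, which takes dataset $D$ as input, satisfies $(\alpha, \varepsilon_1)$-RDP, and $\mathcal{M}_2$, which takes dataset $D$ and the output of $\mathcal{M}_1$ as input, satisfies $(\alpha, \varepsilon_2)$-RDP, then the combination of the two mechanisms satisfies $(\alpha, \varepsilon_1 + \varepsilon_2)$-RDP.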

3. FedKADP-Specific Implementation

3.1. FedKADP Framework

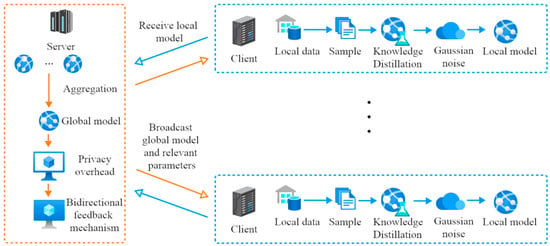

To address model heterogeneity, privacy, and security in federated learning with non-IID data, this research presents a framework called FedKADP. As illustrated in Figure 1, FedKADP differs from traditional federated learning in three ways: it uses knowledge distillation, leveraging "soft labels" for local model training; it integrates DP to enhance user privacy; and it employs a bidirectional feedback mechanism that allows dynamic adjustments between knowledge distillation and DP.

Figure 1.

FedKADP framework.

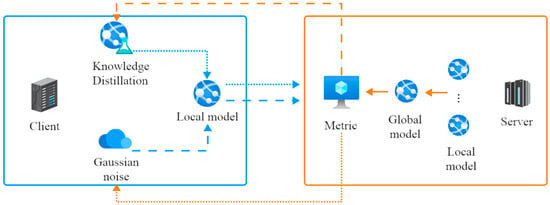

3.2. Bidirectional Feedback Mechanism

Protecting the privacy of user data is critical in an FL environment. At the same time, the generalization ability that the model acquires through knowledge distillation must not be compromised by the privacy protection measures. Managing knowledge distillation and DP independently could lead to an unnecessary trade-off between privacy protection and model performance. To address this issue, this paper proposes a bidirectional feedback mechanism that dynamically adjusts the parameters of knowledge distillation and DP to optimize both overall model performance and privacy protection.

After each round of training, the bidirectional feedback mechanism comprehensively evaluates the model using four metrics (gradient change, loss change, number of communication rounds, and accuracy) and adaptively adjusts how knowledge distillation and differential privacy are applied based on the result. The mechanism consists of two main feedback paths:

- (1) From DP to knowledge distillation: the temperature parameter of knowledge distillation is dynamically adjusted based on the actual impact of the noise added by DP on model performance;

- (2) From knowledge distillation to DP: the noise parameter of DP is adjusted based on the actual impact of knowledge distillation on model performance, so that model learning is maximized without sacrificing too much privacy.

Specifically, the bidirectional feedback mechanism introduces an evaluation score $S$ that assesses the stability of the model from its gradient change, loss change, communication rounds, and validation accuracy, reflecting the impact of knowledge distillation and DP on model performance. The score can be expressed as follows:

$$S = \omega_1 S_G + \omega_2 S_L + \omega_3 S_A + \omega_4 S_T$$

Here, $\omega_1$, $\omega_2$, $\omega_3$, and $\omega_4$ are the weights of the four metrics; adjusting them changes the influence of each metric. $S_G$ assesses the change in gradients and can be expressed as follows:

Here, $g_t$ is the gradient norm of the current communication round, computed as $g_t = \|w_t - w_{t-1}\|_2$, where $w_t$ and $w_{t-1}$ are the current and previous global model parameters, respectively, and $\bar{g}$ is the average of the previous gradient norms. $S_L$ assesses the change in loss and can be expressed as follows:

Here, $r_t$ is the rate of change in loss between $\ell_{t-1}$ and $\ell_t$, the loss values of the previous and current communication rounds, respectively. $S_A$ assesses the change in accuracy and can be expressed as follows:

Here, $A_t$, $A_{t-1}$, and $A_{t-2}$ represent the accuracy of the current round, the previous round, and the round before that, respectively. $S_T$ assesses the time factor and can be expressed as follows:

Here, $t$ is the current communication round, and $R$ is the total number of communication rounds.

In summary, the implementation of the bidirectional feedback mechanism in FedKADP is illustrated in Figure 2. This figure depicts an effective bidirectional feedback mechanism that enables the knowledge distillation and DP processes to work in close collaboration, thereby optimizing the overall performance and privacy protection level of the federated learning model.

Figure 2.

Bidirectional feedback mechanism.

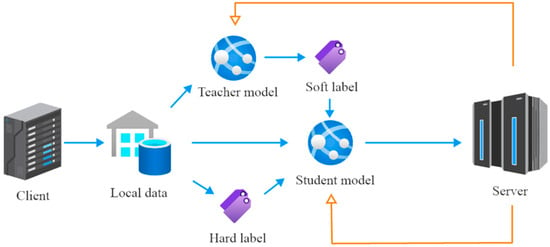

3.3. Knowledge Distillation Optimization

Traditional knowledge distillation usually has a single global teacher model, whereas FedKADP proposes a hierarchical architecture of teacher models. In each communication round, every client receives the global model and uses it as its teacher model, feeding its local data through it to obtain "soft labels". The global model is also received as the starting point of the student model, which is then trained and updated as the local model. By assigning each teacher model to a single client, this approach lets each teacher capture the data characteristics of its client more precisely and guide the learning of that client's model more effectively, which reduces the loss of generalization performance caused by non-IID data.

Traditional federated learning directly averages the parameters of the client models, which may degrade the global model because it can become biased toward the data distributions of particular clients. In contrast, the student model in FedKADP uses "soft labels" to imitate the output of the teacher model, which lets it better capture the characteristics of the global data distribution and alleviates the model bias caused by uneven data distribution. In addition, the student model uses a "hard label"-driven loss to fit the client's real data distribution more directly, ensuring high accuracy on the target task; optimizing this loss lets the model adapt better to the client's specific data characteristics.

Specifically, in each local training iteration, the client's local data are used as input. The logits output by the local model (student model) are denoted as $z_S$, and the logits output by the global model (teacher model) are denoted as $z_T$. Both outputs are softened into probability distributions, which can be expressed as follows:

$$p_{S,c} = \frac{\exp(z_{S,c}/T)}{\sum_{j}\exp(z_{S,j}/T)}, \qquad p_{T,c} = \frac{\exp(z_{T,c}/T)}{\sum_{j}\exp(z_{T,j}/T)}$$

Here, $T$ is the temperature parameter, which controls the "smoothness" of the Softmax output, and $c$ indexes the classes. A higher temperature makes the probability distribution flatter, which helps the model focus more on classes that are difficult to classify during learning. This is especially important when dealing with non-IID data.
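The temperature-scaled Softmax can be sketched as follows; this minimal version works on a plain list of logits rather than on tensors and subtracts the largest scaled logit before exponentiating, a standard trick that leaves the result unchanged while avoiding overflow.

```python
import math
from typing import List, Sequence


def softmax_with_temperature(logits: Sequence[float], temperature: float) -> List[float]:
    """p_c = exp(z_c / T) / sum_j exp(z_j / T), computed in a numerically stable way."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtracting the max does not change the ratios
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    logits = [4.0, 1.0, 0.0]
    for t in (1.0, 2.0, 3.0):
        probs = softmax_with_temperature(logits, t)
        print(t, [round(p, 3) for p in probs])
```

Raising $T$ from 1 to 3 lowers the top-class probability and spreads mass over the remaining classes, which is the flattening described above.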

Subsequently, the knowledge distillation loss is calculated and used to update the local model. It can be expressed as follows:

$$\mathcal{L} = \lambda\,\mathcal{L}_{CE} + (1-\lambda)\,\mathcal{L}_{KL}$$

where $\lambda$ is a weighting hyperparameter that balances the two terms. $\mathcal{L}_{CE}$ can be expressed as follows:

$$\mathcal{L}_{CE} = -\frac{1}{n_k}\sum_{i=1}^{n_k}\sum_{c} y_{i,c}\log \hat{y}_{i,c}$$

Here, $\mathcal{L}_{CE}$ is the cross-entropy loss, $n_k$ is the number of samples in the local dataset, $y_{i,c}$ is the "hard label" of the $c$-th class for the $i$-th sample, and $\hat{y}_{i,c}$ is the student model's predicted probability for the $c$-th class of the $i$-th sample. Comparing the student's predicted distribution with the distribution of the "hard labels" shows how well the model fits the data, and minimizing the cross-entropy loss makes the student fit the true labels more closely, which improves performance on the original task.

$\mathcal{L}_{KL}$ can be expressed as follows:

$$\mathcal{L}_{KL} = T^{2}\cdot\frac{1}{n_k}\sum_{i=1}^{n_k}\sum_{c} p_{T,i,c}\log\frac{p_{T,i,c}}{p_{S,i,c}}$$

Here, $\mathcal{L}_{KL}$ is the Kullback–Leibler (KL) divergence term, and $p_{T,i,c}$ and $p_{S,i,c}$ are the temperature-softened predicted probabilities of the teacher and student models for the $c$-th class of the $i$-th sample. The term measures the similarity between the soft outputs of the teacher and student models. The KL divergence is multiplied by the square of the temperature because the gradients produced by temperature-softened outputs scale as $1/T^{2}$; rescaling by $T^{2}$ keeps the magnitude of this term roughly constant as the temperature changes. By minimizing the KL divergence, the student model can efficiently learn practical, generalized knowledge from the teacher model while retaining its own characteristics.
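The local objective can be sketched in plain Python. The sketch assumes the weighted form $\lambda\,\mathcal{L}_{CE} + (1-\lambda)\,\mathcal{L}_{KL}$ with a hypothetical parameter name `lam` for $\lambda$, integer class labels in place of the one-hot $y_{i,c}$, and a temperature of 1 for the student probabilities in the hard-label term (the usual choice in Hinton-style distillation). A PyTorch implementation would compute the same quantities with batched tensor operations.

```python
import math
from typing import List, Sequence


def _softmax(logits: Sequence[float], temperature: float) -> List[float]:
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits: Sequence[Sequence[float]],
            teacher_logits: Sequence[Sequence[float]],
            labels: Sequence[int],
            temperature: float,
            lam: float) -> float:
    """lam * L_CE + (1 - lam) * T^2 * KL(p_T || p_S), averaged over the local samples."""
    n = len(labels)
    if n == 0 or not (len(student_logits) == len(teacher_logits) == n):
        raise ValueError("need matching, non-empty student logits, teacher logits, and labels")
    ce, kl = 0.0, 0.0
    for z_s, z_t, y in zip(student_logits, teacher_logits, labels):
        # Hard-label cross-entropy on the student's ordinary (T = 1) probabilities.
        ce -= math.log(_softmax(z_s, 1.0)[y])
        # KL divergence between the temperature-softened teacher and student outputs.
        p_t = _softmax(z_t, temperature)
        p_s = _softmax(z_s, temperature)
        kl += sum(pt * (math.log(pt) - math.log(ps)) for pt, ps in zip(p_t, p_s) if pt > 0)
    ce /= n
    kl = temperature ** 2 * kl / n  # T^2 keeps the soft-target term on a comparable scale
    return lam * ce + (1.0 - lam) * kl


if __name__ == "__main__":
    student = [[2.0, 0.5, -1.0], [0.1, 1.5, 0.3]]
    teacher = [[1.5, 1.0, -0.5], [0.0, 2.0, 0.5]]
    print(kd_loss(student, teacher, labels=[0, 1], temperature=2.0, lam=0.5))
```

When the student already matches the teacher exactly, the KL term vanishes and only the weighted hard-label loss remains.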

In order to achieve the adaptive adjustment of temperature parameters and distillation weights based on a bidirectional feedback mechanism, the adjustment for the temperature parameter in FedKADP can be expressed as follows:

Here, $T_{\max}$ and $T_{\min}$ are the maximum and minimum values of the temperature parameter, respectively; $S_{\text{th}}$ is the threshold value; and $\kappa$ is a parameter that controls the steepness of the curve: the larger $\kappa$ is, the more sensitive the response. This adjustment mechanism achieves two goals at once. Increasing the temperature smooths the probability distribution, helping the model learn general information from the teacher model rather than specific noise; and adjusting the distillation weight strengthens the influence of the teacher model's output on the student model, so that the student can still extract useful information despite the noise and the performance loss introduced by DP.
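A minimal sketch of the temperature update follows. It assumes a logistic curve $T = T_{\min} + (T_{\max} - T_{\min}) / (1 + e^{-\kappa (S - S_{\text{th}})})$, where $S$ is the evaluation score, $S_{\text{th}}$ the threshold, and $\kappa$ the steepness. This form matches the description above (bounded by $T_{\min}$ and $T_{\max}$, centered on the threshold, increasing in $S$) but is an assumption, not the paper's exact equation.

```python
import math


def adjust_temperature(score: float, t_min: float, t_max: float,
                       threshold: float, steepness: float) -> float:
    """Assumed logistic schedule: T rises from t_min toward t_max as the score S grows."""
    if t_min > t_max:
        raise ValueError("t_min must not exceed t_max")
    u = steepness * (score - threshold)
    # Numerically stable logistic function 1 / (1 + exp(-u)).
    if u >= 0:
        sig = 1.0 / (1.0 + math.exp(-u))
    else:
        e = math.exp(u)
        sig = e / (1.0 + e)
    return t_min + (t_max - t_min) * sig


if __name__ == "__main__":
    for s in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(s, round(adjust_temperature(s, t_min=2.0, t_max=3.0, threshold=0.5, steepness=10.0), 3))
```

A larger `steepness` makes the transition from $T_{\min}$ to $T_{\max}$ sharper around the threshold, which mirrors the role of $\kappa$.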

The process of knowledge distillation optimization in FedKADP is illustrated in Figure 3. Overall, it allows the model to flexibly balance the fit to the “hard labels” and the absorption of knowledge from the teacher model during the learning process. This approach is particularly effective in improving the generalization capabilities of the model when faced with the challenges of non-IID data.

Figure 3.

Knowledge distillation optimization process.

3.4. Adaptive Differential Privacy

To prevent leakage of user privacy when local model parameters are uploaded, traditional methods add the same amount of noise in every communication round, which can degrade model accuracy in later stages. Few adaptive noise-injection techniques currently exist for FL, especially ones that provide record-level DP. FedKADP uses the Gaussian mechanism to add Gaussian noise to the local model in each local training iteration and adaptively adjusts the noise magnitude, while using RDP theory to track privacy overhead and allocate the privacy budget reasonably. The objective is to improve model accuracy under the same privacy budget.

In DP gradient descent algorithms executed on the client side, gradient clipping is commonly used to ensure that $\|g\|_2 \le C$, where $g$ denotes the gradient of the client's model and $C$ is the clipping threshold. The client-side gradient clipping can therefore be expressed as follows:

$$\bar{g} = \frac{g}{\max\left(1, \frac{\|g\|_2}{C}\right)}$$

Assuming that a batch of $B$ samples is drawn for each local training step, the sensitivity of the model gradient follows from the clipping threshold $C$. The addition of Gaussian noise to the local model can therefore be expressed as follows:
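The clipping step and the Gaussian perturbation can be sketched as one DP-SGD update in the style of Abadi et al. [6]. This is a minimal sketch under assumptions: gradients are per-sample lists of floats, noise with standard deviation $\sigma C$ is added to the sum of clipped gradients before averaging over the batch of size $B$, and the paper's own noise equation may scale the noise differently.

```python
import math
import random
from typing import List, Sequence


def clip_gradient(grad: Sequence[float], clip_norm: float) -> List[float]:
    """g / max(1, ||g||_2 / C): rescale so that the L2 norm is at most C."""
    if clip_norm <= 0:
        raise ValueError("clip_norm must be positive")
    norm = math.sqrt(sum(x * x for x in grad))
    factor = 1.0 / max(1.0, norm / clip_norm)
    return [x * factor for x in grad]


def dp_sgd_step(params: Sequence[float],
                per_sample_grads: Sequence[Sequence[float]],
                lr: float, clip_norm: float, sigma: float,
                rng: random.Random) -> List[float]:
    """Clip each sample's gradient, sum, add N(0, (sigma*C)^2) per coordinate, average, step."""
    batch_size = len(per_sample_grads)
    if batch_size == 0:
        raise ValueError("empty batch")
    summed = [0.0] * len(params)
    for grad in per_sample_grads:
        for i, x in enumerate(clip_gradient(grad, clip_norm)):
            summed[i] += x
    # Adding or removing one sample changes the clipped sum by at most C,
    # so the noise standard deviation is sigma * C.
    noisy_mean = [(s + rng.gauss(0.0, sigma * clip_norm)) / batch_size for s in summed]
    return [p - lr * g for p, g in zip(params, noisy_mean)]


if __name__ == "__main__":
    rng = random.Random(0)
    print(dp_sgd_step([0.0, 0.0], [[3.0, 4.0], [0.0, 1.0]],
                      lr=0.1, clip_norm=1.0, sigma=5.0, rng=rng))
```

Clipping per sample, rather than clipping the averaged gradient, is what bounds each individual record's influence and therefore justifies calibrating the noise to $C$.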

In order to achieve the adaptive adjustment of noise parameters based on a bidirectional feedback mechanism, the adjustment for the noise parameter in FedKADP can be expressed as follows:

$$\sigma_{t+1} = \begin{cases} \beta\,\sigma_t, & S \ge S_{\text{th}} \\ \sigma_t, & S < S_{\text{th}} \end{cases} \tag{20}$$

Here, $\beta \in (0, 1)$ is the attenuation coefficient, and $S_{\text{th}}$ is the threshold value. Because this mechanism tunes the noise parameter according to model performance under knowledge distillation, the model can better adapt to data idiosyncrasies and variations, especially with non-IID data. It avoids the pitfalls of a one-size-fits-all fixed noise setting, which often leads to inadequate model performance: with real-time tuning, noise can be reduced when appropriate rather than overprotecting at the expense of utility.
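The noise rule can be sketched in a few lines, together with the per-round noise trajectory it produces. The sketch assumes the rule as described in the text: the noise parameter $\sigma$ is multiplied by an attenuation coefficient $\beta \in (0, 1)$ when the evaluation score $S$ reaches the threshold $S_{\text{th}}$ and is left unchanged otherwise; the function names are hypothetical.

```python
from typing import List, Sequence


def adjust_noise(sigma: float, score: float, threshold: float, decay: float) -> float:
    """Rule of Equation (20): sigma <- beta * sigma if S >= S_th, else sigma unchanged."""
    if not 0.0 < decay < 1.0:
        raise ValueError("attenuation coefficient must lie in (0, 1)")
    return sigma * decay if score >= threshold else sigma


def noise_schedule(sigma0: float, scores: Sequence[float],
                   threshold: float, decay: float) -> List[float]:
    """Noise parameter for each round, given the score observed after each round."""
    sigmas = [sigma0]
    for s in scores:
        sigmas.append(adjust_noise(sigmas[-1], s, threshold, decay))
    return sigmas


if __name__ == "__main__":
    print(noise_schedule(5.0, [0.2, 0.6, 0.7, 0.1], threshold=0.5, decay=0.9))
```

Because every reduction of $\sigma$ raises the per-round RDP cost, the resulting trajectory has to be passed to the privacy accountant rather than assuming the initial noise level throughout training.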

3.5. FedKADP-Algorithm Description

In FedKADP, clients conduct training on non-IID data, utilizing knowledge distillation techniques to optimize local model training. This method lessens the detrimental effects of model heterogeneity brought on by non-IID data, minimizes disparities between local models, accelerates global convergence, and enhances model validation accuracy. Additionally, DP is implemented using the Gaussian mechanism, where Gaussian noise is added to local models during each local iteration to create perturbations. Furthermore, a bidirectional feedback mechanism is introduced that allows dynamic adjustments between knowledge distillation and DP. The specific implementations are illustrated in Algorithms 1 and 2.

| Algorithm 1. FedKADP Client Algorithm. |

| Input: $E$ is the number of local training iterations, $w$ represents the model parameters, $\eta$ is the learning rate, $B$ is the batch size, $\sigma$ is the Gaussian noise parameter, $T$ is the temperature parameter, and $C$ is the clipping threshold. |

| 1: |

| 2: |

| 3: into batches of size ) |

| 4: for do |

| 5: do |

| 6: |

| 7: |

| 8: |

| 9: |

| 10: |

| 11: |

| 12: |

| 13: end for |

| 14: return |

| Algorithm 2. FedKADP Server Algorithm. |

| Input: $\varepsilon$ is the privacy budget, $N$ is the total number of clients, and $q$ is the client sampling rate. |

| 1: |

| 2: for do |

| 3: |

| 4: clients) |

| 5: for in parallel do |

| 6: Algorithm 1 |

| 7: end for |

| 8: |

| 9: (privacy overhead calculation) |

| 10: if |

| 11: break |

| 12: end if |

| 13: ) |

| 14: end for |

| 15: return |
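The control flow of Algorithms 1 and 2 can be sketched as a server loop with pluggable pieces. This is a hypothetical skeleton, not the authors' code: `client_update`, `aggregate`, and `epsilon_after` are placeholder callables standing for local training (Algorithm 1), weighted aggregation, and the RDP-based privacy overhead after a given number of rounds. One deliberate design choice: the sketch checks the projected privacy cost before launching a round, so no noisy update that would exceed the budget is ever released.

```python
import random
from typing import Callable, List, Sequence, Tuple, TypeVar

Model = TypeVar("Model")


def run_server(init_model: Model,
               num_clients: int,
               sample_rate: float,
               max_rounds: int,
               budget: float,
               client_update: Callable[[int, Model, int], Model],
               aggregate: Callable[[Sequence[Model], Sequence[int]], Model],
               epsilon_after: Callable[[int], float],
               rng: random.Random) -> Tuple[Model, List[float]]:
    """Sample clients, train locally, aggregate, and stop before the budget is exceeded."""
    model = init_model
    spent: List[float] = []
    m = max(1, round(sample_rate * num_clients))  # number of clients per round
    for t in range(max_rounds):
        projected = epsilon_after(t + 1)  # privacy overhead if round t + 1 runs
        if projected > budget:
            break
        selected = rng.sample(range(num_clients), m)
        updates = [client_update(k, model, t) for k in selected]
        model = aggregate(updates, selected)
        spent.append(projected)
    return model, spent


if __name__ == "__main__":
    final, history = run_server(
        init_model=0.0, num_clients=10, sample_rate=0.3, max_rounds=10, budget=2.0,
        client_update=lambda k, w, t: w + 1.0,          # toy "local training"
        aggregate=lambda ups, ids: sum(ups) / len(ups),  # toy unweighted average
        epsilon_after=lambda r: 0.5 * r,                 # toy linear privacy cost
        rng=random.Random(0),
    )
    print(final, history)  # 4.0 [0.5, 1.0, 1.5, 2.0]
```

Passing `aggregate` the selected client indices lets a real implementation look up each client's $n_k$ for the weighted average.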

4. Theoretical Analysis

The following parameters are used: the client sampling rate $q$, the number of communication rounds $R$, the Gaussian noise parameter $\sigma_t$ of each round $t$, the number $m$ of clients participating in each round, the set $\mathcal{C}_t$ of clients participating in round $t$, the number of local training iterations $E$, and the Gaussian mechanism $\mathcal{G}$.

4.1. Privacy Overhead Calculation

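In accordance with Definition 3, when the Gaussian noise parameter is $\sigma_t$, the Gaussian mechanism $\mathcal{G}$ satisfies $(\alpha, \varepsilon_{\mathcal{G}}(\alpha))$-RDP, where $\varepsilon_{\mathcal{G}}(\alpha) = \frac{\alpha\Delta^2}{2\sigma_t^2}$ and $\Delta$ is the sensitivity of the clipped gradient.

In accordance with Definition 4, after client $k$ ($k \in \mathcal{C}_t$) completes one local training iteration on a batch of $B$ samples drawn without replacement from its $n_k$ local samples (sampling rate $\gamma = B/n_k$), it satisfies $(\alpha, \varepsilon_1(\alpha))$-RDP. Because $\varepsilon_{\mathcal{G}}(\infty)$ is unbounded for the Gaussian mechanism, every term $\min\{2, (e^{\varepsilon_{\mathcal{G}}(\infty)}-1)^{j}\}$ in Definition 4 equals 2, and $\varepsilon_1(\alpha)$ satisfies the following condition:

$$\varepsilon_1(\alpha) \le \frac{1}{\alpha-1}\log\left(1 + \gamma^{2}\binom{\alpha}{2}\min\left\{4\left(e^{\varepsilon_{\mathcal{G}}(2)}-1\right),\, 2e^{\varepsilon_{\mathcal{G}}(2)}\right\} + 2\sum_{j=3}^{\alpha}\gamma^{j}\binom{\alpha}{j}e^{(j-1)\varepsilon_{\mathcal{G}}(j)}\right)$$

In accordance with Definition 5, after client $k$ ($k \in \mathcal{C}_t$) completes $E$ local training iterations, it satisfies $(\alpha, \varepsilon_E(\alpha))$-RDP, where $\varepsilon_E(\alpha)$ satisfies the following condition:

$$\varepsilon_E(\alpha) \le E\,\varepsilon_1(\alpha)$$

In accordance with the theory presented by Noble et al. [7], after the server aggregates the outputs of all clients in $\mathcal{C}_t$, round $t$ satisfies $(\alpha, \varepsilon_t(\alpha))$-RDP, where $\varepsilon_t(\alpha)$ satisfies the following condition: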

4.2. Privacy Analysis

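In accordance with the analysis in Section 4.1 and Definition 5, FedKADP satisfies $\left(\alpha, \sum_{t=1}^{R}\varepsilon_t(\alpha)\right)$-RDP after $R$ rounds of communication, where $\varepsilon_t(\alpha)$ denotes the RDP guarantee of round $t$.

In accordance with Definition 2, FedKADP therefore satisfies $\left(\sum_{t=1}^{R}\varepsilon_t(\alpha) + \frac{\log(1/\delta)}{\alpha-1}, \delta\right)$-DP for any $\delta \in (0, 1)$.

In conclusion, as long as the privacy budget $\varepsilon$ satisfies the following condition:

$$\varepsilon \ge \min_{\alpha}\left\{\sum_{t=1}^{R}\varepsilon_t(\alpha) + \frac{\log(1/\delta)}{\alpha-1}\right\}$$

FedKADP satisfies $(\varepsilon, \delta)$-DP, which completes the proof.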
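The accounting chain of this section can be sketched end to end: the subsampled Gaussian bound for one local iteration (the sampling-without-replacement result with $\varepsilon(\infty) = \infty$, sampling rate $\gamma$), composition over $E$ local iterations and over all rounds, and conversion to $(\varepsilon, \delta)$-DP. This is an illustrative sketch under stated assumptions: the clipped-gradient sensitivity is normalized so that the Gaussian RDP is $\alpha/(2\sigma^2)$, orders are integers as the subsampling bound requires, and the additional amplification from client sampling in the server-side step attributed to Noble et al. [7] is deliberately left out, so the result is a conservative upper bound rather than the paper's exact figure.

```python
import math
from typing import Sequence, Tuple

# Integer RDP orders, as the subsampling bound requires (this grid is an arbitrary choice).
ORDERS = tuple(range(2, 65))


def gaussian_rdp(alpha: int, sigma: float) -> float:
    """Gaussian RDP with sensitivity normalized to 1 (noise std = sigma * Delta)."""
    return alpha / (2.0 * sigma ** 2)


def _log_expm1(x: float) -> float:
    """log(e^x - 1) for x > 0, avoiding overflow for large x."""
    if x <= 0:
        raise ValueError("x must be positive")
    if x < 20.0:
        return math.log(math.expm1(x))
    return x + math.log1p(-math.exp(-x))


def subsampled_gaussian_rdp(alpha: int, gamma: float, sigma: float) -> float:
    """Sampling-without-replacement RDP bound for the Gaussian mechanism (eps(inf) unbounded)."""
    if alpha < 2 or not 0.0 < gamma <= 1.0 or sigma <= 0:
        raise ValueError("need integer alpha >= 2, 0 < gamma <= 1, sigma > 0")
    eps2 = gaussian_rdp(2, sigma)
    log_terms = [0.0]  # log of the leading 1
    # j = 2 term: gamma^2 * C(alpha, 2) * min{4(e^eps(2) - 1), 2 e^eps(2)}
    log_terms.append(2 * math.log(gamma) + math.log(math.comb(alpha, 2))
                     + min(math.log(4.0) + _log_expm1(eps2), math.log(2.0) + eps2))
    # j >= 3 terms: gamma^j * C(alpha, j) * e^{(j-1) eps(j)} * 2
    for j in range(3, alpha + 1):
        log_terms.append(j * math.log(gamma) + math.log(math.comb(alpha, j))
                         + (j - 1) * gaussian_rdp(j, sigma) + math.log(2.0))
    peak = max(log_terms)  # log-sum-exp keeps large orders from overflowing
    log_sum = peak + math.log(sum(math.exp(v - peak) for v in log_terms))
    return log_sum / (alpha - 1)


def total_dp_epsilon(sigmas: Sequence[float], gamma: float, local_iters: int,
                     delta: float) -> Tuple[float, int]:
    """Compose E local iterations per round over all rounds, then convert RDP to DP.

    `sigmas` holds the noise parameter of each round (it may decay over time).
    Client-sampling amplification is ignored, so the bound is conservative.
    """
    best = (math.inf, ORDERS[0])
    for alpha in ORDERS:
        rdp = sum(local_iters * subsampled_gaussian_rdp(alpha, gamma, s) for s in sigmas)
        best = min(best, (rdp + math.log(1.0 / delta) / (alpha - 1), alpha))
    return best


if __name__ == "__main__":
    # Hypothetical setting: gamma = 32/600, E = 20 local iterations, 100 rounds at sigma = 5.
    eps, order = total_dp_epsilon([5.0] * 100, gamma=32 / 600, local_iters=20, delta=1e-5)
    print(f"epsilon <= {eps:.3f} at order {order}")
```

Passing one $\sigma$ per round is what lets the adaptive noise schedule be accounted for exactly: rounds with less noise simply contribute a larger RDP term to the sum.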

5. Experiment

The effectiveness of FedKADP is validated in this section using experiments that assess the impact of parameter changes on the accuracy of FedKADP and compare FedKADP with existing algorithms. Additionally, the privacy overhead is tracked throughout the model training process based on RDP.

The experimental hardware configuration consists of an AMD Ryzen 7 6800H CPU, an NVIDIA GeForce RTX 3060 GPU, and 16 GB of RAM, running Windows 11. The deep learning models, including DP noise addition, are trained with the PyTorch 2.1.2 framework. The experiments use MNIST and CIFAR-10, two datasets widely used to evaluate FL algorithms. Because of the limited size of these datasets, each complete dataset is partitioned across 100 clients. To create a non-IID environment, the data are sorted by label before being distributed to the clients. Specifically, the MNIST images are sorted by label after preprocessing and normalization, so that each client receives data dominated by a few specific labels rather than a uniform mix of all labels. Similarly, the CIFAR-10 images are sorted by label after transformation and normalization and then distributed to the clients. This approach simulates the diversity and inhomogeneity of real-world data sources, producing a non-IID environment in which clients have different label distributions.

For MNIST, the experiments use a convolutional neural network with two convolutional layers and two fully connected layers. Each convolutional layer is followed by a rectified linear unit (ReLU) activation and a max-pooling layer for feature extraction and spatial dimension reduction, and the final fully connected layer outputs predictions for ten classes. For CIFAR-10, we use a convolutional neural network with three convolutional layers and two fully connected layers. Each convolutional layer is again followed by a ReLU activation and a max-pooling layer, and the last two fully connected layers map the extracted features to predictions for ten classes.

The hyperparameters are set as follows: number of communication rounds $R = 100$, number of local training iterations $E = 20$, total number of clients $N = 100$, batch size $B = 32$, learning rate $\eta = 0.005$, and temperature parameters $T_{\min} = 2$ and $T_{\max} = 3$.
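The label-sorted partition described above can be sketched as follows. It is a minimal illustration under one assumption: after sorting by label, the sample indices are split into contiguous, nearly equal chunks, one per client. The text does not say whether label-sorted shards are further shuffled or combined per client (as in some FL benchmarks), so that step is left out.

```python
from typing import List, Sequence


def label_sorted_partition(labels: Sequence[int], num_clients: int) -> List[List[int]]:
    """Sort sample indices by label and give each client one contiguous chunk."""
    if num_clients <= 0:
        raise ValueError("num_clients must be positive")
    order = sorted(range(len(labels)), key=lambda i: labels[i])  # stable sort by label
    base, extra = divmod(len(order), num_clients)
    parts: List[List[int]] = []
    start = 0
    for k in range(num_clients):
        size = base + (1 if k < extra else 0)  # spread any remainder over the first clients
        parts.append(order[start:start + size])
        start += size
    return parts


if __name__ == "__main__":
    toy_labels = [i % 10 for i in range(1000)]  # toy data: 100 samples per class
    shards = label_sorted_partition(toy_labels, num_clients=100)
    print(len(shards), {toy_labels[i] for i in shards[0]})  # 100 {0}
```

With 100 clients and ten balanced classes, each client ends up holding a single label, which is an extreme form of the label skew described above.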

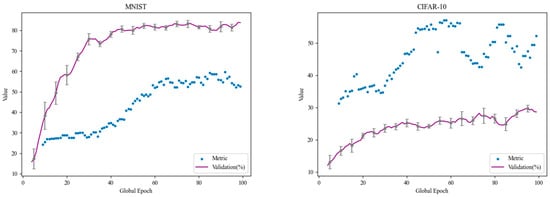

5.1. Visual Analysis of the Bidirectional Feedback Mechanism

This section visualizes how the evaluation score $S$ of the bidirectional feedback mechanism changes over the global iterations, with an initial noise parameter of 5 and a client sampling rate of 0.1, and analyzes how the mechanism dynamically adjusts the knowledge distillation and differential privacy parameters. This makes the role of the bidirectional feedback mechanism in optimizing model performance and privacy protection easier to understand, as illustrated in Figure 4.

Figure 4.

Visualization of the evaluation score $S$ of the bidirectional feedback mechanism.

From the perspective of differential privacy, the bidirectional feedback mechanism dynamically adjusts the DP noise parameter according to the evaluation score $S$ using Equation (20). When $S$ reaches or exceeds the threshold $S_{\text{th}}$, the noise parameter is multiplied by the attenuation coefficient $\beta$; when $S$ is below $S_{\text{th}}$, the noise parameter remains unchanged. A low $S$ indicates that model performance may not yet be good, and keeping the noise parameter high at this stage strengthens privacy protection and prevents leakage of sensitive information. An $S$ at or above $S_{\text{th}}$ indicates better model performance, so the noise can be reduced appropriately to further improve accuracy and reduce the performance loss caused by noise. As training progresses, the system adaptively reduces the noise to avoid overprotection, which improves the practical usefulness of the model in later stages while still meeting the requirements of differential privacy.

From the knowledge distillation perspective, the bidirectional feedback mechanism adjusts the distillation temperature according to the feedback value using Equation (17). The temperature increases with the feedback value; specifically, as the feedback value approaches or exceeds its threshold, the temperature tends toward its maximum value. The temperature controls how much the student model relies on the "soft labels" provided by the teacher model. At a low temperature, the softened distribution stays close to the hard label, so the student concentrates on the most obvious class. At a high temperature, the distribution is flatter and exposes the teacher's relative probabilities for the other classes, which helps the student learn the finer-grained knowledge needed for samples that are difficult to classify. In the early and middle stages of training, when the feedback value is low, the system keeps the temperature low so that the model focuses on the basic classification task. As training progresses and the metric improves, the mechanism gradually raises the temperature, allowing the model to learn more complex knowledge and improving its generalization on non-IID data.
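Equation (17) is not reproduced in this section, so the following is only a sketch of the described behavior: a temperature that rises with the feedback metric and saturates at a maximum, plus the standard temperature-scaled softmax used to produce soft labels (Hinton et al. [15]). The linear ramp, the names `metric`, `threshold`, `t_min`, and `t_max`, and the mapping of the two temperature values 2 and 3 from the experimental setup to a minimum and a maximum are all assumptions.

```python
import math
from typing import List


def temperature(metric: float, threshold: float, t_min: float, t_max: float) -> float:
    """Hypothetical schedule: grows linearly with the metric, capped at t_max."""
    frac = min(max(metric / threshold, 0.0), 1.0)
    return t_min + (t_max - t_min) * frac


def soft_labels(logits: List[float], t: float) -> List[float]:
    """Temperature-scaled softmax, softmax(z / t), computed in a numerically stable way."""
    scaled = [z / t for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


teacher_logits = [4.0, 1.0, 0.5]  # illustrative teacher outputs for one sample
for m in (0.0, 0.4, 0.8):
    t = temperature(m, threshold=0.8, t_min=2.0, t_max=3.0)
    probs = soft_labels(teacher_logits, t)
    print(f"metric={m:.1f}  T={t:.2f}  soft labels={[round(p, 3) for p in probs]}")
```

As the temperature rises from 2 to 3, the probability of the top class falls and the remaining classes receive more mass, which is the flattening effect described above.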

5.2. Influence of Relevant Parameters on FedKADP

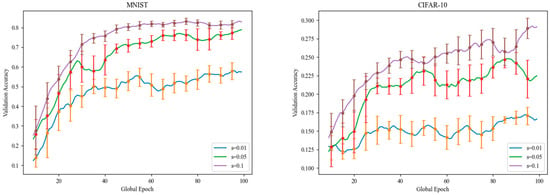

5.2.1. Client Sampling Rate

This section examines how the client sampling rate affects the validation accuracy of FedKADP on the MNIST and CIFAR-10 datasets. The initial noise parameter is set to 5, and three client sampling rates are compared: 0.01, 0.05, and 0.1. As illustrated in Figure 5, validation accuracy increases with the number of participating clients. More participating clients typically contribute more data, more diverse data distributions, more local model updates for aggregation, and more computational resources, all of which improve validation accuracy. However, this improvement also increases the computational cost and the risk of privacy leakage, because a higher sampling rate weakens the privacy amplification provided by client subsampling and consumes the privacy budget faster. In practice, the client sampling rate can be tuned to the available computational resources, the privacy budget, and the training objectives.
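With the 100 clients used in these experiments, the three sampling rates correspond to roughly 1, 5, and 10 participating clients per round. The sketch below assumes fixed-size uniform sampling without replacement; the paper does not state the sampling scheme here, and DP accountants often assume Poisson sampling instead. The function name `sample_clients` is hypothetical.

```python
import random
from typing import List


def sample_clients(num_clients: int, rate: float, rng: random.Random) -> List[int]:
    """Uniformly sample round(rate * num_clients) distinct client IDs (at least one)."""
    k = max(1, round(rate * num_clients))
    return sorted(rng.sample(range(num_clients), k))


rng = random.Random(0)  # fixed seed so the draw is reproducible
for rate in (0.01, 0.05, 0.1):
    chosen = sample_clients(100, rate, rng)
    print(f"rate={rate}: {len(chosen)} clients this round -> {chosen}")
```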

Figure 5.

The influence of the client sampling rate on the validation accuracy.

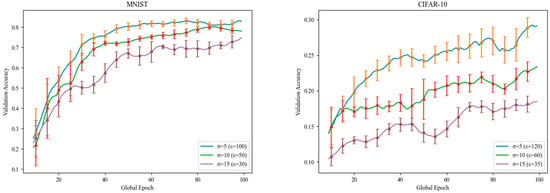

5.2.2. Initial Noise Parameter

This section investigates how the initial noise parameter affects the validation accuracy of FedKADP on the MNIST and CIFAR-10 datasets. The client sampling rate is set to 0.1, three initial noise magnitudes are compared (5, 10, and 15), and RDP is used to track the privacy cost. As illustrated in Figure 6, accuracy declines as the noise magnitude increases: more noise strengthens privacy protection and lowers the privacy loss, but inevitably sacrifices some model performance. On MNIST, as the noise level increases from 5 to 10 and 15, validation accuracy decreases from 86% to 81% and 77.8%, respectively. On CIFAR-10, the same increase lowers validation accuracy from 29.5% to 24.9% and 20.1%. These results show directly how injected noise degrades predictive ability and that model performance is sensitive to the noise parameter and the resulting privacy budget. The effect is more pronounced on CIFAR-10 in relative terms: raising the noise from 5 to 15 removes about 32% of the accuracy (29.5% to 20.1%), compared with about 10% on MNIST (86% to 77.8%). This difference can be attributed to the complexity of the two datasets. MNIST images are simple, consisting of fixed digit shapes whose key edges and contours are easy to extract, which makes the model more tolerant of noise. CIFAR-10 images are more complex, containing a variety of colors, textures, and shapes; noise interferes more easily with the extraction of these features, particularly in deeper convolutional layers, leading to a larger drop in classification performance. In practice, the initial noise parameter can be chosen according to the available privacy budget.
The privacy cost of every training round is monitored during training, and the budget is allocated so as to balance training effectiveness against privacy.
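Per-round privacy monitoring with RDP can be illustrated with a deliberately simplified accountant. It uses the RDP of the Gaussian mechanism with L2 sensitivity 1, α/(2σ²) per round (Mironov [19]), sums it across rounds, and converts the total with ε = RDP(α) + log(1/δ)/(α − 1), minimized over a grid of orders. It ignores the amplification from client subsampling (e.g., the subsampled RDP analysis of [21]), so it overestimates ε. Treating the noise parameter as the Gaussian noise multiplier, δ = 10⁻⁵, the order grid, the budget of 8, and the decaying schedule are all assumptions; the function names are hypothetical.

```python
import math
from typing import Sequence

# Rényi orders to search over (all strictly greater than 1).
ALPHAS = [1.5, 2, 3, 4, 5, 6, 8, 10, 16, 20, 32, 64, 128, 256]


def gaussian_rdp(alpha: float, sigma: float) -> float:
    """RDP of one Gaussian release with L2 sensitivity 1 and noise multiplier sigma."""
    return alpha / (2.0 * sigma ** 2)


def epsilon(sigmas: Sequence[float], delta: float) -> float:
    """(epsilon, delta) bound after one round per entry of `sigmas`.

    RDP composes additively over rounds; subsampling amplification is ignored,
    so the result is a conservative upper bound.
    """
    best = math.inf
    for alpha in ALPHAS:
        total = sum(gaussian_rdp(alpha, s) for s in sigmas)
        best = min(best, total + math.log(1.0 / delta) / (alpha - 1.0))
    return best


def rounds_within_budget(sigmas: Sequence[float], delta: float, budget: float) -> int:
    """Number of leading rounds whose cumulative epsilon stays within the budget."""
    fitted = 0
    for t in range(1, len(sigmas) + 1):
        if epsilon(sigmas[:t], delta) > budget:
            break
        fitted = t
    return fitted


delta = 1e-5
for sigma in (5.0, 10.0, 15.0):
    print(f"sigma={sigma:>4}: epsilon after 100 rounds = {epsilon([sigma] * 100, delta):.2f}")

constant = [5.0] * 100
decaying = [5.0 * 0.99 ** t for t in range(100)]  # hypothetical attenuated schedule
print(rounds_within_budget(constant, delta, budget=8.0),
      rounds_within_budget(decaying, delta, budget=8.0))
```

Larger noise yields a smaller ε, and a schedule that attenuates the noise exhausts a fixed budget in fewer rounds, which is why an adaptive method can complete fewer updates than a constant-noise baseline under the same budget.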

Figure 6.

The influence of the initial noise parameter on the validation accuracy.

5.3. Membership Inference Attack Experiments

This section performs membership inference attack experiments to validate the privacy-preserving ability of the proposed federated learning method based on knowledge distillation and DP. The membership inference attack is a popular privacy attack that uses a model's outputs to infer whether specific samples were part of its training data. In this experiment, this work simulates potentially malicious participants that attempt, by analyzing the model output, to infer which individual samples belong to the training data. Table 1 lists the parameters of the shadow and attack models. The shadow model was trained for 50 epochs with a batch size of 128, a learning rate of 0.001, a learning rate decay of 0.96, and a regularization coefficient of 1 × 10⁻⁴. The attack model was trained for 50 rounds with a smaller batch size of 10, a learning rate of 0.001, a learning rate decay of 0.96, and a regularization coefficient of 1 × 10⁻⁷. These settings were chosen to simulate a realistic training environment while giving the models sufficient capacity to mount membership inference attacks. Table 2 shows the results. For FedKADP, the precision, recall, and F1 score for recognizing member samples are 0.48, 0.03, and 0.06, respectively, with a support of 15,000 samples. For traditional FedAvg, the precision, recall, and F1 score are 0.50, 0.18, and 0.26, with the same support of 15,000 samples. Although both methods yield similar precision, FedKADP has a much lower recall and F1 score, meaning that the attack model correctly identifies far fewer member samples. This indicates that FedKADP mitigates membership inference attacks more effectively and thus provides stronger privacy protection for the training data.
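The reported F1 scores follow from the reported precision and recall, since F1 is their harmonic mean, 2PR/(P + R). The quick consistency check below uses only the values stated in the text; the function name is illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall of the attack model on member samples, as reported in the text.
reported = {"FedKADP": (0.48, 0.03), "FedAvg": (0.50, 0.18)}
for method, (p, r) in reported.items():
    print(f"{method}: F1 = {f1_score(p, r):.2f}")
```

This prints F1 = 0.06 for FedKADP and F1 = 0.26 for FedAvg, matching the reported values: with recall as low as 0.03, the F1 score collapses even though precision stays near 0.5.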

Table 1.

Relevant parameters.

Table 2.

Experimental results.

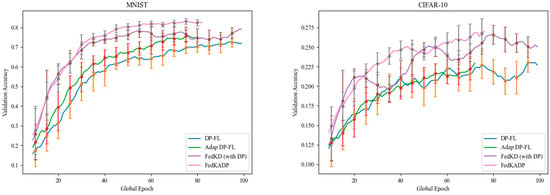

5.4. Comparison Experiments

The objective of this section is to verify the efficacy of FedKADP on non-IID data under the same privacy budget. To this end, FedKADP is compared with FedKD [22] (with DP), Adap DP-FL [23], and DP-FL [6].

As illustrated in Figure 7, on the MNIST dataset with an initial noise parameter of 5 and a client sampling rate of 0.1, FedKADP completes 83 model updates under the shared privacy budget and reaches an accuracy of 85.1%. Under the same budget, FedKD (with DP) completes 100 updates with an accuracy of 81.9%, Adap DP-FL completes 80 updates with an accuracy of 81.2%, and DP-FL completes 100 updates with an accuracy of 76.5%. In terms of communication cost, FedKD (with DP) needs 99 rounds to reach 81.9%, whereas FedKADP reaches the same accuracy in only 42 rounds; Adap DP-FL needs 75 rounds to reach 81.2%, which FedKADP also reaches in 42 rounds; and DP-FL needs 89 rounds to reach 76.5%, which FedKADP reaches in only 30 rounds.

Figure 7.

Validation accuracy comparison.

On the CIFAR-10 dataset, with the same initial noise parameter of 5 and client sampling rate of 0.1, FedKADP completes 77 model updates under the shared privacy budget and reaches an accuracy of 29.6%. Under the same budget, FedKD (with DP) completes 100 updates with an accuracy of 28.5%, Adap DP-FL completes 73 updates with an accuracy of 25.4%, and DP-FL completes 100 updates with an accuracy of 25%. In terms of communication cost, FedKD (with DP) needs 78 rounds to reach 28.5%, whereas FedKADP reaches the same accuracy in 64 rounds; Adap DP-FL needs 73 rounds to reach 25.4%, which FedKADP reaches in 35 rounds; and DP-FL needs 68 rounds to reach 25%, which FedKADP reaches in only 29 rounds.
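The round counts reported for both datasets can be compared directly as the percentage of communication rounds saved by FedKADP to reach each baseline's accuracy. The sketch below only re-derives percentages from the numbers stated in this section; `RESULTS` and `round_reduction` are illustrative names, not part of FedKADP.

```python
# (baseline, accuracy reached in %, baseline rounds, FedKADP rounds), as reported in Section 5.4.
RESULTS = {
    "MNIST": [
        ("FedKD (with DP)", 81.9, 99, 42),
        ("Adap DP-FL", 81.2, 75, 42),
        ("DP-FL", 76.5, 89, 30),
    ],
    "CIFAR-10": [
        ("FedKD (with DP)", 28.5, 78, 64),
        ("Adap DP-FL", 25.4, 73, 35),
        ("DP-FL", 25.0, 68, 29),
    ],
}


def round_reduction(baseline_rounds: int, fedkadp_rounds: int) -> float:
    """Percentage of communication rounds saved by FedKADP to reach the same accuracy."""
    return 100.0 * (baseline_rounds - fedkadp_rounds) / baseline_rounds


for dataset, rows in RESULTS.items():
    for baseline, acc, base_rounds, ours in rows:
        saving = round_reduction(base_rounds, ours)
        print(f"{dataset:8} {baseline:16} {acc:5.1f}%  "
              f"{base_rounds:3d} -> {ours:3d} rounds  ({saving:.1f}% fewer)")
```

The savings range from about 18% (FedKD with DP on CIFAR-10, 78 to 64 rounds) to about 66% (DP-FL on MNIST, 89 to 30 rounds).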

6. Conclusions

In conclusion, this work presents FedKADP, a federated learning method that combines differential privacy and knowledge distillation to improve both privacy and performance, especially on non-IID data. Through a bidirectional feedback mechanism, FedKADP dynamically balances privacy protection and model performance, reduces communication overhead, and speeds up global model convergence. Experiments on the MNIST and CIFAR-10 datasets show that, under the same differential privacy budget, FedKADP outperforms traditional FL methods by reducing model heterogeneity and accelerating convergence. These results suggest that FedKADP is a step toward efficient, privacy-preserving FL, with potential applications in data-rich but privacy-sensitive domains.

Nevertheless, this study has some limitations. The method was evaluated only on the standard MNIST and CIFAR-10 datasets, which are relatively small and may not fully capture the complexity of non-IID data in real-world scenarios. Future work will further optimize the method, evaluate it on larger and more complex datasets, and compare its performance under different non-IID data distributions to assess its adaptability and robustness more comprehensively.

Author Contributions

Y.J. was mainly responsible for conceptualization, methodology, and writing the original draft. X.Z., the corresponding author, oversaw supervision, validation, and formal analysis and was in charge of reviewing and editing. H.L. was responsible for algorithm implementation and experimental design. Y.X. undertook data curation, investigation, and visualization. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China (Grant 61972208) and in part by the China Postdoctoral Science Foundation funded project (Grant 2018M640509).

Data Availability Statement

The data that support the findings of this study are openly available in the following public repositories: (1) MNIST dataset: available at http://yann.lecun.com/exdb/mnist/, accessed on 1 March 2024. (2) CIFAR-10 dataset: available at https://www.cs.toronto.edu/~kriz/cifar.html, accessed on 1 March 2024.

Acknowledgments

The authors extend their heartfelt gratitude to all individuals and institutions who contributed to the realization of this research.

Conflicts of Interest

The authors declare they have no conflicts of interest to report regarding this study.

References

1. Waring, J.; Lindvall, C.; Umeton, R. Automated machine learning: Review of the state-of-the-art and opportunities for healthcare. Artif. Intell. Med. 2020, 104, 101822.

2. Lin, W.Y.; Hu, Y.H.; Tsai, C.F. Machine learning in financial crisis prediction: A survey. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2011, 42, 421–436.

3. McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. Artif. Intell. Statist. 2017, 54, 1273–1282.

4. Singh, P.; Singh, M.K.; Singh, R.; Singh, N. Federated Learning: Challenges, Methods, and Future Directions; Springer International Publishing: Cham, Switzerland, 2022; pp. 199–214.

5. Fredrikson, M.; Jha, S.; Ristenpart, T. Model inversion attacks that exploit confidence information and basic countermeasures. In Proceedings of the 22nd ACM SIGSAC Conference on Computer and Communications Security, Denver, CO, USA, 12–16 October 2015; pp. 1322–1333.

6. Abadi, M.; Chu, A.; Goodfellow, I.; McMahan, H.B.; Mironov, I.; Talwar, K.; Zhang, L. Deep learning with differential privacy. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24–28 October 2016; pp. 308–318.

7. Noble, M.; Bellet, A.; Dieuleveut, A. Differentially private federated learning on heterogeneous data. Int. Conf. Artif. Intell. Statist. 2022, 151, 10110–10145.

8. Wei, K.; Li, J.; Ding, M.; Ma, C.; Yang, H.H.; Farokhi, F.; Jin, S.; Quek, T.Q.S.; Poor, H.V. Federated learning with differential privacy: Algorithms and performance analysis. IEEE Trans. Inf. Forensics Secur. 2020, 15, 3454–3469.

9. Tan, A.Z.; Yu, H.; Cui, L.; Yang, Q. Towards personalized federated learning. IEEE Trans. Neural Netw. Learn. Syst. 2022, 34, 9587–9603.

10. Zhao, Y.; Li, M.; Lai, L.; Suda, N.; Civin, D.; Chandra, V. Federated learning with non-iid data. arXiv 2018, arXiv:1806.00582.

11. Zhang, X.; Hong, M.; Dhople, S.; Yin, W.; Liu, Y. Fedpd: A federated learning framework with adaptivity to non-iid data. IEEE Trans. Signal Process. 2021, 69, 6055–6070.

12. Jeong, E.; Oh, S.; Kim, H.; Park, J.; Bennis, M.; Kim, S.L. Communication-efficient on-device machine learning: Federated distillation and augmentation under non-iid private data. arXiv 2018, arXiv:1811.11479.

13. Fallah, A.; Mokhtari, A.; Ozdaglar, A. Personalized federated learning: A meta-learning approach. arXiv 2020, arXiv:2002.07948.

14. Tursunboev, J.; Kang, Y.-S.; Huh, S.-B.; Lim, D.W.; Kang, J.M.; Jung, H. Hierarchical Federated Learning for Edge-Aided Unmanned Aerial Vehicle Networks. Appl. Sci. 2022, 12, 670.

15. Hinton, G.; Vinyals, O.; Dean, J. Distilling the knowledge in a neural network. arXiv 2015, arXiv:1503.02531.

16. Dwork, C.; Roth, A. The algorithmic foundations of differential privacy. Found. Trends® Theor. Comput. Sci. 2014, 9, 211–407.

17. McSherry, F.; Talwar, K. Mechanism design via differential privacy. In Proceedings of the 48th Annual IEEE Symposium on Foundations of Computer Science (FOCS’07), Providence, RI, USA, 21–23 October 2007; pp. 94–103.

18. McSherry, F.D. Privacy integrated queries: An extensible platform for privacy-preserving data analysis. In Proceedings of the SIGMOD/PODS ’09: International Conference on Management of Data, Providence, RI, USA, 29 June–2 July 2009; pp. 19–30.

19. Mironov, I. Rényi differential privacy. In Proceedings of the 2017 IEEE 30th Computer Security Foundations Symposium (CSF), Santa Barbara, CA, USA, 21–25 August 2017; pp. 263–275.

20. Liu, F. Generalized gaussian mechanism for differential privacy. IEEE Trans. Knowl. Data Eng. 2018, 31, 747–756.

21. Wang, Y.X.; Balle, B.; Kasiviswanathan, S.P. Subsampled rényi differential privacy and analytical moments accountant. In Proceedings of the 22nd International Conference on Artificial Intelligence and Statistics, Okinawa, Japan, 16–18 April 2019; pp. 1226–1235.

22. Wu, C.; Wu, F.; Lyu, L.; Huang, Y.; Xie, X. Communication-efficient federated learning via knowledge distillation. Nat. Commun. 2022, 13, 2032.

23. Fu, J.; Chen, Z.; Han, X. Adap dp-fl: Differentially private federated learning with adaptive noise. In Proceedings of the 2022 IEEE International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom), Wuhan, China, 9–11 December 2022; pp. 656–663.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).