Feature Fusion Image Dehazing Network Based on Hybrid Parallel Attention

Abstract

1. Introduction

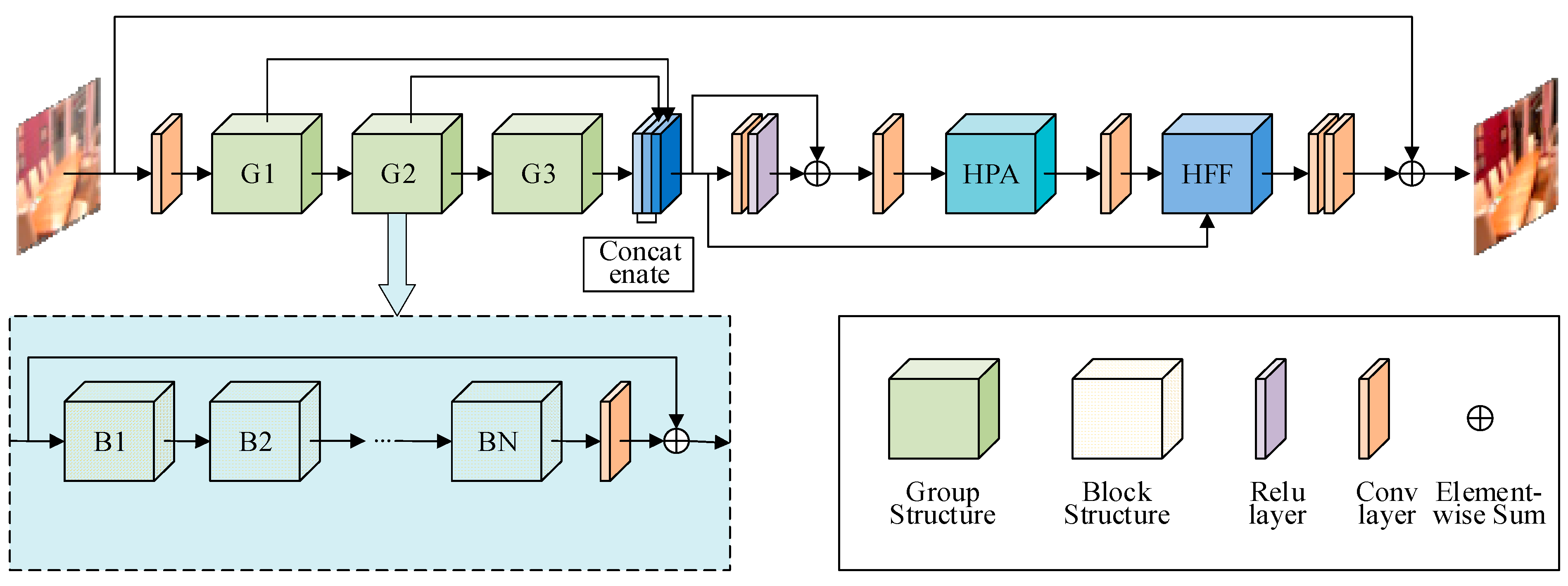

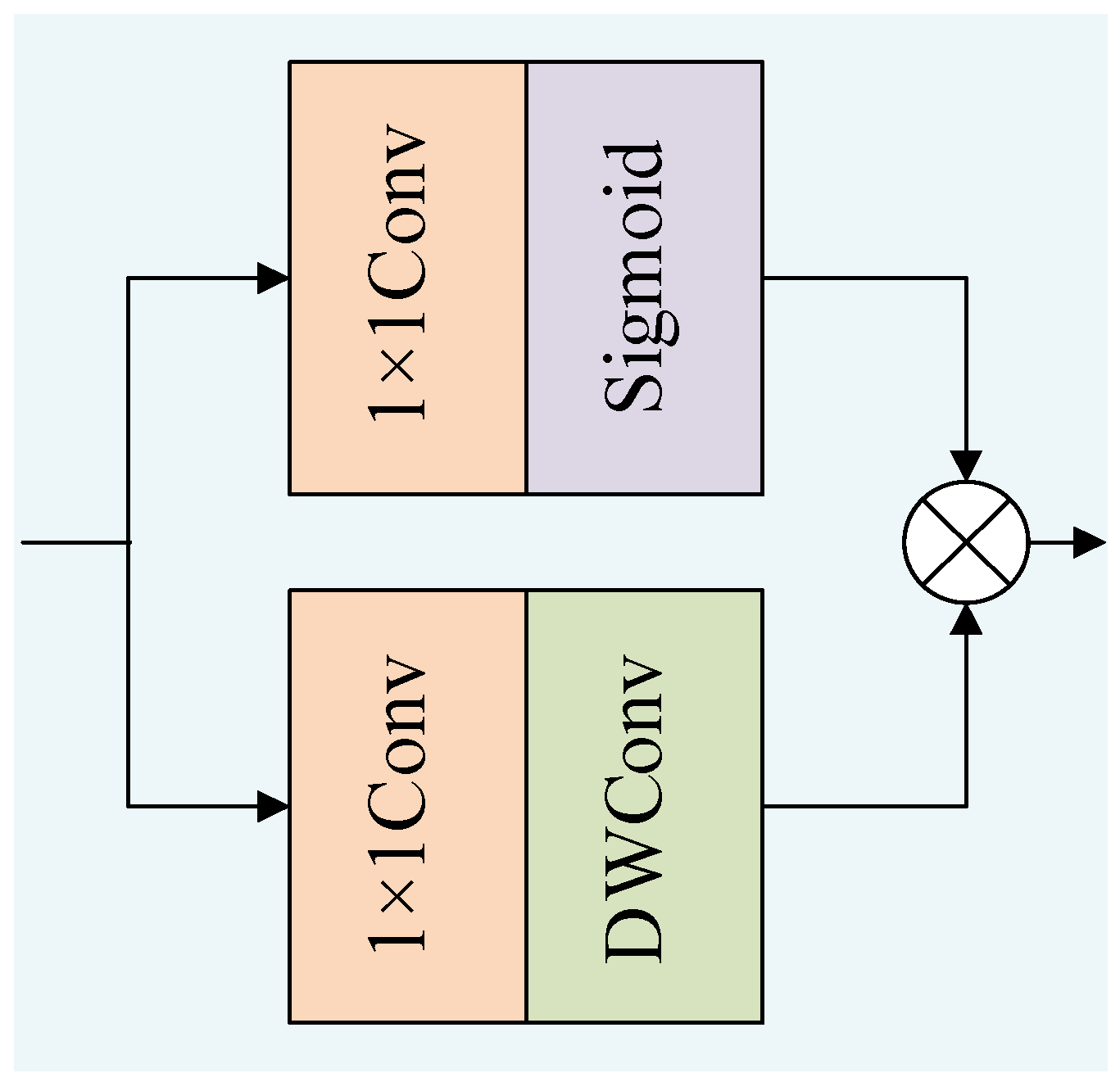

- A hybrid parallel attention (HPA) module is proposed to replace the feature attention (FA) module of the FFA-Net baseline. It combines pixel attention, channel attention, and spatial attention in parallel. The parallel connection strengthens the extraction and fusion of global spatial context information, yields a more comprehensive and accurate feature representation, and improves dehazing on images with unevenly distributed haze.

- A hierarchical feature fusion (HFF) module with an adaptively expanded receptive field is introduced. It dynamically fuses feature maps from distinct paths to balance local and global features, and it refines and enhances image features to improve the dehazing effect.

- A hybrid loss function is adopted that adds a perceptual loss term to the original loss in order to improve the brightness and contrast of the dehazed image. The L1 loss measures the difference between the generated dehazed image and the real image, and it aims to retain edge information while reducing noise and artifacts. The perceptual loss focuses on perceptual quality: it extracts texture and structural features of the image and helps restore its high-frequency information.

2. Related Work

2.1. Dehazing Methods Based on Priors

2.2. Deep Learning-Based Dehazing Methods

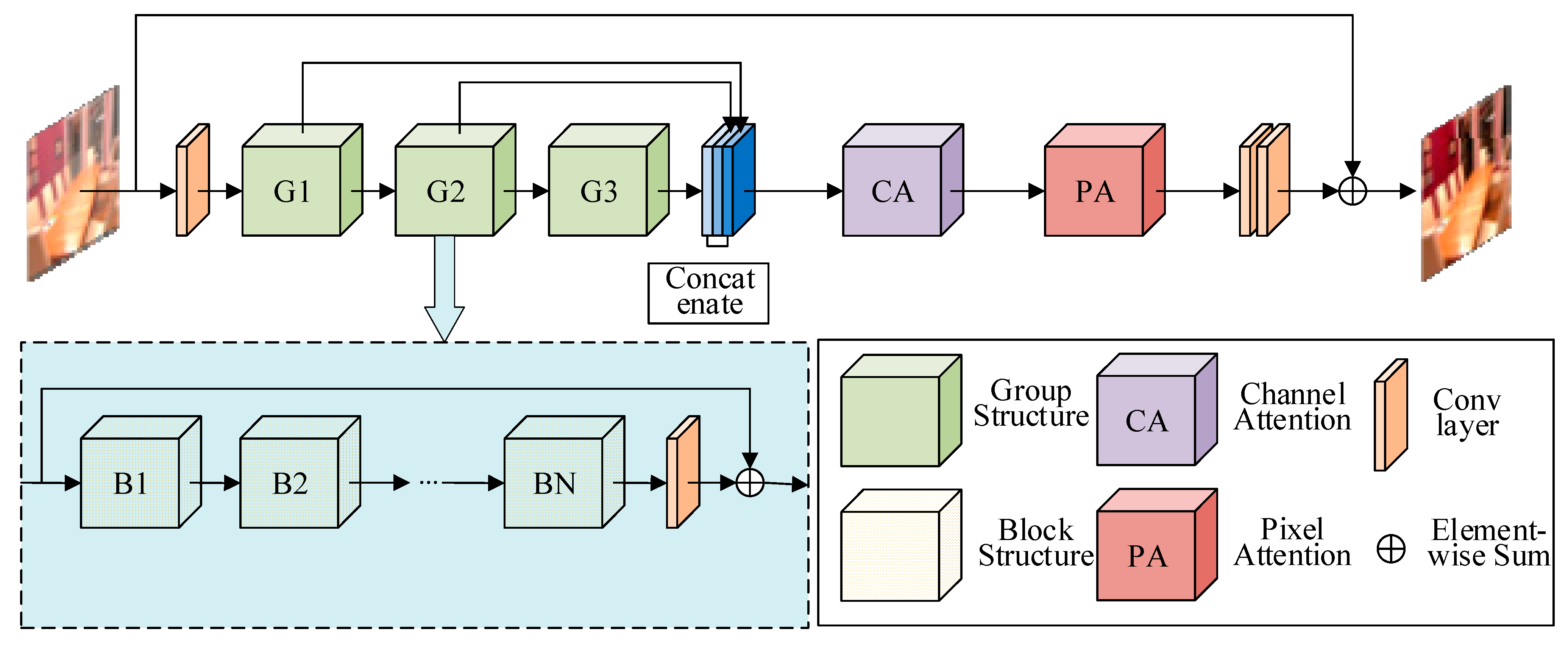

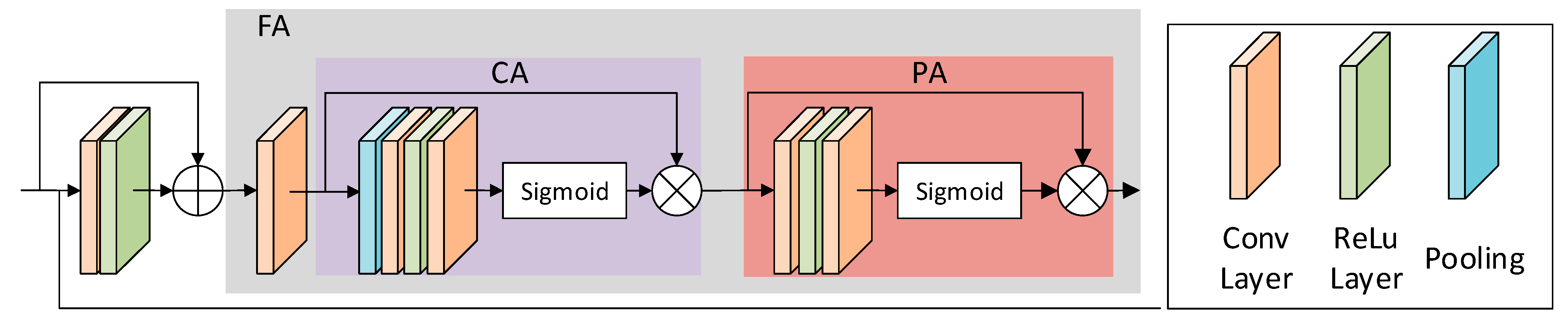

2.3. Baseline Model FFA-Net

3. HPA-HFF Network

3.1. HPA Module

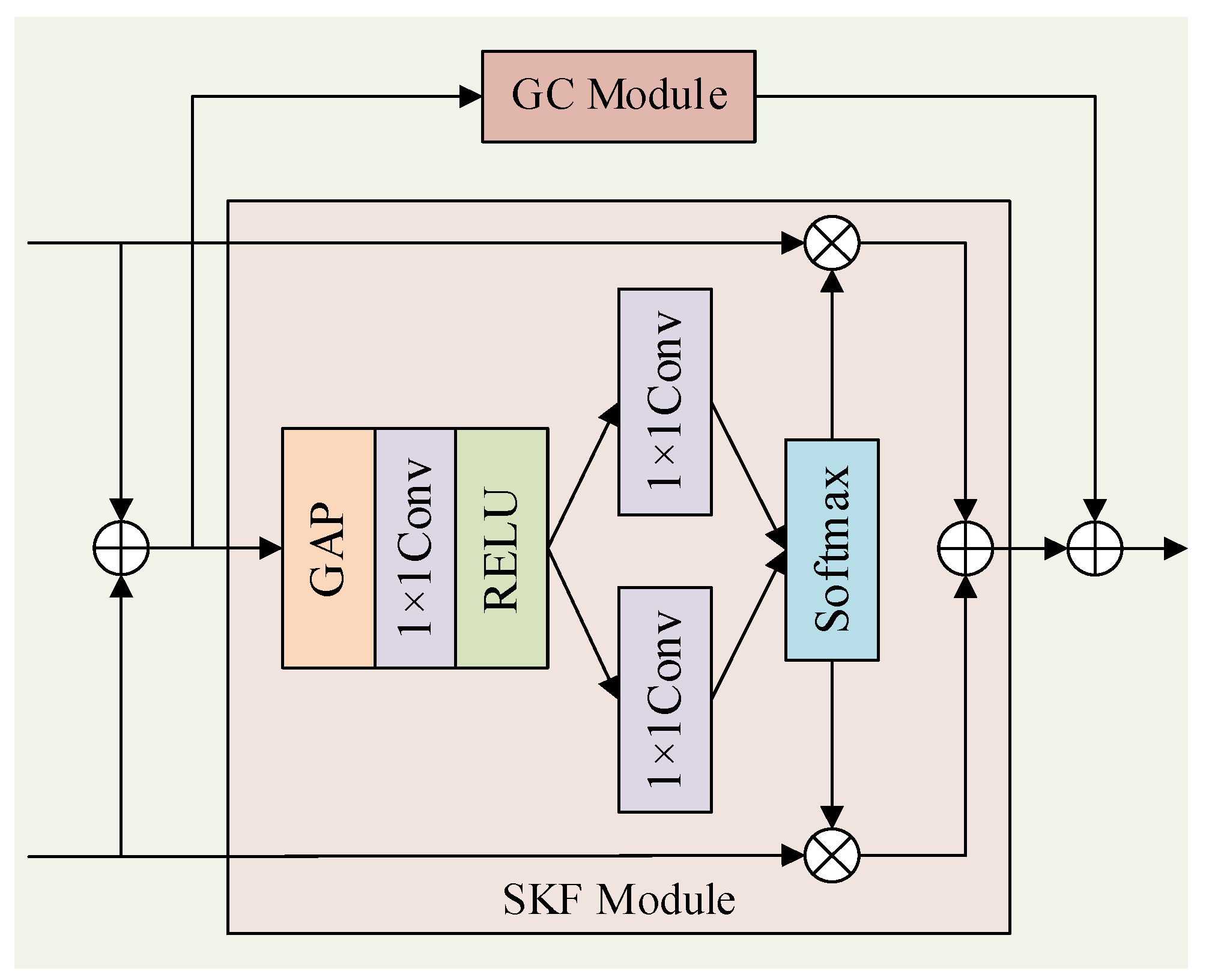
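As a rough illustration of the HPA idea summarized in the Introduction (pixel, channel, and spatial attention applied in parallel), the following NumPy sketch runs the three branches on a single feature map. It is not the authors' implementation: the weight shapes, the `reduction` ratio, the 1x1 mixing used in place of a larger spatial convolution, and the summation used to merge the branches are all assumptions made for this example, and every function name is hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def conv1x1(x, w):
    """1x1 convolution: x is (C_in, H, W), w is (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)

def channel_attention(x, w1, w2):
    """One weight per channel, computed from globally averaged features."""
    pooled = x.mean(axis=(1, 2), keepdims=True)        # (C, 1, 1)
    hidden = np.maximum(conv1x1(pooled, w1), 0.0)       # ReLU
    return sigmoid(conv1x1(hidden, w2))                 # (C, 1, 1)

def pixel_attention(x, w1, w2):
    """One weight per spatial position, shared across channels."""
    hidden = np.maximum(conv1x1(x, w1), 0.0)            # ReLU
    return sigmoid(conv1x1(hidden, w2))                 # (1, H, W)

def spatial_attention(x, w):
    """Map built from channel-wise mean and max statistics.
    Assumption: a 1x1 mix replaces the larger convolution used in practice."""
    stats = np.stack([x.mean(axis=0), x.max(axis=0)])   # (2, H, W)
    return sigmoid(conv1x1(stats, w))                   # (1, H, W)

def hybrid_parallel_attention(x, p):
    """All three branches see the same input; outputs are summed (assumption)."""
    ca = channel_attention(x, p["ca1"], p["ca2"])
    pa = pixel_attention(x, p["pa1"], p["pa2"])
    sa = spatial_attention(x, p["sa"])
    return x * ca + x * pa + x * sa

def init_params(channels, reduction=8, seed=0):
    """Random demo weights; a trained network would learn these."""
    rng = np.random.default_rng(seed)
    r = max(channels // reduction, 1)
    return {
        "ca1": rng.normal(scale=0.1, size=(r, channels)),
        "ca2": rng.normal(scale=0.1, size=(channels, r)),
        "pa1": rng.normal(scale=0.1, size=(r, channels)),
        "pa2": rng.normal(scale=0.1, size=(1, r)),
        "sa": rng.normal(scale=0.1, size=(1, 2)),
    }

if __name__ == "__main__":
    x = np.random.default_rng(1).normal(size=(16, 8, 8))
    y = hybrid_parallel_attention(x, init_params(16))
    print(y.shape)  # (16, 8, 8)
```

Because each branch produces weights in (0, 1) with a different shape, (C, 1, 1) for channel attention and (1, H, W) for the pixel and spatial branches, broadcasting lets each one re-weight the same input along a different axis before the results are merged.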

3.2. HFF Module
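The Introduction describes the HFF module as dynamically fusing feature maps from distinct paths. One common way to realize such dynamic fusion is a selective-kernel-style weighting, in which a softmax over paths decides, per channel, how much each path contributes. The sketch below shows that generic technique under stated assumptions; whether the HFF module uses exactly this form is not established by the text here, and the names `selective_fusion`, `w_squeeze`, and `w_expand` are hypothetical.

```python
import numpy as np

def selective_fusion(features, w_squeeze, w_expand):
    """Fuse K feature maps with per-channel softmax weights over paths.

    features:  list of K arrays, each of shape (C, H, W)
    w_squeeze: (r, C) weights compressing the global descriptor
    w_expand:  (K, C, r) weights producing one logit vector per path
    """
    stacked = np.stack(features)                     # (K, C, H, W)
    summed = stacked.sum(axis=0)                     # (C, H, W)
    descriptor = summed.mean(axis=(1, 2))            # (C,) global average pool
    z = np.maximum(w_squeeze @ descriptor, 0.0)      # (r,) compact descriptor
    logits = w_expand @ z                            # (K, C)
    logits = logits - logits.max(axis=0, keepdims=True)   # numerical stability
    weights = np.exp(logits)
    weights = weights / weights.sum(axis=0, keepdims=True)  # softmax over paths
    fused = (weights[:, :, None, None] * stacked).sum(axis=0)
    return fused, weights

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feats = [rng.normal(size=(8, 5, 5)) for _ in range(3)]
    fused, weights = selective_fusion(
        feats, rng.normal(size=(4, 8)), rng.normal(size=(3, 8, 4))
    )
    print(fused.shape, weights.shape)  # (8, 5, 5) (3, 8)
```

Since the weights sum to one across paths for every channel, the fused map is a convex combination of the inputs, which is what lets the network shift emphasis between paths with smaller and larger receptive fields.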

3.3. Loss Function
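The hybrid loss described in the Introduction combines an L1 term with a perceptual term. A minimal sketch of that combination follows. The weighting factor `lam`, its default value, the squared-error form of the feature distance, and the toy `gradient_features` extractor are assumptions for illustration only; in practice the perceptual term is usually computed on features from a fixed pretrained network.

```python
import numpy as np

def l1_loss(pred, target):
    """Mean absolute error between the dehazed output and the ground truth."""
    return float(np.mean(np.abs(pred - target)))

def perceptual_loss(pred, target, feature_fn):
    """Mean squared distance between feature maps of the two images.

    feature_fn is a hypothetical stand-in for a fixed feature extractor;
    it must return a list of feature arrays for a given image.
    """
    feats_p = feature_fn(pred)
    feats_t = feature_fn(target)
    return float(np.mean([np.mean((fp - ft) ** 2)
                          for fp, ft in zip(feats_p, feats_t)]))

def hybrid_loss(pred, target, feature_fn, lam=0.04):
    """L1 term plus a weighted perceptual term (lam is an assumed value)."""
    return l1_loss(pred, target) + lam * perceptual_loss(pred, target, feature_fn)

def gradient_features(img):
    """Toy extractor (assumption): horizontal and vertical finite differences."""
    return [np.diff(img, axis=-1), np.diff(img, axis=-2)]

if __name__ == "__main__":
    clean = np.tile(np.arange(4.0), (3, 4, 1))   # (3, 4, 4) horizontal ramp
    hazy = np.zeros_like(clean)
    print(hybrid_loss(hazy, clean, gradient_features))
```

The L1 term penalizes per-pixel differences directly, while the feature-space term reacts to differences in structure (here, image gradients), which mirrors the division of labor between the two losses described above.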

4. Experimental Results and Analysis

4.1. Dataset

4.2. Experimental Setup

4.3. Quantitative Analysis

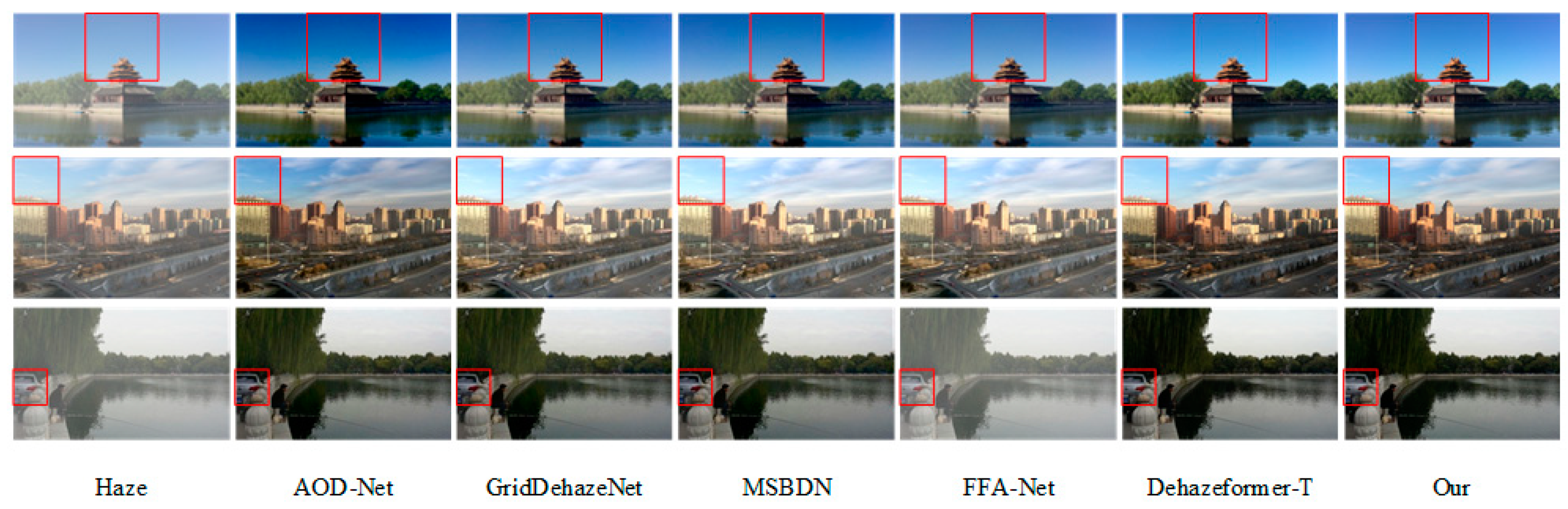

4.4. Qualitative Analysis

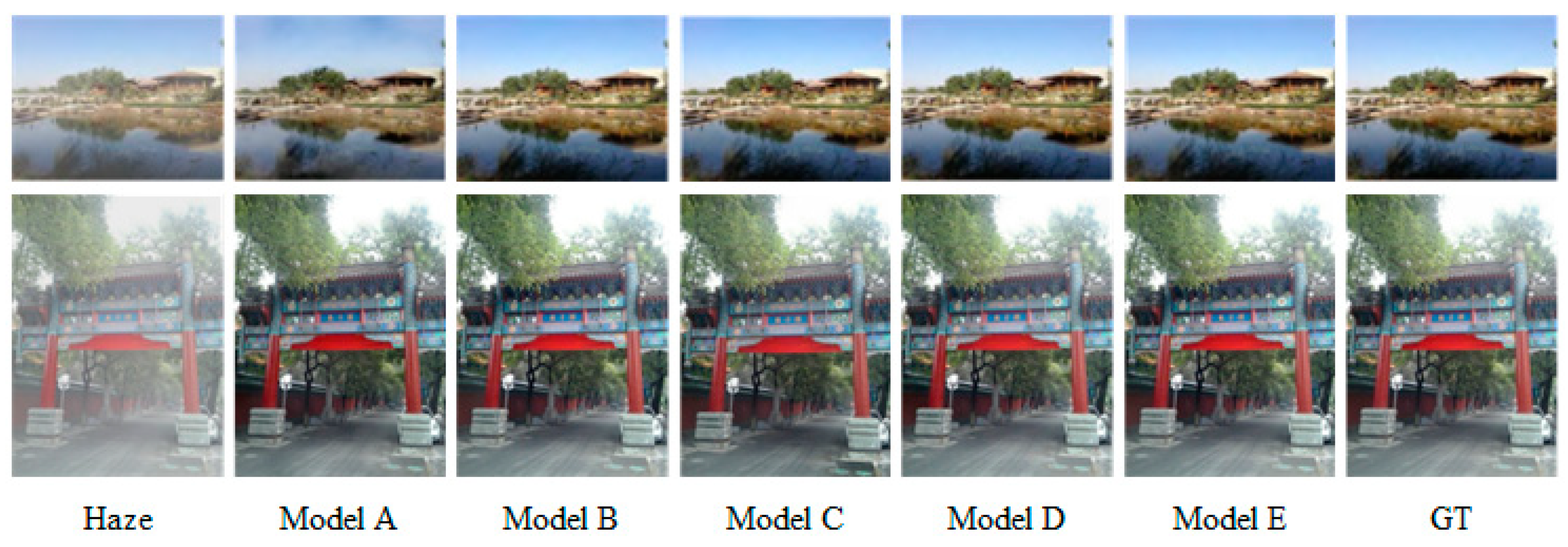

4.5. Ablation Experiments

- Model A (Base): the base network, FFA-Net.

- Model B (Base + HPA): the FA module in the base network is replaced with the hybrid parallel attention (HPA) module.

- Model C (Base + HFF): the hierarchical feature fusion (HFF) module is added to the base network.

- Model D (Base + HPA + HFF): the base network with both the HPA module and the HFF module.

- Model E (Base + HPA + HFF + LP): the complete model, which adds the perceptual loss function (LP) to Model D. This is the network proposed in this article.

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Method | SOTS Indoor PSNR (dB) | SOTS Indoor SSIM | SOTS Outdoor PSNR (dB) | SOTS Outdoor SSIM | Param (M) | Latency (ms) |
|---|---|---|---|---|---|---|
| DCP | 16.73 | 0.8617 | 19.15 | 0.8146 | - | - |
| AOD-Net | 19.06 | 0.8504 | 20.29 | 0.8765 | 0.002 | 0.351 |
| DehazeNet | 20.13 | 0.8457 | 22.16 | 0.8233 | 0.009 | 0.899 |
| RefineDNet | 23.23 | 0.9431 | 23.84 | 0.9324 | - | - |
| GridDehazeNet | 32.25 | 0.9837 | 30.86 | 0.9819 | 0.956 | 9.345 |
| MSBDN | 33.67 | 0.9860 | 33.48 | 0.9820 | 31.35 | 13.254 |
| FFA-Net | 36.39 | 0.9886 | 33.57 | 0.9840 | 4.456 | 49.397 |
| DehazeFormer-T | 35.34 | 0.9831 | 33.15 | 0.9716 | 0.686 | 16.278 |
| HPA-HFF | 39.41 | 0.9967 | 35.52 | 0.9887 | 5.54 | 15.648 |
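To make the PSNR column easier to interpret, the sketch below computes PSNR from the mean squared error using the standard definition, PSNR = 10 log10(MAX^2 / MSE), and converts a PSNR gain in dB into the corresponding MSE reduction factor. The assumption that pixel values lie in [0, 1] (`max_val=1.0`) and the helper names are choices made for this example. Benchmark PSNR is typically averaged over images, so the conversion is exact only for a single image pair.

```python
import numpy as np

def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB for images scaled to [0, max_val]."""
    mse = np.mean((pred - target) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_val ** 2 / mse))

def mse_ratio_for_gain(gain_db):
    """Factor by which MSE shrinks for a given PSNR gain on one image pair."""
    return 10.0 ** (gain_db / 10.0)

if __name__ == "__main__":
    target = np.zeros((4, 4))
    pred = np.full((4, 4), 0.1)            # uniform error of 0.1, so MSE = 0.01
    print(round(psnr(pred, target), 2))    # 20.0
    # Indoor gap between HPA-HFF (39.41 dB) and FFA-Net (36.39 dB) in the table
    print(round(mse_ratio_for_gain(39.41 - 36.39), 2))
```

Under that single-image reading, the 3.02 dB indoor gap between HPA-HFF and FFA-Net corresponds to roughly a twofold reduction in MSE.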

| Model | SOTS Indoor PSNR (dB) | SOTS Indoor SSIM | SOTS Outdoor PSNR (dB) | SOTS Outdoor SSIM | Param (M) | Latency (ms) |
|---|---|---|---|---|---|---|
| Model A | 36.39 | 0.9886 | 33.57 | 0.9840 | 4.456 | 49.397 |
| Model B | 38.53 | 0.9912 | 34.52 | 0.9857 | 5.538 | 21.482 |
| Model C | 36.55 | 0.9809 | 33.67 | 0.9835 | 3.415 | 17.391 |
| Model D | 38.97 | 0.9934 | 35.01 | 0.9853 | 5.183 | 16.869 |
| Model E | 39.41 | 0.9967 | 35.52 | 0.9887 | 5.541 | 15.648 |
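The per-component contributions implied by the ablation table can be read off with simple arithmetic. The short script below copies the PSNR and latency values for Models A to E from the table and reports each variant's gain over the base model; the dictionary layout and helper names are only a convenience for this example.

```python
# PSNR (dB) and latency (ms) copied from the ablation table above.
ablation = {
    "A": {"psnr_in": 36.39, "psnr_out": 33.57, "latency_ms": 49.397},
    "B": {"psnr_in": 38.53, "psnr_out": 34.52, "latency_ms": 21.482},
    "C": {"psnr_in": 36.55, "psnr_out": 33.67, "latency_ms": 17.391},
    "D": {"psnr_in": 38.97, "psnr_out": 35.01, "latency_ms": 16.869},
    "E": {"psnr_in": 39.41, "psnr_out": 35.52, "latency_ms": 15.648},
}

def gain(model, key="psnr_in", base="A"):
    """Difference between a model and the base model, rounded to 2 decimals."""
    return round(ablation[model][key] - ablation[base][key], 2)

def speedup(model, base="A"):
    """Ratio of base latency to model latency, rounded to 2 decimals."""
    return round(ablation[base]["latency_ms"] / ablation[model]["latency_ms"], 2)

if __name__ == "__main__":
    for name in "BCDE":
        print(name, gain(name), gain(name, "psnr_out"), speedup(name))
```

On SOTS indoor, replacing FA with HPA alone (Model B) accounts for 2.14 dB of the 3.02 dB total gain of Model E, while adding HFF alone (Model C) contributes 0.16 dB; the complete model also runs about 3.16 times faster than the base network according to the reported latencies.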

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, H.; Chen, M.; Li, H.; Peng, H.; Su, Q. Feature Fusion Image Dehazing Network Based on Hybrid Parallel Attention. Electronics 2024, 13, 3438. https://doi.org/10.3390/electronics13173438
