SSuieBERT: Domain Adaptation Model for Chinese Space Science Text Mining and Information Extraction

Abstract

1. Introduction

2. Related Work

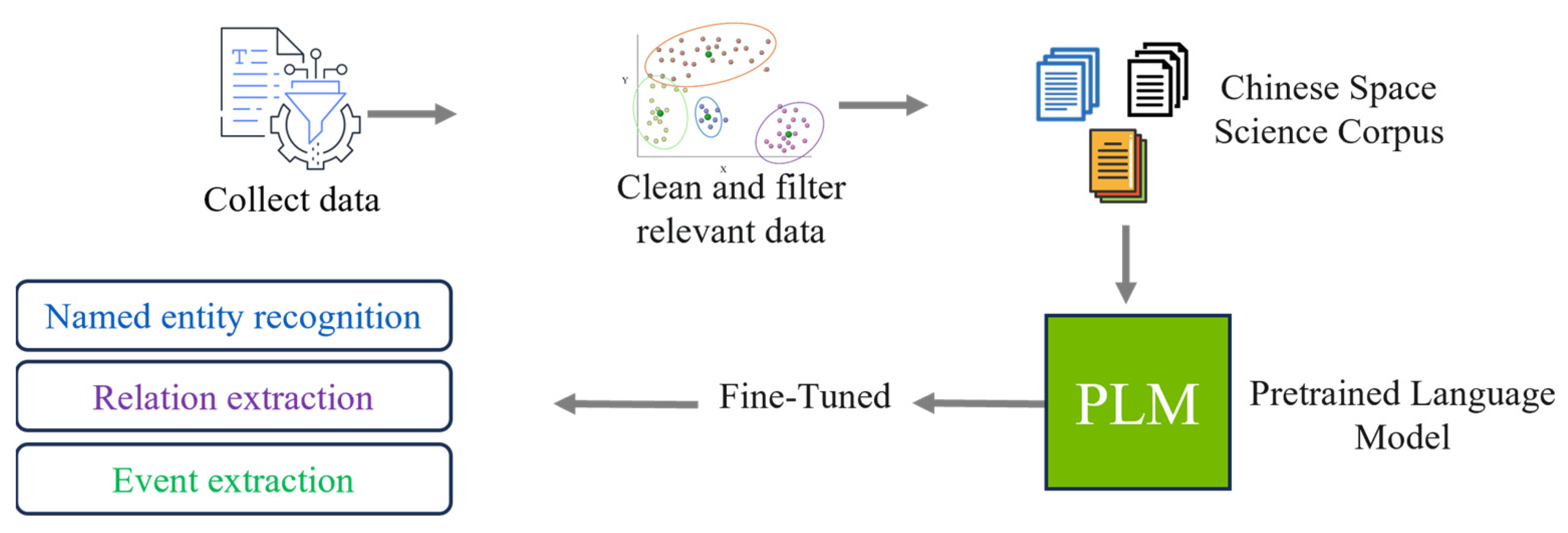

3. Methodology

3.1. Construction of Domain Pre-Training Corpus

3.2. Domain Chinese Word Segmentation

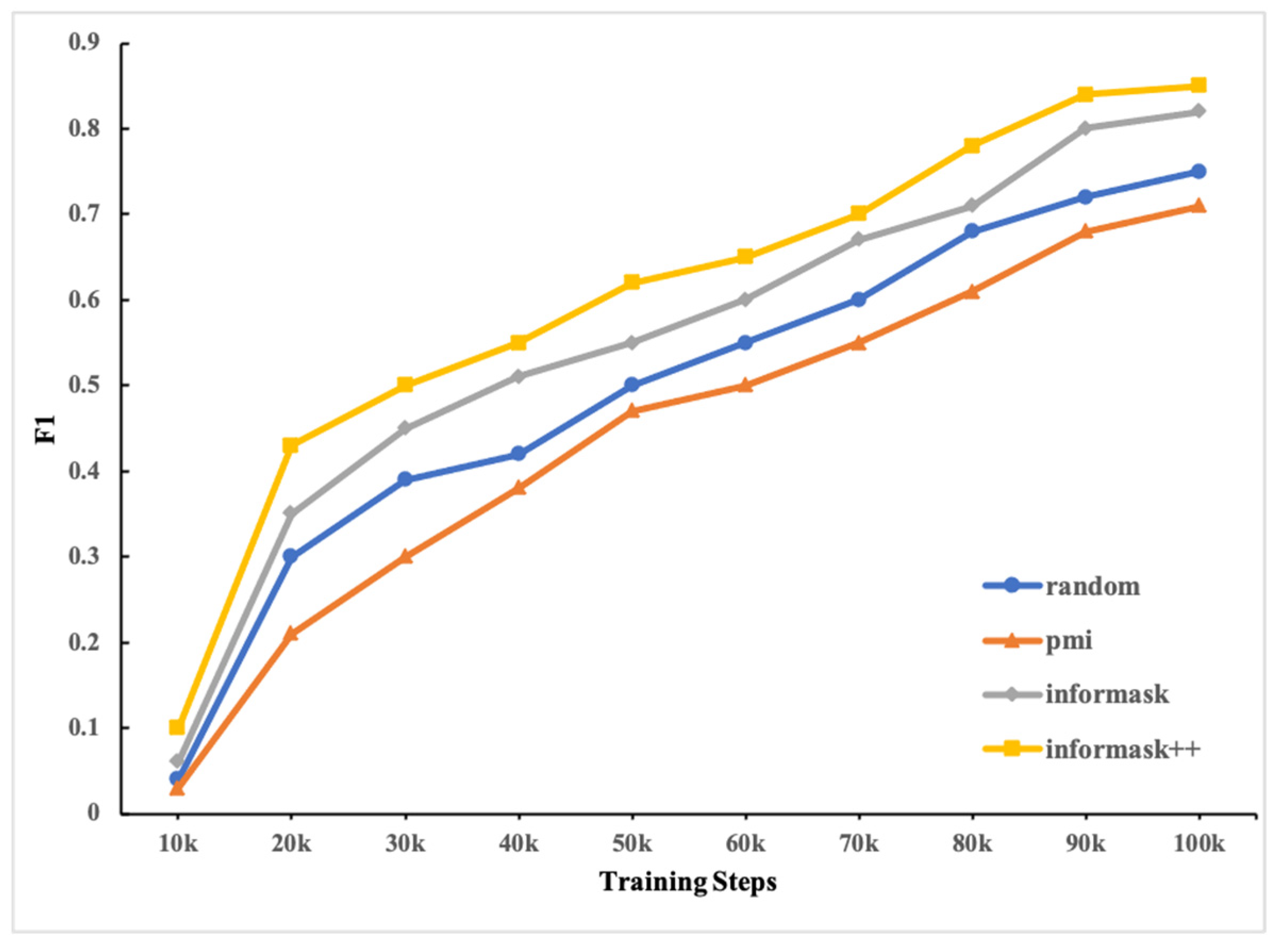

3.3. Informative Masking

| Algorithm 1. Informask++ Algorithm |

| Text Set Randomly sampled candidates’ size Informative score of -th masking candidate in text for ∈ do for = 1, 2,…, do Calculate -th masking candidate for ← Masked Words ← Unmasked Words ← 0 for ∈ do for ∈ do = + pmi (, ) pmi (, ) = end for end for end for Select candidate based on maximum end for |

- We use the domain segmentation model to generate domain words, the method to create the domain words refers to the Section 3.2.

- We propose to apply informask++ instead of random masking. we aim to automatically identify words with more important semantic information and increase their mask probability, which facilitates the model to focus on more informative words to obtain abundant semantic information.

- To align with BERT, we set the overall mask rate to 15%. 80% of the tokens are replaced with [MASK] tokens, 10% of the tokens are replaced with random words, and the original words are kept in the remaining 10%.

3.4. Further Pretraining in SSUIE Domain

- Informative masking: As mentioned in Section 3.3, it aims to automatically identify more informative tokens (e.g., professional terms and phrases) and increase the ratio that they will be masked.

- Eliminate NSP loss from training objectives: Pre-train BERT with two tasks: MLM (masking language model) and NSP (next sentence prediction). The NSP task is to determine whether two sentences are matched and semantically coherent. The authors of RoBERTa claim that the performance of downstream tasks will be improved without NSP loss.

- Full-length sequences: The maximum length limit for BERT input is 512. The authors of RoBERTa verified through experiments that the model can achieve better results when trained using full-length sequences. Specifically, it will continuously extract sentences from a text to fill the input sequence, but if it reaches the end of the text, it will continue to extract sentences from the next text to fill the sequence, and the content in different texts will still be segmented according to the [SEP] separator.

- Larger batch size: In RoBERTa’s comparative experiments with different batch sizes and learning rates, it was found that increasing the batch size is beneficial for reducing the Perplexity of training data and further improving the performance of the model.

4. Experimental Setups

4.1. SSUIE Language Model Pretraining

4.2. Finetuning Tasks

4.3. Modeling

5. Results

5.1. Named Entity Recognition

5.2. Relation Extraction

5.3. Event Classification

6. Discussion

6.1. Fine-Tuning Strategies

6.1.1. Labeling Schemes

6.1.2. Learning Rates

6.2. Effectiveness of SSuieBERT

6.3. Investigation on MLM Task

6.4. Error Analysis

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Lee, J.; Yoon, W.; Kim, S.; Kim, D.; Kim, S.; So, C.H.; Kang, J. BioBERT: A pre-trained biomedical language representation model for biomedical text mining. Bioinformatics 2020, 36, 1234–1240. [Google Scholar] [CrossRef] [PubMed]

- Gururangan, S.; Marasović, A.; Swayamdipta, S.; Lo, K.; Beltagy, I.; Downey, D.; Smith, N.A. Don’t stop pretraining: Adapt language models to domains and tasks. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Stroudsburg, PA, USA, 6–8 July 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 8342–8360. [Google Scholar]

- Beltagy, I.; Lo, K.; Cohan, A. SciBERT: A pretrained language model for scientific text. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, EMNLP-IJCNLP 2019, Hong Kong, China, 3–7 November 2019; Inui, K., Jiang, J., Ng, V., Wan, X., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 3613–3618. [Google Scholar]

- Araci, D. FinBERT: Financial sentiment analysis with pre-trained language models. arXiv 2019, arXiv:1908.10063. [Google Scholar] [CrossRef]

- Lee, J.-S.; Hsiang, J. Patent classification by fine-tuning BERT language model. World Pat. Inf. 2020, 61, 101965. [Google Scholar] [CrossRef]

- Manning, C.; Schutze, H. Foundations of Statistical Natural Language Processing; MIT Press: Cambridge, MA, USA, 1999. [Google Scholar]

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805. [Google Scholar]

- Cui, Y.; Che, W.; Liu, T.; Qin, B.; Yang, Z.; Wang, S.; Hu, G. Pre-Training with Whole Word Masking for Chinese BERT. arXiv 2019, arXiv:1906.08101. [Google Scholar] [CrossRef]

- Sun, Y.; Wang, S.; Li, Y.; Feng, S.; Chen, X.; Zhang, H.; Tian, X.; Zhu, D.; Tian, H.; Wu, H. ERNIE: Enhanced Representation through Knowledge Integration. arXiv 2019, arXiv:1904.09223. [Google Scholar]

- Yang, Z.; Dai, Z.; Yang, Y.; Carbonell, J.; Salakhutdinov, R.; Le, Q.V. XLNet: Generalized Autoregressive Pretraining for Language Understanding. arXiv 2019, arXiv:1906.08237. [Google Scholar]

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. Roberta: A robustly optimized bert pretraining approach. arXiv 2019, arXiv:1907.11692. [Google Scholar]

- Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.; Dean, J. Distributed representations of words and phrases and their compositionality. In Proceedings of the 26th International Conference on Neural Information Processing System, Lake Tahoe Nevada, CA, USA, 5–10 December 2013; pp. 3111–3119. [Google Scholar]

- Pennington, J.; Socher, R.; Manning, C.D. Glove: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 25–29 October 2014; pp. 1532–1543. [Google Scholar]

- Matthew, E.; Peters; Neumann, M.; Iyyer, M.; Gardner, M.; Clark, C.; Lee, K.; Zettlemoyer, L. Deep contextualized word representations. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2018, New Orleans, LA, USA, 1–6 June 2018; Volume 1 (Long Papers), pp. 2227–2237. [Google Scholar]

- Howard, J.; Ruder, S. Universal language model fine-tuning for text classification. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; pp. 328–339. [Google Scholar]

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9. [Google Scholar]

- Huang, K.; Altosaar, J.; Ranganath, R. Clinicalbert: Modeling clinical notes and predicting hospital readmission. arXiv 2019, arXiv:1904.05342. [Google Scholar]

- Alsentzer, E.; Murphy, J.; Boag, W.; Weng, W.; Jindi, D.; Naumann, T.; McDermott, M. Publicly available clinical bert embeddings. In Proceedings of the 2nd Clinical Natural Language Processing Workshop, Minneapolis, MN, USA, 7 June 2019; pp. 72–78. [Google Scholar]

- Gupta, T.; Zaki, M.; Krishnan, N.M.A.; Mausam. MatSciBERT: A materials domain language model for text mining and information extraction. npj Comput. Mater. 2022, 8, 102. [Google Scholar] [CrossRef]

- Zhu, Y.; Kiros, R.; Zemel, R.; Salakhutdinov, R.; Urtasun, R.; Torralba, A.; Fidler, S. Aligning books and movies: Towards story-like visual explanations by watching movies and reading books. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 19–27. [Google Scholar]

- Wu, Y.; Schuster, M.; Chen, Z.; Le, Q.V.; Norouzi, M.; Macherey, W.; Krikun, M.; Cao, Y.; Gao, Q.; Macherey, K.; et al. Google’s neural machine translation system: Bridging the gap between human and machine translation. arXiv 2016, arXiv:1609.08144. [Google Scholar]

- Che, W.; Li, Z.; Liu, T. Ltp: A Chinese language technology platform. In Proceedings of the 23rd International Conference on Computational Linguistics: Demonstrations, Beijing, China, 23–27 August 2010; Association for Computational Linguistics: Stroudsburg, PA, USA, 2010; pp. 13–16. [Google Scholar]

- Joshi, M.; Chen, D.; Liu, Y.; Weld, D.S.; Zettlemoyer, L.; Levy, O. Spanbert: Improving pre-training by representing and predicting spans. Trans. Assoc. Comput. Linguist. 2020, 8, 64–77. [Google Scholar] [CrossRef]

- Levine, Y.; Lenz, B.; Lieber, O.; Abend, O.; Leyton-Brown, K.; Tennenholtz, M.; Shoham, Y. Pmi-masking: Principled masking of correlated spans. In Proceedings of the 9th International Conference on Learning Representations, ICLR 2021, Virtual Event, Austria, 3–7 May 2021. [Google Scholar]

- Sadeq, N.; Xu, C.; McAuley, J. InforMask: Unsupervised Informative Masking for Language Model Pretraining. arXiv 2022, arXiv:2210.11771. [Google Scholar] [CrossRef]

- Liu, Y.; Li, S.; Wang, C.; Xiong, X.; Zheng, Y.; Wang, L.; Hao, S. SSUIE 1.0: A Dataset for Chinese Space Science and Utilization Information Extraction. In Proceedings of the Natural Language Processing and Chinese Computing, Proceedings of the 12th National CCF Conference, NLPCC 2023, Foshan, China, 12–15 October 2023; pp. 223–235. [Google Scholar] [CrossRef]

- Baldini Soares, L.; FitzGerald, N.; Ling, J.; Kwiatkowski, T. Matching the blanks: Distributional similarity for relation learning. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 2895–2905. [Google Scholar]

- Ji, H.; Grishman, R. Refining event extraction through cross-document inference. In Proceedings of the ACL-08: HLT, Columbus, OH, USA, 15–20 June 2008; Association for Computational Linguistics: Stroudsburg, PA, USA, 2008; pp. 254–262. [Google Scholar]

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; p. 15947. [Google Scholar]

- Lafferty, J.D.; McCallum, A.; Pereira, F.C.N. Conditional random fields: Probabilistic models for segmenting and labeling sequence data. In Proceedings of the Eighteenth International Conference on Machine Learning, Sydney, Australia, 28 June–1 July 2001; Morgan Kaufmann: San Francisco, CA, USA, 2001; pp. 282–289. [Google Scholar]

- pytorch-crf—Pytorch-crf 0.7.2 Documentation. Available online: https://pytorch-crf.readthedocs.io/en/stable/ (accessed on 25 July 2024).

- Huang, Z.; Xu, W.; Yu, K. Bidirectional LSTM-CRF models for sequence tagging. arXiv 2015, arXiv:1508.01991. [Google Scholar] [CrossRef]

- Li, J.; Fei, H.; Liu, J.; Wu, S.; Zhang, M.; Teng, C.; Ji, D.; Li, F. Unified named entity recognition as word-word relation classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual Event, Canada, 22 February–1 March 2022; Volume 36, pp. 10965–10973. [Google Scholar]

- Wang, C.; Xiong, X.; Wang, L.; Zheng, Y.; Liu, Y.; Li, S. A Lexicon Enhanced Chinese Long Named Entity Recognition Using Word-Aware Attention. In Proceedings of the 2023 6th International Conference on Machine Learning and Natural Language Processing, Sanya, China, 27–29 December 2023; pp. 234–242. [Google Scholar]

- Zexuan, Z.; Chen, D. A frustratingly easy approach for entity and relation extraction. arXiv 2020, arXiv:2010.12812. [Google Scholar]

- Yan, Z.; Zhang, C.; Fu, J.; Zhang, Q.; Wei, Z. A partition filter network for joint entity and relation extraction. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Virtual, 7–11 November 2021. [Google Scholar]

- Xiong, X.; Wang, C.; Liu, Y.; Li, S. Enhancing Ontology Knowledge for Domain-Specific Joint Entity and Relation Extraction. In Proceedings of the 22nd Chinese National Conference on Computational Linguistics, Harbin, China, 3–5 August 2023; pp. 713–725. [Google Scholar]

| Corpus Name | Documents | No. Tokens |

|---|---|---|

| Web crawler | 46,746 | 123,570,673 |

| Wikipedia | 45,706 | 47,347,061 |

| Scientific publications | 11,342 | 55,683,291 |

| Archived files | 12,284 | 37,845,593 |

| Total | 116,078 | 264,446,618 |

| Chinese | English | |

|---|---|---|

| Original Sentence | 基于先进的静电悬浮技术开发的无容器材料实验柜。 | A containerless material experimental cabinet based on advanced electrostatic suspension technology. |

| +CWS | 基于/先进/的/静电/悬浮/技术/开发/的/无容器/材料/实验柜/。 | — |

| +DCWS | 基于/先进/的/静电悬浮/技术/开发/的/无容器材料实验柜/。 | — |

| +BERT Tokenizer | 基/于/先/进/的/静/电/悬/浮/技/术/开/发/的/无/容/器/材/料/实/验/柜/。 | A container ##less material experimental cabinet based on advanced electro ##static suspension technology. |

| Original Masking | 基/于/先/进/的/[M]/电/悬/浮/技/[M]/开/发/的/[M]/[M]/器/材/料/实/验/柜/。 | A [M] ##less material experimental cabinet [M] on advanced [M] ##static suspension [M]. |

| +WWM | 基于/先进/的/[M][M]/悬浮/[M][M]/开发/的/[M][M][M]/材料/实验柜/。 | A [M] [M] material experimental cabinet based on advanced [M] [M] suspension [M]. |

| +Informask++ | 基于/先进/的/[M][M][M][M]/技术/开发/的/[M][M][M][M] [M][M][M][M]/。 | A [M] [M] [M] [M] [M] based on advanced [M] [M] [M] technology. |

| Models | SSuieBERT | SciBERT | BERT-wwm | RoBERTa | BERT | LE-NER | W2NER |

|---|---|---|---|---|---|---|---|

| Linear | 79.75 (79.62) | 71.32 (71.30) | 72.32 (72.28) | 64.55 (64.50) | 63.11 (63.07) | 78.05 (78.02) | 77.95 (77.20) |

| CRF | 80.63 (80.53) | 72.76 (72.42) | 73.46 (73.37) | 66.17 (66.15) | 64.23 (64.21) | ||

| BiLSTM-CRF | 81.34 (81.31) | 71.80 (71.53) | 73.82 (71.66) | 67.56 (67.43) | 64.78 (64.65) |

| SSuieBERT | SciBERT | BERT-wwm | RoBERTa | BERT | OntoRE | PURE | PFN |

|---|---|---|---|---|---|---|---|

| 65.55 (65.52) | 58.68 (58.67) | 58.74 (58.73) | 58.61 (58.58) | 58.56 (58.45) | 63.40 (63.21) | 61.31 (61.28) | 60.72 (60.62) |

| SSuieBERT | SciBERT | BERT-wwm | RoBERTa | BERT |

|---|---|---|---|---|

| 94.56 (94.32) | 92.30 (92.18) | 92.31 (92.21) | 91.54 (91.52) | 91.42 (91.21) |

| Labeling Scheme | BIO | BIOES |

|---|---|---|

| Linear | 79.75 | 79.73 |

| CRF | 80.63 | 80.60 |

| BiLSTM-CRF | 81.34 | 81.35 |

| System | NER | RE | EC |

|---|---|---|---|

| SSuieBERT | 81.34 (81.31) | 65.55 (65.52) | 94.56 (94.32) |

| DCWS→CWS | 80.21 (80.18) | 65.38 (65.36) | 94.52 (94.52) |

| w/o DCWS | 79.76 (79.75) | 65.25 (65.21) | 94.06 (94.05) |

| informask++→informask | 80.66 (80.58) | 65.48 (65.45) | 94.50 (94.48) |

| w/o informask++ | 78.28 (78.26) | 64.43 (64.42) | 92.43 (92.41) |

| Model | Result |

|---|---|

| Setence | 天和核心舱是中国空间站的首发舱段,配备有高微重力实验柜和无容器材料实验柜。 The Tianhe Core module is the first segment of the China’s space station, equipped with a high microgravity experiment cabinet and a containerless material experiment cabinet. |

| Ground Truth | [Space_Mission 天和核心舱]是[Space_Mission 中国空间站]的首发舱段,配备有[Scientific_Experiment_Payload 高微重力实验柜]和[Scientific_Experiment_Payload 无容器材料实验柜]。 The [Space_Mission Tianhe Core module] is the first segment of the [Space_Mission China’s space station], equipped with a [Scientific_Experiment_Payload high microgravity experiment cabinet] and a [Scientific_Experiment_Payload containerless material experiment cabinet]. |

| SSuieBERT | [Space_Mission 天和核心舱]是[Space_Mission 中国空间站]的首发舱段,配备有[Scientific_Experiment_Payload 高微重力实验柜]和[Scientific_Experiment_Payload 无容器材料实验柜]。 The [Space_Mission Tianhe Core module] is the first segment of the [Space_Mission China’s space station], equipped with a [Scientific_Experiment_Payload high microgravity experiment cabinet] and a [Scientific_Experiment_Payload containerless material experiment cabinet]. |

| SciBERT | [Space_Mission 天和核心舱]是[Space_Mission 中国空间站]的首发舱段,配备有[Scientific_Experiment_Payload 高微重力实验柜]和[Scientific_Experiment_Payload 无容器] [Scientific_Domain 材料] [Scientific_Experiment_Payload 实验柜]。 The [Space_Mission Tianhe Core module] is the first segment of the [Space_Mission China’s space station], equipped with a [Scientific_Experiment_Payload high microgravity experiment cabinet] and a [Scientific_Experiment_Payload containerless] [Scientific_Domain material] [Scientific_Experiment_Payload experiment cabinet]. |

| BERT | [Space_Mission 天和核心舱]是[Organization 中国] [Space_Mission 空间站]的首发舱段,配备有[Scientific_Domain 高微重力] [Scientific_Experiment_Payload 实验柜]和[Scientific_Experiment_Payload 无容器] [Scientific_Domain 材料] [Scientific_Experiment_Payload 实验柜]。 The [Space_Mission Tianhe Core module] is the first segment of the [Organization China]’s [Space_Mission space station], equipped with a [Scientific_Domain high microgravity] [Scientific_Experiment_Payload experiment cabinet] and a [Scientific_Experiment_Payload containerless] [Scientific_Domain material] [Scientific_Experiment_Payload experiment cabinet]. |

| Setence | 两年以来,无容器材料实验柜中已开展多项关键研究项目,目前正在进行的项目包括偏晶合金壳/核型结构及弥散型组织形成机理研究、空间站静电悬浮复相合金相选择与无容器制备研究等,这些研究成果未来将会在许多领域发挥重要作用。 In the past two years, a number of key research projects have been carried out in the containerless material experimental cabinet, and the current projects include the study of monotectic alloy shell/karyotype structure and the formation mechanism of dispersed tissue, the selection of electrostatic suspension complex alloys and the study of containerless preparation in the space station, etc. These research results will play an important role in many fields in the future. |

| Ground Truth | 两年以来,[Scientific_Experiment_Payload 无容器材料实验柜]中已开展多项关键研究项目,目前正在进行的项目包括[Scientific_Experiment_Project 偏晶合金壳/核型结构及弥散型组织形成机理研究]、[Scientific_Experiment_Project 空间站静电悬浮复相合金相选择与无容器制备研究]等,这些研究成果未来将会在许多领域发挥重要作用。 In the past two years, a number of key research projects have been carried out in the [Scientific_Experiment_Payload containerless material experimental cabinet], and the current projects include [Scientific_Experiment_Project the study of monotectic alloy shell/karyotype structure and the formation mechanism of dispersed tissue], [Scientific_Experiment_Project the study of the selection of electrostatic suspension complex alloys and containerless preparation in the space station], etc. These research results will play an important role in many fields in the future. |

| SSuieBERT | 两年以来,[Scientific_Experiment_Payload 无容器材料实验柜]中已开展多项关键研究项目,目前正在进行的项目包括偏晶合金壳/核型结构及[Scientific_Experiment_Project 弥散型组织形成机理研究]、[Space_Mission 空间站] [Scientific_domian 静电悬浮]复相合金相选择与[Scientific_Experiment_Project 无容器制备研究]等,这些研究成果未来将会在许多领域发挥重要作用。 In the past two years, a number of key research projects have been carried out in the [Scientific_Experiment_Payload containerless material experimental cabinet], and the current projects include the study of monotectic alloy shell/karyotype structure and [Scientific_Experiment_Project the formation mechanism of dispersed tissue], the study of the selection of [Scientific_domian electrostatic suspension] complex alloys and [Scientific_Experiment_Project containerless preparation] in the [Space_Mission space station], etc. These research results will play an important role in many fields in the future. |

| SciBERT | 两年以来,[Scientific_Experiment_Payload 无容器] [Scientific_Domain 材料] [Scientific_Experiment_Payload 实验柜]中已开展多项关键研究项目,目前正在进行的项目包括[Scientific_Domain 偏晶合金壳]/核型结构及弥散型[Scientific_Domain 组织形成机理]研究、[Space_Mission 空间站]静电悬浮复相合金相选择与[Scientific_Experiment_Payload 无容器]制备研究等,这些研究成果未来将会在许多领域发挥重要作用。 In the past two years, a number of key research projects have been carried out in the [Scientific_Experiment_Payload containerless] [Scientific_Domain material] [Scientific_Experiment_Payload experimental cabinet], and the current projects include the study of [Scientific_Domain monotectic alloy shell]/karyotype structure and [Scientific_Domain the formation mechanism of dispersed tissue], the study of the selection of electrostatic suspension complex alloys and [Scientific_Experiment_Payload containerless] preparation in the [Space_Mission space station], etc. These research results will play an important role in many fields in the future. |

| BERT | 两年以来,[Scientific_Experiment_Payload无容器] [Scientific_Domain材料] [Scientific_Experiment_Payload实验柜]中已开展多项关键研究项目,目前正在进行的项目包括偏晶合金壳/核型结构及弥散型组织形成机理研究、[Space_Mission空间站]静电悬浮复相合金相选择与[Scientific_Experiment_Payload无容器]制备研究等,这些研究成果未来将会在许多领域发挥重要作用。 In the past two years, a number of key research projects have been carried out in the [Scientific_Experiment_Payload containerless] [Scientific_Domain material] [Scientific_Experiment_Payload experimental cabinet], and the current projects include the study of monotectic alloy shell/karyotype structure and the formation mechanism of dispersed tissue, the study of the selection of electrostatic suspension complex alloys and [Scientific_Experiment_Payload containerless] preparation in the [Space_Mission space station], etc. These research results will play an important role in many fields in the future. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, Y.; Li, S.; Deng, Y.; Hao, S.; Wang, L. SSuieBERT: Domain Adaptation Model for Chinese Space Science Text Mining and Information Extraction. Electronics 2024, 13, 2949. https://doi.org/10.3390/electronics13152949

Liu Y, Li S, Deng Y, Hao S, Wang L. SSuieBERT: Domain Adaptation Model for Chinese Space Science Text Mining and Information Extraction. Electronics. 2024; 13(15):2949. https://doi.org/10.3390/electronics13152949

Chicago/Turabian StyleLiu, Yunfei, Shengyang Li, Yunziwei Deng, Shiyi Hao, and Linjie Wang. 2024. "SSuieBERT: Domain Adaptation Model for Chinese Space Science Text Mining and Information Extraction" Electronics 13, no. 15: 2949. https://doi.org/10.3390/electronics13152949

APA StyleLiu, Y., Li, S., Deng, Y., Hao, S., & Wang, L. (2024). SSuieBERT: Domain Adaptation Model for Chinese Space Science Text Mining and Information Extraction. Electronics, 13(15), 2949. https://doi.org/10.3390/electronics13152949