A Lightweight Convolutional Spiking Neural Network for Fires Detection Based on Acoustics

Abstract

1. Introduction

- The proposed CSNN combines the sensitivity of spiking neurons to temporal dynamics with the spatial feature-extraction capability of convolutional operations. This combination improves the accuracy of acoustic fire detection in noisy real-world environments.

- The study introduces a specialized convolutional encoder within the CSNN framework that converts acoustic inputs into spike-coded representations through learnable parameters. Because the encoding is learned rather than fixed, it offers a more robust and adaptive solution for acoustic fire detection.

- The study presents a spike-based computing method with a lightweight design, low time complexity, and high energy efficiency, which makes it well suited to fire detection on the edge hardware of remote surveillance systems.
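The encoder is described above only at a high level. The layer table later in this document lists BatchNormLayer, Conv_L1 and an LIF node in sequence, with the time dimension T first appearing at the LIF node. That is consistent with direct encoding: the learnable convolution runs once per clip, and its output is fed to LIF neurons as a constant input current for T time steps. The NumPy sketch below follows that reading. It is not the authors' code, and the untrained random weights, T = 4, decay factor beta = 0.9, threshold 1.0, subtractive reset, and the per-clip standardization standing in for BatchNorm are all assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv2d_valid(x, weight, bias):
    """'Valid' 2-D cross-correlation of a single-channel input.

    x: (H, W); weight: (kh, kw, C_out); bias: (C_out,) -> (H - kh + 1, W - kw + 1, C_out)
    """
    kh, kw, _ = weight.shape
    windows = sliding_window_view(x, (kh, kw))              # (H-kh+1, W-kw+1, kh, kw)
    return np.einsum("ijkl,klc->ijc", windows, weight) + bias


def lif_encode(current, num_steps=4, beta=0.9, threshold=1.0):
    """Drive LIF neurons with a constant input current; return binary spikes (T, ...).

    beta, threshold and the subtractive reset are assumed values, not the paper's.
    """
    mem = np.zeros_like(current, dtype=np.float64)
    spikes = []
    for _ in range(num_steps):
        mem = beta * mem + current                          # leaky integration
        spk = (mem >= threshold).astype(np.float32)         # fire on threshold crossing
        mem = mem - spk * threshold                         # subtractive reset
        spikes.append(spk)
    return np.stack(spikes)


rng = np.random.default_rng(0)
log_mel = rng.normal(size=(251, 64))                        # stand-in for one log-mel clip
x = (log_mel - log_mel.mean()) / (log_mel.std() + 1e-5)     # assumed stand-in for BatchNorm
weight = rng.normal(scale=0.2, size=(5, 5, 8))              # hypothetical untrained 5x5 kernels, 8 channels
bias = np.zeros(8)
spikes = lif_encode(conv2d_valid(x, weight, bias))
print(spikes.shape)                 # (4, 247, 60, 8)
print(weight.size + bias.size)      # 208, the count listed for Conv_L1
```

Because the encoder output is a binary spike tensor, the next layer only needs to add up the weights of inputs that fired rather than perform full multiply-accumulate operations. This is the usual basis for the efficiency claim in the third point.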

2. Methodology

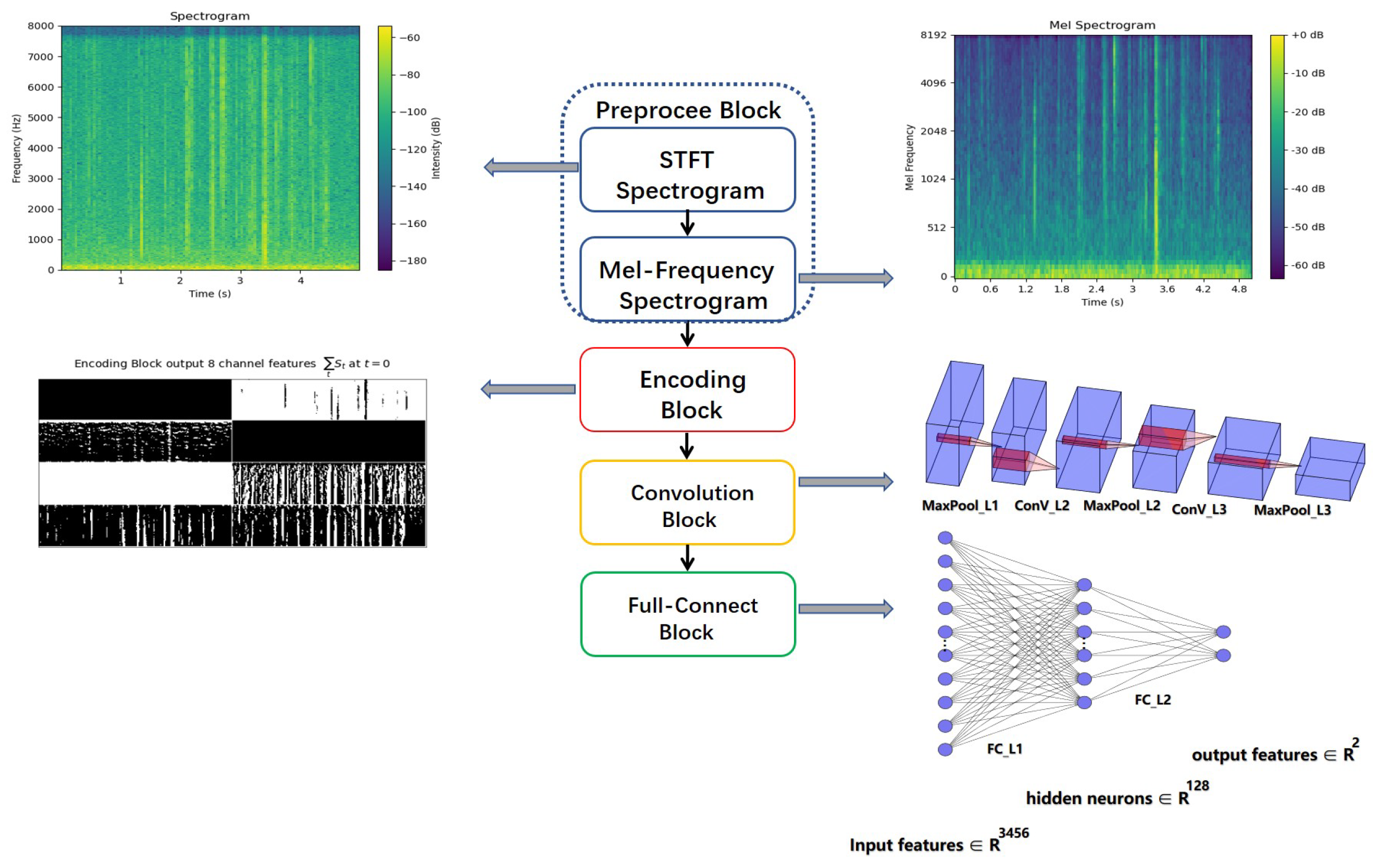

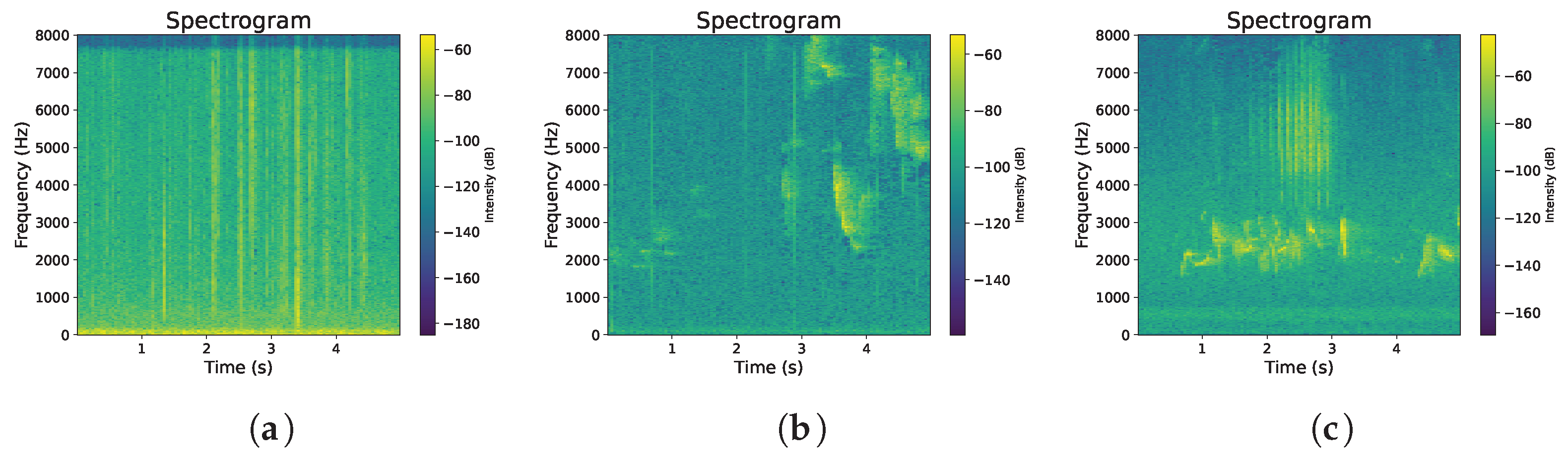

2.1. Preprocess Block

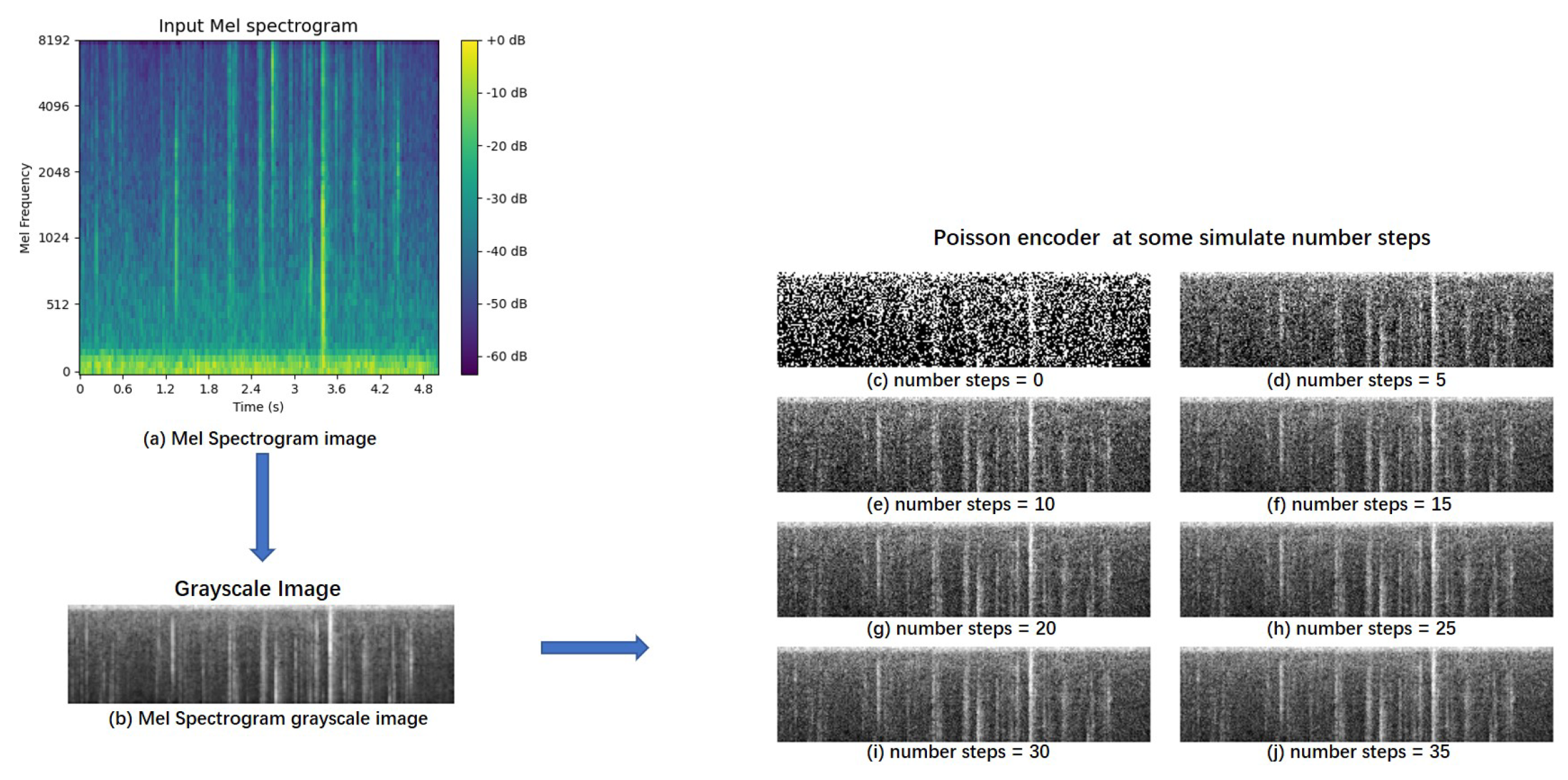

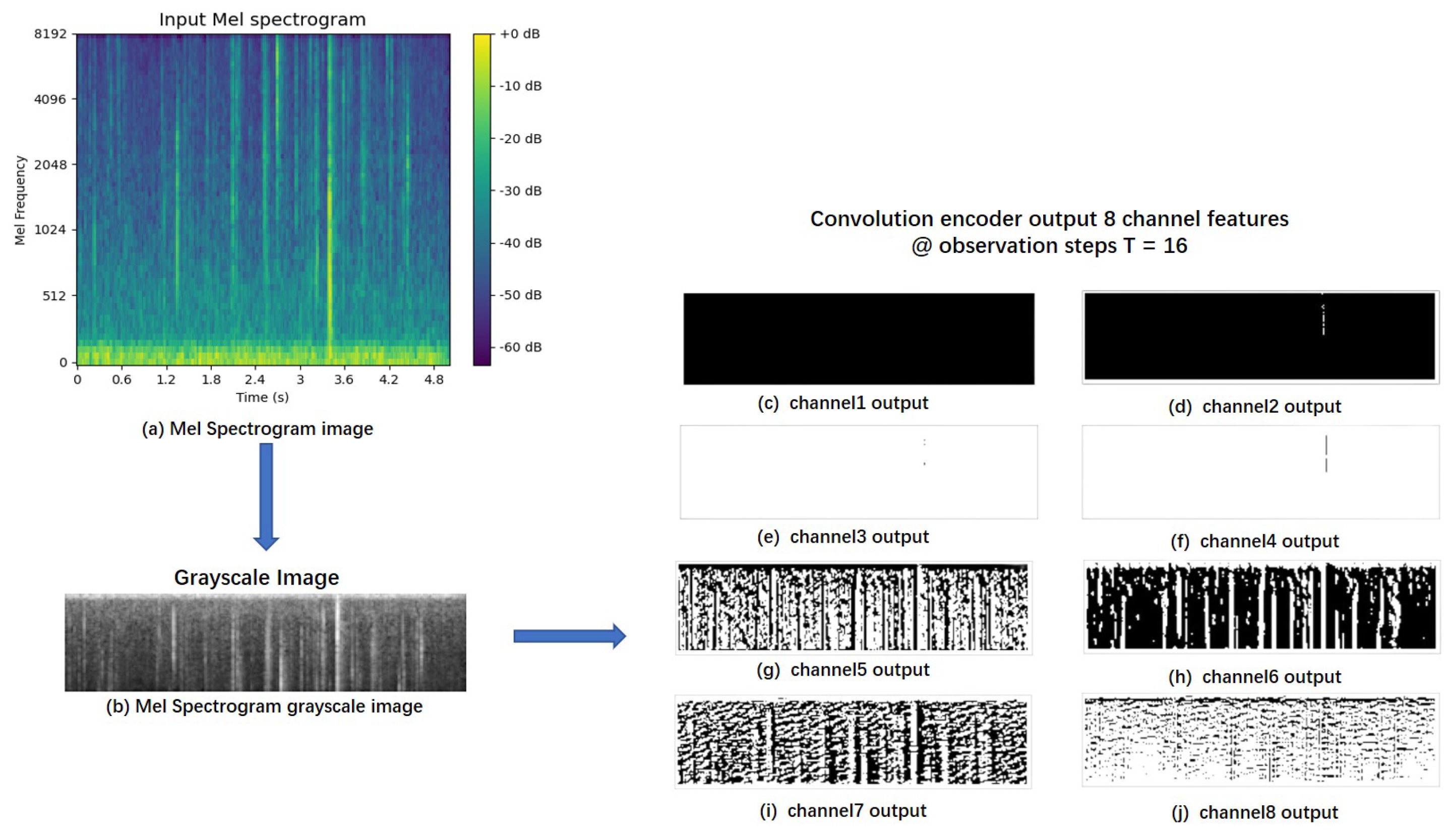

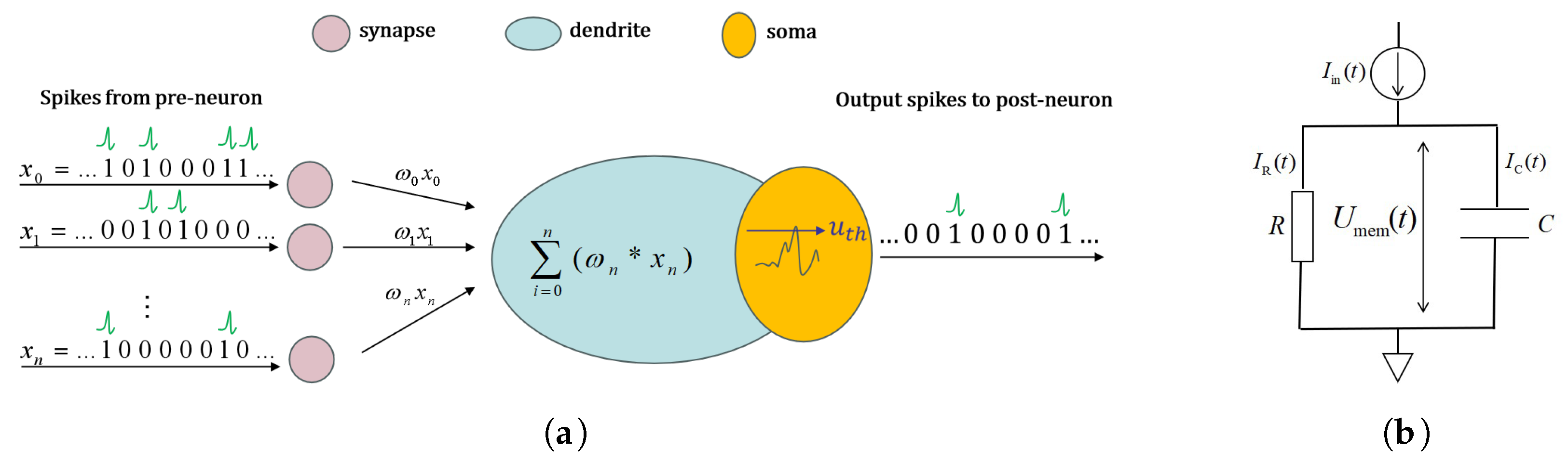

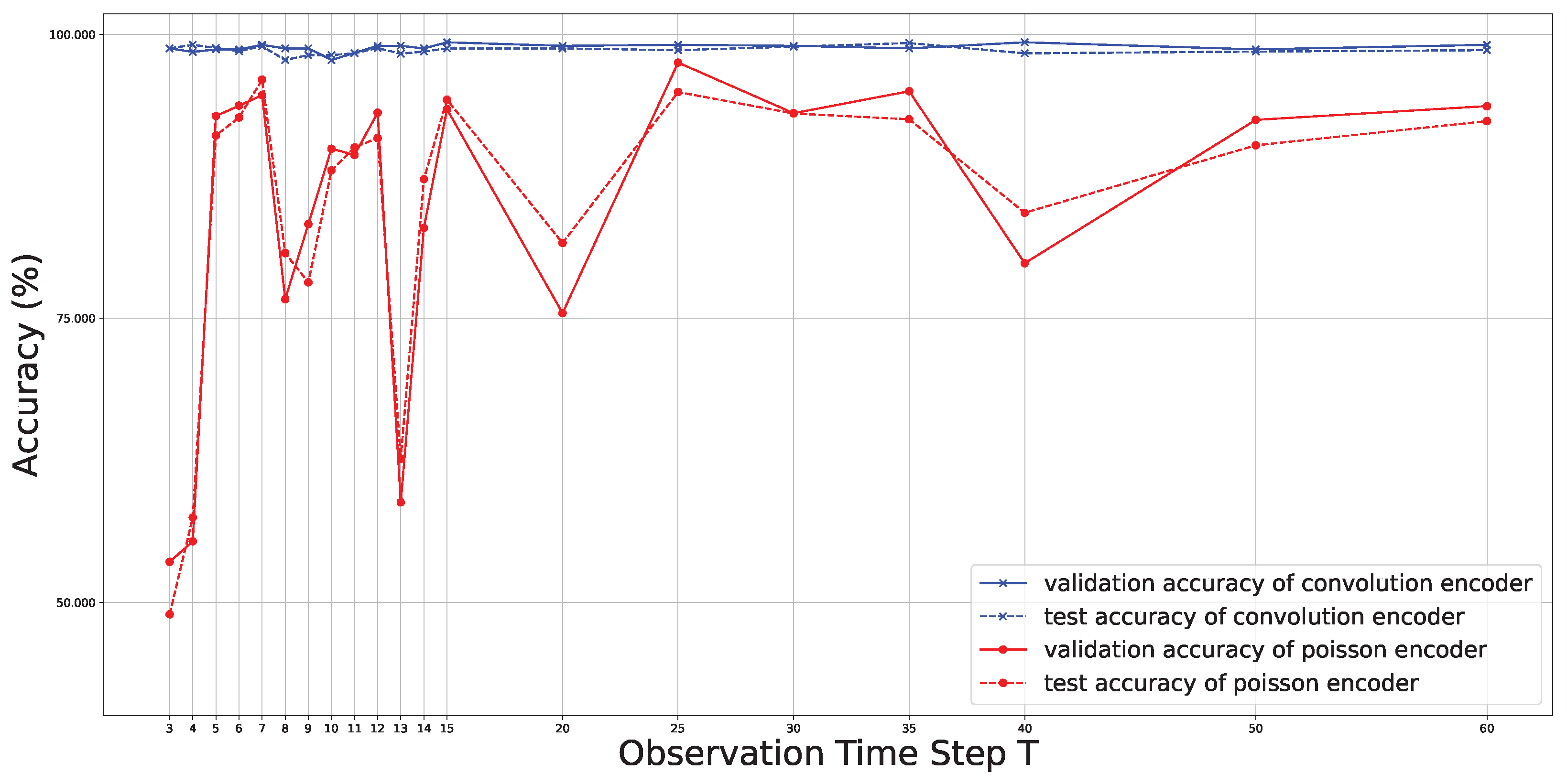

2.2. Encoding Block

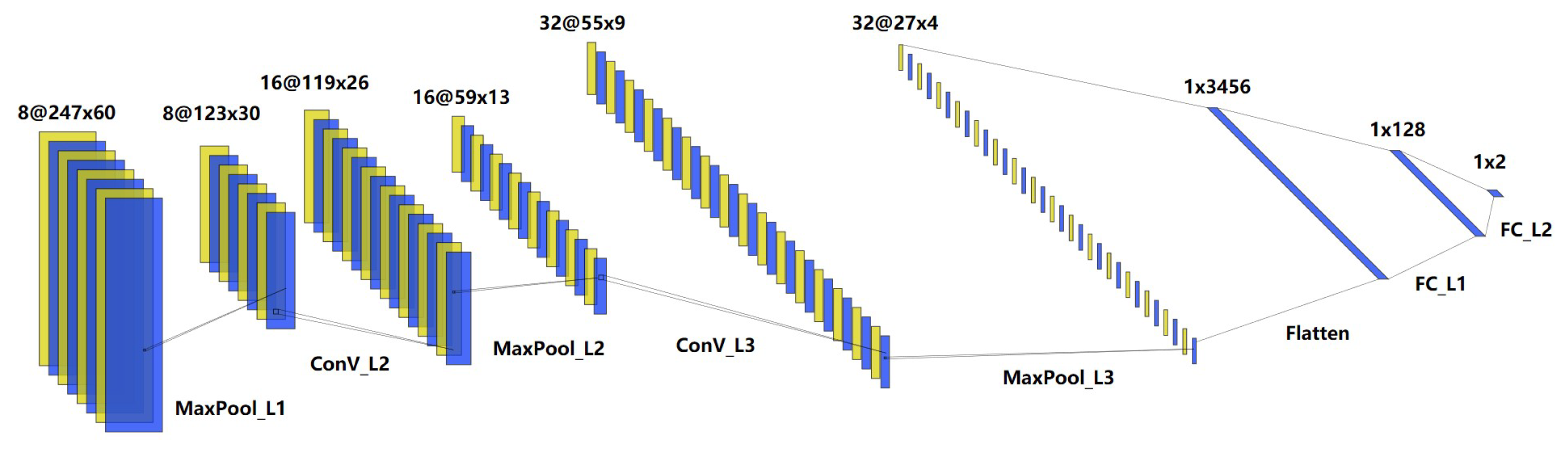

2.3. Convolutional Block

2.4. Fully Connected Block

2.5. Leaky Integrate-and-Fire Neuron Model

3. Experiments

3.1. Datasets

3.2. Experimental Configuration

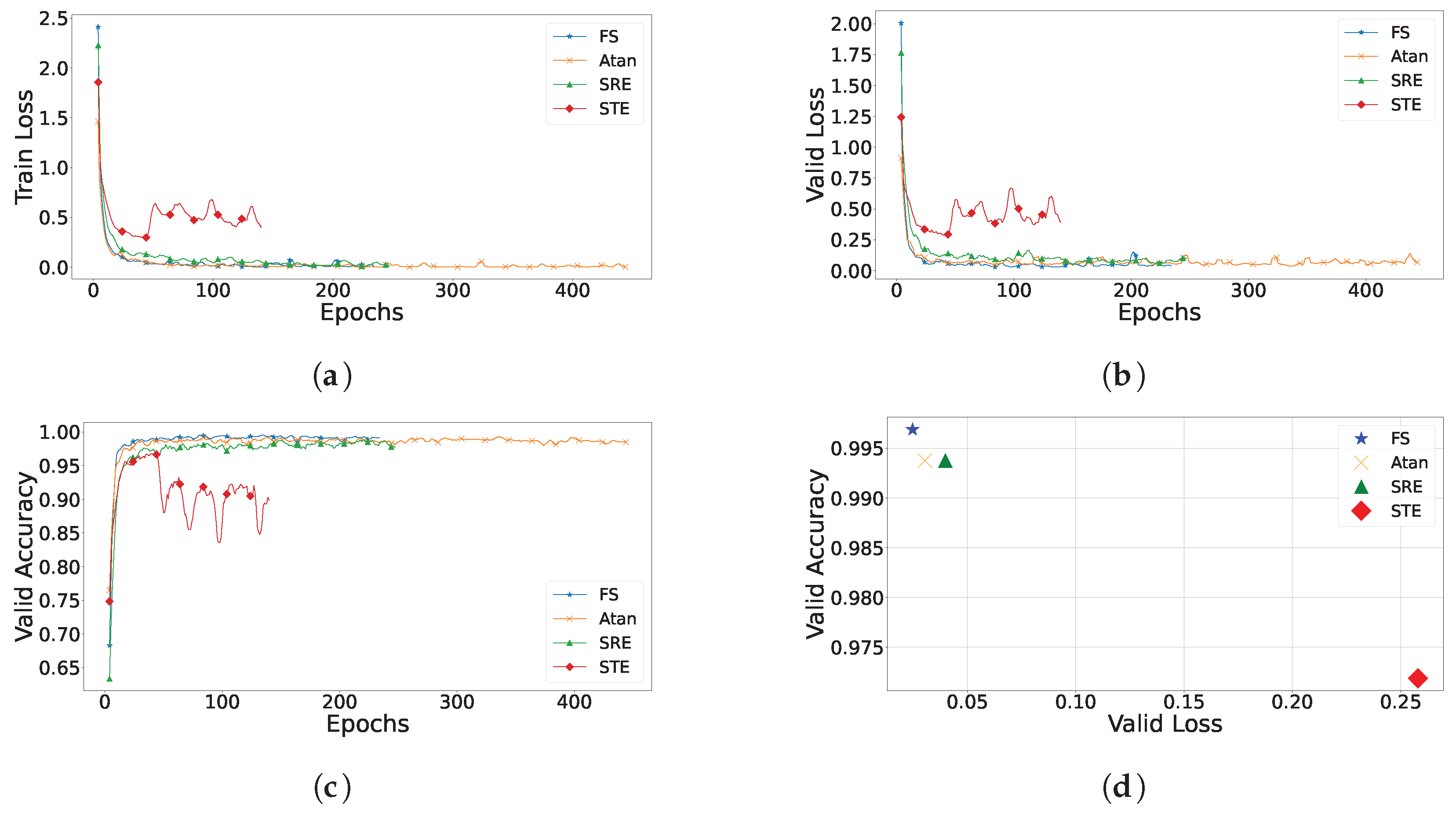

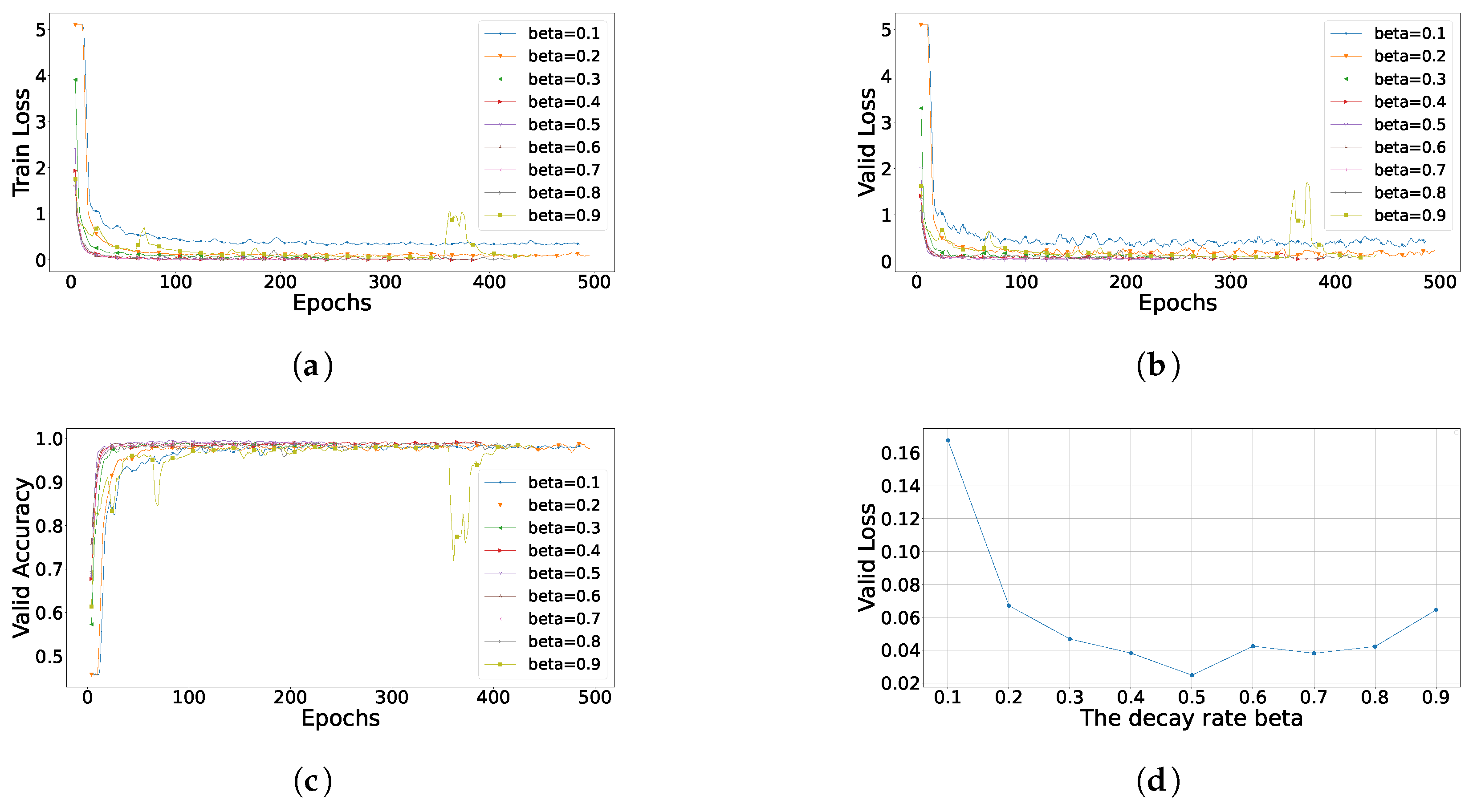

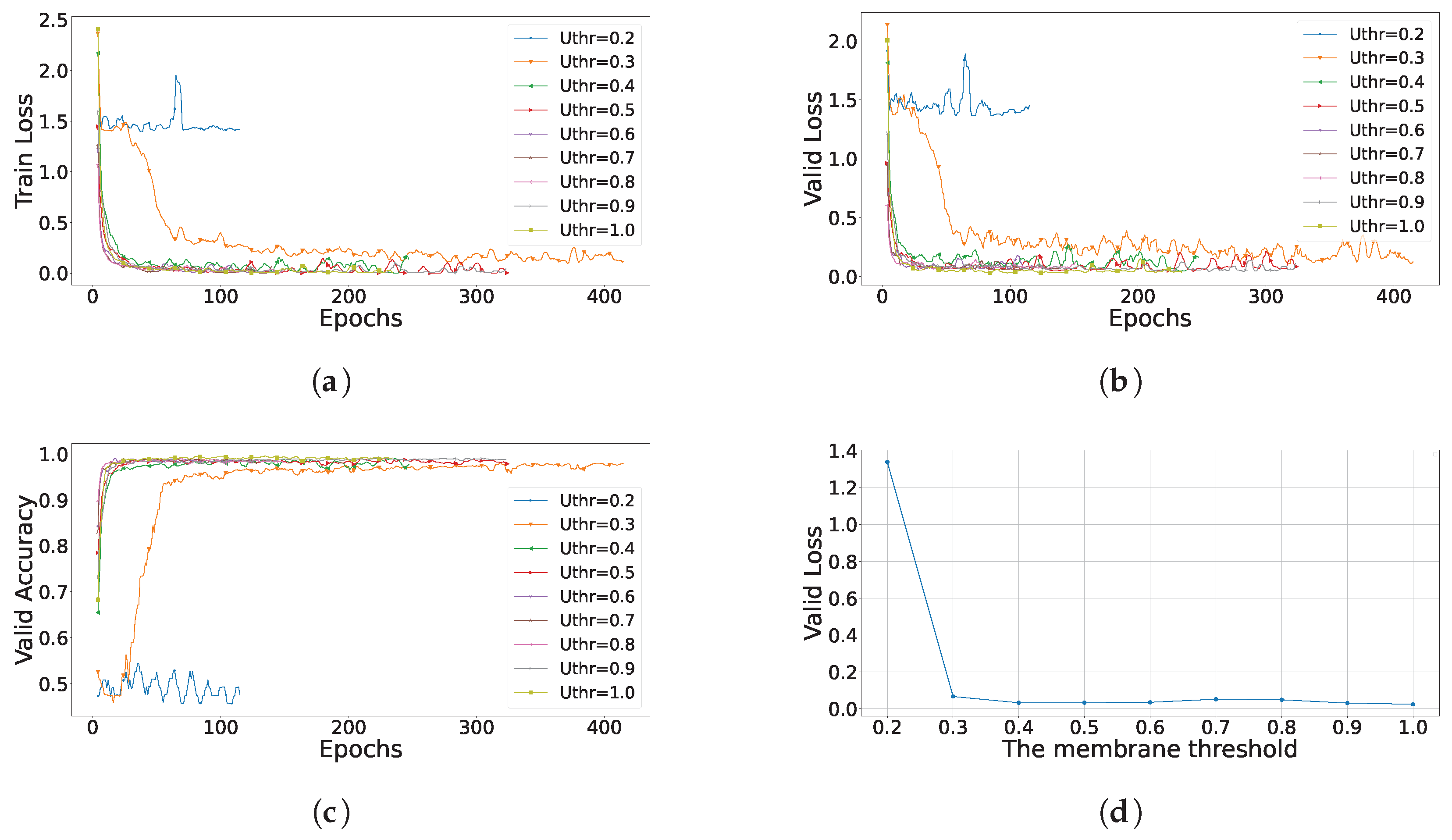

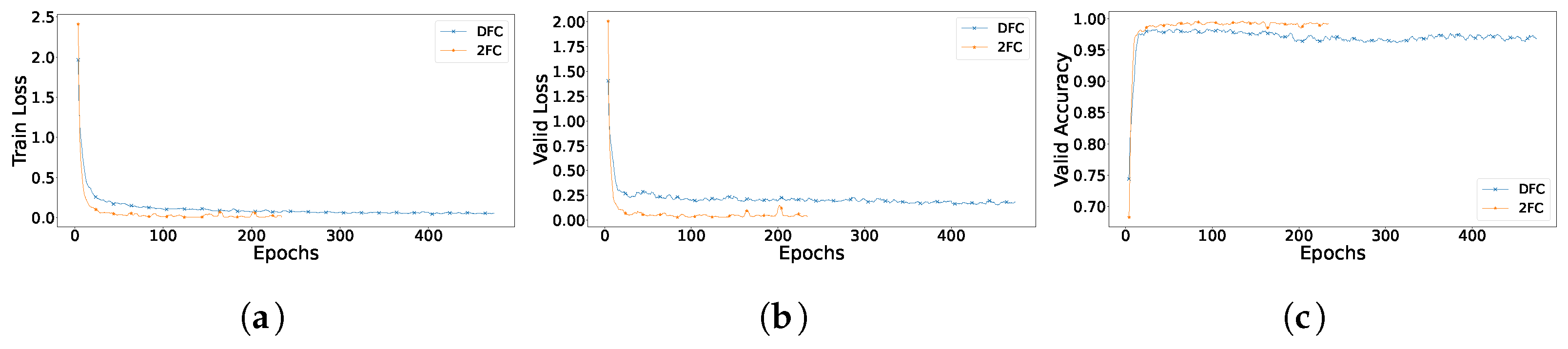

3.3. Training and Ablation Study

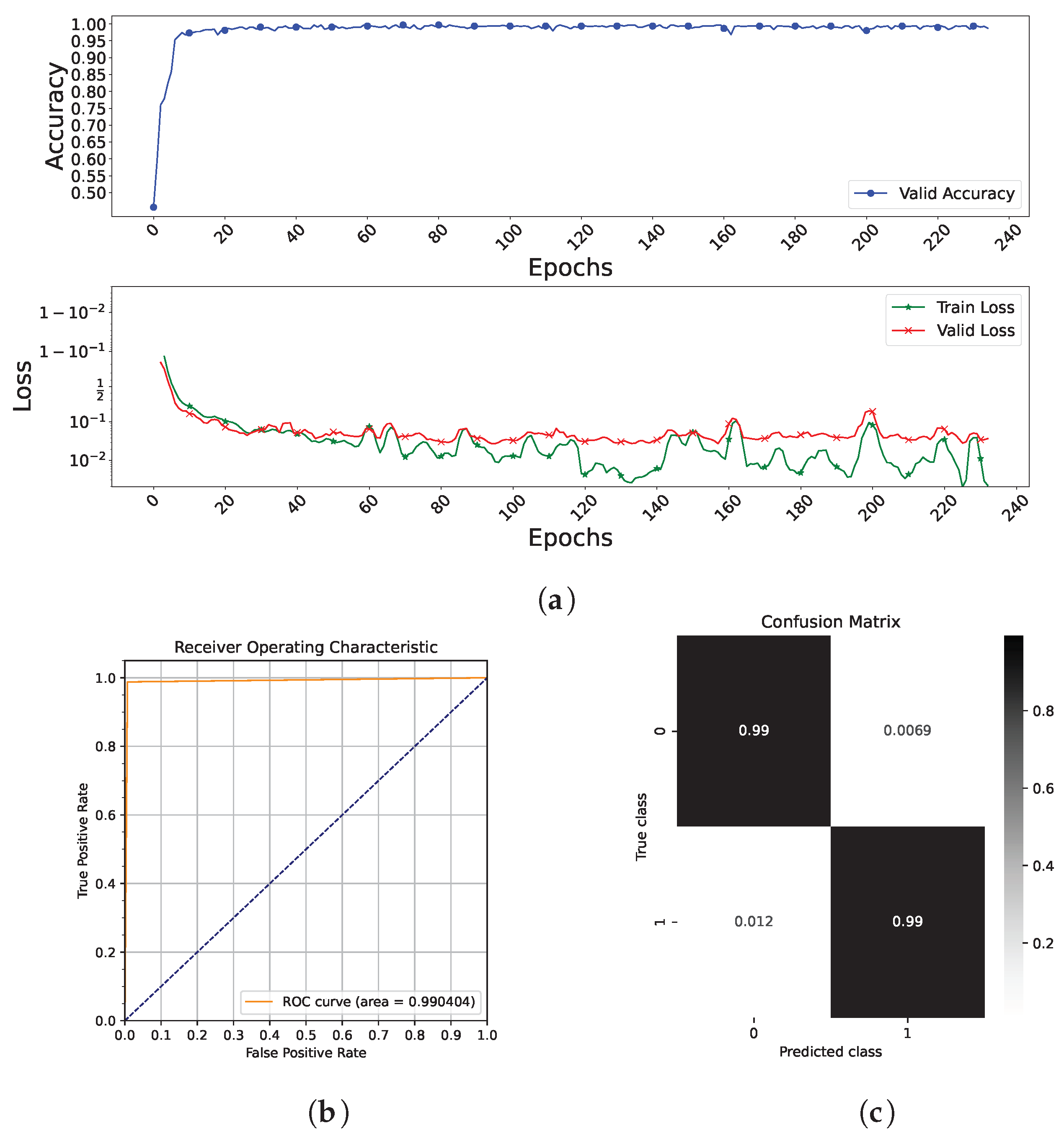

4. Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Khan, F.; Xu, Z.; Sun, J.; Khan, F.M.; Ahmed, A.; Zhao, Y. Recent advances in sensors for fire detection. Sensors 2022, 22, 3310.

2. Martinsson, J.; Runefors, M.; Frantzich, H.; Glebe, D.; McNamee, M.; Mogren, O. A novel method for smart fire detection using acoustic measurements and machine learning: Proof of concept. Fire Technol. 2022, 58, 3385–3403.

3. Festag, S. False alarm ratio of fire detection and fire alarm systems in Germany—A meta analysis. Fire Saf. J. 2016, 79, 119–126. Available online: https://www.sciencedirect.com/science/article/pii/S0379711215300369 (accessed on 3 May 2023).

4. Ding, Q.; Peng, Z.; Liu, T.; Tong, Q. Building fire alarm system with multi-sensor and information fusion technology based on D-S evidence theory. In Proceedings of the 2014 International Symposium on Computer, Consumer and Control, Taichung, Taiwan, 10–12 June 2014; pp. 906–909.

5. Zhang, W. Electric fire early warning system of gymnasium building based on multi-sensor data fusion technology. In Proceedings of the 2021 International Conference on Machine Learning and Intelligent Systems Engineering (MLISE), Chongqing, China, 9–11 July 2021; pp. 339–343.

6. Wu, L.; Chen, L.; Hao, X. Multi-sensor data fusion algorithm for indoor fire early warning based on BP neural network. Information 2021, 12, 59.

7. Liu, P.; Xiang, P.; Lu, D. A new multi-sensor fire detection method based on LSTM networks with environmental information fusion. Neural Comput. Appl. 2023, 35, 25275–25289.

8. Li, J.; Ai, F.; Cai, C.; Xiong, H.; Li, W.; Jiang, X.; Liu, Z. Fire Detecting for Dense Bus Ducts Based on Data Fusion. Energy Rep. 2023, 9, 361–369. Available online: https://www.sciencedirect.com/science/article/pii/S2352484723008995 (accessed on 12 March 2024).

9. Viegas, D.X.; Pita, L.P.; Nielsen, F.; Haddad, K.; Tassini, C.C.; D’Altrui, G.; Quaranta, V.; Dimino, I.; Tsangaris, H. Acoustic characterization of a forest fire event. In Proceedings of the SPIE—The International Society for Optical Engineering, Incheon, Republic of Korea, 13–14 October 2008; Volume 119, pp. 374–385.

10. Khamukhin, A.A.; Bertoldo, S. Spectral analysis of forest fire noise for early detection using wireless sensor networks. In Proceedings of the 2016 International Siberian Conference on Control and Communications (SIBCON), Moscow, Russia, 12–14 May 2016.

11. Chwalek, P.; Chen, H.; Dutta, P.; Dimon, J.; Singh, S.; Chiang, C.; Azwell, T. Downwind fire and smoke detection during a controlled burn—Analyzing the feasibility and robustness of several downwind wildfire sensing modalities through real world applications. Fire 2023, 6, 356.

12. Thomas, A.; Williams, G.T. Flame noise: Sound emission from spark-ignited bubbles of combustible gas. Proc. R. Soc. Lond. Ser. A Math. Phys. Sci. 1966, 294, 449–466.

13. Grosshandler, W.; Jackson, M. Acoustic emission of structural materials exposed to open flames. Fire Saf. J. 1994, 22, 209–228.

14. Wang, M.; Wu, J.B.; Li, C.H.; Luo, W.; Zhang, L.W. Transformer fire identification method based on multi-neural network and evidence theory. In Proceedings of the 2020 IEEE 9th Joint International Information Technology and Artificial Intelligence Conference (ITAIC), Chongqing, China, 11–13 December 2020.

15. Bedard, A.J.; Nishiyama, R.T. Infrasound generation by large fires: Experimental results and a review of an analytical model predicting dominant frequencies. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Toronto, ON, Canada, 24–28 June 2002.

16. Sonkin, M.A.; Khamukhin, A.A.; Pogrebnoy, A.V.; Marinov, P.; Atanassova, V.; Roeva, O.; Atanassov, K.; Alexandrov, A. Intercriteria Analysis as Tool for Acoustic Monitoring of Forest for Early Detection Fires; Atanassov, K.T., Atanassova, V., Kacprzyk, J., Kaluszko, A., Krawczak, M., Owsinski, J.W., Sotirov, S., Sotirova, E., Szmidt, E., Zadrozny, S., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2021; pp. 205–213.

17. Khamukhin, A.A.; Demin, A.Y.; Sonkin, D.M.; Bertoldo, S.; Perona, G.; Kretova, V. An algorithm of the wildfire classification by its acoustic emission spectrum using wireless sensor networks. J. Phys. Conf. Ser. 2017, 803, 012067.

18. Zhang, S.; Gao, D.; Lin, H.; Sun, Q. Wildfire detection using sound spectrum analysis based on the internet of things. Sensors 2019, 19, 5093.

19. Kong, Q.; Cao, Y.; Iqbal, T.; Wang, Y.; Wang, W.; Plumbley, M.D. PANNs: Large-scale pretrained audio neural networks for audio pattern recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2020, 28, 2880–2894.

20. Huang, H.-T.; Downey, A.R.J.; Bakos, J.D. Audio-based wildfire detection on embedded systems. Electronics 2022, 11, 1417.

21. Peruzzi, G.; Pozzebon, A.; Van Der Meer, M. Fight fire with fire: Detecting forest fires with embedded machine learning models dealing with audio and images on low power IoT devices. Sensors 2023, 23, 783.

22. Lee, B.-J.; Lee, M.-S.; Jung, W.-S. Acoustic based fire event detection system in underground utility tunnels. Fire 2023, 6, 211.

23. Zeng, Y.; Zhao, D.; Zhao, F.; Shen, G.; Dong, Y.; Lu, E.; Zhang, Q.; Sun, Y.; Liang, Q.; Zhao, Y. BrainCog: A spiking neural network based, brain-inspired cognitive intelligence engine for brain-inspired AI and brain simulation. Patterns 2023, 4, 100789.

24. Yu, Q.; Yan, R.; Tang, H.; Tan, K.C.; Li, H. A spiking neural network system for robust sequence recognition. IEEE Trans. Neural Netw. Learn. Syst. 2015, 27, 621–635.

25. Agarwal, R.; Ghosal, P.; Murmu, N.; Nandi, D. Spiking Neural Network in Computer Vision: Techniques, Tools and Trends; Borah, S., Gandhi, T.K., Piuri, V., Eds.; Springer Nature: Singapore, 2023; pp. 201–209.

26. Lv, C.; Xu, J.; Zheng, X. Spiking convolutional neural networks for text classification. arXiv 2024, arXiv:2406.19230.

27. Xu, Q.; Qi, Y.; Yu, H.; Shen, J.; Tang, H.; Pan, G. CSNN: An augmented spiking based framework with perceptron-inception. IJCAI 2018, 1646, 1–7.

28. Xiao, R.; Yan, R.; Tang, H.; Tan, K.C. A spiking neural network model for sound recognition. In Cognitive Systems and Signal Processing; Sun, F., Liu, H., Hu, D., Eds.; Springer: Singapore, 2017; pp. 584–594.

29. Zeng, Y.; Zhang, T.; Xu, B. Improving multi-layer spiking neural networks by incorporating brain-inspired rules. Sci. China Inf. Sci. 2017, 60, 052201.

30. Zhang, T.; Zeng, Y.; Zhao, D.; Wang, L.; Xu, B. HMSNN: Hippocampus inspired memory spiking neural network. In Proceedings of the 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016.

31. Cheng, X.; Hao, Y.; Xu, J.; Xu, B. LISNN: Improving spiking neural networks with lateral interactions for robust object recognition. In Proceedings of the International Joint Conferences on Artificial Intelligence Organization, Online, 7–8 January 2020.

32. Wang, Z.; Wang, Z.; Li, H.; Qin, L.; Jiang, R.; Ma, D.; Tang, H. EAS-SNN: End-to-end adaptive sampling and representation for event-based detection with recurrent spiking neural networks. arXiv 2024, arXiv:2403.12574.

33. Han, B.; Roy, K. Deep spiking neural network: Energy efficiency through time based coding. In Computer Vision—ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 388–404.

34. Yamazaki, K.; Vo-Ho, V.-K.; Bulsara, D.; Le, N. Spiking neural networks and their applications: A review. Brain Sci. 2022, 12, 863.

35. Garg, I.; Chowdhury, S.S.; Roy, K. DCT-SNN: Using DCT to distribute spatial information over time for low-latency spiking neural networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 4671–4680.

36. Rathi, N.; Roy, K. DIET-SNN: A low-latency spiking neural network with direct input encoding and leakage and threshold optimization. IEEE Trans. Neural Netw. Learn. Syst. 2021, 34, 3174–3182.

37. Wu, Z.; Zhang, H.; Lin, Y.; Li, G.; Wang, M.; Tang, Y. LIAF-Net: Leaky integrate and analog fire network for lightweight and efficient spatiotemporal information processing. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6249–6262.

38. Peruzzi, G.; Pozzebon, A.; Van Der Meer, M. Test Video. 2022. Available online: https://drive.google.com/file/d/1Hi2gs4mkrFibULaHfVDzgJZgVaVUYf6L/view?usp=share_link (accessed on 1 May 2024).

39. Fonseca, E.; Favory, X.; Pons, J.; Font, F.; Serra, X. FSD50K: An open dataset of human-labeled sound events. IEEE/ACM Trans. Audio Speech Lang. Process. 2022, 30, 829–852.

40. Piczak, K.J. ESC: Dataset for environmental sound classification. In Proceedings of the 23rd ACM International Conference on Multimedia, Ser. MM ’15, Brisbane, Australia, 26–30 October 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 1015–1018.

41. Eshraghian, J.K.; Ward, M.; Neftci, E.; Wang, X.; Lenz, G.; Dwivedi, G.; Bennamoun, M.; Jeong, D.S.; Lu, W.D. Training spiking neural networks using lessons from deep learning. Proc. IEEE 2023, 111, 1016–1054.

42. Jiang, X.; Xie, H.; Lu, Z.; Hu, J. Energy-efficient and high-performance ship classification strategy based on siamese spiking neural network in dual-polarized SAR images. Remote Sens. 2023, 15, 4966.

43. Rathi, N.; Srinivasan, G.; Panda, P.; Roy, K. Enabling deep spiking neural networks with hybrid conversion and spike timing dependent backpropagation. arXiv 2020, arXiv:2005.01807.

44. Dasbach, S.; Tetzlaff, T.; Diesmann, M.; Senk, J. Dynamical Characteristics of Recurrent Neuronal Networks Are Robust against Low Synaptic Weight Resolution. Front. Neurosci. 2021, 15, 757790. Available online: https://www.frontiersin.org/journals/neuroscience/articles/10.3389/fnins.2021.757790 (accessed on 16 April 2024).

| Parameter | Value |
|---|---|
| Window length (samples) | 1024 |
| Hop length (samples) | 320 |
| Window function | Hanning |
| Mel bins | 64 |
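The table gives the spectrogram settings but not the sample rate. Two values elsewhere in this document constrain it indirectly: the input layer expects 251 frames of 64 mel bins, and the dataset table implies 5 s clips. With centered framing, 1 + (5 × 16,000) / 320 = 251, so a 16 kHz sample rate is consistent with both, although that is an inference rather than a stated setting. The NumPy sketch below computes a log-mel spectrogram under these settings. The 16 kHz rate, the HTK mel formula, the 0 to 8 kHz filter range and the log offset are assumptions, and the authors' implementation may differ in any of them.

```python
import numpy as np

SR = 16_000   # assumed sample rate (inferred, not stated in the table)
N_FFT = 1024  # window length
HOP = 320     # hop length
N_MELS = 64   # mel bins


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)  # HTK mel scale (assumed)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(sr=SR, n_fft=N_FFT, n_mels=N_MELS, fmin=0.0, fmax=None):
    """Triangular mel filters, shape (n_mels, n_fft // 2 + 1)."""
    fmax = sr / 2 if fmax is None else fmax
    fft_freqs = np.linspace(0.0, sr / 2, n_fft // 2 + 1)
    hz_points = mel_to_hz(np.linspace(hz_to_mel(fmin), hz_to_mel(fmax), n_mels + 2))
    fb = np.zeros((n_mels, fft_freqs.size))
    for m in range(n_mels):
        left, centre, right = hz_points[m], hz_points[m + 1], hz_points[m + 2]
        rising = (fft_freqs - left) / (centre - left)
        falling = (right - fft_freqs) / (right - centre)
        fb[m] = np.maximum(0.0, np.minimum(rising, falling))
    return fb


def log_mel(signal):
    """Centered STFT with a periodic Hann window -> log-mel spectrogram (frames, n_mels)."""
    padded = np.pad(signal, N_FFT // 2, mode="reflect")  # centre each frame on its hop
    n_frames = 1 + (padded.size - N_FFT) // HOP
    window = np.hanning(N_FFT + 1)[:-1]                    # periodic Hann window
    frames = np.stack([padded[i * HOP:i * HOP + N_FFT] * window for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2       # (frames, n_fft // 2 + 1)
    return np.log(power @ mel_filterbank().T + 1e-10)      # small offset avoids log(0)


clip = np.random.default_rng(0).normal(size=5 * SR)       # stand-in for one 5 s recording
print(log_mel(clip).shape)                                 # (251, 64)
```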

| Layer | Type | Output Shape | Learnable Parameters |
|---|---|---|---|
| InputLayer | - | [batch,251,64,1] | 0 |
| BatchNormLayer | Normalization | [batch,251,64,1] | 0 |
| Conv_L1 | Convolutional | [batch,247,60,8] | 208 |
| Neuron Node | LIF | [T,batch,247,60,8] | 0 |
| MaxPool_L1 | Max Pooling | [batch,123,30,8] | 0 |
| Conv_L2 | Convolutional | [batch,119,26,16] | 3216 |
| Neuron Node | LIF | [T,batch,119,26,16] | 0 |
| MaxPool_L2 | Max Pooling | [batch,59,13,16] | 0 |
| Conv_L3 | Convolutional | [batch,55,9,32] | 12,832 |
| Neuron Node | LIF | [T,batch,55,9,32] | 0 |
| MaxPool_L3 | Max Pooling | [batch,27,4,32] | 0 |
| FlattenLayer | Flatten | [batch,1,1,3456] | 0 |
| FC_L1 | Fully Connected | [batch,1,1,128] | 442,496 |
| Neuron Node | LIF | [T,batch,1,1,128] | 0 |
| FC_L2 | Fully Connected | [batch,1,1,2] | 258 |
| Neuron Node | LIF | [T,batch,1,1,2] | 0 |
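The output shapes and parameter counts in the table can be cross-checked with plain arithmetic. The sketch below assumes 5 × 5 kernels with stride 1 and no padding (implied by 251 × 64 becoming 247 × 60), non-overlapping 2 × 2 max pooling with floor division, and a bias in every convolutional and fully connected layer (implied by 5 × 5 × 1 × 8 + 8 = 208 for Conv_L1). LIF nodes and BatchNormLayer are counted as parameter-free, as the table lists them.

```python
def trace_csnn(h=251, w=64, c=1, widths=(8, 16, 32), k=5, pool=2, hidden=128, classes=2):
    """Return per-stage feature-map shapes, the flattened size, and the learnable-parameter total."""
    shapes, total = [], 0
    for c_out in widths:                            # Conv_L1..Conv_L3
        total += k * k * c * c_out + c_out          # conv weights + biases
        h, w, c = h - k + 1, w - k + 1, c_out       # 'valid' conv, stride 1
        shapes.append(("conv", h, w, c))
        h, w = h // pool, w // pool                 # 2x2 max pooling, floor division
        shapes.append(("pool", h, w, c))
    flat = h * w * c                                # FlattenLayer
    total += flat * hidden + hidden                 # FC_L1 weights + biases
    total += hidden * classes + classes             # FC_L2 weights + biases
    return shapes, flat, total


shapes, flat, total = trace_csnn()
for stage in shapes:
    print(stage)
print(flat, total)  # 3456 459010
```

The trace gives 123 × 30 × 8 after MaxPool_L1, 3456 flattened features, and 459,010 learnable parameters in total, which matches the figure reported for the proposed CSNN in the comparison table. Most of them (442,496) belong to FC_L1.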

| Class | Audio Type | Number of Samples | Total Time (s) |
|---|---|---|---|
| Fire | Clean fire | 277 | 1385 |
| | Fire with bird sounds | 271 | 1355 |
| | Fire with various types of noise | 546 | 2730 |
| | Recordings by other researchers | 71 | 355 |
| | Fire with wind sounds | 267 | 1335 |
| | Recordings by the authors | 200 | 1000 |
| No Fire | Noise (bird) | 276 | 1380 |
| | Noise (cricket) | 200 | 1000 |
| | Unknown noise | 400 | 2000 |
| | Noise (rain) | 345 | 1725 |
| | Noise (wind) | 207 | 1035 |
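In every row of the dataset table, the total time is exactly five times the sample count, which suggests fixed-length 5 s clips (an inference, not a value stated in this text). The short check below, with the counts copied from the table in row order, confirms that and totals each class.

```python
# (number of samples, total time in s) per audio type, copied from the table in row order
ROWS = {
    "Fire": [(277, 1385), (271, 1355), (546, 2730), (71, 355), (267, 1335), (200, 1000)],
    "No Fire": [(276, 1380), (200, 1000), (400, 2000), (345, 1725), (207, 1035)],
}


def summarize(rows):
    """Per class: total clips, total seconds, and the set of per-row clip lengths."""
    summary = {}
    for label, entries in rows.items():
        clip_lengths = {seconds / count for count, seconds in entries}
        n_clips = sum(count for count, _ in entries)
        n_seconds = sum(seconds for _, seconds in entries)
        summary[label] = (n_clips, n_seconds, clip_lengths)
    return summary


summary = summarize(ROWS)
for label, (n, t, lengths) in summary.items():
    print(f"{label}: {n} clips, {t} s, clip length(s) {sorted(lengths)}")
```

This gives 1632 fire clips (8160 s) and 1428 no-fire clips (7140 s), so the two classes are roughly balanced.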

| Architecture | Parameters | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|---|
| CNN6 [19] | 4,837,455 | 99.02% | 99.07% | 99.07% | 99.07% |
| CNN10 [19] | 5,219,279 | 99.35% | 99.07% | 99.69% | 99.38% |
| CNN14 [2,19] | 80,753,615 | 99.51% | 100% | 99.07% | 99.53% |
| Proposed CSNN | 459,010 | 99.02% | 99.37% | 98.75% | 99.06% |
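Because F1 is the harmonic mean of precision and recall, the F1 column can be checked against the two columns before it, and the parameter column shows how much smaller the CSNN is than each baseline. The sketch below recomputes both from values copied out of the comparison table.

```python
# Precision (%), recall (%) and parameter count, copied from the comparison table
RESULTS = {
    "CNN6": (99.07, 99.07, 4_837_455),
    "CNN10": (99.07, 99.69, 5_219_279),
    "CNN14": (100.0, 99.07, 80_753_615),
    "Proposed CSNN": (99.37, 98.75, 459_010),
}


def f1(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


csnn_params = RESULTS["Proposed CSNN"][2]
for name, (p, r, params) in RESULTS.items():
    print(f"{name}: F1 = {f1(p, r):.2f}%, parameters = {params / csnn_params:.1f}x the CSNN")
```

The recomputed F1 scores agree with the table to two decimal places. The CSNN has roughly one tenth of CNN6's parameters and under 1% of CNN14's (about 176 times fewer).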

| Measurement | CNN6 | CNN10 | CNN14 | Proposed CSNN |
|---|---|---|---|---|
| Batch1 inference time (s) | 0.6459 | 0.7247 | 0.7914 | 0.0452 |
| Batch2 inference time (s) | 0.6482 | 0.7276 | 0.7953 | 0.0456 |
| Batch3 inference time (s) | 0.6505 | 0.7306 | 0.7993 | 0.0463 |
| Batch4 inference time (s) | 0.6528 | 0.7336 | 0.8030 | 0.0461 |
| Batch5 inference time (s) | 0.6563 | 0.7367 | 0.8067 | 0.0464 |
| Batch6 inference time (s) | 0.6586 | 0.7397 | 0.8107 | 0.047 |
| Batch7 inference time (s) | 0.6611 | 0.7426 | 0.8146 | 0.0493 |
| Batch8 inference time (s) | 0.6634 | 0.7456 | 0.8185 | 0.0477 |
| Batch9 inference time (s) | 0.6660 | 0.7486 | 0.8223 | 0.0498 |
| Batch10 inference time (s) | 0.7021 | 0.8398 | 0.9261 | 0.0759 |
| Average inference time per audio clip (s) | 0.0103 | 0.012 | 0.0132 | 0.0007 |
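The per-batch rows of the inference-time table can be summarized by their means. The sketch below copies the ten batch times for each model and compares each model's mean batch time with the CSNN's. The per-clip row is not recomputed, because the batch size is not stated in this text.

```python
from statistics import mean

# Per-batch inference times (s), Batch1..Batch10, copied from the inference-time table
TIMES = {
    "CNN6": [0.6459, 0.6482, 0.6505, 0.6528, 0.6563, 0.6586, 0.6611, 0.6634, 0.6660, 0.7021],
    "CNN10": [0.7247, 0.7276, 0.7306, 0.7336, 0.7367, 0.7397, 0.7426, 0.7456, 0.7486, 0.8398],
    "CNN14": [0.7914, 0.7953, 0.7993, 0.8030, 0.8067, 0.8107, 0.8146, 0.8185, 0.8223, 0.9261],
    "Proposed CSNN": [0.0452, 0.0456, 0.0463, 0.0461, 0.0464, 0.047, 0.0493, 0.0477, 0.0498, 0.0759],
}

csnn_mean = mean(TIMES["Proposed CSNN"])
for name, times in TIMES.items():
    m = mean(times)
    print(f"{name}: mean batch time {m:.4f} s, {m / csnn_mean:.1f}x the CSNN")
```

The CSNN's mean batch time is about 0.050 s, roughly 13 to 16 times lower than that of the CNN baselines. For every model, Batch10 is the slowest batch.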

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, X.; Liu, Y.; Zheng, L.; Zhang, W. A Lightweight Convolutional Spiking Neural Network for Fires Detection Based on Acoustics. Electronics 2024, 13, 2948. https://doi.org/10.3390/electronics13152948


Chicago/Turabian style: Li, Xiaohuan, Yi Liu, Libo Zheng, and Wenqiong Zhang. 2024. "A Lightweight Convolutional Spiking Neural Network for Fires Detection Based on Acoustics" Electronics 13, no. 15: 2948. https://doi.org/10.3390/electronics13152948
