Fast Noise Level Estimation via the Similarity within and between Patches

Abstract

1. Introduction

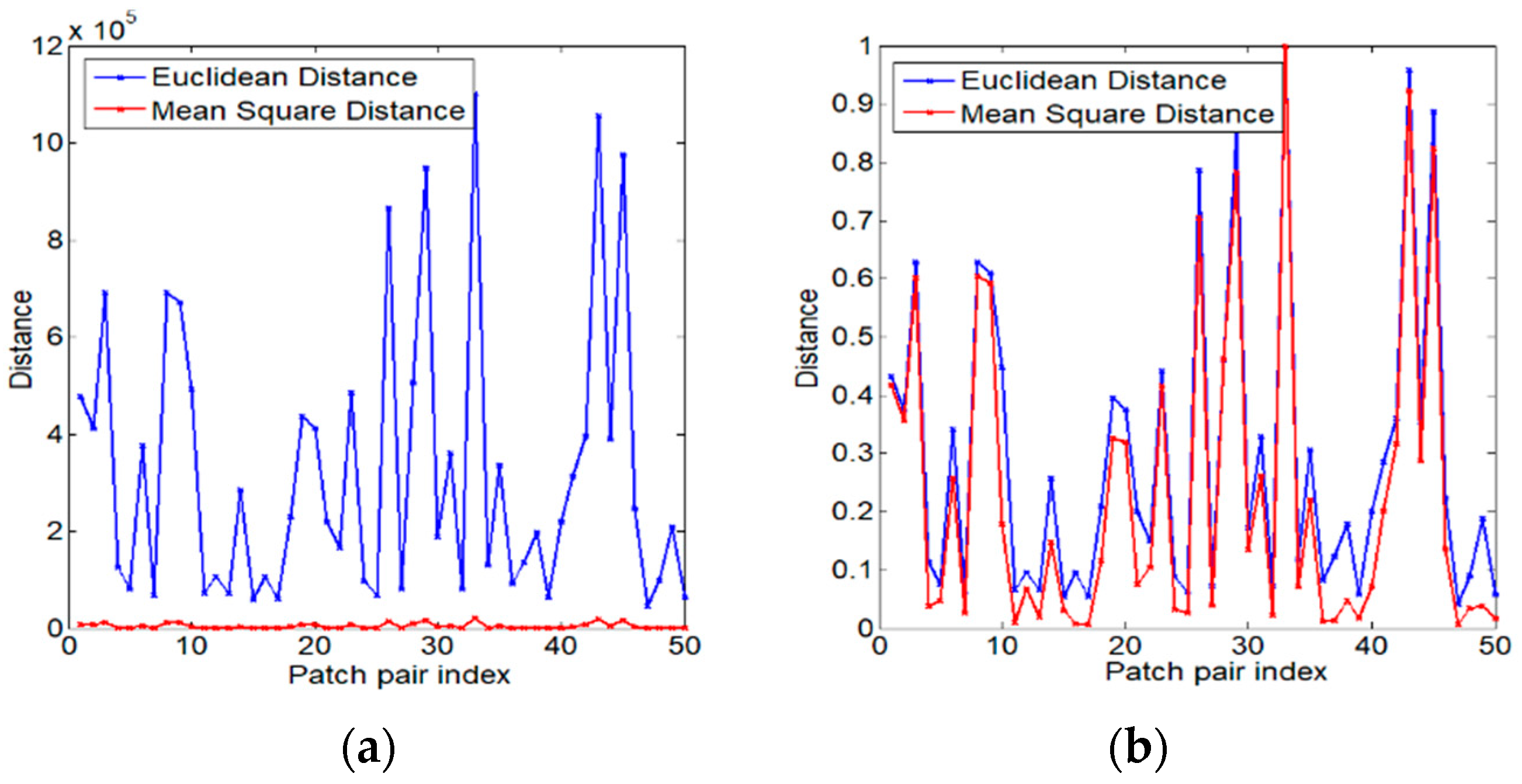

- We define the mean square distance (MSD) between image patches, prove that it is more accurate than the Euclidean distance, and show that it can be expressed in terms of the means and standard deviations (std) of the patches;

- We propose a pixel-level method that selects similar pixels for fast noise level estimation (NLE), based on a 2D statistical histogram and a summed-area table;

- We propose correcting the initial estimate by re-injecting noise, which yields more accurate NLE.

2. The Proposed Method

2.1. Mean Square Distance
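The contribution list states that the MSD can be expressed through the patch means and standard deviations. The body of this section is not reproduced here, so the sketch below rests on an assumption: that the MSD averages the squared difference over every pixel pair drawn from the two patches. With population statistics, that average reduces to (μx − μy)² + σx² + σy². The function names are hypothetical.

```python
import math
import random


def mean_std(patch):
    """Population mean and standard deviation of a flattened patch."""
    n = len(patch)
    mu = sum(patch) / n
    return mu, math.sqrt(sum((v - mu) ** 2 for v in patch) / n)


def msd_pairwise(x, y):
    """Mean of (x_i - y_j)^2 over all pixel pairs: the direct O(|x| * |y|) form."""
    return sum((a - b) ** 2 for a in x for b in y) / (len(x) * len(y))


def msd_from_stats(x, y):
    """Same quantity from means and stds only (assumed reading of the MSD)."""
    mx, sx = mean_std(x)
    my, sy = mean_std(y)
    return (mx - my) ** 2 + sx ** 2 + sy ** 2


rng = random.Random(0)
p = [rng.gauss(100, 5) for _ in range(49)]  # flattened 7x7 patch
q = [rng.gauss(104, 5) for _ in range(49)]
print(msd_pairwise(p, q), msd_from_stats(p, q))  # agree up to round-off
```

For comparison, the per-pixel squared Euclidean distance between aligned patches equals (μx − μy)² + σx² + σy² − 2cov(x, y), so it depends on how the pixels line up. The pairwise form drops the covariance term, which is what lets precomputed means and stds stand in for the full patches.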

2.2. Image Patch Feature Statistics

2.2.1. 2D Statistical Histogram to Represent Statistical Features
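A minimal sketch of a 2D statistical histogram follows. It assumes that the two axes are the quantized patch mean and patch std, which are the statistics named in the complexity analysis of Section 2.5. Each patch is counted once in its (mean bin, std bin) cell. The bin widths `mean_bin` and `std_bin` and the function names are hypothetical, and the patch statistics are computed naively here for clarity.

```python
import math
from collections import Counter


def patch_stats(img, k):
    """(mean, population std) of every k x k patch, stride 1, row-major order."""
    h, w = len(img), len(img[0])
    stats = []
    for r in range(h - k + 1):
        for c in range(w - k + 1):
            vals = [img[r + i][c + j] for i in range(k) for j in range(k)]
            mu = sum(vals) / len(vals)
            sd = math.sqrt(sum((v - mu) ** 2 for v in vals) / len(vals))
            stats.append((mu, sd))
    return stats


def hist2d(stats, mean_bin, std_bin):
    """Number of patches falling in each (mean bin, std bin) cell."""
    return Counter((int(mu // mean_bin), int(sd // std_bin)) for mu, sd in stats)


img = [[10, 10, 20, 20],
       [10, 10, 20, 20],
       [30, 30, 40, 40],
       [30, 30, 40, 40]]
hist = hist2d(patch_stats(img, 2), mean_bin=10, std_bin=5)
print(sorted(hist.items()))
```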

2.2.2. Summed Area Table for Fast Calculation of the Number of Similar Patches
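The summed-area table [25] makes counting similar patches cheap. The following sketch assumes that the table is built over a dense 2D histogram indexed by (mean bin, std bin). After one pass over the B_m × B_s bins, the number of patches inside any rectangle of bins takes four lookups. This is consistent with the O(1) cost per summed-area-table calculation stated in Section 2.5. The helper names and the toy histogram are hypothetical.

```python
def build_sat(hist, n_mean, n_std):
    """S[i][j] = number of patches with mean bin < i and std bin < j (zero border)."""
    S = [[0] * (n_std + 1) for _ in range(n_mean + 1)]
    for i in range(n_mean):
        for j in range(n_std):
            S[i + 1][j + 1] = hist.get((i, j), 0) + S[i][j + 1] + S[i + 1][j] - S[i][j]
    return S


def count_in_box(S, m0, m1, s0, s1):
    """Patches with mean bin in [m0, m1] and std bin in [s0, s1], inclusive: O(1)."""
    return S[m1 + 1][s1 + 1] - S[m0][s1 + 1] - S[m1 + 1][s0] + S[m0][s0]


# Toy histogram: {(mean bin, std bin): patch count}
hist = {(0, 0): 3, (0, 1): 1, (1, 0): 2, (2, 2): 4}
S = build_sat(hist, n_mean=3, n_std=3)
print(count_in_box(S, 0, 1, 0, 1))  # 3 + 1 + 2 = 6
```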

2.3. Similar Patch and Pixel Search
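Section 2.5 describes the similar-patch search as gradually increasing or decreasing the mean and std. The sketch below gives one possible reading of that step; it is an assumption, not the paper's exact rule. Starting from a patch's own (mean bin, std bin), the search widens a square window by one bin per step. It stops once a summed-area-table query reports at least `min_count` patches, so each step costs O(1). The window shape, `min_count`, and the function names are hypothetical.

```python
def build_sat(hist, n_mean, n_std):
    """Zero-bordered summed-area table over a {(mean bin, std bin): count} histogram."""
    S = [[0] * (n_std + 1) for _ in range(n_mean + 1)]
    for i in range(n_mean):
        for j in range(n_std):
            S[i + 1][j + 1] = hist.get((i, j), 0) + S[i][j + 1] + S[i + 1][j] - S[i][j]
    return S


def grow_until(S, mb, sb, min_count):
    """Widen a window of half-width r bins around (mb, sb), clipped to the table,
    until it holds at least min_count patches or covers every bin. Returns (r, count)."""
    n_mean, n_std = len(S) - 1, len(S[0]) - 1
    r = 0
    while True:
        m0, m1 = max(mb - r, 0), min(mb + r, n_mean - 1)
        s0, s1 = max(sb - r, 0), min(sb + r, n_std - 1)
        count = S[m1 + 1][s1 + 1] - S[m0][s1 + 1] - S[m1 + 1][s0] + S[m0][s0]
        covers_all = m0 == 0 and s0 == 0 and m1 == n_mean - 1 and s1 == n_std - 1
        if count >= min_count or covers_all:
            return r, count
        r += 1


hist = {(5, 1): 2, (4, 1): 3, (6, 2): 4, (0, 0): 10}
S = build_sat(hist, n_mean=8, n_std=4)
print(grow_until(S, 5, 1, min_count=8))  # a window of half-width 1 suffices
```

Because every widening step is a single constant-time query, the total search cost grows with the number of widening steps rather than with the number of patches.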

2.4. Noise Level Estimation
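This section turns the selected similar pixels into a noise level; its Equations (14) and (15) are not reproduced here. The sketch below illustrates the underlying idea under two assumptions: each group of similar pixels shares one clean intensity, and the noise is i.i.d. additive white Gaussian. Under these assumptions, the pooled within-group variance is an unbiased estimate of σ², and its square root estimates σ. The function name and the toy data are hypothetical.

```python
import math
import random


def sigma_from_groups(groups):
    """Pooled within-group std: each group holds noisy copies of one clean value."""
    ss, dof = 0.0, 0
    for g in groups:
        if len(g) < 2:
            continue  # a single pixel carries no information about the spread
        mu = sum(g) / len(g)
        ss += sum((v - mu) ** 2 for v in g)
        dof += len(g) - 1
    if dof == 0:
        raise ValueError("need at least one group with two or more pixels")
    return math.sqrt(ss / dof)


rng = random.Random(1)
true_sigma = 10.0
groups = []
for _ in range(200):
    clean = rng.uniform(0, 255)  # shared clean intensity of one group
    groups.append([clean + rng.gauss(0, true_sigma) for _ in range(16)])
print(round(sigma_from_groups(groups), 2))  # close to 10
```

Residual texture or mismatched clean values inside a group inflate this estimate, and intensity clipping at the range limits can bias it as well.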

2.5. Algorithm and Complexity Analysis

| Algorithm 1: Estimating Noise Level |
|---|
| Inputs: noisy image |
| Compute the mean and std of each patch and establish the 2D statistical histogram |
| for each row: calculate its most similar rows; calculate the local noise level |
| end for |
| The final noise level is obtained by Equations (14) and (15) with quadratic estimation correction |
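Algorithm 1 ends with a quadratic estimation correction, and the contributions mention correcting the initial estimate by re-injecting noise. The paper's Equations (14) and (15) are not reproduced here. The sketch below shows one generic re-injection correction under a stated assumption: the squared estimate is proportional to the true noise variance, σ̂² ≈ ασ², with α unknown. Adding Gaussian noise of known std s and re-estimating gives α ≈ (σ̂₁² − σ̂₀²)/s², and therefore σ ≈ σ̂₀/√α. The toy estimator, which under-reports σ by 10% on a flat image, and all names are hypothetical.

```python
import math
import random


def reinjection_correct(estimate, image, inj_sigma, rng):
    """Correct a proportionally biased estimator by re-injecting known noise.
    Assumed model: estimate(img) ** 2 ~= alpha * true_variance(img)."""
    v0 = estimate(image) ** 2
    noisier = [p + rng.gauss(0.0, inj_sigma) for p in image]
    v1 = estimate(noisier) ** 2
    alpha = (v1 - v0) / inj_sigma ** 2  # slope of estimated vs. true variance
    if alpha <= 0:
        raise ValueError("re-estimation did not increase; cannot infer alpha")
    return math.sqrt(v0 / alpha)


def biased_estimator(img):
    """Toy stand-in for an NLE method: 0.9 x the std of a flat noisy image."""
    mu = sum(img) / len(img)
    return 0.9 * math.sqrt(sum((p - mu) ** 2 for p in img) / len(img))


rng = random.Random(2)
image = [128 + rng.gauss(0.0, 5.0) for _ in range(20000)]  # flat image, sigma = 5
print(round(biased_estimator(image), 2))  # about 4.5 before correction
print(round(reinjection_correct(biased_estimator, image, 10.0, rng), 2))  # about 5
```

Working in the variance domain matters here: independent noise adds variances, not standard deviations. That additivity is what makes the difference σ̂₁² − σ̂₀² depend only on the injected noise.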

- (1) The complexity of calculating the patch mean and std is (), and that of establishing the 2D statistical histogram is ();

- (2) The complexity of searching similar patches is (), where is the number of times the mean and std are gradually increased or decreased;

- (3) The complexity of searching similar pixels is ();

- (4) The complexity of computing is (), and the complexity of computing , , and each calculation of the summed-area table is (1).
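Item (1) of the complexity analysis concerns the cost of computing each patch's mean and std. A common way to make that cost independent of the patch size is to build a pair of summed-area tables, one over the pixel values and one over their squares. Each k × k window sum then takes four lookups, and the variance follows from var = E[x²] − μ². This is a standard technique, not necessarily the paper's implementation; the helper names are hypothetical.

```python
import math


def integral(img, square=False):
    """Summed-area table with a zero border: T[r][c] = sum of img rows < r, cols < c."""
    h, w = len(img), len(img[0])
    T = [[0.0] * (w + 1) for _ in range(h + 1)]
    for r in range(h):
        row = 0.0  # running sum along the current row
        for c in range(w):
            v = img[r][c]
            row += v * v if square else v
            T[r + 1][c + 1] = T[r][c + 1] + row
    return T


def box(T, r, c, k):
    """Sum over the k x k window with top-left corner (r, c), in four lookups."""
    return T[r + k][c + k] - T[r][c + k] - T[r + k][c] + T[r][c]


def patch_mean_std(img, k):
    """(mean, population std) of every k x k patch in row-major order, O(1) each."""
    S, S2 = integral(img), integral(img, square=True)
    n = k * k
    out = []
    for r in range(len(img) - k + 1):
        for c in range(len(img[0]) - k + 1):
            mu = box(S, r, c, k) / n
            var = max(box(S2, r, c, k) / n - mu * mu, 0.0)  # clamp tiny negative round-off
            out.append((mu, math.sqrt(var)))
    return out


img = [[10, 10, 20, 20],
       [10, 10, 20, 20],
       [30, 30, 40, 40],
       [30, 30, 40, 40]]
print(patch_mean_std(img, 2)[4])  # centre patch: mean 25, std sqrt(125)
```

The one-pass formula E[x²] − μ² can lose precision when pixel values are large relative to their spread. For 8-bit images in double precision this is rarely a problem, and the code clamps tiny negative round-off to zero.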

3. Experimental Results

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095.

2. Hou, Y.; Xu, J.; Liu, M.; Liu, G.; Liu, L.; Zhu, F.; Shao, L. NLH: A Blind Pixel-Level Non-Local Method for Real-World Image Denoising. IEEE Trans. Image Process. 2020, 29, 5121–5135.

3. Li, B.; Ng, T.T.; Li, X.; Tan, S.; Huang, J. Revealing the Trace of High-Quality JPEG Compression Through Quantization Noise Analysis. IEEE Trans. Inf. Forensics Secur. 2015, 10, 558–573.

4. Guo, F.F.; Wang, X.X.; Shen, J. Adaptive fuzzy c-means algorithm based on local noise detecting for image segmentation. IET Image Process. 2016, 10, 272–279.

5. Jiang, P.; Zhang, J.-Z. No-reference image quality assessment based on local maximum gradient. J. Electron. Inf. Technol. 2015, 37, 2587–2593.

6. Scharr, H.; Spies, H. Accurate optical flow in noisy image sequences using flow adapted anisotropic diffusion. Signal Process. Image Commun. 2005, 20, 537–553.

7. Nan, Y.; Quan, Y.; Ji, H. Variational-EM-Based Deep Learning for Noise-Blind Image Deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

8. Heidari-Gorji, H.; Ebrahimpour, R.; Zabbah, S. A temporal hierarchical feedforward model explains both the time and the accuracy of object recognition. Sci. Rep. 2021, 11, 5640.

9. Pratap, T.; Kokil, P. Efficient Network Selection for Computer-aided Cataract Diagnosis Under Noisy Environment. Comput. Methods Programs Biomed. 2021, 200, 105927.

10. Gureyev, T.E.; Paganin, D.M.; Kozlov, A.; Nesterets, Y.I.; Quiney, H.M. Complementary aspects of spatial resolution and signal-to-noise ratio in computational imaging. Phys. Rev. A 2018, 97, 053819.

11. Katase, H.; Yamaguchi, T.; Fujisawa, T.; Ikehara, M. Image noise level estimation by searching for smooth patches with discrete cosine transform. In Proceedings of the 2016 IEEE 59th International Midwest Symposium on Circuits and Systems (MWSCAS), Abu Dhabi, United Arab Emirates, 16–19 October 2016.

12. Yang, S.M.; Tai, S.C. Fast and reliable image-noise estimation using a hybrid approach. J. Electron. Imaging 2010, 19, 033007.

13. Kokil, P.; Pratap, T. Additive white Gaussian noise level estimation for natural images using linear scale-space features. Circuits Syst. Signal Process. 2021, 40, 353–374.

14. Liu, X.; Tanaka, M.; Okutomi, M. Noise level estimation using weak textured patches of a single noisy image. In Proceedings of the IEEE International Conference on Image Processing, Orlando, FL, USA, 30 September–3 October 2012; pp. 665–668.

15. Zoran, D.; Weiss, Y. Scale invariance and noise in natural images. In Proceedings of the IEEE 12th International Conference on Computer Vision (ICCV), Kyoto, Japan, 29 September–2 October 2009; pp. 2209–2216.

16. Wu, M.W.; Jin, Y.; Li, Y.; Song, T.; Kam, P.Y. Maximum-Likelihood, Magnitude-Based, Amplitude and Noise Variance Estimation. IEEE Signal Process. Lett. 2021, 28, 414–418.

17. Gupta, P.; Bampis, C.G.; Jin, Y.; Bovik, A.C. Natural scene statistics for noise estimation. In Proceedings of the 2018 IEEE Southwest Symposium on Image Analysis and Interpretation (SSIAI), Las Vegas, NV, USA, 8–10 April 2018.

18. Fang, Z.; Yi, X. A novel natural image noise level estimation based on flat patches and local statistics. Multimed. Tools Appl. 2019, 78, 17337–17358.

19. Wu, J.X.; Xie, S.L.; Li, Z.G.; Wu, S.Q. Image noise level estimation via kurtosis test. J. Electron. Imaging 2022, 31, 033015.

20. Pyatykh, S.; Hesser, J.; Zheng, L. Image noise level estimation by principal component analysis. IEEE Trans. Image Process. 2013, 22, 687–699.

21. Jiang, P.; Wang, Q.; Wu, J. Efficient Noise-Level Estimation Based on Principal Image Texture. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 1987–1999.

22. Liu, W.; Lin, W. Additive white Gaussian noise level estimation in SVD domain for images. IEEE Trans. Image Process. 2013, 22, 872–883.

23. Tang, C.; Yang, X.; Zhai, G. Noise Estimation of Natural Images via Statistical Analysis and Noise Injection. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 1283–1294.

24. Chen, G.; Zhu, F.; Heng, P.A. An efficient statistical method for image noise level estimation. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 477–485.

25. Crow, F. Summed-Area Tables for Texture Mapping; SIGGRAPH: Chicago, IL, USA, 1984; Volume 84, pp. 207–212.

26. Tampere Image Database 2008 (TID 2008). Available online: http://www.ponomarenko.info/tid2008.htm (accessed on 10 February 2024).

27. Foi, A.; Trimeche, M.; Katkovnik, V.; Egiazarian, K. Practical Poissonian-Gaussian noise modeling and fitting for single-image raw-data. IEEE Trans. Image Process. 2008, 17, 1737–1754.

28. Liu, G.; Zhong, H.; Jiao, L. Comparing Noisy Patches for Image Denoising: A Double Noise Similarity Model. IEEE Trans. Image Process. 2015, 24, 862–872.

29. Deledalle, C.J.; Denis, L.; Tupin, F. How to compare noisy patches? Patch similarity beyond Gaussian noise. Int. J. Comput. Vis. 2012, 99, 86–102.

30. Arbelaez, P.; Maire, M.; Fowlkes, C.; Malik, J. Contour detection and hierarchical image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 898–916.

31. Nam, S.; Hwang, Y.; Matsushita, Y.; Kim, S.J. A holistic approach to cross-channel image noise modeling and its application to image denoising. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1683–1691.

32. Plötz, T.; Roth, S. Benchmarking denoising algorithms with real photographs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

| Dataset | Noise Level | Liu [14] Mean | Liu [14] Std | Wu [19] Mean | Wu [19] Std | Yang [12] Mean | Yang [12] Std | Zoran [15] Mean | Zoran [15] Std | Pyatykh [20] Mean | Pyatykh [20] Std | Hou [2] Mean | Hou [2] Std | Gupta [17] Mean | Gupta [17] Std | FNLE Mean | FNLE Std |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BSDS 500 | 1 | 0.99 | 0.25 | 1.39 | 0.32 | 2.05 | 1.33 | 0.72 | 1.59 | 1.25 | 0.53 | 3.89 | 1.53 | 3.00 | 1.70 | 1.44 | 0.48 |
| | 3 | 2.84 | 0.26 | 3.14 | 0.28 | 3.75 | 1.03 | 2.77 | 1.46 | 3.16 | 0.31 | 5.05 | 0.62 | 4.05 | 1.30 | 3.14 | 0.32 |
| | 5 | 4.91 | 0.14 | 5.07 | 0.14 | 5.61 | 0.86 | 4.67 | 1.38 | 5.15 | 0.21 | 5.91 | 0.73 | 5.66 | 1.10 | 5.07 | 0.18 |
| | 10 | 9.92 | 0.18 | 10.07 | 0.10 | 10.43 | 0.63 | 9.56 | 1.25 | 10.13 | 0.17 | 10.52 | 0.62 | 9.89 | 1.71 | 10.06 | 0.10 |
| | 20 | 19.91 | 0.21 | 20.04 | 0.09 | 20.24 | 0.44 | 19.31 | 1.32 | 20.09 | 0.35 | 20.54 | 0.73 | 19.80 | 0.32 | 20.01 | 0.09 |
| | 30 | 29.82 | 0.26 | 30.05 | 0.21 | 30.15 | 0.40 | 29.18 | 1.38 | 29.82 | 0.50 | 30.67 | 0.54 | 29.50 | 0.28 | 30.04 | 0.21 |
| | 40 | 39.70 | 0.27 | 40.09 | 0.29 | 40.17 | 0.52 | 39.09 | 1.39 | 39.47 | 0.69 | 40.71 | 0.75 | 39.22 | 0.26 | 40.07 | 0.25 |
| | 50 | 49.53 | 0.32 | 50.27 | 0.32 | 49.87 | 0.60 | 49.06 | 1.43 | 49.14 | 0.83 | 50.49 | 0.69 | 48.74 | 0.29 | 49.92 | 0.28 |
| TID 2008 | 1 | 3.08 | 3.31 | 1.43 | 0.45 | 2.11 | 0.90 | 1.99 | 1.29 | 1.43 | 0.54 | 2.84 | 1.33 | 3.07 | 3.30 | 1.84 | 0.71 |
| | 3 | 4.43 | 2.92 | 3.27 | 0.27 | 3.71 | 0.70 | 3.53 | 1.68 | 3.31 | 0.35 | 3.98 | 1.01 | 4.42 | 2.92 | 3.26 | 0.41 |
| | 5 | 6.02 | 2.64 | 5.14 | 0.40 | 5.56 | 0.60 | 4.25 | 1.61 | 5.14 | 0.31 | 5.92 | 0.92 | 6.01 | 2.64 | 5.13 | 0.33 |
| | 10 | 10.49 | 2.03 | 10.04 | 0.11 | 10.38 | 0.46 | 9.09 | 2.12 | 10.12 | 0.10 | 10.73 | 0.83 | 10.49 | 2.03 | 10.03 | 0.09 |
| | 20 | 19.98 | 1.32 | 20.01 | 0.14 | 20.22 | 0.37 | 19.15 | 1.78 | 19.97 | 0.27 | 20.69 | 0.85 | 19.98 | 1.32 | 20.01 | 0.14 |
| | 30 | 29.56 | 0.97 | 30.08 | 0.15 | 30.07 | 0.36 | 28.82 | 1.14 | 29.78 | 0.59 | 30.64 | 0.79 | 29.56 | 0.97 | 30.06 | 0.14 |
| | 40 | 39.23 | 0.77 | 40.22 | 0.31 | 39.81 | 0.45 | 38.77 | 1.16 | 39.44 | 0.72 | 40.50 | 0.61 | 39.23 | 0.77 | 40.16 | 0.29 |
| | 50 | 48.85 | 0.67 | 50.39 | 0.47 | 49.70 | 0.52 | 48.72 | 1.14 | 49.20 | 0.77 | 50.44 | 0.55 | 48.81 | 0.67 | 50.33 | 0.32 |

| Dataset | Metric | Noisy Image | Liu [14] | Wu [19] | Yang [12] | Zoran [15] | Pyatykh [20] | Hou [2] | Gupta [17] | FNLE |
|---|---|---|---|---|---|---|---|---|---|---|
| CC dataset | PSNR (dB) | 33.41 | 33.58 | 35.68 | 33.56 | 33.61 | 33.45 | 33.82 | 34.75 | 35.76 |
| | SSIM | 0.9079 | 0.9114 | 0.9474 | 0.9113 | 0.912 | 0.9087 | 0.9161 | 0.9258 | 0.9491 |
| | Time | - | 5.767 | 2.669 | 2.435 | 12.628 | 19.783 | 3.027 | 314.012 | 1.277 |
| DND dataset | PSNR (dB) | 28.81 | 28.86 | 33.38 | 31.50 | 29.01 | 31.31 | 30.26 | 31.69 | 33.57 |
| | SSIM | 0.7893 | 0.7917 | 0.9166 | 0.8743 | 0.7974 | 0.8711 | 0.8642 | 0.8892 | 0.9201 |
| | Time | - | 3.086 | 2.386 | 2.272 | 10.358 | 17.472 | 2.994 | 301.868 | 1.242 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, J.; Jia, M.; Wu, S.; Xie, S. Fast Noise Level Estimation via the Similarity within and between Patches. Electronics 2024, 13, 2556. https://doi.org/10.3390/electronics13132556
