Offloading Strategy Based on Graph Neural Reinforcement Learning in Mobile Edge Computing

Abstract

1. Introduction

- We update the graph structure with the adjacency list method. This updating scheme preserves topological information when the MEC graph structure changes dynamically, so the agents can make optimal decisions in real time under coverage constraints and thereby minimize the system cost.

- M-GNRL updates nodes by sampling and aggregating neighbor features, so that all nodes in the same GNN layer share weight parameters. We do not use attention mechanisms during training. Nodes therefore retain most of their original feature information during message propagation, and the number of trainable parameters is reduced.

- Because the deep Q-network (DQN) learns in a graph environment, we feed the edge features produced by the graph neural network into the deep neural network (DNN) of the deep reinforcement learning (DRL) agent. The actions generated by the DQN are thus mapped from edge features, which improves the accuracy of the actions.

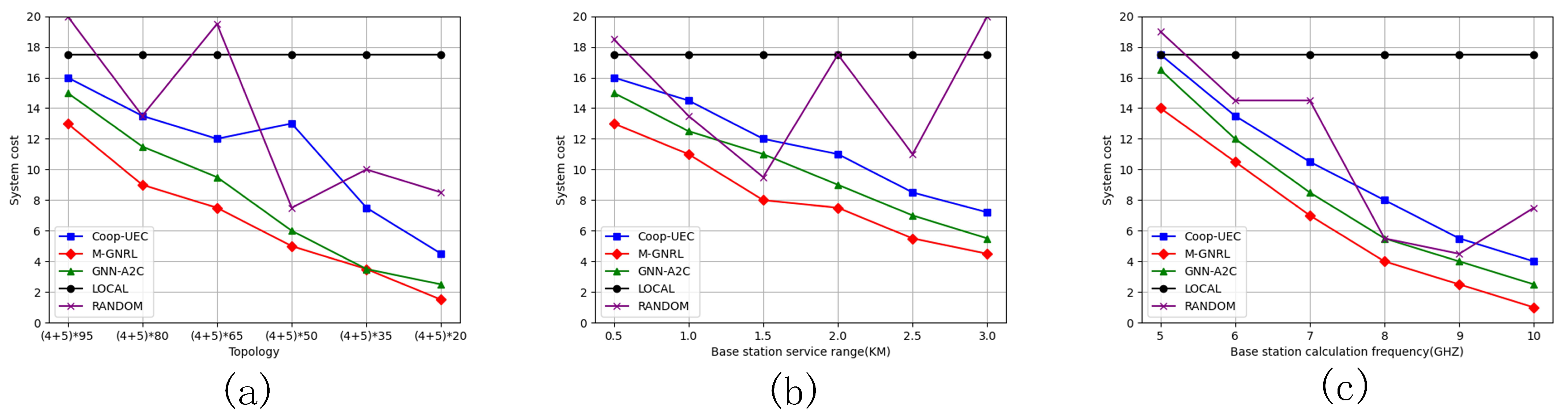

- We evaluate the proposed algorithm experimentally in several scenarios. The results indicate that M-GNRL generalizes well, even to new network environments, and reduces the system cost compared with the baseline algorithms.
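The sampling aggregation idea in the second contribution can be illustrated with a small sketch. It is not the authors' implementation: it assumes a GraphSAGE-style mean aggregator, and the names `sample_neighbors`, `aggregate_layer`, `W_self`, and `W_neigh` are hypothetical. The point it shows is that one pair of weight matrices is shared by every node in the layer, so the parameter count does not grow with the number of MDs or BSs.

```python
import random


def sample_neighbors(adj, node, k, rng):
    """Return up to k neighbors of `node`, sampled without replacement."""
    neighbors = adj.get(node, [])
    if len(neighbors) <= k:
        return list(neighbors)
    return rng.sample(neighbors, k)


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def aggregate_layer(adj, feats, W_self, W_neigh, k, rng):
    """One layer: h_v' = ReLU(W_self h_v + W_neigh mean(h_u for sampled u)).

    W_self and W_neigh are shared by all nodes (assumed aggregator, no attention).
    """
    dim = len(next(iter(feats.values())))
    out = {}
    for v, h_v in feats.items():
        sampled = sample_neighbors(adj, v, k, rng)
        if sampled:
            mean = [sum(feats[u][i] for u in sampled) / len(sampled)
                    for i in range(dim)]
        else:
            mean = [0.0] * dim  # isolated node: keep only its own features
        z = [a + b for a, b in zip(matvec(W_self, h_v), matvec(W_neigh, mean))]
        out[v] = [max(0.0, zi) for zi in z]
    return out


if __name__ == "__main__":
    adj = {"md0": ["bs0", "bs1"], "md1": ["bs1"],
           "bs0": ["md0"], "bs1": ["md0", "md1"]}
    feats = {"md0": [1, 0], "md1": [0, 1], "bs0": [2, 2], "bs1": [4, 0]}
    W_self = [[1, 0], [0, 1]]
    W_neigh = [[0.5, 0], [0, 0.5]]
    print(aggregate_layer(adj, feats, W_self, W_neigh, k=2, rng=random.Random(0)))
```

With the identity matrix as `W_self`, each node keeps its own features unchanged and only adds a scaled neighborhood mean, which mirrors the claim that nodes retain most of their original feature information.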

2. Related Works

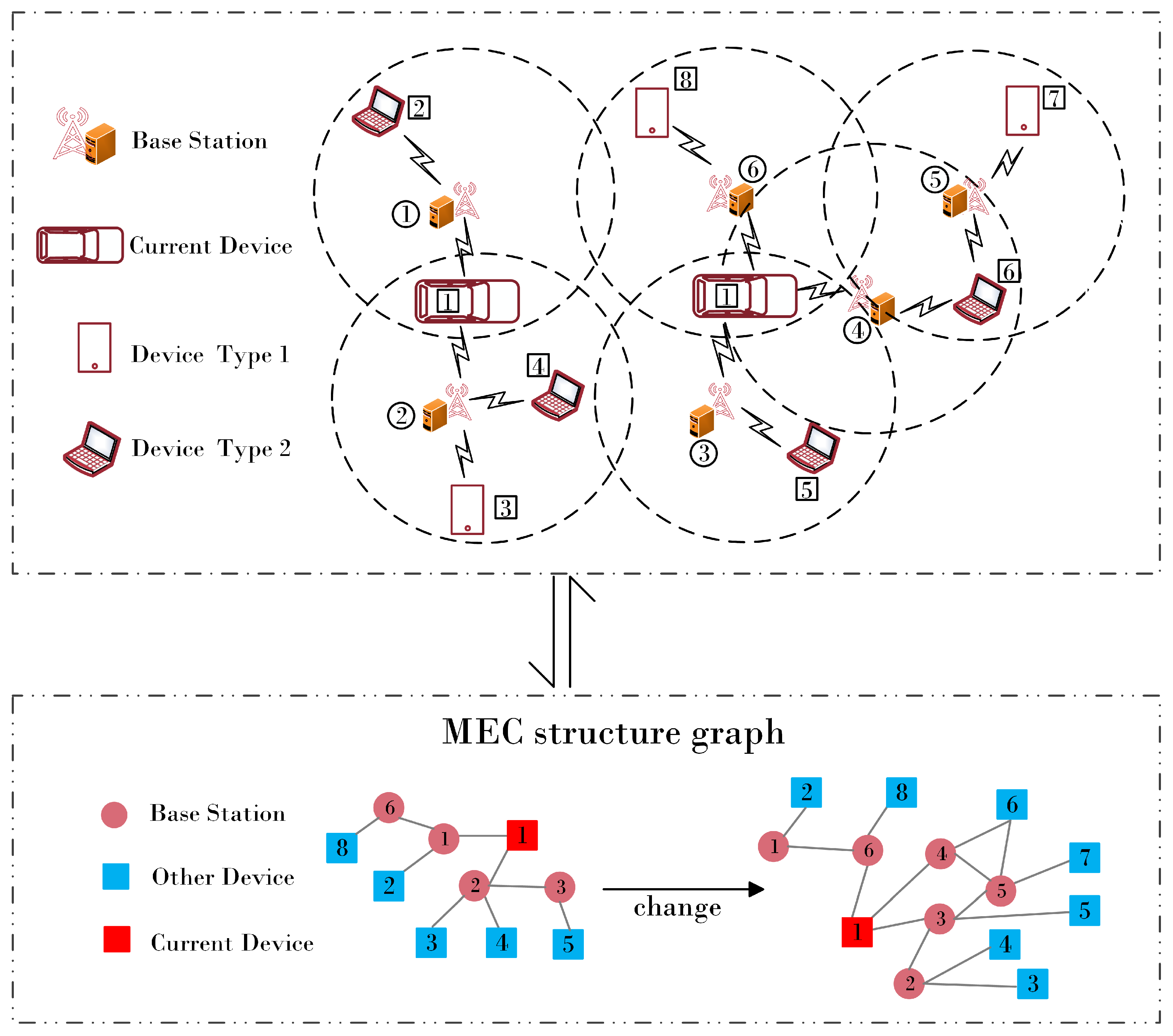

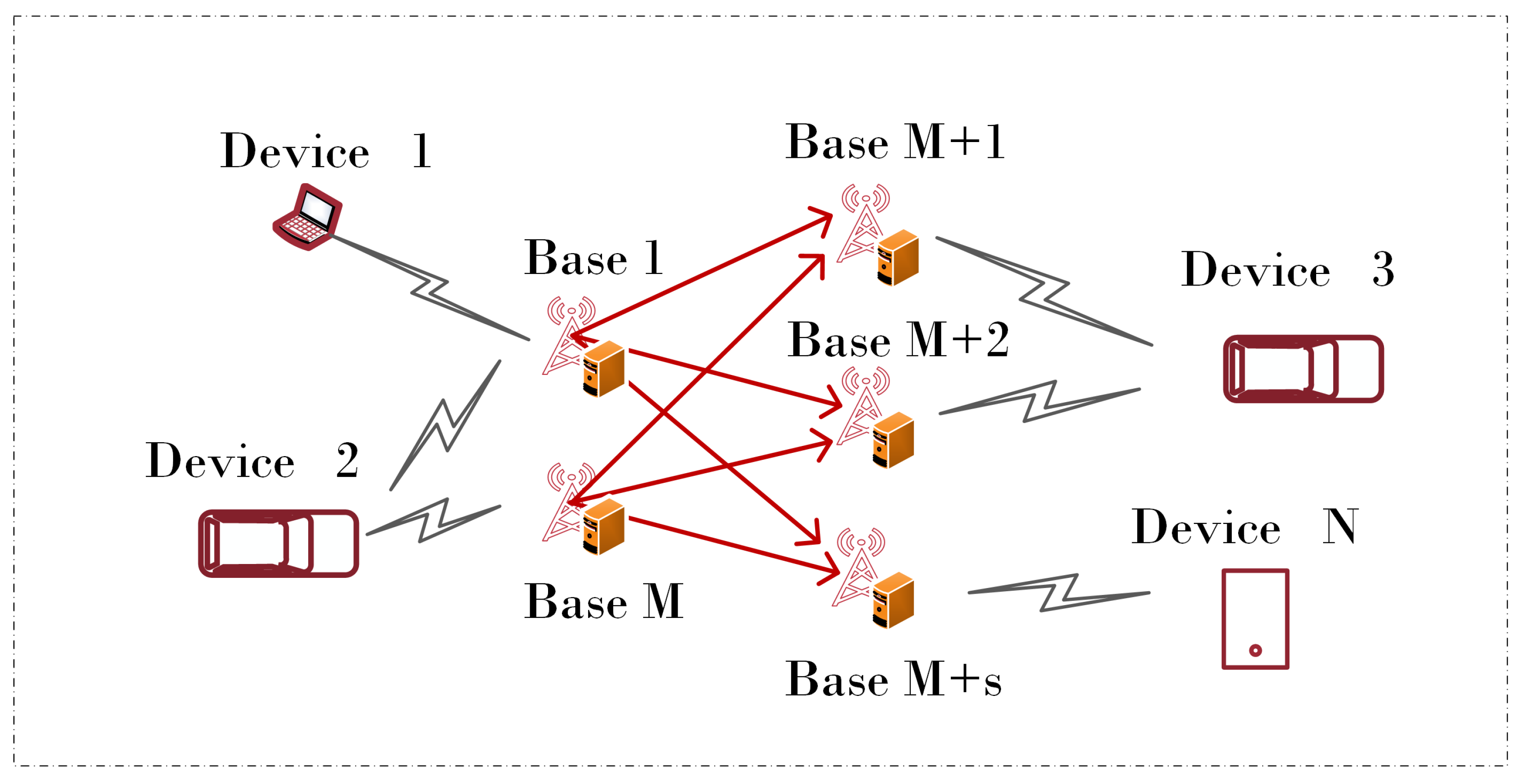

3. System Model

3.1. Communication Model

3.2. Computation Model

3.3. Problem Formulation under Multiple Constraints

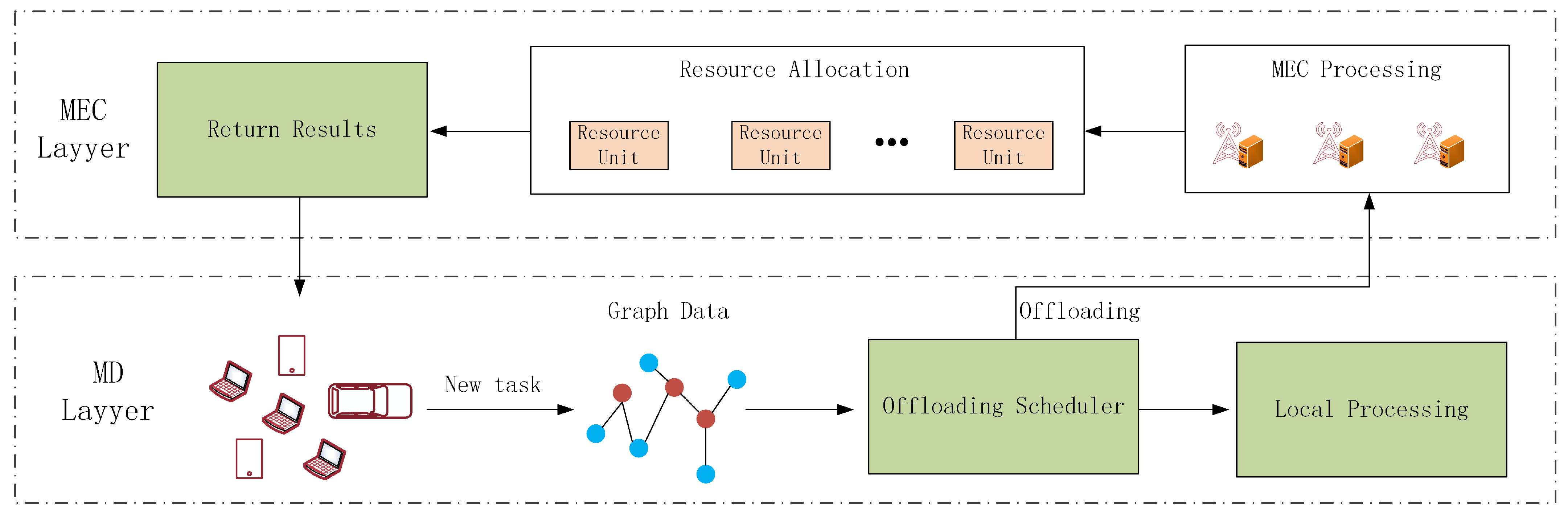

4. Mobile Offloading Strategy Based on GNN and DRL

4.1. M-GNRL Method

4.2. Learning Node Features

4.3. Offloading Strategy

| Algorithm 1 M-GNRL for task offloading strategy. |

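As an illustration of mapping actions from edge features (it is not a reconstruction of Algorithm 1), the sketch below scores every candidate action of one MD with a shared linear head and then picks an action epsilon-greedily. The linear head stands in for the DNN, and the feature layout (estimated delay, estimated energy), the weights, and all function names are assumptions made for the example.

```python
import random


def q_values(action_feats, w, b):
    """Score each candidate action with a shared linear head (stand-in for the DNN).

    action_feats maps an action id ("local" or a BS id) to its edge feature vector.
    """
    return {a: sum(wi * xi for wi, xi in zip(w, x)) + b
            for a, x in action_feats.items()}


def greedy_action(q):
    """Return the action with the highest Q-value."""
    return max(q, key=q.get)


def epsilon_greedy(q, epsilon, rng):
    """Explore with probability epsilon, otherwise act greedily."""
    if rng.random() < epsilon:
        return rng.choice(sorted(q))
    return greedy_action(q)


if __name__ == "__main__":
    # Hypothetical features: [estimated delay, estimated energy] per action.
    feats = {"local": [2.0, 1.0], "bs0": [0.5, 0.4], "bs1": [1.0, 0.2]}
    q = q_values(feats, w=[-1.0, -1.0], b=0.0)  # negative weights: lower cost, higher Q
    print(epsilon_greedy(q, epsilon=0.1, rng=random.Random(0)))
```

Because the action set is built from the edges currently incident to the MD, the same head can be reused when base stations enter or leave coverage.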

4.4. Update Graph Structure

| Algorithm 2 Update graph structure. |

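The adjacency list update can be sketched as follows. This is not Algorithm 2 itself, only a minimal example under stated assumptions: positions are 2D coordinates in km, an MD is connected to a BS when it lies inside that BS's service range, and the helper names `update_graph`, `edge_set`, and `diff_edges` are hypothetical.

```python
import math


def update_graph(md_pos, bs_pos, bs_range):
    """Rebuild the MD -> BS adjacency lists for the current time slot.

    An edge exists only if the MD lies within the BS service range
    (coverage constraint, distances in km).
    """
    adj = {md: [] for md in md_pos}
    for md, (x, y) in md_pos.items():
        for bs, (bx, by) in bs_pos.items():
            if math.hypot(x - bx, y - by) <= bs_range[bs]:
                adj[md].append(bs)
    return adj


def edge_set(adj):
    """Flatten adjacency lists into a set of (MD, BS) edges."""
    return {(md, bs) for md, bs_list in adj.items() for bs in bs_list}


def diff_edges(old_adj, new_adj):
    """Return (added, removed) edges between two time slots."""
    old_e, new_e = edge_set(old_adj), edge_set(new_adj)
    return new_e - old_e, old_e - new_e


if __name__ == "__main__":
    bs_pos = {"bs0": (1.0, 0.0), "bs1": (5.0, 0.0)}
    bs_range = {"bs0": 2.0, "bs1": 1.0}
    before = update_graph({"md0": (0.0, 0.0)}, bs_pos, bs_range)
    after = update_graph({"md0": (4.5, 0.0)}, bs_pos, bs_range)
    print(before, after, diff_edges(before, after))
```

Storing only the edges that exist keeps the structure proportional to the number of in-range MD-BS pairs, and the edge difference shows exactly which links appear or disappear as a device moves.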

5. Experimental Analysis

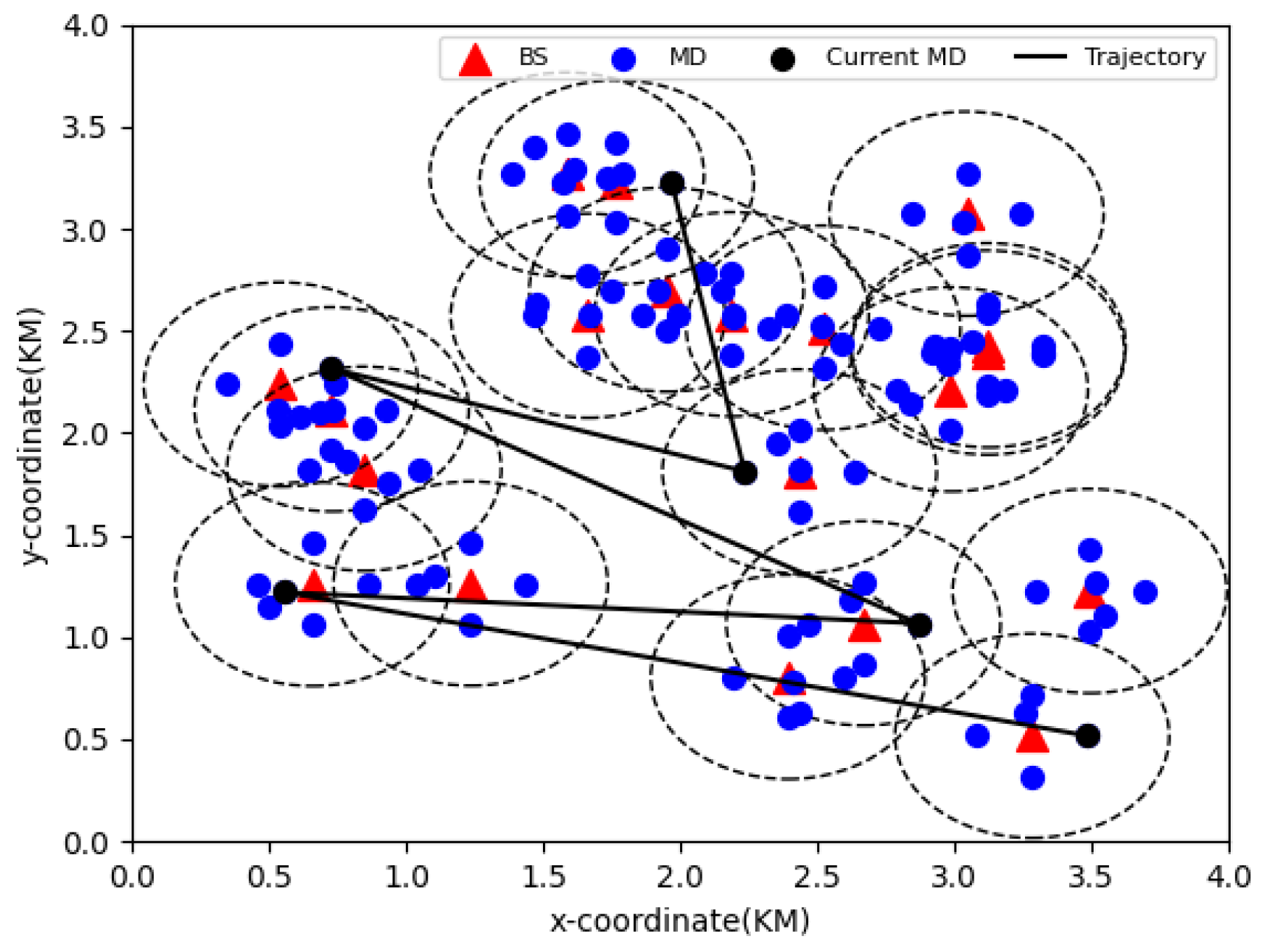

5.1. Simulation Experiment Setup

- LOCAL: The agent always executes tasks locally on the device.

- RANDOM: The offloading decision and the base station selection are both made randomly.

- GNN-A2C: A deep-graph-based reinforcement learning framework that uses graph neural networks to supervise the action training of unmanned aerial vehicles in the Advantage Actor-Critic (A2C) method. This framework converges rapidly and significantly reduces the task missing rate in aerial edge Internet of Things (IoT) scenarios [32].

- Coop-UEC: Drones collaborate to offload computing tasks. To maximize long-term rewards, the authors formulate an optimization problem, describe it as a semi-Markov process, and propose a DRL-based offloading algorithm [23].
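The two simplest baselines above can be written as one-line policies. The sketch below is illustrative only: the action encoding (the string `"local"` or a BS id), the fair coin used for the random offloading decision, and the function names are assumptions rather than details taken from the paper.

```python
import random

LOCAL = "local"


def local_policy(reachable_bs, rng=None):
    """LOCAL baseline: always execute the task on the device."""
    return LOCAL


def random_policy(reachable_bs, rng):
    """RANDOM baseline: random offloading decision, then a random reachable BS.

    The 50/50 split between local execution and offloading is an assumption.
    """
    if not reachable_bs or rng.random() < 0.5:
        return LOCAL
    return rng.choice(reachable_bs)


if __name__ == "__main__":
    rng = random.Random(0)
    print(local_policy(["bs0", "bs1"]))
    print([random_policy(["bs0", "bs1"], rng) for _ in range(5)])
```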

5.2. Simulation Scenario

5.3. Performance Analysis of Different Strategies

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ibn-Khedher, H.; Laroui, M.; Mabrouk, M.B.; Moungla, H.; Afifi, H.; Oleari, A.N.; Kamal, A.E. Edge computing assisted autonomous driving using artificial intelligence. In Proceedings of the 2021 International Wireless Communications and Mobile Computing (IWCMC), Harbin, China, 28 June–2 July 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 254–259.

- Liu, J.; Zhang, Q. To improve service reliability for AI-powered time-critical services using imperfect transmission in MEC: An experimental study. IEEE Internet Things J. 2020, 7, 9357–9371.

- Mao, Y.; You, C.; Zhang, J.; Huang, K.; Letaief, K.B. A survey on mobile edge computing: The communication perspective. IEEE Commun. Surv. Tutor. 2017, 19, 2322–2358.

- Zhang, J.; Zhao, X. An overview of user-oriented computation offloading in mobile edge computing. In Proceedings of the 2020 IEEE World Congress on Services (SERVICES), Beijing, China, 18–23 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 75–76.

- Noor, T.H.; Zeadally, S.; Alfazi, A.; Sheng, Q.Z. Mobile cloud computing: Challenges and future research directions. J. Netw. Comput. Appl. 2018, 115, 70–85.

- You, C.; Huang, K.; Chae, H.; Kim, B.H. Energy-efficient resource allocation for mobile-edge computation offloading. IEEE Trans. Wirel. Commun. 2016, 16, 1397–1411.

- Wang, Y.; Sheng, M.; Wang, X.; Wang, L.; Li, J. Mobile-edge computing: Partial computation offloading using dynamic voltage scaling. IEEE Trans. Commun. 2016, 64, 4268–4282.

- Bi, S.; Zhang, Y.J. Computation rate maximization for wireless powered mobile-edge computing with binary computation offloading. IEEE Trans. Wirel. Commun. 2018, 17, 4177–4190.

- Wang, F.; Xu, J.; Wang, X.; Cui, S. Joint offloading and computing optimization in wireless powered mobile-edge computing systems. IEEE Trans. Wirel. Commun. 2017, 17, 1784–1797.

- Zhang, W.; Wen, Y.; Guan, K.; Kilper, D.; Luo, H.; Wu, D.O. Energy-optimal mobile cloud computing under stochastic wireless channel. IEEE Trans. Wirel. Commun. 2013, 12, 4569–4581.

- Chen, M.H.; Liang, B.; Dong, M. Joint offloading decision and resource allocation for multi-user multi-task mobile cloud. In Proceedings of the 2016 IEEE International Conference on Communications (ICC), Kuala Lumpur, Malaysia, 22–27 May 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–6.

- Dinh, T.Q.; Tang, J.; La, Q.D.; Quek, T.Q. Offloading in mobile edge computing: Task allocation and computational frequency scaling. IEEE Trans. Commun. 2017, 65, 3571–3584.

- Zhan, Y.; Guo, S.; Li, P.; Zhang, J. A deep reinforcement learning based offloading game in edge computing. IEEE Trans. Comput. 2020, 69, 883–893.

- Wu, Z.; Pan, S.; Chen, F.; Long, G.; Zhang, C.; Philip, S.Y. A comprehensive survey on graph neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 4–24.

- Yick, J.; Mukherjee, B.; Ghosal, D. Wireless sensor network survey. Comput. Netw. 2008, 52, 2292–2330.

- Fan, W.; Ma, Y.; Li, Q.; He, Y.; Zhao, E.; Tang, J.; Yin, D. Graph neural networks for social recommendation. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 417–426.

- Glorennec, P.Y. Reinforcement learning: An overview. In Proceedings of the European Symposium on Intelligent Techniques (ESIT-00), Aachen, Germany, 14–15 September 2000; pp. 14–15.

- Ding, S.; Lin, D.; Zhou, X. Graph convolutional reinforcement learning for dependent task allocation in edge computing. In Proceedings of the 2021 IEEE International Conference on Agents (ICA), Kyoto, Japan, 13–15 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 25–30.

- Li, Y.; Jiang, C. Distributed task offloading strategy to low load base stations in mobile edge computing environment. Comput. Commun. 2020, 164, 240–248.

- Gao, Y.; Li, Z. Load balancing aware task offloading in mobile edge computing. In Proceedings of the 2022 IEEE 25th International Conference on Computer Supported Cooperative Work in Design (CSCWD), Hangzhou, China, 4–6 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1209–1214.

- Lu, H.; Gu, C.; Luo, F.; Ding, W.; Liu, X. Optimization of lightweight task offloading strategy for mobile edge computing based on deep reinforcement learning. Future Gener. Comput. Syst. 2020, 102, 847–861.

- Li, J.; Gao, H.; Lv, T.; Lu, Y. Deep reinforcement learning based computation offloading and resource allocation for MEC. In Proceedings of the 2018 IEEE Wireless Communications and Networking Conference (WCNC), Barcelona, Spain, 15–18 April 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6.

- Liu, Y.; Xie, S.; Zhang, Y. Cooperative offloading and resource management for UAV-enabled mobile edge computing in power IoT system. IEEE Trans. Veh. Technol. 2020, 69, 12229–12239.

- Yan, J.; Bi, S.; Zhang, Y.J.A. Offloading and resource allocation with general task graph in mobile edge computing: A deep reinforcement learning approach. IEEE Trans. Wirel. Commun. 2020, 19, 5404–5419.

- Wang, J.; Hu, J.; Min, G.; Zhan, W.; Ni, Q.; Georgalas, N. Computation offloading in multi-access edge computing using a deep sequential model based on reinforcement learning. IEEE Commun. Mag. 2019, 57, 64–69.

- Zou, J.; Hao, T.; Yu, C.; Jin, H. A3C-DO: A regional resource scheduling framework based on deep reinforcement learning in edge scenario. IEEE Trans. Comput. 2020, 70, 228–239.

- Wang, J.; Hu, J.; Min, G.; Zhan, W.; Zomaya, A.Y.; Georgalas, N. Dependent task offloading for edge computing based on deep reinforcement learning. IEEE Trans. Comput. 2021, 71, 2449–2461.

- Li, C.; Xia, J.; Liu, F.; Li, D.; Fan, L.; Karagiannidis, G.K.; Nallanathan, A. Dynamic offloading for multiuser muti-CAP MEC networks: A deep reinforcement learning approach. IEEE Trans. Veh. Technol. 2021, 70, 2922–2927.

- Tang, M.; Wong, V.W. Deep reinforcement learning for task offloading in mobile edge computing systems. IEEE Trans. Mob. Comput. 2020, 21, 1985–1997.

- Chen, T.; Zhang, X.; You, M.; Zheng, G.; Lambotharan, S. A GNN-based supervised learning framework for resource allocation in wireless IoT networks. IEEE Internet Things J. 2021, 9, 1712–1724.

- He, S.; Xiong, S.; Ou, Y.; Zhang, J.; Wang, J.; Huang, Y.; Zhang, Y. An overview on the application of graph neural networks in wireless networks. IEEE Open J. Commun. Soc. 2021, 2, 2547–2565.

- Li, K.; Ni, W.; Yuan, X.; Noor, A.; Jamalipour, A. Deep-graph-based reinforcement learning for joint cruise control and task offloading for aerial edge internet of things (edgeiot). IEEE Internet Things J. 2022, 9, 21676–21686.

- Sun, Z.; Mo, Y.; Yu, C. Graph-reinforcement-learning-based task offloading for multiaccess edge computing. IEEE Internet Things J. 2021, 10, 3138–3150.

- Nayak, S.; Choi, K.; Ding, W.; Dolan, S.; Gopalakrishnan, K.; Balakrishnan, H. Scalable multi-agent reinforcement learning through intelligent information aggregation. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; pp. 25817–25833.

| Notation and Description |
|---|
| The set of MDs at time slot t |
| The set of BSs at time slot t |
| The time slot sequence |
| B: The bandwidth |
| The environmental impact factor |
| The data transmission rate between MD n and BS m at time slot t |
| The local execution time of the task of MD n at time slot t |
| The energy consumption for local execution of the task on MD n at time slot t |
| The execution time of task offloading from MD n to BS m at time slot t |
| The total energy consumption for task transmission for MD n at time slot t |
| The task generated by MD n at time slot t |
| The size of the task of MD n at time slot t |
| The CPU cycle count of the task of MD n at time slot t |
| The computing frequency of MD n |
| The transmission power of MD n |
| The computing frequency of BS m |
| The load factor of BS m at time slot t |
| The number of samples |
| The discount factor |
| The learning rate |

| Parameter | Value |
|---|---|
| Bandwidth B | 4 MHz |
| Length of time slot sequence T | 80 |
| Environment influence coefficient | [0.5, 1] |
| Service range of base station m | [0.5, 4] km |
| Task size | [800, 2000] kbytes |
| Task CPU cycles | [1000, 2500] Mcycles |
| Computational capability of device n | [0.5, 1.5] GHz |
| Computational capability of base station m | [4, 11] GHz |

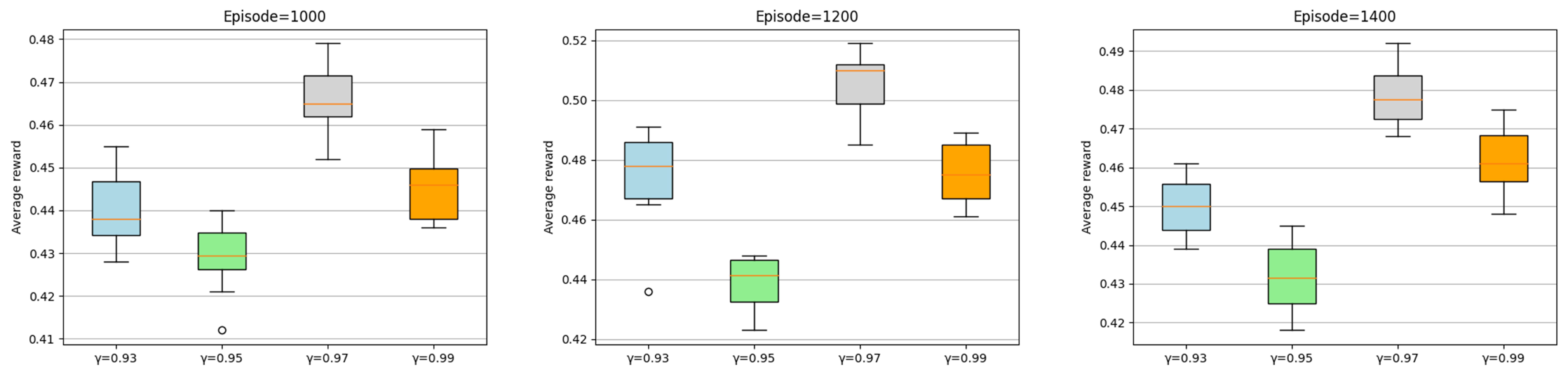

| Parameter | Value |
|---|---|
| Training episodes | 1000, 1200, 1400 |
| Batch size | 100 |
| Number of tuples in the experience pool | 1500 |
| Discount factor | 0.93, 0.95, 0.97, 0.99 |
| Learning rate | 0.001 |
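To make the parameter ranges concrete, the sketch below collects the listed values in a small configuration and draws one simulated task. Several things here are assumptions and not stated in the tables: values are drawn uniformly from each range, the defaults pick one of the listed episode counts and discount factors arbitrarily, and computation time is modeled as CPU cycles divided by CPU frequency. All class and function names are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Hyperparameters:
    """Training settings; defaults pick one of the listed candidate values."""
    episodes: int = 1000           # listed options: 1000, 1200, 1400
    batch_size: int = 100
    replay_capacity: int = 1500    # tuples in the experience pool
    discount: float = 0.99         # listed options: 0.93, 0.95, 0.97, 0.99
    learning_rate: float = 0.001


def sample_environment(rng):
    """Draw one task and one MD/BS pair (uniform sampling is an assumption)."""
    return {
        "task_size_kbytes": rng.uniform(800, 2000),
        "task_mcycles": rng.uniform(1000, 2500),
        "md_ghz": rng.uniform(0.5, 1.5),
        "bs_ghz": rng.uniform(4, 11),
        "bs_range_km": rng.uniform(0.5, 4),
        "env_coefficient": rng.uniform(0.5, 1),
    }


def compute_time_s(mcycles, freq_ghz):
    """Assumed model: execution time = CPU cycles / CPU frequency."""
    return (mcycles * 1e6) / (freq_ghz * 1e9)


if __name__ == "__main__":
    env = sample_environment(random.Random(0))
    print(Hyperparameters())
    print("local compute time (s):", compute_time_s(env["task_mcycles"], env["md_ghz"]))
    print("edge compute time (s):", compute_time_s(env["task_mcycles"], env["bs_ghz"]))
```

Under this assumed model, a 1000 Mcycle task on a 1 GHz device takes 1 s, while the same task on a 4 GHz base station takes 0.25 s before any transmission delay is added.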


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, T.; Ouyang, X.; Sun, D.; Chen, Y.; Li, H. Offloading Strategy Based on Graph Neural Reinforcement Learning in Mobile Edge Computing. Electronics 2024, 13, 2387. https://doi.org/10.3390/electronics13122387
