Abstract

Vehicle terminals in the mobile internet of vehicles struggle to meet the requirements of computation-intensive and delay-sensitive tasks, and vehicle mobility causes dynamic changes in vehicle-to-vehicle (V2V) communication links, which degrades task offloading quality. To solve these problems, a new task offloading strategy based on cloud–fog collaborative computing is proposed. First, a V2V-assisted task forwarding mechanism is introduced under cloud–fog collaborative computing, and a forwarding vehicle predicting algorithm based on environmental information is designed. Then, considering the parallel computing relationship of tasks across computing nodes, a task offloading cost model is constructed with the goal of minimizing delay and energy consumption. Finally, a multi-strategy improved genetic algorithm (MSI-GA) is proposed to solve this task offloading optimization problem. It adopts a chaotic sequence to initialize the population, optimizes the adaptive operators by comprehensively considering several influencing factors, and introduces Gaussian perturbation to enhance its local optimization ability. Simulation experiments show that, compared with existing strategies, the proposed strategy achieves a lower task offloading cost for different numbers of tasks and fog nodes. Additionally, the V2V-assisted task forwarding mechanism reduces the forwarding load of fog nodes by having cooperative vehicles forward tasks.

1. Introduction

The 5G internet of vehicles has been widely adopted in automatic driving [1], automatic navigation, natural language processing and other fields, but on-board resources are insufficient to meet the requirements of computation-intensive and delay-sensitive tasks. A cloud server can assist vehicles in handling tasks. However, the long distance between vehicles and the cloud, together with the cloud's centralized management mode, results in long delays for tasks offloaded by vehicles. As a supplement to cloud computing, fog computing can effectively alleviate both the shortage of on-board resources and the large network delay [2]. Fog computing can also interact with cloud computing; that is, cloud–fog collaborative computing can process tasks with high computing requirements and store data for a long time [3].

Existing research on offloading strategies in fog computing environments is summarized as follows. Refs. [4,5] model the task offloading problem as a Markov decision process to meet the QoS requirements of Internet of Things users and adopt deep reinforcement learning (DRL) to obtain the offloading strategy, which improves resource utilization. Refs. [6,7,8] propose partial offloading methods based on evolutionary game theory to obtain lower-overhead offloading schemes. To satisfy the low-latency requirements of delay-sensitive applications, refs. [9,10] propose task offloading schemes based on an intelligent ant colony algorithm. These strategies perform well in general fog computing environments. However, they are difficult to apply directly to the internet of vehicles, where tasks are highly latency-sensitive.

In the internet of vehicles environment, considering energy consumption and system delay, vehicle computation offloading has been formulated as a constrained multi-objective optimization problem (CMOP), which is then solved by a proposed non-dominated sorting genetic strategy (NSGS) [11]. Similarly, refs. [12,13,14,15] formulate the offloading problem as a multi-objective optimization problem and employ various multi-objective evolutionary algorithms to find the optimal offloading strategy. Ref. [16] addresses task offloading and resource allocation in the internet of vehicles with evolutionary algorithms. Other studies adopt deep learning, reinforcement learning and related methods to solve the task offloading problem in this environment. Refs. [17,18] model the joint optimization of computation offloading and resource allocation as a nonlinear integer programming problem and combine it with DRL to obtain the best strategy. When fog resources are limited, parked vehicles can be used to minimize service delay, and a reinforcement learning algorithm combined with a heuristic algorithm has been designed to obtain a more efficient and satisfactory resource allocation scheme [19]. However, these works assume that vehicles are stationary and consider neither vehicle mobility nor the cross-regional transmission of tasks.

For task offloading in the internet of vehicles, existing research mainly adopts centralized resource allocation or virtual machine migration among fog nodes to ensure the integrity of task computing. Ref. [20] optimizes the task offloading problem with a genetic optimization algorithm (GOA) based on distributed deep reinforcement learning (DDRL). Given the dynamics, randomness and time variance of vehicular networks, an asynchronous deep reinforcement learning algorithm has been leveraged to solve task offloading and multi-resource management [21]. Refs. [22,23,24,25] also adopt DRL to solve the task offloading problem. Ref. [26] discusses the dynamic migration of mobile edge computing services in the internet of vehicles and proposes a Lyapunov-based method that keeps the virtual machines deployed on servers adapted to vehicle movement. Ref. [27] selects resources that match on-board tasks according to vehicle speed, location and the usage status of surrounding resources, so as to minimize the completion time of the task flow.

In these works, mobility refers only to the relative movement between a vehicle and a server, and the mobility of vehicle-to-vehicle (V2V) communication is not considered. This has three consequences. The resources around the vehicle cannot be fully utilized. The offloading position of a moving vehicle during task transmission is difficult to determine. The stability of task forwarding links cannot be guaranteed. Moreover, the parallel computing relationship among computing nodes is not considered.

To address these problems, this paper introduces a V2V-assisted task forwarding mechanism under cloud–fog collaborative computing, designs a forwarding vehicle predicting algorithm based on environmental information, and constructs a task offloading cost model. A multi-strategy improved genetic algorithm (MSI-GA) is then proposed to solve the resulting task offloading cost optimization problem and minimize system delay and energy consumption. The main contributions of this paper are as follows:

- (1)

- A forwarding vehicle predicting algorithm based on information about the vehicle's surrounding environment is proposed. First, vehicles around the source vehicle that are closer to the target fog node are taken as candidate vehicles. The algorithm then runs iteratively until no candidate vehicles remain or the predicted forwarding vehicle can reach the target fog node. In this way, it predicts the optimal forwarding vehicle that can transmit the complete task to the next fog node.

- (2)

- A task offloading cost optimization problem based on cloud–fog collaborative computing in the internet of vehicles environment is constructed. A V2V-assisted task forwarding mechanism is introduced, and the above forwarding vehicle predicting algorithm is adopted to obtain task forwarding links. Then, considering the parallel computing relationship among computing nodes, the system delay and energy consumption optimization problem is formulated.

- (3)

- A multi-strategy improved genetic algorithm (MSI-GA) is proposed to solve the above task offloading cost optimization problem. MSI-GA makes three improvements to the GA:
  - chaotic sequence initialization and the Metropolis criterion improve the initialization and selection operators, respectively;
  - the crossover and mutation probabilities adapt to individual fitness, evolutionary generation and population similarity;
  - a Gaussian perturbation operator improves the local optimization ability of the algorithm.

The rest of this paper is organized as follows. Section 2 constructs the system delay and energy consumption optimization model in the internet of vehicles. Section 3 describes the forwarding vehicle predicting algorithm based on environmental information and the multi-strategy improved genetic algorithm (MSI-GA). Section 4 verifies the effectiveness of the proposed task offloading strategy.

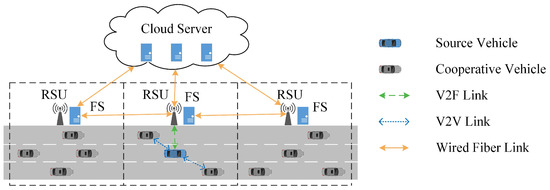

2. System Model

Consider a one-way linear highway along which multiple fog servers (FSs) equipped with roadside units (RSUs) are uniformly deployed, as shown in Figure 1. Vehicles move in a straight line at constant speed. The vehicle with task offloading requirements is defined as the source vehicle, and the remaining vehicles are defined as cooperative vehicles. The source vehicle can communicate directly with the fog servers through vehicle-to-fog server (V2F) links and with cooperative vehicles through V2V links. Adjacent fog servers are interconnected through wired fiber links, and so are the fog servers and the cloud server. In the system model, the source vehicle can:
- process a task locally;
- offload it to the current fog server or the cloud server;
- offload it to an adjacent fog server via the current fog server or cooperative vehicles.

Figure 1.

System model architecture.

Assume that the offloaded task can be divided into subtasks that are indivisible and mutually independent. The subtask set is denoted as ; each subtask is denoted as , where and represent the task data size and the task computation size, respectively. Let the vehicle set be , where the source vehicle is represented as and the cooperative vehicles are represented as . Each vehicle can be represented as , where is the vehicle speed, is the computing capacity of the vehicle, and is its location. The fog server set is represented as ; each fog server is denoted as , where is the computing capacity of the fog server and is its deployment position. The computing capacity of the cloud server is denoted as .

At a given time, a fog server can obtain the information of vehicles in its area through its built-in positioning service and the information of adjacent fog servers through wired fiber communication. The source vehicle sends its task information to the fog server. The fog server computes the optimal offloading strategy from the current environmental information and returns it to the source vehicle, which then offloads the corresponding tasks.

2.1. Communication Model

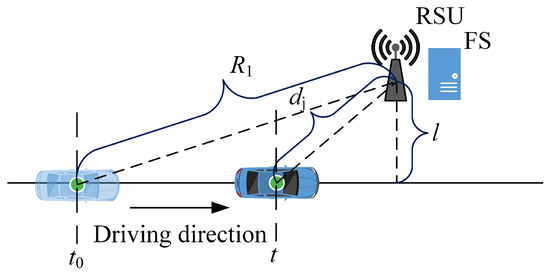

In the one-way linear road scenario of the internet of vehicles shown in Figure 1, four communication modes are adopted: V2F, V2V, F2F (fog server to fog server) and F2C (fog server to cloud server). All vehicles are moving, so the link status of V2V and V2F changes with vehicle movement. To describe how the communication link status between a moving vehicle and the fog server changes, the V2F communication model is constructed as shown in Figure 2.

Figure 2.

V2F communication model.

Let be the distance between the vehicle and the RSU, and let be the bandwidth of the communication link between them. The channel between the vehicle and the RSU is modeled as Rayleigh flat fading, with representing the path loss factor and representing the link fading factor. The transmission rate of V2F communication can then be expressed as

where is the transmission power of the vehicle, and is the noise power.
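The following is a minimal sketch of the V2F rate. It assumes that Equation (1) takes the common Shannon-capacity form r = B·log2(1 + P·h·d^(−α)/N0), where h is the link fading gain, α is the path loss exponent and N0 is the noise power. The function and parameter names are hypothetical, and the way the fading factor enters the paper's formula is an assumption.

```python
import math


def v2f_rate(bandwidth_hz: float, tx_power_w: float, distance_m: float,
             path_loss_exp: float, fading_gain: float, noise_w: float) -> float:
    """Assumed Shannon-form rate (bit/s) of a V2F link.

    fading_gain stands in for the link fading factor (|h|^2) and
    path_loss_exp for the path loss factor alpha; both roles are assumptions.
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    snr = tx_power_w * fading_gain * distance_m ** (-path_loss_exp) / noise_w
    return bandwidth_hz * math.log2(1.0 + snr)


# Example: 10 MHz bandwidth, 0.1 W, 100 m, alpha = 2, unit fading gain, 1e-9 W noise.
rate = v2f_rate(10e6, 0.1, 100.0, 2.0, 1.0, 1e-9)
```

With α = 2, doubling the distance cuts the SNR by a factor of four. The rate therefore varies strongly along the vehicle's path through the fog area.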

Suppose that the vehicle enters the edge of the RSU coverage range (the fog area) at time with speed . Ignoring the road width, let the vertical distance between the RSU and the road be and the RSU coverage radius be . The distance between the vehicle and the RSU at time can then be expressed as

Because the vehicle is moving, the transmission rate between the vehicle and the RSU varies over time. The average transmission rate within the coverage area is therefore generally taken as the actual transmission rate. A fog node is defined as the combination of a fog server and an RSU, and the average transmission rate of the vehicle within the coverage area of the fog node is

In Equation (3), is the transmission rate as a function of time, obtained by solving Equations (1) and (2) simultaneously, and is the total driving time of the vehicle within the coverage of the RSU:

The remaining communication time between the vehicle and the RSU in the coverage area of the fog node is

where the numerator is the distance from the vehicle to the edge of the current fog area.
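The geometry of Equations (2) to (5) can be sketched as follows. The sketch rests on these assumptions:
- The vehicle enters the coverage circle at a horizontal offset of √(R² − H²) before the RSU's foot point.
- The distance is then d(t) = √(H² + (√(R² − H²) − v(t − t0))²).
- The dwell time is T = 2√(R² − H²)/v.

The time average in Equation (3) is approximated with the trapezoidal rule. These closed forms are inferred from the prose description rather than copied from the paper, and all names are hypothetical.

```python
import math


def half_chord(radius_m: float, height_m: float) -> float:
    """Length of road covered on each side of the RSU's foot point."""
    return math.sqrt(radius_m ** 2 - height_m ** 2)


def distance_at(t: float, t_enter: float, speed: float,
                radius_m: float, height_m: float) -> float:
    """Vehicle-RSU distance at time t for a vehicle that entered the fog area at t_enter."""
    along_road = half_chord(radius_m, height_m) - speed * (t - t_enter)
    return math.hypot(height_m, along_road)


def dwell_time(speed: float, radius_m: float, height_m: float) -> float:
    """Total driving time inside the coverage area (assumed form of Eq. (4))."""
    return 2.0 * half_chord(radius_m, height_m) / speed


def average_rate(rate_of_distance, speed, radius_m, height_m, steps=1000):
    """Time average of rate(d(t)) over the dwell time, via the trapezoidal rule."""
    total = dwell_time(speed, radius_m, height_m)
    dt = total / steps
    samples = [rate_of_distance(distance_at(k * dt, 0.0, speed, radius_m, height_m))
               for k in range(steps + 1)]
    integral = dt * (sum(samples) - 0.5 * (samples[0] + samples[-1]))
    return integral / total


def remaining_time(vehicle_x, rsu_x, speed, radius_m, height_m):
    """Remaining V2F contact time (assumed form of Eq. (5))."""
    # Numerator: distance from the vehicle to the exit edge of the current fog area.
    return (rsu_x + half_chord(radius_m, height_m) - vehicle_x) / speed
```

Any rate-versus-distance function can be passed as `rate_of_distance`, for example a Shannon-form rate with fixed bandwidth, transmit power and noise power.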

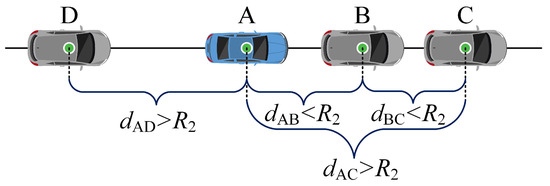

Dedicated short-range communication (DSRC) technology is adopted for communication between vehicles, as shown in Figure 3. Here, is the distance between vehicle A and vehicle B, and the distances between the other vehicles are defined similarly as , . Within the maximum communication distance between vehicles, they can communicate directly with each other. When the transmission distance exceeds and forwarding vehicles are nearby, the task can be transmitted over multiple hops; otherwise, it cannot be transmitted. In Figure 3, for example, when vehicle A transmits data to vehicle C, the data can first be sent to vehicle B, which then relays them to vehicle C.

Figure 3.

V2V communication model.

If the communication bandwidth between vehicles is , the transmission rate of communication between vehicles can be expressed as

As vehicles drive, the status of V2V links changes constantly. A vehicle therefore needs to determine how long another vehicle will remain within its communication range, to ensure reliable task transmission. Take vehicle A and vehicle B in Figure 3 as an example. If vehicle A is represented as and vehicle B as , the following three speed conditions are considered:

If , the distance between vehicle A and vehicle B stays the same, enabling them to communicate continuously.

If , the communication time between vehicle A and vehicle B is

If , the communication time between vehicle A and vehicle B is
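The three speed cases can be folded into a single signed-gap computation. This sketch assumes the following:
- positions are measured along the one-way road;
- both vehicles keep constant speeds;
- the link lasts while their separation stays within the DSRC range.

With B ahead of A by a gap g, the sketch returns (d_max − g)/(v_B − v_A) when B is faster and (d_max + g)/(v_A − v_B) when A is faster. The function name is hypothetical.

```python
import math


def v2v_link_duration(x_a: float, v_a: float, x_b: float, v_b: float, d_max: float) -> float:
    """Remaining time for which vehicles A and B stay within DSRC range d_max.

    Returns math.inf when the speeds are equal (the gap never changes)
    and 0.0 when the vehicles are already out of range.
    """
    gap = x_b - x_a                 # signed gap, positive if B is ahead of A
    if abs(gap) > d_max:
        return 0.0
    rel_speed = v_b - v_a           # rate of change of the signed gap
    if rel_speed == 0:
        return math.inf
    if rel_speed > 0:               # gap grows until it reaches +d_max
        return (d_max - gap) / rel_speed
    return (d_max + gap) / (-rel_speed)   # gap shrinks through 0 down to -d_max
```

For instance, with B 100 m ahead, a 300 m range and B driving 5 m/s faster, the link lasts (300 − 100)/5 = 40 s. If A is the faster one, it lasts (300 + 100)/5 = 80 s, because A first closes the gap and then pulls away.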

Both F2F and F2C communication use wired fiber links. Adjacent fog servers can communicate directly with each other, and cross-regional tasks can be relayed by the fog servers. The communication bandwidth between fog servers is , and that between a fog server and the cloud server is . The transmission rates of F2F and F2C communication can then be expressed as

where is the transmission power of the fog server.

2.2. Delay Model

When a task is offloaded to the source vehicle, delay arises only from task computation. This computation delay depends only on the CPU speed of the source vehicle and is expressed as .

When a task is offloaded to a fog server, it can go to the local fog server or to an adjacent fog server. A cross-region task is forwarded either by fog nodes or by V2V-assisted forwarding, as determined by the forwarding vehicle predicting algorithm. When the algorithm determines that cooperative vehicles can forward the task, the task is first forwarded by cooperative vehicles. If no nearby cooperative vehicle can forward it, it is forwarded by fog nodes. There are therefore three kinds of transmission sub-links between the source vehicle and the target fog server: the link between a vehicle and a fog server, the link between vehicles, and the link between fog servers. The final transmission link of the task is composed of these three kinds of sub-links, whose transmission delays are as follows:

- (1)

- The transmission link between a vehicle and a fog server: when a task is forwarded from a vehicle to a fog node, the link's transmission rate is given by Equation (3), and the transmission delay of the V2F link is .

- (2)

- The transmission link between vehicles: when a task is forwarded from a vehicle to a nearby vehicle, the link's transmission rate is given by Equation (6), and the transmission delay of the V2V link is .

- (3)

- The transmission link between fog servers: when a task is forwarded from a fog node to an adjacent fog node, the link's transmission rate is given by Equation (9), and the transmission delay of the F2F link is .

Let denote the number of hops over which vehicles forward the task, and let denote the number of hops over which fog nodes forward the task. The total transmission delay of a task offloaded to a fog server is then

The computation delay of a task offloaded to the fog server is .

When a task is offloaded to the cloud server, it is transmitted first from the source vehicle to the local fog server and then from the local fog server to the cloud server. The transmission delay from the local fog node to the cloud server is , and the total transmission delay of a task offloaded to the cloud server is

The computation delay of the task when it is offloaded to the cloud server is .
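The delay components of this subsection can be collected in a short sketch. It makes two assumptions:
- A fog-bound task crosses one V2F hop plus the given numbers of V2V and F2F hops, each carrying the whole task at that link's rate.
- Computation delay is the task's CPU cycles divided by the node's computing capacity.

The hop composition is an assumption rather than the paper's equation. `Task` and the function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Task:
    data_bits: float   # task data size
    cycles: float      # task computation size (CPU cycles)


def local_delay(task: Task, f_vehicle: float) -> float:
    """Computation-only delay on the source vehicle."""
    return task.cycles / f_vehicle


def fog_delays(task: Task, r_v2f: float, r_v2v: float, r_f2f: float,
               v2v_hops: int, f2f_hops: int, f_fog: float):
    """(transmission, computation) delay of a task offloaded to a fog server."""
    transmit = (task.data_bits / r_v2f                    # one V2F hop
                + v2v_hops * task.data_bits / r_v2v        # V2V forwarding hops
                + f2f_hops * task.data_bits / r_f2f)       # F2F forwarding hops
    return transmit, task.cycles / f_fog


def cloud_delays(task: Task, r_v2f: float, r_f2c: float, f_cloud: float):
    """Source vehicle -> local fog server (V2F) -> cloud server (F2C)."""
    transmit = task.data_bits / r_v2f + task.data_bits / r_f2c
    return transmit, task.cycles / f_cloud
```

Returning transmission and computation delay separately, rather than their sum, matches the parallel computing model of Section 2.3. There, transmission advances a global clock while computation occupies the target node.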

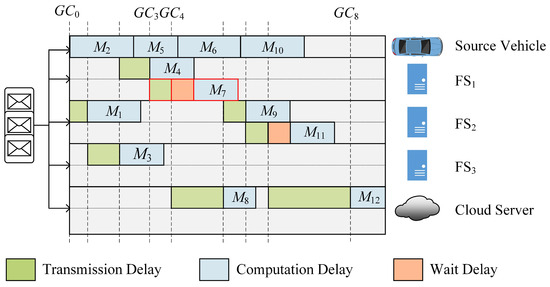

2.3. Parallel Computing Model

After the task offloading strategy is determined, the source vehicle begins to transmit tasks to the computing nodes. A task being transmitted occupies the full bandwidth of the current link. Once a task reaches its target computing node and that node is idle, the task is processed. It is assumed that each node can compute and forward tasks simultaneously, and that each computing node processes only one task at a time. Because different computing nodes compute in parallel, the model must check whether the target node is idle when a task is offloaded to it. The system delay is therefore not simply the sum of the individual delays.

Based on these assumptions, the parallel computing model can be established. A global clock records the start and end times of task forwarding, and each computing node records the completion time of the last task in its queue. With the global clock initialized to 0, its value during task forwarding can be expressed in terms of the transmission delay of each task:

where , and represent the sets of tasks offloaded to the source vehicle, the fog servers and the cloud server, respectively. When a task is offloaded to the source vehicle, no transmission delay is generated and the global clock remains unchanged. When a task is offloaded to a fog server or the cloud server, the transmission delay of the task is added to the global clock.

Once the task offloading strategy is obtained, the source vehicle processes its local tasks one by one. Its final completion time is therefore the sum of the computation delays of the tasks offloaded to it:

When a task is offloaded to a fog node or the cloud server, its start computation time must be determined. Because task transmission and task computation proceed in parallel, the start computation time of a task is the larger of two values: the global clock and the final completion time of the previous tasks at the target node. The start computation times on the fog node and the cloud server are denoted as and , respectively. The final completion time of each node is the start computation time of the current task plus the task's computation delay, which can be expressed as

here, represents the final completion time of a fog node, and represents the final completion time of the cloud server.

According to the parallel computing model, the system delay is the maximum final completion time among all computing nodes:
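The global-clock bookkeeping of the parallel computing model can be sketched as a single pass over the task queue. The sketch assumes the following:
- Transmissions are serialized in queue order, since each task occupies the full link bandwidth.
- Local tasks run back to back from time 0 with no transmission.
- Each remote node processes one task at a time, starting a task at the later of its arrival time and the node's last completion time.

The schedule format and the numbers in the example are hypothetical, not the values of Figure 4.

```python
def system_delay(schedule):
    """Simulate the parallel computing model.

    schedule: (node, transmit_delay, compute_delay) tuples in queue order,
    where node 0 is the source vehicle and any other key is a fog/cloud node.
    Returns (system delay, final completion time of every node used).
    """
    clock = 0.0              # global clock of the serial forwarding process
    finish = {0: 0.0}        # last completion time recorded on each node
    for node, transmit, compute in schedule:
        if node == 0:
            finish[0] += compute                    # local: no transmission, run back to back
            continue
        clock += transmit                           # sent after the previous transmission ends
        start = max(clock, finish.get(node, 0.0))   # wait until the target node is idle
        finish[node] = start + compute
    return max(finish.values()), finish


# Hypothetical queue: one local task, two tasks for node 1, one task for node 2.
delay, finish = system_delay([(0, 0.0, 2.0), (1, 1.0, 3.0), (1, 1.0, 1.0), (2, 2.0, 1.0)])
print(delay, finish)  # 5.0 {0: 2.0, 1: 5.0, 2: 5.0}
```

The third task reaches node 1 at t = 2 but must wait until t = 4, when the node becomes idle. This waiting is why the system delay is not a simple sum of the individual delays.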

To better explain the parallel computing model, consider the example shown in Figure 4. Assume that 3 fog servers can provide the offloading service and that the pending task of the source vehicle is divided into 12 subtasks. Suppose that the task offloading strategy generated by the fog server is ; the system delay can then be obtained by decoding the offloading position of each task (the decision coding process is detailed in Section 3.2).

Take the offloading process of as an example:
- First, and , which precede in the queue, are offloaded to the source vehicle. Therefore, is transmitted directly after the transmission of is complete, and its start transmission time is .
- Then, at time , is transmitted to , whose final completion time is , and . Therefore, must wait for the ongoing computation to complete, and its start computation time is .
- Finally, the final completion time of is .

Figure 4.

Example of parallel computing.

Using the same calculation method, the final completion times of the source vehicle, , and the cloud server are , , and , respectively. The system delay corresponding to this decision is therefore the maximum final completion time among all computing nodes, which can be expressed as .

2.4. Energy Consumption Model

When a task is offloaded to the source vehicle, the energy consumed by computation depends only on the computing power of the on-board unit. The energy consumption of such a task is , where is the computing power of the source vehicle. The total energy consumption of the tasks offloaded to the source vehicle is

When a task is offloaded to a fog server, the energy consumed during transmission is determined by the transmission links. The energy consumed during transmission and computation depends only on the transmission power and the computing power, respectively. The energy consumption of the tasks offloaded to the fog server is

where is the computing power of fog server , and is the total completion delay of all tasks offloaded to fog server . The total energy consumption of the tasks offloaded to the fog servers is then

When a task is offloaded to the cloud server, its energy consumption can be expressed as

Then, the total energy consumption of the tasks offloaded to the cloud server is

The total energy consumption of all computing nodes can be expressed as
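The following sketches the energy bookkeeping. It assumes that local energy is computing power multiplied by computation time. A fog or cloud server's energy is assumed to be its transmission energy (power × time for each hop) plus its computing power multiplied by a busy time. The text names this busy time the total completion delay; whether it should instead be the total computation time is left to the caller. The function names are hypothetical.

```python
def local_energy(cycles, p_vehicle, f_vehicle):
    """Energy of tasks executed on the source vehicle: power x computation time."""
    return sum(p_vehicle * c / f_vehicle for c in cycles)


def offload_energy(transmissions, p_compute, busy_time):
    """Energy attributed to one fog (or cloud) server.

    transmissions: (tx_power_w, tx_time_s) pairs, one per hop of every task sent there.
    busy_time: time charged at the server's computing power (interpretation is the caller's).
    """
    tx_energy = sum(power * time for power, time in transmissions)
    return tx_energy + p_compute * busy_time


def total_energy(local, fog_energies, cloud):
    """Sum over all computing nodes."""
    return local + sum(fog_energies) + cloud
```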

2.5. System Cost Model

To balance the system delay and energy consumption generated during task offloading, the task offloading problem addressed in this paper is transformed into a system cost optimization problem. With the aim of minimizing system delay and energy consumption, the system cost optimization model is established as

Equation (24) is the optimization objective, where and represent the system delay and energy consumption of the comparison scheme, respectively. In the comparison scheme, all tasks are offloaded to the source vehicle. The symbols and represent the weight coefficients of system delay and energy consumption, respectively. The weights are optional parameters reserved for users:
- when the source vehicle needs to offload tasks with strict delay requirements, should be increased appropriately;
- when the source vehicle needs to save energy, should be increased.

In general, both and are set to 0.5 to obtain a task offloading strategy that balances system delay and energy consumption. Equation (25) indicates that all tasks must be offloaded and that each subtask can be offloaded to only one location. Equation (26) represents the constraints on the weight coefficients of system delay and energy consumption.
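The text describes Equation (24) as a weighted combination of delay and energy, each normalized by the all-local comparison scheme. The following sketch assumes it is the weighted sum λ1·T/T_local + λ2·E/E_local and checks the weight constraint of Equation (26) explicitly. The function name is hypothetical.

```python
def system_cost(delay, energy, delay_local, energy_local, w_delay=0.5, w_energy=0.5):
    """Normalized weighted cost, assuming Eq. (24) is w_delay*T/T_local + w_energy*E/E_local."""
    # Eq. (26): non-negative weights that sum to 1.
    if w_delay < 0 or w_energy < 0 or abs(w_delay + w_energy - 1.0) > 1e-9:
        raise ValueError("weights must be non-negative and sum to 1")
    return w_delay * delay / delay_local + w_energy * energy / energy_local
```

Under this normalization the all-local scheme has cost exactly 1. Any strategy with a cost below 1 therefore improves on executing everything on the source vehicle.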

3. Task Offloading Scheme

After the V2V-assisted task forwarding mechanism is introduced, vehicles can directly communicate with each other, but the links between vehicles vary as vehicles move. To ensure the reliability of task transmission, a new forwarding vehicle predicting algorithm based on environmental information is proposed; furthermore, a multi-strategy improved genetic algorithm is proposed, aiming to solve the optimization problem defined in Equation (24) and obtain the optimal task offloading scheme.

3.1. Forwarding Vehicle Predicting Algorithm Based on Environmental Information

Since vehicles are constantly moving, the communication links between vehicles, and between nodes, change constantly. This makes it difficult to guarantee reliable task forwarding between nodes. A vehicle capable of forwarding the task to the fog server is defined as a forwarding vehicle. When the vehicle speed, vehicle location and other environmental information are known, the final forwarding vehicle is determined by predicting the duration of the links between vehicles. The forwarding vehicle predicting algorithm based on environmental information is outlined in Algorithm 1.

Lines 6 to 11 of Algorithm 1 apply two conditions. The first screens out the vehicles to which the task can be transmitted. The second checks whether the next-hop vehicle can forward the task to the fog node before driving out of its fog area, which ensures that the task is not lost. Vehicles satisfying both conditions are the candidate vehicles.

Lines 19 to 20 assess whether the next-hop vehicle brings the task to an area closer to the target fog node. If so, that vehicle becomes the new forwarding vehicle; otherwise, the forwarding vehicle remains unchanged. Lines 23 to 25 iterate the algorithm until the forwarding vehicle can reach the target fog node or no candidate vehicles remain.

The computational complexity of the selection operation and the numerical computation in Algorithm 1 is ; therefore, the computational complexity of Algorithm 1 is , where is the number of cooperative vehicles and is the number of candidate vehicles.

| Algorithm 1 Forwarding vehicles predicting algorithm |
|---|
| Input: , , , , channel parameter set , , hop number , temporary hop number , target fog server , time ; |
| Output: , ; |
| (1) When running the algorithm for the first time, the source vehicle is initialized to forwarding vehicles, initialize , , ; |
| (2) Obtain the set of vehicles that can communicate around forwarding vehicles; |
| (3) if do |
| (4) for in do |
| (5) Calculate the existence time of the link between and , and the data transmission delay ; |
| (6) if do |
| (7) calculate the time of transmission fog as and time of driving out of the fog area as ; |
| (8) if do |
| (9) will be added to the collection of candidate vehicles; |
| (10) end if |
| (11) end if |
| (12) end for |
| (13) end if |
| (14) if do |
| (15) Select the nearest vehicle in the middle distance as ; |
| (16) if the distance of after forwarding is closer to the do |
| (17) ; |
| (18) Located in the fog area after receiving the task is , the current fog area is ; |
| (19) if do |
| (20) ; |
| (21) ; |
| (22) end if |
| (23) if do |
| (24) Recursively run the algorithm; |
| (25) end if |
| (26) end if |
| (27) end if |

3.2. Multi-Strategy Improved Genetic Algorithm

The task offloading problem is NP-hard [28], which makes it very difficult to obtain the optimal offloading strategy directly. Heuristic algorithms can obtain acceptable solutions in polynomial time, so adopting them in the internet of vehicles can guarantee real-time offloading decisions. The genetic algorithm is a typical heuristic algorithm. To mitigate issues such as premature convergence and slow convergence, a multi-strategy improved genetic algorithm is proposed to solve the task offloading problem by minimizing the system cost in Equation (24). The specific steps are as follows.

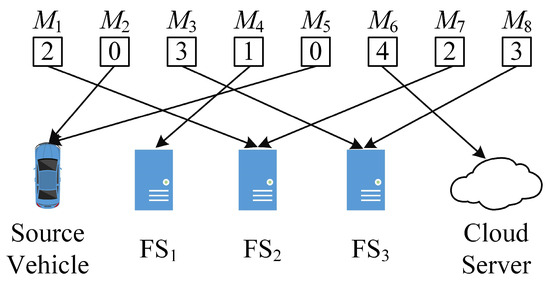

Step 1: Decision coding. Decisions are encoded as integers, and the value of each gene indicates the offloading position of the corresponding task:
- A gene value of 0 means that the task is offloaded to the source vehicle.
- A value from 1 up to the number of fog servers means that the task is offloaded to the fog server with that serial number.
- A value greater than the number of fog servers means that the task is offloaded to the cloud server.

In the coding example shown in Figure 5, there are 3 fog servers available as fog nodes, and the task is divided into 8 subtasks. When the chromosome is encoded as , the tasks and are offloaded to the source vehicle, the task is offloaded to the cloud server, and the remaining tasks are offloaded to the fog servers with the corresponding serial numbers.

Figure 5.

Example of decision coding.
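The decoding rule of Step 1 can be sketched as follows. The sketch assumes that fog servers are numbered from 1 and that any gene larger than the number of fog servers denotes the cloud. The position labels are hypothetical, and the chromosome in the example is illustrative, not the one in Figure 5.

```python
def decode(chromosome, num_fog):
    """Map each integer gene to an offloading position label."""
    places = []
    for gene in chromosome:
        if gene < 0:
            raise ValueError("genes must be non-negative")
        if gene == 0:
            places.append("vehicle")          # source vehicle
        elif gene <= num_fog:
            places.append(f"fog{gene}")       # fog server with that serial number
        else:
            places.append("cloud")            # anything above num_fog
    return places


print(decode([0, 1, 3, 4, 2, 0, 1, 2], 3))
# ['vehicle', 'fog1', 'fog3', 'cloud', 'fog2', 'vehicle', 'fog1', 'fog2']
```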

Step 2: Population initialization. The classical genetic algorithm generates the initial population with a pseudorandom number generator, which is blind and prone to premature convergence [29]. The multi-strategy improved genetic algorithm uses chaotic sequences instead. Because chaotic sequences are disordered, aperiodic and ergodic, they ensure the diversity of the initial population while providing better initial solutions. In this paper, the Tent map is adopted to generate the chaotic sequence for the initial population
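The following sketches chaotic initialization with a skewed Tent map: x_{k+1} = x_k/β for x_k < β and (1 − x_k)/(1 − β) otherwise. Three choices are assumptions rather than values taken from the text: β = 0.7, the seed, and the scaling of each chaotic value to an integer gene. The symmetric map with β = 0.5 is avoided because, in binary floating point, each of its steps is exact. Every iterate is then a dyadic rational whose binary expansion shortens by one bit per step, so the sequence collapses to 0 after a few dozen iterations.

```python
def tent_sequence(x0, n, beta=0.7):
    """n successive values of the skewed Tent map, starting from a seed x0 in (0, 1)."""
    if not 0.0 < x0 < 1.0:
        raise ValueError("x0 must lie in (0, 1)")
    xs, x = [], x0
    for _ in range(n):
        x = x / beta if x < beta else (1.0 - x) / (1.0 - beta)
        if x <= 0.0 or x >= 1.0:      # nudge off the fixed points 0 and 1
            x = 0.123456789
        xs.append(x)
    return xs


def chaotic_population(pop_size, num_tasks, num_fog, x0=0.37, beta=0.7):
    """Integer chromosomes with genes in 0..num_fog+1 (0 = source vehicle, num_fog+1 = cloud)."""
    levels = num_fog + 2
    seq = tent_sequence(x0, pop_size * num_tasks, beta)
    genes = [min(int(v * levels), levels - 1) for v in seq]   # scale (0, 1) to 0..levels-1
    return [genes[i * num_tasks:(i + 1) * num_tasks] for i in range(pop_size)]
```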

Step 3: Selection operator. In genetic algorithms, the fitness function measures how well an individual fits the environment. In this paper, individual fitness is determined by system delay and energy consumption, as shown in Equation (24). However, fitness values computed directly from this equation differ too little among outstanding individuals. This is especially true when population similarity is very high in the later stage of the algorithm, which makes it difficult to distinguish and select the optimal solution. Therefore, a new fitness function is defined as

where is a constant whose value is determined through extensive experiments.

The Metropolis criterion from the simulated annealing algorithm is adopted in the selection operator to screen the population. It retains better individuals and accepts suboptimal individuals with a certain probability. The retention probability of a suboptimal individual is

where denotes the fitness of the suboptimal individual and represents the annealing parameter. As the value increases, the retention probability of suboptimal individuals decreases, while that of the optimal individual increases. After the selection operation, an elite retention strategy ensures that the optimal individual of the population is retained across iterations, which enhances the convergence ability of the algorithm.
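The following sketches Metropolis-style selection with elite retention. The exact retention probability is an assumption: the sketch uses the standard Metropolis rule exp(−Δf/T) inside a pairwise tournament. Here Δf ≥ 0 is the fitness gap between winner and loser, and T is a temperature that the caller would typically lower each generation (for example, T_g = T_0·0.95^g). Lowering T reproduces the behaviour described above: suboptimal individuals are kept less often as annealing proceeds. Fitness is maximized, and the names are hypothetical.

```python
import math
import random


def metropolis_select(population, fitness, temperature, rng=random):
    """Pairwise Metropolis selection with elitism; fitness is maximized.

    For every slot, two random individuals compete. The fitter one is kept,
    except that the weaker one is accepted with probability exp(-(f_w - f_l) / T).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    n = len(population)
    scores = [fitness(ind) for ind in population]
    chosen = []
    for _ in range(n):
        i, j = rng.randrange(n), rng.randrange(n)
        win, lose = (i, j) if scores[i] >= scores[j] else (j, i)
        accept_weaker = rng.random() < math.exp(-(scores[win] - scores[lose]) / temperature)
        chosen.append(lose if accept_weaker else win)
    new_pop = [list(population[k]) for k in chosen]
    # Elite retention: if the best old individual was not selected, it replaces the worst pick.
    best = max(range(n), key=lambda k: scores[k])
    if best not in chosen:
        worst_slot = min(range(n), key=lambda s: scores[chosen[s]])
        new_pop[worst_slot] = list(population[best])
    return new_pop
```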

Step 4: Crossover operator. Considering the impact of evolutionary generation and individual fitness on the algorithm's performance, a new adaptive crossover operator is proposed in this paper. As the algorithm proceeds and the demand for global search diminishes, the crossover probability is gradually decreased. Additionally, individuals with higher fitness values are assigned a lower crossover probability, so that excellent individuals are retained [30]. The crossover probability is

where and , respectively, are the maximum value and minimum value of the crossover probability, is a constant, is the fitness of each individual, represents the average fitness of the current population, and represents the number of evolutionary generations.
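The adaptive crossover probability can be illustrated with a hypothetical formula that uses the same ingredients: the bounds pc_max and pc_min, a constant c, individual fitness f, average fitness f_avg and generation g. This is an assumed form, not the paper's equation: pc = pc_min + (pc_max − pc_min)·exp(−c·g)·r, where r = 1 for below-average individuals and r = f_avg/f otherwise. The single-point crossover that consumes the probability is also an assumption.

```python
import math
import random

def adaptive_crossover_prob(f, f_avg, g, pc_max=0.9, pc_min=0.4, c=0.01):
    """Hypothetical adaptive crossover probability, bounded by [pc_min, pc_max].

    exp(-c * g) lowers the probability as generation g grows, and the ratio
    f_avg / f lowers it further for individuals fitter than average.
    Fitness values are assumed to be positive.
    """
    decay = math.exp(-c * g)
    ratio = 1.0 if f <= 0 or f < f_avg else f_avg / f
    return pc_min + (pc_max - pc_min) * decay * ratio

def single_point_crossover(a, b, pc, rng=random):
    """With probability pc, swap the gene tails of parents a and b after a random cut."""
    if len(a) < 2 or rng.random() >= pc:
        return list(a), list(b)
    cut = rng.randint(1, len(a) - 1)
    return a[:cut] + b[cut:], b[:cut] + a[cut:]
```

In this assumed form, a below-average individual in generation 0 crosses over with probability pc_max. Both later generations and above-average fitness push the probability toward pc_min.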

Step 5: Mutation operator. As the genetic algorithm iterates, the similarity between individuals gradually increases, and with a fixed mutation probability the algorithm can find it difficult to escape from a local optimum. To address this issue, this paper adjusts the mutation probability adaptively according to population similarity. Population similarity is defined as , where and , respectively, represent the expectation and standard deviation of the population's fitness. The mutation probability can then be expressed as

where is a constant.
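The similarity-driven mutation probability can be sketched with assumed formulas. Here the similarity is taken as s = 1/(1 + σ/|μ|), where μ and σ are the mean and standard deviation of the population's fitness. The mutation probability is then pm_min + (pm_max − pm_min)·s^k with a hypothetical constant k. Both formulas, the bounds and the random-reassignment mutation are illustrative assumptions, not the paper's definitions.

```python
import random
import statistics

def population_similarity(fitness):
    """Hypothetical similarity in [0, 1]: 1 / (1 + sigma / |mu|), equal to 1 when all fitness values coincide."""
    mu = statistics.fmean(fitness)
    sigma = statistics.pstdev(fitness)
    if mu == 0:
        return 1.0 if sigma == 0 else 0.0
    return 1.0 / (1.0 + sigma / abs(mu))

def adaptive_mutation_prob(fitness, pm_min=0.01, pm_max=0.2, k=2.0):
    """Raise the mutation probability toward pm_max as the population becomes more similar."""
    s = population_similarity(fitness)
    return pm_min + (pm_max - pm_min) * s ** k

def mutate(individual, pm, n_nodes, rng=random):
    """Reassign each gene (a node index) to a random node with probability pm."""
    return [rng.randrange(n_nodes) if rng.random() < pm else gene for gene in individual]
```

A converged population with identical fitness values gets the largest mutation probability, which is the behaviour the step aims for: more exploration exactly when individuals have become alike.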

Step 6: Perturbation operator. A Gaussian perturbation operator is introduced to address premature convergence and entrapment in local optima, both of which affect the classical genetic algorithm. To limit the computational overhead, the perturbation operator is invoked only when the algorithm is trapped in a local optimum. If the best fitness value of the population remains unchanged over successive generations, the population is considered to have fallen into a local optimum. The Gaussian perturbation operator is then activated to help the algorithm escape. The Gaussian perturbation operator can be expressed as

where and , respectively, represent the optimal individual before and after the perturbation at generation , and represents a random variable following a Gaussian distribution with standard deviation .
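A sketch of the stagnation trigger and the Gaussian perturbation follows. It assumes an integer encoding in which each gene is a computing-node index, a fitness that is maximized, and a hypothetical `patience` parameter for the number of unchanged generations. Each gene of the best individual receives N(0, σ²) noise and is then rounded and clipped back into the valid index range. The rounding and clipping are assumptions needed for a discrete encoding.

```python
import random

def is_stagnant(best_history, patience):
    """True if the best (maximized) fitness has not improved during the last `patience` generations."""
    if len(best_history) <= patience:
        return False
    window = best_history[-(patience + 1):]
    return max(window[1:]) <= window[0]

def gaussian_perturb(best, sigma, n_nodes, rng=random):
    """Add N(0, sigma^2) noise to each gene, then round and clip it to a valid node index."""
    return [min(n_nodes - 1, max(0, round(g + rng.gauss(0.0, sigma)))) for g in best]
```

In a GA loop, one would call `is_stagnant` on the history of best fitness values each generation. When it returns True, the elite would be replaced with `gaussian_perturb(elite, sigma, n_nodes)`. Whether to keep the perturbed individual unconditionally or only when it improves fitness is a design choice the text leaves open.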

In the proposed MSI-GA, the computational complexity of population initialization is , where is the population size, and the computational complexity of fitness evaluation is . The selection, crossover, mutation and perturbation operators each have a computational complexity of . Therefore, the computational complexity of MSI-GA is , where is the number of iterations.

4. Performance Evaluations and Simulation Results

To evaluate the performance of the proposed algorithm, the simulation experiments are conducted in MATLAB R2021a. To keep the simulation general and realistic, the environment parameters are set as listed in Table 1 and the algorithm parameters as listed in Table 2. The proposed MSI-GA scheme is compared with the following four schemes:

Table 1.

Environment parameters.

Table 2.

Algorithm parameters.

- (1) Random offloading scheme: each task is randomly offloaded to the source vehicle, the local fog server, an adjacent fog server or the cloud server.

- (2) Partial collaborative scheme: MSI-GA determines the offloading strategy, but tasks can only be offloaded to the source vehicle, the local fog server or the cloud server.

- (3) GA scheme: the classical genetic algorithm determines the offloading strategy, and tasks can be offloaded to the source vehicle, the local fog server, adjacent fog servers or the cloud server.

- (4) CGA scheme: the chaotic genetic algorithm determines the offloading strategy, and tasks can be offloaded to the source vehicle, the local fog server, adjacent fog servers or the cloud server.

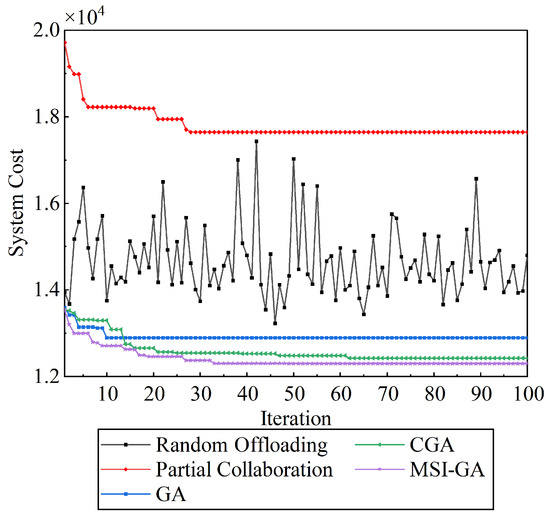

4.1. Performance Analysis with Balanced System Delay and Energy Consumption

Figure 6 shows the cost of each offloading scheme when system delay and energy consumption are both weighted 0.5, with 100 tasks and 5 fog nodes. The random offloading, GA, CGA and MSI-GA schemes all achieve a lower system cost than the partial collaborative scheme. This indicates that cloud–fog collaboration that also uses adjacent fog nodes reduces system cost and is better suited to internet of vehicles environments. The system costs of the GA, CGA and MSI-GA schemes decrease as the number of iterations grows and remain below that of the random offloading scheme. Among these three schemes, GA converges earliest but reaches the highest cost, and CGA converges last, at a lower cost than GA. By the generation at which GA and CGA converge, MSI-GA has already reached a lower cost, and its cost continues to decrease afterwards. At convergence, the proposed MSI-GA scheme achieves the lowest system cost of all five schemes.

Figure 6.

Comparison of costs for different schemes when system delay and energy consumption are balanced.
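The experiments weight system delay and energy consumption equally (0.5 each), and Section 4.2 considers each objective alone. Assuming the cost in Equation (24) is a weighted sum of the two, the toy model below shows why parallel execution across nodes matters. Tasks queued on one node add up, while different nodes work in parallel, so system delay is the largest per-node finishing time and energy is summed. The `Node` fields, the energy model and all numbers are hypothetical and do not reproduce the paper's system model.

```python
from dataclasses import dataclass

@dataclass
class Node:
    """Hypothetical computing node: source vehicle, local/adjacent fog server or cloud."""
    cpu_hz: float      # processing rate, CPU cycles per second
    tx_delay_s: float  # transmission delay to reach the node (0 for the source vehicle)
    power_w: float     # power attributed to a task while it occupies the node

def system_cost(assignment, cycles, nodes, w_delay=0.5, w_energy=0.5):
    """Weighted-sum cost of one offloading decision under a simple parallel model.

    assignment[i] is the index of the node that processes task i. Tasks on the
    same node run one after another and different nodes run in parallel, so the
    system delay is the largest per-node finishing time; energy is summed.
    """
    finish = [0.0] * len(nodes)
    energy = 0.0
    for task, j in enumerate(assignment):
        node = nodes[j]
        busy = node.tx_delay_s + cycles[task] / node.cpu_hz
        finish[j] += busy
        energy += node.power_w * busy
    delay = max(finish)
    return w_delay * delay + w_energy * energy, delay, energy
```

Consider a local node at 1 GHz and a fog node at 4 GHz with a 0.01 s transmission delay. Running two 10⁹-cycle tasks locally gives a system delay of 2 s, whereas sending one of them to the fog node cuts it to 1 s. Raising `tx_delay_s` mimics more distant fog nodes, and setting `w_delay=1.0, w_energy=0.0` (or the reverse) gives a single-objective cost.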

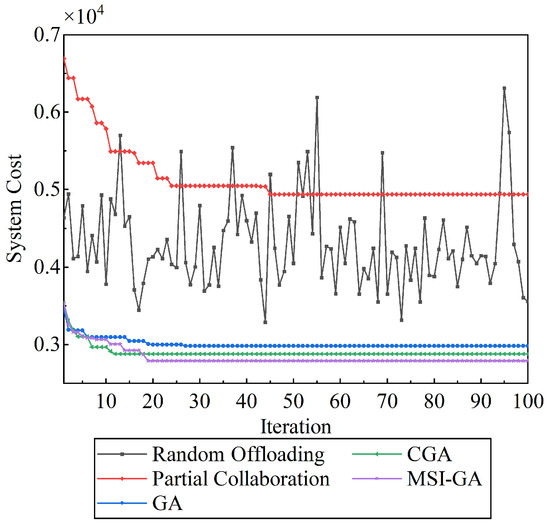

4.2. Performance Analysis for a Single Objective

Figures 7 and 8 show the system cost of each offloading scheme when only system delay or only energy consumption is considered, respectively, with 100 tasks and 5 fog nodes. Figure 7 shows that the random offloading scheme mostly yields a lower system cost than the converged partial collaborative scheme. The GA, CGA and MSI-GA schemes achieve lower system costs than both the random offloading and partial collaborative schemes, and all three converge early in the iteration process. CGA obtains a lower system cost than GA but a higher one than MSI-GA, so the proposed MSI-GA scheme provides the lowest system cost at convergence.

Figure 7.

Comparison of costs for different schemes when only system delay is considered.

Figure 8.

Comparison of costs for different schemes when only energy consumption is considered.

Figure 8 shows that the random offloading scheme mostly yields a lower system cost than the partial collaborative scheme, and that the GA, CGA and MSI-GA schemes all achieve lower system costs than both. Among GA, CGA and MSI-GA, GA converges earliest but has the highest system cost. CGA and MSI-GA converge at a similar generation, but MSI-GA reaches a lower system cost. Therefore, the proposed MSI-GA scheme outperforms the random offloading, partial collaborative, GA and CGA schemes when only system delay or only energy consumption is considered.

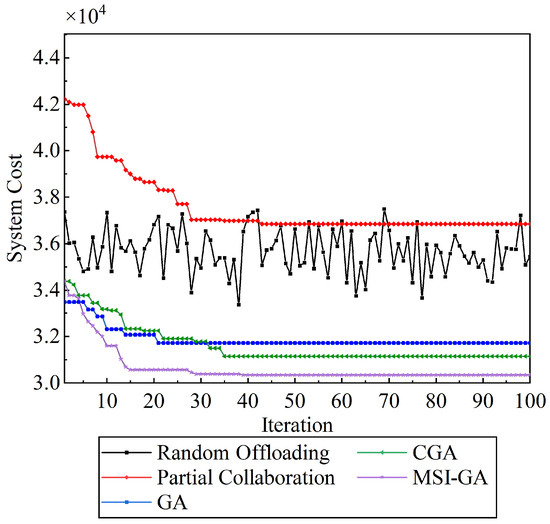

4.3. Influence of the Number of Tasks on Algorithm Performance

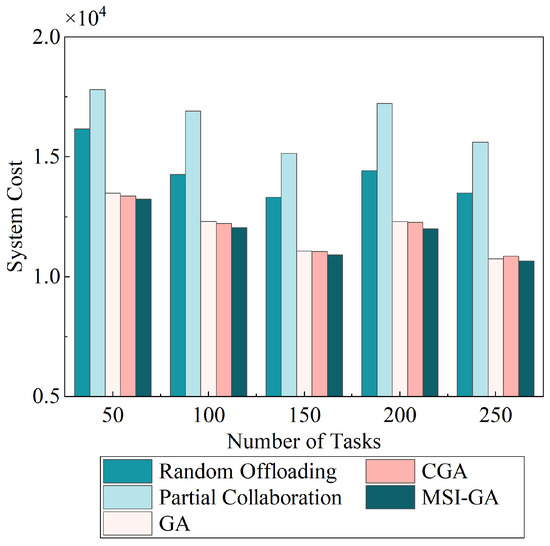

Figure 9 shows how the number of tasks affects each offloading scheme with five fog nodes and balanced weights for system delay and energy consumption. It compares the proposed MSI-GA scheme with the partial collaborative scheme, the random offloading scheme, GA and CGA. For all five schemes, the system cost rises steadily as the number of tasks increases, which is consistent with Equation (24). For example, when all tasks are offloaded to the source vehicle, system delay and energy consumption grow together as tasks are added. The partial collaborative scheme has the highest system cost for every number of tasks, while the proposed MSI-GA scheme has the lowest. With 200 tasks, MSI-GA reduces the system cost by approximately 20.3%, 43.67%, 2.75% and 2.34% compared with the random offloading scheme, the partial collaborative scheme, GA and CGA, respectively. The proposed MSI-GA scheme therefore achieves a lower system cost than the other schemes across different numbers of tasks.

Figure 9.

Comparison of system costs for different number of tasks.

4.4. Influence of the Number of Fog Nodes on Algorithm Performance

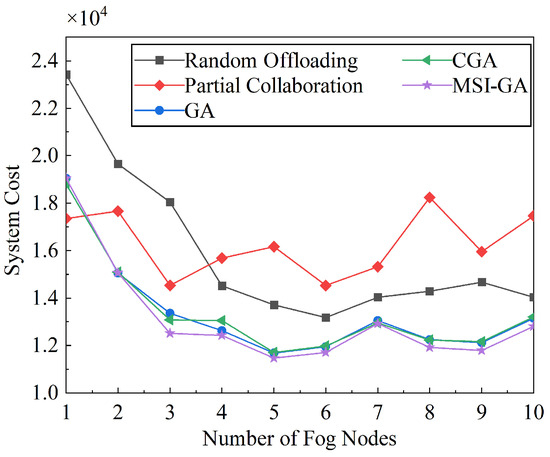

Figure 10 shows how the number of fog nodes affects each scheme with 100 tasks and balanced weights for system delay and energy consumption. To reduce randomness, the cost of the random offloading scheme is averaged over 100 randomly selected offloading decisions. As the number of fog nodes increases, the system cost of the partial collaborative scheme fluctuates within a limited range. This scheme uses only the local fog server among the fog nodes, so additional surrounding fog nodes do not affect its cost. The system costs of the random offloading, GA, CGA and MSI-GA schemes all decrease as the number of fog nodes increases, and the decrease levels off once there are five fog nodes. The decrease occurs because more fog nodes can take part in the computation, and processing tasks in parallel across nodes reduces computation delay and hence system cost. However, offloading tasks to more distant fog nodes adds transmission delay, so once the number of fog nodes reaches a certain level, the system cost no longer decreases. For every number of fog nodes, the proposed MSI-GA scheme obtains the lowest system cost, while the GA and CGA schemes perform almost identically.

Figure 10.

Comparison of system costs for different numbers of fog nodes.

4.5. Influence of the Number of Cooperative Vehicles on the Forwarding Load of Fog Nodes

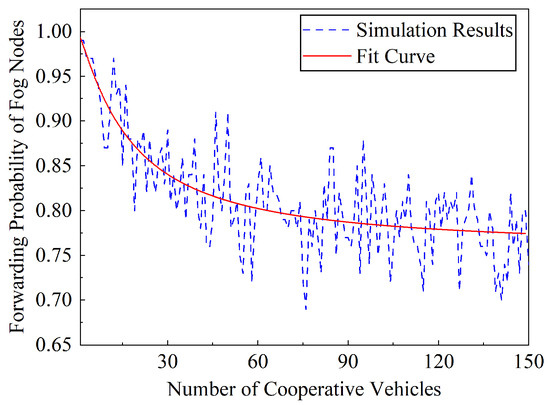

Figure 11 shows how the number of cooperative vehicles affects the probability that tasks are forwarded by fog nodes. The simulation results are fitted with a logistic regression curve. The fitted curve shows that the fog-node forwarding probability decreases as the number of cooperative vehicles increases, and the decrease gradually levels off. With V2V-assisted forwarding, the more vehicles there are around the source vehicle, the less likely a task is to be forwarded through fog nodes, so the forwarding load of the fog nodes decreases. Without V2V-assisted forwarding, more vehicles require fog-node services, which threatens service quality. With V2V-assisted forwarding, by contrast, any vehicle can act as a forwarding node. Vehicles then serve one another, which reduces the forwarding load on fog nodes and makes full use of the surrounding resources.

Figure 11.

Influence of the number of cooperative vehicles on the forwarding probability of fog nodes.
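The fitting procedure behind Figure 11 is described only as logistic regression. The following minimal sketch assumes that the input is the observed fraction of tasks forwarded by fog nodes at each number of cooperative vehicles. It fits p(x) = 1/(1 + exp(−(a + b·x))) by gradient descent on the mean cross-entropy. The function name, learning rate and iteration count are arbitrary choices for illustration.

```python
import math

def fit_logistic(xs, ps, lr=0.1, iters=20000):
    """Fit p(x) = 1 / (1 + exp(-(a + b * x))) to observed fractions ps.

    Minimizes the mean cross-entropy between the curve and ps with plain
    gradient descent, so ps may be empirical frequencies from repeated runs.
    """
    a = b = 0.0
    n = len(xs)
    for _ in range(iters):
        grad_a = grad_b = 0.0
        for x, p_obs in zip(xs, ps):
            p = 1.0 / (1.0 + math.exp(-(a + b * x)))
            grad_a += p - p_obs          # d(loss)/da for one point
            grad_b += (p - p_obs) * x    # d(loss)/db for one point
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b
```

A negative fitted slope b corresponds to a forwarding probability that falls as cooperative vehicles are added. The flattening tail of the sigmoid matches a decrease that gradually levels off.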

5. Conclusions

This paper studies the task offloading problem of a single vehicle on a one-way straight road in an internet of vehicles environment based on cloud–fog collaborative computing. The main contributions are as follows: (1) a V2V-assisted task forwarding mechanism is introduced, together with a forwarding vehicle prediction algorithm that helps vehicles obtain task forwarding links; (2) considering the parallel computing relationship among computing nodes, a task offloading cost model is constructed with the goal of minimizing system delay and energy consumption; (3) a multi-strategy improved genetic algorithm is proposed to solve the offloading optimization problem. The simulation results show that, owing to the parallel computing model, the proposed MSI-GA scheme obtains lower system costs in fewer iterations than existing schemes under different optimization objectives. It also performs better across different numbers of tasks and fog nodes. The MSI-GA scheme can therefore allocate tasks more efficiently and effectively reduce system delay and energy consumption. In addition, the V2V-assisted task forwarding mechanism makes use of cooperative vehicles around the source vehicle. As their number increases, the probability of forwarding through fog nodes falls, which reduces the forwarding load on fog nodes and enables vehicles to make full use of environmental resources. Studying the single-vehicle case is significant for providing more precise services to vehicles in scenarios such as autonomous driving.
In future work, we will study uncertain factors such as road congestion and computing-node availability and design efficient offloading strategies for multiple vehicles. These strategies will also be tested in real internet of vehicles environments based on cloud–fog collaborative computing.

Author Contributions

Conceptualization, writing—original draft, formal analysis, resources, C.L. and H.Z.; writing—review and editing, C.Z. and J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (62172457), the Open subject of Scientific research platform in Grain Information Processing Center (KFJJ2022011), and the Innovative Funds Plan of Henan University of Technology (2022ZKCJ13).

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Lin, S.J.; Li, Y.Y.; Han, Z.B.; Zhuang, B.; Ma, J.; Tianfield, H. Joint Incentive Mechanism Design and Energy-Efficient Resource Allocation for Federated Learning in UAV-Assisted Internet of Vehicles. Drones 2024, 8, 82.

- Cao, B.; Sun, Z.H.; Zhang, J.T.; Gu, Y. Resource Allocation in 5G IoV Architecture Based on SDN and Fog-Cloud Computing. IEEE Trans. Intell. Transp. Syst. 2021, 22, 3832–3840.

- Jamil, B.; Ijaz, H.; Shojafar, M.; Munir, K.; Buyya, R. Resource Allocation and Task Scheduling in Fog Computing and Internet of Everything Environments: A Taxonomy, Review, and Future Directions. ACM Comput. Surv. 2022, 54, 1–38.

- Jain, V.; Kumar, B. QoS-Aware Task Offloading in Fog Environment Using Multi-agent Deep Reinforcement Learning. J. Netw. Syst. Manag. 2023, 31, 7.

- Huang, H.; Ye, Q.; Zhou, Y.T. Deadline-Aware Task Offloading with Partially-Observable Deep Reinforcement Learning for Multi-Access Edge Computing. IEEE Trans. Netw. Sci. Eng. 2022, 9, 3870–3885.

- Khoobkar, M.H.; Fooladi, M.D.T.; Rezvani, M.H.; Sadeghi, M.M.G. Partial offloading with stable equilibrium in fog-cloud environments using replicator dynamics of evolutionary game theory. Clust. Comput.-J. Netw. Softw. Tools Appl. 2022, 25, 1393–1420.

- Lu, W.J.; Zhang, X.L. Computation Offloading for Partitionable Applications in Dense Networks: An Evolutionary Game Approach. IEEE Internet Things J. 2022, 9, 20985–20996.

- Chen, Y.; Zhao, J.; Wu, Y.; Huang, J.W.; Shen, X.M. QoE-Aware Decentralized Task Offloading and Resource Allocation for End-Edge-Cloud Systems: A Game-Theoretical Approach. IEEE Trans. Mob. Comput. 2024, 23, 769–784.

- Kishor, A.; Chakarbarty, C. Task Offloading in Fog Computing for Using Smart Ant Colony Optimization. Wirel. Pers. Commun. 2022, 127, 1683–1704.

- Zhu, S.F.; Cai, J.H.; Sun, E.L. Mobile edge computing offloading scheme based on improved multi-objective immune cloning algorithm. Wirel. Netw. 2023, 29, 1737–1750.

- Zhang, J.; Piao, M.J.; Zhang, D.G.; Zhang, T.; Dong, W.M. An approach of multi-objective computing task offloading scheduling based NSGS for IOV in 5G. Clust. Comput.-J. Netw. Softw. Tools Appl. 2022, 25, 4203–4219.

- Zhu, S.F.; Sun, E.L.; Zhang, Q.H.; Cai, J.H. Computing Offloading Decision Based on Multi-objective Immune Algorithm in Mobile Edge Computing Scenario. Wirel. Pers. Commun. 2023, 130, 1025–1043.

- Long, S.Q.; Zhang, Y.; Deng, Q.Y.; Pei, T.R.; Ouyang, J.Z.; Xia, Z.H. An Efficient Task Offloading Approach Based on Multi-Objective Evolutionary Algorithm in Cloud-Edge Collaborative Environment. IEEE Trans. Netw. Sci. Eng. 2023, 10, 645–657.

- Zhang, Z.R.; Wang, N.F.; Wu, H.M.; Tang, C.G.; Li, R.D. MR-DRO: A Fast and Efficient Task Offloading Algorithm in Heterogeneous Edge/Cloud Computing Environments. IEEE Internet Things J. 2023, 10, 3165–3178.

- Xu, L.; Liu, Y.; Fan, B.; Xu, X.; Mei, Y.; Feng, W. An Improved Gravitational Search Algorithm for Task Offloading in a Mobile Edge Computing Network with Task Priority. Electronics 2024, 13, 540.

- Sun, Y.L.; Wu, Z.Y.; Meng, K.; Zheng, Y.H. Vehicular Task Offloading and Job Scheduling Method Based on Cloud-Edge Computing. IEEE Trans. Intell. Transp. Syst. 2023, 24, 14651–14662.

- Zhou, H.; Jiang, K.; Liu, X.X.; Li, X.H.; Leung, V.C.M. Deep Reinforcement Learning for Energy-Efficient Computation Offloading in Mobile-Edge Computing. IEEE Internet Things J. 2022, 9, 1517–1530.

- Tang, M.; Wong, V.W.S. Deep Reinforcement Learning for Task Offloading in Mobile Edge Computing Systems. IEEE Trans. Mob. Comput. 2022, 21, 1985–1997.

- Lee, S.S.; Lee, S. Resource Allocation for Vehicular Fog Computing Using Reinforcement Learning Combined with Heuristic Information. IEEE Internet Things J. 2020, 7, 10450–10464.

- Jin, H.L.; Kim, Y.G.; Jin, Z.R.; Fan, C.Y.; Xu, Y.L. Joint Task Offloading Based on Distributed Deep Reinforcement Learning-Based Genetic Optimization Algorithm for Internet of Vehicles. J. Grid Comput. 2024, 22, 34.

- Liu, L.; Feng, J.; Mu, X.; Pei, Q.; Lan, D.; Xiao, M. Asynchronous deep reinforcement learning for collaborative task computing and on-demand resource allocation in vehicular edge computing. IEEE Trans. Intell. Transp. Syst. 2023, 24, 15513–15526.

- Luo, Q.Y.; Li, C.L.; Luan, T.H.; Shi, W.S. Minimizing the Delay and Cost of Computation Offloading for Vehicular Edge Computing. IEEE Trans. Serv. Comput. 2022, 15, 2897–2909.

- Huang, M.X.; Zhai, Q.H.; Chen, Y.J.; Feng, S.L.; Shu, F. Multi-Objective Whale Optimization Algorithm for Computation Offloading Optimization in Mobile Edge Computing. Sensors 2021, 21, 2628.

- Ren, Q.; Liu, K.; Zhang, L.M. Multi-objective optimization for task offloading based on network calculus in fog environments. Digit. Commun. Netw. 2022, 8, 825–833.

- Duan, W.; Li, X.; Huang, Y.; Cao, H.; Zhang, X. Multi-Agent-Deep-Reinforcement-Learning-Enabled Offloading Scheme for Energy Minimization in Vehicle-to-Everything Communication Systems. Electronics 2024, 13, 663.

- Labriji, I.; Meneghello, F.; Cecchinato, D.; Sesia, S.; Perraud, E.; Strinati, E.C.; Rossi, M. Mobility Aware and Dynamic Migration of MEC Services for the Internet of Vehicles. IEEE Trans. Netw. Serv. Manag. 2021, 18, 570–584.

- Shu, W.N.; Li, Y. Joint offloading strategy based on quantum particle swarm optimization for MEC-enabled vehicular networks. Digit. Commun. Netw. 2023, 9, 56–66.

- Shen, Q.Q.; Hu, B.J.; Xia, E.J. Dependency-Aware Task Offloading and Service Caching in Vehicular Edge Computing. IEEE Trans. Veh. Technol. 2022, 71, 13182–13197.

- Zelinka, I.; Diep, Q.B.; Snasel, V.; Das, S.; Innocenti, G.; Tesi, A.; Schoen, F.; Kuznetsov, N.V. Impact of chaotic dynamics on the performance of metaheuristic optimization algorithms: An experimental analysis. Inf. Sci. 2022, 587, 692–719.

- Movahedi, Z.; Defude, B.; Hosseininia, A.M. An efficient population-based multi-objective task scheduling approach in fog computing systems. J. Cloud Comput.-Adv. Syst. Appl. 2021, 10, 53.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).