An FPGA-Accelerated CNN with Parallelized Sum Pooling for Onboard Realtime Routing in Dynamic Low-Orbit Satellite Networks

Abstract

1. Introduction


- A practical co-design method for heterogeneous processors was proposed to parallelize and accelerate the Dueling-DQN-based routing algorithm, enabling real-time onboard routing in dynamic LEO satellite networks.


- The proposed method significantly accelerated the sum pooling process of the Dueling DQN on an FPGA, and it can also be applied to other applications that use sum pooling.


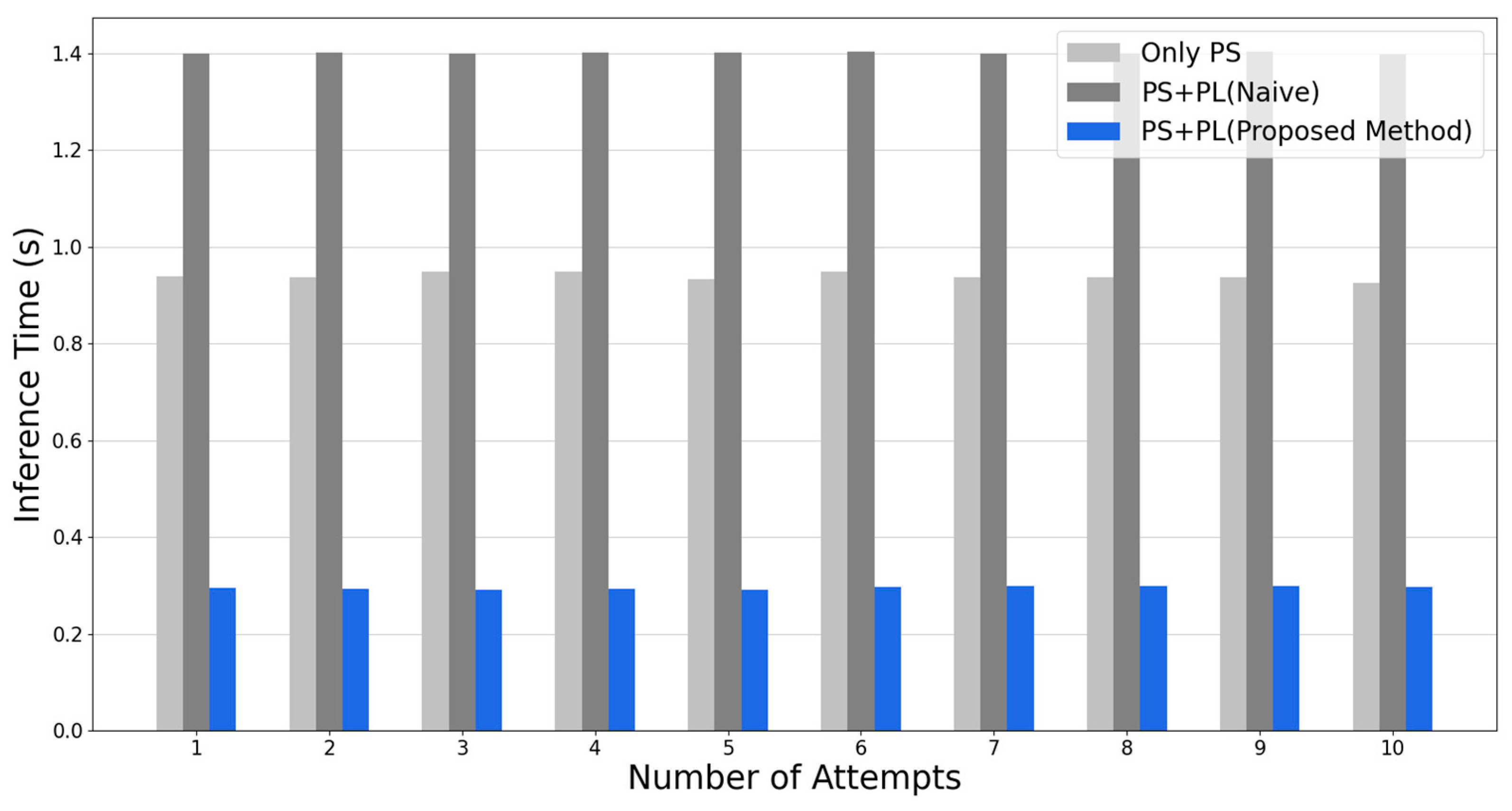

- The proposed method was tested on a real onboard computer (OBC) built on heterogeneous processors; it significantly reduced computation time while producing the same routing results as the conventional method.

2. Problem Description

2.1. Routing Problem in the LEO Network

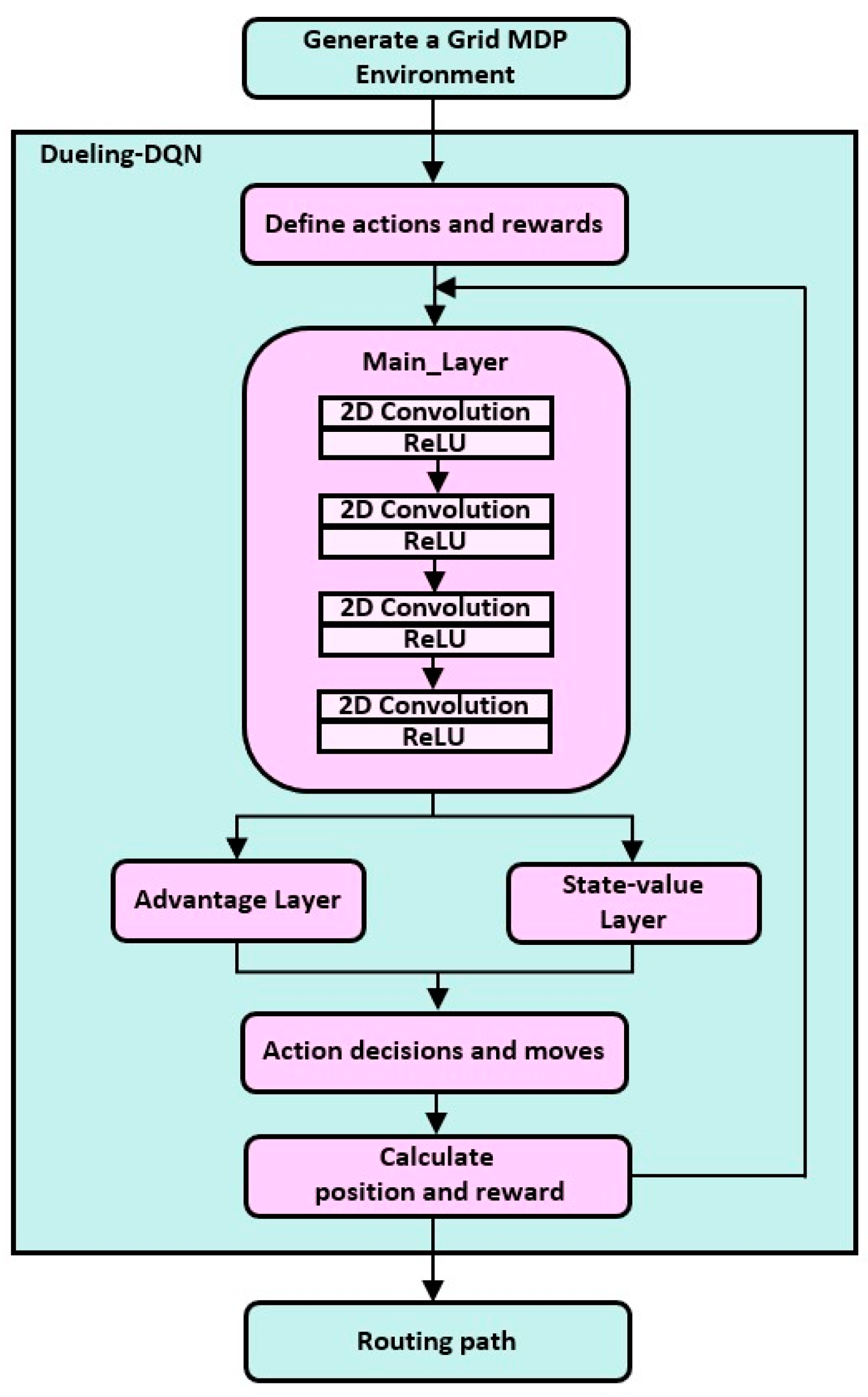

2.2. Dueling DQN-Based Reinforcement Learning Model
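As background for this model, the dueling network architecture of Wang et al. [25] splits the Q-network head into a state-value stream V(s) and an advantage stream A(s, a), and recombines them as Q(s, a) = V(s) + A(s, a) − (1/|A|) Σ_a′ A(s, a′), i.e., the advantages are centered on their mean before being added to the state value. The C sketch below shows only this aggregation step followed by greedy action selection. The function names `dueling_q` and `greedy_action`, and the reading of each action index as a candidate next-hop satellite, are illustrative assumptions rather than details taken from the paper.

```c
#include <stddef.h>

/* Dueling aggregation, mean-subtracted form:
 *   q[a] = v + adv[a] - mean(adv)
 * v   : output of the state-value stream
 * adv : outputs of the advantage stream, one per action */
void dueling_q(float v, const float *adv, size_t n_actions, float *q)
{
    float mean = 0.0f;
    for (size_t a = 0; a < n_actions; ++a)
        mean += adv[a];
    mean /= (float)n_actions;

    for (size_t a = 0; a < n_actions; ++a)
        q[a] = v + adv[a] - mean;
}

/* Greedy policy: return the index of the largest Q-value.
 * Hypothetical mapping: each index is one candidate next-hop satellite. */
size_t greedy_action(const float *q, size_t n_actions)
{
    size_t best = 0;
    for (size_t a = 1; a < n_actions; ++a)
        if (q[a] > q[best])
            best = a;
    return best;
}
```

Subtracting the mean does not change which action is greedy; it only makes the split between V and A identifiable during training.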

2.3. Problem of Onboard Real-Time Inference

3. Proposed Method

3.1. Overall Structure of the Dueling-DQN-Based Routing Algorithm

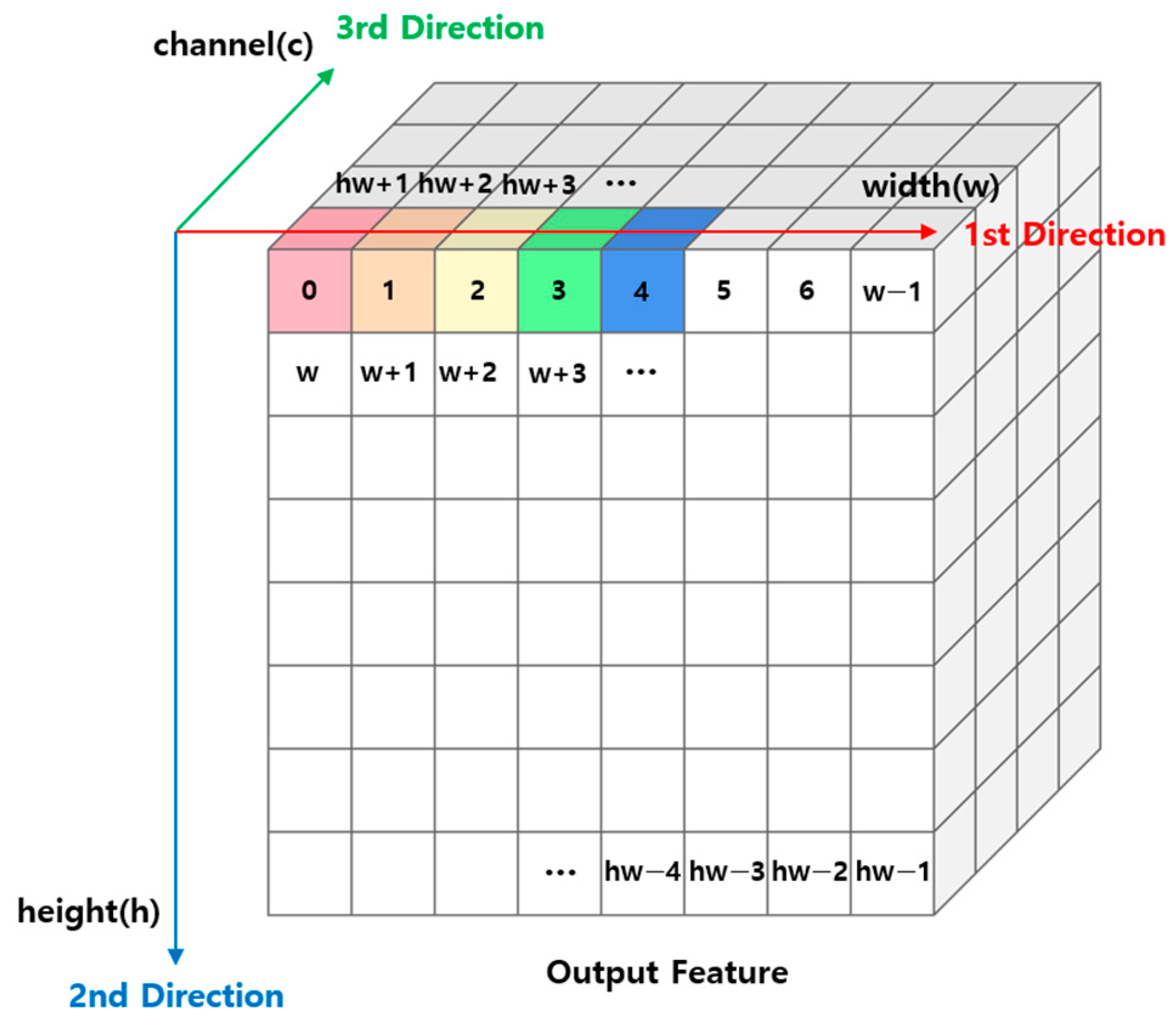

3.2. Dependency Analysis

| Algorithm 1 | The Conventional Sum Pooling Method |
|---|---|
| Input | conv_in[], conv_weight[], conv_bias[]; input height, width, channel; kernel size; output height, width, channel |
| Output | conv_out[] |
| 1. | for i = 0~output_channel |
| 2. | for j = 0~output_width |
| 3. | for k = 0~output_height |
| 4. | float result = 0; |
| 5. | for x = 0~input_channel |
| 6. | for y = 0~kernel_size |
| 7. | for z = 0~kernel_size |
| 8. | Calculate in_idx |
| 9. | Calculate weight_idx |
| 10. | result += conv_in[in_idx] × conv_weight[weight_idx] |
| 11. | end |
| 12. | end |
| 13. | end |
| 14. | Calculate out_idx |
| 15. | conv_out[out_idx] = result + conv_bias[i] |
| 16. | if (conv_out[out_idx] <= 0) |
| 17. | conv_out[out_idx] = 0; //ReLU |
| 18. | end |
| 19. | end |
| 20. | end |
| 21. | end |
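Algorithm 1 can be sketched in C as follows. The paper does not state how in_idx, weight_idx, and out_idx are computed, so the memory layouts (channel-major conv_in and conv_out, weights ordered as [output channel][input channel][kernel row][kernel column]), stride 1 with no padding, y as the kernel row and z as the kernel column, and the function name `conv_naive` are all assumptions. The output height and width are derived from the input size here rather than passed in. Note that the single scalar `result` is read and written on every iteration of the x/y/z loops; this is the loop-carried dependence that limits pipelining.

```c
/* Sketch of Algorithm 1 (conventional sum pooling + bias + ReLU).
 * Assumed layouts (not specified in the paper):
 *   conv_in     [in_c][in_h][in_w]
 *   conv_weight [out_c][in_c][ks][ks]
 *   conv_out    [out_c][out_h][out_w]
 * Stride 1, no padding: out_h = in_h - ks + 1, out_w = in_w - ks + 1. */
void conv_naive(const float *conv_in, const float *conv_weight,
                const float *conv_bias, int in_h, int in_w, int in_c,
                int ks, int out_c, float *conv_out)
{
    const int out_h = in_h - ks + 1;
    const int out_w = in_w - ks + 1;

    for (int i = 0; i < out_c; ++i)
        for (int j = 0; j < out_w; ++j)
            for (int k = 0; k < out_h; ++k) {
                /* One scalar accumulator: each x/y/z iteration reads the
                 * value written by the previous one (loop-carried). */
                float result = 0.0f;
                for (int x = 0; x < in_c; ++x)
                    for (int y = 0; y < ks; ++y)
                        for (int z = 0; z < ks; ++z) {
                            int in_idx = (x * in_h + (k + y)) * in_w + (j + z);
                            int weight_idx = ((i * in_c + x) * ks + y) * ks + z;
                            result += conv_in[in_idx] * conv_weight[weight_idx];
                        }
                int out_idx = (i * out_h + k) * out_w + j;
                conv_out[out_idx] = result + conv_bias[i];
                if (conv_out[out_idx] <= 0.0f)
                    conv_out[out_idx] = 0.0f; /* ReLU */
            }
}
```

For a 3 × 3 single-channel input 1…9, a 2 × 2 kernel of ones, and bias −20, the four window sums 12, 16, 24, 28 become 0, 0, 4, 8 after bias and ReLU.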

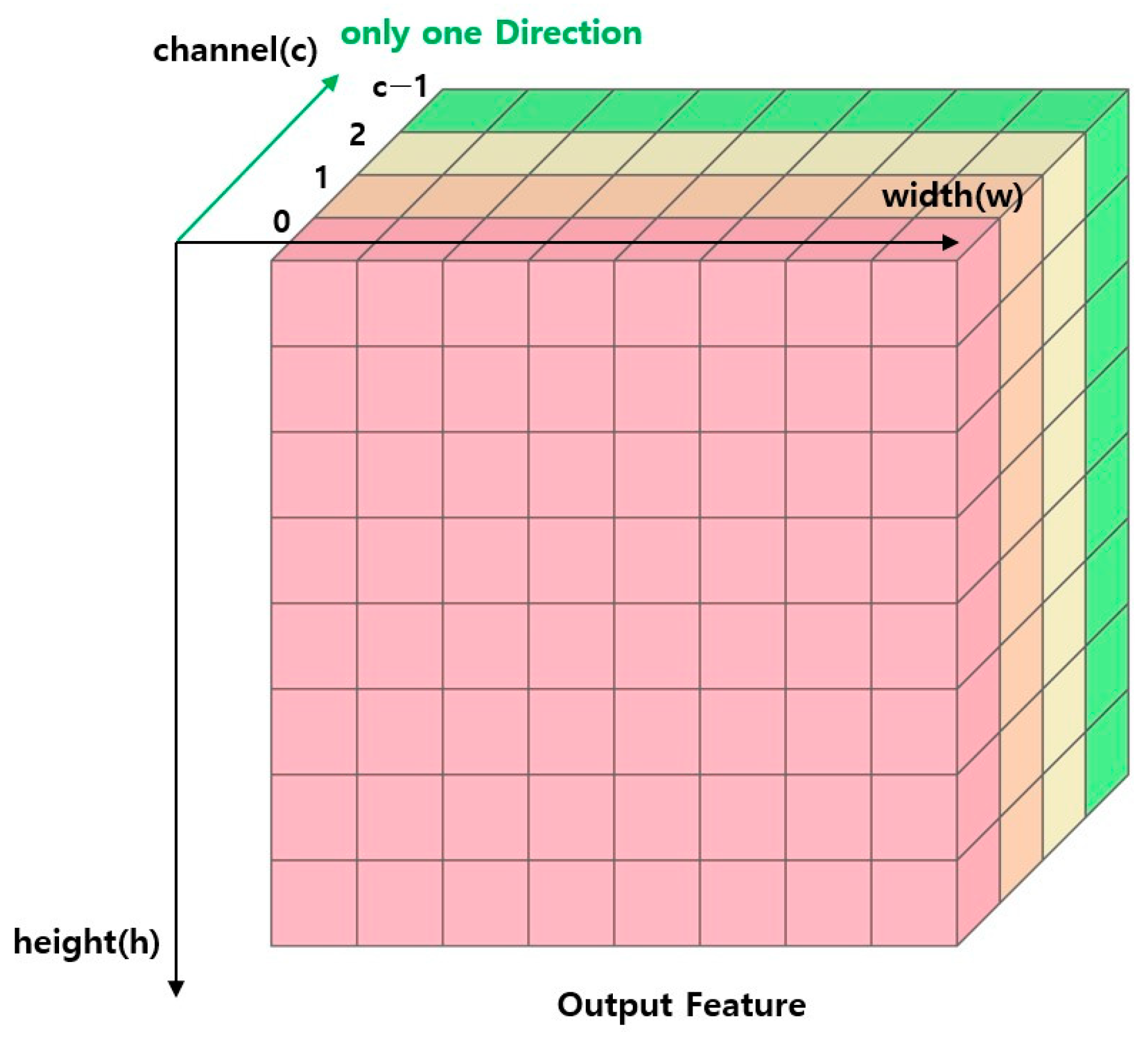
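A back-of-the-envelope model of why the loop order in Algorithm 1 limits throughput, under the usual HLS assumption that a loop-carried dependence forces the initiation interval (II) of a pipelined loop to be at least the latency of the operation on the recurrence. Here L_add denotes the floating-point adder latency in clock cycles, which depends on the device and tool settings and is left symbolic; loop-entry overhead and memory-port limits are ignored, so this is an estimate, not a measured result. In Algorithm 2 the pipelined j/k loop updates a different element of `result` on every iteration, so the dependence distance grows from 1 iteration to W_out·H_out iterations.

```latex
\begin{aligned}
N &= C_{\text{out}}\, W_{\text{out}}\, H_{\text{out}}\, C_{\text{in}}\, K^{2}
  && \text{(MAC operations per layer)}\\
T_{\text{conv}} &\approx L_{\text{add}}\, N
  && \text{(Algorithm 1: scalar accumulator, } \mathit{II} \ge L_{\text{add}})\\
T_{\text{par}} &\approx N
  && \text{(Algorithm 2: } \mathit{II} = 1 \text{ if } W_{\text{out}} H_{\text{out}} \ge L_{\text{add}})\\
T_{\text{conv}} / T_{\text{par}} &\approx L_{\text{add}}
\end{aligned}
```

Under this model the achievable gain from loop reordering alone is bounded by the adder latency; further gains would have to come from additional parallel hardware.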

3.3. Parallelized Sum Pooling

3.4. Buffer-Based Dependency Avoidance for Adaptive Pipelining

| Algorithm 2 | The Proposed Parallelized Sum Pooling Method |
|---|---|
| Input | conv_in[], conv_weight[], conv_bias[]; input height, width, channel; kernel size; output height, width, channel |
| Output | conv_out[] |
| 1. | for i = 0~output_channel |
| 2. | float result[output_width × output_height] = 0; |
| 3. | for x = 0~input_channel |
| 4. | for y = 0~kernel_size |
| 5. | for z = 0~kernel_size |
| 6. | Calculate weight_idx |
| 7. | float temp = conv_weight |
| 8. | for j = 0~output_width |
| 9. | for k = 0~output_height |
| 10. | #Pipeline II = 1 |
| 11. | #ignore dependence variable = result |
| 12. | Calculate in_idx |
| 13. | result += conv_in[in_idx] × conv_weight[weight_idx] |
| 14. | Calculate out_idx |
| 15. | Calculate conv_out[out_idx] |
| 16. | if (conv_out[out_idx] <= 0) |
| 17. | conv_out[out_idx] = 0; //ReLU |
| 18. | end |
| 19. | end |
| 20. | end |
| 21. | end |
| 22. | end |
| 23. | end |
| 24. | end |
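A C sketch of Algorithm 2 under assumed details: channel-major layouts ([in_c][in_h][in_w] input, [out_c][in_c][ks][ks] weights, [out_c][out_h][out_w] output), stride 1, no padding, and a hypothetical fixed bound `MAX_OUT_PIXELS` for the on-chip `result` buffer. The function name `conv_reordered` is illustrative. The pragmas follow Vivado/Vitis HLS syntax and are wrapped in `#ifdef __SYNTHESIS__` (the macro the HLS tools define during synthesis) so that an ordinary C compiler builds the function as plain C. One deliberate difference from the listing: the sketch adds the bias and applies ReLU once per output pixel after accumulation finishes, instead of inside the innermost loop, which should give the same final outputs.

```c
/* Hypothetical compile-time bound on out_w * out_h (on-chip buffer size). */
#define MAX_OUT_PIXELS 1024

/* Sketch of Algorithm 2: loops reordered so the pipelined j/k loop walks
 * over output pixels while one weight (temp) is held fixed.
 * Stride 1, no padding. Returns 0 on success, -1 if the buffer is too small. */
int conv_reordered(const float *conv_in, const float *conv_weight,
                   const float *conv_bias, int in_h, int in_w, int in_c,
                   int ks, int out_c, float *conv_out)
{
    const int out_h = in_h - ks + 1;
    const int out_w = in_w - ks + 1;
    float result[MAX_OUT_PIXELS]; /* one partial sum per output pixel */

    if (out_h <= 0 || out_w <= 0 || out_h * out_w > MAX_OUT_PIXELS)
        return -1;

    for (int i = 0; i < out_c; ++i) {
        for (int p = 0; p < out_h * out_w; ++p)
            result[p] = 0.0f;

        for (int x = 0; x < in_c; ++x)
            for (int y = 0; y < ks; ++y)
                for (int z = 0; z < ks; ++z) {
                    int weight_idx = ((i * in_c + x) * ks + y) * ks + z;
                    float temp = conv_weight[weight_idx]; /* reused for every pixel */
                    for (int j = 0; j < out_w; ++j)
                        for (int k = 0; k < out_h; ++k) {
#ifdef __SYNTHESIS__
#pragma HLS PIPELINE II=1
#pragma HLS DEPENDENCE variable=result inter false
#endif
                            /* Each iteration updates a different result[]
                             * element; the same element is revisited only
                             * after a full j/k sweep, not on the next
                             * iteration, so the dependence can be ignored. */
                            int in_idx = (x * in_h + (k + y)) * in_w + (j + z);
                            result[k * out_w + j] += conv_in[in_idx] * temp;
                        }
                }

        /* Bias and ReLU once per output pixel, after all accumulation. */
        for (int k = 0; k < out_h; ++k)
            for (int j = 0; j < out_w; ++j) {
                float v = result[k * out_w + j] + conv_bias[i];
                conv_out[(i * out_h + k) * out_w + j] = (v <= 0.0f) ? 0.0f : v;
            }
    }
    return 0;
}
```

Because each pixel's partial sum is still accumulated in the same x, y, z order as in Algorithm 1, the two versions should produce identical outputs (assuming the compiler does not contract multiply-adds differently in the two), which is consistent with the reported identical routing results.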

4. Experimental Results

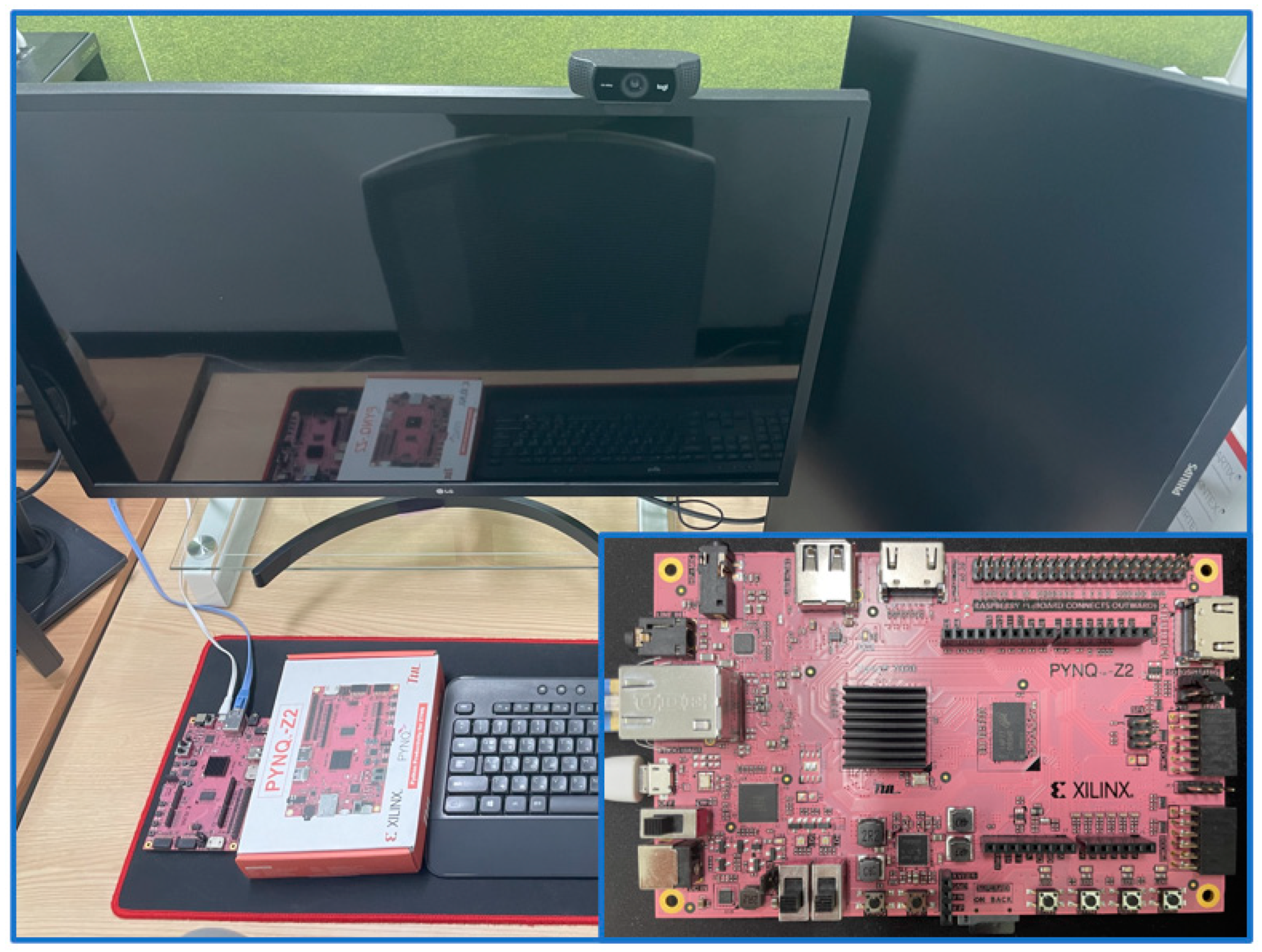

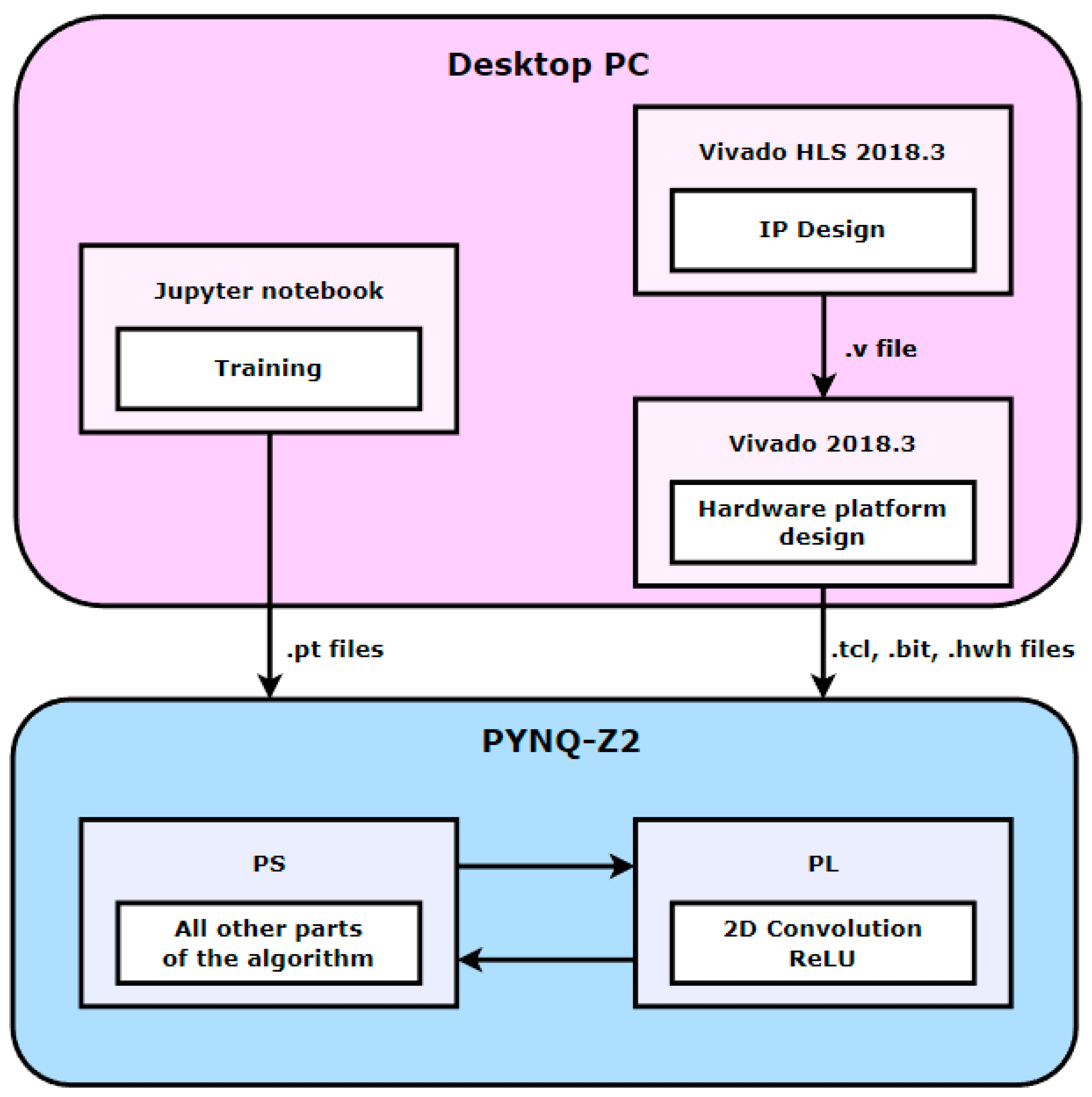

4.1. Experimental Setups

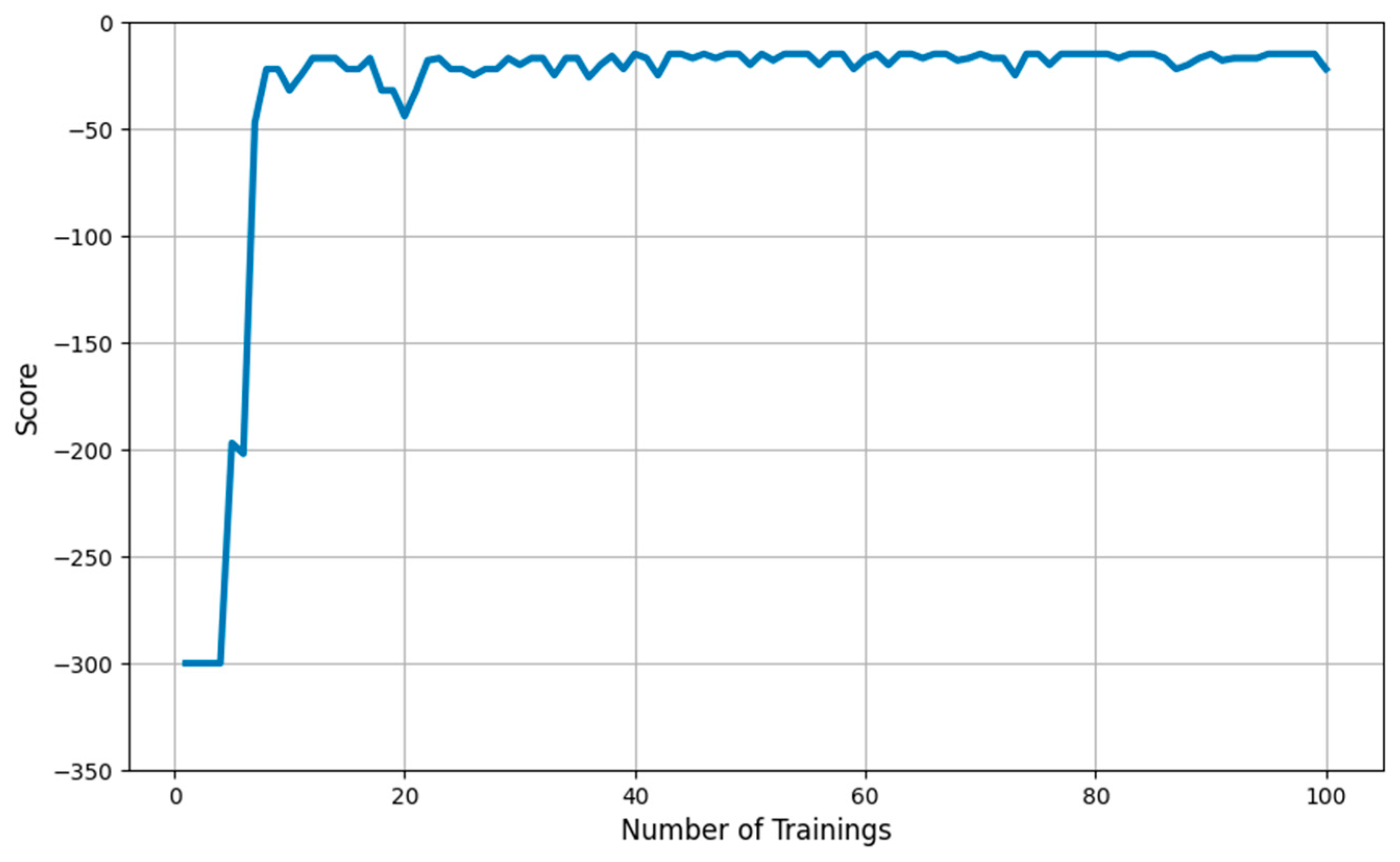

4.2. Training Results

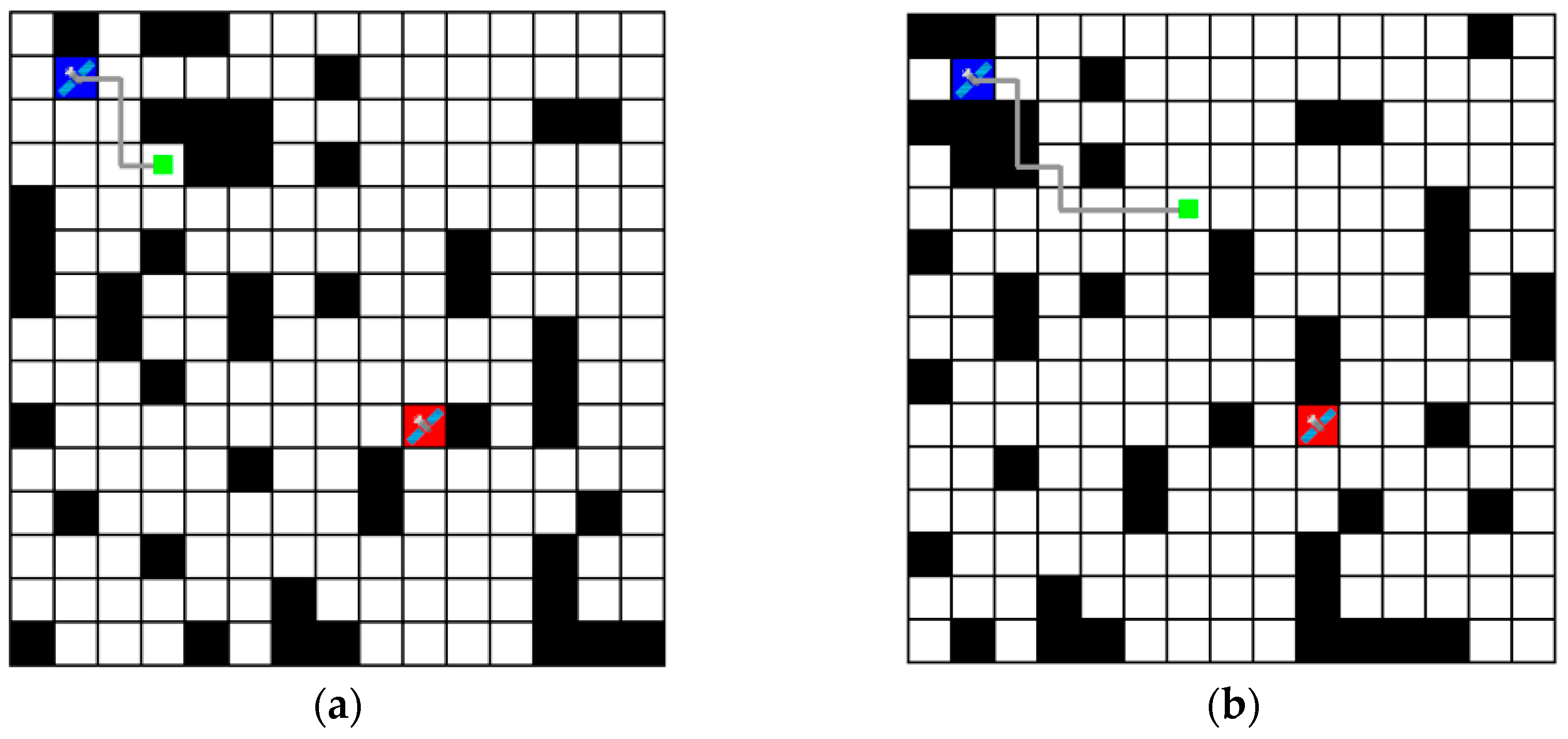

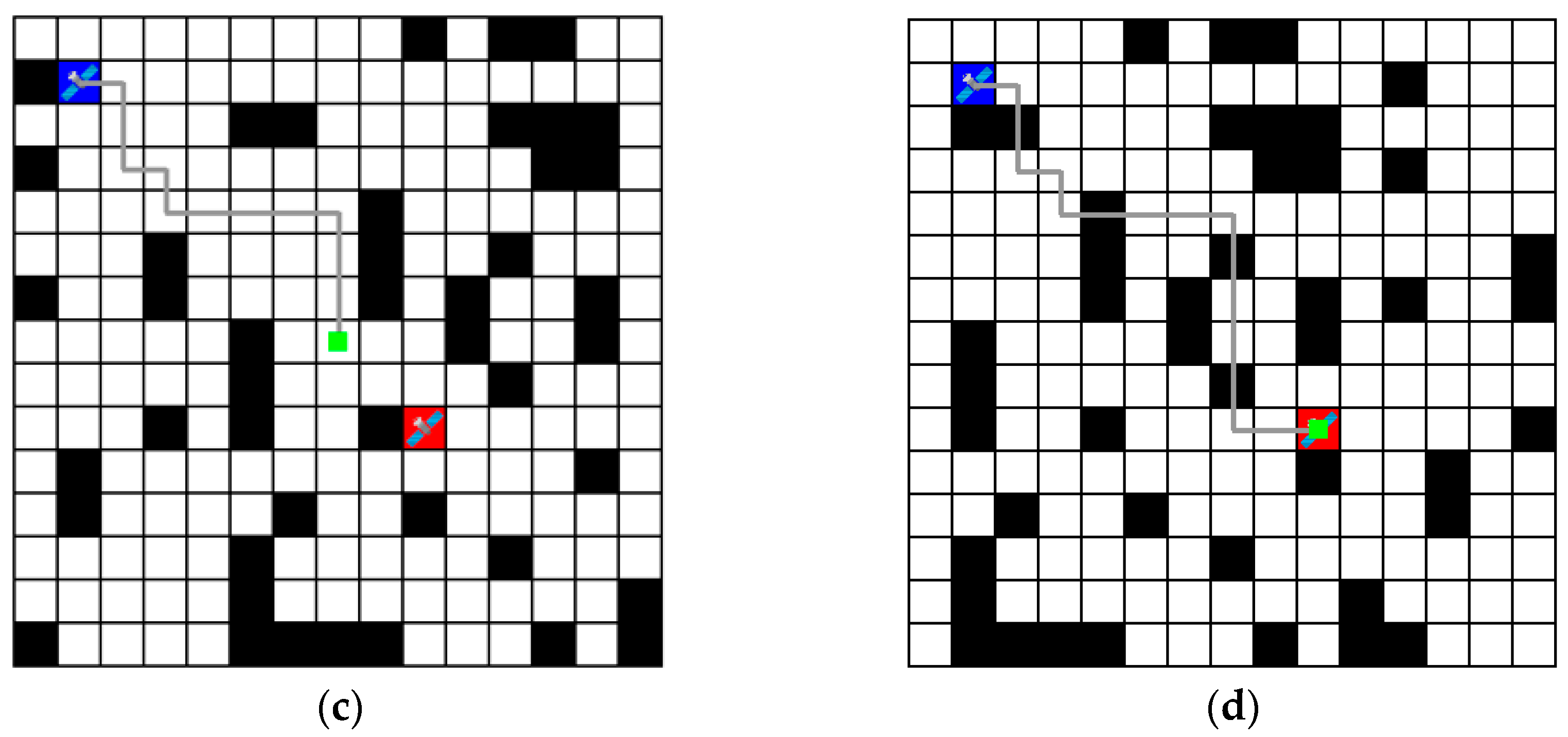

4.3. Inference Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Cakaj, S.; Kamo, B.; Lala, A.; Rakipi, A. The Coverage Analysis for Low Earth Orbiting Satellites at Low Elevation. Int. J. Adv. Comput. Sci. Appl. 2014, 5, 6–10.

2. Wang, J.; Li, L.; Zhou, M. Topological dynamics characterization for LEO satellite networks. Comput. Netw. 2007, 51, 43–53.

3. Kempton, B.; Riedl, A. Network Simulator for Large Low Earth Orbit Satellite Networks. In Proceedings of the ICC 2021—IEEE International Conference on Communications, Montreal, QC, Canada, 14–23 June 2021; pp. 1–6.

4. Hu, Y.; Li, V.O.K. Satellite-based Internet: A tutorial. IEEE Commun. Mag. 2001, 39, 154–162.

5. Uzunalioglu, H. Probabilistic routing protocol for low Earth orbit satellite networks. In Proceedings of the ICC ’98. 1998 IEEE International Conference on Communications. Conference Record. Affiliated with SUPERCOMM’98 (Cat. No.98CH36220), Atlanta, GA, USA, 7–11 June 1998; Volume 1, pp. 89–93.

6. Markovitz, O.; Segal, M. Advanced Routing Algorithms for Low Orbit Satellite Constellations. In Proceedings of the ICC 2021—IEEE International Conference on Communications, Montreal, QC, Canada, 14–23 June 2021; pp. 1–6.

7. Uzunalioğlu, H.; Akyildiz, I.F.; Bender, M.D. A routing algorithm for connection-oriented Low Earth Orbit (LEO) satellite networks with dynamic connectivity. Wirel. Netw. 2000, 6, 181–190.

8. Zhu, Y.; Qian, L.; Ding, L.; Yang, F.; Zhi, C.; Song, T. Software defined routing algorithm in LEO satellite networks. In Proceedings of the 2017 International Conference on Electrical Engineering and Informatics (ICELTICs), Banda Aceh, Indonesia, 18–20 October 2017; pp. 257–262.

9. Ekici, E.; Akyildiz, I.F.; Bender, M.D. A distributed routing algorithm for datagram traffic in LEO satellite networks. IEEE/ACM Trans. Netw. 2001, 9, 137–147.

10. Jiang, C.; Zhu, X. Reinforcement Learning Based Capacity Management in Multi-Layer Satellite Networks. IEEE Trans. Wirel. Commun. 2020, 19, 4685–4699.

11. Wang, X.; Dai, Z.; Xu, Z. LEO Satellite Network Routing Algorithm Based on Reinforcement Learning. In Proceedings of the 2021 IEEE 4th International Conference on Electronics Technology (ICET), Chengdu, China, 7–10 May 2021; pp. 1105–1109.

12. Shi, X.; Ren, P.; Du, Q. Heterogeneous Satellite Network Routing Algorithm Based on Reinforcement Learning and Mobile Agent. In Proceedings of the 2020 IEEE Globecom Workshops, Taipei, Taiwan, 7–11 December 2020; pp. 1–6.

13. Zuo, P.; Wang, C.; Yao, Z.; Hou, S.; Jiang, H. An Intelligent Routing Algorithm for LEO Satellites Based on Deep Reinforcement Learning. In Proceedings of the 2021 IEEE 94th Vehicular Technology Conference (VTC2021-Fall), Norman, OK, USA, 27–30 September 2021; pp. 1–5.

14. Zuo, P.; Wang, C.; Wei, Z.; Li, Z.; Zhao, H.; Jiang, H. Deep Reinforcement Learning Based Load Balancing Routing for LEO Satellite Network. In Proceedings of the 2022 IEEE 95th Vehicular Technology Conference (VTC2022-Spring), Helsinki, Finland, 19–22 June 2022; pp. 1–6.

15. Lee, J.H.; Chai, K.Y. A Study on the low-earth orbit satellite based non-terrestrial network systems via deep-reinforcement learning. In Proceedings of the Korean Institute of Communication Sciences Conference, Yeosu, Republic of Korea, 17–19 November 2021; pp. 1306–1307.

16. Ma, Y.; Cao, Y.; Vrudhula, S.; Seo, J. Optimizing Loop Operation and Dataflow in FPGA Acceleration of Deep Convolutional Neural Networks. In Proceedings of the 2017 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 22–24 February 2017; pp. 45–54.

17. Hoozemans, J.; Peltenburg, J.; Nonnemacher, F.; Hadnagy, A.; Al-Ars, Z.; Hofstee, H.P. FPGA Acceleration for Big Data Analytics: Challenges and Opportunities. IEEE Circuits Syst. Mag. 2021, 21, 30–47.

18. Biookaghazadeh, S.; Ravi, P.K.; Zhao, M. Toward Multi-FPGA Acceleration of the Neural Networks. ACM J. Emerg. Technol. Comput. Syst. 2021, 17, 1–23.

19. Rahman, A.; Lee, J.; Choi, K. Efficient FPGA Acceleration of Convolutional Neural Networks Using Logical-3D Compute Array. In Proceedings of the 2016 Design, Automation & Test in Europe Conference & Exhibition (DATE), Dresden, Germany, 14–18 March 2016; pp. 1393–1398.

20. Guo, K.; Zeng, S.; Yu, J.; Wang, Y.; Yang, H. [DL] A Survey of FPGA-based Neural Network Inference Accelerators. ACM Trans. Reconfigurable Technol. Syst. 2017, 12, 1–26.

21. Garcia, F.; Rachelson, E. Markov Decision Processes. In Markov Decision Processes in Artificial Intelligence; Wiley: Hoboken, NJ, USA, 2013; pp. 1–38.

22. Kaelbling, L.P.; Littman, M.L.; Moore, A.W. Reinforcement Learning: A Survey. J. Artif. Intell. Res. 1996, 4, 237–285.

23. Arulkumaran, K.; Deisenroth, M.P.; Brundage, M.; Bharath, A.A. Deep Reinforcement Learning: A Brief Survey. IEEE Signal Process. Mag. 2017, 34, 26–38.

24. Ban, T.-W. An Autonomous Transmission Scheme Using Dueling DQN for D2D Communication Networks. IEEE Trans. Veh. Technol. 2020, 69, 16348–16352.

25. Wang, Z.; Schaul, T.; Hessel, M.; Hasselt, H.; Lanctot, M.; Freitas, N. Dueling network architectures for deep reinforcement learning. In Proceedings of the 33rd International Conference on International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; Volume 48, pp. 1995–2003.

26. Villanueva, A.; Fajardo, A. Deep Reinforcement Learning with Noise Injection for UAV Path Planning. In Proceedings of the 2019 IEEE 6th International Conference on Engineering Technologies and Applied Sciences (ICETAS), Kuala Lumpur, Malaysia, 20–21 December 2019; pp. 1–6.

27. de Fine Licht, J.; Besta, M.; Meierhans, S.; Hoefler, T. Transformations of High-Level Synthesis Codes for High-Performance Computing. IEEE Trans. Parallel Distrib. Syst. 2020, 32, 1014–1029.

| Related Works | RL | Dynamic Environment | Link Disconnection | Parallelization |
|---|---|---|---|---|
| [10,11] | O | X | X | X |
| [12,13,14] | O | O | X | X |
| [15] | O | O | O | X |
| Proposed Method | O | O | O | O |

| Resource | Available | Utilization (%), Naïve Method | Utilization (%), Proposed Method |
|---|---|---|---|
| LUT | 53,200 | 24.17 | 25.32 |
| LUTRAM | 17,400 | 4.22 | 4.76 |
| FF | 106,400 | 15.65 | 15.51 |
| BRAM | 140 | 22.50 | 25.36 |
| DSP | 220 | 9.09 | 9.09 |
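The table reports only percentages; multiplying each one by the available count gives approximate absolute usage, which makes the overhead of the proposed method easier to read. For example, BRAM goes from 140 × 22.50% = 31.5 to 140 × 25.36% ≈ 35.5, LUTs go from about 12,858 to about 13,470 (roughly 612 more), and DSP usage stays at about 20 of 220 for both methods. The helper below (the names `used_resources` and `print_resource_table` are hypothetical) simply repeats that arithmetic for every row; the results are approximate because the percentages are rounded.

```c
#include <stdio.h>

/* Rows of the resource-utilization table (values copied from the table). */
struct resource_row {
    const char *name;
    double available;
    double naive_pct;    /* utilization (%) of the naive method */
    double proposed_pct; /* utilization (%) of the proposed method */
};

static const struct resource_row rows[] = {
    {"LUT",     53200.0, 24.17, 25.32},
    {"LUTRAM",  17400.0,  4.22,  4.76},
    {"FF",     106400.0, 15.65, 15.51},
    {"BRAM",      140.0, 22.50, 25.36},
    {"DSP",       220.0,  9.09,  9.09},
};

/* Approximate absolute count: available * percent / 100. */
double used_resources(double available, double percent)
{
    return available * percent / 100.0;
}

/* Print naive vs. proposed absolute usage and their difference. */
void print_resource_table(void)
{
    printf("%-7s %10s %10s %10s\n", "Res", "Naive", "Proposed", "Delta");
    for (size_t r = 0; r < sizeof rows / sizeof rows[0]; ++r) {
        double n = used_resources(rows[r].available, rows[r].naive_pct);
        double p = used_resources(rows[r].available, rows[r].proposed_pct);
        printf("%-7s %10.1f %10.1f %+10.1f\n", rows[r].name, n, p, p - n);
    }
}
```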

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, H.; Park, J.; Lee, H.; Won, D.; Han, M. An FPGA-Accelerated CNN with Parallelized Sum Pooling for Onboard Realtime Routing in Dynamic Low-Orbit Satellite Networks. Electronics 2024, 13, 2280. https://doi.org/10.3390/electronics13122280
