Abstract

Current target-speaker extraction (TSE) models have achieved good performance in separating target speech from highly overlapped multi-talker speech. However, in real-world applications, multi-talker speech is often sparsely overlapped, and the target speaker may be absent from the speech mixture, making it difficult for the model to extract the desired speech in such situations. To optimize models for various scenarios, universal speaker extraction has been proposed. However, current models do not distinguish between the presence or absence of the target speaker, resulting in suboptimal performance. In this paper, we propose a gated cross-attention network for universal speaker extraction. In our model, the cross-attention mechanism learns the correlation between the target speaker and the speech to determine whether the target speaker is present. Based on this correlation, the gate mechanism enables the model to focus on extracting speech when the target is present and filter out features when the target is absent. Additionally, we propose a joint loss function to evaluate both the reconstructed target speech and silence. Experiments on the WSJ0-2mix-extr and LibriMix datasets show that our proposed method achieves superior performance over comparison approaches in terms of SI-SDR and WER.

1. Introduction

In multi-talker communications, overlapped speech usually has negative impacts on downstream tasks, such as automatic speech recognition (ASR). This has attracted researchers’ interest in speech separation, which aims to obtain a single stream from overlapping speech. Up until now, speech separation has been widely studied [1,2,3,4,5]. Among many solutions, target-speaker extraction (TSE) provides an effective approach to speech separation.

TSE is a technology that uses the reference speech of the target speaker to extract the target speech signal from a mixture of speech. Currently, TSE has achieved good performance in highly overlapped speech [6,7,8,9,10,11,12,13,14,15]. However, when applied to real-world applications, TSE encounters the challenge that speech is often sparsely overlapped, and the target speaker may not always be speaking [16,17], which is different from the highly overlapped scenarios in many previous TSE research studies.

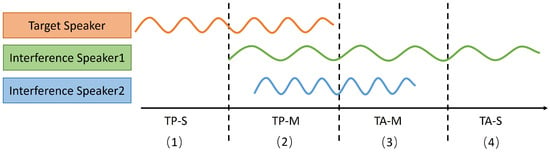

Therefore, to facilitate our work, as shown in Figure 1, we introduce a classification method that classifies real-world multi-talker speech into four types of scenarios, according to whether the target speaker is present or absent (TP or TA), and whether the speech overlaps (S or M). In this way, the speech mixtures composed of four random scenarios are closer to real-world communications. According to the classification, most prior TSE studies [6,7,8,9,10,11,12,13,14,15] have only focused on the TP-M scenario, where speech is highly overlapped and the target speaker keeps speaking. However, when applied to TA-M and TA-S scenarios, these studies may falsely extract a random speaker’s voice rather than the desired silence. This results in a strong negative effect on overall performance, which is called the false-extraction problem [17,18].

Figure 1. (1) TP-S denotes that the target speaker speaks alone. (2) TP-M denotes that the target speaker overlaps with other interference speakers. (3) TA-M denotes that the interference speakers overlap with each other. (4) TA-S denotes an interference speaker who speaks alone.
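The four-way classification can be sketched as a small lookup on two facts about a segment: whether the target speaker is active, and how many speakers are talking. The function name `classify_scenario` and its arguments are hypothetical and used only for illustration.

```python
def classify_scenario(target_active: bool, num_active_speakers: int) -> str:
    """Label a segment with one of the four scenarios in Figure 1.

    Assumes num_active_speakers counts every speaker talking in the segment,
    including the target when target_active is True.
    """
    if num_active_speakers < 1:
        raise ValueError("at least one speaker must be active")
    if target_active:
        # Target alone -> TP-S; target plus interferers -> TP-M.
        return "TP-S" if num_active_speakers == 1 else "TP-M"
    # Target absent: one interferer -> TA-S; several overlapping -> TA-M.
    return "TA-S" if num_active_speakers == 1 else "TA-M"


print(classify_scenario(True, 2))   # TP-M
print(classify_scenario(False, 1))  # TA-S
```

For the two target-absent labels, the desired output of the extractor is silence.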

To address the false-extraction problem, universal speaker extraction has been proposed. It aims to extract speech when the target speaker is active and to output silence when the target speaker is quiet. Since the widely used scale-invariant signal-to-distortion ratio (SI-SDR) [19] loss is undefined in target-absent scenarios, some researchers have proposed modifying the loss function to evaluate the quality of both the reconstructed speech and silence [16,20,21,22,23]. Although these methods encourage the model to consider the absent speaker and output silence, they often degrade the quality of extracted signals in target-present scenarios. Other methods introduce additional information to verify the speaker’s presence, such as speaker activity information [24,25] or visual cues [17]. However, this information is unavailable in some scenarios, which limits the applicability of these methods.

In this paper, we propose a novel universal speaker extraction model. In our method, we use gated cross-attention to fuse the speaker embedding and the speech mixture features. Specifically, the attention mechanism is used to distinguish whether the target speaker is speaking or quiet, and the gate mechanism is used to make the extractor focus more on the frames where the target speaker is present. In addition, to optimize both the energy of silence when the target is absent and the SI-SDR when the target is present, we propose a joint loss function as the training objective. Our method takes into account all four real-world scenarios, alleviating the false-extraction problem and improving the quality of extracted signals. Our contributions are as follows:

- We classify the multi-talker speech into four types: TP-S, TP-M, TA-M, and TA-S.

- We propose a universal speaker extraction method in which a gated cross-attention mechanism is employed to improve the model performance in all four scenarios. This method integrates target-speaker embedding and speech mixture features to verify whether the target speaker is present or absent, thereby enabling the extraction model to focus more on the target-present segments.

- We improve the loss function for universal speaker extraction to optimize the network on both target-present and target-absent scenarios.

- We conduct comparative experiments on the baseline model SPEX+ [11] and various universal speaker extraction methods. The experimental results on the WSJ0-2mix-extr and LibriMix datasets show that our method improves the performance in all four scenarios.

2. Related Works

2.1. Speaker Extraction for the Target-Present Mixture

In the past few years, TSE methods have made significant progress in TP-M scenarios. Representative studies include SpeakerBeam [6,7,8] and VoiceFilter [9,10]. Furthermore, the time-domain speaker extraction model SPEX [11,12,13], which extends Conv-TasNet [4], is a typical approach to speaker extraction and has achieved good performance.

Some studies [15,18] have found that, even in target-present mixtures, TSE may suffer from false-extraction problems (also called speaker confusion, SC). To solve the problem, commonly used methods include post-processing and modifying the loss function [18]. Moreover, X-SepFormer [15] proposes a new TSE model based on SepFormer [5]. It introduces the cross-attention mechanism for feature fusion [26] and effectively reduces the rate of false extraction in the TP-M scenario. These methods have the limitation that they only address false-extraction problems in TP-M scenarios. The aforementioned cross-attention [15,26] only focuses on the temporal correlation of speaker-speech features, rather than the correlation between speaker embedding and speech features, making it unable to distinguish whether the speaker is present or not.

In real-world scenarios, to avoid false extraction, it is important to learn whether the speaker is present or not. Therefore, these methods are not suitable for universal speaker extraction.

2.2. Universal Speaker Extraction for Target-Absent Scenarios

Recently, researchers have noticed the false-extraction problem in realistic communications. Modifying the loss function and training the model on various speech mixtures is a popular strategy [16,20,21,22]. The loss functions in [20,21,22] are revised for target-absent speech to penalize the outputs when they are far from silence. However, they cannot be widely used because the loss value approaches negative infinity and dominates the overall loss when the estimated output or the input signal is close to silence. Borsdorf et al. [16] propose a unified SE-SI-SDR loss applicable across all conditions. However, the weight of the unified loss in target-present and target-absent scenarios cannot be adjusted. Therefore, in practice, it requires keeping a specific fixed proportion of the four scenarios in the training data.

Some researchers have attempted to solve the false-extraction problem by proposing new structures that enable the model to distinguish whether the target speaker is present or not. For example, Lin et al. [24] propose a joint learning network of personal voice activity detection (VAD) and speaker extraction, in which VAD predicts the presence of the target speaker and masks non-target speech to silence based on the predicted results. However, the hard mask method highly depends on the accuracy of VAD, which is a difficult task in overlapping speech. USEV [17] uses visual cues as the auxiliary reference and proposes a universal speaker extraction network. But visual cues are unavailable in most real-world scenarios. Delcroix et al. [22] use speaker verification after speaker extraction, which can detect the TA speech when the extracted signals do not match the target speaker’s enrollment. However, the serial structure of speaker extraction and speaker verification increases the system’s latency.

Unlike the above methods, we use gated cross-attention to calculate the attention weight only between speaker embedding and audio features. This soft mechanism has linear complexity, which can eliminate the latency problem. Meanwhile, our gate mechanism can preserve information when the target is present and ignore it when the target is absent, so no additional auxiliary reference is required.

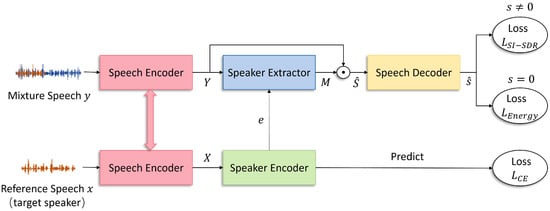

3. Overall Network Architecture of Universal Speaker Extraction

As Figure 2 illustrates, the overall network architecture of our universal speaker extraction model is built on the backbone of SPEX+ [11]. The whole network includes four components: speech encoder, speaker encoder, speaker extractor, and speech decoder. The speech encoder encodes the waveform into an acoustic representation. The speaker encoder extracts a speaker embedding from the reference speech representation of the target speaker. The speaker extractor estimates a mask for the target speaker from the mixed speech representation to filter out the speech of other speakers. The speech decoder reconstructs the speech signal from the masked speech representation.

Figure 2. The network architecture of universal speaker extraction.

We introduce the details of the process as follows. The speech encoder first encodes the mixture waveform y into an acoustic representation Y using a 1D-CNN. At the same time, the speaker encoder extracts a speaker embedding e from the reference speech x of the target speaker. Then, the speaker extractor uses a gated cross-attention feature fusion block to fuse the speaker embedding, e, with the mixed speech representation, Y, to guide the speaker extraction. It estimates a mask, M, from the mixed speech representation, Y, and obtains the target speech representation by element-wise multiplying the mask with Y, i.e., M ⊙ Y. Finally, the speech decoder reconstructs the target speech signal $\hat{s}$ from M ⊙ Y.

We optimize the entire network through multi-task learning of speaker classification and speaker extraction. As we classify the scenarios into TP-S, TP-M, TA-M, and TA-S types, we improve the loss function to optimize the model for target-absent (s = 0) and target-present (s ≠ 0) scenarios, where s denotes the target signal. We give the detailed descriptions in Section 4 and Section 5.

4. Gated Cross-Attention Mechanism

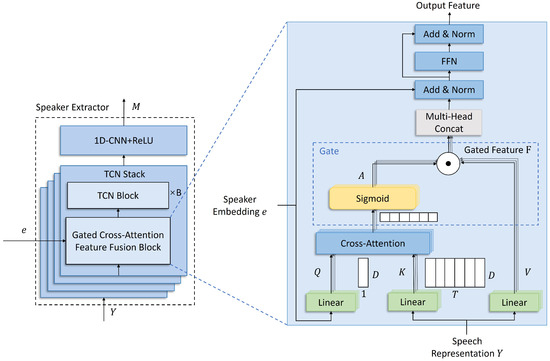

In this paper, we apply the gated cross-attention mechanism to the speaker extractor for universal speaker extraction. Figure 3 (left) illustrates the structure of the speaker extractor. It consists of four temporal convolutional network (TCN) stacks and a 1D-CNN with a ReLU activation function. Each TCN stack contains a gated cross-attention feature fusion block and B TCN blocks. The feature fusion block fuses the mixed speech representation, Y, and the speaker embedding, e. Each TCN block uses stacked dilated 1D convolutional layers for temporal feature modeling, thereby extracting the target speech from the fused feature. The use of dilated convolution allows the model to exploit the long-range dependencies of the speech signal while also substantially reducing the model size [4,27].
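To illustrate how dilated convolutions reach long-range context with few layers, the following sketch computes the receptive field of stacked TCN blocks. It assumes the Conv-TasNet-style schedule in which block i of each stack uses dilation 2^i, a kernel size of 3, and B = 8 blocks per stack; these values are assumptions for illustration and are not stated in this section.

```python
def tcn_receptive_field(num_stacks: int, blocks_per_stack: int, kernel_size: int = 3) -> int:
    """Receptive field (in frames) of stacked dilated 1-D convolutions.

    Assumes block i of every stack uses dilation 2**i; the kernel size of 3
    is also an assumption.
    """
    field = 1
    for _ in range(num_stacks):
        for i in range(blocks_per_stack):
            # Each dilated layer widens the field by (kernel_size - 1) * dilation.
            field += (kernel_size - 1) * (2 ** i)
    return field


print(tcn_receptive_field(num_stacks=4, blocks_per_stack=8))  # 2041
```

Under these assumptions, 32 convolutional blocks cover about two thousand encoder frames, whereas undilated layers of the same kernel size would cover only 65.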

Figure 3. The structure of the speaker extractor (left) and the details of our gated cross-attention feature fusion block (right).

Figure 3 (right) shows the details of our gated cross-attention feature fusion block. It takes the target-speaker embedding and the mixed speech representation as inputs, and modulates the speech features according to the target speaker’s presence. A detailed description of the two mechanisms is provided in Section 4.1 and Section 4.2.

4.1. Cross-Attention Mechanism

In the feature fusion block, the cross-attention mechanism learns the interaction between the target speaker and speech to distinguish whether the target speaker is present or not. Multi-head cross-attention is used in our model; for simplicity, the explanation in this section is given for a single head.

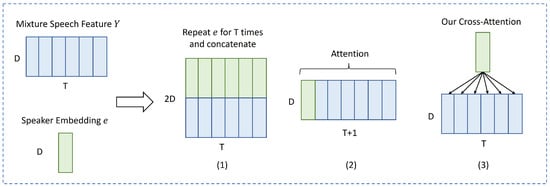

As shown in Figure 4(1), traditional feature fusion methods usually fuse the speaker embedding, e, and the mixed speech representation, Y, by concatenation, which makes it difficult to learn the degree of correlation between the two features. Some researchers [26] also use cross-attention in TSE. However, as Figure 4(2) illustrates, they only apply attention to a sequentially connected sequence to learn the temporal correlation. In contrast, as Figure 4(3) shows, our cross-attention is used to learn the interaction between e and Y in each time frame.

Figure 4. The comparison of feature fusion methods: (1) The traditional feature fusion method. (2) Existing cross-attention feature fusion method. (3) The cross-attention mechanism in our feature fusion method.

In our cross-attention mechanism, the speaker embedding e is transformed to the query Q, and the speech representation Y is transformed to the key K and value V:

$$Q = e W_Q, \quad K = Y W_K, \quad V = Y W_V$$

where $W_Q$, $W_K$, and $W_V$ are the transformation matrices. Then, we calculate the attention weight between the speaker and speech as follows:

$$A = \mathrm{sigmoid}\left(\frac{Q K^{\top}}{b}\right)$$

where b denotes a scaling factor, which is equal to the dimensionality D of the input features. Note that we use the sigmoid activation function in the calculation rather than the commonly used softmax. The reason is that $Q K^{\top}$ in this paper is a one-dimensional vector of length T, which represents the correlation between the speaker embedding and each frame of the speech sequence. It is not meaningful to normalize this vector across frames with softmax. In contrast, with the sigmoid activation function, each element of the attention weight A represents the probability of the target speaker’s presence in the corresponding frame: it is close to 1 if the target speaker is present and close to 0 if the target speaker is absent.
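A minimal single-head sketch of this attention weight is given below. It assumes the form A = sigmoid(QKᵀ / b) with b = D, and uses random matrices in place of learned projections. The names `cross_attention_weights`, `W_q`, and `W_k` are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def cross_attention_weights(e, Y, W_q, W_k):
    """Per-frame attention weight between a speaker embedding and speech frames.

    e: (D,) speaker embedding; Y: (T, D) mixed speech representation.
    W_q, W_k: (D, D) projection matrices (random here, learned in practice).
    Returns A with shape (T,), each entry in (0, 1).
    """
    D = e.shape[0]
    q = e @ W_q          # query from the speaker embedding, shape (D,)
    K = Y @ W_k          # keys from the speech frames, shape (T, D)
    scores = K @ q / D   # one score per frame; scaling by D is an assumption
    return sigmoid(scores)


rng = np.random.default_rng(0)
T, D = 5, 8
A = cross_attention_weights(
    rng.normal(size=D), rng.normal(size=(T, D)),
    rng.normal(size=(D, D)), rng.normal(size=(D, D)),
)
print(A.shape)  # (5,)
```

Because each frame is squashed independently, the weights need not sum to 1 over time: a fully target-absent segment can receive weights near 0 in every frame, which softmax normalization over frames would not allow.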

4.2. Gate Mechanism

According to the attention weight A, we next use a gate mechanism to enable the model to focus on the features of the target-present parts and filter out the features of the target-absent parts.

To this end, inspired by the gated linear unit (GLU) [28], we employ a gate mechanism to control the information flow. In GLU, the input features perform element-wise multiplication with the gate, which is a sigmoid-activated feature associated with the input feature. In our case, the gate needs to be associated with the interaction features between the speaker and the speech. Thus, we regard the cross-attention weight A as the gate:

$$F = A \odot V$$

where ⊙ denotes a broadcast element-wise multiplication, V is the transformed speech representation in Section 4.1, and F is the gated feature. Since the attention weight A uses sigmoid activation and indicates the degree of association between the speaker and speech, our gate mechanism can direct the features with stronger relevance to flow into the subsequent modules.

In the end, we concatenate the gated feature F from all attention heads and feed it into a linear layer. A residual connection is adopted between the output feature and the input speaker embedding e. The results are then fed into the feed-forward network (FFN). Finally, layer normalization is used to output the feature to the following blocks. In this way, the feature fusion block can automatically learn to preserve the features relevant to the target speaker and suppress the irrelevant features.
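The gating and head-concatenation steps can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the per-head scaling, the head dimension `d_head`, and all function and variable names are hypothetical, and the residual connection, FFN, and layer normalization are omitted for brevity.

```python
import numpy as np


def gated_cross_attention(e, Y, heads, W_out):
    """Gate each head's value features by its sigmoid attention weight.

    e: (D,) speaker embedding; Y: (T, D) speech representation.
    heads: list of (W_q, W_k, W_v) tuples, each matrix of shape (D, d_head).
    W_out: (len(heads) * d_head, D) output projection.
    """
    gated = []
    for W_q, W_k, W_v in heads:
        q = e @ W_q                          # (d_head,)
        K = Y @ W_k                          # (T, d_head)
        V = Y @ W_v                          # (T, d_head)
        scores = K @ q / K.shape[1]          # (T,); scaling is an assumption
        A = 1.0 / (1.0 + np.exp(-scores))    # sigmoid gate in (0, 1)
        gated.append(A[:, None] * V)         # broadcast the gate over features
    F = np.concatenate(gated, axis=1)        # (T, n_heads * d_head)
    return F @ W_out                         # (T, D)


rng = np.random.default_rng(0)
T, D, d_head, n_heads = 6, 8, 4, 2
heads = [tuple(rng.normal(size=(D, d_head)) for _ in range(3)) for _ in range(n_heads)]
W_out = rng.normal(size=(n_heads * d_head, D))
out = gated_cross_attention(rng.normal(size=D), rng.normal(size=(T, D)), heads, W_out)
print(out.shape)  # (6, 8)
```

Frames whose gate is close to 0 contribute almost nothing to the output, which is how the block can suppress target-absent frames without a hard mask.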

5. Loss Function

We optimize the entire network through multi-task learning of speaker classification and speaker extraction. By classifying the scenarios into TP-S, TP-M, TA-M, and TA-S types, we propose a new loss function to optimize the model for target-absent (s = 0) and target-present (s ≠ 0) scenarios, where s denotes the target signal.

When the target speaker is present, we use the SI-SDR loss to evaluate the reconstructed signal $\hat{s}$, where s is the target signal, $\hat{s}$ is the estimated signal, and a very small term is included in the loss.

When the target speaker is absent, the SI-SDR loss takes a constant value that is independent of the estimated signal $\hat{s}$. This means that SI-SDR cannot optimize the model for target-absent scenarios. Thus, we employ the energy of the estimated speech as the loss function instead of the SI-SDR loss.

In both loss functions, we use a soft threshold to prevent the loss from exploding when the estimated signal is too close to silence (0) or to the reference s.
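For reference, the standard SI-SDR of [19] and a decibel-scale energy measure can be written as below. The symbols $\alpha$ and $\tau$, the name $\mathcal{L}_{\mathrm{energy}}$, and the way the threshold enters are assumptions for illustration and may differ from the exact losses used in this work.

```latex
\alpha = \frac{\hat{s}^{\top} s}{\lVert s \rVert^{2}}, \qquad
\mathrm{SI\text{-}SDR}(s, \hat{s}) = 10 \log_{10}
  \frac{\lVert \alpha s \rVert^{2}}{\lVert \alpha s - \hat{s} \rVert^{2}}, \qquad
\mathcal{L}_{\mathrm{SI\text{-}SDR}} = -\,\mathrm{SI\text{-}SDR}(s, \hat{s})

% Assumed form of an energy loss with a soft threshold \tau > 0:
\mathcal{L}_{\mathrm{energy}} = 10 \log_{10}\left( \lVert \hat{s} \rVert^{2} + \tau \right)
```

When s = 0, the scale factor $\alpha$ is undefined, so the SI-SDR expression gives no usable training signal for target-absent samples. In the assumed energy form, the loss is bounded below by $10 \log_{10} \tau$ as $\hat{s}$ approaches silence, rather than diverging to negative infinity.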

In summary, our signal reconstruction loss consists of the SI-SDR loss and the energy loss, and our speaker classification loss is a cross-entropy (CE) loss. Since the expectations for these three losses are different, we use their weighted sum as the overall loss function, with one weight each for the SI-SDR loss, the energy loss, and the CE loss.

We adopt a step function to indicate whether the target is present or not. It outputs 1 when the target signal is non-silent (s ≠ 0) and 0 when the target signal is silent (s = 0), so that the SI-SDR term is active only for target-present samples and the energy term only for target-absent samples. Thus, the loss function can enhance the model’s ability to improve the quality of extracted speech when the target speaker is present and to output silence when the target speaker is quiet.
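The weighted sum with a step function can be sketched as follows. The weight values, the constants `eps` and `tau`, and the exact way they enter each loss are placeholders chosen for illustration. The names `si_sdr_loss`, `energy_loss`, and `joint_loss` are hypothetical, and the CE loss is passed in as a precomputed number.

```python
import numpy as np


def si_sdr_loss(s, s_hat, eps=1e-8):
    """Negative SI-SDR in dB; eps keeps the ratio finite (placement assumed)."""
    alpha = np.dot(s_hat, s) / (np.dot(s, s) + eps)
    target = alpha * s
    noise = s_hat - target
    return -10.0 * np.log10((np.dot(target, target) + eps) / (np.dot(noise, noise) + eps))


def energy_loss(s_hat, tau=1e-3):
    """Energy of the estimate in dB; tau is a hypothetical soft threshold."""
    return 10.0 * np.log10(np.dot(s_hat, s_hat) + tau)


def joint_loss(s, s_hat, ce, w_sdr=1.0, w_energy=0.1, w_ce=0.5):
    """Weighted sum of the three losses; all weight values are placeholders."""
    present = 1.0 if np.any(s != 0) else 0.0  # step function on the target signal
    recon = (present * w_sdr * si_sdr_loss(s, s_hat)
             + (1.0 - present) * w_energy * energy_loss(s_hat))
    return recon + w_ce * ce


rng = np.random.default_rng(0)
s, n = rng.normal(size=100), rng.normal(size=100)
print(joint_loss(s, s + 0.1 * n, ce=0.0) < joint_loss(s, s + n, ce=0.0))  # True
```

Keeping separate weights for the two reconstruction terms lets their relative influence be tuned per dataset, instead of being fixed by the proportion of target-absent samples in the training data.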

6. Experimental Setup

6.1. Dataset

We use WSJ0-2mix-extr [12] and Libri2Mix [29] as the datasets of our experiments. The WSJ0-2mix-extr dataset is generated from the WSJ0 corpus with a sampling rate of 8 kHz. The training set (20,000 utterances) and development set (5000 utterances) share the same 101 speakers. The test set (3000 utterances) contains 18 different speakers. The Libri2Mix dataset is a benchmark that uses the LibriSpeech [30] corpus to generate mixed speech. In our experiments, we use the “train-clean-100” subset with a sampling rate of 16 kHz, which contains 13,900 mixtures from 251 speakers. Under the same conditions, we remix 3000 utterances as the test set.

To experiment with universal speaker extraction, we simulate the TP-S, TP-M, TA-M, and TA-S scenarios and modify the datasets accordingly. The TA-S and TA-M data are generated by choosing speakers different from those in the mixture as the target speakers. The TP-S and TA-S data are generated by replacing a portion of the mixtures with non-overlapped speech signals. With reference to [16], we modify the WSJ0-2mix-extr dataset by randomly removing 15% of the TP-M and TP-S data (2T-PT and 1T-PT in the notation of [16]) to create TA-M and TA-S data (2T-AT and 1T-AT). In the Libri2Mix dataset, the ratio of target-absent data is about 40%. During training, each utterance in TP-M is used twice to alternately extract each speaker from it. The number of utterances in each scenario of these training sets is shown in Table 1.

Table 1. The descriptions of training sets in Libri2Mix and WSJ0-2mix-extr. “USE” refers to the modified dataset for universal speaker extraction.
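Creating a target-absent example amounts to pairing a mixture with an enrollment speaker who is not in it. A minimal sketch is shown below; the speaker IDs, the function name `make_target_absent`, and the data layout are hypothetical.

```python
import random


def make_target_absent(mixture_speakers, all_speakers, rng):
    """Pick an enrollment speaker who is not in the mixture.

    mixture_speakers: speaker IDs present in the mixture (1 for TA-S, 2+ for TA-M).
    all_speakers: pool of speaker IDs available for enrollment.
    The training target for the resulting example is silence.
    """
    candidates = sorted(set(all_speakers) - set(mixture_speakers))
    if not candidates:
        raise ValueError("no speaker outside the mixture is available")
    return rng.choice(candidates)


rng = random.Random(0)
pool = ["spk1", "spk2", "spk3", "spk4"]
target = make_target_absent(["spk1", "spk2"], pool, rng)
print(target in {"spk3", "spk4"})  # True
```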

6.2. Training Setup

In our experiment, we use the SPEX+ model as the baseline. We train our models using the Adam optimizer with an initial learning rate of 0.001 on 4-second segments. We set the maximum training epoch to 150 and adopt an early stop strategy when the loss increases on the validation set for 4 epochs. The dimension of the speaker embedding D is 256. The loss function weights are set to , , and for the WSJ0-2mix-extr dataset and for the Libri2Mix dataset.
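The early-stop rule can be sketched as a small counter. Reading "the loss increases on the validation set for 4 epochs" as "no improvement over the best validation loss for 4 consecutive epochs" is an assumption, and the class name `EarlyStopper` is hypothetical.

```python
class EarlyStopper:
    """Stop when validation loss has not improved for `patience` epochs in a row."""

    def __init__(self, patience=4):
        self.patience = patience
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch; return True when training should stop."""
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


stopper = EarlyStopper(patience=4)
decisions = [stopper.step(loss) for loss in [1.0, 0.9, 0.95, 0.96, 0.97, 0.98]]
print(decisions)  # [False, False, False, False, False, True]
```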

Due to the limited computational resources, we use a pre-trained SPEX+ model on the fully overlapped dataset to initialize the network parameters and further fine-tune the model with new loss functions or structures. Moreover, we find that applying our proposed gated cross-attention feature fusion block to the deep TCN stack can achieve the best performance.

6.3. Evaluation Metrics

We evaluate the model performance in terms of speech quality and the speaker extraction error rate. In the TP-M scenario, we use SI-SDR to evaluate the quality of speech extracted from the fully overlapped speech. In other scenarios, perfectly reconstructed signals or silence may lead to extreme values of SI-SDR or energy. Ref. [20] proposed that the zero point could be an approximate boundary for the speaker extraction error rate. Consequently, we use the negative SI-SDR rate and the positive energy rate to evaluate whether the model correctly outputs target speech or silence. For TP-S and TP-M scenarios, the negative SI-SDR rate is the proportion of test samples whose extraction results have negative SI-SDR values. The positive energy rate for TA-S and TA-M scenarios is calculated in the same way.
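Both error rates are simple proportions around the zero point. The sketch below assumes that SI-SDR and output energy are measured in dB and have already been computed per test sample; the function names are hypothetical.

```python
def negative_si_sdr_rate(si_sdr_values):
    """Share of target-present samples whose SI-SDR (dB) is below zero."""
    values = list(si_sdr_values)
    return sum(v < 0 for v in values) / len(values)


def positive_energy_rate(energy_values_db):
    """Share of target-absent samples whose output energy (dB) is above zero."""
    values = list(energy_values_db)
    return sum(v > 0 for v in values) / len(values)


print(negative_si_sdr_rate([12.3, -1.5, 8.0, -0.2]))     # 0.5
print(positive_energy_rate([-35.0, 4.1, -20.0, -50.0]))  # 0.25
```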

In addition, to validate the effectiveness of our model in downstream applications, we feed the extracted speech of the Libri2Mix test set into a pre-trained ASR model and report the word error rate (WER) and the word correct rate (Corr). Moreover, we report the insertion error rate (Ins) to clarify the model’s performance when the target speaker is silent. The ASR model used is the conformer model in ESPnet (https://github.com/espnet/espnet/tree/master/egs/librispeech/asr1, accessed on 1 September 2023).
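The three ASR metrics follow from a word-level edit-distance alignment: WER = (S + D + I) / N, Ins = I / N, and Corr = H / N, where N is the number of reference words. The sketch below is a plain dynamic-programming illustration with hypothetical function names, not the ESPnet scoring tool; when several alignments have the same minimal cost, the split between error types may differ from other scorers.

```python
def align_counts(ref, hyp):
    """Return (errors, subs, dels, ins, hits) for a minimal-cost word alignment."""
    # Each cell holds the counts for aligning ref[:i] with hyp[:j].
    prev = [(j, 0, 0, j, 0) for j in range(len(hyp) + 1)]
    for i in range(1, len(ref) + 1):
        cur = [(i, 0, i, 0, 0)]
        for j in range(1, len(hyp) + 1):
            e, s, d, n, h = prev[j - 1]
            if ref[i - 1] == hyp[j - 1]:
                diag = (e, s, d, n, h + 1)      # correct word
            else:
                diag = (e + 1, s + 1, d, n, h)  # substitution
            e, s, d, n, h = prev[j]
            delete = (e + 1, s, d + 1, n, h)    # reference word missing
            e, s, d, n, h = cur[j - 1]
            insert = (e + 1, s, d, n + 1, h)    # extra hypothesis word
            cur.append(min(diag, delete, insert, key=lambda c: c[0]))
        prev = cur
    return prev[len(hyp)]


def asr_scores(ref_words, hyp_words):
    """Compute WER, Ins, and Corr as fractions of the reference length."""
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    errors, subs, dels, ins, hits = align_counts(ref_words, hyp_words)
    n = len(ref_words)
    return {"WER": errors / n, "Ins": ins / n, "Corr": hits / n}


scores = asr_scores("the cat sat".split(), "the cat sat down now".split())
print(scores)  # WER = 2/3, Ins = 2/3, Corr = 1.0
```

In this example, every reference word is recognized (Corr = 1.0), yet the two extra words raise WER through insertions alone. This is the pattern expected when a model outputs an interfering speaker's words during target-silent segments.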

7. Experimental Results

7.1. Comparative Study for Universal Speaker Extraction

In this section, we compare our proposed method with baselines in terms of SI-SDR, extraction error rate, and ASR performance. The baseline models, respectively, use the SI-SDR loss [11], MSE loss [9], and SE-SI-SDR loss [16], which are jointly optimized with CE loss. Note that the method using SI-SDR loss is trained on Libri2Mix and WSJ0-2mix-extr, while the other methods are trained on Libri2Mix (USE) and WSJ0-2mix-extr (USE) datasets.

In Table 2 and Table 3, our baseline is the method using SI-SDR loss, which cannot handle the TA-S and TA-M scenarios. SE-SI-SDR and MSE are two loss functions that can optimize the model in all four scenarios, and joint loss is our loss function. All of the above loss functions are used to train the SPEX+ model structure. In the last row, “+GCA” denotes that we use our proposed gated cross-attention (GCA) universal speaker extraction model together with our joint loss.

Table 2. Comparative study of universal speaker extraction methods on the WSJ0-2mix-extr test set. We present the SI-SDR in the TP-M scenario and the extraction error rates in four scenarios.

Table 3. Comparative study of universal speaker extraction methods on Libri2Mix in terms of SI-SDR in the TP-M scenario and ASR performance.

Compared with SI-SDR loss, the other three loss functions enable the model to work in TA-M and TA-S scenarios. Among them, our proposed joint loss function outperforms the comparison methods. In TA-M and TA-S scenarios, the joint loss achieves relatively low error rates of 26.79% and 9.36%, respectively, indicating its ability to output silence effectively. In the TP-M scenario, our joint loss achieves the best performance compared with MSE and SE-SI-SDR. We believe that the flexible loss weights and the soft threshold are the reasons for the stable performance.

By comparing the experimental results in the TP-M scenario, we find that including TA-S and TA-M speech in the training set can degrade the extraction performance for TP-M and TP-S speech. This could be because the baseline model struggles to distinguish between extracting target signals and outputting silence. Our proposed GCA mechanism offers a solution to this problem. The last two rows show that the GCA-USE model significantly reduces the extraction error rate in TA-M and TA-S scenarios while maintaining high speaker extraction quality in the TP-M scenario. The results suggest that the proposed gated cross-attention mechanism has advantages in distinguishing the presence of target speakers, thus leading to superior performance.

In Table 3, we first report the ASR performance on the single-speaker LibriSpeech dataset to show the upper bound of the TSE output. Then, we perform ASR on the extraction results of the speaker extraction models and compare the performance. Our GCA-USE model achieves the lowest insertion error rate of 1.1% and reduces WER by 7.1%. This demonstrates the effectiveness of our model on downstream tasks.

7.2. Comparative Study with Target-Speaker Extraction

This section focuses on the TP-M scenario and compares the performance of target-speaker extraction (TSE) and universal speaker extraction (USE) models on the Libri2Mix dataset, as shown in Table 4. First, comparing TSE models with USE models, we observe that TSE models generally outperform USE models in extracting fully overlapped speech. This is because USE models are trained with data from various scenarios, which makes them universal at the cost of some performance in the TP-M scenario. Nevertheless, our proposed GCA-USE model not only achieves the best performance among USE models but also performs comparably to the latest TSE models.

Table 4. Comparison of target-speaker extraction and universal speaker extraction performance on the Libri2Mix dataset. We present the number of parameters, SI-SDRi, and SDR in the TP-M scenario.

Secondly, we compare the parameter numbers of different models. Our model exhibits an increase of merely 0.3 M parameters over the baseline model while achieving significant performance improvements. Furthermore, we evaluate the computational cost of our model and the baseline model, with a ratio of 7.4:7.9. These results indicate that our model achieves superior performance with only a slight increase in both the number of parameters and computational costs.

7.3. Ablation Study

In this section, we conduct an ablation study on LibriMix to explore the effectiveness of the gate mechanism and cross-attention mechanism. Specifically, we remove the two mechanisms from the proposed GCA model and present the performance in Table 5. When we remove the CA and only use the gate in the model, we treat the concatenated feature of speaker embedding and speech representation as the gate rather than using the cross-attention weight. When we remove the gate, the output of cross-attention is the attention score using softmax, and we apply a dot product with the value V rather than element-wise multiplication with the gate. When we remove both the gate and CA, the model reverts to the baseline SPEX+ model.

Table 5. Ablation experiments using the proposed joint loss on Libri2Mix. Note that “Gate” refers to the gate mechanism, “CA” refers to the cross-attention mechanism, and “-” means remove.

Compared to the baseline model in the last row, the results in the second and third rows show that using either the cross-attention mechanism or gate mechanism alone only achieves a slight improvement in each metric. When we employ the two mechanisms together, we achieve a significant improvement in all scenarios.

According to our analysis, without the correlation between the speaker and speech provided by cross-attention, it is challenging for the gate mechanism to effectively control the information flow. Likewise, using cross-attention directly has a limited impact on subsequent calculations. The results of the ablation study highlight the complementary nature of the gate and cross-attention mechanisms. The gate mechanism effectively utilizes the cross-attention mechanism to preserve the features of the target-present speech while filtering out the features of the target-absent speech.

8. Conclusions

In this paper, we propose a novel gated cross-attention universal speaker extraction model and a joint loss function. The proposed joint loss enables the model to extract target speech in various scenarios. Additionally, the gated cross-attention mechanism helps the model distinguish whether the target speaker is present and then focuses on extracting the target-present part. The experimental results demonstrate the effectiveness of our approach in various communication scenarios.

Author Contributions

Conceptualization, Y.Z. and Q.Y.; methodology, Y.Z.; software, Y.Z.; validation, B.L. and Y.Y.; formal analysis, Y.Z.; investigation, Y.Z.; resources, B.L. and Y.Y.; data curation, B.L. and Y.Y.; writing—original draft preparation, Y.Z.; writing—review and editing, Q.Y. and Y.Z.; visualization, Y.Z.; supervision, Q.Y.; project administration, Q.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within this article.

Conflicts of Interest

Authors Bijing Liu and Yong Yang were employed by the companies NARI Technology Co., Ltd. and Beijing Kedong Electric Power Control System Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Hershey, J.R.; Chen, Z.; Le Roux, J.; Watanabe, S. Deep clustering: Discriminative embeddings for segmentation and separation. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 31–35. [Google Scholar] [CrossRef]

- Luo, Y.; Mesgarani, N. TaSNet: Time-Domain Audio Separation Network for Real-Time, Single-Channel Speech Separation. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 696–700. [Google Scholar] [CrossRef]

- Luo, Y.; Chen, Z.; Yoshioka, T. Dual-Path RNN: Efficient Long Sequence Modeling for Time-Domain Single-Channel Speech Separation. In Proceedings of the ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 46–50. [Google Scholar] [CrossRef]

- Luo, Y.; Mesgarani, N. Conv-TasNet: Surpassing Ideal Time–Frequency Magnitude Masking for Speech Separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2019, 27, 1256–1266. [Google Scholar] [CrossRef]

- Subakan, C.; Ravanelli, M.; Cornell, S.; Bronzi, M.; Zhong, J. Attention Is All You Need In Speech Separation. In Proceedings of the ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 21–25. [Google Scholar] [CrossRef]

- Žmolíková, K.; Delcroix, M.; Kinoshita, K.; Ochiai, T.; Nakatani, T.; Burget, L.; Černocký, J. SpeakerBeam: Speaker Aware Neural Network for Target Speaker Extraction in Speech Mixtures. IEEE J. Sel. Top. Signal Process. 2019, 13, 800–814. [Google Scholar] [CrossRef]

- Delcroix, M.; Zmolikova, K.; Kinoshita, K.; Ogawa, A.; Nakatani, T. Single Channel Target Speaker Extraction and Recognition with Speaker Beam. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5554–5558. [Google Scholar] [CrossRef]

- Delcroix, M.; Zmolikova, K.; Ochiai, T.; Kinoshita, K.; Araki, S.; Nakatani, T. Compact Network for Speakerbeam Target Speaker Extraction. In Proceedings of the 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 6965–6969.

- Wang, Q.; Muckenhirn, H.; Wilson, K.; Sridhar, P.; Wu, Z.; Hershey, J.R.; Saurous, R.A.; Weiss, R.J.; Jia, Y.; Moreno, I.L. VoiceFilter: Targeted Voice Separation by Speaker-Conditioned Spectrogram Masking. Proc. Interspeech 2019, 2728–2732.

- Wang, Q.; Moreno, I.L.; Saglam, M.; Wilson, K.; Chiao, A.; Liu, R.; He, Y.; Li, W.; Pelecanos, J.; Nika, M.; et al. VoiceFilter-Lite: Streaming Targeted Voice Separation for On-Device Speech Recognition. Proc. Interspeech 2020, 2677–2681.

- Ge, M.; Xu, C.; Wang, L.; Chng, E.S.; Dang, J.; Li, H. SpEx+: A Complete Time Domain Speaker Extraction Network. Proc. Interspeech 2020, 1406–1410.

- Xu, C.; Rao, W.; Chng, E.S.; Li, H. SpEx: Multi-Scale Time Domain Speaker Extraction Network. IEEE/ACM Trans. Audio Speech Lang. Process. 2020, 28, 1370–1384.

- Ge, M.; Xu, C.; Wang, L.; Chng, E.S.; Dang, J.; Li, H. Multi-Stage Speaker Extraction with Utterance and Frame-Level Reference Signals. In Proceedings of the 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 6109–6113.

- Xu, C.; Rao, W.; Chng, E.S.; Li, H. Time-Domain Speaker Extraction Network. In Proceedings of the 2019 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), Singapore, 14–18 December 2019; pp. 327–334.

- Liu, K.; Du, Z.; Wan, X.; Zhou, H. X-SEPFORMER: End-To-End Speaker Extraction Network with Explicit Optimization on Speaker Confusion. In Proceedings of the 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Borsdorf, M.; Xu, C.; Li, H.; Schultz, T. Universal Speaker Extraction in the Presence and Absence of Target Speakers for Speech of One and Two Talkers. Proc. Interspeech 2021, 1469–1473.

- Pan, Z.; Ge, M.; Li, H. USEV: Universal Speaker Extraction with Visual Cue. IEEE/ACM Trans. Audio Speech Lang. Process. 2022, 30, 3032–3045.

- Zhao, Z.; Yang, D.; Gu, R.; Zhang, H.; Zou, Y. Target Confusion in End-to-end Speaker Extraction: Analysis and Approaches. Proc. Interspeech 2022, 5333–5337.

- Le Roux, J.; Wisdom, S.; Erdogan, H.; Hershey, J.R. SDR—Half-baked or Well Done? In Proceedings of the 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 626–630.

- Zhang, Z.; He, B.; Zhang, Z. X-TaSNet: Robust and Accurate Time-Domain Speaker Extraction Network. Proc. Interspeech 2020, 1421–1425.

- Wisdom, S.; Erdogan, H.; Ellis, D.P.W.; Serizel, R.; Turpault, N.; Fonseca, E.; Salamon, J.; Seetharaman, P.; Hershey, J.R. What’s all the Fuss about Free Universal Sound Separation Data? In Proceedings of the 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 186–190.

- Delcroix, M.; Kinoshita, K.; Ochiai, T.; Zmolikova, K.; Sato, H.; Nakatani, T. Listen only to me! How well can target speech extraction handle false alarms? Proc. Interspeech 2022, 216–220.

- von Neumann, T.; Kinoshita, K.; Böddeker, C.; Delcroix, M.; Haeb-Umbach, R. SA-SDR: A Novel Loss Function for Separation of Meeting Style Data. In Proceedings of the 2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 6022–6026.

- Lin, Q.; Yang, L.; Wang, X.; Xie, L.; Jia, C.; Wang, J. Sparsely Overlapped Speech Training in the Time Domain: Joint Learning of Target Speech Separation and Personal VAD Benefits. In Proceedings of the 2021 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Tokyo, Japan, 14–17 December 2021; pp. 689–693.

- Delcroix, M.; Zmolíková, K.; Ochiai, T.; Kinoshita, K.; Nakatani, T. Speaker Activity Driven Neural Speech Extraction. In Proceedings of the 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 6099–6103.

- Wang, W.; Xu, C.; Ge, M.; Li, H. Neural Speaker Extraction with Speaker-Speech Cross-Attention Network. Proc. Interspeech 2021, 3535–3539.

- Lea, C.; Flynn, M.D.; Vidal, R.; Reiter, A.; Hager, G.D. Temporal Convolutional Networks for Action Segmentation and Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1003–1012.

- Dauphin, Y.N.; Fan, A.; Auli, M.; Grangier, D. Language Modeling with Gated Convolutional Networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; Volume 70, pp. 933–941.

- Cosentino, J.; Pariente, M.; Cornell, S.; Deleforge, A.; Vincent, E. LibriMix: An Open-Source Dataset for Generalizable Speech Separation. arXiv 2020, arXiv:2005.11262.

- Panayotov, V.; Chen, G.; Povey, D.; Khudanpur, S. Librispeech: An ASR corpus based on public domain audio books. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, QLD, Australia, 19–24 April 2015; pp. 5206–5210.

- Delcroix, M.; Ochiai, T.; Zmolikova, K.; Kinoshita, K.; Tawara, N.; Nakatani, T.; Araki, S. Improving Speaker Discrimination of Target Speech Extraction With Time-Domain Speakerbeam. In Proceedings of the 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 691–695.

- Peng, J.; Delcroix, M.; Ochiai, T.; Plchot, O.; Araki, S.; Černocký, J. Target Speech Extraction with Pre-Trained Self-Supervised Learning Models. In Proceedings of the 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 10421–10425.

- Li, X.; Liu, R.; Huang, H.; Wu, Q. Contrastive Learning for Target Speaker Extraction with Attention-Based Fusion. IEEE/ACM Trans. Audio Speech Lang. Process. 2024, 32, 178–188.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).