Supervised and Few-Shot Learning for Aspect-Based Sentiment Analysis of Instruction Prompt

Abstract

1. Introduction

- (1) We transform the ABSA task into a unified generative task, eliminating the need to design a specific network structure for each subtask. This also strengthens the connection between sentiment elements in the compound tasks. For example, on the Laptop 14 dataset, the F1 scores for the AESC and ASTE tasks improve by 4.86 and 10.17 percentage points, respectively, relative to the Dual-MRC and Jet+BERT baselines.

- (2) We propose three instruction prompts to redesign the input and output formats of the model. This approach adapts the downstream tasks to the pre-trained model, reduces the mismatch between the pre-training objective and the downstream tasks, and makes better use of the pre-trained model.

- (3) We use the instruction prompt method for few-shot learning on ABSA tasks. Experimental results show that, with only about 10% of the original training data, the model can reach roughly 80% of the performance of fully supervised learning. This reduces annotation costs while still yielding good models.

- (4) We conducted experiments on three ABSA tasks using both fully supervised and few-shot learning on four benchmark datasets. Our approach outperformed the state-of-the-art in nearly all cases. On the Laptop 14 dataset, our fully supervised approach increases the F1 score on the ASTE task by 1.46 percentage points, while our few-shot approach achieves an F1 score of 50.62%, which is about 82% of the performance of the fully supervised model.

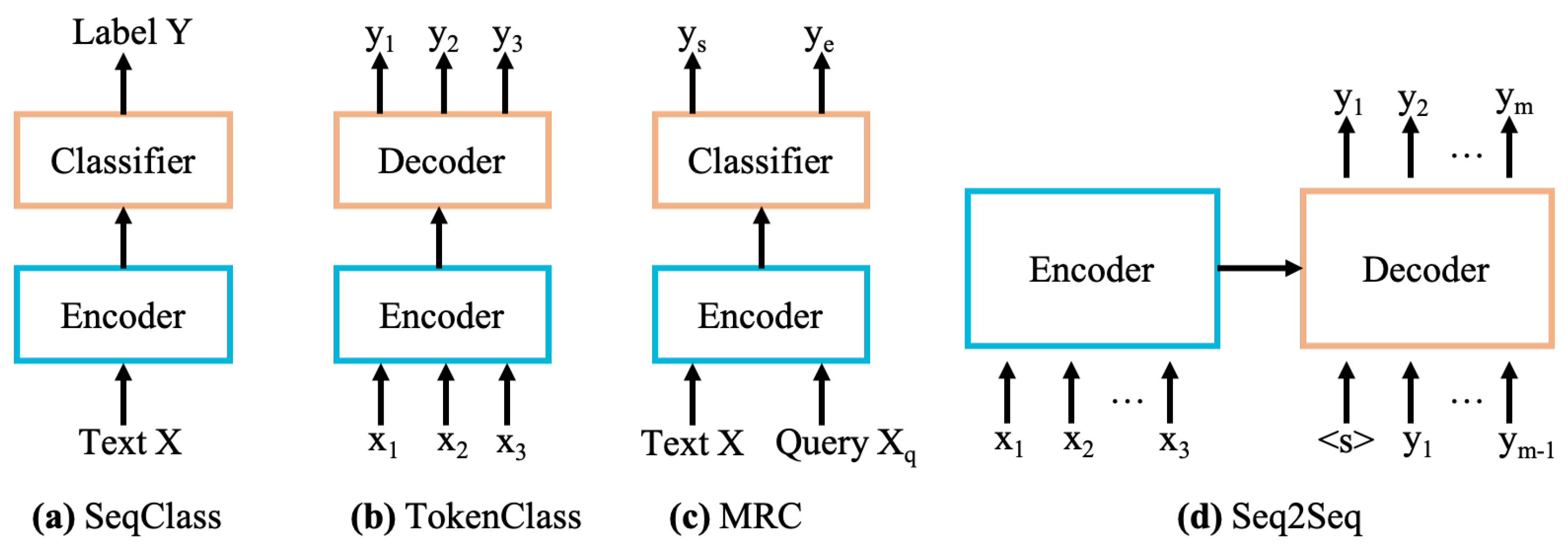

2. Related Work

3. Methodology

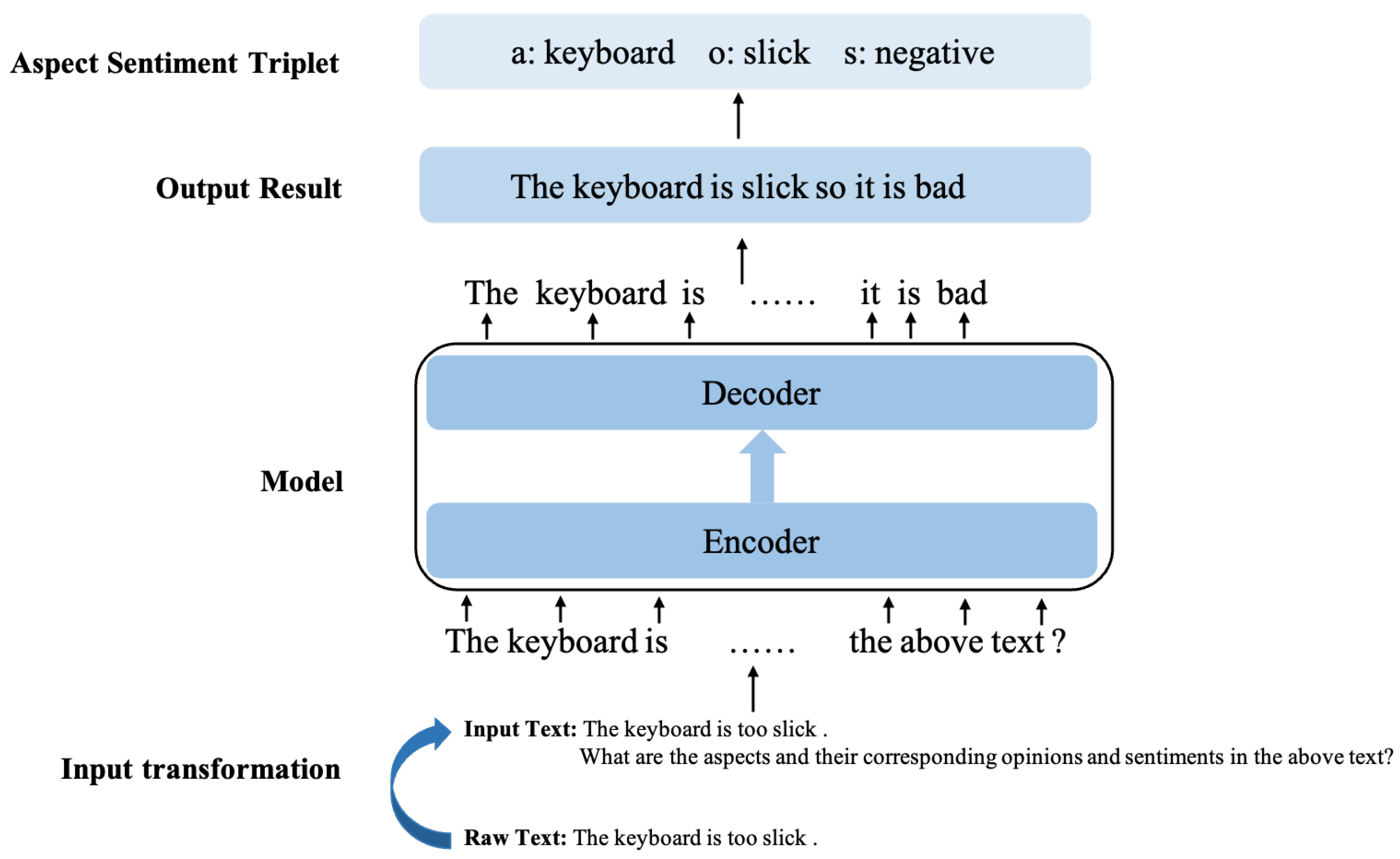

3.1. Input Transformation

3.2. Sequence-to-Sequence Learning

4. Experiments

4.1. Dataset

4.2. Evaluation Metrics

4.3. Experiment Details

4.4. Baselines and Models for Comparison

5. Results and Discussions

5.1. Main Results

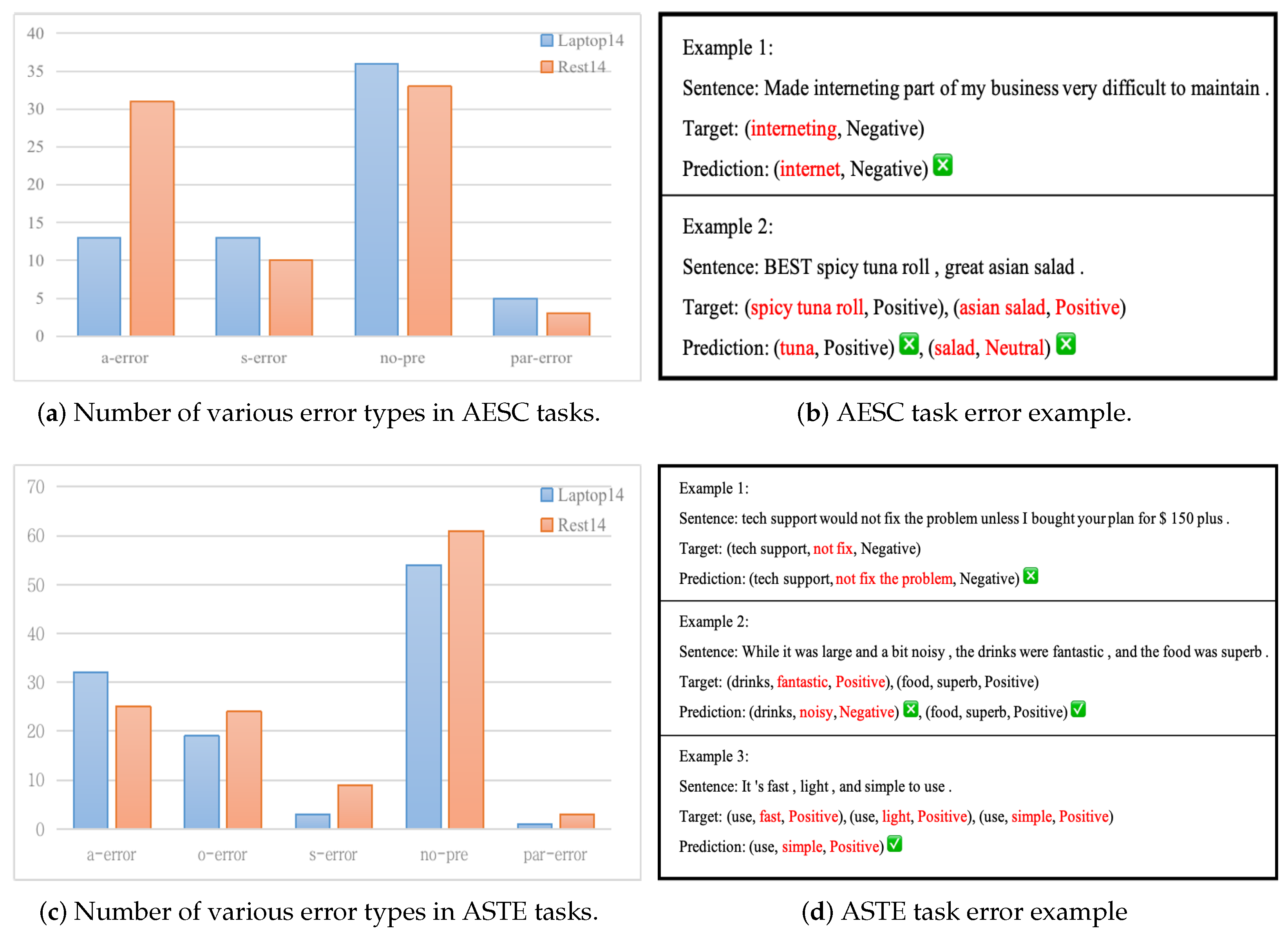

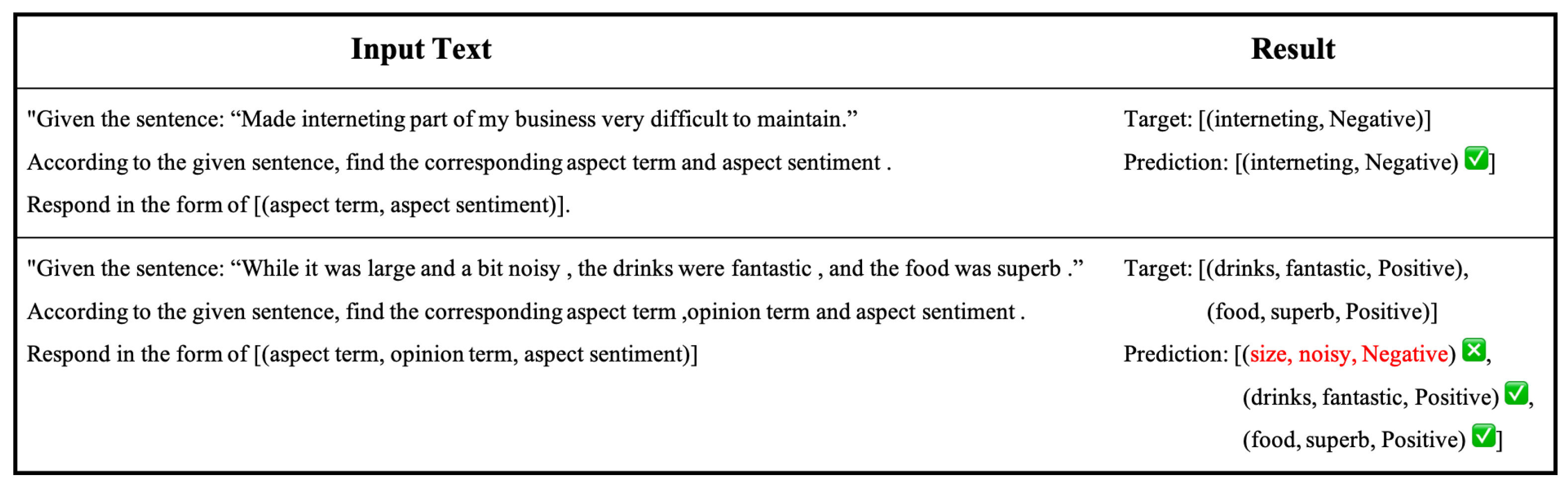

5.2. Error Analysis and Case Study

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Prompt Template Name | Prompt Template: Input | Prompt Template: Output | Example: Input | Example: Output | Target: Output |
|---|---|---|---|---|---|
| ASC-IPT-a | [T] What is the sentiment of the [a] in the above text? | The [a] is [s] | The keyboard is too slick. What is the sentiment of the keyboard in the above text? | The keyboard is bad. | [negative] |
| ASC-IPT-b | Given the text: [T] ([a]) What is the meaning of the above text? | Summary: the [a] is [s] | Given the text: The keyboard is too slick. (keyboard) What is the meaning of the above text? | Summary: the keyboard is bad. | [negative] |
| ASC-IPT-c | Given the text: [T] ([a]) Please briefly summarize the above text. | In summary, the [a] is [s] | Given the text: The keyboard is too slick. (keyboard) Please briefly summarize the above text. | In summary, the keyboard is bad. | [negative] |
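
The ASC templates above can be rendered mechanically by filling the `[T]`, `[a]`, and `[s]` slots. The following is a minimal sketch, not the authors' implementation: the names `ASC_TEMPLATES`, `SENTIMENT_WORDS`, and `build_asc_pair` are hypothetical, and only the mapping negative → "bad" appears in the table; the words used for positive and neutral are assumptions.

```python
# Input/output templates copied from the ASC table; {text}, {aspect}, and {word}
# stand in for the [T], [a], and [s] slots.
ASC_TEMPLATES = {
    "ASC-IPT-a": (
        "{text} What is the sentiment of the {aspect} in the above text?",
        "The {aspect} is {word}",
    ),
    "ASC-IPT-b": (
        "Given the text: {text} ({aspect}) What is the meaning of the above text?",
        "Summary: the {aspect} is {word}",
    ),
    "ASC-IPT-c": (
        "Given the text: {text} ({aspect}) Please briefly summarize the above text.",
        "In summary, the {aspect} is {word}",
    ),
}

# Only "negative" -> "bad" is shown in the table; the other two are assumptions.
SENTIMENT_WORDS = {"negative": "bad", "positive": "good", "neutral": "ok"}


def build_asc_pair(template_name, text, aspect, sentiment):
    """Return the (model input, target output) strings for one ASC example."""
    input_template, output_template = ASC_TEMPLATES[template_name]
    source = input_template.format(text=text, aspect=aspect)
    target = output_template.format(aspect=aspect, word=SENTIMENT_WORDS[sentiment])
    return source, target


if __name__ == "__main__":
    for name in ASC_TEMPLATES:
        source, target = build_asc_pair(
            name, "The keyboard is too slick.", "keyboard", "negative"
        )
        print(name, "|", source, "->", target)
```

Keeping the templates in a single dictionary makes it straightforward to train and compare the three variants with the same data pipeline.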

| Prompt Template Name | Prompt Template: Input | Prompt Template: Output | Example: Input | Example: Output | Target: Output |
|---|---|---|---|---|---|
| AESC-IPT-a | [T] What are the aspects and sentiments in the above text? | The [a] is [s] | But the staff was so horrible to us. What are the aspects and sentiments in the above text? | The staff is bad. | [staff, negative] |
| AESC-IPT-b | Given the text: [T] What is the meaning of the above text? | Summary: the [a] is [s] | Given the text: But the staff was so horrible to us. What is the meaning of the above text? | Summary: the staff is bad. | [staff, negative] |
| AESC-IPT-c | Given the text: [T] Please briefly summarize the above text. | In summary, the [a] is [s] | Given the text: But the staff was so horrible to us. Please briefly summarize the above text. | In summary, the staff is bad. | [staff, negative] |

| Prompt Template Name | Prompt Template: Input | Prompt Template: Output | Example: Input | Example: Output | Target: Output |
|---|---|---|---|---|---|
| ASTE-IPT-a | [T] What are the aspects and their corresponding opinions and sentiments in the above text? | The [a] is [o] so it is [s] | This place is incredibly tiny. What are the aspects and their corresponding opinions and sentiments in the above text? | The place is tiny so it is bad | [place, tiny, negative] |
| ASTE-IPT-b | Given the text: [T] What is the meaning of the above text? | Summary: the [a] is [o] so it is [s] | Given the text: This place is incredibly tiny. What is the meaning of the above text? | Summary: the place is tiny so it is bad | [place, tiny, negative] |
| ASTE-IPT-c | Given the text: [T] Please briefly summarize the above text. | In summary, the [a] is [o] so it is [s] | Given the text: This place is incredibly tiny. Please briefly summarize the above text. | In summary, the place is tiny so it is bad | [place, tiny, negative] |
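
Going from a generated sentence back to the target triplet amounts to inverting the output template. The sketch below shows one way to do this with a regular expression; it is an illustration under stated assumptions rather than the decoding procedure used in the paper. The function name `decode_triplet` and the word-to-label mapping are hypothetical (only "bad" → negative is shown in the table), and the sketch handles a single generated sentence, since how multiple triplets are concatenated is not specified here.

```python
import re

# Only "bad" -> "negative" is shown in the table; the other two are assumptions.
WORD_TO_SENTIMENT = {"bad": "negative", "good": "positive", "ok": "neutral"}

# Matches the three ASTE output templates:
#   "The [a] is [o] so it is [s]"
#   "Summary: the [a] is [o] so it is [s]"
#   "In summary, the [a] is [o] so it is [s]"
TRIPLET_PATTERN = re.compile(
    r"^(?:Summary: |In summary, )?the (?P<aspect>.+?) is (?P<opinion>.+?)"
    r" so it is (?P<word>\w+)\.?$",
    re.IGNORECASE,
)


def decode_triplet(generated):
    """Return [aspect, opinion, sentiment], or None if the text does not fit."""
    match = TRIPLET_PATTERN.match(generated.strip())
    if match is None:
        return None
    sentiment = WORD_TO_SENTIMENT.get(match["word"].lower())
    if sentiment is None:
        return None
    return [match["aspect"], match["opinion"], sentiment]


if __name__ == "__main__":
    for sentence in (
        "The place is tiny so it is bad",
        "Summary: the place is tiny so it is bad",
        "In summary, the place is tiny so it is bad",
    ):
        print(decode_triplet(sentence))
```

Returning `None` for text that does not follow the template lets malformed generations be counted as missed predictions instead of raising an error.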

(a) Distribution of the Laptop 14 and Rest 14 Few-Sample Datasets

| Split | Laptop 14 (K = 5) | Laptop 14 (K = 10) | Laptop 14 (K = 20) | Laptop 14 (K = 40) | Laptop 14 (Full) | Rest 14 (K = 5) | Rest 14 (K = 10) | Rest 14 (K = 20) | Rest 14 (K = 40) | Rest 14 (Full) |
|---|---|---|---|---|---|---|---|---|---|---|
| Train | 20 | 35 | 68 | 129 | 1309 | 18 | 35 | 62 | 125 | 1796 |
| Dev | 24 | 33 | 64 | 123 | 150 | 23 | 31 | 67 | 128 | 180 |
| Test | 411 | 411 | 411 | 411 | 411 | 600 | 600 | 600 | 600 | 600 |

(b) Distribution of the Rest 15 and Rest 16 Few-Sample Datasets

| Split | Rest 15 (K = 5) | Rest 15 (K = 10) | Rest 15 (K = 20) | Rest 15 (K = 40) | Rest 15 (Full) | Rest 16 (K = 5) | Rest 16 (K = 10) | Rest 16 (K = 20) | Rest 16 (K = 40) | Rest 16 (Full) |
|---|---|---|---|---|---|---|---|---|---|---|
| Train | 18 | 33 | 63 | 122 | 750 | 16 | 32 | 62 | 126 | 1114 |
| Dev | 23 | 37 | 62 | 82 | 82 | 19 | 36 | 65 | 118 | 118 |
| Test | 401 | 401 | 401 | 401 | 401 | 420 | 420 | 420 | 420 | 420 |
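
To relate the few-sample settings to the "about 10% of the original data" figure, the share of the full training set used at each K can be computed directly from the Train rows above. This is a small arithmetic sketch; the names `TRAIN_SIZES` and `train_fraction` are illustrative only.

```python
# Training-set sizes copied from the Train rows of panels (a) and (b).
TRAIN_SIZES = {
    "Laptop 14": {"K=5": 20, "K=10": 35, "K=20": 68, "K=40": 129, "Full": 1309},
    "Rest 14": {"K=5": 18, "K=10": 35, "K=20": 62, "K=40": 125, "Full": 1796},
    "Rest 15": {"K=5": 18, "K=10": 33, "K=20": 63, "K=40": 122, "Full": 750},
    "Rest 16": {"K=5": 16, "K=10": 32, "K=20": 62, "K=40": 126, "Full": 1114},
}


def train_fraction(dataset, setting):
    """Fraction of the full training set used in the given few-sample setting."""
    sizes = TRAIN_SIZES[dataset]
    return sizes[setting] / sizes["Full"]


if __name__ == "__main__":
    for dataset in TRAIN_SIZES:
        share = train_fraction(dataset, "K=40")
        print(f"{dataset}: K=40 uses {share:.1%} of the full training set")
```

With these numbers, the K = 40 setting corresponds to roughly 7% to 16% of the full training data depending on the dataset, and to just under 10% on Laptop 14.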

(a) Laptop 14

| Model | ASC | AESC | ASTE |
|---|---|---|---|
| LCFS | 77.13 | - | - |
| A-ABSA | 77.3 | - | - |
| RACL | - | 63.4 | - |
| Dual-MRC | - | 65.94 | - |
| Pipeline | - | - | 42.87 |
| Jet+BERT | - | - | 51.04 |
| GAS | 86.36 | 66.72 | 53.48 |
| PARA | 86.31 | 68.93 | 59.75 |
| IPT-a | 85.53 | 70.8 | 61.21 |
| IPT-b | 86.36 | 69.05 | 60.42 |
| IPT-c | 86.56 | 70.36 | 60.45 |

(b) Rest 14

| Model | ASC | AESC | ASTE |
|---|---|---|---|
| LCFS | 80.31 | - | - |
| A-ABSA | 81.21 | - | - |
| RACL | - | 75.42 | - |
| Dual-MRC | - | 75.95 | - |
| Pipeline | - | - | 51.46 |
| Jet+BERT | - | - | 62.4 |
| GAS | 90.84 | 74.52 | 66.45 |
| PARA | 90.8 | 76.96 | 71.42 |
| IPT-a | 90.13 | 77.6 | 71.53 |
| IPT-b | 90.65 | 76.9 | 71.44 |
| IPT-c | 91.17 | 77.16 | 71.4 |

(c) Rest 15

| Model | ASC | AESC | ASTE |
|---|---|---|---|
| RACL | - | 66.05 | - |
| Dual-MRC | - | 65.08 | - |
| Pipeline | - | - | 42.87 |
| Jet+BERT | - | - | 51.04 |
| GAS | 90.57 | 64.45 | 53.48 |
| PARA | 91.79 | 66.96 | 59.75 |
| IPT-a | 91.48 | 67.98 | 61.21 |
| IPT-b | 91.74 | 67.87 | 60.42 |
| IPT-c | 91.94 | 68.47 | 60.45 |

(d) Rest 16

| Model | ASC | AESC | ASTE |
|---|---|---|---|
| Pipeline | - | - | 54.21 |
| Jet+BERT | - | - | 63.83 |
| GAS | 91 | 69.21 | 64.71 |
| PARA | 91.42 | 73.86 | 70.99 |
| IPT-a | 91.55 | 73.83 | 70.58 |
| IPT-b | 91.81 | 74.1 | 71.03 |
| IPT-c | 91.82 | 74.38 | 71.14 |
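
The headline gains on Laptop 14 can be reproduced from panel (a) as simple differences in F1. The sketch below uses only values copied from that panel; the dictionary and function names are illustrative.

```python
# F1 scores on Laptop 14, copied from panel (a) of the results above.
LAPTOP14_F1 = {
    "AESC": {"Dual-MRC": 65.94, "PARA": 68.93, "IPT-a": 70.8},
    "ASTE": {"Jet+BERT": 51.04, "PARA": 59.75, "IPT-a": 61.21},
}


def gain(task, ours, baseline):
    """Absolute F1 difference in percentage points, rounded to two decimals."""
    scores = LAPTOP14_F1[task]
    return round(scores[ours] - scores[baseline], 2)


if __name__ == "__main__":
    comparisons = [("AESC", "Dual-MRC"), ("ASTE", "Jet+BERT"), ("ASTE", "PARA")]
    for task, baseline in comparisons:
        points = gain(task, "IPT-a", baseline)
        print(f"{task}: IPT-a vs {baseline}: +{points} points")
```

These differences are absolute percentage points of F1, not relative improvements.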

| Dataset | Task | Prompt Template | K = 5 | K = 10 | K = 20 | K = 40 |
|---|---|---|---|---|---|---|
| Laptop 14 | ASC | IPT-a | 73.8 | 77.67 | 80.08 | 83.19 |
| Laptop 14 | ASC | IPT-b | 74.43 | 77.6 | 80.9 | 83.33 |
| Laptop 14 | ASC | IPT-c | 75.32 | 78.52 | 81.26 | 84.73 |
| Laptop 14 | AESC | IPT-a | 31.64 | 38.02 | 43.65 | 52.24 |
| Laptop 14 | AESC | IPT-b | 26.31 | 32.09 | 40.55 | 47.47 |
| Laptop 14 | AESC | IPT-c | 26.76 | 31.62 | 39.53 | 48.75 |
| Laptop 14 | ASTE | IPT-a | 30.72 | 37.11 | 40.48 | 50.62 |
| Laptop 14 | ASTE | IPT-b | 30.83 | 35.24 | 39.25 | 49.15 |
| Laptop 14 | ASTE | IPT-c | 31.35 | 35.45 | 41.3 | 48.14 |
| Rest 14 | ASC | IPT-a | 74.73 | 79.46 | 86.48 | 86.63 |
| Rest 14 | ASC | IPT-b | 76.6 | 81.8 | 87.76 | 88.05 |
| Rest 14 | ASC | IPT-c | 78.46 | 83.24 | 86.94 | 87.64 |
| Rest 14 | AESC | IPT-a | 44.79 | 50.71 | 58.27 | 61.96 |
| Rest 14 | AESC | IPT-b | 41.91 | 48.95 | 56.52 | 62 |
| Rest 14 | AESC | IPT-c | 44.81 | 47.97 | 57.48 | 62.52 |
| Rest 14 | ASTE | IPT-a | 44.52 | 48.08 | 54.51 | 58.54 |
| Rest 14 | ASTE | IPT-b | 43.84 | 49.56 | 53.37 | 59.05 |
| Rest 14 | ASTE | IPT-c | 43.66 | 49.64 | 53.64 | 59.25 |
| Rest 15 | ASC | IPT-a | 73.65 | 79.03 | 83.48 | 89.34 |
| Rest 15 | ASC | IPT-b | 71.05 | 77.95 | 83.99 | 88.65 |
| Rest 15 | ASC | IPT-c | 70.96 | 76.89 | 84.41 | 87.37 |
| Rest 15 | AESC | IPT-a | 39.94 | 50.61 | 54.04 | 56.62 |
| Rest 15 | AESC | IPT-b | 35.54 | 45.8 | 49.01 | 53.19 |
| Rest 15 | AESC | IPT-c | 35.58 | 45.02 | 48.27 | 56.66 |
| Rest 15 | ASTE | IPT-a | 31.91 | 40.38 | 48.61 | 56.6 |
| Rest 15 | ASTE | IPT-b | 34.11 | 39.1 | 47.18 | 57.26 |
| Rest 15 | ASTE | IPT-c | 34.89 | 41.17 | 46.66 | 54.91 |
| Rest 16 | ASC | IPT-a | 69.16 | 81.24 | 83.74 | 89.72 |
| Rest 16 | ASC | IPT-b | 69.49 | 81.52 | 84.74 | 88.87 |
| Rest 16 | ASC | IPT-c | 64.08 | 79.4 | 84.93 | 89.02 |
| Rest 16 | AESC | IPT-a | 41.61 | 52.01 | 55.87 | 60.64 |
| Rest 16 | AESC | IPT-b | 42.53 | 51.46 | 52.33 | 60.37 |
| Rest 16 | AESC | IPT-c | 40.94 | 50.36 | 54.17 | 60.68 |
| Rest 16 | ASTE | IPT-a | 37.11 | 43.55 | 53.32 | 62.78 |
| Rest 16 | ASTE | IPT-b | 39.1 | 44.91 | 52.72 | 63.49 |
| Rest 16 | ASTE | IPT-c | 39.46 | 45.06 | 54.13 | 63.51 |
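
One way to read the few-shot results is as the share of fully supervised F1 that each K retains. The sketch below does this for IPT-a on Laptop 14, pairing the few-shot rows above with the fully supervised IPT-a scores reported for Laptop 14; the names `FULL_F1`, `FEW_SHOT_F1`, and `retention` are illustrative.

```python
K_VALUES = (5, 10, 20, 40)

# IPT-a on Laptop 14: fully supervised F1 and few-shot F1 for K = 5, 10, 20, 40.
FULL_F1 = {"ASC": 85.53, "AESC": 70.8, "ASTE": 61.21}
FEW_SHOT_F1 = {
    "ASC": (73.8, 77.67, 80.08, 83.19),
    "AESC": (31.64, 38.02, 43.65, 52.24),
    "ASTE": (30.72, 37.11, 40.48, 50.62),
}


def retention(task):
    """Map each K to few-shot F1 divided by fully supervised F1 for the task."""
    full = FULL_F1[task]
    return {k: score / full for k, score in zip(K_VALUES, FEW_SHOT_F1[task])}


if __name__ == "__main__":
    for task in FULL_F1:
        shares = ", ".join(f"K={k}: {r:.1%}" for k, r in retention(task).items())
        print(f"{task}: {shares}")
```

For ASTE at K = 40 this gives 50.62 / 61.21, or a little under 83%, in line with the roughly 82% figure quoted for Laptop 14.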

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Huang, J.; Cui, Y.; Liu, J.; Liu, M. Supervised and Few-Shot Learning for Aspect-Based Sentiment Analysis of Instruction Prompt. Electronics 2024, 13, 1924. https://doi.org/10.3390/electronics13101924
