Abstract

During processing in video systems, video inevitably suffers from distortion, which degrades quality and affects the user experience. Therefore, it is of great importance to design an accurate and effective objective video quality assessment (VQA) method. In this paper, by considering the multi-dimensional characteristics of video and the visual perceptual mechanism, a two-stream convolutional network for VQA, named TSCNN-VQA, is proposed based on spatial–temporal analysis. Specifically, TSCNN-VQA first extracts spatial and temporal features with two different convolutional neural network branches. After that, a spatial–temporal joint feature fusion module is constructed to obtain the joint spatial–temporal features. Meanwhile, TSCNN-VQA also integrates an attention module so that the process conforms to the way the visual system perceives video information. Finally, the overall quality is obtained by non-linear regression. The experimental results on both the LIVE and CSIQ VQA datasets show that the performance indicators obtained by TSCNN-VQA are higher than those of existing VQA methods, which demonstrates that TSCNN-VQA can accurately evaluate video quality and has better consistency with the human visual system.

1. Introduction

In recent decades, video has become the most important way for humans to access information. However, due to the limits of device performance and processing methods, video inevitably suffers from distortion in acquisition, compression, transmission, and display, which results in quality degradation and affects the user experience. Therefore, the design of an accurate and effective VQA method is crucial for performance optimization and application promotion of all kinds of video systems.

In general, there are two categories of VQA methods, that is, subjective assessment and objective assessment. Specifically, subjective methods are conducted by watching and scoring the video according to human eyes [1], while objective methods are implemented by designing an algorithm to make the computer automatically calculate video quality. As the human eye is the ultimate receiver of video, subjective VQA is of the highest reliability. However, its implementation is time-consuming and cannot be integrated into the system. In contrast, objective methods are more suitable for application.

For the research of objective quality assessment methods, the earliest work was focused on images. In image quality assessment (IQA), most methods were based on image content information [2] and natural scene statistics [3]. Recently, deep learning [4] techniques have been integrated into IQA methods to explore image spatial distortion more deeply and achieve satisfactory performance. Unlike an image, a video contains not only spatial information within each frame but also the temporal information generated by scene changes between frames. Thus, both spatial and temporal quality should be considered, which makes the design of a VQA method more difficult. Early VQA methods were mainly based on well-performing IQA methods. In detail, the quality of each frame was evaluated by the IQA method, and the final video quality was obtained by weighting the frame qualities. Although there is a strong correlation between frame quality and overall video quality, the temporal weighting strategy is hardly an accurate description of the temporal characteristics in videos. Therefore, for performance improvement, later studies attempted to integrate temporal perceptual characteristics when designing a VQA method. However, research on visual perception is still incomplete, so this category of methods also has room for improvement. Similar to IQA, deep learning has also been applied in VQA recently. Specifically, to avoid the inadequacy of 2D convolution in temporal information learning, 3D convolution is used instead of 2D in network design [5]. However, learning temporal information with 3D convolution is not straightforward, which affects the evaluation accuracy. Therefore, specific network structures can be considered to learn the temporal information and improve the performance of the VQA method.

Based on the above considerations, in this work, we evaluated video quality by extracting spatial and temporal features with purposely designed convolutional neural networks (CNNs). Considering that the two-stream network structure [6] has achieved good performance in behavior recognition, in this work, a VQA method is proposed by adopting the two-stream structure with spatial and temporal features extraction, which is named TSCNN-VQA. Specifically, TSCNN-VQA takes full advantage of the convolutional neural network by extracting spatial and temporal features with two different fully convolutional branches. Then, the extracted spatial and temporal features are continuously fused to obtain the spatial–temporal joint features. Finally, the spatial–temporal joint features are used to predict the quality score by a fully connected (FC) layer. The contributions of this work can be summarized as below:

- (1) Different to traditional video quality assessment methods that evaluate video as a whole, considering that video contains information in different dimensions, we separately take spatial and temporal residual and distorted maps as inputs. Hence, we construct a new two-stream framework for VQA, which makes the model more consistent with the visual perception for videos.

- (2) Different to traditional image content-based feature extraction, considering that the human visual system (HVS) has a different perceptual complexity and mechanism for temporal and spatial information, we designed two convolutional feature extraction branches for spatial and temporal information, respectively, so that the model can extract temporal and spatial distortion features more accurately.

- (3) Different to the feature extraction strategy guided by an attention module, considering the impact of distortion and other visual factors in the quality assessment, we guided the feature extraction by distorted maps for corresponding residual maps. Meanwhile, we also designed a new spatial–temporal feature fusion model, so that different dimensions of features can jointly represent the distortion degree of the video.

2. Related Works

In this section, a brief overview of both traditional and deep learning-based VQA methods will be provided.

2.1. Traditional Quality Assessment Methods

In the early stage of quality assessment, methods such as Mean Square Error (MSE) and Peak Signal to Noise Ratio (PSNR) [7], which have low complexity and clear physical meaning, were first applied. However, this category of methods only considers the difference between the pixels at the same location and ignores the structure information in the image, and this inconsistency with visual perception led to poor performance. To address this shortcoming, Wang et al. [8] proposed the Structural Similarity Index Measure (SSIM) method based on structural similarity, allowing the evaluation strategy based on structural distortion to gradually become the focus of IQA research. Afterwards, some visual perceptual models and natural scene statistics were also used in IQA research [9].

As an ordered arrangement of images in temporal direction, video not only contains the spatial information within each frame but also the motion information in the temporal domain. Therefore, the early stage of VQA research mainly considered two aspects. Firstly, the VQA methods usually refer to the existing IQA methods for the measurement of spatial distortion. On the other hand, the weighting of frame quality or 3D transform is adopted to describe the temporal information. Due to the success in IQA, many methods integrate the structure-information-based approach directly into video. Among them, Wang et al. [10] calculated the SSIM score of each distorted frame and used the motion vector to weight the frame quality as the final video quality. Similarly, in temporal pooling, Moorthy et al. [11] proposed a motion-compensation-based temporal quality evaluation method. Seshadrinathan et al. [12] exploited the delay effect of human eyes for temporal pooling. They also proposed the MOVIE (MOtion-based Video Integrity Evaluation) [13] method, which used a spatial spectral local multi-scale framework for the evaluation of dynamic video fidelity. Different to the above idea of evaluating temporal and spatial quality separately, Wang et al. [14] extracted structural information directly in the spatial–temporal domain for quality assessment, which also reported a solid performance.

As above, the VQA methods based on image structure focus on the measurement of spatial quality, and temporal quality is only considered by temporal pooling, which results in temporal information loss. Therefore, researchers tend to integrate the temporal perceptual mechanism in VQA methods. Among them, by analyzing the luminance adaptation and visual masking mechanisms, Aydin et al. [15] proposed a VQA method based on luminance adaptation, spatial–temporal contrast sensitivity, and visual masking. He et al. [16] explored and exploited the compact representation of energy in the 3D-DCT domain and evaluated video quality with three different types of statistical features. Since motion information is crucial in video perception, methods based on motion distortion have been gradually proposed. Vu et al. [17] proposed the ST-MAD (Spatial–Temporal MAD) method, which used the motion vectors as weights and performed a weighted mapping of spatial features to obtain the final quality. Manasa et al. [18] evaluated video quality by quantifying the degradation of the local-optical-flow-based motion statistics. Yan et al. [19] divided the motion information into simple and complex parts and estimated the motion information of different complexities using the gradient standard deviation.

In summary, according to the characteristics of video, traditional VQA methods complete the distortion feature extraction from different perspectives and can evaluate the video quality more accurately. However, such a feature extraction strategy cannot fully describe video characteristics, resulting in the loss of video information. This also makes the traditional VQA methods still have some room for performance improvements.

2.2. Deep Learning Methods

Recently, deep learning has been widely used in many aspects of computer vision, such as image segmentation and video understanding, and has shown excellent performance. Consequently, many VQA methods based on deep learning have also been proposed. Among them, Kim et al. [20] proposed DeepVQA, which simulated spatial–temporal visual perception by a convolutional neural aggregation network (CNAN). However, traditional 2D convolution may be inadequate for multi-dimensional information description; therefore, 3D convolution has been adopted in video feature extraction. For example, Xu et al. [5] proposed the C3DVQA (Convolutional neural network with 3D kernels for Video Quality Assessment) method. In C3DVQA, the visual quality perception for video is simulated by using 3D convolution to learn the spatial–temporal features, which significantly improves the performance of the model. However, C3DVQA needs to be generalized to larger datasets for more comprehensive experiments to validate the model’s performance. Additionally, Chen et al. [21] extracted distortion by 2D convolution to predict local quality and then combined with a 3D convolution-based STAN (Spatial Temporal Aggregation Network) to assign adaptive weights and obtain overall quality. Meanwhile, the STAN described the spatial–temporal relationships between adjacent patches directly from the 3D fractional tensor by using two 3D convolutional layers, which achieved a solid performance.

In summary, with the continuous application of deep learning, the deep learning-based spatial and temporal features enable the VQA method to obtain a more satisfactory evaluation performance. However, the existing methods do not consider the difference between the spatial and temporal perceptual mechanisms. Therefore, the proposed method follows the idea of a two-stream network and designs two different feature extraction branches to extract spatial and temporal features, respectively, with the aim of achieving better performance.

3. Proposed TSCNN-VQA Method

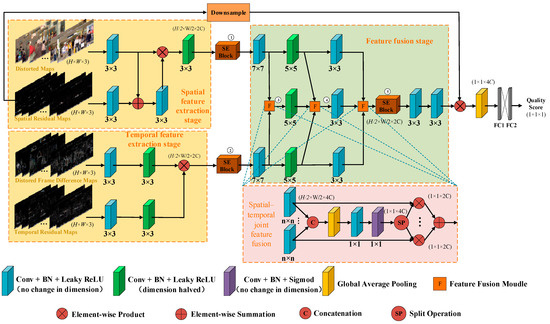

In video perception, the HVS processes spatial and temporal information simultaneously. Therefore, video quality can also be considered as a combination of spatial and temporal quality. Based on this observation, a new VQA method with two streams of convolutional neural networks is designed, named TSCNN-VQA. The framework of the proposed TSCNN-VQA is shown in Figure 1. In detail, firstly, we modified the network inputs by integrating the distorted map with residual maps for both spatial and temporal feature extraction branches, so that the distortion could be used as guidance in feature extraction. Then, two newly designed network branches were constructed for spatial and temporal feature extraction, respectively, inspired by the different mechanisms in visual perception for spatial and temporal information. Meanwhile, based on a performance analysis, several classical SE-Blocks were employed to achieve better feature extraction efficiency. Afterwards, in order to simulate the processing of spatial–temporal information by the HVS, a new spatial–temporal joint feature fusion module was constructed. Finally, the features were regressed to obtain video quality. The detailed implementation is described next.

Figure 1. Architecture of the proposed TSCNN-VQA network.

3.1. Preprocessing for Distorted Video

Previous studies on visual physiology have revealed that there is a rapid decrease in visual sensitivity for lower-frequency components [22]. Therefore, from the perspective of visual perception, we first preprocess the distorted videos so that the input better reflects the temporal and spatial information. In detail, each distorted frame is normalized by subtracting its low-pass filtered version from its gray-scale version scaled to the range [0, 1]. The reference frames are normalized in the same way, which yields the i-th normalized distorted and reference frames.
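As an illustration, the normalization step can be sketched as follows. This is a minimal sketch rather than the implementation used in this work: the low-pass filter is assumed to be a simple k × k mean filter, and the names `box_lowpass`, `normalize_frame`, and the default `k=5` are hypothetical choices made only for the example.

```python
import numpy as np

def box_lowpass(frame, k=5):
    """Low-pass filter a 2D frame with a k x k mean filter (k odd, edge-padded).

    The mean filter is an assumption; the text only says "low-pass filtered".
    """
    pad = k // 2
    padded = np.pad(frame, pad, mode="edge")
    out = np.zeros_like(frame, dtype=np.float64)
    h, w = frame.shape
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

def normalize_frame(gray_u8, k=5):
    """Scale an 8-bit gray-scale frame to [0, 1] and subtract its low-pass version."""
    scaled = gray_u8.astype(np.float64) / 255.0
    return scaled - box_lowpass(scaled, k)
```

Under this sketch, a perfectly flat frame maps to zero everywhere, so only the higher-frequency content (edges, texture, and local distortion) remains in the network input.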

Generally speaking, in video processing with deep neural networks, it is necessary to take the whole video as the input. However, such a process obviously leads to a large increase in computational complexity. Meanwhile, there is a lot of redundant information between adjacent frames. Consequently, in this work, we use sampled frames to reduce the computational cost. Based on the above considerations, we conduct temporal uniform sampling for the original video. By performance tuning, the sampling period is set as 12 in this work. A detailed performance comparison and analysis are provided in the experimental section. According to previous work on deep learning-based video processing, a patch-based network training strategy is commonly used [23]. Therefore, in this work, the sampled video frames are divided into patches as the input of the proposed network. Meanwhile, to avoid overlapping in the final spatial–temporal feature map, the video frames are chunked by a sliding window. The step length of the sliding window is determined by the video patch size, sizepatch, the number of ignored pixels, N, and the ratio, R, between the size of the input and the final spatial–temporal feature map. In this work, N and R are both set to four, and sizepatch is set as 112 × 112.
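One relation that is consistent with these definitions, and that has been used in earlier patch-based quality models, is step = sizepatch − 2 × N × R: the N ignored pixels on each of the two borders of the feature map correspond to 2 × N × R pixels at the input resolution. The sketch below assumes this relation; the function names and the example frame size of 768 × 432 are illustrative assumptions.

```python
def patch_step(size_patch=112, n_ignored=4, ratio=4):
    """Sliding-window step under the assumed relation step = size - 2 * N * R."""
    return size_patch - 2 * n_ignored * ratio

def patch_origins(height, width, size_patch=112, n_ignored=4, ratio=4):
    """Top-left corners (y, x) of all patches that fit inside a frame."""
    step = patch_step(size_patch, n_ignored, ratio)
    ys = range(0, height - size_patch + 1, step)
    xs = range(0, width - size_patch + 1, step)
    return [(y, x) for y in ys for x in xs]

# With N = R = 4 and a 112 x 112 patch, the assumed step is 80 pixels.
origins = patch_origins(432, 768)
```

With these settings, neighboring patches overlap by 32 input pixels, which is exactly the border that would be discarded from the feature maps under the assumed relation.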

3.2. Spatial and Temporal Feature Extraction

In VQA, both spatial and temporal information need to be considered in network design. At the same time, there are differences in the visual mechanisms that the HVS uses to perceive spatial and temporal information. Therefore, different feature extraction branches need to be designed to extract spatial and temporal features.

Specifically, in this work, TSCNN-VQA adopts a two-stream network structure for spatial and temporal feature extraction. In order to extract features more accurately, distorted video frames are used as the input for spatial feature extraction, and the difference map between the reference and distorted frames is also inputted as a guide. For temporal feature extraction, considering the high complexity and unstable optical flow in temporal description, the frame difference is directly used as the input in this work. Meanwhile, the difference maps between the distorted frame difference and reference frame difference are also inputted to make the temporal distortion more significant. Moreover, considering the difference for spatial and temporal perception, different structures are designed to extract the spatial and temporal features, respectively. Next, the structure of the proposed network will be described in detail.

3.2.1. Spatial Feature Extraction

As previously mentioned, distorted frames and spatial residual maps Rs are used as the inputs for spatial feature extraction. To further illustrate the relationship between the spatial residual map and distortion, the corresponding input images are illustrated in Figure 2. Specifically, Figure 2a,b show the corresponding frames in the reference and distorted video. Figure 2c shows the spatial residuals map obtained by subtracting (a) and (b). In the right half of the distorted frame, distortion introduced by packet loss can be clearly noticed, especially the two pedestrians in the scene. In the spatial residual map, the distortion is even more clearly visible at the locations corresponding to the distortion in (b). This indicates that the distortion is more prominent in the spatial residual map. Therefore, the TSCNN-VQA uses the spatial residual map as a guide to locate the distortion in the distorted frames.

Figure 2. Input of spatial feature extraction branch. (a) Reference frame; (b) distorted frame; (c) spatial residual map.

As for feature extraction, we designed the network structure shown in Figure 1. In detail, the input set of the spatial residual maps is first subjected to a normalized log-difference operation.

Then, the distorted map and normalized spatial residual map are each subjected to a single convolution to obtain the corresponding features as

$$F_I = \mathrm{Conv}(I_d), \qquad F_e = \mathrm{Conv}(R_s)$$

where $I_d$ is the distorted map, $R_s$ is the normalized spatial residual map, and $F_I$ and $F_e$ represent the distorted map features and the spatial residual map features after one convolution, respectively. Conv represents the convolution operation.

In spatial residual map acquisition, the subtractive operation may lead to a lack of distortion information. In order to make the extracted features more complete, the distorted map features are then added to the spatial residual map features to provide complementary information. A further convolution is then performed to obtain the complementary feature information, which is defined as $F_{I,e}$. The calculation can be expressed as

$$F_{I,e} = \mathrm{Conv}(F_I + F_e)$$

Finally, the distortion information is emphasized by multiplying $F_{I,e}$ with the distorted map features. The preliminary spatial features can be obtained by one convolution as

$$F_s = \mathrm{Conv}(F_{I,e} \otimes F_I)$$

where $\otimes$ represents element-wise multiplication, and $F_s$ represents the initial spatial feature obtained.
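The data flow of the spatial branch described above can be sketched as follows. This is a simplified, single-channel illustration under stated assumptions: each convolution is a plain 3 × 3 'same' convolution without batch normalization or activation, the residual map is assumed to be already normalized, and the names `conv3x3` and `spatial_branch` as well as the kernel arguments are hypothetical.

```python
import numpy as np

def conv3x3(x, kernel):
    """'Same' 3 x 3 cross-correlation of a 2D map with zero padding."""
    h, w = x.shape
    padded = np.pad(x, 1)
    out = np.zeros_like(x, dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def spatial_branch(distorted, residual, k_dist, k_res, k_comp, k_out):
    """Initial spatial feature from a distorted map and its residual map."""
    f_i = conv3x3(distorted, k_dist)    # distorted map features
    f_e = conv3x3(residual, k_res)      # spatial residual map features
    f_ie = conv3x3(f_i + f_e, k_comp)   # complementary features after the addition
    return conv3x3(f_ie * f_i, k_out)   # emphasize distortion, then one convolution
```

The addition lets the distorted map restore information lost by the subtraction that produced the residual map, while the multiplication re-weights the result by the distorted map features.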

3.2.2. Temporal Feature Extraction

For temporal feature extraction, the distorted frame difference map, denoted as fd, and the temporal residual map, denoted as et, are used as inputs. Similar to the spatial residual map, the temporal residual map also serves as the guide and is used to locate the distortion in the distorted video frame difference map. For further illustration, we list the input images in Figure 3.

Figure 3. Input of temporal feature extraction branch. (a) Reference frame difference map; (b) distorted frame difference map; (c) temporal residual map.

In Figure 3, (a) shows the reference frame difference map obtained by subtracting two adjacent frames. Similarly, (b) is the distorted frame difference map, and (c) is the temporal residual map obtained by taking the difference between (a) and (b). By comparing the reference and distorted frame difference maps, it can be found that distortion can be clearly detected at the same locations. Moreover, the outline of the pedestrian can also be noticed, which indicates that the temporal residual map not only locates the temporal distortion but also contains motion information. Generally speaking, both distortion and motion information play a significant role in temporal quality. Therefore, with the temporal residual map as the input, the temporal feature extraction branch can be more effective in TSCNN-VQA.

In addition, considering the different mechanisms by which the human eye perceives spatial and temporal information, the structure designed for temporal feature extraction differs from that for spatial feature extraction. Firstly, to describe the temporal information, the frame difference between adjacent frames is computed. To eliminate the effect of frame rate variation across videos, the frame difference map is computed in a frame-rate-dependent manner. Consequently, the temporal residual map is defined as the difference between the reference and distorted frame difference maps.
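A minimal sketch of these two temporal inputs is given below. Several details are assumptions made for illustration only: absolute differences are used, the frame offset `delta` stands in for whatever frame-rate-dependent interval is applied, and the function names are hypothetical.

```python
import numpy as np

def frame_difference(frames, t, delta=1):
    """Absolute difference between frame t + delta and frame t.

    `delta` is a hypothetical offset that could be chosen from the frame rate.
    """
    return np.abs(frames[t + delta] - frames[t])

def temporal_inputs(ref_frames, dist_frames, t, delta=1):
    """Return the distorted frame difference map and the temporal residual map."""
    f_r = frame_difference(ref_frames, t, delta)
    f_d = frame_difference(dist_frames, t, delta)
    return f_d, np.abs(f_r - f_d)
```

Because both difference maps contain the scene motion, their difference keeps the part of the temporal change that is caused by distortion, while moving contours can still remain visible where distortion alters them.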

Then, considering the large amount of redundant information in the temporal direction, the distorted frame difference map and the temporal residual map are each convolved twice to obtain their respective shallow features, denoted as the shallow temporal feature $f_d'$ and the shallow residual feature $e_t'$. The calculation can be expressed as

$$f_d' = \mathrm{Conv}(\mathrm{Conv}(f_d)), \qquad e_t' = \mathrm{Conv}(\mathrm{Conv}(e_t))$$

To emphasize the temporal distortion, the shallow temporal features are then multiplied with the shallow temporal residuals, and the initial temporal features can be obtained as

$$F_t = f_d' \otimes e_t'$$

where $\otimes$ denotes element-wise multiplication and $F_t$ denotes the initial temporal feature.

In detailed implementation, the convolutional layers used in the feature extraction stage all consist of a 3 × 3 convolutional layer, a batch normalization (BN) layer, and a Leaky ReLU layer. Among them, the BN layer is used to speed up the convergence of the model during training and make the training process more stable. Meanwhile, the Leaky ReLU layer is chosen as the activation function because its derivative is always non-zero, which can be used to enhance the learning ability of the unit.
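The convolutional unit described above (3 × 3 convolution, BN, Leaky ReLU) can be sketched in NumPy as follows. This is a single-channel, training-mode illustration: the negative slope of 0.01 and the BN parameters `gamma`, `beta`, and `eps` are assumed defaults, not values reported in this work.

```python
import numpy as np

def leaky_relu(x, slope=0.01):
    """Leaky ReLU: identity for positive inputs, small slope for negative ones."""
    return np.where(x > 0, x, slope * x)

def batch_norm(x, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize a single-channel batch (N, H, W) with its own batch statistics."""
    return gamma * (x - x.mean()) / np.sqrt(x.var() + eps) + beta

def conv_bn_lrelu(batch, kernel):
    """3 x 3 'same' convolution followed by BN and Leaky ReLU on (N, H, W) input."""
    h, w = batch.shape[1:]
    padded = np.pad(batch, ((0, 0), (1, 1), (1, 1)))
    conv = sum(kernel[dy, dx] * padded[:, dy:dy + h, dx:dx + w]
               for dy in range(3) for dx in range(3))
    return leaky_relu(batch_norm(conv))
```

Since the slope for negative inputs is small but non-zero, gradients still flow through units whose pre-activation is negative, which is the property motivating the choice of Leaky ReLU.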

Comparing the two structures designed for features of different dimensions, it can be found that the spatial feature extraction structure, which integrates an interaction structure, is slightly more complex than the temporal feature extraction structure. This is because the human eye perceives spatial information more intuitively than temporal information. Also, due to the temporal masking effect, spatial distortions may be ignored when intensive motion exists. Consequently, if the temporal features were extracted with the same network structure as the spatial features, this might have a negative impact on the final evaluation performance. Therefore, we designed a slightly more complex structure to extract the spatial features.

3.3. Spatial–Temporal Feature Fusion

As the HVS evaluates video quality, the quality can be simultaneously determined by both the temporal and spatial perception. Therefore, in this subsection, we fuse the features obtained by different feature extraction network structures for spatial and temporal features, respectively.

In fact, the HVS works with a multi-channel mechanism, in which each channel processes a different visual stimulus. Then, all the channels are interconnected to obtain the optimal visual effect. In this process, different visual cortices have different trigger features and obtain different information features. Therefore, we first process the obtained initial spatial and temporal features with three different convolutional layers. These layers are designed with different convolutional kernels to obtain deeper spatial and temporal features with different receptive fields. Applying n of these convolutions yields the n-th deep spatial feature and the n-th deep temporal feature, respectively.

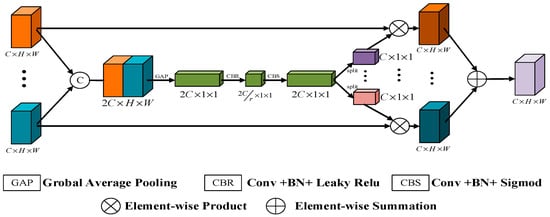

Considering that spatial and temporal information are interleaved in the visual system and mask each other, a spatial–temporal joint feature fusion module is designed, as shown in Figure 4. The module has two main purposes. Firstly, it fuses the initial spatial and temporal features. In addition, further valid information is extracted by adaptively learning different weights for different features.

Figure 4. Architecture of spatial–temporal joint feature fusion module.

In detailed implementation, firstly, the initial spatial and temporal features are concatenated, and each channel of size H × W is compressed to a single value by global average pooling. Then, the compressed feature is passed through a gating mechanism with two 1 × 1 convolutions. Among them, the first convolution is followed by a BN layer and a Leaky ReLU activation layer, and the second convolution is followed by a BN layer and a Sigmoid function to obtain the channel weights. After that, the split operation is applied to divide the weights into several parts corresponding to the input features according to the concatenation order. Considering that the spatial and temporal qualities contribute unevenly to the overall video quality, the divided weights are multiplied by the corresponding original input features to obtain more accurate features. Finally, the weighted features are summed for feature fusion. With the above structure, the spatial and temporal information can be handled more flexibly, thus improving the performance of the network.
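The fusion steps above (concatenate, pool, gate, split, re-weight, sum) can be sketched as follows. This is an illustrative simplification under stated assumptions: the two 1 × 1 convolutions act on pooled channel vectors and are therefore written as matrix products with hypothetical weights `w1` and `w2`, the BN layers are omitted, and the Leaky ReLU slope of 0.01 is an assumed default.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def st_fusion(features, w1, w2, slope=0.01):
    """Fuse a list of n feature maps, each of shape (C, H, W).

    w1: (hidden, n * C) and w2: (n * C, hidden) stand in for the two
    1 x 1 convolutions of the gating mechanism (BN omitted for brevity).
    """
    stacked = np.concatenate(features, axis=0)              # (n * C, H, W)
    squeezed = stacked.mean(axis=(1, 2))                    # global average pooling
    hidden = w1 @ squeezed
    hidden = np.where(hidden > 0, hidden, slope * hidden)   # Leaky ReLU
    gates = sigmoid(w2 @ hidden)                            # channel weights in (0, 1)
    parts = np.split(gates, len(features))                  # same order as the concat
    return sum(g[:, None, None] * f for g, f in zip(parts, features))
```

Because the gates are computed from the concatenated spatial and temporal statistics, the weight given to one stream depends on the content of the other, which is how the module can let the two streams contribute unevenly.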

Furthermore, to guarantee that the proposed network is more consistent with the multi-channel mechanism in visual perception, a total of three spatial–temporal joint feature fusion modules are constructed. Specifically, the first module is to obtain spatial–temporal joint features by fusing the initial spatial and temporal features, while the next two modules not only fuse the spatial and temporal features but also combine the spatial–temporal joint features from the previous fusion modules. Let Fst denote the final spatial–temporal joint feature, then the calculation can be expressed as

where fst-Fusion(·) represents the spatial–temporal joint feature fusion module.

Then, two convolutions are applied to the spatial–temporal joint feature to obtain the final spatial–temporal joint distortion feature, which is denoted as Fst’.

Meanwhile, considering that distortions in high-sensitivity regions can be perceived more easily by the HVS, the spatial residual map is also adopted as an attention mask. Consequently, an element-wise multiplication between the spatial–temporal joint distortion feature map and the spatial residual map is conducted to modify the perceived distortion feature, denoted as Fp, which can be expressed as

$$F_p = F_{st}' \otimes R_s$$

where $\otimes$ denotes element-wise multiplication and $R_s$ is the spatial residual map.

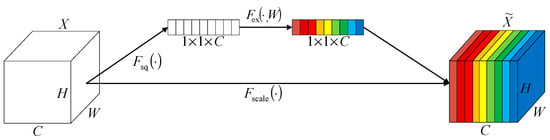

3.4. Attention Module

Considering the vital role of contrast sensitivity and visual attention characteristics in VQA [24], TSCNN-VQA also integrates an attention module to extract both the spatial and temporal salient information. Moreover, given that the channel attention module SE-Block (Squeeze and Excitation Block) [25] has shown good performance in many image-oriented models, we also adopt the SE-Block for the attention mechanism’s simulation. The block diagram of the SE-Block model is shown in Figure 5.

Figure 5. Architecture of the SE-Block model.

In Figure 5, X is the input feature map, which has a size of H × W with C channels. It is first processed by the Squeeze operation with global average pooling, denoted by Fsq(·). The detailed calculation can be expressed as

$$z_c = F_{sq}(x_c) = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} x_c(i, j)$$

where $x_c$ is the c-th channel of X and $z_c$ is its compressed value. In detail, feature compression is performed along the spatial dimension to transform each two-dimensional feature channel into a single value. The compressed feature therefore has, in effect, a global receptive field and characterizes the global distribution of the responses over the feature channels. It is then subjected to the Excitation operation, denoted as Fex(·, W):

$$s = F_{ex}(z, W) = \sigma(W_2\, \delta(W_1 z))$$

where $\sigma$ denotes the Sigmoid activation function and $\delta$ denotes the ReLU activation function. The weights are generated for each feature channel by means of parameter W, which is learned to model the correlation between the feature channels. In this work, $W_1 \in \mathbb{R}^{\frac{C}{r} \times C}$ and $W_2 \in \mathbb{R}^{C \times \frac{C}{r}}$, where $r$ is the reduction ratio.

That is, two FC layers are employed. Among them, the first FC layer is used for dimensionality reduction, and the second FC layer recovers the original dimensionality. With Sigmoid activation, a weight value between 0 and 1 is obtained for each channel. Each original element is multiplied by the weight of the corresponding channel to obtain the channel-weighted features. This Reweight operation, denoted as Fscale(·), yields the final feature map $\tilde{X}$:

$$\tilde{x}_c = F_{scale}(x_c, s_c) = s_c \cdot x_c$$

In summary, the whole operation in SE-Block can be seen as learning the weight coefficients of each channel, thus making the model more discriminative to the features in each channel. It is obvious that the SE-Block can model the inter-channel dependencies and adaptively perform channel feature recalibration. Here, the SE-Block is integrated after both feature extraction and fusion to simulate this attention mechanism of human vision.
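The three SE-Block operations (Squeeze, Excitation, Reweight) can be sketched compactly as below. The sketch follows the standard SE-Block formulation with a ReLU between the two FC layers; the weight matrices `w1` and `w2` and the function name are hypothetical, and biases are omitted.

```python
import numpy as np

def se_block(x, w1, w2):
    """Squeeze-and-Excitation on a (C, H, W) feature map.

    w1: (C // r, C) reduces the channel dimension,
    w2: (C, C // r) restores it; r is the reduction ratio.
    """
    z = x.mean(axis=(1, 2))                      # Squeeze: global average pooling
    hidden = np.maximum(w1 @ z, 0.0)             # first FC layer + ReLU
    s = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))     # second FC layer + Sigmoid
    return x * s[:, None, None]                  # Reweight each channel
```

Each channel is scaled by a single value in (0, 1), so the block changes the relative importance of channels without altering the spatial layout within a channel.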

3.5. Quality Score Prediction

To obtain the overall distorted video quality, firstly, the global average pooling operation is performed on Fp, which can be used to describe the perceived distortion. Then, two FC layers are constructed to learn the non-linear relationship between the extracted features and subjective quality. Then, the block-level quality can be obtained. Specifically, in the FC layer, the final target loss function is defined as the weighted summation of the loss function and the regularization term.

Here, the regression function with parameter Φ takes the four input sequences (the distorted frames, the spatial residual maps, the distorted frame difference maps, and the temporal residual maps), and Ssub is the subjective score of the distorted video. In addition, the total variation and the L2 norm of the parameters are used to mitigate high-frequency noise in the distortion feature maps and to avoid overfitting [26]. λ1 and λ2 are their weight parameters, respectively.
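A minimal sketch of an objective with this structure is shown below. The exact data term is not reproduced here, so a squared error between the predicted and subjective scores is assumed; the total variation is taken in its anisotropic form, and all function and argument names are hypothetical.

```python
import numpy as np

def total_variation(feature_map):
    """Anisotropic total variation of a 2D map (sum of neighbor differences)."""
    dy = np.abs(np.diff(feature_map, axis=0)).sum()
    dx = np.abs(np.diff(feature_map, axis=1)).sum()
    return dy + dx

def objective(pred, s_sub, feature_map, params, lam1, lam2):
    """Assumed form: squared error + lam1 * TV(feature map) + lam2 * ||params||^2."""
    data_term = (pred - s_sub) ** 2
    l2 = sum(float(np.sum(p ** 2)) for p in params)
    return data_term + lam1 * total_variation(feature_map) + lam2 * l2
```

The total variation term penalizes rapid pixel-to-pixel changes in the distortion feature map, while the L2 term keeps the parameters small; λ1 and λ2 trade these penalties off against the prediction error.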

Finally, the average of the block-level quality scores is calculated as the overall video quality.

4. Experimental Results

In this section, the dataset and experimental settings used for performance validation are described. Moreover, several ablation experiments are conducted to illustrate the effectiveness of the proposed TSCNN-VQA. Finally, a performance comparison between the proposed TSCNN-VQA and existing state-of-the-art VQA methods is provided to further demonstrate the performance of TSCNN-VQA.

4.1. Datasets and Training Protocols

In this work, VQA performance is tested on commonly used VQA datasets, namely the LIVE VQA dataset [27] and the CSIQ VQA dataset [28]. Specifically, the LIVE VQA dataset contains 10 reference videos and 150 distorted videos with four distortion types, including wireless transmission, IP transmission, H.264 compression, and MPEG-2 compression distortion, while the CSIQ VQA dataset contains 12 reference videos and 216 distorted videos with six distortion types, that is Motion JPEG (MJPEG), H.264, HEVC, SNOW codec wavelet compression, packet loss in wireless network, and additive white Gaussian noise (AWGN). The first frames of the reference videos in both the LIVE VQA dataset and the CSIQ VQA dataset are shown in Figure 6. It can be found that both datasets include videos with different types of scenes, which can be adequate to be used as the benchmark of quality evaluation.

Figure 6. First frames of reference videos in LIVE VQA dataset and CSIQ VQA dataset. (a) LIVE VQA dataset; (b) CSIQ VQA dataset.

In the experimental section, we assess the performance of a VQA method by calculating the correlation between the subjective quality scores of the distorted videos provided in the video quality database and the objective quality scores calculated by the method. Specifically, we use the Pearson Linear Correlation Coefficient (PLCC) and the Spearman Rank Order Correlation Coefficient (SROCC) as performance indicators. The PLCC measures the linear correlation between subjective and objective quality scores, indicating the degree of similarity between the two sets of data. The SROCC assesses the consistency of the ranking between subjective and objective quality scores, revealing the sensitivity of quality assessment methods to changes in the quality of the distorted videos. Both the PLCC and SROCC take values in [−1, 1]; for predictions that increase with subjective quality, they lie between 0 and 1. The closer the value is to 1, the better the evaluation performance of the VQA method.
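For reference, the two indicators can be computed as in the sketch below. It computes the PLCC directly on the raw scores, without any fitting step beforehand, and computes the SROCC as the PLCC of the rank vectors, with tied values sharing their mean rank. The function names are illustrative.

```python
import numpy as np

def plcc(x, y):
    """Pearson linear correlation coefficient between two score lists."""
    x, y = np.asarray(x, float), np.asarray(y, float)
    xc, yc = x - x.mean(), y - y.mean()
    return float((xc * yc).sum() / np.sqrt((xc ** 2).sum() * (yc ** 2).sum()))

def average_ranks(v):
    """Ranks starting at 1, with tied values sharing their mean rank."""
    v = np.asarray(v, float)
    order = np.argsort(v, kind="stable")
    ranks = np.empty(len(v))
    ranks[order] = np.arange(1, len(v) + 1)
    for value in np.unique(v):
        tied = v == value
        ranks[tied] = ranks[tied].mean()
    return ranks

def srocc(x, y):
    """Spearman rank order correlation: PLCC of the rank vectors."""
    return plcc(average_ranks(x), average_ranks(y))
```

A monotonic but non-linear relation between predicted and subjective scores gives an SROCC of 1 and a PLCC below 1, which is why the two indicators are reported together.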

In model training, all the reference videos are randomly divided into two subsets (80% for training and 20% for testing), and the distorted videos are divided in the same way, which guarantees that there is no content overlap between the two subsets and reduces performance bias. A non-distortion-specific method is adopted in training to ensure that all the distortion types can be used randomly. The training is iterated over 100 epochs, and the model with the lowest validation error is selected. The final performance indicator is the median value over 10 repeated tests of the model. In this work, the proposed TSCNN-VQA was implemented in Theano and trained on an NVIDIA RTX3080. The models were optimized using the Adam optimizer [29] with the weight parameters in the loss function taken as and for training. The initial learning rate was 3 × 10⁻⁴ for the LIVE VQA dataset and 5 × 10⁻⁴ for the CSIQ VQA dataset. When the validation loss stopped decreasing within five epochs, the learning rate was reduced to 0.9 times its original value.
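The protocol above can be sketched as follows. This is a minimal illustration rather than the training code used in this work: the function names, the video identifiers, and the random seed are hypothetical, and the learning-rate rule assumes that each reduction multiplies the current rate by 0.9 after five epochs without improvement in the validation loss.

```python
import random

def split_by_reference(ref_to_distorted, train_ratio=0.8, seed=0):
    """Split distorted videos so that no reference content is shared between subsets."""
    refs = sorted(ref_to_distorted)
    rng = random.Random(seed)
    rng.shuffle(refs)
    n_train = round(train_ratio * len(refs))
    train_refs, test_refs = refs[:n_train], refs[n_train:]
    train = [v for r in train_refs for v in ref_to_distorted[r]]
    test = [v for r in test_refs for v in ref_to_distorted[r]]
    return train, test

def decay_on_plateau(val_losses, initial_lr, factor=0.9, patience=5):
    """Return the learning rate used in each epoch.

    The rate is multiplied by `factor` (an assumed reading of the rule) whenever
    the validation loss has not improved for `patience` consecutive epochs.
    """
    lr, best, stale, schedule = initial_lr, float("inf"), 0, []
    for loss in val_losses:
        schedule.append(lr)
        if loss < best:
            best, stale = loss, 0
        else:
            stale += 1
            if stale >= patience:
                lr *= factor
                stale = 0
    return schedule

# Hypothetical layout mirroring LIVE VQA: 10 references, 15 distorted videos each.
live = {f"ref{r:02d}": [f"ref{r:02d}_dist{d:02d}" for d in range(15)] for r in range(10)}
train_videos, test_videos = split_by_reference(live)

# Hypothetical validation losses: two improvements followed by a plateau.
schedule = decay_on_plateau([1.0, 0.9] + [0.95] * 6, initial_lr=3e-4)
```

Splitting on the reference videos first, and only then collecting the distorted versions, is what keeps every scene entirely within either the training subset or the test subset.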

4.2. Ablation Experiments for TSCNN-VQA

In this subsection, several ablation experiments are conducted on the LIVE VQA dataset to validate the effectiveness of each module in TSCNN-VQA.

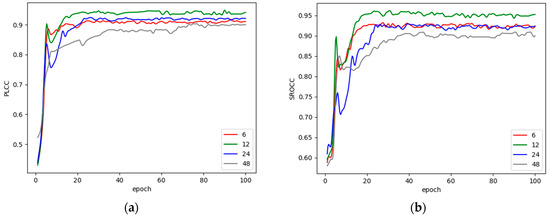

4.2.1. The Effect of Frame Sample Number on Performance and Complexity

The number of video frames used to train the model has a significant impact on both the computational cost and the final performance. Since adjacent frames in a video share a large amount of redundant information, too many training frames will increase the time cost of the evaluation network. Conversely, too few sampled frames may result in information loss, thus affecting the evaluation performance. To investigate the impact of the sampled frame number on computational complexity and evaluation performance, we compare the performance of TSCNN-VQA with different sampled frame numbers. Here, frame sample numbers of 6, 12, 24, and 48 are tested. The results after 100 epochs of training are shown in Table 1.

Table 1.

Effects of different frame sample numbers in LIVE VQA dataset.

The first two rows in Table 1 list the PLCC and SROCC for the different frame sample numbers, while the bottom row provides the time consumed per epoch of training in seconds. It can be seen that the PLCC and SROCC are slightly higher when the frame sample number is 12, and that the time consumed grows as the frame sample number increases. Meanwhile, Figure 7 shows how the SROCC and PLCC evolve over 100 epochs for the different frame sample numbers. From Figure 7, it can be found that both the SROCC and PLCC tend to be stable after about 25 epochs of training. It is also clear that the performance with a frame sample number of 12 is higher than in the other cases. Consequently, considering both performance and complexity, a frame sample number of 12 is adopted in this work.

Figure 7.

Tendency of PLCC and SROCC with different frame sample numbers in 100 epochs. (a) PLCC; (b) SROCC.
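To make the notion of a frame sample number concrete, the sketch below selects a fixed number of frame indices from a video. It assumes uniform temporal sampling, taking the centre frame of each equal-length segment; the sampling strategy, the function name, and the 240-frame clip length are assumptions made for illustration and are not specified in this section.

```python
def sample_frame_indices(total_frames, num_samples):
    """Pick `num_samples` frame indices spread evenly over a video.

    Each index is the centre of one of `num_samples` equal-length segments.
    If the video has no more frames than requested, all frames are returned.
    """
    if num_samples <= 0 or total_frames <= 0:
        raise ValueError("both arguments must be positive")
    if num_samples >= total_frames:
        return list(range(total_frames))
    segment = total_frames / num_samples
    return [int(segment * i + segment / 2) for i in range(num_samples)]

# Frame sample numbers tested in Table 1, applied to a hypothetical 240-frame clip.
for n in (6, 12, 24, 48):
    indices = sample_frame_indices(240, n)
    print(n, indices[:3], "...", indices[-1])
```

Under this assumption, doubling the frame sample number halves the temporal gap between sampled frames, which increases the redundancy between neighbouring samples along with the per-epoch training time.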

4.2.2. The Effect of Different Structures of the Feature Extraction on Performance

The HVS perceives spatial and temporal information through different mechanisms. Hence, in this part, ablation experiments are conducted on the network structures for spatial and temporal feature extraction. Specifically, the structure of the spatial feature extraction in Figure 1 is defined as Structure 1, while the structure of the temporal feature extraction is defined as Structure 2. Three comparative schemes were designed. In Scheme 1, Structure 2 is used for spatial feature extraction and Structure 1 is used for temporal feature extraction. In Scheme 2, Structure 1 is used for both spatial and temporal feature extraction. Similarly, Scheme 3 uses Structure 2 for both spatial and temporal feature extraction. The different schemes are then compared with the proposed TSCNN-VQA, and the experimental results are shown in Table 2.

Table 2.

Effect of different feature extraction structures on model performance.

For better readability, the best results in Table 2 are marked in bold. By comparing the proposed method with Schemes 2 and 3, it can be seen that using different structures for spatial and temporal feature extraction achieves better results than using the same structure for both. This result is consistent with the conclusion that spatial and temporal information are perceived through different mechanisms. Hence, it is reasonable to adopt different structures for temporal and spatial feature extraction. Furthermore, by comparing the proposed method with Scheme 1, it can be found that the experimental results are better when the spatial feature extraction uses a more complex structure than the temporal feature extraction. This result supports the earlier considerations in this paper, namely that the HVS perceives spatial information more intuitively than temporal information. In addition, owing to the temporal masking effect, some distortions may be ignored by the HVS in videos with intensive motion. Therefore, the proposed TSCNN-VQA uses a more complex structure for the extraction of spatial features than for the extraction of temporal features.

4.2.3. The Effect of Attention Module Location on Performance

To investigate the effect of the attention module on the performance of the proposed TSCNN-VQA, we experimented by integrating the attention module at different locations in the proposed network. Specifically, the locations labelled with ➀, ➁, ➂, ➃ and ➄ in Figure 1 are selected for this experiment. The results of the experiments are shown in Table 3.

Table 3.

Effect of the attention module in different locations.

In Table 3, the first column represents the location where the attention module was integrated, and None means that there was no attention module in the framework. The best results are shown in bold. From Table 3, it can be seen that the best performance is obtained by integrating the attention module at locations ➀, ➁ and ➄. Specifically, the performance of the model is improved by 4% and 5% in the PLCC and SROCC, respectively. The integration of the attention module is consistent with visual perception, in that the human eye pays more attention to regions of interest. Additionally, the appearance of severe distortion also leads to a change in visual attention. Location ➀ is behind spatial feature extraction, so integrating the attention module at this location can make the distortion learned from the spatial features more prominent. Similarly, location ➁ makes the distortion learned in the temporal features more prominent. At location ➄, the network learns the spatial–temporal joint features after feature fusion. When the human eye perceives video, it perceives spatial and temporal information jointly. Consequently, in the joint spatial–temporal description, it will likewise focus on the parts that are of interest and are heavily distorted. We simulate this phenomenon by adding an attention module at this location.
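The channel attention applied at these locations follows the squeeze-and-excitation idea [25]. The sketch below shows the three steps (squeeze, excitation, scale) in plain Python on nested lists. It is only an illustration under simplifying assumptions: biases are omitted, and the weights, the reduction ratio, and the feature values are made up for the example rather than taken from the trained network.

```python
import math

def se_block(feature_map, w_reduce, w_expand):
    """Apply squeeze-and-excitation channel attention (biases omitted).

    feature_map: C channels, each an H x W nested list.
    w_reduce:    (C // r) x C weights of the bottleneck layer.
    w_expand:    C x (C // r) weights of the expansion layer.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_map
    ]
    # Excitation: bottleneck layer with ReLU, then expansion layer with sigmoid.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w_reduce]
    gates = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w_expand
    ]
    # Scale: reweight every channel by its gate, which lies in (0, 1).
    scaled = [
        [[gate * value for value in row] for row in channel]
        for channel, gate in zip(feature_map, gates)
    ]
    return scaled, gates

# Two 2 x 2 channels and made-up weights with reduction ratio r = 2.
features = [[[1.0, 1.0], [1.0, 1.0]], [[4.0, 4.0], [4.0, 4.0]]]
w_reduce = [[0.5, 0.5]]
w_expand = [[-1.0], [1.0]]
scaled, gates = se_block(features, w_reduce, w_expand)
```

With these example weights, the second channel receives a gate above 0.5 and the first a gate below 0.5, so the block emphasizes one channel and suppresses the other, which is the behaviour the attention module relies on to make distortion-related features more prominent.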

4.2.4. Effect of Spatial Residual Map on Performance

Psycho-visual studies suggest that the HVS is more sensitive to regions with a high level of quality degradation [22]. Hence, as a representation of distortion, the residual map between reference and distorted frames is used as an input in the proposed TSCNN-VQA. In this subsection, ablation experiments with three comparative schemes are conducted to explore the performance contribution of the residual map. Scheme 4 applies an element-wise multiplication between the temporal residual map and the learned distortion feature map. In Scheme 5, the network applies the element-wise multiplication between both the spatial and temporal residual maps and the learned distortion feature map. Lastly, in Scheme 6, no element-wise multiplication is applied between a residual map and the learned distortion feature map. The results are shown in Table 4.

Table 4.

Effect of the spatial residual maps on model performance.

As shown in Table 4, the proposed TSCNN-VQA reports the best performance. It can be concluded that guiding the distortion with a spatial residual map in spatial–temporal joint feature extraction does improve the performance of the model, which indicates that the spatial residual provides good spatial attention guidance for the model. By comparing the results of Schemes 4 and 5 with those of the proposed method, it can be found that multiplying with the temporal residual map slightly decreases performance. We attribute this to the temporal masking effect: the HVS tends to be less sensitive to distortion in the presence of intensively moving objects, so the temporal residual emphasizes distortions that are perceptually less visible, which negatively affects the model's performance.
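The guidance by the spatial residual map can be illustrated with a small example. The sketch below assumes that the residual map is the per-pixel absolute difference between the reference and distorted frames and that it already has the same resolution as the feature map; both points, as well as the function names and the toy values, are assumptions made for illustration.

```python
def spatial_residual_map(reference, distorted):
    """Per-pixel absolute difference between a reference and a distorted frame."""
    return [
        [abs(r - d) for r, d in zip(ref_row, dist_row)]
        for ref_row, dist_row in zip(reference, distorted)
    ]

def guide_features(feature_map, residual_map):
    """Element-wise product of a feature map with a residual map of equal size."""
    return [
        [f * e for f, e in zip(feat_row, res_row)]
        for feat_row, res_row in zip(feature_map, residual_map)
    ]

# Toy 2 x 3 luminance frames in [0, 1]; only the right-hand column is distorted.
reference = [[0.2, 0.5, 0.9], [0.2, 0.5, 0.9]]
distorted = [[0.2, 0.5, 0.4], [0.2, 0.5, 0.7]]
features = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]  # hypothetical learned feature map

residual = spatial_residual_map(reference, distorted)
guided = guide_features(features, residual)
```

Feature responses in undistorted regions are suppressed to zero, while responses in distorted regions are kept in proportion to the local error, which is the sense in which the residual map acts as spatial attention guidance.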

4.3. Comparison with the State-of-the-Art Quality Assessment Methods

The above ablation experiments have verified the validity and rationality of the proposed TSCNN-VQA framework. In this subsection, we evaluate the effectiveness of the proposed method by comparing its performance on the LIVE and CSIQ VQA datasets with that of existing state-of-the-art quality assessment methods. To make the experimental results more convincing, different categories of quality assessment methods are selected. In detail, the comparative methods include: (1) classical IQA methods (PSNR [7], SSIM [2], and VIF [30]), (2) classical VQA methods (MOVIE [13], ST-MAD [18], VMAF [31]), and (3) deep learning-based VQA methods (DeepVQA [20], C3DVQA [5], and the method in [21]). The results are shown in Table 5.

Table 5.

Performance comparison (median value) of the LIVE and CSIQ VQA datasets.

To better analyze the comparison results, the best PLCC and SROCC are shown in bold, and the second highest results are underlined. Among the three categories of methods, the traditional IQA methods perform the worst. The reason for this is that IQA methods completely ignore the impact of temporal information on video quality. In contrast, the classical VQA methods take temporal visual perception or temporal effects into consideration. Hence, their performance indicators are higher than those of the traditional IQA methods. Recently, the employment of deep learning has further improved performance. Among the three deep learning-based methods, DeepVQA is an FR-VQA network model using CNNs and attention mechanisms, and C3DVQA applies 3D convolution instead of 2D convolution for feature extraction. Compared with 2D convolution, 3D convolution can describe both temporal and spatial information; hence, C3DVQA outperforms DeepVQA. The method in [21] combines the advantages of the above two methods, that is, both 3D convolution and attention mechanisms are integrated into the network. Consequently, in terms of performance, the method in [21] is better than DeepVQA and C3DVQA, especially on the CSIQ VQA dataset. As for the proposed TSCNN-VQA, feature extraction considers not only the existence of spatial and temporal information but also the difference between spatial and temporal perception. Meanwhile, the integration of the SE-Block also improves the efficiency of feature extraction. Therefore, the proposed TSCNN-VQA reports the best performance indicators on both the LIVE VQA dataset and the CSIQ VQA dataset.

5. Conclusions

In this paper, an end-to-end two-stream convolutional network, named TSCNN-VQA, is proposed for video quality assessment. Firstly, since spatial and temporal information is perceived by the HVS through different mechanisms, two purposely designed branches are constructed for spatial and temporal feature extraction. Then, to simulate the joint processing of spatial and temporal information by the HVS, the extracted spatial and temporal features are continuously fused by the feature fusion module to obtain spatial–temporal joint features. In addition, the channel attention module is integrated to model the fact that the human eye is more attentive to regions of interest and regions of severe distortion. At the same time, the spatial–temporal joint feature maps are multiplied by the spatial residual maps so that the distortion information guides feature extraction. Finally, non-linear regression is employed to obtain quality scores. The experimental results on the LIVE and CSIQ VQA datasets validate the rationality of each module in TSCNN-VQA, while the performance comparison with existing quality assessment methods demonstrates its effectiveness.

In the future, we will continue to improve the performance of the proposed VQA method by exploring spatial and temporal perception in depth, thus designing better feature extraction networks according to their respective characteristics. We also plan to modify the proposed network and apply it to no-reference video quality assessment. Moreover, to fully demonstrate the research significance of video quality evaluation methods, we will attempt to apply these methods to optimize the performance of practical video systems. On the one hand, the evaluation results from the VQA method can be fed back into the video processing system, for example to optimize encoding and compression parameters, and thereby enhance processing efficiency. On the other hand, using the VQA method for the real-time evaluation of video quality can help determine parameter settings for streaming platforms and choose compression levels or transmission strategies during network transmission. Ultimately, implementing the VQA method in this way is expected to optimize system performance and enhance user experience.

Author Contributions

Conceptualization, J.H. and Y.S.; methodology, J.H.; software, J.H. and Y.L.; validation, Z.W. and Y.L.; formal analysis, J.H. and Y.S.; data curation, J.H. and Z.W.; writing—original draft preparation, J.H.; writing—review and editing, Z.W., Y.L., and Y.S.; visualization, J.H. and Z.W.; supervision, Y.S.; funding acquisition, Y.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Zhejiang Province, grant number LQ20F010002.

Data Availability Statement

The datasets used in this study are publicly available open-source datasets.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Li, J.; Li, X. Study on no-reference video quality assessment method incorporating dual deep learning networks. Multimed. Tools Appl. 2023, 82, 3081–3100.

2. Ding, K.; Ma, K.; Wang, S.; Simoncelli, E.P. Image quality assessment: Unifying structure and texture similarity. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 2567–2581.

3. Fang, Y.; Yan, J.; Du, R.; Zuo, Y.; Wen, W.; Zeng, Y.; Li, L. Blind quality assessment for tone-mapped images by analysis of gradient and chromatic statistics. IEEE Trans. Multimed. 2020, 23, 955–966.

4. Ahn, S.; Choi, Y.; Yoon, K. Deep learning-based distortion sensitivity prediction for full-reference image quality assessment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 344–353.

5. Xu, M.; Chen, J.; Wang, H.; Liu, S.; Li, G.; Bai, Z. C3DVQA: Full-reference video quality assessment with 3D convolutional neural network. In Proceedings of the 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 4447–4451.

6. Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. Adv. Neural Inf. Process. Syst. 2014, 27, 568–576.

7. Qian, J.; Wu, D.; Li, L.; Cheng, D.; Wang, X. Image quality assessment based on multi-scale representation of structure. Digit. Signal Process. 2014, 33, 125–133.

8. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

9. Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

10. Wang, Z.; Li, Q. Video quality assessment using a statistical model of human visual speed perception. J. Opt. Soc. Am. A 2007, 24, B61–B69.

11. Moorthy, A.K.; Bovik, A.C. Efficient video quality assessment along temporal trajectories. IEEE Trans. Circuits Syst. Video Technol. 2010, 20, 1653–1658.

12. Seshadrinathan, K.; Soundararajan, R.; Bovik, A.C.; Cormack, L.K. A subjective study to evaluate video quality assessment algorithms. In Proceedings of the Human Vision and Electronic Imaging XV, San Jose, CA, USA, 18–21 January 2010; Volume 7527, pp. 128–137.

13. Seshadrinathan, K.; Bovik, A.C. Motion tuned spatio-temporal quality assessment of natural videos. IEEE Trans. Image Process. 2009, 19, 335–350.

14. Wang, Y.; Jiang, T.; Ma, S.; Gao, W. Novel spatio-temporal structural information based video quality metric. IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 989–998.

15. Aydin, T.O.; Čadík, M.; Myszkowski, K.; Seidel, H.-P. Video quality assessment for computer graphics applications. ACM Trans. Graph. 2010, 29, 1–12.

16. He, L.; Lu, W.; Jia, C.; Hao, L. Video quality assessment by compact representation of energy in 3D-DCT domain. Neurocomputing 2017, 269, 108–116.

17. Vu, P.V.; Vu, C.T.; Chandler, D.M. A spatiotemporal most-apparent-distortion model for video quality assessment. In Proceedings of the 2011 18th IEEE International Conference on Image Processing (ICIP), Brussels, Belgium, 11–14 September 2011; pp. 2505–2508.

18. Manasa, K.; Channappayya, S.S. An optical flow-based full reference video quality assessment algorithm. IEEE Trans. Image Process. 2016, 25, 2480–2492.

19. Yan, P.; Mou, X. Video quality assessment based on motion structure partition similarity of spatiotemporal slice images. J. Electron. Imaging 2018, 27, 033019.

20. Kim, W.; Kim, J.; Ahn, S.; Kim, L.; Lee, S. Deep video quality assessor: From spatio-temporal visual sensitivity to a convolutional neural aggregation network. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 219–234.

21. Chen, J.; Wang, H.; Xu, M.; Li, G.; Liu, S. Deep Neural Networks for End-to-End Spatiotemporal Video Quality Prediction and Aggregation. In Proceedings of the 2021 IEEE International Conference on Multimedia and Expo (ICME), Shenzhen, China, 5–9 July 2021; pp. 1–6.

22. Daly, S.J. Visible differences predictor: An algorithm for the assessment of image fidelity. In Proceedings of the Human Vision, Visual Processing, and Digital Display III, San Jose, CA, USA, 10–13 February 1992; Volume 1666, pp. 2–15.

23. Kim, J.; Nguyen, A.D.; Lee, S. Deep CNN-based blind image quality predictor. IEEE Trans. Neural Netw. Learn. Syst. 2018, 30, 11–24.

24. You, J.; Ebrahimi, T.; Perkis, A. Attention driven foveated video quality assessment. IEEE Trans. Image Process. 2013, 23, 200–213.

25. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

26. Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 60.

27. Seshadrinathan, K.; Soundararajan, R.; Bovik, A.C.; Cormack, L.K. Study of subjective and objective quality assessment of video. IEEE Trans. Image Process. 2010, 19, 1427–1441.

28. Vu, P.V.; Chandler, D.M. ViS3: An algorithm for video quality assessment via analysis of spatial and spatiotemporal slices. J. Electron. Imaging 2014, 23, 013016.

29. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

30. Sheikh, H.R.; Bovik, A.C. Image information and visual quality. IEEE Trans. Image Process. 2006, 15, 430–444.

31. Li, Z.; Aaron, A.; Katsavounidis, I.; Moorthy, A.; Manohara, M. Toward a Practical Perceptual Video Quality Metric, September 2019. Available online: https://medium.com/netflix-techblog/toward-a-practical-perceptual-video-quality-metric-653f208b9652 (accessed on 6 June 2016).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).