1. Introduction

Lithium-ion batteries, as established energy storage devices, are extensively employed in industrial and domestic applications. Consequently, market demand for these batteries and the stringency of their quality requirements continue to escalate [1]. The manufacturing process for lithium battery electrode sheets is intricate and vulnerable to factors such as environmental conditions, equipment reliability, and human intervention. These factors often result in defects such as metal leakage, holes, and scratches on the electrode sheet surface [2]. Since electrodes are crucial components of lithium batteries, surface defects can significantly impair the battery’s performance and longevity and may pose safety hazards [3]. Hence, detecting defects in lithium battery electrodes is imperative to ensure the reliability and safety of these batteries.

Defect detection technology for lithium battery electrodes is mainly divided into traditional algorithms and deep learning-based algorithms. Traditional algorithms struggle with the high-speed moving electrode found in industrial production environments: defects on the electrode are small, varied, and numerous, while traditional algorithms rely on predefined feature extraction rules that may fail to capture complex or subtle defect features. They are also slow on complex defect detection tasks, which makes real-time detection in industrial production hard to achieve. Accordingly, more and more scholars have applied deep learning-based technology to the surface defect detection of lithium batteries. Deep learning-based object detection algorithms fall into two primary categories: single-stage and two-stage. Prominent two-stage algorithms include R-CNN, Fast R-CNN, and Faster R-CNN, which are widely recognized for their precision [4,5,6]. Standard single-stage methods such as You Only Look Once (YOLO) and Single Shot MultiBox Detector (SSD), on the other hand, are notable for their speed [7,8]. While two-stage algorithms excel in detection accuracy, they are generally slower than their single-stage counterparts [9,10,11]. This makes single-stage algorithms particularly attractive for applications such as lithium battery electrode sheet defect detection, where speed is crucial. The latest iteration in the YOLO series, YOLOv8, is a fitting solution for this application: it balances high detection speed with enhanced accuracy and thus meets the industrial demands of lithium battery electrode sheet defect detection.

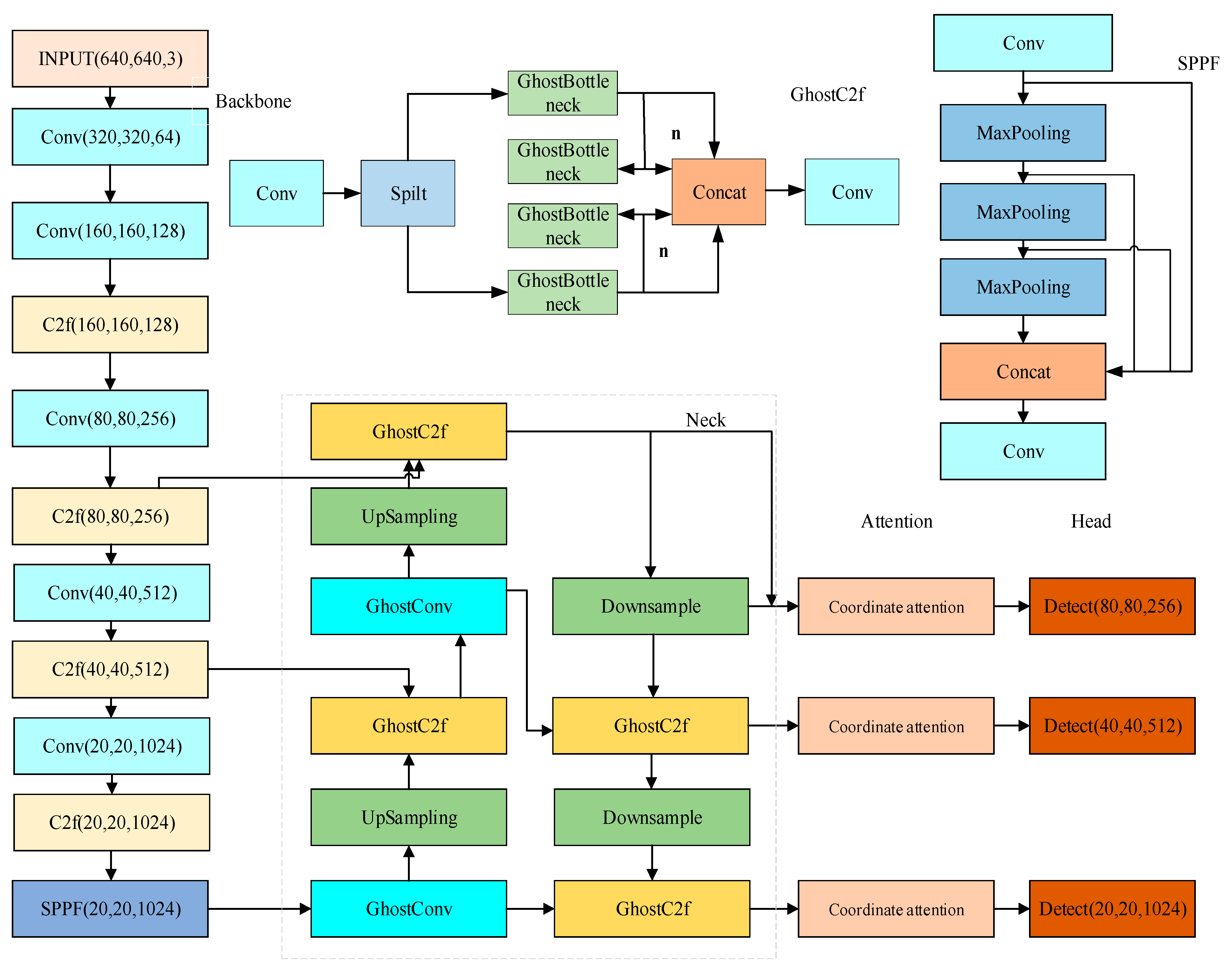

To address the inefficiency of traditional target detection methods in detecting small targets on lithium battery electrodes, this study introduces an improved model based on YOLOv8: YOLOv8-GCE (Ghost-CA-EIoU). The primary contributions of this algorithm are as follows:

Firstly, the lightweight GhostConv (GhostNet convolution) replaces the standard convolution, and a GhostC2f (GhostNet C2f) module is designed to replace part of the C2f (faster CSP bottleneck with two convolutions) modules, which reduces model computation and improves feature expression performance.

Then, the coordinate attention (CA) module is incorporated into the neck network, strengthening the feature extraction of the improved model.

Finally, the EIoU loss function is added to improve the regression performance of the network, resulting in faster convergence.

This paper is organized as follows:

Section 2 provides an overview of the related work in the field.

Section 3 delves into the YOLOv8 network model, detailing the strategies employed for its improvement.

Section 4 first describes the experimental setup, including the environment and parameter configurations. It then presents a series of experiments (comparative, ablation, module position analysis, and algorithm visualization), all conducted on the dataset to validate the efficacy of the improved algorithm.

Finally, Section 5 summarizes the study and outlines prospective directions for future research.

2. Related Work

Defect detection methodologies for lithium battery electrode sheets fall predominantly into two categories: traditional algorithms and those based on deep learning. The latter is garnering increasing attention from scholars for detecting surface defects of lithium batteries [12]. At present, research on lithium battery electrode sheet defect detection relies primarily on traditional detection algorithms and image segmentation technology. For instance, Xu et al. used standard image processing tools such as contrast adjustment, the Canny operator, and logic operations to process images of lithium battery electrode sheets. By extracting texture features, edge features, and HOG (Histogram of Oriented Gradients) features of the defect area, their method achieved an average recognition rate of 98.3% on the test set [13]. Additionally, Liu et al. developed a rapid background compensation algorithm to counteract issues caused by uneven thickness of the electrode sheets and inconsistent lighting conditions during imaging [14]. They employed an adaptive threshold segmentation algorithm based on gray histogram reconstruction, demonstrating significant improvements in detection outcomes and operational speed over classical methods such as Otsu’s algorithm. Nevertheless, as the new energy industry rapidly evolves, traditional algorithms increasingly fall short of the escalating demands for detection accuracy and real-time performance. Consequently, deep learning-based defect detection algorithms, with their robust characterization capabilities and superior adaptability, are progressively supplanting traditional methods and have become a focal point of research on surface defect detection.

The YOLO (You Only Look Once) algorithm, first introduced by Redmon et al. in 2016, marked a significant advancement in object detection. Subsequent work continuously enhanced the original framework, leading to YOLOv2 and YOLOv3. These iterations steadily improved detection performance, garnering extensive attention and becoming a focal point of research within the industry [15,16]. Cao et al. improved the YOLOv3 network model to address the difficulty of detecting fine defects on the wafer surface, raising mAP by 13 percentage points and improving detection speed [17]. Lan et al. used YOLOv3 as a foundational model and merged the batch normalization (BN) layer into the convolutional layer. This combination shortened the model’s training duration, and enhancements to the loss function further optimized overall performance [18]. In 2020, Bochkovskiy et al. integrated multiple practical modules into the traditional YOLO framework and proposed the YOLOv4 algorithm, which offers higher efficiency and more accurate detection and has been studied and improved by many experts and scholars [19]. Huang et al. focused on optimizing the PANet structure within the YOLOv4 framework. Their approach expanded novel shallow features and integrated them with the existing feature layers, which reduced the model’s complexity and enhanced the network’s performance in defect detection. As a result, the modified model exhibited a 3.4% increase in mean average precision (mAP) and a 29% boost in detection speed [20]. Zhang et al. combined a cross-stage partial bottleneck module and a small target prediction head with YOLOv5 to detect panel defects and obtained good accuracy on multi-scale targets [21,22]. Dai et al. proposed a tripartite feature enhancement pyramid network and introduced a feature calibration module that calibrates the up-sampled features to achieve accurate correspondence in feature fusion [23].

The YOLOv8 algorithm, building upon the successes of its predecessors, introduces a series of new functions and enhancements that aim to increase the model’s structural flexibility and improve its detection accuracy, marking a further step in the development of the YOLO series. To address small target detection in special scenes, Lou et al. introduced a new downsampling technique and a feature fusion network tailored to the YOLOv8 framework. This approach combines shallow and deep information while preserving essential background feature details, which supports effective detection in complex visual environments [24]. Li et al. advanced the YOLOv8 framework by refining its lightweight backbone network and integrating the Bi-PAN-FPN (Bi-Directional Path Aggregation Network-Feature Pyramid Network) module into the neck network. This mitigated the loss of information during long-distance feature transmission, thereby enhancing the overall efficiency and accuracy of the model [25]. Previous research in target detection has yielded significant advancements in recognition precision and efficiency. However, challenges persist, particularly in detecting small targets with variable morphology and low contrast. To address these issues, the algorithm proposed in this paper uses YOLOv8 as the foundational model and introduces several key enhancements. First, the neck is made lightweight by replacing standard convolutions with the GhostConv convolutional layer and part of the C2f modules with the novel GhostC2f module. This facilitates learning residual features and enriches the network with diverse gradient flow information. Next, the CA module is integrated into the neck network to strengthen its feature extraction capabilities [26]. Finally, the EIoU loss function replaces the original YOLOv8 loss function. This change accelerates convergence, enhances localization accuracy, and aligns the model more closely with the practical demands of industrial applications, where detection speed and accuracy are paramount.

3. YOLOv8 Algorithm Improvement Strategy

YOLOv8, the most recent iteration in the YOLO series, can be applied to object detection, image classification, and instance segmentation tasks. In this paper, it is used for object detection. The structure of the YOLOv8 model is illustrated in Figure 1.

The YOLOv8 model, as depicted in Figure 1, comprises four key components: Input, Backbone, Neck, and Head. At the Input stage, YOLOv8 performs data preprocessing, employing four primary augmentation methods during training: Mosaic enhancement, Mix-up enhancement, Random Perspective enhancement, and HSV (Hue, Saturation, Value) augmentation. These methods enrich the detection background of images, thereby increasing the number of targets and the diversity of the image dataset [27]. An anchor-free approach is used to improve the prediction strategy and accelerate NMS (Non-Maximum Suppression) [28].

The backbone of the YOLOv8 model is primarily composed of convolution layers, C2f modules, and SPPF (Spatial Pyramid Pooling Fast), which together enhance feature expression. This is achieved through a dense residual structure that enables the model to capture and process complex input features effectively. The C2f module adds more branches to enrich the gradient flow during backpropagation. Split and concatenation operations change the number of channels according to the scaling coefficient, reducing computational complexity and model capacity. At the tail of the backbone, the SPPF layer is employed to enlarge the receptive field and capture feature information from multiple levels of the image [29].

The neck, serving as an intermediary layer bridging the backbone and the detection head in the YOLOv8 model, is intricately structured. It comprises two up-sampling layers and multiple C2f modules, which are instrumental in further extracting multi-scale features. YOLOv8 continues to utilize the FPN-PAN architecture to construct its feature pyramid. This design choice ensures a comprehensive integration of the network’s information flow from upper and lower levels, thereby significantly augmenting the model’s detection efficiency [30,31].

As the terminal component responsible for predictions, the head of the YOLOv8 model employs an Anchor-Free approach to determine the location and classification of targets, drawing on the feature attributes processed by the neck. This method is particularly effective in identifying targets with atypical lengths and widths. In the output phase, the head employs a decoupled structure, distinctly segregating the detection and classification tasks. Furthermore, the model’s accuracy is refined by identifying positive and negative samples based on scores weighted by classification and regression metrics. This approach streamlines the prediction process and significantly enhances the model’s overall performance.

3.1. Introduce the GhostNet Module to Improve the Network Module

The Huawei team has proposed the lightweight CNN network known as the Ghostnet [

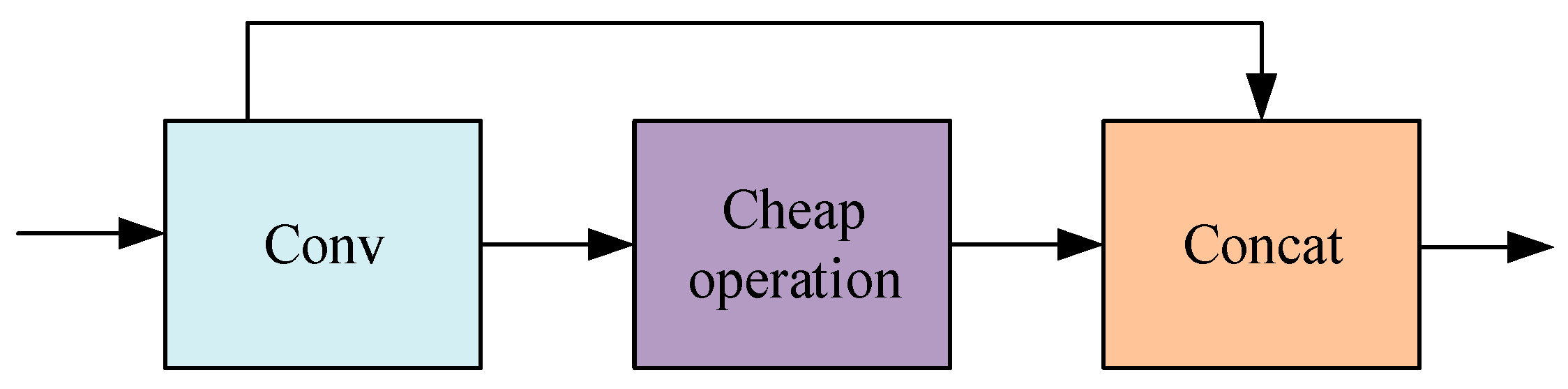

32]. As shown in

Figure 2, its core Ghost module is to generate feature maps through original convolution and cheap operation. Cheap operation can be a linear transformation of the remaining feature maps, or it can also be the result of the original convolution generate similar feature diagrams through depth-wise convolution. This article uses the GhostConv’s new convolutional method. The convolutional method uses uniformly mixed operations to penetrate the standard convolution information into the information generated by depth, which can be separated. Keep information interaction between channels, design the GhostC2f structure based on GhostConv, use GhostConv instead of ordinary convolution operations on the neck’s side, and swap out the initial C2f module with the GhostC2f module while maintaining the complexity of the model calculation and the accuracy of the reasoning time.
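The saving from the cheap operation can be illustrated with a simple weight count. The sketch below is a minimal illustration, not the paper's implementation: it assumes that half of the output channels come from the ordinary convolution and the other half from a depth-wise convolution with a 5 × 5 kernel (a common choice in open-source GhostConv implementations), and it ignores bias and batch normalization parameters. The function names `conv_params` and `ghost_conv_params` are hypothetical.

```python
def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a standard k x k convolution (bias and BN ignored)."""
    return c_in * c_out * k * k


def ghost_conv_params(c_in: int, c_out: int, k: int, cheap_k: int = 5) -> int:
    """Weights of a Ghost-style convolution under the assumptions above.

    Half of the output channels come from an ordinary k x k convolution;
    the other half come from a depth-wise cheap_k x cheap_k convolution
    applied to those channels (one filter per channel).
    """
    if c_out % 2:
        raise ValueError("c_out must be even for an equal split")
    primary = c_out // 2
    primary_weights = conv_params(c_in, primary, k)
    cheap_weights = primary * cheap_k * cheap_k
    return primary_weights + cheap_weights


if __name__ == "__main__":
    std = conv_params(128, 256, 3)
    ghost = ghost_conv_params(128, 256, 3)
    print(f"standard: {std}, ghost: {ghost}, ratio: {ghost / std:.3f}")
```

For 128 input channels, 256 output channels, and a 3 × 3 kernel, this gives 294,912 weights for the standard convolution against 150,656 for the Ghost-style layer, roughly half.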

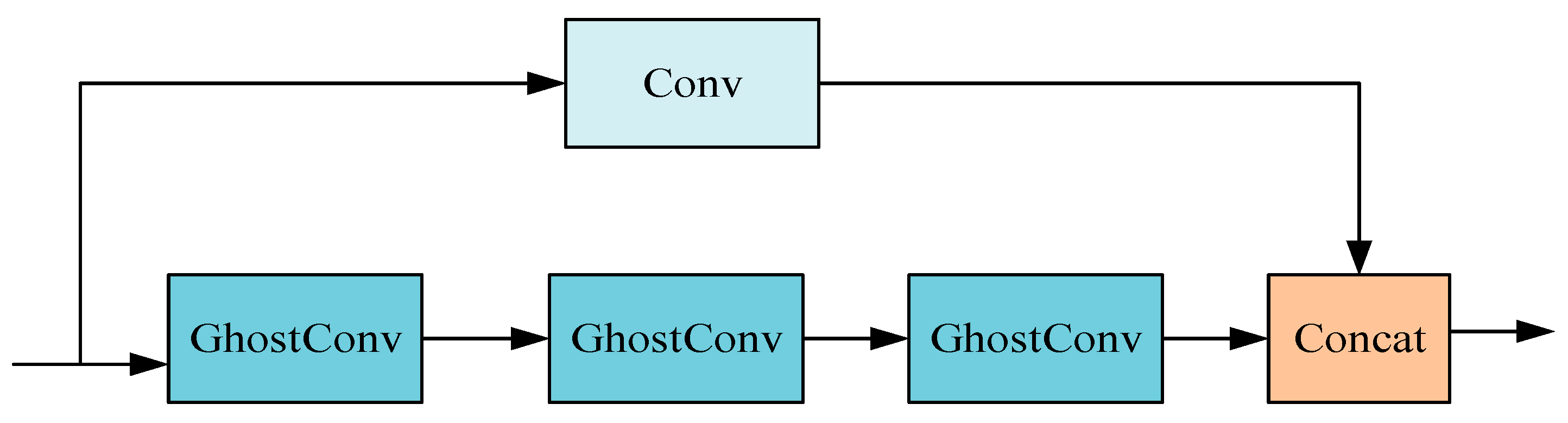

As depicted in Figure 3, the study introduces a novel double-layer bottleneck structure, GhostBottleneck (GhostNet Bottleneck), comprising one convolution layer coupled with multiple GhostConv layers. This design combines the robust feature extraction capabilities of conventional convolution with the efficient computational performance of GhostConv. The structure is adept at extracting rich features across various levels and scales, thereby enhancing the speed of processing redundant information. This functionality is particularly beneficial for detecting minor and complex multi-type defects on lithium battery electrodes, meeting the stringent requirements of precision and efficiency in such applications.

As illustrated in Figure 4, the GhostC2f module designed in this paper replaces all bottlenecks in the C2f module of the original network with the GhostBottleneck. The redesigned GhostC2f module employs an interstage feature fusion strategy and truncated gradient flow, which amplify the variation in learned characteristics across network layers, minimize the impact of redundant gradient information, and bolster the network’s learning capacity. Integrating the GhostConv and GhostC2f modules significantly reduces the number of conventional 3 × 3 convolutions in the original architecture, leading to substantial savings in computational resources and training time and a markedly smaller model. Furthermore, the combination of hierarchical design and residual connections improves gradient flow, making deeper network models easier to train effectively.

3.2. CA Module

To enhance the model’s capacity for expressing features, the CA module was added to the YOLOv8 model for local feature enhancement so that the network can ignore the interference of irrelevant information [33]. The CA module, shown in Figure 5, is a new channel attention module that can be flexibly added at multiple positions in an existing model. Using GhostConv to preserve the hidden links between channels in the earlier part of the network deepens the model, which increases the resistance to data flow. By the time the feature maps reach the neck, the channel dimension has reached its maximum while the width and height dimensions have become small. Therefore, integrating the CA module after the neck module significantly enhances the model’s ability to learn regional features specific to surface defects on lithium battery electrodes. This placement allows a more focused extraction of defect features from the electrode sheets and a more relevant aggregation of feature data, which in turn improves the detection precision of the algorithm.

First, the input feature map is pooled along each spatial direction: the two-dimensional global average pooling is decomposed into two one-dimensional pooling operations, one along the width and one along the height, so that the CA module can obtain attention weights in both directions. This yields one feature map per direction, as shown in Formulas (1) and (2).
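Assuming the standard coordinate attention formulation, Formulas (1) and (2) take the following form, where $x_c$ denotes channel $c$ of the input feature map of height $H$ and width $W$ (the symbols $z^h$ and $z^w$ are notation assumed here):

$$
z_c^h(h) = \frac{1}{W} \sum_{0 \le i < W} x_c(h, i) \tag{1}
$$

$$
z_c^w(w) = \frac{1}{H} \sum_{0 \le j < H} x_c(j, w) \tag{2}
$$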

Subsequently, the two feature maps along the width and height, each carrying a global receptive field in its direction, are concatenated and fed into a shared 1 × 1 convolution, which reduces the channel dimension to C/r of the original. After batch normalization, the resulting feature map F1 is passed through the Sigmoid activation function, producing a feature map f of shape 1 × (W + H) × C/r, as indicated by Formula (3).
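Under the description above, Formula (3) can be sketched as follows. The pooled inputs $z^h$ and $z^w$, the concatenation brackets, and the operator names are assumed notation; $\sigma$ is the Sigmoid function named in the text:

$$
F_1 = \mathrm{BN}\big(\mathrm{Conv}_{1 \times 1}([z^h, z^w])\big), \qquad f = \sigma(F_1) \tag{3}
$$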

Next, the feature map f is divided into its height and width parts, and each part is processed with a 1 × 1 convolution that restores the original number of channels, producing feature maps $F^h$ and $F^w$ with an equal number of channels. The attention weights $g^h$ and $g^w$ for the height and width dimensions, respectively, are then obtained through the Sigmoid activation function, as given in Formulas (4) and (5).
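A sketch of Formulas (4) and (5) consistent with this description is given below, where $f^h$ and $f^w$ denote the height and width parts of $f$ and $\sigma$ is the Sigmoid function (all symbols here are assumed notation following the standard coordinate attention formulation):

$$
F^h = \mathrm{Conv}_{1 \times 1}(f^h), \qquad g^h = \sigma(F^h) \tag{4}
$$

$$
F^w = \mathrm{Conv}_{1 \times 1}(f^w), \qquad g^w = \sigma(F^w) \tag{5}
$$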

Following the computation above, the attention weights $g^h$ and $g^w$ of the input feature map along the height and width dimensions are obtained. Finally, the input feature map is multiplied element-wise by both weights, producing an output feature map that carries attention in both the width and height directions, as specified in Formula (6).
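In the standard coordinate attention formulation, which Formula (6) is assumed to follow, the weighting for channel $c$ at position $(i, j)$ reads as follows, with $x_c$ the input, $y_c$ the output, and $g^h$, $g^w$ the height and width attention weights (assumed notation):

$$
y_c(i, j) = x_c(i, j) \times g_c^h(i) \times g_c^w(j) \tag{6}
$$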

3.3. Improved Loss Function

The loss function measures the difference between the network’s predicted boxes and the actual (ground-truth) boxes [34,35]; its value decreases as the two get closer. Improving the loss function can therefore improve both model performance and training behavior. EIoU consists of an IoU loss ($L_{IoU}$), a distance loss ($L_{dis}$), and an aspect loss ($L_{asp}$). The IoU loss function is calculated as Formula (7).
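Assuming Formula (7) is the usual IoU-based loss, it can be written as follows, with $A$ the predicted box and $B$ the actual box:

$$
L_{IoU} = 1 - IoU, \qquad IoU = \frac{|A \cap B|}{|A \cup B|} \tag{7}
$$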

In this context, A represents the region of the predicted bounding box, while B denotes the region of the actual bounding box. The term $\alpha$ is a weight function, and v measures the consistency of the aspect ratio. Additionally, C is defined as the smallest box enclosing both the predicted and actual boxes. In this model, w and h denote the width and height of the predicted box, respectively, while $w^{gt}$ and $h^{gt}$ represent the width and height of the actual box. The formulas for $\alpha$ and v are given in Formulas (8) and (9).
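Assuming these follow the standard CIoU definitions, with $w^{gt}$ and $h^{gt}$ the width and height of the actual box (assumed notation), Formulas (8) and (9) read:

$$
\alpha = \frac{v}{(1 - IoU) + v} \tag{8}
$$

$$
v = \frac{4}{\pi^2} \left( \arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h} \right)^2 \tag{9}
$$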

The EIoU loss function is calculated as Formula (10).
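Under the standard EIoU definition, which Formula (10) is assumed to follow, the loss is the sum of the three terms, where $\rho(\cdot,\cdot)$ denotes the Euclidean distance and the superscript $gt$ marks quantities of the actual box (assumed notation):

$$
L_{EIoU} = L_{IoU} + L_{dis} + L_{asp} = 1 - IoU + \frac{\rho^2(b, b^{gt})}{w_c^2 + h_c^2} + \frac{\rho^2(w, w^{gt})}{w_c^2} + \frac{\rho^2(h, h^{gt})}{h_c^2} \tag{10}
$$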

Here, C is the smallest rectangle enclosing both the predicted and actual boxes, and $w_c$ and $h_c$ represent its width and height, respectively. The center points of the actual box and the predicted box are denoted by $b^{gt}$ and $b$, respectively.
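The three EIoU terms can be made concrete for a single pair of boxes. The following is a minimal, dependency-free sketch under the standard EIoU definition, not the training code used in this paper; the function name `eiou_loss`, the corner format (x1, y1, x2, y2), and the small constant `eps` guarding against division by zero are assumptions made for the illustration.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x1, y1, x2, y2)


def eiou_loss(pred: Box, gt: Box, eps: float = 1e-9) -> float:
    """EIoU loss for one predicted box and one actual box."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    w, h = px2 - px1, py2 - py1
    w_gt, h_gt = gx2 - gx1, gy2 - gy1

    # IoU term: overlap area divided by union area.
    inter_w = max(0.0, min(px2, gx2) - max(px1, gx1))
    inter_h = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = inter_w * inter_h
    union = w * h + w_gt * h_gt - inter
    l_iou = 1.0 - inter / (union + eps)

    # Width and height of the smallest rectangle enclosing both boxes.
    w_c = max(px2, gx2) - min(px1, gx1)
    h_c = max(py2, gy2) - min(py1, gy1)

    # Distance term: squared center distance over squared enclosing diagonal.
    dx = (px1 + px2) / 2 - (gx1 + gx2) / 2
    dy = (py1 + py2) / 2 - (gy1 + gy2) / 2
    l_dis = (dx * dx + dy * dy) / (w_c ** 2 + h_c ** 2 + eps)

    # Aspect term: width and height differences, each normalized separately.
    l_asp = (w - w_gt) ** 2 / (w_c ** 2 + eps) + (h - h_gt) ** 2 / (h_c ** 2 + eps)

    return l_iou + l_dis + l_asp


if __name__ == "__main__":
    print(eiou_loss((0, 0, 2, 2), (1, 1, 3, 3)))
```

For two 2 × 2 boxes offset by one unit in each direction, IoU is 1/7, the distance term is 2/18, and the aspect term vanishes, so the loss is 61/63 (about 0.968). Identical boxes give a loss of essentially zero.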

Current network models still need to improve the accuracy and speed of lithium battery electrode defect detection, and their parameter counts and model sizes are too large. To address these problems, the algorithm in this paper takes YOLOv8 as the base framework, keeps the backbone consistent with that of YOLOv8, and replaces all the C2f modules in the neck network with GhostC2f modules. All the standard convolutions in the neck network are replaced by GhostConv, which effectively reduces the complexity of the neck network. The CA module is incorporated into the neck network to strengthen the feature extraction of the improved model. The EIoU loss function replaces the original YOLOv8 loss function, which improves the regression performance of the network. Integrating these improved modules with YOLOv8 yields a high-precision, real-time electrode defect detection method for lithium-ion batteries, termed YOLOv8-GCE, whose network structure is depicted in Figure 6.

4. Experiment

4.1. Experimental Environment and Parameter Configuration

This experiment used a self-constructed dataset of lithium battery electrode sheet images, encompassing defect types such as holes, scratches, and metal leakage. Table 1 displays the configuration of the experimental environment.

The dataset prepared for this experiment was partitioned into training and test sets at an 8:2 ratio. The specific parameter settings used in the experiment are detailed in Table 2.

4.2. Evaluation Index

The evaluation indexes chosen for detection accuracy were mean average precision (mAP@0.5), recall (R), and precision (P). FPS, the number of frames detected per second, was chosen as the index of real-time performance; its value depends on the hardware of the experimental platform as well as on the weight of the algorithm. Precision is the proportion of correctly predicted positive samples (TP) among all predicted positive samples (TP + FP), as delineated in Formula (11).
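Written out from this definition, Formula (11) is:

$$
P = \frac{TP}{TP + FP} \tag{11}
$$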

Recall is the proportion of correctly predicted positive samples (TP) among all actual positive samples (TP + FN), as shown in Formula (12).

In mAP, ‘m’ denotes the mean, and AP@0.5 refers to the average precision of one class when the IoU threshold used to decide whether a detection is correct is set to 0.5. mAP@0.5 is the mean of AP@0.5 over all classes and summarizes the relationship between the model’s precision (P) and recall (R): the higher the value, the easier it is for the model to keep a high precision at a high recall, as shown in Formulas (13) and (14).
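Formulas (13) and (14) are assumed here to take their usual form, with $N$ the number of defect classes and $AP_i$ the average precision of class $i$:

$$
AP = \int_0^1 P(R)\, dR \tag{13}
$$

$$
mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i \tag{14}
$$

The same quantities can be sketched in a few lines of Python. The function names and the all-point interpolation of the precision-recall curve are choices made for this illustration and are not taken from the paper's evaluation code.

```python
from typing import Sequence


def precision(tp: int, fp: int) -> float:
    """Share of predicted positives that are correct."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    """Share of actual positives that are detected."""
    return tp / (tp + fn) if tp + fn else 0.0


def average_precision(recalls: Sequence[float], precisions: Sequence[float]) -> float:
    """Area under the precision-recall curve (all-point interpolation).

    Points must be sorted by increasing recall.
    """
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Make precision monotonically non-increasing from right to left.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas between consecutive recall values.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(ap_per_class: Sequence[float]) -> float:
    """Mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


if __name__ == "__main__":
    ap = average_precision([0.5, 1.0], [1.0, 0.5])
    print(precision(8, 2), recall(8, 2), ap, mean_average_precision([ap, 1.0]))
```

With 8 true positives, 2 false positives, and 2 false negatives, both precision and recall equal 0.8; the two-point curve in the example has an AP of 0.75.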

4.3. Experimental Results and Analysis

4.3.1. Contrast Experiment

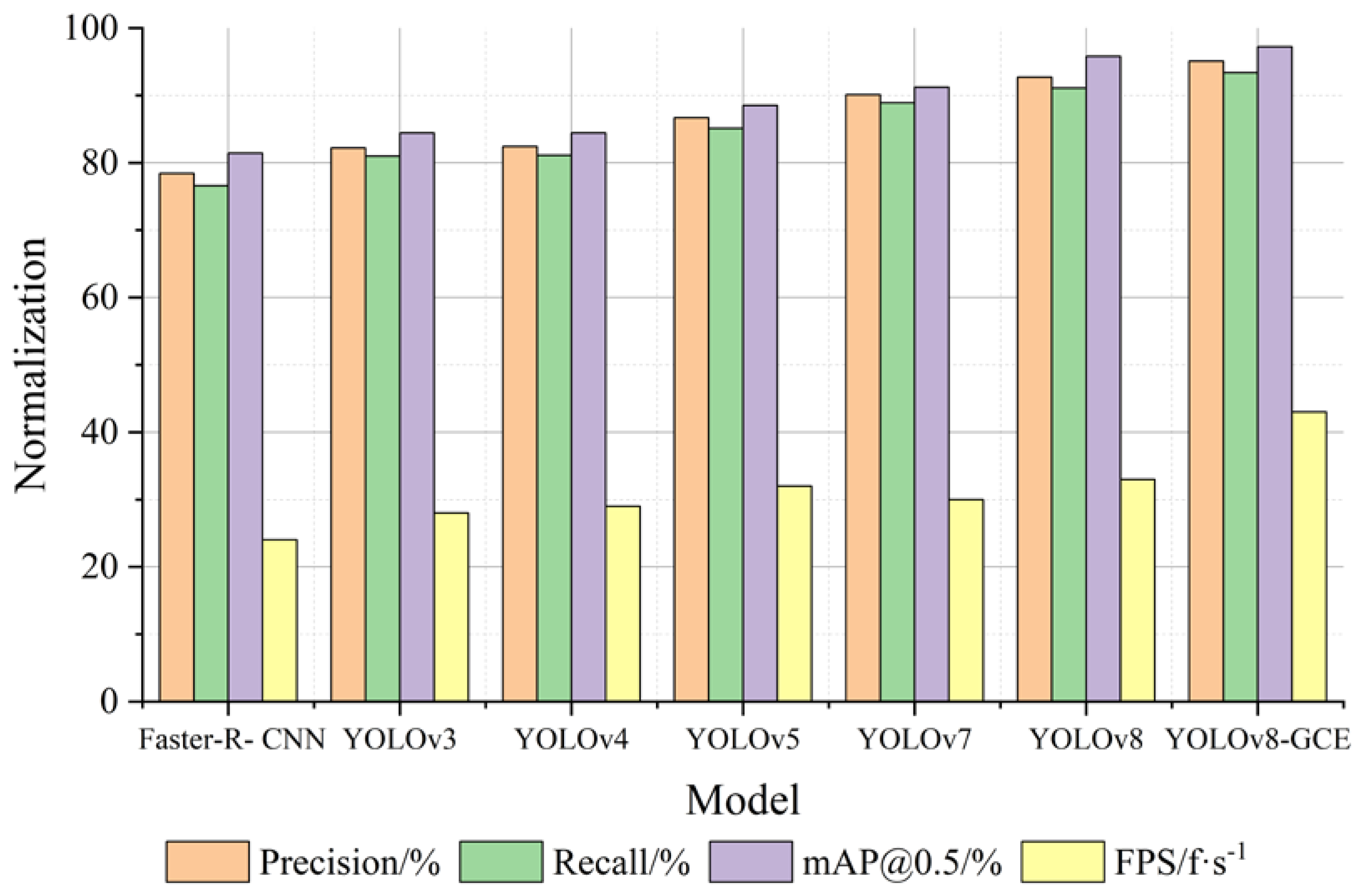

To verify the superiority of the proposed algorithm, it was compared experimentally with Faster R-CNN, YOLOv3, YOLOv4, YOLOv5, YOLOv7, and YOLOv8 under an identical experimental setting (unified configuration and the same dataset). According to Table 3 and Figure 7, the comprehensive performance of YOLOv8-GCE in precision, recall, mAP@0.5, and detection rate (FPS) is superior to that of Faster R-CNN, YOLOv3, YOLOv4, YOLOv5, YOLOv7, and YOLOv8.

4.3.2. Ablation Experiment

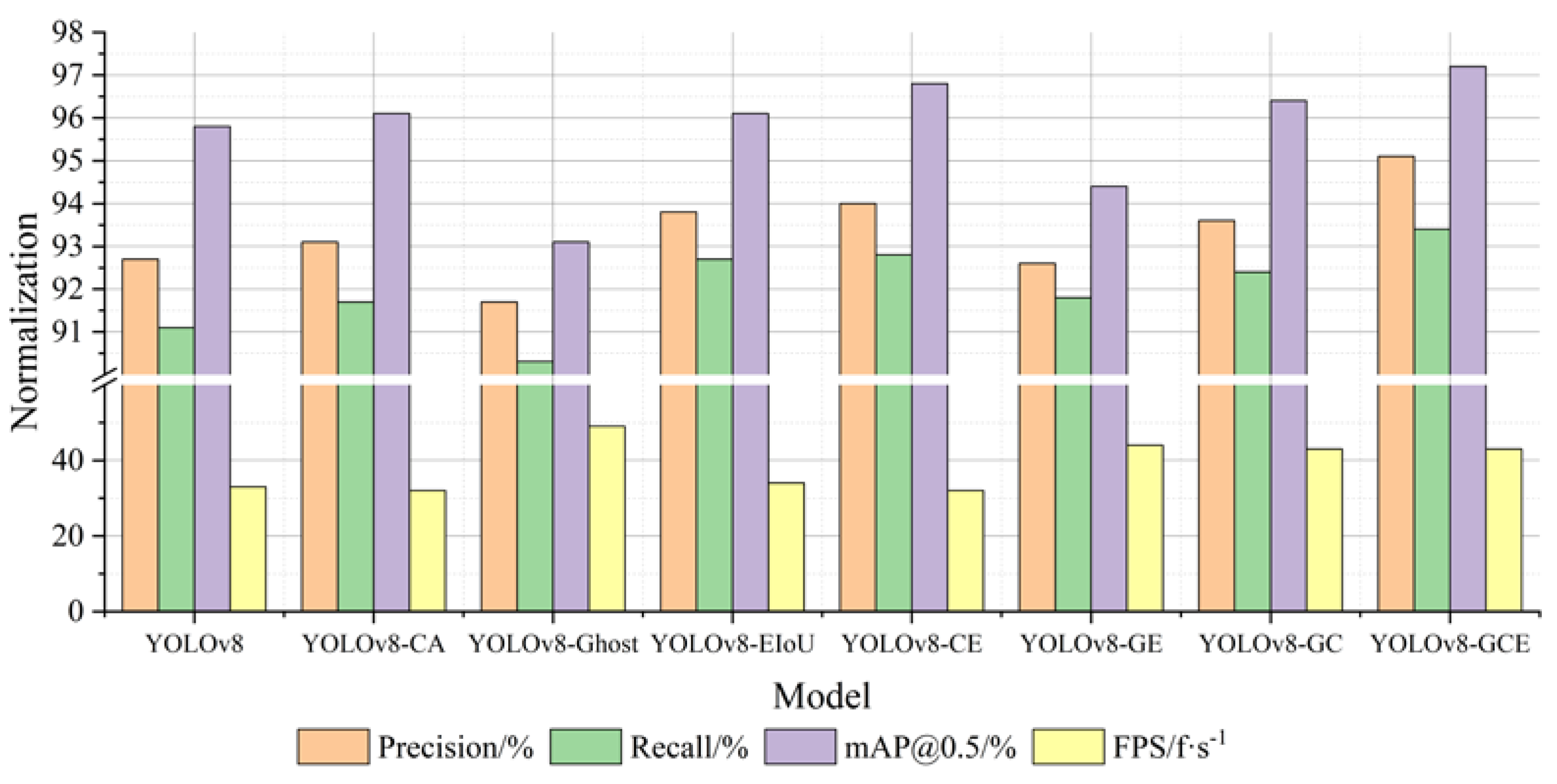

The ablation experiment was designed to confirm the efficacy of each modified part of the YOLOv8-GCE network model. As indicated in Table 4, ablation experiments were carried out using various combinations of the improved modules, with the original YOLOv8 serving as the baseline model. A checkmark indicates that the network model contains the module, and a cross mark indicates that it does not.

As depicted in Figure 8, the YOLOv8-GCE model employs a more efficient network structure than the standard YOLOv8. Compared with the YOLOv8-CA model, which adds only the CA module, and the YOLOv8-EIoU model, which adds only EIoU, the improved algorithm adopts a more efficient network structure, and its detection rate (FPS) is superior. This shows that adding GhostNet to the network has a significant impact on increasing the detection rate. YOLOv8-CE, YOLOv8-GE, and YOLOv8-GC, which combine the three improvement strategies in pairs, perform well in accuracy and show a significant improvement in FPS, indicating that adding the CA module and EIoU to the YOLOv8 model has a significant effect on detection accuracy. YOLOv8-GCE performs best: compared with the YOLOv8 model, precision, recall, and mAP improve by 2.4%, 2.3%, and 1.4%, respectively, and the detection rate (FPS) is significantly improved, which demonstrates the efficacy of the algorithm proposed in this paper.

4.3.3. CA Module Position Analysis

This paper also designs a position comparison test to investigate the influence of the CA module’s placement on the detection results. The CA module is placed in three different locations: behind the backbone’s SPPF, inside the neck’s GhostC2f, and behind the neck’s GhostC2f. Table 5 displays the comparison results. When the CA module is added to the neck, the model achieves higher precision and recall than when it is added to the backbone. This is because the backbone mainly extracts the low-level characteristics of the image and cannot capture global information about the defect features of the electrode sheet well, whereas the neck uses the output of the backbone to better extract and fuse features. Moreover, positioning the CA module within the neck’s GhostC2f module yields substantial improvements. Adding an attention mechanism to the C2f modules in the later part of the network allows for more refined adjustments during feature fusion. Consequently, the model can focus more closely on the target’s feature information, which significantly enhances the precision of target detection.

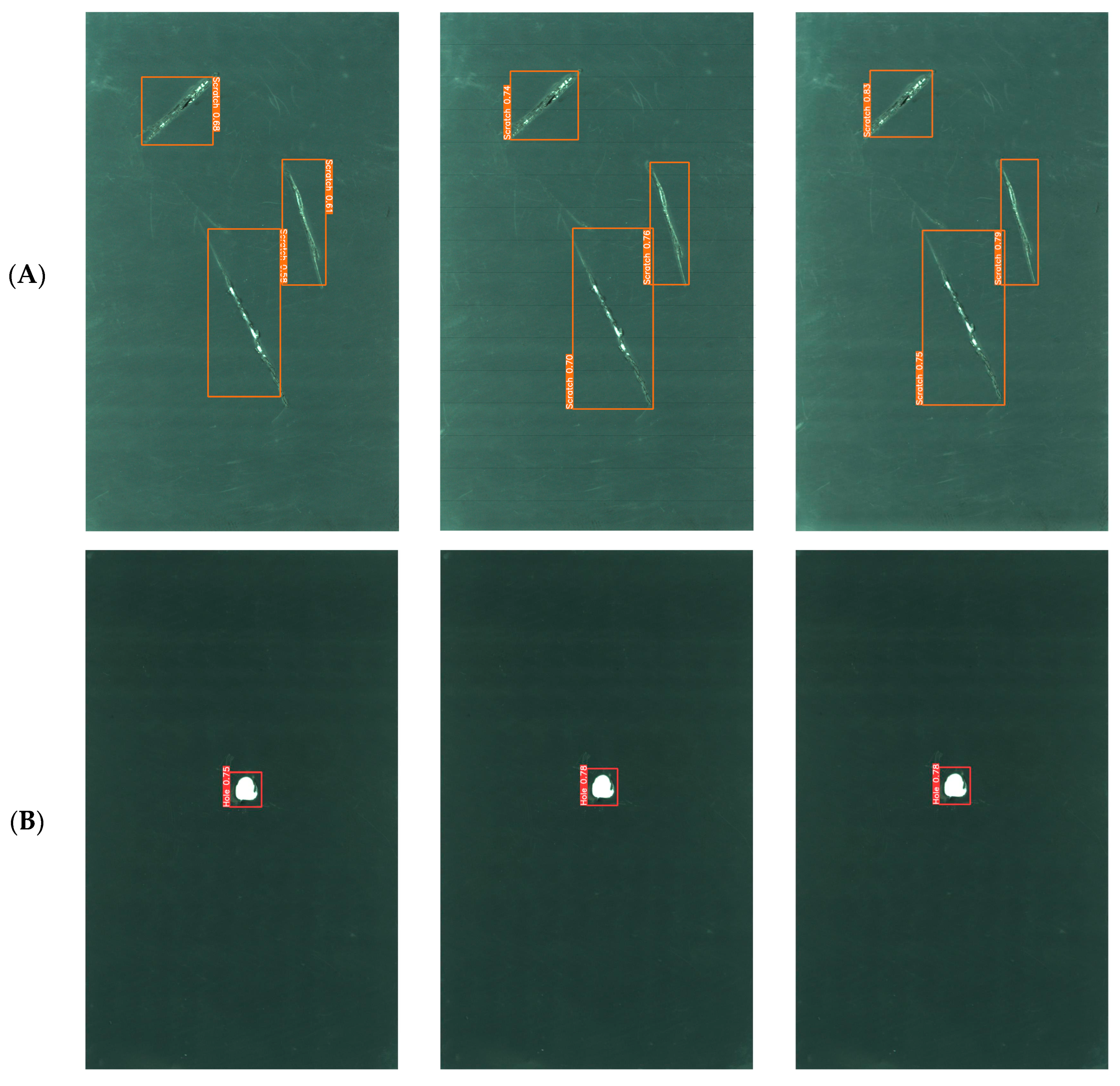

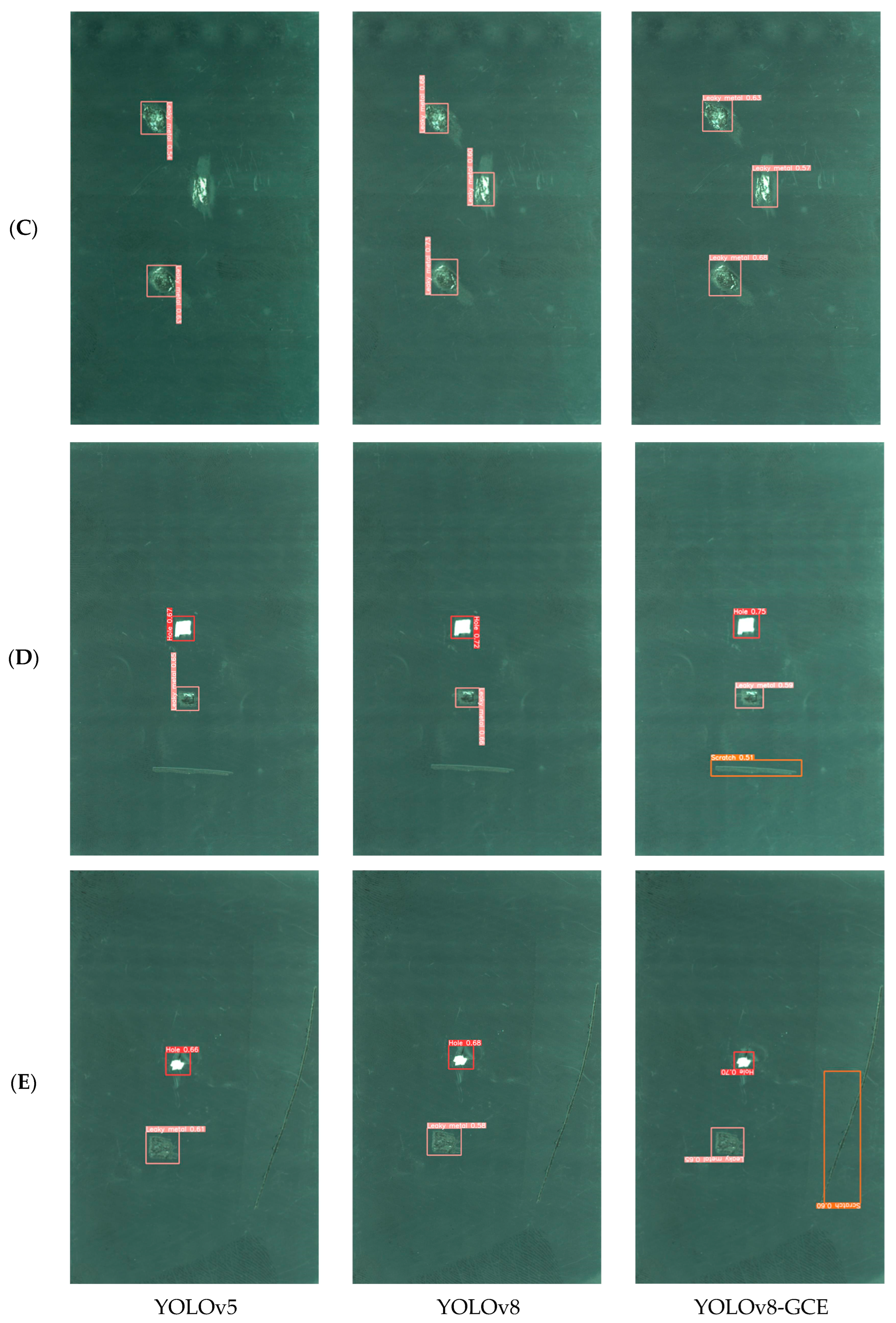

4.4. Algorithm Verification

Compared with YOLOv3 and YOLOv4, YOLOv5 is a more mature and complete YOLO series model. YOLOv8 is improved based on YOLOv5. The network structure of YOLOv7 is shallow. Therefore, YOLOv5, YOLOv8, and the improved YOLOv8-GCE algorithm were selected for a comparison of detection results on lithium battery electrode sheet defects. As shown in Figure 9, Group A compares detection of a single scratch defect, Group B a single hole defect, Group C a single metal leakage defect, and Groups D and E mixed defects.

The results from Groups A, B, and C show that all three algorithms detect the single scratch defect in Group A, the single hole defect in Group B, and the single metal leakage defect in Group C, indicating that the YOLO algorithms achieve high accuracy in detecting lithium battery electrode sheet defects. In this experiment, the IoU threshold is set to 0.5, and the detection confidence of the improved YOLOv8-GCE algorithm is significantly higher than that of the YOLOv5 and YOLOv8 algorithms.

The comparison of results between Group D and Group E shows that the improved YOLOv8-GCE algorithm can find targets that the other models are unable to identify. In these two groups, mixed defect images containing holes, metal leakage, and scratches were tested. The YOLOv5 algorithm performed poorly: it failed to detect scratch defects and was also inferior to the other two algorithms in detecting holes and metal leakage. The YOLOv8 algorithm likewise failed to detect scratch defects in the relatively complex mixed defect images, but it performed better than YOLOv5. The YOLOv8-GCE algorithm detected all defect types in the mixed defect images and performed better in detecting scratch defects. The YOLOv8-GCE algorithm presented in this paper can thus reduce incorrect and missed detections of lithium battery electrode sheet defects, demonstrating its dependability and its ability to satisfy the requirements of industrial applications.

5. Conclusions and Future Work

This paper proposes YOLOv8-GCE, an enhanced and optimized battery electrode defect detection model based on YOLOv8. Firstly, the lightweight GhostConv replaces the standard convolution, and the GhostC2f module is designed to replace part of the C2f modules, which reduces model computation and improves feature expression performance. Then, the CA module is incorporated into the neck network to strengthen the feature extraction of the improved model. Finally, the EIoU loss function replaces the original YOLOv8 loss function, which improves the regression performance of the network. According to the experimental findings, the mAP of the YOLOv8-GCE model on the self-built dataset reaches 97.2%, and the FPS is maintained at 43 frames per second. Compared with the existing models, the method has higher detection accuracy and reduces the requirement for platform computing power. Future research will focus on deploying the improved model on automatic lithium battery inspection equipment and on refining the proposed algorithm in practical applications.