3.1. The Structure and Working Mechanism of the FNN

The FNN is a hybrid system composed of artificial neural networks and fuzzy systems, where the former provides learning ability [

55], and the latter provides interpretability and handles uncertainty. Typically, the structure of the FNN is divided into layers, with each layer responsible for executing specific tasks; it can be broadly categorized into two parts: layers that implement the premise part of the rule, and layers that implement the consequent part of the rule [

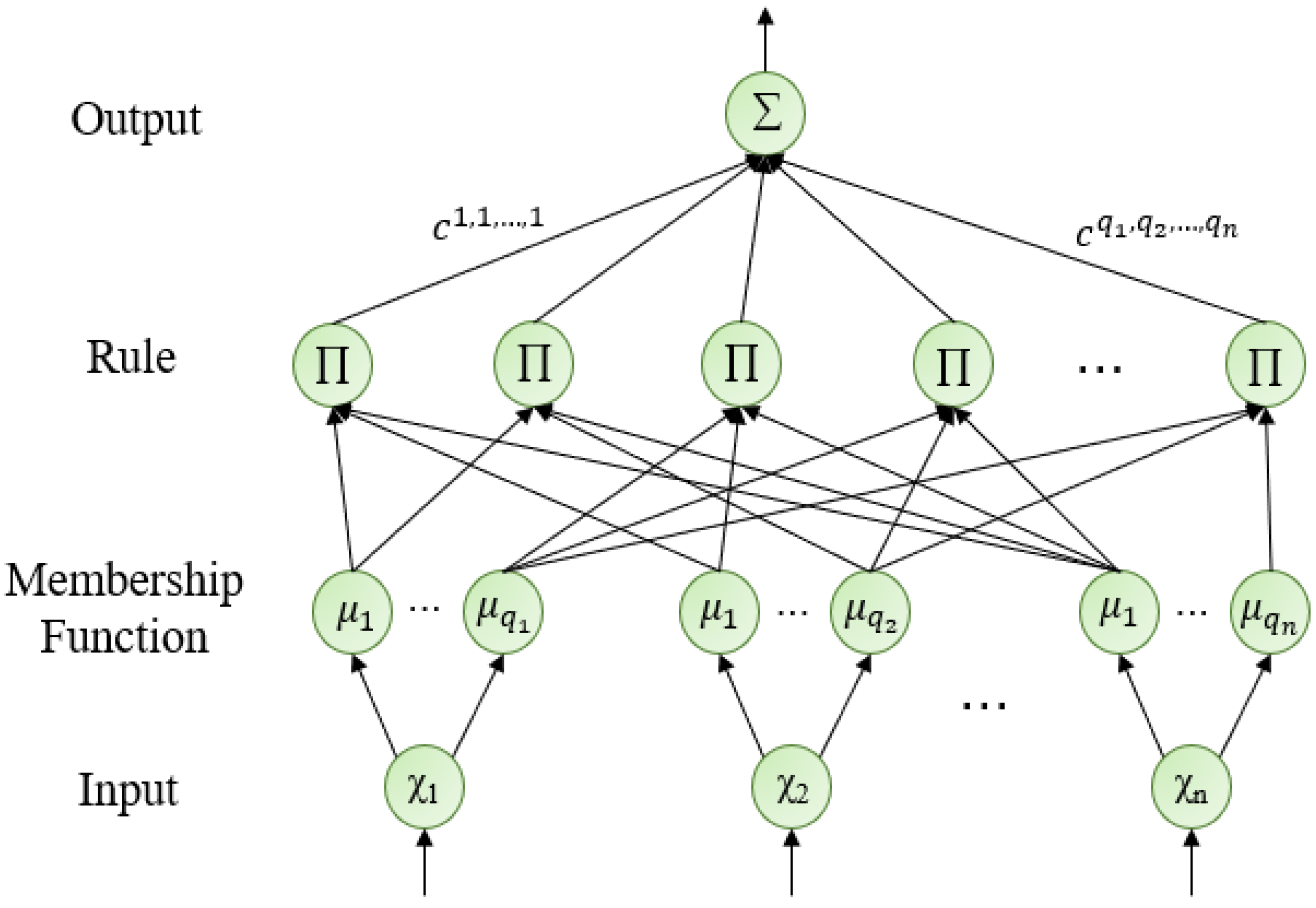

37]. While the number of layers in the FNN design can vary in specific implementations, the functions implemented are the same. In this section, we introduce a commonly-used four-layer structure model known as the IMRO structure, as illustrated in

Figure 1.

The IMRO structure of the FNN is divided into four layers: the input layer, the membership-function layer, the rule layer, and the output layer. The input layer passes the raw input to the next layer without modification. In the membership-function layer, each input $x_i$ is fed into $M_i$ different membership functions. Each membership function represents one fuzzy semantic. Its output, a value between 0 and 1, is the membership degree to which the input $x_i$ satisfies that semantic. In the rule layer, each rule node selects one membership function from those associated with each input, and together these form the premise of a fuzzy rule. Each rule node also corresponds to a rule parameter $y^{j_1 j_2 \cdots j_n}$. The rule corresponding to this rule node is:

$$\text{IF } x_1 \text{ is } A_1^{j_1} \text{ and } x_2 \text{ is } A_2^{j_2} \text{ and } \cdots \text{ and } x_n \text{ is } A_n^{j_n}, \text{ THEN } y = y^{j_1 j_2 \cdots j_n} \quad (1)$$

where $\mathbf{x} = (x_1, x_2, \ldots, x_n)$ is the input of the FNN and $j_i \in \{1, 2, \ldots, M_i\}$; $A_i^{j_i}$ represents a fuzzy semantic that cannot be expressed precisely, such as “fast”, “slow”, “high”, or “low”, and is represented mathematically by a membership function $\mu_{A_i^{j_i}}$, $i = 1, 2, \ldots, n$; the rule parameter $y^{j_1 j_2 \cdots j_n}$ is a crisp (precise) value.

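The output of the rule node is the activation strength $\mu^{j_1 j_2 \cdots j_n}$ of the rule, which is calculated as:

$$\mu^{j_1 j_2 \cdots j_n}(\mathbf{x}_p) = \mu_{A_1^{j_1}}(x_{p1}) \wedge \mu_{A_2^{j_2}}(x_{p2}) \wedge \cdots \wedge \mu_{A_n^{j_n}}(x_{pn}) \quad (2)$$

In this formula, $\mu_{A_i^{j_i}}$ is the membership function that represents the fuzzy semantic $A_i^{j_i}$, and $\mathbf{x}_p = (x_{p1}, \ldots, x_{pn})$ is the $p$-th sample. The expression $\mu_{A_i^{j_i}}(x_{pi})$ is the membership degree of the $p$-th sample for the fuzzy proposition “$x_{pi}$ is $A_i^{j_i}$”. The symbol $\wedge$ denotes the fuzzy intersection operator (a t-norm), which means “and”. Therefore, the activation strength $\mu^{j_1 j_2 \cdots j_n}(\mathbf{x}_p)$ is the degree to which the input data satisfy the premise of the rule, “$x_1$ is $A_1^{j_1}$ and $\cdots$ and $x_n$ is $A_n^{j_n}$”.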

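Finally, the output layer sums the outputs of all rules to obtain the overall output $f(\mathbf{x}_p)$ of the FNN, where the output of each rule is its activation strength multiplied by its rule parameter. The output is calculated as:

$$f(\mathbf{x}_p) = \sum_{j_1=1}^{M_1} \sum_{j_2=1}^{M_2} \cdots \sum_{j_n=1}^{M_n} \mu^{j_1 j_2 \cdots j_n}(\mathbf{x}_p)\, y^{j_1 j_2 \cdots j_n} \quad (3)$$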
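To make Equations (2) and (3) concrete, the following minimal Python sketch evaluates an IMRO-style FNN for one input vector. It assumes triangular membership functions, as used in Section 3.2, and the algebraic product as the fuzzy intersection operator; the text does not fix the t-norm, and the minimum is an equally common choice. The names `triangular` and `fnn_output` are hypothetical, and rule indices are 0-based rather than 1-based.

```python
import itertools
import math


def triangular(x, left, peak, right):
    """Triangular membership degree: 1 at the peak, 0 outside (left, right)."""
    if x == peak:
        return 1.0
    if x <= left or x >= right:
        return 0.0
    if x < peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def fnn_output(x, mfs, rule_params):
    """Forward pass of an IMRO-style FNN (illustrative sketch).

    x: input vector (x_1, ..., x_n).
    mfs[i]: list of (left, peak, right) triangles for input i.
    rule_params: {(j_1, ..., j_n): y} with 0-based membership-function indices.
    """
    # Membership-function layer: degree of x_i for every membership function.
    degrees = [[triangular(xi, *mf) for mf in mfs[i]] for i, xi in enumerate(x)]
    total = 0.0
    # Rule layer: one node per combination of membership functions.
    for j in itertools.product(*(range(len(m)) for m in mfs)):
        # Activation strength, Eq. (2), with the product as fuzzy intersection.
        strength = math.prod(degrees[i][ji] for i, ji in enumerate(j))
        # Output layer, Eq. (3): accumulate strength * rule parameter.
        total += strength * rule_params[j]
    return total
```

For example, with two inputs, the triangles `[(0, 0, 1), (0, 1, 1)]` for each input, and rule parameters equal to $j_1 + j_2$, `fnn_output([0.25, 0.5], mfs, params)` returns 0.75. With the product t-norm and complementary triangles, the output interpolates multilinearly between neighboring rule parameters.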

3.2. Modified Wang–Mendel Method

In 1992, Wang and Mendel proposed the Wang–Mendel (WM) method for extracting rules from data to form a rule base for fuzzy systems [

53]. Wang further improved the method in 2003 [

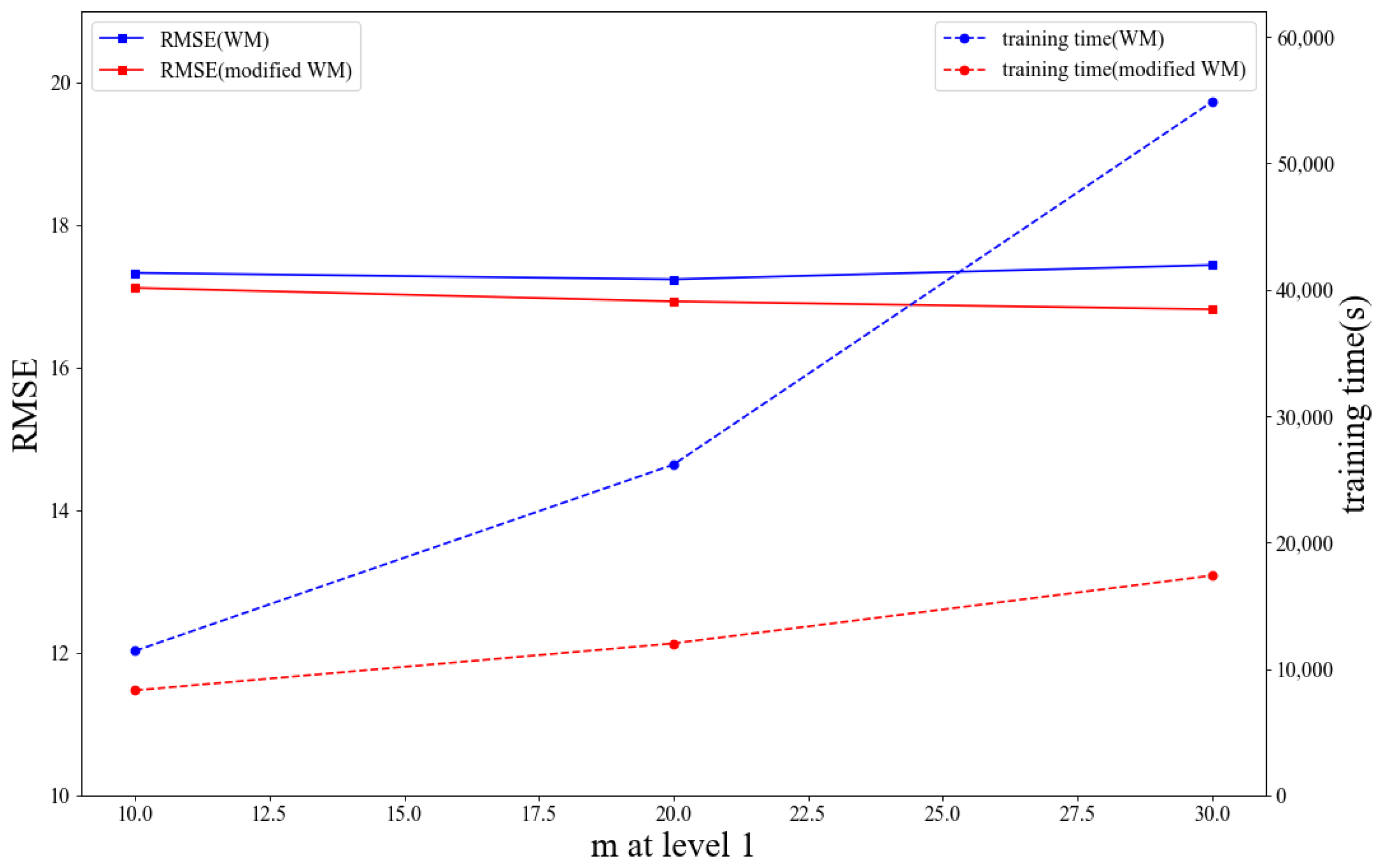

54]. The WM method has the advantage of training the fuzzy system parameters using only one pass through the training data, making it faster than iterative algorithms. However, it faces difficulties with high-dimensional problems due to rule explosion. In 2020, Wang proposed the deep convolutional fuzzy system (DCFS) model to address this issue by designing the low-dimensional fuzzy systems in a bottom-up, layer-by-layer fashion using the Wang–Mendel method. However, the membership function generated by the Wang–Mendel method in DCFS does not consider the regional differences in data, leading to many redundant rules and increased training time. To address this, we modified the membership function division of the Wang–Mendel method to generate uneven rules. The modified Wang–Mendel method is presented below.

Step 1: Given $P$ training input–output data pairs, each pair is denoted as:

$$(\mathbf{x}_p;\, y_p), \quad p = 1, 2, \ldots, P \quad (4)$$

where $\mathbf{x}_p = (x_{p1}, x_{p2}, \ldots, x_{pn}) \in \mathbb{R}^n$ is the input and $y_p \in \mathbb{R}$ is the output.

Determine a preset number of membership functions $N_i$ for each input dimension $i$. The value of $N_i$ depends on the physical meaning of the input dimension: the more complex the input dimension, the larger $N_i$ should be.

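Step 2: Calculate the maximum value $x_i^{\max}$ and the minimum value $x_i^{\min}$ of each input variable $x_i$ over the data, and divide the interval $[x_i^{\min}, x_i^{\max}]$ into $N_i$ equal blocks:

$$R_i^k = \left[x_i^{\min} + (k-1)h_i,\; x_i^{\min} + k h_i\right] \quad (5)$$

where $h_i = (x_i^{\max} - x_i^{\min})/N_i$ and $k = 1, 2, \ldots, N_i$. Calculate the variance of the outputs $y_p$ of the samples whose $i$-th input falls in each region $R_i^k$ of (5), and record it as $\sigma_i^k$, $k = 1, 2, \ldots, N_i$. In addition, compute a threshold $\theta_i$ from $\sigma_i^{\max}$ and $\sigma_i^{\min}$, the maximum and minimum values in $\{\sigma_i^1, \ldots, \sigma_i^{N_i}\}$, respectively, and a hyperparameter $\alpha$. The threshold $\theta_i$ is used for pruning in the subsequent steps.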
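Step 2 for a single input dimension might look like the sketch below. Two details are assumptions: the region variances use the population variance (with 0 for an empty region), and because the threshold formula is not reproduced here, `pruning_threshold` uses a hypothetical linear interpolation between the smallest and largest variance, controlled by `alpha`. Both function names are illustrative.

```python
import statistics


def uniform_partition(xs, ys, n_blocks):
    """Split [min(xs), max(xs)] into n_blocks equal-width regions (Eq. (5))
    and return (edges, variances); variances[k] is the population variance of
    the outputs y whose input x falls in region k (0.0 for an empty region)."""
    lo, hi = min(xs), max(xs)
    if hi == lo:
        raise ValueError("input dimension is constant; cannot partition")
    width = (hi - lo) / n_blocks
    edges = [lo + k * width for k in range(n_blocks + 1)]
    buckets = [[] for _ in range(n_blocks)]
    for x, y in zip(xs, ys):
        # Clamp so that x == max(xs) lands in the last region.
        k = min(int((x - lo) / width), n_blocks - 1)
        buckets[k].append(y)
    variances = [statistics.pvariance(b) if b else 0.0 for b in buckets]
    return edges, variances


def pruning_threshold(variances, alpha):
    """HYPOTHETICAL threshold: interpolate between the smallest and largest
    region variance with 0 <= alpha <= 1. The text only states that the
    threshold depends on these two values and a hyperparameter."""
    v_min, v_max = min(variances), max(variances)
    return v_min + alpha * (v_max - v_min)
```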

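Step 3: For each dimension $i$ whose partition number $N_i$ satisfies the pruning condition, which is defined by a hyperparameter, we perform a pruning operation. We traverse the regions in order, starting from the first region $R_i^1$. If the current region is $R_i^k$, the region $R_i^{k+1}$ has not yet been traversed, and the two regions satisfy the merge condition, then $R_i^k$ and $R_i^{k+1}$ are merged into a new region

$$R_i' = R_i^k \cup R_i^{k+1}.$$

The merge condition is defined in terms of $\sigma_i^k$, $\sigma_i^{k+1}$, and $\sigma_i'$, the output variances of regions $R_i^k$, $R_i^{k+1}$, and $R_i'$, respectively, and a hyperparameter $\beta$. In addition, the width of a merged region must be less than a preset multiple of the original region width. After a merge, we check whether $R_i'$ can be merged with the next region $R_i^{k+2}$. If not, we move on to the next region, and continue until all regions have been traversed.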
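The traversal in Step 3 can be sketched as a greedy left-to-right scan. The exact merge condition is not reproduced in this text, so the rule below is hypothetical: two adjacent regions merge only if both have variance below the threshold `theta`, the merged region's variance is at most `beta * theta`, and the merged width stays below `max_width_factor` times the uniform width. Only the control flow (merge, then try to absorb the next region) follows the description above.

```python
import statistics


def _variance(ys):
    # Population variance; an empty region contributes 0.
    return statistics.pvariance(ys) if ys else 0.0


def merge_regions(regions, theta, beta, max_width_factor):
    """Greedy left-to-right merge of adjacent regions along one input dimension.

    regions: list of (left, right, ys) for the uniform regions, in order,
             where ys are the outputs of the samples falling in that region.
    HYPOTHETICAL merge rule: both regions have variance below theta, the
    merged region has variance at most beta * theta, and the merged width
    stays below max_width_factor times the uniform region width.
    """
    base_width = regions[0][1] - regions[0][0]
    merged = []
    current = regions[0]
    for nxt in regions[1:]:
        cand_ys = current[2] + nxt[2]
        cand_width = nxt[1] - current[0]
        if (_variance(current[2]) < theta
                and _variance(nxt[2]) < theta
                and _variance(cand_ys) <= beta * theta
                and cand_width < max_width_factor * base_width):
            # Merge, then try to absorb the following region as well.
            current = (current[0], nxt[1], cand_ys)
        else:
            merged.append(current)
            current = nxt
    merged.append(current)
    return merged
```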

Step 4: For each merged region, we take the center of the region as the vertex of a triangular membership function and the centers of the adjacent regions as its endpoints. For the two regions at the edges, the left endpoint of the first membership function is $x_i^{\min}$ and the right endpoint of the last one is $x_i^{\max}$. We thus obtain $M_i$ membership functions $\mu_{A_i^1}, \ldots, \mu_{A_i^{M_i}}$ for input $x_i$, where $M_i \le N_i$ is the number of regions after merging; each membership function represents a semantic $A_i^{j}$.

Figure 2 shows the triangular membership functions obtained after merging regions for several inputs.
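A sketch of Step 4, turning merged regions into triangular membership functions, assuming each membership function is stored as a `(left_end, vertex, right_end)` triple (a hypothetical representation) and that `triangles_from_regions` is an illustrative name:

```python
def triangles_from_regions(boundaries, x_min, x_max):
    """boundaries: merged regions [(left, right), ...] in ascending order.
    Returns one (left_end, vertex, right_end) triangle per region: the vertex
    is the region center, the ends are the neighboring centers, and the two
    outermost ends are x_min and x_max."""
    centers = [(left + right) / 2 for left, right in boundaries]
    triangles = []
    for k, c in enumerate(centers):
        left_end = centers[k - 1] if k > 0 else x_min
        right_end = centers[k + 1] if k + 1 < len(centers) else x_max
        triangles.append((left_end, c, right_end))
    return triangles
```

Taken literally, the outer endpoints $x_i^{\min}$ and $x_i^{\max}$ give the edge triangles zero membership at the extremes of the data; a shoulder-shaped edge function would avoid this, but the text does not say that one is used.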

Step 5: Generate the rule space, which contains $M_1 \times M_2 \times \cdots \times M_n$ rule parameters $y^{j_1 j_2 \cdots j_n}$; the rule corresponding to each rule parameter is given in (1).

Step 6: Traverse all training data. For each training pair $(\mathbf{x}_p; y_p)$, calculate the activation strength $\mu^{j_1 \cdots j_n}(\mathbf{x}_p)$ of each rule according to Equation (2), and save the maximum activation strength $\mu^*(\mathbf{x}_p)$ together with the output $y_p$ in the rule that attains it. The maximum activation strength is calculated as

$$\mu^*(\mathbf{x}_p) = \max_{j_1, \ldots, j_n} \mu^{j_1 \cdots j_n}(\mathbf{x}_p).$$

After all $P$ training samples have been traversed, the rule parameter $y^{\mathbf{j}}$ of each rule that has saved at least one data pair is computed from its saved pairs, where $P$ is the total number of training samples, $\mathbf{j} = (j_1, \ldots, j_n)$ is the coordinate of the rule in the rule space, $\mu^{\mathbf{j}}(\mathbf{x}_p)$ is the activation strength of the $p$-th sample on the rule, $\mu^*(\mathbf{x}_p)$ is the maximum activation strength among all rules fired by the $p$-th sample, and $y_p$ is the output of the $p$-th sample.
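The single pass of Step 6 is sketched below. The activation uses the product t-norm, and because the parameter formula is not reproduced here, each rule parameter is assumed to be the activation-weighted mean of the outputs the rule saved; treat both as assumptions, and `learn_rule_params` as a hypothetical name. For clarity the sketch enumerates the whole rule space for every sample, although with the triangles of Step 4 only rules built from the (at most two) nonzero membership functions per input can fire.

```python
import itertools
import math
from collections import defaultdict


def triangular(x, left, peak, right):
    """Triangular membership degree: 1 at the peak, 0 outside (left, right)."""
    if x == peak:
        return 1.0
    if x <= left or x >= right:
        return 0.0
    if x < peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def learn_rule_params(X, Y, mfs):
    """One pass over the data (Step 6 sketch).

    X: input vectors; Y: outputs; mfs[i]: (left, peak, right) triangles for input i.
    Returns {rule index tuple: parameter} for rules that saved at least one pair.
    """
    saved = defaultdict(list)  # rule -> [(mu_star, y), ...]
    rule_space = list(itertools.product(*(range(len(m)) for m in mfs)))
    for x, y in zip(X, Y):
        degrees = [[triangular(xi, *mf) for mf in mfs[i]] for i, xi in enumerate(x)]
        best_rule, mu_star = None, 0.0
        for j in rule_space:
            # Product t-norm (an assumption; the text only says "fuzzy intersection").
            mu = math.prod(degrees[i][ji] for i, ji in enumerate(j))
            if mu > mu_star:
                best_rule, mu_star = j, mu
        if best_rule is not None:  # samples that fire no rule are skipped
            saved[best_rule].append((mu_star, y))
    # ASSUMED aggregation: activation-weighted mean of the saved outputs.
    return {j: sum(m * y for m, y in pairs) / sum(m for m, _ in pairs)
            for j, pairs in saved.items()}
```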

Step 7: For each rule whose parameter was not calculated in Step 6, we obtain its parameter from its neighboring rules: the parameter is the arithmetic mean of the parameters of those neighbors that already have one. This step is repeated until every rule in the rule space has a parameter. Rules $\mathbf{j}$ and $\mathbf{j}'$ are neighbors if and only if there is exactly one index $i$ such that $j'_i = j_i + 1$ or $j'_i = j_i - 1$, with all other coordinates equal.
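Step 7 can be sketched as repeated passes over the rule space. Whether newly filled rules should feed into other rules within the same pass is not specified; this hypothetical `fill_missing_params` updates synchronously, so a value filled in one pass is used only from the next pass onward.

```python
import itertools


def fill_missing_params(params, shape):
    """Step 7 sketch: give every rule in the rule space a parameter.

    params: {rule index tuple: value} for the rules learned in Step 6.
    shape:  (M_1, ..., M_n), the number of membership functions per input.
    """
    if not params:
        raise ValueError("at least one rule needs a learned parameter")
    filled = dict(params)
    rule_space = list(itertools.product(*(range(m) for m in shape)))
    while len(filled) < len(rule_space):
        updates = {}
        for j in rule_space:
            if j in filled:
                continue
            # Neighbors differ by +1 or -1 in exactly one coordinate.
            values = [filled[k]
                      for i in range(len(shape))
                      for k in (j[:i] + (j[i] - 1,) + j[i + 1:],
                                j[:i] + (j[i] + 1,) + j[i + 1:])
                      if k in filled]
            if values:
                updates[j] = sum(values) / len(values)
        # Synchronous update: rules filled in this pass become usable next pass.
        filled.update(updates)
    return filled
```

Because the rule grid is connected, every pass fills at least one rule, so the loop terminates once a single rule has a learned parameter.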

For the trained FNN, we use Equation (3) to obtain its output $f(\mathbf{x})$ for an input $\mathbf{x}$.

Compared with the ordinary WM method, our improvement adds a pruning operation consisting of Steps 2, 3 and 4. The ordinary WM method covers the data uniformly with triangular membership functions and ignores regional differences within the data. In our approach, we first partition each input range into uniform regions and evaluate the influence of each region by the variance of its outputs. We then merge adjacent regions with low influence into larger regions and construct one membership function for each merged region. This reduces the number of rules and speeds up training. Our experiments show that the modified WM method reduces training time by more than half compared with the ordinary WM method. The flowchart of the modified WM method is presented in Figure 3.

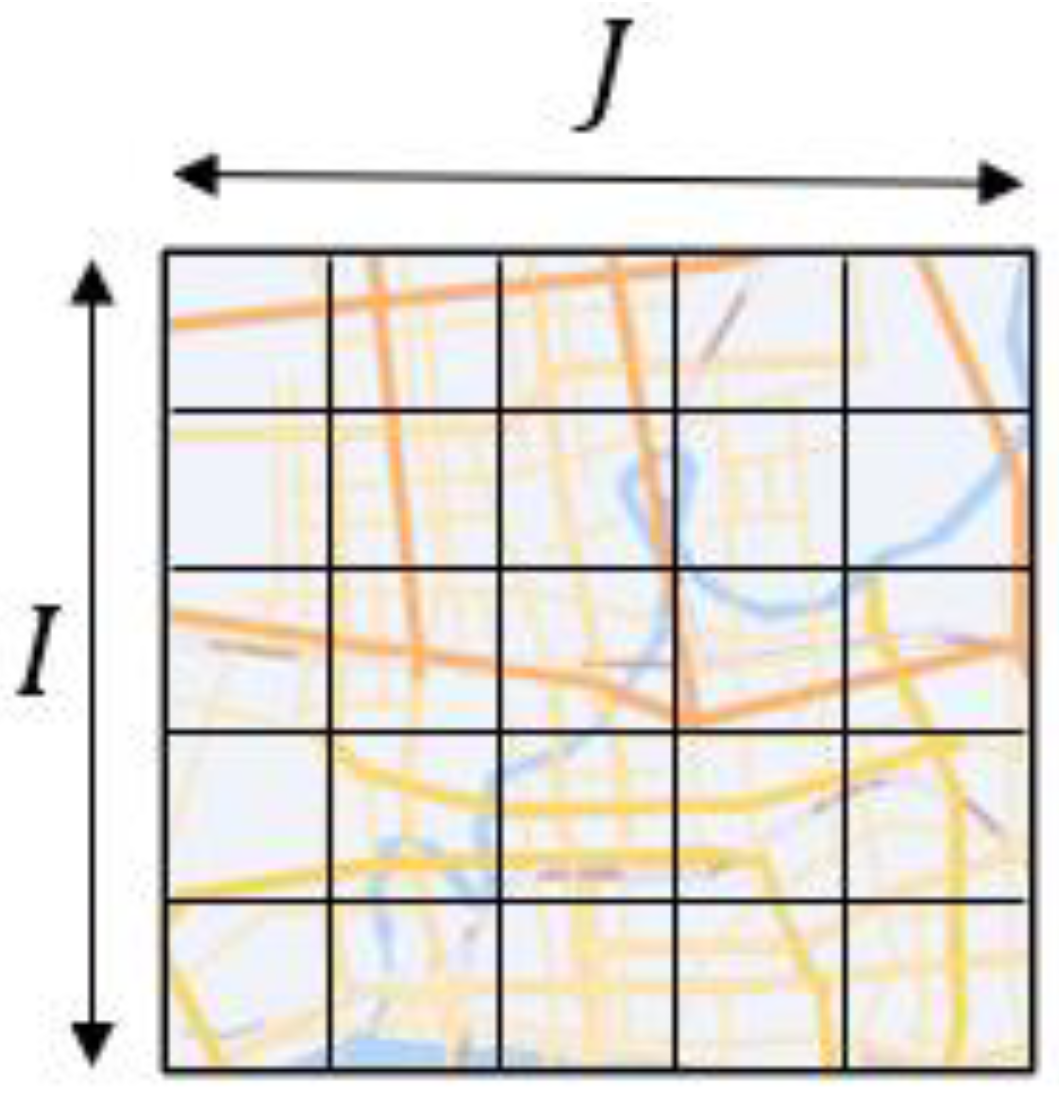

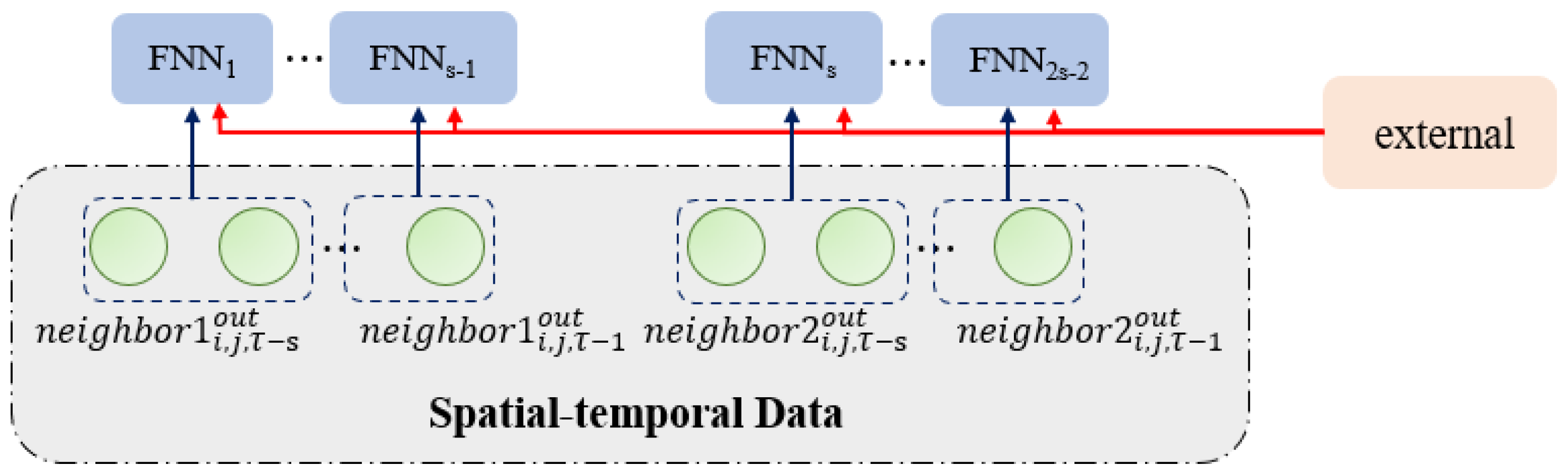

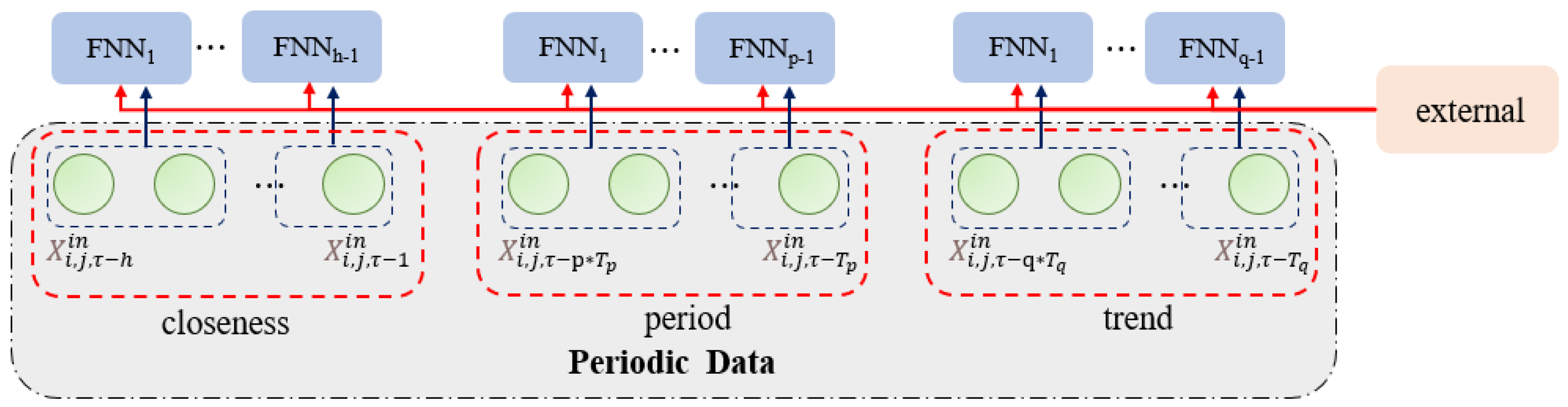

3.3. Problem Definition

In traffic flow prediction, two map representations are commonly used: the topology map structure and the grid map structure. The topology map structure represents intersections as nodes and roads as edges, which makes it suitable for graph convolutional networks (GCNs) to capture spatial information. The grid map structure, on the other hand, divides the map into equally sized areas by longitude and latitude and treats each area as a research object. After a comparative analysis, we found the grid map structure more appropriate for our study. We divided the map into an $I \times J$ grid using longitude and latitude information, as illustrated in Figure 4.

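Let $S_t^{ij}$ be the set of vehicles contained in grid $(i, j)$ at time $t$. Then the inflow of the grid at time $t$ is $x_t^{\text{in}, ij} = |S_t^{ij} \setminus S_{t-1}^{ij}|$. Similarly, the outflow of the grid at time $t$ is $x_t^{\text{out}, ij} = |S_{t-1}^{ij} \setminus S_t^{ij}|$.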
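The sketch below grids vehicle positions by longitude and latitude and counts per-cell inflow and outflow between two consecutive time steps. It assumes that inflow counts vehicles present in a cell at time $t$ but not at $t-1$ (and outflow the reverse), that positions arrive as a hypothetical `{vehicle_id: (lon, lat)}` dictionary, and that every position lies within `bounds`; all function names are illustrative.

```python
from collections import defaultdict


def cell_of(lon, lat, bounds, n_rows, n_cols):
    """Map a (lon, lat) point inside bounds to a (row, col) grid cell.
    Rows follow latitude upwards from lat_min; columns follow longitude."""
    lon_min, lon_max, lat_min, lat_max = bounds
    col = min(int((lon - lon_min) / (lon_max - lon_min) * n_cols), n_cols - 1)
    row = min(int((lat - lat_min) / (lat_max - lat_min) * n_rows), n_rows - 1)
    return row, col


def vehicles_per_cell(positions, bounds, n_rows, n_cols):
    """positions: {vehicle_id: (lon, lat)} at one time step -> {cell: set of ids}."""
    cells = defaultdict(set)
    for vid, (lon, lat) in positions.items():
        cells[cell_of(lon, lat, bounds, n_rows, n_cols)].add(vid)
    return cells


def grid_flows(prev_positions, curr_positions, bounds, n_rows, n_cols):
    """Inflow/outflow of every cell between time t-1 and t (assumed definition)."""
    prev = vehicles_per_cell(prev_positions, bounds, n_rows, n_cols)
    curr = vehicles_per_cell(curr_positions, bounds, n_rows, n_cols)
    inflow = [[0] * n_cols for _ in range(n_rows)]
    outflow = [[0] * n_cols for _ in range(n_rows)]
    for r in range(n_rows):
        for c in range(n_cols):
            before, after = prev.get((r, c), set()), curr.get((r, c), set())
            inflow[r][c] = len(after - before)   # vehicles that entered the cell
            outflow[r][c] = len(before - after)  # vehicles that left the cell
    return inflow, outflow
```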

In addition, we use $\mathbf{X}_t \in \mathbb{R}^{2 \times I \times J}$ to represent the inflow and outflow of all grids at time $t$, and $\mathbf{E}_t$ to represent the external factors at time $t$, which include the weather, weekend, holiday, and time location at time $t$. The traffic flow prediction problem can then be stated as Definition 1.

Definition 1. Given the historical values $\{(\mathbf{X}_\tau, \mathbf{E}_\tau) \mid \tau < t\}$, predict $\mathbf{X}_t$.

Because an FNN is suited to single-output models, we decompose the problem in Definition 1 into $2 \times I \times J$ single-output prediction tasks, as described in Definition 2.

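Definition 2. Given the historical values $\{(\mathbf{X}_\tau, \mathbf{E}_\tau) \mid \tau < t\}$, predict $x_t^{c, ij}$ for a single flow type $c \in \{\text{in}, \text{out}\}$ and a single grid $(i, j)$.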
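The decomposition into $2 \times I \times J$ single-output tasks can be sketched as follows. The input layout `flows[t][c][i][j]`, the fixed-length `history` window, and the name `split_into_tasks` are assumptions, and the sketch uses only each series' own past values, leaving out the external factors and any neighboring-cell inputs the full model may use.

```python
def split_into_tasks(flows, history):
    """Turn a flow tensor into 2 x I x J single-output datasets.

    flows[t][c][i][j]: flow of type c (0 = inflow, 1 = outflow) in grid (i, j)
    at time step t. Each task maps the previous `history` values of one
    series to its next value; external factors are omitted here.
    """
    n_steps = len(flows)
    n_rows, n_cols = len(flows[0][0]), len(flows[0][0][0])
    tasks = {}
    for c in range(2):
        for i in range(n_rows):
            for j in range(n_cols):
                series = [flows[t][c][i][j] for t in range(n_steps)]
                # (input window, target) pairs for this single-output task.
                tasks[(c, i, j)] = [(series[t - history:t], series[t])
                                    for t in range(history, n_steps)]
    return tasks
```

Each entry of the returned dictionary can then be used to train one FNN with the modified WM method.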