SVD-SLAM: Stereo Visual SLAM Algorithm Based on Dynamic Feature Filtering for Autonomous Driving

Abstract

1. Introduction

- (1)

- We propose a dynamic feature point screening method that combines multi-view geometry and instance segmentation as a reference for the dynamic feature point rejection module.

- (2)

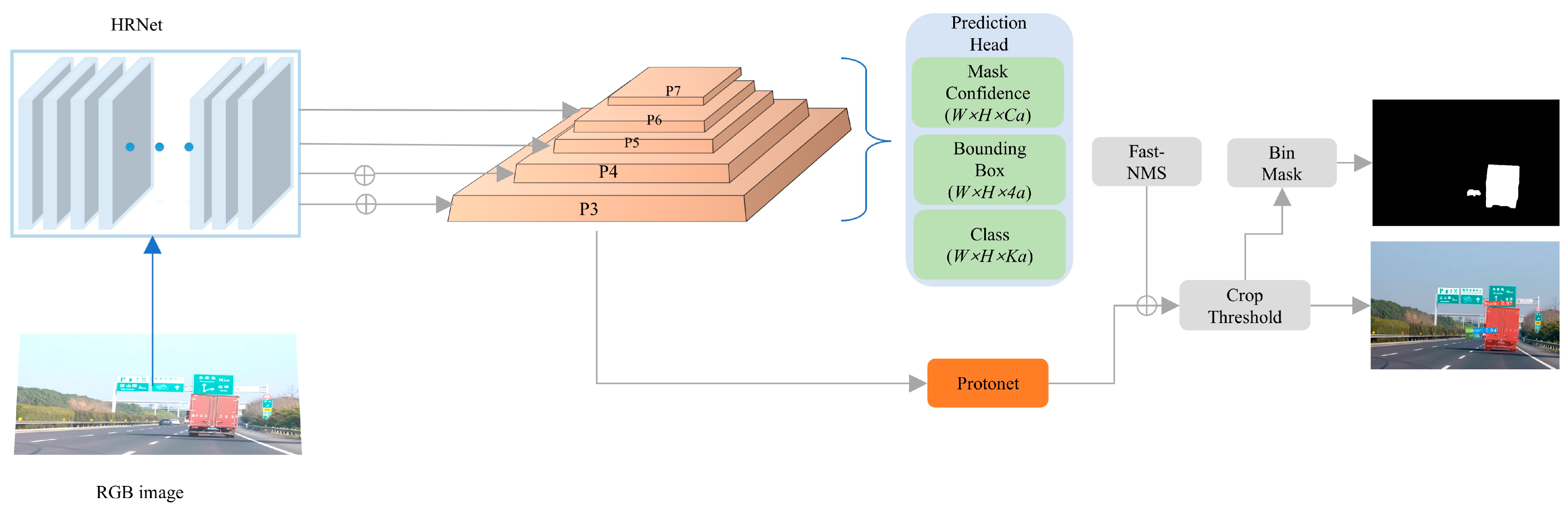

- Incorporating the improved YOLACT++ lightweight instance segmentation network into the SLAM system enables accurate and real-time processing of semantic table bounding boxes of potential dynamic objects.

- (3)

- A SLAM algorithm for autonomous driving scenes based on dynamic feature point filtering is proposed to enhance the localization precision and robustness of self-driving cars in dynamic traffic scenes.
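The screening idea in contribution (1) can be illustrated with a minimal sketch. The decision rule used here is an assumption made for illustration, not the rule confirmed by the paper: a matched point is rejected only if it lies inside an instance mask of a potentially dynamic object and its distance to the epipolar line exceeds a pixel threshold. The function name `screen_dynamic_points`, the `thresh` parameter, and the mask layout are hypothetical.

```python
import numpy as np


def screen_dynamic_points(pts_prev, pts_curr, F, dynamic_mask, thresh=1.0):
    """Return a boolean array that is True where a matched point is kept.

    pts_prev, pts_curr: (N, 2) matched pixel coordinates (x, y).
    F: 3x3 fundamental matrix with x_curr^T F x_prev = 0.
    dynamic_mask: (H, W) bool image, True inside potentially dynamic instances.
    thresh: assumed epipolar distance threshold in pixels.
    """
    ones = np.ones((len(pts_prev), 1))
    # Epipolar lines in the current frame, one row (a, b, c) per match.
    lines = np.hstack([pts_prev, ones]) @ F.T
    num = np.abs(np.sum(lines * np.hstack([pts_curr, ones]), axis=1))
    dist = num / np.linalg.norm(lines[:, :2], axis=1)

    # Look up the mask at the (rounded, clipped) current pixel position.
    h, w = dynamic_mask.shape
    cols = np.clip(np.round(pts_curr[:, 0]).astype(int), 0, w - 1)
    rows = np.clip(np.round(pts_curr[:, 1]).astype(int), 0, h - 1)
    in_mask = dynamic_mask[rows, cols]

    # Assumed rule: reject points that are both semantically suspicious
    # and geometrically inconsistent with the camera motion.
    is_dynamic = in_mask & (dist > thresh)
    return ~is_dynamic
```

Requiring both cues keeps features on parked vehicles, which are inside a mask but geometrically consistent. Other combinations, such as rejecting every point inside a mask, are equally possible.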

2. Related Works

2.1. Dynamic SLAM Based on Optical Flow

2.2. Dynamic SLAM Based on Semantic Segmentation

3. SVD-SLAM

3.1. System Overview

3.2. Dynamic Feature Point Detection

| Algorithm 1 | RANSAC-based method for optimal estimation of the fundamental matrix |
|---|---|
| Require: | Set of matching feature points: |
| Ensure: | |
| 1: | number of iterations |
| 2: | |
| 3: | while () do |
| 4: | randomly select 8 feature points from |
| 5: | eightpoints |
| 6: | compute fundamental matrix |
| 7: | count = 0 |
| 8: | for in do |
| 9: | calculate the distance between point P and the epipolar line |
| 10: | then |
| 11: | count += 1 |
| 12: | Step += 1 |
| 13: | end if |
| 14: | |
| 15: | end for |
| 16: | end while |
| 17: | if then |
| 18: | |
| 19: | end if |
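The overall flow of Algorithm 1 (sample eight matches, fit a fundamental matrix, count the points close to their epipolar lines, keep the best model) can be sketched in NumPy as follows. This is a sketch under stated assumptions, not the authors' implementation: the threshold `thresh`, the iteration count `iters`, the `seed`, the Hartley normalization step, and all function names are illustrative choices, since the corresponding symbols are not recoverable from the listing.

```python
import numpy as np


def normalize(pts):
    """Hartley normalization: zero mean, mean distance sqrt(2)."""
    mean = pts.mean(axis=0)
    scale = np.sqrt(2) / np.linalg.norm(pts - mean, axis=1).mean()
    T = np.array([[scale, 0.0, -scale * mean[0]],
                  [0.0, scale, -scale * mean[1]],
                  [0.0, 0.0, 1.0]])
    homog = np.column_stack([pts, np.ones(len(pts))])
    return (T @ homog.T).T, T


def eight_point(p1, p2):
    """Normalized eight-point estimate of F such that x2^T F x1 = 0."""
    x1, T1 = normalize(p1)
    x2, T2 = normalize(p2)
    # Each correspondence contributes one row of the linear system A f = 0.
    A = np.column_stack([
        x2[:, 0] * x1[:, 0], x2[:, 0] * x1[:, 1], x2[:, 0],
        x2[:, 1] * x1[:, 0], x2[:, 1] * x1[:, 1], x2[:, 1],
        x1[:, 0], x1[:, 1], np.ones(len(x1)),
    ])
    _, _, Vt = np.linalg.svd(A)
    F = Vt[-1].reshape(3, 3)
    # Enforce rank 2 by zeroing the smallest singular value.
    U, S, Vt = np.linalg.svd(F)
    F = U @ np.diag([S[0], S[1], 0.0]) @ Vt
    # Undo the normalization.
    return T2.T @ F @ T1


def epipolar_distance(F, p1, p2):
    """Distance from each point in p2 to the epipolar line F x1."""
    x1 = np.column_stack([p1, np.ones(len(p1))])
    x2 = np.column_stack([p2, np.ones(len(p2))])
    lines = (F @ x1.T).T
    num = np.abs(np.sum(lines * x2, axis=1))
    return num / np.linalg.norm(lines[:, :2], axis=1)


def ransac_fundamental(p1, p2, iters=200, thresh=1.0, seed=0):
    """Return the F with the most inliers and the inlier mask."""
    rng = np.random.default_rng(seed)
    best_F, best_inliers = None, np.zeros(len(p1), dtype=bool)
    for _ in range(iters):
        idx = rng.choice(len(p1), size=8, replace=False)
        F = eight_point(p1[idx], p2[idx])
        inliers = epipolar_distance(F, p1, p2) < thresh
        if inliers.sum() > best_inliers.sum():
            best_F, best_inliers = F, inliers
    return best_F, best_inliers
```

Matches that end up outside the inlier set are candidates for dynamic points, because their motion is inconsistent with the dominant camera motion. In practice a library routine such as OpenCV's `cv2.findFundamentalMat` with the `cv2.FM_RANSAC` flag would likely be preferred.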

3.3. Improved Instance Partitioning Network Based on YOLACT++

3.4. SLAM Algorithm for Dynamic Feature Point Filtering

4. Experimental Section and Analysis

4.1. Traffic Scene Instance Segmentation Effect Analysis

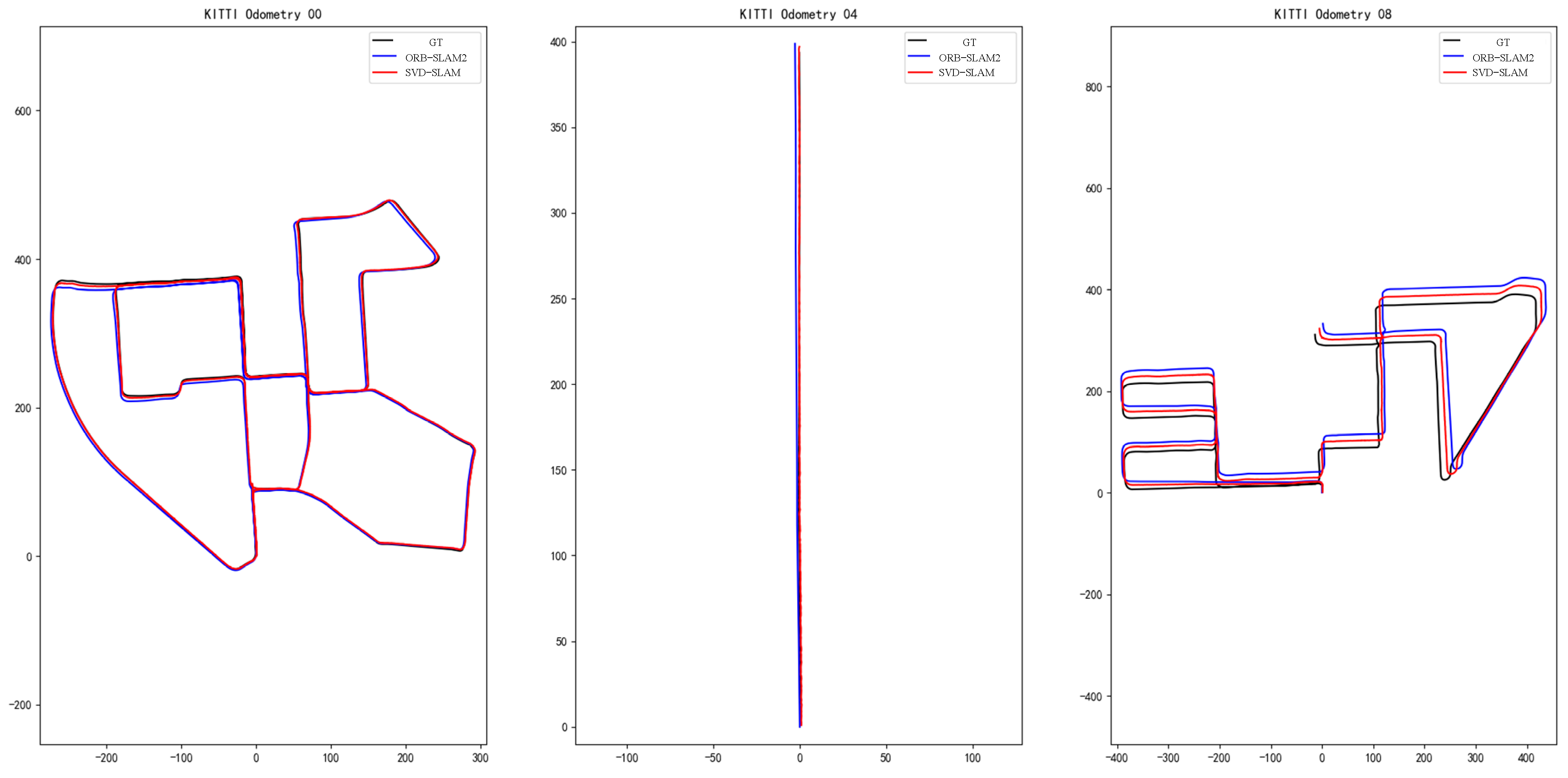

4.2. Comparison with ORB-SLAM2

4.3. Real-Time Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model | Backbone | Model Size/MB | Average Precision (AP)/% | Speed/fps |
|---|---|---|---|---|
| YOLACT (base) | ResNet101+FPN | 192.8 | 28.62 | 34.71 |
| YOLACT-R50 | ResNet50+FPN | 118.4 | 26.45 | 47.54 |
| YOLACT-Road (ours) | HRNet+HRFPN | 118.3 | 32.41 | 46.30 |
| YOLACT-darknet | Darknet53+FPN | 182.4 | 27.61 | 29.32 |

| Sequences | ORB-SLAM2 RMSE/m | ORB-SLAM2 Mean/m | ORB-SLAM2 Std/m | SVD-SLAM RMSE/m | SVD-SLAM Mean/m | SVD-SLAM Std/m | Improvement RMSE/% | Improvement Mean/% | Improvement Std/% |
|---|---|---|---|---|---|---|---|---|---|
| 00 | 0.7662 | 0.7135 | 0.2797 | 0.5351 | 0.5245 | 0.1257 | 30.2 | 26.7 | 55.1 |
| 04 | 0.0132 | 0.0087 | 0.057 | 0.0076 | 0.0068 | 0.0029 | 42.4 | 53.6 | 14.0 |
| 08 | 0.0442 | 0.0358 | 0.0153 | 0.0157 | 0.0128 | 0.0080 | 64.5 | 64.2 | 47.7 |
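The Improvement columns appear to report the relative error reduction of SVD-SLAM with respect to ORB-SLAM2. The formula below is an assumption, since the source does not state it here, but it reproduces the three RMSE improvements in the table above. The helper name `improvement_percent` is hypothetical.

```python
def improvement_percent(baseline, proposed):
    """Relative error reduction of `proposed` over `baseline`, in percent."""
    return (baseline - proposed) / baseline * 100.0


# RMSE values (ORB-SLAM2, SVD-SLAM) for sequences 00, 04 and 08 from the table above.
rmse = {"00": (0.7662, 0.5351), "04": (0.0132, 0.0076), "08": (0.0442, 0.0157)}

for seq, (orb, svd) in rmse.items():
    print(seq, round(improvement_percent(orb, svd), 1))
# prints:
# 00 30.2
# 04 42.4
# 08 64.5
```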

| Sequences | ORB-SLAM2 RMSE/m | ORB-SLAM2 Mean/m | ORB-SLAM2 Std/m | SVD-SLAM RMSE/m | SVD-SLAM Mean/m | SVD-SLAM Std/m | Improvement RMSE/% | Improvement Mean/% | Improvement Std/% |
|---|---|---|---|---|---|---|---|---|---|
| 00 | 0.0146 | 0.0117 | 0.067 | 0.0088 | 0.0100 | 0.0040 | 39.6 | 10.4 | 54.8 |
| 04 | 0.0231 | 0.0115 | 0.0204 | 0.0132 | 0.0080 | 0.0081 | 42.7 | 30.5 | 60.1 |
| 08 | 0.479 | 0.3910 | 0.0372 | 0.0179 | 0.0150 | 0.0121 | 62.4 | 61.7 | 67.4 |

| System | Instance Segmentation/ms | ORB Feature Extraction/ms | Dynamic Point Detection/ms | Tracking Module/ms | Overall/ms |
|---|---|---|---|---|---|
| ORB-SLAM2 | / | 17.2 | / | 24.7 | 41.9 |
| SVD-SLAM | 6.9 | 17.2 | 18.1 | 24.7 | 66.78 |
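To relate these per-frame latencies to a frame rate, the overall times can be converted to frames per second. The short sketch below assumes that the stages run sequentially, so that the overall time is the per-frame latency. The dictionary keys and the helper `fps` are illustrative names.

```python
# Per-stage times for SVD-SLAM in milliseconds, taken from the table above.
stages_ms = {
    "instance_segmentation": 6.9,
    "orb_feature_extraction": 17.2,
    "dynamic_point_detection": 18.1,
    "tracking_module": 24.7,
}


def fps(latency_ms):
    """Frames per second for a given per-frame latency in milliseconds."""
    return 1000.0 / latency_ms


print(round(fps(41.9), 1))   # ORB-SLAM2 overall: 23.9
print(round(fps(66.78), 1))  # SVD-SLAM overall: 15.0
print(round(sum(stages_ms.values()), 1))  # sum of the listed stages: 66.9
```

Under this assumption the added segmentation and dynamic point detection stages reduce the throughput from roughly 24 to roughly 15 frames per second. The listed stages sum to 66.9 ms, close to the reported overall value of 66.78 ms.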


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tian, L.; Yan, Y.; Li, H. SVD-SLAM: Stereo Visual SLAM Algorithm Based on Dynamic Feature Filtering for Autonomous Driving. Electronics 2023, 12, 1883. https://doi.org/10.3390/electronics12081883