Abstract

Person re-identification identifies specific pedestrians across cameras and overcomes the limited field of view of a single fixed camera. It enables trajectory analysis of target pedestrians, which facilitates case analysis by public security personnel. Occlusion, blur, posture changes, and similar factors make person re-identification a challenging problem, and accuracy depends largely on whether sufficient pedestrian information can be obtained. Most existing deep learning methods consider only a single type of feature, which leads to information loss and a single, limited feature representation. This paper proposed MSMG-Net, a dual-branch person re-identification algorithm with a multi-scale branch and a multi-granularity branch, to obtain complete pedestrian information. The multi-scale branch extracted features from different layers and fused them through a bidirectional cross-pyramid structure, compensating for the information lost when only high-level semantic features are used. The multi-granularity branch combined a convolutional neural network (CNN) with a Transformer module based on an improved self-attention mechanism to obtain global information, and it horizontally segmented feature maps to obtain local information. Combining global and local features addressed the problem of single feature expression. The experimental results show that MSMG-Net reached an mAP/Rank-1 of 88.6%/96.3% on the Market-1501 dataset and 80.1%/89.9% on the DukeMTMC-ReID dataset, a significant improvement over many state-of-the-art methods.

1. Introduction

In recent years, with the development of society, public safety has become increasingly important, and video surveillance plays a crucial role in security. Surveillance cameras produce a large number of video files every day; relying on personnel to review this footage is inefficient and makes it difficult to locate and track target pedestrians. Moreover, pedestrian images captured by surveillance cameras have low resolution, so face recognition often cannot determine identity, and the overall appearance of the pedestrian must be used to locate the target. Person re-identification has therefore become an important research topic in video surveillance. It is an image retrieval technology that recognizes specific pedestrians across cameras: it extracts features from a given pedestrian image and ranks candidate images by similarity to retrieve all images of that pedestrian captured by other devices. Person re-identification can, to a certain extent, overcome the limited field of view of a single fixed camera. Combined with other computer vision technologies, such as pedestrian detection, face recognition, and crowd density estimation, it can form a pedestrian analysis system with critical applications in public security. In real scenes, occlusion, illumination changes, and differences in camera viewpoint [1,2,3] cause the appearance and posture of pedestrians to vary between images, which leads to insufficient feature extraction and makes subsequent similarity measurement difficult. All these problems reduce the accuracy of the results. Therefore, obtaining more comprehensive pedestrian features is a major difficulty in person re-identification research.

For person re-identification, the main task is to extract more discriminative feature representations from pedestrian images. According to their learning strategy, person re-identification models can be divided into classification and verification models. Classification models generally use the instance loss [4] as the loss function. Verification models take two images at a time, use a Siamese neural network to extract their feature representations [5], fuse the features, and then compute a binary (same or different identity) loss. According to the feature extraction method, existing approaches can also be divided into global and local feature representation learning. Global feature representation learning extracts one global feature representation for each pedestrian image. Because early studies regarded person re-identification as an image classification problem, most early methods learned global features. However, in real scenes the pedestrian images captured by cameras are often incomplete, and noisy regions in an image interfere significantly with the global features. Meanwhile, because pedestrian posture changes, the poses captured by different cameras are inconsistent, which can prevent global features from matching; local features have therefore been widely applied [6,7,8]. Global features capture the overall appearance, while local features capture image details, so combining the two achieves better results.
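To make the verification setting concrete, the following is a minimal NumPy sketch of a pairwise (binary) loss. It assumes a toy one-layer embedding shared by both images, feature fusion by squared difference, and a logistic classifier on the fused feature; the names `embed`, `verification_loss`, `W`, `v`, and `b` are hypothetical and do not come from the cited works. A classification model would instead put an identity classifier on the feature of a single image.

```python
import numpy as np

def embed(x, W):
    """Toy shared embedding: one linear layer followed by ReLU.
    Both images of a pair go through the same W, which is what makes it Siamese."""
    return np.maximum(x @ W, 0.0)

def verification_loss(x1, x2, same, W, v, b):
    """Binary cross-entropy on a 'same identity?' prediction for one image pair.

    same : 1 if the two images show the same pedestrian, else 0
    v, b : weights and bias of a hypothetical logistic classifier on the fused feature
    """
    f1, f2 = embed(x1, W), embed(x2, W)
    fused = (f1 - f2) ** 2                         # fusion by squared difference (assumption)
    p = 1.0 / (1.0 + np.exp(-(fused @ v + b)))     # predicted probability of a match
    eps = 1e-12                                    # avoids log(0)
    return -(same * np.log(p + eps) + (1 - same) * np.log(1.0 - p + eps))

rng = np.random.default_rng(0)
W = 0.1 * rng.standard_normal((64, 16))            # 64-dim toy "images" -> 16-dim features
v, b = rng.standard_normal(16), 0.0
x1, x2 = rng.standard_normal(64), rng.standard_normal(64)
print(verification_loss(x1, x2, 1, W, v, b), verification_loss(x1, x2, 0, W, v, b))
```

Because the fusion is symmetric, swapping the two images leaves the loss unchanged, and two identical images give a fused feature of zero.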

In convolutional neural networks, deep layers respond mainly to semantic features, while shallow layers respond mainly to low-level image features. However, deep features retain little spatial information, which makes fine details hard to capture, whereas shallow features contain little semantic information, which hinders image classification. Therefore, combining deep and shallow features can prevent the loss of crucial information in the image [9,10] and better meet the needs of person re-identification.

In practice, CNN operators are limited by their local receptive fields. Obtaining global information requires stacking many layers, but information gradually fades as the number of layers increases. To address this, researchers have applied the Transformer to computer vision [11,12]. The Transformer contains multi-head self-attention mechanisms, which can effectively capture global information. Furthermore, multi-head self-attention replaces operations such as convolution and pooling, which makes image information loss less likely and can enhance the expressive ability of the model. However, because of the low computational efficiency of the Transformer module, it cannot completely replace CNNs: images and videos carry far more information than text, so the computational cost of processing images with a Transformer remains very high.

To address these problems in person re-identification, this paper proposed a new algorithm. The main contributions are as follows:

- We proposed a person re-identification network based on a dual-branch structure, called MSMG-Net. The multi-scale branch made features from different layers complement each other, retaining semantic information while avoiding the loss of details. The multi-granularity branch obtained output features of varying granularity, taking into account both global and local information and thereby increasing the diversity of features.

- In the multi-scale branch, the bidirectional cross-feature pyramid network fused features to obtain the shallow position supervision feature and the deep semantic supervision feature. It also incorporated a dual attention mechanism over position and channel so that the network paid more attention to the identity characteristics of pedestrians.

- In the multi-granularity branch, we combined CNN and Transformer to capture long-distance dependencies between image pixels and obtain global features, and we obtained local features by horizontally splitting the feature map. We also improved the multi-head self-attention mechanism in the Transformer by adding relative position coding in the width and height directions. In this way, the Transformer obtains more accurate relative position information and improves its feature expression ability while capturing long-distance dependencies.

The proposed algorithm adopted a dual-branch structure that combined multi-scale and multi-granularity branches. In the multi-scale branch, we proposed a bidirectional cross-pyramid structure combined with an attention mechanism to fuse multi-scale features. In the multi-granularity branch, CNN and Transformer were combined, the multi-head self-attention mechanism was improved by adding relative position encoding in the width and height dimensions, and horizontal segmentation was used to obtain local features. By considering more complete feature categories, the method achieved more effective feature extraction and higher accuracy.

The sections of this article are arranged as follows:

- Section 1 introduces the research background and value of person re-identification algorithms, then their main ideas and technical routes, and finally summarizes the research content and innovations of this paper.

- Section 2 reviews person re-identification algorithms based on traditional methods and on deep learning, then introduces the ideas behind classic networks, and finally analyzes the shortcomings of existing algorithms and directions for improvement.

- Section 3 presents the structure of the dual-branch person re-identification algorithm based on multiple feature representations. The network consists of two parts: a multi-scale branch and a multi-granularity branch. The multi-scale branch compensates for the loss of feature information caused by using only high-level semantic features, and the multi-granularity branch makes the extracted pedestrian information more comprehensive.

- Section 4 introduces the person re-identification datasets, evaluation metrics, and experimental configuration, then presents and analyzes ablation and comparative experiments, and finally visualizes the experimental results to verify the algorithm's performance.

- Section 5 summarizes the main research content and innovations of the paper, then analyzes the algorithm's shortcomings and future research directions.

2. Related Work

2.1. Person Re-Identification Based on Feature Representation Learning

Traditional person re-identification methods extract hand-crafted pedestrian features in the feature extraction stage, using methods such as the Scale-Invariant Feature Transform (SIFT) [13], Histogram of Oriented Gradients (HOG) [14], and Integral Channel Features (ICF) [15], and then perform distance metric learning on the extracted features. Traditional methods work well on some small datasets but have great limitations and are unsuitable for person re-identification in complex scenes. At present, deep learning achieves excellent performance across computer vision research. In person re-identification, deep learning methods extract more accurate and comprehensive pedestrian features and thus obtain better similarity measurements, so that all images of a designated pedestrian across devices can be found more accurately, breaking through the limitations of traditional methods. Early deep learning methods for person re-identification relied on global information, treating the pedestrian as a whole to obtain global features. Some researchers proposed a cross-image representation (CIR) method, which feeds an image pair into a Siamese neural network [5], obtains the output feature of each image, and then compares them to determine whether the two images belong to the same pedestrian [16]. Zheng et al. [4] proposed obtaining global features by treating the pedestrian as a whole and used pedestrian ID labels to compute the final loss, thereby classifying pedestrians.

Deep learning methods based on global features have achieved some success. However, person re-identification data suffer from severe occlusion, pose distortion, illumination differences, and other problems in key areas. These lead to the loss of crucial information in the global features and prevent high accuracy. Researchers therefore introduced local features so that the network focuses more on critical local areas of the image. The main local-feature methods include image segmentation, skeleton key point localization, and pose correction [17]. Zheng et al. [18] proposed a method to locate key points in pedestrian images: it first used a pose estimation model to compute the key points of the pedestrian and then used an affine transformation to align the same key points across images. Zhao et al. [19] proposed SpindleNet, which extracted local features containing critical information about the human body and generated regions of interest based on this information. However, most of these methods based on local feature alignment require an additional skeleton key point or pose estimation model to be trained, which increases cost and makes them unsuitable for large-scale person re-identification applications. Sun et al. [6] proposed the Part-based Convolutional Baseline (PCB) network, which horizontally split the output feature map into six regions, together with a Refined Part Pooling (RPP) strategy to address the inconsistency of features within parts caused by horizontal segmentation. This method adds little computation, but a network with only local features lacks global information, and too many image blocks further weaken it. Fu et al. [7] proposed the Horizontal Pyramid Matching (HPM) algorithm, which used multiple horizontal splitting structures to fully exploit local pedestrian information. However, its many branches made the network model difficult to converge during training. Wang et al. [8] proposed the Multiple Granularity Network (MGN), which consisted of three branches that obtained global features and local features of different granularities, considering both global and local information. However, a convolutional neural network loses some of the original image information during forward propagation: although high-level features contain the richest semantic information, some detailed features of the original image are ignored. Therefore, selecting semantic features from only a single level leads to insufficient features.

Based on the above, this paper proposes a dual-branch network that includes a multi-scale branch and a multi-granularity branch. The multi-scale branch combines features from different layers and considers both spatial and semantic information. The multi-granularity branch considers both global information and local details. Combining them increases the diversity of features and yields complete pedestrian identity information.

2.2. Transformer Applied to Computer Vision

Recently, researchers have proposed new methods to address problems encountered in image processing. Dosovitskiy et al. [11] applied the Transformer from natural language processing to computer vision for the first time. With a dynamic, global receptive field, the model performs well in image classification and can effectively learn dependencies between different locations in an image. In 2021, Microsoft Research Asia proposed the Swin Transformer [12]. Its shifted windows and hierarchical design effectively address the problem that different feature regions cannot establish long-distance dependencies on global information. The Transformer module shows excellent potential in various visual tasks and performs well on datasets for multiple tasks. However, it lacks inductive bias and has high computational complexity, so it must be pre-trained on a large dataset to achieve excellent results. Therefore, a Transformer module alone cannot meet the needs of person re-identification.

Based on the above, this paper fused CNN and Transformer to obtain global information and reweighted the features. While retaining the fast inference speed and feature extraction ability of the CNN, the global receptive field of the Transformer was used to enhance the extraction of global features and improve the algorithm's accuracy.

3. Proposed Method

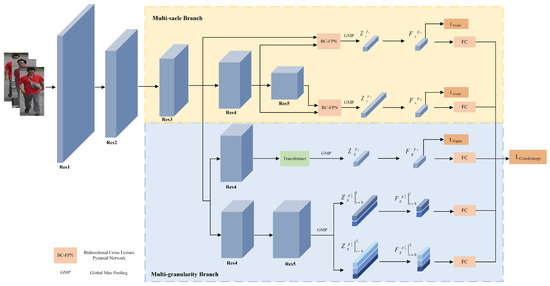

The proposed algorithm took ResNet50 as the backbone network and adopted a dual-branch structure consisting of a multi-scale branch and a multi-granularity branch. The network structure is shown in Figure 1. In the multi-scale branch, we used the bidirectional cross-feature pyramid network to fuse features and obtain multi-scale pedestrian features. In the multi-granularity branch, we obtained local features by horizontally splitting the feature map; meanwhile, we combined CNN and Transformer modules to establish connections between distant pixels and obtain global features using the improved multi-head self-attention mechanism. Finally, the network was trained with a combination of the cross-entropy loss and the triplet loss.

Figure 1.

Network structure of this paper.

3.1. Multi-Scale Branch

The high-level features of a convolutional neural network contain the richest semantic information, but detailed features of the original image are lost during forward propagation. Shallow features contain rich image details but also introduce more noise, which reduces the discriminability of features. Middle-layer features strike a balance between the two and compensate for the insufficient feature representation caused by using only the semantic features of a single layer [9]. Therefore, this paper proposed the multi-scale branch, which adopts the bidirectional cross-feature pyramid network to fuse features from different layers and avoid the loss of feature information.

3.1.1. Bidirectional Cross Feature Pyramid Network

Inspired by the Bidirectional Feature Pyramid Network (BiFPN) [20], the bidirectional cross-feature pyramid network proposed in this paper added bidirectional cross-scale connections to the original Feature Pyramid Network (FPN). Unlike FPN, it aimed to integrate features of different scales into the final match, so only the fused features on the deeper side were selected as the output, and an attention module was introduced to improve network performance. Because the shallowest features contain too little semantic information, we fused the output features of Res3, Res4, and Res5: the outputs of Res3 and Res4 were fused to obtain the shallow position supervision feature, and the outputs of Res4 and Res5 were fused to obtain the deep semantic supervision feature. The network structure is shown in Figure 2.

Figure 2.

Bidirectional cross-feature pyramid network.

First, the shallower and deeper features were obtained, and their channel dimension was converted to 256. Because the two features have different resolutions, a cross-scale connection between the two layers was required. For feature fusion, we adopted global max pooling with a pooling kernel to downsample and nearest-neighbor interpolation to upsample. Meanwhile, as shown by the curved arrow in Figure 2, the original feature map was added to the output of each FPN layer, providing additional discriminative information for the output feature. Because different feature maps contribute differently to the output during fusion, this paper used the fast normalized fusion method to assign a weight to each feature input. The calculation formulas are shown in Equations (1) and (2).

where , , , , and are additional weights for each feature input and parameter is set to ensure numerical stability.

Finally, the bidirectional cross-feature pyramid network took the features on the deeper side as the output and restored their channel dimension to that of the deeper input. The shallow position supervision feature and the deep semantic supervision feature obtained from the multi-scale branch then worked together to make up for the insufficient feature representation caused by using only a single-layer feature.
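The sketch below illustrates, in NumPy, the general BiFPN-style fast normalized fusion on which Equations (1) and (2) are based, together with the two resampling operations. Assumptions: feature maps are (C, H, W) arrays, adjacent levels differ by a factor of 2 in resolution, downsampling is 2×2 max pooling with stride 2, the weights are kept non-negative with ReLU, and ε = 1e-4 (the value used in the BiFPN paper, not stated in this text). The two-step wiring in the usage lines is illustrative, not the exact graph of Figure 2.

```python
import numpy as np

def fast_normalized_fusion(inputs, weights, eps=1e-4):
    """Weighted sum  sum_i w_i * I_i / (eps + sum_j w_j)  with w_i = ReLU(raw weight)."""
    w = np.maximum(np.asarray(weights, dtype=float), 0.0)   # keep weights non-negative
    w = w / (eps + w.sum())                                  # normalize without a softmax
    return sum(wi * x for wi, x in zip(w, inputs))

def upsample_nearest(x, factor=2):
    """Nearest-neighbor upsampling of a (C, H, W) map by an integer factor."""
    return x.repeat(factor, axis=1).repeat(factor, axis=2)

def downsample_max(x, k=2):
    """k x k max pooling with stride k on a (C, H, W) map whose H and W are divisible by k."""
    c, h, w = x.shape
    return x.reshape(c, h // k, k, w // k, k).max(axis=(2, 4))

# Illustrative two-level fusion; channels already reduced to 256 as in the text.
rng = np.random.default_rng(0)
shallow = rng.standard_normal((256, 16, 8))    # hypothetical shallower map (higher resolution)
deep = rng.standard_normal((256, 8, 4))        # hypothetical deeper map (lower resolution)
top_down = fast_normalized_fusion([shallow, upsample_nearest(deep)], [1.0, 1.0])
deep_out = fast_normalized_fusion([deep, downsample_max(top_down)], [1.0, 1.0])
print(deep_out.shape)  # (256, 8, 4)
```

In a trained network the fusion weights are learnable parameters; normalizing by their sum instead of a softmax is what makes this fusion "fast".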

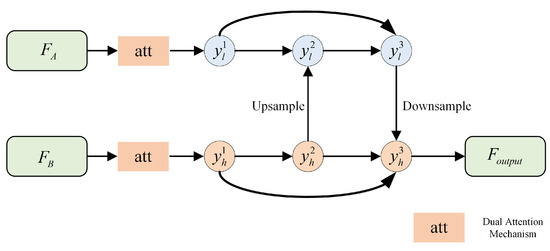

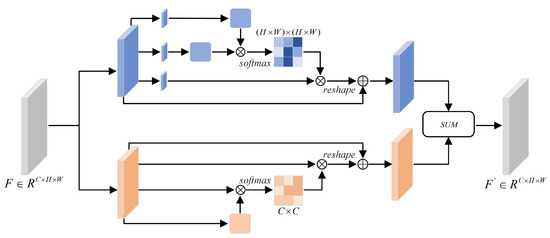

3.1.2. Dual Attention Mechanism

In real scenes, pedestrians may be blocked by various obstructions, so the network should pay more attention to the pedestrian's body parts. The attention mechanism uses the correlation between features to help the model focus on more relevant features and reduce feature ambiguity in the final task. In person re-identification, the attention mechanism assigns high weights to areas strongly related to pedestrian information and low weights to irrelevant information, which helps distinguish pedestrians with different identities. We therefore added an attention mechanism to the multi-scale branch: before features are input into the bidirectional cross-feature pyramid network for fusion, they are reweighted by the attention mechanism. Inspired by Fu et al. [21], we used the dual attention mechanism shown in Figure 3, where the blue part is the Position Attention Module (PAM) and the orange part is the Channel Attention Module (CAM). Combining the two effectively addresses the problems of local feature dependency and spatial mismatch in the image.

Figure 3.

Dual attention mechanism.

In the position attention module, the input feature was fed into three branches, each producing a new feature map through a convolution. The output feature map of the first branch was transposed and multiplied by the feature map of the second branch, and the result was passed through a softmax layer to obtain the spatial attention map. The feature map of the third branch was then multiplied by the transpose of the spatial attention map, and the result was reshaped to the size of the input feature map. Finally, it was multiplied by a learnable parameter and added to the input feature map. The calculation is shown in Equation (3).

where is the output of the attention module, F is the input feature map, and is initialized to 0 and updated gradually.
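The following NumPy sketch follows the description above and the position attention module of DANet [21]; it illustrates the computation rather than reproducing Equation (3) itself. Assumptions: the three convolutions are 1×1 convolutions written as matrix products over the channel axis (which is what a 1×1 convolution computes, ignoring bias), `Wb` and `Wc` reduce the channels to an arbitrary C', and the learnable scale, called `alpha` here, is passed in as a number.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)      # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def position_attention(F, Wb, Wc, Wd, alpha):
    """Position attention on a (C, H, W) feature map F.

    Wb, Wc : (C', C) projections for the first two branches
    Wd     : (C, C) projection for the third branch
    alpha  : learnable scale, initialized to 0 during training
    """
    C, H, W = F.shape
    X = F.reshape(C, H * W)          # N = H * W pixel positions
    B, Cb, D = Wb @ X, Wc @ X, Wd @ X
    S = softmax(B.T @ Cb, axis=-1)   # (N, N) spatial attention map
    out = D @ S.T                    # (C, N): each position aggregates all positions
    return alpha * out.reshape(C, H, W) + F

rng = np.random.default_rng(0)
F = rng.standard_normal((32, 6, 4))
Wb, Wc = rng.standard_normal((4, 32)), rng.standard_normal((4, 32))
Wd = rng.standard_normal((32, 32))
print(position_attention(F, Wb, Wc, Wd, alpha=0.5).shape)  # (32, 6, 4)
```

With `alpha` initialized to 0, the module starts as an identity mapping and gradually learns how much spatial context to add.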

The channel attention module took the original feature map directly as input, multiplied it by its transpose, and obtained the channel attention map through a softmax layer. Finally, it multiplied the transpose of the input feature map by the attention map and multiplied the result by a learnable parameter. The calculation is shown in Equation (4).

where is the output of the attention module, F is the input feature, and is initialized to 0 and updated gradually.

Finally, the outputs of the two modules were fused to obtain the final output feature map. Applying the attention mechanism before the bidirectional cross-feature pyramid network fuses the feature maps retains more useful information and avoids interference from irrelevant features.
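The channel attention module and the final fusion can be sketched in NumPy in the same way. This follows DANet [21] and assumes two details not spelled out above: the scaled result is added back to the input (consistent with the learnable parameter starting at 0), and the PAM and CAM outputs are fused by element-wise summation, as in DANet. The scale parameter is called `beta` here for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)      # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def channel_attention(F, beta):
    """Channel attention on a (C, H, W) map F; no learned projections are used."""
    C, H, W = F.shape
    X = F.reshape(C, H * W)
    A = softmax(X @ X.T, axis=-1)    # (C, C) channel attention map
    out = A @ X                      # each channel becomes a weighted mix of all channels
    return beta * out.reshape(C, H, W) + F

def fuse_dual_attention(pam_out, cam_out):
    """Combine the two module outputs; element-wise sum is an assumption borrowed from DANet."""
    return pam_out + cam_out

rng = np.random.default_rng(0)
F = rng.standard_normal((32, 6, 4))
cam_out = channel_attention(F, beta=0.5)
print(fuse_dual_attention(F, cam_out).shape)  # (32, 6, 4); F stands in for the PAM output
```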

3.2. Multi-Granularity Branch

Because real surveillance scenes are complex, using only global features may ignore key details, while using only local features loses the overall perception of global information. Therefore, this paper proposed the multi-granularity branch to obtain both global and local pedestrian features and avoid the loss of detailed features.

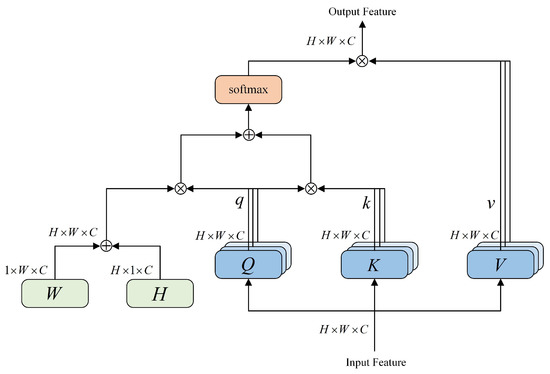

3.2.1. Global Feature Branch

A convolutional neural network acquires information through convolution and pooling operations, but its local receptive field makes it difficult to capture global, long-range information. This paper therefore combined the CNN with a Transformer based on the improved multi-head self-attention mechanism: a Transformer module with a depth of 8 was connected after the CNN, took the output feature of Res4 as input, and reweighted it to obtain the global feature of pedestrians. The Transformer module allows different feature regions to establish long-distance dependencies on global information, so that the model can accurately identify pedestrian information during training.

The Transformer structure mainly comprises multi-head self-attention and a feedforward neural network. The feedforward network consists of linear transformations and a ReLU activation function, which enhance its nonlinear representation ability. Multi-head self-attention consists of multiple self-attention modules. Self-attention is a special case of the attention mechanism in which a sequence attends to itself to extract the semantic dependencies between its parts. Its input is the feature map output by the CNN, and a nonlinear function produces a weight for each pixel. During training, these weights determine how much each pixel in the feature map contributes, so that the model attends to the relationships between features, which improves its generalization ability. Attention maps a query and a set of key-value pairs to an output: the weight assigned to each value is determined by the query and the corresponding key. The calculation formula is shown in Equation (5).

where Q, K, and V are query, key, and value, respectively, and is the dimension coefficient.

The multi-head self-attention mechanism runs multiple self-attention modules in parallel. Its calculation formula is shown in Equation (6).

where . We set .
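Equations (5) and (6) correspond to the standard scaled dot-product and multi-head attention. A NumPy sketch, assuming the tokens are the H×W positions of a feature map flattened into an (N, d) matrix, that each head's projection is a slice of a shared d×d matrix (equivalent to separate per-head matrices), and that d is divisible by the number of heads; the head count is left as the parameter `h`, and the value 4 in the usage lines is hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)      # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = Q.shape[-1]
    return softmax(Q @ K.T / np.sqrt(d_k)) @ V

def multi_head_self_attention(X, Wq, Wk, Wv, Wo, h):
    """X: (N, d) tokens; Wq, Wk, Wv, Wo: (d, d) projections; h heads of size d // h."""
    d = X.shape[1]
    d_k = d // h
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    heads = [attention(Q[:, i * d_k:(i + 1) * d_k],
                       K[:, i * d_k:(i + 1) * d_k],
                       V[:, i * d_k:(i + 1) * d_k]) for i in range(h)]
    return np.concatenate(heads, axis=-1) @ Wo    # Concat(head_1, ..., head_h) W^O

# Tokens from a small hypothetical (C, H, W) feature map: one token per pixel.
rng = np.random.default_rng(0)
fmap = rng.standard_normal((64, 6, 4))
X = fmap.reshape(64, -1).T                          # (N = 24, d = 64)
Wq, Wk, Wv, Wo = (rng.standard_normal((64, 64)) / 8 for _ in range(4))
Y = multi_head_self_attention(X, Wq, Wk, Wv, Wo, h=4)
print(Y.shape)  # (24, 64)
```

Every output token is a weighted combination over all N positions, which is how the module gives each pixel a global receptive field.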

The self-attention layer does not encode the positions of pixels in the two-dimensional image, which limits its expressive ability in visual tasks. Therefore, when constructing the multi-head attention layer, we added two-dimensional relative position coding to improve the ability of the multi-head self-attention mechanism to understand two-dimensional images. Its structure is shown in Figure 4. The two-dimensional relative position coding treated the two-dimensional features of the image as a combination of vectors and constructed vectors H and W to represent relative positions in the height and width directions, respectively. In this way, relative position information is injected into each pixel when the two-dimensional features are processed. Each attention head used a pair of trainable two-dimensional relative position codes whose values depended on the distance between pixels and were updated continually during training to enhance the understanding of two-dimensional features.
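One common way to realize two-dimensional relative position coding is to learn one embedding per height offset and one per width offset and add a query-position term to the attention logits. The single-head NumPy sketch below follows that scheme; the offset-indexed tables `rel_h` and `rel_w`, summing the two embeddings, and scaling the position term by √d are assumptions for illustration, not details given in the text.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)      # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def rel_pos_attention_2d(q, k, v, H, W, rel_h, rel_w):
    """Single-head self-attention over an H x W map with 2D relative position terms.

    q, k, v : (H*W, d) per-pixel vectors in row-major order
    rel_h   : (2H-1, d) trainable embeddings for height offsets -(H-1) .. H-1
    rel_w   : (2W-1, d) trainable embeddings for width offsets  -(W-1) .. W-1
    """
    d = q.shape[-1]
    rows, cols = np.divmod(np.arange(H * W), W)        # (row, col) of every pixel
    dy = rows[None, :] - rows[:, None] + (H - 1)       # offset j - i, shifted to be >= 0
    dx = cols[None, :] - cols[:, None] + (W - 1)
    r = rel_h[dy] + rel_w[dx]                          # (N, N, d): depends only on the offset
    content = q @ k.T                                  # content-content logits
    position = np.einsum("id,ijd->ij", q, r)           # content-position logits
    return softmax((content + position) / np.sqrt(d)) @ v

rng = np.random.default_rng(0)
H, W, d = 6, 4, 16
q, k, v = (rng.standard_normal((H * W, d)) for _ in range(3))
rel_h = 0.1 * rng.standard_normal((2 * H - 1, d))
rel_w = 0.1 * rng.standard_normal((2 * W - 1, d))
out = rel_pos_attention_2d(q, k, v, H, W, rel_h, rel_w)
print(out.shape)  # (24, 16)
```

When both tables are zero, the function reduces to plain scaled dot-product attention, so the position terms act purely as a learned, offset-dependent bias on the attention weights.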

Figure 4.

The improved multi-head self-attention mechanism with two-dimensional relative position coding.

Finally, during testing, the multi-scale features obtained from the multi-scale branch and the global and local features obtained from the multi-granularity branch were concatenated. Fusing the information of the dual-branch structure yielded a more complete and richer pedestrian feature representation.
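At test time, the concatenated descriptor of a query is compared against the gallery to rank candidates. A minimal NumPy sketch, assuming the branch outputs are 1-D vectors, that the concatenated descriptor is L2-normalized (a common practice, not stated in the text), and that gallery images are ranked by Euclidean distance; the function names are hypothetical.

```python
import numpy as np

def descriptor(branch_features):
    """Concatenate multi-scale, global, and local features into one L2-normalized vector."""
    v = np.concatenate(branch_features)
    return v / (np.linalg.norm(v) + 1e-12)

def rank_gallery(query, gallery):
    """Indices of gallery rows sorted from most to least similar (smallest distance first)."""
    dist = np.linalg.norm(gallery - query[None, :], axis=1)
    return np.argsort(dist, kind="stable")

q = descriptor([np.array([1.0, 0.0]), np.array([0.0, 0.0])])  # toy two-branch query
gallery = np.stack([
    descriptor([np.array([0.0, 1.0]), np.array([0.0, 0.0])]),
    descriptor([np.array([1.0, 0.0]), np.array([0.0, 0.0])]),
    descriptor([np.array([0.6, 0.8]), np.array([0.0, 0.0])]),
])
print(rank_gallery(q, gallery))  # [1 2 0]
```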

3.2.2. Local Feature Branch

To retain more details, the downsampling stride of the Res5 stage was set to 1 in the local feature branch, so that the output feature maps of Res4 and Res5 had the same resolution. The output feature map of Res5 was then split horizontally. The PCB [6] network divides the feature map into six parts from top to bottom. However, excessive segmentation weakens the connections among local features, and if the map is split into too many parts, each region becomes too small to learn discriminative features from. Therefore, the algorithm in this paper split the map horizontally in two ways: into two parts and into three parts. The output feature map of Res5 was pooled by global max pooling with and kernels, respectively, giving output features of and . We then split them horizontally to obtain 2048-dimensional feature vectors, and , and reduced their dimension to 256. Finally, we obtained the outputs through the fully connected layer.
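The horizontal splitting can be sketched in NumPy as max-pooling each horizontal stripe of the Res5 map into one 2048-dimensional vector and then reducing it to 256 dimensions. The stripe boundaries, the random reduction matrix, and the toy 24×8 spatial size are assumptions for illustration; in the network the reduction is learned and followed by the fully connected layer.

```python
import numpy as np

def horizontal_stripes(fmap, parts):
    """Split a (C, H, W) map into `parts` horizontal stripes and max-pool each to a C-dim vector."""
    C, H, W = fmap.shape
    bounds = np.linspace(0, H, parts + 1).round().astype(int)   # stripe edges along the height
    return [fmap[:, bounds[i]:bounds[i + 1], :].max(axis=(1, 2)) for i in range(parts)]

rng = np.random.default_rng(0)
res5 = rng.standard_normal((2048, 24, 8))          # hypothetical Res5 output (stride 1)
W_red = rng.standard_normal((256, 2048)) / 45.0    # stand-in for a learned 2048 -> 256 reduction
local = [W_red @ p for n in (2, 3) for p in horizontal_stripes(res5, n)]
print(len(local), local[0].shape)  # 5 (256,)
```

Using both a two-way and a three-way split yields five local vectors of different granularity while keeping each stripe large enough to be discriminative.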

3.3. Loss Function

In the training process, we used the cross-entropy loss and the triplet loss [22] as the loss functions.

We applied the cross-entropy loss for classification learning in the feature learning stage, treating training as a multi-class classification problem, and the network applied it to all output features of all branches. The features were first processed by the softmax function to construct a probability distribution over pedestrian identities. The softmax function is shown in Equation (7).

where N is the batch size, f is the learned feature, is the weight vector corresponding to category j of the fully connected layer, and K is the number of categories.

Finally, we used the cross-entropy loss as the objective function, which measures the difference between the actual output and the expected output: the smaller its value, the closer the output is to the expected result. Its calculation formula is shown in Equation (8).

where N is the number of pedestrian categories, is the prediction vector, and is the real label vector.
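Equations (7) and (8) together form the usual softmax cross-entropy (ID) loss. A NumPy sketch, assuming a bias-free fully connected classifier whose rows are the per-identity weight vectors and integer identity labels; it computes the log-softmax directly for numerical stability.

```python
import numpy as np

def id_loss(features, W, labels):
    """Mean softmax cross-entropy over a batch.

    features : (N, d) learned features, one row per image
    W        : (K, d) classifier weights, one row per identity
    labels   : (N,) integer identity labels in [0, K)
    """
    logits = features @ W.T
    logits = logits - logits.max(axis=1, keepdims=True)             # stabilize exp
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()

rng = np.random.default_rng(0)
f = rng.standard_normal((8, 16))
W = rng.standard_normal((751, 16))     # 751 training identities, as in Market-1501
y = rng.integers(0, 751, size=8)
print(id_loss(f, W, y))
```

With all-zero classifier weights every identity is equally likely, so the loss equals log K; training pushes it below that value.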

The triplet loss was applied in the metric learning stage. An input triplet consisted of three images: an Anchor, a Positive, and a Negative. Optimization made the distance between the Anchor and the Positive smaller than that between the Anchor and the Negative, reducing the intra-class distance, enlarging the inter-class distance, and thereby clustering the features of the same pedestrian. If triplets are sampled randomly from the training set, many of them are easy triplets that contribute little to optimizing the network. To solve this problem, [23] proposed a triplet loss based on hard sample mining. In each training batch, it randomly selected P pedestrians and K images of each pedestrian. For each Anchor in the batch, it selected the Positive with the farthest distance and the Negative with the nearest distance to form a triplet. Its calculation formula is shown in Equation (9).

where , , and are the features of the Anchor, Positive, and Negative, respectively, is the Euclidean distance between the features, and is the margin that controls the minimum gap between positive and negative sample pairs.

The triplet loss was used to train all global output features but not the local features. Local features may be misaligned, and if a background region were taken as a sample, the model could learn wrong information, reducing accuracy.
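The hard-sample-mining form of Equation (9) can be sketched in NumPy as follows, assuming the features of a P×K batch are the rows of a matrix with integer identity labels, and using the margin of 0.3 given in Section 4.3. Each anchor takes its farthest same-identity sample and its nearest different-identity sample.

```python
import numpy as np

def batch_hard_triplet_loss(features, labels, margin=0.3):
    """Mean over anchors of max(0, d(a, hardest p) - d(a, hardest n) + margin)."""
    diff = features[:, None, :] - features[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))                  # (B, B) Euclidean distances
    same = labels[:, None] == labels[None, :]
    hardest_pos = np.where(same, dist, -np.inf).max(axis=1)   # farthest positive
    hardest_neg = np.where(same, np.inf, dist).min(axis=1)    # nearest negative
    return np.maximum(hardest_pos - hardest_neg + margin, 0.0).mean()

# P = 2 identities x K = 2 images each
labels = np.array([0, 0, 1, 1])
well_separated = np.array([[0.0, 0.0], [0.0, 0.1], [10.0, 0.0], [10.0, 0.1]])
collapsed = np.zeros((4, 2))
print(batch_hard_triplet_loss(well_separated, labels))  # 0.0
print(batch_hard_triplet_loss(collapsed, labels))       # equals the margin (up to rounding)
```

Well-separated identities incur no loss, while collapsed features, where positives and negatives are equally close, are penalized by the full margin.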

4. Experiments

4.1. Datasets

Many common pedestrian datasets were captured by only a few cameras and contain relatively few images and pedestrian identities. At the retrieval stage, they provide only a single query image for each identity, which is impractical. To avoid these problems, we selected the Market-1501 and DukeMTMC-ReID datasets for the experiments. Both contain sufficient images and pedestrian identities, were captured by multiple cameras, and provide adequate query images collected from real scenes.

- Market-1501: The images in the Market-1501 [24] dataset were taken on the campus of Tsinghua University by six cameras and comprise 32,668 images of 1501 identities. The training set includes 12,936 images of 751 identities, and the test set includes 19,732 images of 750 identities. The training set has an average of 17.2 images per identity, and the test set has an average of 26.3 images per identity.

- DukeMTMC-ReID: The DukeMTMC-ReID [25] dataset is the person re-identification subset of the DukeMTMC dataset and includes 36,411 images collected by 8 high-resolution cameras. The training set contains 16,522 images of 702 randomly sampled identities, and the test set contains 17,661 images of another 702 identities. The training set has an average of 23.5 images per identity, and the test set has an average of 25.2 images per identity.

4.2. Evaluation Metrics

MSMG-Net is evaluated using the Cumulative Matching Characteristic (CMC) and mean Average Precision (mAP). Rank-k accuracy on the CMC curve is the fraction of queries for which a correct match appears among the top k gallery images ranked by similarity score; this paper reports Rank-1. For each query, the average precision (AP) is the area under the precision–recall curve, and mAP averages AP over all queries, as shown in Equation (10).

$$\mathrm{mAP} = \frac{1}{K}\sum_{i=1}^{K} \mathrm{AP}_i \tag{10}$$

where $\mathrm{AP}_i$ is the area under the precision–recall curve of the $i$-th query, $K$ is the total number of queries, and mAP is the mean of all $\mathrm{AP}_i$.
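To make the two metrics concrete, the sketch below computes Rank-k accuracy and mAP from per-query match lists, where each list records, in ranked order, whether each gallery image shares the query's identity. It is a simplified sketch: the standard Market-1501 and DukeMTMC-ReID protocols also discard gallery images of the query identity taken by the query's own camera, which is assumed to have been done before the lists are built. AP is computed as the mean of the precision values at the ranks of the correct matches, which equals the area under the step-wise precision-recall curve when the whole gallery is ranked. Function names are hypothetical.

```python
from typing import List, Sequence


def rank_k_hit(matches: Sequence[bool], k: int) -> bool:
    """True if a correct match appears among the top-k ranked gallery items."""
    return any(matches[:k])


def average_precision(matches: Sequence[bool]) -> float:
    """AP for one query: mean of precision@i over the ranks i of correct matches."""
    hits, precisions = 0, []
    for i, is_match in enumerate(matches, start=1):
        if is_match:
            hits += 1
            precisions.append(hits / i)
    return sum(precisions) / len(precisions) if precisions else 0.0


def evaluate(ranked_matches: List[Sequence[bool]], k: int = 1):
    """Return (Rank-k accuracy, mAP) averaged over the K queries."""
    K = len(ranked_matches)
    rank_k = sum(rank_k_hit(m, k) for m in ranked_matches) / K
    mean_ap = sum(average_precision(m) for m in ranked_matches) / K
    return rank_k, mean_ap


# Usage: two queries; the first is correct at rank 1, the second only at rank 2.
print(evaluate([[True, False, True], [False, True, False]], k=1))
```

Because mAP rewards every correct match, not just the first one, it can separate methods whose Rank-1 scores are close.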

4.3. Experimental Configuration

We implemented the algorithm in the PyTorch framework and used an NVIDIA Quadro RTX 8000 GPU for acceleration. The model was trained with the Adam optimizer, with the momentum set to 0.9. Initializing the network with pre-trained weights can accelerate convergence and improve performance, so we fine-tuned a pre-trained ResNet-50. During training, we set the input image resolution to  and augmented the datasets with random erasing and horizontal flipping. The batch size was 32, and training ran for 160 iterations. The learning rate was adjusted dynamically: the initial learning rate was set to , it was adjusted to  when the number of iterations reached 120, and to  when it reached 140. The margin hyperparameter of the triplet loss was set to 0.3.
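The schedule above is a step decay at iterations 120 and 140. Because the base and decayed learning-rate values are not reproduced in this text, the sketch below works in relative units (base_lr = 1.0) and assumes a decay factor gamma = 0.1, which is a placeholder rather than the paper's setting. The function name is hypothetical.

```python
def step_lr(iteration: int, base_lr: float,
            milestones=(120, 140), gamma: float = 0.1) -> float:
    """Step schedule: multiply base_lr by gamma once per milestone already reached.

    gamma = 0.1 is an assumed placeholder, not a value taken from the paper.
    """
    num_decays = sum(iteration >= m for m in milestones)
    return base_lr * gamma ** num_decays


# Usage: print the relative learning-rate multiplier around each milestone.
for it in (0, 119, 120, 139, 140, 159):
    print(it, step_lr(it, 1.0))
```

In PyTorch, a comparable setup would likely pair `torch.optim.Adam` (whose first `betas` entry plays the role of the momentum term, e.g. `betas=(0.9, 0.999)`) with `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[120, 140], gamma=...)`, stepping the scheduler once per iteration as counted above.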

4.4. Comparison with State-of-the-Art Methods

Table 1 shows the results of MSMG-Net on the two datasets alongside those of other mainstream person re-identification algorithms evaluated on the same datasets. Below, we analyze the shortcomings of the existing models and evaluate the proposed algorithm against them. The best results are shown in bold.

Table 1.

Comparison of the experimental results between MSMG-Net and other mainstream algorithms.

As Table 1 shows, MSMG-Net achieved an mAP of 88.6% and a Rank-1 accuracy of 96.3% on the Market-1501 dataset, and 80.1% and 89.9%, respectively, on the DukeMTMC-ReID dataset. Compared with PCB+RPP [6] and HPM [7], which rely on local features, MSMG-Net achieved clear improvements in both mAP and Rank-1 on both datasets. These results suggest that overly fine partitioning weakens the discriminability of local features, and that using local features without global information leaves the features disconnected from one another; combining global and local features is a better extraction strategy. MSMG-Net also outperformed MGN [8], which uses both global and local features, especially in mAP, indicating that the proposed algorithm ranks all correct matches more consistently rather than only the top-ranked one. Compared with MSFNet [9], MSMG-Net made better use of multi-scale features. Finally, compared with ViT [11] and Swin Transformer [12], which apply Transformer modules, MSMG-Net combines a CNN with a Transformer module based on an improved multi-head self-attention mechanism and additionally exploits local and multi-scale features, achieving better performance than using the Transformer module alone.

Compared with ISP [29], which performs best among the compared mainstream algorithms, our algorithm achieved the same mAP and a 1.0% higher Rank-1 on the Market-1501 dataset, and improved mAP and Rank-1 by 0.1% and 0.3%, respectively, on the DukeMTMC-ReID dataset. Thus, MSMG-Net did not achieve a noticeable mAP improvement over ISP. However, ISP relies on semantic parsing of human parts and objects, and its computation is relatively complex.

MSMG-Net considers more types of features. Combining the multi-scale and multi-granularity branches enables sufficient feature extraction and yields more complete and comprehensive pedestrian information, and each proposed structure contributes to accuracy: the bidirectional cross-pyramid structure effectively combines features of different scales during fusion, the combination of CNN and Transformer captures long-range dependencies between image pixels to obtain complete global information, and splitting the feature map horizontally effectively captures local information. Overall, compared with current mainstream algorithms, MSMG-Net achieved better results on both datasets, which supports its effectiveness.

4.5. Ablation Study

4.5.1. Validity Verification of Each Branch

MSMG-Net comprises a multi-scale branch and a multi-granularity branch, and the multi-granularity branch further includes global and local feature branches. To verify the effectiveness of each proposed component, ablation experiments were carried out on both datasets, as shown in Table 2. The baseline uses ResNet-50 as the backbone and is trained with cross-entropy loss and triplet loss. The best results are shown in bold, and √ indicates that the module was applied in the experiment.

Table 2.

Validity verification of each branch.

As the table shows, performance improved progressively as multi-scale, global, and local features were added to the baseline. Compared with the baseline, combining the two branches improved mAP/Rank-1 by 15.4%/6.7% on Market-1501 and by 17.6%/10.1% on DukeMTMC-ReID. These results indicate that the multi-scale branch alleviates the insufficient feature expression caused by relying on single-layer semantic features and reduces the loss of feature information, while the multi-granularity branch simultaneously captures global and local pedestrian features. Global features enhance the perception of the pedestrian image as a whole, and local features focus on image details, increasing feature diversity. Finally, the full network combines multi-scale and multi-granularity features so that they complement each other, yielding sufficient pedestrian identity information.

4.5.2. Validity Verification of Each Part in the Multi-Scale Branch

In the multi-scale branch, we used the bidirectional cross-feature pyramid network for feature fusion. The output features of Res3 and Res4 were fused to obtain the shallow position supervision feature, and the output features of Res4 and Res5 were fused to obtain the deep semantic supervision feature. As shown in Table 3, we verified the effectiveness of these two features while keeping the other branches unchanged; √ indicates that the module was applied in the experiment. Both the shallow position supervision feature and the deep semantic supervision feature improve re-identification accuracy, and combining the two yields the best performance.

Table 3.

Comparative experiment of feature fusion module.
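This section does not spell out how the bidirectional cross-feature pyramid weights its inputs. As one plausible reading, the sketch below assumes BiFPN-style fast normalized fusion from EfficientDet [20], where non-negative weights w_i combine inputs as Σ w_i x_i / (ε + Σ w_j), and fuses each stage with the next deeper one as described above. It further assumes that the Res3, Res4, and Res5 outputs have already been projected to a common channel count and that each stage halves the spatial resolution, so the deeper map is upsampled 2x by nearest neighbour before fusion (re-ID backbones often remove the last stride, in which case the Res5 upsampling would be dropped). The weights are fixed here, whereas BiFPN learns them; all shapes and names are hypothetical.

```python
import numpy as np

EPS = 1e-4  # small constant that keeps the normalization stable


def upsample2x(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling of a (C, H, W) feature map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def fast_normalized_fusion(inputs, weights) -> np.ndarray:
    """BiFPN-style fusion: sum_i w_i * x_i / (eps + sum_j w_j), with w_i >= 0."""
    w = np.maximum(np.asarray(weights, dtype=float), 0.0)  # ReLU keeps weights non-negative
    fused = sum(wi * xi for wi, xi in zip(w, inputs))
    return fused / (EPS + w.sum())


# Hypothetical stage outputs, already projected to C channels.
C = 8
rng = np.random.default_rng(0)
res3 = rng.standard_normal((C, 32, 16))
res4 = rng.standard_normal((C, 16, 8))
res5 = rng.standard_normal((C, 8, 4))

# Shallow position feature from Res3 + Res4; deep semantic feature from Res4 + Res5.
shallow = fast_normalized_fusion([res3, upsample2x(res4)], weights=[1.0, 1.0])
deep = fast_normalized_fusion([res4, upsample2x(res5)], weights=[1.0, 1.0])
print(shallow.shape, deep.shape)
```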

The multi-scale branch applies a dual attention mechanism to the fused features. As shown in Table 4, we verified the effectiveness of position and channel attention while keeping the other branches unchanged; √ indicates that the module was applied in the experiment. Using attention to assign different weights to different features before fusing them further improves accuracy, and the dual attention mechanism performs best compared with position or channel attention alone.

Table 4.

Comparative experiment of attention module.
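The position and channel attention modules follow the dual attention network of [21]. The sketch below is a simplified version under stated assumptions: the 1x1 convolutions that DANet uses to produce query, key, and value maps are replaced by the identity, the learnable scale factors alpha and beta are passed as fixed arguments, and the two branch outputs are summed. It shows the core idea that position attention reweights spatial positions and channel attention reweights channels by their mutual similarity; it is not the paper's implementation, and the function names are hypothetical.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along `axis`."""
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def position_attention(x: np.ndarray, alpha: float = 1.0) -> np.ndarray:
    """Simplified PAM: each spatial position aggregates all positions by similarity.

    Query/key/value projections are replaced by the identity (an assumption).
    """
    c, h, w = x.shape
    feats = x.reshape(c, h * w)                # (C, N)
    attn = softmax(feats.T @ feats, axis=-1)   # (N, N): row i = weights for position i
    out = feats @ attn.T                       # out[:, i] = sum_j attn[i, j] * feats[:, j]
    return alpha * out.reshape(c, h, w) + x    # residual connection


def channel_attention(x: np.ndarray, beta: float = 1.0) -> np.ndarray:
    """Simplified CAM: each channel aggregates all channels by similarity."""
    c, h, w = x.shape
    feats = x.reshape(c, h * w)                # (C, N)
    attn = softmax(feats @ feats.T, axis=-1)   # (C, C)
    out = attn @ feats                         # (C, N)
    return beta * out.reshape(c, h, w) + x     # residual connection


def dual_attention(x: np.ndarray) -> np.ndarray:
    """Sum the position- and channel-attention outputs."""
    return position_attention(x) + channel_attention(x)


x = np.random.default_rng(0).standard_normal((4, 3, 5))
print(dual_attention(x).shape)
```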

4.5.3. Validity Verification of Each Part in the Multi-Granularity Branch

The multi-granularity branch acquires global features by combining a CNN with a Transformer, in which the multi-head self-attention mechanism is improved by adding relative position encoding in the height and width directions. To verify the effectiveness of this design, we compared one-dimensional and two-dimensional relative position encoding while keeping the other branches unchanged. As shown in Table 5, two-dimensional relative position encoding achieves higher accuracy.

Table 5.

Comparative experiment of the global feature branch.
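To illustrate what adding relative position encoding in the height and width directions can mean, the sketch below computes single-head self-attention over an H x W feature map whose logits add a content term q_i·k_j and a position term q_i·(r_h + r_w), where r_h and r_w are embeddings indexed by the vertical and horizontal offsets between the two positions. This is a generic formulation assumed for illustration: query/key/value projections, multiple heads, and the paper's exact encoding are omitted, and the function name is hypothetical.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along `axis`."""
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def relative_position_attention(x, rel_h, rel_w):
    """Single-head self-attention over an H x W map with 2D relative position terms.

    x:     (H, W, d) feature map; queries, keys and values all equal x here
    rel_h: (2H - 1, d) embeddings indexed by vertical offset   (h' - h) + H - 1
    rel_w: (2W - 1, d) embeddings indexed by horizontal offset (w' - w) + W - 1
    """
    H, W, d = x.shape
    q = x.reshape(H * W, d)
    rows, cols = np.divmod(np.arange(H * W), W)       # (h, w) of each flattened position
    dh = rows[None, :] - rows[:, None] + H - 1        # (N, N) vertical offset indices
    dw = cols[None, :] - cols[:, None] + W - 1        # (N, N) horizontal offset indices
    r = rel_h[dh] + rel_w[dw]                         # (N, N, d) embedding per position pair
    content = q @ q.T                                 # q_i . k_j
    position = np.einsum("id,ijd->ij", q, r)          # q_i . (r_h + r_w)_ij
    attn = softmax((content + position) / np.sqrt(d), axis=-1)
    return (attn @ q).reshape(H, W, d), attn


rng = np.random.default_rng(0)
x = rng.standard_normal((3, 4, 8))
out, attn = relative_position_attention(x, rng.standard_normal((5, 8)), rng.standard_normal((7, 8)))
print(out.shape, attn.shape)
```

Setting `rel_h` to zeros leaves only the width term, which corresponds roughly to a one-dimensional encoding of the kind compared in Table 5.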

To obtain local features, the multi-granularity branch splits the feature map horizontally into two and three parts. To verify this choice, we also split the feature map horizontally into four parts and evaluated combinations of the different segmentation scales while keeping the other branches unchanged, as shown in Table 6; √ indicates that the module was applied in the experiment. Combining the two-, three-, and four-part splits yields the best performance, but the gain over combining only the two- and three-part splits is insignificant. We therefore combined the two- and three-part splits to reduce computation while maintaining performance.

Table 6.

Comparative experiment of the local feature branch.
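Horizontal segmentation can be sketched as splitting the feature map along its height and pooling each stripe into one vector. The code below assumes average pooling (as in PCB [6]; MGN [8] uses max pooling instead) and the two- and three-part granularities chosen above, giving five local feature vectors; `np.array_split` also tolerates heights that are not divisible by the number of parts. Function names are hypothetical.

```python
import numpy as np


def horizontal_stripes(feature_map: np.ndarray, num_parts: int) -> list:
    """Split a (C, H, W) map into horizontal stripes and average-pool each to a C-vector."""
    stripes = np.array_split(feature_map, num_parts, axis=1)  # split along the height axis
    return [s.mean(axis=(1, 2)) for s in stripes]


def local_features(feature_map: np.ndarray, granularities=(2, 3)) -> list:
    """Collect stripe features for every granularity (2 + 3 = 5 vectors by default)."""
    feats = []
    for n in granularities:
        feats.extend(horizontal_stripes(feature_map, n))
    return feats


fmap = np.arange(2 * 6 * 4, dtype=float).reshape(2, 6, 4)  # toy (C=2, H=6, W=4) map
for vec in local_features(fmap):
    print(vec)
```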

4.6. Visualization Result

Figure 5 and Figure 6 show the Rank-10 retrieval results for the same query images using the baseline and the proposed algorithm on the two datasets. Green numbers mark correct matches, and red numbers mark incorrect ones. As the figures show, the proposed algorithm retrieves correct matches more accurately than the baseline.

Figure 5.

Comparison of retrieval results on the Market-1501 dataset.

Figure 6.

Comparison of retrieval results on the DukeMTMC-ReID dataset.

5. Conclusions

This paper proposed a new dual-branch person re-identification algorithm, called MSMG-Net. It combined multi-scale and multi-granularity branches. The multi-scale branch obtained multi-scale features of different layers, and the multi-granularity branch obtained multiple fine-grained features. The network can extract more comprehensive and sufficient pedestrian information and improve the accuracy of person re-identification. In addition, a dual attention module was added to the multi-scale branch to make the multi-scale feature pay more attention to the critical information in the pedestrian image. In the multi-granularity branch, the Transformer module with improved multi-head self-attention mechanisms was applied to capture the long-distance dependence between image pixels and obtain a more accurate relative position relationship. The experimental results showed that compared with most existing person re-identification algorithms, MSMG-Net improved performance on the Market-1501 and DukeMTMC-ReID datasets.

However, the current algorithm still has certain limitations and requires further research and experiments:

- Each module proposed in this paper improves the accuracy of the person re-identification algorithm to some extent, but also increases the complexity of the model, making it less suitable for large-scale deployment. In future work, lightweight networks could be introduced to improve the real-time performance of the algorithm while maintaining accuracy.

- The algorithm in this paper has been tested only on public datasets of relatively limited size and not in real surveillance scenarios. Testing on data from real environments will be necessary before the algorithm is applied in practical systems, so improving the model's generalization ability is also an important research objective.

Author Contributions

Conceptualization, X.C. and Y.L.; methodology, X.C. and W.Z.; software, X.C.; validation, X.C. and Y.L.; formal analysis, X.C.; investigation, X.C.; resources, X.C. and Y.L.; data curation, X.C.; writing—original draft preparation, X.C.; writing—review and editing, W.Z.; visualization, X.C.; supervision, Y.L.; project administration, Y.L.; funding acquisition, W.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The publicly available datasets used in this research can be obtained through the following links: Market-1501: http://www.liangzheng.com.cn. DukeMTMC-ReID: http://vision.cs.duke.edu/DukeMTMC (accessed date: 9 June 2022).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| CNN | Convolutional Neural Network |
| SIFT | Scale-invariant Feature Transform |
| HOG | Histogram of Oriented Gradient |
| ICF | Integral Channel Feature |
| CIR | Cross-image Representation |
| PCB | Part-based Convolutional Baseline |
| RPP | Refined Part Pooling |
| HPM | Horizontal Pyramid Matching |
| MGN | Multiple Granularity Network |
| BiFPN | Bidirectional Feature Pyramid Network |
| FPN | Feature Pyramid Network |
| PAM | Position Attention Module |
| CAM | Channel Attention Module |
| CMC | Cumulative Matching Characteristic |
| mAP | mean Average Precision |
| SVDNet | Singular Value Decomposition Network |
| AlignedReID | Aligned re-identification |
| AANet | Attribute Attention Network |
| GASM | Guided Adaptive Spatial Matching |
| ISP | Identity-guided human Semantic Parsing approach |
| DG-Net | Joint Discriminative and Generative Net |
| MSFNet | Multi-scale Feature Fusion Network |
| CDNet | Combined Depth Space Network |
| PAT | Part-Aware Transformer |
| AOPS | Attribute-guided Occlusion-sensitive Pedestrian Segmentation |

References

- Jin, H.; Lai, S.; Qian, X. Occlusion-sensitive Person Re-identification via Attribute-based Shift Attention. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 2170–2185.

- Xu, B.; He, L.; Liang, J.; Sun, Z. Learning Feature Recovery Transformer for Occluded Person Re-Identification. IEEE Trans. Image Process. 2022, 31, 4651–4662.

- Tan, H.; Liu, X.; Yin, B.; Li, X. MHSA-Net: Multihead Self-Attention Network for Occluded Person Re-Identification. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1–15.

- Zheng, L.; Yang, Y.; Hauptmann, A.G. Person Re-identification: Past, Present and Future. arXiv 2016, arXiv:1610.02984.

- Filkovic, I.; Kalafatic, Z.; Hrkac, T. Deep metric learning for person Re-identification and De-identification. In Proceedings of the 2016 39th International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 30 May–3 June 2016; pp. 1360–1364.

- Sun, Y.; Zheng, L.; Yang, Y.; Tian, Q.; Wang, S. Beyond Part Models: Person Retrieval with Refined Part Pooling. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 480–496.

- Fu, Y.; Wei, Y.; Zhou, Y.; Shi, H.; Huang, G.; Wang, X.; Yao, Z.; Huang, T. Horizontal pyramid matching for person re-identification. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 8295–8302.

- Wang, G.; Yuan, Y.; Chen, X.; Li, J.; Zhou, X. Learning discriminative features with multiple granularities for person re-identification. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Republic of Korea, 22–26 October 2018; pp. 274–282.

- Wang, Y.; Zhang, W.; Liu, Y. Multi-scale feature fusion network for person re-identification. IET Image Process. 2021, 14, 4614–4620.

- Wu, Y.; Zhang, K.; Wu, D.; Wang, C.; Yuan, C.; Qin, X.; Zhu, T.; Du, Y.; Wang, H.; Huang, D. Person reidentification by multiscale feature representation learning with random batch feature mask. IEEE Trans. Cogn. Dev. Syst. 2020, 13, 865–874.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

- Lowe, D. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.

- Dollar, P.; Tu, Z.; Perona, P.; Belongie, S. Integral Channel Features; BMVC Press: London, UK, 2009.

- Li, Q.; Hu, W.; Li, J. A survey of person re-identification based on deep learning. Chin. J. Eng. 2022, 44, 920–932.

- Zhang, X.; Luo, H.; Fan, X.; Xiang, W.; Sun, Y.; Xiao, Q.; Jiang, W.; Zhang, C.; Sun, J. AlignedReID: Surpassing human-level performance in person re-identification. arXiv 2017, arXiv:1711.08184.

- Zheng, L.; Huang, Y.; Lu, H.; Yang, Y. Pose-invariant embedding for deep person re-identification. IEEE Trans. Image Process. 2019, 28, 4500–4509.

- Zhao, H.; Tian, M.; Sun, S.; Shao, J.; Yan, J.; Yi, S.; Wang, X.; Tang, X. Spindle Net: Person Re-identification with Human Body Region Guided Feature Decomposition and Fusion. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1077–1085.

- Tan, M.; Pang, R.; Le, Q. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3146–3154.

- Schroff, F.; Kalenichenko, D.; Philbin, J. FaceNet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 815–823.

- Hermans, A.; Beyer, L.; Leibe, B. In defense of the triplet loss for person re-identification. arXiv 2017, arXiv:1703.07737.

- Zheng, L.; Shen, L.; Tian, L.; Wang, S.; Wang, J.; Tian, Q. Scalable person re-identification: A benchmark. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1116–1124.

- Ristani, E.; Solera, F.; Zou, R.; Cucchiara, R.; Tomasi, C. Performance measures and a data set for multi-target, multi-camera tracking. In Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 8–10 October 2016; pp. 17–35.

- Sun, Y.; Zheng, L.; Deng, W.; Wang, S. SVDNet for Pedestrian Retrieval. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3800–3808.

- Tay, C.; Roy, S.; Yap, K. AANet: Attribute Attention Network for Person Re-Identifications. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7134–7143.

- He, L.; Liu, W. Guided saliency feature learning for person re-identification in crowded scenes. In Proceedings of the 16th European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 357–373.

- Zhu, K.; Guo, H.; Liu, Z.; Tang, M.; Wang, J. Identity-guided human semantic parsing for person re-identification. In Proceedings of the 16th European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 346–363.

- Zheng, Z.; Yang, X.; Yu, Z.; Zheng, L.; Yang, Y.; Kautz, J. Joint discriminative and generative learning for person re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2138–2147.

- Li, H.; Wu, G.; Zheng, W. Combined depth space based architecture search for person re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Kuala Lumpur, Malaysia, 20–25 June 2021; pp. 6729–6738.

- Li, Y.; He, J.; Zhang, T.; Liu, X.; Zhang, Y.; Wu, F. Diverse part discovery: Occluded person re-identification with part-aware transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Kuala Lumpur, Malaysia, 20–25 June 2021; pp. 2898–2907.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).