Abstract

In today's world of the Internet of Things, cyberspace, mobile devices, businesses, social media platforms, healthcare systems, and more, vast amounts of data are available online. Understanding machine learning (ML) is essential for analyzing these data intelligently and for building smart, automated applications that use them. There are many kinds of machine learning; the best-known paradigms are supervised, unsupervised, semi-supervised, and reinforcement learning. This article reviews the main types of machine learning problems and the algorithms used to solve them. Its main contribution is a clearer understanding of the theory behind many machine learning methods and of how they are applied in real-world domains such as energy, healthcare, finance, autonomous driving, and e-commerce. The article is intended as a reference for academic researchers, data scientists, and machine learning engineers who must decide how to extract information from a wide range of data, which machine learning algorithm is best suited to their problem, and what results they can expect. Additionally, this article presents the major challenges in building machine learning models and explores the research gaps in this area. We also provide a brief overview of data protection laws and their provisions in different countries.

1. Introduction

In the modern world, the cost of data storage is falling, data processing speeds are increasing rapidly, and, with the growing integration of the Internet of Things, massive amounts of data are generated and stored in data repositories around the globe. The availability of these massive datasets has given the field of machine learning the opportunity to flourish.

Looking around, we can see examples of machine learning in almost every field. For instance, when we watch a movie or web series, a recommender system suggests movies similar to the one we are watching. Likewise, when we shop online, the site suggests products that are similar to the one we bought or that were most frequently bought together with it. This is done with association rule mining. Internet search engines are another application of machine learning that we use daily; for example, internet search data have been used to forecast tourism [1]. When we enter a query into a search engine, the returned links are ordered from most to least relevant to our search. This is accomplished through a ranking system, which is discussed further in this article. Machine learning techniques are also helpful in cybersecurity [2]: algorithms such as isolation forests, support vector machines, and neural networks have been shown to be very useful in detecting network intrusions. In short, machine learning is present in almost every sector.

Data are the most important component of machine learning. They can originate from a wide range of sources, including social networks, logs, blogs, and sensors such as temperature, current, and humidity sensors. The properties of these data vary considerably. For instance, social network data can take the form of logs, speech, images, video (a sequence of images), and text. Sensor data typically arrive as vectors whose elements may be floats, integers, or strings. Data can also be stored as lists or in other formats; how they are stored is determined by the conventions of the data repository. In a nutshell, a complete machine learning framework consists of three phases: data scraping; model building, tuning, and testing; and model deployment. Before model building, tasks such as preprocessing, algorithm selection, statistical analysis, and correlation analysis are carried out.
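To make these phases concrete, here is a minimal sketch using scikit-learn, which is an assumption (the text does not prescribe a library). The bundled Iris dataset stands in for scraped data, and standard scaling plus logistic regression are illustrative choices of preprocessing step and algorithm.

```python
# Minimal sketch of the ML workflow phases, assuming scikit-learn is installed.
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score

# Data acquisition: a bundled toy dataset stands in for scraped data
X, y = load_iris(return_X_y=True)

# Hold out a test set before any model building
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# Preprocessing and model building combined in one pipeline
model = Pipeline([
    ("scale", StandardScaler()),                 # preprocessing step
    ("clf", LogisticRegression(max_iter=1000)),  # chosen algorithm
])
model.fit(X_train, y_train)

# Testing on data the model has not seen
test_accuracy = accuracy_score(y_test, model.predict(X_test))
print(f"Test accuracy: {test_accuracy:.2f}")
```

Tuning could be added by wrapping the pipeline in `GridSearchCV`, and for deployment the fitted pipeline would typically be serialized (for example with `joblib`) and loaded by the serving application.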

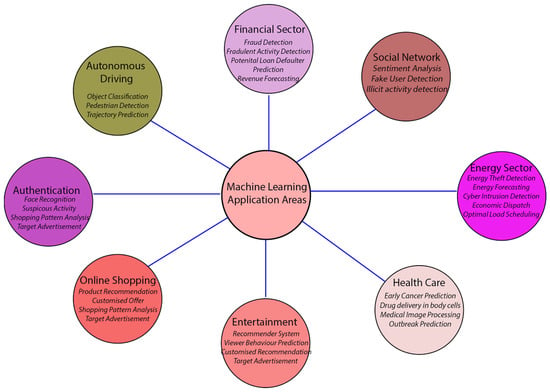

Figure 1 gives a concise overview of the fields in which machine learning is currently applied. In the banking industry, machine learning is becoming an increasingly important tool for identifying potentially fraudulent activity. Criteria that help determine whether a transaction is fraudulent include the time of the transaction, the amount involved, the geographical location, and information on previous transactions. Similarly, the probability that a borrower will default on a loan can be forecast if the model is given the borrower’s credit history and their behavior with previous lenders. The energy industry also makes extensive use of machine learning, in areas including, but not limited to, economic dispatch, customer grouping, optimal load scheduling, and the detection of energy theft. In addition, the smart grid, which incorporates a significant amount of renewable generation, has filled the network with thousands of sensors. These sensors send every piece of information to the utility server over a communication network, which has created new cybersecurity challenges for the smart grid. The smart grid network is susceptible to a wide variety of cyberattacks, the most prevalent of which include false data injection, denial-of-service attacks, and botnets. Machine learning algorithms have achieved a very high degree of accuracy in detecting these types of attacks [2,3,4]. In healthcare, machine learning is used to predict the early stages of cancer, disease outbreaks, drug performance, and more.
Similarly, other industries, such as entertainment, e-commerce, autonomous driving, social networks, email service providers, and cloud service providers, use machine learning for a variety of tasks.

Figure 1.

Machine Learning Applications In Different Domains.

The primary contributions of this paper are as follows: (1) an in-depth analysis of current machine learning techniques applied to a wide range of classification, regression, and clustering problems; (2) an explanation of the reasoning behind the machine learning algorithms covered, so that machine learning engineers can determine which algorithms suit their problem based on the type of data they have; (3) a study of current issues in machine learning that, if not addressed, can degrade the performance of any machine learning model; and (4) approaches for dealing with the difficulties encountered while developing a machine learning model.

The rest of the paper is organized as follows. Section 2 discusses the types of machine learning. Section 3 presents the need for data analysis before ML model building, along with various tools for data analysis and visualization. Section 4 presents various machine learning problems. Section 5 describes the machine learning algorithms for regression, classification, and clustering, along with their mathematical models. Section 6 covers the challenges faced during the training of machine learning models and their solutions; it is followed by Section 8, which gives an overview of the machine learning tools available for building models. Section 9 presents current research in machine learning and use cases. Lastly, Section 10 concludes our work.

2. Types of Machine Learning

There are four primary types of machine learning: supervised, unsupervised, semi-supervised, and reinforcement learning. In supervised learning, the data contain features, known as independent variables, and a target, or dependent, variable. In unsupervised learning, by contrast, there is no target variable. In semi-supervised learning, only some of the training data include target labels. In reinforcement learning, as in unsupervised learning, the model is not given labeled targets; instead, it receives rewards and punishments based on the output of the algorithm.

2.1. Supervised Learning

During the training phase of a supervised learning system, the training data consist of inputs paired with target variables, or labels. The algorithm looks for patterns in the data that are associated with the intended outputs and exploits them. When new inputs are presented after training, the model uses what it learned from the training data to decide which label they should receive. The objective of a supervised learning model is to predict the correct label for new input data in a classification problem, or the estimated output value in a regression problem. Formally, the supervised learning paradigm infers a function $f$ from a set of data such that

$$y_i = f(x_i; \theta)$$

where $y_i$ denotes the output at instance $i$, and $x_i$ and $\theta$ denote the input and the set of parameters, respectively.
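The sketch below illustrates the supervised setting with a k-nearest-neighbour (k-NN) classifier written in NumPy; the toy data and the choice of k = 3 are assumptions for illustration. k-NN is a supervised method: it needs labelled pairs $(x_i, y_i)$, and its "parameters" are effectively the stored training set.

```python
import numpy as np

def knn_predict(X_train, y_train, X_new, k=3):
    """Label each row of X_new by majority vote among its k nearest training points."""
    preds = []
    for x in X_new:
        dists = np.linalg.norm(X_train - x, axis=1)  # Euclidean distance to every training point
        nearest = np.argsort(dists)[:k]              # indices of the k closest points
        labels, counts = np.unique(y_train[nearest], return_counts=True)
        preds.append(labels[np.argmax(counts)])      # majority vote
    return np.array(preds)

# Labelled training data: inputs paired with their target labels
X_train = np.array([[1.0, 1.0], [1.2, 0.8], [0.9, 1.1],
                    [5.0, 5.0], [5.2, 4.8], [4.9, 5.1]])
y_train = np.array([0, 0, 0, 1, 1, 1])

# New, unlabelled inputs presented after "training"
X_new = np.array([[1.1, 0.9], [5.1, 5.0]])
print(knn_predict(X_train, y_train, X_new))
```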

2.2. Unsupervised Learning

Unsupervised learning is used when the data contain no labels; the algorithm must discover the relationships within the data on its own. Common unsupervised methods include clustering algorithms such as K-Means, anomaly detection methods such as isolation forest, and association rule learning methods such as the Apriori algorithm. Dimensionality reduction is another area in which unsupervised learning is used. Dimensionality reduction refers to reducing the number of features, either by selecting a subset of them or by combining them, while preserving the essential information contained in the dataset. In machine learning tasks such as regression or classification, there are often too many variables to work with. This phenomenon, known as the “curse of dimensionality,” describes how the difficulty of modeling a problem, including the amount of data required, grows rapidly (often exponentially) with the number of features. Dimensionality reduction therefore makes the model more efficient and faster to train, without losing critical information or with only a slight deterioration in performance [5]. Standard dimensionality reduction techniques include Principal Component Analysis (PCA), Singular Value Decomposition (SVD), and Linear Discriminant Analysis (LDA), although LDA, unlike the other two, uses class labels and is therefore a supervised technique.
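As an illustration of dimensionality reduction, here is a minimal PCA sketch built on NumPy's SVD, which also shows how the two techniques relate: the right singular vectors of the mean-centred data are the principal directions. The synthetic dataset, in which one feature is nearly a copy of another, is a hypothetical example.

```python
import numpy as np

def pca(X, n_components):
    """Project X onto its top principal components using the SVD."""
    X_centered = X - X.mean(axis=0)                 # PCA requires mean-centred data
    U, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]                  # principal directions (rows)
    explained_var = S**2 / (len(X) - 1)             # variance along each direction
    ratio = explained_var[:n_components] / explained_var.sum()
    return X_centered @ components.T, ratio

rng = np.random.default_rng(0)
# Three features, but the third is almost a copy of the first (redundant information)
x1 = rng.normal(size=200)
x2 = rng.normal(size=200)
X = np.column_stack([x1, x2, x1 + 0.01 * rng.normal(size=200)])

Z, ratio = pca(X, n_components=2)
print(Z.shape)        # 200 samples, 2 features instead of 3
print(ratio.sum())    # fraction of total variance kept by the 2 components
```

Because the third feature carries almost no new information, two components retain nearly all of the variance.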

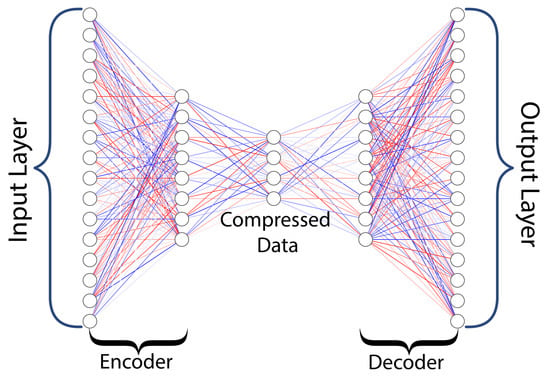

Autoencoders are a form of unsupervised learning that uses neural networks for representation learning. An autoencoder has two parts: an encoder, which compresses the input into a lower-dimensional latent representation, and a decoder, which reconstructs the input from that representation. Some variants can also generate new samples similar to the training data. The network architecture deliberately introduces a bottleneck, which forces it to learn a compressed representation of the original input. This compression and subsequent reconstruction would be extremely difficult if all input features were independent of one another. However, if the data contain some structure, such as correlations between the input features, this structure can be learned and exploited when forcing the input through the bottleneck [6]. Various types of autoencoders, such as variational autoencoders, denoising autoencoders, and Long Short-Term Memory (LSTM) autoencoders, are discussed in [6].
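The bottleneck idea can be sketched with scikit-learn's `MLPRegressor` trained to reproduce its own input, assuming a single hidden layer of two units plays the role of the encoder output and the output layer the role of the decoder. This is a simplification for illustration; autoencoders are usually built with deep learning frameworks in practice, and the synthetic data (five correlated features driven by two hidden factors) are hypothetical.

```python
import numpy as np
from sklearn.neural_network import MLPRegressor

rng = np.random.default_rng(0)
# Five observed features generated from only two hidden factors, so the inputs are correlated
latent = rng.normal(size=(500, 2))
mixing = rng.normal(size=(2, 5))
X = latent @ mixing + 0.01 * rng.normal(size=(500, 5))

# Bottleneck of 2 units: input -> hidden acts as the encoder, hidden -> output as the decoder.
# The network is trained to reproduce its own input (the targets are X itself).
autoencoder = MLPRegressor(hidden_layer_sizes=(2,), activation="identity",
                           solver="lbfgs", max_iter=5000, random_state=0)
autoencoder.fit(X, X)

reconstruction = autoencoder.predict(X)
mse = np.mean((X - reconstruction) ** 2)
print(f"Reconstruction MSE: {mse:.5f} (data variance: {X.var():.3f})")
```

With `activation="identity"` the network is a linear autoencoder, which learns the same subspace as PCA; switching to `"tanh"` or `"relu"` gives a nonlinear one. The low reconstruction error is possible only because the correlated inputs contain structure that fits through the bottleneck.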

2.3. Semi-Supervised Learning(SSL)

When most labels in a dataset are missing and only a few are present, semi-supervised learning is needed. For instance, in voice recognition there may be only a few labeled examples of each voice; in this case, the SSL method uses the available examples to label the rest of the data. Another example is text classification, such as determining the genre of a movie from its synopsis. Because many labels are missing, the results of semi-supervised learning are generally not as accurate as those of supervised learning [7].
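A minimal semi-supervised sketch using scikit-learn's `LabelSpreading`, which propagates the few known labels through a similarity graph built over all samples. Hiding all but five Iris labels per class is an assumption made to mimic a mostly unlabelled dataset.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.semi_supervised import LabelSpreading

X, y = load_iris(return_X_y=True)

# Hide most labels: scikit-learn marks unlabelled samples with -1.
# Keep only 5 labelled examples per class (Iris rows are grouped by class).
y_partial = np.full_like(y, -1)
labelled_idx = np.r_[0:5, 50:55, 100:105]
y_partial[labelled_idx] = y[labelled_idx]

model = LabelSpreading(kernel="rbf", gamma=20, max_iter=100)
model.fit(X, y_partial)

# transduction_ holds the label inferred for every sample, including the unlabelled ones
unlabelled = y_partial == -1
accuracy = np.mean(model.transduction_[unlabelled] == y[unlabelled])
print(f"{unlabelled.sum()} labels inferred, accuracy {accuracy:.2f}")
```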

2.4. Reinforcement Learning

In reinforcement learning, the learner is an agent that makes decisions, performs actions in an environment, and is rewarded (or penalized) for those actions as it tries to solve a problem. This kind of learning is known as “action-based learning”. After many iterations of trial and error, the agent should eventually discover the optimal policy, that is, the strategy for choosing actions that yields the greatest overall reward [8].
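The trial-and-error loop can be sketched with tabular Q-learning, one common reinforcement learning algorithm (the text does not name a specific one). The five-cell corridor environment, its rewards, and the hyperparameters below are hypothetical choices for illustration.

```python
import numpy as np

N_STATES, GOAL = 5, 4      # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = (-1, +1)         # action 0 = move left, action 1 = move right

def step(state, action):
    """Hypothetical toy environment: +1 for reaching the goal, -0.01 per other step."""
    next_state = min(max(state + ACTIONS[action], 0), N_STATES - 1)
    reward = 1.0 if next_state == GOAL else -0.01
    return next_state, reward, next_state == GOAL

def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = np.random.default_rng(seed)
    Q = np.zeros((N_STATES, len(ACTIONS)))
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore occasionally, otherwise exploit current estimates
            if rng.random() < epsilon:
                action = int(rng.integers(len(ACTIONS)))
            else:
                action = int(np.argmax(Q[state]))
            next_state, reward, done = step(state, action)
            # Move Q(s, a) toward the reward plus the discounted best future value
            target = reward if done else reward + gamma * np.max(Q[next_state])
            Q[state, action] += alpha * (target - Q[state, action])
            state = next_state
    return Q

Q = q_learning()
policy = np.argmax(Q[:GOAL], axis=1)   # greedy action in each non-terminal cell
print(policy)                          # 1 means "move right"
```

The learned policy is the mapping from each cell to its highest-valued action; here the agent discovers through rewards alone that moving right toward the goal is best.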

A comprehensive survey has been done by the authors of ref. [9] to show the applications and challenges of reinforcement learning in robotics. A framework is proposed in ref. [10] for dynamic pricing demand response using reinforcement learning.

3. Exploratory Data Analysis In Machine Learning

Exploratory Data Analysis (EDA) is a crucial tool for machine learning engineers to gain insights from their data. It helps them make informed decisions about their data and build models that accurately reflect it. Raw data may not immediately reveal patterns, correlations, or abnormalities, but with EDA, data scientists can use various visualizations to uncover hidden insights and better understand the structure of the data. This includes studying the data distribution, identifying outliers and missing values, and exploring relationships between features. A comprehensive explanation of the importance and procedure of data analysis is presented in [11].

The use of EDA in machine learning has gained popularity in recent years, as it helps machine learning scientists understand the data before building a model. By having a clear understanding of the data, they can improve their models and avoid making inaccurate assumptions. EDA can also identify any problems with the data that could affect the accuracy of the model.

EDA’s ability to reveal correlations between variables is particularly valuable in machine learning. By analyzing these correlations, ML engineers can determine which features are most important and build more accurate models. EDA can also detect outliers and missing values; handling them appropriately can improve the model’s accuracy.

For ML engineers, EDA provides a visual representation of the data, making it easier to identify trends, patterns, and abnormalities. By investigating the distribution of the data, it can also help identify the most important features for a machine learning model. This saves time and resources by focusing on those features while reducing the amount of data used in the model.
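A minimal EDA sketch with pandas, covering the distribution summary, missing values, correlations, and a simple outlier check. The sensor-style dataset is hypothetical, and the 1.5 * IQR rule is one common outlier heuristic among several.

```python
import numpy as np
import pandas as pd

# Small hypothetical dataset with one missing value and one suspicious reading
df = pd.DataFrame({
    "temperature": [21.0, 22.5, 23.1, 22.8, 95.0, 21.9],
    "humidity":    [40.0, 42.0, np.nan, 41.5, 43.0, 39.5],
    "load_kw":     [3.1, 3.4, 3.6, 3.5, 3.7, 3.2],
})

print(df.describe())     # distribution summary per column
print(df.isna().sum())   # missing values per column
print(df.corr())         # pairwise Pearson correlations (missing values ignored pairwise)

# Flag outliers with the 1.5 * IQR rule
q1, q3 = df["temperature"].quantile([0.25, 0.75])
iqr = q3 - q1
outliers = df[(df["temperature"] < q1 - 1.5 * iqr) | (df["temperature"] > q3 + 1.5 * iqr)]
print(outliers)
```

In practice these numeric summaries are usually paired with plots (histograms, box plots, scatter matrices) from the libraries listed below.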

Moreover, EDA can be used to evaluate the performance of machine learning models. By visualizing the outcomes, data engineers can identify areas where the model is performing poorly and make improvements to increase accuracy and address potential flaws with the data. Some of the tools and libraries for EDA include:

- Python libraries: Pandas, Numpy, Matplotlib, Seaborn, Plotly

- R libraries: ggplot2, dplyr, tidyr, caret

- Tableau

- Excel

- SAS

- IBM SPSS

- Weka

- Matlab

- Statistica

- Minitab

Table 1 presents a list of the machine learning tools and programming languages. These tools are also used for data visualization.

Table 1.

Some of the machine learning tools and supporting programming languages available.

4. Machine Learning Problems

Many problems fall under the scope of machine learning, including regression, clustering, image segmentation and classification, association rule learning, and ranking. Machine learning techniques are used to create intelligent systems that solve advanced problems that, before ML, would have required a human or would have been impossible without computers. The ability to classify unknown input data is very useful; one common example is natural language processing (NLP). The scope of NLP is broad, and ML classification can be used, for example, to detect the bias of an article or to convert speech to text. Classification is therefore a potent ML technology that many people interact with daily in various ways.

4.1. Classification

Classification is a key technology in ML that attempts to assign input data to the correct category from a set of categories. Classification models are trained for binary or multi-class problems. While the binary approach is the simplest and highly useful, multi-class classification is also very powerful and enables a wider range of applications.

Within ML, classification is performed by data-driven models. One such model, the support vector machine (SVM), separates data points with a hyperplane and is a very commonly used and powerful classification tool. Neural networks are also commonly used for classification and are more widely applicable than SVMs to image-based classification. However, neural networks can be slower to train because of the size of the network and the many weights and biases that must be adjusted over training epochs. In some real-time classification problems where online training is needed to update the model, an SVM may therefore outperform a neural network. Classification has its own set of metrics: besides the well-known accuracy, precision, recall (sensitivity), specificity, and the F1 score give a more detailed picture of a classifier’s performance. These metrics are computed from the actual and predicted classes, that is, from the counts of true positives, true negatives, false positives, and false negatives. A confusion matrix can be used to visualize the performance of a classifier.
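The metrics above follow directly from the four confusion-matrix counts. The sketch below computes them in NumPy for a binary classifier; the label vectors are made up for illustration, and no guard against division by zero is included.

```python
import numpy as np

def binary_metrics(y_true, y_pred):
    """Confusion-matrix counts and derived metrics for a binary classifier (positive class = 1)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = np.sum((y_true == 1) & (y_pred == 1))
    tn = np.sum((y_true == 0) & (y_pred == 0))
    fp = np.sum((y_true == 0) & (y_pred == 1))
    fn = np.sum((y_true == 1) & (y_pred == 0))
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)                  # also called sensitivity
    return {
        "confusion_matrix": np.array([[tn, fp], [fn, tp]]),  # rows: actual, columns: predicted
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * recall / (precision + recall),
    }

y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
m = binary_metrics(y_true, y_pred)
print(m["confusion_matrix"])
print({k: round(float(v), 3) for k, v in m.items() if k != "confusion_matrix"})
```

The confusion matrix layout (rows = actual class, columns = predicted class) matches the convention used by scikit-learn's `confusion_matrix`.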

The act of classifying or identifying an object is useful in many contexts and is a major application of machine learning. Classification can be applied widely and to many types of data, e.g., to identify events in a time series, to determine whether a piece of writing is biased, or to label the objects in an image. Real-time classification of visual data is a major problem in machine learning and is highly relevant to autonomous vehicle systems. A self-driving car equipped with omnidirectional cameras must use machine learning to classify nearby objects with the utmost accuracy, as errors in this technology can have life-and-death consequences. There are different types of classification in machine learning. Binary classification, in which an object is assigned to one of two categories, is common and simple; for example, an email is either delivered to the inbox or labeled as spam and moved to the spam folder. Multi-class classifiers can assign an object to one of three or more classes; for example, a multi-class classifier might be used to identify the genre of a song.

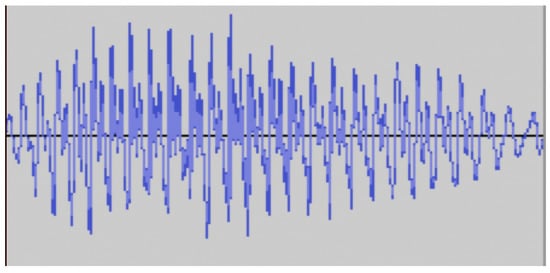

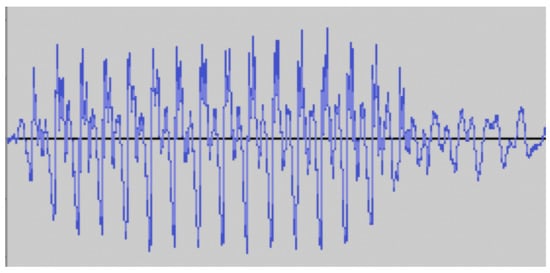

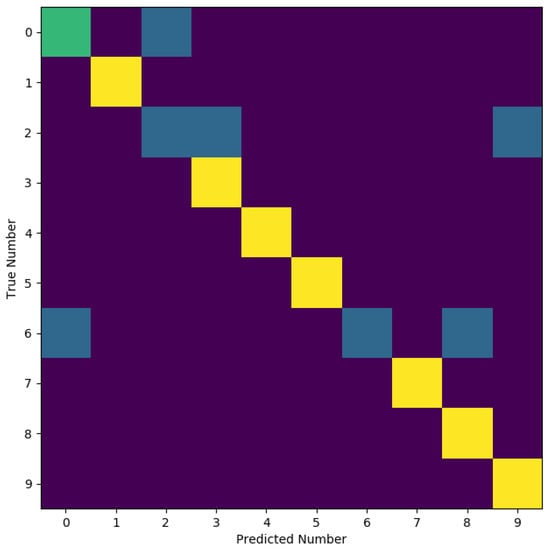

As an example of classification, the authors created a classifier that converts spoken digits into numerical digits; some results are shown below. Spoken digit recognition is a common component of speech recognition systems, such as those installed on smartphones. Whereas those systems use more advanced cloud-based artificial intelligence, this example is based on a local instance of a three-layer recurrent neural network (RNN). The network is trained in a supervised manner on the multi-class problem of classifying the spoken digits 0–9. The classifier takes the sound waveform as input and outputs the predicted digit. Examples of the input data are shown in Figure 2 and Figure 3, which show the waveforms of the spoken digits nine and zero, respectively. After 80 epochs of training, the RNN achieves moderate success (≥80% accuracy), as shown in the confusion matrix in Figure 4.

Figure 2.

Waveform of spoken digit nine.

Figure 3.

Waveform of spoken digit zero.

Figure 4.

Confusion matrix of spoken digit classifier.

4.2. Regression

The objective of regression analysis is to understand the relationship between a number of independent variables and a dependent variable, sometimes referred to as the target feature. Regression methods are used to train models that forecast future trends and events. Using labeled training data, these models learn how the input data relate to the output data. The trained models can then be used to estimate future patterns, predict outcomes for unseen data, or fill gaps in data that have already been collected.

In a regression problem, the predicted target feature is a continuous value. For example, if we know the number of rooms, the size of the house, the neighborhood, the zip code, and the number of people who live there, we can estimate the price of the house. Because the labels, or outputs, are known during training, regression problems are classified as supervised learning. Linear regression is the most basic regression technique, and the root mean square error (RMSE) is a common way to measure a model’s accuracy. Nonlinear algorithms such as decision trees, random forests, support vector regression, and neural networks can also be used to solve regression problems [24,25,26,27].
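A short sketch of RMSE, $\sqrt{\tfrac{1}{n}\sum_{i}(y_i - \hat{y}_i)^2}$, used to compare a linear and a nonlinear regressor from scikit-learn. The house-price data are synthetic and hypothetical, generated from a linear rule plus noise.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.tree import DecisionTreeRegressor
from sklearn.model_selection import train_test_split

def rmse(y_true, y_pred):
    """Root mean square error: square root of the mean squared residual, in the units of y."""
    return float(np.sqrt(np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2)))

# Hypothetical housing-style data: price depends linearly on size and rooms, plus noise
rng = np.random.default_rng(0)
size = rng.uniform(50, 250, 300)       # floor area
rooms = rng.integers(1, 7, 300)
price = 1500 * size + 10000 * rooms + rng.normal(0, 20000, 300)
X = np.column_stack([size, rooms])

X_tr, X_te, y_tr, y_te = train_test_split(X, price, test_size=0.25, random_state=0)
for model in (LinearRegression(), DecisionTreeRegressor(max_depth=5, random_state=0)):
    model.fit(X_tr, y_tr)
    print(type(model).__name__, round(rmse(y_te, model.predict(X_te))))
```

Because RMSE is expressed in the units of the target, it can be read directly as a typical prediction error (here, in price units).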

4.3. Clustering

Clustering falls under unsupervised learning, as the dataset provided to the clustering algorithm does not contain any labels. Cluster analysis groups data items based only on the information in the data that characterizes the objects and their relationships to one another. The key requirement is that items in the same cluster should be similar or related, while items in different clusters should be distinct or unrelated. Clustering is considered more effective, or more distinct, when there is greater similarity (homogeneity) within each group and greater difference between clusters. A comprehensive survey of clustering algorithms was presented by the authors of ref. [28] in 2005. They discussed the strengths and weaknesses of various clustering algorithms, including squared error-based, hierarchical, neural network-based, and density-based clustering, as well as others such as fuzzy c-means. Furthermore, they presented the key factors to consider before selecting a clustering algorithm for a particular problem. In this article, the K-Means, K-Nearest Neighbor, and DBSCAN algorithms are demonstrated in the next section using datasets from the scikit-learn library.
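To show what a clustering algorithm does internally, here is a from-scratch sketch of K-Means (Lloyd's algorithm) in NumPy; scikit-learn's `KMeans` is the production-ready equivalent. The two Gaussian blobs are synthetic, and random initial centroids are an assumption (scikit-learn defaults to k-means++ initialisation).

```python
import numpy as np

def kmeans(X, k, n_iter=100, seed=0):
    """Lloyd's algorithm: alternate nearest-centroid assignment and centroid update."""
    rng = np.random.default_rng(seed)
    centroids = X[rng.choice(len(X), size=k, replace=False)]  # random initial centroids
    for _ in range(n_iter):
        # Assign every point to its closest centroid (squared Euclidean distance)
        dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Move each centroid to the mean of its points (keep it unchanged if its cluster is empty)
        new_centroids = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                                  else centroids[j] for j in range(k)])
        if np.allclose(new_centroids, centroids):   # stop once the centroids no longer move
            break
        centroids = new_centroids
    return labels, centroids

rng = np.random.default_rng(1)
X = np.vstack([rng.normal([0, 0], 0.3, size=(50, 2)),
               rng.normal([5, 5], 0.3, size=(50, 2))])
labels, centroids = kmeans(X, k=2)
print(centroids)
```

Each iteration makes items within a cluster more homogeneous, which is exactly the criterion for effective clustering described above.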

4.4. Image Classification and Segmentation

Image classification labels an image as belonging to one of a set of categories; more detailed information can be extracted through image segmentation, which identifies specific objects in the image. Deep convolutional neural networks are the state of the art for high-performance multi-class detection within an image. Their neurons use local receptive fields, each taking as input only a limited region of the image. In segmentation networks, these neurons are typically arranged in layers that first perform down-convolutions and then up-convolutions, reducing and then expanding the image data. This architecture allows the network to abstract and learn shapes and boundaries. To meet the real-time processing requirements of image classification in self-driving vehicles and other real-time applications, heterogeneous and parallel computing can be leveraged. This approach uses the graphics processing unit (GPU), which runs many more processing cores simultaneously than a standard central processing unit (CPU), to compute the classifications of objects in the image [29].
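The local receptive field can be illustrated with a single 2-D convolution in NumPy: each output value is computed only from the small image patch under the kernel. The toy image and the hand-written edge-detecting kernel are assumptions; in a CNN, the kernel weights are learned during training.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1). Each output value depends only on
    the patch under the kernel, i.e., that neuron's local receptive field.
    Like deep learning libraries, this computes cross-correlation (the kernel is not flipped)."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]   # local receptive field of output (i, j)
            out[i, j] = np.sum(patch * kernel)
    return out

# 5x5 image: dark left part, bright right part (a vertical edge)
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)
# Hand-written vertical-edge detector; a CNN would learn such weights itself
kernel = np.array([[-1, 0, 1]] * 3, dtype=float)
print(conv2d_valid(image, kernel))
```

Outputs whose receptive field covers the edge respond strongly, while the output over the uniform bright region is zero.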

4.5. Object Detection

Object detection can be a sensor-initiated process in which sensors capture data about an object, and these data are then processed by a machine learning model to identify it. The ability to correctly detect and categorize the object depends on the amount of data captured about it; a partial capture reduces the ability to detect the object successfully. Our minds are constantly detecting objects and even identifying objects based on only a partial view of the object. For example, by seeing the front of a car, we can know the whole car is also there, or by feeling a keyboard, we may know where the ‘f’ and ‘j’ keys are and, from there, how to type. Furthermore, those skilled at cooking can detect ingredients by smell and taste. Similarly, given partial data from sensors, a machine learning process that is sufficiently complex and well trained can successfully detect an object. This has huge implications in technology, from fully self-driving vehicles to robotics and to time-series event analysis and classification, such as real-time network analysis for identifying bad actors on the network.

4.6. Association Rule Learning

Association rule learning draws on patterns in a dataset to extract a set of rules that generally hold in that dataset. This is a highly useful technique for mining datasets and for using the rules to draw inferences about outcomes from new data. These inferences can improve decision-making when planning for events ahead of time. The classic example is grocery purchases: a typical extracted rule is that if a customer buys milk, the same customer is likely to buy bread. Modeling inventory in this way can help stores keep items stocked appropriately for the demand they expect from consumers. An association rule might be strong but not hold for 100% of the dataset, yet still be useful to know. For this reason, rules are extracted based on an interestingness metric, and strong rules are selected or rejected by comparing that metric against a threshold.
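The grocery example can be sketched in plain Python by computing support, confidence, and lift for every pair of items. The transactions and thresholds are hypothetical, lift is used as one example of an interestingness metric, and exhaustive pair enumeration is a simplification of algorithms such as Apriori.

```python
from itertools import combinations

# Hypothetical market-basket transactions
transactions = [
    {"milk", "bread", "eggs"},
    {"milk", "bread"},
    {"milk", "butter"},
    {"bread", "butter"},
    {"milk", "bread", "butter"},
]

def support(itemset):
    """Fraction of transactions that contain every item in `itemset`."""
    return sum(itemset <= t for t in transactions) / len(transactions)

def rules(min_support=0.4, min_confidence=0.6):
    items = sorted(set().union(*transactions))
    found = []
    for a, b in combinations(items, 2):
        for lhs, rhs in (({a}, {b}), ({b}, {a})):
            s = support(lhs | rhs)
            if s < min_support:                 # rule must be backed by enough transactions
                continue
            confidence = s / support(lhs)       # estimate of P(rhs | lhs)
            lift = confidence / support(rhs)    # > 1 means a positive association
            if confidence >= min_confidence:
                found.append((lhs, rhs, s, confidence, lift))
    return found

for lhs, rhs, s, c, l in rules():
    print(f"{lhs} -> {rhs}: support={s:.2f}, confidence={c:.2f}, lift={l:.2f}")
```

In this toy data, `{'bread'} -> {'milk'}` has a confidence of 0.75 but a lift slightly below 1, because milk appears in most baskets anyway; this is why metrics beyond raw confidence are used to accept or reject rules.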

4.7. Ranking

Ranking creates a hierarchy of importance for a set of retrieved information. ML ranking applications can be built with semi-supervised, supervised, or reinforcement learning. Ranking approaches are distinguished by type: pointwise, pairwise, or listwise. Key to all of them is the application of a metric to score the retrieved items; the mean average precision (MAP) is commonly used.

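There are numerous models for ranking, including artificial neural networks (ANNs), SVMs, extreme gradient boosting (XGBoost), and other custom or hybrid models. In the pointwise approach, ranking is handled as a regression problem. In the pairwise approach, pairs of retrieved documents are compared in a binary classification problem. In the listwise approach, the loss is computed on the predicted ranks of a whole list of documents. In pairwise retrieval, the binary cross entropy (BCE) is calculated for the retrieved document pairs using $y_{ij} \in \{0, 1\}$, a binary variable of document preference ($y_{ij} = 1$ if document $i$ is preferred over document $j$, and $0$ otherwise), and the logistic function $\sigma$, applied to the difference between the model's scores $s_i$ and $s_j$ for the two documents:

$$\mathrm{BCE} = -\sum_{(i,j)} \Big[ y_{ij} \log \sigma(s_i - s_j) + (1 - y_{ij}) \log\big(1 - \sigma(s_i - s_j)\big) \Big]$$

In listwise ranking, the discounted cumulative gain (DCG) or one of its variants is used; it grades the relevance of a set of documents, discounting the gain of each document according to its position in the list. For the top $p$ positions, the DCG is defined as:

$$\mathrm{DCG}_p = \sum_{i=1}^{p} \frac{rel_i}{\log_2(i + 1)}$$

where $rel_i$ is the graded relevance of the document at position $i$.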
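A sketch of the two ranking losses in NumPy, using the common DCG form $\mathrm{DCG}_p = \sum_i rel_i / \log_2(i+1)$ and a logistic pairwise loss on the difference of two model scores; the relevance grades and scores are made up. NDCG, which divides by the DCG of the ideal ordering, is added as a frequently used normalised variant.

```python
import numpy as np

def dcg(relevances):
    """DCG_p = sum_i rel_i / log2(i + 1), with positions i starting at 1."""
    rel = np.asarray(relevances, dtype=float)
    positions = np.arange(1, len(rel) + 1)
    return float(np.sum(rel / np.log2(positions + 1)))

def ndcg(relevances):
    """DCG normalised by the DCG of the ideal (relevance-sorted) ordering."""
    ideal = dcg(sorted(relevances, reverse=True))
    return dcg(relevances) / ideal if ideal > 0 else 0.0

def pairwise_bce(s_i, s_j, y_ij):
    """Loss for one document pair: y_ij = 1 if document i should rank above document j."""
    p = 1.0 / (1.0 + np.exp(-(s_i - s_j)))   # logistic function of the score difference
    return float(-(y_ij * np.log(p) + (1 - y_ij) * np.log(1 - p)))

# Graded relevance of documents in the order a model returned them
print(dcg([3, 2, 0, 1]), ndcg([3, 2, 0, 1]))
# Same preferred pair, scored in the correct vs. the wrong order
print(pairwise_bce(2.0, 0.5, 1), pairwise_bce(0.5, 2.0, 1))
```

Swapping the last two documents would give the ideal ordering and an NDCG of exactly 1, while the pairwise loss grows when the model scores the preferred document lower.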

Ranking is widely used in the modern world, and ranking systems for online services are often trained using user clicks as feedback on how well the retrieved items were ranked. Some applications of ML-based ranking include:

- Web search results ranking

- Writing sentiment analysis

- Travel agency booking availability based on search criteria

4.8. Optimization Problems

Machine learning approaches that automate the solution of optimization problems search the solution space for an optimal solution, and evolutionary algorithms (EAs) are commonly used for this purpose. Examples include genetic algorithms and particle swarm optimization. A genetic algorithm (GA) models every candidate solution as an individual in a population. A fitness function evaluates each individual, and individuals are chosen, according to their fitness scores, to pass on their genetics when the next population is created. This heuristic brings the theory of natural evolution into a computer program that automatically finds solutions to optimization problems. Particle swarm optimization (PSO) is a population-based algorithm similar to the GA; however, PSO defines particles instead of individuals and assigns each particle a velocity. One hard requirement of these algorithms is a fitness function that can evaluate a potential solution. Other optimization algorithms include the Vortex Search Algorithm, Simulated Annealing, Pattern Search, and Artificial Bee Colony, which are discussed later in this article.
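A compact genetic algorithm sketch in NumPy. The fitness function (the negative sphere function, maximised at the origin), tournament selection, uniform crossover, Gaussian mutation, and elitism are all illustrative assumptions; they form one common GA configuration, not the only one.

```python
import numpy as np

def fitness(x):
    """Hypothetical fitness to maximise: negative sphere function, optimum 0 at x = 0."""
    return -np.sum(x ** 2, axis=-1)

def genetic_algorithm(dim=3, pop_size=40, generations=100, mutation_scale=0.1, seed=0):
    rng = np.random.default_rng(seed)
    pop = rng.uniform(-5, 5, size=(pop_size, dim))   # each row is one individual
    for _ in range(generations):
        scores = fitness(pop)
        # Tournament selection: the fitter of two random individuals becomes a parent
        a, b = rng.integers(pop_size, size=(2, pop_size))
        parents = np.where((scores[a] > scores[b])[:, None], pop[a], pop[b])
        # Uniform crossover: each gene comes from the parent or its neighbour with p = 0.5
        mates = np.roll(parents, 1, axis=0)
        mask = rng.random((pop_size, dim)) < 0.5
        children = np.where(mask, parents, mates)
        # Gaussian mutation
        children += rng.normal(0, mutation_scale, size=children.shape)
        # Elitism: carry the best individual of this generation over unchanged
        children[0] = pop[np.argmax(scores)]
        pop = children
    best = pop[np.argmax(fitness(pop))]
    return best, fitness(best)

best, best_fitness = genetic_algorithm()
print(best, best_fitness)
```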

5. Machine Learning Model Designs

In this section, the ML model designs are presented with their mathematical formulations. Many models have variants; not all of them are covered in this paper, but the base models are shown along with some of their variants.

5.1. Regression and Classification Problems

Both regression and classification problems are predictive models that involve the learning of a mapping function from the inputs to an output approximation. However, classification problems predict a discrete class label, whereas regression problems predict a continuous quantity.

5.1.1. Linear Regression

Linear regression is a simple and widely used type of predictive analysis. In its simplest form, a single independent variable is used, and the model assumes a linear relationship between it and the dependent variable, also known as the target variable:

$$y = mx + c$$

where $x$ is the independent variable, $y$ is the target variable, $m$ is the slope of the line, and $c$ is the $y$-intercept.

The most significant benefits of linear regression are that it is very fast to train and very simple to implement. It has been observed in the literature that, in some cases, linear regression performs as well as more complex models such as decision trees or random forests, or only slightly worse, while requiring significantly less training time. Linear regression is therefore often used as a baseline against which the performance of other machine learning models is compared. The least squared error (LSE) loss function used in linear regression is shown in Equation (6):

$$L_i = (y_i - \hat{y}_i)^2 \qquad (6)$$

where $\hat{y}_i$ is the predicted value for data point $i$ and $y_i$ is the actual value. The cost function is the sum of the LSE over all data points in the dataset and is given by Equation (7), where $n$ is the number of data points:

$$J = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 \qquad (7)$$
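The slope and intercept that minimise the cost in Equation (7) have a closed form. This NumPy sketch fits them to synthetic data generated from $y = 2x + 1$ plus noise (a hypothetical example) and evaluates the cost.

```python
import numpy as np

def fit_line(x, y):
    """Closed-form least-squares estimates of slope m and intercept c."""
    x, y = np.asarray(x, float), np.asarray(y, float)
    m = np.sum((x - x.mean()) * (y - y.mean())) / np.sum((x - x.mean()) ** 2)
    c = y.mean() - m * x.mean()
    return m, c

def cost(x, y, m, c):
    """Sum of squared errors over all n data points, as in Equation (7)."""
    y_hat = m * np.asarray(x) + c
    return float(np.sum((np.asarray(y) - y_hat) ** 2))

rng = np.random.default_rng(0)
x = rng.uniform(0, 10, 100)
y = 2.0 * x + 1.0 + rng.normal(0, 0.5, 100)   # true slope 2, true intercept 1
m, c = fit_line(x, y)
print(f"m = {m:.2f}, c = {c:.2f}, cost = {cost(x, y, m, c):.1f}")
```

Because these estimates minimise the cost, no other line (including the true one used to generate the data) achieves a lower sum of squared errors on this sample.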

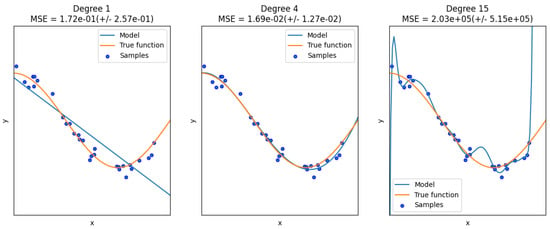

Polynomial regression is a more complex variant of linear regression; it remains linear in its coefficients and can be represented as shown in Equation (8):

$$y = c + m_1 x + m_2 x^2 + \dots + m_k x^k \qquad (8)$$

where $k$ is the degree of the polynomial. However, models with higher-degree polynomials tend to overfit. To combat overfitting, regularized techniques such as lasso and ridge regression are used.
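The overfitting problem and the effect of regularisation can be sketched with scikit-learn: a degree-12 polynomial is fitted to 20 noisy points with and without a ridge (L2) penalty. The data, the degree, and `alpha=1.0` are assumptions; `Lasso` could be swapped in for an L1 penalty.

```python
import numpy as np
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler
from sklearn.linear_model import LinearRegression, Ridge

rng = np.random.default_rng(0)
x = np.sort(rng.uniform(0, 1, 20)).reshape(-1, 1)
y = np.sin(2 * np.pi * x).ravel() + rng.normal(0, 0.1, 20)

degree = 12   # deliberately high degree, prone to overfitting 20 points
plain = make_pipeline(PolynomialFeatures(degree), StandardScaler(), LinearRegression())
ridge = make_pipeline(PolynomialFeatures(degree), StandardScaler(), Ridge(alpha=1.0))
plain.fit(x, y)
ridge.fit(x, y)

# The L2 penalty shrinks the coefficients, which tames the oscillations of the fitted curve
plain_norm = np.linalg.norm(plain[-1].coef_)
ridge_norm = np.linalg.norm(ridge[-1].coef_)
print(f"coefficient norm without penalty: {plain_norm:.1f}, with ridge: {ridge_norm:.1f}")
```

Larger `alpha` values shrink the coefficients further; in practice `alpha` is chosen by cross-validation.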

5.1.2. Logistic Regression

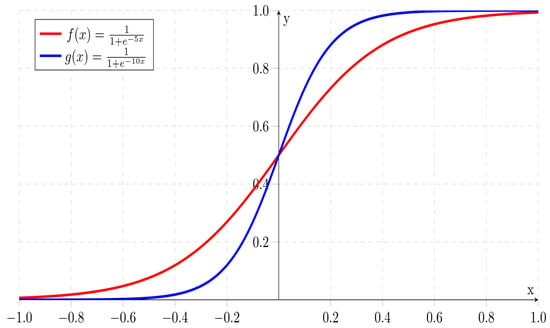

Just as linear regression is the simplest model for regression, logistic regression is one of the simplest machine learning models for classification. Logistic regression is a supervised learning method and requires labels to be available during training. The logistic regression model computes a weighted sum of the input features (plus a bias term) and then outputs the logistic of this result rather than the result itself [30]. Its most common application is predicting a binary outcome. When a condition can have more than two outcomes, multinomial logistic regression can be used to predict the output class [31]. The logistic function is given in Equation (9):

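The output can be defined as $\hat{p} = \sigma(\mathbf{w}^{\top}\mathbf{x} + b)$, where $\mathbf{w}$ is the weight vector, $\mathbf{x}$ is the input vector, and $b$ is the bias; $\hat{p}$ is the estimated probability that the input belongs to the positive class, and the predicted label is $\hat{y} = 1$ if $\hat{p} \geq 0.5$ and $\hat{y} = 0$ otherwise.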
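A minimal, dependency-free sketch of binary logistic regression is shown below. It assumes batch gradient descent on the average log loss (cross-entropy), which is one common way to fit the model; the learning rate, epoch count, function names, and toy data are illustrative choices, not values from the literature cited above.

```python
import math


def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w, b, x):
    """Estimated probability of the positive class: sigmoid(w . x + b)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def predict(w, b, x, threshold=0.5):
    return 1 if predict_proba(w, b, x) >= threshold else 0


def train_logistic_regression(X, y, lr=0.5, epochs=5000):
    """Batch gradient descent on the average log loss."""
    w, b = [0.0] * len(X[0]), 0.0
    n = len(X)
    for _ in range(epochs):
        errors = [predict_proba(w, b, xi) - yi for xi, yi in zip(X, y)]
        # Gradient of the average log loss: mean((p - y) * x) and mean(p - y).
        w = [wj - lr * sum(e * xi[j] for e, xi in zip(errors, X)) / n
             for j, wj in enumerate(w)]
        b -= lr * sum(errors) / n
    return w, b


X = [[0.5], [1.0], [1.5], [3.0], [3.5], [4.0]]
y = [0, 0, 0, 1, 1, 1]
w, b = train_logistic_regression(X, y)
print([predict(w, b, xi) for xi in X])
```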

5.1.3. Decision Tree

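To evaluate candidate splits when growing a classification tree, the Gini index and entropy are the two most commonly used impurity measures. For a node in which a fraction $p_k$ of the samples belongs to class $k$ (out of $C$ classes), they are defined as

$$\mathrm{Gini} = 1 - \sum_{k=1}^{C} p_k^2, \qquad \mathrm{Entropy} = -\sum_{k=1}^{C} p_k \log_2 p_k$$

Both are zero for a pure node, and the split that most reduces the weighted impurity of the child nodes is preferred.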
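The two impurity measures above can be computed as in the following sketch. The helper `split_impurity`, which weights each child node's impurity by its share of the samples, is an illustrative name rather than part of any specific library.

```python
import math
from collections import Counter


def gini(labels):
    """Gini index 1 - sum_k p_k^2 of the labels at a node."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def entropy(labels):
    """Entropy -sum_k p_k log2 p_k of the labels at a node."""
    n = len(labels)
    return -sum((count / n) * math.log2(count / n) for count in Counter(labels).values())


def split_impurity(left, right, measure=gini):
    """Impurity of a candidate split: child impurities weighted by child size."""
    n = len(left) + len(right)
    return len(left) / n * measure(left) + len(right) / n * measure(right)
```

For example, splitting `["a", "a", "b", "b"]` into `["a", "a"]` and `["b", "b"]` yields a weighted impurity of zero, whereas a split into two mixed children leaves the impurity unchanged, so the tree would prefer the former.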

Decision trees are also used for regression problems. A regression tree is built by a process called binary recursive partitioning, an iterative process that splits the data into partitions (branches) and then splits each partition into smaller groups as the method moves down each branch. Initially, all the records in the training set are placed in the same partition. The algorithm then tries every possible binary split on every column and allocates the data to the first two partitions, choosing the split that minimizes the total sum of squared residuals from the mean in the two resulting subgroups. The process is then repeated within each new partition. Because the tree is grown from the training set, a fully grown tree typically overfits the training data. To reduce the effects of overfitting, the tree is therefore pruned using a validation set.
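A minimal sketch of one step of binary recursive partitioning is given below, assuming numeric features and splits of the form `row[col] <= threshold`. It tries every candidate split on every column and keeps the one with the smallest total sum of squared residuals from the subgroup means; recursion into the partitions, stopping rules, and validation-set pruning are omitted, and the function names are illustrative.

```python
def sum_squared_residuals(values):
    """Sum of squared deviations from the mean of `values` (0 for an empty list)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_binary_split(rows, targets):
    """Return (total SSR, column index, threshold) of the best split 'row[col] <= threshold'."""
    best = None
    for col in range(len(rows[0])):
        for threshold in sorted({row[col] for row in rows}):
            left = [t for row, t in zip(rows, targets) if row[col] <= threshold]
            right = [t for row, t in zip(rows, targets) if row[col] > threshold]
            if not left or not right:
                continue  # a split must produce two non-empty partitions
            score = sum_squared_residuals(left) + sum_squared_residuals(right)
            if best is None or score < best[0]:
                best = (score, col, threshold)
    return best


rows = [[1, 7], [2, 3], [3, 9], [10, 1], [11, 8], [12, 2]]
targets = [5.0, 5.0, 5.0, 20.0, 20.0, 20.0]
print(best_binary_split(rows, targets))
```

Here the first column separates the two target levels perfectly, so the split `row[0] <= 3` has zero residual error, while the noisy second column offers no comparable split.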

5.1.4. Random Forest

Random forest is an ensemble of decision trees. Like a decision tree, it falls under supervised machine learning. The main idea of random forest is to build many decision trees on different samples of the data and combine their outputs, using the majority vote of the trees for classification and the average of their predictions for regression. The mean importance of each feature is calculated over all the trees in the random forest, as shown in Equation (13).

$$\overline{FI}_i = \frac{1}{n}\sum_{t=1}^{n} FI_{i,t} \tag{13}$$

where $\overline{FI}_i$ denotes the mean importance of feature $i$ over all the trees, $FI_{i,t}$ denotes the importance of feature $i$ in tree $t$, and $n$ is the number of trees in the forest.

There is no fixed rule for finding the optimal number of trees in a random forest. However, [32] suggests starting with ten times the number of features, increasing the number of trees in each iteration, and comparing the accuracy.
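The aggregation steps described above can be sketched as follows, assuming the individual trees have already been trained (tree training itself is omitted). The functions show bootstrap sampling, majority voting for classification, averaging for regression, and the mean feature importance of Equation (13); all names and example values are hypothetical.

```python
import random
from collections import Counter


def bootstrap_sample(rows, rng):
    """Draw len(rows) rows with replacement; each tree is trained on its own sample."""
    return [rng.choice(rows) for _ in rows]


def forest_classify(tree_predictions):
    """Majority vote over the class predicted by each tree."""
    return Counter(tree_predictions).most_common(1)[0][0]


def forest_regress(tree_predictions):
    """Average of the values predicted by each tree."""
    return sum(tree_predictions) / len(tree_predictions)


def mean_feature_importance(importances_per_tree):
    """Equation (13): importance of each feature averaged over the n trees."""
    n = len(importances_per_tree)
    n_features = len(importances_per_tree[0])
    return [sum(tree[i] for tree in importances_per_tree) / n for i in range(n_features)]


rng = random.Random(42)
sample = bootstrap_sample(list(range(10)), rng)
label = forest_classify(["cat", "dog", "cat"])
value = forest_regress([1.0, 2.0, 3.0])
importance = mean_feature_importance([[0.2, 0.8], [0.4, 0.6]])
```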

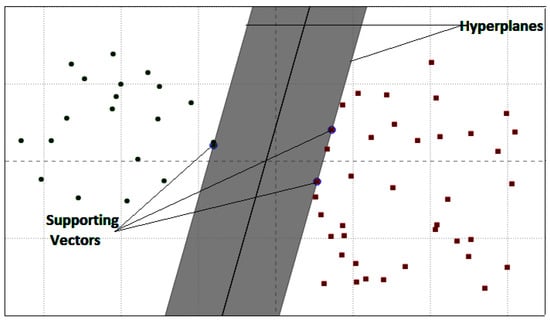

5.1.5. Support Vector Machine

Support Vector Machines (SVMs) are machine learning models characterized by their robustness and adaptability. They can perform linear or nonlinear classification and regression, and can even identify outliers with decent accuracy.

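The core idea of SVM is to place each feature vector in a high-dimensional space and find a separating high-dimensional surface [33], known as a hyperplane. The hyperplane is expressed as in Equation (14):

$$\mathbf{w}^{\top}\mathbf{x} + b = \sum_{i=1}^{n} w_i x_i + b = 0 \tag{14}$$

where $\mathbf{w}$ is a real-valued weight vector, $\mathbf{x}$ is the input feature vector, $b$ is a real number, and $n$ is the number of dimensions of the feature vector. For example, for binary classification with labels −1 and 1, the predicted label can be represented as

$$\hat{y} = \begin{cases} 1, & \mathbf{w}^{\top}\mathbf{x} + b \geq 0 \\ -1, & \mathbf{w}^{\top}\mathbf{x} + b < 0 \end{cases}$$

Therefore, the primary objective is to find the optimal values of $\mathbf{w}$ and $b$. For the training points $(\mathbf{x}_j, y_j)$, this decision rule can be translated into the constraints

$$\begin{cases} \mathbf{w}^{\top}\mathbf{x}_j + b \geq 1, & y_j = 1 \\ \mathbf{w}^{\top}\mathbf{x}_j + b \leq -1, & y_j = -1 \end{cases} \quad\Longleftrightarrow\quad y_j\left(\mathbf{w}^{\top}\mathbf{x}_j + b\right) \geq 1$$

where $\mathbf{w}^{\top}\mathbf{x} + b = 1$ is the boundary hyperplane for the output 1 and $\mathbf{w}^{\top}\mathbf{x} + b = -1$ is the boundary hyperplane for the output −1. The distance between these two parallel hyperplanes is known as the margin, and the main idea is to maximize it. The distance between the two hyperplanes is $\frac{2}{\lVert\mathbf{w}\rVert}$; therefore, to maximize it, $\lVert\mathbf{w}\rVert$ has to be minimized subject to the constraints above. The training vectors that lie on these boundary hyperplanes, and thus determine the separating hyperplane, are known as support vectors.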
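The following sketch checks the quantities defined above for a tiny two-dimensional dataset: the decision value $\mathbf{w}^{\top}\mathbf{x} + b$, the predicted label, the margin constraints $y_j(\mathbf{w}^{\top}\mathbf{x}_j + b) \geq 1$, and the margin width $2/\lVert\mathbf{w}\rVert$. The parameters are hand-picked for illustration and are not necessarily the maximum-margin solution; actually training an SVM (solving the constrained optimization) is omitted, and the function names are hypothetical.

```python
import math


def decision_value(w, x, b):
    """w^T x + b, the left-hand side of Equation (14)."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict_label(w, x, b):
    """Predicted label in {-1, 1}."""
    return 1 if decision_value(w, x, b) >= 0 else -1


def satisfies_margin_constraints(w, b, X, y):
    """Check y_j (w^T x_j + b) >= 1 for every training point."""
    return all(yj * decision_value(w, xj, b) >= 1 for xj, yj in zip(X, y))


def margin_width(w):
    """Distance 2 / ||w|| between the hyperplanes w^T x + b = 1 and w^T x + b = -1."""
    return 2.0 / math.sqrt(sum(wi * wi for wi in w))


# Hand-picked (not trained) parameters for a tiny separable dataset.
w, b = [1.0, 1.0], -3.0
X = [[1.0, 1.0], [0.0, 1.0], [3.0, 2.0], [2.0, 3.0]]
y = [-1, -1, 1, 1]
```

Halving $\mathbf{w}$ and $b$ describes the same separating hyperplane but violates the constraints, which is why the margin is measured with the scaling fixed by $y_j(\mathbf{w}^{\top}\mathbf{x}_j + b) \geq 1$.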

However, data are rarely linearly separable in practice, so SVMs use kernels to classify non-linearly separable data. There are three main types of kernels:

1. Linear Kernel
2. Polynomial Kernel
3. Radial Basis Function Kernel

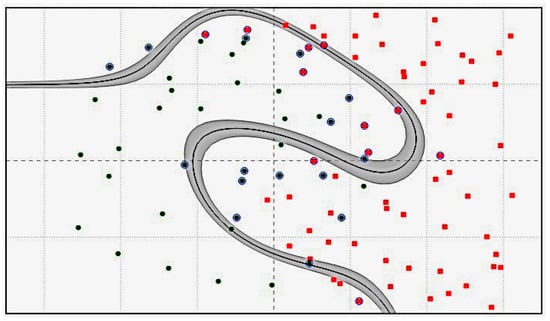

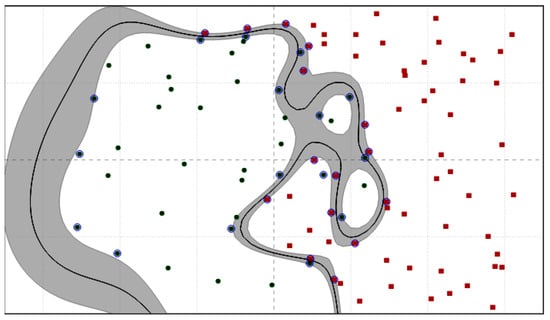

The linear kernel works well for linearly separable data, as shown in Figure 5, whereas the polynomial kernel represents kernels of degree greater than one and is represented as $K(\mathbf{x}_i, \mathbf{x}_j) = \left(\mathbf{x}_i^{\top}\mathbf{x}_j + 1\right)^k$, where $k$ denotes the degree of the polynomial. Radial basis function (RBF) kernels are among the most commonly used kernels in SVM problems. They do not require any prior information about the data and perform well for both linearly and non-linearly separable data. The RBF kernel is represented as $K(\mathbf{x}_i, \mathbf{x}_j) = \exp\left(-\gamma \lVert \mathbf{x}_i - \mathbf{x}_j \rVert^2\right)$, where $\lVert \mathbf{x}_i - \mathbf{x}_j \rVert$ is the Euclidean distance between the vectors and $\gamma$ is a hyperparameter equivalent to $\frac{1}{2\sigma^2}$, where $\sigma$ is a free parameter. The parameter $\gamma$ controls the shape of the decision region. When $\gamma$ is small, the decision boundary curves only gently, which results in broad decision regions. When $\gamma$ is large, each training point influences only a small neighborhood, so the boundary is highly curved and forms tight decision regions around individual data points, as shown in Figure 6 and Figure 7.
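The three kernels can be written directly from their formulas, as in the sketch below. The offset `c` in the polynomial kernel is an assumed generalization of the constant 1 in the formula above, and the default values of `degree` and `gamma` are arbitrary choices for illustration.

```python
import math


def linear_kernel(x, z):
    """Dot product x . z."""
    return sum(a * b for a, b in zip(x, z))


def polynomial_kernel(x, z, degree=3, c=1.0):
    """(x . z + c)^degree; c = 1 matches the form given above."""
    return (linear_kernel(x, z) + c) ** degree


def rbf_kernel(x, z, gamma=0.1):
    """exp(-gamma * ||x - z||^2), with gamma = 1 / (2 * sigma^2)."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * squared_distance)
```

A larger `gamma` makes the RBF similarity decay faster with distance, so each training point affects only nearby inputs, which produces the tighter decision regions described above.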

Figure 5.

The depiction of the SVM with the linear kernel, supporting vectors, and hyperplanes.

Figure 6.

SVM with RBF ($\gamma$ = 0.1).

Figure 7.

SVM with RBF ($\gamma$ = 1.0).

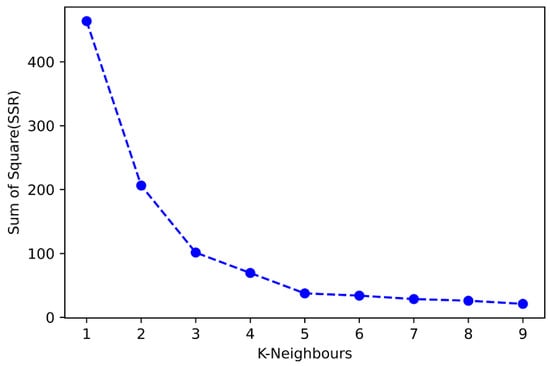

5.1.6. K-Nearest Neighbour

The k-nearest neighbors (KNN) algorithm has gained much popularity because it is simple and easy to implement. It belongs to supervised machine learning because the target variable is present in the dataset, and it is used for both classification and regression problems. The k in KNN is the number of neighbors analyzed to predict the class or estimate the output value. In a classification problem, KNN assigns the majority category of the neighbors, whereas in a regression problem, it takes the mean of the neighbors' target values. To make a prediction, KNN first finds the neighbors by calculating the distance between the vector to be predicted and all the other vectors whose labels are known.

The distance can be calculated with any of the following distance metrics: Euclidean distance, Manhattan distance, Minkowski distance, or Hamming distance. The K neighbors are selected based on their closeness to the vector to be predicted. For binary classification, it is recommended to choose K as an odd integer to avoid ties between the classes. The main challenge with KNN is finding the optimal number of neighbors, for which the elbow method is commonly used: it uses the sum of squared residuals (SSR) to search for the best value of K, as shown in Figure 8.
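A minimal sketch of KNN for both classification and regression is shown below, with Euclidean and Manhattan distances as interchangeable metrics. The function names and toy data are illustrative; a practical implementation would typically use a spatial index rather than sorting all training points for every query.

```python
import math
from collections import Counter


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan(a, b):
    return sum(abs(x - y) for x, y in zip(a, b))


def nearest_indices(train_X, query, k, distance):
    """Indices of the k training vectors closest to `query`."""
    return sorted(range(len(train_X)), key=lambda i: distance(train_X[i], query))[:k]


def knn_classify(train_X, train_y, query, k=3, distance=euclidean):
    """Majority class among the k nearest neighbors."""
    idx = nearest_indices(train_X, query, k, distance)
    return Counter(train_y[i] for i in idx).most_common(1)[0][0]


def knn_regress(train_X, train_y, query, k=3, distance=euclidean):
    """Mean target value of the k nearest neighbors."""
    idx = nearest_indices(train_X, query, k, distance)
    return sum(train_y[i] for i in idx) / k


X = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
labels = ["a", "a", "a", "b", "b", "b"]
values = [1.0, 1.0, 1.0, 10.0, 10.0, 10.0]
```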

Figure 8.

Elbow method.

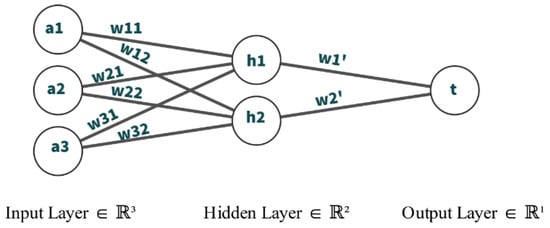

5.1.7. Neural Networks

Neural networks can be trained for classification using supervised or unsupervised learning, and specialized neural network models are the leading approach for the visual classification of objects. Every neural network contains at least three layers: (1) an input layer, (2) a hidden layer, and (3) an output layer. The flexibility of these networks makes them well suited to all types of classification problems, from binary to multi-class, and to all types of data. Some of the best computer vision models are U-Nets, so called because of the U-shaped structure of their architecture. Another type of network is the recurrent neural network. One popular form of this network uses long short-term memory (LSTM) cells in its hidden layers. These cells are able to capture, or learn, relations between events that are separated by longer time intervals, which makes them well suited to time-series classification. Neural networks can also be applied to many regression problems, often with higher accuracy than linear regression, decision trees, random forests, etc. In this article, we explain the following main types of neural networks.

Feed Forward: This is the simplest form of neural network. The most basic feed-forward neural network contains one input layer, one hidden layer, and one output layer. Data flow in only one direction, from the input layer to the output layer, although they may pass through several hidden layers. The output of a neuron is represented by Equation (18)

$$z = \sum_{i=1}^{n} w_i a_i + b \tag{18}$$

where $z$ is the output of the neuron, $n$ is the number of neurons in the previous layer, $\mathbf{a}$ is the input vector, $\mathbf{w}$ is the weight vector, and $b$ is the bias.

Figure 9 illustrates the workings of a feed-forward neural network.

The output of each hidden neuron is the dot product of its weight vector and the input (plus the bias). Similarly, the output, t, is the dot product of the hidden-neuron outputs and their corresponding weights.
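The computation described above can be sketched as follows for a network with one hidden layer and a single output $t$. Following the description, no activation function is applied here (activations are discussed with the MLP below); the weights, biases, and function names are arbitrary illustrative values.

```python
def neuron_output(a, w, b):
    """Equation (18): z = sum_i w_i * a_i + b (no activation function applied)."""
    return sum(wi * ai for wi, ai in zip(w, a)) + b


def feed_forward(x, hidden_weights, hidden_biases, out_weights, out_bias):
    """Input -> one hidden layer -> single output t; data flow in one direction only."""
    hidden = [neuron_output(x, w, b) for w, b in zip(hidden_weights, hidden_biases)]
    return neuron_output(hidden, out_weights, out_bias)


t = feed_forward(
    x=[1.0, 2.0],
    hidden_weights=[[0.5, -1.0], [1.0, 1.0]],
    hidden_biases=[0.0, 0.5],
    out_weights=[2.0, 1.0],
    out_bias=-1.0,
)
```

Here the hidden neurons output −1.5 and 3.5, so $t = 2(-1.5) + 1(3.5) - 1 = -0.5$.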

Figure 9.

Basic Neural Network Structure.


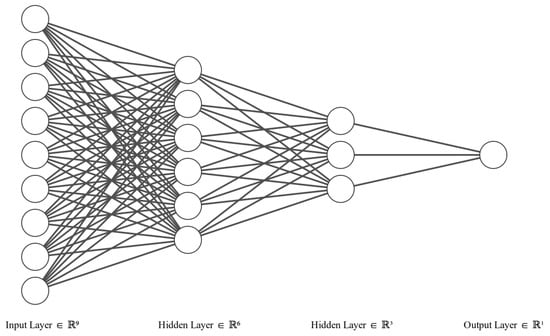

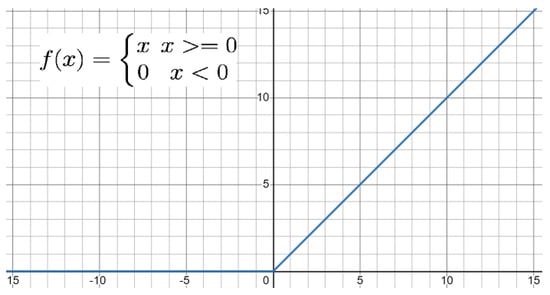

- Multi Layer Perceptron (MLP)/Deep Neural Network: Multi layer perceptrons are similar to feed-forward neural networks, but they have more than one hidden layer. They are typically trained with the backpropagation algorithm, in which the output of the output layer is compared with the actual value and the weights are then adjusted according to the residual. This step is repeated until the output no longer improves. Figure 10 shows a typical example of a deep neural network. Real-world data are rarely linear, so non-linear activation functions are required to handle non-linear data. Usually, two activation functions are used in an MLP model: one for the hidden layers, which is generally (but not necessarily) the same for all of them, and one for the output layer. The most commonly used activation functions are (1) the linear activation function, (2) the sigmoid, and (3) the ReLU. The output of these activation functions decides whether the neuron fires. For example, with one of the most common activation functions, the ReLU, $f(z) = \max(0, z)$, denoted by Equation (11), the neuron is activated, or fired, only when its output is greater than zero. Figure 11 shows the graphical representation of the ReLU activation function, and Figure 12 shows the sigmoid activation function.

Figure 10. Neural Network Structure With Two Hidden Layers.

Figure 11. ReLU Activation Function.

Figure 12. Sigmoid Activation Function.
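The sketch below shows the three activation functions named above and a forward pass through an MLP with two hidden layers, using ReLU in the hidden layers and a sigmoid at the output. The weights are hand-picked for illustration; training them with backpropagation is omitted here and is usually delegated to a deep learning framework.

```python
import math


def linear(z):
    return z


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    return max(0.0, z)


def dense(a, weights, biases, activation):
    """One fully connected layer followed by an element-wise activation."""
    return [activation(sum(w * x for w, x in zip(row, a)) + b)
            for row, b in zip(weights, biases)]


def mlp_forward(x, hidden_layers, output_layer,
                hidden_activation=relu, output_activation=sigmoid):
    """Forward pass: the same activation in every hidden layer, another at the output."""
    a = x
    for weights, biases in hidden_layers:
        a = dense(a, weights, biases, hidden_activation)
    weights, biases = output_layer
    return dense(a, weights, biases, output_activation)


hidden_layers = [
    ([[1.0, 1.0], [1.0, -1.0]], [0.0, 0.0]),   # hidden layer 1
    ([[1.0, 1.0], [-1.0, 1.0]], [0.0, -3.0]),  # hidden layer 2
]
output_layer = ([[1.0, 1.0]], [-2.0])
y_hat = mlp_forward([1.0, -1.0], hidden_layers, output_layer)
```

The second neuron of hidden layer 2 receives a negative input, so the ReLU keeps it from firing (output 0), illustrating how the activation decides whether a neuron contributes to the next layer.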

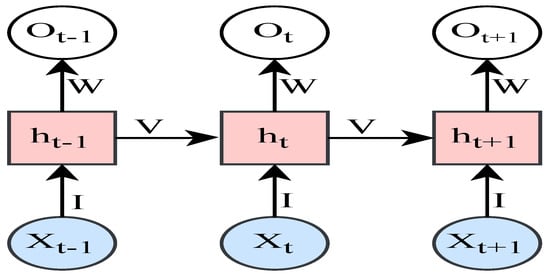

- Recurrent Neural Networks (RNN): Recurrent neural networks are derived from the feed-forward neural network. They have additional connections in their layers that enhance an ANN's ability to learn from sequential data, such as numerical time-series data, video, or audio. As seen in Figure 13, a hidden layer of RNN cells passes its output both to the next layer and to the adjacent cell in the sequence. This enables the network to learn from patterns that appear in sequence. There are a few different formulations for simple RNNs, such as the following:

$$\mathbf{h}_t = \sigma_h\left(W_h \mathbf{x}_t + U_h \mathbf{h}_{t-1} + \mathbf{b}_h\right), \qquad \mathbf{y}_t = \sigma_y\left(W_y \mathbf{h}_t + \mathbf{b}_y\right)$$

where $W$ and $U$ are the weight and recurrent connection matrices and $\mathbf{b}$ are the bias vectors.

Figure 13. RNN structure.

The hidden layer vector $\mathbf{h}_t$ is calculated including the recurrent connections from $\mathbf{h}_{t-1}$. The output vector is $\mathbf{y}_t$, and $\mathbf{x}_t$ is the input vector. The activation functions are $\sigma_h$ and $\sigma_y$ [34].
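A minimal sketch of the simple (Elman-style) RNN recurrence above is given below. It assumes $\sigma_h = \tanh$ and takes $\sigma_y$ as the identity, which are common but not the only choices; the parameter values and function names are illustrative.

```python
import math


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def rnn_step(x_t, h_prev, W_h, U_h, b_h, W_y, b_y):
    """One step: h_t = tanh(W_h x_t + U_h h_{t-1} + b_h), y_t = W_y h_t + b_y."""
    pre = [a + u + b for a, u, b in zip(matvec(W_h, x_t), matvec(U_h, h_prev), b_h)]
    h_t = [math.tanh(v) for v in pre]
    y_t = [a + b for a, b in zip(matvec(W_y, h_t), b_y)]  # sigma_y taken as the identity
    return h_t, y_t


def rnn_forward(xs, h0, params):
    """Run the same cell over a sequence, carrying the hidden state forward."""
    h, outputs = h0, []
    for x_t in xs:
        h, y_t = rnn_step(x_t, h, *params)
        outputs.append(y_t)
    return h, outputs


params = ([[1.0]], [[0.5]], [0.0], [[2.0]], [0.0])  # W_h, U_h, b_h, W_y, b_y
h_final, ys = rnn_forward([[1.0], [0.0]], [0.0], params)
```

Even though the second input is zero, the second output is non-zero because the hidden state carries information from the first time step.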

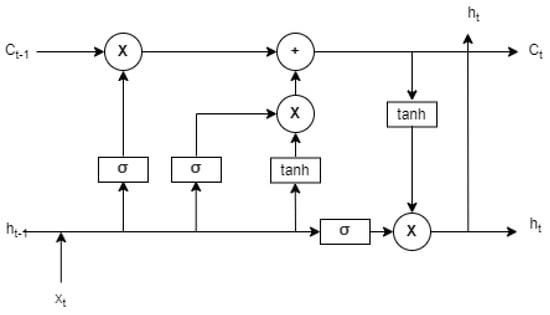

- Long Short-Term Memory (LSTM): Long short-term memory is an artificial neural network cell type that maintains a memory and has input, output, and forget gates, as shown in Figure 14. The LSTM is a further development of the RNN that incorporates a memory cell. The memory can be carried forward over various time intervals, allowing the network to learn relations between dependent events that are not directly adjacent in time. The LSTM is also an enhanced ANN cell designed to overcome the vanishing gradient problem in deep ANNs. The vanishing gradient problem refers to the exponentially shrinking error signal that propagates back through the ANN during supervised learning, as small values in the range (0,1) are repeatedly multiplied together. Because of its cell formulation, in which the cell state is updated additively and controlled by the forget gate, the error signal does not undergo the same exponential decay, which counters the vanishing gradient problem. ANNs using LSTM have shown record-breaking performance in language processing tasks, such as speech recognition, chatbots that respond to input text, and machine translation.

Figure 14. LSTM Cell Design.

The formulation of an LSTM cell is as follows: let $W$ represent the input weight matrices and $U$ represent the cell's recurrent connection matrices. The components of the cell are the forget gate, the input gate, the output gate to the next recurrent cell in the layer, and the memory cell input, represented respectively in the equations below:

$$\begin{aligned}
\mathbf{f}_t &= \sigma_g\left(W_f \mathbf{x}_t + U_f \mathbf{h}_{t-1} + \mathbf{b}_f\right) \\
\mathbf{i}_t &= \sigma_g\left(W_i \mathbf{x}_t + U_i \mathbf{h}_{t-1} + \mathbf{b}_i\right) \\
\mathbf{o}_t &= \sigma_g\left(W_o \mathbf{x}_t + U_o \mathbf{h}_{t-1} + \mathbf{b}_o\right) \\
\tilde{\mathbf{c}}_t &= \sigma_c\left(W_c \mathbf{x}_t + U_c \mathbf{h}_{t-1} + \mathbf{b}_c\right)
\end{aligned}$$

where $\sigma_g$ is the sigmoid function and $\sigma_c$ is typically the hyperbolic tangent. The cell state is maintained as $\mathbf{c}_t = \mathbf{f}_t \odot \mathbf{c}_{t-1} + \mathbf{i}_t \odot \tilde{\mathbf{c}}_t$, and the output vector that goes to the next layer in the network is $\mathbf{h}_t = \mathbf{o}_t \odot \sigma_h(\mathbf{c}_t)$, where $\sigma_h$ is typically the hyperbolic tangent. The state of the memory cell, $\mathbf{c}_t$, is a vector of real numbers, as is the network input $\mathbf{x}_t$. The gate vectors ($\mathbf{f}_t$, $\mathbf{i}_t$, $\mathbf{o}_t$) range over (0,1), whereas the hidden state (output) and the cell input activation vectors range over (−1,1) [35,36,37].
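The LSTM equations above can be sketched for a single time step as follows, assuming the sigmoid for the gates and the hyperbolic tangent for the cell input and the output squashing. The parameter dictionary and its key names (`W_f`, `U_f`, `b_f`, and so on) are an illustrative convention, not a library API.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def gate(p, name, x_t, h_prev, activation):
    """activation(W_name x_t + U_name h_{t-1} + b_name), element-wise."""
    pre = zip(matvec(p["W_" + name], x_t), matvec(p["U_" + name], h_prev), p["b_" + name])
    return [activation(a + u + b) for a, u, b in pre]


def lstm_step(x_t, h_prev, c_prev, p):
    f = gate(p, "f", x_t, h_prev, sigmoid)        # forget gate, values in (0, 1)
    i = gate(p, "i", x_t, h_prev, sigmoid)        # input gate, values in (0, 1)
    o = gate(p, "o", x_t, h_prev, sigmoid)        # output gate, values in (0, 1)
    c_hat = gate(p, "c", x_t, h_prev, math.tanh)  # cell input, values in (-1, 1)
    c = [fj * cj + ij * cc for fj, cj, ij, cc in zip(f, c_prev, i, c_hat)]
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c


# One hidden unit and one input, with every weight and bias set to zero, so that
# every gate outputs sigmoid(0) = 0.5 and the cell input is tanh(0) = 0.
params = {f"{kind}_{g}": ([[0.0]] if kind != "b" else [0.0])
          for kind in ("W", "U", "b") for g in ("f", "i", "o", "c")}
h, c = lstm_step([1.0], [0.0], [2.0], params)
```

With these settings, the forget gate keeps half of the previous cell state (2.0 becomes 1.0), and the output is that state squashed by tanh and scaled by the output gate.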

- Autoencoders: Autoencoders are an unsupervised learning technique in which neural networks are used for representation learning. Their architecture imposes a bottleneck in the network that forces a compressed knowledge representation of the original input, as shown in Figure 15. If the input features were independent of one another, this compression and subsequent reconstruction would be very difficult. However, if structure exists in the data, such as correlations between input features, this structure can be learned and exploited when the input passes through the network's bottleneck. An autoencoder comprises two parts: an encoder and a decoder [38].

Figure 15. Basic Autoencoder Architecture.

The objective of the encoder is to compress the data so that minimal information is lost, whereas the objective of the decoder is to use the compressed information to regenerate the original data. Data compression, image denoising, dimensionality reduction, feature extraction, new data generation, image colorization, and anomaly detection are a few applications of autoencoders. The basic autoencoder consists of five parts: the input layer, the encoder hidden layer, the compressed data (also known as the latent vector), the decoder hidden layer, and the output layer. In autoencoders, the number of input features is always equal to the number of output features, and the latent (bottleneck) layer must have fewer neurons than the input layer; otherwise, the network may simply replicate the data without any compression. Mathematically, autoencoders can be expressed as shown in Equation (23):

$$\mathbf{z} = f(\mathbf{x}), \qquad \hat{\mathbf{x}} = g(\mathbf{z}) = g\left(f(\mathbf{x})\right) \tag{23}$$

where $\mathbf{x}$ is the input vector, $f$ is the encoder function, $\mathbf{z}$ is the compressed data, $g$ is the decoder function, and $\hat{\mathbf{x}}$ is the output. Deep autoencoders are similar to basic autoencoders, but they have multiple hidden layers in the encoder and decoder; typically, the encoder and the decoder have the same number of hidden layers with matching numbers of neurons, mirroring each other around the latent vector. Common types of autoencoders include the denoising autoencoder, the variational autoencoder, and the convolutional autoencoder [6].
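To illustrate Equation (23) and the role of the bottleneck, the sketch below uses a linear autoencoder that compresses three features into a single latent value. The weights are hand-picked (not learned) for data lying on the line $t\,(1, 2, 3)$, which is an assumption chosen to show how correlated features can pass through a bottleneck with almost no loss, while inputs that do not follow that structure are reconstructed poorly.

```python
def linear_layer(v, W, b):
    """Dense layer with an identity activation (a linear autoencoder, for simplicity)."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]


def autoencode(x, W_enc, b_enc, W_dec, b_dec):
    z = linear_layer(x, W_enc, b_enc)      # encoder f: 3 features -> 1 latent value
    x_hat = linear_layer(z, W_dec, b_dec)  # decoder g: 1 latent value -> 3 features
    return z, x_hat


def reconstruction_error(x, x_hat):
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


# Hand-picked (not learned) weights for data lying on the line t * (1, 2, 3):
# the encoder projects onto that direction and the decoder maps back.
W_enc, b_enc = [[1 / 14, 2 / 14, 3 / 14]], [0.0]
W_dec, b_dec = [[1.0], [2.0], [3.0]], [0.0, 0.0, 0.0]

z, x_hat = autoencode([2.0, 4.0, 6.0], W_enc, b_enc, W_dec, b_dec)
_, off_line = autoencode([1.0, 0.0, 0.0], W_enc, b_enc, W_dec, b_dec)
```

In practice, the encoder and decoder weights would be learned by minimizing the reconstruction error over a training set, usually with non-linear activations.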

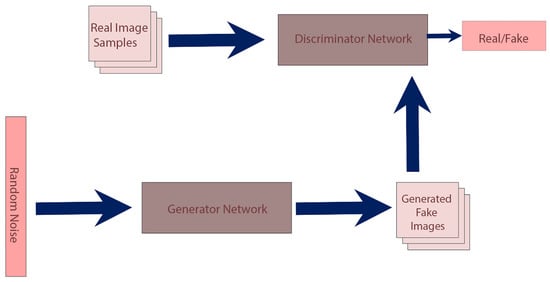

- Generative Adversarial Network (GAN): Like autoencoders, GANs are primarily used to generate new data from a given dataset. However, the architecture of a GAN is very different from that of an autoencoder. A GAN has two parts, the generator and the discriminator, which are two different neural networks trained simultaneously. The generator starts from random noise and generates a sample. Based on the feedback from the discriminator, the generator learns to generate more realistic data, while the discriminator learns to distinguish real samples from the samples produced by the generator. As the generator improves, the performance of the discriminator deteriorates, and for a perfect generator, the accuracy of the discriminator is 50%. The basic architecture is shown in Figure 16.

Figure 16. GAN Architecture.

In a GAN, two loss functions work in parallel, one for the generator and the other for the discriminator. The generator's objective is to minimize the loss, whereas the discriminator's objective is to maximize it. The loss function is formulated as shown in Equation (24):

$$\min_G \max_D V(D, G) = \mathbb{E}_{\mathbf{x}\sim p_{\text{data}}(\mathbf{x})}\left[\log D(\mathbf{x})\right] + \mathbb{E}_{\mathbf{z}\sim p_{\mathbf{z}}(\mathbf{z})}\left[\log\left(1 - D(G(\mathbf{z}))\right)\right] \tag{24}$$

where $D(\mathbf{x})$ is the discriminator's estimate of the probability that real data are real, $\mathbb{E}_{\mathbf{x}}$ is the expected value over the real data, $\mathbf{z}$ is the noise, $G(\mathbf{z})$ is the generator's output for $\mathbf{z}$, $D(G(\mathbf{z}))$ is the discriminator's estimate of the probability that fake data are real, and $\mathbb{E}_{\mathbf{z}}$ is the expected value over the random inputs to the generator.
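The value function in Equation (24) can be estimated from a batch of discriminator outputs, as in the sketch below; the networks themselves are omitted, and the function names are illustrative. The first check confirms that a discriminator that outputs 0.5 everywhere (the 50% case for a perfect generator) gives $V = -\log 4$. Note that many implementations instead train the generator to maximize $\log D(G(\mathbf{z}))$, which gives stronger gradients early in training; this sketch follows the minimax form as written.

```python
import math


def gan_value(d_real, d_fake):
    """Sample estimate of V(D, G) in Equation (24).

    d_real: discriminator outputs D(x) on real samples, each in (0, 1).
    d_fake: discriminator outputs D(G(z)) on generated samples, each in (0, 1).
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def discriminator_loss(d_real, d_fake):
    """D maximizes V, which is the same as minimizing -V."""
    return -gan_value(d_real, d_fake)


def generator_loss(d_fake):
    """G minimizes V; only the fake-sample term depends on G."""
    return sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
```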

- Convolutional networks (CNN): CNNs have great applications in computer vision. These networks receive an image as input and apply a sequence of convolution and pooling operations. In U-Net-style designs such as those mentioned above, convolution and max-pooling operations progressively reduce the scale, descending the first part of the 'U', followed by up-convolution and convolution in sequence to ascend the second part of the 'U'. This design has proven highly accurate at classifying objects in images. A convolutional network captures spatial and temporal dependencies in an image and performs better at classifying image data than a feed-forward neural network applied to the flattened image. In a convolutional network, a kernel (a small matrix) slides over the 2-D data array and performs an up or down convolution on the data; for a color image, the convolution kernel can be 3-dimensional. These networks are trained with supervised learning and classify images using softmax classification. Their main strength over competing models is their ability to perform feature extraction automatically. Many top image classification models are based on CNNs, including LeNet, AlexNet, VGGNet, GoogLeNet, ResNet, and ZFNet.
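The two basic CNN operations mentioned above, a kernel sliding over a 2-D array and max pooling, can be sketched as follows. As in most deep learning libraries, the "convolution" here is a cross-correlation (the kernel is not flipped); the edge-detecting kernel and the toy image are illustrative assumptions, whereas a trained CNN learns its kernel values.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (stride 1, no padding), computed as cross-correlation."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling with a size x size window."""
    return [[max(feature_map[r + i][c + j] for i in range(size) for j in range(size))
             for c in range(0, len(feature_map[0]) - size + 1, size)]
            for r in range(0, len(feature_map) - size + 1, size)]


image = [[0, 0, 1, 1, 1] for _ in range(5)]  # dark left part, bright right part
edge_kernel = [[-1, 1], [-1, 1]]             # responds to left-to-right increases
features = conv2d(image, edge_kernel)
pooled = max_pool2d(features)
```

The feature map responds only at the vertical edge, and pooling keeps that response while halving the spatial resolution.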


- Transformer Neural Network: Transformer networks are more effective at handling long data sequences because they process the incoming data in parallel, whereas recurrent neural networks process the data sequentially. These networks are heavily used in natural language processing (NLP) for language translation and text summarization. Transformer networks are unique in that they can prioritize different subsets of the incoming data while making decisions. This mechanism is called self-attention and is shown in Equation (25). An attention function maps a query and a set of key-value pairs to an output, where the query, keys, values, and output are all vectors. The output is calculated as a weighted sum of the values, where the weight assigned to each value is determined by a compatibility function between the query and the corresponding key.

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V \tag{25}$$

This equation computes the attention weights for a given input sequence by calculating the dot product of the query matrix $Q$ and the key matrix $K$, scaling the result by dividing it by the square root of the key dimension $d_k$, and applying the softmax function. The resulting attention weights are then applied to the value matrix $V$ to compute the output. The Transformer network consists of an encoder and a decoder. The encoder computes a sequence of hidden representations from the input sequence using several self-attention and feed-forward layers. The decoder receives the encoder's output and generates the output sequence by attending to the encoder's hidden representations and using additional self-attention and feed-forward layers. Significant advantages of this network include its ability to handle long sequences of data efficiently, its parallel processing capability, and its effectiveness in natural language processing tasks. However, it also has disadvantages, including the high computational resources required for training and the need for large amounts of training data.
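Scaled dot-product attention can be sketched with plain lists as below. This is a single-head version without masking or learned projection matrices (in a full Transformer, $Q$, $K$, and $V$ are linear projections of the input); the small matrices are illustrative.

```python
import math


def softmax(row):
    m = max(row)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(M):
    return [list(col) for col in zip(*M)]


def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V; also returns the attention weights."""
    d_k = len(K[0])
    scores = matmul(Q, transpose(K))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V), weights


Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
output, weights = scaled_dot_product_attention(Q, K, V)
```

The query is more compatible with the first key, so the first value row receives the larger weight; if both keys were identical, the output would simply be the average of the value rows.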


- Graph Neural Network: Graph Neural Networks (GNNs) are a class of deep learning models designed to handle data structured as graphs. GNNs are particularly useful for tasks involving relational or structured data, such as social networks, citation networks, and molecular structures. The core principles of GNNs and their operation on graph-structured data are as follows:

- (a) Information diffusion mechanism: GNNs work by iteratively propagating information between the nodes of the graph, simulating a process similar to information diffusion across the graph.
- (b) Graph processing: In a GNN, each node in the graph is associated with a processing unit that maintains a state and is connected to other units according to the graph's connectivity.
- (c) State updates and information exchange: The processing units (nodes) update their states and exchange information with their neighbors in the graph. This process is repeated until the network reaches a stable equilibrium, meaning that the node states no longer change significantly between iterations.
- (d) Output computation: Once the network reaches a stable equilibrium, the output for each node is computed locally from its final state. This output can be used for various tasks, such as node classification, link prediction, or graph classification.
- (e) Unique stable equilibrium: In the original GNN formulation, the information diffusion mechanism is designed so that a unique stable equilibrium always exists. This ensures that the iterative process of state updates and information exchange converges to a stable solution, making the GNN's output consistent and reliable.

One simple example of a GNN is the Graph Convolutional Network (GCN), introduced in [39]. The GCN operates on graph-structured data and updates each node's representation based on its neighbors' representations. The update rule for the hidden node representations in a GCN can be expressed as:

$$H^{(l+1)} = \sigma\left(\tilde{D}^{-1/2}\tilde{A}\tilde{D}^{-1/2}H^{(l)}W^{(l)}\right)$$

Here, $H^{(l)}$ denotes the hidden representation of the nodes at the $l$-th layer, where $H^{(0)} = X$ is the input node features; $\tilde{A}$ is the adjacency matrix of the graph with added self-connections, computed as $\tilde{A} = A + I$, where $A$ is the original adjacency matrix and $I$ is the identity matrix; $\tilde{D}$ is the degree matrix of $\tilde{A}$, with $\tilde{D}_{ii} = \sum_j \tilde{A}_{ij}$; $W^{(l)}$ is the trainable weight matrix at the $l$-th layer; and $\sigma$ is an activation function, such as ReLU. In a GCN, the node representations are updated iteratively for a fixed number of layers. After the final layer, the node representations can be used for various tasks, such as node classification, link prediction, or graph classification.
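A single GCN layer, following the update rule above with ReLU as $\sigma$, can be sketched with plain lists as below. The three-node graph, feature values, and weight matrix are illustrative assumptions; in practice $W^{(l)}$ is learned and sparse matrix operations are used.

```python
import math


def gcn_layer(A, H, W, activation=lambda v: max(0.0, v)):
    """One GCN layer: activation(D^-1/2 (A + I) D^-1/2 H W), with ReLU by default."""
    n = len(A)
    A_tilde = [[A[i][j] + (1 if i == j else 0) for j in range(n)] for i in range(n)]
    degree = [sum(row) for row in A_tilde]  # diagonal entries of D~
    A_hat = [[A_tilde[i][j] / math.sqrt(degree[i] * degree[j]) for j in range(n)]
             for i in range(n)]
    AH = [[sum(A_hat[i][k] * H[k][f] for k in range(n)) for f in range(len(H[0]))]
          for i in range(n)]
    Z = [[sum(AH[i][f] * W[f][o] for f in range(len(W))) for o in range(len(W[0]))]
         for i in range(n)]
    return [[activation(v) for v in row] for row in Z]


A = [[0, 1, 0], [1, 0, 0], [0, 0, 0]]   # nodes 0 and 1 are linked; node 2 is isolated
H0 = [[1.0], [3.0], [-2.0]]             # one input feature per node (H^(0) = X)
W0 = [[1.0]]                            # 1 x 1 weight matrix
H1 = gcn_layer(A, H0, W0)
```

The two linked nodes end up with the average of their features, while the isolated node keeps only its own (negative) feature, which the ReLU then sets to zero.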

5.2. Clustering

Clustering is an example of unsupervised machine learning. When categories have to be found but no information about the labels is available, clustering is very useful. Clustering algorithms divide the data points into groups such that points in the same group (cluster) are similar to each other and dissimilar to points in other groups; that is, intra-cluster similarity is maximized and inter-cluster similarity is minimized. Clustering therefore helps to label data points that are added to the dataset without any label information. Common clustering techniques include K-means clustering, DBSCAN, and OPTICS.

5.2.1. K-Means

K-means clustering is a common top-down clustering algorithm due to its low computational cost and simplicity in implementation.

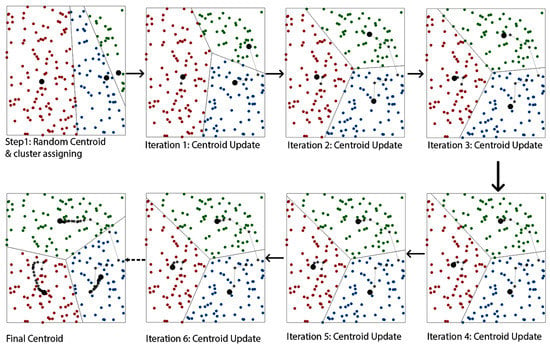

This step is repeated until there is no change in the centroid position. The demonstration of K-means is shown in Figure 17.

Figure 17.

K-Means Clustering.

5.2.2. Density Based Clustering

When using density-based clustering, the clusters that are produced are determined by the data points that have the highest density. Outliers are considered to be any points in the data that do not fit into any of the clusters. This sort of clustering suffers from the major shortcoming that it does not function well when there are data of different densities and large dimensions. Two types of density-based clustering algorithms, DBSCAN and OPTICS, are explained in this article.

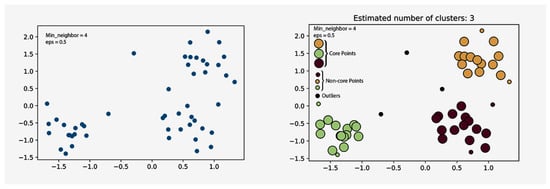

- Density-based spatial clustering of applications with noise (DBSCAN): DBSCAN starts with any object in the dataset and looks at its neighbors within a certain distance and is mostly denoted by eplison (Eps). If the points around it in that Eps are more than the minimum number of neighbors, then these points are marked as core points. The cluster is formed by core objects and non-core objects. The non-core points are part of the cluster, but they don’t extend the cluster, which means they cannot include other non-core points in the cluster. The other objects that are not part of any clusters are marked as outliers. The distance between the points is usually the Euclidean distance. The of DBSCAN is presetned in Figure 18.

Figure 18. Left: Before Clustering, Right: After DBSCAN Clustering.K-means is a centroid-based clustering algorithm, and it starts with the initialization of the number of clusters, followed by assigning a random centroid to each cluster. In the next step, we assign the points to the nearest centroid cluster, and once all the points are assigned, we update the centroid.

Figure 18. Left: Before Clustering, Right: After DBSCAN Clustering.K-means is a centroid-based clustering algorithm, and it starts with the initialization of the number of clusters, followed by assigning a random centroid to each cluster. In the next step, we assign the points to the nearest centroid cluster, and once all the points are assigned, we update the centroid. - Ordering Points To Identify Cluster Structure(OPTICS): OPTICS is an extended version of DBSCAN. In OPTICS two more hyperparameters are added. (1) Core Distance (2) Reachability Distance. Core distance is defined as the minimum radius value in order to mark the point as a core point, which means it will see if there is any sub-cluster within a cluster that satisfies the minimum neighbor condition. Reachability is the maxima of the core distance and the Euclidean distance between the points. Reachability and core distance are not defined for non-core points. The OPTICS algorithm works well compared to its predecessor, DBSCAN, as it is able to handle data with varying densities.
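The K-means and DBSCAN procedures described in this subsection can be sketched in plain Python as follows. The sketch assumes Euclidean distance (`math.dist`, Python 3.8+), initializes the K-means centroids with randomly chosen data points (one common choice), and counts a point as part of its own Eps-neighborhood, as the usual DBSCAN definition does. The function names and toy points are illustrative, and OPTICS is not shown.

```python
import math
import random


def kmeans(points, k, seed=0, max_iter=100):
    """Lloyd's K-means: assign points to the nearest centroid, then move the centroids."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # random initial centroids drawn from the data

    def nearest(p):
        return min(range(k), key=lambda c: math.dist(p, centroids[c]))

    for _ in range(max_iter):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p)].append(p)
        new_centroids = [
            tuple(sum(coords) / len(members) for coords in zip(*members)) if members else centroids[c]
            for c, members in enumerate(clusters)
        ]
        if new_centroids == centroids:  # stop when no centroid moves
            break
        centroids = new_centroids
    return centroids, [nearest(p) for p in points]


def dbscan(points, eps, min_pts):
    """Return one label per point: cluster ids 0, 1, ... or -1 for noise (outliers)."""
    def neighbours(i):
        # the Eps-neighbourhood includes the point itself
        return [j for j in range(len(points)) if math.dist(points[i], points[j]) <= eps]

    labels = [None] * len(points)
    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # noise for now; may later turn out to be a border point
            continue
        labels[i] = cluster  # i is a core point and starts a new cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point: joins the cluster but does not extend it
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_seeds = neighbours(j)
            if len(j_seeds) >= min_pts:  # j is also a core point, so keep expanding
                queue.extend(j_seeds)
        cluster += 1
    return labels


points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10), (50, 50)]
centroids, km_labels = kmeans(points[:6], k=2)
db_labels = dbscan(points, eps=1.5, min_pts=3)
```

K-means must be told the number of clusters in advance, whereas DBSCAN discovers the two groups from `eps` and `min_pts` and marks the isolated point as an outlier.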

5.3. Optimization

Optimization problems can be solved with evolutionary algorithms (EAs); two key implementations are the genetic algorithm (GA) and particle swarm optimization (PSO). The GA uses the theory of biological natural selection to evolve solutions.

5.3.1. Genetic Algorithm

The GA creates an initial population and then repeats the following steps:

1. Selection
2. Crossover
3. Mutation
4. Fitness function

The fittest solutions are the most likely to be selected for crossover, and mutation alters the genetic material of the offspring. The initial population may consist of random combinations of the variables in the solution. Crossover creates new combinations from the parent solutions that combine characteristics of both parents. Mutation is crucial for introducing new genetic material into the population. In practice, crossover tends to refine solutions around local optima, whereas mutation helps the population escape them and move toward the global optimum. Implementations of GA use two rate parameters that control the crossover and mutation rates [40].
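The loop above can be sketched on a toy problem as follows. The objective (counting the 1s in a bit string, often called "OneMax"), tournament selection, single-point crossover, bit-flip mutation, and the elitism step that carries the best individual forward unchanged are one common set of choices assumed for illustration, not the specific operators of [40]; `crossover_rate` and `mutation_rate` play the role of the two rate parameters.

```python
import random


def fitness(bits):
    """Toy 'OneMax' objective (an assumption for illustration): count the 1s."""
    return sum(bits)


def select(population, rng, k=3):
    """Tournament selection: the fittest of k random individuals wins."""
    return max(rng.sample(population, k), key=fitness)


def crossover(a, b, rng, rate):
    """Single-point crossover applied with probability `rate`."""
    if rng.random() < rate:
        point = rng.randrange(1, len(a))
        return a[:point] + b[point:]
    return a[:]


def mutate(bits, rng, rate):
    """Flip each bit independently with probability `rate`."""
    return [1 - bit if rng.random() < rate else bit for bit in bits]


def genetic_algorithm(n_bits=20, pop_size=30, generations=60,
                      crossover_rate=0.9, mutation_rate=0.02, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    history = [max(map(fitness, population))]
    for _ in range(generations):
        elite = max(population, key=fitness)  # keep the best solution unchanged
        children = [
            mutate(crossover(select(population, rng), select(population, rng),
                             rng, crossover_rate), rng, mutation_rate)
            for _ in range(pop_size - 1)
        ]
        population = [elite] + children
        history.append(max(map(fitness, population)))
    return max(population, key=fitness), history


best, history = genetic_algorithm()
```

Because of elitism, the best fitness recorded in `history` never decreases from one generation to the next.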

5.3.2. Particle Swarm Optimization

PSO randomly initializes a swarm of particles in the decision space; similar to the GA, PSO must be able to evaluate each particle's performance in terms of its solution. Each particle has a velocity and a position, so each particle represents a solution vector that travels through the solution space. A particle's velocity is updated by relating its position and velocity to the position of the global best particle (and, in the standard formulation, to the best position that the particle itself has found so far). In this way, the swarm moves together through the search space, honing in on the global best solution. Related swarm-based metaheuristics include ant colony optimization and firefly swarm optimization [41,42].
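A minimal sketch of the standard (global-best) PSO update is shown below, minimizing a toy sphere function. The inertia weight and the two acceleration coefficients are commonly used illustrative values rather than values from [41,42], and the function names are hypothetical.

```python
import random


def sphere(x):
    """Toy objective with its minimum 0 at the origin."""
    return sum(v * v for v in x)


def particle_swarm(f, dim=2, n_particles=20, iters=100, inertia=0.7,
                   c_personal=1.5, c_global=1.5, bounds=(-5.0, 5.0), seed=0):
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    p_best = [p[:] for p in pos]                      # best position seen by each particle
    p_best_val = [f(p) for p in pos]
    g = min(range(n_particles), key=lambda i: p_best_val[i])
    g_best, g_best_val = p_best[g][:], p_best_val[g]  # best position seen by the swarm
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (inertia * vel[i][d]
                             + c_personal * r1 * (p_best[i][d] - pos[i][d])
                             + c_global * r2 * (g_best[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            value = f(pos[i])
            if value < p_best_val[i]:
                p_best[i], p_best_val[i] = pos[i][:], value
                if value < g_best_val:
                    g_best, g_best_val = pos[i][:], value
    return g_best, g_best_val


best, best_val = particle_swarm(sphere)
```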

5.3.3. Vortex Search

The Vortex Search (VS) algorithm was developed by Doğan and Ölmez [43] and is inspired by the shape of vortices. VS is designed for single-objective continuous optimization problems. It searches within a radius that is adaptively calculated around the vortex's center in order to balance global and local search. In the early iterations, the radius is set to a large value, so a global search is conducted; in subsequent iterations, the radius is progressively decreased to strengthen the local search. This improves the search for the global optimum. The major advantages of the VS algorithm (VSA) are that it handles high-dimensional problems efficiently and is easy to implement, as it does not require much parameter tuning. In 2017, a multi-objective VS (MOVS) was proposed [44]. Challenges of VSA include getting stuck in local optima, a slow convergence rate, and low accuracy. To address these issues, chaotic vortex search was proposed in 2022 [45].
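The sketch below captures the core idea of VS under stated simplifications: candidates are sampled from a Gaussian around the current center with a radius that shrinks over the iterations, and the best solution found so far becomes the next center. It is not Doğan and Ölmez's exact algorithm. The original decreases the radius with a schedule based on the inverse incomplete gamma function, whereas a linear decay is substituted here. The objective, parameter values, and function names are hypothetical.

```python
import random


def shifted_sphere(x):
    """Toy objective with its minimum 0 at (1, 1, ..., 1)."""
    return sum((v - 1.0) ** 2 for v in x)


def vortex_search(f, dim=2, bounds=(-5.0, 5.0), n_candidates=30, iters=200, seed=0):
    rng = random.Random(seed)
    lo, hi = bounds
    center = [(lo + hi) / 2.0] * dim          # first vortex center: middle of the box
    best, best_val = center[:], f(center)
    initial_radius = (hi - lo) / 2.0
    for t in range(iters):
        # Large radius early (global search), small radius later (local search).
        # Simplification: linear decay instead of the original gamma-based schedule.
        radius = initial_radius * (1.0 - t / iters) + 1e-6
        for _ in range(n_candidates):
            candidate = [min(hi, max(lo, rng.gauss(c, radius))) for c in center]
            value = f(candidate)
            if value < best_val:
                best, best_val = candidate, value
        center = best[:]                      # next vortex is centered on the best so far
    return best, best_val


best, best_val = vortex_search(shifted_sphere)
```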

5.3.4. Simulated Annealing

Simulated Annealing (SA) is a stochastic optimization algorithm inspired by the annealing process in metallurgy, where a material is cooled slowly to reach its optimal crystalline structure [46]. SA explores the search space by accepting solutions based on a probability that depends on the current temperature. As the temperature decreases over time, the algorithm becomes less likely to accept worse solutions, converging to a near-optimal solution [47].
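A minimal one-dimensional sketch of SA is given below. It assumes a uniform random neighbor, the Metropolis-style acceptance probability $\exp(-\Delta/T)$ for worse moves, and a geometric cooling schedule; the objective, step size, initial temperature, and cooling factor are illustrative choices.

```python
import math
import random


def objective(x):
    """Toy 1-D objective with several local minima."""
    return x * x + 10.0 * math.sin(x)


def simulated_annealing(f, x0, step=0.5, t0=1.0, cooling=0.99, iters=2000, seed=0):
    rng = random.Random(seed)
    x, fx = x0, f(x0)
    best, best_val = x, fx
    t = t0
    for _ in range(iters):
        candidate = x + rng.uniform(-step, step)   # random neighbor
        fc = f(candidate)
        # Always accept improvements; accept a worse move with probability
        # exp(-(fc - fx) / t), which shrinks as the temperature t falls.
        if fc < fx or rng.random() < math.exp(-(fc - fx) / t):
            x, fx = candidate, fc
            if fx < best_val:
                best, best_val = x, fx
        t *= cooling                                # geometric cooling schedule
    return best, best_val


best, best_val = simulated_annealing(objective, x0=5.0)
```

Early on, the high temperature lets the search accept uphill moves and explore; as $T$ approaches zero, the algorithm behaves almost like a greedy local search.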

5.3.5. Pattern Search

Pattern Search (PS) is a deterministic, derivative-free optimization algorithm that explores the search space step by step [48]. The method starts from an initial point and evaluates trial points arranged in a pattern around it. If a better solution is found, the search moves to that point; otherwise, the pattern is contracted by reducing the step size. The search continues until a termination criterion is met, such as a maximum number of iterations, or the step size or improvement falling below a threshold.
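A minimal sketch of this loop follows, using the simple "compass" pattern (plus and minus one step along each coordinate axis). This is one illustrative variant chosen for brevity; other pattern search methods, such as Hooke-Jeeves, add extra pattern moves. The contraction factor, tolerance, and test function are assumptions.

```python
# ASSUMPTION: compass pattern, shrink factor 0.5 and tol are illustrative choices.
def pattern_search(f, x0, step=1.0, shrink=0.5, tol=1e-6, max_iter=10_000):
    """Compass-style pattern search: poll x +/- step along each axis, contract on failure."""
    x = list(x0)
    fx = f(x)
    for _ in range(max_iter):
        if step <= tol:
            break
        improved = False
        # Poll the 2n pattern points around x; move to the first one that improves f.
        for i in range(len(x)):
            for sign in (1.0, -1.0):
                y = x[:]
                y[i] += sign * step
                fy = f(y)
                if fy < fx:
                    x, fx, improved = y, fy, True
                    break
            if improved:
                break
        if not improved:
            step *= shrink   # no better pattern point: contract the pattern
    return x, fx


def bowl(p):
    return (p[0] - 1.0) ** 2 + (p[1] + 2.0) ** 2


x_best, f_best = pattern_search(bowl, [0.0, 0.0])
print(x_best, f_best)   # → [1.0, -2.0] 0.0
```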

5.3.6. Artificial Bee Colony

Artificial Bee Colony (ABC) is a swarm intelligence-based optimization algorithm that simulates the foraging behavior of honey bees [49]. In ABC, the search agents are “bees” exploring the solution space for “food sources” (candidate solutions). The algorithm consists of three types of bees: employed, onlooker, and scout bees. Employed bees explore the vicinity of their current food source; onlooker bees select and exploit promising food sources based on the information provided by employed bees; and scout bees randomly search for new food sources when a food source is exhausted.
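The three bee phases can be sketched as below. This is a basic, illustrative version assuming minimization over box bounds; the fitness transform 1/(1 + f), the `limit` on unsuccessful trials, replacing every exhausted source (rather than at most one per cycle), and the parameter values are assumptions, not details from the text.

```python
import random

# ASSUMPTION: fitness transform, `limit` and scout rule are illustrative choices.
def abc(f, bounds, n_sources=20, limit=50, n_cycles=300, seed=0):
    """Minimize `f` over box `bounds` with a basic Artificial Bee Colony."""
    rng = random.Random(seed)
    dim = len(bounds)

    def random_source():
        return [rng.uniform(lo, hi) for lo, hi in bounds]

    def fitness(val):
        # Common ABC fitness transform for minimization (higher is better).
        return 1.0 / (1.0 + val) if val >= 0 else 1.0 + abs(val)

    sources = [random_source() for _ in range(n_sources)]
    values = [f(s) for s in sources]
    trials = [0] * n_sources   # cycles without improvement, per food source

    def explore_near(i):
        # Perturb one coordinate of source i relative to a different, random source k.
        k = rng.choice([m for m in range(n_sources) if m != i])
        j = rng.randrange(dim)
        cand = sources[i][:]
        lo, hi = bounds[j]
        cand[j] += rng.uniform(-1.0, 1.0) * (cand[j] - sources[k][j])
        cand[j] = min(max(cand[j], lo), hi)
        val = f(cand)
        if val < values[i]:    # greedy selection between the old and new source
            sources[i], values[i], trials[i] = cand, val, 0
        else:
            trials[i] += 1

    best_val = min(values)
    best = sources[values.index(best_val)][:]
    for _ in range(n_cycles):
        for i in range(n_sources):                 # employed bees
            explore_near(i)
        weights = [fitness(v) for v in values]
        for _ in range(n_sources):                 # onlooker bees, fitness-proportional
            explore_near(rng.choices(range(n_sources), weights=weights)[0])
        for i in range(n_sources):                 # remember the best source so far
            if values[i] < best_val:
                best, best_val = sources[i][:], values[i]
        for i in range(n_sources):                 # scouts replace exhausted sources
            if trials[i] > limit:
                sources[i] = random_source()
                values[i], trials[i] = f(sources[i]), 0
    return best, best_val


def sphere(x):
    return sum(v * v for v in x)


best, best_val = abc(sphere, [(-5.0, 5.0)] * 3)
print(best, best_val)
```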

5.4. Hyperparameter Optimization Algorithms

The goal of hyperparameter tuning methods is to optimize the performance of a machine learning model by determining the optimal values for the model’s hyperparameters. The most widely used hyperparameter tuning algorithms include:

- 1.

- Grid Search: An exhaustive search technique that evaluates all possible combinations of hyperparameter values specified in a predefined grid [50].

- 2.

- Random Search: A simple yet effective method that samples random combinations of hyperparameter values from specified distributions, often more efficient than grid search [50].

- 3.

- Bayesian Optimization: A model-based optimization technique that uses surrogate models, typically Gaussian processes or tree-structured Parzen estimators, to guide the search for optimal hyperparameters [51].

- 4.

- Gaussian Processes: A Bayesian optimization variant that employs Gaussian processes as the surrogate model to capture the underlying function of hyperparameters and the objective [52].

- 5.

- Tree-structured Parzen Estimators (TPE): A Bayesian optimization variant that uses TPE to model the probability distribution of hyperparameters conditioned on the objective function values [53].

- 6.

- HyperBand: A bandit-based approach to hyperparameter optimization that adaptively allocates resources to different configurations and performs early stopping to improve efficiency [54].

- 7.

- Successive Halving: An approach for identifying the best hyperparameter combination for a particular machine learning model under a constrained budget, which is often the total number of times the model can be trained and evaluated. Its primary goal is to allocate resources efficiently by quickly discarding configurations with poor hyperparameters. Many configurations are first trained with a small budget; the better-performing half is kept and given a larger budget, and this is repeated until a single configuration remains [55].
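Successive halving can be sketched as a short loop. In this illustration, the candidates are drawn at random (as in random search), half of them are discarded each round, and the budget of the survivors is doubled. The search space and `train_and_score` are hypothetical stand-ins for "train for `budget` epochs and return validation accuracy"; a real setup would train actual models.

```python
import math
import random

def successive_halving(sample_config, train_and_score, n_configs=16, min_budget=1, seed=0):
    """Keep the better half of the configurations each round and double their budget."""
    rng = random.Random(seed)
    configs = [sample_config(rng) for _ in range(n_configs)]   # random candidates
    budget = min_budget
    while len(configs) > 1:
        scores = [train_and_score(c, budget) for c in configs]
        # Rank by score (higher is better); indices avoid comparing dicts on ties.
        ranked = sorted(zip(scores, range(len(configs))), reverse=True)
        keep = max(1, len(configs) // 2)
        configs = [configs[idx] for _, idx in ranked[:keep]]
        budget *= 2
    return configs[0]


# HYPOTHETICAL search space: learning rate sampled log-uniformly in [1e-4, 1].
def sample_config(rng):
    return {"lr": 10 ** rng.uniform(-4, 0)}


# HYPOTHETICAL learning curve: saturates with budget, ceiling is highest for lr near 0.1.
def train_and_score(config, budget):
    ceiling = 1.0 - abs(math.log10(config["lr"]) + 1.0) / 4.0
    return ceiling * (1.0 - math.exp(-budget / 4.0))


best = successive_halving(sample_config, train_and_score)
print(best)
```

With 16 candidates, the rounds evaluate 16, 8, 4, and 2 configurations at budgets 1, 2, 4, and 8, so most of the compute is spent on the most promising settings.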

6. Major Challenges in Machine Learning and Research Gaps

Machine learning can be difficult to formulate and apply to a given problem, often requiring several iterations of models, or variants of a model, before arriving at a good design. The ML practitioner must pay careful attention to the training process to ensure a good fit of the model to the data, since underfitting or overfitting will result in poor performance. Model selection is also important and may require experimentation to compare the performance of competing models. Data can be a challenge as well, for example when data are scarce or missing. Finally, ML has hardware requirements: to run ML applications quickly enough to be practical, sufficient computing resources must be available.

6.1. Underfitting

Underfitting occurs when a model fails to capture the underlying patterns in the data, so it performs poorly on both the training data and unseen data. One common cause is insufficient training: for example, if a neural network is trained for too few epochs, it does not make enough passes over the training data to adjust its weights and biases toward an optimal setting.

Underfitting Prevention Techniques

- 1.

- More training data: Increasing the amount of data used in the training process can significantly improve model accuracy [56]. However, to get the most out of the additional data, it is recommended that the complexity of the model be increased as well [57].

- 2.

- Training Time: If a model underfits, increasing its training time (for example, the number of epochs) can improve its accuracy [58].

- 3.

- Feature Engineering: If the features or data are poorly chosen, the model will struggle to learn from the data regardless of which model is selected. Feature engineering can include reducing the input dimensionality with principal component analysis (PCA) and scaling input features to the same range. Other transformations, such as deriving new features from existing ones, may also help.

- 4.

- Outlier Removal: Outliers can distort the training of a regression model, or yield poor forecasts if treated as genuine inputs to a predictor, so filtering them may be necessary.

- 5.

- Increased Model Complexity: Compared with less complicated models, more sophisticated machine learning models, such as multi-layer perceptrons, can sometimes produce higher levels of accuracy. On the other hand, the training procedure itself needs to be optimized; otherwise, there is a risk of overfitting [59].
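Two of the techniques listed above, outlier removal and scaling features to the same range, can be sketched with the standard library alone. The sketch assumes Tukey's interquartile-range fences (k = 1.5) as the outlier rule and min-max scaling to [0, 1]; both are common choices rather than methods prescribed by the text, and the readings are hypothetical.

```python
import statistics

# ASSUMPTION: Tukey's IQR fences are one common outlier rule among many.
def iqr_filter(values, k=1.5):
    """Drop points outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lo, hi = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if lo <= v <= hi]


def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# HYPOTHETICAL sensor readings; 55.0 plays the role of a recording glitch.
readings = [10.2, 9.8, 10.5, 10.1, 9.9, 55.0, 10.3, 10.0]
clean = iqr_filter(readings)
scaled = min_max_scale(clean)
print(clean)
print(scaled)
```

Filtering before scaling matters here: a single extreme value would otherwise compress all the genuine readings into a narrow band near 0.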

6.2. Overfitting

Overfitting occurs when a model is trained excessively on a dataset and fits the training data too closely, including its noise; as a result, the model performs worse on validation, test, or real-world data. Overfitting is illustrated in Figure 19. The problem can be identified when the model's error on held-out data begins to rise again after passing a minimum, even though the training error keeps falling. Overfitting is a classic problem in ML and can easily occur if the number of training epochs is set very high without a stopping criterion based on performance on a held-out validation set.

Figure 19.

Underfitting, a good fit, and overfitting of polynomial regression models of various degrees.
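The behavior shown in Figure 19 can be reproduced numerically by fitting polynomials of increasing degree to a small noisy sample and comparing training and test error. The sketch assumes a noisy sine curve as hypothetical data and degrees 1, 3, and 12 as stand-ins for underfitting, a good fit, and overfitting; it uses NumPy's `Polynomial.fit` for least-squares fitting.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)


def make_data(n):
    # HYPOTHETICAL data: a noisy sine curve on [0, 1].
    x = rng.uniform(0.0, 1.0, n)
    y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.2, n)
    return x, y


x_train, y_train = make_data(15)
x_test, y_test = make_data(200)

results = {}
for degree in (1, 3, 12):
    model = Polynomial.fit(x_train, y_train, degree)   # least-squares polynomial fit
    train_mse = float(np.mean((model(x_train) - y_train) ** 2))
    test_mse = float(np.mean((model(x_test) - y_test) ** 2))
    results[degree] = (train_mse, test_mse)
    print(f"degree {degree:2d}: train MSE {train_mse:.4f}, test MSE {test_mse:.4f}")
```

Training error can only fall as the degree grows, because each model class contains the smaller ones; the test error is typically lowest at the intermediate degree, and the gap between training and test error typically widens at the highest degree.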

Overfitting Prevention Techniques

To avoid overfitting, training can be stopped once the model reaches its error minimum on held-out data, before the error begins to rise again. Monitoring a quantity for early stopping is therefore a good approach to preventing overfitting: for example, an error metric on a validation set can be monitored, and the model at the minimum is saved and used, since it is expected to perform best on test and real-world data. A typical approach is to monitor the validation loss during training and use it as the early-stopping criterion. Another technique is cross-validation, which partitions the dataset into segments (folds) across several training runs. In k-fold cross-validation, each run holds out one fold for validation and trains on the remaining folds, so that every sample is used for both training and validation across the runs. This maximizes the utility of the available data, because the data are reused rather than a single small subset being permanently set aside for validation. Rotating the validation fold in this way also limits the development of a model that is overfit to one particular validation split [60,61,62,63].

- 1.

- More training data

- 2.

- Regularisation

- 3.

- Cross Validation

- 4.

- Early Stopping

- 5.

- Dropout Layer

- 6.

- Reduce Model Complexity

- 7.

- Ensemble Learning
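Two of the techniques above, k-fold cross-validation and early stopping, can be sketched as follows. The fold generator assumes contiguous folds without shuffling (real pipelines usually shuffle first), and the `EarlyStopping` helper and the validation-loss curve are hypothetical illustrations rather than any particular library's API.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) for k contiguous folds; each sample is validated once."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


class EarlyStopping:
    """HYPOTHETICAL helper: signal a stop when validation loss stalls for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        if val_loss < self.best_loss - self.min_delta:
            # New minimum: a real training loop would save a checkpoint here.
            self.best_loss, self.best_epoch, self.bad_epochs = val_loss, epoch, 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


for train_idx, val_idx in k_fold_indices(10, 3):
    print(len(train_idx), val_idx)

# HYPOTHETICAL validation-loss curve: falls, bottoms out, then rises as the model overfits.
val_curve = [0.90, 0.60, 0.45, 0.40, 0.38, 0.39, 0.41, 0.44, 0.50, 0.57]
stopper = EarlyStopping(patience=3)
for epoch, loss in enumerate(val_curve):
    if stopper.step(epoch, loss):
        break
print(stopper.best_epoch, stopper.best_loss, epoch)   # → 4 0.38 7
```

Training stops at epoch 7, three epochs after the minimum, and the checkpoint from epoch 4 is the one kept.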

6.3. Model Selection

Machine learning models can be very diverse depending on the type of problem, and no single model fits all problems. It has been observed that, for the same data, different machine learning algorithms yield different accuracies. Moreover, as model complexity increases, training time and hardware requirements can grow rapidly. Therefore, when choosing a robust machine learning model, model accuracy, training time, and required resources should all be considered.

To find the best model for the problem, data preprocessing and data visualization should be performed before model building to examine the data distribution and other statistical measures. Furthermore, dimensionality reduction and outlier removal can be performed to obtain a model that generalizes better. Additionally, model selection techniques based on information criteria can be used to compare a set of candidate models and choose the best among them. These criteria balance the goodness of fit of a model against its complexity, penalizing overly complex models that may overfit the data. Some widely used information criteria are:

- 1.

- Akaike Information Criterion (AIC): AIC is a model selection criterion introduced by Akaike [64]. It estimates the relative quality of a set of models and is given by $\mathrm{AIC} = 2k - 2\ln L$, where k is the number of parameters in the model and L is the maximized value of the likelihood function for the model.

- 2.

- Bayesian Information Criterion (BIC): Also known as the Schwarz Information Criterion (SIC), BIC is a model selection criterion introduced by Schwarz [65]. It is similar to AIC but has a stronger penalty for model complexity: $\mathrm{BIC} = k\ln n - 2\ln L$, where n is the number of samples, k is the number of parameters in the model, and L is the maximized value of the likelihood function for the model.

- 3.

- Minimum Description Length (MDL): MDL is an information-theoretic model selection criterion based on the idea of data compression [66]. It seeks the model that yields the shortest total description length, that is, the length needed to describe the model plus the length needed to encode the data with the help of that model. MDL is well suited to selecting a model for a given dataset, and it is often considered advantageous over AIC and BIC because it does not require assumptions about the data distribution or the prior probabilities of the models.
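AIC and BIC can be computed for a family of candidate models once their maximized log-likelihoods are known. The sketch below compares polynomial regressions of increasing degree under the assumption of independent Gaussian errors, for which the maximized log-likelihood has the closed form $-\tfrac{n}{2}\left(\ln(2\pi\hat\sigma^2) + 1\right)$ with $\hat\sigma^2 = \mathrm{RSS}/n$. The quadratic data are hypothetical, and counting the noise variance as an extra parameter is a modeling assumption.

```python
import numpy as np
from numpy.polynomial import Polynomial


def gaussian_log_likelihood(residuals):
    # Maximized log-likelihood of i.i.d. Gaussian errors, with sigma^2 = RSS / n.
    n = residuals.size
    sigma2 = np.sum(residuals ** 2) / n
    return -0.5 * n * (np.log(2 * np.pi * sigma2) + 1)


def aic(log_l, k):
    return 2 * k - 2 * log_l


def bic(log_l, k, n):
    return k * np.log(n) - 2 * log_l


rng = np.random.default_rng(1)
x = np.linspace(-1, 1, 60)
# HYPOTHETICAL data generated by a quadratic plus Gaussian noise.
y = 0.5 - 1.2 * x + 2.0 * x ** 2 + rng.normal(0, 0.3, x.size)

scores = {}
for degree in range(1, 7):
    model = Polynomial.fit(x, y, degree)
    log_l = gaussian_log_likelihood(y - model(x))
    k = degree + 2   # ASSUMPTION: polynomial coefficients plus the noise variance
    scores[degree] = (aic(log_l, k), bic(log_l, k, x.size))

best_aic = min(scores, key=lambda d: scores[d][0])
best_bic = min(scores, key=lambda d: scores[d][1])
print(best_aic, best_bic)
```

Because $\ln 60 > 2$, every BIC score here exceeds the corresponding AIC score, and BIC penalizes each extra coefficient more heavily.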

6.4. Scalability

Scalability is a major challenge in machine learning because, as the size of datasets and the complexity of models increase, the computational resources and time required for training and inference grow rapidly. Large-scale datasets and complex models demand high memory capacity, processing power, and storage, which may not be readily available or affordable for all users. Additionally, the need to distribute and parallelize computations across multiple machines or GPUs adds to the complexity of the problem, requiring efficient algorithms and infrastructure to handle communication and synchronization among the computing resources [67].

6.5. Hyperparameter Tuning

Hyperparameter tuning is one of the most challenging tasks in machine learning, for several reasons. First, the hyperparameters themselves can be daunting, as they control various aspects of model architecture and training, such as the learning rate, regularization, and network depth. The number and variety of hyperparameters often result in a vast search space, making it difficult to find the optimal combination. Second, evaluating each hyperparameter setting can be computationally expensive, as it requires training and validating the model, which can take significant time and resources, especially for large-scale datasets and complex models. Third, the optimal hyperparameters may differ across datasets, tasks, and even model initializations, making it difficult to transfer the results of tuning from one problem to another. Lastly, many hyperparameter tuning algorithms, such as grid search and random search, can be inefficient because they test numerous combinations without considering interactions between hyperparameters or leveraging prior knowledge. This has led to the development of more advanced techniques, such as Bayesian optimization, to improve the efficiency of hyperparameter tuning. Nevertheless, hyperparameter tuning remains a challenging and time-consuming aspect of machine learning.
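To make the Bayesian optimization idea concrete, the sketch below fits a Gaussian-process surrogate to the evaluations made so far and picks the next hyperparameter value by maximizing expected improvement. It is a minimal one-dimensional illustration under stated assumptions: the squared-exponential kernel with length scale 0.2, the jitter term, the 501-point candidate grid, and `validation_loss` (a stand-in for training and validating a model) are all hypothetical choices, not a reference implementation.

```python
import math
import numpy as np


def rbf_kernel(a, b, length=0.2):
    """Squared-exponential kernel with unit prior variance (ASSUMED length scale)."""
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / length ** 2)


def gp_posterior(x_obs, y_obs, x_query, jitter=1e-6):
    """Posterior mean and standard deviation of a zero-mean GP at `x_query`."""
    K = rbf_kernel(x_obs, x_obs) + jitter * np.eye(len(x_obs))
    K_s = rbf_kernel(x_obs, x_query)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_obs))
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = np.clip(1.0 - np.sum(v ** 2, axis=0), 1e-12, None)
    return mean, np.sqrt(var)


def expected_improvement(mean, std, best_y):
    """EI for minimization: E[max(best_y - f, 0)] under the GP posterior."""
    z = (best_y - mean) / std
    cdf = 0.5 * (1.0 + np.array([math.erf(v / math.sqrt(2.0)) for v in z]))
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    return (best_y - mean) * cdf + std * pdf


def bayes_opt(objective, n_init=3, n_iter=12, seed=0):
    rng = np.random.default_rng(seed)
    grid = np.linspace(0.0, 1.0, 501)                 # candidate hyperparameter values
    x_obs = rng.uniform(0.0, 1.0, n_init)             # a few random initial evaluations
    y_obs = np.array([objective(x) for x in x_obs])
    for _ in range(n_iter):
        mean, std = gp_posterior(x_obs, y_obs, grid)  # surrogate model of the objective
        ei = expected_improvement(mean, std, y_obs.min())
        x_next = grid[np.argmax(ei)]                  # most promising point to try next
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, objective(x_next))
    i = int(np.argmin(y_obs))
    return float(x_obs[i]), float(y_obs[i])


# HYPOTHETICAL validation loss as a function of one normalized hyperparameter.
def validation_loss(x):
    return (x - 0.3) ** 2


best_x, best_y = bayes_opt(validation_loss)
print(best_x, best_y)
```

Unlike grid or random search, each new evaluation here is chosen using all previous results, which is where the efficiency gain comes from when evaluations are expensive.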

6.6. Data Availability and Missing Data

Another major bottleneck in machine learning research is a lack of data. In sectors such as healthcare, defense, or energy, data are highly sensitive because they contain confidential information about stakeholders. In such cases, before the data can be used for machine learning, they must be anonymized so that no personal information is exposed.

Missing data can degrade model performance and may lead to underfitting. Potential causes of missing data include faulty data-recording equipment, communication failures that prevent data from being sent to the storage from which the machine learning model retrieves them, and power outages. There are also human causes, such as an employee forgetting to make a data entry or an untrained employee accidentally deleting data.

The missing data problem is tackled with data imputation. Many data imputation techniques have been proposed, such as LSTM-based imputation, mean-value imputation, sliding-window imputation for time series data, and historical-mean imputation.
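The simpler imputation strategies listed above (mean-value, sliding-window, and historical-mean imputation) can be sketched as follows; LSTM-based imputation is omitted. Missing readings are represented as `None`, and the window length, the period, and the readings themselves are hypothetical. Gaps with no usable history are left as `None` in this sketch.

```python
def mean_impute(series):
    """Fill every gap with the mean of all observed values."""
    observed = [v for v in series if v is not None]
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in series]


def sliding_window_impute(series, window=3):
    """Fill a gap with the mean of up to `window` preceding (already filled) values."""
    filled = []
    for v in series:
        if v is None:
            recent = [r for r in filled[-window:] if r is not None]
            v = sum(recent) / len(recent) if recent else None
        filled.append(v)
    return filled


def historical_mean_impute(series, period):
    """Fill a gap with the mean of observed values at the same phase in earlier periods
    (e.g. the same hour on previous days when period=24)."""
    out = list(series)
    for i, v in enumerate(series):
        if v is None:
            history = [series[j] for j in range(i - period, -1, -period) if series[j] is not None]
            out[i] = sum(history) / len(history) if history else None
    return out


# HYPOTHETICAL readings with a period of 4 samples and two gaps.
readings = [10.0, 12.0, 14.0, 12.0, 11.0, 13.0, None, 12.0, 10.0, None, 15.0, 13.0]
print(mean_impute(readings))
print(sliding_window_impute(readings, window=3))
print(historical_mean_impute(readings, period=4))
```

The three strategies give different fills for the same gap (12.2, 12.0, and 14.0 at index 6), which is why the choice should reflect how the data actually behave, for example whether they have a daily cycle.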

One Model Doesn’t Fit All

The same algorithm cannot be used for all models in machine learning because different models have different characteristics and requirements. The following are a few reasons for this:

- 1.

- Different model architectures: Different machine learning models have different architectures and learning algorithms optimized for specific problems.

- 2.

- Model objectives: Different models have different objectives, such as minimizing mean squared error, maximizing likelihood, or maximizing accuracy. Therefore, different algorithms are used to optimize each model’s objective function.

- 3.

- Model complexity: Different models have varying levels of complexity, and a more complex model may require a different algorithm to optimize its parameters. For example, linear regression models can be fitted in closed form (least squares) or with plain gradient descent, while deep learning models are trained with gradient-based optimizers, such as stochastic gradient descent, using gradients computed by backpropagation.

- 4.