1. Introduction

Writing scientific works is closely tied to acquiring knowledge, finding appropriate information, and, in particular, detecting scientific gaps. The vast number of published scientific works and the related amount of information force researchers to decide which information is relevant and which can be omitted. The capability of humans to process large amounts of data and the adverse effects of information overload have been discussed in previous studies [1,2]. Information is vital in any decision-making process [3,4]. However, according to Buchanan and Kock, the vast amount of information available is more than required [2] and forces a decision about which information should be considered valuable. To address this issue, studies have shown that visualizations [5] and recommendation systems [6,7] can be utilized as countermeasures. Recommendation systems utilize various approaches such as deep learning [7], similarity algorithms, and natural language processing to identify and recommend relevant items of interest.

Having relevant information gives scientists a wide range of benefits and abilities. It allows scientists to find research gaps, assist the development of innovations, identify potential challenges, better understand possible future implications, create new insights, and improve the quality of research projects.

In our previous work, we examined how to identify relevant topics based on the interests of researchers and how to present the relevant publications found in a manner that allows fast annotation during exploration [8]. In our current work, we develop our idea further by analyzing publications or text as they are being written. As the researcher writes their work, a topic modeling approach analyzes the text at regular intervals. Through similarity algorithms, similar publications can be found. Using topic modeling approaches allows searches to be conducted using latent topics. This makes the search for relevant publications independent of keywords that researchers might or might not know and would otherwise have to enter in a manual search. Furthermore, the combination of autonomous topic modeling and similarity calculation allows searches to be carried out on-the-fly as the researcher is writing.

Given the scenario that a researcher is writing a short passage of text, the system provides publication recommendations that assist them in finding topically relevant publications. They can use these to begin the research process. In an alternative scenario, the researcher has already conducted initial research and is writing up the results. As they write, the system provides precise recommendations that they did not find during their initial search. They read the overlooked publications, which leads to new insights that help them decide on improvements or new approaches that optimize their knowledge and research.

Our approach demonstrates how relevant publications can be found by utilizing similarity algorithms on topics extracted in real-time from a publication as it is being written. This approach would allow researchers to receive recommendations for publications that might still be unknown to them. We address this problem with our approach and a three-fold contribution: (1) a novel approach to publication recommendations based on real-time text analysis, (2) an examination of which combinations of topic modeling and similarity algorithms provide adequate recommendations, and (3) an analysis of the impact text length has on topic modeling.

2. Related Work

In this section, we briefly outline how information is searched for, with a focus on exploratory searches, and we describe approaches and proposals that are topically connected to our approach. As this approach is novel and, based on our research, no real-time recommender for scientific texts has yet been proposed by others, we define related work as approaches and proposals that provide real-time text analysis, utilize topic modeling approaches in science, or serve as recommendation systems for scientific publications.

2.1. Exploratory Search

According to Janiszewski, two different types of human search can be distinguished, namely goal-directed search and exploratory search [9]. An exploratory search aims to create a deeper insight into a topic and discover various related aspects of the topic, while a goal-directed search usually aims to answer a specific question [10]. Marchionini, on the other hand, divides human search into three different types or “tasks” [11]. Marchionini refers to these tasks as looking up (“lookup”), learning (“learn”), and investigating (“investigate”). The latter two are subordinated to exploratory search. Marchionini depicts the three tasks of human search in the form of overlapping clouds, because humans, by combining individual activities of different tasks, can perform different activities of a search task in parallel. This model is based on the assumption that people seek information due to internal psychological motivations and needs. A motivating factor may be a specific problem that the seeker wants to solve, such as closing an information gap on a topic that is unknown to the seeker.

In lookup search tasks, less complex activities using carefully specified search queries usually produce well-structured results in the form of numbers, names, short statements, or specific files [11]. The exploratory search tasks, on the other hand, are usually used for complex activities where the search outcome is not known in advance. White and Roth divide this into two main activities, namely exploratory browsing and focused search [12]. Exploratory browsing is performed so that searchers can better define their information needs and, based on that, gain new ideas and insights. In focused search, the search results are examined in more detail than in exploratory browsing by extracting relevant information from the search results.

According to White and Roth, people tend to search exploratively when they are unfamiliar with the domain or topic of their target or do not know, or are unsure, how to reach their search goal [12]. Furthermore, uncertainty about the search goal itself can lead people to search exploratively. Due to this uncertainty, search queries in exploratory search are usually non-specific and broader in scope, which leads to a large number of results, many of which are not relevant to the searcher.

2.2. Publication Recommenders in Science

Shoja et al. published a literature review on tag-based recommendation systems by presenting and comparing different industries and system approaches from 2011 to 2018 [13]. The data selected for the investigation came from the databases ACM, Gartner, IEEE Xplore, Lynda.com, ProQuest Science, and SpringerLink. Included were the filtering methods collaborative filtering, content filtering, and hybrid filtering. The individual sectors were music, movies, e-learning, documents, social media, food, and tourism. Their work showed that book and article sectors use tag-based recommendations, but these recommenders are not as popular as in the web, movie, and music sectors and still hold potential. For evaluating results, Root Mean Square Error (RMSE), Mean Reciprocal Rank (MRR), Precision, and F-Score were identified as common methods.

Kieu et al. examined various existing recommendation systems and tried to improve them further [14]. They used pre-trained Bidirectional Encoder Representations from Transformers (BERT) models to analyze how they can be further optimized. These models make recommendations based on titles, abstracts, or entire contents of documents. The dataset for this study was the ACL Anthology Network Corpus, consisting of 22,085 publications, which was further reduced after removing publications due to lack of relevance. The data used included information on title, abstract, authors, and venues. The different BERT models were analyzed using MRR, the F1 score, and Precision and Recall. The focus of the similarity metrics was on Cosine similarity and Euclidean distance. The best result with all metrics used was achieved by the BERT+SubRef model. The MRR improved by 6.56%, and the F1 score showed an improvement of 3.88%, 3.67%, 3.68%, and 3.69% for F1@10, F1@20, F1@50, and F1@100, respectively.

Guo et al. used a two-layer graphical vector model to investigate whether more accurate predictions can be made for a citation recommendation system using this model [15]. This model used both document titles and abstracts. The output of this model is a top-N ranking list with the corresponding recommendations. The test dataset for the model was the ACL Anthology Network (AAN) 2013 dataset, which contains over 20,000 publications published from 1965 to 2013. The procedure for preparing the data was as follows: abstracts and document titles were extracted, then all words containing three or fewer letters were removed. In the next step, stop words were removed, and then a stemming procedure based on the Porter stemmer algorithm was applied. Furthermore, words that occurred in the dataset fewer than ten times were removed, reducing the dataset from over 20,000 documents to approximately 12,500 documents. Recall and accuracy were used for evaluation, and Euclidean distance and normalized discounted cumulative gain (NDCG) were used for similarity calculations.

Nair et al. investigated whether converting data into binary code is helpful in terms of improving similarity and accuracy [16]. The data used, which include author names, keywords, contents, and abstracts, were represented in binary form or ASCII values using a Similarity Analysis Using Binary Encoded Data (SABED) algorithm. The data that were analyzed came from IEEE, ACM, and Wiley. The similarity metrics Jaccard similarity and Jaro similarity were used for the analysis, and Accuracy was used for the evaluation. The SABED method was compared with user-based collaborative filtering (UBCF) and item-based collaborative filtering (IBCF) in the evaluation. The result showed that the SABED method achieved significantly better values for Accuracy compared to UBCF and IBCF. UBCF achieved values of 0.86, 0.5, and 0.35, and IBCF achieved values of 0.68, 0.545, and 0.4. The measurements of SABED resulted in values of 0.95, 0.7, and 0.47. Thus, the SABED algorithm achieved the best value in all measurements.

2.3. Topic Modeling in Science

In a comparative study, Syed and Spruit investigated Latent Dirichlet Allocation (LDA) with both scientific abstracts and full texts [17]. On scientific full texts only, Ferri et al., among others, applied LDA to classify a total of 1800 articles from history journals [18]. Asmussen and Møller developed a framework based on LDA and scientific full texts to support researchers in literature retrieval tasks [19].

In the context of scientific publications, Bellaouar et al. investigated LDA and Latent Semantic Analysis (LSA) with a data set that consisted of conference papers from the “Neural Information Processing Systems” conference [20]. The influence of creating bi-grams and applying lemmatization on topic model coherence values was also investigated in the context of this work. Four different datasets were created: one with bi-grams and lemmatization, a second without bi-grams and lemmatization, and a third and fourth with either bi-grams or lemmatization. LDA and LSA were applied to each of these data sets, and then coherence values were compared using C_v and C_umass, with the LDA-based models providing better values in all use cases. Furthermore, performing lemmatization in the data preparation step was found to have a positive impact on the C_v coherence value.

Anantharaman et al. tested LDA, LSA, and Non-negative Matrix Factorization (NMF) on a data set with shorter texts in the form of short news reports and on a data set with short summaries of medical papers, i.e., somewhat longer texts [21]. Furthermore, the two methods, BoW and TF-IDF, were compared for vectorizing the words. Altogether, six models were trained and evaluated via precision, recall, F1 Score, accuracy, Cohen’s Kappa Score, and Matthews Correlation Coefficient. LSA achieved the best results when applied to shorter texts, while LDA achieved the best values for longer texts.

Arif used LDA topic modeling and WordNet to examine how accurate predictions can be made with respect to citation recommendation systems [22]. The dataset used for this was from IEEE Xplore and contained over 8000 citations from 200 publications. For the evaluation of the results, the accuracy was calculated.

2.4. Real-Time Text Analysis

The search for real-time text analysis approaches in science and academia most commonly led to sentiment analysis use cases in which social networks were monitored. For example, Aslam et al. developed a program that used live Twitter data to average tweet sentiment and identify popular tweets [23]. For this purpose, tweets on any topic were collected in real-time and rated as “positive”, “neutral”, or “negative” after the data preprocessing step using sentiment analysis. In their work, Haripriya and Kumari [24] created a similar system that used real-time Twitter data to determine the current top trending topic and analyzed the tweets associated with the topic using sentiment analysis. A real-time analysis of comments in social media is addressed in the work of Liu et al., in which an algorithm was presented and implemented that clusters the comments of a post and updates the results as soon as new comments on that post are uploaded by users [25].

Work in the context of real-time text processing without reference to social network data was done by Rajpirathap et al. [26]. Their work aimed to design and implement a program for real-time translation of Sri Lanka’s Sinhala and Tamil languages based on a statistical machine translation model. The model was trained on a corpus of parliamentary documents. This corpus was chosen explicitly because grammatical and lexical correctness could be guaranteed for the documents it contained.

Hucko et al. presented a tool intended to optimize the communication process between teachers and learners by utilizing real-time analysis of short answers [27]. In the first step, a trained k-nearest-neighbor classifier was used to classify answers from learners into “correct answers” and “incorrect answers”. The answers were given by learners via cell phone in response to a question from the teacher in the Slovak language and then classified. In the second step, the answers classified as “incorrect” by the classifier were clustered using an unsupervised algorithm in combination with affinity propagation. The aim was to show the teacher in real time which difficulties the learners had in answering the question so that the teacher could react immediately.

3. General Approach

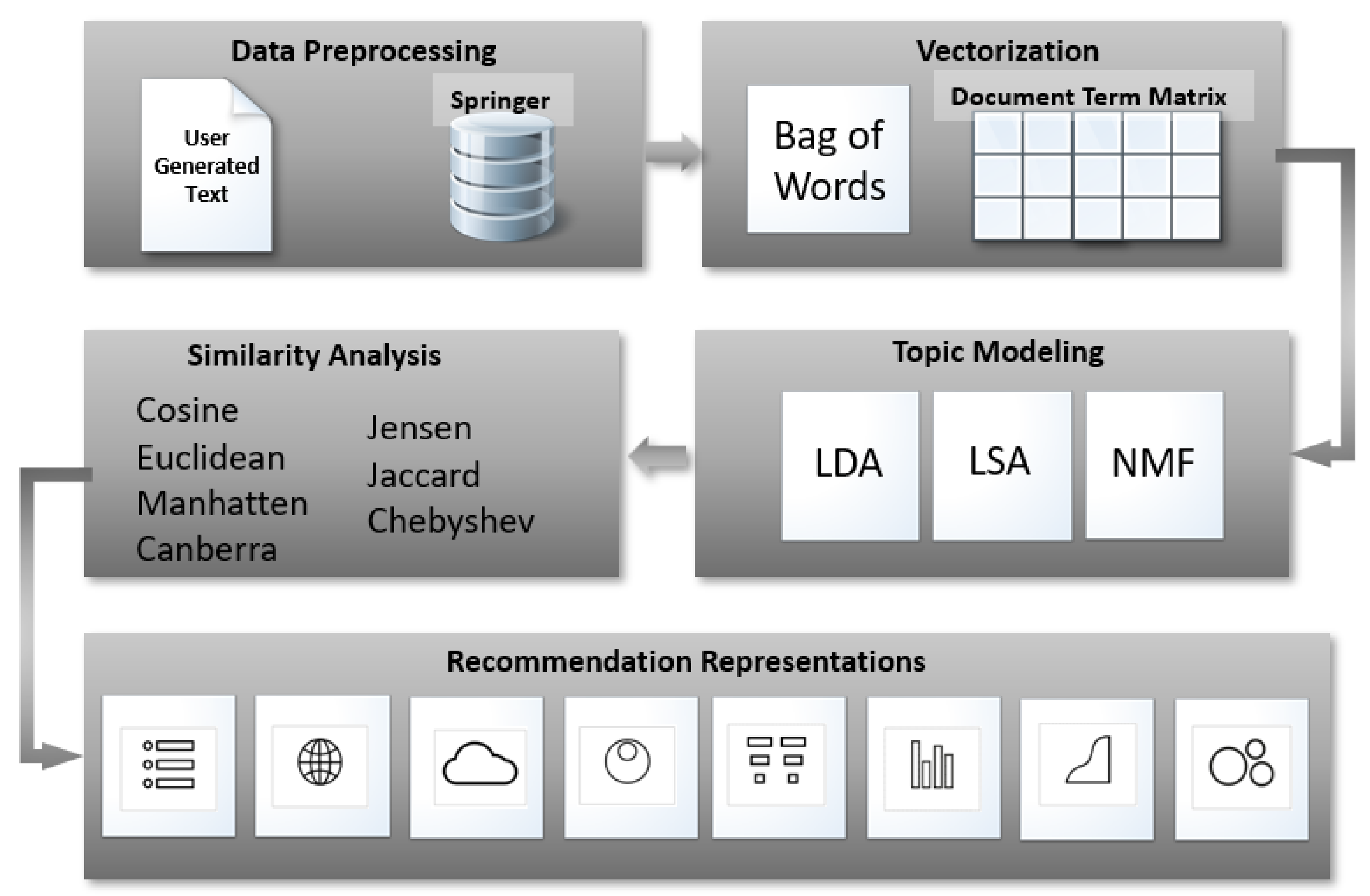

The general approach is illustrated in Figure 1. This illustration shows that the proposal uses topic modeling to identify latent topics within two sources: the publications stored in a database and the written text. Both written text and incoming publications stored in a database must first be vectorized as a prerequisite for conducting topic modeling. To save resources and time, the identified latent topics are stored in the database after each topic modeling process, which takes place once a week on weekends. Each of the three available topic models is carried out and their results are stored. The topic modeling process is routinely carried out to consider new publications, topics, and words that can arise with each new publication. The topic modeling of the written text is carried out every two minutes from the moment the researcher begins writing. The weekly training of the topic models takes place using all publications stored in our database at the initialization time. Both the database topics and the written text topics are then compared. Advanced users can choose what topic modeling approaches and similarity measurements are used, whereas the default approach utilizes LDA and Cosine. This comparison identifies and lists the ten most topically similar publications for the user. Every two minutes, that list is updated. This means that as the researcher keeps writing and thus expanding their text, the recommended publications are also being shaped and becoming more and more precise.

3.1. Data Extraction

The data extraction is divided into two parts: data extraction for our database and data extraction from a LaTeX editor in real-time. The data extraction for our publication database describes the process of gathering published works. The data extracted from the LaTeX editor in real-time are the textual data that are being written by the researcher in our implemented prototype. The publication data used are extracted from the Springer Nature Application Programming Interface (API), which provides peer-reviewed publications of high quality. The prototype of our approach holds 92,651 scientific publications. Overall, 5455 were removed by data cleaning for various reasons, with the most common reason being that documents were too short (fewer than 300 words), as is illustrated in Figure 2. These were commonly corrections or short additions to already published publications. The remaining publications held 225,354,555 words, of which 297,227 are considered unique. Later on, the publications that are written with the system should be stored and used as well, increasing the number of publications that can be recommended.

3.2. Preprocessing

All dataset documents were preprocessed by removing all special characters using Python’s regular expressions module. This step is followed by a tokenization of the words and a conversion of upper case letters to lower case letters. The preprocessing is completed with the removal of stop words utilizing a stop word list from the library “NLTK” (Natural Language Toolkit) and the reduction of all words to their lemmas by using the “WordNetLemmatizer”. For the user-generated text, the preprocessing is essentially identical. Only the stop word list differs because it contains additional LaTeX commands, so that the commands used within LaTeX (the character sequences beginning with a backslash) are removed.
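The preprocessing steps above can be sketched as follows. This is a minimal illustration and not the prototype's code: the tiny `STOP_WORDS` set and the identity `lemmatize` default are stand-ins (assumptions) for NLTK's stop word list and the WordNetLemmatizer, so that the example runs without downloading NLTK data, and the function name `preprocess` is hypothetical.

```python
import re

# Stand-in stop word list (assumption); the text uses NLTK's English list,
# extended with LaTeX commands for user-generated text.
STOP_WORDS = {"the", "a", "an", "of", "and", "is", "are", "in", "to", "for"}

LATEX_COMMAND = re.compile(r"\\[a-zA-Z]+\*?")   # e.g. \section, \cite
SPECIAL_CHARS = re.compile(r"[^A-Za-z0-9\s]")   # everything except letters, digits, whitespace


def preprocess(text, strip_latex=False, lemmatize=lambda word: word):
    """Remove special characters, tokenize, lowercase, drop stop words, lemmatize."""
    if strip_latex:
        # Only needed for text coming from the LaTeX editor.
        text = LATEX_COMMAND.sub(" ", text)
    text = SPECIAL_CHARS.sub(" ", text)
    tokens = text.lower().split()
    return [lemmatize(token) for token in tokens if token not in STOP_WORDS]


tokens = preprocess(r"\section{Topic Models} are useful!", strip_latex=True)
```

To approximate the described setup more closely, `lemmatize` could be replaced by `nltk.stem.WordNetLemmatizer().lemmatize` and `STOP_WORDS` by `nltk.corpus.stopwords.words("english")`, both of which require the corresponding NLTK data to be installed.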

3.3. Vectorization

A crucial element of NLP is the vectorization of text and terms. This is considered a requirement for topic modeling, which is described in the following subsection. The vectorization of all data set documents is therefore carried out by means of the “CountVectorizer” of scikit-learn. This vectorizes the words in the documents using the “fit_transform” method based on the Bag of Words (BoW) procedure and generates a document term matrix for the data set. All words that occur in more than 50 percent of the documents or in fewer than two documents are removed during the vectorization process. The corresponding thresholds, set via “max_df” and “min_df”, are based on empirical values.

The document term matrix of the user-generated text is created with the vectorizer that was fitted on the documents of the dataset, by applying its “transform” method. Because the same vectorizer is used, the resulting matrix has the same term dimension as the document term matrix of the data set. An identical term dimension of the matrices is essential for the subsequent process steps.
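A minimal sketch of this vectorization step is shown below. The toy corpus and the user text are invented for illustration; the `max_df=0.5` and `min_df=2` values mirror the thresholds stated in the text, while all other settings are scikit-learn defaults and therefore assumptions.

```python
from sklearn.feature_extraction.text import CountVectorizer

# Hypothetical, already preprocessed toy corpus.
corpus = [
    "topic model latent topic",
    "topic model similarity measure",
    "neural network training",
    "neural network similarity",
    "graph database query",
]

# Drop terms found in more than 50% of the documents or in fewer than two documents.
vectorizer = CountVectorizer(max_df=0.5, min_df=2)
doc_term = vectorizer.fit_transform(corpus)      # fit on the dataset documents

# The user text is only transformed, so it shares the dataset's term dimension.
user_vec = vectorizer.transform(["similarity of topic model output"])
```

Terms of the user text that are not in the fitted vocabulary (here "of" and "output") are silently ignored, which is why both matrices end up with the same number of columns.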

3.4. Topic Modeling

Our approach examined LSA, LDA, and NMF topic models. Our decision to examine these three was due to their popularity. First, based on the trained topic model the user selects, the topic distribution of the preprocessed user-generated text is determined using the “transform” method. Then, “argmax” identifies the topic with the highest value in this distribution, and the text is assigned to this topic. This process is also executed for all documents of the data set. Once both the user-generated text and all documents of the data set are assigned to exactly one topic, the documents that belong to the same topic as the user-generated text are determined. These documents are stored in a Pandas data frame and represent the objects of comparison to the user-generated text in the similarity analysis.
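The topic assignment and candidate selection described above might look roughly like the following sketch. The corpus, titles, and user text are hypothetical, and only two topics are used because the toy corpus is tiny; LDA is chosen here since the text names it as the default model.

```python
import pandas as pd
from sklearn.decomposition import LatentDirichletAllocation
from sklearn.feature_extraction.text import CountVectorizer

# Hypothetical toy data standing in for the preprocessed publications.
titles = ["Paper A", "Paper B", "Paper C", "Paper D"]
corpus = [
    "topic model latent topic inference",
    "latent topic model evaluation coherence",
    "neural network image classification",
    "image classification neural training",
]
user_text = "coherence of a latent topic model"

vectorizer = CountVectorizer()
doc_term = vectorizer.fit_transform(corpus)

# Two topics only for this toy example (assumption).
lda = LatentDirichletAllocation(n_components=2, random_state=0)
doc_topic_dist = lda.fit_transform(doc_term)     # one topic distribution per document
doc_topics = doc_topic_dist.argmax(axis=1)       # dominant topic per document

user_topic_dist = lda.transform(vectorizer.transform([user_text]))
user_topic = int(user_topic_dist.argmax(axis=1)[0])

# Keep only the publications that share the user's dominant topic.
publications = pd.DataFrame({"title": titles, "topic": doc_topics})
candidates = publications[publications["topic"] == user_topic]
```

Restricting the comparison to `candidates` keeps the subsequent similarity analysis small, at the cost of ignoring publications whose dominant topic differs from that of the user text.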

At this time, the training of these models must be started manually by the web application administrator. This ensures that this time-consuming process is only started when necessary, for example, when new documents are added to the data set. After the experimental phase, the training will be conducted automatically once every week. The topic models generated in this process step are saved as “pickle” files after completing the training using the “joblib” program library. The models were implemented using the “scikit-learn” program library. The parameter values for the learning rate and the number of maximum iterations were determined in the models based on empirical values and scientific studies on similar data sets, such as the work of Asmussen and Møller [19]. The parameter value for the number of topics to be extracted was determined using the C_v coherence values. It was determined that, when applied to this dataset, the optimal number of topics is 8 for LSA and 10 for LDA and NMF, as described in detail in the following section.
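As a rough sketch of how the three models could be configured and persisted with joblib: the topic counts follow the values reported above, whereas the use of `TruncatedSVD` as the LSA implementation, the file names, and the helper functions `save_models` and `load_model` are assumptions made for illustration. Fitting is omitted to keep the example short.

```python
import os

import joblib
from sklearn.decomposition import NMF, LatentDirichletAllocation, TruncatedSVD

# Topic counts as reported in the text: 8 for LSA, 10 for LDA and NMF.
# TruncatedSVD is assumed here as the scikit-learn class used for LSA.
models = {
    "lsa": TruncatedSVD(n_components=8, random_state=0),
    "lda": LatentDirichletAllocation(n_components=10, random_state=0),
    "nmf": NMF(n_components=10, random_state=0),
}


def save_models(models, directory):
    """Persist each model as a pickle file via joblib (hypothetical file layout)."""
    paths = {}
    for name, model in models.items():
        paths[name] = os.path.join(directory, f"{name}.pkl")
        joblib.dump(model, paths[name])
    return paths


def load_model(directory, name):
    """Load the model the user selected, e.g. 'lda'."""
    return joblib.load(os.path.join(directory, f"{name}.pkl"))
```

In a setup like the one described, `save_models` would be called after each training run, and `load_model` whenever the written text is analyzed, so that the costly training is decoupled from the two-minute recommendation cycle.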

3.5. Similarity Analysis

The similarity measurement was performed, using the metric selected by the user, between the user-generated text and the documents of the dataset that have been assigned to the same topic as the user-generated text based on a topic model. Seven different metrics can be selected for similarity comparison: cosine similarity, Euclidean distance, Manhattan distance, Jaccard distance, Chebyshev distance, Canberra distance, and Jensen–Shannon divergence. These metrics were implemented using the “scikit-learn” or “scipy” program libraries. As a result, this process step generates a JSON (JavaScript Object Notation) file containing the titles of the ten papers that, depending on the selected metric, have the highest similarities or lowest distances to the user-generated text.
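The ranking step could be sketched with SciPy as shown below. Several details are assumptions: the comparison is run on term-count vectors, cosine is expressed as a distance (one minus the similarity) so that all seven metrics rank in ascending order, vectors are binarized for Jaccard and normalized for Jensen–Shannon, and the function name `top_similar` and the JSON layout are hypothetical.

```python
import json

import numpy as np
from scipy.spatial.distance import cdist

# Maps the metric names from the text to SciPy's metric identifiers.
METRICS = {
    "cosine": "cosine",
    "euclidean": "euclidean",
    "manhattan": "cityblock",
    "jaccard": "jaccard",
    "chebyshev": "chebyshev",
    "canberra": "canberra",
    "jensen-shannon": "jensenshannon",
}


def top_similar(user_vec, doc_vecs, titles, metric="cosine", k=10):
    """Return a JSON string with the k closest documents (smallest distance first)."""
    user = np.asarray(user_vec, dtype=float).reshape(1, -1)
    docs = np.asarray(doc_vecs, dtype=float)
    if metric == "jaccard":
        # Jaccard is defined on sets, so only term presence is compared.
        user, docs = user > 0, docs > 0
    elif metric == "jensen-shannon":
        # Jensen-Shannon expects probability distributions (rows must be non-empty).
        user = user / user.sum(axis=1, keepdims=True)
        docs = docs / docs.sum(axis=1, keepdims=True)
    distances = cdist(user, docs, metric=METRICS[metric])[0]
    order = np.argsort(distances)[:k]
    return json.dumps(
        [{"title": titles[i], "distance": float(distances[i])} for i in order]
    )


# Hypothetical count vectors over a five-term vocabulary.
demo_titles = ["A", "B", "C"]
demo_docs = [[2, 0, 0, 0, 2], [0, 1, 1, 1, 0], [1, 0, 0, 1, 1]]
demo_user = [1, 0, 0, 1, 1]
ranking = json.loads(top_similar(demo_user, demo_docs, demo_titles, "cosine"))
```

In this toy example, document "C" has exactly the same term counts as the user text and is therefore ranked first under every metric.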

3.6. Result Presentation

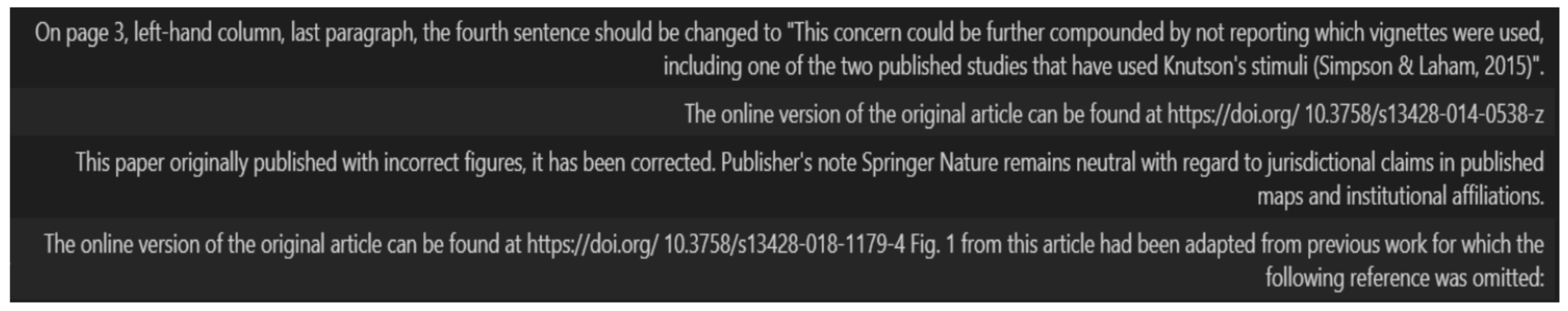

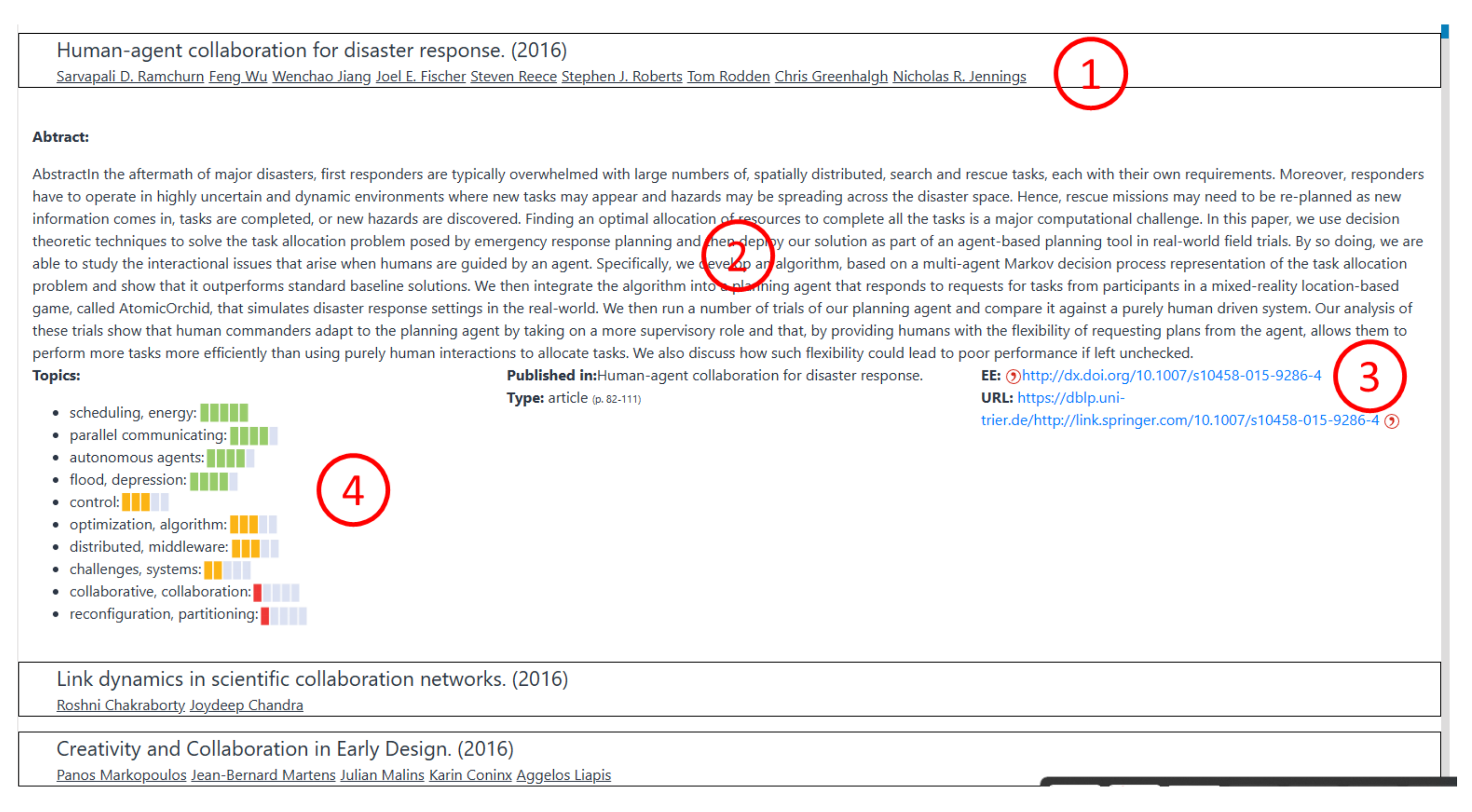

The results of the similarity measurement were transmitted to the Python “Flask” application as a JSON file and forwarded from there to the frontend. There, the titles of the ten most similar scientific papers were mapped to the numerical results of this similarity measurement. The latter are displayed in the prototype of the system shown in Figure 3 for evaluation purposes. To identify the best possible presentation approach, we designed different frontends. Each frontend utilizes the same approach and results but presents them in a different way.

The result presentation was conducted alongside the LaTeX editor workspace as illustrated in Figure 3. We decided to use the open-source Ace Editor as our LaTeX editor, which we adapted for our test purposes. The editor is structured as well-known LaTeX editors commonly are, with the editing or writing area and a PDF viewer (1). Adaptations are found below and above these two known areas. Below the editing area, the results are presented (2). The titles are clickable and are linked to the Digital Object Identifier (DOI). Once a title is clicked, a new tab is opened, and the researchers can read the publication. Furthermore, for testing purposes, the similarity score is shown, which describes how similar the respective publications are compared to the text written thus far. The list of results is updated every two minutes. The researchers can choose their desired topic modeling approach via a dropdown (3). The current system has the three models described in this work: LDA, LSA, and NMF. The researcher can also choose a desired similarity algorithm via a dropdown (4). Currently, there are seven similarity algorithms: Manhattan, Cosine, Jensen, Jaccard, Euclidean, Chebyshev, and Canberra. The default choice combines Cosine and LDA. This decision is due to the evaluation results described in this work.

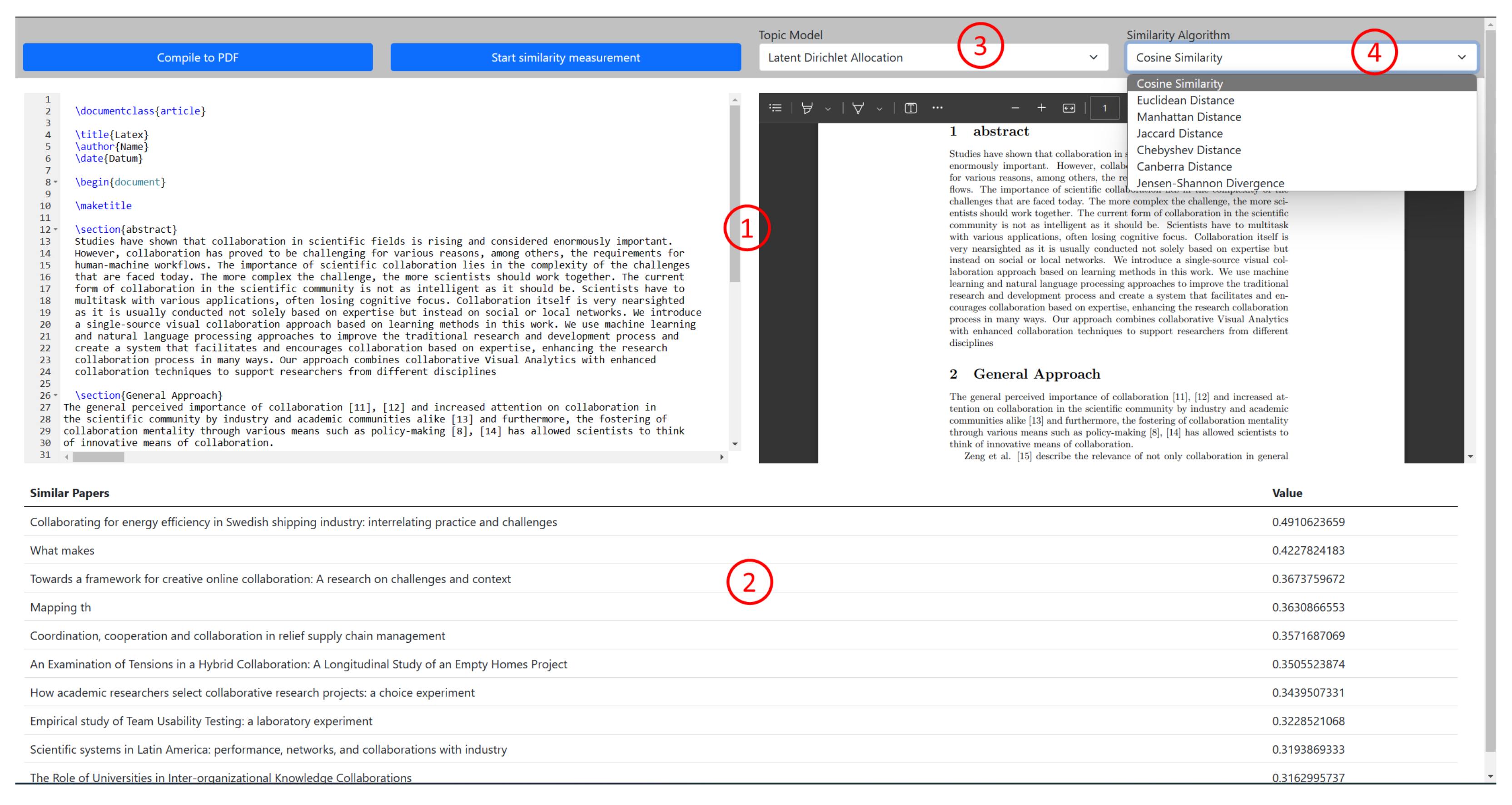

Figure 4 shows an alternative version of the UI focusing on visual analytics. The calculations and backend are the same; however, the frontend that is based on our previous works [8,28] grants the user the ability to explore the results better. The user has various filtering options seen in (2) while at the same time having a listed view seen in (1) that shows more information than Figure 3. Furthermore, the various visualizations shown in (3) allow them to explore and grasp information more efficiently. They can choose the visualizations they desire, which allows the system to adapt better to the cognitive needs of the researcher. Furthermore, by hovering over the visualization in (4), they can see further information, such as the number of publications connected to the respective topic.

Figure 5 shows the detailed view of a publication from the listed view. The scientists can access further information by clicking on a title (1), which opens the detailed view. By clicking on an author in (1), they start a search for that author. (2) shows the abstract, which is useful when a title is not very clear. If more information or the full text is required, they can click on the DOI in (3) to be sent to the publication’s website. To better understand why a publication has been recommended, the similarity score that was previously shown in Figure 3 is shown as a colored icon (4) in Figure 5. The three-color scheme shows how relevant a topic is to the scientist’s current topic, with green being highly relevant and red being not relevant. Furthermore, there is differentiation within the colors based on how full the line is. The fuller the line, the more similar the topic is.

4. Evaluation

In this section, we present the evaluation results. To provide an adequate evaluation based not only on calculations but also on real-world experience, we decided to use two popular methods: coherence calculations and human judgment.

4.1. Coherence

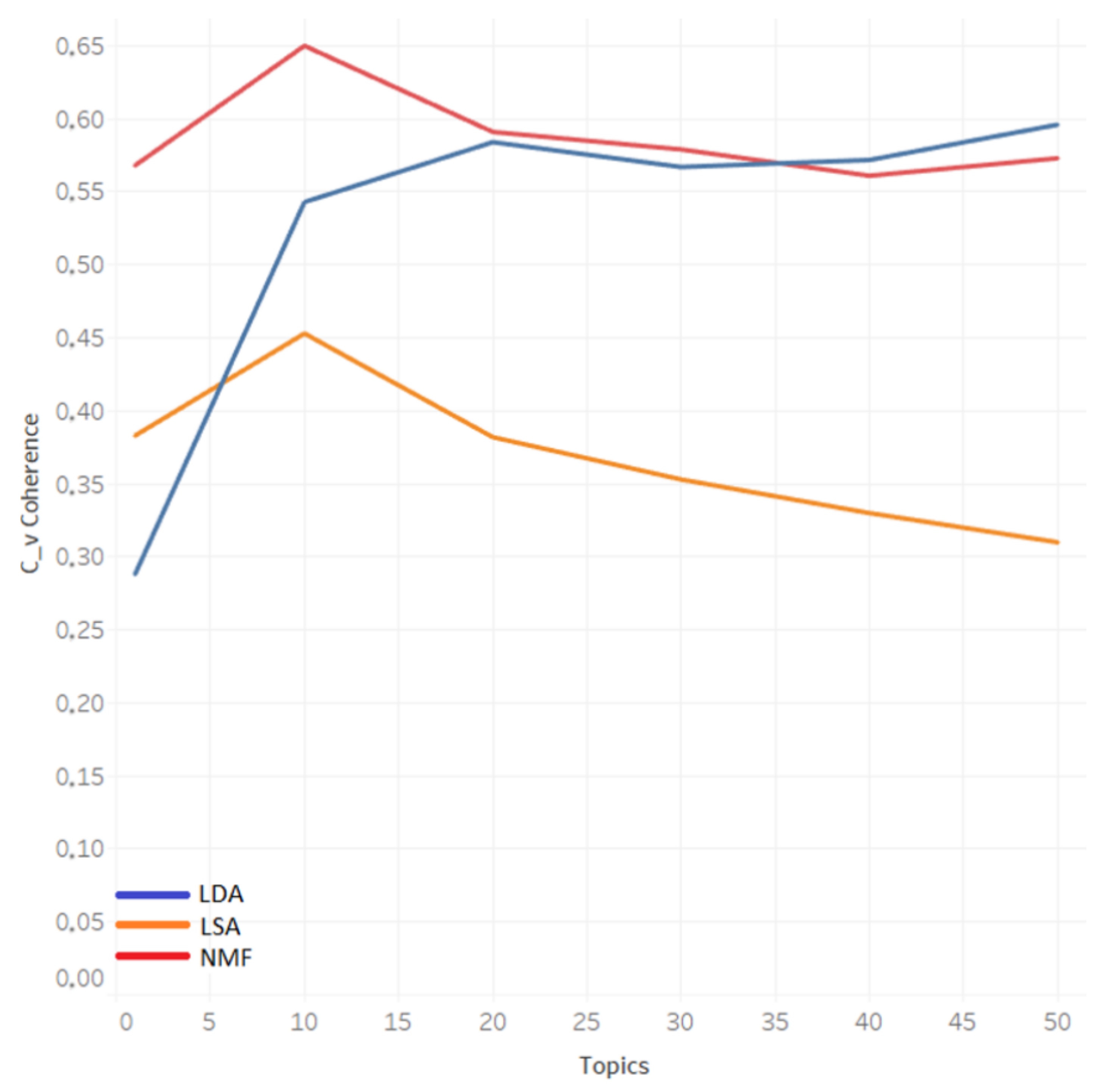

Determining the C_v coherence is a popular method for evaluating topic models [29], and was therefore also applied in this work. The results obtained in this process also served as a criterion for identifying the optimal number of topics to be extracted. In the first step, a total of 18 topic models were trained based on a scientific publication dataset, with six for each topic modeling method investigated. The six models of a method differed in the number of topics to be extracted. Thus, for each topic modeling method, one model each was trained with 1, 5, 10, 20, 30, and 50 topics, and the C_v coherence value of each model was subsequently determined. The other manually adjusted parameter values were the same for all 18 models.

Figure 6 shows the plotted results of the C_v calculations. C_v utilizes the normalized pointwise mutual information (NPMI) score based on sliding windows to examine the top words in a document and the probability of them co-occurring. Based on these NPMI scores, topic vectors and topic word vectors are compared using cosine similarity. The average of these cosine similarities results in the C_v score. It can be seen that the NMF models were able to achieve the best C_v coherence values on average, while the LSA models performed significantly worse. The best NMF model was with ten topics and a value of 0.65. For LDA, the peak was 20 topics and a value of 0.57. LSA’s best model was with ten topics and a value of 0.45. In a second step, based on the results just described, ten additional models with 8 to 26 topics were trained using the data set for each topic modeling method. The goal was to determine the number of optimal topics as precisely as possible using the coherence values. Based on the results of the second step, we found that the NMF models also provided the highest average coherence values in this comparison. The NMF model with ten topics was the best model overall. The best model from LDA also consisted of 10 topics and performed slightly worse with a coherence value of 0.62. In comparison, the best model from LSA extracted eight topics, which received an overall coherence value of 0.49.
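For orientation, the C_v computation summarized above can be written compactly. The notation below is a common formulation and not taken from this work: $w_1, \dots, w_N$ are the top words of a topic $W$, $P$ denotes probabilities estimated from sliding windows, and $\epsilon$ is a small smoothing constant.

```latex
\begin{align}
\mathrm{NPMI}(w_i, w_j) &=
  \frac{\log \dfrac{P(w_i, w_j) + \epsilon}{P(w_i)\, P(w_j)}}
       {-\log\bigl(P(w_i, w_j) + \epsilon\bigr)} \\[4pt]
\vec{v}(w_i) &= \bigl(\mathrm{NPMI}(w_i, w_1), \dots, \mathrm{NPMI}(w_i, w_N)\bigr),
\qquad
\vec{v}(W) = \sum_{i=1}^{N} \vec{v}(w_i) \\[4pt]
C_v &= \frac{1}{N} \sum_{i=1}^{N} \cos\bigl(\vec{v}(w_i), \vec{v}(W)\bigr)
\end{align}
```

Each top word is thus compared with the topic as a whole, and the reported score for a model is the mean over its topics.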

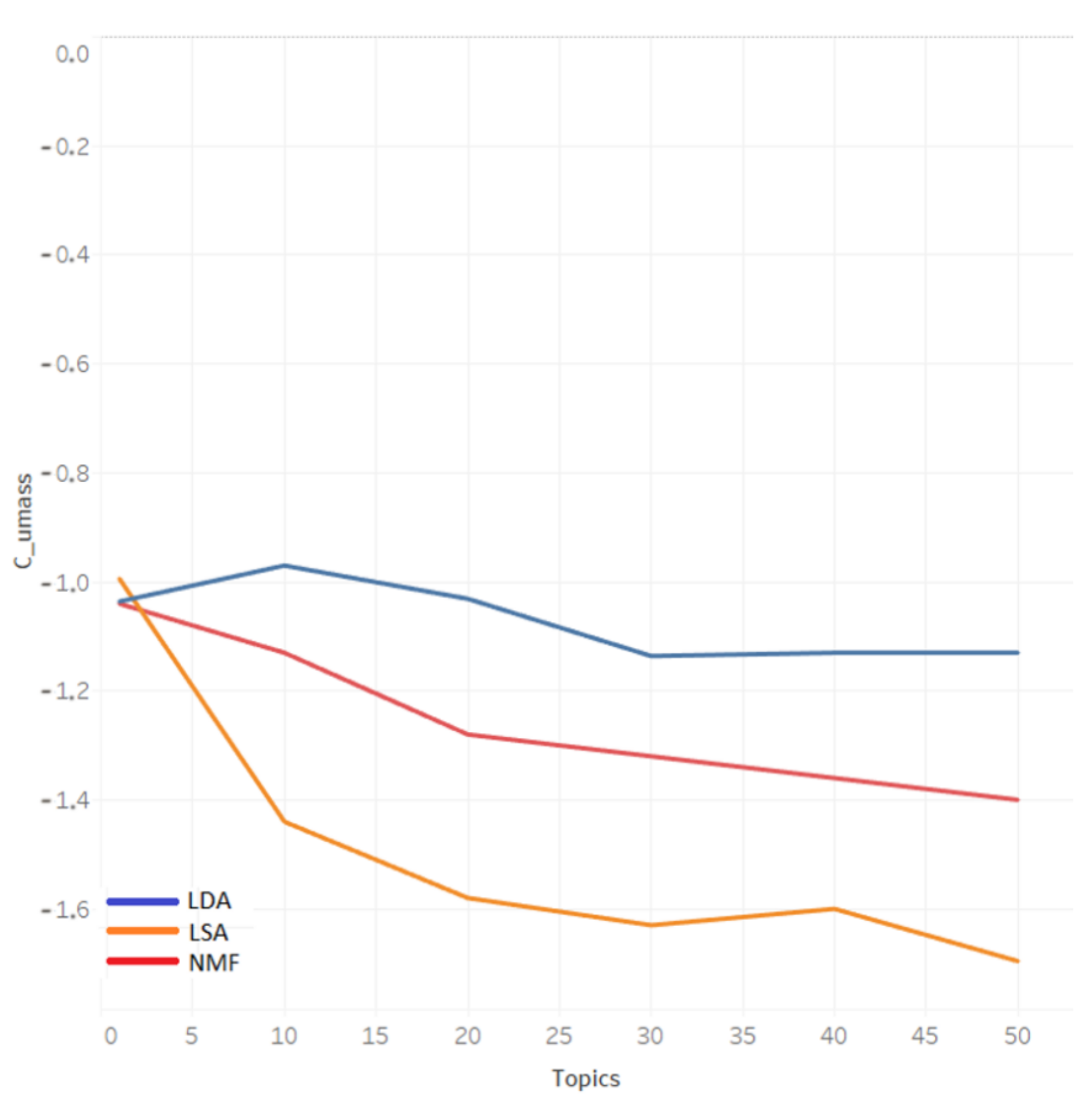

The procedure for calculating C_umass coherence is similar to the procedure for calculating C_v coherence described earlier in this section. It also examines word co-occurrences, but it examines how often two words appear together in a corpus. It must be noted in the results that for C_umass, in contrast to C_v and C_uci, it is not the maximum value but the minimum value that is considered the optimum. As seen in Figure 7, this optimum was −1.7 for the LSA model with 50 topics when LDA, LSA, and NMF were applied to the scientific data set. The LSA models performed significantly better than the LDA and NMF models, except for the model with precisely one topic extracted. On average, the LDA models provided the weakest C_umass coherence values, with 30 topics and a coherence value of −1.14. The best NMF model had a coherence value of −1.4 and, like the LSA model, consisted of 50 topics.
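The co-occurrence counting behind C_umass can be illustrated with a small sketch. It follows the commonly used formulation, the mean of log((D(w_i, w_j) + 1) / D(w_j)) over ordered pairs of top words, where D counts documents; the function name and the toy documents are hypothetical, and the values reported above were not produced with this code.

```python
import math
from itertools import combinations


def umass_coherence(top_words, documents):
    """Mean UMass score over all ordered pairs of a topic's top words.

    Assumes every top word occurs in at least one document.
    """
    doc_sets = [set(doc) for doc in documents]

    def doc_freq(*words):
        # Number of documents that contain all given words.
        return sum(all(word in doc for word in words) for doc in doc_sets)

    scores = []
    for j, i in combinations(range(len(top_words)), 2):   # pairs with j < i
        w_j, w_i = top_words[j], top_words[i]
        scores.append(math.log((doc_freq(w_i, w_j) + 1) / doc_freq(w_j)))
    return sum(scores) / len(scores)


docs = [["topic", "model"], ["topic", "model"], ["topic", "graph"]]
frequent_pair = umass_coherence(["topic", "model"], docs)   # co-occur in 2 of 3 documents
rare_pair = umass_coherence(["topic", "graph"], docs)       # co-occur in 1 of 3 documents
```

Here `frequent_pair` evaluates to log(3/3) = 0 and `rare_pair` to log(2/3), which shows how the score changes with the number of documents in which both words occur.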

C_uci coherence represents the third variant of the coherence value determination used in this work to compare the topic models of LDA, LSA, and NMF. The procedure for calculating the C_uci coherence is similar to the procedure for calculating the C_v coherence. C_uci uses a sliding window with pointwise mutual information (PMI) to compare word pairs. Figure 8 shows that NMF’s models produced significantly better results for each number of topics, peaking at a model with 30 topics and a coherence value of 0.786. LDA provided moderately weaker C_uci coherence values, except for the models with one and five topics, respectively. The best LDA model included 20 topics and achieved a coherence value of 0.67. The models of LSA, on the other hand, provided strikingly lower C_uci values than LDA and NMF.
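In a common formulation (the notation is an assumption and not taken from this work), C_uci averages the PMI over all pairs of a topic's $N$ top words, with probabilities $P$ estimated from sliding windows and a small smoothing constant $\epsilon$:

```latex
\begin{align}
\mathrm{PMI}(w_i, w_j) &= \log \frac{P(w_i, w_j) + \epsilon}{P(w_i)\, P(w_j)} \\[4pt]
C_{uci} &= \frac{2}{N (N - 1)} \sum_{i=1}^{N-1} \sum_{j=i+1}^{N} \mathrm{PMI}(w_i, w_j)
\end{align}
```

Unlike the NPMI used for C_v, the PMI is not normalized, so C_uci values are not confined to a fixed range.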

4.2. Human Judgment

Human judgment allows us to evaluate our approach with human knowledge. Studies have utilized human judgment in various ways [30,31], but a popular approach is rating [32]. In the context of topic modeling, for example, rating means presenting the testers with a list of extracted topics and asking them to rate these topics based on their understanding of the quality and interpretability of the respective topics. Newman et al. [33] and Aletras and Stevenson [34] used a rating scale from one to three, with one being the worst value, defined as useless, and three being the best value, defined as applicable.

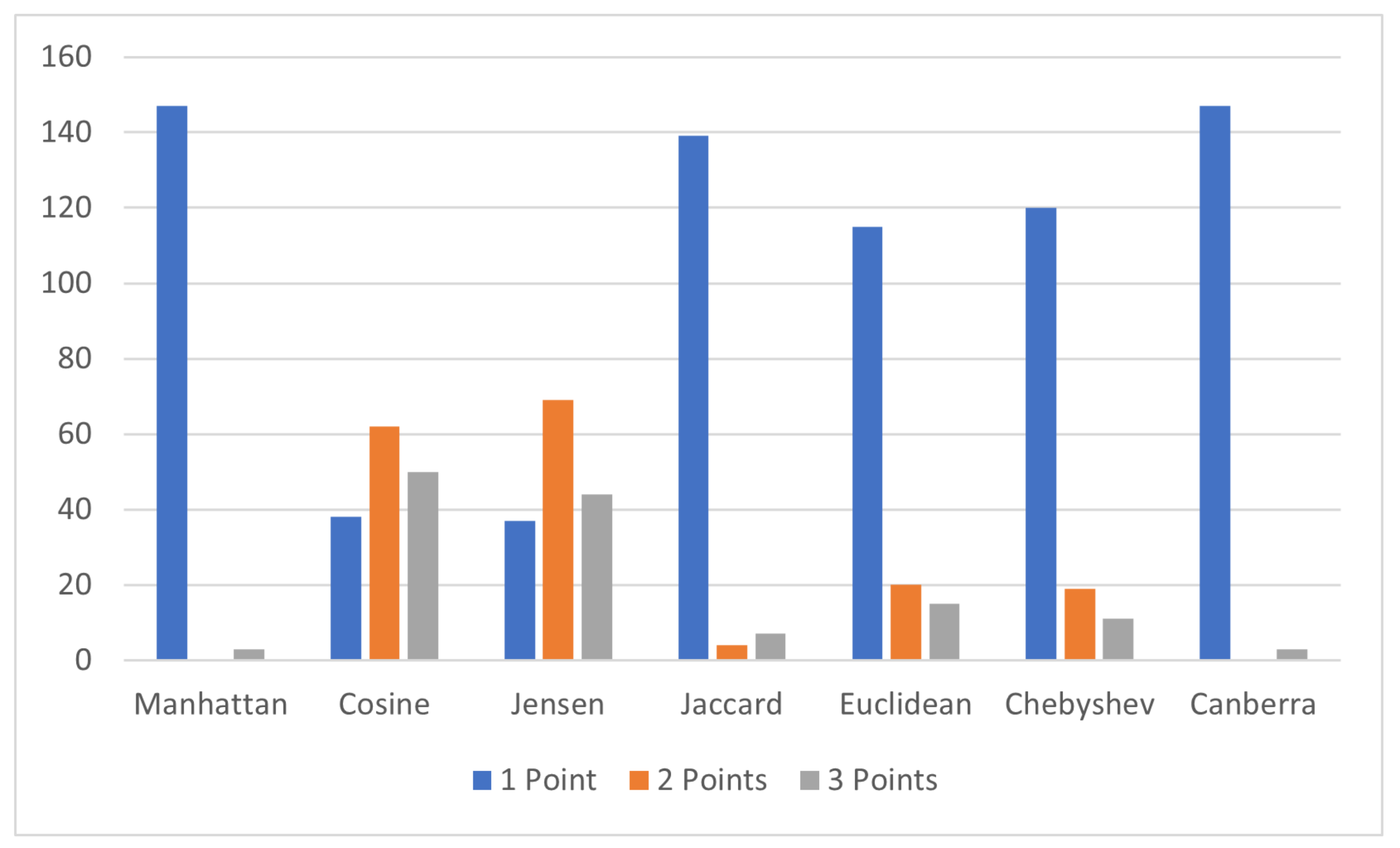

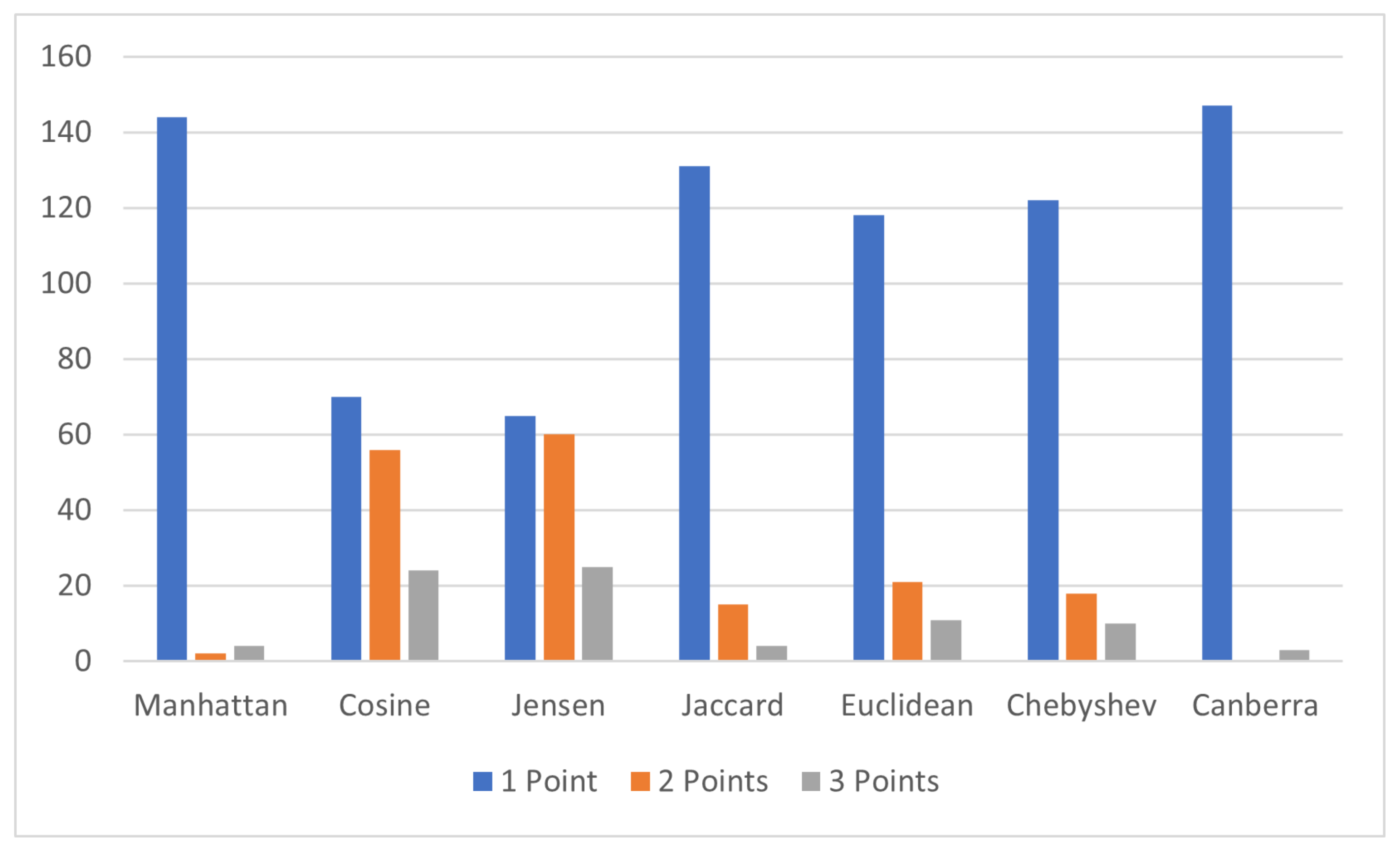

We utilized and expanded upon the three-point system by not judging the topics but instead judging the recommended papers based on their content. We took five publications from well-known domains and used every combination of our approach to identify similar publications. This evaluation approach was carried out three times to examine the impact of text length on the topic modeling and similarity algorithm combinations. We used abstracts as short texts, abstracts in combination with introductions as mid-length texts, and full texts as long texts. This evaluation approach resulted in 150 publications to be examined per combination of topic model and similarity algorithm. We examined each identified publication’s content to determine on a scale of one to three how similar it is to each of the five publications. Recommended publications that are “useless”, meaning that they do not have any similarity, are rated with one point. Publications that are somewhat similar receive a two-point rating and are considered potentially useful. Publications of high similarity are considered useful and are rated with three points.
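The figure of 150 rated publications per combination follows from five source publications, three text lengths, and the ten recommendations that the system lists per run. The short sketch below reproduces this arithmetic and shows one way the ratings of a single combination could be tallied; the function name `tally` and the example ratings are hypothetical and do not reproduce the study's data.

```python
from collections import Counter

SOURCE_PUBLICATIONS = 5
TEXT_LENGTHS = ("short", "mid", "full")
RECOMMENDATIONS_PER_RUN = 10   # the system lists the ten most similar publications

LABELS = {1: "useless", 2: "potentially useful", 3: "useful"}

ratings_per_combination = (
    SOURCE_PUBLICATIONS * len(TEXT_LENGTHS) * RECOMMENDATIONS_PER_RUN
)


def tally(ratings):
    """Count how many recommendations received each rating label."""
    counts = Counter(ratings)
    return {label: counts.get(points, 0) for points, label in LABELS.items()}


example = tally([1, 1, 3, 2])   # invented ratings, for illustration only
```

A tally of this kind per topic model and similarity algorithm yields the one-, two-, and three-point counts that are compared in the following figures.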

Figure 9 shows that most LDA combinations yield results that cannot adequately identify relevant publications. Manhattan, Canberra, and Jaccard had over 140 publications rated with one point. Euclidean and Chebyshev combinations also yielded a majority of one-point results, although fewer than the first three. The overwhelming number of “useless” results presented by these five combinations comes from the tests with short and mid-length texts, in which they only provided one-point publications. They only provided good results comparable to Cosine and Jensen when tested on full texts. Cosine and Jensen provided the best results overall. Jensen provided slightly better results when tested with short and mid-length texts, but Cosine had better results when tested with full texts.

Figure 10 shows results similar to LDA, with minimal differences. The previously mentioned Manhattan, Canberra, and Jaccard still cannot adequately identify relevant results; however, Jaccard’s results have slightly improved. With LDA, Jaccard yielded more than 140 publications considered useless, whereas with LSA the number is slightly below 140, with an increase in three-point results considered useful. This slight improvement comes from the mid-length testing. Another difference is shown when examining the results of Cosine. Here, Cosine has a decrease in two-point publications but an increase in useless and useful publications: precisely four publications became useless, and two became useful. Jensen had a slight decrease in the one- and two-point sections and an increase in the three-point section. This indicates that LSA had a slightly positive effect on Jensen and a slightly negative effect on Cosine.

Figure 11 generally shows results similar to those of LSA and LDA, in that Manhattan, Euclidean, Chebyshev, Jaccard, and Canberra still yield unsatisfactory results. Jaccard’s number of useless publications has decreased yet again; however, its number of useful results has also declined. Both shifts increased the number of potentially useful results in Jaccard’s case. Another key result is the significant decrease in two- and three-point publications for Cosine and Jensen, accompanied by an increase in one-point publications. This is seen in the results for short and mid-length texts. Most results for mid-length texts consist of useless publications for both Cosine and Jensen. The number of useless publications found in the short-text tests has also almost doubled for both Cosine and Jensen.

The figures generally show a clear picture when identifying the best possible combination. Cosine and Jensen present the best results throughout the tested topic modeling approaches. Both present significantly better results than the other five tested similarity algorithms. Regarding topic models, testing shows that LSA and LDA are very similar and provide good results, with LDA being slightly better. NMF tends to provide more “useless” results than LDA and LSA, which can be seen when examining the Cosine and Jensen combinations. Testing also shows that the longer texts are, the better the results become throughout all combinations. However, short to mid-length texts still provide adequate results when utilizing Cosine or Jensen with LDA or LSA.
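To make the two best-performing measures concrete, the following sketch compares topic distributions with cosine similarity and with the Jensen-Shannon distance. It assumes that “Jensen” refers to the Jensen-Shannon distance and that each text is represented as a probability distribution over topics; the function names and the example vectors are illustrative and not taken from the prototype.

```python
import math


def cosine_similarity(p, q):
    """Cosine of the angle between two topic vectors (1.0 = same direction)."""
    if len(p) != len(q):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(p, q))
    norm_p = math.sqrt(sum(a * a for a in p))
    norm_q = math.sqrt(sum(b * b for b in q))
    if norm_p == 0.0 or norm_q == 0.0:
        return 0.0  # convention chosen here for all-zero vectors
    return dot / (norm_p * norm_q)


def _kl_divergence(p, m):
    """Kullback-Leibler divergence in bits; terms with p_i == 0 contribute 0."""
    return sum(a * math.log2(a / b) for a, b in zip(p, m) if a > 0.0)


def jensen_shannon_distance(p, q):
    """Square root of the Jensen-Shannon divergence (base 2, range 0 to 1)."""
    if len(p) != len(q):
        raise ValueError("distributions must have the same length")
    m = [(a + b) / 2.0 for a, b in zip(p, q)]
    divergence = 0.5 * _kl_divergence(p, m) + 0.5 * _kl_divergence(q, m)
    return math.sqrt(max(divergence, 0.0))  # guard against tiny negative rounding


# Invented topic distributions for illustration only.
draft = [0.70, 0.20, 0.10]
candidates = {"paper_a": [0.65, 0.25, 0.10], "paper_b": [0.05, 0.15, 0.80]}

for name, topics in candidates.items():
    print(name,
          round(cosine_similarity(draft, topics), 3),
          round(jensen_shannon_distance(draft, topics), 3))
```

Note the opposite orientation of the two measures: a higher cosine similarity means a closer match, whereas a lower Jensen-Shannon distance does, so rankings must be sorted accordingly.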

5. Results

Our approach shows that real-time text analysis can be combined with topic modeling and similarity algorithms to provide researchers with on-the-fly publication recommendations. The extensive evaluation of possible combinations of topic modeling and similarity algorithms shows that cosine similarity with LDA is the best approach. This combination can be used for various text lengths, making it suitable for the entire publication creation process. Our testing does show that results improve as the text length increases, but this does not mean that the results from the analysis of short texts are useless. Most results of Cosine and Jensen combined with LDA or LSA consisted of potentially useful or useful publications.
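The best-performing combination can be sketched end to end as follows. This is a minimal illustration under stated assumptions rather than the prototype’s implementation: it uses scikit-learn (the text above does not name the library), a bag-of-words vectorizer, a hypothetical `recommend` function, and a tiny invented corpus in place of a real publication database.

```python
from sklearn.decomposition import LatentDirichletAllocation
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.metrics.pairwise import cosine_similarity


def recommend(draft_text, corpus_texts, n_topics=5, top_k=3, seed=0):
    """Rank corpus documents by cosine similarity of their LDA topic vectors."""
    # Bag-of-words counts for the candidate publications.
    vectorizer = CountVectorizer(stop_words="english")
    corpus_counts = vectorizer.fit_transform(corpus_texts)

    # Fit LDA on the corpus; each row is one document's topic distribution.
    lda = LatentDirichletAllocation(n_components=n_topics, random_state=seed)
    corpus_topics = lda.fit_transform(corpus_counts)

    # Project the text being written into the same topic space.
    draft_topics = lda.transform(vectorizer.transform([draft_text]))

    # Compare the draft against every candidate and sort, best match first.
    scores = cosine_similarity(draft_topics, corpus_topics)[0]
    ranked = sorted(range(len(corpus_texts)), key=lambda i: scores[i], reverse=True)
    return [(i, float(scores[i])) for i in ranked[:top_k]]


# Invented mini-corpus for illustration only.
corpus = [
    "Topic modeling with latent Dirichlet allocation for scientific literature",
    "Visual analytics dashboards for exploring publication data",
    "Deep learning recommendation systems with adversarial training",
    "Railroad accident reports analyzed with latent semantic analysis",
]

for index, score in recommend("recommending scientific publications with topic models",
                              corpus, n_topics=2, top_k=2):
    print(index, round(score, 3), corpus[index])
```

For on-the-fly use, `recommend` would be called again at intervals as the draft grows. In practice the model would be fitted once on the publication corpus and only the `transform` step repeated for each new version of the draft.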

Canberra and Manhattan yielded the worst results with all three models, with almost all of the recommended publications being useless. Jaccard, Euclidean, and Chebyshev also presented mostly useless recommendations, although their results were somewhat better than those of Manhattan and Canberra. These negative results make the five similarity algorithms unsuitable for this approach. Cosine and Jensen, on the other hand, yielded the best results throughout all three models. They did, however, struggle when using NMF, with which they recommended significantly more useless publications and fewer useful ones. Regarding topic models, LSA and LDA both yielded positive and similar results. As previously mentioned, NMF had a negative impact on Cosine and Jensen and a slightly positive impact on Jaccard and Manhattan. The tendencies of the similarity algorithms were consistent across all models, even though they were more negative with NMF than with LDA or LSA. This indicates that the negative results identified when using NMF were caused by the NMF model and its decomposition process. It has also been previously documented that NMF has difficulties working with lengthier texts, which we can confirm. The evaluation with short texts presented results that were slightly worse than those of LDA and LSA, and as we continued with lengthier texts, the gap between NMF and both LDA and LSA grew.
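For reference, four of the five measures that performed poorly can each be written in a few lines. The sketch below uses the standard textbook definitions applied to two topic vectors; the function names and input vectors are illustrative. Jaccard is omitted because applying it to real-valued topic vectors requires a binarization or generalization choice that is not specified here.

```python
import math


def manhattan(p, q):
    """Sum of absolute coordinate differences (L1 distance)."""
    return sum(abs(a - b) for a, b in zip(p, q))


def euclidean(p, q):
    """Straight-line distance (L2 distance)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def chebyshev(p, q):
    """Largest absolute coordinate difference (L-infinity distance)."""
    return max(abs(a - b) for a, b in zip(p, q))


def canberra(p, q):
    """Sum of |a - b| / (|a| + |b|); a 0/0 term is treated as 0."""
    total = 0.0
    for a, b in zip(p, q):
        denominator = abs(a) + abs(b)
        if denominator > 0.0:
            total += abs(a - b) / denominator
    return total


# Invented topic vectors for illustration only.
draft = [0.7, 0.2, 0.1]
candidate = [0.1, 0.2, 0.7]

for name, measure in [("manhattan", manhattan), ("euclidean", euclidean),
                      ("chebyshev", chebyshev), ("canberra", canberra)]:
    print(name, round(measure(draft, candidate), 4))
```

All four are distances, so candidates are ranked in ascending order, with the smallest value indicating the closest match.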

The implications of our approach can best be seen in the decision-making of scientists and research projects. Scientists examine gathered data to identify research gaps or search with a challenge in mind. The information gathered paves the way for innovative ideas or products. Our approach provides scientists with a more efficient way to gather the information they require to make decisions. Because the combination of topic model and similarity algorithm works with any text size and improves as more text is written, researchers can find relevant publications and knowledge with each paragraph written, without manually conducting search iterations. The approach does not remove manual searches but instead builds upon those searches and attempts to fill in the missing pieces. It slightly changes the way searches are conducted: the researcher is assisted and supported with additional material, but input is still required.

6. Discussion

The results of our work describe a novel approach to providing researchers with a tool that can ease their workload during research while simultaneously improving the research quality. The knowledge that a publication contains can be enhanced by analyzing what is being written and providing a recommendation for additional material. The sheer number of publications makes it challenging to accumulate relevant knowledge, but allowing an automatic system to propose knowledge that might have been overlooked increases the chances of finding it. This raises the question of how publications, and thus knowledge, are ranked and made discoverable. In our approach, we examined the similarity based on extracted topics, but further research should examine the approach in combination with collaborative, content-based, and hybrid filtering approaches from the field of recommendation systems. Utilizing various filtering approaches can yield more precise results, allowing more overlooked knowledge to be presented.

It is also important to point out that other vectorization and preprocessing approaches exist and can impact the results. Further testing with other approaches, such as TF-IDF and Word2Vec, should be conducted. Other vectorization or preprocessing approaches may yield different results and might make more combinations of topic modeling and similarity algorithms viable. Furthermore, more experimentation must be conducted with NMF and, in general, with the similarity algorithms that yielded the worst results. NMF’s generally negative results in our evaluation also show the need to further optimize the NMF model and to examine whether another vectorization approach yields better results.

Since our work is novel at the time of writing, questions of effectiveness, use cases, and impact on the exploratory research process also remain. Regarding use cases, we focused on creating recommendations on the fly while writing publications; however, our results show that this only scratches the surface of the potential use cases. The results of the evaluation underline that short texts, such as notes, could also be used to create recommendations. Remaining on the task of writing publications, we only examined the quality of the recommendations. However, we should, in the future, examine the impact on aspects such as task completion time, researcher satisfaction, and usability or make comparisons of created publications to examine changes in the quality of the work.

Another crucial aspect regarding decision-making is the utilization of visual analytics. We briefly described using a visual system as an alternative view of the results. Visual analytics systems yield various benefits we described in our previous work [28] that impact decision-making and exploration. However, further studies must be made into our approach’s impact with various frontend versions. Scientists’ utilization of the approach must be observed to identify areas of improvement, which could be, for example, a request to add more visualizations that we are currently unaware of or to slightly modify the topic modeling approaches to filter out more publications.

Future use cases that utilize our approach also present a discussion point. We have proven that the approach works and confirmed that it works with texts of various lengths. This presents an opportunity to use this approach with various text categories and tasks. This approach could analyze researchers’ notes, communication, or entire projects. This analysis would allow for topics and similarities to be identified and recommended, which could change how scientists work and search for information.

7. Conclusions

The results show that the utilization of topic modeling and similarity algorithms is a viable approach to assist scientists. The recommendations that the prototype system presents by using the combination of LDA or LSA and Cosine or Jensen are useful and can provide additional information. Furthermore, based on our human judgment results, we can state that the best results came from the combination of LDA and cosine similarity, although both LSA and Jensen also provided useful results. As stated in the results chapter, text length did impact the results. The tested approaches found it more challenging to work with shorter texts, but even when working only with an abstract the results proved to be useful.

Furthermore, our work also shows a research gap in the field of recommendation systems in science and academia. The supportive capabilities of recommendations are not being utilized in science and academia, as shown in the related work chapter. To the best of our knowledge, our approach is the first of its kind; however, we expect that other studies will expand on our work. In the future, we will focus on introducing more recommendation systems based on this approach to the research process in an attempt to improve the quality of research.

Author Contributions

Conceptualization, M.B., L.B.S., C.A.S. and K.N.; methodology, M.B. and K.N.; software, M.B., L.B.S., C.A.S. and K.N.; validation, M.B., L.B.S., C.A.S. and K.N.; formal analysis, M.B., L.B.S., C.A.S. and K.N.; investigation, M.B., L.B.S., C.A.S. and K.N.; resources, M.B., L.B.S., C.A.S. and K.N.; data curation, M.B., L.B.S., C.A.S. and K.N.; writing (original draft preparation), M.B., L.B.S., C.A.S. and K.N.; writing (review and editing), M.B., L.B.S., C.A.S. and K.N.; visualization, M.B., L.B.S., C.A.S. and K.N.; supervision, K.N.; project administration, M.B., L.B.S., C.A.S. and K.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data not applicable.

Acknowledgments

We thank Kjell Kunz and Sascha Haas from the Darmstadt University of Applied Sciences, who contributed to this research. This work was conducted within the research group on Human-Computer Interaction and Visual Analytics at the Darmstadt University of Applied Sciences (https://vis.h-da.de, accessed on 3 April 2023).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| NLP | Natural Language Processing |
| LDA | Latent Dirichlet Allocation |
| LSA | Latent Semantic Analysis |
| NMF | Non-negative Matrix Factorization |
| RMSE | Root Mean Square Error |
| MRR | Mean Reciprocal Rank |
| BERT | Bidirectional Encoder Representations from Transformers |
| AAN | ACL Anthology Network |
| NDCG | Normalized Discounted Cumulative Gain |
| API | Application Programming Interface |
| NLTK | Natural Language Toolkit |
| BoW | Bag of Words |
| JSON | JavaScript Object Notation |
| DOI | Digital Object Identifier |
| UI | User Interface |
| SABED | Similarity Analysis Using Binary Encoded Data |
| UBCF | User-Based Collaborative Filtering |
| IBCF | Item-Based Collaborative Filtering |
| PMI | Pointwise Mutual Information |
| NPMI | Normalized Pointwise Mutual Information |
| TF-IDF | Term Frequency-Inverse Document Frequency |

References

1. Peng, M.; Xu, Z.; Huang, H. How Does Information Overload Affect Consumers’ Online Decision Process? An Event-Related Potentials Study. Front. Neurosci. 2021, 15, 695852.

2. Buchanan, J.; Kock, N. Information overload: A decision making perspective. In Multiple Criteria Decision Making in the New Millennium; Lecture Notes in Economics and Mathematical Systems; Köksalan, M., Zionts, S., Eds.; Springer: Berlin/Heidelberg, Germany, 2001; Volume 507, pp. 49–58.

3. Shinnick, E.; Ryan, G. The role of information in decision making. In Encyclopedia of Decision Making and Decision Support Technologies; Adam, F., Humphreys, P., Eds.; Information Science Reference: Hershey, PA, USA, 2008; pp. 776–782.

4. de Medeiros, M.M.; Hoppen, N.; Maçada, A.C.G. Data science for business: Benefits, challenges and opportunities. Bottom Line 2020, 33, 149–163.

5. Falschlunger, L.; Lehner, O.M.; Treiblmaier, H. InfoVis: The Impact of Information Overload on Decision Making Outcome in High Complexity Settings. In Proceedings of the Fifteenth Annual Pre-ICIS Workshop on HCI Research in MIS, Dublin, Ireland, 11 December 2016.

6. Borchers, A.; Herlocker, J.; Konstan, J.; Riedl, J. Ganging up on information overload. Computer 1998, 31, 106–108.

7. Zhang, C.; Li, J.; Wu, J.; Liu, D.; Chang, J.; Gao, R. Deep Recommendation With Adversarial Training. IEEE Trans. Emerg. Top. Comput. 2022, 10, 1966–1978.

8. Blazevic, M.; Sina, L.B.; Burkhardt, D.; Siegel, M.; Nazemi, K. Visual Analytics and Similarity Search - Interest-based Similarity Search in Scientific Data. In Proceedings of the 2021 25th International Conference Information Visualisation (IV), Sydney, Australia, 5–9 July 2021; pp. 211–217.

9. Janiszewski, C. The Influence of Display Characteristics on Visual Exploratory Search Behavior. J. Consum. Res. 1998, 25, 290–301.

10. Krestel, R.; Demartini, G.; Herder, E. Visual Interfaces for Stimulating Exploratory Search. In Proceedings of the 11th Annual International ACM/IEEE Joint Conference on Digital Libraries (JCDL’11), Ottawa, ON, Canada, 13–17 June 2011; Association for Computing Machinery: New York, NY, USA, 2011; pp. 393–394.

11. Marchionini, G. Exploratory search. Commun. ACM 2006, 49, 41–46.

12. White, R.W.; Roth, R.A. Exploratory Search: Beyond the Query-Response Paradigm. Synth. Lect. Inf. Concepts Retr. Serv. 2009, 1, 98.

13. Shoja, B.M.; Tabrizi, N. Tags-Aware Recommender Systems: A Systematic Review. In Proceedings of the 2019 IEEE International Conference on Big Data, Cloud Computing, Data Science and Engineering (BCD), Honolulu, HI, USA, 29–30 May 2019; pp. 11–18.

14. Kieu, B.T.; Unanue, I.J.; Pham, S.B.; Phan, H.X.; Piccardi, M. Learning Neural Textual Representations for Citation Recommendation. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021.

15. Guo, L.; Cai, X.; Qin, H.; Guo, Y.; Li, F.; Tian, G. Citation Recommendation with a Content-Sensitive DeepWalk Based Approach. In Proceedings of the 2019 International Conference on Data Mining Workshops (ICDMW), Beijing, China, 8–11 November 2019; pp. 538–543.

16. Nair, A.M.; George, J.P.; Gaikwad, S.M. Similarity Analysis for Citation Recommendation System using Binary Encoded Data. In Proceedings of the 2020 International Conference on Electrical Communication and Computer Engineering (ICECCE), Istanbul, Turkey, 12–13 June 2020; pp. 1–5.

17. Syed, S.; Spruit, M. Full-Text or Abstract? Examining Topic Coherence Scores Using Latent Dirichlet Allocation. In Proceedings of the 2017 IEEE International Conference on Data Science and Advanced Analytics (DSAA), Tokyo, Japan, 19–21 October 2017; pp. 165–174.

18. Ferri, P.; Lusiani, M.; Pareschi, L. Shades of theory: A topic modelling of ways of theorizing in accounting history research. Account. Hist. 2021, 26, 484–519.

19. Asmussen, C.B.; Møller, C. Smart literature review: A practical topic modelling approach to exploratory literature review. J. Big Data 2019, 6, 93.

20. Bellaouar, S.; Bellaouar, M.M.; Ghada, I.E. Topic Modeling: Comparison of LSA and LDA on Scientific Publications. In Proceedings of the 2021 4th International Conference on Data Storage and Data Engineering (DSDE’21), Barcelona, Spain, 18–20 February 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 59–64.

21. Anantharaman, A.; Jadiya, A.; Siri, C.T.S.; Adikar, B.N.; Mohan, B. Performance Evaluation of Topic Modeling Algorithms for Text Classification. In Proceedings of the 2019 3rd International Conference on Trends in Electronics and Informatics (ICOEI), Tirunelveli, India, 23–25 April 2019; pp. 704–708.

22. Arif, M.A. Content aware citation recommendation system. In Proceedings of the 2016 International Conference on Emerging Technological Trends (ICETT), Kollam, India, 21–22 October 2016; pp. 1–6.

23. Aslam, A.; Qamar, U.; Khan, R.A.; Saqib, P.; Ahmad, A.; Qadeer, A. Opinion Mining Using Live Twitter Data. In Proceedings of the 2019 IEEE International Conference on Computational Science and Engineering (CSE) and IEEE International Conference on Embedded and Ubiquitous Computing (EUC), New York, NY, USA, 1–3 August 2019; IEEE: New York, NY, USA, 2019; pp. 36–39.

24. Haripriya, A.; Kumari, S. Real time analysis of top trending event on Twitter: Lexicon based approach. In Proceedings of the 2017 8th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Delhi, India, 3–5 July 2017; IEEE: New York, NY, USA, 2017; pp. 1–4.

25. Liu, C.Y.; Chen, M.S.; Tseng, C.Y. IncreSTS: Towards Real-Time Incremental Short Text Summarization on Comment Streams from Social Network Services. IEEE Trans. Knowl. Data Eng. 2015, 27, 2986–3000.

26. Sakthithasan, R.; Sheeyam, S.; Kanthasamy, U.; Chelvarajah, A. Real-time Direct Translation System for Tamil and Sinhala Languages. In Proceedings of the 2015 Federated Conference on Computer Science and Information Systems, Lodz, Poland, 13–16 September 2015; Annals of Computer Science and Information Systems; IEEE: New York, NY, USA, 2015; pp. 1437–1443.

27. Hucko, M.; Gaspar, P.; Pikuliak, M.; Triglianos, V.; Pautasso, C.; Bielikova, M. Short Texts Analysis for Teacher Assistance During Live Interactive Classroom Presentations. In Proceedings of the 2018 World Symposium on Digital Intelligence for Systems and Machines (DISA), Kosice, Slovakia, 23–25 August 2018; IEEE: New York, NY, USA, 2018; pp. 239–244.

28. Blazevic, M.; Sina, L.B.; Nazemi, K. Visual Collaboration - An Approach for Visual Analytical Collaborative Research. In Proceedings of the 2022 26th International Conference Information Visualisation (IV), Vienna, Austria, 19–22 July 2022; pp. 293–299.

29. Blair, S.J.; Bi, Y.; Mulvenna, M.D. Aggregated topic models for increasing social media topic coherence. Appl. Intell. 2020, 50, 138–156.

30. Williams, T.; Betak, J. A Comparison of LSA and LDA for the Analysis of Railroad Accident Text. Procedia Comput. Sci. 2018, 130, 98–102.

31. Moodley, A.; Marivate, V. Topic Modelling of News Articles for Two Consecutive Elections in South Africa. In Proceedings of the 2019 6th International Conference on Soft Computing and Machine Intelligence (ISCMI), Johannesburg, South Africa, 19–20 November 2019; pp. 131–136.

32. Hoyle, A.; Goel, P.; Peskov, D.; Hian-Cheong, A.; Boyd-Graber, J.; Resnik, P. Is Automated Topic Model Evaluation Broken? The Incoherence of Coherence. arXiv 2021, arXiv:2107.02173.

33. Newman, D.; Lau, J.H.; Grieser, K.; Baldwin, T. Automatic Evaluation of Topic Coherence. In Proceedings of the Human Language Technologies: The 2010 Annual Conference of the North American Chapter of the Association for Computational Linguistics (HLT’10), Los Angeles, CA, USA, 1–6 June 2010; Association for Computational Linguistics: Los Angeles, CA, USA, 2010; pp. 100–108.

34. Aletras, N.; Stevenson, M. Evaluating Topic Coherence Using Distributional Semantics. In Proceedings of the 10th International Conference on Computational Semantics (IWCS 2013) - Long Papers, Potsdam, Germany, 19–22 March 2013; Association for Computational Linguistics: Potsdam, Germany, 2013; pp. 13–22.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).