A Comparative Analysis of Word Embedding and Deep Learning for Arabic Sentiment Classification

Abstract

1. Introduction

- Present a comprehensive evaluation of the most widely used embedding models (classical and contextualized) for the Arabic language.

- Assess their performance across benchmark datasets that differ in review length, Arabic language variety, and domain. Drawing datasets from diverse domains mitigates the potential lack of diversity in any single available dataset.

2. Related Work

3. Methodology

4. Experimental Setup

4.1. Datasets

- HARD [31]: The Hotel Arabic-Reviews Dataset (HARD) contains nearly 106,000 hotel reviews, written in Arabic, collected from the Booking.com website during June and July of 2016. The reviews are expressed in Modern Standard Arabic as well as dialectal Arabic.

- Khooli (https://www.kaggle.com/abedkhooli/ar-reviews-100k/data (accessed on 11 March 2022)): The Arabic 100K Reviews dataset is a collection of reviews of hotels, movies, books, and products, gathered from different services. It is a balanced three-class set.

- AJGT (Arabic Jordanian General Tweets) [32]: Created by Alomari in 2017, the Arabic Jordanian General Tweets dataset consists of 1800 tweets categorized as positive and negative.

- ArSAS [33]: The Arabic Speech-Act and Sentiment Corpus of Tweets is a sizable set of nearly 20,000 Arabic tweets on multiple topics, collected, prepared, and annotated with four sentiment classes. Each tweet belongs to one of twenty topics, which fall into three main types: long-standing (topics that are commonly discussed over a long period), entity (topics about celebrities or organizations), and event (topics about an important incident).

- ASTD (Arabic Sentiment Tweets Dataset) [34]: The Arabic Sentiment Tweets Dataset contains around 10,000 Arabic tweets, each labelled as objective, subjective negative, subjective positive, or subjective mixed. The label counts are 6691 objective, 1684 subjective negative, 832 subjective mixed, and 799 subjective positive. For this study, only the positive, negative, and mixed tweets were used. Table 2 shows a summary of all used datasets.
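The ASTD filtering step described above can be checked with simple arithmetic. The sketch below uses only the label counts quoted in the text; the dictionary layout and the helper names `subjective_subset` and `class_shares` are illustrative assumptions, not part of the original study.

```python
# Label counts reported for ASTD in the text above.
ASTD_COUNTS = {
    "objective": 6691,
    "negative": 1684,
    "mixed": 832,
    "positive": 799,
}


def subjective_subset(counts):
    """Drop the objective class, keeping only the sentiment-bearing labels."""
    return {label: n for label, n in counts.items() if label != "objective"}


def class_shares(counts):
    """Return each label's share of the total as a fraction in [0, 1]."""
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


subset = subjective_subset(ASTD_COUNTS)
total_used = sum(subset.values())   # number of tweets kept for the study
shares = class_shares(subset)       # class distribution after filtering

print(total_used)
for label, share in shares.items():
    print(f"{label}: {share:.1%}")
```

The retained subset is clearly imbalanced, with the negative class covering roughly half of the tweets, which is worth keeping in mind when reading accuracy figures for this dataset.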

4.2. Model Building and Training

- Batch size represents the number of instances to be considered before the model’s parameters are updated.

- The number of epochs is the number of complete passes the training algorithm makes over the training dataset.

- Optimizer is the algorithm that updates the model parameters so as to minimize the loss function.

- Dropout enhances the model’s ability to generalize and reduces the probability of overfitting.

- Classifier is the final layer that projects its input onto the predicted classes. Thus, the choice of this layer greatly impacts the overall performance. Sigmoid and Softmax are the two options usually considered: Sigmoid suits binary or independently distributed labels, whereas Softmax suits mutually exclusive classes.

- Batches of size 32, 64, and 128 were tested, with 128 achieving the highest level of performance.

- Adam and Nadam optimizers were assessed; Adam attained the best performance, with a learning rate of 0.001.

- Training for 25, 40, and 50 epochs was evaluated, and performance was best at 50 epochs.

- Dropout values of 0.1, 0.2, and 0.5 were tested, and a dropout rate of 0.2 was selected to regularize the layers.

- The output logits across all labels were generated, and the probability of a sample belonging to each label was best estimated by the Softmax function.
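The candidate values listed above can be laid out as a small search space. The sketch below enumerates them and shows how Softmax turns logits into class probabilities. Treating the search as an exhaustive grid is an assumption made for illustration; the text does not state how the combinations were explored, and the names `SEARCH_SPACE`, `enumerate_configs`, and `selected` are hypothetical.

```python
import math
from itertools import product

# Candidate values taken from the text; the exhaustive-grid layout is an assumption.
SEARCH_SPACE = {
    "batch_size": [32, 64, 128],
    "optimizer": ["adam", "nadam"],
    "epochs": [25, 40, 50],
    "dropout": [0.1, 0.2, 0.5],
}


def enumerate_configs(space):
    """Return every combination of the candidate values as a list of dicts."""
    keys = list(space)
    return [dict(zip(keys, values)) for values in product(*(space[k] for k in keys))]


def softmax(logits):
    """Map logits to probabilities that sum to 1 (mutually exclusive classes)."""
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(logit):
    """Map a single logit to an independent probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-logit))


configs = enumerate_configs(SEARCH_SPACE)

# The combination reported as best in the text.
selected = {"batch_size": 128, "optimizer": "adam", "epochs": 50, "dropout": 0.2}

# Hypothetical logits for a three-class sample.
probs = softmax([2.0, 1.0, 0.1])
predicted_class = probs.index(max(probs))
```

Softmax probabilities compete with each other and sum to one, which fits the single-label sentiment classes used here, whereas applying `sigmoid` to each logit would score every label independently.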

4.3. Word Embedding

- Word2vec: This research employed AraVec [4], an embedding pre-trained on a Twitter corpus using CBOW with 300 dimensions.

- GloVe: Currently, only one pre-trained GloVe embedding is available online for the Arabic language (https://archive.org/details/arabic_corpus (accessed on 16 February 2022)). The available pre-trained GloVe vectors have 256 dimensions and were generated from Wikipedia.

- FastText: Pretrained versions of FastText are available for different languages (https://fasttext.cc/docs/en/crawl-vectors.html (accessed on 25 February 2022)). The experimental datasets exist only in Arabic; therefore, the experiment used the available 300-dimension Arabic pre-trained vectors of FastText.

- Custom word embedding: When pre-trained word embeddings are used, some words in the training dataset may be missing from the pre-trained vectors. Such out-of-vocabulary words are replaced with a zero vector, which means that they contribute no information, even though they can be vital for accurate classification. To address this issue, custom word embeddings were trained on the training data so that the semantic relationships of the training corpus are better represented. Hence, this study’s experiments also consider custom embeddings and examine their impact on performance.
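The out-of-vocabulary issue described above can be illustrated with a minimal sketch that builds an embedding matrix from a pre-trained lookup and falls back to a zero vector for missing words. The toy vectors, the three-dimensional size, and the function names `build_embedding_matrix` and `coverage` are hypothetical; real pre-trained vectors such as AraVec or FastText would be loaded from their own files.

```python
def build_embedding_matrix(vocab, pretrained, dim):
    """Return (matrix, oov_words); row i of matrix holds the vector of vocab[i].

    Words absent from `pretrained` receive an all-zero row, mirroring the
    zero-vector fallback described in the text.
    """
    matrix, oov_words = [], []
    for word in vocab:
        vector = pretrained.get(word)
        if vector is None:
            oov_words.append(word)
            vector = [0.0] * dim
        matrix.append(list(vector))
    return matrix, oov_words


def coverage(vocab, oov_words):
    """Fraction of the vocabulary that has a pre-trained vector."""
    return 1.0 - len(oov_words) / len(vocab) if vocab else 0.0


# Toy 3-dimensional vectors (hypothetical values, for illustration only).
toy_pretrained = {
    "فندق": [0.1, 0.3, -0.2],   # "hotel"
    "جميل": [0.5, 0.0, 0.4],    # "beautiful"
}
# The last token is an informal spelling assumed to be absent from the lookup.
toy_vocab = ["فندق", "جميل", "حلوو"]

matrix, oov_words = build_embedding_matrix(toy_vocab, toy_pretrained, dim=3)
print(f"coverage: {coverage(toy_vocab, oov_words):.2f}")
```

A low coverage value on a dialect-heavy corpus is one signal that training custom embeddings on the task data may be worthwhile.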

4.4. Performance Evaluation Metrics

- Accuracy measures the ratio of correctly predicted observations to the total number of observations, calculated as in (1):

  $$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

- Precision/PPV (positive predictive value) represents the ratio of correctly predicted positive observations to the total predicted positive observations. High precision means a low false positive rate, and it can be calculated as in (2):

  $$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

- Recall (sensitivity) is the ratio of correctly predicted positive observations to all the actual positive observations. It answers the question: of all the actual positive observations, how many were correctly predicted? Recall is calculated as in (3):

  $$\text{Recall} = \frac{TP}{TP + FN} \tag{3}$$

- F1 score is the harmonic mean of precision and recall, as in (4). It takes both false positives and false negatives into account. F1 is more indicative than accuracy in the case of uneven class distribution.

  $$F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}$$
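The four metrics above can be computed directly from the confusion-matrix counts TP, FP, FN, and TN. The following is a minimal sketch using the standard definitions; the counts in the example are made up for illustration and are not results from this study, and returning 0.0 for an empty denominator is a design assumption.

```python
def safe_div(numerator, denominator):
    """Divide, returning 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def binary_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts."""
    accuracy = safe_div(tp + tn, tp + fp + fn + tn)
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    # F1 is the harmonic mean of precision and recall.
    f1 = safe_div(2 * precision * recall, precision + recall)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical counts for a binary sentiment task.
metrics = binary_metrics(tp=80, fp=10, fn=20, tn=90)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

For the multi-class datasets, these quantities would be computed per class and then averaged; the averaging scheme is not stated in the text, so it is left out of this sketch.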

5. Results and Discussion

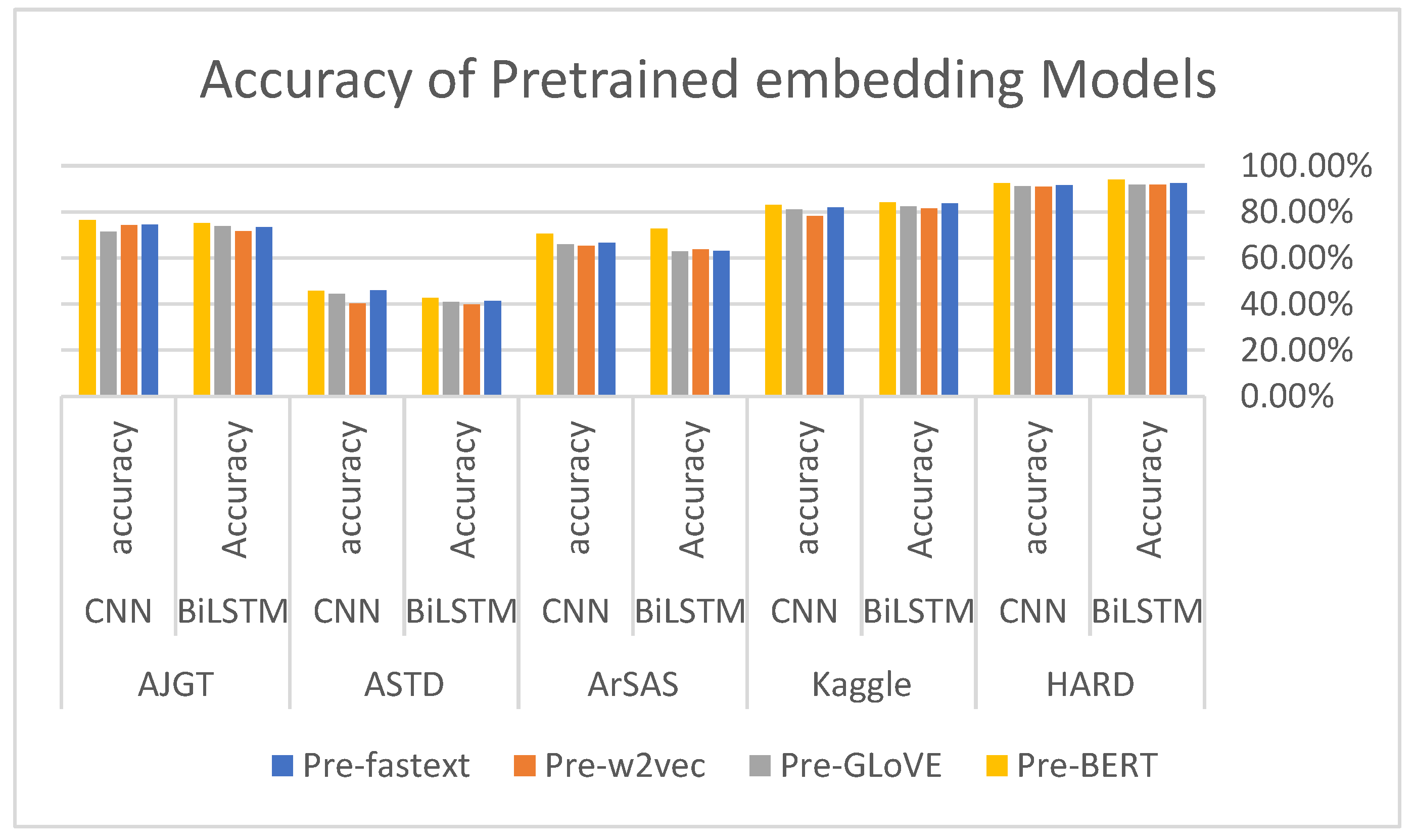

5.1. Pretrained Embeddings

5.2. Custom Embeddings

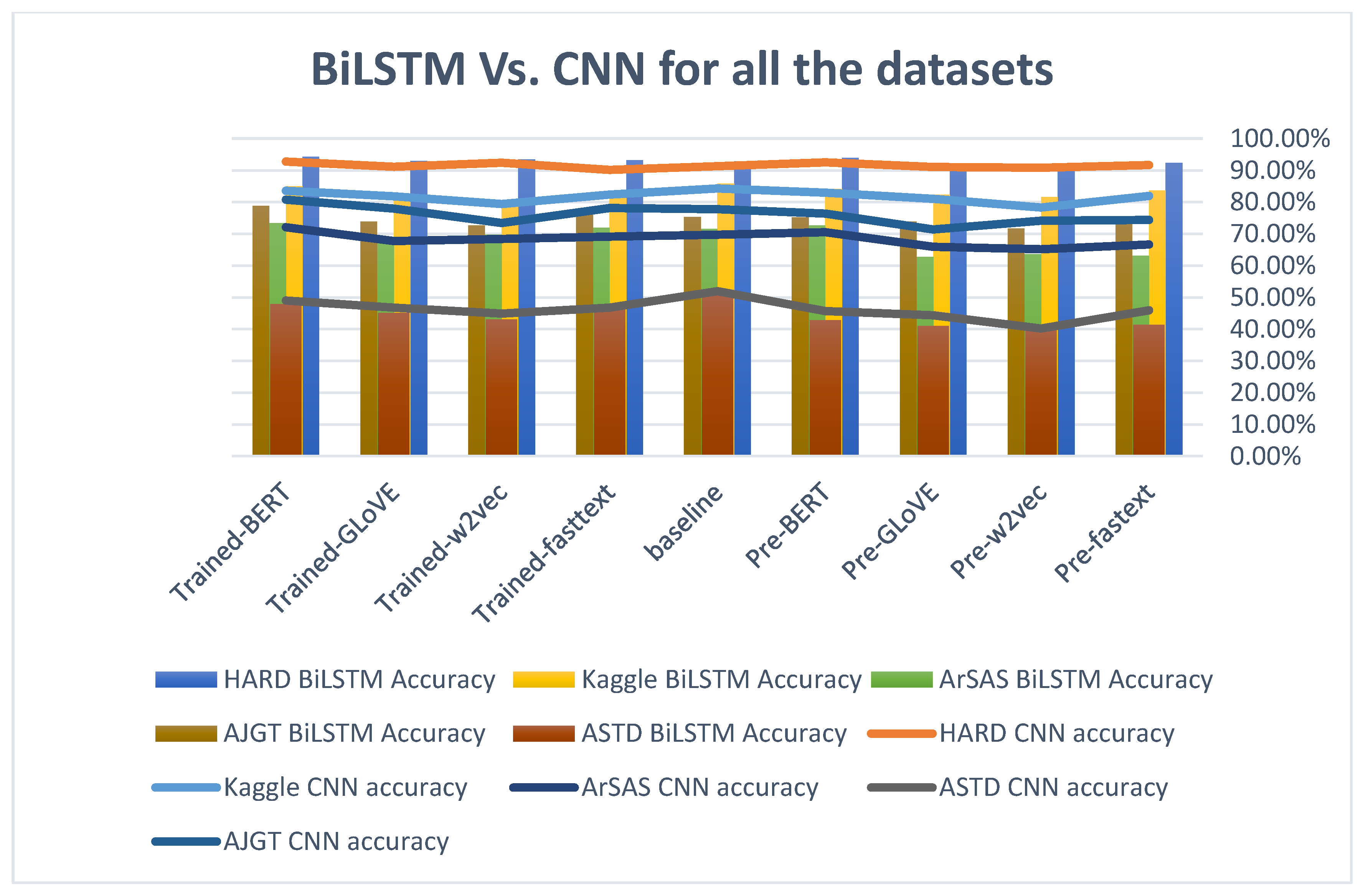

5.3. Trained vs. Pretrained Embeddings

5.4. Classic Embeddings

5.5. Contextualized vs. Classic Embeddings

5.6. Impact of the Deep Learning Model

6. Conclusions and Future Directions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Torregrossa, F.; Allesiardo, R.; Claveau, V.; Kooli, N.; Gravier, G. A survey on training and evaluation of word embeddings. Int. J. Data Sci. Anal. 2021, 11, 85–103.

2. Shahi, T.B.; Sitaula, C.; Paudel, N. A Hybrid Feature Extraction Method for Nepali COVID-19-Related Tweets Classification. Comput. Intell. Neurosci. 2022, 2022, 5681574.

3. Wang, C.; Nulty, P.; Lillis, D. A Comparative Study on Word Embeddings in Deep Learning for Text Classification. In Proceedings of the 4th International Conference on Natural Language Processing and Information Retrieval, Seoul, Republic of Korea, 18–20 December 2020; pp. 37–46.

4. Soliman, A.B.; Eissa, K.; El-Beltagy, S.R. AraVec: A set of Arabic Word Embedding Models for use in Arabic NLP. Procedia Comput. Sci. 2017, 117, 256–265.

5. Pennington, J.; Socher, R.; Manning, C.D. Glove: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

6. Santos, I.; Nedjah, N.; de Macedo Mourelle, L. Sentiment analysis using convolutional neural network with fastText embeddings. In Proceedings of the 2017 IEEE Latin American Conference on Computational Intelligence (LA-CCI), Arequipa, Peru, 8–10 November 2017; pp. 1–5.

7. Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2018, arXiv:1810.04805.

8. Lan, Z.; Chen, M.; Goodman, S.; Gimpel, K.; Sharma, P.; Soricut, R. ALBERT: A Lite BERT for Self-supervised Learning of Language Representations. arXiv 2019, arXiv:1909.11942.

9. Yang, Z.; Dai, Z.; Yang, Y.; Carbonell, J.; Salakhutdinov, R.; Le, Q.V. XLNet: Generalized Autoregressive Pretraining for Language Understanding. arXiv 2020, arXiv:1906.08237. Available online: http://arxiv.org/abs/1906.08237 (accessed on 11 May 2022).

10. Wang, B.; Wang, A.; Chen, F.; Wang, Y.; Kuo, C.-C.J. Evaluating word embedding models: Methods and experimental results. APSIPA Trans. Signal Inf. Process. 2019, 8, e19.

11. Bian, J.; Gao, B.; Liu, T.-Y. Knowledge-powered deep learning for word embedding. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases; Springer: Berlin/Heidelberg, Germany, 2014; pp. 132–148.

12. Alamoudi, E.S.; Alghamdi, N.S. Sentiment classification and aspect-based sentiment analysis on yelp reviews using deep learning and word embedding. J. Decis. Syst. 2021, 30, 259–281.

13. Kilimci, Z.H.; Akyokuş, S. Deep learning-and word embedding-based heterogeneous classifier ensembles for text classification. Complexity 2018, 2018, 7130146.

14. Al-Ayyoub, M.; Khamaiseh, A.A.; Jararweh, Y.; Al-Kabi, M.N. A comprehensive survey of arabic sentiment analysis. Inf. Process. Manag. 2019, 56, 320–342.

15. Badaro, G.; Baly, R.; Hajj, H.; El-Hajj, W.; Shaban, K.B.; Habash, N.; Al-Sallab, A.; Hamdi, A. A Survey of Opinion Mining in Arabic: A Comprehensive System Perspective Covering Challenges and Advances in Tools, Resources, Models, Applications, and Visualizations. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2019, 18, 1–52.

16. Rajput, V.S.; Dubey, S.M. An Overview of Use of Natural Language Processing in Sentiment Analysis based on User Opinions. Int. J. Adv. Res. Comput. Sci. Softw. Eng. 2016, 6, 5.

17. Alnawas, A.; Arici, N. Effect of Word Embedding Variable Parameters on Arabic Sentiment Analysis Performance. arXiv 2021, arXiv:2101.02906.

18. Barhoumi, A.; Estève, Y.; Aloulou, C.; Belguith, L. Document embeddings for Arabic Sentiment Analysis. In Proceedings of the Conference on Language Processing and Knowledge Management, LPKM 2017, Sfax, Tunisia, 8–10 September 2017.

19. Alayba, A.M.; Palade, V.; England, M.; Iqbal, R. Improving Sentiment Analysis in Arabic Using Word Representation. In Proceedings of the 2018 IEEE 2nd International Workshop on Arabic and Derived Script Analysis and Recognition (ASAR), London, UK, 12–14 March 2018; pp. 13–18.

20. Altowayan, A.A.; Tao, L. Word embedding for Arabic sentiment analysis. In Proceedings of the 2016 IEEE International Conference on Big Data (Big Data), Washington, DC, USA, 5–8 December 2016; pp. 3820–3825.

21. El Mekki, A.; El Mahdaouy, A.; Berrada, I.; Khoumsi, A. Domain Adaptation for Arabic Cross-Domain and Cross-Dialect Sentiment Analysis from Contextualized Word Embedding. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies; Association for Computational Linguistics, Online, 6–11 June 2021; pp. 2824–2837.

22. Antoun, W.; Baly, F.; Hajj, H. AraBERT: Transformer-based Model for Arabic Language Understanding. In Proceedings of the 4th Workshop on Open-Source Arabic Corpora and Processing Tools, with a Shared Task on Offensive Language Detection; European Language Resource Association: Marseille, France, 2020; pp. 9–15. Available online: https://aclanthology.org/2020.osact-1.2 (accessed on 11 November 2022).

23. Fouad, K.M.; Sabbeh, S.F.; Medhat, W. Arabic Fake News Detection Using Deep Learning. Comput. Mater. Contin. 2022, 71, 3647–3665.

24. Dahou, A.; Xiong, S.; Zhou, J.; Haddoud, M.H.; Duan, P. Word Embeddings and Convolutional Neural Network for Arabic Sentiment Classification. In Proceedings of the COLING 2016, the 26th International Conference on Computational Linguistics: Technical Papers, Osaka, Japan, 11–16 December 2016; pp. 2418–2427. Available online: https://aclanthology.org/C16-1228 (accessed on 15 December 2022).

25. Sallab, A.; Hajj, H.; Badaro, G.; Baly, R.; El Hajj, W.; Shaban, B.K. Deep Learning Models for Sentiment Analysis in Arabic. In Proceedings of the Second Workshop on Arabic Natural Language Processing; Association for Computational Linguistics, Beijing, China, 30 July 2015; pp. 9–17.

26. Abdul-Mageed, M.; Zhang, C.; Hashemi, A.; Nagoudi, E.M.B. AraNet: A Deep Learning Toolkit for Arabic Social Media. In Proceedings of the 4th Workshop on Open-Source Arabic Corpora and Processing Tools, with a Shared Task on Offensive Language Detection; European Language Resource Association: Marseille, France, 2020; pp. 16–23. Available online: https://aclanthology.org/2020.osact-1.3 (accessed on 15 December 2022).

27. Alayba, A.M.; Palade, V. Leveraging Arabic sentiment classification using an enhanced CNN-LSTM approach and effective Arabic text preparation. J. King Saud Univ. Comput. Inf. Sci. 2021, 34, 9710–9722.

28. Al-Azani, S.; El-Alfy, E.-S.M. Hybrid Deep Learning for Sentiment Polarity Determination of Arabic Microblogs. In Neural Information Processing; Lecture Notes in Computer Science; Liu, D., Xie, S., Li, Y., Zhao, D., El-Alfy, E.-S.M., Eds.; Springer International Publishing: Cham, Switzerland, 2017; Volume 10635, pp. 491–500.

29. Al-Smadi, M.; Qawasmeh, O.; Al-Ayyoub, M.; Jararweh, Y.; Gupta, B. Deep Recurrent neural network vs. support vector machine for aspect-based sentiment analysis of Arabic hotels’ reviews. J. Comput. Sci. 2018, 27, 386–393.

30. Safaya, A.; Abdullatif, M.; Yuret, D. KUISAIL at SemEval-2020 Task 12: BERT-CNN for Offensive Speech Identification in Social Media. arXiv 2020, arXiv:2007.13184.

31. Elnagar, A.; Khalifa, Y.S.; Einea, A. Hotel Arabic-Reviews Dataset Construction for Sentiment Analysis Applications. In Intelligent Natural Language Processing: Trends and Applications. Studies in Computational Intelligence; Shaalan, K., Hassanien, A., Tolba, F., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; Volume 740, pp. 35–52.

32. Alomari, K.M.; Elsherif, H.M.; Shaalan, K. Arabic Tweets Sentimental Analysis Using Machine Learning. In Proceedings of the International Conference on Industrial, Engineering and Other Applications of Applied Intelligent Systems, Arras, France, 27–30 June 2017.

33. Elmadany, A.; Mubarak, H.; Magdy, W. ArSAS: An Arabic Speech-Act and Sentiment Corpus of Tweets. In Proceedings of the 3rd Workshop on Open-Source Arabic Corpora and Processing Tools, Miyazaki, Japan, 15 January 2018; p. 20.

34. Nabil, M.; Aly, M.; Atiya, A. ASTD: Arabic Sentiment Tweets Dataset. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 2515–2519.

35. Kim, Y. Convolutional neural networks for sentence classification. arXiv 2014, arXiv:1408.5882.

36. Vizcarra, G.; Mauricio, A.; Mauricio, L. A deep learning approach for sentiment analysis in Spanish tweets. In International Conference on Artificial Neural Networks; Springer: Berlin/Heidelberg, Germany, 2018; pp. 622–629.

37. Heikal, M.; Torki, M.; El-Makky, N. Sentiment analysis of Arabic tweets using deep learning. Procedia Comput. Sci. 2018, 142, 114–122.

38. Alayba, A.M.; Palade, V.; England, M.; Iqbal, R. A combined CNN and LSTM model for Arabic sentiment analysis. In Proceedings of the International Cross-Domain Conference for Machine Learning and Knowledge Extraction, Hamburg, Germany, 27–30 August 2018; pp. 179–191.

39. Hourrane, O.; Idrissi, N.; Benlahmar, E.H. An empirical study of deep neural networks models for sentiment classification on movie reviews. In Proceedings of the 2019 1st International Conference on Smart Systems and Data Science (ICSSD), Rabat, Morocco, 3–4 October 2019.

40. Mohammed, A.; Kora, R. Deep learning approaches for Arabic sentiment analysis. Soc. Netw. Anal. Min. 2019, 9, 52.

| Study | Experimental Model | Shortcomings | Main Findings |
|---|---|---|---|
| [17] | | | |
| [18] | | | Achieved human-like translation results with a correlation between 0.75 and 0.79. |
| [20] | | | AraBERT achieved state-of-the-art performance on most tested Arabic NLP tasks. |
| [21] | Domain adversarial neural network (DANN) | | Success of the proposed domain adaptation method. |
| [22] | Compared various classifiers in addition to deep learning models (LSTM and BiLSTM) | | The model outperforms the baseline on both large and small datasets. |
| [24] | CNN | | The proposed scheme outperforms the existing methods on four out of five balanced and unbalanced datasets. |
| [25] | Bag-of-word, deep belief networks, deep auto encoders, and recursive auto encoder | | High improvement with the recursive auto encoder. |
| [26] | AraNet based on BERT | | High performance in detecting dialect, gender, emotion, irony, and sentiment from social media posts. |
| [27] | CNN + BiLSTM | | Demonstrated advanced results by combining CNN and BiLSTM to prepare Arabic text and better represent associated text features. |
| [28] | CNN + LSTM | | Promising results have been attained when combining LSTMs when compared with other models. |
| [29] | | | Results showed that the SVM approach outperforms the RNN approach in the research-investigated tasks, whereas the RNN execution time is swifter. |

| Dataset | Count | Class Labels | Vocab Size | Avg. Token Length | Max Text Length | Text Type |
|---|---|---|---|---|---|---|
| HARD | ~106K reviews | Positive: 52,849; Negative: 52,849 | 130,654 | 200 | 507 | MSA, Egyptian and Gulf dialects |
| Khooli | ~67K reviews | Negative: 33,333; Positive: 33,333; Mixed: 33,333 | 238,801 | 150 | 1445 | MSA and Egyptian dialects |
| AJGT | 1800 reviews | Negative: 900; Positive: 900 | 6806 | 40 | 129 | Modern Standard Arabic (MSA) or Jordanian dialect |
| ArSAS | ~20K reviews | Negative: 7384; Neutral: 6894; Positive: 4400; Mixed: 1219 | 62,861 | 50 | 288 | MSA, Egyptian and Gulf dialects |
| ASTD | 3315 reviews | Negative: 1684; Mixed: 832; Positive: 799 | 19,235 | 15 | 28 | Egyptian dialects |

| Batch Size | Optimizer | Epochs | Dropout | Classifier |
|---|---|---|---|---|
| 128 | Adam | 50 | 0.2 | Softmax |

| Actual Class | Predicted Class = yes | Predicted Class = no |
|---|---|---|
| Class = yes | TP (true positive) | FN (false negative) |
| Class = no | FP (false positive) | TN (true negative) |

| Metric | Embedding | HARD BiLSTM | HARD CNN | Khooli BiLSTM | Khooli CNN | ArSAS BiLSTM | ArSAS CNN | ASTD BiLSTM | ASTD CNN | AJGT BiLSTM | AJGT CNN |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Accuracy | FastText | 92.33% | 91.65% | 83.64% | 81.96% | 63.14% | 66.65% | 41.41% | 45.94% | 73.44% | 74.38% |
| Accuracy | AraVec | 91.68% | 90.83% | 81.56% | 78.29% | 63.65% | 65.16% | 39.84% | 40.20% | 71.66% | 74.16% |
| Accuracy | GloVe | 91.73% | 91.07% | 82.27% | 81.04% | 62.76% | 65.94% | 41.02% | 44.33% | 73.88% | 71.38% |
| Accuracy | AraBERT | 93.97% | 92.54% | 84.15% | 82.95% | 72.63% | 70.53% | 42.78% | 45.68% | 75.14% | 76.35% |
| Precision | FastText | 93.67% | 92.06% | 83.00% | 81.26% | 69.59% | 66.05% | 42.72% | 45.71% | 74.07% | 75.92% |
| Precision | AraVec | 91.14% | 90.45% | 80.23% | 79.21% | 64.07% | 64.19% | 40.41% | 41.22% | 71.03% | 73.11% |
| Precision | GloVe | 92.00% | 91.42% | 81.90% | 80.72% | 66.82% | 65.43% | 41.95% | 43.17% | 73.77% | 75.08% |
| Precision | AraBERT | 93.85% | 93.12% | 83.99% | 82.32% | 72.42% | 69.00% | 43.71% | 46.20% | 75.11% | 76.00% |
| Recall | FastText | 93.44% | 92.20% | 82.56% | 80.12% | 68.13% | 66.81% | 42.13% | 46.69% | 74.73% | 75.31% |
| Recall | AraVec | 91.04% | 90.31% | 80.72% | 78.74% | 64.25% | 63.71% | 40.09% | 41.84% | 73.48% | 72.51% |
| Recall | GloVe | 92.31% | 91.54% | 82.31% | 81.52% | 66.44% | 65.18% | 41.97% | 42.69% | 73.22% | 74.47% |
| Recall | AraBERT | 93.58% | 91.72% | 83.42% | 82.78% | 71.51% | 69.00% | 43.39% | 46.84% | 74.32% | 76.00% |
| F1-Measure | FastText | 93.55% | 92.13% | 81.96% | 81.44% | 68.85% | 65.28% | 41.58% | 46.19% | 73.90% | 74.76% |
| F1-Measure | AraVec | 91.69% | 91.38% | 82.28% | 80.97% | 64.31% | 65.95% | 40.25% | 41.03% | 72.25% | 75.31% |
| F1-Measure | GloVe | 90.89% | 90.48% | 81.47% | 78.13% | 66.12% | 64.30% | 41.66% | 46.19% | 73.14% | 74.22% |
| F1-Measure | AraBERT | 93.61% | 92.41% | 83.70% | 82.55% | 71.96% | 69.00% | 43.55% | 43.35% | 74.71% | 76.00% |

| Metric | Embedding | HARD BiLSTM | HARD CNN | Khooli BiLSTM | Khooli CNN | ArSAS BiLSTM | ArSAS CNN | ASTD BiLSTM | ASTD CNN | AJGT BiLSTM | AJGT CNN |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Accuracy | Baseline | 92.82% | 91.29% | 83.78% | 84.29% | 71.59% | 69.71% | 50.78% | 51.95% | 75.34% | 77.78% |
| Accuracy | FastText | 93.21% | 90.14% | 85.07% | 82.36% | 71.97% | 69.13% | 46.75% | 46.75% | 76.92% | 78.12% |
| Accuracy | Word2vec | 93.47% | 92.40% | 82.11% | 81.81% | 69.72% | 68.39% | 43.08% | 44.92% | 72.66% | 73.44% |
| Accuracy | GloVe | 92.91% | 91.14% | 81.48% | 79.40% | 68.08% | 67.72% | 45.13% | 46.77% | 73.88% | 77.97% |
| Accuracy | BERT | 94.25% | 92.75% | 84.97% | 83.54% | 73.35% | 72.07% | 47.91% | 49.00% | 78.76% | 80.76% |
| Precision | Baseline | 91.12% | 91.55% | 83.20% | 82.96% | 70.65% | 69.11% | 49.30% | 50.07% | 74.44% | 75.28% |
| Precision | FastText | 94.00% | 93.00% | 83.81% | 82.00% | 70.00% | 69.00% | 46.00% | 48.00% | 77.87% | 78.00% |
| Precision | Word2vec | 93.51% | 92.00% | 82.33% | 81.03% | 71.00% | 69.00% | 43.00% | 46.00% | 72.53% | 74.00% |
| Precision | GloVe | 92.17% | 91.00% | 81.00% | 79.78% | 68.00% | 64.00% | 45.34% | 46.02% | 74.15% | 76.00% |
| Precision | BERT | 95.00% | 93.00% | 85.23% | 84.00% | 72.00% | 70.00% | 46.00% | 48.00% | 77.90% | 79.00% |
| Recall | Baseline | 93.13% | 92.91% | 86.40% | 83.45% | 71.00% | 69.32% | 56.78% | 53.00% | 80.00% | 80.00% |
| Recall | FastText | 93.00% | 90.00% | 82.97% | 82.00% | 70.00% | 67.00% | 46.00% | 47.00% | 78.00% | 78.00% |
| Recall | Word2vec | 93.00% | 92.00% | 80.00% | 78.00% | 70.00% | 68.00% | 44.71% | 45.63% | 73.28% | 73.00% |
| Recall | GloVe | 93.88% | 91.00% | 82.56% | 82.00% | 68.00% | 64.00% | 44.00% | 45.19% | 78.00% | 77.80% |
| Recall | BERT | 94.00% | 92.00% | 85.11% | 83.00% | 71.00% | 69.00% | 45.00% | 47.41% | 73.28% | 79.00% |
| F1-Measure | Baseline | 92.12% | 90.22% | 80.74% | 80.42% | 69.03% | 67.21% | 44.74% | 46.09% | 76.12% | 77.17% |
| F1-Measure | FastText | 93.50% | 92.00% | 83.97% | 83.06% | 71.80% | 69.99% | 46.30% | 47.49% | 77.93% | 78.00% |
| F1-Measure | Word2vec | 93.00% | 91.50% | 82.70% | 82.03% | 70.50% | 68.50% | 43.84% | 45.81% | 72.90% | 73.50% |
| F1-Measure | GloVe | 92.52% | 90.52% | 81.56% | 80.51% | 68.78% | 66.80% | 44.62% | 45.60% | 75.03% | 76.89% |
| F1-Measure | BERT | 94.50% | 92.50% | 85.11% | 83.00% | 72.50% | 69.50% | 47.49% | 48.70% | 75.52% | 79.00% |

| Embedding (accuracy) | HARD BiLSTM | HARD CNN | Khooli BiLSTM | Khooli CNN | ArSAS BiLSTM | ArSAS CNN | ASTD BiLSTM | ASTD CNN | AJGT BiLSTM | AJGT CNN |
|---|---|---|---|---|---|---|---|---|---|---|
| BERT | 94.25% | 92.75% | 84.97% | 83.54% | 73.35% | 72.07% | 47.91% | 49.00% | 78.76% | 80.76% |
| AraBERT | 93.97% | 92.54% | 84.15% | 82.95% | 72.63% | 70.53% | 42.78% | 45.68% | 75.14% | 76.35% |
| Train_FastText | 93.21% | 90.14% | 85.07% | 82.36% | 71.97% | 69.13% | 46.75% | 46.75% | 76.92% | 78.12% |
| FastText | 92.33% | 91.65% | 83.64% | 81.96% | 63.14% | 66.65% | 41.41% | 45.94% | 73.44% | 74.38% |
| Word2vec | 93.47% | 92.40% | 82.11% | 81.81% | 69.72% | 68.39% | 43.08% | 44.92% | 72.66% | 73.44% |
| AraVec | 91.68% | 90.83% | 81.56% | 78.29% | 63.65% | 65.16% | 39.84% | 40.20% | 71.66% | 74.16% |
| Train_GloVe | 92.91% | 91.14% | 81.48% | 79.40% | 68.08% | 67.72% | 45.13% | 46.77% | 73.88% | 77.97% |
| GloVe | 91.73% | 91.07% | 82.27% | 81.04% | 62.76% | 65.94% | 41.02% | 44.33% | 73.88% | 71.38% |

| Embedding (accuracy) | HARD BiLSTM | HARD CNN | Khooli BiLSTM | Khooli CNN | ArSAS BiLSTM | ArSAS CNN | ASTD BiLSTM | ASTD CNN | AJGT BiLSTM | AJGT CNN |
|---|---|---|---|---|---|---|---|---|---|---|
| FastText | 92.33% | 91.65% | 83.64% | 81.96% | 63.14% | 66.65% | 41.41% | 45.94% | 73.44% | 74.38% |
| AraVec | 91.68% | 90.83% | 81.56% | 78.29% | 63.65% | 65.16% | 39.84% | 40.20% | 71.66% | 74.16% |
| GloVe | 91.73% | 91.07% | 82.27% | 81.04% | 62.76% | 65.94% | 41.02% | 44.33% | 73.88% | 71.38% |
| Train_FastText | 93.21% | 90.14% | 85.07% | 82.36% | 71.97% | 69.13% | 46.75% | 46.75% | 76.92% | 78.12% |
| Word2vec | 93.47% | 92.40% | 82.11% | 81.81% | 69.72% | 68.39% | 43.08% | 44.92% | 72.66% | 73.44% |
| Train_GloVe | 92.91% | 91.14% | 81.48% | 79.40% | 68.08% | 67.72% | 45.13% | 46.77% | 73.88% | 77.97% |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sabbeh, S.F.; Fasihuddin, H.A. A Comparative Analysis of Word Embedding and Deep Learning for Arabic Sentiment Classification. Electronics 2023, 12, 1425. https://doi.org/10.3390/electronics12061425
