The BciAi4SLA Project: Towards a User-Centered BCI

Abstract

1. Introduction

2. State-of-the-Art

3. Background and Research Project

- PHASE 1: STUDY AND IMPLEMENTATION OF COGNITIVE TESTS BASED ON BCI. The main goals of this initial phase were to study, design, and implement a set of cognitive tests aimed at evaluating the best mental activities detectable through BCIs (Section 4);

- PHASE 2: TESTING. The main goals of the second phase were to run the tests selected and implemented in Phase 1, first with neurotypical users and then with patients suffering from ALS in LIS condition, with the support of the University Hospital “Città della Salute e della Scienza” of Turin (https://www.cittadellasalute.to.it/, accessed on 23 December 2022) and of the CRESLA, partners of the project (Section 6);

- PHASE 3: ANALYSIS OF COLLECTED DATA. Raw data (brain waves) collected through the BCI headsets were first filtered and then passed to classification algorithms to extract the input data as accurately as possible. The processed and classified signals were then compared with the requested tasks in order to evaluate the percentage of correctly classified data (accuracy) (Section 5 and Section 6);

- PHASE 4: BCI PLATFORM DEVELOPMENT. Following the results of data analysis, we are developing, from a user-centered perspective, a software platform (BciAi4Sla) that will allow, through the BCI devices, online interaction between the subject with ALS and a dedicated communication system (Section 7).

4. Study and Implementation of Cognitive Tests

4.1. Study of Cognitive Tests Based on BCI

4.1.1. Motor Imagery Task

4.1.2. Spatial Navigation Imagery Task

4.1.3. Music Tune Imagery Task

4.1.4. Face Imagery Task

4.1.5. Object Rotation Imagery Task

4.1.6. Math Counting Task

5. Processing and Implementations

5.1. Connection to Emotiv Epoc+

- Creation of the connection with the WebSocket protocol;

- Authorization of communication by passing information, such as license, client ID, and client secret (all obtainable from the purchased license);

- Placing the headset on the subject;

- Creation of the recording session;

- Start recording with the insertion of markers at the beginning of each task;

- End of the recording, followed by the disconnection of the headset and a request to export the CSV file with the raw data.
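
The steps above can be sketched as a sequence of JSON-RPC messages sent over the WebSocket connection. This is a minimal sketch that only builds the request payloads and opens no connection. The endpoint `wss://localhost:6868` and the method and parameter names (`authorize`, `createSession`, `createRecord`, `injectMarker`, `stopRecord`, `exportRecord`) are assumptions based on Emotiv's Cortex API and should be checked against its current documentation. The helper names and request ids are hypothetical.

```python
import json
import time

# Assumed endpoint of the locally running Cortex service.
CORTEX_URL = "wss://localhost:6868"


def rpc(request_id, method, **params):
    """Serialize one JSON-RPC 2.0 request."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}
    )


def authorize(client_id, client_secret, license_key):
    # Credentials come with the purchased license.
    return rpc(1, "authorize", clientId=client_id,
               clientSecret=client_secret, license=license_key)


def create_session(token, headset_id):
    # The token is returned by the service in reply to authorize().
    return rpc(2, "createSession", cortexToken=token,
               headset=headset_id, status="active")


def create_record(token, session_id, title):
    return rpc(3, "createRecord", cortexToken=token,
               session=session_id, title=title)


def inject_marker(token, session_id, label, value, time_ms=None):
    # One marker is injected at the beginning of each task.
    if time_ms is None:
        time_ms = int(time.time() * 1000)
    return rpc(4, "injectMarker", cortexToken=token, session=session_id,
               label=label, value=value, time=time_ms)


def stop_record(token, session_id):
    return rpc(5, "stopRecord", cortexToken=token, session=session_id)


def export_record(token, record_id, folder):
    # Requests the raw data as a CSV file.
    return rpc(6, "exportRecord", cortexToken=token, recordIds=[record_id],
               folder=folder, format="CSV", streamTypes=["EEG"], version="V2")
```

Each string would be sent in order over the open WebSocket, reading the reply to `authorize` for the token and the reply to `createSession` for the session id before building the next message.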

5.2. Connection to OpenBCI Mark IV

- Creation of the connection with the headset via USB dongle;

- Acquisition of the information of the headset in use (such as headset id, serial port, IP address, and IP port);

- Placing the headset on the subject;

- Creation of the recording session;

- Start recording with the insertion of markers at the beginning of each trial;

- End of the recording; the CSV file is automatically parsed and saved.
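
The marker-based recording in the last steps can be illustrated with a small sketch, assuming a stream of timestamped samples. It does not use the OpenBCI software. The class `MarkedRecorder`, its methods, and the CSV column names are hypothetical and only show how a marker can tag the first sample of each trial before the data are saved as CSV.

```python
import csv
import io


class MarkedRecorder:
    """Collects samples and tags the first sample of each trial with a marker."""

    def __init__(self, n_channels):
        self.n_channels = n_channels
        self.rows = []
        self._pending_marker = ""

    def start_trial(self, marker):
        # The marker is attached to the next sample that arrives.
        self._pending_marker = marker

    def add_sample(self, timestamp, values):
        if len(values) != self.n_channels:
            raise ValueError("unexpected number of channels")
        self.rows.append([timestamp, *values, self._pending_marker])
        self._pending_marker = ""  # only the first sample of a trial is tagged

    def write_csv(self, stream):
        writer = csv.writer(stream)
        channels = [f"ch{i + 1}" for i in range(self.n_channels)]
        writer.writerow(["timestamp", *channels, "marker"])
        writer.writerows(self.rows)


# Usage with two channels and one trial of imagined left movement.
recorder = MarkedRecorder(n_channels=2)
recorder.start_trial("left")
recorder.add_sample(0.0, [1.0, 2.0])
recorder.add_sample(0.004, [1.1, 2.1])
buffer = io.StringIO()
recorder.write_csv(buffer)
```

Keeping the marker in its own column lets the later processing steps cut the recording into trials without a separate event file.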

5.3. General Procedure for Data Acquisition, Processing, and Training

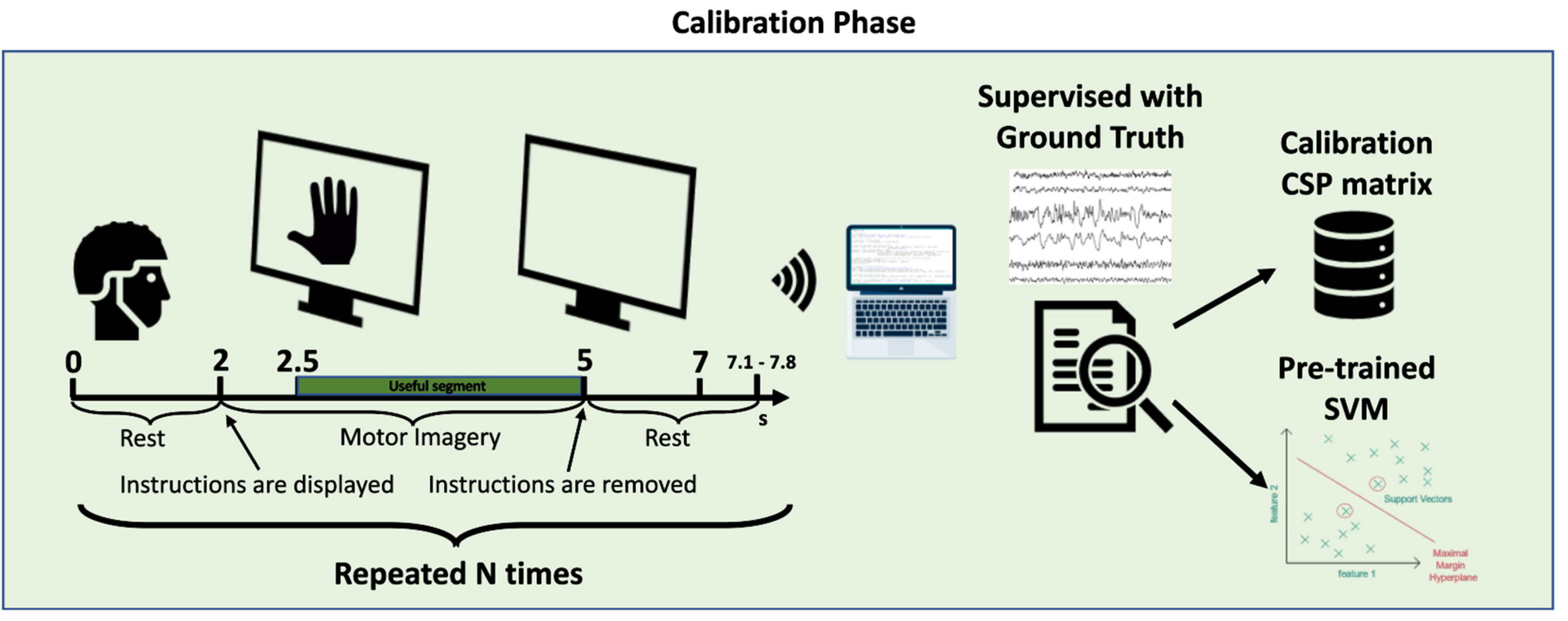

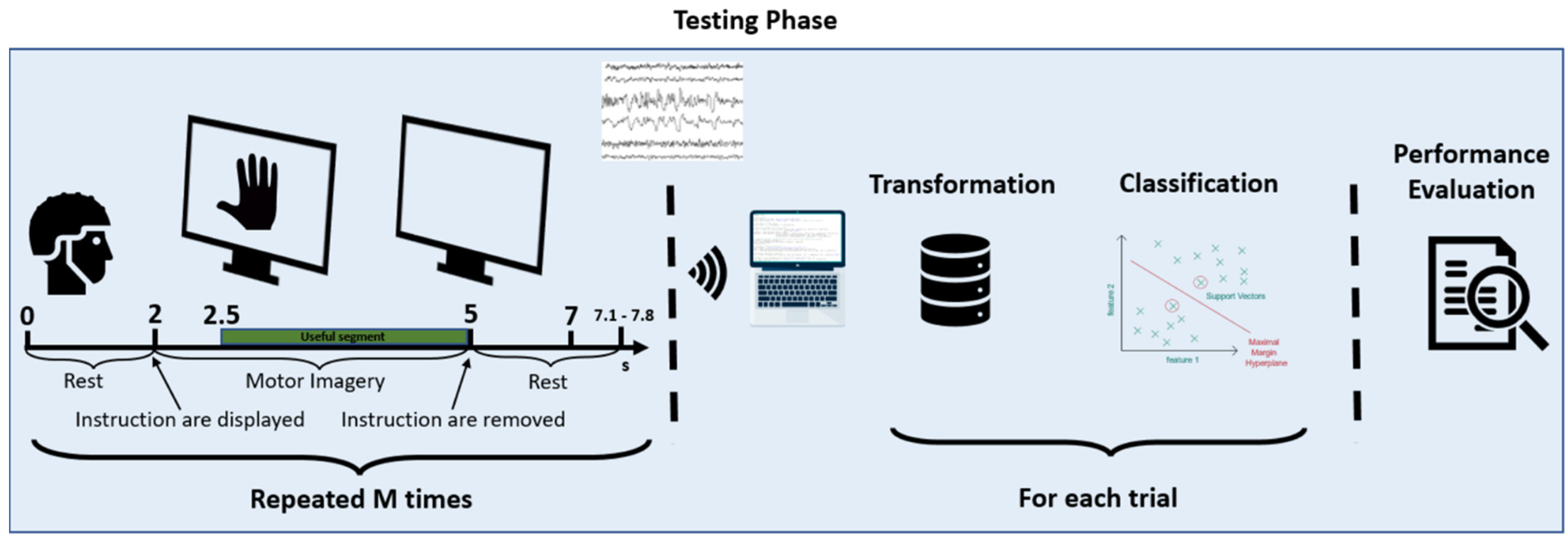

5.3.1. Experimental Protocol

5.3.2. Data Filtering

5.3.3. Extraction of Central Sequences of Imagined Movement

5.3.4. Signal Processing

5.3.5. Feature Extraction

5.3.6. Classification

- The training dataset is parsed, and a CSV file with the union of all the training data is generated as output;

- This CSV file is processed by the signal processing module. The data are cleaned with the various filters described above, and features are extracted from them. Tasks are also divided into imagined-left and imagined-right movements;

- The classifier is trained with the SVM algorithm. To tune the classifier's hyperparameters on the training set, we used the Python Optunity library (https://pypi.org/project/Optunity/, accessed on 23 December 2022), which, given a dataset as input, searches for the best values of the C and gamma parameters of the Radial Basis Function (RBF) kernel SVM. Intuitively, the gamma parameter defines how far the influence of a single training example reaches, with low values meaning ‘far’ and high values meaning ‘close’. The gamma parameter can be seen as the inverse of the radius of influence of the samples selected by the model as support vectors. The C parameter trades off the correct classification of training examples against the maximization of the decision function’s margin. For larger values of C, a smaller margin will be accepted if the decision function is better at classifying all training points correctly. A lower C will encourage a larger margin, and therefore a simpler decision function, at the cost of training accuracy. In other words, C behaves as a regularization parameter in the SVM (source: https://scikit-learn.org/stable/auto_examples/svm/plot_rbf_parameters.html, accessed on 23 December 2022);

- The output of the three steps above is two persistent Python objects: the classifier and the W matrix created by the CSP algorithm;

- Finally, the trained classifier is loaded, and the accuracy test is performed on the test dataset, parsed and filtered with the same filters used for the training set, with the difference that the previously-created W matrix is used.
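
The role of the W matrix in the steps above can be illustrated with a minimal NumPy sketch of a two-class CSP. It is a textbook formulation run on synthetic data and is not the project's actual implementation. The function names, the trial shapes, the log-variance features, and the use of `pickle` for persistence are all assumptions. The key point it shows is that W is fitted on the training trials only and then reused unchanged on the test trials.

```python
import pickle

import numpy as np


def mean_covariance(trials):
    """Average trace-normalized covariance; trials: (n_trials, n_channels, n_samples)."""
    covs = []
    for x in trials:
        c = x @ x.T
        covs.append(c / np.trace(c))
    return np.mean(covs, axis=0)


def fit_csp(left_trials, right_trials, n_filters):
    """Return W with shape (n_channels, n_filters); each column is a spatial filter."""
    c_left = mean_covariance(left_trials)
    c_right = mean_covariance(right_trials)
    n_channels = c_left.shape[0]
    if n_filters > n_channels:
        raise ValueError("n_filters cannot exceed the number of channels")
    # Whiten the composite covariance of the two classes.
    evals, evecs = np.linalg.eigh(c_left + c_right)
    whitening = np.diag(evals ** -0.5) @ evecs.T
    # Eigenvectors of the whitened left-class covariance (ascending eigenvalues).
    _, b = np.linalg.eigh(whitening @ c_left @ whitening.T)
    filters = whitening.T @ b
    # Keep filters from both ends of the spectrum, where the classes differ most.
    half = n_filters // 2
    keep = list(range(half)) + list(range(n_channels - (n_filters - half), n_channels))
    return filters[:, keep]


def log_variance_features(trials, w):
    """Project each trial with W and return the log of the normalized variances."""
    feats = []
    for x in trials:
        var = np.var(w.T @ x, axis=1)
        feats.append(np.log(var / var.sum()))
    return np.array(feats)


# Synthetic stand-in for EEG: one channel carries more power in each class.
rng = np.random.default_rng(0)


def make_trials(n_trials, strong_channel):
    x = rng.standard_normal((n_trials, 4, 200))
    x[:, strong_channel, :] *= 5.0
    return x


# Training: fit W and persist it (a byte string stands in for a file here).
w = fit_csp(make_trials(20, 0), make_trials(20, 3), n_filters=2)
stored_w = pickle.dumps(w)

# Testing: reload the previously created W instead of fitting a new one.
w_test = pickle.loads(stored_w)
test_left = log_variance_features(make_trials(10, 0), w_test)
test_right = log_variance_features(make_trials(10, 3), w_test)
```

The resulting feature vectors are what an RBF-kernel SVM would be trained and evaluated on. Refitting W on the test set would leak test information into the spatial filters, which is why the stored matrix is reused.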

6. Processing Settings and Assessment of User’s Performance

- A brief explanation of the test objective;

- An explanation with the demonstration of the movement to be made (both real and then imagined);

- A few minutes of free practice of the movement (both real and then imagined); at this point, the subject started the protocol described above;

- The protocol starts with a slideshow of pre-established images, as described above.

- Tuning of training set/test set;

- Tuning of weights and filters;

- Real-time classification.

6.1. Training Set/Test Set

6.2. Tuning of Weights and Filters

- The classifier’s weights: either uniform, which is equivalent to applying no weights, or logarithmic, computed as the natural (Napierian) logarithm of each input value;

- The number of filters, namely, the number of columns of the W matrix produced by the CSP step described in Section 5.3.4: 6, 8, 9, 10, 11, and 12.
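
As a worked illustration of how these two settings can be compared, the sketch below uses the accuracy values reported in this article's tables on the number of filters and on logarithmic versus uniform weights. The selection rule (highest accuracy, with ties broken by the smaller number of filters) and the function names are assumptions made for the example, not the authors' stated procedure.

```python
# Accuracy per number of CSP filters, copied from the corresponding table.
ACCURACY_BY_FILTERS = {6: 0.609, 8: 0.665, 9: 0.665, 10: 0.626, 11: 0.624, 12: 0.599}

# Per-subject accuracy for the two weighting schemes, copied from the table.
ACCURACY_BY_WEIGHT = {
    "logarithmic": [0.738, 0.371, 0.529, 0.408, 0.796],
    "uniform": [0.812, 0.454, 0.563, 0.450, 0.892],
}


def best_filter_count(scores):
    """Return the smallest filter count that reaches the highest accuracy."""
    best = max(scores.values())
    return min(n for n, acc in scores.items() if acc == best)


def mean_accuracy(values):
    return sum(values) / len(values)


def best_weighting(scores):
    """Return the weighting scheme with the highest mean accuracy."""
    return max(scores, key=lambda name: mean_accuracy(scores[name]))


chosen_filters = best_filter_count(ACCURACY_BY_FILTERS)
chosen_weighting = best_weighting(ACCURACY_BY_WEIGHT)
```

With the reported values, this rule selects 8 filters and uniform weights.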

6.3. Real-Time Evaluations

6.3.1. Real-Time Evaluation with OpenBCI Mark IV

6.3.2. Apparatus OpenBCI Mark IV

6.4. Discussion

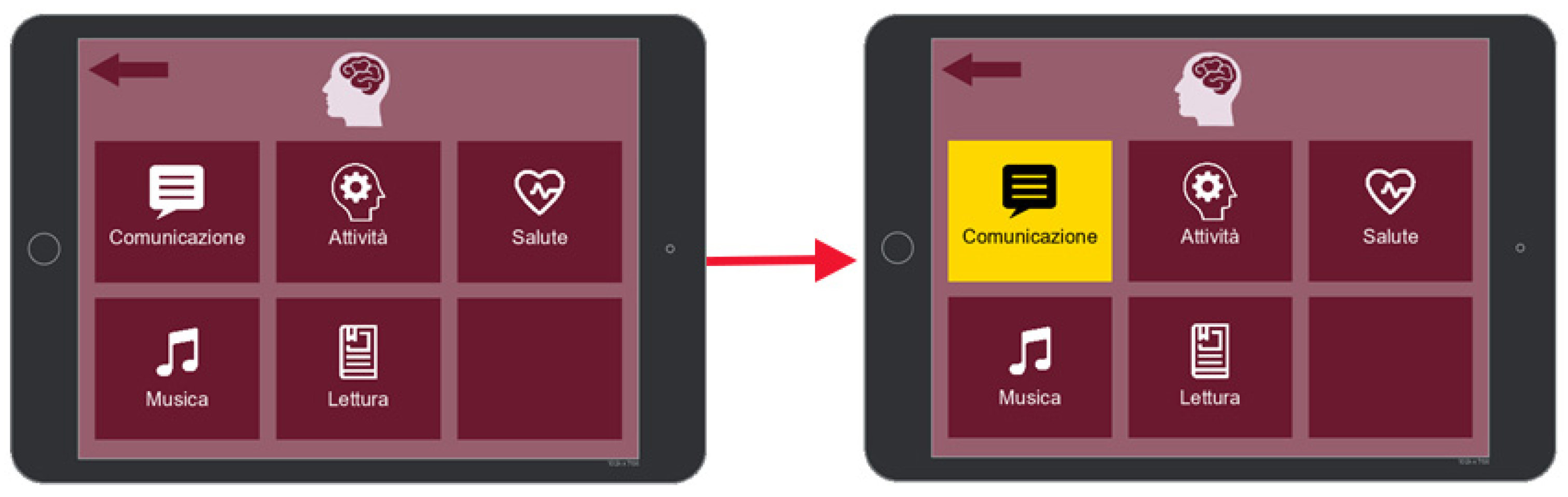

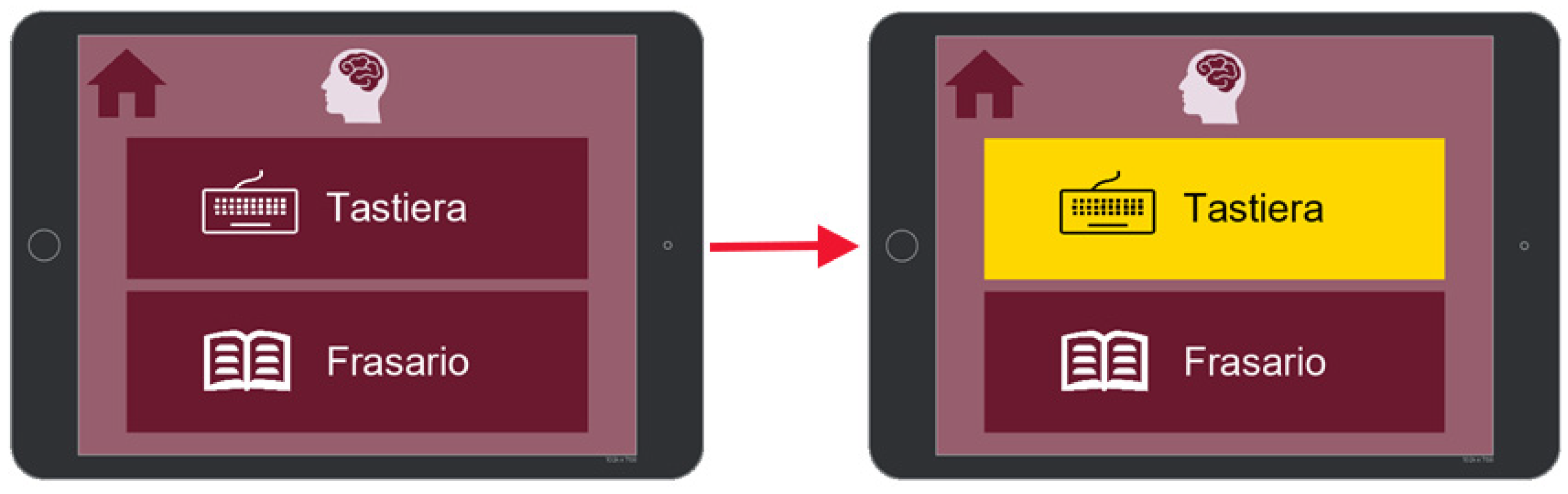

7. BciAi4Sla Proof of Concept Prototype

- Communication;

- Activities;

- Health;

- Music;

- Reading.

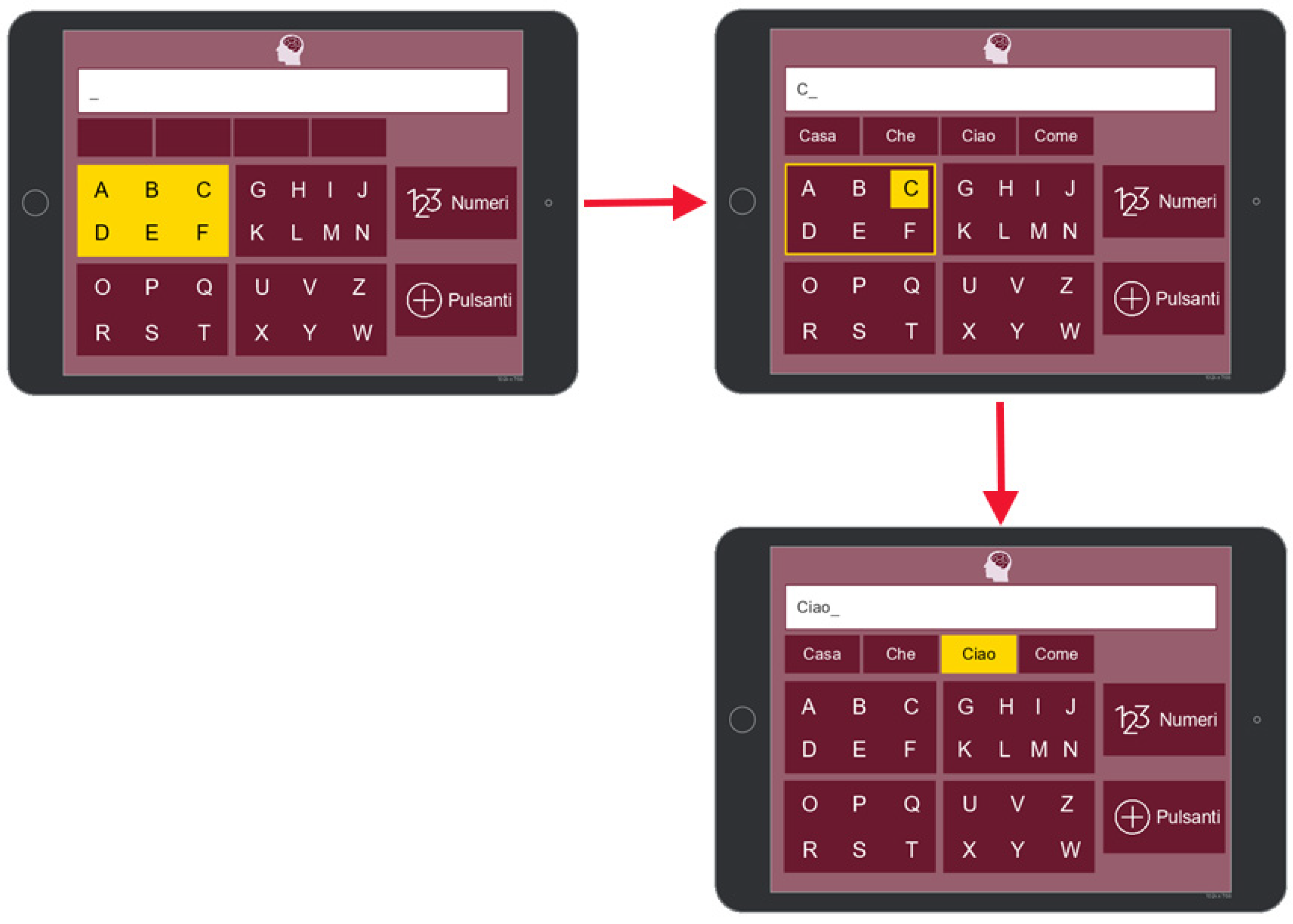

7.1. Communication

7.2. Activities

7.3. Health

7.4. Music and Reading

7.5. Settings

- Adjust the brightness and zoom;

- Add or remove categories or sub-categories on the home page so that the patient can reach the most important actions more quickly;

- Set the order of the sections;

- Add or remove pre-set phrases and possible answers in the various categories (activities, phrasebook, health, etc.);

- Load or remove songs from the playlist;

- Load or remove e-books;

- Set different background and foreground colors;

- Increase or decrease the font size, etc.

8. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Subjects | 80–20% | 60–40% | 40–60% | 20–80% |
|---|---|---|---|---|
| subject 1 | 0.812 | 0.729 | 0.699 | 0.633 |
| subject 2 | 0.645 | 0.614 | 0.573 | 0.565 |
| subject 3 | 0.729 | 0.666 | 0.583 | 0.552 |
| average | 0.73 | 0.67 | 0.62 | 0.58 |

| Subjects | Accuracy |
|---|---|
| subject 1 | 0.83 |
| subject 2 | 0.642 |
| subject 3 | 0.72 |
| average | 0.73 |

| Subjects | Logarithmic Weight | Uniform Weight |
|---|---|---|
| subject 1 | 0.738 | 0.812 |
| subject 2 | 0.371 | 0.454 |
| subject 3 | 0.529 | 0.563 |
| subject 4 | 0.408 | 0.450 |
| subject 5 | 0.796 | 0.892 |
| average | 0.569 | 0.634 |

| Number of Filters | Accuracy |
|---|---|
| 6 | 0.609 |
| 8 | 0.665 |
| 9 | 0.665 |
| 10 | 0.626 |
| 11 | 0.624 |
| 12 | 0.599 |

| Subjects | Accuracy |
|---|---|
| subject 1 | 0.78 |
| subject 2 | 0.88 |
| subject 3 | 0.83 |
| subject 4 | 0.85 |
| subject 5 | 0.62 |
| subject 6 | 0.73 |
| subject 7 | 0.74 |
| subject 8 | 0.6 |
| average | 0.75375 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gena, C.; Hilviu, D.; Chiarion, G.; Roatta, S.; Bosco, F.M.; Calvo, A.; Mattutino, C.; Vincenzi, S. The BciAi4SLA Project: Towards a User-Centered BCI. Electronics 2023, 12, 1234. https://doi.org/10.3390/electronics12051234