Abstract

Farmland boundary information plays a key role in agricultural remote sensing and is of great importance to modern agriculture. In this review, we collect relevant domestic and international research in this field, and we systematically assess the farmland boundary extraction process, detection algorithms, and influencing factors. First, we discuss the five parts of the extraction process: (1) image acquisition; (2) preprocessing; (3) detection algorithms; (4) postprocessing; (5) evaluation. Second, we discuss the detection algorithms in detail. These can be divided into four types: (1) low-level feature extraction algorithms, which consider only the boundary features; (2) high-level feature extraction algorithms, which consider boundary information and other image information simultaneously; (3) visual hierarchy extraction algorithms, which simulate biological vision systems; (4) boundary object extraction algorithms, which extract boundary objects that can be regarded as boundary lines. Each type can be further subdivided into several subclasses. Third, we discuss the technical and natural factors that affect boundary extraction. Finally, we summarize the development history of this field and analyze its existing problems, such as the lack of algorithms adapted to higher-resolution images, the lack of algorithms with good practical applicability, and the lack of a unified and effective evaluation index system.

1. Introduction

Timely and accurate agricultural land use information is an important prerequisite for agricultural informatization and modernization, and it is also of great strategic relevance for macro-agricultural policy formulation, agricultural planning and management, agricultural resource protection, and sustainable development [1]. Because it observes surface information over large areas in a timely, economical, and nondestructive way, remote sensing has become one of the most effective technical means for monitoring cultivated land [2].

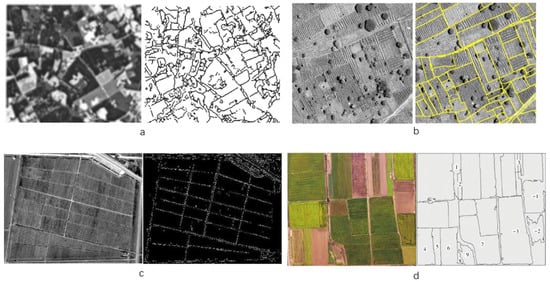

Farmland boundary information is an important part of agricultural land use information, yet there is currently no authoritative definition of it. In this paper, we define the real-world agricultural farmland boundary as the location where the crop type changes [3]. In remote sensing imagery, farmland boundaries correspond to discontinuities in image features such as grayscale, color, and texture [4]. In specific studies, farmland boundaries in remote sensing imagery take four forms: (1) threads in farmland imagery [3] (Figure 1a); (2) demarcation lines between farmlands [5] (Figure 1b); (3) boundary objects between farmlands [6] (Figure 1c); (4) perimeter boundaries of farmland blocks [7] (Figure 1d). The second and fourth types differ as follows: in the second type, each edge is determined by the farmlands on both of its sides, whereas in the fourth type, each boundary ring is determined by the single farmland block it encloses.

Figure 1.

Different forms of farmland boundaries in remote sensing imagery: (a) threads in farmland imagery [3]; (b) demarcation lines between farmlands [5]; (c) boundary objects between farmlands [6]; (d) perimeter boundaries of farmland blocks [7] (the numbers in (d) identify individual farmland blocks).

Field boundary extraction based on remote sensing images refers to the vectorization and digitization of field boundaries from aerial and aerospace images [8]. The technology is divided into human-based manual digitization methods [9,10,11] and computer-based semiautomatic or fully automatic image segmentation methods. Manual digitization refers to the visual interpretation of high-spatial-resolution images by professionals, who draw on prior knowledge and the principle of pixel similarity to extract complete and accurate field boundaries [12]. However, for boundary data covering large areas, manual digitization consumes a great deal of time and material resources, so automated extraction methods are preferable. In a narrow sense, boundary extraction technology refers to recognition processes aimed directly at extracting boundaries, such as the various edge detection operators, which directly identify the dividing lines between objects in the image. The recognition results correspond to boundary lines in the image; however, these methods do not consider whether a boundary forms a closed shape or whether noise in the original image needs to be corrected during recognition. In a broad sense, boundary extraction technology refers to the full range of techniques that researchers use to identify boundaries, generally including preprocessing, postprocessing, and other steps that make the results more practical. An example is image segmentation technology, which divides the image into nonoverlapping closed objects and discards meaningless noise points and edge lines. In this paper, we primarily discuss automatic field boundary recognition and extraction technology in the broad sense.

Many previous researchers have covered this field, and their work mainly consists of reviews on image segmentation [13,14,15,16,17,18,19,20,21] and reviews of agricultural remote sensing [2,16,22]; however, there is still no dedicated review of farmland boundary extraction based on remote sensing images. Owing to limited space, neither of these two types of reviews focuses much on boundary extraction in agricultural remote sensing. Reviews written from the image segmentation perspective are often general technical analyses in idealized scenarios [13,18,19,23,24] or surveys of image segmentation applications in different fields [14,17], with little analysis devoted to agriculture. Reviews on agricultural remote sensing [2,16] describe its applications and monitoring technology in detail but do not explain the image segmentation problem in depth. In this paper, we address the shortcomings of both types of reviews by discussing field boundary extraction technology for agricultural remote sensing images in more depth.

The extraction process involves five parts: (1) image acquisition; (2) image preprocessing; (3) detection algorithms; (4) postprocessing; (5) evaluation. In Section 2, we describe four of these parts, all except the detection algorithms. Because detection algorithms are the core of the boundary extraction process, we discuss them separately in Section 3, covering low-level feature extraction algorithms, high-level feature extraction algorithms, visual hierarchy extraction algorithms, and boundary object extraction algorithms. In Section 4, we discuss the technical and natural factors that affect boundary extraction. Finally, in Section 5, we summarize the development of farmland boundary recognition and extraction technology based on remote sensing images, and we analyze some of the existing problems.

2. Extraction Process

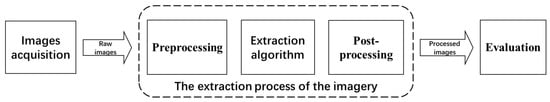

In general, the whole process of field boundary extraction based on remote sensing images can be divided into five parts: (1) image acquisition; (2) preprocessing; (3) detection algorithms; (4) postprocessing; (5) evaluation. The acquired remote sensing images fall into two categories: aerospace images (mainly from satellites) and aerial images (mainly from unmanned aerial vehicles, UAVs). Raw remote sensing images should undergo appropriate preprocessing to minimize image errors, filter image noise, and strengthen image features so that they meet the input requirements of the detection algorithm. Because the output of the detection algorithm usually differs from the target requirements, postprocessing is then required. Finally, the extraction process, especially the detection algorithm, should be evaluated systematically. The detection algorithm is the most critical of the five parts and plays a decisive role in the recognition results; the preprocessing, postprocessing, and even the evaluation are selected according to it. We therefore elaborate on the detection algorithm separately in Section 3. We present a schematic diagram of the boundary extraction process in Figure 2.

Figure 2.

The flow of image boundary extraction.

2.1. Image Acquisition

Before 2015, images were mainly captured by satellites, and a small number were captured by dedicated remote sensing aircraft [6,25]. Their resolution was several meters to tens of meters, which made it difficult to identify field boundaries accurately, and large satellite geopositioning errors [26] made accurate boundary extraction even harder. Some scholars [27] argue that imagery with low spatial resolution (tens of meters) is of little value for delineating field boundaries. High-resolution satellite images can generally reach submeter resolution [11,28,29], which makes accurate identification of field boundaries much easier. At the same time, UAVs are developing rapidly, and research increasingly relies on them. UAV imagery generally reaches decimeter resolution, which not only allows field boundaries to be identified accurately but also makes it possible to directly identify boundary objects such as footpaths, ditches, and roads. As technology develops, remote sensing images of fields will reach even higher resolutions. Many teams already acquire UAV images at centimeter resolution [25,30,31,32,33], so image resolution is no longer a major obstacle to field boundary extraction.

We compiled the aerial and spaceborne remote sensing images used in the relevant literature. Table 1 introduces the satellites from which the spaceborne images were obtained.

Table 1.

Types, names, and resolutions of remote sensing satellites used in the literature.

As shown in Table 1, satellite remote sensing imagery comes primarily from large national government or commercial satellites. QuickBird imagery is the most frequently used, probably because of its highly competitive resolution. Official satellite images are freely available in some countries; however, their resolutions are often lower than those of commercial satellites.

Table 2 introduces the UAVs from which the aerial remote sensing images were obtained.

Table 2.

Names, sensors, resolutions, and altitudes of remote sensing UAVs used in the literature.

As shown in Table 2, the resolution of UAV images is significantly higher than that of aerospace images. Another advantage of drone imagery is that the resolution can be adjusted by changing the height of the drone, which allows for more flexible resolution acquisition.
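To make the dependence of resolution on flight height concrete, the following minimal sketch evaluates the standard ground sampling distance (GSD) relation for a nadir-looking frame camera. The sensor width, focal length, and image width are hypothetical values chosen for illustration, not parameters of any UAV in Table 2.

```python
def ground_sampling_distance(altitude_m: float, focal_length_mm: float,
                             sensor_width_mm: float, image_width_px: int) -> float:
    """Metres on the ground covered by one pixel for a nadir-looking frame camera.

    GSD = (sensor width * flight altitude) / (focal length * image width)
    """
    if min(altitude_m, focal_length_mm, sensor_width_mm, image_width_px) <= 0:
        raise ValueError("all parameters must be positive")
    # sensor width and focal length are both in mm, so their ratio is unitless
    return (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)


# Hypothetical camera: 13.2 mm sensor width, 8.8 mm focal length, 5472-pixel-wide images
for altitude in (30, 60, 120):
    gsd_cm = 100 * ground_sampling_distance(altitude, 8.8, 13.2, 5472)
    print(f"{altitude:>4} m altitude -> {gsd_cm:.2f} cm/pixel")
```

Because GSD scales linearly with altitude, doubling the flight height doubles the ground size of each pixel while enlarging the area covered by each image.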

Satellite imagery and drone imagery each have their own advantages. Satellite imagery covers a wide area, spans a wide range of wavelengths, and provides multitemporal images, which allow field boundaries to be extracted from different spectral bands and time series [46,47,48]. Drones produce high-resolution images and can also capture multispectral images, similar to satellites [60]. Moreover, drone acquisition is more efficient and better targeted: the team can carry the equipment, acquire images when needed, and modify the drone as required. Because satellite imagery is not acquired in real time, drone photography is often required to capture recent field changes. However, UAV acquisition requires the team to travel to the target area and is affected by meteorological conditions and flight and imaging technique, so its potential cost can be high; for tasks with low resolution requirements, satellite imagery should therefore be considered.

2.2. Preprocessing

Preprocessing refers to the correction and organization of acquired images to make them more accurate and reliable and therefore easier for the detection algorithm to process. It can be divided into targeted preprocessing and general preprocessing.

Targeted preprocessing refers to preprocessing methods that are only used for specific types of detection algorithms and are difficult to use for others. Because of their high correlation with detection algorithms, in this article, we consider them to be part of the detection algorithms, and so we do not cover them in detail in this section.

General preprocessing depends only weakly on any particular detection algorithm. It primarily involves correction of image accuracy, contrast enhancement, fusion of multiple images, and selection of image size, and it can be freely combined with different images and algorithms as needed. For example, satellite remote sensing images may undergo radiometric calibration, atmospheric correction, image clipping, minimum noise fraction (MNF) transformation, and other operations [3,11,29]. Aerial remote sensing images may undergo matching, stitching, radiometric correction, geometric correction, orthorectification, and other operations [6,61,62]. Multiband images may undergo band selection or image fusion [29,55,63]. Images of unsuitable size may undergo cropping [7], matching and stitching [6], downsampling, or chunking [30].
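The size-related operations can be sketched as follows, assuming the scene is held in a NumPy array of shape (rows, cols) or (rows, cols, bands). The function names `tile_image` and `downsample_mean` are hypothetical, and block averaging is only one of several possible downsampling schemes; in practice the tile size and overlap would be set by the memory limits of the detection algorithm.

```python
import numpy as np

def tile_image(image: np.ndarray, tile: int, overlap: int = 0):
    """Yield (row, col, chunk) pieces of at most tile x tile pixels.

    (row, col) is the top-left offset of each chunk in the full scene, so the
    per-tile results can later be mosaicked back together.
    """
    if not 0 <= overlap < tile:
        raise ValueError("require 0 <= overlap < tile")
    step = tile - overlap
    h, w = image.shape[:2]
    for r in range(0, max(h - overlap, 1), step):
        for c in range(0, max(w - overlap, 1), step):
            yield r, c, image[r:r + tile, c:c + tile]

def downsample_mean(image: np.ndarray, factor: int) -> np.ndarray:
    """Downsample by averaging non-overlapping factor x factor blocks.

    Rows and columns that do not fill a whole block are discarded.
    """
    h, w = image.shape[:2]
    h2, w2 = h // factor, w // factor
    blocks = image[:h2 * factor, :w2 * factor].reshape(
        h2, factor, w2, factor, *image.shape[2:])
    return blocks.mean(axis=(1, 3))


scene = np.random.default_rng(0).random((300, 400, 4))  # stand-in 4-band scene
chunks = list(tile_image(scene, tile=128, overlap=16))
coarse = downsample_mean(scene, 4)                        # shape (75, 100, 4)
```

The overlap lets boundaries that cross tile edges be seen whole in at least one tile, at the cost of some duplicated computation.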

Table 3 lists some common general preprocessing methods.

Table 3.

Introduction to universal preprocessing methods.

In addition to these common general preprocessing methods, there are some less common methods that fall between general and targeted preprocessing. Such methods mainly serve more specific algorithm requirements but retain some generality. Examples include color space transformation [65,68], projection transformation [55], feature extraction [69], anisotropic diffusion [35,38], automatic rotation [70], superpixel clustering [56,68], and resampling [33]. We do not describe these methods in detail in this article.
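Anisotropic diffusion, one of the methods just listed, can be sketched with the classic Perona-Malik scheme. This is a minimal illustration under stated assumptions: a single-band image, four-neighbour differences, the exponential conductance function, and hypothetical default values for `kappa` (the edge-strength scale) and the time step. It is not the specific implementation used in [35,38].

```python
import numpy as np

def perona_malik(image, n_iter=10, kappa=20.0, step=0.2):
    """Perona-Malik anisotropic diffusion of a 2-D single-band image.

    Homogeneous areas are smoothed, while the conductance
    g(d) = exp(-(d / kappa)^2) becomes tiny across strong gradients,
    so field edges are largely preserved.
    """
    u = np.asarray(image, dtype=float).copy()
    for _ in range(n_iter):
        p = np.pad(u, 1, mode="edge")  # replicated border => no flux out of the image
        # differences to the four neighbours: north, south, east, west
        dn = p[:-2, 1:-1] - u
        ds = p[2:, 1:-1] - u
        de = p[1:-1, 2:] - u
        dw = p[1:-1, :-2] - u
        flux = sum(np.exp(-(d / kappa) ** 2) * d for d in (dn, ds, de, dw))
        u += step * flux
    return u
```

The time step is kept at or below 0.25, the usual stability limit for this explicit four-neighbour scheme; larger `kappa` values smooth more aggressively across weaker boundaries.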

Although most preprocessing methods do not play a decisive role in the extraction results, suitable preprocessing methods can improve the accuracy of the detection algorithm to a certain extent and reduce the processing time.

2.3. Postprocessing

Postprocessing involves processing and organizing the results of the detection algorithm and optimizing the output form so that it meets the needs of the user. Postprocessing generally does not affect extraction accuracy. According to the specific algorithms and requirements it serves, postprocessing can be divided into algorithm-oriented and demand-oriented postprocessing. Common demand-oriented postprocessing includes merging the outputs of the detection algorithm back to the original image dimensions [7], removing non-field objects [64], and removing noise [44,64].
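Noise removal on a binary boundary or field mask is often done by discarding small connected components. The sketch below assumes a 2-D boolean mask and 4-connectivity; `remove_small_regions` and its `min_size` parameter are hypothetical names, and the threshold would have to be tuned to the image resolution and typical field size.

```python
from collections import deque

import numpy as np

def remove_small_regions(mask, min_size):
    """Return a copy of `mask` without 4-connected components smaller than min_size."""
    mask = np.asarray(mask, dtype=bool)
    out = mask.copy()
    seen = np.zeros_like(mask)
    h, w = mask.shape
    for r0 in range(h):
        for c0 in range(w):
            if not mask[r0, c0] or seen[r0, c0]:
                continue
            # breadth-first search collects one connected component
            component, queue = [], deque([(r0, c0)])
            seen[r0, c0] = True
            while queue:
                r, c = queue.popleft()
                component.append((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < h and 0 <= cc < w and mask[rr, cc] and not seen[rr, cc]:
                        seen[rr, cc] = True
                        queue.append((rr, cc))
            if len(component) < min_size:
                rows, cols = zip(*component)
                out[list(rows), list(cols)] = False
    return out
```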

We present some of the more common requirements and the corresponding postprocessing methods in Table 4.

Table 4.

Common requirements and corresponding postprocessing methods.

2.4. Evaluation

The evaluation step allows researchers to analyze the preceding steps and the final extraction results, identify the advantages and shortcomings of a method, and clarify directions for improvement. Because detection algorithms are diverse, evaluation indicators are also diverse. Early studies mainly used qualitative evaluations, which make accurate assessment and comparison difficult. As the field has developed, many quantitative evaluations have emerged; however, unified quantitative standards are still lacking. We discuss quantitative evaluation systems in this section.

Before evaluating the extraction results, the research team needs to obtain reference boundary information, which is taken as correct and compared with the predicted values (i.e., the field boundary information obtained by automated boundary extraction technology). Obtaining reference boundaries through field investigation is a traditional but accurate method [10], and a field investigation of the target plots can also reveal details such as crop conditions and bare soil [8], which helps in analyzing and improving the research; however, this method is time-consuming and costly. Direct manual annotation of images is a more common practice [57,64] that achieves satisfactory accuracy while remaining efficient. Another way to reduce the workload is to use data from other scholars' work [37,43] or from national databases [5,47,48] as the reference. However, data from other researchers or organizations may not match a particular experiment, and their accuracy is beyond the investigator's control. Therefore, manually annotating the correct field boundaries in the imagery may be the more appropriate choice.

Evaluation can generally be divided into two categories: (1) evaluation of core competencies; (2) evaluation of related attributes. Evaluation of core competencies concerns extraction accuracy, the most important indicator of an algorithm's extraction performance, and it can be subdivided into three systems: (1) systems based on whether pixels on the boundary line are classified correctly or falsely; (2) systems based on the pixel deviation of the extracted boundary line; (3) systems based on the overall classification accuracy. Evaluation of related attributes further assesses the practicability and generalization of the algorithm, covering algorithm complexity, operating efficiency, stability, robustness, completeness, extraction quality, etc.
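The first two systems are often implemented by matching extracted and reference boundary pixels within a distance tolerance. The sketch below shows one common formulation, not a standard adopted across the literature: a predicted boundary pixel counts as correct if a reference boundary pixel lies within `tolerance` pixels (square window), which yields precision (correctness) and recall (completeness), combined into an F-score. The function names and the square-window tolerance are assumptions.

```python
import numpy as np

def dilate(mask, radius):
    """Binary dilation with a (2*radius+1) x (2*radius+1) square window."""
    h, w = mask.shape
    p = np.pad(mask, radius, mode="constant", constant_values=False)
    out = np.zeros_like(mask, dtype=bool)
    for dr in range(2 * radius + 1):
        for dc in range(2 * radius + 1):
            out |= p[dr:dr + h, dc:dc + w]
    return out

def boundary_scores(pred, ref, tolerance=1):
    """Tolerance-based boundary precision, recall, and F-score for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    ref = np.asarray(ref, dtype=bool)
    # predicted pixels with a reference pixel nearby, and vice versa
    tp_pred = np.count_nonzero(pred & dilate(ref, tolerance))
    tp_ref = np.count_nonzero(ref & dilate(pred, tolerance))
    precision = tp_pred / max(np.count_nonzero(pred), 1)
    recall = tp_ref / max(np.count_nonzero(ref), 1)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

A boundary shifted by one pixel scores perfectly at `tolerance=1` and zero at `tolerance=0`, which is why the chosen tolerance must be reported alongside such scores.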

We present some of the commonly used quantitative evaluations of core competencies in Table 5 and evaluations of related attributes in Table 6.

Table 5.

Evaluations of core competencies.

Table 6.

Evaluations of related attributes.

Existing quantitative evaluations focus on core performance, such as the accuracy of the algorithm. Core performance is indeed the focus of boundary extraction algorithms; however, as accuracy improves, boundary extraction algorithms will gradually move toward practical application, and evaluating the related attributes can help researchers judge how practical an algorithm is.

In addition to the common quantitative evaluation systems above, there are many other types of evaluation systems, such as visual interpretation [34,38,53,74], rarely used evaluation indices [10,41,64], self-created evaluation indices [33,35,36,71], and the boundary object evaluation system. Visual interpretation is a subjective evaluation system that often appeared in the early literature but is no longer popular. Rarely used indices are those that have not been widely accepted and adopted by other researchers. Self-created indices are designed for specific experiments; they suit the experiment at hand but make comparison with similar experiments difficult. The boundary object evaluation system is formulated according to the attributes of the various boundary objects. Because the evaluation ideas for boundary object extraction differ from those for the first three types of algorithms (low-level feature extraction, high-level feature extraction, and visual hierarchy extraction algorithms), and because evaluation systems for boundary object extraction are rare, we do not discuss the boundary object evaluation system further.

3. Detection Algorithms

There are many kinds of automatic agricultural field boundary extraction algorithms based on remote sensing images, but no review has yet covered them. Because boundary extraction from agricultural remote sensing images can be regarded as a kind of image segmentation, and many reviews exist in the field of image segmentation, we classify the algorithms with reference to existing image segmentation reviews.

Early reviews of image segmentation commonly classified algorithms according to their specific principles. In their 1981 review, K. S. Fu and J. K. Mui [23] divided image segmentation algorithms into three categories: characteristic feature thresholding or clustering, edge detection, and region extraction. In 1994, Koschan A. [20] divided them into four categories, namely pixel-based, region-based, edge-based, and physics-based, and further into 51 subcategories. In 2001, Cheng, H. D., Jiang, X. H., Sun, Y., et al. [21] added algorithms based on fuzzy-set theory and on artificial neural networks as major categories, for a total of six categories. As the number of algorithms grew, classification by specific principles produced increasingly complex results, so other classification criteria emerged. By the smallest image unit used, algorithms can be divided into pixel-based and object-oriented algorithms [16,19]. By driving type, they can be divided into knowledge-driven and data-driven algorithms [15]. By whether they identify the boundary directly or grow the recognition from the inside of a region outward, they can be divided into edge-based and region-based algorithms [14].

Based on which image information each algorithm takes into account, in this paper we divide the algorithms into four types: low-level feature extraction algorithms, which consider only boundary features; high-level feature extraction algorithms, which consider boundary and internal information simultaneously; visual hierarchy extraction algorithms, which simulate biological vision systems; and boundary object extraction algorithms, which extract boundary objects that can be regarded as boundary lines. Table 7 summarizes the algorithm classes, their characteristics, and their information sources.

Table 7.

Classification of algorithms, corresponding characteristics, and sources of information.

3.1. Low-Level Feature Extraction Algorithms

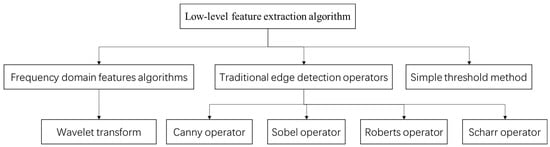

Low-level feature extraction algorithms perform boundary extraction in the narrow sense: they identify field boundaries using only the features that the boundaries themselves present in the image. They often extract field boundaries from the contrast between neighborhoods and include edge detection operators, frequency domain feature algorithms, and other algorithms. Such algorithms are simple and clear, computationally efficient, and undemanding of computing power. They can accurately recognize and extract boundaries in field images of simple flat areas, and they perform well on low-resolution images. We present the classification of low-level feature extraction algorithms in Figure 3.

Figure 3.

Classification of low-level feature extraction algorithms.

3.1.1. Traditional Edge Detection Operators

Image segmentation algorithms based on traditional edge detection operators locate edges in local areas by using differential operators to find where the gray value changes abruptly. The underlying principle is that pixel properties change abruptly at edges, so the abrupt change marks the edge [75]; it can be detected by differentiating the gray levels of the image, e.g., as a peak of the first derivative or a zero crossing of the second derivative. For this reason, traditional edge detection operators are also called gradient operators. These algorithms are easy to implement, can accurately reflect field contours [42], and are effective for extracting typical simple linear features [76]; however, they are overly sensitive to detail, yield poorly connected edges, perform poorly in images with complex background textures, and are susceptible to noise. As a result, field edges are difficult to locate and some edge information is missed, so these algorithms often require postprocessing.
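The first-derivative principle can be illustrated with the Sobel operator, one of the classic gradient operators. This NumPy-only sketch assumes a single-band image, replicates border pixels, and uses a hypothetical fixed magnitude threshold; a practical pipeline would add smoothing, thinning, and postprocessing.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)  # d/dx
SOBEL_Y = SOBEL_X.T                                                    # d/dy

def filter3x3(image, kernel):
    """Correlate a 2-D image with a 3x3 kernel, replicating border pixels."""
    p = np.pad(np.asarray(image, dtype=float), 1, mode="edge")
    h, w = np.asarray(image).shape
    out = np.zeros((h, w))
    for i in range(3):
        for j in range(3):
            out += kernel[i, j] * p[i:i + h, j:j + w]
    return out

def sobel_edges(image, threshold):
    """Gradient magnitude and a binary edge map (magnitude > threshold)."""
    gx = filter3x3(image, SOBEL_X)
    gy = filter3x3(image, SOBEL_Y)
    magnitude = np.hypot(gx, gy)
    return magnitude, magnitude > threshold
```

On a vertical step between two fields, the response is two pixels wide, which illustrates why gradient output usually needs thinning or other postprocessing before it can serve as a boundary line.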

Used alone, simple traditional edge detection operators do not give ideal results. Chen Yizhe et al. applied the Roberts and Sobel operators and found that, although they detected plot edges well, they produced a large amount of noise that was not easily removed by filtering [74]. With appropriate improvements, however, edge detection operators can improve the recognition quality of field boundaries. A. Rydberg et al. proposed a multispectral edge detection algorithm [49]. Barry Watkins et al. applied the Canny and Scharr operators to four bands (R, G, B, and NIR) on seven acquisition dates and summed the resulting 28 edge images into a composite multitemporal edge layer [64]. Yu Wang et al. combined an anisotropic diffusion scale space (ADSS) with the Canny edge detector: in the preprocessing step, the traditional Gaussian scale space was replaced with the ADSS to better retain local characteristics. They then used multiscale segmentation for farmland boundary detection, in which the coarse scale suppresses false edges and the fine scale provides spatially accurate results [38]. Pang Xinhua et al. added an edge closure step based on mathematical morphology after Canny edge detection [40]. Qualitative evaluations show that these improved edge detection algorithms perform better on field imagery of flat areas, and under ideal conditions, their boundary extraction accuracy is high. The algorithm proposed by Ruoxian Li et al. first uses edge operators for edge enhancement, then applies fixed-threshold segmentation combined with a hole-removal-based segmentation algorithm for region segmentation, and finally performs information extraction and vectorization; it achieves an average accuracy of 94.2% [66].
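The multitemporal composite idea attributed to Watkins et al. [64] can be sketched as summing binary edge maps over every date and band. As a simplifying assumption, the sketch substitutes a thresholded central-difference gradient for the Canny and Scharr operators used in that work, and the array layout `(dates, bands, rows, cols)` is hypothetical.

```python
import numpy as np

def composite_edge_layer(stack, threshold):
    """Count, per pixel, how many date/band images flag it as an edge.

    stack: array of shape (n_dates, n_bands, rows, cols).
    Returns a (rows, cols) integer layer with values 0..n_dates * n_bands.
    """
    stack = np.asarray(stack, dtype=float)
    # central-difference gradients along the two spatial axes of every image
    gy, gx = np.gradient(stack, axis=(-2, -1))
    edges = np.hypot(gx, gy) > threshold
    return edges.sum(axis=(0, 1))
```

With seven dates and four bands, each pixel of the layer holds a count from 0 to 28; pixels detected consistently across dates and bands are plausibly more stable field boundaries than features that appear only once.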

3.1.2. Frequency Domain Characterization Algorithm

Frequency domain feature algorithms mainly use the wavelet transform and similar transforms to identify regular patterns in the image and extract boundary information from them. The principle is that pattern changes in the image follow certain regularities, so frequency domain transforms can decompose the image information into components of different frequencies. Different types of filters are then used to retain the information of interest and remove the rest.
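The decompose-then-filter principle can be illustrated with the Fourier transform, the simplest frequency domain decomposition. The sketch assumes an ideal (sharp cutoff) high-pass filter and a hypothetical `cutoff` in cycles per pixel; it removes slowly varying components, such as gradual illumination changes, and keeps the high-frequency content where boundaries lie.

```python
import numpy as np

def fft_highpass(image, cutoff):
    """Zero all spatial frequencies below `cutoff` (cycles/pixel) and invert."""
    img = np.asarray(image, dtype=float)
    spectrum = np.fft.fft2(img)
    fy = np.fft.fftfreq(img.shape[0])[:, None]  # vertical frequencies
    fx = np.fft.fftfreq(img.shape[1])[None, :]  # horizontal frequencies
    keep = np.hypot(fx, fy) >= cutoff           # ideal high-pass mask
    return np.real(np.fft.ifft2(spectrum * keep))
```

An ideal cutoff causes ringing near sharp edges, so smoother transfer functions (e.g., Gaussian) are usually preferred in practice.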

The wavelet transform is a common edge detection method [18]. Wavelet analysis evolved from Fourier analysis; it offers multiresolution analysis and good approximation properties for one-dimensional signals [77]. Isida et al. studied rice field edge identification based on a multiresolution wavelet transform algorithm and found that it is better suited to SAR remote sensing images than difference-of-Gaussian (DoG) filters [44]. C. Y. Ji studied the processing of multitemporal remote sensing images based on the wavelet transform and achieved some success in field boundary extraction [50].
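A single level of the 2-D Haar wavelet transform, the simplest wavelet, shows how an image is split into an approximation band and three detail bands whose large coefficients mark intensity changes. This is an illustrative sketch with orthonormal scaling, not the multiresolution algorithms used in [44,50]; it assumes even image dimensions.

```python
import numpy as np

def haar_level1(image):
    """One level of the orthonormal 2-D Haar transform.

    Returns (approx, d_rows, d_cols, d_diag), each half the input size:
    d_rows responds to changes between rows (horizontal edges),
    d_cols to changes between columns (vertical edges),
    d_diag to diagonal structure.
    """
    x = np.asarray(image, dtype=float)
    if x.shape[0] % 2 or x.shape[1] % 2:
        raise ValueError("height and width must be even")
    a, b = x[0::2, 0::2], x[0::2, 1::2]  # top-left, top-right of each 2x2 block
    c, d = x[1::2, 0::2], x[1::2, 1::2]  # bottom-left, bottom-right
    approx = (a + b + c + d) / 2
    d_rows = (a + b - c - d) / 2
    d_cols = (a - b + c - d) / 2
    d_diag = (a - b - c + d) / 2
    return approx, d_rows, d_cols, d_diag
```

Because this decimated transform works on non-overlapping 2 x 2 blocks, an edge that falls exactly on a block border produces no detail response at this level; shift-invariant (undecimated) variants avoid such gaps.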

3.1.3. Simple Threshold Method

The threshold method is one of the oldest edge detection methods. Its basic idea is straightforward: pixels or objects are classified as belonging to an edge or not by comparing them with a threshold. The threshold method can be combined with a variety of other algorithms. Chen Yizhe et al. identified the edges of farmland plots with an adaptive threshold method and found that, although it is inferior to differential operators in boundary recognition, it has better noise immunity [74]. When grayscale ranges overlap or grayscale differences in the image are not obvious, the threshold method struggles to produce ideal results; thus, it cannot be applied directly to high-resolution images with large data volumes and high spatial variability.
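Adaptive (local) thresholding can be sketched by comparing each pixel with the mean of its neighbourhood, computed efficiently with an integral image. This is one common variant, assumed here for illustration; the window `radius` and `offset` are hypothetical parameters, and [74] does not necessarily use this exact rule.

```python
import numpy as np

def local_mean(image, radius):
    """Mean over a (2*radius+1)^2 window, computed with an integral image."""
    img = np.asarray(image, dtype=float)
    h, w = img.shape
    x = np.pad(img, radius, mode="edge")
    # s[i, j] = sum of x[:i, :j]
    s = np.pad(x.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    k = 2 * radius + 1
    total = s[k:k + h, k:k + w] - s[:h, k:k + w] - s[k:k + h, :w] + s[:h, :w]
    return total / (k * k)

def adaptive_threshold(image, radius=7, offset=0.0):
    """Foreground where a pixel exceeds its local mean by more than `offset`."""
    img = np.asarray(image, dtype=float)
    return img > local_mean(img, radius) + offset
```

Because the threshold follows the local mean, a gradual brightness trend across the scene does not by itself produce foreground pixels, which is the main advantage over a single global threshold.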

3.1.4. Summary

In general, low-level feature extraction algorithms perform satisfactorily on images of simple farmland areas. Rokgi Hong [72] recently developed a land boundary extraction algorithm that uses traditional Canny edge detection, the Hough transform, and the Suzuki85 contour algorithm; in a Korean experiment on regular field boundary extraction, the accuracy and completeness of boundary recognition were about 80%. Mustafa Turker [43] proposed adding a perceptual grouping step after the Canny operator to identify field block boundaries. The perceptual grouping applies the Gestalt laws of proximity, continuity, symmetry, and closure, and the author determined field sub-boundaries with the help of geometric shapes; the overall matching accuracies were 76.2% and 82.6% on SPOT4 and SPOT5 images, respectively. However, such algorithms are generally suitable only for regular, flat plots and are difficult to generalize to complex areas.
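The Hough transform mentioned above detects straight lines, which suits the regular fields on which these algorithms work best. The minimal voting sketch below assumes a binary edge map (e.g., Canny output), 1-degree angle bins, and integer rho bins; `hough_lines` is a hypothetical helper, not the implementation in [72].

```python
import numpy as np

def hough_lines(edges, n_theta=180, top_k=1):
    """Vote in (rho, theta) space for lines x*cos(theta) + y*sin(theta) = rho.

    Returns the top_k (rho, theta_in_degrees, votes) triples.
    """
    ys, xs = np.nonzero(edges)
    thetas = np.deg2rad(np.arange(n_theta) * 180.0 / n_theta)
    diag = int(np.ceil(np.hypot(*edges.shape)))  # largest possible |rho|
    acc = np.zeros((2 * diag + 1, n_theta), dtype=int)
    # rho of every edge pixel for every angle, rounded to integer bins
    rhos = np.round(np.outer(xs, np.cos(thetas)) + np.outer(ys, np.sin(thetas))).astype(int)
    cols = np.broadcast_to(np.arange(n_theta), rhos.shape)
    np.add.at(acc, (rhos + diag, cols), 1)  # unbuffered: repeated bins all count
    best = np.argsort(acc, axis=None)[::-1][:top_k]
    r_idx, t_idx = np.unravel_index(best, acc.shape)
    return [(int(r - diag), float(np.degrees(thetas[t])), int(acc[r, t]))
            for r, t in zip(r_idx, t_idx)]
```

Each peak in the accumulator corresponds to one straight boundary segment; irregular or curved field edges spread their votes across many bins, which is one reason such pipelines generalize poorly to complex areas.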

3.2. High-Level Feature Extraction Algorithms

High-level feature extraction algorithms use both the features of the farmland boundary and the other features contained in the imagery, making full use of both, especially the latter. These other features, such as spectral, spatial, textural, and topological information, make up for the shortcomings of low-level feature extraction algorithms, which rely on edge information alone. As a result, some high-level feature extraction algorithms can produce fully closed field boundaries rather than fragmented and meaningless ones. In other words, unlike low-level algorithms, high-level algorithms let information from the surroundings of an edge line, not just from the edge itself, influence the edge detection result.

Modern high-level feature extraction algorithms mostly classify image objects, or primitives, rather than pixels [17], which overcomes the “salt and pepper effect” to which traditional pixel-based classification algorithms are prone in high-resolution images [71]. Because local spatial heterogeneity is higher in high-resolution imagery, pixels of the same land cover type are more likely to be assigned to different classes, producing speckle noise known as “salt and pepper noise”. High-level feature extraction algorithms are one of the mainstream technologies for extraction from high-resolution images [14,78]. The principle is to build image objects from the similarity between adjacent cells, for example by merging and splitting cells, comparing spectral heterogeneity against a set threshold, and finally forming target objects composed of multiple homogeneous pixels [62]. These algorithms can detect and extract farmland boundaries in images of complex areas, such as mountainous areas and paddy fields.
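The merging of similar adjacent cells can be illustrated with seeded region growing, one of the simplest region-based techniques. The sketch assumes a single- or multiband image, 4-connectivity, and a homogeneity criterion based on the Euclidean spectral distance to the running region mean with a hypothetical threshold `max_diff`. Object-based tools such as multiresolution segmentation use more elaborate heterogeneity criteria that typically combine spectral and shape terms.

```python
from collections import deque

import numpy as np

def region_grow(image, seed, max_diff):
    """Grow a region from `seed` (row, col) over 4-connected neighbours whose
    spectral distance to the current region mean is at most `max_diff`."""
    img = np.asarray(image, dtype=float)
    if img.ndim == 2:
        img = img[..., None]  # treat a single-band image as one band
    h, w, _ = img.shape
    region = np.zeros((h, w), dtype=bool)
    region[seed] = True
    total, count = img[seed].copy(), 1  # running sum and size for the mean
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for rr, cc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= rr < h and 0 <= cc < w and not region[rr, cc]:
                if np.linalg.norm(img[rr, cc] - total / count) <= max_diff:
                    region[rr, cc] = True
                    total += img[rr, cc]
                    count += 1
                    queue.append((rr, cc))
    return region
```

Growing one region per seed and repeating until every pixel is assigned yields a complete partition into closed objects, whose outlines are the candidate field boundaries.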

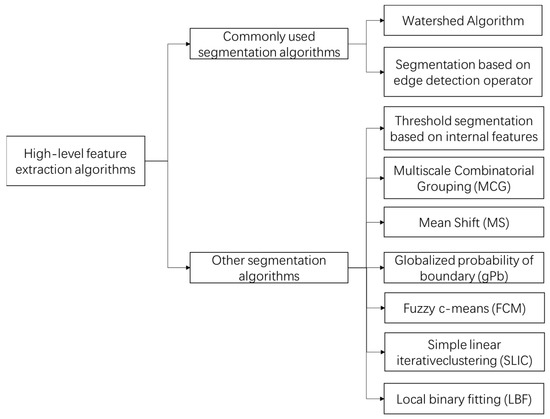

The first step, image segmentation, is the most critical. Various methods can be used to determine the optimal segmentation scale, and choosing it well allows the field boundary to be extracted more accurately [4]. The optimal segmentation scale depends mainly on the type of feature, the contrast between the feature and its surroundings, the internal heterogeneity of the feature, and the image resolution [4,79]. Figure 4 classifies the detection algorithms by their segmentation methods.

Figure 4.

Classification of high-level feature extraction algorithms.

3.2.1. Segmentation Based on Edge Detection Operators

Segmentation based on edge detection operators can achieve extremely high boundary extraction accuracy, with boundaries located at the pixel level. Combining it with region segmentation yields a better regional structure and, at the same time, overcomes the missing and hard-to-close boundaries of traditional edge detection. As early as 1995, Janssen et al. developed a three-stage strategy to extract field sub-boundaries and crop types: it first integrates the edge detection results with the geometric data in a GIS, then performs object-based classification, and finally merges objects according to specified conditions. The strategy achieved a field geometry consistency of 87% [80]. Marina Mueller et al. built an edge-guided region growing algorithm on top of an edge detector, which closes gaps within edges and effectively identifies large field boundaries [53]. Using a segmentation algorithm that combines gradient edge detectors with unsupervised iterative self-organizing data analysis (ISODATA) classification, Rydberg et al. obtained highly consistent plot boundaries, with 83% of the boundary lying within one pixel of the reference [4]. Zhang Yanling et al. first segmented a remote sensing image with mathematical morphological operators, then used seeded region growing to extract complete farmland parcels, and finally applied a morphological opening to smooth the parcels before tracking and refining the boundary to obtain the complete farmland boundary [34]. Liao Dan used the Sobel operator for multiscale segmentation and, based on the normalized difference vegetation index (NDVI), spectral, textural, and other information, identified the field boundaries of paddy and dry fields in plain, hilly, and mountainous areas. The field boundary recognition rate was about 95% in the plain and hilly areas and 85% in the mountainous areas [55].
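To make the idea of edge-guided region growing concrete, here is a minimal sketch under stated assumptions: a single-band image, a precomputed binary edge map, 4-connectivity, and a hypothetical spectral-difference threshold `max_diff`. It is not Mueller et al.'s algorithm; it only illustrates how detected edge pixels can stop a region from leaking across a weak spectral contrast.

```python
from collections import deque

import numpy as np


def grow_region(image, edges, seed, max_diff):
    """Grow a 4-connected region from `seed`.

    A neighbour is added only if it is not an edge pixel and its value is
    within `max_diff` of the current region mean.
    """
    h, w = image.shape
    region = np.zeros((h, w), dtype=bool)
    region[seed] = True
    total, count = float(image[seed]), 1
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if not (0 <= nr < h and 0 <= nc < w):
                continue
            if region[nr, nc] or edges[nr, nc]:
                continue  # already grown, or blocked by a detected edge
            if abs(image[nr, nc] - total / count) <= max_diff:
                region[nr, nc] = True
                total += float(image[nr, nc])
                count += 1
                queue.append((nr, nc))
    return region


# Two adjacent fields with similar spectra (100 vs 104); the edge detector
# has marked column 2 as a boundary.
image = np.full((5, 5), 100.0)
image[:, 2] = 102.0
image[:, 3:] = 104.0
edges = np.zeros((5, 5), dtype=bool)
edges[:, 2] = True

field = grow_region(image, edges, seed=(2, 0), max_diff=10.0)
print(field.astype(int))
```

Without the edge map, the same threshold would let the region spread into the neighbouring field, because the spectral difference between the fields is well below `max_diff`.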

In 2016, Yan et al. improved a complex crop field edge recognition algorithm that had been developed over 14 years and applied throughout the United States; it achieved a per-pixel overall farmland classification accuracy of 92.7%, with overall farmland producer's and user's accuracies of 93.7% and 94.9%, respectively [47]. The algorithm consists of five steps: (1) generation of crop field edge linearity and edge significance maps; (2) application of a variational region-based geometric active contour segmentation algorithm to the probability map to obtain candidate field objects; (3) application of a watershed algorithm to decompose connected candidate field objects into isolated fields; (4) use of geometry-based algorithms to detect and associate the fields; (5) application of two-pixel dilation and one-pixel erosion morphological filters to the fields. However, because the algorithm is designed for remote sensing images with a resolution of 30 m, its recognition accuracy still needs to be improved.
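Step (5) can be sketched with plain NumPy. We assume a 3×3 square structuring element and that dilation is applied before erosion (the source does not state the order); the helper names are hypothetical. The toy example shows how the filter fills a one-pixel hole inside a field mask.

```python
import numpy as np


def _neighbourhood_stack(mask):
    """Return the nine 3x3-shifted copies of `mask` (outside pixels = False)."""
    h, w = mask.shape
    padded = np.pad(mask, 1, constant_values=False)
    return [padded[1 + dr:1 + dr + h, 1 + dc:1 + dc + w]
            for dr in (-1, 0, 1) for dc in (-1, 0, 1)]


def dilate(mask, pixels):
    """Binary dilation by `pixels` steps of a 3x3 square."""
    for _ in range(pixels):
        mask = np.logical_or.reduce(_neighbourhood_stack(mask))
    return mask


def erode(mask, pixels):
    """Binary erosion by `pixels` steps of a 3x3 square."""
    for _ in range(pixels):
        mask = np.logical_and.reduce(_neighbourhood_stack(mask))
    return mask


def close_field_mask(mask):
    """Two-pixel dilation followed by one-pixel erosion (assumed order)."""
    return erode(dilate(mask, 2), 1)


field = np.zeros((9, 9), dtype=bool)
field[2:7, 2:7] = True
field[4, 4] = False  # a one-pixel hole, e.g. from a noisy classification

smoothed = close_field_mask(field)
print(smoothed.astype(int))
```

Because the net effect is one pixel of expansion, small holes and narrow gaps close while the field grows slightly.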

3.2.2. Watershed Algorithm

The watershed algorithm is a mathematical morphology segmentation algorithm based on topology theory [81]. It simulates a flooding process: the gray value of each pixel is treated as the altitude of that point, water rises from each local minimum, and the lines where water from different minima meets form the watersheds. The watershed algorithm can localize boundaries to a single pixel; however, it is prone to over-segmentation. Because the pure watershed algorithm first identifies the field boundary and then creates the object regions, we treat it in this paper as an edge-based algorithm; however, in later stages, such as merging over-segmented regions, it acts as a region-based algorithm. Whether it should be classed as edge-based or region-based is still debated [14,24,82,83,84,85]. Nevertheless, algorithms based on mathematical morphology generally perform better than traditional edge detection algorithms, and the boundary recognition results of watershed algorithms are also better than those of traditional differential edge detection algorithms [86].
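The flooding idea can be illustrated with a minimal marker-controlled watershed written as a priority-queue flood, a common way of implementing Meyer's flooding algorithm. Assumptions: the "elevation" is a gradient-like image in which field boundaries are ridges, markers are positive integer labels, and 4-connectivity is used. No explicit watershed line is drawn, so the boundary is where two labels meet. Starting from markers rather than from every local minimum is one standard way to limit over-segmentation.

```python
import heapq

import numpy as np


def marker_watershed(elevation, markers):
    """Flood `elevation` from labelled `markers` (0 = unlabelled), 4-connected.

    Pixels are visited in order of increasing elevation; each unlabelled pixel
    takes the label of the first flooded neighbour that reaches it.
    """
    labels = markers.copy()
    h, w = elevation.shape
    heap, order = [], 0  # `order` breaks ties so equal elevations pop FIFO
    for r, c in zip(*np.nonzero(markers)):
        heapq.heappush(heap, (elevation[r, c], order, r, c))
        order += 1
    while heap:
        _, _, r, c = heapq.heappop(heap)
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and labels[nr, nc] == 0:
                labels[nr, nc] = labels[r, c]
                heapq.heappush(heap, (elevation[nr, nc], order, nr, nc))
                order += 1
    return labels


# Gradient-like image of two fields separated by a ridge (the boundary) in column 3.
elevation = np.tile(np.array([0, 1, 2, 9, 2, 1, 0]), (3, 1))
markers = np.zeros_like(elevation)
markers[1, 0] = 1  # seed inside the left field
markers[1, 6] = 2  # seed inside the right field

labels = marker_watershed(elevation, markers)
print(labels)
```

The ridge column is absorbed by whichever basin reaches it first; the change of label across it marks the field boundary.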

In 2004, Butenuth et al. used a watershed-based algorithm that employed line detection algorithms and prior geographic information system (GIS) information to extract field boundaries [25]. In 2010, Deren Li et al. developed a watershed algorithm with edge-embedded markers, in which edge information detected by an edge detector with embedded confidence helps detect weakly bounded objects and improves the accuracy of object boundary positions. The algorithm achieved a pixel-level edge accuracy of about 77.6%, indicating good performance [36]. In 2014, Chen Tieqiao first applied watershed segmentation and then a field clustering algorithm; the extraction accuracy of fields in hilly areas reached 73%, which was better than that obtained with mean-shift segmentation [39]. More recently, Watkins et al. found that the watershed algorithm produced better segmentation results than multithreshold and multiresolution segmentation algorithms; the overall accuracy (OA) of multitemporal segmentation combining the watershed algorithm with the Canny operator was 92.9% [64].

3.2.3. Other Segmentation Algorithms

In addition to the above two types of algorithms, there are other algorithms, which are presented in Table 8.

Table 8.

Names, introductions, and performances of segmentation algorithms.

3.2.4. Summary

High-level feature extraction algorithms are diverse and popular in farmland boundary extraction; among them, segmentation algorithms combined with edge detection operators and watershed segmentation algorithms are the most popular. High-level feature extraction algorithms show good extraction performance and can be applied to a variety of terrains. However, many of them require complex parameter selection, their optimal parameters differ between target regions, their transferability is poor, and their recognition accuracy in extremely complex regions is still unsatisfactory. Only a few algorithms are currently used in place of manual visual interpretation.

3.3. The Visual Hierarchy Extraction Algorithm

High-level feature boundary extraction algorithms have high recognition accuracy and some practical value; however, they still have many shortcomings. For example, they struggle to extract boundaries from complex images and to generalize across different images. Extracting contours from complex natural scenes remains difficult for existing computer vision systems, whereas the human visual system can complete this task quickly and accurately [90]. Boundary detection algorithms based on visual principles therefore offer a potential route to a breakthrough in contour extraction.

Following the principle of visual receptive fields, these boundary detection algorithms combine the boundary information and the interior information of objects within receptive fields at different scales to interpret visual cues, which gives them certain advantages in generalizing boundary detection to complex scenes. For example, Yang K. F. et al. proposed a DoG (difference of Gaussians) model derived from the classical and nonclassical receptive fields of visual neurons [90]. George Azzopardi et al. [91] proposed the combination of receptive fields (CORF) model, and other scholars have studied the neural coding of the visual system with the help of convolutional neural network models [92]. In farmland boundary extraction, convolutional networks are so far the only visual hierarchy extraction algorithms in use.
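A difference-of-Gaussians response models a center–surround receptive field: a narrow excitatory Gaussian minus a wider inhibitory one. The sketch below assumes illustrative widths (σ = 1 and σ = 2 pixels) and edge-replicated borders; it shows only the classical DoG operator, not the full model of Yang et al. [90].

```python
import numpy as np


def gaussian_kernel(sigma):
    """Normalised 1-D Gaussian truncated at 3 sigma."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2.0 * sigma**2))
    return kernel / kernel.sum()


def gaussian_blur(image, sigma):
    """Separable Gaussian smoothing with edge-replicated borders."""
    kernel = gaussian_kernel(sigma)
    pad = len(kernel) // 2
    padded = np.pad(image.astype(float), pad, mode="edge")
    smooth_rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, smooth_rows, kernel, mode="valid")


def difference_of_gaussians(image, sigma_center=1.0, sigma_surround=2.0):
    """Center-surround response: narrow excitatory minus wide inhibitory Gaussian."""
    return gaussian_blur(image, sigma_center) - gaussian_blur(image, sigma_surround)


# Step edge between a dark field (columns 0-9) and a bright field (columns 10-19).
image = np.zeros((8, 20))
image[:, 10:] = 1.0
response = difference_of_gaussians(image)
print(np.round(response[0], 3))
```

The response is close to zero inside homogeneous fields and changes sign across the boundary, which is why DoG-like units respond to edges.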

A convolutional network is a multilayer feedforward neural network model with powerful feature learning and representation capabilities; it can be used alone or combined with other methods for field boundary recognition and shows good potential. A fully convolutional network (FCN) consists of several processing layers, including convolution, pooling, dropout, batch normalization, and nonlinearities, which are trained end-to-end to extract semantic information. Khairiya Mudrik Masoud et al. used a multiple dilation FCN (MD-FCN) to predict field boundaries pixel by pixel in 10 m resolution remote sensing imagery. On five TSs (tiles for testing the network), the average precision was 0.66, the average recall was 0.61, and the average F-score was 0.63. Their proposed super-resolution contour detection network (SRC-Net) can increase the image resolution from 10 m to 5 m and reduce the classification effect [3]. Combining convolutional networks with other algorithms can yield high accuracy and robustness. Persello et al. used an FCN to generate fragmented contours, then applied the OWT-UCM procedure to obtain a hierarchical segmentation, and finally used the SCG algorithm to merge regions at different levels; the gPb contour algorithm can also be incorporated into the process when necessary. The accuracies of the algorithm on three experimental images, with a tolerance of 10 pixels around the field boundary, were 0.72, 0.706, and 0.790 [5].
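Two quantities in this paragraph can be checked with a few lines of arithmetic. Dilated convolutions, on which multiple-dilation FCNs rely, enlarge the receptive field without adding parameters; for a stack of stride-1 layers the receptive field is 1 + Σ(k − 1)·d. The dilation rates below are hypothetical, not those of MD-FCN. The F-score is the harmonic mean of precision and recall; applying it to the reported averages gives a value consistent with the reported 0.63, although an average of per-tile F-scores need not exactly equal the F-score of averaged precision and recall.

```python
def receptive_field(kernel_sizes, dilations):
    """Receptive field (pixels) of stacked stride-1 convolutions."""
    rf = 1
    for k, d in zip(kernel_sizes, dilations):
        rf += (k - 1) * d
    return rf


def f_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Three 3x3 layers: ordinary vs. hypothetical increasing dilation rates.
print(receptive_field([3, 3, 3], [1, 1, 1]))  # 7
print(receptive_field([3, 3, 3], [1, 2, 4]))  # 15
# Reported averages from [3]: precision 0.66, recall 0.61.
print(round(f_score(0.66, 0.61), 3))  # 0.634
```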

In addition to FCNs, convolutional neural networks (CNNs) can also be used to extract farmland boundaries. Xirong Li et al. proposed a deep boundary combination (DBC) algorithm. They first used a deep CNN to obtain an edge probability map of the farmland image, then used the OWT-UCM algorithm to convert the edge probability map into a hierarchical tree of closed boundaries, and finally selected an appropriate threshold (k) to cut the tree into a segmentation. In their experiments, the algorithm effectively extracted field boundaries, and the correctness and completeness of the farmland boundaries detected in the two test images were both about 90%. The correctness, completeness, and quality of the algorithm were higher than those of a Canny-based recognition algorithm, and its correctness and quality were higher than those of an MCG-based algorithm [59].
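Cutting an ultrametric contour map (UCM) at a threshold k can be sketched as follows. We assume the UCM is stored as a pixel grid of boundary strengths (real OWT-UCM output lives on a finer contour grid) and that pixels on boundaries with strength at or above k stay unlabelled; the function name is hypothetical. Lowering k keeps weaker boundaries and therefore yields more, smaller regions.

```python
from collections import deque

import numpy as np


def regions_from_ucm(ucm, k):
    """Label 4-connected regions of pixels whose boundary strength is below k.

    Pixels with strength >= k are treated as boundary and keep label 0.
    Returns (labels, number_of_regions).
    """
    interior = ucm < k
    labels = np.zeros(ucm.shape, dtype=int)
    h, w = ucm.shape
    n = 0
    for r in range(h):
        for c in range(w):
            if not interior[r, c] or labels[r, c]:
                continue
            n += 1  # start a new region and flood-fill it
            labels[r, c] = n
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w and interior[ny, nx] and not labels[ny, nx]:
                        labels[ny, nx] = n
                        queue.append((ny, nx))
    return labels, n


# Toy UCM: a strong boundary (0.8) in column 3 and a weak one (0.3) across the left field.
ucm = np.zeros((5, 7))
ucm[:, 3] = 0.8
ucm[2, :3] = 0.3

for k in (0.5, 0.2):
    _, n_regions = regions_from_ucm(ucm, k)
    print(k, n_regions)
```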

Although convolutional neural network algorithms have produced good recognition results, several aspects hinder their wider adoption: training a well-performing model often takes a lot of time and computing power, and training requires large labeled datasets of cultivated land samples, which are difficult to obtain in a short time. In addition, convolutional neural network models are usually poorly interpretable because they act as black boxes, which makes it difficult to interpret the learned features semantically.

At present, remote sensing field boundary extraction algorithms based on neural networks are still somewhat immature, and the accuracy and applicability of existing models need to be improved. In fact, many high-level feature extraction algorithms outperform visual hierarchy extraction algorithms in accuracy. Low-level feature extraction algorithms have no obvious accuracy advantage over visual hierarchy extraction algorithms, but their computational complexity is lower. Nevertheless, with the continuous development of artificial intelligence technology, this field deserves more in-depth exploration and is likely to develop substantially [16].

3.4. Boundary Object Extraction Algorithm

In mainstream work, the farmland boundary is defined as the line separating one farmland plot from another, or a farmland plot from a non-farmland plot [3]. However, images also contain field boundary objects, such as footpaths, roads, and ditches, which provides another way of identifying boundaries. In the field boundary object extraction method, the target of identification and extraction is the physical field boundary object rather than the traditional agricultural farmland boundary (AFB) line. Field boundary objects may also help identify and extract field boundaries: Song Jiantao and Chen Jie both point out that using the roads or footpaths between plots can improve the accuracy of farmland extraction [35].

At present, there is little research in this area because it requires higher-resolution images, generally with submeter pixels. For example, a field footpath is only 2–4 pixels wide at a resolution of 0.3 m [6], and 0.3 m images were extremely rare before 2015. Apart from roads, field boundary objects have weak linear characteristics and appear against complex natural backgrounds, so automatic extraction is difficult and demanding. Field boundary objects may also serve other needs with even higher requirements, such as field road extraction for autonomous driving. We now briefly introduce the research status of identifying and extracting common field boundary objects, such as footpaths, roads, and ditches.

Footpaths are extracted better in regular plots in flat areas than in irregular or uneven areas. Zhang Mingjie studied both the texture and the edge characteristics of footpaths. For edge features, Zhang Mingjie proposed an algorithm that first applies Canny edge detection, then connects broken edges at breakpoints in postprocessing, and finally extracts footpaths with a high length threshold; the overall footpath extraction quality reached 96%. For texture features, Zhang Mingjie manually selected seed points, constructed a directional texture template, calculated the feature values, and then searched for and fitted candidate footpath points; the footpath recognition accuracy reached 98% [6]. Cai Daoqing et al. applied a support vector machine to images captured by ground-based equipment to recognize paddy field areas, achieving an F1 score of 90.1% [68].
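The edge-based footpath pipeline can be sketched in simplified form. This assumes a binary edge map (for example, Canny output) is already available, replaces the breakpoint connection step with a hypothetical one-pixel dilation that bridges single-pixel gaps, and approximates edge length by the number of edge pixels in each bridged component. The value of `min_length` is illustrative, not Zhang Mingjie's setting.

```python
from collections import deque

import numpy as np


def dilate3x3(mask):
    """One-pixel binary dilation with a 3x3 square (used here to bridge gaps)."""
    h, w = mask.shape
    padded = np.pad(mask, 1, constant_values=False)
    return np.logical_or.reduce(
        [padded[1 + dr:1 + dr + h, 1 + dc:1 + dc + w] for dr in (-1, 0, 1) for dc in (-1, 0, 1)]
    )


def label8(mask):
    """8-connected component labelling; returns (labels, count)."""
    labels = np.zeros(mask.shape, dtype=int)
    h, w = mask.shape
    n = 0
    for r, c in zip(*np.nonzero(mask)):
        if labels[r, c]:
            continue
        n += 1
        labels[r, c] = n
        queue = deque([(r, c)])
        while queue:
            y, x = queue.popleft()
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not labels[ny, nx]:
                        labels[ny, nx] = n
                        queue.append((ny, nx))
    return labels, n


def keep_long_edges(edges, min_length):
    """Keep original edge pixels whose bridged component has >= min_length pixels."""
    labels, n = label8(dilate3x3(edges))
    keep = np.zeros_like(edges)
    for i in range(1, n + 1):
        component = (labels == i) & edges
        if component.sum() >= min_length:
            keep |= component
    return keep


edges = np.zeros((10, 12), dtype=bool)
edges[5, 0:5] = True   # footpath edge, first piece
edges[5, 6:11] = True  # second piece after a one-pixel break at column 5
edges[1, 2:4] = True   # short noise edge

footpaths = keep_long_edges(edges, min_length=8)
print(footpaths.astype(int))
```

Without bridging, each footpath piece would have only five pixels and would be discarded by the same length threshold.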

In terms of Tiankan extraction (Tiankan is a Chinese term for a raised ridge in the field, higher than the field surface, that is mainly used for demarcation, water storage, and as a walkway; the geometric, textural, and grayscale features of Tiankans and footpaths are relatively similar), a Tiankan can be approximated as a footpath wider than 1 m, according to China’s “Technical regulation of the third nationwide land survey”. Because Tiankans are wider than field footpaths, they are less difficult to identify, and the relevant research is more in-depth; however, Tiankans are still more difficult to extract than roads [93]. Tang Lei segmented Gaofen-2 (GF-2) satellite images through multiscale layering and then extracted Tiankans with four texture indexes: angular second moment, entropy, contrast, and correlation. The Tiankan extraction accuracy was 68.83%, and the kappa coefficient was 0.61 [51]. Yang Yunhui compared the maximum likelihood method, support vector machine, and unsupervised classification with a rule-based object-oriented method and found the last to be the best, with a Tiankan extraction accuracy of 86.42% [60]. Using an object-oriented algorithm, Liu Changjuan et al. identified Tiankans in mountainous and hilly areas based on spectral, geometric, and textural features, and the coincidence rate between the measured and actual results was 94.74% [45]. Wang et al. used a similar algorithm to extract terrace Tiankans, and the overlap rates for QuickBird images (resolution: 0.6 m) and SPOT5 images (resolution: 2.5 m) were 92.33% and 88.03%, respectively [41]. So far, however, manual delineation remains the most accurate method. Zhao Yandong et al. manually outlined Tiankans on high-resolution images (predicted values) and compared them with field measurements (actual values), obtaining a coefficient of determination (R²) of 0.977 [10].
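The four texture indexes used by Tang Lei are standard gray-level co-occurrence matrix (GLCM) statistics. The sketch below computes them in NumPy for a single horizontal offset with a symmetric, normalized GLCM and natural-log entropy; these settings and the toy stripe patches are assumptions for illustration only. Stripes running across the offset direction give high contrast and negative correlation, whereas stripes along it give zero contrast and positive correlation.

```python
import numpy as np


def glcm(image, levels, offset=(0, 1)):
    """Symmetric, normalised gray-level co-occurrence matrix for one pixel offset."""
    dr, dc = offset
    h, w = image.shape
    P = np.zeros((levels, levels))
    for r in range(max(0, -dr), min(h, h - dr)):
        for c in range(max(0, -dc), min(w, w - dc)):
            i, j = image[r, c], image[r + dr, c + dc]
            P[i, j] += 1
            P[j, i] += 1  # count the pair in both directions (symmetric GLCM)
    return P / P.sum()


def texture_features(P):
    """Angular second moment, entropy (natural log), contrast, and correlation."""
    i, j = np.indices(P.shape)
    nonzero = P[P > 0]
    mu_i, mu_j = (i * P).sum(), (j * P).sum()
    sd_i = np.sqrt(((i - mu_i) ** 2 * P).sum())
    sd_j = np.sqrt(((j - mu_j) ** 2 * P).sum())
    return {
        "asm": (P ** 2).sum(),
        "entropy": -(nonzero * np.log(nonzero)).sum(),
        "contrast": ((i - j) ** 2 * P).sum(),
        # Undefined (division by zero) for a perfectly uniform patch.
        "correlation": ((i - mu_i) * (j - mu_j) * P).sum() / (sd_i * sd_j),
    }


# Toy 4-level patches: stripes across vs. along the horizontal offset.
across = np.tile(np.array([0, 3, 0, 3]), (4, 1))         # vertical stripes
along = np.tile(np.array([[0], [3], [0], [3]]), (1, 4))  # horizontal stripes

for name, patch in (("across", across), ("along", along)):
    features = texture_features(glcm(patch, levels=4))
    print(name, {key: round(float(value), 3) for key, value in features.items()})
```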

For field road recognition, most research targets the automation of agricultural machinery, so the images are ground-based, and good recognition results have been achieved [65,94,95,96]. Few studies use remote sensing images as the image source; however, some researchers (e.g., Ren et al. [52]) have achieved good results in identifying field roads from GF-2 remote sensing images, with a recognition rate of 90%.

Many studies have addressed ditch identification; however, mainstream research has focused on larger river channels rather than on the “hairy canals” (very small ditches) in farmland. Water-filled ditches differ substantially from the surrounding plots, which facilitates their extraction. However, hairy canals are often temporarily dry and largely covered by plants and weeds, which results in unsatisfactory recognition accuracy. Han Wenting et al. used an object-oriented method to extract a field canal system, with an overall extraction accuracy of 77.8% [69]. Temesgen Gashaw et al. used the Feature Analyst software for identification, which allows users to specify the width, linear feature shape attributes, input reflectance, and discrete, texture, and elevation bands; the identification results contained a substantial number of false positives, and the classification accuracy for plots with many ditches was only 59% [97]. Ayana E. K. used four steps (image segmentation, morphological processing, binarization, and the Hough transform) to detect field trenches, obtaining an overall classification accuracy of 87.6% [98].
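The final Hough transform step in Ayana's pipeline can be illustrated with a minimal line-Hough accumulator. We assume a binary mask of candidate ditch pixels (the output of the earlier steps), the normal parameterization ρ = x·cos θ + y·sin θ, and 1° angular steps; straight ditches then appear as peaks in the (ρ, θ) accumulator.

```python
import numpy as np


def hough_lines(mask, n_theta=180):
    """Vote in (rho, theta) space for every foreground pixel of a binary mask.

    Lines are parameterised as rho = x*cos(theta) + y*sin(theta), with theta in
    [0, pi) and rho rounded to whole pixels.
    """
    h, w = mask.shape
    diag = int(np.ceil(np.hypot(h, w)))
    thetas = np.linspace(0.0, np.pi, n_theta, endpoint=False)
    rhos = np.arange(-diag, diag + 1)
    accumulator = np.zeros((len(rhos), n_theta), dtype=int)
    ys, xs = np.nonzero(mask)
    for t, theta in enumerate(thetas):
        rho = np.round(xs * np.cos(theta) + ys * np.sin(theta)).astype(int)
        np.add.at(accumulator, (rho + diag, t), 1)  # offset so negative rho fits
    return accumulator, rhos, thetas


# Binary mask with a straight ditch along column x = 7.
mask = np.zeros((20, 20), dtype=bool)
mask[:, 7] = True

acc, rhos, thetas = hough_lines(mask)
row = int(np.flatnonzero(rhos == 7)[0])
print("max votes:", acc.max(), "| votes at rho=7, theta=0:", acc[row, 0])
```

On short lines, neighbouring angles can receive as many or nearly as many votes as the true line, so practical detectors usually add peak suppression before reading off (ρ, θ).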

Because boundary objects are varied and differ substantially between types, related studies are hard to survey systematically and difficult to compare directly. Table 9 summarizes the above findings.

Table 9.

Categories, numbers, performances, and the representative literature of boundary object extraction algorithms.

Algorithms that identify boundary objects may generalize better than mainstream boundary extraction algorithms. The extraction performance of mainstream boundary extraction algorithms depends on the crop species, which hinders their transfer to other crops. Many crops are grown over areas too small to justify developing targeted extraction algorithms, and many differ considerably in their traits from common research subjects such as rice, wheat, and corn, so existing algorithms may produce unsatisfactory results for them. Extraction algorithms based on boundary objects, by contrast, are mainly affected by the boundary objects themselves, such as field ridges and roads. If different crops share the same kinds of field ridges and roads, the extraction performance of such an algorithm will not be affected by the crop.

We use chrysanthemum boundaries as an example to illustrate the generalization advantage of boundary object extraction. Chrysanthemums have important ornamental, medicinal [99], and cultural [100] value. There are many chrysanthemum varieties, more than 3000 in China alone [101], and several of the more important ones (e.g., Hang Bai chrysanthemum) are planted over areas of 1000 km² [102]. For planting areas of this size, manual boundary marking is far less efficient than automated boundary extraction, so there is demand for the latter. However, there are no reports on automatic boundary extraction for chrysanthemum fields, because an area of 1000 km² does not justify developing a dedicated boundary extraction algorithm. Moreover, chrysanthemums differ considerably from crops such as rice, wheat, and corn, so algorithms developed for those crops are unlikely to work well for chrysanthemums. The boundary object extraction method, however, is mainly affected by the type of boundary object rather than by the crop, and the boundary objects of chrysanthemum fields are mainly footpaths and roads, which are ideal targets for this method. Using it to extract chrysanthemum field boundaries is therefore promising.

3.5. Summary of Extraction Algorithms

Each of the four types of automated boundary extraction algorithms has its own characteristics. Low-level feature boundary extraction algorithms extract boundaries from the linear features of object boundaries in the image; they are the basic technology of boundary extraction and can effectively extract field boundaries from images of flat, regular areas. High-level feature boundary extraction algorithms fuse the interior information of image objects so that the extracted boundary lines are complete and closed, which is of practical importance. The interior information of objects also helps locate their boundaries, so these algorithms can process images of more complex terrain, such as terraces. Boundary detection algorithms based on visual principles extract boundaries from visual features and are expected to achieve breakthroughs in recognition accuracy in complex areas and in generalization across regions. Boundary object extraction algorithms represent a new idea in field boundary extraction and have substantial potential for generalization.

We present the extraction results of some of the algorithms, which will help readers to understand their accuracies, in Table 10.

Table 10.

Research, categories, introductions, evaluation indicators, and performances of boundary extraction algorithms.

Because the image sources, plot types, geographical environments, crop types, and growth stages differ, the specific experimental evaluation criteria are inconsistent, and the algorithms cannot simply be ranked by their reported results. In general, manual annotation is much more accurate than automated extraction, and high-level algorithms do not show a clear accuracy advantage over low-level algorithms. This is because low-level algorithms are typically applied to more regular and flat fields, which are easier to process. Moreover, early low-level algorithms lacked systematic quantitative evaluation standards, so the low-level feature extraction algorithms that do report quantitative evaluations tend to be mature, later-stage algorithms. In contrast, even early high-level feature extraction algorithms reported quantitative evaluations, and their accuracies were low. As a result, high-level feature extraction algorithms may appear to have no significant accuracy advantage over low-level ones. However, high-level algorithms are the mainstream technology and have an overwhelming advantage over low-level algorithms in processing high-resolution images and images of complex terrain, as studies comparing different algorithms have confirmed [59,64].

In terms of use, low-level feature extraction algorithms have the advantages of being relatively simple, mature, easy to understand, and computationally efficient. They work well for images with good contrast between the object and the background and can preserve correspondence with object edges. However, they perform poorly on images with smooth transitions, low contrast, and high noise levels, and they are difficult to apply to high-resolution imagery and imagery of complex areas.

Compared with low-level feature extraction algorithms, high-level feature extraction algorithms are generally insensitive to noise and can use boundary information and object interior information simultaneously; they can therefore be applied to higher-resolution images and often achieve better accuracy. However, the effectiveness of many of these algorithms depends on well-chosen parameters, which non-specialists may find difficult to set. They are also more complex and time-consuming, with higher computational costs.

Biological visual hierarchy extraction algorithms generalize better, which makes them suitable for more complex terrain. Their development potential cannot be ignored; however, the features and rules they learn are difficult to interpret. In addition, different image objects, such as water and shadows, sometimes have similar spectral values, which causes “semantic gap” problems. The most critical drawback is that biological visual hierarchy extraction algorithms require large datasets and heavy computation, as well as constant parameter tuning.

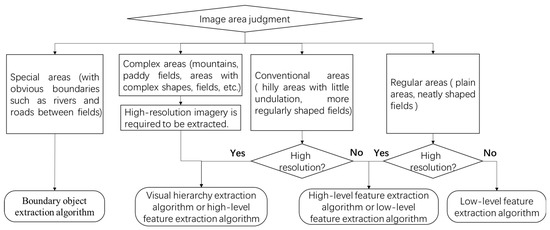

Because terrains, resolutions, and requirements differ from image to image, there is currently no general-purpose algorithm to recommend [18]. To help readers select an appropriate algorithm category according to image content and resolution, Figure 5 presents a reference flowchart for algorithm category selection. When selecting an algorithm, readers should consider not only its accuracy but also its computational complexity and other factors.

Figure 5.

Flowchart of algorithm selection.

For readers who have direct application needs and do not want to design their own algorithms, only a few software applications or products are currently available. Table 11 lists some of the algorithms that can be used directly.

Table 11.

Introduction to available algorithms (software).

4. Influencing Factors

The factors influencing farmland boundary extraction can be divided into technical and natural factors. The technical factors fall into two categories, images and algorithms; they are designed and adjustable by humans, and they are the focus of current research. Natural factors, including crops, climate, and topography, also affect the extraction results. Researchers study natural factors less than technical factors because natural factors are usually fixed by the choice of study area and often cannot be selected at all. However, the influence of natural factors also needs to be studied, because such research helps evaluate the implementation difficulty and expected results of boundary extraction projects and supports targeted algorithm design. In this section, we analyze how technical and natural factors influence the extraction results, so that users with boundary extraction needs can reasonably obtain or purchase images, design or select algorithms, and assess in advance the difficulties that natural factors may pose.

4.1. Technical Factors

4.1.1. Remote Sensing Imagery

Several image properties affect the extraction results; in general, these include the image resolution, the image bands, and the use of multitemporal imagery. Of these, image resolution has the most critical impact. Higher resolution benefits both algorithm design and extraction: it not only locates the identified boundary more precisely but also allows subfields (small plots inside large complete fields) to be identified [80]. In addition, higher resolution has, to some extent, relaxed the pixel-level accuracy requirements for extraction [37]. For example, a boundary buffer of 2 m (i.e., the allowable horizontal offset between the boundary extracted by the algorithm and the true boundary) [73] corresponds to ten pixels at a resolution of 0.2 m but only four pixels at a resolution of 0.5 m; at higher resolution, the algorithm is therefore allowed a larger offset in pixels.
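The buffer arithmetic, and one way such a buffer can be used to score extracted boundaries, can be sketched as follows. The tolerance-based precision and recall here are a common formulation assumed for illustration (the evaluation in [73] may differ), and the brute-force distance computation is only suitable for small toy masks.

```python
import numpy as np


def buffer_in_pixels(buffer_m, resolution_m):
    """Convert a boundary buffer from metres to pixels."""
    return buffer_m / resolution_m


def tolerant_precision_recall(pred, ref, tol_px):
    """Share of predicted / reference boundary pixels within tol_px of the other set."""
    p = np.argwhere(pred).astype(float)
    r = np.argwhere(ref).astype(float)
    dist = np.sqrt(((p[:, None, :] - r[None, :, :]) ** 2).sum(axis=-1))  # |p| x |r|
    precision = float(np.mean(dist.min(axis=1) <= tol_px))
    recall = float(np.mean(dist.min(axis=0) <= tol_px))
    return precision, recall


print(buffer_in_pixels(2.0, 0.2), buffer_in_pixels(2.0, 0.5))

# Reference boundary in column 10; the extracted boundary is offset by 3 pixels.
ref = np.zeros((30, 30), dtype=bool)
ref[:, 10] = True
pred = np.zeros_like(ref)
pred[:, 13] = True

# The same 3-pixel error passes a 2 m buffer at 0.2 m or 0.5 m, but not at 1 m.
for resolution in (0.2, 0.5, 1.0):
    tol = buffer_in_pixels(2.0, resolution)
    print(resolution, tol, tolerant_precision_recall(pred, ref, tol))
```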

Besides image resolution, image bands and multitemporal images also affect extraction. All three factors involve tradeoffs in the choice of imaging platform and sensor.

Choice of Platform

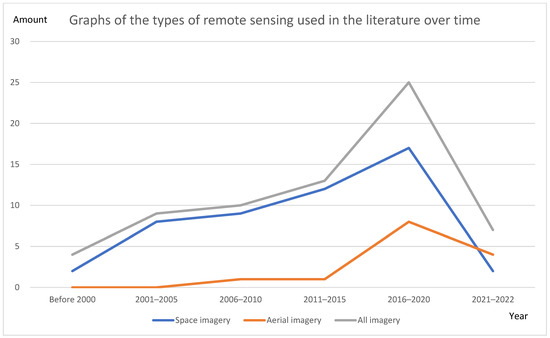

Early remote sensing images were mainly spaceborne images acquired from satellite platforms. Today, a growing number of aerial images are acquired from both UAV platforms and large aircraft platforms. Figure 6 shows how the numbers of the two types of remote sensing images used in the literature have changed over time (the last interval contains few images because each earlier interval spans five years, whereas the last covers only 2021 to the present). Since 2015, aerial imaging platforms have developed substantially, mainly owing to the rapidly growing popularity of drones. The two mainstream platforms available today are therefore satellites and unmanned aerial vehicles (UAVs).

Figure 6.

Graphs of the types of remote sensing used in the literature over time.

Because the image type is not stated in some studies, the number of aerial images plus the number of spaceborne images can be less than the total number of images.

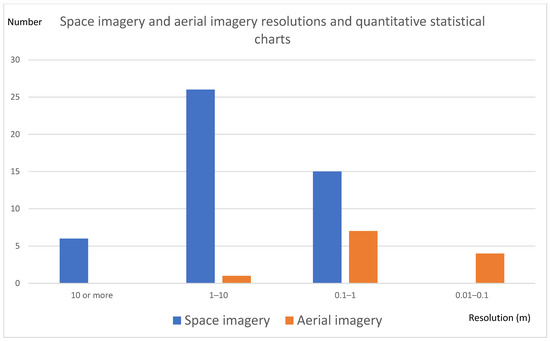

Compared with spaceborne imaging platforms, the most important advantage of aerial imaging platforms is resolution. Figure 7 shows the numbers of aerial and spaceborne images at different resolutions in the literature. Aerial images generally have decimeter-level resolution or finer, whereas only about one-third of spaceborne images reach submeter resolution.

Figure 7.

Space imagery and aerial imagery resolutions and quantitative statistical charts.

Drones are a fast, low-cost, and flexible platform for image acquisition, especially for high-resolution images. Users can also change the sensor and flight altitude to obtain the bands [33] and resolution [51,59] they need. The disadvantages of drone platforms include payload limitations, short flight times due to battery capacity, uncertain or restrictive airspace rules, and the time-consuming collection of large amounts of data. The first two drawbacks may be overcome as technology advances, and the third may be resolved as the relevant regulations improve. Overall, UAV platforms still have considerable development potential, especially for fast data capture and high accuracy. Drones are even thought to have the potential to revolutionize remote sensing and its applications, just as the advent of geographic information systems (GISs) did two decades ago [103].
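The relationship between flight altitude, sensor, and resolution follows the standard pinhole-camera ground sampling distance (GSD) formula, GSD = H·p / f, for a nadir-looking frame camera, where H is the flight altitude, p the pixel pitch, and f the focal length. The camera parameters below (2.4 µm pixel pitch, 8.8 mm focal length) are hypothetical values chosen for illustration.

```python
def ground_sampling_distance(altitude_m, pixel_pitch_um, focal_length_mm):
    """Metres on the ground covered by one pixel (nadir view, pinhole model)."""
    return altitude_m * (pixel_pitch_um * 1e-6) / (focal_length_mm * 1e-3)


def altitude_for_gsd(target_gsd_m, pixel_pitch_um, focal_length_mm):
    """Flight altitude (m) that yields the target GSD with the same camera."""
    return target_gsd_m * (focal_length_mm * 1e-3) / (pixel_pitch_um * 1e-6)


# Hypothetical camera: 2.4 um pixel pitch, 8.8 mm focal length.
print(round(ground_sampling_distance(100.0, 2.4, 8.8), 4))  # 0.0273 (m, at 100 m)
print(round(altitude_for_gsd(0.05, 2.4, 8.8), 1))           # 183.3 (m, for 5 cm GSD)
```

Because GSD scales linearly with altitude, halving the flight altitude halves the pixel size on the ground, which is why UAV users can tune resolution largely through flight planning.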

Satellites are a more traditional image acquisition platform. Although their image resolution is lower, satellites readily provide periodic image data with wide coverage, which allows researchers to develop multitemporal image algorithms [47] or to select the best images [48] for boundary extraction. In addition, acquiring satellite images does not require researchers to enter the target area, so satellite imagery is more convenient for remote study areas.

We present the main features of the two platforms in Table 12.

Table 12.

Features of satellite and UAV platforms.

Choice of Sensor

One effect of advances in sensor technology is increased image resolution. The higher the spatial resolution of the images, the more accurate the extraction results [41]. For drone platforms, however, the sensor matters less for image resolution, because resolution can also be controlled through the flight altitude.

Another effect of advances in sensor technology is that researchers can obtain image information in multiple bands. Early images often had only one band [70], whereas some researchers now use 12 bands of image information [3]. Different bands may affect the performance of an algorithm. For example, Liu Xin [63] found that, when the Canny edge operator is applied separately to the three RGB bands, the G band is more suitable for segmenting forest land and fields with crop cover, whereas the R band is more suitable for fields without crop cover. In addition to mainstream RGB visible-light images, many researchers have used other wavelengths, such as infrared [3,46,48]. Beyond their effect on the extraction results, different bands can also assist image preprocessing; for example, the blue band provides an additional criterion for aerosol detection [46].
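As one illustration of how an extra band can be used (an assumption for illustration, not the procedure of [3,46,48]), the widely used normalized difference vegetation index, NDVI = (NIR − Red)/(NIR + Red), combines the near-infrared and red bands so that crop-covered pixels stand out from bare soil. The pure-Python sketch below computes it per pixel; the band values are hypothetical.

```python
def ndvi(red, nir):
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red) for two equally sized 2D grids.

    Pixels where NIR + Red == 0 are set to 0.0 to avoid division by zero.
    """
    result = []
    for red_row, nir_row in zip(red, nir):
        row = []
        for r, n in zip(red_row, nir_row):
            total = n + r
            row.append((n - r) / total if total else 0.0)
        result.append(row)
    return result


if __name__ == "__main__":
    # Hypothetical digital numbers: a crop-covered field (left) next to bare soil (right).
    red = [[30, 30, 120, 120],
           [30, 30, 120, 120]]
    nir = [[170, 170, 150, 150],
           [170, 170, 150, 150]]
    for row in ndvi(red, nir):
        print([round(v, 2) for v in row])
```

The sharp drop in NDVI between the second and third columns marks the transition between the two fields, which a gradient operator could then pick up even where the contrast in a single visible band may be weak.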

Direct research on band selection in this area is lacking; however, multiband imagery tends to be advantageous over single-band imagery. For satellite platforms, the resolution of the sensor is also an important factor to consider.

4.1.2. Algorithms

The algorithm is the core factor affecting farmland boundary extraction, and the mechanisms by which it influences the extraction results are complex. In terms of extraction fineness, the algorithm needs to adapt to higher-resolution images. In terms of image complexity, the algorithm needs to capture features effectively. In terms of feasibility, the computational cost of the algorithm needs to remain within the available hardware and time budgets.

Adaptability to Image Resolution

High-resolution images often improve extraction accuracy, as we explained in Section 4.1.1. However, higher resolution is not always better: an excessively high resolution may render an algorithm unusable, because the performance of the same algorithm varies greatly across images of different resolutions. With the growing number of UAV platforms, available image resolutions now span from centimeters to tens of meters, and researchers can, in a sense, choose the image resolution freely. The inability of algorithms to adapt to resolution has therefore become a limiting factor in image selection.

For satellite remote sensing images at meter-level or coarser resolutions, pixel-based algorithms are sufficient to obtain good extraction results [43]. For higher-resolution images, however, the “salt and pepper” effect occurs [71]. In this case, object-based algorithms can replace pixel-based algorithms to fully exploit the information in submeter- and even decimeter-level images. Existing object-based algorithms are best suited to resolutions of 20 cm [33] or 30 cm [30], and no algorithmic approach has yet been matched to the centimeter-level images that drones can acquire. In short, the rule “the higher the resolution, the higher the recognition accuracy” does not hold at centimeter-level resolution.
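The difference between the two strategies can be sketched on a toy grid. In the following Python sketch, a pixel-based rule thresholds each pixel independently, so isolated noisy pixels (for example, a canopy gap or a weed patch) produce the salt-and-pepper pattern; an object-based rule instead assigns each pixel the majority label of the segment it belongs to. The segment map, threshold, and values are hypothetical stand-ins for the output of a real segmentation step, and majority voting is only one simple object-level rule, not the method of [30,33].

```python
from collections import Counter


def pixel_classify(image, threshold):
    """Pixel-based rule: label each pixel on its own (1 = crop, 0 = non-crop)."""
    return [[1 if v >= threshold else 0 for v in row] for row in image]


def object_classify(pixel_labels, segments):
    """Object-based rule: every pixel takes the majority label of its segment."""
    votes = {}
    for label_row, segment_row in zip(pixel_labels, segments):
        for label, segment in zip(label_row, segment_row):
            votes.setdefault(segment, Counter())[label] += 1
    majority = {seg: counts.most_common(1)[0][0] for seg, counts in votes.items()}
    return [[majority[seg] for seg in segment_row] for segment_row in segments]


if __name__ == "__main__":
    # Hypothetical vegetation scores: crop field on the left, bare field on the
    # right, with one noisy pixel in each field.
    image = [[0.8, 0.7, 0.2, 0.1],
             [0.9, 0.3, 0.2, 0.2],
             [0.8, 0.8, 0.7, 0.1],
             [0.7, 0.9, 0.2, 0.3]]
    # Hypothetical segmentation: segment 0 = left field, segment 1 = right field.
    segments = [[0, 0, 1, 1] for _ in range(4)]
    labels = pixel_classify(image, threshold=0.5)
    print("pixel-based: ", labels)
    print("object-based:", object_classify(labels, segments))
```

The object-based result can only be as good as the segmentation: if a segment straddles a real field boundary, the majority vote erases that boundary.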

Ability to Capture Complex Features

Algorithms perform extraction by mining various features in the image. For example, farmland boundaries have gradient characteristics and linear features. Traditional boundary detection operators can detect the gradient features, and frequency-domain operators can detect the linear features; the locations where these features are detected correspond to field boundaries. Each feature can be detected by multiple algorithms; for example, gradient features can be detected with the Canny and Sobel boundary extraction operators. Generally, the stronger an algorithm’s feature extraction ability and the wider the range of feature types it covers, the better it extracts the boundaries of complex fields. Table 13 lists some common features and the situations in which they are recommended.

Table 13.

Suggested scenarios and desired resolutions of features.
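The gradient feature discussed above can be illustrated with the Sobel operator. The pure-Python sketch below computes the Sobel gradient magnitude and thresholds it into a boundary mask. It covers only the gradient step: the full Canny detector additionally applies Gaussian smoothing, non-maximum suppression, and hysteresis thresholding. The threshold and the synthetic two-field image are hypothetical.

```python
import math

# 3x3 Sobel kernels for horizontal (x) and vertical (y) intensity changes.
SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def sobel_magnitude(image):
    """Sobel gradient magnitude of a 2D grid; border pixels are left at 0.0."""
    height, width = len(image), len(image[0])
    magnitude = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    value = image[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * value
                    gy += SOBEL_Y[dy][dx] * value
            magnitude[y][x] = math.hypot(gx, gy)
    return magnitude


def boundary_mask(image, threshold):
    """Mark pixels whose gradient magnitude reaches the threshold as boundary (1)."""
    return [[1 if m >= threshold else 0 for m in row]
            for row in sobel_magnitude(image)]


if __name__ == "__main__":
    # Hypothetical image: a dark field (10) adjoining a bright field (50).
    image = [[10, 10, 10, 50, 50, 50] for _ in range(5)]
    for row in boundary_mask(image, threshold=80):
        print(row)
```

The detected boundary here is two pixels wide, which is exactly what the non-maximum suppression step of Canny is designed to thin to a single pixel.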

Cost of Computation

Researchers discussed techniques for reducing the cost of computation in the early literature [70]. However, with the development of the hardware, most algorithms today are designed and selected without consideration of the computation cost. We present the calculation speeds of some of the algorithms in the literature in Table 14.

Table 14.

Calculation speeds of algorithms.

Overall, calculation speed increases with publication year, most likely because of hardware development. The memory used in the early literature was only 1 GB [70], whereas many recent studies have used 8 GB [67,68]. CPUs have evolved similarly [67,70]. Programming languages, however, have changed little: C [70] and C++ [43,68,74] are mainly used.

Hardware has improved to the point that computation costs need not be considered for regular-sized images or algorithms. Visual-feature-based approaches, however, require special attention to computation cost. For example, the MD-FCN method used by Khairiya Mudrik Masoud et al. requires 16 GB of random-access memory [3], and even with such hardware, processing time remains a major obstacle to the wider adoption of the method.
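Why large images strain memory can be checked with simple arithmetic: an uncompressed raster occupies width × height × bands × bytes per sample. The Python sketch below applies this to a hypothetical 10,000 × 10,000-pixel, 12-band scene stored as 32-bit floats, and to the same scene split into hypothetical 512 × 512 tiles, a common way of bounding memory use. The figures cover only the raw input array; intermediate results, such as network activations, need further memory on top of this.

```python
import math


def raster_bytes(width_px, height_px, bands, bytes_per_sample):
    """Uncompressed size of a raster in bytes."""
    return width_px * height_px * bands * bytes_per_sample


def tile_count(width_px, height_px, tile_px):
    """Number of square tiles needed to cover the raster (edge tiles may be partial)."""
    return math.ceil(width_px / tile_px) * math.ceil(height_px / tile_px)


def to_gib(n_bytes):
    """Convert bytes to gibibytes (2**30 bytes)."""
    return n_bytes / 2**30


if __name__ == "__main__":
    # Hypothetical scene: 10,000 x 10,000 pixels, 12 bands, float32 (4 bytes).
    scene = raster_bytes(10_000, 10_000, bands=12, bytes_per_sample=4)
    tile = raster_bytes(512, 512, bands=12, bytes_per_sample=4)
    print(f"whole scene: {to_gib(scene):.2f} GiB")
    print(f"one 512 x 512 tile: {tile / 2**20:.0f} MiB, "
          f"tiles needed: {tile_count(10_000, 10_000, 512)}")
```

The whole scene alone takes up a large share of an 8 GB machine, whereas a single tile is small; the trade-off of tiling is extra bookkeeping at tile edges, where field boundaries may be cut.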

4.2. Natural Influencing Factors

4.2.1. Crop Factors

Researchers discuss crop factors in terms of two aspects: crop type and crop growth state.

Regarding crop type, there are currently no studies that directly address its impact on boundary extraction, and it is debated whether this impact is negligible. Some scholars have pointed out that the crop type has little influence on boundary extraction [50]; however, others take the crop species into account when selecting images [63] and designing algorithms [37], and the crop species is often correlated with the local topography and climate. Differences in the images, algorithms, and evaluation methods between studies make it difficult to compare them with one another. However, by summarizing the research objects, we can roughly list the crops and the corresponding literature in Table 15. Each crop in the table appears in more than two relevant studies, which shows that boundary extraction is feasible for these crops, or at least that relevant references are available in the literature.

Table 15.

Crops and the literature.

In addition, sorghum [47], peanuts [64], pecans [64], licorice [47], sunflowers [46], grapes [70], and cotton [47] each appear once. In general, boundaries can be extracted for the most common crops, and we have not found any crop species for which boundary extraction is particularly difficult. The crop species may have a specific influence on an algorithm; however, it requires no special attention when performing boundary extraction.

The growth stage of the crop has a substantial and complex impact on the algorithm. For example, for a texture-feature-based algorithm [70], the texture features at the seedling stage are completely different from those at the mature stage, and the latter are more conducive to algorithm processing. From the perspective of the imagery, however, vigorously growing crops obscure field boundaries [55], which makes boundary extraction more difficult. Conversely, ideal extraction results cannot be obtained without vigorous crop growth either, because crops that do not completely cover the bare soil lead to missed separations [32], which is another difficulty of boundary extraction. Because different growth periods pose different difficulties, there is no uniform answer to the most suitable image acquisition time; it mainly depends on the algorithm used. A general response to this problem is to use multitemporal satellite imagery for repeated experiments. For drone imagery, it is recommended to acquire images when crops are growing vigorously but do not yet obscure the field boundaries [55].

4.2.2. Topographic Factors

Topographic factors are important influencing factors. Flat areas are more conducive to the automated boundary extraction of plots than non-flat areas [46,55]. Areas with a single terrain type are more conducive to automatic field boundary extraction than areas combining many different terrain types, and regularly shaped farmland is more conducive to boundary extraction than irregularly shaped farmland [66]. Boundary extraction is feasible for special farmland types, such as paddy fields [58], irrigated fields [64], terraces [36], and valleys [56]. Because variables such as algorithm type and image resolution cannot be held exactly constant across studies, the effects of these terrains are difficult to assess by comparing experiments by different researchers. A small number of studies focus specifically on terrain flatness; Table 16 compares their extraction results.

Table 16.

Studies focusing on the flatness of the terrain.

As Table 16 shows, topography has a large influence on the extraction results, and plains or flat areas are the most suitable for automated boundary extraction. High-level feature extraction algorithms are affected by terrain, whereas low-level feature extraction algorithms are less affected. In addition, Li R. et al. [66] found that regular farmland yields considerably better extraction results than irregular farmland. Therefore, the ideal topographic environment for boundary extraction is a regular field in a plain area.
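One hypothetical way to quantify field regularity (an assumption for illustration, not necessarily the measure used by Li R. et al. [66]) is the isoperimetric quotient 4πA/P², which compares a field polygon’s area A with its perimeter P: it equals 1 for a circle and π/4 ≈ 0.785 for a square, and decreases for elongated or indented shapes. The Python sketch below computes it from polygon vertices with the shoelace formula; the field outlines are hypothetical.

```python
import math


def polygon_area_perimeter(vertices):
    """Shoelace area and perimeter of a simple polygon given as [(x, y), ...]."""
    n = len(vertices)
    twice_area = 0.0
    perimeter = 0.0
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
        perimeter += math.hypot(x2 - x1, y2 - y1)
    return abs(twice_area) / 2.0, perimeter


def compactness(vertices):
    """Isoperimetric quotient 4*pi*A / P**2: 1 for a circle, pi/4 for a square."""
    area, perimeter = polygon_area_perimeter(vertices)
    return 4 * math.pi * area / perimeter ** 2


if __name__ == "__main__":
    # Hypothetical field outlines in metres.
    square_field = [(0, 0), (100, 0), (100, 100), (0, 100)]
    l_shaped_field = [(0, 0), (100, 0), (100, 30), (40, 30), (40, 100), (0, 100)]
    print(f"square:   {compactness(square_field):.3f}")
    print(f"L-shaped: {compactness(l_shaped_field):.3f}")
```

The lower score for the L-shaped field (about 0.46) than for the square (about 0.79) matches the intuitive notion of a less regular plot.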

4.2.3. Climatic Factors

Climatic conditions are an important influencing factor for both aerial and space imagery. Li R. et al. [66] demonstrated that boundary extraction is more challenging for images with cloud reflection than for conventional farmland images. Harsh weather can severely interfere with the imagery or even render it completely useless. Therefore, we recommend that researchers using satellite imagery obtain multitemporal images and select a surface image that is as free of clouds and pollution as possible [48]. In addition, multispectral sensors can detect aerosols, water vapor, cirrus clouds, and so on, which may help reduce the impact of harsh environments. In general, conditions with little cloud cover, sufficient light, and no air pollution are the most suitable.
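Selecting the clearest scene from a multitemporal series can be sketched as follows, assuming that a per-pixel cloud mask is already available for each acquisition (for example, derived from a quality band supplied with the imagery). The dates, masks, and the 10% cloud-cover limit in this Python sketch are hypothetical.

```python
def cloud_fraction(mask):
    """Fraction of pixels flagged as cloud (True) in a 2D boolean mask."""
    total = sum(len(row) for row in mask)
    cloudy = sum(sum(1 for flagged in row if flagged) for row in mask)
    return cloudy / total


def least_cloudy_scene(scenes, max_fraction=0.1):
    """Return the date whose mask has the lowest cloud fraction not above
    max_fraction, or None if every scene is too cloudy."""
    best_date, best_fraction = None, None
    for date, mask in scenes.items():
        fraction = cloud_fraction(mask)
        if fraction <= max_fraction and (best_fraction is None or fraction < best_fraction):
            best_date, best_fraction = date, fraction
    return best_date


if __name__ == "__main__":
    T, F = True, False
    # Hypothetical cloud masks for three acquisitions over the same fields.
    scenes = {
        "2021-05-03": [[T, T, F, F, F], [T, T, F, F, F]],  # 40% cloud
        "2021-06-12": [[F, F, F, F, F], [F, F, F, F, F]],  # cloud-free
        "2021-07-20": [[F, F, F, F, F], [F, F, F, F, T]],  # 10% cloud
    }
    print(least_cloudy_scene(scenes))
```

In practice, cloud shadows and haze would also need masking; this sketch only ranks scenes by the fraction of flagged pixels.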

5. Discussion

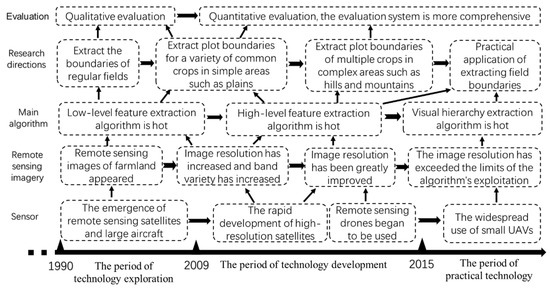

The development of boundary extraction technology based on remote sensing images can be divided into three stages: technology exploration, technology development, and practical application; the boundaries between the stages are blurred. In the exploration stage, constrained by the limited resolution of early sensors, researchers mainly studied low-level feature extraction algorithms and could only extract regular plots, and quantitative evaluation was largely lacking. In the development stage, new sensors, represented by high-resolution remote sensing satellites and UAVs, improved image resolution by nearly ten meters, and researchers began using images with different spectra and imaging principles in their experiments. This promoted algorithm development, and high-level feature extraction algorithms and comparative, quantitative evaluation systems emerged. In the practical application stage, further improvements in resolution and extraction accuracy, the emergence of application cases, and numerous related patent registrations [61,104] reflect the demand for practical deployment of the algorithms. During this stage, visual hierarchy extraction algorithms, which are more versatile and have potential for extracting complex areas, have become a new direction of algorithm development. Evaluation now covers not only extraction accuracy but also attributes relevant to practical requirements, such as running time and stability. Figure 8 shows the overall process.

Figure 8.

The development process of farmland boundary extraction technology.