The Prior Model-Guided Network for Bearing Surface Defect Detection

Abstract

1. Introduction

- (1)

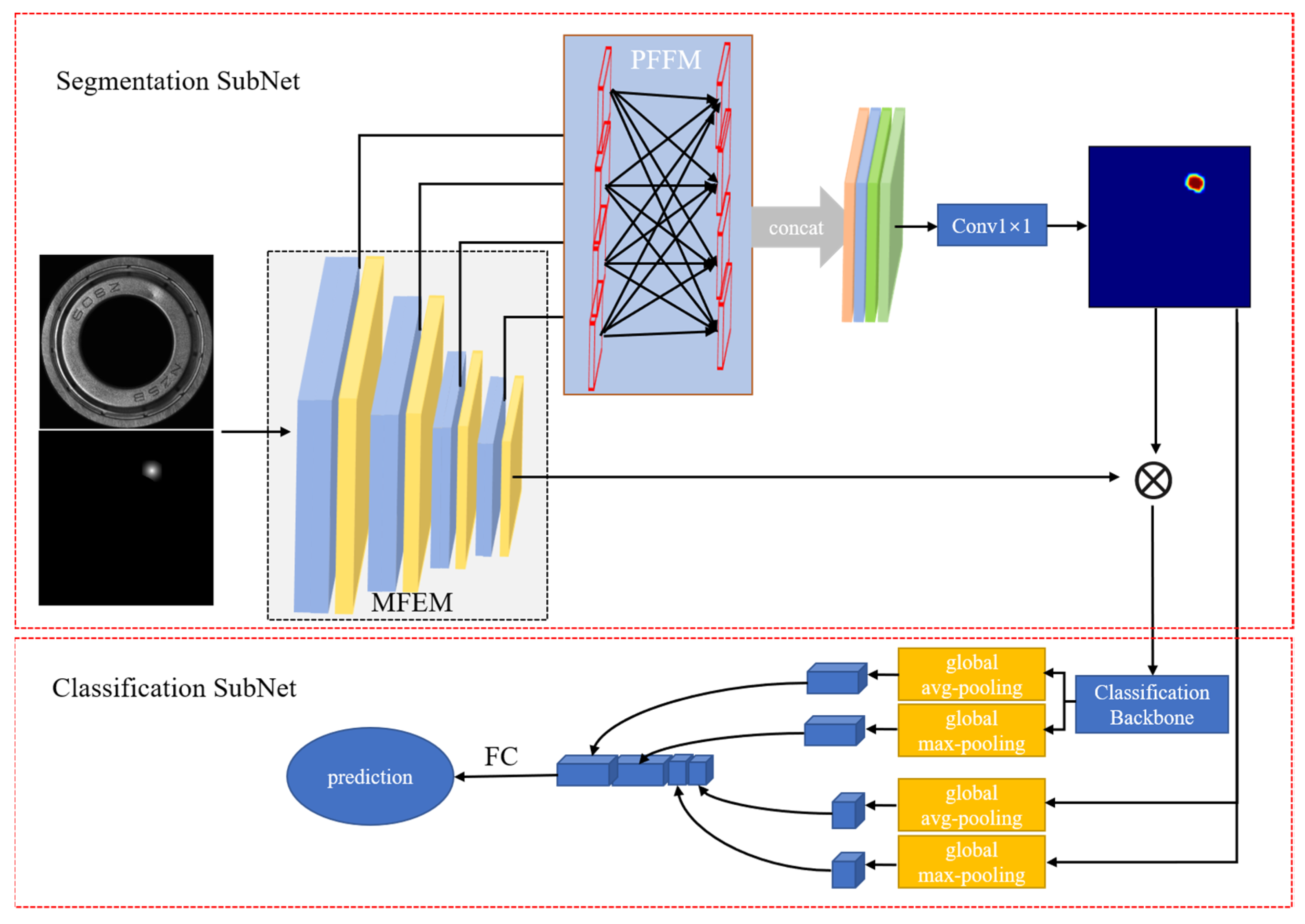

- A prior model-guided network (PMG-Net) was proposed for defect detection, which uses the segmentation network as the prior model to guide the classification network to better learn the features of objects. And a pyramid feature fusion module is introduced in the segmentation network to fully combine context information. This improves the classification accuracy of the proposed method.

- (2)

- To verify the performance of the proposed method, we established a large-scale dataset of pit defects on the bearing cover surfaces in real industrial scenarios for deep learning.

- (3)

- The center distance transformation formula acted as the weight label was introduced to the segmentation network to reduce the label cost.

2. Related Works

2.1. Traditional Method

2.2. Deep Learning

3. Methods

3.1. Overview

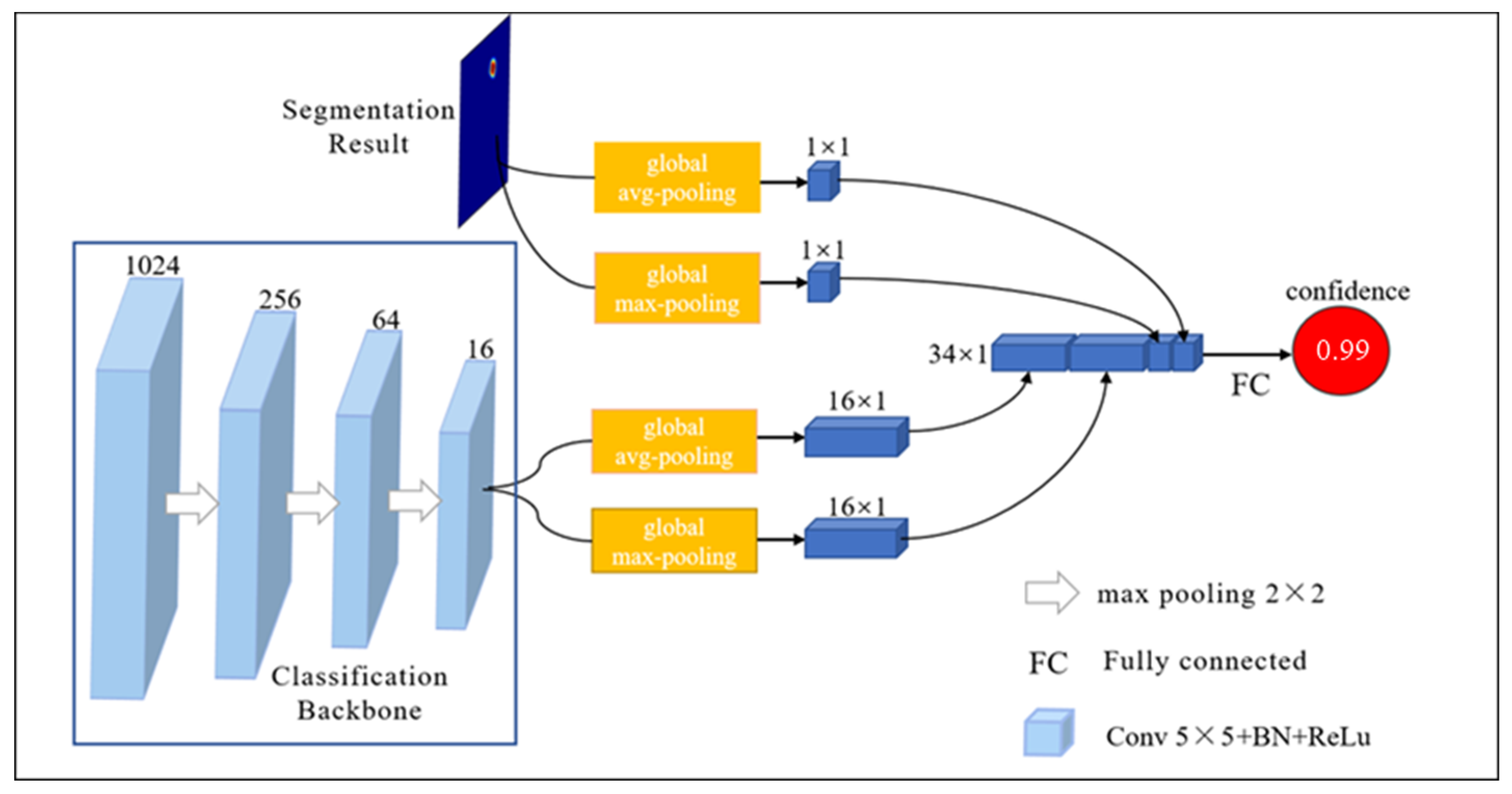

3.2. Multilevel Features Extraction Module (MFEM)

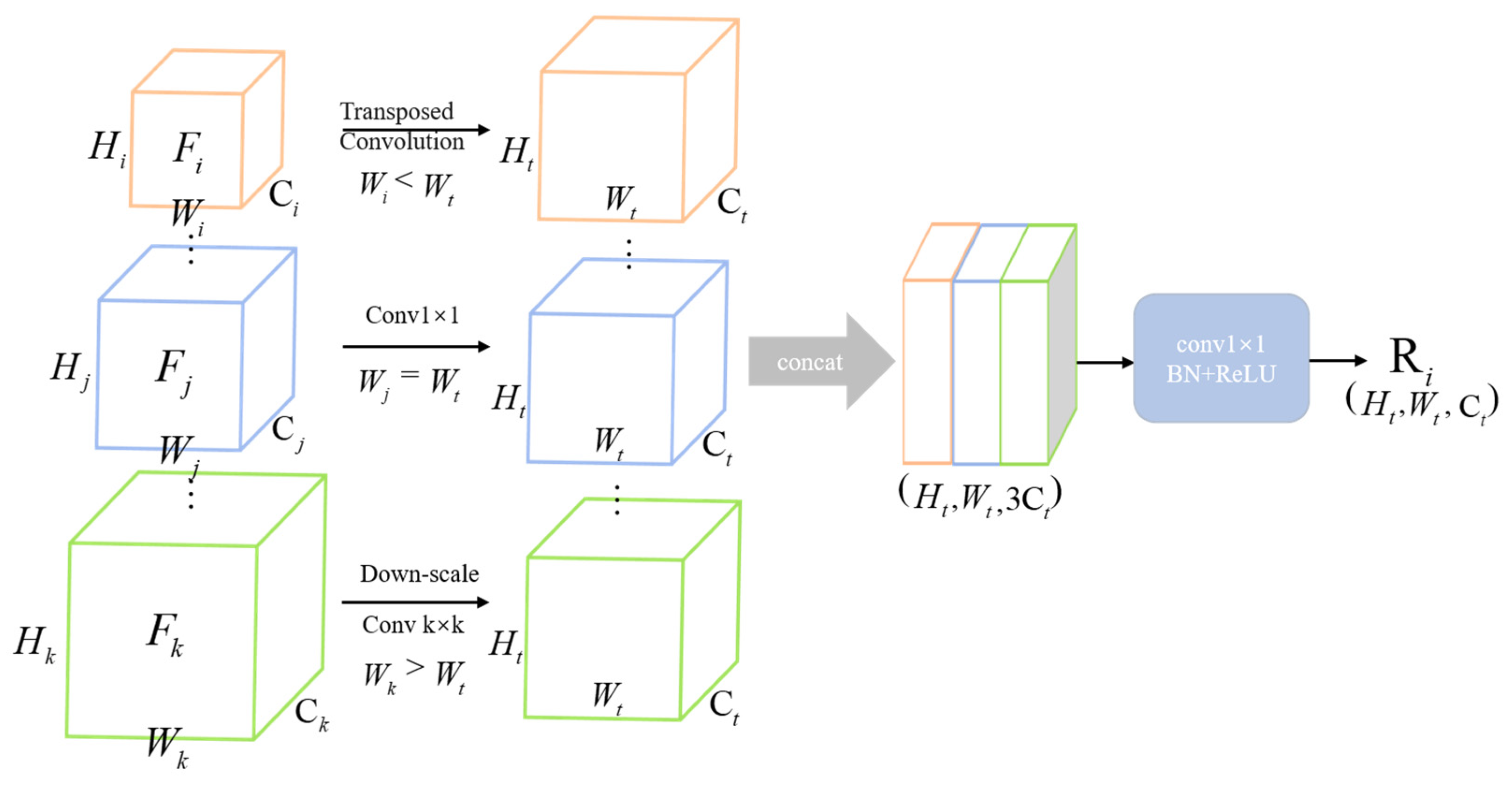

3.3. Pyramid Feature Fusion Module (PFFM)

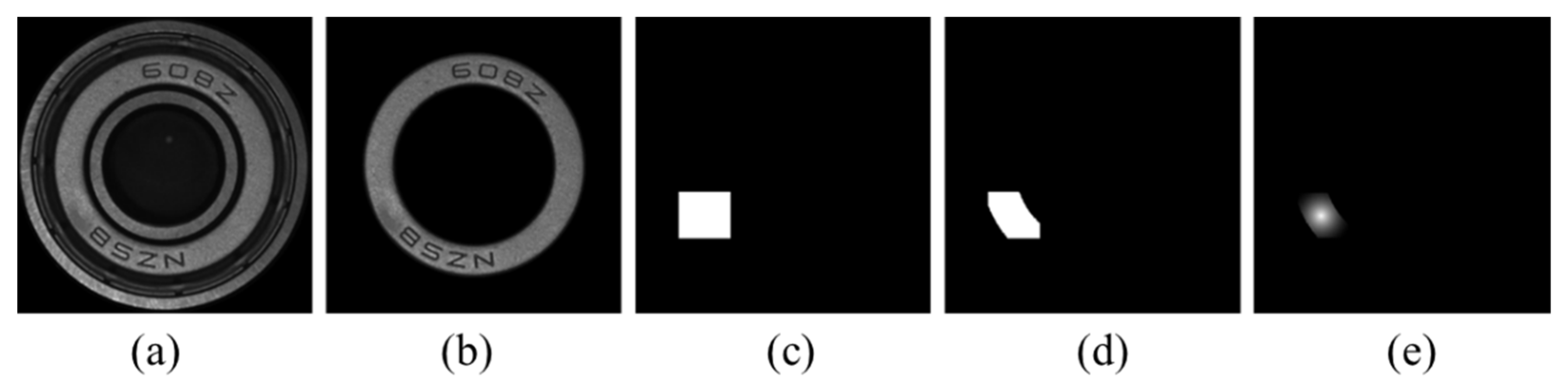

3.4. Weight Label

3.5. Classification Subnet Module

3.6. Training Constraints

4. Experiment

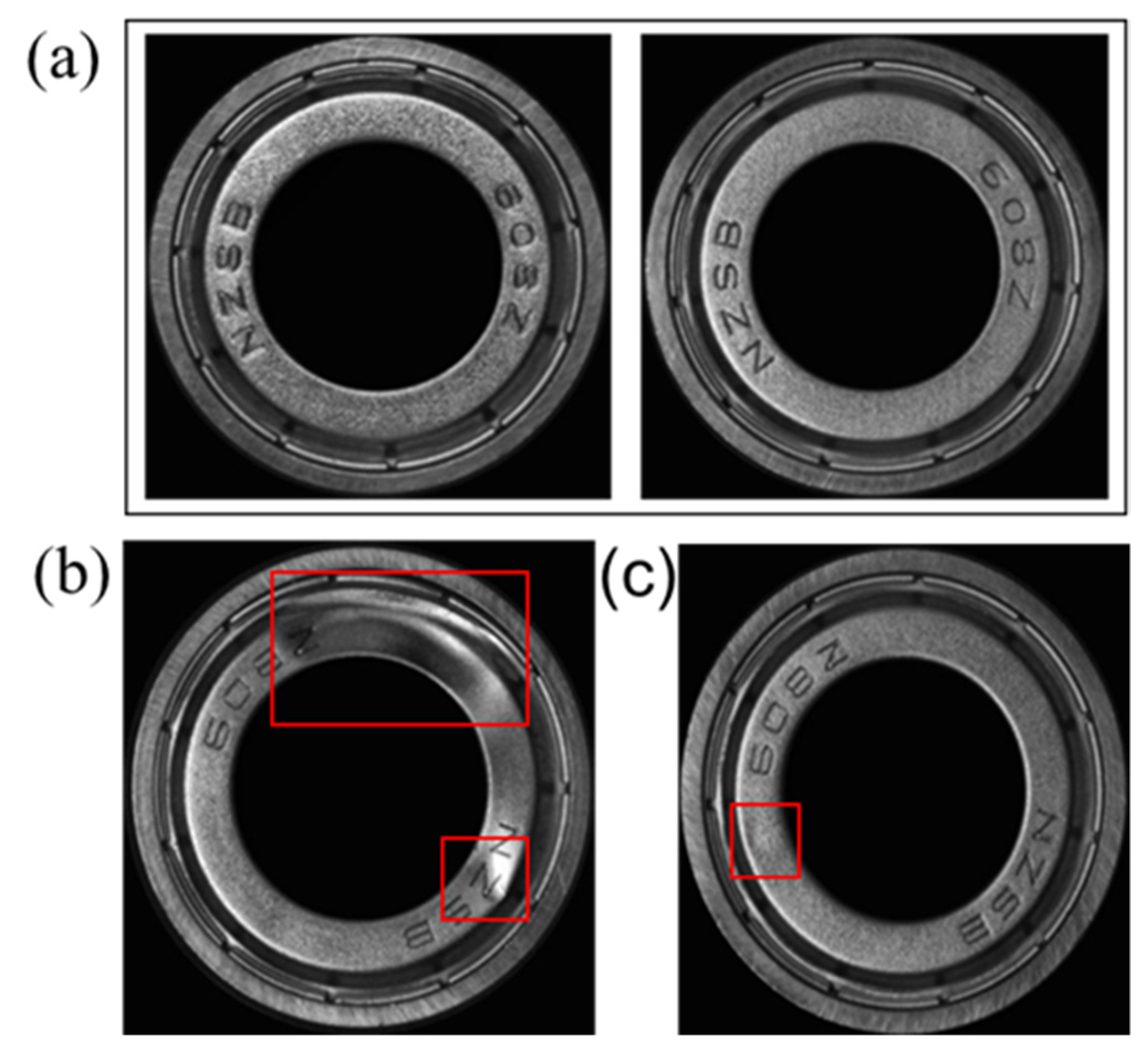

4.1. Dataset

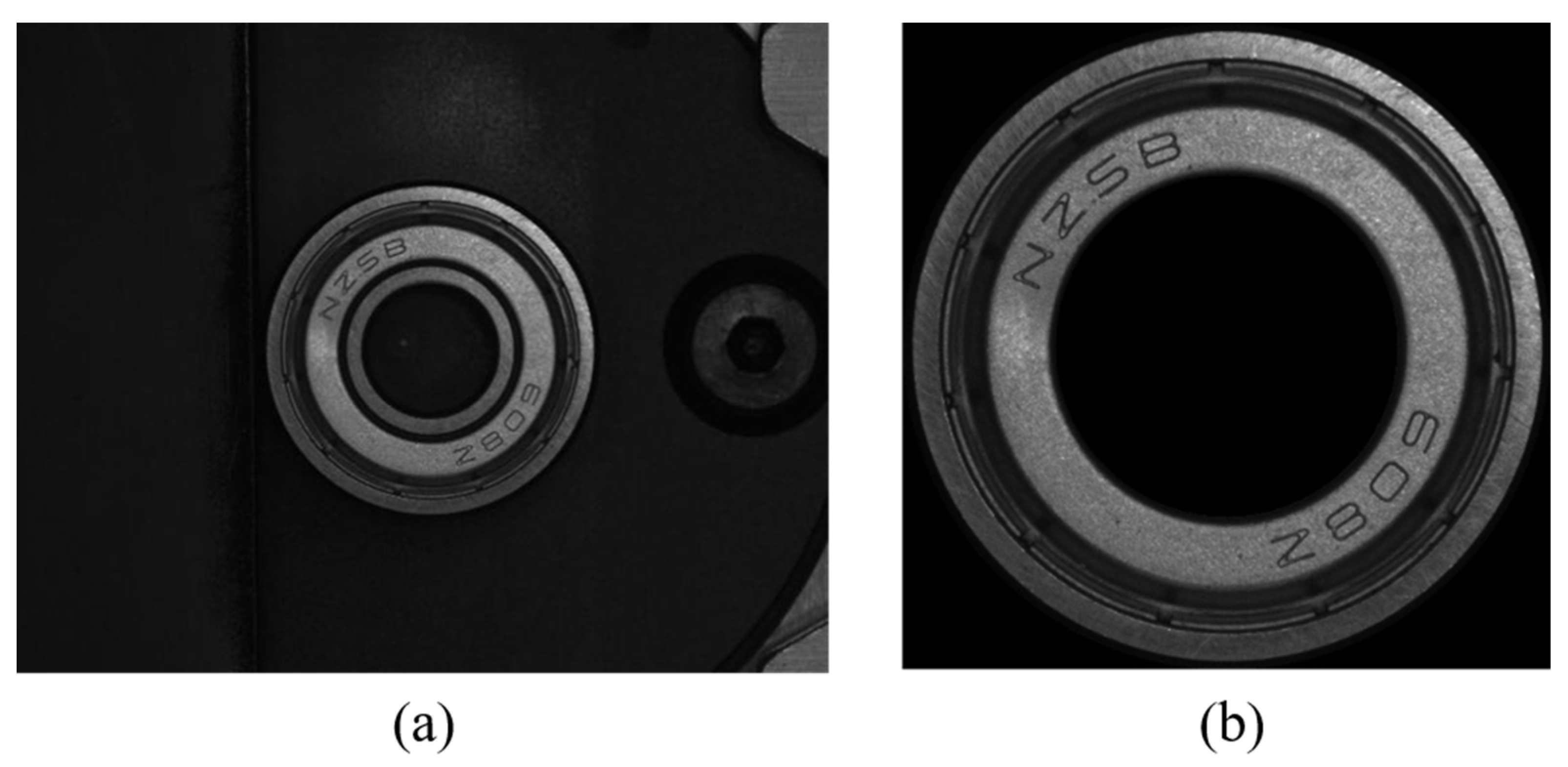

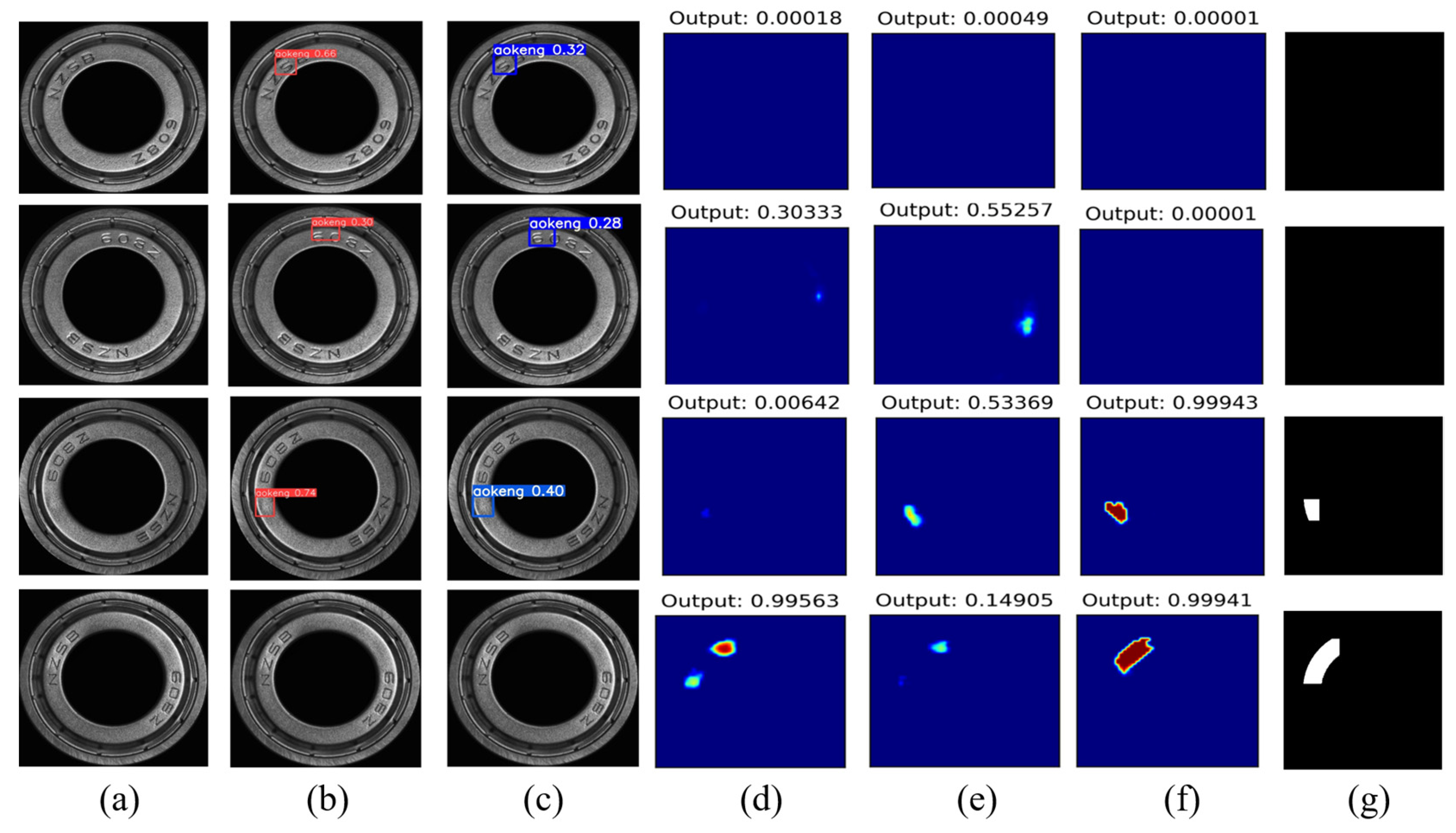

4.1.1. Bearing Dataset

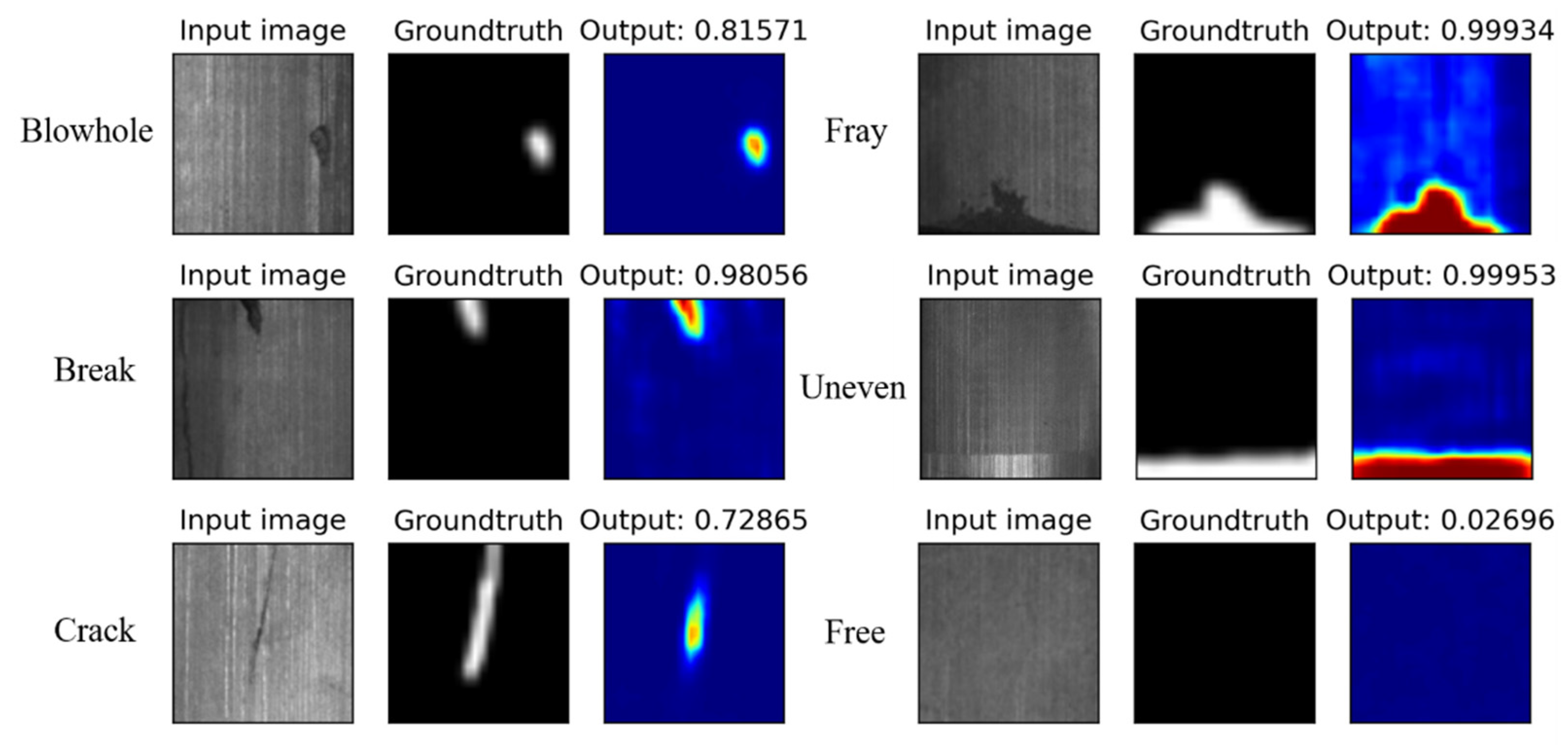

4.1.2. Magnetic-Tile Dataset

4.2. Implementation Details

4.3. Performance Metrics

4.4. Experiment Results and Analysis

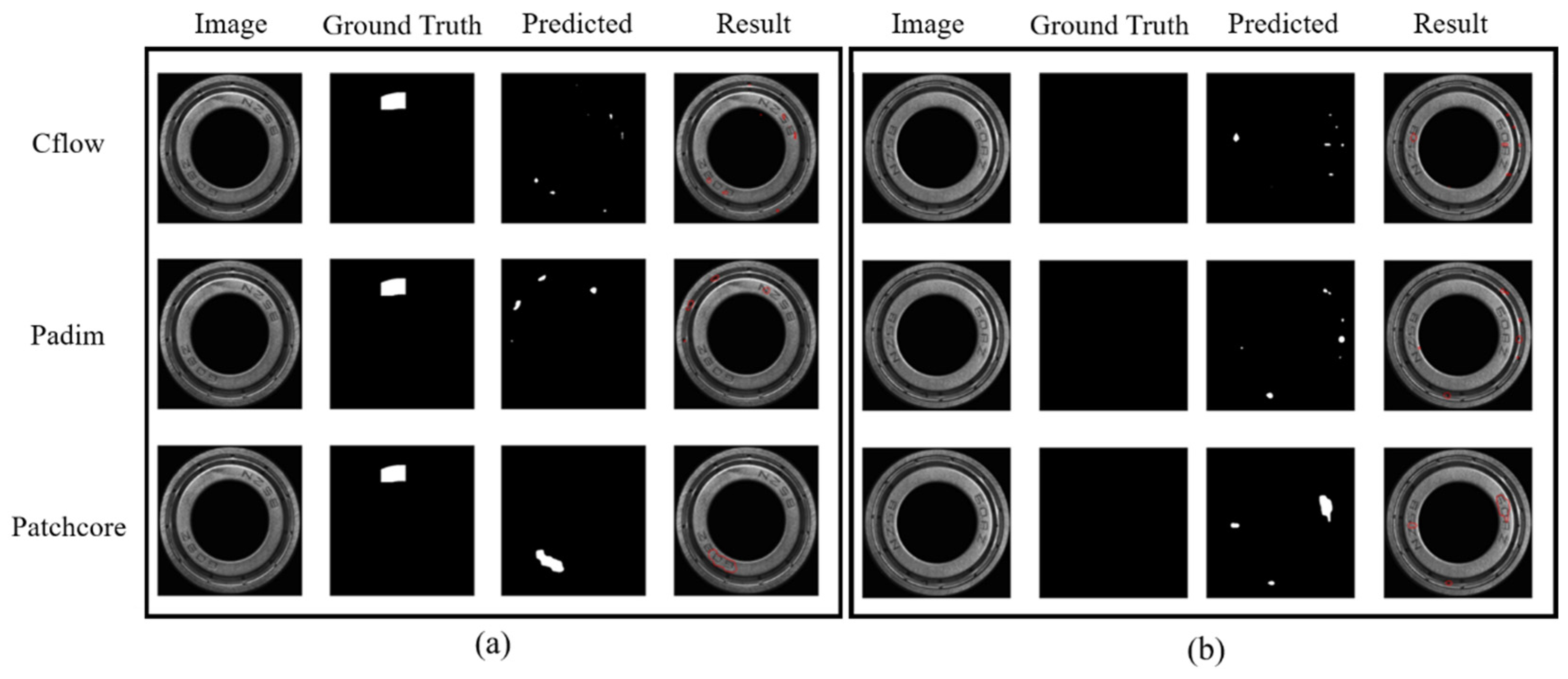

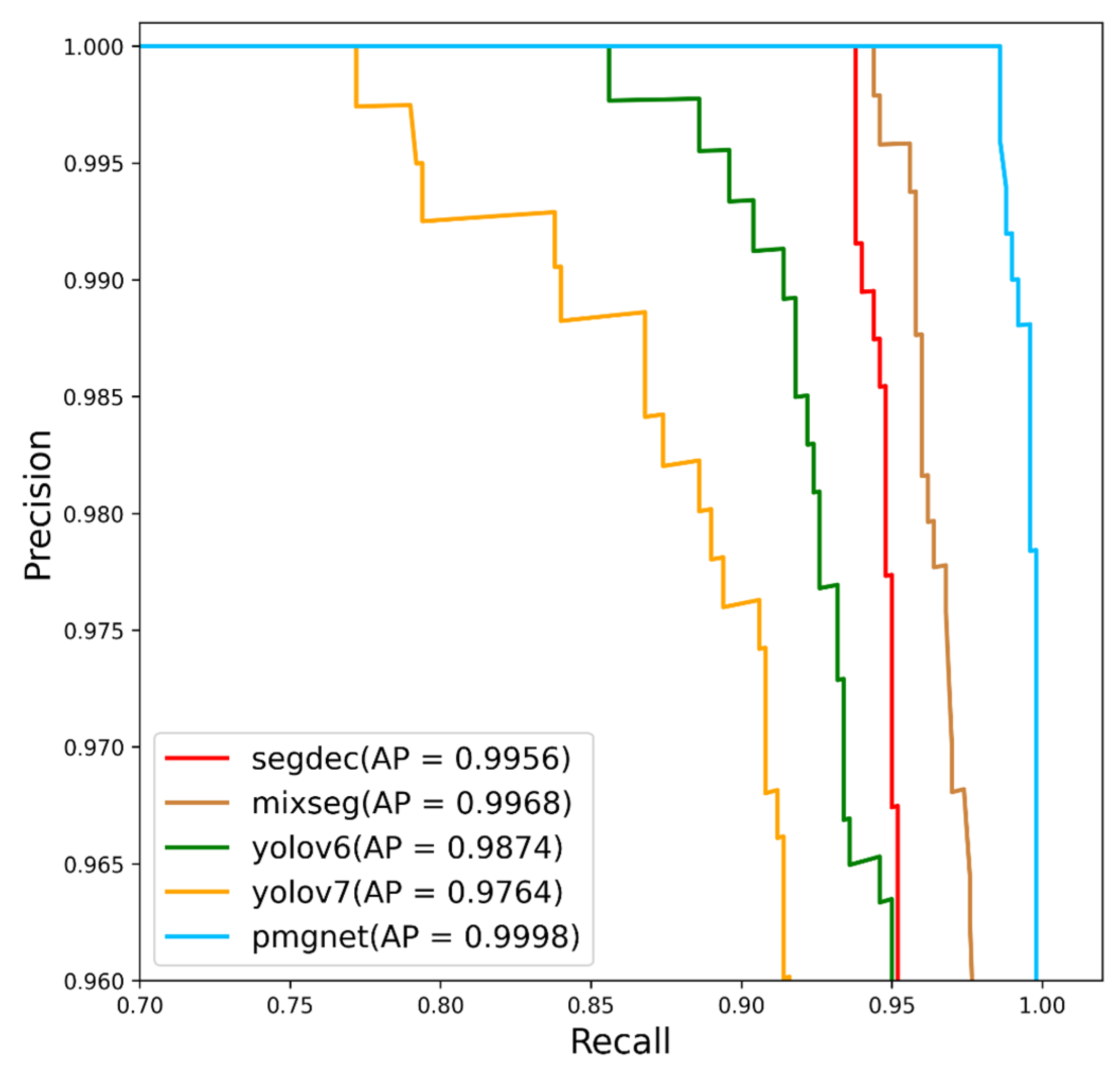

4.4.1. Methods Comparison

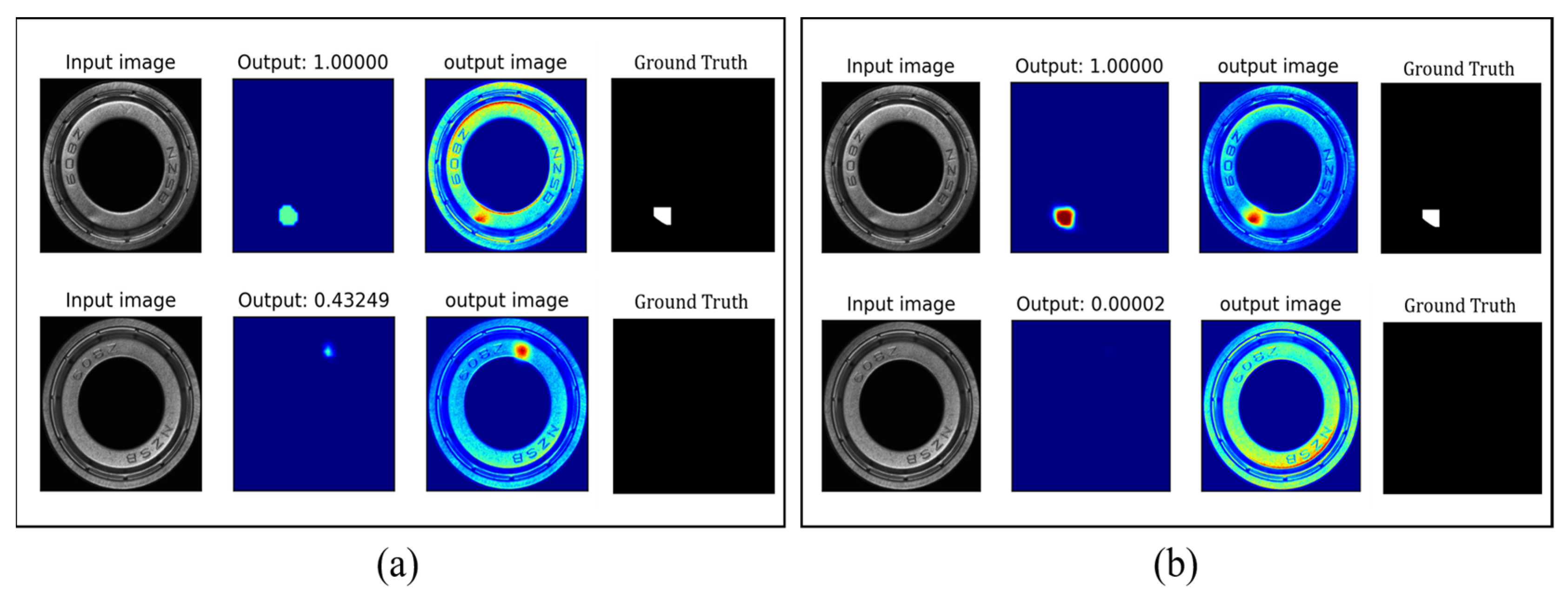

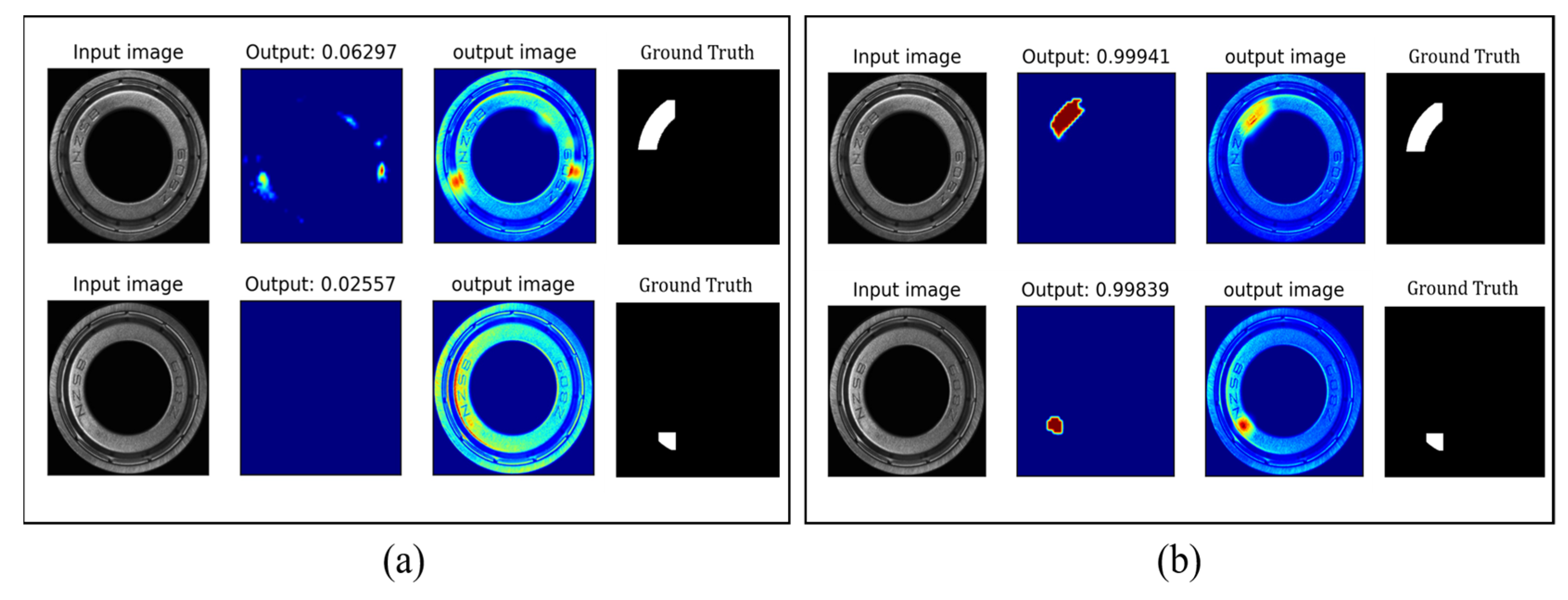

4.4.2. Ablation Experiment

4.4.3. Robustness Experiment

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Liang, P.; Deng, C.; Wu, J.; Yang, Z.; Zhu, J. Intelligent fault diagnosis of rolling element bearing based on convolutional neural network and frequency spectrograms. In Proceedings of the 2019 IEEE International Conference on Prognostics and Health Management (ICPHM), San Francisco, CA, USA, 17–20 June 2019; pp. 1–5.

- Volkau, I.; Mujeeb, A.; Dai, W.; Erdt, M.; Sourin, A. The Impact of a Number of Samples on Unsupervised Feature Extraction, Based on Deep Learning for Detection Defects in Printed Circuit Boards. Futur. Internet 2021, 14, 8.

- Onchis, D.M.; Gillich, G.-R. Stable and explainable deep learning damage prediction for prismatic cantilever steel beam. Comput. Ind. 2020, 125, 103359.

- Weimer, D.; Scholz-Reiter, B.; Shpitalni, M. Design of deep convolutional neural network architectures for automated feature extraction in industrial inspection. CIRP Ann. 2016, 65, 417–420.

- Yang, Y.; Yang, R.; Pan, L.; Ma, J.; Zhu, Y.; Diao, T.; Zhang, L. A lightweight deep learning algorithm for inspection of laser welding defects on safety vent of power battery. Comput. Ind. 2020, 123, 103306.

- Yu, J.; Zheng, X.; Liu, J. Stacked convolutional sparse denoising auto-encoder for identification of defect patterns in semiconductor wafer map. Comput. Ind. 2019, 109, 121–133.

- Lin, H.; Li, B.; Wang, X.; Shu, Y.; Niu, S. Automated defect inspection of LED chip using deep convolutional neural network. J. Intell. Manuf. 2019, 30, 2525–2534.

- Mahendran, A.; Vedaldi, A. Understanding deep image representations by inverting them. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5188–5196.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Li, G.; Yu, Y. Visual saliency based on multiscale deep features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5455–5463.

- Zhao, R.; Ouyang, W.; Li, H.; Wang, X. Saliency detection by multi-context deep learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1265–1274.

- Deng, C.; Wang, M.; Liu, L.; Liu, Y.; Jiang, Y. Extended Feature Pyramid Network for Small Object Detection. IEEE Trans. Multimedia 2021, 24, 1968–1979.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

- Dong, H.; Song, K.; He, Y.; Xu, J.; Yan, Y.; Meng, Q. PGA-Net: Pyramid Feature Fusion and Global Context Attention Network for Automated Surface Defect Detection. IEEE Trans. Ind. Informatics 2019, 16, 7448–7458.

- Liu, B.; Yang, Y.; Wang, S.; Bai, Y.; Yang, Y.; Zhang, J. An automatic system for bearing surface tiny defect detection based on multi-angle illuminations. Optik 2020, 208, 164517.

- Shen, H.; Li, S.; Gu, D.; Chang, H. Bearing defect inspection based on machine vision. Measurement 2012, 45, 719–733.

- Van, M.; Kang, H.-J. Wavelet Kernel Local Fisher Discriminant Analysis With Particle Swarm Optimization Algorithm for Bearing Defect Classification. IEEE Trans. Instrum. Meas. 2015, 64, 3588–3600.

- Pacas, M. Sensorless harmonic speed control and detection of bearing faults in repetitive mechanical systems. In Proceedings of the 2017 IEEE 3rd International Future Energy Electronics Conference and ECCE Asia (IFEEC 2017-ECCE Asia), Kaohsiung, Taiwan, 3–7 June 2017; pp. 1646–1651.

- Chen, S.-H.; Perng, D.-B. Automatic Surface Inspection for Directional Textures Using Discrete Cosine Transform. In Proceedings of the 2009 Chinese Conference on Pattern Recognition, Nanjing, China, 4–6 November 2009; pp. 1–5.

- Huang, Y.; Qiu, C.; Wang, X.; Wang, S.; Yuan, K. A Compact Convolutional Neural Network for Surface Defect Inspection. Sensors 2020, 20, 1974.

- Kim, S.; Kim, W.; Noh, Y.-K.; Park, F. Transfer learning for automated optical inspection. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 2517–2524.

- Lin, Z.; Ye, H.; Zhan, B.; Huang, X. An Efficient Network for Surface Defect Detection. Appl. Sci. 2020, 10, 6085.

- Wang, T.; Chen, Y.; Qiao, M.; Snoussi, H. A fast and robust convolutional neural network-based defect detection model in product quality control. Int. J. Adv. Manuf. Technol. 2017, 94, 3465–3471.

- Haselmann, M.; Gruber, D.; Tabatabai, P. Anomaly detection using deep learning based image completion. In Proceedings of the 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA), Orlando, FL, USA, 17–20 December 2018; pp. 1237–1242.

- Youkachen, S.; Ruchanurucks, M.; Phatrapomnant, T.; Kaneko, H. Defect Segmentation of Hot-rolled Steel Strip Surface by using Convolutional Auto-Encoder and Conventional Image processing. In Proceedings of the 2019 10th International Conference of Information and Communication Technology for Embedded Systems (IC-ICTES), Bangkok, Thailand, 25–27 March 2019; pp. 1–5.

- Zhai, W.; Zhu, J.; Cao, Y.; Wang, Z. A Generative Adversarial Network Based Framework for Unsupervised Visual Surface Inspection. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 1283–1287.

- Roth, K.; Pemula, L.; Zepeda, J.; Schölkopf, B.; Brox, T.; Gehler, P. Towards total recall in industrial anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 14318–14328.

- Defard, T.; Setkov, A.; Loesch, A.; Audigier, R. PaDiM: A Patch Distribution Modeling Framework for Anomaly Detection and Localization. In Proceedings of the Pattern Recognition. ICPR International Workshops and Challenges, Virtual Event, 10–15 January 2021; LNCS Volume 12664, pp. 475–489.

- Gudovskiy, D.; Ishizaka, S.; Kozuka, K. CFLOW-AD: Real-Time Unsupervised Anomaly Detection with Localization via Conditional Normalizing Flows. In Proceedings of the 2022 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2022; pp. 1819–1828.

- Yang, D.; Cui, Y.; Yu, Z.; Yuan, H. Deep Learning Based Steel Pipe Weld Defect Detection. Appl. Artif. Intell. 2021, 35, 1237–1249.

- Cha, Y.J.; Choi, W.; Suh, G.; Mahmoudkhani, S.; Büyüköztürk, O. Autonomous structural visual inspection using region-based deep learning for detecting multiple damage types. Comput. Aided Civ. Infrastruct. Eng. 2018, 33, 731–747.

- Zhong, J.; Liu, Z.; Han, Z.; Han, Y.; Zhang, W. A CNN-Based Defect Inspection Method for Catenary Split Pins in High-Speed Railway. IEEE Trans. Instrum. Meas. 2018, 68, 2849–2860.

- Zheng, Z.; Zhao, J.; Li, Y. Research on Detecting Bearing-Cover Defects Based on Improved YOLOv3. IEEE Access 2021, 9, 10304–10315.

- Khan, A.; Chefranov, A.; Demirel, H. Image scene geometry recognition using low-level features fusion at multi-layer deep CNN. Neurocomputing 2021, 440, 111–126.

- Akinlar, C.; Topal, C. EDCircles: A real-time circle detector with a false detection control. Pattern Recognit. 2012, 46, 725–740.

- Božič, J.; Tabernik, D.; Skočaj, D. Mixed supervision for surface-defect detection: From weakly to fully supervised learning. Comput. Ind. 2021, 129, 103459.

- Huang, Y.; Qiu, C.; Yuan, K. Surface defect saliency of magnetic tile. Vis. Comput. 2018, 36, 85–96.

- Tabernik, D.; Šela, S.; Skvarč, J.; Skočaj, D. Segmentation-based deep-learning approach for surface-defect detection. J. Intell. Manuf. 2020, 31, 759–776.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

| Layer | Type |
|---|---|
| Input | C = 1 |
| Layer1 | [Conv 5 × 5 + BN + ReLU, C = 32] × 2, max pooling 2 × 2 |
| Layer2 | [Conv 5 × 5 + BN + ReLU, C = 64] × 3, max pooling 2 × 2 |
| Layer3 | [Conv 5 × 5 + BN + ReLU, C = 128] × 4, max pooling 2 × 2 |
| Layer4 | [Conv 5 × 5 + BN + ReLU, C = 256] × 2, max pooling 2 × 2 |
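The layer table above can be traced with a few lines of plain Python to see how channel count, spatial size, and parameter count evolve through the four stages. This is a minimal sketch, not the authors' implementation: it assumes "same" padding (so only the 2 × 2 max pooling changes the spatial size) and bias-free convolutions followed by batch normalization, neither of which the table states. The 256 × 256 input size and the names `MFEM_LAYERS` and `trace_mfem` are hypothetical, chosen only for illustration.

```python
# Layer spec transcribed from the table: (name, number of conv blocks, output channels).
MFEM_LAYERS = [
    ("Layer1", 2, 32),
    ("Layer2", 3, 64),
    ("Layer3", 4, 128),
    ("Layer4", 2, 256),
]
KERNEL = 5  # every convolution in the table is 5 x 5


def trace_mfem(height, width, in_channels=1):
    """Return (name, channels, height, width, params) for each layer after pooling."""
    rows = []
    c_in = in_channels
    for name, n_convs, c_out in MFEM_LAYERS:
        params = 0
        for _ in range(n_convs):
            # Assumption: convolutions carry no bias because BN follows them.
            params += KERNEL * KERNEL * c_in * c_out
            # BN contributes one scale and one shift per channel.
            params += 2 * c_out
            c_in = c_out
        # Assumption: "same" padding, so only the 2 x 2 max pooling halves the size.
        height, width = height // 2, width // 2
        rows.append((name, c_out, height, width, params))
    return rows


if __name__ == "__main__":
    # Hypothetical 256 x 256 single-channel input.
    for name, channels, h, w, params in trace_mfem(256, 256):
        print(f"{name}: C={channels}, {h} x {w}, {params} parameters")
```

Under these assumptions each layer halves the resolution, so the last layer produces feature maps at 1/16 of the input size.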

| Stage | R1 | R2 | R3 | R4 |
|---|---|---|---|---|
| Layer 1 | D: 1 × 1, s = 1 | D: 2 × 2, s = 2 | D: 4 × 4, s = 4 | D: 8 × 8, s = 8 |
| Layer 2 | T: 2 × 2, s = 2 | D: 1 × 1, s = 1 | D: 2 × 2, s = 2 | D: 4 × 4, s = 4 |
| Layer 3 | T: 4 × 4, s = 4 | T: 2 × 2, s = 2 | D: 1 × 1, s = 1 | D: 2 × 2, s = 2 |
| Layer 4 | T: 8 × 8, s = 8 | T: 4 × 4, s = 4 | T: 2 × 2, s = 2 | D: 1 × 1, s = 1 |
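The resampling table above follows a simple rule: moving features from layer i to resolution Rj uses a kernel and stride of 2^|i − j|, with a "D" operation when the target is at the same or a coarser level and a "T" operation when it is finer. The sketch below regenerates the table from that rule. Reading "D" as a strided (downsampling) convolution and "T" as a transposed convolution is an assumption, since the table does not expand the abbreviations; the function names `resample_op` and `pffm_table` are hypothetical.

```python
def resample_op(layer, target):
    """Describe the op that maps features of `layer` to resolution `target` (1-based)."""
    factor = 2 ** abs(target - layer)
    # Same or coarser target level -> "D"; finer target level -> "T".
    kind = "D" if target >= layer else "T"
    return f"{kind}: {factor} × {factor}, s = {factor}"


def pffm_table(n_levels=4):
    """Build the layer-by-resolution table as a list of rows."""
    return [
        [resample_op(layer, target) for target in range(1, n_levels + 1)]
        for layer in range(1, n_levels + 1)
    ]


if __name__ == "__main__":
    for index, row in enumerate(pffm_table(), start=1):
        print(f"Layer {index} | " + " | ".join(row))
```

Every entry on the diagonal is a 1 × 1, stride-1 operation, so each resolution receives one feature map from every layer at a matching spatial size.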

| Type | Train | Valid | Test |
|---|---|---|---|
| Defect | 960 | 240 | 500 |
| Non-defect | 2400 | 600 | 500 |

| Method | AP | F1 | ACC | FP + FN |
|---|---|---|---|---|
| MixSeg [37] | 0.996 | 0.972 | 0.973 | 3 + 24 |
| SegDecNet [39] | 0.995 | 0.969 | 0.970 | 5 + 25 |
| YOLOv6 [40] | 0.987 | 0.958 | 0.959 | 20 + 21 |
| YOLOv7 [41] | 0.976 | 0.939 | 0.942 | 12 + 46 |
| PMG-Net (ours) | 0.999 | 0.993 | 0.993 | 0 + 7 |
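The ACC and F1 columns can be recovered from the FP + FN counts alone. The sketch below assumes the counts refer to the 1000-image test split listed in the dataset table (500 defect, 500 non-defect); the comparison table does not state this explicitly, and the helper name `metrics` is hypothetical. Under that assumption the ACC column is reproduced exactly, while the recomputed F1 agrees with the table to within 0.001 (the two YOLO rows differ by one unit in the third decimal).

```python
# (FP, FN, reported F1, reported ACC), transcribed from the comparison table.
RESULTS = {
    "MixSeg": (3, 24, 0.972, 0.973),
    "SegDecNet": (5, 25, 0.969, 0.970),
    "YOLOv6": (20, 21, 0.958, 0.959),
    "YOLOv7": (12, 46, 0.939, 0.942),
    "PMG-Net": (0, 7, 0.993, 0.993),
}


def metrics(fp, fn, n_pos=500, n_neg=500):
    """Accuracy and F1 from error counts; n_pos/n_neg are the assumed test-split sizes."""
    tp = n_pos - fn  # defect images correctly flagged
    tn = n_neg - fp  # non-defect images correctly passed
    acc = (tp + tn) / (n_pos + n_neg)
    precision = tp / (tp + fp)
    recall = tp / n_pos
    f1 = 2 * precision * recall / (precision + recall)
    return acc, f1


if __name__ == "__main__":
    for name, (fp, fn, f1_reported, acc_reported) in RESULTS.items():
        acc, f1 = metrics(fp, fn)
        print(f"{name}: ACC={acc:.3f} (reported {acc_reported}), "
              f"F1={f1:.4f} (reported {f1_reported})")
```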

| SegPM | PFFM | Weight Label | ACC | FP + FN |
|---|---|---|---|---|
| | | | 0.853 | 75 + 72 |
| √ | | | 0.949 | 21 + 30 |
| √ | √ | | 0.965 | 10 + 25 |
| √ | | √ | 0.972 | 18 + 10 |
| √ | √ | √ | 0.993 | 0 + 7 |
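To read the ablation rows as error reduction rather than raw accuracy, the sketch below converts each row's FP + FN count into the share of baseline errors it removes. It assumes, as the ACC column suggests, that all rows are evaluated on the same 1000-image test split; the names `ABLATION_ERRORS` and `summarize` are hypothetical, and rows are referenced only by their order in the table.

```python
# (FP, FN) per ablation row, in table order; the first row is the baseline.
ABLATION_ERRORS = [(75, 72), (21, 30), (10, 25), (18, 10), (0, 7)]
TEST_IMAGES = 1000  # assumption: 500 defect + 500 non-defect test images


def summarize(errors, n_images=TEST_IMAGES):
    """Return (accuracy, fraction of baseline errors removed) for each row."""
    baseline = sum(errors[0])
    summary = []
    for fp, fn in errors:
        wrong = fp + fn
        summary.append((1 - wrong / n_images, 1 - wrong / baseline))
    return summary


if __name__ == "__main__":
    for row, (acc, removed) in enumerate(summarize(ABLATION_ERRORS), start=1):
        print(f"row {row}: ACC={acc:.3f}, baseline errors removed={removed:.1%}")
```

Under this reading, the full configuration in the last row removes roughly 95% of the 147 errors made by the baseline in the first row.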

| Type | Blowhole | Crack | Break | Fray | Uneven |
|---|---|---|---|---|---|
| AP | 1.0 | 1.0 | 0.996 | 0.954 | 0.975 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Feng, H.; Zhuang, J.; Chen, X.; Song, K.; Xiao, J.; Ye, S. The Prior Model-Guided Network for Bearing Surface Defect Detection. Electronics 2023, 12, 1142. https://doi.org/10.3390/electronics12051142
