Abstract

Drowsy driving causes many accidents because it impairs driver alertness and vehicle control. A driver drowsiness detection system is therefore becoming a necessity. Invasive approaches that analyze electroencephalography (EEG) signals recorded with head electrodes are inconvenient for drivers. Non-invasive fatigue detection studies, by contrast, focus mainly on yawning or eye blinks. Analyzing several facial components has yielded promising results, but this is not yet sufficient to predict hypovigilance. In this paper, we propose a non-invasive approach based on a deep learning model that classifies vigilance into five states. The first step uses MediaPipe Face Mesh to locate the target areas and compute the driver's gaze and eye-state descriptors as well as the 3D head pose. Detecting the iris region of interest allows us to compute a normalized image that captures the state of the eyes relative to the eyelids. Transfer learning with the MobileNetV3 model is then applied to the normalized images to extract additional descriptors from the driver's eyes. The inputs to our LSTM network are vectors of the previously computed features. Because this type of network takes previous time steps into account, it can detect hypovigilance before it fully sets in, classify vigilance into five levels, and alert the driver before hypovigilance is reached. Our experiments, which begin with a hyperparameter preselection step, show a 98.4% satisfaction rate, which compares favorably with the literature.

1. Introduction

Hypovigilance is a state between wakefulness and sleep that carries the risk of falling asleep with one's eyes closed for a short time. It is involuntary and reduces the individual's alertness. Hypovigilance manifests itself in a variety of ways [1], including yawning, slow reflexes, droopy eyelids, tingling eyes, a desire to close the eyes for a second, the need to rest, the need to constantly change position, episodes of "micro-sleep" (about 2–5 s), and difficulty keeping the head up.

Accidents caused by sleepiness are more common on highways and major roads, and they occur both at night and during the day. Driver fatigue increases the risk of skidding, losing control of the vehicle, or failing to stop in time. For these reasons, we believe that drowsiness should be taken seriously. According to experts [2], a significant portion of annual traffic accidents may be attributable to drowsiness. Drowsy drivers are more likely to make mistakes and cause accidents because they respond more slowly than alert drivers to potential threats, emergencies, and other events. Their severely diminished alertness leads to poor task performance because they are unable to properly assess the situation.

Recent advances in computing have greatly benefited medicine and psychology. Researchers are, for example, trying to pin down the specific environmental and interpersonal factors that contribute to people's emotional states [3,4,5]. Affective computing, a subfield of human–computer interaction (HCI), examines how computers can help humans process and understand emotions. In 1997, Picard [6] established its core ideas of identifying, interpreting, and expressing affect so that computers can recognize, comprehend, express, and replicate human emotions. As a domain of affective computing, driver fatigue detection makes it possible to study and understand human driving behavior in more detail. Hypovigilance manifests primarily as a reduction in vigilance accompanied by various behavioral signals, such as slower reflexes, yawning, heavy eyelids, and the inability to keep the head in a frontal position. Existing solutions share a reliance on monitoring the driver's physiological and behavioral cues. Some of them are considered intrusive: in most cases, they use an electroencephalographic (EEG) signal to determine the driver's psychological and behavioral states. In contrast, so-called non-invasive methods rely only on behavioral indicators, that is, visual signals associated with a drop in attentiveness. A camera installed in the car can record the driver's facial expressions and other cues, such as yawns, blinks, or gaze direction. Non-invasive approaches are better suited to real-world use because they impose fewer technological constraints on deployment. The purpose of our work is to assess the driver's level of tiredness with a non-intrusive approach based only on footage from a camera mounted in the front of the vehicle.
The goal is to identify signs of drowsiness in the driver before they actually fall asleep at the wheel.

The main contributions of this work are the following seven aspects:

- First, facial landmarks and the 3D head pose are detected with MediaPipe Face Mesh, a machine-learning-based method that has proven robust in terms of accuracy and speed compared with other approaches in the literature.

- Second, estimating the driver's gaze from the position of the iris relative to the eye gives a better indication of how tired or distracted the driver is.

- Third, computing a normalized image of the iris provides additional informative input to the MobileNetV3 model.

- Fourth, concatenating several features related to the eyes and the head pose provides more information, which makes it easier to determine whether the driver is tired.

- Fifth, an LSTM deep neural network is used so that our system takes into account not only the driver's current state but also their previous states.

- Sixth, a preliminary experiment allows us to select the best hyperparameters for training our model.

- Seventh, five levels of driver vigilance are detected so that the driver can be alerted to their state of hypovigilance and accidents can be avoided.

The rest of this paper is organized as follows:

In Section 2, we review the related literature, covering approaches that analyze the driver's eye blinks, those based on mouth localization and yawn detection, and those that use several facial components. We describe and discuss each approach to identify its advantages and disadvantages. This review justifies our choice of a non-invasive approach.

In Section 3, we describe the proposed methodology in detail. Our approach first applies a pre-processing stage that determines the descriptors to be extracted, either through spatiotemporal analysis or through transfer learning. These features form the input of our deep learning model, which classifies five levels of driver fatigue.

In Section 4, a set of experiments on three databases is carried out. These experiments allow us to validate the proposed approach and compare our results with the existing works.

Section 5 summarizes this paper’s contributions and suggests further study.

2. Related Works

Face recognition [7], facial expression recognition [8], and head tracking [9] are areas of computer vision research that have made great strides in recent years, and these advances have helped image-based technologies grow quickly. Image-based fatigue detection methods can be divided into three main groups: those that use blinking, those that use yawning, and those that combine several facial components. In this section, we discuss several works related to our own, which focuses on driver fatigue detection.

2.1. Eye Blinking Approaches

Approaches based on eye blinking are common for detecting driver fatigue. Cyganek et al. [10] proposed using two cameras to identify drivers' sleepiness levels. In this method, the eye regions are found by a cascade of two recognition models: the first locates the eye, and the second confirms its position. To identify a driver's eyes and estimate their state, Gou et al. [11] proposed a cascaded regression technique. At each iteration, the pixel value at the eye center and features from the eye corners and eyelids are used to locate the eye regions and estimate the probability that the eye is open. After detecting the driver's face with Haar feature classifiers, Ibrahim et al. [12] used a correlation matching technique to find and track the driver's eyes based on their size, intensity, and shape. Mandal et al. [13] proposed an eye-based sleepiness detection device to monitor bus drivers. This method uses two different techniques to locate the eyes and then applies spectral regression to estimate, on a continuous scale, how open both eyes are. Mandal et al. [13] then used adaptive integration to combine the degrees of openness of both eyes in order to compute the proportion of time that the bus driver's eyelids were closed. To determine whether a driver's eyes are closed, Song et al. [14] developed multiscale histograms of principal-oriented gradients. You et al. [15] captured nighttime images of the driver with a near-infrared camera and then fitted the eyelid curve with a spline function to determine whether the driver was sleepy. To build a deep integrated neural network (DINN) for identifying a driver's eye state, Zhao et al. [16] combined a convolutional neural network (CNN) with a deep neural network (DNN), and they pretrained the integrated model with transfer learning to improve its efficiency.
In experiments conducted in a driving context, the DINN reached identification rates of 97%. Anitha et al. [17] proposed a continuous surveillance system that monitors eye gaze to address the issue of driver concentration. Once the driver's face has been found in the video, the driver's eye movements are tracked in real time. The images are classified with the Viola–Jones face detection algorithm, and a warning signal sounds if the eyes remain closed for an extended period.

2.2. Approaches Based on Mouth and Yawning Detection

When drivers begin to feel sleepy, they generally start yawning, and much recent research has therefore focused on detecting driver fatigue from the state of the mouth. To determine whether a person yawned, Akrout et al. [18] used an active contour algorithm to locate the driver's lips and then estimated the state of the mouth from the lip region using optical flow. Omidyeganeh et al. [19] used a customized version of the Viola–Jones detector to localize the face and mouth, and applied back-projection to detect variations in the driver's mouth state and thus identify yawns. To identify yawning in videos of drivers, Zhang et al. [20] used convolutional neural networks (CNNs) to extract spatial representations of mouth states and a long short-term memory (LSTM) network to classify them. After using deep learning and Kalman-filter-based methods to detect and track the driver's nose and face, Zhang et al. [21] used a neural network to identify yawning from the confidence value of the nose tracking, the motion properties of the face, and the gradients around the mouth's borders. Wang et al. [22] used a Kalman filter to track the mouth based on extracted geometric features (height, width, and the distance between the upper and lower lips). These features are assembled into a vector and fed into a back-propagation (BP) neural network that recognizes the mouth state (yawning, mouth closed, talking, etc.) to determine whether the driver is tired.

2.3. Approaches Based on Several Components of the Face

Both of the above methods are often used to detect sleepiness because the behavior of the eyes or mouth changes significantly when drivers are tired. However, looking only at the eyes or the lips may not be enough to tell whether someone is tired, because important cues from other parts of the face are missed. Combining features of the eyes, lips, and other parts of the face can make it much easier to determine whether someone is tired. Torres et al. [23] proposed a non-invasive technique for discreetly differentiating between the vehicle's driver and its passengers during a message read-out. They fed information gathered from smartphone sensors into a machine learning model, ran several simulations, and evaluated seven state-of-the-art machine learning methods in different situations. The authors' model uses a convolutional neural network with gradient boosting. To measure anxiety, Benoit et al. [24] created a simulation tool that takes both visual (facial expression) and physiological information as input. In addition to the electrocardiogram (ECG), the model used the driver's blink rate, yaw rate, and head tilt angle to estimate how stressed the driver was. Chaoui et al. [25] introduced DrowsyDet, which has become a popular smartphone app for monitoring sleepiness. Their approach works as follows. First, facial area and landmark models extract data from the output of the face recognition model. Chaoui et al. then developed three convolutional neural network (CNN) models to detect facial tiredness, eye states, and mouth states. The driver's condition (normal, yawning, or sleeping) is then determined by combining the outputs of the three models. DrowsyDet is less invasive than other methods because it only requires a smartphone and no Internet access.
In this work, the authors use face data and the state of the eyes to examine fatigue while driving, although, owing to time constraints, the approach is neither real-time nor highly accurate. To identify sleepy drivers, Shih et al. [26] presented a spatial–temporal system. First, a spatial convolutional neural network extracted sleepiness-related features from the face images of each video. Then, an LSTM network modeled the temporal variation in sleepiness. Finally, a temporal smoothing step averaged the predicted driving-state scores. On the NTHU database, the proposed model achieved an accuracy of 82.61%. Using face and hand localization, Hossain et al. [27] developed a CNN-based approach to detect fatigued drivers and determine what distracted them. For this purpose, they used four architectures: a CNN, VGG-16, ResNet50, and MobileNetV2. They evaluated the proposed model after an extensive training process on thousands of images from a public dataset covering 10 distracted driving postures or situations. According to the results, the pretrained MobileNetV2 model is the most effective classifier. Long et al. [28] proposed a three-step driver drowsiness detection approach: a multitask convolutional neural network (MTCNN) detects faces; the open-source DLIB library then locates facial key points to extract a fatigue feature vector for each frame; and these vectors are fed to a long short-term memory (LSTM) network that produces the final fatigue decision. Long et al. reported that their fatigue-state identification algorithm achieves an accuracy of 90%. Akrout et al. [29] developed a system that automates the monitoring of driver sleepiness. This non-invasive solution relies only on visual indicators, which are computed from spatiotemporal data in the video stream.
The system analyzes the driver's face to identify inappropriate behavior. The proposed fusion system was evaluated on three databases and performed well overall, but it showed clear weaknesses in yawning detection that reduced its accuracy. Eraqi et al. [30] proposed weighted ensembles of CNNs built on four different CNN architectures. The CNNs were trained on five different image sources derived from the AUC Distracted Driver dataset. The findings showed that, among individual CNNs, those trained on raw images performed best. The results also show that the weighted fusion, which combines the predictions of the individual CNNs using weights determined by a genetic algorithm, is more accurate than both the individual CNNs and majority-voting fusion. Arunasalam et al. [31] proposed a real-time sleepiness monitoring system for cars that includes an ignition lock. Drowsiness is detected using a heart rate monitor and a sensor embedded in eyewear worn by the driver. The system can identify three distinct stages of sleepiness. When excessive sleepiness is detected, the ignition is locked, and when a life-threatening level of sleepiness is detected, the car slows down and stops.

2.4. Discussion

Our review of the literature reveals several weaknesses. So-called invasive approaches inconvenience the driver by requiring electronic components to be attached to their body. Much of the work also focuses only on detecting yawns or analyzing blinks to perform a binary classification of alertness, which does not make it possible to warn the driver of hypovigilance early enough to prevent accidents. We therefore propose computing spatiotemporal features that capture the 3D head pose, eye blinking, and the position of the iris (to estimate the driver's gaze), together with a normalized image of the iris region from which a pretrained learning model extracts further descriptors. We also note that several studies based on machine learning or convolutional neural networks have achieved satisfactory results. However, these results are limited by the choice of model, which leads us to propose a model that takes into account the driver's previous states and not only their current state. The proposed model is described below.

3. Methodology

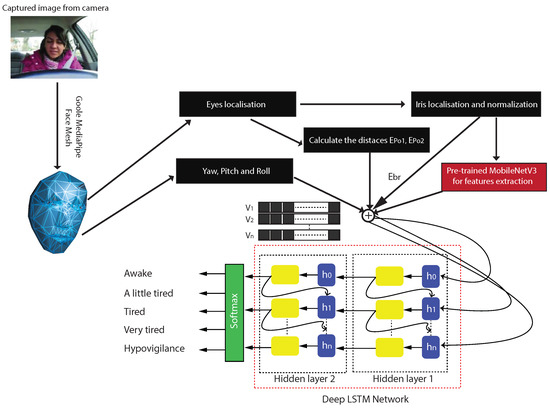

In this section, we present all the stages of our approach for multilevel driver fatigue detection. First, MediaPipe Face Mesh [32] is used to estimate the facial landmarks, detect and track the eye area, and estimate the head pose used in the next stage. Second, a new approach for iris detection and normalization is applied. The resulting image serves as input to a pretrained MobileNetV3 [33] architecture, which extracts features that are complemented by six additional measurements: two distances between the center of the iris and the points where the eyelids meet (the eye corners), the computed blinking rate, and the three head angles (yaw, pitch, and roll) estimated with MediaPipe Face Mesh. These features are fed into a deep LSTM network that detects when a driver is becoming tired. Figure 1 summarizes the whole process.

Figure 1.

Our proposed model for multilevel driver fatigue detection.
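The per-frame input described above can be sketched as follows. This is a minimal illustration, assuming NumPy arrays, a CNN embedding of arbitrary length, and a fixed-length sliding window over consecutive frames; the function names, the feature order, and the window length are hypothetical and are not taken from this work.

```python
import numpy as np


def frame_features(cnn_embedding, d_corner_a, d_corner_b, blink_rate, yaw, pitch, roll):
    """Concatenate the CNN embedding of the normalized iris image with the
    six hand-crafted descriptors of one frame (hypothetical feature order)."""
    handcrafted = np.array(
        [d_corner_a, d_corner_b, blink_rate, yaw, pitch, roll], dtype=np.float32
    )
    return np.concatenate([np.asarray(cnn_embedding, dtype=np.float32), handcrafted])


def sliding_windows(per_frame, window):
    """Turn a (T, F) array of per-frame vectors into overlapping LSTM input
    sequences of shape (T - window + 1, window, F)."""
    per_frame = np.asarray(per_frame)
    count = per_frame.shape[0] - window + 1
    if count <= 0:
        raise ValueError("the sequence is shorter than the window")
    return np.stack([per_frame[i:i + window] for i in range(count)])
```

For example, with an 8-dimensional embedding each frame vector has 14 entries, and 30 frames with a window of 10 yield 21 sequences of shape (10, 14). Overlapping windows let every new frame produce a fresh prediction that still sees the preceding frames.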

3.1. Head Pose Estimation and Facial Features Extraction

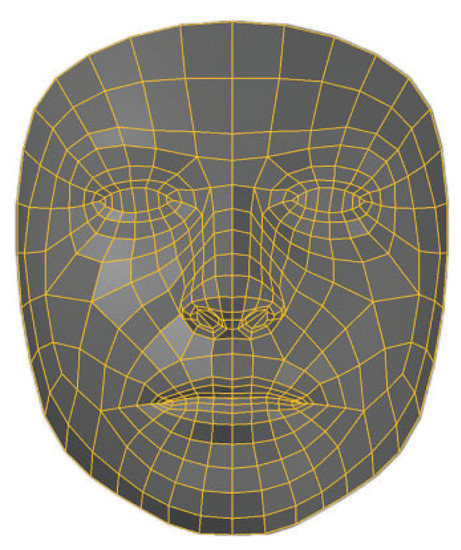

MediaPipe Face Mesh computes 468 3D landmarks on a person's face in near real time. Because the 3D facial surface is estimated with machine learning from a single camera feed, no separate depth sensor is needed. The method reaches the performance required for real-time applications by using lightweight model architectures and GPU acceleration throughout the pipeline. Face alignment, also known as face registration, is the process of fitting a face to a predefined template in order to predict its shape. In most cases, this involves locating a fixed number of landmarks, called key points, which either delineate meaningful facial features or have their own independent meanings [34]. An alternative approach fits a 3D morphable model (3DMM) [35], whose pose, scale, and parameters are estimated. Principal component analysis (PCA) is often used to build such a 3D mesh model. However, the linear manifold spanned by the PCA basis is determined by the variety of faces collected for the model, which restricts the range of feasible predictions. Such meshes also often have many more points. For instance, unlike MediaPipe Face Mesh, many such models cannot accurately represent a face with just one eye closed. The MediaPipe authors instead propose a residual neural network architecture that predicts the positions of the 3D mesh vertices directly, treating each vertex as an independent landmark. The 468 points of the mesh are arranged in fixed quads (Figure 2). No depth sensor data are needed for this task; the model only needs a frame (or a stream of frames) from a single RGB camera as input.

Figure 2.

MediaPipe Face Mesh predicted topology [32].

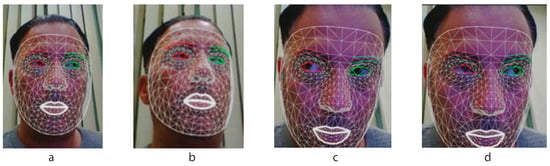

In our case, we use MediaPipe Face Mesh to locate and track all the parts of the driver's face that we need and to compute the distances defined above. In this step, the yaw, pitch, and roll angles are also calculated and stored as three head-pose features. Figure 3 shows some examples of MediaPipe Face Mesh results, which make it possible to detect the head pose and locate the eyes and other facial components.

Figure 3.

Examples of MediaPipe Face Mesh detection results obtained in our laboratory: (a) frontal face, (b) head rotation, (c) gaze to the left, (d) gaze to the right.
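The text states that the three angles come from MediaPipe Face Mesh but does not detail the computation. One common route, shown here only as an assumed sketch, is to first recover a 3 × 3 head rotation matrix (for example with OpenCV's `cv2.solvePnP` followed by `cv2.Rodrigues`) and then decompose it into Euler angles. The convention below, R = Rz(roll) · Ry(yaw) · Rx(pitch) in a camera frame with x pointing right, y down, and z forward, is an assumption; other conventions change the formulas.

```python
import numpy as np


def rot_x(a):
    """Rotation by angle a (radians) about the x axis (pitch)."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[1, 0, 0], [0, c, -s], [0, s, c]])


def rot_y(b):
    """Rotation by angle b (radians) about the y axis (yaw)."""
    c, s = np.cos(b), np.sin(b)
    return np.array([[c, 0, s], [0, 1, 0], [-s, 0, c]])


def rot_z(g):
    """Rotation by angle g (radians) about the z axis (roll)."""
    c, s = np.cos(g), np.sin(g)
    return np.array([[c, -s, 0], [s, c, 0], [0, 0, 1]])


def head_angles(R):
    """Decompose R = rot_z(roll) @ rot_y(yaw) @ rot_x(pitch) into
    (yaw, pitch, roll) in degrees; valid while |yaw| < 90 degrees."""
    R = np.asarray(R, dtype=float)
    yaw = np.arctan2(-R[2, 0], np.hypot(R[0, 0], R[1, 0]))
    pitch = np.arctan2(R[2, 1], R[2, 2])
    roll = np.arctan2(R[1, 0], R[0, 0])
    return tuple(float(v) for v in np.degrees([yaw, pitch, roll]))
```

Composing a rotation from known angles and decomposing it again, as in `head_angles(rot_z(r) @ rot_y(y) @ rot_x(p))`, is a quick way to check that the chosen convention is applied consistently.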

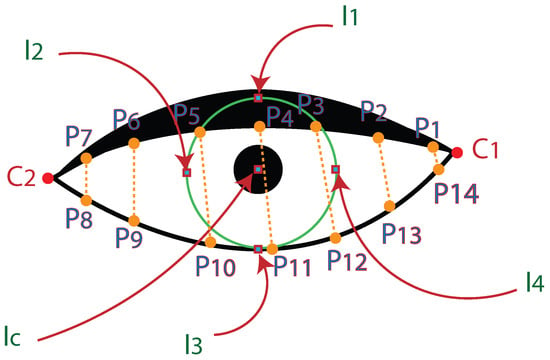

3.2. Eye Blinking Rate Detection

The main objective of this section is to compute a normalized eye blinking rate from the eye landmarks detected with MediaPipe Face Mesh. As shown in Figure 4, the Iris Landmark Model [36] of MediaPipe Face Mesh detects 14 points on the upper and lower eyelids, 2 points at the eye corners, and 5 points on the iris that define the iris edges and center. In this step, we measure 7 distances across the eye to estimate the blinking rate. Normalizing this rate, which is fed to the model together with the pretrained MobileNetV3 features, also speeds up training.

Figure 4.

Eyelids and iris landmarks for MediaPipe Face Mesh.

When the eyes are open, the distance between the upper and lower eyelid feature points is relatively large; when the eyes are closed, it becomes smaller. The blinking rate is therefore calculated from the distances between the eyelid feature points. Figure 4 shows the eye and iris landmarks for an open eye. The eye blinking rate is calculated with the following equation:

where the distance between two paired eyelid feature points is the Euclidean distance computed from their X and Y coordinates, as shown in Figure 4.
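Because the blinking-rate equation is not reproduced in the text, the sketch below only illustrates one plausible form, in the spirit of the widely used eye aspect ratio: it assumes that the mean of the 7 vertical eyelid distances is divided by the eye width (the corner-to-corner distance). The point pairing, this normalization, and the blink threshold in `count_blinks` are assumptions, not the values used in this work.

```python
import numpy as np


def euclidean(p, q):
    """Euclidean distance between two 2D points given as (X, Y)."""
    return float(np.hypot(p[0] - q[0], p[1] - q[1]))


def blink_ratio(upper, lower, corner_a, corner_b):
    """Hypothetical normalized eye-openness ratio.

    upper, lower: sequences of (X, Y) eyelid points, upper[i] paired with lower[i]
    (7 pairs for the 14 eyelid landmarks). Dividing the mean vertical distance by
    the eye width makes the ratio independent of the face's distance from the camera.
    """
    if len(upper) != len(lower):
        raise ValueError("upper and lower eyelids must have the same number of points")
    vertical = np.mean([euclidean(u, l) for u, l in zip(upper, lower)])
    return float(vertical / euclidean(corner_a, corner_b))


def count_blinks(ratios, threshold=0.15):
    """Count open-to-closed transitions in a per-frame ratio sequence
    (the threshold is a hypothetical value)."""
    closed = np.asarray(ratios) < threshold
    # A blink starts where the eye goes from open (False) to closed (True).
    return int(np.sum(~closed[:-1] & closed[1:]))
```

Dividing the number of blinks by the duration of the analyzed window would then give a rate per second; the window length is another free parameter of this sketch.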

3.3. Iris Position According to Eye Corners

The position of the iris relative to the eye corners also provides information about how tired the driver is. When the driver's head faces the road but the driver looks to the left or right by moving only the eyes, this information can help the system make a more accurate decision. Consider the iris center and the two eye corners. The eye position is calculated using Equations (3) and (4):

The Euclidean distance between two points is given by Equation (2). The three distances used here are calculated as follows:
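As an assumed illustration (Equations (3) and (4) are not reproduced in the text), the iris position can be summarized by the distances from the iris center to each eye corner and by the ratio of the first distance to the eye width. The thresholds in `gaze_side` are hypothetical.

```python
import numpy as np


def iris_position(iris_center, corner_a, corner_b):
    """Return the two iris-to-corner distances and a normalized position.

    For an iris lying on the line between the corners, the ratio is 0.5 when
    it is centered, smaller toward corner_a, and larger toward corner_b."""
    c = np.asarray(iris_center, dtype=float)
    a = np.asarray(corner_a, dtype=float)
    b = np.asarray(corner_b, dtype=float)
    d_a = float(np.linalg.norm(c - a))    # Euclidean distance to the first corner
    d_b = float(np.linalg.norm(c - b))    # Euclidean distance to the second corner
    width = float(np.linalg.norm(b - a))  # eye width, used for normalization
    return d_a, d_b, d_a / width


def gaze_side(ratio, low=0.42, high=0.58):
    """Map the normalized position to a coarse gaze label (hypothetical thresholds)."""
    if ratio < low:
        return "toward_a"
    if ratio > high:
        return "toward_b"
    return "center"
```

Normalizing by the eye width keeps the position comparable when the driver moves closer to or farther from the camera.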

3.4. Iris and Its Surroundings Features Extraction

Driver fatigue detection is a complex problem that requires multiple analyses. Fatigue should be detected before it reaches its final stages so that as little damage as possible is done. For this reason, our general model (Figure 1) divides the driver's drowsiness state into several levels, from 1 to 5. To estimate these levels, our system is fed with several data streams describing the driver's eyes. Each stream is independent of the others and makes it possible to identify different eye blinking states.

In this section, we focus on analyzing the iris and its surroundings. As a pre-processing step for the inputs of our MobileNetV3 model, we segment and normalize the iris, which makes it possible to extract its characteristics. Normalizing the image properly before feeding it to the deep learning model yields better results and speeds up training and testing.

The iris is not a perfect circle, so in our work we treat it as an ellipse for better segmentation. The ellipse of the iris can be calculated from 2 boundary points and the iris center, all of which are detected by MediaPipe Face Mesh as explained previously. Suppose that the center of the iris and two points on the ellipse surrounding the iris are known. Let the equation of the ellipse be the following:

where the first two variables are the coordinates of a point on the ellipse, a and b are its semi-axes, and the last two are the coordinates of its center. Applying Equation (8) to the two boundary points, we obtain the following equations:

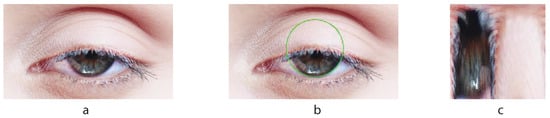

We now have a system of equations in 4 unknowns, a, b, and the two center coordinates, which are obtained by substituting the coordinates of the iris center and of the two boundary points into Equation (9). The two semi-axes of the ellipse are thus calculated, which determines the outer contour of the iris. Figure 5 shows several examples of driver iris detection.

Figure 5.

Examples of iris detection with our proposed method: (a) iris in the center, (b) iris near the eyelid corner, (c) iris during eye blinking.
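Assuming the standard axis-aligned form of Equation (8), (x − x0)²/a² + (y − y0)²/b² = 1 with center (x0, y0), the system becomes linear in u = 1/a² and v = 1/b² once the center is known. The sketch below solves it for the two boundary points; the symbol names are ours and are not taken from the paper.

```python
import numpy as np


def ellipse_axes(center, p1, p2):
    """Solve (x - x0)^2 / a^2 + (y - y0)^2 / b^2 = 1 for the semi-axes a and b,
    given the ellipse center and two points on its boundary."""
    x0, y0 = center
    # One row per boundary point: [(x - x0)^2, (y - y0)^2] . [u, v] = 1
    A = np.array([[(p[0] - x0) ** 2, (p[1] - y0) ** 2] for p in (p1, p2)], dtype=float)
    u, v = np.linalg.solve(A, np.ones(2))  # u = 1/a^2, v = 1/b^2
    if u <= 0 or v <= 0:
        raise ValueError("points are not consistent with an axis-aligned ellipse around this center")
    return float(1.0 / np.sqrt(u)), float(1.0 / np.sqrt(v))
```

The two points must not be mirror images of each other about the ellipse axes; otherwise the two rows are identical and `np.linalg.solve` raises `LinAlgError`.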

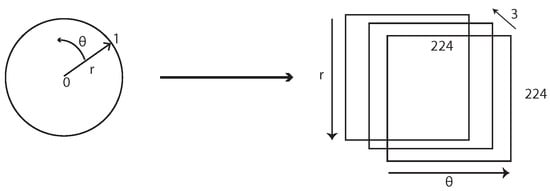

For the MobileNetV3 model to work properly, it is better to standardize the size and shape of the localized iris. In other words, a uniform representation must be defined for any localized iris, including the part of the eyelids that covers it when the person blinks. This representation should account for the various geometric and colorimetric distortions that an iris image can exhibit. These deformations are often due to differing acquisition conditions, the pose of the subject, and the behavior of the iris in response to its environment. The iris is often deformed, but this can be corrected by geometric normalization, whose goal is, among other things, to establish an alignment that makes subsequent processing easier. To this end, our system uses the Daugman technique [37], which linearly unrolls the iris ring into a rectangular image. This corresponds to a transformation from the Cartesian coordinate system to the polar coordinate system (Figure 6) and is applied to each of the three color matrices (red, green, and blue).

Figure 6.

Our adopted technique for iris normalization.

The normalized iris image is automatically scaled to 224 × 224 × 3 using the two coordinate-mapping equations of this transformation, applied to each of the 3 color matrices.

The boundary point is located on the iris–sclera contour in the same direction (Figure 6), and r and θ are the polar coordinates. After segmentation and normalization, the iris image is divided into two distinct parts: one containing the iris's own properties, and the other containing the eyelashes and eyelids, as shown in Figure 7. The second part is informative because a driver's blinking varies according to how tired they are.

Figure 7.

The proposed iris localization and normalization method: (a) original eye image, (b) iris localization, (c) iris normalization.

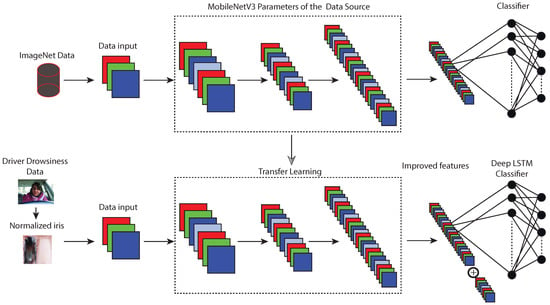
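A minimal NumPy sketch of this unwrapping is given below. It assumes that the inner boundary collapses to the iris center (no pupil boundary is used), that the outer boundary is the axis-aligned ellipse estimated above, and that nearest-neighbor sampling is sufficient; Daugman's original model maps between the pupil and iris boundaries and usually interpolates. Rows sample r from 0 to 1, columns sample θ over one full turn, and indexing all channels at once applies the same mapping to the red, green, and blue matrices.

```python
import numpy as np


def rubber_sheet(image, center, a, b, out_h=224, out_w=224):
    """Unwrap an elliptical iris region into an out_h x out_w x C rectangle.

    Rows correspond to the normalized radius r in [0, 1] and columns to the
    angle theta in [0, 2*pi); each output pixel copies the nearest input pixel."""
    img = np.asarray(image)
    h, w = img.shape[:2]
    x0, y0 = center
    r = np.linspace(0.0, 1.0, out_h)[:, None]                             # (out_h, 1)
    theta = np.linspace(0.0, 2 * np.pi, out_w, endpoint=False)[None, :]   # (1, out_w)
    # Point on the outer (iris-sclera) ellipse in direction theta
    x_outer = x0 + a * np.cos(theta)
    y_outer = y0 + b * np.sin(theta)
    # Daugman-style linear mapping x = (1 - r) * x_inner + r * x_outer,
    # with the inner boundary collapsed to the center (assumption)
    xs = (1 - r) * x0 + r * x_outer
    ys = (1 - r) * y0 + r * y_outer
    cols = np.clip(np.rint(xs).astype(int), 0, w - 1)
    rows = np.clip(np.rint(ys).astype(int), 0, h - 1)
    return img[rows, cols]  # fancy indexing keeps every color channel: (out_h, out_w, C)
```

Producing the 224 × 224 output directly from the polar sampling avoids a separate resizing step before the image is passed to MobileNetV3.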

3.5. MobileNetV3 Transfer Learning for Iris Features Extraction

Transfer learning is one way of reusing deep learning models; multitask learning and handling concept drift are others. Transfer learning has become increasingly popular in the deep learning community because training deep learning models takes considerable time and effort, and the datasets they require are large and difficult to assemble. Transfer learning works best when the features learned on the first task generalize to other situations: one network is trained on one dataset and task, and the features it learns are then used to train a second network on a different dataset and task.

The knowledge acquired through transfer learning is not limited to the original task and can be reused in the new one. We apply transfer learning to our predictive modeling problem. The two most common transfer learning approaches are the "develop model" approach and the "pretrained model" approach. For image classification, many high-performing models have been built and evaluated on ImageNet, the dataset used in the ImageNet Large Scale Visual Recognition Challenge. As a result, the design and training of convolutional neural networks have progressed considerably, and many of these models have been released under open-source licenses. They provide a solid basis for transfer learning in computer vision tasks, because the features they have learned allow them to recognize recurring patterns in images. These models still perform well on the original tasks they were designed for and remain among the best-performing models available. Figure 8 shows our transfer learning model.

Figure 8.

Proposed transfer learning model for driver fatigue detection.

Pretrained model weights are easy to use because they are open source and can be downloaded for free. Deep learning libraries such as Keras provide these weights, which can then be loaded into the same model architecture. We used transfer learning with the MobileNetV3 architecture to extract the most informative features possible from the training dataset. Having been trained on a large set of images, this architecture has a strong representation of low-level features, such as edges, rotations, lighting, and patterns, that can be used to extract deep features from new, noisy images. Such models are therefore well suited to extracting the desired features through transfer learning.

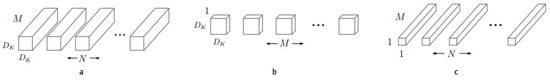

MobileNet [38] is a widely used CNN-based approach for smartphone applications and embedded devices, which makes it particularly helpful for monitoring the state of drivers in case fatigue sets in. Its main idea for reducing computational cost is depthwise separable convolution. The high computational cost of the classic CNN design comes from standard convolution, in which every filter spans all input channels, so that filtering and channel combination are performed in a single step. In MobileNet, depthwise convolution applies a single filter to each input channel, and pointwise convolution then uses a 1 × 1 convolution to form a linear combination of the depthwise-layer outputs. Figure 9 illustrates this factorization, which drastically reduces both computation time and model size. Consequently, the model is well suited to real-time systems, portable devices, and embedded systems.

Figure 9.

Standard and MobileNet [38] filters in CNN: (a) standard CNN’s filters, (b) depthwise convolution, (c) separable depthwise filter.
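To make the saving concrete, the multiply-add counts given in the MobileNet paper [38] can be computed directly. A standard convolution with a D_K × D_K kernel, M input channels, N output channels, and a D_F × D_F output map costs D_K·D_K·M·N·D_F·D_F, whereas the depthwise-plus-pointwise factorization costs D_K·D_K·M·D_F·D_F + M·N·D_F·D_F, a ratio of 1/N + 1/D_K². The layer sizes in the example are arbitrary.

```python
def standard_conv_cost(dk, m, n, df):
    """Multiply-adds of a standard dk x dk convolution, m -> n channels, df x df output."""
    return dk * dk * m * n * df * df


def separable_conv_cost(dk, m, n, df):
    """Multiply-adds of a depthwise separable convolution with the same shapes."""
    depthwise = dk * dk * m * df * df  # one dk x dk filter per input channel
    pointwise = m * n * df * df        # 1 x 1 convolution mixing the m channels into n
    return depthwise + pointwise


if __name__ == "__main__":
    dk, m, n, df = 3, 32, 64, 112  # arbitrary example layer
    std = standard_conv_cost(dk, m, n, df)
    sep = separable_conv_cost(dk, m, n, df)
    print(std, sep, sep / std)  # the ratio is 1/n + 1/dk**2
```

With 3 × 3 kernels the separable version needs roughly 8 to 9 times fewer operations, and the saving grows with the number of output channels.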

Two variants of MobileNetV3, MobileNetV3-Large and MobileNetV3-Small, were presented in [33]. As the successor to the MobileNetV2 framework [39], MobileNetV3 improves on its predecessor in terms of both latency and accuracy. For instance, MobileNetV3-Large reduces latency by 20% while improving accuracy by 3.2% compared with MobileNetV2, and MobileNetV3-Small achieves 6.6% higher accuracy at comparable latency. For these reasons, we chose MobileNetV3-Large for our transfer learning and fine-tuning model. MobileNetV3 was designed with a neural architecture search (NAS) approach that finds the best possible network architecture and kernel size for the depthwise convolutions.

MobileNetV3 is built from the following components: a 3 × 3 depthwise convolutional kernel followed by batch normalization and an activation function, then a 1 × 1 (pointwise) convolution that computes linear combinations and extracts feature maps within the depthwise separable convolutional layer. These layers are organized into bottleneck blocks with residual skip connections, and a global average pooling layer produces a reduced feature map. Finally, the network uses the h-swish activation function together with the Rectified Linear Unit (ReLU) activation function.
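The h-swish activation mentioned above is a piecewise-linear approximation of swish, defined in the MobileNetV3 paper as $\text{h-swish}(x) = x \cdot \text{ReLU6}(x + 3)/6$. The short Python sketch below implements it next to the smooth swish $x \cdot \sigma(x)$ for comparison; it illustrates the formula and is not code from our pipeline.

```python
import math


def relu6(x: float) -> float:
    """ReLU clipped at 6."""
    return min(max(x, 0.0), 6.0)


def hard_sigmoid(x: float) -> float:
    """Piecewise-linear stand-in for the logistic sigmoid."""
    return relu6(x + 3.0) / 6.0


def hard_swish(x: float) -> float:
    """h-swish(x) = x * ReLU6(x + 3) / 6."""
    return x * hard_sigmoid(x)


def swish(x: float) -> float:
    """Smooth swish, x * sigmoid(x), shown only for comparison."""
    return x / (1.0 + math.exp(-x))


if __name__ == "__main__":
    for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
        print(f"x={x:+.1f}  swish={swish(x):+.4f}  h-swish={hard_swish(x):+.4f}")
```

Because h-swish uses only additions, clipping, and multiplication, it avoids evaluating an exponential, which is the reason MobileNetV3 prefers it on mobile hardware.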

The inverted residual block can be broken down into the following smaller blocks. The first block uses 1 × 1 expansion and projection convolutional layers around a 3 × 3 depthwise convolutional kernel, which makes it possible to learn more sophisticated representations while reducing the model’s computational load. The second block combines a depthwise separable convolutional layer with a squeeze-and-excite block, which lets important features be selected channel by channel, and a residual skip connection that adds the block’s input to its output when their shapes match.
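As a minimal NumPy sketch of the channel-wise selection performed by a squeeze-and-excite block, the function below averages each channel of an $H \times W \times C$ feature map, passes the result through a small two-layer bottleneck, and rescales the channels with a hard sigmoid, as in MobileNetV3. The weight shapes, the reduction ratio of 4, and the random parameters in the usage example are illustrative assumptions; in a real network these weights are learned.

```python
import numpy as np


def hard_sigmoid(x):
    """MobileNetV3-style piecewise-linear sigmoid: ReLU6(x + 3) / 6."""
    return np.clip(x + 3.0, 0.0, 6.0) / 6.0


def squeeze_excite(feature_map, w_reduce, b_reduce, w_expand, b_expand):
    """Rescale the channels of an (H, W, C) feature map.

    w_reduce: (C, C // r), w_expand: (C // r, C), where r is the reduction ratio.
    """
    squeezed = feature_map.mean(axis=(0, 1))                   # squeeze: (C,)
    hidden = np.maximum(squeezed @ w_reduce + b_reduce, 0.0)   # ReLU bottleneck
    scale = hard_sigmoid(hidden @ w_expand + b_expand)         # excite: (C,) in [0, 1]
    return feature_map * scale                                 # broadcast over H and W


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    h, w, c, r = 7, 7, 16, 4  # toy sizes; r = 4 is an assumed reduction ratio
    x = rng.normal(size=(h, w, c))
    params = (rng.normal(0, 0.1, (c, c // r)), np.zeros(c // r),
              rng.normal(0, 0.1, (c // r, c)), np.zeros(c))
    y = squeeze_excite(x, *params)
    print(y.shape)  # (7, 7, 16)
```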

3.6. Deep LSTM Network for Multilevel Fatigue Classification

When building fatigue detection models, much of the prior research neglected the time factor and the effect of the driver’s fatigue in earlier time periods, which resulted in low detection rates. Long periods of driving may cause fatigue, which only worsens if the driver keeps going. Therefore, we use LSTM [40] to identify driver fatigue.

The primary benefit of LSTM is its ability to process sequence data. In a typical feedforward neural network, successive inputs are processed independently, so the result at one instant has no bearing on the result at the next. In an LSTM, the current output of a sequence is linked to the previous output, because the input of the hidden layer includes both the output of the input layer and the hidden-layer output from the previous time step. Moreover, unlike the standard recurrent neural network (RNN), the LSTM largely avoids the vanishing gradient problem. Because its gating units allow long-term dependencies to be captured, the LSTM typically learns faster and achieves better accuracy on long sequences.

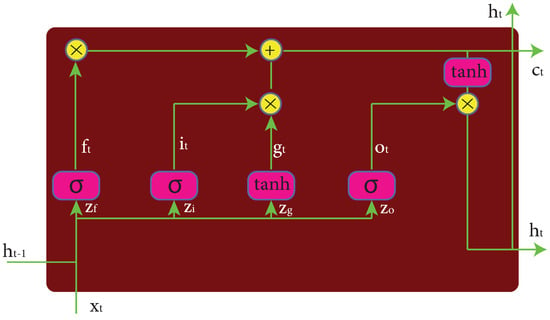

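At each time step $t$, the LSTM cell receives the input $x_t$ and the hidden state $h_{t-1}$ from the previous time step. Because the LSTM maintains a cell state, it also carries over the cell state $c_{t-1}$ from the previous time step. These inputs are weighted to obtain the total input $z_t$ described by Equation (12):

$$z_t = W x_t + U h_{t-1} + b \tag{12}$$

where $W$ and $U$ are the input and recurrent weight matrices and $b$ is the bias vector. The four components of $z_t$, denoted $z_t^{i}$, $z_t^{f}$, $z_t^{o}$, and $z_t^{g}$, are separated and used in the following order:

$$i_t = \sigma\left(z_t^{i}\right) \tag{13}$$

$$f_t = \sigma\left(z_t^{f}\right) \tag{14}$$

$$o_t = \sigma\left(z_t^{o}\right) \tag{15}$$

$$g_t = \tanh\left(z_t^{g}\right) \tag{16}$$

where $i_t$, $f_t$, $o_t$, and $g_t$ are the input gate, forget gate, output gate, and quasi-cell state input, respectively, $\sigma$ is the sigmoid activation function, and $\tanh$ is the hyperbolic tangent function.

The cell state and the hidden state are given by the following equations, where $\odot$ denotes element-wise multiplication:

$$c_t = f_t \odot c_{t-1} + i_t \odot g_t \tag{17}$$

$$h_t = o_t \odot \tanh\left(c_t\right) \tag{18}$$

Figure 10 displays the LSTM’s structure.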

Figure 10.

LSTM’s cell structure.
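The following NumPy sketch transcribes the LSTM update described above: the total input $z$ is split into the input, forget, output, and quasi-cell components in that order, followed by the cell-state and hidden-state updates. Stacking two such layers, where the hidden state of the first layer is the input of the second, mirrors the two-hidden-layer network used in this work. The weight shapes and the toy sizes in the usage example are assumptions for illustration, and the final five-class output layer is omitted.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM step. W: (4H, D), U: (4H, H), b: (4H,).

    Gate order inside z: input, forget, output, quasi-cell input.
    """
    z = W @ x_t + U @ h_prev + b           # total input, Eq. (12)
    z_i, z_f, z_o, z_g = np.split(z, 4)
    i = sigmoid(z_i)                       # input gate, Eq. (13)
    f = sigmoid(z_f)                       # forget gate, Eq. (14)
    o = sigmoid(z_o)                       # output gate, Eq. (15)
    g = np.tanh(z_g)                       # quasi-cell state input, Eq. (16)
    c_t = f * c_prev + i * g               # cell state update
    h_t = o * np.tanh(c_t)                 # hidden state update
    return h_t, c_t


def run_stacked_lstm(sequence, layers):
    """Run a stack of LSTM layers over a (T, D) sequence.

    layers: list of (W, U, b); each layer's hidden state feeds the next layer.
    Returns the last layer's hidden state at the final time step.
    """
    states = [(np.zeros(U.shape[1]), np.zeros(U.shape[1])) for _, U, _ in layers]
    for x_t in sequence:
        inp = x_t
        for k, (W, U, b) in enumerate(layers):
            h, c = lstm_step(inp, states[k][0], states[k][1], W, U, b)
            states[k] = (h, c)
            inp = h
    return states[-1][0]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d, h1, h2, t = 16, 8, 8, 30  # toy sizes, not the paper's hyperparameters

    def init(in_dim, hid):
        return (rng.normal(0, 0.1, (4 * hid, in_dim)),
                rng.normal(0, 0.1, (4 * hid, hid)),
                np.zeros(4 * hid))

    layers = [init(d, h1), init(h1, h2)]
    print(run_stacked_lstm(rng.normal(size=(t, d)), layers).shape)  # (8,)
```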

In our fatigue detection setting, we use a deep LSTM network with two hidden layers (this choice is justified in the experimentation section). The output descriptors of our MobileNetV3-large model are combined with the previously calculated features: the 3D head pose angles yaw, pitch, and roll; the two distances that locate the iris relative to the eye corners; and the blink percentage calculated at each iteration. Figure 1 summarizes all the steps described above, which together yield a fatigue detection system with five levels: awake, a little tired, tired, very tired, and hypovigilance.
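To illustrate how per-frame descriptors could be assembled into LSTM input sequences, the NumPy sketch below concatenates a CNN feature vector with the six handcrafted values (yaw, pitch, roll, two iris-to-eye-corner distances, and a blink measure) and then cuts the frame sequence into fixed-length windows. The function names, the distance names `d1` and `d2`, the CNN feature size, and the window and stride values are hypothetical choices, not parameters reported in this paper.

```python
import numpy as np


def frame_descriptor(cnn_features, yaw, pitch, roll, d1, d2, blink):
    """Concatenate CNN features with six handcrafted per-frame values.

    d1 and d2 are hypothetical names for the two iris-to-eye-corner distances.
    """
    handcrafted = np.array([yaw, pitch, roll, d1, d2, blink], dtype=np.float32)
    return np.concatenate([np.asarray(cnn_features, dtype=np.float32), handcrafted])


def make_windows(frames, window, stride=1):
    """Slice an (N, D) frame sequence into (num_windows, window, D) LSTM inputs."""
    frames = np.asarray(frames)
    if len(frames) < window:
        return np.empty((0, window, frames.shape[1]), dtype=frames.dtype)
    starts = range(0, len(frames) - window + 1, stride)
    return np.stack([frames[s:s + window] for s in starts])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    cnn_dim = 32  # placeholder size; the real one depends on the MobileNetV3-large head
    frames = np.stack([frame_descriptor(rng.normal(size=cnn_dim), *rng.normal(size=6))
                       for _ in range(90)])
    sequences = make_windows(frames, window=30, stride=15)
    print(sequences.shape)  # (5, 30, 38)
```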

4. Experimentation and Analysis

The suggested method was tested on a computer with a 2.9 GHz Intel Core i7-10700 vPro processor, 32 GB of RAM, and a 1 TB solid-state drive. We first describe the experimental corpus in detail. We then conduct a series of experiments, compute the metrics used to test the validity of our model, and report the results in detail. The entire model was developed in the Python programming language.

4.1. Corpus and Experimental Data

To identify the various levels of driver fatigue in our experiment, we used the databases MiraclHB [41], YawDD [42], and DEAP [43].

The MiraclHB database [41] was collected in our lab under different lighting conditions. Each video shows a person sitting 30 to 50 cm in front of a Sony DSC-W510 digital camera. The recordings were made using a Jetion-style steering wheel, pedals, and a computer running driving simulator software. To study drowsiness, the participants were asked to stay awake until midnight. In our simulation, we followed the same guidelines as Picot et al. [44]. All videos were recorded in AVI format at 30 fps with a resolution of 640 × 480. Picot et al. [44] carried out an EEG and video data acquisition campaign to validate their hypovigilance detection algorithms. Their video was recorded with a 200 fps camera, and hypovigilance was assessed using a 10 min psychomotor alertness task, which measures an individual’s reaction time to a specific visual stimulus. The campaign involved six subjects. Each subject was recorded twice: once at the end of the afternoon (around 6 p.m.) and again around 1 a.m. The first recording served as a reference for the awake state, and the subjects were asked to stay awake until 1 a.m. so that states of hypovigilance could be observed.

Abtahi et al. [42] set up cameras in two separate locations to collect data from multiple positions. For the YawDD database, one camera was placed beneath the front mirror and another on the dashboard. Both sets of videos were recorded with a Canon A720IS digital camera at 640 × 480 pixels and 30 fps. To create a more realistic environment, the participants sat in a car with their seat belts fastened. The dataset includes videos of 57 male and 50 female volunteers of different ages, nationalities, and facial traits, so the 107 participants look very different from one another. They took part with and without eyeglasses; men appear with and without beards and mustaches, women with and without scarves, and all with varied hairstyles and clothing.

Koelstra et al. [43] present a multimodal dataset for the analysis of human affective states. The physiological and electroencephalographic (EEG) signals of 32 participants were recorded as they watched 40 separate 1 min music videos. The participants rated each video on scales measuring arousal, valence, like/dislike, dominance, and familiarity. For 22 of the 32 participants, frontal face video was also recorded with a camera capturing 720p video at 50 fps. Researchers analyze these recordings to determine which factors contribute to fatigue detection.

In our experiment, the databases described above were divided into three parts: 10% for testing and 90% for training and validation. During training, 10% of the training portion is further held out for validation.
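This split works out to roughly 81% training, 9% validation, and 10% test data. A minimal Python sketch of such a three-way split is shown below; the shuffling seed and the idea of splitting a list of video identifiers (so that frames from the same video stay in one subset) are assumptions for illustration rather than details reported in this paper.

```python
import random


def three_way_split(items, test_frac=0.10, val_frac=0.10, seed=0):
    """Hold out test_frac of the items for testing, then val_frac of the
    remainder for validation; the rest is used for training."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_test = round(len(items) * test_frac)
    test, rest = items[:n_test], items[n_test:]
    n_val = round(len(rest) * val_frac)
    val, train = rest[:n_val], rest[n_val:]
    return train, val, test


if __name__ == "__main__":
    video_ids = [f"video_{i:04d}" for i in range(1000)]  # hypothetical identifiers
    train, val, test = three_way_split(video_ids)
    print(len(train), len(val), len(test))  # 810 90 100
```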

4.2. Evaluation Metrics

We used Recall (Equation (19)), Precision (Equation (20)), F-measure (Equation (21)), and Accuracy (Equation (22)) to evaluate our experiments, which covered face and eye detection as well as our multilevel fatigue detection model:

$$\text{Recall} = \frac{TP}{TP + FN} \tag{19}$$

$$\text{Precision} = \frac{TP}{TP + FP} \tag{20}$$

$$\text{F-measure} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{21}$$

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{22}$$

where TP, TN, FP, and FN denote the numbers of True Positive, True Negative, False Positive, and False Negative samples, respectively. We measure the effectiveness of the suggested approach by computing these evaluation metrics for the feature extraction, training, and testing stages. These measurements show how useful the required pre-processing is and how it affects the proposed model as a whole, which is essential for obtaining good results and warning drivers before they fall asleep.
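For the five-level classification, the counts TP, TN, FP, and FN can be obtained per class in a one-vs-rest fashion from a confusion matrix. The plain-Python sketch below applies Equations (19) to (22) this way and adds a macro-averaged F-measure; the macro averaging is an assumption, since the averaging scheme is not stated here.

```python
LEVELS = ["awake", "a little tired", "tired", "very tired", "hypovigilance"]


def metrics_from_counts(tp, tn, fp, fn):
    """Recall, Precision, F-measure, and Accuracy from raw counts."""
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f_measure = (2 * precision * recall / (precision + recall)
                 if precision + recall else 0.0)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    return {"recall": recall, "precision": precision,
            "f_measure": f_measure, "accuracy": accuracy}


def one_vs_rest_counts(confusion, k):
    """(TP, TN, FP, FN) for class k; confusion[i][j] counts true class i predicted as j."""
    total = sum(sum(row) for row in confusion)
    tp = confusion[k][k]
    fn = sum(confusion[k]) - tp
    fp = sum(row[k] for row in confusion) - tp
    tn = total - tp - fn - fp
    return tp, tn, fp, fn


def overall_accuracy(confusion):
    """Fraction of all samples on the diagonal."""
    total = sum(sum(row) for row in confusion)
    return sum(confusion[i][i] for i in range(len(confusion))) / total


def macro_f_measure(confusion):
    """Unweighted mean of the per-class F-measures."""
    scores = [metrics_from_counts(*one_vs_rest_counts(confusion, k))["f_measure"]
              for k in range(len(confusion))]
    return sum(scores) / len(scores)
```

For a 5 × 5 confusion matrix ordered as in `LEVELS`, `one_vs_rest_counts(cm, 4)` gives the counts for the hypovigilance class. Note that the per-class accuracy of Equation (22) differs from `overall_accuracy`, which counts every correct prediction across all five levels.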

4.3. Experiments for Face Detection

Face detection is an essential step in our system, because it determines the feature extraction results that serve as inputs to the deep LSTM network. We compared our MediaPipe Face Mesh detection method with two methods from the literature: Viola and Jones [45] and Dlib HOG [46]. Table 1 shows the results of these three approaches on the three databases selected for our experiments.

Table 1 shows that MediaPipe Face Mesh localizes faces robustly despite illumination changes and head rotations in all three datasets. The Viola and Jones and Dlib HOG methods have drawbacks: neither method can identify faces that have been rotated or tilted, and both are affected by occlusions and changes in illumination. This experiment therefore supports our choice of MediaPipe Face Mesh for face detection, which is a crucial step in the proposed model.

Table 1.

F-measure for face detection experiments using MiraclHB, YawDD, and DEAP databases.

4.4. Hyperparameter Tuning, Results, and Discussion

This second experiment builds a fine-tuned HyMobLSTM model (a hybrid of MobileNetV3-large and a deep LSTM network) for multilevel driver fatigue detection. The input layer of the deep LSTM network receives features extracted by MobileNetV3-large, enriched with iris position and blink rate features. Many works tune a model’s hyperparameters with the random-search technique, which requires defining a search space for each hyperparameter. We therefore use the following initial search ranges: 1 to 6 hidden layers, a learning rate between 0.0001 and 0.01, and 128, 256, 512, or 1024 neurons in each hidden LSTM layer. The network is trained with the Adam optimization algorithm to make the training procedure more effective, using a batch size of 50 and 100 epochs. The optimal hyperparameter values for our proposed model, found with the random-search strategy, are shown in Table 2.
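The random-search procedure can be sketched in plain Python as below, using the search ranges stated above. The `evaluate` callback is a hypothetical stand-in for training the HyMobLSTM model (for example with Adam, a batch size of 50, and 100 epochs) and returning a validation score; sampling the learning rate log-uniformly and the number of trials are assumptions, since the sampling scheme is not specified.

```python
import math
import random

# Search space from the text; log-uniform learning-rate sampling is an assumption.
HIDDEN_LAYERS = [1, 2, 3, 4, 5, 6]
UNITS = [128, 256, 512, 1024]
LR_RANGE = (1e-4, 1e-2)


def sample_config(rng: random.Random) -> dict:
    lo, hi = LR_RANGE
    return {
        "hidden_layers": rng.choice(HIDDEN_LAYERS),
        "units": rng.choice(UNITS),
        "learning_rate": 10 ** rng.uniform(math.log10(lo), math.log10(hi)),
    }


def random_search(evaluate, n_trials: int = 20, seed: int = 0):
    """Return the best configuration and its score (higher is better)."""
    rng = random.Random(seed)
    best_cfg, best_score = None, -math.inf
    for _ in range(n_trials):
        cfg = sample_config(rng)
        score = evaluate(cfg)  # hypothetical: train the model, return a validation score
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score


if __name__ == "__main__":
    # Toy objective standing in for real training; it does NOT model HyMobLSTM.
    def toy_evaluate(cfg):
        return -abs(cfg["hidden_layers"] - 2) - abs(math.log10(cfg["learning_rate"]) + 3)

    best, score = random_search(toy_evaluate, n_trials=50)
    print(best, round(score, 3))
```

Random search draws each trial independently, so its cost grows only with the number of trials rather than with the product of all grid sizes, which is the usual reason for preferring it over an exhaustive grid.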

Table 2.

Best hyperparameter values of the HyMobLSTM model found with the random-search technique.

The YawDD database was used for this second experiment with the fine-tuned HyMobLSTM model. YawDD contains videos recorded in an uncontrolled environment, which allows us to test our model’s sensitivity to lighting. Table 3 shows the results of this second experiment. These results are satisfactory but still need improvement, because at this stage the system has not yet been trained on the DEAP and MiraclHB databases.

Table 3.

Evaluation of the HyMobLSTM model in the fine-tuning experiment.

In the third and fourth experiments, we train our proposed model on the MiraclHB and DEAP databases, using the hyperparameters determined empirically in the second experiment described above. Precision, Recall, and F-measure all improved steadily over the course of the third and fourth experiments (see Table 4 and Table 5). This suggests that our model is better trained when the classes are distributed more similarly between the training and validation sets. It also validates the efficacy of our optimization approach in improving both the training procedure and the trained model. We also find that iris normalization played an important role in improving feature extraction by MobileNetV3-large. The deep LSTM network made it possible to detect hypovigilance states more precisely, because its classification is based on the driver’s condition over time, taking both the present and prior states into account. Another advantage of this work is multilevel fatigue detection: our goal is to alert the driver before the final fatigue level is reached.

Table 4.

Evaluation of the HyMobLSTM model using DEAP dataset.

Table 5.

Evaluation of the HyMobLSTM model using MiraclHB dataset.

As Table 6 shows, vigilance levels are often overlooked in the literature, despite their relevance for the early identification of hypovigilance situations. We also note that Arunasalam et al. [31] use an invasive approach that requires sensors to be installed to determine the driver’s fatigue state, and drivers dislike invasive methods.

Table 6.

Comparison of our approach to others in the literature.

When our suggested HyMobLSTM model is compared with other techniques in the literature, its higher accuracy (98.4%) stands out as a strong point in favor of our system. We attribute this value to several factors. First, MediaPipe Face Mesh was selected for face and eye localization, as well as for calculating the 3D head pose angles yaw, pitch, and roll, because of its very high success rates. Second, we normalized the iris using Daugman’s method [37] to obtain the optimal region of interest to feed into the pretrained MobileNetV3-large. Third, the output features of the pretrained MobileNetV3-large model were enriched with the calculated values: the three head pose angles, the two distances locating the iris relative to the eye corners, and the blink measure. Finally, experiments were performed to select the optimal hyperparameter values by random search, with the network trained using the Adam optimization algorithm. Our system is also characterized by fatigue detection at five levels, which makes it possible to alert drivers to their hypovigilance state before they fall asleep. Hossain et al. [27] proposed nine classes; however, these classes correspond to minor distractions rather than fatigue levels, and such distractions are not a major danger for drivers. Our work is also more robust than that of Xiao et al. [47]. Although Xiao et al. [47] reach an accuracy of 0.991, they distinguish only two vigilance levels. This limits their approach when the goal is to detect hypovigilance states before they set in, in order to keep the driver from falling asleep and to avoid road accidents.

5. Conclusions and Future Works

In this work, we proposed an approach based on transfer learning and a deep LSTM network to detect five levels of driver fatigue. Our work is distinguished by a pre-processing step that determines the regions of interest using Google’s MediaPipe Face Mesh method and normalizes the desired features. This step computes the head pose angles, the distances locating the iris relative to the eye corners, and the blink measure, which are concatenated with the features obtained by transfer learning with the MobileNetV3-large model. The resulting vectors describe the position of the iris relative to the eye corners; the blinking behavior; the features of the normalized iris area; and the yaw, pitch, and roll angles of head rotation. These vectors are then fed to the deep LSTM network with two hidden layers to classify the five target fatigue levels: awake, a little tired, tired, very tired, and hypovigilance. We selected the most efficient hyperparameters by random search, training the network with the Adam optimization algorithm, and evaluated the model on the three databases used in our experiments (YawDD, DEAP, and MiraclHB). The results show the robustness of our proposed HyMobLSTM model compared with existing work in the literature.

In future work, we plan to account for changing light conditions (for example, day and night) so that they do not limit our system. An infrared camera is one possible solution for evaluating our system in difficult lighting conditions. Detecting severe states of distraction and driver yawning are also interesting objectives for improving our system. Vehicle-based metrics are a longer-term perspective: measures such as lane position departures, steering wheel movement, and accelerator pedal pressure could be combined with behavioral measures to improve driver fatigue detection.

Author Contributions

All the authors contributed equally to this work. All authors have read and agreed to the published version of the manuscript.

Funding

This project was supported by the Deanship of Scientific Research at Prince Sattam Bin Abdulaziz University, under the research project PSAU-2022/01/19856.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgments

This project was supported by the Deanship of Scientific Research at Prince Sattam Bin Abdulaziz University, under the research project PSAU-2022/01/19856.

Conflicts of Interest

The authors declare that they have no conflict of interest.

References

- Sigari, M.-H.; Fathy, M.; Soryani, M. A Driver Face Monitoring System for Fatigue and Distraction Detection. Int. J. Veh. Technol. 2013, 2013, 1–11.

- Brandt, T.; Stemmer, R.; Rakotonirainy, A. Affordable visual driver monitoring system for fatigue and monotony. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics (SMC), The Hague, The Netherlands, 10–13 October 2004; pp. 6451–6456.

- Guo, Y.; Xia, Y.; Wang, J.; Yu, H.; Chen, R.-C. Real-Time Facial Affective Computing on Mobile Devices. Sensors 2020, 20, 870.

- Baddar, W.J.; Ro, Y.M. Mode Variational LSTM Robust to Unseen Modes of Variation: Application to Facial Expression Recognition. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence (AAAI), Honolulu, HI, USA, 26 January 2019; pp. 3215–3223.

- Kansizoglou, I.; Bampis, L.; Gasteratos, A. An Active Learning Paradigm for Online Audio-Visual Emotion Recognition. IEEE Trans. Affect. Comput. 2022, 13, 756–768.

- Picard, R.W. Affective Computing, 1st ed.; MIT Press: Cambridge, MA, USA, 1997; pp. 1–30.

- Deng, J.; Guo, J.; Xue, N.; Zafeiriou, S. ArcFace: Additive Angular Margin Loss for Deep Face Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4685–4694.

- Kansizoglou, I.; Bampis, L.; Gasteratos, A. Deep Feature Space: A Geometrical Perspective. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 6823–6838.

- Gochoo, M.; Rizwan, S.A.; Ghadi, Y.Y.; Jalal, A.; Kim, K. A Systematic Deep Learning Based Overhead Tracking and Counting System Using RGB-D Remote Cameras. Appl. Sci. 2021, 11, 5503.

- Cyganek, B.; Gruszczyński, S. Hybrid computer vision system for drivers’ eye recognition and fatigue monitoring. Neurocomputing 2014, 126, 78–94.

- Gou, C.; Wu, Y.; Wang, K.; Wang, F.; Ji, Q. A joint cascaded framework for simultaneous eye detection and eye state estimation. Pattern Recognit. 2017, 67, 23–31.

- Ibrahim, L.F.; Abulkhair, M. Using Haar classifiers to detect driver fatigue and provide alerts. Multimed. Tools Appl. 2014, 71, 1857–1877.

- Mandal, B.; Li, L.; Wang, G.; Lin, J. Towards detection of bus driver fatigue based on robust visual analysis of eye state. IEEE Trans. Intell. Transp. Syst. 2017, 18, 545–557.

- Song, F.; Tan, X.; Liu, X.; Chen, S. Eyes closeness detection from still images with multi-scale histograms of principal oriented gradients. Pattern Recognit. 2014, 47, 2825–2838.

- You, F.; Li, Y.H.; Huang, L.; Chen, K.; Zhang, R.H.; Xu, J.M. Monitoring drivers’ sleepy status at night based on machine vision. Multimed. Tools Appl. 2017, 76, 14869–14886.

- Zhao, L.; Wang, Z.; Zhang, G.; Qi, Y.; Wang, X. Eye state recognition based on deep integrated neural network and transfer learning. Multimed. Tools Appl. 2018, 76, 19415–19438.

- Anitha, J.; Mani, G.; Venkata Rao, K. Driver Drowsiness Detection Using Viola Jones Algorithm. In Smart Intelligent Computing and Applications; Suresh, S., Vikrant, B., Mohanty, J., Siba, K., Eds.; Springer: Singapore, 2020; pp. 583–592.

- Akrout, B.; Mahdi, W. Yawning detection by the analysis of variational descriptor for monitoring driver drowsiness. In Proceedings of the IEEE International Image Processing, Applications and Systems (IPAS), Hammamet, Tunisia, 5–7 November 2016; pp. 1–5.

- Omidyeganeh, M.; Shirmohammadi, S.; Abtahi, S.; Khurshid, A.; Farhan, M.; Scharcanski, J.; Hariri, B.; Laroche, D.; Martel, L. Yawning detection using embedded smart cameras. IEEE Trans. Instrum. Meas. 2016, 65, 570–582.

- Zhang, W.; Su, J. Driver yawning detection based on long short term memory networks. In Proceedings of the IEEE Symposium Series on Computational Intelligence (SSCI), Honolulu, HI, USA, 27 November–1 December 2017; pp. 1–5.

- Zhang, W.; Murphey, Y.L.; Wang, T.; Xu, Q. Driver yawning detection based on deep convolutional neural learning and robust nose tracking. In Proceedings of the IEEE International Joint Conference on Neural Networks (IJCNN), Killarney, Ireland, 12–17 July 2015; pp. 1–8.

- Wang, R.; Tong, B.; Jin, L. Monitoring mouth movement for driver fatigue or distraction with one camera. In Proceedings of the International IEEE Conference on Intelligent Transportation Systems, Washington, DC, USA, 3–6 October 2004; pp. 314–319.

- Torres, R.; Ohashi, O.; Pessin, G. A Machine-Learning Approach to Distinguish Passengers and Drivers Reading While Driving. Sensors 2019, 19, 3174.

- Benoit, A.; Bonnaud, L.; Caplier, A.; Ngo, P.; Lawson, L.; Trevisan, G.; Levacic, V.; Mancas, C.; Chanel, G. Multimodal focus attention and stress detection and feedback in an augmented driver simulator. Pers. Ubiquitous Comput. 2009, 13, 33–41.

- Yu, C.; Qin, X.; Chen, Y.; Wang, J.; Fan, C. DrowsyDet: A Mobile Application for Real-time Driver Drowsiness Detection. In Proceedings of the IEEE SmartWorld, Ubiquitous Intelligence & Computing, Advanced & Trusted Computing, Scalable Computing & Communications, Cloud & Big Data Computing, Internet of People and Smart City Innovation, Leicester, UK, 19–23 August 2019; pp. 425–432.

- Shih, T.H.; Hsu, C.T. Multistage Spatial-Temporal Network for Driver Drowsiness Detection. In Proceedings of the Computer Vision–ACCV 2016 Workshops (ACCV), Taipei, Taiwan, 20–24 November 2016; pp. 146–153.

- Hossain, M.; Rahman, M.; Islam, M.; Arnisha, A.; Uddin, M.; Bikash, P. Automatic driver distraction detection using deep convolutional neural networks. Intell. Syst. Appl. 2022, 14, 200075.

- Chen, L.; Xin, G.; Liu, Y.; Huang, J. Driver Fatigue Detection Based on Facial Key Points and LSTM. Secur. Commun. Netw. 2021, 2021, 5383573.

- Akrout, B.; Mahdi, W. A novel approach for driver fatigue detection based on visual characteristics analysis. J. Ambient. Intell. Human. Comput. 2023, 14, 527–552.

- Eraqi, H.; Abouelnaga, Y.; Saad, H.; Moustafa, N. Driver distraction identification with an ensemble of convolutional neural networks. J. Adv. Transp. 2019, 14, 1–12.

- Arunasalam, M.; Yaakoba, N.; Amir, A.; Elshaikh, M.; Azahar, N.-F. Real-Time Drowsiness Detection System for Driver Monitoring. IOP Conf. Ser. Mater. Sci. Eng. 2020, 767, 1–12.

- Kartynnik, Y.; Ablavatski, A.; Grishchenko, I.; Grundmann, M. Real-time Facial Surface Geometry from Monocular Video on Mobile GPUs. arXiv 2019, arXiv:1907.06724.

- Howard, A.; Sandler, M.; Chen, B.; Wang, W.; Chen, L.-C.; Tan, M.; Chu, G.; Vasudevan, V.; Zhu, Y.; Pang, R.; et al. Searching for MobileNetV3. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Bulat, A.; Tzimiropoulos, G. How Far are We from Solving the 2D & 3D Face Alignment Problem? (and a Dataset of 230,000 3D Facial Landmarks). In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1021–1030.

- Blanz, V.; Vetter, T. A morphable model for the synthesis of 3D faces. In Proceedings of the International Conference and Exhibition on Computer Graphics and Interactive Techniques (SIGGRAPH), New York, NY, USA, 1 July 1999; pp. 187–194.

- Ablavatski, A.; Vakunov, A.; Grishchenko, I.; Raveendran, K.; Zhdanovich, M. Real-time Pupil Tracking from Monocular Video for Digital Puppetry. arXiv 2020, arXiv:2006.11341.

- Daugman, J.G. High Confidence Visual Recognition of Persons by a Test of Statistical Independence. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 1148–1161.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. arXiv 2018, arXiv:1801.04381.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Akrout, B.; Mahdi, W. Spatio-temporal features for the automatic control of driver drowsiness state and lack of concentration. Mach. Vis. Appl. 2015, 26, 1–13.

- Abtahi, S.; Omidyeganeh, M.; Shirmohammadi, S.; Hariri, B. YawDD: A yawning detection dataset. In Proceedings of the 5th ACM Multimedia Systems Conference (MMSys), Singapore, 19 March 2014; pp. 24–28.

- Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.-S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A Database for Emotion Analysis Using Physiological Signals. IEEE Trans. Affect. Comput. 2012, 3, 18–31.

- Picot, A.; Charbonnier, S.; Caplier, A.; Vu, N.-S. Using Retina Modelling to Characterize Blinking: Comparison between EOG and Video Analysis. Mach. Vis. Appl. 2011, 23, 1195–1208.

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Kauai, HI, USA, 8–14 December 2001; p. I.

- Boyko, N.; Basystiuk, O.; Shakhovska, N. Performance Evaluation and Comparison of Software for Face Recognition, Based on Dlib and Opencv Library. In Proceedings of the IEEE Second International Conference on Data Stream Mining & Processing (DSMP), Lviv, Ukraine, 21–25 August 2018; pp. 478–482.

- Xiao, W.; Liu, H.; Ma, Z.; Chen, W.; Sun, C.; Shi, B. Fatigue Driving Recognition Method Based on Multi-Scale Facial Landmark Detector. Electronics 2022, 11, 4103.

- Shang, Y.; Yang, M.; Cui, J.; Cui, L.; Huang, Z.; Li, X. Driver Emotion and Fatigue State Detection Based on Time Series Fusion. Electronics 2022, 12, 26.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).