LazyRS: Improving the Performance and Reliability of High-Capacity TLC/QLC Flash-Based Storage Systems Using Lazy Reprogramming

Abstract

1. Introduction

2. Background

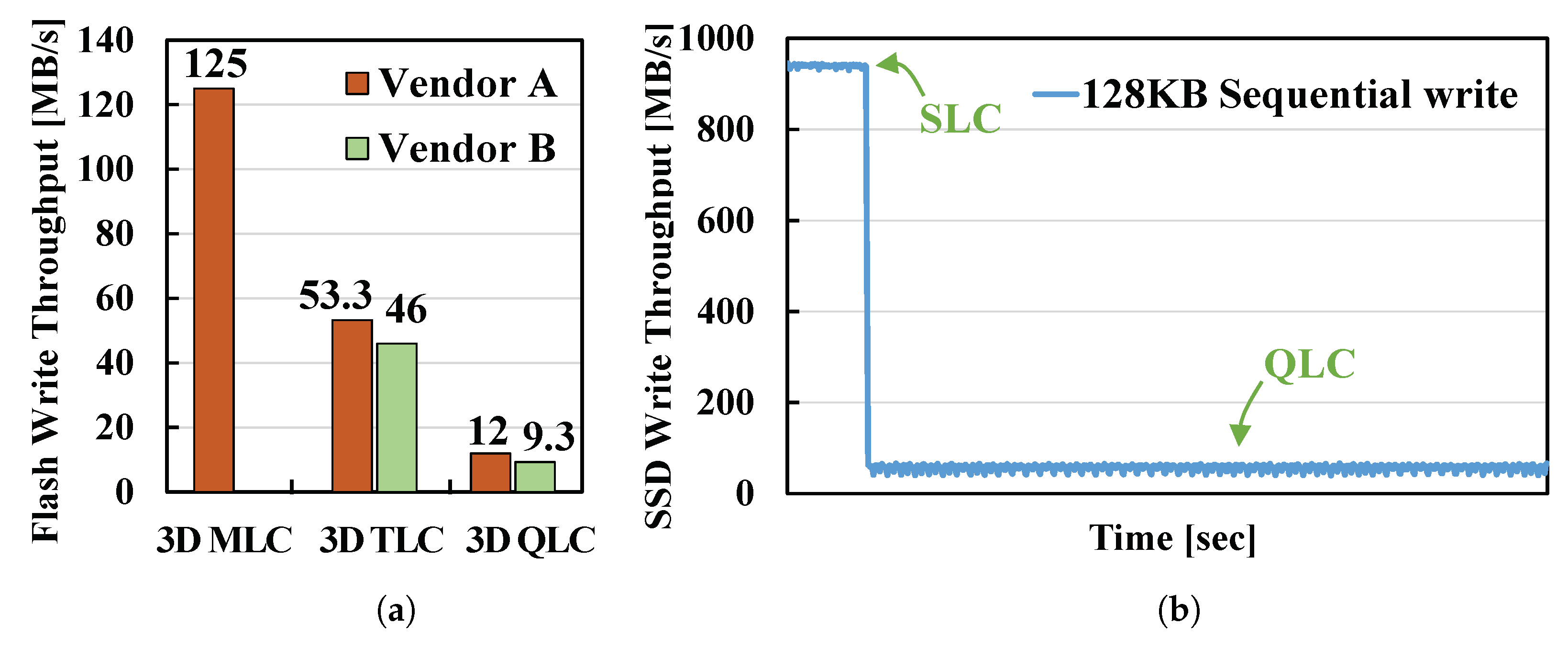

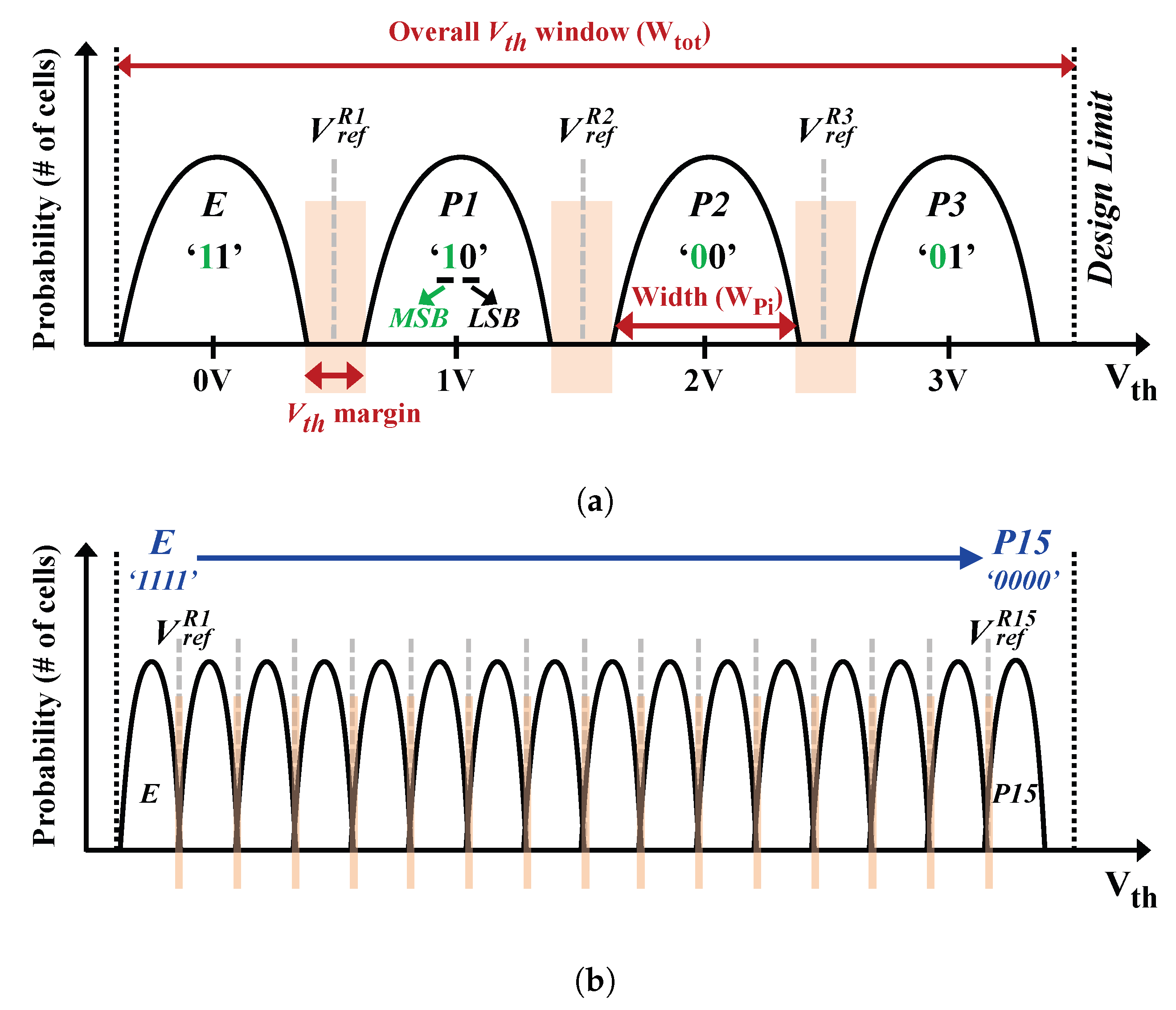

2.1. Multi-Level Flash Memory vs. Program Latency

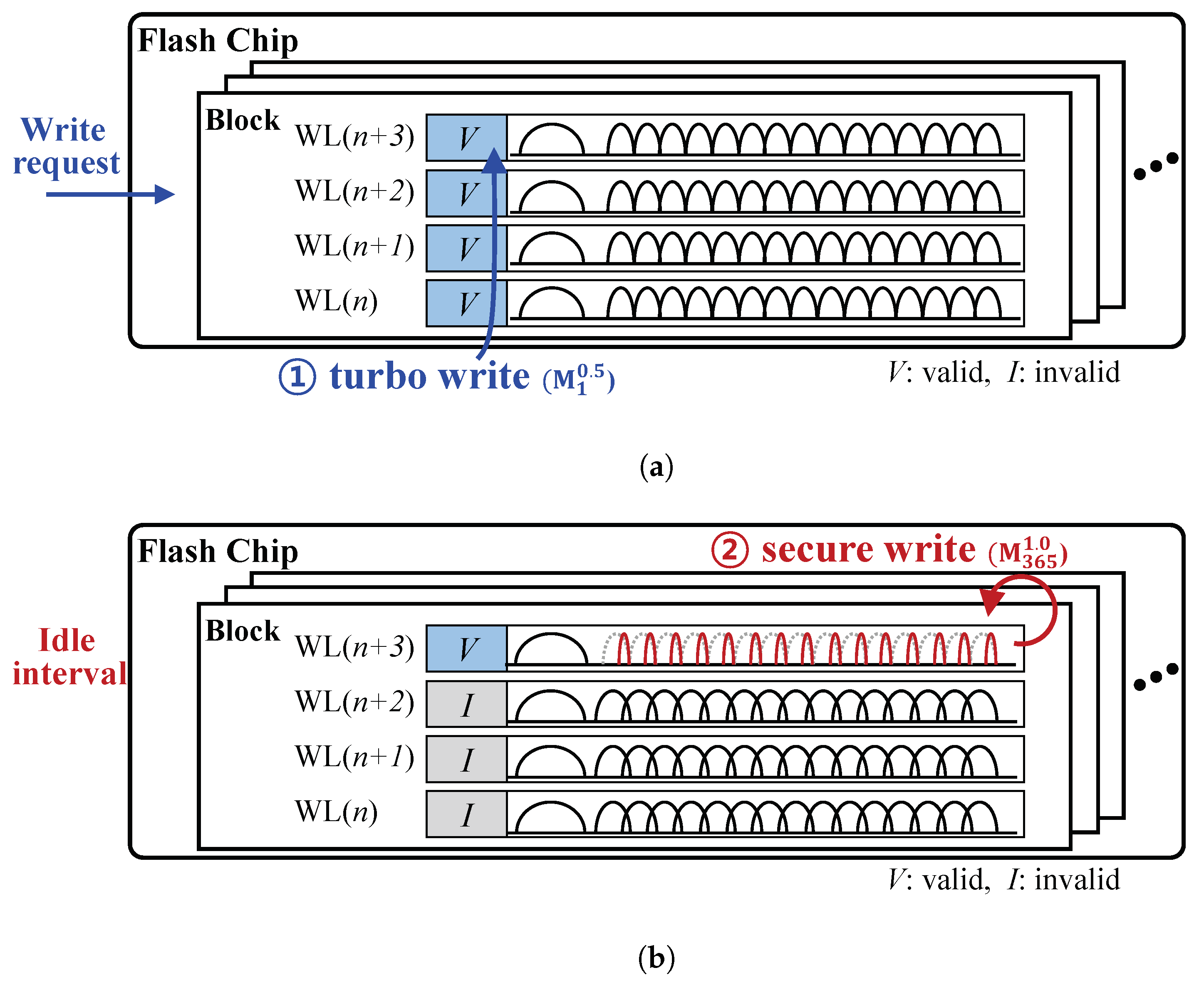

2.2. Conventional Reprogramming Scheme

3. LazyRS: Lazy Reprogramming Scheme

3.1. Basic Idea of the LazyRS

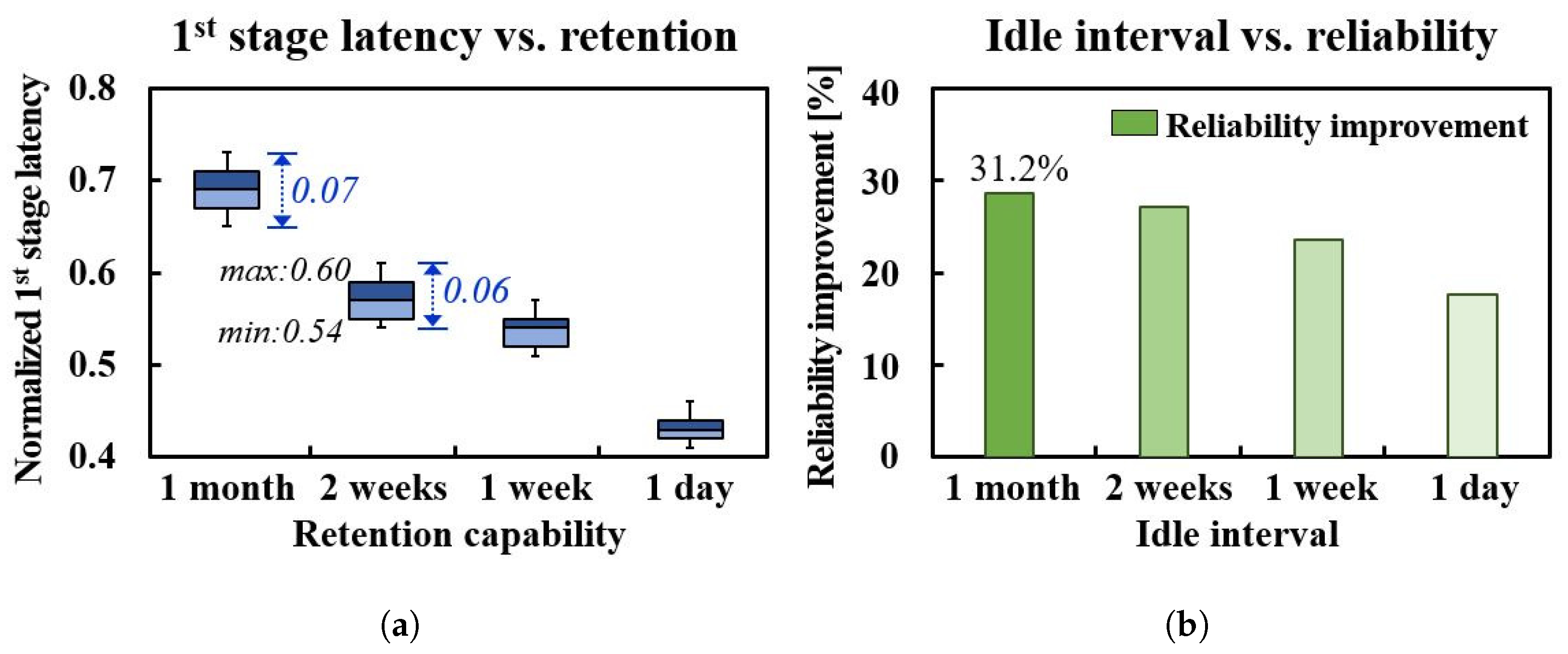

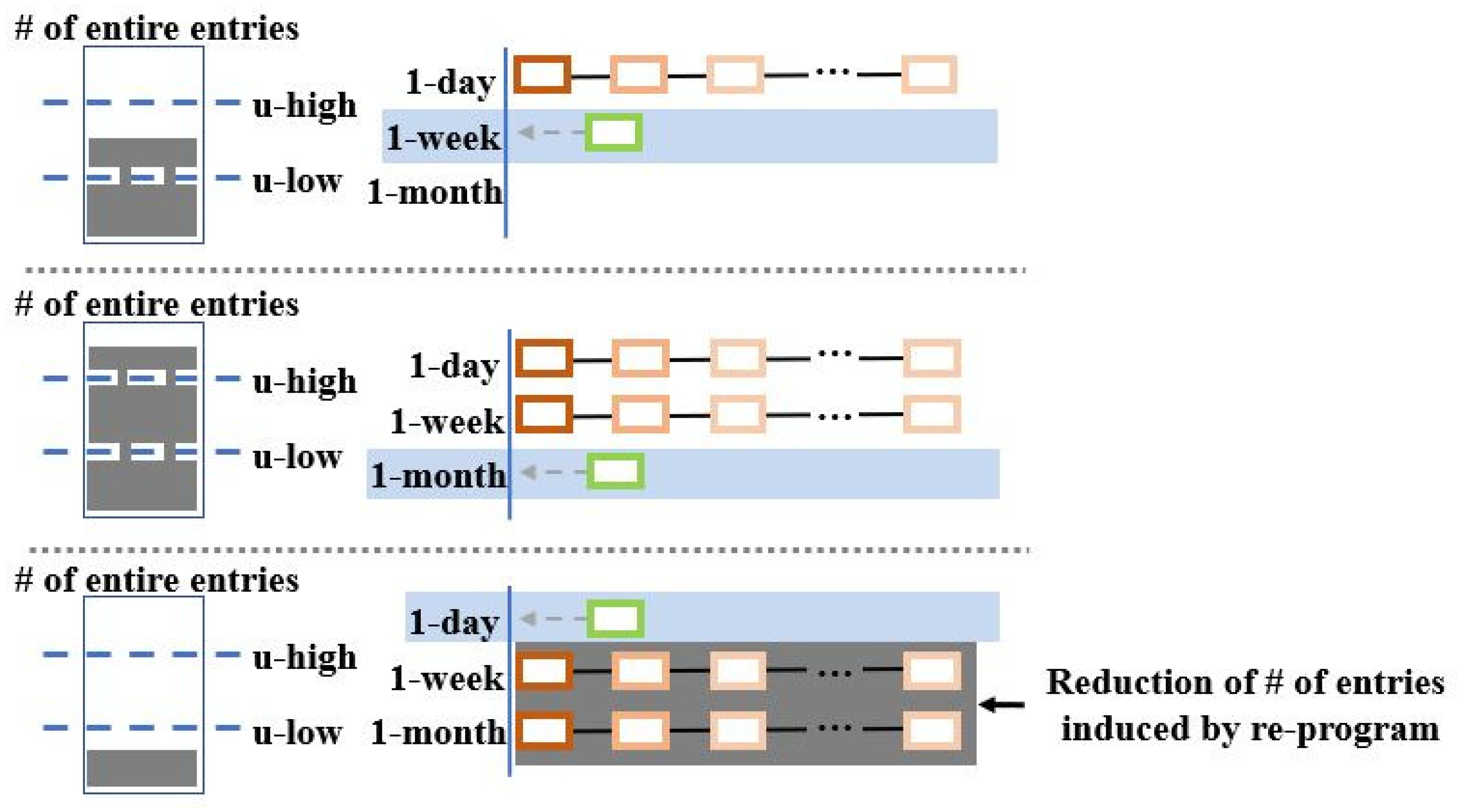

3.2. LazyRS-Aware NAND Retention Model

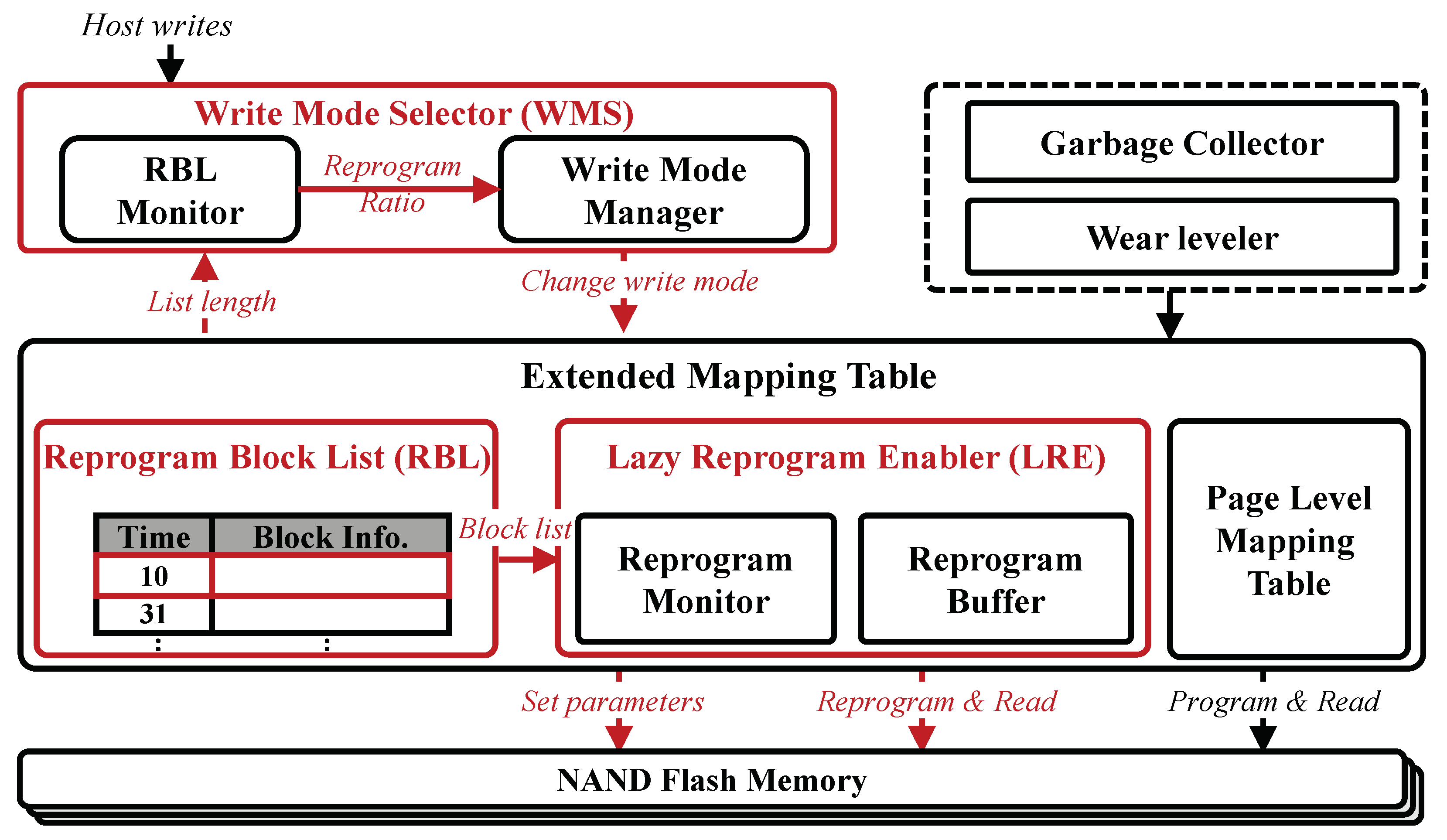

4. LAZYFTL: LazyRS-Aware FTL

4.1. Reprogram Block List (RBL)

4.2. Write Mode Selector (WMS)

4.3. Lazy Reprogram Enabler (LRE)

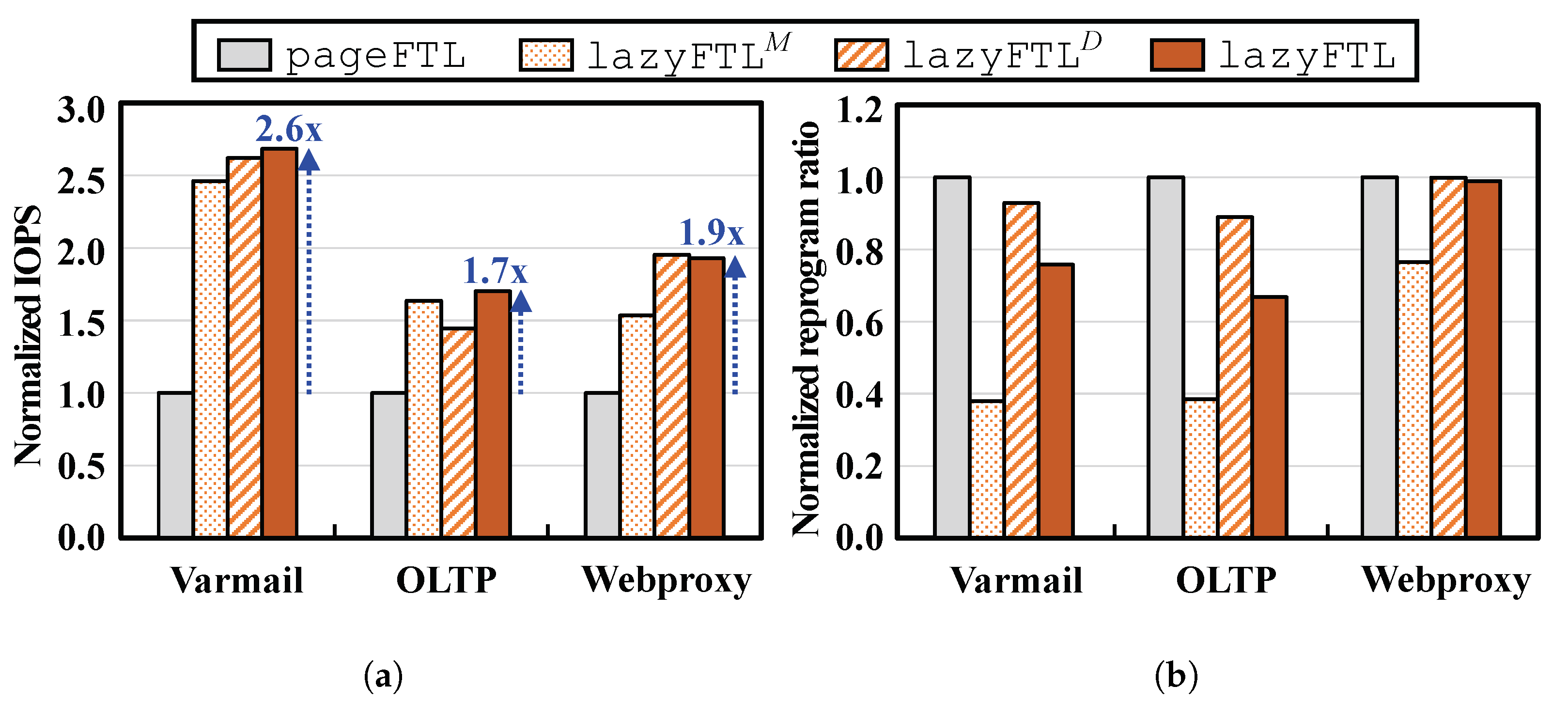

5. Experimental Results

5.1. Experimental Settings

5.2. Experimental Results

6. Related Works

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Workload | Varmail | OLTP | Webproxy |
|---|---|---|---|
| read:write | 1:1 | 1:10 | 5:1 |
| I/O intensity | moderate | high | moderate |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, B.; Kim, M. LazyRS: Improving the Performance and Reliability of High-Capacity TLC/QLC Flash-Based Storage Systems Using Lazy Reprogramming. Electronics 2023, 12, 843. https://doi.org/10.3390/electronics12040843
