A Dynamic Short Cascade Diffusion Prediction Network Based on Meta-Learning-Transformer

Abstract

1. Introduction

- (1)

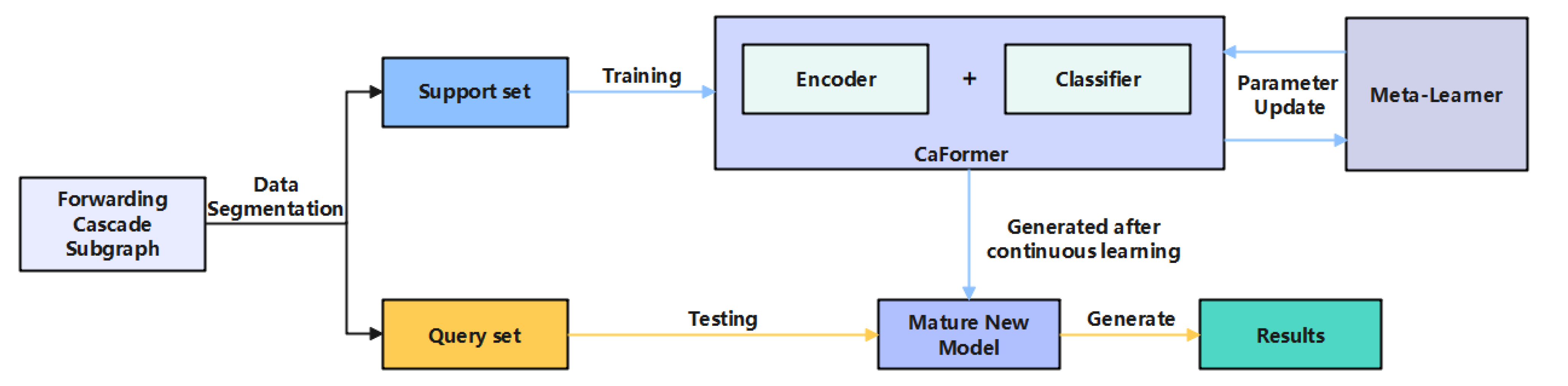

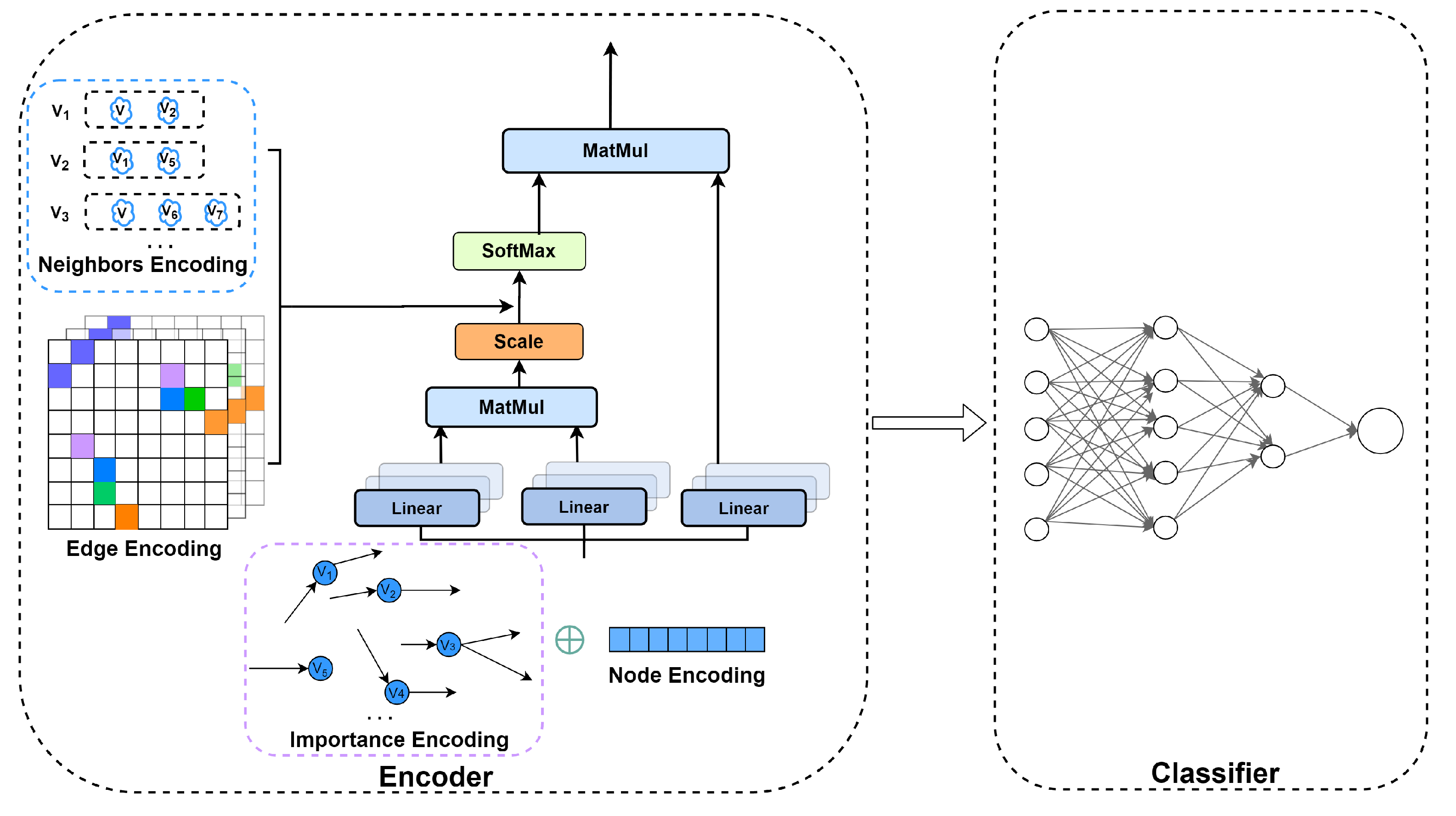

- Considering the way Graphormer processes graph-structured data, this paper creatively proposes a cascaded graph processing model named CaFormer by combining an improved Transformer structure as an encoder and a multilayer perceptron as the classifier. The model’s perception of temporal information is enhanced, making it more applicable to the processing of dynamic cascade data.

- (2) This paper integrates adaptive meta-learning with CaFormer to create MetaCaFormer, a method that addresses the difficulty of predicting short cascades in dynamic networks by making the model more sensitive to short cascades and improving its predictive ability.

- (3) Comprehensive comparison experiments are conducted against existing baseline methods. The results show that MetaCaFormer achieves the best ACC, AUC, and Macro-F1 on both datasets, and ablation experiments confirm the effectiveness of each of its components.
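Contribution (2) builds on the episodic support/query setup of model-agnostic meta-learning (MAML) [54]. The sketch below illustrates that general pattern only and is not MetaCaFormer's adaptive meta-learner: it assumes a toy logistic-regression link scorer in place of CaFormer, uses the first-order MAML approximation (second-order gradient terms are dropped), and all function names, learning rates, and the synthetic per-node tasks are hypothetical.

```python
import math
import random

# Hypothetical toy setup: a logistic-regression scorer stands in for CaFormer.

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict(w, x):
    # w[0] is the bias term; w[1:] are the feature weights.
    return sigmoid(w[0] + sum(wi * xi for wi, xi in zip(w[1:], x)))

def bce_loss(w, data):
    """Mean binary cross-entropy over (features, label) pairs."""
    eps = 1e-12
    total = 0.0
    for x, y in data:
        p = predict(w, x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)

def bce_grad(w, data):
    """Gradient of the mean binary cross-entropy with respect to w."""
    g = [0.0] * len(w)
    for x, y in data:
        err = predict(w, x) - y  # derivative of BCE with respect to the logit
        g[0] += err
        for i, xi in enumerate(x):
            g[i + 1] += err * xi
    return [gi / len(data) for gi in g]

def adapt(w, support, alpha=0.5, steps=3):
    """Inner loop: a few gradient steps on one task's support set."""
    for _ in range(steps):
        g = bce_grad(w, support)
        w = [wi - alpha * gi for wi, gi in zip(w, g)]
    return w

def fomaml_step(meta_w, tasks, alpha=0.5, beta=0.1, steps=3):
    """Outer loop (first-order MAML): average the query-set gradients
    evaluated at each task's adapted weights and update the shared init."""
    meta_g = [0.0] * len(meta_w)
    for support, query in tasks:
        fast_w = adapt(meta_w, support, alpha, steps)
        g = bce_grad(fast_w, query)
        meta_g = [mg + gi for mg, gi in zip(meta_g, g)]
    return [mw - beta * mg / len(tasks) for mw, mg in zip(meta_w, meta_g)]

def make_task(rng, shift, n_support=3, n_query=5):
    """Synthetic 'short cascade' task: label is 1 when the feature exceeds a task-specific shift."""
    def sample():
        x = [rng.uniform(-2.0, 2.0)]
        return x, 1 if x[0] > shift else 0
    return ([sample() for _ in range(n_support)],
            [sample() for _ in range(n_query)])

rng = random.Random(0)
meta_w = [0.0, 0.0]
for _ in range(200):
    batch = [make_task(rng, rng.uniform(-0.5, 0.5)) for _ in range(4)]
    meta_w = fomaml_step(meta_w, batch)
```

Each synthetic task plays the role of one node with only a handful of observed interactions: the inner loop adapts to its small support set, and the outer loop moves the shared initialization so that this quick adaptation generalizes to the held-out query set.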

2. Related Work

2.1. Graph Structure Processing

2.2. Exploration of Few-Shot Learning in Graph Structure

3. Method

3.1. Definition

3.1.1. Dynamic Cascade Network

3.1.2. Short Cascade Prediction

3.1.3. Parameter Adjustment

3.2. Our Proposal

3.2.1. Task Formulation

3.2.2. CaFormer

3.2.3. Meta-Learner

4. Experiments

4.1. Datasets

4.2. Baseline Methods

4.3. Experimental Settings

5. Results and Discussion

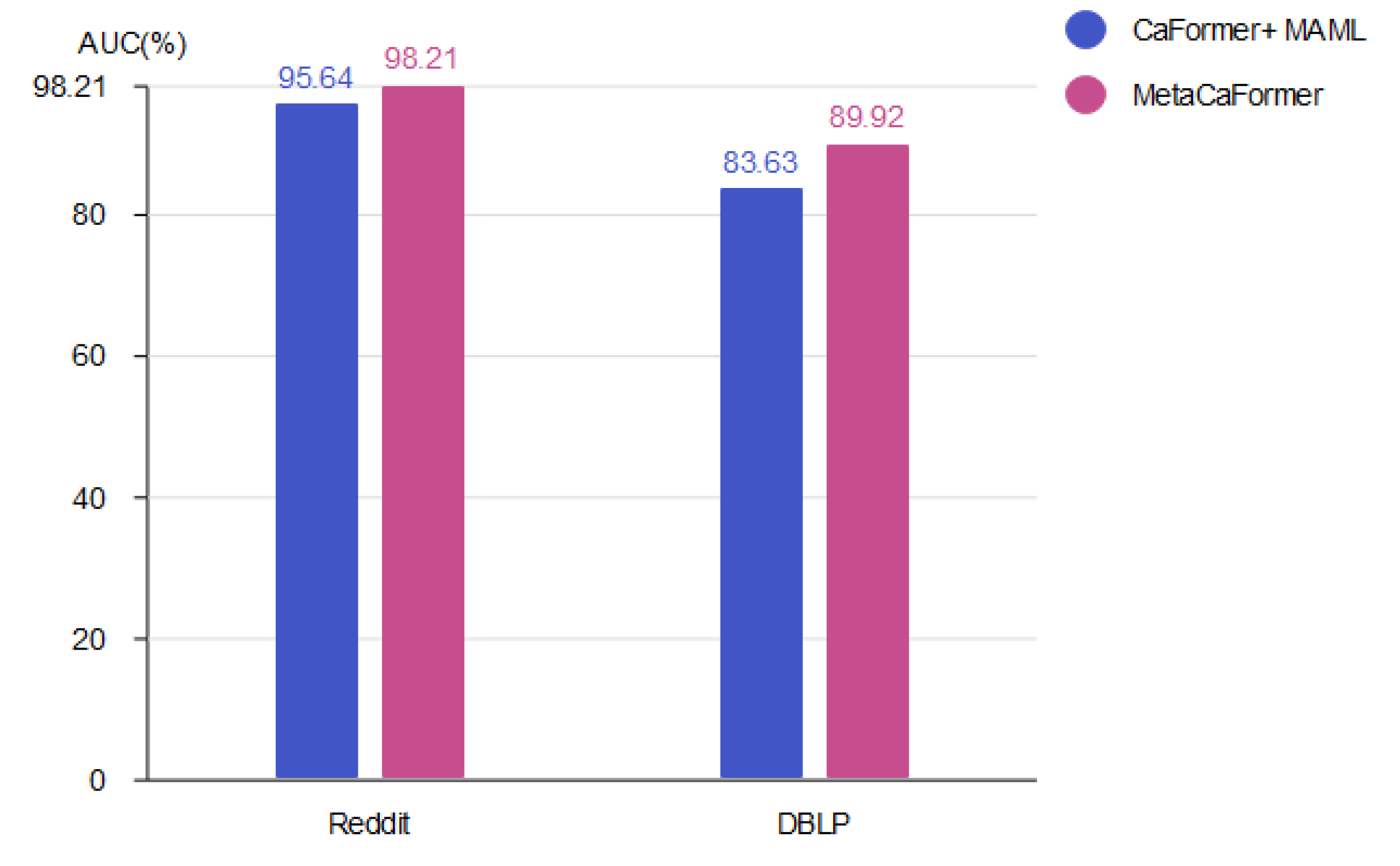

5.1. Comparison Results

5.2. Ablation

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Liu, B.; Yang, D.; Shi, Y.; Wang, Y. Improving Information Cascade Modeling by Social Topology and Dual Role User Dependency. In Proceedings of the Database Systems for Advanced Applications: 27th International Conference, DASFAA 2022, Virtual Event, 11–14 April 2022; Proceedings, Part I. Springer: Berlin/Heidelberg, Germany, 2022; pp. 425–440.

- [2] Kumar, S.; Zhang, X.; Leskovec, J. Predicting dynamic embedding trajectory in temporal interaction networks. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 1269–1278.

- [3] Zhou, F.; Xu, X.; Trajcevski, G.; Zhang, K. A survey of information cascade analysis: Models, predictions, and recent advances. ACM Comput. Surv. (CSUR) 2021, 54, 1–36.

- [4] Shang, Y.; Zhou, B.; Wang, Y.; Li, A.; Chen, K.; Song, Y.; Lin, C. Popularity prediction of online contents via cascade graph and temporal information. Axioms 2021, 10, 159.

- [5] Chen, L.; Wang, L.; Zeng, C.; Liu, H.; Chen, J. DHGEEP: A Dynamic Heterogeneous Graph-Embedding Method for Evolutionary Prediction. Mathematics 2022, 10, 4193.

- [6] Wang, Y.; Wang, X.; Ran, Y.; Michalski, R.; Jia, T. CasSeqGCN: Combining network structure and temporal sequence to predict information cascades. Expert Syst. Appl. 2022, 206, 117693.

- [7] Wu, Q.; Gao, Y.; Gao, X.; Weng, P.; Chen, G. Dual sequential prediction models linking sequential recommendation and information dissemination. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 447–457.

- [8] Robles, J.F.; Chica, M.; Cordon, O. Evolutionary multiobjective optimization to target social network influentials in viral marketing. Expert Syst. Appl. 2020, 147, 113183.

- [9] Zhao, L.; Chen, J.; Chen, F.; Jin, F.; Wang, W.; Lu, C.T.; Ramakrishnan, N. Online flu epidemiological deep modeling on disease contact network. GeoInformatica 2020, 24, 443–475.

- [10] Kumar, R.; Kumar, P.; Tripathi, R.; Gupta, G.P.; Kumar, N.; Hassan, M.M. A privacy-preserving-based secure framework using blockchain-enabled deep-learning in cooperative intelligent transport system. IEEE Trans. Intell. Transp. Syst. 2021, 23, 16492–16503.

- [11] Kumar, R.; Kumar, P.; Aljuhani, A.; Islam, A.; Jolfaei, A.; Garg, S. Deep Learning and Smart Contract-Assisted Secure Data Sharing for IoT-Based Intelligent Agriculture. IEEE Intell. Syst. 2022, 1–8.

- [12] Kumar, R.; Kumar, P.; Aloqaily, M.; Aljuhani, A. Deep Learning-based Blockchain for Secure Zero Touch Networks. IEEE Commun. Mag. 2022, 1–7.

- [13] Pareja, A.; Domeniconi, G.; Chen, J.; Ma, T.; Suzumura, T.; Kanezashi, H.; Kaler, T.; Schardl, T.; Leiserson, C. EvolveGCN: Evolving graph convolutional networks for dynamic graphs. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 5363–5370.

- [14] Vilalta, R.; Drissi, Y. A perspective view and survey of meta-learning. Artif. Intell. Rev. 2002, 18, 77–95.

- [15] Zhou, F.; Cao, C.; Zhang, K.; Trajcevski, G.; Zhong, T.; Geng, J. Meta-GNN: On few-shot node classification in graph meta-learning. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management, Beijing, China, 3–7 November 2019; pp. 2357–2360.

- [16] Chauhan, J.; Nathani, D.; Kaul, M. Few-shot learning on graphs via super-classes based on graph spectral measures. arXiv 2020, arXiv:2002.12815.

- [17] Huang, K.; Zitnik, M. Graph meta learning via local subgraphs. Adv. Neural Inf. Process. Syst. 2020, 33, 5862–5874.

- [18] Yao, H.; Zhang, C.; Wei, Y.; Jiang, M.; Wang, S.; Huang, J.; Chawla, N.; Li, Z. Graph few-shot learning via knowledge transfer. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 6656–6663.

- [19] Xu, D.; Ruan, C.; Korpeoglu, E.; Kumar, S.; Achan, K. Inductive representation learning on temporal graphs. arXiv 2020, arXiv:2002.07962.

- [20] Yang, C.; Wang, C.; Lu, Y.; Gong, X.; Shi, C.; Wang, W.; Zhang, X. Few-shot Link Prediction in Dynamic Networks. In Proceedings of the Fifteenth ACM International Conference on Web Search and Data Mining, Virtual, 21–25 February 2022; pp. 1245–1255.

- [21] Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Proceedings of the 31st Annual Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017.

- [22] Ying, C.; Cai, T.; Luo, S.; Zheng, S.; Ke, G.; He, D.; Shen, Y.; Liu, T.Y. Do transformers really perform bad for graph representation? arXiv 2021, arXiv:2106.05234.

- [23] Bruna, J.; Zaremba, W.; Szlam, A.; LeCun, Y. Spectral networks and locally connected networks on graphs. arXiv 2013, arXiv:1312.6203.

- [24] Huang, X.; Song, Q.; Li, Y.; Hu, X. Graph recurrent networks with attributed random walks. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 732–740.

- [25] Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

- [26] Rong, Y.; Bian, Y.; Xu, T.; Xie, W.; Wei, Y.; Huang, W.; Huang, J. Self-supervised graph transformer on large-scale molecular data. Adv. Neural Inf. Process. Syst. 2020, 33, 12559–12571.

- [27] Dwivedi, V.P.; Bresson, X. A generalization of transformer networks to graphs. arXiv 2020, arXiv:2012.09699.

- [28] Trivedi, R.; Farajtabar, M.; Biswal, P.; Zha, H. DyRep: Learning representations over dynamic graphs. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

- [29] Hamilton, W.; Ying, Z.; Leskovec, J. Inductive representation learning on large graphs. Adv. Neural Inf. Process. Syst. 2017, 30, 1024–1034.

- [30] Rossi, E.; Chamberlain, B.; Frasca, F.; Eynard, D.; Monti, F.; Bronstein, M. Temporal graph networks for deep learning on dynamic graphs. arXiv 2020, arXiv:2006.10637.

- [31] Bose, A.J.; Jain, A.; Molino, P.; Hamilton, W.L. Meta-graph: Few shot link prediction via meta learning. arXiv 2019, arXiv:1912.09867.

- [32] Suo, Q.; Chou, J.; Zhong, W.; Zhang, A. TAdaNet: Task-adaptive network for graph-enriched meta-learning. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Virtual, 6–10 July 2020; pp. 1789–1799.

- [33] Lan, L.; Wang, P.; Du, X.; Song, K.; Tao, J.; Guan, X. Node classification on graphs with few-shot novel labels via meta transformed network embedding. Adv. Neural Inf. Process. Syst. 2020, 33, 16520–16531.

- [34] Guo, Z.; Zhang, C.; Yu, W.; Herr, J.; Wiest, O.; Jiang, M.; Chawla, N.V. Few-shot graph learning for molecular property prediction. In Proceedings of the Web Conference 2021, Ljubljana, Slovenia, 19–23 April 2021; pp. 2559–2567.

- [35] Kumar, P.; Kumar, R.; Gupta, G.P.; Tripathi, R.; Jolfaei, A.; Islam, A.N. A blockchain-orchestrated deep learning approach for secure data transmission in IoT-enabled healthcare system. J. Parallel Distrib. Comput. 2023, 172, 69–83.

- [36] Gori, M.; Monfardini, G.; Scarselli, F. A new model for learning in graph domains. In Proceedings of the 2005 IEEE International Joint Conference on Neural Networks, Montreal, QC, Canada, 31 July–4 August 2005; Volume 2, pp. 729–734.

- [37] Zhou, L.; Yang, Y.; Ren, X.; Wu, F.; Zhuang, Y. Dynamic network embedding by modeling triadic closure process. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- [38] Zuo, Y.; Liu, G.; Lin, H.; Guo, J.; Hu, X.; Wu, J. Embedding temporal network via neighborhood formation. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 2857–2866.

- [39] Wang, Y.; Chang, Y.Y.; Liu, Y.; Leskovec, J.; Li, P. Inductive representation learning in temporal networks via causal anonymous walks. arXiv 2021, arXiv:2101.05974.

- [40] Goyal, P.; Chhetri, S.R.; Canedo, A. dyngraph2vec: Capturing network dynamics using dynamic graph representation learning. Knowl. Based Syst. 2020, 187, 104816.

- [41] Manessi, F.; Rozza, A.; Manzo, M. Dynamic graph convolutional networks. Pattern Recognit. 2020, 97, 107000.

- [42] Li, Z.; Kumar, M.; Headden, W.; Yin, B.; Wei, Y.; Zhang, Y.; Yang, Q. Learn to cross-lingual transfer with meta graph learning across heterogeneous languages. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 2290–2301.

- [43] Chapelle, O.; Scholkopf, B.; Zien, A. Semi-supervised learning (chapelle, o. et al., eds.; 2006) [book reviews]. IEEE Trans. Neural Netw. 2009, 20, 542.

- [44] Li, Z.; Li, X.; Wei, Y.; Bing, L.; Zhang, Y.; Yang, Q. Transferable end-to-end aspect-based sentiment analysis with selective adversarial learning. arXiv 2019, arXiv:1910.14192.

- [45] Li, Z.; Wei, Y.; Zhang, Y.; Zhang, X.; Li, X. Exploiting coarse-to-fine task transfer for aspect-level sentiment classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 4253–4260.

- [46] Wang, Y.; Yao, Q.; Kwok, J.T.; Ni, L.M. Generalizing from a few examples: A survey on few-shot learning. ACM Comput. Surv. (CSUR) 2020, 53, 1–34.

- [47] Zha, J.; Li, Z.; Wei, Y.; Zhang, Y. Disentangling task relations for few-shot text classification via self-supervised hierarchical task clustering. arXiv 2022, arXiv:2211.08588.

- [48] Liu, Y.; Lee, J.; Park, M.; Kim, S.; Yang, E.; Hwang, S.J.; Yang, Y. Learning to propagate labels: Transductive propagation network for few-shot learning. arXiv 2018, arXiv:1805.10002.

- [49] Yao, H.; Wu, X.; Tao, Z.; Li, Y.; Ding, B.; Li, R.; Li, Z. Automated relational meta-learning. arXiv 2020, arXiv:2001.00745.

- [50] Ding, K.; Wang, J.; Li, J.; Shu, K.; Liu, C.; Liu, H. Graph prototypical networks for few-shot learning on attributed networks. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management, Online, 19–23 October 2020; pp. 295–304.

- [51] Snell, J.; Swersky, K.; Zemel, R. Prototypical Networks for Few-shot Learning. In Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

- [52] Li, Z.; Zhang, D.; Cao, T.; Wei, Y.; Song, Y.; Yin, B. MetaTS: Meta teacher-student network for multilingual sequence labeling with minimal supervision. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Online, 7–11 November 2021; pp. 3183–3196.

- [53] Finn, C.; Xu, K.; Levine, S. Probabilistic Model-Agnostic Meta-Learning. In Proceedings of the 32nd Conference on Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 3–8 December 2018.

- [54] Finn, C.; Abbeel, P.; Levine, S. Model-agnostic meta-learning for fast adaptation of deep networks. In Proceedings of the International Conference on Machine Learning, PMLR, Sydney, Australia, 6–11 August 2017; pp. 1126–1135.

- [55] Li, J.; Shao, H.; Sun, D.; Wang, R.; Yan, Y.; Li, J.; Liu, S.; Tong, H.; Abdelzaher, T. Unsupervised Belief Representation Learning in Polarized Networks with Information-Theoretic Variational Graph Auto-Encoders. arXiv 2021, arXiv:2110.00210.

- [56] Wang, H.; Wan, R.; Wen, C.; Li, S.; Jia, Y.; Zhang, W.; Wang, X. Author name disambiguation on heterogeneous information network with adversarial representation learning. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 238–245.

- [57] Yan, Y.; Zhang, S.; Tong, H. BRIGHT: A bridging algorithm for network alignment. In Proceedings of the Web Conference 2021, Ljubljana, Slovenia, 19–23 April 2021; pp. 3907–3917.

- [58] Yang, C.; Li, J.; Wang, R.; Yao, S.; Shao, H.; Liu, D.; Liu, S.; Wang, T.; Abdelzaher, T.F. Hierarchical overlapping belief estimation by structured matrix factorization. In Proceedings of the 2020 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining (ASONAM), The Hague, The Netherlands, 7–10 December 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 81–88.

- [59] Lu, Y.; Wang, X.; Shi, C.; Yu, P.S.; Ye, Y. Temporal network embedding with micro- and macro-dynamics. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management, Beijing, China, 3–7 November 2019; pp. 469–478.

| Method | Structure / Time / Dynamic / Few-Shot Learning | Advantage | Limitation |
|---|---|---|---|
| GCN [23] | ✓ | Perception of global information. | Limited evaluation. |
| GRN [24] | ✓ ✓ | Converts graph data into sequence form. | Limited understanding of global information. |
| GAT [25] | ✓ | Assigns weights to neighboring nodes. | Ignores time information. |
| GROVER [26] | ✓ ✓ | Improved attention mechanism. | Limited assessment capacity. |
| GraphTransformer [27] | ✓ ✓ | Preserves the sparsity of the graph. | Limited ability to handle structured data. |
| Graphormer [22] | ✓ ✓ | Improved Transformer. | Limited ability to handle dynamic data. |
| DyRep [28] | ✓ ✓ | Uses recurrent neural networks for node representation. | Ignores structural information. |
| GraphSAGE [29] | ✓ ✓ ✓ | Converts data into snapshot form. | Low evaluation performance. |
| EvolveGCN [13] | ✓ ✓ ✓ | Applies to node aggregation and classification in dynamic networks. | Limited on short cascades. |
| TGAT [19] | ✓ ✓ ✓ | Stacked attention mechanism. | Limited on short cascade prediction. |
| TGN [30] | ✓ ✓ ✓ | Maintains efficient parallelism. | Insufficient capability for processing short-cascade data. |
| Meta-GNN [15] | ✓ ✓ ✓ | Combines GNNs with meta-learning. | Limited performance. |
| Meta-Graph [31] | ✓ ✓ ✓ | Fuses meta-learning and graph neural networks. | Limited by a simple overlay of the two. |
| G-Meta [17] | ✓ ✓ ✓ | Subgraph modeling. | Limited ability to handle dynamic data. |
| TAdaNet [32] | ✓ ✓ ✓ | Combines information from multiple graph structures with meta-learning for adaptive classification. | Insufficient assessment capacity. |
| MetaTNE [33] | ✓ ✓ ✓ | Introduces embedding transformation functions. | Limited to static networks. |
| META-MGNN [34] | ✓ ✓ ✓ | Combines meta-learning and GCN. | Limited to static networks. |
| MetaDyGNN [20] | ✓ ✓ ✓ ✓ | Effective fusion of meta-learning and graph neural networks. | Limited by the graph network's ability to process temporal information. |
| MetaCaFormer | ✓ ✓ ✓ ✓ | Thorough combination of structural and temporal information. | Limited ability to make macro-level predictions. |

| Notations | Descriptions |
|---|---|
| G | Static social network |
| V | Users/nodes |
| E | The set of edges |
| | The edge between node and node at time |
| | The set of neighbors of node |
| | The task of node V, consisting of a support set and a query set |
| , | Encoder, classifier |
| A | The matrix capturing the similarity between Q and K |
| | Self-attention computation |
| | The shortest path from to |
| | Edge encoding |
| w | Weight |
| , | The in-degree and out-degree of node |
| | Layer normalization |
| | Multi-head attention |
| | Multilayer perceptron |
| | Loss calculation |
| | The probability of a link between nodes and |
| , | Parameters |
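The notation above mirrors the encodings used by Graphormer [22], on which CaFormer builds. In Graphormer, each node's input adds centrality embeddings for its in- and out-degree, $h_i^{(0)} = x_i + z^-_{\deg^-(v_i)} + z^+_{\deg^+(v_i)}$, and each attention score adds a learnable bias indexed by the shortest-path distance $\phi(v_i, v_j)$ plus an edge-encoding term $c_{ij}$: $A_{ij} = \frac{(h_i W_Q)(h_j W_K)^\top}{\sqrt{d}} + b_{\phi(v_i, v_j)} + c_{ij}$. The sketch below implements one single-head layer of this kind in plain Python under stated assumptions: identity Q/K/V projections, no edge-encoding term, distances computed on directed cascade edges, and hypothetical embedding and bias tables. It does not reproduce CaFormer's temporal modifications, which are not specified here.

```python
import math
from collections import deque

def shortest_path_lengths(n, edges):
    """All-pairs hop distances on a directed graph via BFS; -1 marks unreachable pairs."""
    adj = [[] for _ in range(n)]
    for u, v in edges:
        adj[u].append(v)
    dist = [[-1] * n for _ in range(n)]
    for s in range(n):
        dist[s][s] = 0
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if dist[s][v] == -1:
                    dist[s][v] = dist[s][u] + 1
                    queue.append(v)
    return dist

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(r - m) for r in row]
    total = sum(exps)
    return [e / total for e in exps]

def graph_attention(x, edges, z_in, z_out, spatial_bias, unreachable_bias=-1e4):
    """Single-head Graphormer-style attention with identity Q/K/V projections
    and no edge-encoding term.
    x: list of node feature vectors (length d each)
    z_in, z_out: hypothetical embedding tables indexed by in-/out-degree
    spatial_bias: hypothetical scalar bias indexed by shortest-path distance
    unreachable_bias: fixed large negative bias for unreachable pairs (an assumption;
    Graphormer instead learns a dedicated bias for that case)."""
    n, d = len(x), len(x[0])
    deg_in, deg_out = [0] * n, [0] * n
    for u, v in edges:
        deg_out[u] += 1
        deg_in[v] += 1
    # Centrality encoding: add in- and out-degree embeddings to the input features.
    h = [[x[i][k] + z_in[deg_in[i]][k] + z_out[deg_out[i]][k] for k in range(d)]
         for i in range(n)]
    dist = shortest_path_lengths(n, edges)
    out, attn = [], []
    for i in range(n):
        scores = []
        for j in range(n):
            s = sum(h[i][k] * h[j][k] for k in range(d)) / math.sqrt(d)
            # Spatial encoding: bias chosen by the shortest-path distance from i to j.
            s += spatial_bias[dist[i][j]] if dist[i][j] >= 0 else unreachable_bias
            scores.append(s)
        weights = softmax(scores)
        attn.append(weights)
        out.append([sum(weights[j] * h[j][k] for j in range(n)) for k in range(d)])
    return out, attn

# Toy cascade: node 0 is the source, nodes 1 and 2 repost from it, node 3 reposts from 1.
x = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [1.0, 1.0]]
edges = [(0, 1), (0, 2), (1, 3)]
z = [[0.0, 0.0], [0.1, 0.1], [0.2, 0.2]]  # hypothetical embeddings for degrees 0..2
bias = [0.5, 0.2, 0.0]                    # hypothetical biases for distances 0..2
out, attn = graph_attention(x, edges, z, z, bias)
```

Because node 3 has no outgoing edges, every other node is unreachable from it and its attention concentrates almost entirely on itself; with undirected distances (as Graphormer typically uses) it would also attend upstream.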

| Dataset | DBLP | |
|---|---|---|
| Nodes | 10,984 | 28,085 |
| Dynamic edges | 672,448 | 286,894 |
| Timestamps | continuous | 27 snapshots |
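The two datasets differ in temporal granularity: one keeps continuous timestamps, while the other is divided into 27 snapshots. As an illustration of how the two views relate, here is a minimal sketch that buckets continuous-timestamp edges into a fixed number of snapshots, assuming equal-width time windows; the snapshot boundaries actually used for the dataset are not given here, and `to_snapshots` is a hypothetical helper.

```python
def to_snapshots(edges, num_snapshots):
    """Bucket (u, v, t) edges into num_snapshots equal-width time windows.

    Returns one edge list per snapshot, in time order; the final timestamp is
    clamped into the last window."""
    snapshots = [[] for _ in range(num_snapshots)]
    if not edges:
        return snapshots
    t_min = min(t for _, _, t in edges)
    t_max = max(t for _, _, t in edges)
    span = t_max - t_min
    width = span / num_snapshots if span > 0 else 1.0  # guard against identical timestamps
    for u, v, t in sorted(edges, key=lambda e: e[2]):
        idx = min(int((t - t_min) / width), num_snapshots - 1)
        snapshots[idx].append((u, v, t))
    return snapshots

edges = [(0, 1, 0.0), (1, 2, 2.5), (0, 2, 5.0), (2, 3, 9.9), (3, 4, 10.0)]
snaps = to_snapshots(edges, 4)
print([len(s) for s in snaps])  # [1, 1, 1, 2]
```

Equal-width windows are the simplest choice; windows with equal edge counts would instead keep snapshot sizes balanced when activity is bursty.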

| Dataset | DBLP | | | | | |
|---|---|---|---|---|---|---|
| Model/Result | ACC | AUC | Macro-F1 | ACC | AUC | Macro-F1 |
| GraphSAGE [29] | 88.92% | 93.12% | 87.98% | 72.15% | 76.65% | 71.32% |
| GAT [25] | 88.76% | 92.96% | 88.34% | 73.38% | 76.94% | 72.31% |
| EvolveGCN [13] | 59.21% | 61.64% | 57.02% | 57.88% | 63.15% | 56.53% |
| TGAT [19] | 93.15% | 94.43% | 92.96% | 77.21% | 81.02% | 76.45% |
| Meta-GNN [15] | 85.97% | 91.06% | 85.21% | 74.98% | 79.85% | 74.52% |
| TGAT+MAML [19,54] | 87.85% | 91.56% | 87.42% | 73.53% | 77.46% | 72.62% |
| MetaDyGNN [20] | 95.97% | 97.46% | 95.68% | 83.02% | 87.57% | 82.04% |
| MetaCaFormer | 97.95% | 98.21% | 96.88% | 85.26% | 89.92% | 84.09% |
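The comparison reports accuracy (ACC), area under the ROC curve (AUC), and Macro-F1. A minimal sketch of how these three metrics can be computed for binary link prediction, assuming a 0.5 decision threshold for ACC and Macro-F1 and a rank-based (Mann-Whitney) AUC in which tied scores count as one half; the toy labels and scores are made up for illustration and are unrelated to the reported results.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def roc_auc(y_true, scores):
    """Probability that a random positive is scored above a random negative (ties count 0.5)."""
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def f1_for_class(y_true, y_pred, cls):
    """F1 score treating `cls` as the positive class."""
    tp = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
    fp = sum(t != cls and p == cls for t, p in zip(y_true, y_pred))
    fn = sum(t == cls and p != cls for t, p in zip(y_true, y_pred))
    return 0.0 if tp == 0 else 2 * tp / (2 * tp + fp + fn)

def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over every class seen in labels or predictions."""
    classes = sorted(set(y_true) | set(y_pred))
    return sum(f1_for_class(y_true, y_pred, c) for c in classes) / len(classes)

y_true = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.6, 0.2, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]
print(round(accuracy(y_true, y_pred), 4))  # 0.6667
print(round(roc_auc(y_true, scores), 4))   # 0.8889
print(round(macro_f1(y_true, y_pred), 4))  # 0.6667
```

AUC is threshold-free and uses the raw scores, whereas ACC and Macro-F1 depend on the chosen threshold; Macro-F1 weights the positive (link) and negative (no-link) classes equally regardless of how many examples each has.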

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, G.; Meng, T.; Li, M.; Zhou, M.; Han, D. A Dynamic Short Cascade Diffusion Prediction Network Based on Meta-Learning-Transformer. Electronics 2023, 12, 837. https://doi.org/10.3390/electronics12040837
