Abstract

Voice cloning aims to synthesize speech with a new speaker’s timbre from a small amount of that speaker’s speech. Current voice cloning methods focus on modeling speaker timbre and can synthesize speech whose timbre is similar to the target speaker’s. However, the prosody of the cloned speech is flat: it lacks expressiveness, and these methods cannot control it. To solve this problem, we propose ZSE-VITS (zero-shot expressive VITS), a novel method based on the end-to-end speech synthesis model VITS. Specifically, we use VITS as the backbone network and add the speaker recognition model TitaNet as the speaker encoder to realize zero-shot voice cloning. We use explicit prosody information to avoid interference from speaker information, and we adjust speech prosody directly through prosody information prediction and prosody fusion. We widen the pitch distribution of the training datasets with pitch augmentation to improve the generalization ability of the prosody model, and we fine-tune the prosody predictor alone on an emotional corpus so that it learns to predict prosody in various styles. Objective and subjective evaluations on open datasets show that our method generates more expressive speech and allows prosody to be adjusted manually without degrading speaker timbre similarity.

1. Introduction

Speech synthesis based on deep learning [1,2,3,4,5] has made significant progress, and the quality, naturalness, and intelligibility of generated speech have improved greatly. However, training a speech synthesis model requires a large amount of high-quality paired speech and text, and adding a new speaker requires sufficient data from that speaker, which severely limits personalized applications. Synthesizing speech with a new speaker’s timbre from only a small amount of that speaker’s speech, known as voice cloning [6], has therefore become an attractive research field. It can greatly lower the barrier to and cost of personalized speech synthesis and enables more efficient applications such as customized voice assistants, audiobook narration, and user experiences in virtual worlds.

There are two main approaches to voice cloning: speaker adaptation [7,8,9] and speaker encoder methods [10,11,12,13]. Speaker adaptation fine-tunes a multi-speaker speech synthesis model on the new speaker’s speech, so the model must be retrained. The speaker encoder approach instead trains a speaker encoder module to extract a speaker embedding that reflects the speaker’s characteristics; this embedding is then fed to a multi-speaker speech synthesis model as a control condition.

These methods focus on modeling speaker timbre and can synthesize speech with a similar timbre. However, the prosody of the synthesized speech is flat and lacks expressiveness. The main reason is that voice cloning, like speech synthesis in general, faces a ‘one-to-many’ problem [4]: the same text can correspond to speech with many different prosodies. When a model is trained to generate speech from text and speaker information alone, it is forced to learn the average prosody of the corpus, which leads to “dull” synthesized speech. To address this problem, expressive speech synthesis methods [14,15,16,17] model speech prosody, alleviate the one-to-many problem by providing additional prosody information as input, and thereby improve the expressiveness of the synthesized speech. However, these methods can only synthesize the voices of speakers in the training set and cannot be used directly for speakers unseen during training, which limits the application of these prosody modeling methods to voice cloning.

Expressive voice cloning is challenging because it requires not only voice cloning but also decoupling speaker and prosody information. To the best of our knowledge, only a few works have explored expressive voice cloning so far. EVC [18] is a speaker-encoder-based voice cloning method built on the expressive speech synthesis model Mellotron [19]. It uses GST [15] and the pitch contour as prosody control inputs to provide fine-grained style control over the synthesized speech. However, the style information that GST captures from the speech spectrum is easily entangled with speaker information, because speaker timbre can itself be regarded as a global style. Meta-Voice [20] uses a model-agnostic meta-learning approach [21] to fine-tune the model rapidly for new speakers. In addition, it uses a style encoder to predict the scale and bias parameters of layer normalization to control the style of the synthesized speech. However, Meta-Voice’s style control is global and cannot adjust local prosody, and the model still requires fine-tuning to adapt to new speakers.

Given the shortcomings of this prior work, two main problems must be solved to further improve expressive voice cloning. The first is decoupling speaker and prosody information. When the two are entangled, the quality of the synthetic speech is unstable and generalization to new speakers is weak, which reduces both the naturalness and the timbre similarity of the synthetic speech. The second is that previous methods offer insufficient control over speech prosody. Prosodic expressiveness is conveyed at different levels of speech, and the previous global control is relatively coarse-grained and cannot effectively steer prosody toward a desired target. To solve these two problems, we propose a novel zero-shot expressive voice cloning method that further improves the prosody modeling capability of voice cloning and enables both global and local prosody control of cloned speech. Our specific contributions are the following six points.

- It uses the end-to-end speech synthesis model VITS [22] as the backbone network, which avoids the mismatch between synthesizer and vocoder in two-stage (synthesizer plus vocoder) methods.

- TitaNet [23], a high-performing speaker recognition model, is used as the speaker encoder to provide the voice cloning model with speaker embeddings that carry richer speaker information.

- To decouple speaker and prosody information, explicit prosody features such as pitch, energy, and duration are used to transfer prosody across speakers.

- To improve the generalization of the prosody model to unseen speakers, pitch augmentation is used to widen the pitch distribution of the corpus.

- Explicit prosody features of speech can be adjusted directly through explicit prosody prediction and prosody fusion, without affecting the speaker information of the synthesized speech.

- Most of the training corpus is in reading style, whose prosody is less expressive than that of an emotional corpus. We fine-tuned the prosody predictor alone on the emotional corpus ESD [24] so that it learns to predict prosody in various styles, demonstrating that the model’s prosody style prediction is extensible.

Finally, we trained a voice cloning model that can control the prosody of synthesized speech at a fine granularity. Our evaluation experiments show that the proposed model can clone the expressive voices of unseen speakers and allows their prosody to be adjusted manually.

2. Related Work

2.1. Voice Cloning

Voice cloning aims to synthesize speech with a new speaker’s timbre from a small amount of that speaker’s speech. There are two main approaches: speaker adaptation methods and speaker encoder methods. Speaker adaptation fine-tunes a multi-speaker speech synthesis model on the new speaker’s speech and therefore requires retraining; moreover, the model is prone to overfitting when trained on so little data. In [7], the speech synthesis model is divided into two cascaded modules: the first predicts the acoustic features of speech, and the second models the speaker’s timbre. The two modules are connected by speaker-independent phonetic posteriorgrams as intermediate features. The advantage of this design is that learning a new speaker’s timbre only requires fine-tuning the timbre-related module, which effectively alleviates overfitting. AdaSpeech [8] introduces conditional layer normalization into FastSpeech2 [4] and fine-tunes it jointly with the speaker embedding. Conditional layer normalization greatly reduces the number of fine-tuned parameters, allowing the model to learn a new speaker’s timbre without degrading voice quality.

The main idea of the speaker encoder approach is to train a separate speaker encoder that extracts a speaker embedding reflecting the speaker’s characteristics; the embedding is then used as a control condition for a multi-speaker speech synthesis model. This approach does not require retraining and needs only a small amount of the new speaker’s speech to extract the embedding, so it is also called zero-shot voice cloning. SV2TTS [10] uses a speaker verification model pre-trained with the GE2E loss [25] as the speaker encoder and feeds the extracted speaker embedding as a conditional input to the speech synthesis model Tacotron2 [3], so that the model can synthesize speech resembling a new speaker. Subsequently, the authors of [11] tested different speaker verification models with Tacotron2 and showed that the LDE (learnable dictionary encoding) embedding method [26] improved the naturalness and similarity of synthesized speech for new speakers compared with other embedding models. Attentron [12] uses multiple reference utterances rather than a single one, together with two encoders of different granularity, to extract more timbre-related information. Based on the fully end-to-end VITS model, YourTTS [27] achieves zero-shot voice cloning by adding a speaker encoder and a speaker consistency loss, and multilingual voice cloning through language embeddings.

These voice cloning methods preserve speaker-specific features in synthesized speech, but they cannot control other aspects of speech that are not captured by the text or the speaker embedding, such as tone of voice, speaking rate, emphasis, and emotion. This shortcoming limits the applications of voice cloning.

2.2. Expressive Speech Synthesis

Expressive speech synthesis aims to make synthesized speech more expressive. Current research provides prosody-related variable information to synthesize richer prosody and more vivid speech, thereby alleviating the one-to-many problem. Depending on how prosody is modeled, expressive speech synthesis can be divided into explicit and implicit modeling [28]. Explicit modeling uses directly accessible prosody variables, such as the emotion and style labels of the corpus, and controls speech prosody directly. For example, FastSpeech2 directly extracts the pitch, energy, and phoneme duration of speech as prosody variables. Implicit modeling generally adopts an unsupervised approach in which a network extracts prosody latent variables from reference audio as additional inputs for speech synthesis. The authors of [14] propose a reference encoder that extracts style embeddings from reference audio for expressive speech synthesis. Alternatively, [15] adds global style tokens (GST) on top of the reference encoder to capture the style embedding of the reference audio. Considering the hierarchical structure of prosody, several works combine prosody information at different levels. Mellotron [19] combines explicit and latent style variables to provide finer-grained control over the expressive characteristics of synthesized speech. In [17], global and frame-level reference encoders extract the prosody style information of the reference spectrum, which helps generate more natural prosody during inference. However, these methods are limited to speakers in the training set and cannot be used directly to synthesize the voices of speakers not seen during training.

3. Proposed Method

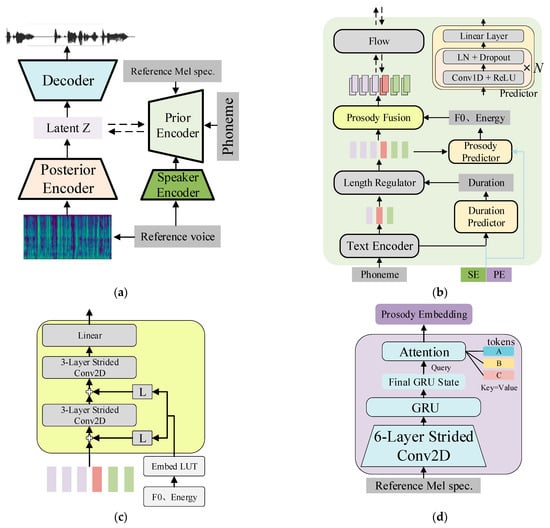

Our expressive voice cloning model ZSE-VITS is built on VITS. The whole model can be formulated as a conditional variational autoencoder; its architecture is shown in Figure 1a. The model consists of four parts: a speaker encoder, a posterior encoder, a prior encoder, and a decoder. The posterior encoder extracts the intermediate latent variable $z$ from the speech waveform $x$, and the decoder reconstructs the speech waveform from $z$, as shown in Equations (1) and (2).

$$z \sim q_\phi(z \mid x) \qquad (1)$$

$$\hat{x} = \mathrm{Dec}(z) \qquad (2)$$

Figure 1.

Model architecture scheme: (a) overall structure; (b) prior encoder (SE: speaker embedding, PE: prosody embedding); (c) prosody fusion (L: linear, LUT: lookup table); (d) prosody style extractor.

To achieve conditional control of the generated speech, we use the prior encoder to model a conditional prior distribution $p_\theta(z \mid c)$. The condition $c$ includes the speaker embedding extracted by the speaker encoder, the phoneme sequence $c_{text}$, and the prosody information $c_{prosody}$. The conditional variational autoencoder loss usually combines a reconstruction loss and a prior regularization loss, as shown in Equation (3).

$$\mathcal{L}_{cvae} = \mathcal{L}_{recon} + \mathcal{L}_{kl}, \quad \mathcal{L}_{kl} = D_{KL}\big(q_\phi(z \mid x) \,\|\, p_\theta(z \mid c)\big) \qquad (3)$$

Here, $\mathcal{L}_{recon}$ is the L1 distance between the Mel spectrograms of the generated and ground-truth waveforms, and $\mathcal{L}_{kl}$ is the KL divergence between the posterior and the conditional prior. During inference, the prior encoder samples $z$ from the prior distribution given the condition information, and the decoder then generates the waveform from $z$. The four modules are described in detail below.
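The two loss terms above can be illustrated with a minimal NumPy sketch. It assumes that the posterior and prior are diagonal Gaussians given by means and log-variances over matching frames, and it ignores the normalizing flow (equivalent to an identity flow), so the KL term has a closed form; with a flow, as in VITS, the KL term is instead estimated from a posterior sample. The function names are illustrative, not taken from the authors' code.

```python
import numpy as np

def l1_mel_loss(mel_true, mel_pred):
    """Reconstruction term: mean absolute (L1) error between Mel spectrograms."""
    return float(np.mean(np.abs(mel_true - mel_pred)))

def kl_diag_gaussians(mu_q, logvar_q, mu_p, logvar_p):
    """Closed-form KL(q || p) between diagonal Gaussians.

    Inputs have shape (frames, dims); the KL is summed over dims and
    averaged over frames.
    """
    var_q = np.exp(logvar_q)
    var_p = np.exp(logvar_p)
    kl = 0.5 * (logvar_p - logvar_q + (var_q + (mu_q - mu_p) ** 2) / var_p - 1.0)
    return float(np.mean(np.sum(kl, axis=-1)))

def cvae_loss(mel_true, mel_pred, mu_q, logvar_q, mu_p, logvar_p):
    """Reconstruction loss plus prior-regularization (KL) loss."""
    return l1_mel_loss(mel_true, mel_pred) + kl_diag_gaussians(mu_q, logvar_q, mu_p, logvar_p)
```

When the posterior equals the prior the KL term vanishes, so the loss reduces to the L1 Mel error.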

3.1. Speaker Encoder

In current zero-shot voice cloning methods, a pre-trained speaker recognition model is generally used as the speaker encoder, and the embedding from the layer preceding its classifier is taken as the speaker embedding. Trained on a multi-speaker corpus, the speaker encoder learns a continuous speaker embedding space that generalizes to the timbre of unseen speakers. Better-performing speaker recognition models generally provide more robust speaker embeddings for voice cloning. We therefore use TitaNet, a high-performing speaker recognition model, as the speaker encoder that provides speaker embeddings for ZSE-VITS.

3.2. Posterior Encoder

The posterior encoder encodes speech waveforms into intermediate latent variables. It uses the same network structure as the posterior encoder of VITS: a stack of non-causal residual convolution blocks followed by a linear layer that outputs the mean and variance of the posterior normal distribution.

3.3. Prior Encoder

As shown in Figure 1b, the prior encoder consists of a text encoder, a prosody style extractor, a prosody prediction and fusion module, a duration predictor, and a normalizing flow. The text encoder encodes the input phoneme sequence into a text latent feature sequence. The prosody style extractor extracts a prosody embedding from the reference speech. The duration predictor predicts phoneme durations from the speaker embedding, the prosody embedding, and the text latent feature sequence, and the text latent feature sequence is then expanded into a frame-level latent feature sequence according to these durations. The prosody predictor predicts the pitch and energy of each frame from the frame-level latent feature sequence, the speaker embedding, and the prosody embedding. The prosody fusion module then embeds the pitch and energy into the model and predicts the mean and variance of the Gaussian prior distribution. Finally, the normalizing flow applies a series of invertible transformations, with the speaker embedding fed into its affine coupling layers, to convert the Gaussian prior into a more complex distribution that carries speaker information.

3.3.1. Text Encoder

The text encoder uses a transformer encoder structure [29]: it converts the input phoneme sequence into a one-hot encoding sequence and generates the text latent feature sequence from it.

3.3.2. Prosody Style Extractor

The prosody style extractor follows the reference encoder of [14] and the style-token layer of GST [15] to extract a prosody embedding from the reference Mel spectrogram. The module first extracts high-dimensional sequence features from the spectrogram using six 2D convolutional layers with batch normalization and then uses a GRU to encode these features into its final hidden state vector. This hidden state vector serves as the query of an attention layer whose keys and values are a set of learnable global style token vectors, and the attention layer outputs the prosody embedding.
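A simplified sketch of the attention step follows. It assumes a single attention head, hypothetical projection matrices `w_q` and `w_k`, and the style tokens used directly as values (GST itself uses multi-head attention). It shows how the final GRU state acts as the query and how the prosody embedding becomes a convex combination of the learnable tokens.

```python
import numpy as np

def softmax(x):
    x = x - np.max(x)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum()

def style_token_attention(query, tokens, w_q, w_k):
    """Single-head dot-product attention over learnable style tokens.

    query:  (d_ref,)        final GRU hidden state of the reference encoder
    tokens: (n_tok, d_tok)  learnable global style tokens (keys and values)
    w_q:    (d_ref, d_att)  hypothetical query projection
    w_k:    (d_tok, d_att)  hypothetical key projection
    Returns the prosody embedding (d_tok,) and the attention weights (n_tok,).
    """
    q = query @ w_q                        # (d_att,)
    k = tokens @ w_k                       # (n_tok, d_att)
    scores = k @ q / np.sqrt(q.shape[0])   # scaled dot-product scores, (n_tok,)
    weights = softmax(scores)
    embedding = weights @ tokens           # weighted sum of the tokens
    return embedding, weights
```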

3.3.3. Duration Predictor

The duration predictor predicts phoneme durations from the speaker embedding, the prosody embedding, and the text latent feature sequence. Using the prosody embedding extracted from the reference spectrogram as an additional condition allows diverse duration variations. Instead of the stochastic duration predictor of VITS, we use two 1D convolutional layers with ReLU activation, each followed by layer normalization and dropout, and a final linear layer that predicts the duration. The duration predictor is optimized with the mean squared error (MSE) loss between the predicted and ground-truth phoneme durations. Ground-truth phoneme durations were extracted with the Montreal Forced Aligner (MFA) speech-text alignment tool (https://github.com/MontrealCorpusTools/Montreal-Forced-Aligner, accessed on 13 April 2022).
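The sketch below illustrates the duration loss and the expansion of phoneme-level features to frame level described in the prior-encoder overview. It assumes integer frame durations such as those obtained from MFA alignments. `length_regulate` is an illustrative name; repeating each phoneme vector for its duration is one common way to perform the expansion, not necessarily the authors' exact implementation.

```python
import numpy as np

def duration_mse(pred, target):
    """MSE between predicted and ground-truth phoneme durations (in frames)."""
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    return float(np.mean((pred - target) ** 2))

def length_regulate(text_feats, durations):
    """Expand phoneme-level features (n_phones, dim) to frame level.

    Row i is repeated durations[i] times; a phoneme with duration 0
    contributes no frames.
    """
    durations = np.asarray(durations, dtype=int)
    return np.repeat(text_feats, durations, axis=0)
```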

3.3.4. Prosody Prediction and Prosody Fusion Module

Prosody diversity and controllability depend on whether the prosody predictor can predict reasonable and diverse prosody information at inference time, and whether the predicted values can be adjusted manually and conveniently. The prosody prediction model includes a pitch predictor and an energy predictor. Both have the same network structure as the duration predictor, except that the pitch predictor uses six convolutional layers plus an additional linear layer that predicts the mean and variance of the pitch, and the energy predictor uses four convolutional layers. The predictors take the frame-level text latent feature sequence, the speaker embedding, and the prosody embedding as input to predict pitch and energy, and they are optimized with the MSE loss between the predicted and ground-truth values.

As shown in Figure 1c, we quantize the pitch and energy of each frame into 256 values and convert them into embeddings using an embedding lookup table. The resulting prosody embedding sequence and the text latent feature sequence are then fused through multiple convolution layers. Finally, a linear layer predicts the mean and variance of the prior Gaussian distribution, now fused with prosody information.
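The quantization and lookup step can be sketched as follows. The bin range, the choice between linear and log-spaced bins, and the embedding table are assumptions for illustration; the section only states that 256 values are used. With 255 boundaries, `np.digitize` maps every value, including out-of-range ones, to an index in 0..255.

```python
import numpy as np

N_BINS = 256  # pitch and energy are each quantized into 256 values

def make_bins(v_min, v_max, n_bins=N_BINS, log_scale=False):
    """Return n_bins - 1 increasing boundaries so np.digitize yields 0..n_bins-1."""
    if log_scale:  # log-spaced boundaries; requires v_min > 0
        return np.exp(np.linspace(np.log(v_min), np.log(v_max), n_bins - 1))
    return np.linspace(v_min, v_max, n_bins - 1)

def quantize(values, bins):
    """Map continuous frame-level values to integer bin indices."""
    return np.digitize(values, bins)

def prosody_embedding(values, bins, table):
    """Look up one embedding vector per frame; table has shape (n_bins, dim)."""
    return table[quantize(values, bins)]
```

In a trained model the table would be a learned parameter; the fused convolution layers that follow are omitted here.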

3.3.5. Normalizing Flow

As in VITS, the normalizing flow is composed of multiple affine coupling layers. Each coupling layer takes the speaker embedding as an input and transforms the normal distribution into a complex prior distribution that carries speaker information.
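To show how a coupling layer can condition on the speaker embedding while remaining exactly invertible, here is a toy NumPy affine coupling layer in its general scale-and-shift form. The random linear maps stand in for the learned networks of a real flow and are purely hypothetical. Half of the channels pass through unchanged and, together with the speaker embedding `g`, parameterize the transform of the other half.

```python
import numpy as np

class AffineCoupling:
    """Toy affine coupling layer conditioned on a speaker embedding g."""

    def __init__(self, dim, spk_dim, seed=0):
        assert dim % 2 == 0
        rng = np.random.default_rng(seed)
        half = dim // 2
        # Hypothetical linear "networks" producing log-scale and shift.
        self.w_s = rng.normal(scale=0.1, size=(half + spk_dim, half))
        self.w_t = rng.normal(scale=0.1, size=(half + spk_dim, half))

    def _params(self, x_a, g):
        # Concatenate the untouched half with the speaker embedding (per frame).
        g_b = np.broadcast_to(g, x_a.shape[:-1] + g.shape)
        h = np.concatenate([x_a, g_b], axis=-1)
        log_s = np.tanh(h @ self.w_s)  # bounded log-scale for stability
        t = h @ self.w_t
        return log_s, t

    def forward(self, x, g):
        x_a, x_b = np.split(x, 2, axis=-1)
        log_s, t = self._params(x_a, g)
        y_b = x_b * np.exp(log_s) + t
        logdet = np.sum(log_s, axis=-1)  # log|det J| per frame
        return np.concatenate([x_a, y_b], axis=-1), logdet

    def inverse(self, y, g):
        y_a, y_b = np.split(y, 2, axis=-1)
        log_s, t = self._params(y_a, g)  # y_a == x_a, so parameters match
        x_b = (y_b - t) * np.exp(-log_s)
        return np.concatenate([y_a, x_b], axis=-1)
```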

3.4. Decoder

The decoder generates audio waveforms from the extracted intermediate latent variable z. Its network structure is the same as that of the HiFi-GAN [5] generator. Due to memory constraints, short segments of z, rather than the full sequence, are fed to the decoder during training to generate the corresponding audio segments. As in VITS, we use GAN-based [29] training to improve the quality of the reconstructed speech. The discriminators follow HiFi-GAN’s multi-period discriminator (MPD) and multi-scale discriminator (MSD).
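Segment selection can be sketched as below. The sketch assumes a hop length of 256 samples (the value used in Section 4.1.1), so latent frame `i` corresponds to waveform samples `i * 256` to `(i + 1) * 256`. Choosing the start frame uniformly at random and the 32-frame segment length in the usage are hypothetical choices.

```python
import numpy as np

HOP = 256  # waveform samples per latent frame (assumed hop length)

def random_segment(z, wav, seg_frames, rng):
    """Pick aligned segments of the latent z and of the target waveform.

    z:   (channels, n_frames) intermediate latent variable
    wav: (n_frames * HOP,)    ground-truth waveform
    """
    n_frames = z.shape[-1]
    # rng.integers excludes the upper bound, so start is in [0, n_frames - seg_frames].
    start = int(rng.integers(0, max(n_frames - seg_frames, 0) + 1))
    z_seg = z[:, start:start + seg_frames]
    wav_seg = wav[start * HOP:(start + seg_frames) * HOP]
    return z_seg, wav_seg, start
```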

4. Experiments and Results

4.1. Experiment Settings

4.1.1. Datasets and Preprocessing

We trained and evaluated our models on the VCTK [30] and LibriTTS [31] datasets. The VCTK dataset contains English audio clips and the corresponding texts from 109 speakers, with a total duration of 44 h and a sampling rate of 44 kHz. Three male and three female speakers were held out of training as unseen new speakers. The LibriTTS dataset contains 2456 speakers, with a total duration of 585 h and a sampling rate of 24 kHz. For training, we used 1151 speakers from the train-clean-100 and train-clean-360 subsets, and 10 speakers from the test-clean subset were selected as the test set of unseen new speakers. In the experiments, the open-source Montreal Forced Aligner was used to obtain text-speech alignments, and Pyworld was used to extract pitch. Because many VCTK recordings begin with long silences, we removed long silent segments based on the alignment information. All speech was then downsampled to 22,050 Hz. For the linear spectrogram, the FFT size, window length, and hop length were set to 1024, 1024, and 256 samples, respectively.
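Alignment-based silence removal can be sketched as follows. The sketch assumes the aligner output has been converted to time-ordered `(start_s, end_s, label)` tuples and that silence is marked by labels in the hypothetical set `SILENCE`. Only leading and trailing silence is trimmed here, with an optional margin.

```python
import numpy as np

SILENCE = {"", "sil", "sp", "spn"}  # hypothetical silence labels

def trim_silence(wav, sr, intervals, margin_s=0.05):
    """Cut leading/trailing silence using forced-alignment intervals.

    intervals: time-ordered list of (start_s, end_s, label) tuples.
    Returns the waveform unchanged if no non-silent interval exists.
    """
    speech = [(s, e) for s, e, lab in intervals if lab not in SILENCE]
    if not speech:
        return wav
    start = max(0.0, speech[0][0] - margin_s)
    end = min(len(wav) / sr, speech[-1][1] + margin_s)
    return wav[int(round(start * sr)):int(round(end * sr))]
```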

The speaker encoder was trained on the large-scale speaker recognition datasets VoxCeleb1 [32] and VoxCeleb2 [33], which contain 1211 and 5994 speakers, respectively, at a sampling rate of 16 kHz. The input to the speaker encoder was an 80-dimensional Mel spectrogram computed with an FFT size, window length, and hop length of 512, 400, and 160 samples, respectively. During training, the data were augmented with reverberation and additive noise.

4.1.2. Implementation Details

TitaNet was trained on VoxCeleb1 and VoxCeleb2 and achieved an equal error rate (EER) of 1.22% on the VoxCeleb1 test set. Its input is an 80-dimensional Mel spectrogram, and its output speaker embedding has 256 dimensions. All voice cloning models were trained for 200k steps in PyTorch on four NVIDIA Tesla V100 GPUs (16 GB each) with a batch size of 32, using the Adam optimizer with ε = 1 × 10⁻⁶ and a learning rate of 1 × 10⁻³.

4.1.3. Comparison Method

To experimentally analyze the voice cloning and prosody modeling performance of the ZSE-VITS model, the following models were used for evaluation in this paper:

- (1) Ground truth speech: speech segments selected from the training and test sets.

- (2) VITS: a VITS model trained with a speaker lookup table; it can only synthesize speech in the timbres of the speakers in the lookup table.

- (3) EVC: we reimplemented EVC based on Mellotron. The speaker encoder in the original paper uses a d-vector, which we replaced with a pre-trained TitaNet. The original vocoder, WaveGlow [2], was replaced with HiFi-GAN for higher generation quality. The training datasets were the same as for our proposed method.

- (4) ZS-VITS: a VITS model trained with speaker embeddings extracted by the pre-trained TitaNet, used as the baseline system to verify the effectiveness of the proposed prosody modeling.

- (5) ZSE-VITS: the expressive voice cloning model proposed in this paper.

4.1.4. Evaluation Metric

Voice cloning is usually evaluated with the mean opinion score (MOS) and the similarity mean opinion score (SMOS), which compare the speech quality and timbre similarity of different models for speakers seen and unseen during training. The MOS and SMOS scoring criteria are shown in Table 1 and Table 2, respectively.

Table 1.

MOS scoring criteria.

Table 2.

SMOS scoring criteria.

4.2. Cloned Speech Naturalness and Similarity

We selected 20 utterances from the training set as the evaluation set for seen speakers and 20 utterances from the test set as the evaluation set for unseen speakers. Ten listeners proficient in English rated the samples over headphones on speech quality and on timbre similarity to the reference speech. The scores were averaged and 95% confidence intervals were computed; the results are shown in Table 3. ZSE-VITS achieves the highest naturalness for both seen and unseen speakers, which we attribute to its explicit modeling of prosody information. Explicit prosody modeling also improves timbre similarity because it relieves the model of part of the burden of timbre modeling; moreover, listeners tend to perceive speech with a similar rhythm as more similar in timbre. Thus, both timbre and prosody contribute to voice cloning performance. VITS outperforms EVC in naturalness and similarity because end-to-end speech synthesis avoids the mismatch problem of two-stage speech synthesis.
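The averaged scores and 95% confidence intervals can be computed as in the sketch below. The section does not specify the interval method; this sketch assumes a normal approximation (mean ± 1.96 · s/√n over all ratings for one system), which is reasonable for about 200 ratings (10 listeners × 20 utterances).

```python
import math
import statistics

def mos_with_ci(scores, z=1.96):
    """Mean opinion score and half-width of a normal-approximation CI.

    scores: all ratings for one system (e.g. every listener x utterance).
    z=1.96 gives a 95% interval; requires at least two ratings.
    """
    n = len(scores)
    mean = statistics.fmean(scores)
    sd = statistics.stdev(scores)  # sample standard deviation (n - 1)
    half_width = z * sd / math.sqrt(n)
    return mean, half_width
```

A result such as `(4.1, 0.08)` would be reported as 4.10 ± 0.08.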

Table 3.

MOS and SMOS scores of different methods.

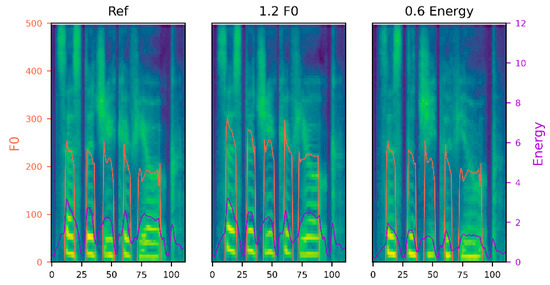

4.3. Pitch and Energy Control of Cloned Speech

Prosody features of cloned speech can be controlled by modifying the feature values predicted by the model. To explore the prosody control ability of the proposed model and the extent to which different prosody features influence each other, we (a) scaled the predicted pitch by a factor of 1.2 while keeping the predicted energy fixed and (b) scaled the predicted energy by a factor of 1.2 while keeping the predicted pitch fixed. The results are shown in Figure 2. Changing one prosody feature had little impact on the other, indicating that the proposed method can control prosody features independently.
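At the model input, the two manipulations amount to multiplying one predicted feature by its factor and leaving the other untouched, as in this sketch. It assumes pitch is in Hz with 0 marking unvoiced frames; if a model predicts normalized pitch instead, the values would need to be denormalized before scaling. Whether the synthesized output also stays independent is what Figure 2 examines.

```python
import numpy as np

def scale_prosody(pitch_hz, energy, pitch_scale=1.0, energy_scale=1.0):
    """Globally rescale predicted frame-level pitch and energy independently.

    Pitch is assumed to be in Hz with 0 for unvoiced frames, so unvoiced
    frames stay at 0. Each feature is multiplied only by its own factor.
    """
    pitch_hz = np.asarray(pitch_hz, dtype=float)
    energy = np.asarray(energy, dtype=float)
    return pitch_hz * pitch_scale, energy * energy_scale
```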

Figure 2.

Spectrograms illustrating pitch (F0) and energy control.

We use the objective metrics of [13] to evaluate the prosody and timbre similarity of cloned speech. MCD (Mel cepstral distortion) reflects the difference between the cloned speech and the real reference speech, while VDE (voicing decision error), GPE (gross pitch error), and FFE (F0 frame error) reflect differences in pitch characteristics. We selected 10 utterances from each of the 10 test-set speakers (unseen during training) and extracted the real pitch, energy, duration, and speaker embedding from these utterances. From this information, the model resynthesized the speech, and the prosody difference between the synthesized and real reference speech was evaluated quantitatively. As shown in Table 4, ZSE-VITS achieved the best performance. Its MCD is the lowest because ZSE-VITS models energy as well as pitch, whereas ZS-VITS models only speaker characteristics and must infer prosody implicitly through the network itself. Both the timbre modeling in the normalizing flow of ZSE-VITS and the fine-grained prosody modeling in its prior encoder allow ZSE-VITS to outperform EVC. These results indicate that our method can synthesize the corresponding speech given prosody information, laying a foundation for prosody adjustment.
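For reference, the pitch metrics and MCD can be computed as in the sketch below, using their usual definitions. VDE is the fraction of frames with a voicing mismatch. GPE is the fraction of frames voiced in both tracks whose F0 deviates by more than 20%. FFE counts both error types over all frames. The sketch assumes time-aligned frames (which holds here because speech is resynthesized with ground-truth durations) and, for MCD, Mel cepstra with the energy coefficient c0 in column 0, which is excluded.

```python
import numpy as np

def pitch_errors(f0_ref, f0_syn, tol=0.2):
    """VDE, GPE and FFE for two time-aligned F0 tracks (0 = unvoiced)."""
    f0_ref = np.asarray(f0_ref, dtype=float)
    f0_syn = np.asarray(f0_syn, dtype=float)
    v_ref, v_syn = f0_ref > 0, f0_syn > 0
    n = len(f0_ref)
    voicing_err = v_ref != v_syn            # voiced/unvoiced mismatch
    both = v_ref & v_syn                    # frames voiced in both tracks
    gross = np.zeros(n, dtype=bool)
    gross[both] = np.abs(f0_syn[both] - f0_ref[both]) > tol * f0_ref[both]
    vde = voicing_err.sum() / n
    gpe = gross.sum() / max(both.sum(), 1)
    ffe = (voicing_err.sum() + gross.sum()) / n
    return float(vde), float(gpe), float(ffe)

def mcd(mcep_ref, mcep_syn):
    """Frame-averaged Mel cepstral distortion in dB, excluding c0."""
    diff = np.asarray(mcep_ref, dtype=float)[:, 1:] - np.asarray(mcep_syn, dtype=float)[:, 1:]
    per_frame = 10.0 / np.log(10.0) * np.sqrt(2.0 * np.sum(diff ** 2, axis=1))
    return float(np.mean(per_frame))
```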

Table 4.

Objective prosody evaluation (The down arrow indicates that the lower the metric value, the better the performance).

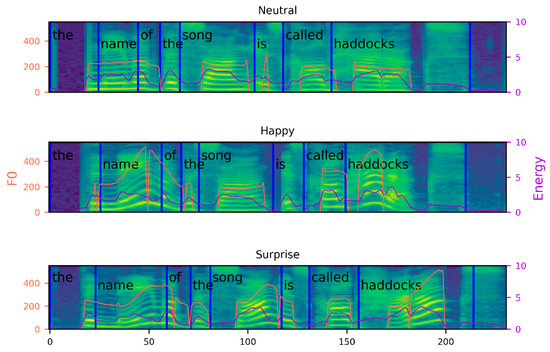

4.4. Prosody Prediction

Reference utterances with different emotions from the emotional corpus ESD were fed to the model; the prosody predictor then predicted different prosody features for the same phoneme sequence, and these features were input into the voice cloning model to generate speech in different styles. Figure 3 compares speech generated with neutral, happy, and surprised reference audio and shows how the predicted pitch changes with the style. In the happy style, the average pitch is higher and the pitch varies more dynamically. Similarly, in the surprised style, the average pitch is higher, the pitch varies greatly, and the pitch on the nouns of the sentence shows an upward trend.

Figure 3.

Spectrograms of speech synthesized with different emotional reference utterances.

To objectively evaluate how well the model extracts prosody style from reference spectrograms, we used an emotion recognition model (https://huggingface.co/ehcalabres/wav2vec2-lg-xlsr-en-speech-emotion-recognition, accessed on 18 July 2022) to classify speech generated by ZSE-VITS with different emotional reference utterances. The recognition results are shown in Table 5. Most synthesized utterances were classified into the emotion category of their reference speech. This shows that, given emotional reference speech, the prosody style extractor extracts meaningful prosody embeddings that shape the values predicted by the prosody predictor and yield speech in the corresponding style, which verifies the effectiveness of the prosody prediction.

Table 5.

Emotion recognition results.

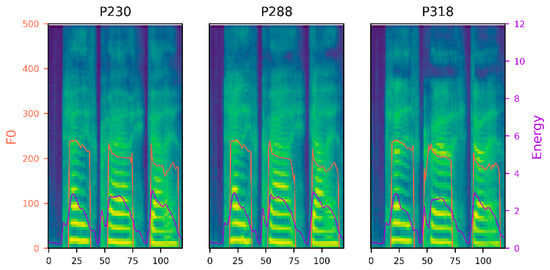

4.5. Decoupling of Prosody Information and Speaker Timbre

The decoupling of prosody information and speaker information is of great significance. It means that we can change the speaker timbre in speech while preserving prosody information, similar to voice conversion. At the same time, we can also transfer prosody style from reference speech to synthesized speech without affecting timbre in synthesized speech. In this section, we test the effect of speaker information change on prosody characteristics and the effect of prosody characteristics change on speaker information in synthesized speech, respectively.

Given the same pitch, energy, and duration information, we input different speaker embeddings to synthesize speech. As shown in Figure 4, the prosody characteristics of the speech synthesized by our proposed method do not change significantly, indicating that a change in speaker embeddings has little impact on prosody characteristics.

Figure 4.

Given the same pitch, energy, and duration information, input different speaker embeddings to synthesize speech.

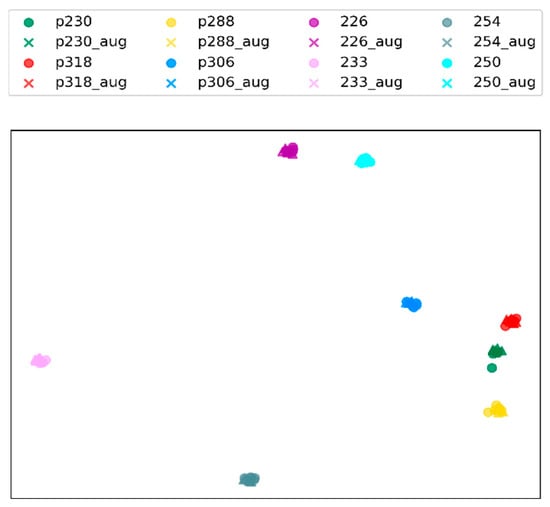

We input the same speaker embedding, scaled the input pitch and energy by factors ranging from 0.8 to 1.3, and then extracted speaker embeddings from the synthesized speech. Figure 5 visualizes all speaker embeddings with UMAP (https://github.com/lmcinnes/umap, accessed on 11 May 2022). The speaker embeddings extracted from the synthesized speech and from the original speaker’s speech form a clear cluster, indicating that moderate changes in prosody characteristics have little influence on speaker information.

Figure 5.

Speaker embedding clustering visualization.

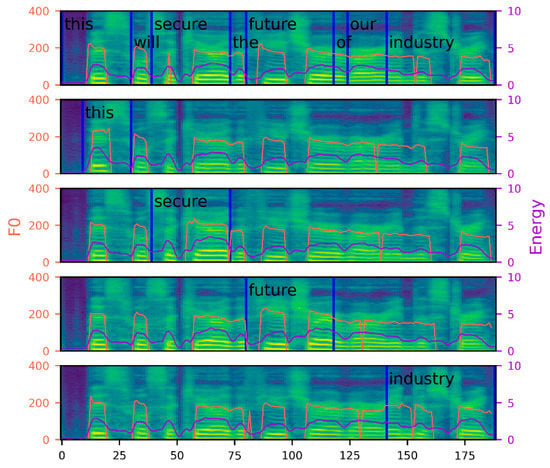

4.6. Local Prosody Adjustment

In expressive speech synthesis, emphasis control (lexical focus) is important for signaling which part of a sentence carries the key information. Because our method models frame-level prosody, local prosody can be adjusted simply by using the phoneme boundaries of the phoneme sequence, for example to emphasize certain words in a sentence. We synthesized the text “this will secure the future of our industry” and, to emphasize one word at a time, separately scaled the predicted pitch and energy of “this,” “secure,” “future,” and “industry” by a factor of 1.2. As shown in Figure 6, the pitch and energy at the corresponding word position in the synthesized speech increase accordingly without affecting other parts of the speech, indicating that our method has good word-level prosody control ability.
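Word-level emphasis can be sketched by converting phoneme durations into frame boundaries and scaling only the frames of the chosen word. The word-to-phoneme span list is a hypothetical input (for example, derived from a pronunciation lexicon), and the same function applies to either pitch or energy.

```python
import numpy as np

def emphasize(values, phone_durations, word_phone_spans, word_idx, scale=1.2):
    """Scale frame-level values (pitch or energy) inside one word only.

    phone_durations:  number of frames per phoneme
    word_phone_spans: hypothetical list of (first_phone, end_phone_exclusive)
                      per word
    """
    # Frame index at which each phoneme starts, plus the total length.
    bounds = np.concatenate([[0], np.cumsum(phone_durations)])
    p_start, p_end = word_phone_spans[word_idx]
    start, end = bounds[p_start], bounds[p_end]
    out = np.array(values, dtype=float)
    out[start:end] *= scale
    return out
```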

Figure 6.

Spectrograms demonstrating local prosody emphasis.

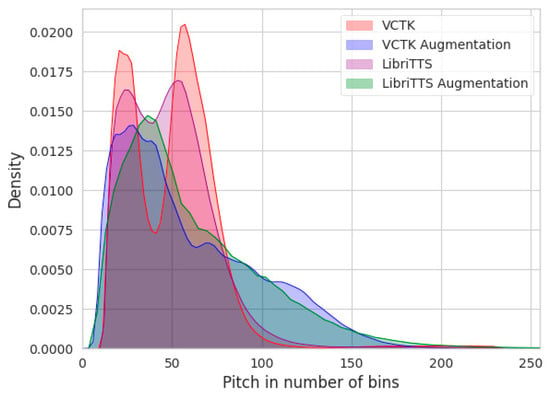

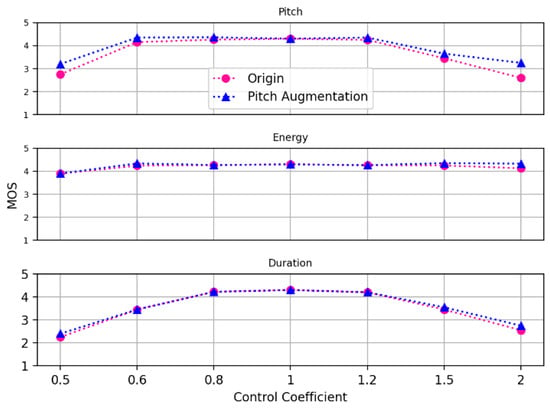

4.7. Influence of Scaling Factor Change on Speech Quality

In our experiments, we found that the model can synthesize the corresponding speech when given real pitch, energy, and duration information, but the results are poor for pitches at the high or low end of the corpus distribution. We attribute this to the reading style of the training corpus, which has little prosodic variation and few very high or very low pitches. We therefore used the Pyworld tool (https://github.com/JeremyCCHsu/Python-Wrapper-for-World-Vocoder, accessed on 21 April 2022) to shift the pitch of the speech in the corpus, expanding the training corpus and widening its pitch distribution. As shown in Figure 7, the pitch distribution of the corpus becomes wider after pitch augmentation.
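A sketch of the pitch shift follows, assuming a multiplicative shift expressed in semitones; the section does not state the shift amounts or whether the offset is additive or multiplicative. `shift_f0` operates on an F0 contour, and `pitch_shift_with_world` uses pyworld's `harvest`, `cheaptrick`, `d4c`, and `synthesize` to analyse a waveform, shift its F0 while keeping the spectral envelope and aperiodicity, and resynthesize it.

```python
import numpy as np

def shift_f0(f0, semitones):
    """Shift an F0 contour by a number of semitones; unvoiced frames (0) stay 0."""
    f0 = np.asarray(f0, dtype=np.float64)
    return f0 * 2.0 ** (semitones / 12.0)

def pitch_shift_with_world(x, fs, semitones):
    """WORLD analysis, F0 shift, and resynthesis (requires the pyworld package)."""
    import pyworld as pw  # imported lazily so shift_f0 works without pyworld

    x = np.ascontiguousarray(x, dtype=np.float64)
    f0, t = pw.harvest(x, fs)          # F0 contour and frame times
    sp = pw.cheaptrick(x, f0, t, fs)   # spectral envelope (kept unchanged)
    ap = pw.d4c(x, f0, t, fs)          # aperiodicity (kept unchanged)
    return pw.synthesize(shift_f0(f0, semitones), sp, ap, fs)
```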

Figure 7.

Quantized pitch probability distribution diagram.

We found that explicit prosody properties can only be adjusted within a limited range; beyond it, the quality of the synthesized speech degrades. We selected 30 utterances from the test set, scaled the prosody properties and phoneme durations predicted by the model by different scaling factors, synthesized the speech, and rated it subjectively. As shown in Figure 8, duration is the most sensitive to the scaling factor, followed by pitch, while energy is the least sensitive. Keeping the scaling factors within reasonable intervals (pitch from 0.6 to 1.2, energy from 0.6 to 2, and duration from 0.8 to 1.2) has little effect on speech naturalness. Pitch augmentation improves the naturalness of the synthesized speech when the scaling factor is very large or very small.
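If these factors are exposed to users, one option (our assumption, not something the paper describes) is to clamp requested factors to the intervals reported above before synthesis. The sketch also assumes durations are counted in whole frames, so scaled durations are rounded to the nearest frame and kept at least one frame long. `REPORTED_SAFE_RANGES` and the helper names are hypothetical.

```python
import numpy as np

# Intervals reported above in which scaling has little effect on naturalness.
REPORTED_SAFE_RANGES = {
    "pitch": (0.6, 1.2),
    "energy": (0.6, 2.0),
    "duration": (0.8, 1.2),
}


def clamp_factor(name: str, factor: float) -> float:
    """Restrict a requested scaling factor to the reported interval for `name`."""
    low, high = REPORTED_SAFE_RANGES[name]
    return min(max(factor, low), high)


def scale_contour(values, factor: float) -> np.ndarray:
    """Scale a frame-level pitch or energy contour; zero (unvoiced) frames stay zero."""
    return np.asarray(values, dtype=float) * factor


def scale_durations(durations, factor: float) -> np.ndarray:
    """Scale per-phoneme durations (in frames), round to the nearest whole
    frame, and keep every phoneme at least one frame long."""
    scaled = np.rint(np.asarray(durations, dtype=float) * factor)
    return np.maximum(scaled, 1).astype(int)
```

For instance, `clamp_factor("duration", 1.5)` returns 1.2, while an energy factor of 1.5 passes through unchanged, reflecting that energy tolerated the widest range in the listening test.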

Figure 8.

Subjective ratings for different scaling factors.

5. Conclusions

This paper proposes ZSE-VITS, a novel expressive voice cloning method based on VITS. ZSE-VITS adopts the speaker recognition model TitaNet as the speaker encoder to provide speaker embeddings for voice cloning. To decouple speaker and prosody information, ZSE-VITS models prosody directly through explicit prosody information prediction and fusion. The method uses pitch augmentation to improve the generalization ability of the prosody model and fine-tunes the prosody predictor on an emotional corpus to increase the diversity of the predicted prosody. Given the input text and a reference speech sample from an unseen speaker, expressive cloned speech can be generated in a zero-shot manner. Experiments on multi-speaker datasets show that the proposed method achieves better timbre similarity for both seen and unseen speakers, particularly unseen ones. The results also show that the pitch and energy of cloned speech can be controlled independently within a proper range, allowing both global and local prosody adjustment. Overall, the method improves the expressiveness and prosody controllability of cloned speech while greatly reducing the interaction between speaker and prosody information.

5.1. Practical Application

Our proposed method can generate expressive cloned speech in a zero-shot manner with better speech quality than previous expressive voice cloning methods. This capability can greatly reduce the need for a high-quality corpus and lower application costs, thereby lowering the threshold for personalized speech synthesis. With only a small amount of data, many applications, such as customized voice assistants, audio-book narration, and movie dubbing, can be realized more efficiently. The improved expressiveness of the generated speech also enhances the user experience.

5.2. Limitations and Future Development

Our proposed method still has some limitations. Although it allows global and local prosody in cloned speech to be adjusted, excessive prosody adjustment causes speech distortion. Pitch augmentation mitigates this problem to some extent, but there is still room for improvement. In addition, a clear gap remains between the cloned speaker timbre and the real speaker timbre. This may be mainly because the speaker encoder is transferred from the speaker recognition task rather than designed to extract the specific timbre information required for voice cloning.

These shortcomings limit the practical effectiveness of the proposed method. In future work, a more effective prosody information fusion module therefore deserves further research to make prosody adjustment more robust. In addition, adjusting prosody through the prosody predictor, rather than directly modifying the explicit prosody features, may achieve the desired prosody more conveniently and effectively. For speaker embedding extraction, we could jointly fine-tune the overall model after pre-training the speaker encoder, which may drive the speaker encoder to focus more on the speaker information needed for voice cloning.

Author Contributions

Supervision, L.Z.; writing—original draft, J.L.; writing—review and editing, L.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. The VCTK dataset can be found here: https://datashare.ed.ac.uk/handle/10283/3443, accessed on 1 April 2022. The LibriTTS dataset can be found here: http://www.openslr.org/60/, accessed on 1 April 2022.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Oord, A.; Dieleman, S.; Zen, H.; Simonyan, K.; Vinyals, O.; Graves, A.; Kalchbrenner, N.; Senior, A.W.; Kavukcuoglu, K. WaveNet: A Generative Model for Raw Audio. In Proceedings of the 9th ISCA Speech Synthesis Workshop, Sunnyvale, CA, USA, 13–15 September 2016; ISCA: Baixas, France, 2016; p. 125.

- Prenger, R.; Valle, R.; Catanzaro, B. WaveGlow: A Flow-Based Generative Network for Speech Synthesis. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2019, Brighton, UK, 12–17 May 2019; IEEE: Piscataway Township, NJ, USA, 2019; pp. 3617–3621.

- Shen, J.; Pang, R.; Weiss, R.J.; Schuster, M.; Jaitly, N.; Yang, Z.; Chen, Z.; Zhang, Y.; Wang, Y.; Skerry-Ryan, R.J.; et al. Natural TTS Synthesis by Conditioning WaveNet on Mel Spectrogram Predictions. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2018, Calgary, AB, Canada, 15–20 April 2018; IEEE: Piscataway Township, NJ, USA, 2018; pp. 4779–4783.

- Ren, Y.; Hu, C.; Tan, X.; Qin, T.; Zhao, S.; Zhao, Z.; Liu, T.-Y. FastSpeech 2: Fast and High-Quality End-to-End Text to Speech. In Proceedings of the 9th International Conference on Learning Representations, ICLR 2021, Virtual Event, Austria, 3–7 May 2021.

- Kong, J.; Kim, J.; Bae, J. HiFi-GAN: Generative Adversarial Networks for Efficient and High Fidelity Speech Synthesis. In Advances in Neural Information Processing Systems 33, Proceedings of the Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, Virtual, 6–12 December 2020; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M.-F., Lin, H.-T., Eds.; Neural Information Processing Systems Foundation, Inc. (NeurIPS): La Jolla, CA, USA, 2020.

- Arik, S.Ö.; Chen, J.; Peng, K.; Ping, W.; Zhou, Y. Neural Voice Cloning with a Few Samples. In Advances in Neural Information Processing Systems 31, Proceedings of the Annual Conference on Neural Information Processing Systems 2018, NeurIPS 2018, Montréal, Canada, 3–8 December 2018; Bengio, S., Wallach, H.M., Larochelle, H., Grauman, K., Cesa-Bianchi, N., Garnett, R., Eds.; Neural Information Processing Systems Foundation, Inc. (NeurIPS): La Jolla, CA, USA, 2018; pp. 10040–10050.

- Wang, T.; Tao, J.; Fu, R.; Yi, J.; Wen, Z.; Zhong, R. Spoken Content and Voice Factorization for Few-Shot Speaker Adaptation. In Interspeech 2020, Proceedings of the 21st Annual Conference of the International Speech Communication Association, Virtual Event, Shanghai, China, 25–29 October 2020; Meng, H., Xu, B., Zheng, T.F., Eds.; ISCA: Baixas, France, 2020; pp. 796–800.

- Chen, M.; Tan, X.; Li, B.; Liu, Y.; Qin, T.; Zhao, S.; Liu, T.-Y. AdaSpeech: Adaptive Text to Speech for Custom Voice. In Proceedings of the 9th International Conference on Learning Representations, ICLR 2021, Virtual Event, Austria, 3–7 May 2021.

- Yan, Y.; Tan, X.; Li, B.; Qin, T.; Zhao, S.; Shen, Y.; Liu, T.-Y. AdaSpeech 2: Adaptive Text to Speech with Untranscribed Data. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2021, Toronto, ON, Canada, 6–11 June 2021; IEEE: Piscataway Township, NJ, USA, 2021; pp. 6613–6617.

- Jia, Y.; Zhang, Y.; Weiss, R.J.; Wang, Q.; Shen, J.; Ren, F.; Chen, Z.; Nguyen, P.; Pang, R.; Lopez-Moreno, I.; et al. Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis. In Advances in Neural Information Processing Systems 31, Proceedings of the Annual Conference on Neural Information Processing Systems 2018, NeurIPS 2018, Montréal, Canada, 3–8 December 2018; Bengio, S., Wallach, H.M., Larochelle, H., Grauman, K., Cesa-Bianchi, N., Garnett, R., Eds.; Neural Information Processing Systems Foundation, Inc. (NeurIPS): La Jolla, CA, USA, 2018; pp. 4485–4495.

- Cooper, E.; Lai, C.-I.; Yasuda, Y.; Fang, F.; Wang, X.; Chen, N.; Yamagishi, J. Zero-Shot Multi-Speaker Text-To-Speech with State-Of-The-Art Neural Speaker Embeddings. In Proceedings of the 2020 IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2020, Barcelona, Spain, 4–8 May 2020; IEEE: Piscataway Township, NJ, USA, 2020; pp. 6184–6188.

- Choi, S.; Han, S.; Kim, D.; Ha, S. Attentron: Few-Shot Text-to-Speech Utilizing Attention-Based Variable-Length Embedding. In Proceedings of the Interspeech 2020, 21st Annual Conference of the International Speech Communication Association, Virtual Event, Shanghai, China, 25–29 October 2020; Meng, H., Xu, B., Zheng, T.F., Eds.; ISCA: Baixas, France, 2020; pp. 2007–2011.

- Min, D.; Lee, D.B.; Yang, E.; Hwang, S.J. Meta-StyleSpeech: Multi-Speaker Adaptive Text-to-Speech Generation. In Proceedings of the 38th International Conference on Machine Learning, ICML 2021, Virtual Event, Honolulu, HI, USA, 18–24 July 2021; Meila, M., Zhang, T., Eds.; PMLR: Mc Kees Rocks, PA, USA, 2021; Volume 139, pp. 7748–7759.

- Skerry-Ryan, R.J.; Battenberg, E.; Xiao, Y.; Wang, Y.; Stanton, D.; Shor, J.; Weiss, R.J.; Clark, R.; Saurous, R.A. Towards End-to-End Prosody Transfer for Expressive Speech Synthesis with Tacotron. In Proceedings of the 35th International Conference on Machine Learning, ICML 2018, Stockholmsmässan, Stockholm, Sweden, 10–15 July 2018; Dy, J.G., Krause, A., Eds.; PMLR: Mc Kees Rocks, PA, USA, 2018; Volume 80, pp. 4700–4709.

- Wang, Y.; Stanton, D.; Zhang, Y.; Skerry-Ryan, R.J.; Battenberg, E.; Shor, J.; Xiao, Y.; Jia, Y.; Ren, F.; Saurous, R.A. Style Tokens: Unsupervised Style Modeling, Control and Transfer in End-to-End Speech Synthesis. In Proceedings of the 35th International Conference on Machine Learning, ICML 2018, Stockholmsmässan, Stockholm, Sweden, 10–15 July 2018; Dy, J.G., Krause, A., Eds.; PMLR: Mc Kees Rocks, PA, USA, 2018; Volume 80, pp. 5167–5176.

- Hono, Y.; Tsuboi, K.; Sawada, K.; Hashimoto, K.; Oura, K.; Nankaku, Y.; Tokuda, K. Hierarchical Multi-Grained Generative Model for Expressive Speech Synthesis. In Proceedings of the Interspeech 2020, 21st Annual Conference of the International Speech Communication Association, Virtual Event, Shanghai, China, 25–29 October 2020; Meng, H., Xu, B., Zheng, T.F., Eds.; ISCA: Baixas, France, 2020; pp. 3441–3445.

- Li, X.; Song, C.; Li, J.; Wu, Z.; Jia, J.; Meng, H. Towards Multi-Scale Style Control for Expressive Speech Synthesis. In Proceedings of the Interspeech 2021, 22nd Annual Conference of the International Speech Communication Association, Brno, Czechia, 30 August–3 September 2021; Hermansky, H., Cernocký, H., Burget, L., Lamel, L., Scharenborg, O., Motlícek, P., Eds.; ISCA: Baixas, France, 2021; pp. 4673–4677.

- Neekhara, P.; Hussain, S.; Dubnov, S.; Koushanfar, F.; McAuley, J.J. Expressive Neural Voice Cloning. In Proceedings of the Asian Conference on Machine Learning, ACML 2021, Virtual Event, Singapore, 17–19 November 2021; Balasubramanian, V.N., Tsang, I.W., Eds.; PMLR: Mc Kees Rocks, PA, USA, 2021; Volume 157, pp. 252–267.

- Valle, R.; Li, J.; Prenger, R.; Catanzaro, B. Mellotron: Multispeaker Expressive Voice Synthesis by Conditioning on Rhythm, Pitch and Global Style Tokens. In Proceedings of the 2020 IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2020, Barcelona, Spain, 4–8 May 2020; IEEE: Piscataway Township, NJ, USA, 2020; pp. 6189–6193.

- Liu, S.; Su, D.; Yu, D. Meta-Voice: Fast Few-Shot Style Transfer for Expressive Voice Cloning Using Meta Learning. arXiv 2021, arXiv:2111.07218.

- Finn, C.; Abbeel, P.; Levine, S. Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks. In Proceedings of the 34th International Conference on Machine Learning, ICML 2017, Sydney, NSW, Australia, 6–11 August 2017; Precup, D., Teh, Y.W., Eds.; PMLR: Mc Kees Rocks, PA, USA, 2017; Volume 70, pp. 1126–1135.

- Kim, J.; Kong, J.; Son, J. Conditional Variational Autoencoder with Adversarial Learning for End-to-End Text-to-Speech. In Proceedings of the 38th International Conference on Machine Learning, ICML 2021, Virtual Event, Honolulu, HI, USA, 18–24 July 2021; Meila, M., Zhang, T., Eds.; PMLR: Mc Kees Rocks, PA, USA, 2021; Volume 139, pp. 5530–5540.

- Koluguri, N.R.; Park, T.; Ginsburg, B. TitaNet: Neural Model for Speaker Representation with 1D Depth-Wise Separable Convolutions and Global Context. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2022, Virtual, Singapore, 23–27 May 2022; IEEE: Piscataway Township, NJ, USA, 2022; pp. 8102–8106.

- Zhou, K.; Sisman, B.; Liu, R.; Li, H. Seen and Unseen Emotional Style Transfer for Voice Conversion with A New Emotional Speech Dataset. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2021, Toronto, ON, Canada, 6–11 June 2021; IEEE: Piscataway Township, NJ, USA, 2021; pp. 920–924.

- Wan, L.; Wang, Q.; Papir, A.; Lopez-Moreno, I. Generalized End-to-End Loss for Speaker Verification. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2018, Calgary, AB, Canada, 15–20 April 2018; IEEE: Piscataway Township, NJ, USA, 2018; pp. 4879–4883.

- Cai, W.; Chen, J.; Li, M. Exploring the Encoding Layer and Loss Function in End-to-End Speaker and Language Recognition System. In Proceedings of the Odyssey 2018: The Speaker and Language Recognition Workshop, Les Sables d’Olonne, France, 26–29 June 2018; Larcher, A., Bonastre, J.-F., Eds.; ISCA: Baixas, France, 2018; pp. 74–81.

- Casanova, E.; Weber, J.; Shulby, C.D.; Júnior, A.C.; Gölge, E.; Ponti, M.A. YourTTS: Towards Zero-Shot Multi-Speaker TTS and Zero-Shot Voice Conversion for Everyone. In Proceedings of the International Conference on Machine Learning, ICML 2022, Baltimore, MD, USA, 17–23 July 2022; Chaudhuri, K., Jegelka, S., Song, L., Szepesvári, C., Niu, G., Sabato, S., Eds.; PMLR: Mc Kees Rocks, PA, USA, 2022; Volume 162, pp. 2709–2720.

- Chien, C.-M.; Lee, H. Hierarchical Prosody Modeling for Non-Autoregressive Speech Synthesis. In Proceedings of the IEEE Spoken Language Technology Workshop, SLT 2021, Shenzhen, China, 19–22 January 2021; IEEE: Piscataway Township, NJ, USA, 2021; pp. 446–453.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.C.; Bengio, Y. Generative Adversarial Nets. In Advances in Neural Information Processing Systems 27, Proceedings of the Annual Conference on Neural Information Processing Systems 2014, Montreal, Quebec, Canada, 8–13 December 2014; Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N.D., Weinberger, K.Q., Eds.; Neural Information Processing Systems Foundation, Inc. (NeurIPS): La Jolla, CA, USA, 2014; pp. 2672–2680.

- Yamagishi, J.; Veaux, C.; MacDonald, K. CSTR VCTK Corpus: English Multi-Speaker Corpus for CSTR Voice Cloning Toolkit (Version 0.92); University of Edinburgh, The Centre for Speech Technology Research (CSTR): Edinburgh, UK, 2019.

- Zen, H.; Dang, V.; Clark, R.; Zhang, Y.; Weiss, R.J.; Jia, Y.; Chen, Z.; Wu, Y. LibriTTS: A Corpus Derived from LibriSpeech for Text-to-Speech. In Proceedings of the Interspeech 2019, 20th Annual Conference of the International Speech Communication Association, Graz, Austria, 15–19 September 2019; Kubin, G., Kacic, Z., Eds.; ISCA: Baixas, France, 2019; pp. 1526–1530.

- Nagrani, A.; Chung, J.S.; Xie, W.; Zisserman, A. VoxCeleb: Large-Scale Speaker Verification in the Wild. Comput. Speech Lang. 2020, 60, 101027.

- Chung, J.S.; Nagrani, A.; Zisserman, A. VoxCeleb2: Deep Speaker Recognition. In Proceedings of the Interspeech 2018, 19th Annual Conference of the International Speech Communication Association, Hyderabad, India, 2–6 September 2018; Yegnanarayana, B., Ed.; ISCA: Baixas, France, 2018; pp. 1086–1090.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).