Abstract

Deep neural networks are susceptible to deliberately crafted noise, which can lead to incorrect classification results. Existing approaches make little use of latent-space information and instead modify pixels directly in the input space, which increases the computational cost and reduces transferability. In this work, we propose an effective adversarial distribution searching-driven attack (ADSAttack) algorithm to generate adversarial examples against deep neural networks. ADSAttack introduces an affiliated network that searches for potential distributions in the image latent space for synthesizing adversarial examples. ADSAttack also uses an edge-detection algorithm to locate the mapping of low-level features in the input space and thereby sketch the minimum effective perturbed area. Experimental results demonstrate that ADSAttack achieves higher transferability, less perceptible perturbations, and faster generation than traditional algorithms. To generate 1000 adversarial examples, ADSAttack takes  and, on average, achieves a success rate of 98.01%.

1. Introduction

Deep neural network (DNN) models have been widely used in advanced applications, such as autonomous driving [1] and medical diagnosis [2]. However, DNN models are not secure: when they are disturbed by adversarial attacks from high-dimensional distributions [3], they exhibit security problems such as erroneous recognition results. Such adversarial examples are intentionally designed to make the model produce incorrect outputs [4], which shows that DNN models are not completely reliable [5]. Studying adversarial attacks is therefore of great interest, as it can help improve model robustness.

The goal of an adversarial attack is to generate adversarial examples that are still correctly recognized by the human eye but deceive the DNN model [6], reflecting the differences between artificial neural networks and biological visual systems. The adversarial attack process differs significantly from the deep learning training process. Whereas training continuously optimizes the model parameters to reduce the loss and thus improve classification ability, an adversarial attack reverses the gradient update: it modifies the input in the direction that increases the loss so that the model misclassifies it. In addition, a large body of work focuses on the transferability of adversarial examples, whereby examples generated using white-box model information can also be used to carry out black-box adversarial attacks [7].
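The contrast between training and attacking can be illustrated on a toy logistic-regression model. This is a minimal numpy sketch, not part of ADSAttack: the weights, input and step sizes are arbitrary assumptions, and the attack step is the single-step FGSM update of Goodfellow et al. [3]. Training moves the parameters against the loss gradient, while the attack moves the input along the sign of the input gradient.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss_and_grads(w, x, y):
    """Binary cross-entropy of the toy model p = sigmoid(w . x).

    Returns the loss and its gradients w.r.t. the parameters w and the input x.
    """
    p = sigmoid(w @ x)
    loss = -(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))
    grad_w = (p - y) * x     # what training uses
    grad_x = (p - y) * w     # what the attack uses
    return loss, grad_w, grad_x

rng = np.random.default_rng(0)
w = rng.normal(size=4)       # toy "trained" weights
x = rng.normal(size=4)       # toy input
y = 1.0                      # its label

loss0, grad_w, grad_x = loss_and_grads(w, x, y)

# Training step: move the parameters against the gradient, so the loss decreases.
w_new = w - 0.1 * grad_w
loss_train, _, _ = loss_and_grads(w_new, x, y)

# Attack step (FGSM): move the input along the sign of the gradient, so the loss increases.
eps = 0.1
x_adv = x + eps * np.sign(grad_x)
loss_attack, _, _ = loss_and_grads(w, x_adv, y)
```

Both updates use the same loss; only the variable being changed (parameters versus input) and the direction of the step differ.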

Latent-space data are not specific to the model structure but are more relevant to the recognition task, so adversarial examples generated from latent-space information transfer better than those generated using logit-layer feedback [8]. To obtain more transferable adversarial examples, many recent studies have therefore investigated latent-space information. However, previous studies that generate adversarial examples by optimizing a loss function of the DNN classifier require multiple iterative modifications to each input [5], which greatly reduces generation efficiency and increases the time required as the number of examples grows.

A generative adversarial network (GAN) has therefore been suggested for fast adversarial example creation because of its outstanding distribution-mapping capability. A GAN consists of a generator and a discriminator that are trained toward a Nash equilibrium to learn the mapping from clean examples to adversarial examples. Once trained, the generator outputs adversarial examples directly in a single forward pass, skipping the time-consuming iterative process [9,10,11].

After effective training, a GAN greatly improves the efficiency of adversarial example generation, but its training is difficult to converge because the adversarial optimization of the loss functions between the generator and the discriminator is unstable [12]. We address this problem by transforming the search for a local optimum at the Nash equilibrium into a search for a global optimum. From the perspective of visual effects, we also propose a special method for adding latent-space noise which, unlike previous pixel-level restriction criteria, optimizes the noise based on edge-detection [13] algorithms and the biological properties of the human eye.

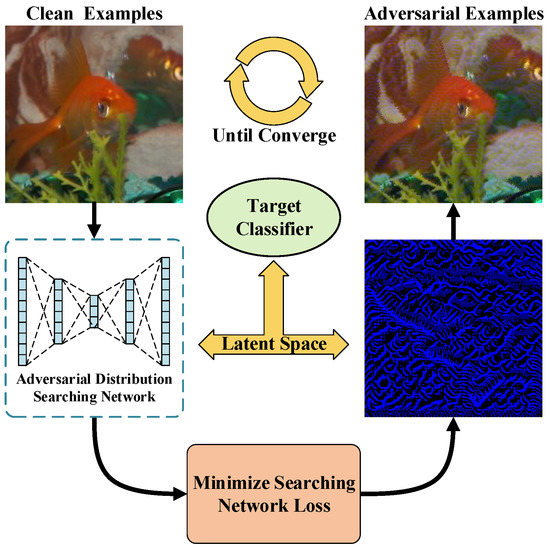

In a recognition task, the hidden layers of a DNN model are trained to extract features as the designed loss function converges and the predicted label approaches the true label. Inspired by this training procedure, we propose a new methodology that searches for a distribution in the input image’s own latent space to use as an adversarial perturbation (as shown in Figure 1). This speeds up the generation of adversarial examples that cause misclassification in most real DNN systems with a relatively high success rate, while remaining hardly perceptible to the human eye. We extract a special distribution, namely the feature of the class with the second-highest confidence, and add it to the original image to emphasize this feature and mislead the predicted label. We thus turn the generation of adversarial examples into a search for a globally optimal distribution. We then use an edge-detection technique to sketch the input image’s edge area, which is associated with low-level features, so that the latent-space information can be fully utilized. Perturbations in edge areas are more misleading than those in other areas, which allows the perturbed area, and hence the human perception of the adversarial perturbation, to be reduced. The contributions of this paper are summarized as follows:

Figure 1.

Searching for a perturbation distribution in latent space. The main idea of this paper is to train an adversarial distribution searching network. We locate special feature information in the latent space by using the target classifier’s parameters to update the searching network, which then generates a suitable adversarial perturbation against the target classifier.

- We turn the adversarial example generation problem into a search problem and propose training an adversarial distribution searching network that searches for an adversarial perturbation distribution in the image’s own latent space. Once trained, the searching network can immediately extract the adversarial distribution of each input image, so adversarial examples are generated quickly;

- We simultaneously use feedback from multiple target classifiers to update the parameters of the adversarial distribution searching network, thus obtaining universal adversarial examples with high transferability;

- We use an edge-detection algorithm in a novel way to locate the mapping of low-level features in the input space, sketch the minimum effective perturbed area, and apply different operations to maps from different image channels. Experimental results show that our method balances an imperceptible visual effect with a high attack success rate.

The rest of the paper is organized as follows: Section 2 describes related work on adversarial attacks. Section 3 provides the implementation details of the ADSAttack method, and Section 4 presents extensive experimental results. Finally, Section 5 summarizes the paper.

2. Related Work

The concept of adversarial attacks was introduced in 2013 by Szegedy et al. [5], who showed that adding subtle noise to an example can mislead the classification results of a DNN model. Adversarial attacks have attracted increasing attention since then.

An adversarial example can be simply defined as one that misleads a deep learning model but is still correctly identified by the human eye. Goodfellow et al. [3] studied adversarial examples further and found that they are transferable, i.e., able to mislead black-box target classifiers.

2.1. Improvement of Transferability

There have been various studies on transfer-based black-box attacks [14,15]. Unlike the traditional use of data augmentation [16] to improve transferability, we focus on the use of generative models. Some adversarial attack algorithms use generative models to improve transferability by interfering with feature maps [8,17] or by extracting features as prior knowledge [18] and then modifying these features to obtain generators that produce highly transferable adversarial examples. To generate adversarial examples through a mapping optimized by a generator, Tu et al. [17] created AutoZOOM. AutoZOOM trains an autoencoder on unlabeled data, searches for adversarial perturbations in its latent space, and transfers them to the high-dimensional input space with the decoder. Inkawhich et al. [8], inspired by AutoZOOM, used this framework to acquire prior perturbations and subsequently employed black-box attack techniques, such as natural evolution strategies, in the embedding space to attack the target model.

2.2. GAN-Based Adversarial Attacks

The GAN architecture involves two sub-models: a generator that produces new examples and a discriminator that classifies whether examples are real (from the domain) or fake (produced by the generator). GANs have many application scenarios, and they can also be combined with adversarial attacks. Xiao et al. [9] found that the transformation from original inputs to adversarial examples can be established through a generator mapping and proposed the AdvGAN method. In AdvGAN, the discriminator judges whether the examples formed by combining original images with perturbations can be distinguished from real images, while the target classifier’s loss drives these examples to mislead the model. Mangla et al. proposed AdvGAN++ [10] as an advanced version of AdvGAN, introducing latent vectors to improve the performance of the generator. Rob-GAN [11] improves the quality of generated images as well as the robustness of the model, while incorporating optimization into the training process to obtain higher efficiency. Zhao et al. [19] searched a low-dimensional space to generate more targeted and more natural adversarial examples, an approach they named Natural GAN. Deb et al. [20] then introduced a GAN for face synthesis in face recognition to form face adversarial examples. We focus on the efficiency of GANs and therefore study the GAN loss function in more detail.

2.3. Studies on Visual Effects

To optimize visual effects, many studies have weighed attack capability against visual quality and explored noise generation methods that balance the two. Shamsabadi et al. [21] and Bhattad et al. [22] introduced unrestricted perturbations that ignore conventional noise-magnitude metrics and change the semantic information of the image by altering its color and texture, thus generating adversarial examples with higher attack success rates.

Dong et al. [23] took image-based defense and steganalysis-based detection as their starting point and used superpixels and attention mechanisms to generate higher-quality adversarial examples. All of the above approaches focus on the properties of the image as recognized by the model and ignore the properties of human vision in adversarial attacks.

We therefore add customized noise to maps from different image channels. More specifically, the perturbed areas on maps from the B image channel are larger than those on the other two channels, because human eyes are least sensitive to blue [24,25]. We also use an edge-detection algorithm, which maps internal information to the image surface, to locate the minimum perturbed area.

3. Attack Methodology

In this section, we introduce the proposed ADSAttack, which makes full use of latent space information in each procedure to realize adversarial attacks with better performance, including higher transferability, faster generation speed and better visual effects.

3.1. ADSAttack

In image classification, the purpose of an adversarial attack is to use imperceptible noise to deceive the DNN classifier without affecting human recognition of the image. We designed our adversarial example generation architecture, ADSAttack, based on this criterion.

ADSAttack contains a latent-space distribution searching network that searches the feature space of the original image for special distributions which, once mapped back to the input, cause the DNN to misclassify. Normally, suitable features extracted by a properly trained neural network are expected to be classified correctly in the subsequent stages. Different feature-extraction methods may lead to different ways of searching the latent space and can therefore influence the final classification results. To a certain extent, if a neural network is trained to search for and generate specific features that deliberately diverge from the “suitable” ones, the final result can be manipulated into a wrong category. We refer to such specific features as adversarial features.

In ADSAttack, we search the feature distribution of the original image and use the classifier’s prediction on the modified example as feedback. Because features are directional, the searched feature tends to push the prediction toward a wrong category; this is how the ADSAttack framework realizes the attack.

The goal of ADSAttack is to train a neural network that searches for adversarial features effectively. Accordingly, a successful attack on a deep-learning-based classifier depends on whether a misleading feature that differs from the one associated with its label can be found. The neural network therefore needs to be trained, under the guidance of the designed loss function, to search for this other (adversarial) feature.

Our loss function uses the classification confidences [26] of four networks for the adversarial distribution search: ResNet-50 [27], VGG-16 [28], GoogleNet [29], and MobileNet-v2 [30]. Because the searching network thereby learns more about how these networks distribute information in the latent space, this choice also leads to higher transferability.

AdvGAN is essentially a GAN with a generator and a discriminator, and optimizing its loss function to reach Nash equilibrium tends to be time-consuming. The main role of the generator in AdvGAN is to make the DNN classifier err, while the main task of the discriminator is to keep the noise perturbations within the specified range. Because these two objectives push the gradients in different directions, overall convergence is challenging and tends to be unbalanced. Instead, we propose a channel-specific noise-adding method that selects image regions with an edge-detection algorithm and exploits the different sensitivity of human visual cells to each color. This achieves higher convergence efficiency while limiting the visual impact of the perturbations.

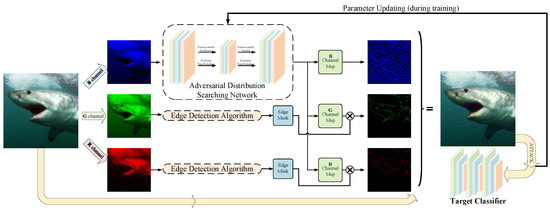

An adversarial attack is successful if it achieves a balance between visual effect and attack success rate. We reduce human perception in two ways: by reducing the perturbed area and by analyzing the biological characteristics of vision. To locate the perturbed area, we exploit the edge-detection algorithm’s ability to map low-level features from the latent space to the input space and choose the area to be perturbed. Analyzing the biological characteristics of the human eye, we find that it is differently sensitive to different colors [24]. Based on this, we apply different noise-adding methods to different color channels: global noise on the channel to which the human eye is least sensitive and local edge noise on the more sensitive ones. As shown in Figure 2, we add global perturbation to the B channel input and edge perturbation to the R and G channels, because human eyes are least sensitive to blue [25]. To create a perturbation prior, an elementwise product is applied between the feature maps of the B channel input and the edge masks of the R and G channel inputs. The original input, together with this perturbation prior, is then fed into the target model for the subsequent operation, as shown in the overview in Figure 2.

Figure 2.

Overview of ADSAttack. ADSAttack first uses an adversarial distribution searching network to extract feature maps (i.e., the channel map in the figure) from the input’s B image channel and locates the edge area information (i.e., the edge mask in the figure) in the other two image channels of the input. A perturbation prior is then obtained by applying the elementwise product to the feature maps and the corresponding edge masks. Next, the original input with its perturbation prior is fed into the target classifier. Given the designed loss function, the parameters of the adversarial distribution searching network are then updated for adversarial example generation.
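The composition shown in Figure 2 can be sketched as follows. This is a minimal numpy illustration under stated assumptions: `feature_map` stands in for the searching network’s output, the edge masks are given rather than computed, the helper name `perturbation_prior` is hypothetical, and the final clipping to [0, 1] is our assumption rather than a detail stated in the text.

```python
import numpy as np

def perturbation_prior(feature_map, edge_masks, global_channel=2):
    """Build a (3, H, W) perturbation prior from one feature map.

    feature_map    : (H, W) map from the searching network (taken from the B channel input)
    edge_masks     : (3, H, W) binary edge masks, one per input channel (R, G, B order)
    global_channel : channel that receives the perturbation everywhere (2 = B)
    The other channels keep the feature map only where their edge mask is 1.
    """
    masks = edge_masks.astype(float).copy()
    masks[global_channel] = 1.0                      # global perturbation on this channel
    return masks * feature_map[np.newaxis, :, :]     # elementwise product, broadcast over channels

rng = np.random.default_rng(0)
H, W = 4, 4
x = rng.uniform(size=(3, H, W))                      # clean image in [0, 1], channels R, G, B
feature_map = 0.05 * rng.standard_normal((H, W))     # stand-in for the searching network's output
edge_masks = np.zeros((3, H, W))
edge_masks[:, 1, :] = 1.0                            # pretend row 1 is an edge in every channel

prior = perturbation_prior(feature_map, edge_masks)
x_adv = np.clip(x + prior, 0.0, 1.0)                 # assumption: keep pixels in the valid range
```

Setting `global_channel` to 0 or 1 produces the R- and G-group variants used in the channel comparison of Figure 3.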

Unlike previous adversarial example generation processes, which update the inputs, ADSAttack updates the parameters of the adversarial distribution searching network to generate the perturbation distribution for the adversarial attack. Once this updating process is finished, the searching network is ready to generate adversarial examples.

3.2. Formal Description

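The adversarial attack method updates the input $x$ and its adversarial perturbation $\delta$ by minimizing the loss function $\ell$, defined as follows based on the C&W attack [26]:

$$\min_{\delta}\ \mathbb{E}_{(x,y)\sim\mathcal{D}}\left[\ell(x+\delta, y)\right],\qquad \ell(x+\delta, y)=\max\left(F(x+\delta)_{y}-\max_{i\neq y}F(x+\delta)_{i},\ -\kappa\right) \tag{1}$$

where $(x, y)\sim\mathcal{D}$ represents an input $x$ with its label $y$ in a training set $\mathcal{D}$, $F$ represents our target classifier ($F(\cdot)_i$ is its output for class $i$), and $\kappa$ regulates the strength of the adversarial examples.

Then, using an extractor $G$, we extract adversarial features for disrupting $F$ from the latent space with the adversarial distribution searching network. The perturbation $\delta$ is thus substituted by $G_{\theta}(x)$, the extractor with input $x$ and parameters $\theta$, in Equation (2):

$$\min_{\theta}\ \mathbb{E}_{(x,y)\sim\mathcal{D}}\left[\ell\left(x+G_{\theta}(x), y\right)\right] \tag{2}$$

We update the parameters $\theta$ of the extractor directly instead of updating the input image. We use the edge-detection approach to obtain the edge mask $M$, which is produced by applying a function $\varphi$ to the image edge matrix:

$$M=\varphi\left(E(x)\right) \tag{3}$$

where $E(\cdot)$ represents the edge-detection function and $x$ represents the input image. Once the edge mask is ready, the adversarial feature perturbation is multiplied elementwise by $M$ and then added to the original input image. Considering the different operations on the different channels of the input, the loss function can be expressed as:

$$\min_{\theta}\ \mathbb{E}_{(x,y)\sim\mathcal{D}}\left[\ell\left(x+\delta', y\right)\right],\qquad \delta'=\left(\delta'_{R},\ \delta'_{G},\ \delta'_{B}\right) \tag{4}$$

where $\delta'_{R}$, $\delta'_{G}$ and $\delta'_{B}$ represent $\delta'$ in the R, G and B channels, respectively. This can be expressed as:

$$\delta'_{R}=M_{R}\odot G_{\theta}(x_{B}),\qquad \delta'_{G}=M_{G}\odot G_{\theta}(x_{B}),\qquad \delta'_{B}=G_{\theta}(x_{B}) \tag{5}$$

where $x_{B}$ is the B channel input, $M_{R}$ and $M_{G}$ are the edge masks of the R and G channel inputs, and $\odot$ denotes the elementwise product. We update the parameters $\theta$ of the adversarial distribution searching network according to the loss function as follows:

$$\theta \leftarrow \theta-\eta\,\nabla_{\theta}\,\mathbb{E}_{(x,y)\sim\mathcal{D}}\left[\ell\left(x+\delta', y\right)\right] \tag{6}$$

where $\eta$ is the learning rate. Once trained, the network produces adversarial perturbations for given input images. To improve transferability among the classifiers, we average the loss functions of the four target classifiers $F_1,\dots,F_4$ into a new overall loss function:

$$\mathcal{L}=\frac{1}{4}\sum_{k=1}^{4}\ell_{k}\left(x+\delta', y\right) \tag{7}$$

where $\ell_{k}$ is the loss of Equation (1) computed with classifier $F_{k}$.

The detailed procedure is presented in Algorithm 1.

Algorithm 1: ADSAttack adversarial attack.

Input: Input examples $x$ and their labels $y$; target classifier $F$; searching network $G_{\theta}$; number of data $m$; total number of iterations $N$. Output: Searching network parameters $\theta$.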
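The training idea of this section can be illustrated with a toy numpy sketch; it is not the authors’ implementation and does not reproduce Algorithm 1 step by step. Assumptions: the searching network is replaced by a hypothetical linear map `A` applied to the flattened B channel, the four target classifiers are random linear models, the edge masks are random, the perturbation is left unbounded, and the gradients are written out by hand. The loss is the C&W-style margin described above, averaged over samples and classifiers; only the searching network’s parameters are updated, never the inputs.

```python
import numpy as np

def cw_margin(W, x_adv, y, kappa):
    """C&W-style untargeted loss max(z_y - max_{i != y} z_i, -kappa) for logits z = W @ x_adv.

    Returns the loss and its (sub)gradient with respect to x_adv.
    """
    z = W @ x_adv
    others = z.copy()
    others[y] = -np.inf
    j = int(np.argmax(others))              # strongest wrong class
    margin = z[y] - z[j]
    if margin > -kappa:
        return margin, W[y] - W[j]
    return -kappa, np.zeros_like(x_adv)     # loss is clipped, so no gradient flows

def train_search_network(X, Y, classifiers, edge_masks, kappa=1.0, lr=0.05, steps=200):
    """Gradient descent on the loss averaged over samples and classifiers.

    X          : (n, 3, P) images with P pixels per channel (R, G, B order)
    Y          : n integer labels
    edge_masks : (n, 3, P) binary masks; only the R and G rows are used
    Hypothetical searching network: feature = A @ x_B (a single linear layer).
    """
    n, _, P = X.shape
    A = np.zeros((P, P))
    history = []
    for _ in range(steps):
        grad_A = np.zeros_like(A)
        total = 0.0
        for x, y, m in zip(X, Y, edge_masks):
            feat = A @ x[2]                                        # search from the B channel
            delta = np.stack([m[0] * feat, m[1] * feat, feat])     # edge-masked R, G; global B
            x_adv = (x + delta).ravel()
            for W in classifiers:
                loss, g = cw_margin(W, x_adv, y, kappa)
                total += loss
                g = g.reshape(3, P)
                g_feat = m[0] * g[0] + m[1] * g[1] + g[2]          # chain rule through delta
                grad_A += np.outer(g_feat, x[2])                   # d feat / d A
        scale = 1.0 / (n * len(classifiers))
        history.append(total * scale)                              # averaged loss before the step
        A -= lr * scale * grad_A
    return A, history

rng = np.random.default_rng(0)
n, P, n_classes = 8, 4, 5
X = rng.standard_normal((n, 3, P))
classifiers = [rng.standard_normal((n_classes, 3 * P)) for _ in range(4)]
Y = [int(np.argmax(classifiers[0] @ x.ravel())) for x in X]       # labels the first model gets right
edge_masks = (rng.uniform(size=(n, 3, P)) > 0.5).astype(float)

A, history = train_search_network(X, Y, classifiers, edge_masks)
```

`history` records the averaged loss at each step; a decreasing curve indicates that the searching network is learning perturbations that push all classifiers away from the true labels at once, which is the intuition behind averaging the four losses.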

3.3. Adversarial Distribution Searching Network

Existing adversarial attack algorithms mislead classifiers by increasing the difference between the predicted label and the true label, conducting multiple iterations on each input to achieve this goal, which is time-consuming and inefficient. The hidden layers of a normal classification model are trained to obtain features that benefit subsequent classification. Inspired by this training process, we alter the original feature-extraction network and train it for fast adversarial feature generation.

The adversarial distribution searching network is trained to search the latent space for distributions that can serve as adversarial perturbations. It therefore needs to learn a mapping under the guidance of a given loss function and to resize the searched distribution back to the original input size to ensure a global perturbation. Because autoencoders perform well on mapping transformations, we use a simple autoencoder [31] architecture for the search task, borrowing the traditional autoencoder structure directly. It is characterized by an hourglass design, in which dimensionality reduction is followed by dimensionality increase, which helps extract the important features of the image itself. In conventional applications, the autoencoder is trained to reproduce its input, so the low-dimensional information in the middle layer represents the key features of the image and extracting it is equivalent to feature extraction.
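The hourglass shape (reduce to a small latent map, then resize back to the input size so that the perturbation covers the whole image) can be illustrated with a fixed, untrained toy network. This numpy sketch is only illustrative: the pooling and upsampling layers and the tanh bottleneck are our assumptions, not the architecture of [31], and no training is shown.

```python
import numpy as np

def avg_pool2(x):
    """2x2 average pooling of an (H, W) array with even H and W (dimensionality reduction)."""
    H, W = x.shape
    return x.reshape(H // 2, 2, W // 2, 2).mean(axis=(1, 3))

def upsample2(x):
    """Nearest-neighbour 2x upsampling (dimensionality increase)."""
    return np.repeat(np.repeat(x, 2, axis=0), 2, axis=1)

def hourglass(x, bottleneck_weights):
    """Encode by pooling twice, mix the bottleneck, then decode back to the input size."""
    z = avg_pool2(avg_pool2(x))                                     # (H/4, W/4) latent map
    z = np.tanh(bottleneck_weights @ z.ravel()).reshape(z.shape)    # toy latent transform
    return upsample2(upsample2(z))                                  # resize to (H, W)

rng = np.random.default_rng(0)
x_b = rng.uniform(size=(16, 16))            # e.g. a 16x16 B channel
weights = rng.standard_normal((16, 16))     # latent map is 4x4 = 16 values
out = hourglass(x_b, weights)
```

The output has the same spatial size as the input, which is what allows it to be used directly as a global perturbation map.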

3.4. Edge-Detection Algorithm

Current adversarial attack algorithms usually add perturbations globally to all image channels, whereas we take a local approach and add noise to key areas of the R and G channel inputs.

To optimize the attack, we exploit the properties of edges to select the perturbed area and search for the adversarial distribution under the guidance of an edge-detection algorithm. This sacrifices some edge smoothness, but the overall attack results are better. We apply an elementwise product to the adversarial distribution matrix and the edge mask extracted by the edge-detection algorithm, so that only the edge areas of the R and G channel inputs are altered by our attack.

More specifically, the key to selecting the local noise-adding region is to cause maximum interference within the smallest region, so the most critical locations in the input image matrix must be found. As low-level image information, edges are very important for image detection. Edge information can be obtained directly by shallow neural networks without any other information and can be used for high-level analysis. Moreover, edge locations can be obtained in advance by methods other than neural network extraction, so edge regions are chosen as the local noise-adding regions in this section. Since image edge information is concentrated mainly in the high-frequency band, it is usually obtained by computing differences or gradients of the discrete image signal in the spatial domain, setting a frequency threshold, and performing high-pass filtering. The algorithm in this section uses the Canny edge-detection operator to obtain edge regions.
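The text specifies the Canny operator, which OpenCV provides as `cv2.Canny(image, threshold1, threshold2)`. To keep the example dependency-free, the sketch below swaps in a simpler gradient-based detector: Sobel gradients computed in the spatial domain followed by a magnitude threshold. Canny adds Gaussian smoothing, non-maximum suppression and hysteresis thresholding on top of this, so its masks will differ. The function names and the threshold value are illustrative assumptions.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T

def filter3x3(img, kernel):
    """Slide a 3x3 kernel over img (cross-correlation) with edge padding; same output size."""
    padded = np.pad(img, 1, mode="edge")
    H, W = img.shape
    out = np.zeros((H, W))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + H, dx:dx + W]
    return out

def edge_mask(channel, threshold):
    """Binary mask of pixels whose Sobel gradient magnitude exceeds threshold."""
    gx = filter3x3(channel, SOBEL_X)
    gy = filter3x3(channel, SOBEL_Y)
    return (np.hypot(gx, gy) > threshold).astype(np.uint8)

# A vertical step edge: left half dark, right half bright.
channel = np.zeros((8, 8))
channel[:, 4:] = 255.0
mask = edge_mask(channel, threshold=100.0)
```

Only the two columns on either side of the step are marked, so multiplying a perturbation by `mask` confines it to the edge region, as done for the R and G channels.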

3.5. Image-Channel-Based Noise Adding Method

Inspired by the study of Dartnall et al. [24], we note that the visual cone cells of the human eye differ in their sensitivity to different colors. Cicerone et al. [25] further quantified the number of cone cells and identified a ratio of 40:20:1 for red, green and blue, indicating that the human eye is least sensitive to blue. Accordingly, we add global perturbation to the blue channel and local edge perturbation to the red and green channels. The convolutional neural network then accumulates the different noises of the three channels according to their weights and optimizes the parameters during training. As a result, without restricting the pixel perturbation, we obtain a better visual effect and a higher attack success rate.
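Expressed as shares of all cones, the 40:20:1 ratio quoted above corresponds to:

$$\frac{40}{61}\approx 65.6\%\ (\text{red}),\qquad \frac{20}{61}\approx 32.8\%\ (\text{green}),\qquad \frac{1}{61}\approx 1.6\%\ (\text{blue})$$

so fewer than 2% of the cones respond mainly to blue, which is why the B channel is the one chosen to carry the global perturbation.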

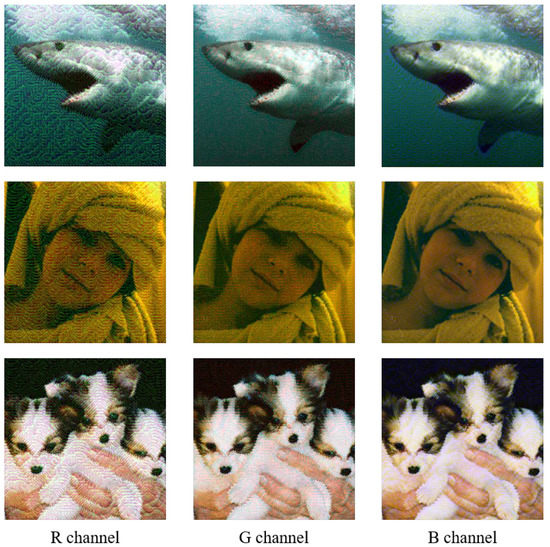

As shown in Figure 3, to verify the validity of the image-channel-based operation, we generate three groups of adversarial examples, corresponding to the R, G, and B channels; each group applies a global perturbation to its own channel and edge perturbations to the remaining two channels. For each example in the B channel group, for instance, the global perturbation is added to the B channel, while the edge perturbation is added to the R and G channels; the other two groups are constructed in the same way.

Figure 3.

Visual comparisons corresponding to R, G and B channels.

Although the same per-pixel perturbation values are added in each group, the three images of the R channel group are the most blurred because human eyes are most sensitive to red, while those of the B channel group are the most similar to the original input.

4. Experimental Method and Evaluation

4.1. Experimental Setups

Benchmark Baselines: We evaluated the effectiveness of the ADSAttack algorithm on different models: ResNet-50 [27], VGG-16 [28], GoogleNet [29] and MobileNet-v2 [30]. The dataset used was ImageNet-1000 [32]. We designed experiments for several tasks: (1) For algorithm efficiency evaluation, we compared ADSAttack with FGSM [3], PGD [33], AdvGAN [9], TREMBA [18] and ColorFool [21] in terms of the attack success rate and the generation efficiency of the adversarial examples; (2) We produced a transfer matrix to show the transferability of ADSAttack. As white-box models, we chose ResNet-50, VGG-16, GoogleNet and MobileNet-v2; as black-box models, we chose AlexNet [34], DenseNet [35], ResNet-152 [27] and ResNet-34 [27]; (3) To demonstrate the effectiveness in reducing visual perception, we compared ADSAttack with AdvGAN [9], TREMBA [18] and ColorFool [21].

Metrics: We use the following four criteria to evaluate the algorithms. The attack success rate is the ratio of examples misclassified after the attack to the examples that were correctly classified before it; the batch time is the time required to generate 1000 adversarial examples; the peak signal-to-noise ratio (PSNR) measures the difference between two images based on the mean squared error (MSE) of their pixels; the structural similarity (SSIM) is a perception-oriented measure that uses the mean, variance, and covariance to compare the brightness, contrast, and structure of images.
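The metrics can be sketched in a few lines of numpy; the helper names are hypothetical. `global_ssim` computes SSIM over a single window covering the whole image with the usual constants $K_1=0.01$ and $K_2=0.03$, whereas standard SSIM averages the same statistic over local (typically Gaussian) windows, so its values will differ from library implementations.

```python
import numpy as np

def attack_success_rate(clean_pred, adv_pred, labels):
    """Fraction of initially correct examples that are misclassified after the attack."""
    clean_pred, adv_pred, labels = map(np.asarray, (clean_pred, adv_pred, labels))
    correct = clean_pred == labels
    if not correct.any():
        return 0.0
    return float(np.mean(adv_pred[correct] != labels[correct]))

def psnr(original, distorted, max_value=255.0):
    """Peak signal-to-noise ratio in dB, computed from the pixel-wise MSE."""
    diff = np.asarray(original, float) - np.asarray(distorted, float)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / mse))

def global_ssim(a, b, max_value=255.0):
    """Single-window SSIM: luminance, contrast and structure terms from global statistics."""
    a = np.asarray(a, float)
    b = np.asarray(b, float)
    c1, c2 = (0.01 * max_value) ** 2, (0.03 * max_value) ** 2
    mu_a, mu_b = a.mean(), b.mean()
    var_a, var_b = a.var(), b.var()
    cov = ((a - mu_a) * (b - mu_b)).mean()
    return float(((2 * mu_a * mu_b + c1) * (2 * cov + c2))
                 / ((mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)))

# Three of four examples are initially correct; two of those three are fooled.
rate = attack_success_rate([0, 1, 2, 3], [1, 1, 0, 3], [0, 1, 2, 0])
```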

4.2. Image-Channel-Based Noise Adding Method

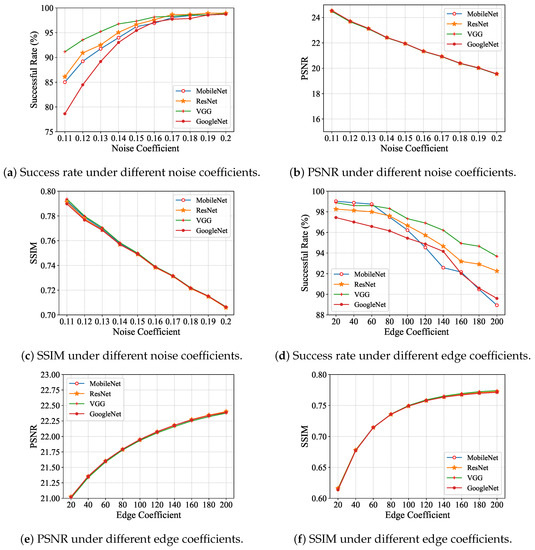

In this section, we describe a series of exploratory experiments to select the most suitable visual coefficients for the image-channel-based noise-adding method. A higher attack success rate requires adding more noise, with less transparency, to the channels of the adversarial examples. Such uncontrolled perturbation, however, becomes more apparent to the human eye. For a given success rate, proper values of the perceptual indicators should be defined to limit the visual difference before and after adding the perturbations, balancing a high success rate against low visual perception. For the two indicators PSNR and SSIM, two coefficients influence the visual effect of the generated examples: the noise coefficient and the edge coefficient. The former indicates the transparency of the global noise added to the map from the B channel; the latter determines the edge perturbation area on the maps from the R and G channels.

We set up several exploratory tests to find the most suitable noise coefficient, ranging from 0.11 to 0.20, and edge coefficient, ranging from 20 to 200, evaluated by PSNR and SSIM. The results showed that a noise coefficient of 0.15 and an edge coefficient of 100 achieved a better balance between attack ability and visual effect. We show the performance under different settings to explain this choice.

As shown in Figure 4, as the x-axis value increases, the global noise perturbation becomes more intense (Figure 4a–c), while the edge perturbation narrows the perturbed area (Figure 4d–f). In Figure 4a, the growth of the success rate (y-axis) slows as the noise coefficient (x-axis) increases and becomes relatively mild at 0.15. Both the PSNR and SSIM values, shown in Figure 4b,c, decline approximately linearly with the noise coefficient for all algorithms, except around the mild point of 0.15.

Figure 4.

Exploration for choosing noise coefficient and edge coefficient.

For the edge coefficient of each algorithm in Figure 4d, the success rate decreases as the x-axis value grows, i.e., as the perturbed edge margin shrinks, and drops faster beyond 80. Figure 4f shows that the increasing trends of all algorithms gradually slow down until the edge coefficient exceeds 100. According to Figure 4d, the success rate decreases by only about 1% when the edge coefficient increases from 80 to 100. We therefore chose 100 as the final value of the edge coefficient, which sets the edge area range on the maps from the R and G channels, and 0.15 as the noise coefficient for the global B channel.

4.3. Algorithm Efficiency and Visual Comparisons

We set 1000 examples as a batch and compared the time taken by different algorithms to generate it. Table 1 shows that ADSAttack achieves a competitive success rate, with an average of 98.01% against the four models, and that it takes less time than the other algorithms.

Table 1.

Comparison of six algorithms: FGSM, PGD, AdvGAN, TREMBA, ColorFool and our ADSAttack. The two performance metrics are the attack success rate (%) and the time to generate a batch of 1000 examples (±std over 5 random runs).

In addition, TREMBA achieved a 100% attack success rate against a specific model on this test set. This is not due to overfitting but to its high query count, and it does not mean that the algorithm is applicable to any data and model.

Among the compared algorithms, FGSM and PGD are classical adversarial attack algorithms that perform excellently in terms of visual effect. In particular, PGD balances attack ability and visual effect, but at a higher time cost. We include both algorithms in the transferability evaluation experiments described in Section 4.4.

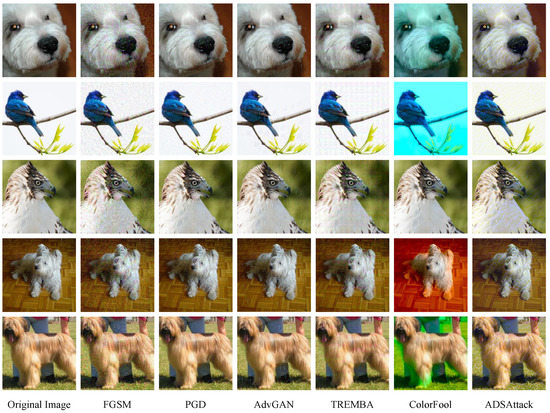

We present a visualization of the adversarial examples generated by the different algorithms in Figure 6.

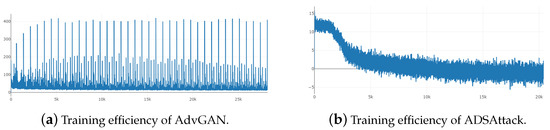

Figure 5.

Training efficiency of AdvGAN and ADSAttack, illustrated by the loss convergence curves of their generative networks during training.

The loss convergence curves of AdvGAN and ADSAttack, which reflect training efficiency, are shown in Figure 5.

Figure 6.

Visual comparison results. The exhibited images are clean images and their adversarial versions generated by the compared algorithms—FGSM, PGD, AdvGAN, TREMBA, ColorFool and our ADSAttack.

As the figure shows, the adversarial examples all look very similar; however, in terms of how perceptible the pixel perturbations are to the human eye, our perturbation method makes ADSAttack's perturbations the least visible. Compared with the other adversarial attack algorithms, ADSAttack introduces slight roughness along object edges, but its adversarial examples remain the most similar to the original images.

4.4. Transferability Evaluation

We first performed a white-box attack on each source model (ResNet-50, VGG-16, GoogleNet, and MobileNet-v2) separately, transferred the resulting adversarial examples to the remaining three models for evaluation, and finally computed the transferability of our algorithm.
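The source-to-target evaluation described above yields a matrix of attack success rates, with one row per source model and one column per target model, as in Table 2. A minimal sketch of that bookkeeping follows. It assumes that an untargeted attack counts as successful whenever the target model's prediction differs from the ground-truth label; the exact counting rule used in the experiments (for example, whether images already misclassified in their clean form are excluded) is not stated here, and the function names are hypothetical.

```python
import numpy as np


def transfer_matrix(preds, labels):
    """Attack success rates (%) for every source/target pair.

    preds[s][t]: labels predicted by target model t on the adversarial
    examples crafted against source model s.
    labels: ground-truth labels of the underlying clean images.
    Rows index source models; columns index target models.
    """
    labels = np.asarray(labels)
    n = len(preds)
    rates = np.zeros((n, n))
    for s in range(n):
        for t in range(n):
            # Untargeted success: prediction differs from the true label.
            rates[s, t] = 100.0 * np.mean(np.asarray(preds[s][t]) != labels)
    return rates


def off_diagonal_mean(rates):
    """Mean success rate over transfer (source != target) pairs only."""
    n = rates.shape[0]
    return float(rates[~np.eye(n, dtype=bool)].mean())
```

The diagonal of the returned matrix holds the white-box rates and the off-diagonal entries hold the transfer rates, which is the layout read in Table 2.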

We then performed a white-box attack on all four models jointly. More specifically, we computed a loss from the feedback of each of the four models and used their average as the overall loss function to update our adversarial distribution search network, so that the generated adversarial examples exploit information from all four models and are therefore more generic. We evaluated the transferability of these generic adversarial examples on different DNN classifiers and further tested it on other black-box models, including AlexNet, DenseNet, ResNet-152, and ResNet-34. Furthermore, we compared the black-box attack ability of the classical algorithms FGSM and PGD with that of ADSAttack to demonstrate the transferability of our algorithm.
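Averaging the per-model losses into one objective can be written as L = (1/K) Σₖ Lₖ(fₖ(x_adv), y) for K source models. The sketch below assumes, purely for illustration, that each Lₖ is the cross-entropy of model k's logits on the true label, which an untargeted attack would maximize when updating the generator; the actual per-model loss of ADSAttack is not specified in this section, and `ensemble_loss` is a hypothetical name.

```python
import numpy as np


def log_softmax(logits):
    """Numerically stable log-softmax over the class axis."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))


def ensemble_loss(logits_per_model, labels):
    """Average of per-model cross-entropy losses on the true labels.

    logits_per_model: list of (batch, classes) arrays, one per source model.
    labels: (batch,) integer ground-truth labels.
    An untargeted attack would maximize this value (equivalently,
    minimize its negative) when updating the generator.
    """
    labels = np.asarray(labels)
    idx = np.arange(len(labels))
    losses = [-log_softmax(logits)[idx, labels].mean()
              for logits in logits_per_model]
    return float(np.mean(losses))
```

Because every model contributes equally, a perturbation that raises the loss of only one architecture gains little; the generator is pushed toward directions that hurt all four at once, which is the intuition behind the more generic examples in Table 3.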

In Table 2, each row corresponds to the source model used to generate the adversarial examples and each column to the target model under attack; the entries are attack success rates. The diagonal entries are the highest, indicating that the attack success rate is highest when the target model is the source model and decreases when they differ. The off-diagonal results show that our method remains effective when the target is a black-box model structure. The feedback from a neural network's loss function reflects the network's parameters and structure [3]. In our setting, the propagation and convergence of the loss function direct the search toward our adversarial distribution, so the adversarial distribution we find is strongly correlated with the structure of the network. Adversarial examples therefore transfer more readily to target models with similar architectures and less readily to dissimilar ones, which is why the transferability in Table 2 varies across model pairs.

Table 2.

Transferability—represented by the attack success rate (%)—of ADSAttack among different deep learning models.

The results in Table 3 show that the generic adversarial examples we produced are more stable and more transferable. Because they are generated by attacking all four networks simultaneously, they contain general information related to the structures of these four models. The adversarial distribution is therefore more likely to include structural information shared with other models, making it easier to attack further models that share this information.

Table 3.

Transferability—represented by attack success rate (%)—of universal adversarial examples among different deep learning models.

The experimental results show that the generic adversarial examples not only attack the four target models with success rates of at least 95% but also attack other networks with structures similar to these four models with success rates of at least 75%. ADSAttack also retains some attack capability against completely black-box structures, which suggests broader applicability. The comparison in Table 4 further highlights the strong transferability of ADSAttack. The method could therefore also be applied to other domains, such as transfer-based attacks on face recognition [36], where our attacks would become more threatening once enough information about the latent space of face-recognition models is available. However, ADSAttack is limited when little information about the available models is known. In future work, we intend to achieve higher transferability with more limited model information.

Table 4.

Comparison experiments of five algorithms, including FGSM, PGD, AdvGAN, ColorFool and our ADSAttack with respect to black-box transferability—represented by attack success rate (%)—among different deep learning models.

5. Conclusions

In this paper, we propose ADSAttack, an adversarial attack algorithm with higher transferability, lower time consumption, and less perceptible perturbations. ADSAttack turns the adversarial example generation problem into a search for suitable perturbations in the latent space of the original input image. We trained an adversarial distribution search network to generate adversarial perturbations quickly, saving a significant amount of time over traditional adversarial attack algorithms, which require many iterations of pixel updates for each input. We introduced an edge-detection algorithm to sketch the mapping of key feature information onto the input space and perturbed only this area to minimize the modifications. To optimize the final results, we add global perturbations to the B channel to ensure attack ability and restrict the perturbations on the R and G channels to edge regions, since, based on the biological characteristics of the human visual system, the eye is relatively more sensitive to these channels. We thereby provide new ideas for countering attacks from the perspective of biological vision. We also used ADSAttack to search for generic adversarial distributions across multiple models, which contain structural information shared among them; the adversarial examples generated from these generic adversarial features are highly transferable. However, the transferability of ADSAttack is limited when little information about the available models is known. The experimental results show that ADSAttack takes an average of 11.08 ± 0.26 s to generate 1000 adversarial examples, with an average success rate of 98.01% against four target deep neural models. These results pose a serious security challenge to the robustness of models in the field of artificial intelligence, and designing more secure and robust models is a worthwhile direction.

Author Contributions

Conceptualization, Y.C. and X.C.; methodology, H.W.; software, H.W.; validation, C.Z.; formal analysis, C.Z. and Y.Z.; investigation, Y.Z. and X.C.; data curation, Y.Z.; writing—original draft, H.W. and C.Z.; writing—review and editing, Y.C. and J.L.; visualization, Y.Z.; supervision, Y.C., Y.Z. and J.L.; project administration, Y.C.; funding acquisition, Y.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China under Grant 61972092 and the Collaborative Innovation Major Project of Zhengzhou (20XTZX06013).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Maqueda, A.I.; Loquercio, A.; Gallego, G.; García, N.; Scaramuzza, D. Event-based vision meets deep learning on steering prediction for self-driving cars. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5419–5427.

- Kononenko, I. Machine learning for medical diagnosis: History, state of the art and perspective. Artif. Intell. Med. 2001, 23, 89–109.

- Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and harnessing adversarial examples. arXiv 2014, arXiv:1412.6572.

- Guo, W.; Tondi, B.; Barni, M. An overview of backdoor attacks against deep neural networks and possible defences. arXiv 2021, arXiv:2111.08429.

- Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing properties of neural networks. arXiv 2013, arXiv:1312.6199.

- Ilyas, A.; Santurkar, S.; Tsipras, D.; Engstrom, L.; Tran, B.; Madry, A. Adversarial examples are not bugs, they are features. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 125–136.

- Liu, Y.; Chen, X.; Liu, C.; Song, D. Delving into transferable adversarial examples and black-box attacks. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

- Inkawhich, N.; Liang, K.; Carin, L.; Chen, Y. Transferable perturbations of deep feature distributions. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26 April–1 May 2020.

- Xiao, C.; Li, B.; Zhu, J.Y.; He, W.; Liu, M.; Song, D. Generating adversarial examples with adversarial networks. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence, IJCAI-18, Stockholm, Sweden, 13–19 July 2018; pp. 3905–3911.

- Jandial, S.; Mangla, P.; Varshney, S.; Balasubramanian, V. Advgan++: Harnessing latent layers for adversary generation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, Seoul, Republic of Korea, 27–28 October 2019.

- Liu, X.; Hsieh, C.-J. Rob-gan: Generator, discriminator, and adversarial attacker. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Mescheder, L.; Nowozin, S.; Geiger, A. The numerics of gans. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 1–17.

- Liu, C.; Shirowzhan, S.; Sepasgozar, S.M.; Kaboli, A. Evaluation of classical operators and fuzzy logic algorithms for edge detection of panels at exterior cladding of buildings. Buildings 2019, 9, 40.

- Zhu, Y.; Sun, J.; Li, Z. Rethinking adversarial transferability from a data distribution perspective. In Proceedings of the International Conference on Learning Representations, Virtual, 3–7 May 2021.

- Wang, Z.; Guo, H.; Zhang, Z.; Liu, W.; Qin, Z.; Ren, K. Feature importance-aware transferable adversarial attacks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 7639–7648.

- Xie, C.; Zhang, Z.; Zhou, Y.; Bai, S.; Wang, J.; Ren, Z.; Yuille, A.L. Improving transferability of adversarial examples with input diversity. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2730–2739.

- Tu, C.-C.; Ting, P.; Chen, P.-Y.; Liu, S.; Zhang, H.; Yi, J.; Hsieh, C.-J.; Cheng, S.-M. Autozoom: Autoencoder-based zeroth order optimization method for attacking black-box neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 742–749.

- Huang, Z.; Zhang, T. Black-box adversarial attack with transferable model-based embedding. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26 April–1 May 2020.

- Zhao, Z.; Dua, D.; Singh, S. Generating natural adversarial examples. In Proceedings of the International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018.

- Deb, D.; Zhang, J.; Jain, A.K. Advfaces: Adversarial face synthesis. arXiv 2019, arXiv:1908.05008.

- Shamsabadi, A.S.; Sanchez-Matilla, R.; Cavallaro, A. Colorfool: Semantic adversarial colorization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- Bhattad, A.; Chong, M.J.; Liang, K.; Li, B.; Forsyth, D.A. Unrestricted adversarial examples via semantic manipulation. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26 April–1 May 2020.

- Dong, X.; Han, J.; Chen, D.; Liu, J.; Bian, H.; Ma, Z.; Li, H.; Wang, X.; Zhang, W.; Yu, N. Robust superpixel-guided attentional adversarial attack. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- Dartnall, H.J.A.; Bowmaker, J.K.; Mollon, J.D. Human visual pigments: Microspectrophotometric results from the eyes of seven persons. Proc. R. Soc. Lond. Ser. B 1983, 220, 115–130.

- Cicerone, C.M.; Nerger, J.L. The relative numbers of long-wavelength-sensitive to middle-wavelength-sensitive cones in the human fovea centralis. Vis. Res. 1989, 29, 115–128.

- Carlini, N.; Wagner, D. Towards evaluating the robustness of neural networks. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–24 May 2017; pp. 39–57.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

- Kingma, D.P.; Welling, M. Auto-encoding variational bayes. arXiv 2013, arXiv:1312.6114.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.A. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Madry, A.; Makelov, A.; Schmidt, L.; Tsipras, D.; Vladu, A. Towards deep learning models resistant to adversarial attacks. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Krizhevsky, A.; Sutskever, I.; Hinton, G. Imagenet classification with deep convolutional neural networks. Adv. Neural Inform. Processing Syst. 2012, 25, 84–90.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. arXiv 2016, arXiv:1608.06993.

- Xiao, Z.; Gao, X.; Fu, C.; Dong, Y.; Gao, W.; Zhang, X.; Zhou, J.; Zhu, J. Improving transferability of adversarial patches on face recognition with generative models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 11845–11854.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).