APSN: Adversarial Pseudo-Siamese Network for Fake News Stance Detection

Abstract

1. Introduction

- Consistent News: The text body is consistent with the headline;

- Conflicting News: The text body contradicts the headline;

- Neutral News: The text body discusses the same topic as the headline, but does not take a position;

- Unrelated News: The text body discusses a different topic from the headline.

- Size imbalance between headline and text body: We are the first to propose an exponential Pseudo-Siamese network for fake news stance detection. A news headline is much shorter than its text body, which leads to an imbalance of information between the two inputs. The proposed exponential Pseudo-Siamese network addresses this imbalance;

- No hand-crafted features: Our model learns features automatically from pre-trained GloVe word vectors;

- Good performance with less training data: Using only 60% of the training data, the proposed model achieves an FNC score of 89.7%, higher than the 89.0% of the previous state-of-the-art method trained on all of the training data. With all of the training data, our model achieves the best FNC score among the compared methods (93.40%);

- Robustness to perturbation: Adversarial training makes our model more robust to perturbations.
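
The FNC scores quoted above follow the weighted metric of the Fake News Challenge (FNC-1), whose labels agree, disagree, discuss and unrelated correspond to the Consistent, Conflicting, Neutral and Unrelated categories listed earlier. The sketch below assumes the standard FNC-1 weighting: 0.25 for deciding related versus unrelated correctly, plus a further 0.75 when a related pair also gets its exact stance, with the total reported relative to the best achievable total. The names `pair_score` and `fnc_score` are hypothetical, and this is an illustration rather than the official scorer.

```python
from typing import Sequence

# Paper labels; Consistent, Conflicting and Neutral are the FNC-1 "related" stances.
RELATED = {"consistent", "conflicting", "neutral"}


def pair_score(gold: str, pred: str) -> float:
    """Score one headline/body pair with the FNC-1 weighting."""
    gold_related = gold in RELATED
    pred_related = pred in RELATED
    score = 0.0
    if gold_related == pred_related:
        score += 0.25  # related vs. unrelated decided correctly
    if gold_related and gold == pred:
        score += 0.75  # exact stance of a related pair
    return score


def fnc_score(gold: Sequence[str], pred: Sequence[str]) -> float:
    """Relative FNC score in percent: achieved total / best possible total."""
    if len(gold) != len(pred):
        raise ValueError("gold and pred must have the same length")
    achieved = sum(pair_score(g, p) for g, p in zip(gold, pred))
    best = sum(pair_score(g, g) for g in gold)
    return 100.0 * achieved / best


gold = ["consistent", "conflicting", "neutral", "unrelated"]
pred = ["consistent", "neutral", "unrelated", "unrelated"]
print(round(fnc_score(gold, pred), 2))  # 46.15
```

Because an unrelated pair can earn at most 0.25 while a related pair can earn up to 1.0, the metric rewards correct stances on the minority related pairs far more than correct rejections of unrelated ones.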

2. Related Work

3. Approach

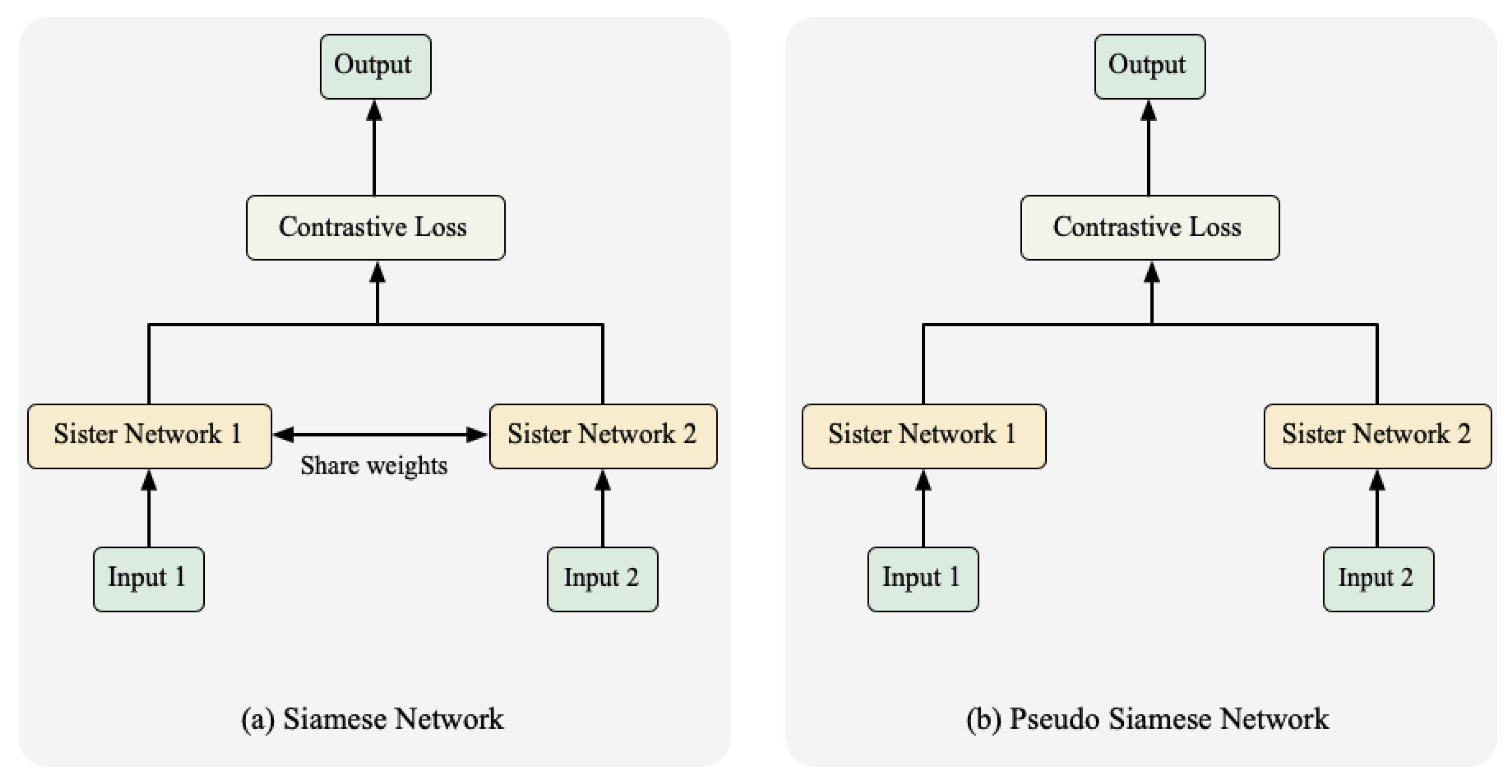

3.1. Pseudo-Siamese Network
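
A Pseudo-Siamese network keeps the two-branch layout of a Siamese network but lets each branch learn its own weights, so the short headline and the much longer text body need not be encoded by the same function; this is the size imbalance targeted in the Introduction. The NumPy sketch below assumes each branch is a single-layer LSTM that returns its last hidden state, in line with the ES + LSTM models in the results table. The class `LSTMBranch`, the dimensions, and the random stand-ins for GloVe vectors are hypothetical, and the sketch is not the authors' implementation.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


class LSTMBranch:
    """One encoder branch with its own parameters (no weight sharing)."""

    def __init__(self, input_dim: int, hidden_dim: int, rng: np.random.Generator):
        scale = 1.0 / np.sqrt(hidden_dim)
        # Stacked weights for the input, forget and output gates and the candidate cell.
        self.W = rng.uniform(-scale, scale, (4 * hidden_dim, input_dim))
        self.U = rng.uniform(-scale, scale, (4 * hidden_dim, hidden_dim))
        self.b = np.zeros(4 * hidden_dim)
        self.hidden_dim = hidden_dim

    def encode(self, seq: np.ndarray) -> np.ndarray:
        """Run over a (T, input_dim) sequence and return the last hidden state."""
        H = self.hidden_dim
        h = np.zeros(H)
        c = np.zeros(H)
        for x_t in seq:
            z = self.W @ x_t + self.U @ h + self.b
            i = sigmoid(z[:H])
            f = sigmoid(z[H:2 * H])
            o = sigmoid(z[2 * H:3 * H])
            g = np.tanh(z[3 * H:])
            c = f * c + i * g
            h = o * np.tanh(c)
        return h


rng = np.random.default_rng(0)
emb_dim, hidden_dim = 50, 32  # hypothetical sizes
headline_branch = LSTMBranch(emb_dim, hidden_dim, rng)
body_branch = LSTMBranch(emb_dim, hidden_dim, rng)  # separate weights: pseudo-Siamese

headline = rng.normal(size=(12, emb_dim))  # stand-in for 12 headline word vectors
body = rng.normal(size=(400, emb_dim))     # stand-in for a much longer text body
h_feat = headline_branch.encode(headline)
b_feat = body_branch.encode(body)
print(h_feat.shape, b_feat.shape)  # (32,) (32,)
```

Passing the same `LSTMBranch` instance for both inputs would turn this back into an ordinary Siamese network with shared weights.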

3.2. Model Architecture
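
Both branches take pre-trained GloVe word vectors as input, which is how the model avoids hand-coded features. A minimal sketch of the lookup from a word sequence to a word-vector matrix, assuming the plain-text GloVe format (each line holds a token followed by its space-separated vector components), an uncased release so tokens are lower-cased, and zero vectors for out-of-vocabulary words. The functions `load_glove` and `embed` and the three-word sample vocabulary are hypothetical.

```python
from typing import Dict, Iterable, List

import numpy as np


def load_glove(lines: Iterable[str]) -> Dict[str, np.ndarray]:
    """Parse GloVe text-format lines: a token followed by its vector components."""
    vectors: Dict[str, np.ndarray] = {}
    for line in lines:
        parts = line.rstrip().split(" ")
        if len(parts) < 2:
            continue  # skip blank or malformed lines
        vectors[parts[0]] = np.array([float(v) for v in parts[1:]], dtype=np.float32)
    return vectors


def embed(tokens: List[str], vectors: Dict[str, np.ndarray], dim: int) -> np.ndarray:
    """Map a word sequence to a (len(tokens), dim) matrix; unknown words stay zero."""
    out = np.zeros((len(tokens), dim), dtype=np.float32)
    for i, token in enumerate(tokens):
        vec = vectors.get(token.lower())  # assumes an uncased GloVe release
        if vec is not None:
            out[i] = vec
    return out


# Tiny in-memory stand-in for a real GloVe file.
sample = ["plant 0.1 0.2 0.3", "zeppelin 0.4 0.5 0.6", "reunion 0.7 0.8 0.9"]
glove = load_glove(sample)
tokens = "Robert Plant Ripped up Led Zeppelin Reunion".split()
headline_matrix = embed(tokens, glove, dim=3)
print(headline_matrix.shape)  # (7, 3)
```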

3.2.1. Headline Branch

3.2.2. Text Body Branch

3.2.3. Exponential Distance
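
An exponential distance turns the gap between the headline feature and the text body feature into a bounded similarity. As a hedged sketch, assuming the negative-exponential Manhattan form s = exp(−‖h − b‖₁) used in Manhattan LSTM Siamese models (whether APSN uses exactly this formula is an assumption here), with `exponential_similarity` a hypothetical name:

```python
import numpy as np


def exponential_similarity(h: np.ndarray, b: np.ndarray) -> float:
    """exp(-||h - b||_1): 1.0 for identical features, tending to 0 as they diverge."""
    return float(np.exp(-np.abs(h - b).sum()))


headline_feat = np.array([0.2, -0.1, 0.4])
body_feat = np.array([0.1, -0.1, 0.1])
print(exponential_similarity(headline_feat, headline_feat))  # 1.0
print(exponential_similarity(headline_feat, body_feat))      # about 0.67
```

Unlike the cosine similarity of the CS baseline, which ignores vector length, this measure depends on the magnitude of the difference and lies in (0, 1], reaching 1 only when the two features coincide.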

3.2.4. Adversarial Training
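
For text, adversarial training is usually applied to word embeddings rather than to discrete tokens. The sketch below follows the scheme of Miyato et al. (2017): compute the gradient g of the loss with respect to the embeddings, add the worst-case perturbation r_adv = ε·g/‖g‖₂, and train on the clean loss plus the loss of the perturbed input. Whether APSN perturbs exactly these inputs is an assumption; the toy logistic classifier on mean-pooled embeddings exists only so the gradient can be written by hand, and every name and value in it (`loss_and_grad`, `adversarial_perturbation`, ε = 0.5) is hypothetical.

```python
import numpy as np


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + np.exp(-z))


def loss_and_grad(E: np.ndarray, y: float, w: np.ndarray, b: float):
    """Logistic loss of a toy classifier on mean-pooled embeddings E (T, d)
    and the gradient of that loss with respect to E."""
    T = E.shape[0]
    p = sigmoid(w @ E.mean(axis=0) + b)
    loss = -(y * np.log(p) + (1 - y) * np.log(1 - p))
    # dL/dz = p - y, and every row of E contributes w / T to the logit z.
    grad_E = np.tile((p - y) * w / T, (T, 1))
    return loss, grad_E


def adversarial_perturbation(grad_E: np.ndarray, epsilon: float) -> np.ndarray:
    """r_adv = epsilon * g / ||g||_2, normalised over the whole sequence."""
    return epsilon * grad_E / (np.linalg.norm(grad_E) + 1e-12)


rng = np.random.default_rng(0)
E = rng.normal(size=(10, 8))  # stand-in embeddings of a 10-token input
w = rng.normal(size=8)
b, y = 0.0, 1.0

clean_loss, g = loss_and_grad(E, y, w, b)
r_adv = adversarial_perturbation(g, epsilon=0.5)
adv_loss, _ = loss_and_grad(E + r_adv, y, w, b)
total_loss = clean_loss + adv_loss  # objective: clean loss plus adversarial loss
```

In a real model the gradient would come from automatic differentiation, and r_adv would be treated as a constant when back-propagating the adversarial loss.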

3.3. Contrastive Loss
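
Contrastive losses train twin-branch models so that matching pairs move closer while non-matching pairs are pushed apart until they clear a margin. A minimal sketch of the widely used margin form of Hadsell, Chopra and LeCun (2006), L = (1 − Y)·½D² + Y·½·max(0, m − D)², where D is the distance between the two branch features, Y the pair label and m the margin. The convention Y = 0 for a matching pair and Y = 1 for a non-matching pair, the margin m = 1.0, and the name `contrastive_loss` are assumptions; how APSN maps its four stance classes onto Y is not assumed here.

```python
import numpy as np


def contrastive_loss(d: np.ndarray, y: np.ndarray, margin: float = 1.0) -> float:
    """Mean margin-based contrastive loss.

    d: distances between headline and text-body features, shape (N,)
    y: 0 for a matching pair, 1 for a non-matching pair (assumed convention)
    """
    similar_term = (1 - y) * 0.5 * d ** 2
    dissimilar_term = y * 0.5 * np.maximum(0.0, margin - d) ** 2
    return float(np.mean(similar_term + dissimilar_term))


d = np.array([0.1, 0.1, 1.5])
y = np.array([0, 1, 1])
print(contrastive_loss(d, y))
```

A non-matching pair whose distance already exceeds the margin contributes zero loss, so training effort concentrates on hard negatives and on pulling matching pairs together.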

4. Experiments

4.1. Case Study

4.2. Experimental Setup

4.3. Evaluation

4.4. Experimental Results

4.5. Sensitivity Analysis

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Symbol | Symbol Name |
|---|---|
| | Word Sequence of News Headline |
| | Word Sequence of News Text Body |
| | Word Vector of Headline |
| | Word Vector of Text Body |
| | Headline Feature of Headline Branch |
| | Text Body Feature of Text Body Branch |
| Y | Label of News Pair |
| w | Parameters of Model |

| Headline | Text Body | Type |
|---|---|---|
| “Robert Plant Ripped up $800M Led Zeppelin Reunion Contract” | “… Led Zeppelin’s Robert Plant turned down 500 MILLION to reform supergroup. …” | Consistent |
| “Robert Plant Ripped up $800M Led Zeppelin Reunion Contract” | “… No, Robert Plant did not rip up an $800 million deal to get Led Zeppelin back together. …” | Conflicting |
| “Robert Plant Ripped up $800M Led Zeppelin Reunion Contract” | “… Robert Plant reportedly tore up an $800 million Led Zeppelin reunion deal. …” | Neutral |
| “Robert Plant Ripped up $800M Led Zeppelin Reunion Contract” | “… Richard Branson’s Virgin Galactic is set to launch SpaceShipTwo today. …” | Unrelated |

| Type of News | Percentage |
|---|---|
| Consistent | 7.41% |
| Conflicting | 2.04% |
| Neutral | 17.74% |
| Unrelated | 72.81% |

| Model | FNC Score (%) | Data Size (News Pairs) | Hand-Coded Features |
|---|---|---|---|
| GB Classifier | 79.53 | 49,979 | Word (n-gram) Overlap Features and Indicator Features for Polarity and Refutation |
| CNN + GBDT | 82.02 | 49,979 | Count, TF-IDF, Sentiment |
| CS | 89.0 | 49,979 | Weighted Bag of Words |
| ES + LSTM | 90.12 | 49,979 | None |
| ES + LSTM + AT | 89.12 | 33,000 | None |
| ES + LSTM + AT | 93.40 | 49,979 | None |

Models specification: GBDT: Gradient Boosting Decision Tree; CS: Cosine Siamese network; ES: Exponential Siamese network; AT: Adversarial Training.


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, Z.; Yang, Y.; Li, Z. APSN: Adversarial Pseudo-Siamese Network for Fake News Stance Detection. Electronics 2023, 12, 1043. https://doi.org/10.3390/electronics12041043
